1. Introduction

The rapid advancement of industrial automation has significantly improved production efficiency, but it has also introduced new challenges to factory safety management [1]. Modern industrial environments involve numerous workers, complex machinery, and dynamic operational conditions, all of which increase the risk of accidents. Ensuring the safety of personnel and equipment requires accurate, timely, and comprehensive monitoring systems capable of detecting multiple potential hazards simultaneously. Traditional approaches, which primarily rely on manual inspection or basic sensor-based alarms, suffer from limited coverage, high labor costs, and a high risk of missed detections [2]. These methods are increasingly inadequate for modern industrial scenarios, where real-time, intelligent, multi-object detection has become essential.

Computer vision and deep learning techniques have become key enablers of intelligent industrial safety monitoring [3]. Object detection algorithms can automatically recognize and localize safety-critical targets [4], including workers with or without protective equipment, hazardous behaviors such as smoking or mobile phone use, and emergency events such as fire and smoke. However, deploying real-time detection systems in industrial environments presents several challenges [5]. First, target scales vary widely, from small objects such as cigarettes and sparks to large objects such as forklifts and industrial machines, which requires robust multi-scale feature extraction. Second, industrial scenes are often crowded, with frequent occlusion and overlapping objects, which significantly increases detection difficulty. Third, edge devices used for real-time monitoring typically have limited computational resources, making it difficult to deploy large, high-accuracy models without sacrificing speed [6].

Existing research has attempted to address these challenges through various improvements. Attention mechanisms such as CBAM [7] and EMA [8] have been introduced to enhance feature representation, particularly for small objects. Multi-scale feature pyramids [9] and progressive feature fusion techniques [10] further improve detection performance across varying object sizes. Lightweight network designs and shared detection heads have been developed to reduce model complexity while maintaining detection performance [11]. Additionally, specialized modules have been proposed to improve the extraction of fine-grained or elongated features, thereby improving the detection of certain industrial targets. Despite these advances, most methods optimize for a single target type or a specific aspect of detection and lack a unified approach to multi-object detection in complex, real-world industrial scenarios. Furthermore, efficiently combining multi-scale features and reducing computational cost without degrading accuracy remain open challenges.

To address these limitations, this study proposes MAS-HENet, a novel real-time detection network for multi-object industrial safety monitoring. MAS-HENet is built upon the YOLOv8 [12] framework and introduces a series of synergistic modules designed to enhance feature representation, multi-scale perception, and computational efficiency. The main contributions are as follows:

MAStar module: To improve multi-scale feature representation and gradient flow, MAStar replaces part of the backbone’s conventional structure with multi-branch aggregation and Star Block design. This module enables richer spatial interactions and channel recalibration, enhancing the network’s ability to capture complex textures and diverse target scales.

P2 small-object detection layer [13]: Small-scale objects are particularly difficult to detect in dense industrial environments. Adding a P2-level detection layer allows the network to utilize higher-resolution features for small targets, improving sensitivity and recall without negatively affecting the detection of larger objects.

Lightweight Shared Convolutional Detection Head (LSCD) [14]: To reduce computational overhead while maintaining high accuracy, LSCD implements shared convolution operations and group normalization across scales. This design significantly decreases the number of parameters and computational cost, making MAS-HENet suitable for real-time deployment on resource-constrained devices.

High-Efficiency Up-Convolution (HEUC) module: Accurate cross-scale feature fusion is critical for precise localization and classification. HEUC combines efficient upsampling with depthwise separable convolutions [15] and channel alignment [16], enhancing feature reconstruction quality and multi-scale detection accuracy.

Collectively, these modules address the key limitations of existing networks. MAStar strengthens multi-scale feature representation, the P2 layer enhances small-object detection, LSCD reduces computational complexity, and HEUC improves feature fusion and reconstruction. Through these complementary improvements, MAS-HENet achieves a balanced trade-off between detection accuracy, multi-scale robustness, and computational efficiency, making it highly suitable for real-time multi-object detection in complex industrial scenarios.

2. Related Work

Industrial safety monitoring has gained increasing attention due to the critical need for accident prevention in complex production environments. Early approaches relied primarily on manual inspections or basic sensor-based detection, such as infrared or motion sensors [17]. While effective for simple hazard detection, these methods are limited by low coverage, high labor costs, and sensitivity to environmental noise, making them unsuitable for modern industrial settings that demand high-precision and real-time monitoring.

2.1. Object Detection in Industrial Environments

Object detection techniques form the foundation of intelligent safety monitoring. Traditional methods often employed hand-crafted features such as Haar cascades [18], HOG [19], or SIFT descriptors [20] combined with classifiers like SVM [21]. These approaches demonstrated reasonable performance in controlled conditions but struggled in complex industrial scenes with varying lighting, occlusions, and dense object layouts.

With the emergence of deep learning, convolutional neural network (CNN)-based detectors such as Faster R-CNN [22], SSD [23], and the YOLO [24] series have become dominant. Two-stage detectors like Faster R-CNN offer high accuracy but suffer from slow inference speeds, limiting their applicability for real-time monitoring. One-stage detectors, including YOLO and RetinaNet, provide a more favorable trade-off between speed and accuracy, making them suitable for industrial deployment. Recent improvements in these networks include feature pyramid networks (FPN) [25] for multi-scale detection, attention mechanisms for enhancing critical feature representation, and anchor-free detection heads for simplifying training and inference.

In recent years, Transformer-based detectors such as DETR [26], Deformable DETR [27], DINO-DETR [28], and Vision Transformers [29] (ViT, Swin-T) have demonstrated strong feature modeling capabilities. However, these models generally require large-scale data and high computational resources, limiting their deployment on industrial edge devices. Compared with these Transformer architectures, convolution-based networks offer better parameter efficiency and real-time inference, making them more practical for factory safety monitoring.

2.2. Attention Mechanisms and Feature Fusion

Attention mechanisms, such as CBAM (Convolutional Block Attention Module) and EMA (Efficient Multi-scale Attention), have been widely adopted to improve network sensitivity to small and occluded targets. These modules allow the network to focus on relevant spatial regions and feature channels, effectively enhancing the detection of fine-grained industrial targets. In addition, multi-scale feature fusion strategies, such as FPN, PAN, and BiFPN [30], integrate features across different resolutions to improve the recognition of targets of varying sizes. Progressive feature aggregation and hierarchical fusion have been shown to enhance detection robustness, particularly for small or densely packed objects.

2.3. Lightweight Networks and Efficient Detection Heads

Given the deployment constraints of industrial edge devices, lightweight network architectures are crucial. Techniques such as depthwise separable convolutions, shared detection heads, and group normalization [31] have been introduced to reduce parameter counts and computational cost while maintaining detection performance. Shared convolutional heads, in particular, allow multiple detection layers to leverage the same feature extractor, improving both efficiency and stability in multi-scale detection scenarios.

2.4. Advances in Small-Object Detection

Small-object detection remains a major challenge in industrial monitoring due to limited pixel representation and frequent occlusion. Approaches such as adding high-resolution detection layers, using context-aware attention, and employing multi-branch feature aggregation have been proposed to enhance small-object perception [13]. These methods preserve high-resolution detail, enlarge the effective receptive field, or improve feature discrimination for tiny or low-resolution targets, which is critical in scenarios such as smoke detection, fire monitoring, and behavioral analysis in factories.

2.5. Summary

Although significant progress has been made in industrial object detection, most existing methods focus on optimizing individual aspects—such as small-object perception, feature fusion, or lightweight model design—without providing a unified framework that addresses these challenges simultaneously. This often leads to models that perform well in specific cases but lack robustness and generalization across complex industrial scenes involving multi-scale objects, diverse backgrounds, and dynamic lighting conditions.

To overcome these limitations, MAS-HENet integrates four complementary components within a single coherent architecture: a Mixed Aggregation Star (MAStar) module for enhanced multi-scale feature interaction, a P2 layer for improved high-resolution small-object detection, a Lightweight Shared Convolutional Detection (LSCD) head to reduce redundancy and maintain semantic consistency, and a High-Efficiency Up-Convolution (HEUC) module to optimize spatial information recovery. By combining these improvements, MAS-HENet achieves a better trade-off between detection precision, robustness, and computational efficiency, providing a more comprehensive and scalable solution for real-time industrial safety monitoring.

3. Method

3.1. Overview

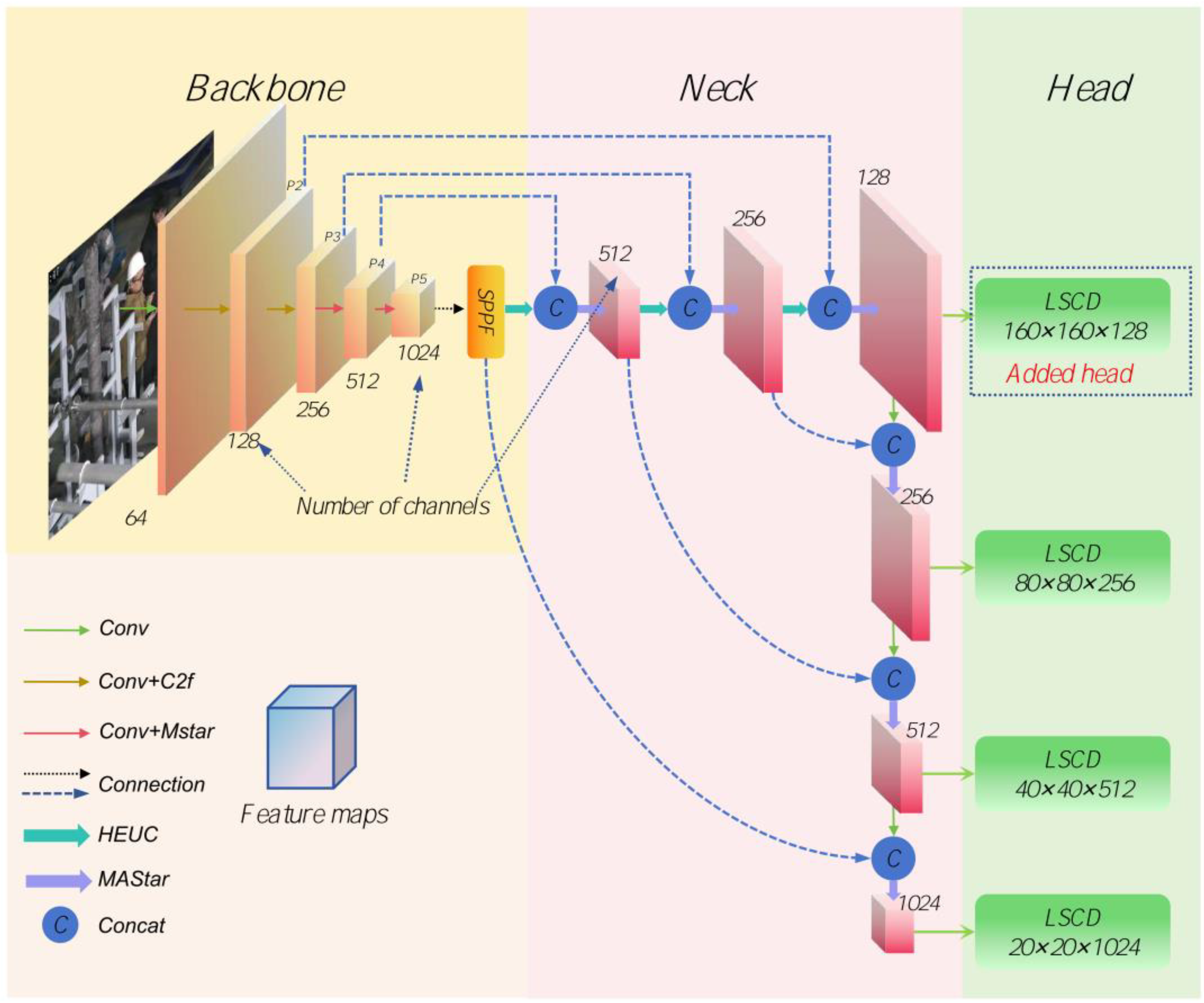

To address the challenges of multi-object detection in complex industrial environments, this study proposes MAS-HENet, a lightweight and efficient detection framework tailored for real-time factory safety monitoring. The network follows the one-stage detection paradigm, consisting of three main components: a feature extraction backbone, a multi-scale feature fusion neck, and a detection head. As illustrated in Figure 1, the overall pipeline processes input images through hierarchical feature extraction, cross-scale fusion, and final object prediction, achieving an effective balance between accuracy and computational efficiency.

MAS-HENet is designed to overcome key limitations of existing detection models in industrial scenarios, such as the difficulty of recognizing small, dispersed, and variably scaled targets under complex lighting and occlusion conditions. Through a hierarchical representation and enhanced feature interaction across multiple scales, the network captures both fine spatial details and high-level semantic cues, which are essential for identifying small objects like phones, cigarettes, or flames while maintaining robustness for larger targets such as workers or machinery.

Furthermore, the architecture emphasizes practical deployability. By optimizing feature propagation and reducing redundant computation, MAS-HENet achieves real-time inference capability on edge devices commonly used in factory surveillance systems. Overall, the proposed framework integrates feature richness, scale adaptability, and computational efficiency, providing a solid foundation for accurate and reliable safety monitoring in industrial production environments.

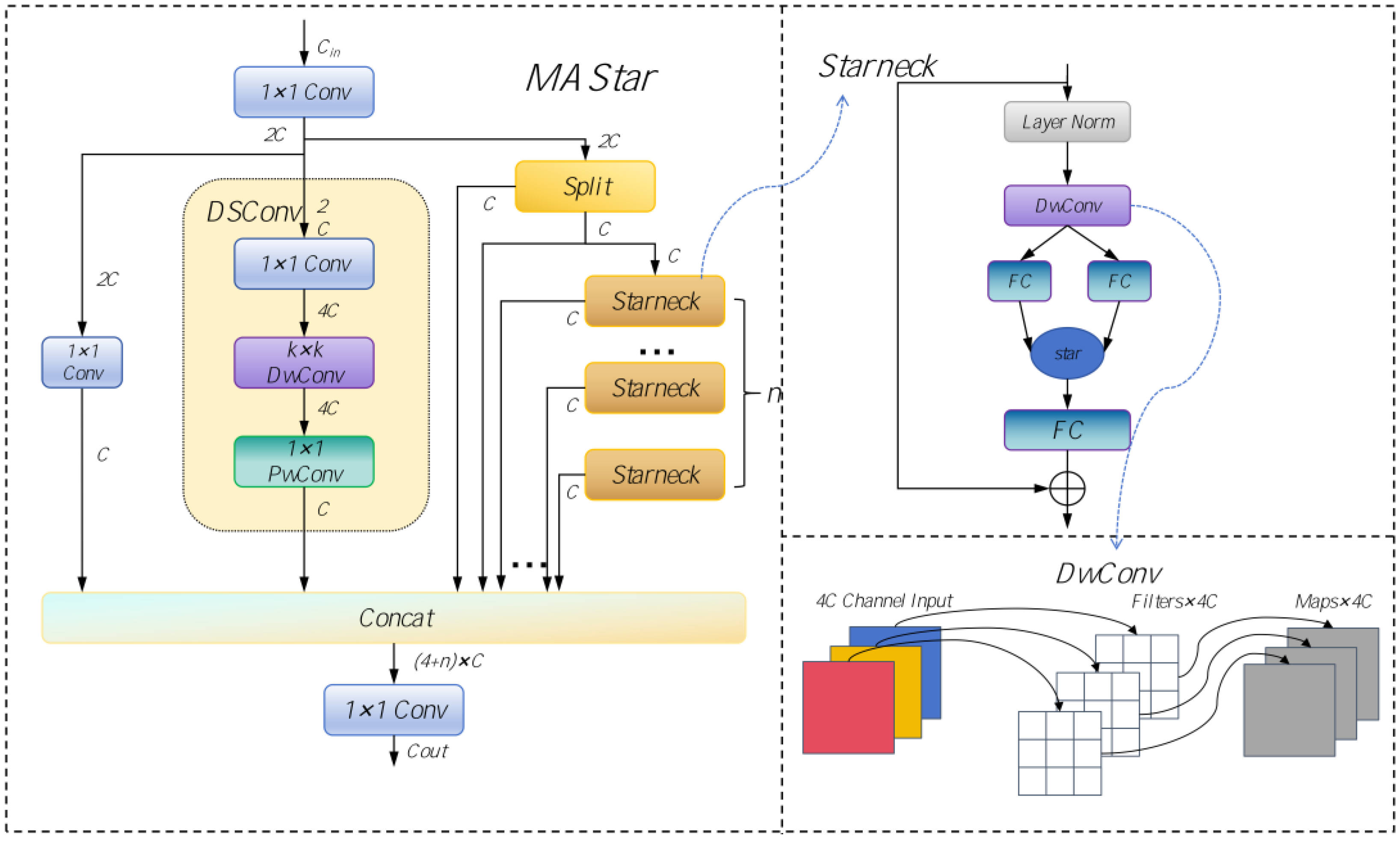

3.2. MAStar: An Enhanced Mixed Aggregation Module Based on MANet

To further enhance the feature extraction capability of the backbone network, this study introduces the MAStar (Mixed Aggregation Star) module, which is structurally improved upon MANet (Mixed Aggregation Network) [32]. The overall structure of the MAStar module is illustrated in Figure 2. It inherits the multi-branch aggregation design philosophy of MANet, incorporating channel re-calibration, depthwise separable convolution, and hierarchical feature fusion to achieve efficient feature representation and transmission.

Unlike the original MANet, MAStar replaces the conventional ConvNeck block with a Star Block [33], which possesses stronger nonlinear modeling and spatial interaction capabilities. This modification enables the model to capture more diverse texture and multi-scale features while maintaining a lightweight structure.

Within MAStar, the introduced Star Block is designed to enhance spatial modeling and inter-channel interaction under computational constraints. Specifically, a 7 × 7 depthwise convolution is first employed to expand the receptive field, capturing both local and neighboring contextual information. Subsequently, two parallel 1 × 1 convolution branches generate feature transformation paths whose outputs are fused by element-wise multiplication, achieving dynamic modulation between features and thereby improving the model’s response to critical patterns. A ReLU activation [34] and a second depthwise convolution further refine the feature distribution, allowing rich feature representation with minimal parameters.
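To make the element-wise multiplication step concrete, the sketch below evaluates a Star-style operation at a single pixel, where each 1 × 1 convolution reduces to a matrix-vector product over channels. It is a toy illustration in plain Python, not the authors' implementation: the weights are hypothetical, the depthwise convolutions, normalization, and residual path are omitted, and applying ReLU to only one branch before the product is an assumption modeled on the original Star Block design.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def pointwise(weights: Matrix, x: Vector) -> Vector:
    """1 x 1 convolution at one pixel: a matrix-vector product over channels."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def relu(v: Vector) -> Vector:
    return [max(0.0, a) for a in v]


def star_op(w1: Matrix, w2: Matrix, x: Vector) -> Vector:
    """Element-wise product of two 1 x 1 branches: relu(W1 x) * (W2 x).

    Each output channel is a product of two linear forms of x, so it contains
    pairwise (quadratic) channel interactions a single linear layer lacks.
    """
    a = relu(pointwise(w1, x))
    b = pointwise(w2, x)
    return [ai * bi for ai, bi in zip(a, b)]


# Hypothetical weights: 3 input channels expanded to 4 hidden channels.
w1 = [[0.5, -0.2, 0.1], [0.3, 0.4, -0.1], [-0.6, 0.2, 0.5], [0.1, 0.1, 0.1]]
w2 = [[0.2, 0.1, 0.0], [-0.3, 0.5, 0.2], [0.4, -0.1, 0.3], [0.0, 0.2, -0.4]]
x = [1.0, 2.0, 0.5]

y = star_op(w1, w2, x)
y_scaled = star_op(w1, w2, [2 * xi for xi in x])  # same pixel, input doubled
print(y)
print(y_scaled)
```

Because both branches scale linearly with a positive rescaling of the input, doubling the input quadruples every output channel: the product makes each output a quadratic function of the input features, which is the kind of channel interaction the Star Block is meant to add cheaply.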

In addition, residual connections and a stochastic depth (DropPath) mechanism are integrated to mitigate gradient vanishing and improve both training stability and generalization capability. The overall computation process of the MAStar module can be formulated as:

where the channel number of

is

, and that of each

is

. Finally, the aggregated features are concatenated and passed through a 1 × 1 convolution for semantic compression and fusion, producing the output feature

:

3.3. P2: Fine-Grained High-Resolution Detection Layer

In industrial monitoring scenarios, the accurate detection of small-scale targets such as cigarettes, fire sources, and handheld objects poses a persistent challenge for conventional detection frameworks. Although deeper layers of convolutional networks capture rich semantic information, the accompanying down-sampling process often leads to the loss of fine spatial details crucial for small object localization. To address this issue, MAS-HENet introduces a fine-grained high-resolution detection layer, denoted as P2, which extends the detection hierarchy toward shallower feature maps.

The P2 layer utilizes higher-resolution features from the backbone network, enabling the preservation of detailed spatial and texture information. By integrating P2 into the detection head, the network is able to capture subtle object boundaries and fine-grained contextual cues that are otherwise lost in lower-resolution feature maps. Furthermore, the feature maps at the P2 level are refined through lateral connections and adaptive channel recalibration, ensuring semantic consistency across scales and mitigating feature redundancy.

This design effectively enhances the model’s sensitivity to small targets while maintaining computational efficiency. As validated in subsequent experiments, the introduction of the P2 detection layer significantly improves the precision and recall of small object categories, demonstrating its effectiveness in enhancing fine-grained perception within complex industrial environments.
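The effect of adding P2 on grid resolution can be checked with simple arithmetic. The sketch below assumes the conventional YOLOv8 strides of 8, 16, and 32 pixels for P3-P5 and 4 pixels for the added P2 level (the strides are assumptions here), together with the 640 × 640 input resolution used in the experiments, and counts prediction positions for an anchor-free head with one prediction per grid cell.

```python
from typing import Dict

# Assumed strides (pixels per grid cell); P2 is the added high-resolution level.
STRIDES: Dict[str, int] = {"P2": 4, "P3": 8, "P4": 16, "P5": 32}
IMG_SIZE = 640  # input resolution used in the experiments


def grid_sizes(img_size: int, strides: Dict[str, int]) -> Dict[str, int]:
    """Cells per side of each level's square feature map."""
    return {name: img_size // s for name, s in strides.items()}


def num_positions(sizes: Dict[str, int]) -> int:
    """Total prediction positions for an anchor-free head (one per grid cell)."""
    return sum(n * n for n in sizes.values())


def cells_spanned(obj_px: float, stride: int) -> float:
    """Grid cells covered per side by a square object of obj_px pixels."""
    return obj_px / stride


sizes = grid_sizes(IMG_SIZE, STRIDES)
baseline = num_positions({k: v for k, v in sizes.items() if k != "P2"})
with_p2 = num_positions(sizes)

for name, stride in STRIDES.items():
    print(f"{name}: {sizes[name]}x{sizes[name]} grid, "
          f"16 px object spans {cells_spanned(16, stride):.1f} cells per side")
print(f"prediction positions: P3-P5 = {baseline}, P2-P5 = {with_p2}")
```

Under these assumptions, a hypothetical 16-pixel object such as a distant cigarette spans four P2 cells per side but only two P3 cells, while adding P2 raises the number of prediction positions from 8,400 to 34,000, so the extra level also adds head computation at the highest resolution.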

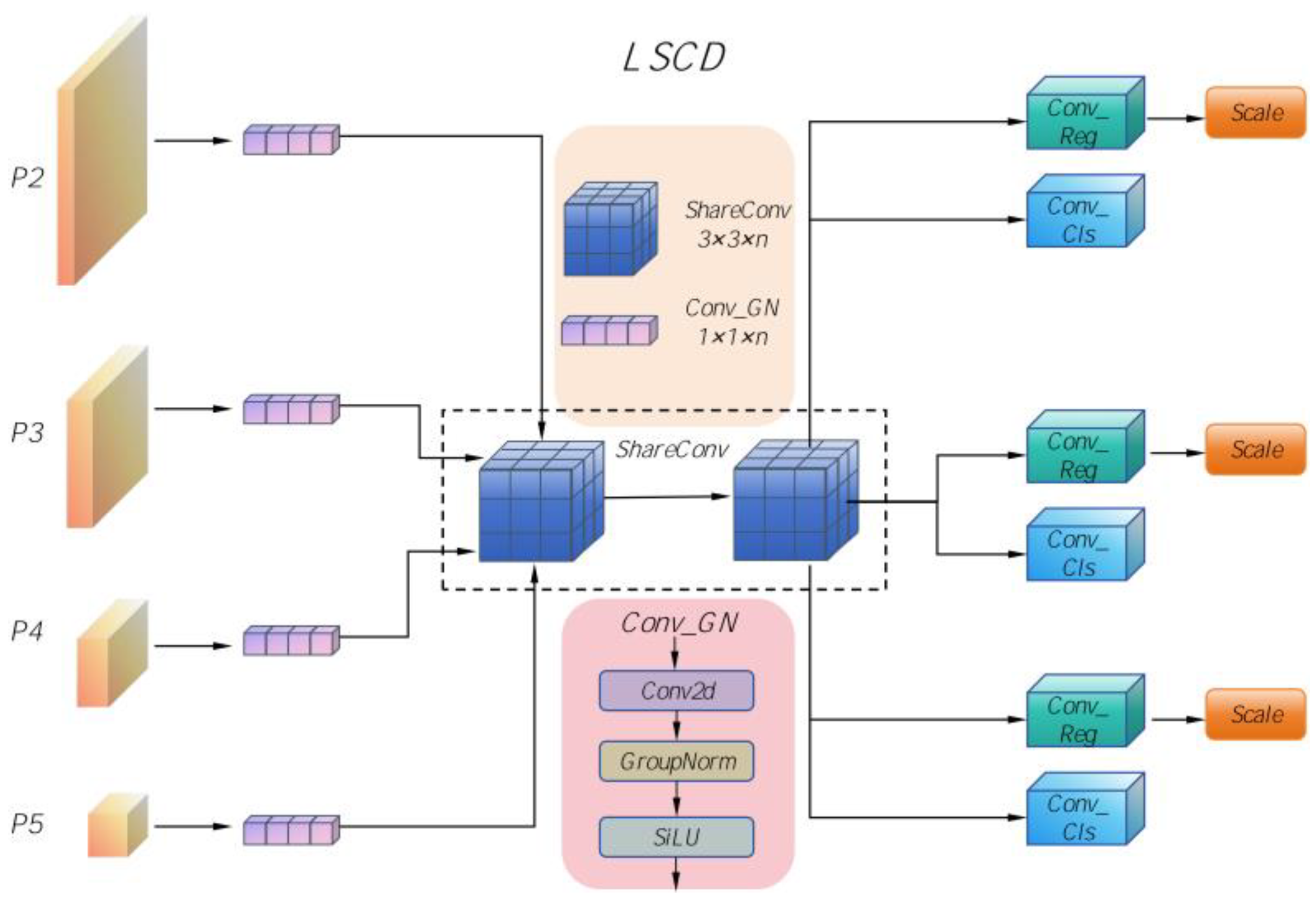

3.4. LSCD: Lightweight Shared Convolutional Detection Head

To enhance multi-scale detection efficiency while maintaining high accuracy, this study introduces LSCD as the prediction component of MAS-HENet. The overall structure of LSCD is illustrated in Figure 3. Unlike traditional multi-branch detection heads that process each scale independently, LSCD adopts a shared convolutional mechanism to extract scale-invariant representations with reduced redundancy.

Let the multi-scale features from the neck be denoted as $\{F_i\}$ with $F_i \in \mathbb{R}^{C_i \times H_i \times W_i}$, where $C_i$, $H_i$, and $W_i$ represent the channel, height, and width of the $i$-th feature map, respectively. Each feature is first projected into a unified embedding space via a convolution followed by Group Normalization (GN) [35]:

$$\tilde{F}_i = \mathrm{GN}\big(\mathrm{Conv}_i(F_i)\big)$$

Then, the normalized features are processed through a shared convolutional backbone composed of two convolutions with GN and ReLU activations, whose weights are shared across all levels:

$$Z_i = \mathrm{ReLU}\Big(\mathrm{GN}\big(\mathrm{Conv}_{s,2}\big(\mathrm{ReLU}(\mathrm{GN}(\mathrm{Conv}_{s,1}(\tilde{F}_i)))\big)\big)\Big)$$

This shared pathway enables consistent feature extraction across scales and strengthens the generalization of the network to objects of varying sizes. After feature extraction, $Z_i$ is split into two output branches: a regression branch and a classification branch.

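The regression branch predicts a discrete probability distribution over $n$ bins for each bounding box coordinate and is trained with Distribution Focal Loss (DFL), where $n$ denotes the number of discrete bins. The final box coordinates are obtained as the expectation over the softmax probabilities:

$$\hat{d} = \sum_{j=0}^{n-1} j \cdot \mathrm{softmax}(\mathbf{p})_j$$

where $\mathbf{p}$ is the vector of regression logits for one coordinate. The classification branch outputs the class confidence map by applying $\sigma$, the sigmoid activation, to the classification logits.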
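The DFL-based regression and its expectation decoding can be sketched for a single box coordinate as below. The loss follows the Distribution Focal Loss formulation, which applies cross-entropy to the two bins bracketing the continuous target, each weighted by its closeness to the target. The bin count n = 16 (`N_BINS`) is an assumption following the common YOLOv8 default, and the logits are hypothetical.

```python
import math
from typing import List

N_BINS = 16  # assumed number of bins n (common YOLOv8 default)


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dfl_decode(logits: List[float]) -> float:
    """Expected value sum_j j * softmax(logits)_j, in grid-cell units."""
    return sum(j * p for j, p in enumerate(softmax(logits)))


def dfl_loss(logits: List[float], target: float) -> float:
    """Distribution Focal Loss for a continuous target in [0, n - 1).

    Cross-entropy on the two bins bracketing the target, y_l = floor(target)
    and y_r = y_l + 1, each weighted by the target's closeness to that bin.
    """
    probs = softmax(logits)
    y_l = math.floor(target)
    y_r = y_l + 1
    return -((y_r - target) * math.log(probs[y_l]) + (target - y_l) * math.log(probs[y_r]))


# Hypothetical logits with the probability mass split between bins 3 and 4.
logits = [-10.0] * N_BINS
logits[3] = logits[4] = 5.0
print(dfl_decode(logits))     # close to 3.5
print(dfl_loss(logits, 3.5))  # close to ln 2, since each bin holds about half
```

In YOLOv8-style heads the decoded distances are measured in grid cells and are multiplied by the stride of the corresponding level to obtain pixel offsets.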

A scale modulation factor $s_i$ is applied to the regression outputs of the $i$-th detection level to balance gradient magnitudes across different levels. Finally, the detection results from all scales are concatenated and decoded into the final predictions.

Through this shared convolutional structure, LSCD substantially reduces parameter count while maintaining precise localization and classification capabilities. The GN-based normalization and DFL-based regression jointly enhance model stability and localization accuracy, making LSCD particularly effective in complex industrial safety monitoring scenarios.
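The parameter saving from sharing can be estimated by simple counting. The sketch assumes, hypothetically, a hidden width of 64 channels, 3 × 3 kernels for the two shared convolutions, bias-free convolutions followed by GN, and four detection levels (P2-P5); the per-level projection convolutions are excluded because both designs need them.

```python
def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a bias-free k x k convolution (GN follows, so no bias)."""
    return k * k * c_in * c_out


def gn_params(channels: int) -> int:
    """GroupNorm affine parameters: one scale and one shift per channel."""
    return 2 * channels


def conv_gn_stack(width: int, k: int, depth: int) -> int:
    """Parameters of `depth` stacked Conv(k x k) + GN blocks at constant width."""
    return depth * (conv_params(width, width, k) + gn_params(width))


HIDDEN = 64  # hypothetical hidden width of the head
KERNEL = 3   # assumed kernel size of the two shared convolutions
LEVELS = 4   # P2-P5 when the P2 detection layer is enabled

shared = conv_gn_stack(HIDDEN, KERNEL, depth=2)  # one stack reused by every level
per_level = LEVELS * shared                      # an independent stack per level
print(f"independent stacks: {per_level:,} params; shared stack: {shared:,} params")
```

Sharing divides the parameters of this part of the head by the number of levels, but not its FLOPs, because the shared convolutions still run on every level's feature map.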

3.5. HEUC: High-Efficiency Feature-Enhancing Up-Convolution Module

To further enhance the semantic restoration and spatial precision of feature maps during upsampling, this study designs a High-Efficiency Up-Convolution (HEUC) module, as illustrated in Figure 4. Traditional upsampling layers (e.g., nearest-neighbor interpolation or transposed convolution) often lose local detail due to inadequate feature interaction, and transposed convolution can additionally introduce checkerboard artifacts. In contrast, the proposed HEUC combines depthwise and pointwise convolutions (together forming a depthwise separable convolution) within a two-stage architecture to achieve efficient upsampling while maintaining rich feature representation.

Specifically, given an input feature map $X$, HEUC first employs an upsampling operation with a scale factor of 2 to increase the spatial resolution:

$$X_{\mathrm{up}} = \mathrm{Up}_{\times 2}(X)$$

Subsequently, a depthwise convolution is applied to enhance spatial feature extraction across neighboring pixels:

$$X_{\mathrm{dw}} = \mathrm{DWConv}(X_{\mathrm{up}})$$

This operation ensures that each channel learns independent spatial representations, effectively expanding the receptive field while maintaining computational efficiency.

Next, a pointwise (1 × 1) convolution is used to aggregate inter-channel information and refine semantic consistency:

$$X_{\mathrm{pw}} = \mathrm{PWConv}(X_{\mathrm{dw}})$$

To further improve feature diversity and prevent redundancy, the HEUC module optionally incorporates a channel shuffle mechanism:

$$Y = \mathrm{Shuffle}(X_{\mathrm{pw}}, g)$$

where $g$ denotes the number of groups used to enhance cross-channel interactions.
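The channel shuffle step can be illustrated on channel indices alone. The sketch below implements the ShuffleNet-style permutation (reshape to groups, transpose, flatten) on a Python list; that HEUC uses exactly this standard form is an assumption based on the description, and the function name is hypothetical.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def channel_shuffle(channels: Sequence[T], groups: int) -> List[T]:
    """ShuffleNet-style channel shuffle on a list of per-channel items.

    Equivalent to reshaping C channels to (groups, C // groups), transposing
    to (C // groups, groups), and flattening back to C channels.
    """
    c = len(channels)
    if c % groups != 0:
        raise ValueError("channel count must be divisible by the group number")
    per_group = c // groups
    return [channels[a * per_group + b] for b in range(per_group) for a in range(groups)]


# 8 channels in g = 2 groups: [0..3] and [4..7]
print(channel_shuffle(list(range(8)), groups=2))  # [0, 4, 1, 5, 2, 6, 3, 7]
```

After shuffling, consecutive channels come from different groups, so a subsequent grouped operation mixes information across them. On a PyTorch tensor of shape (n, c, h, w), the same permutation is usually written as `x.view(n, g, c // g, h, w).transpose(1, 2).reshape(n, c, h, w)`.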

Through this hierarchical combination of spatial and channel operations, the proposed HEUC module not only achieves efficient upsampling but also effectively preserves semantic continuity between adjacent feature levels. Compared to conventional transposed convolutions, HEUC significantly reduces computational complexity while enhancing fine-grained detail restoration. This makes it particularly advantageous in industrial safety monitoring, where accurate localization of small and scattered targets is crucial for detection robustness.
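The efficiency advantage over transposed convolution can be checked with a back-of-the-envelope count. The sketch compares a stride-2 3 × 3 transposed convolution with ×2 nearest-neighbor upsampling followed by a 3 × 3 depthwise and a 1 × 1 pointwise convolution, for a hypothetical 128-channel map upsampled from 40 × 40 to 80 × 80; the kernel sizes, widths, and nearest-neighbor choice are assumptions, and biases and normalization layers are ignored.

```python
from typing import Tuple


def transposed_conv_cost(c_in: int, c_out: int, k: int, h_out: int, w_out: int) -> Tuple[int, int]:
    """(params, MACs) of a stride-2 k x k transposed convolution, bias ignored.

    Each input pixel is scattered through a k x k x c_out kernel per input
    channel, and the input grid is (h_out / 2) x (w_out / 2).
    """
    params = k * k * c_in * c_out
    macs = params * (h_out // 2) * (w_out // 2)
    return params, macs


def upsample_dw_pw_cost(c_in: int, c_out: int, k: int, h_out: int, w_out: int) -> Tuple[int, int]:
    """(params, MACs) of x2 nearest upsampling + k x k depthwise + 1 x 1 pointwise.

    Upsampling has no weights; both convolutions run at the output resolution.
    """
    params = k * k * c_in + c_in * c_out
    macs = params * h_out * w_out
    return params, macs


# Hypothetical setting: 128 channels, 3 x 3 kernels, 80 x 80 output.
t_params, t_macs = transposed_conv_cost(128, 128, 3, 80, 80)
h_params, h_macs = upsample_dw_pw_cost(128, 128, 3, 80, 80)
print(f"params: {t_params} vs {h_params} ({t_params / h_params:.1f}x fewer)")
print(f"MACs:   {t_macs} vs {h_macs} ({t_macs / h_macs:.1f}x fewer)")
```

With these numbers the upsample-plus-separable path needs about 8.4 times fewer parameters (17,536 vs 147,456) but only about 2.1 times fewer multiply-accumulates (112.2 M vs 235.9 M), because its convolutions run at the upsampled resolution while the transposed convolution works on the smaller input grid. The actual savings in MAS-HENet depend on its kernel sizes and channel widths.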

4. Experiments

4.1. Dataset

4.1.1. Self-Built Dataset

To evaluate MAS-HENet in industrial safety monitoring scenarios, a comprehensive image dataset was constructed to reflect the complexity and diversity of real factory environments. Data were collected from multiple sources to ensure coverage of varied perspectives, lighting conditions, and object states:

On-site data acquisition: Factory scenes were captured using two types of network cameras. One camera focused on wide-area monitoring, providing full-scene coverage for general surveillance, while the other was positioned to capture detailed views of regions with high-risk activities, enabling precise detection of small-scale objects. This multi-camera setup ensures that the dataset contains both contextual and fine-grained visual information critical for multi-object detection in industrial settings.

Supplementary sources: To further enhance the diversity and representativeness of the dataset, additional images were collected from publicly available datasets and internet resources. This combination helps cover scenarios not easily captured on-site, such as rare safety violations or challenging lighting conditions.

The dataset includes five target categories relevant to factory safety: helmet, no helmet, phone usage, smoking, and fire sources. These categories were selected based on their importance in industrial risk assessment and compliance monitoring. The dataset was divided into training, validation, and test sets with 3000, 800, and 400 images, respectively. To improve model generalization and robustness, data augmentation techniques [36] were applied to the training set, including geometric transformations (rotation, flipping, scaling), color jittering, and random cropping, resulting in an augmented training set of 8000 images.
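Geometric augmentations must transform the box labels together with the pixels. As a minimal sketch, assuming labels are stored in the normalized YOLO text format (one of LabelImg's export formats) as `(class_id, cx, cy, w, h)`, a horizontal flip only mirrors the x-center; the helper name and example box are hypothetical.

```python
from typing import List, Tuple

# (class_id, cx, cy, w, h) with coordinates normalized to [0, 1] (assumed format)
Box = Tuple[int, float, float, float, float]


def hflip_boxes(boxes: List[Box]) -> List[Box]:
    """Labels for an image mirrored left-right: only the x-center changes."""
    return [(cls, 1.0 - cx, cy, w, h) for cls, cx, cy, w, h in boxes]


labels = [(0, 0.25, 0.40, 0.10, 0.20)]  # hypothetical box of class 0
print(hflip_boxes(labels))  # [(0, 0.75, 0.4, 0.1, 0.2)]
```

Color jittering leaves the labels unchanged, whereas rotation, scaling, and cropping additionally require recomputing box extents and clipping them to the image.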

All images were manually annotated using the LabelImg tool following a standardized protocol. Each image was labeled by one annotator and independently verified by another to ensure annotation accuracy and consistency. Ambiguous cases were resolved through consensus review. This process is intended to ensure high-quality and reproducible labeling for all categories.

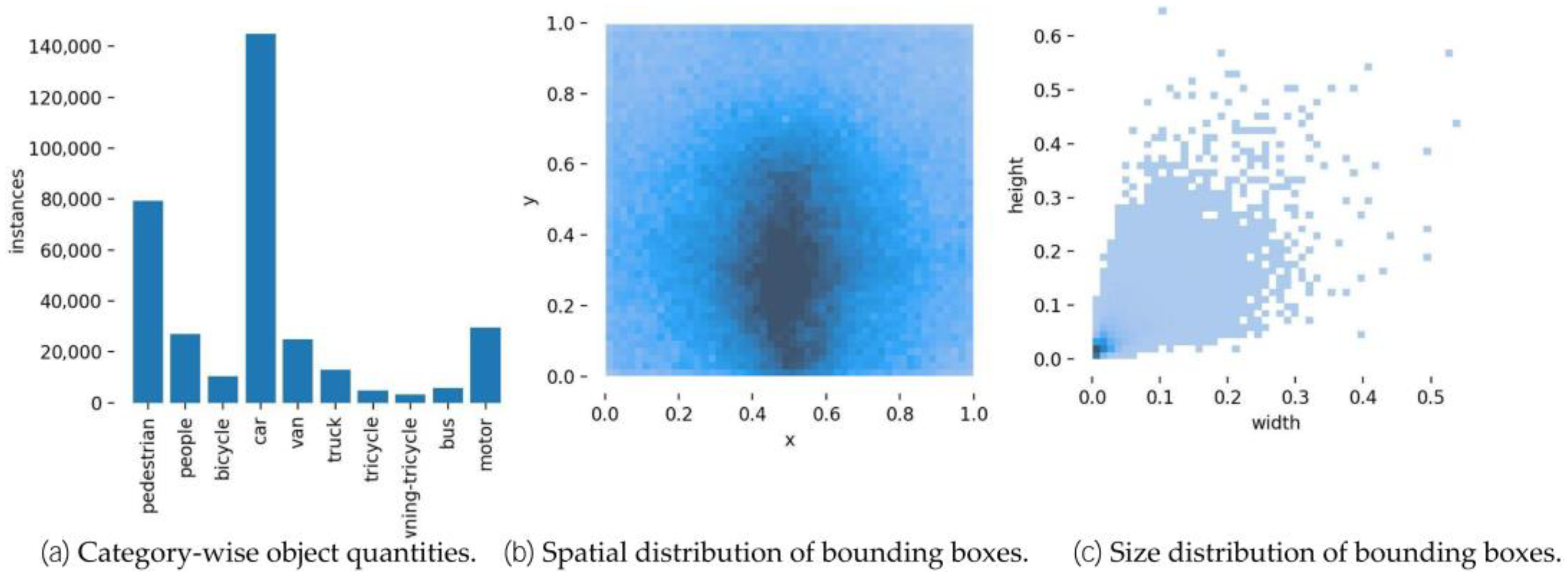

A comprehensive statistical analysis was conducted to investigate the distribution characteristics of the annotated objects within the dataset. Table 1 summarizes the quantity of each category in the augmented training set, reflecting the overall class balance and diversity. To further understand the spatial and scale-related properties of the dataset, detailed visual analyses were performed, as shown in Figure 5.

Specifically, Figure 5a illustrates the approximate quantity of each object category, providing a global view of class proportions. Figure 5b presents the spatial distribution of labeled bounding boxes across the image plane, revealing that most targets are sparsely distributed rather than clustered within limited regions. Figure 5c depicts the size distribution of bounding boxes, demonstrating that the majority of objects are relatively small in scale. This combination of small-sized and spatially dispersed targets poses considerable challenges for conventional detection models, as it requires fine-grained feature extraction and robust localization under limited contextual cues.
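One common way to quantify "relatively small" objects is the COCO size convention, which calls an object small below 32² pixels of area, medium below 96², and large otherwise. The sketch below applies these thresholds to normalized box widths and heights; using COCO's buckets, and approximating COCO's annotation area by box area, is an assumption and not the analysis behind Figure 5c.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

SMALL_MAX = 32 * 32   # COCO: area < 32^2 px is "small"
MEDIUM_MAX = 96 * 96  # COCO: 32^2 <= area < 96^2 px is "medium", else "large"


def size_bucket(w_px: float, h_px: float) -> str:
    area = w_px * h_px  # box area as a stand-in for COCO's annotation area
    if area < SMALL_MAX:
        return "small"
    if area < MEDIUM_MAX:
        return "medium"
    return "large"


def size_histogram(boxes: Iterable[Tuple[float, float]], img_w: int, img_h: int) -> Dict[str, int]:
    """Count boxes per size bucket; each box is (w, h) normalized to [0, 1]."""
    return dict(Counter(size_bucket(w * img_w, h * img_h) for w, h in boxes))


# Hypothetical normalized box sizes on a 640 x 640 image.
print(size_histogram([(0.03, 0.04), (0.1, 0.1), (0.3, 0.5)], 640, 640))
```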

Finally,

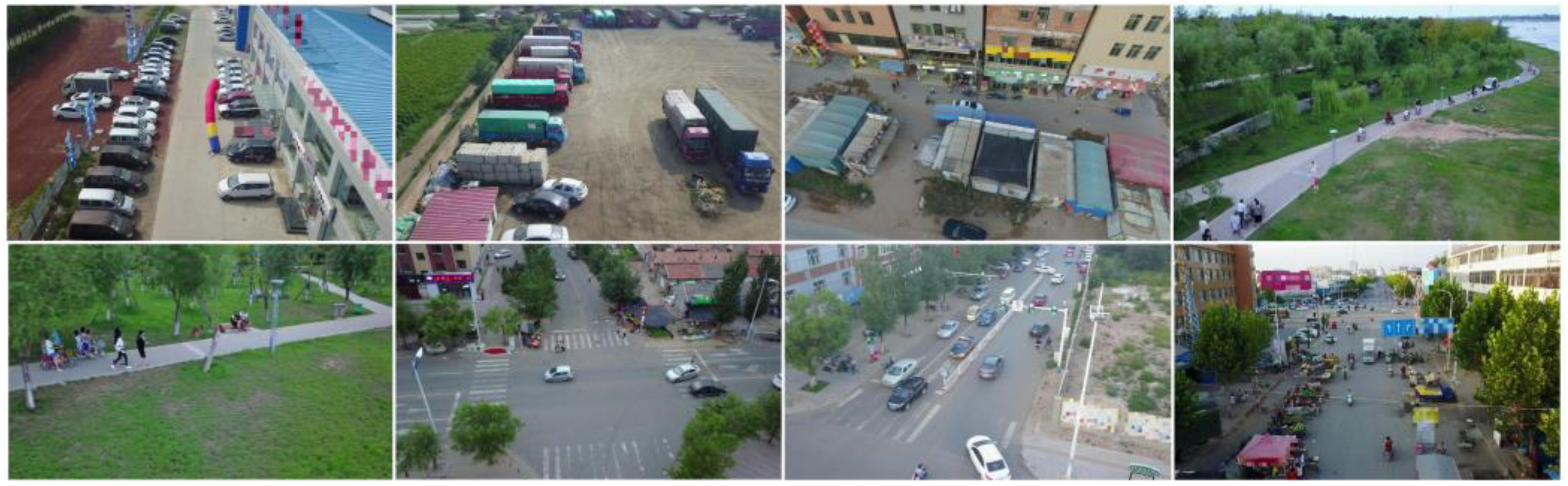

Figure 6 presents several samples from the self-built dataset, showcasing different factory environments, object categories, and behavioral states. These examples visually demonstrate the diversity and realism of the dataset, providing a solid foundation for evaluating the proposed MAS-HENet model in complex industrial monitoring scenarios.

4.1.2. Public Dataset: VisDrone2019

To further validate the generalization capability of the proposed MAS-HENet, experiments were conducted on the publicly available VisDrone2019 dataset [37]. This dataset serves as one of the most authoritative benchmarks for evaluating object detection algorithms in complex outdoor environments. It was collected by unmanned aerial vehicles (UAVs) operating over various urban and suburban areas, encompassing streets, residential districts, parking lots, and industrial zones. The captured scenes cover a wide range of viewing angles, altitudes, and illumination conditions, which collectively reflect the diverse visual characteristics encountered in real-world applications.

The VisDrone2019 dataset consists of ten representative object categories commonly observed in aerial imagery, including pedestrian, people, bicycle, car, van, truck, tricycle, awning-tricycle, bus, and motor. These categories exhibit significant variations in appearance, scale, and occlusion, making the dataset a comprehensive benchmark for multi-class and multi-scale object detection. The official split comprises 6471 training images, 548 validation images, and 1580 testing images, each annotated with bounding boxes and corresponding category labels.

A detailed statistical analysis of category distribution was performed to examine the dataset’s balance and structural diversity. As shown in Table 2, small and frequently occurring targets such as pedestrians and cars dominate the dataset, whereas larger and less common targets such as buses and trucks appear less frequently. This long-tail distribution poses a considerable challenge for detection models, which must maintain robust performance across both frequent and rare object classes.

To further characterize the dataset, Figure 7 illustrates the spatial and scale distribution of annotated objects. The analysis reveals that most targets occupy less than 2% of the total image area and are sparsely distributed across the scene rather than densely aggregated. Such small-scale and scattered targets increase the difficulty of accurate localization and classification, especially for lightweight detection networks. Representative samples from the dataset are shown in Figure 8, demonstrating its wide variation in camera viewpoints, environmental conditions, and object arrangements.
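As a rough worked example, assume an image is resized to the 640 × 640 input resolution used in this study with no letterbox padding (a simplification, since VisDrone2019 frames are not square). A square box covering 2% of the image area then has a side length of about 90 pixels:

```latex
s = \sqrt{0.02 \times 640 \times 640} = 640\sqrt{0.02} \approx 90.5\ \text{px}
```

Under this assumption, most annotated objects are no larger than roughly 90 × 90 pixels at the training resolution, or about 11 cells on a side of a stride-8 P3 grid in a conventional YOLOv8 layout.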

In summary, the VisDrone2019 dataset provides a challenging and diverse evaluation platform that complements the self-built industrial dataset. Its inclusion ensures a more rigorous and comprehensive assessment of the proposed MAS-HENet model’s generalization performance under different visual domains and scene complexities.

4.2. Experimental Environment and Parameter Settings

To ensure the reliability and reproducibility of the experiments, all model training and testing in this study were conducted in a Linux-based operating system environment. The hardware platform was equipped with an NVIDIA GeForce RTX 4090 GPU (24 GB VRAM) and an AMD EPYC 7352 CPU, providing sufficient computational capacity for large-scale model optimization.

The software environment adopted Python 3.10.15 as the programming language, with PyTorch 2.3.0 serving as the primary deep learning framework and CUDA 12.1 for GPU acceleration. All experiments were implemented under a unified environment to ensure consistency in training and evaluation.

The main hyperparameter settings used during model training are summarized in Table 3. The input image resolution was fixed at 640 × 640, and the batch size was set to 32 to balance convergence stability and GPU memory utilization. The initial learning rate was set to 0.01, and the SGD (Stochastic Gradient Descent) optimizer was employed with a momentum coefficient of 0.937. The models were trained for 150 epochs, providing sufficient iterations for convergence. These settings were determined empirically to balance detection performance and training efficiency.

In addition to the parameters summarized in Table 3, the training loss consisted of three components: Complete IoU (CIoU) loss for bounding box regression, Binary Cross-Entropy (BCE) loss for classification, and Distribution Focal Loss (DFL) for localization refinement, with loss weights of 7.5, 0.5, and 1.5, respectively. The learning rate followed a cosine decay schedule from an initial value of 0.01 to 0.0001 over 150 epochs. A 3-epoch warm-up phase was applied to progressively ramp up the learning rate and momentum, ensuring stable convergence.
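The CIoU regression term combines overlap, center distance, and aspect-ratio consistency. A scalar sketch for one predicted and one ground-truth box in (x1, y1, x2, y2) form follows, together with the weighted sum using the 7.5/0.5/1.5 weights stated above; the `eps` stabilizer and function names are illustrative choices, and training code evaluates these terms on batched tensors for all positive samples.

```python
import math
from typing import Tuple

BoxXYXY = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def ciou_loss(pred: BoxXYXY, gt: BoxXYXY, eps: float = 1e-7) -> float:
    """Complete-IoU loss, 1 - CIoU, for a single box pair."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt
    pw, ph = px2 - px1, py2 - py1
    gw, gh = gx2 - gx1, gy2 - gy1

    # Overlap term: intersection over union.
    iw = max(0.0, min(px2, gx2) - max(px1, gx1))
    ih = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = iw * ih
    iou = inter / (pw * ph + gw * gh - inter + eps)

    # Distance term: squared center distance over squared enclosing-box diagonal.
    cw = max(px2, gx2) - min(px1, gx1)
    ch = max(py2, gy2) - min(py1, gy1)
    c2 = cw ** 2 + ch ** 2 + eps
    rho2 = ((px1 + px2 - gx1 - gx2) ** 2 + (py1 + py2 - gy1 - gy2) ** 2) / 4

    # Aspect-ratio consistency term and its trade-off weight alpha.
    v = (4 / math.pi ** 2) * (math.atan(gw / (gh + eps)) - math.atan(pw / (ph + eps))) ** 2
    alpha = v / (1 - iou + v + eps)

    return 1.0 - (iou - rho2 / c2 - alpha * v)


BOX_W, CLS_W, DFL_W = 7.5, 0.5, 1.5  # loss weights stated in the text


def total_loss(l_box: float, l_cls: float, l_dfl: float) -> float:
    """Weighted sum of the box (CIoU), classification (BCE), and DFL terms."""
    return BOX_W * l_box + CLS_W * l_cls + DFL_W * l_dfl


print(ciou_loss((0, 0, 1, 1), (2, 0, 3, 1)))  # disjoint boxes: loss above 1
```

Unlike plain IoU loss, the distance term still provides a gradient when boxes do not overlap, which is why the disjoint pair above has a loss greater than 1 rather than a flat value.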

To support experimental reproducibility, the random seed was fixed at 0 for all training runs. The initial and final learning rates, momentum, warm-up length, loss weights, and seed match the Ultralytics YOLO default settings, so the reported configuration can be reproduced transparently.
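The cosine decay with a 3-epoch warm-up described above can be sketched as a function of (fractional) epoch. This is a generic formulation under stated assumptions: the warm-up is modeled as a linear factor from 0 to 1 multiplying the cosine schedule, whereas Ultralytics' own warm-up also ramps momentum and uses a separate bias learning rate, so early-epoch values differ in detail.

```python
import math


def cosine_lr(t: float, lr0: float = 0.01, lr_final: float = 1e-4,
              total_epochs: int = 150, warmup_epochs: float = 3.0) -> float:
    """Learning rate at (possibly fractional) epoch t in [0, total_epochs].

    Cosine decay from lr0 to lr_final, multiplied by a linear warm-up factor
    during the first warmup_epochs. Generic sketch, not the Ultralytics code.
    """
    cos_part = lr_final + (lr0 - lr_final) * (1 + math.cos(math.pi * t / total_epochs)) / 2
    warmup = min(1.0, t / warmup_epochs) if warmup_epochs > 0 else 1.0
    return cos_part * warmup


for epoch in (0.5, 3, 75, 150):
    print(f"epoch {epoch:>5}: lr = {cosine_lr(epoch):.6f}")
```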

4.3. Evaluation Metrics

To comprehensively evaluate the performance of the proposed MAS-HENet model, multiple quantitative metrics are employed from both efficiency and accuracy perspectives.

From the perspective of model efficiency, the number of parameters (Params) is used to measure the structural complexity of the network, while the floating-point operations (GFLOPs) quantify the computational cost required for inference. These indicators together reflect the balance between the model’s accuracy and computational efficiency.

In terms of detection accuracy, the mean Average Precision at an IoU threshold of 0.5 (mAP@50) is adopted as the primary evaluation metric, and mAP@50:95, the mean of mAP over IoU thresholds from 0.50 to 0.95 in steps of 0.05, is also reported. Additionally, Precision (P) and Recall (R) are introduced to jointly assess the model’s discrimination capability between positive and negative samples, thereby evaluating the overall detection performance.

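The specific definitions and computation formulas of these metrics are as follows:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}$$

$$AP = \int_{0}^{1} P(R)\, dR, \qquad mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i$$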

where TP (True Positive) denotes the number of correctly predicted positive samples, FP (False Positive) represents the number of negative samples incorrectly predicted as positive, and FN (False Negative) refers to the number of positive samples misclassified as negative. N denotes the total number of categories.
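Average precision is usually evaluated numerically from ranked detections. The sketch below computes P and R from counts, a per-class AP using all-point interpolation of the precision envelope, and mAP as the mean over classes. It assumes each detection has already been matched to ground truth at IoU 0.5 (for mAP@50); detection toolkits differ slightly in interpolation (for example, 101-point sampling), so values may not match a given library exactly.

```python
from typing import List, Sequence, Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Precision = TP / (TP + FP), Recall = TP / (TP + FN)."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return p, r


def average_precision(scores: Sequence[float], is_tp: Sequence[bool], num_gt: int) -> float:
    """AP for one class from detections already matched to ground truth.

    Detections are ranked by confidence; the precision curve is replaced by its
    monotone envelope (all-point interpolation) and integrated over recall.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp_cum = fp_cum = 0
    recalls, precisions = [0.0], [1.0]
    for i in order:
        if is_tp[i]:
            tp_cum += 1
        else:
            fp_cum += 1
        recalls.append(tp_cum / num_gt)
        precisions.append(tp_cum / (tp_cum + fp_cum))
    # Envelope: precision at recall r is the best precision at any recall >= r.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Sum rectangle areas wherever recall increases.
    return sum((recalls[k + 1] - recalls[k]) * precisions[k + 1]
               for k in range(len(recalls) - 1))


def mean_ap(per_class_ap: List[float]) -> float:
    """mAP = (1 / N) * sum of per-class AP over N categories."""
    return sum(per_class_ap) / len(per_class_ap)


# Hypothetical class with 2 ground-truth objects and 3 ranked detections.
ap = average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2)
print(f"AP = {ap:.4f}, mAP over two classes = {mean_ap([ap, 1.0]):.4f}")
```

Repeating the matching at IoU thresholds 0.50, 0.55, ..., 0.95 and averaging the resulting mAP values gives mAP@50:95.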

These metrics together provide a comprehensive evaluation of the model’s performance in terms of detection accuracy, balance between precision and recall, and computational efficiency.

4.4. Results

4.4.1. Ablation Experiments

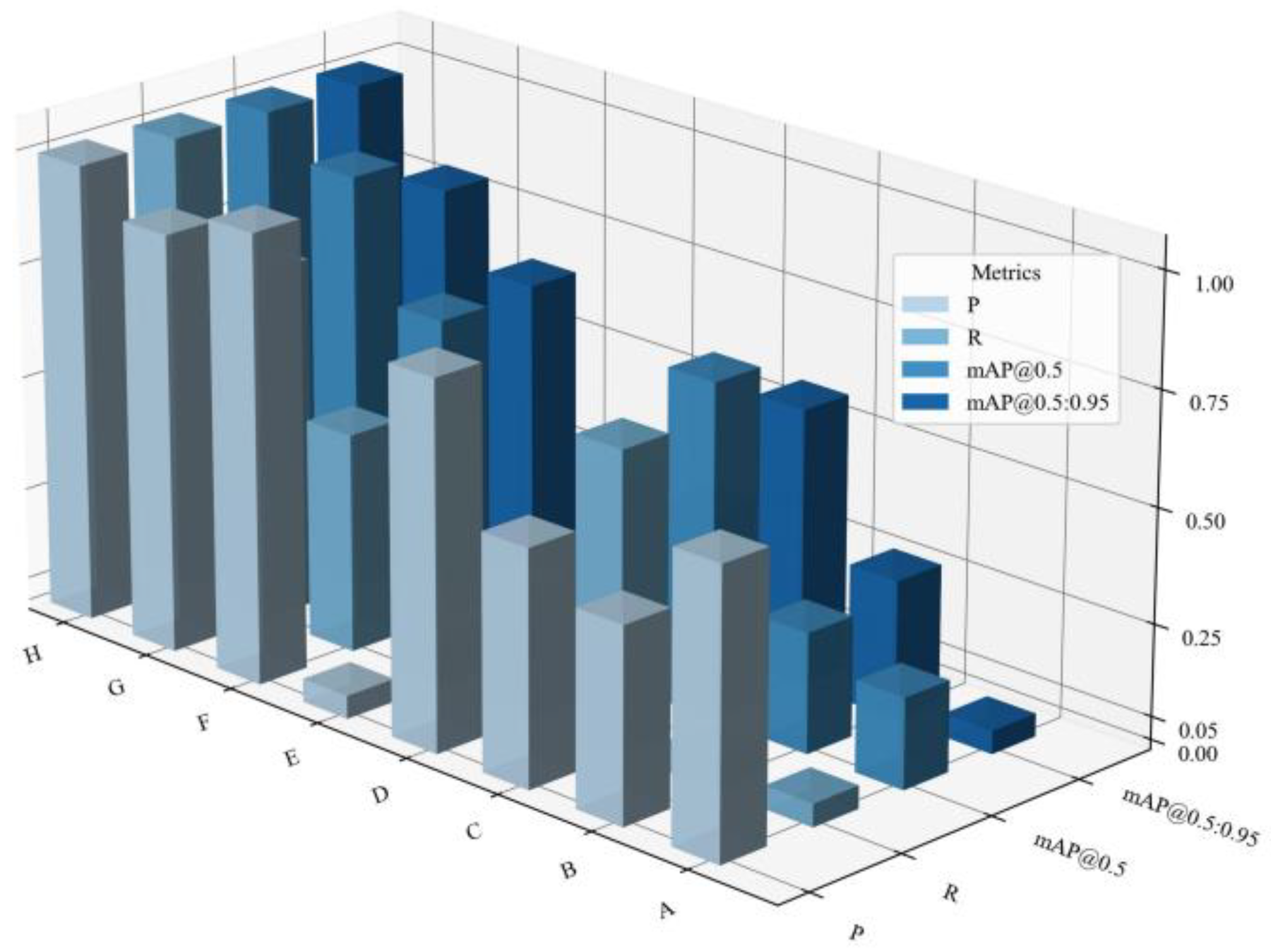

To evaluate the individual and combined contributions of the proposed modules, a series of ablation experiments was conducted based on the YOLOv8n baseline. The MAStar module, the P2 small-object detection layer, the Lightweight Shared Convolutional Detection head (LSCD), and the High-Efficiency Up-Convolution (HEUC) module were progressively integrated. The configurations and performance results of all model variants are summarized in Table 4.

As observed, each proposed module contributes to performance improvement from a different aspect. The introduction of the MAStar module alone increased mAP@50 modestly, from 79.5% to 79.8%, which is consistent with improved feature reuse and gradient propagation. When the P2 layer was incorporated, the model achieved a substantial gain, with mAP@50 reaching 81.7% and Recall increasing to 77.5%, demonstrating the importance of shallow fine-grained features for detecting small targets. After integrating the LSCD detection head, the number of parameters was reduced from 3.40 M to 2.89 M (about 15%), while mAP@50 improved to 82.9%. This indicates that the shared convolution mechanism can reduce parameter redundancy without compromising accuracy. Finally, with the addition of the HEUC module, the model achieved the best performance among the tested configurations, reaching 83.3% mAP@50, 58.0% mAP@50:95, and Precision and Recall of 86.3% and 79.3%, respectively. These results indicate that HEUC contributes to improved spatial reconstruction and multi-scale feature fusion.

Overall, the four modules complement each other in different aspects: MAStar enhances feature extraction, P2 improves small-object perception, LSCD reduces computational cost, and HEUC optimizes feature fusion. Their synergistic integration enables MAS-HENet to maintain an excellent balance between detection accuracy and model efficiency.

To visualize the performance trends, a 3D comparison chart was plotted based on Table 4 (Figure 9). The chart illustrates that all key indicators (Precision, Recall, mAP@50, and mAP@50:95) follow a consistent upward trajectory as the proposed modules are progressively integrated, with the best performance achieved after incorporating HEUC.

The experiments were conducted under controlled conditions using fixed random seeds to ensure reproducibility. Preliminary tests under different initializations showed negligible deviations (<0.3% mAP), indicating that the proposed MAS-HENet is stable and robust across runs.
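Fixing random seeds for a PyTorch-based training run typically means seeding every random number generator involved. The helper below is a minimal sketch under that assumption. The function name `set_global_seed` is hypothetical and is not taken from the authors' code. NumPy and PyTorch are seeded only if they are installed.

```python
import os
import random


def set_global_seed(seed: int) -> None:
    """Seed the RNGs commonly used in a PyTorch training run (sketch)."""
    # Only affects subprocesses started after this call, not the current interpreter.
    os.environ["PYTHONHASHSEED"] = str(seed)
    random.seed(seed)
    try:
        import numpy as np
        np.random.seed(seed)
    except ImportError:
        pass
    try:
        import torch
        torch.manual_seed(seed)
        torch.cuda.manual_seed_all(seed)  # safe to call even without a GPU
        # Prefer deterministic cuDNN kernels over autotuned ones.
        torch.backends.cudnn.deterministic = True
        torch.backends.cudnn.benchmark = False
    except ImportError:
        pass
```

With the Ultralytics trainer, the `seed` and `deterministic` training arguments serve a similar purpose. Fully deterministic GPU results may still require further settings, and deterministic kernels can be slower.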

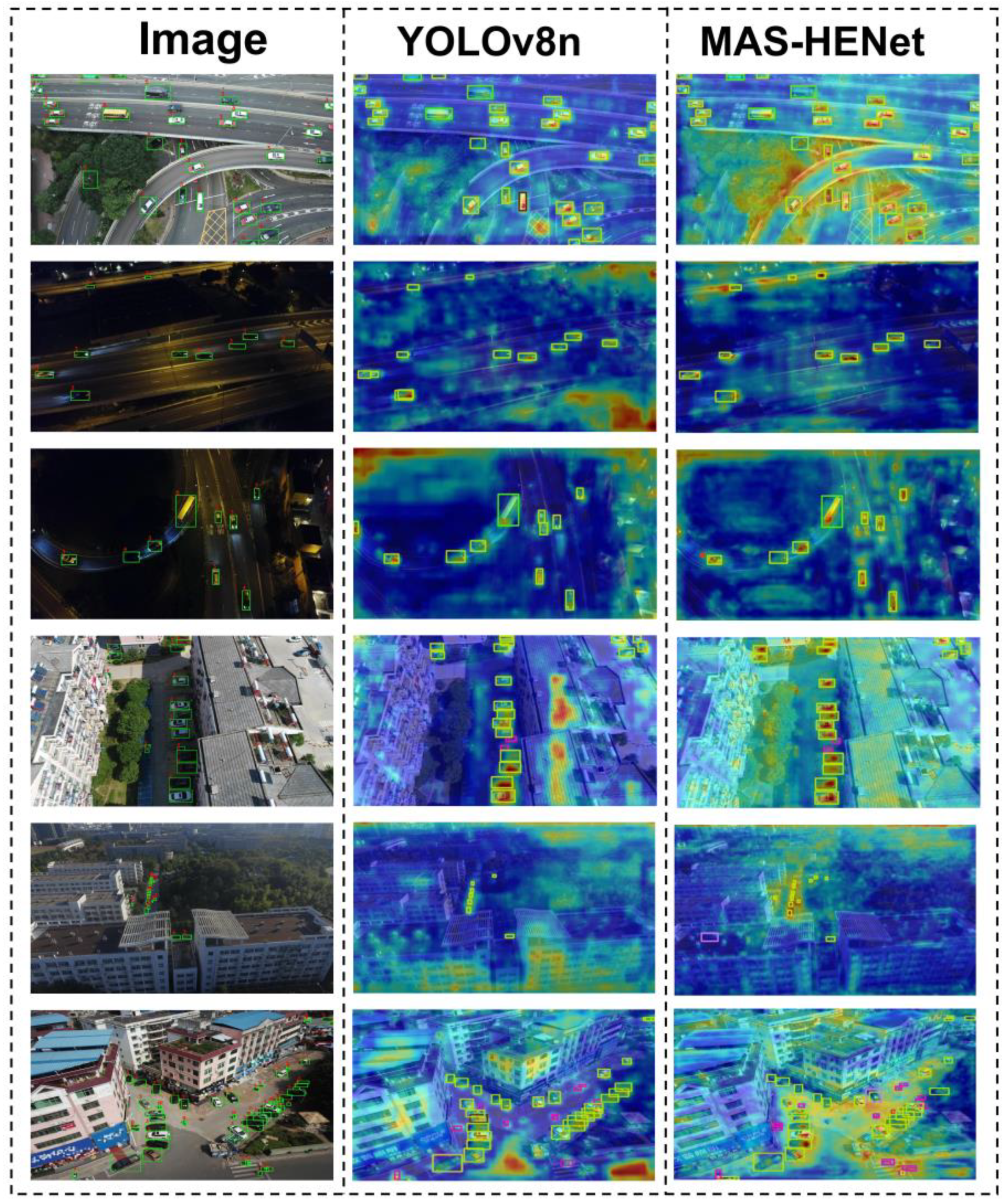

Furthermore, the heatmap comparison between the baseline YOLOv8n and the proposed MAS-HENet (Figure 10) shows that MAS-HENet produces stronger activation responses across all categories, particularly for small and complex targets, which is consistent with improved feature discrimination.

In summary, the ablation experiments and visual analyses confirm the effectiveness of the proposed modules. MAS-HENet delivers strong detection performance while maintaining a lightweight design, supporting its robustness and adaptability in complex industrial detection scenarios.

4.4.2. Comparative Experiments

To comprehensively evaluate the detection performance and efficiency of the proposed MAS-HENet model, a series of comparative experiments was conducted against several representative lightweight models from the YOLO family, including YOLOv5n [38], YOLOv8s [12], YOLOv10n [39], YOLOv11n [40], and YOLOv12n [41]. The experimental results are summarized in Table 5.

As presented in Table 5, the proposed MAS-HENet achieves the highest accuracy among all compared models, with a mAP@50 of 83.3% and a mAP@50:95 of 58.0%, outperforming YOLOv8s and YOLOv12n by 1.0 and 3.4 percentage points, respectively. Its precision (P) and recall (R) reach 86.3% and 79.3%, which are 3.6 and 5.3 percentage points higher than those of YOLOv12n, respectively. These results indicate that MAS-HENet improves both detection accuracy and recall; the recall gain in particular is consistent with the design focus on small and occluded targets.

In terms of efficiency, MAS-HENet maintains a compact architecture with only 2.98 M parameters, approximately one-quarter the size of YOLOv8s, and a computational cost of 13.5 GFLOPs, less than half that of YOLOv8s (28.4 GFLOPs). This indicates that MAS-HENet achieves a favorable trade-off between lightweight efficiency and detection performance, supporting the effectiveness of its structural design.
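The comparisons in the two paragraphs above reduce to simple arithmetic. The sketch below recomputes them from the figures quoted in the text. The YOLOv12n precision and recall are implied by the stated gaps rather than read from Table 5, and the F1 score (harmonic mean of P and R) is used here only as an illustrative single-number summary of the precision-recall balance.

```python
def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2 * p * r / (p + r) if p + r else 0.0


# Values quoted in the text (percent / GFLOPs).
mas_henet = {"P": 86.3, "R": 79.3, "GFLOPs": 13.5}
yolov8s_gflops = 28.4
# YOLOv12n P and R implied by the stated gaps of 3.6 and 5.3 points.
yolov12n = {"P": mas_henet["P"] - 3.6, "R": mas_henet["R"] - 5.3}

flops_ratio = mas_henet["GFLOPs"] / yolov8s_gflops

if __name__ == "__main__":
    print(f"implied YOLOv12n P={yolov12n['P']:.1f}, R={yolov12n['R']:.1f}")
    print(f"F1: MAS-HENet {f1_score(mas_henet['P'], mas_henet['R']):.1f}, "
          f"YOLOv12n {f1_score(yolov12n['P'], yolov12n['R']):.1f}")
    print(f"GFLOPs relative to YOLOv8s: {flops_ratio:.2f}")
```

The implied YOLOv12n values are 82.7% P and 74.0% R, and the FLOPs ratio of about 0.48 is consistent with the "less than half" statement.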

To provide an intuitive view of the comparative results, a 3D performance comparison chart based on Table 5 is presented in Figure 11. The horizontal axis represents the model versions, the categories along the second axis correspond to the key performance indicators (P, R, mAP@50, mAP@50:95), and the height of each bar indicates the normalized performance value. As shown in Figure 11, MAS-HENet achieves the highest score on every metric, with the clearest margins in mAP@50:95 and recall, highlighting its robustness and multi-scale detection capability.

In summary, the comparative experiments show that MAS-HENet achieves a better balance between accuracy, recall, and computational efficiency than the compared lightweight YOLO variants. The combination of the MAStar feature aggregation module, the LSCD detection head, and the HEUC upsampling design enables the model to deliver the best accuracy in this comparison while maintaining efficient inference, making it well suited for real-time industrial safety detection tasks.

4.4.3. Generalization Experiments

To further evaluate the generalization capability of the proposed MAS-HENet model in diverse and complex real-world environments, experiments were conducted on the VisDrone2019 public UAV vision dataset. This dataset, captured by drones across various urban and suburban scenes, contains multiple object categories such as pedestrians, vehicles, bicycles, and tricycles. With its high target density, frequent occlusion, large scale variation, and diverse viewpoints, VisDrone2019 poses significant challenges to the robustness and transferability of object detection models. The category statistics of the training set are presented in Table 2, and the spatial and size distributions of the targets are illustrated in Figure 7.

During evaluation, MAS-HENet and the baseline YOLOv8n were trained with identical configurations, without additional fine-tuning or domain adaptation, which ensures a fair assessment of the model’s cross-domain performance. The detection results on the VisDrone2019 test set are shown in Table 6.

As shown in Table 6, MAS-HENet improves on the baseline YOLOv8n across all evaluation metrics. Specifically, mAP@50 increases by 6.6 percentage points and mAP@50:95 by 4.5 percentage points, while precision (P) and recall (R) rise by 5.3 and 4.4 percentage points, respectively. These results indicate that MAS-HENet retains strong feature extraction and object recognition capabilities under environmental conditions that differ from the industrial dataset, supporting its generalization and robustness.

To further illustrate the model’s generalization behavior, heatmaps of detection results from YOLOv8n and MAS-HENet are compared in Figure 12. In these heatmaps, the pixel intensity corresponds to the average precision (AP) of each object class, with deeper colors indicating higher detection accuracy.

As shown in Figure 12, YOLOv8n exhibits weak or missing responses for small and densely distributed objects against complex backgrounds, whereas MAS-HENet produces more focused and consistent activation regions, particularly for occluded and small-scale targets. This suggests that the proposed model achieves stronger contextual feature representation and multi-scale fusion, enabling better adaptation to challenging real-world visual environments.

Overall, the experiments on the VisDrone2019 dataset show that MAS-HENet generalizes well across domains, maintaining detection accuracy in unseen aerial scenarios. The results suggest that the model’s structural innovations, including the MAStar, LSCD, and HEUC modules, jointly contribute to more robust feature perception, efficient upsampling, and improved adaptability in diverse environments.

5. Conclusions

In this paper, we proposed MAS-HENet, a lightweight and efficient detection network specifically designed for industrial safety scenarios involving dense, small, and multi-scale targets. The network integrates four major innovations: (1) the MAStar module, which enhances feature aggregation and gradient propagation through mixed attention and residual connections; (2) the P2 detection layer, which improves fine-grained perception for small objects; (3) the Lightweight Shared Convolutional Detection Head (LSCD), which significantly reduces parameter redundancy while maintaining detection precision; and (4) the High-Efficiency Upsampling Convolution (HEUC) module, which refines semantic reconstruction and strengthens feature fusion.

Extensive experiments on our self-constructed industrial dataset demonstrate that MAS-HENet achieves a mAP@50 of 83.3% with only 2.98 M parameters, surpassing multiple state-of-the-art YOLO variants. Ablation studies confirm that each proposed module contributes positively to the overall performance and that their combination effectively balances accuracy and computational efficiency. Comparative experiments further show that MAS-HENet outperforms YOLOv8s and YOLOv12n in both precision and recall, indicating strong adaptability and detection accuracy under complex conditions. Moreover, cross-scene testing on the VisDrone2019 dataset shows a 6.6-percentage-point improvement in mAP@50 over YOLOv8n, demonstrating good generalization capability and robustness.

Although MAS-HENet achieved strong detection performance in various industrial safety scenarios, it still has certain limitations. Most of the dataset used in this study was collected in real factory environments corresponding to our application scenes. However, the fire-related samples were not captured in real factories, because collecting open-flame footage there is not permitted for safety reasons; this may slightly affect the model’s performance when detecting actual fire events. Despite this limitation, MAS-HENet provides a practical and scalable solution for real-time industrial safety monitoring. Its modular design facilitates deployment and can be extended to other visual perception tasks such as UAV-based surveillance, logistics inspection, and intelligent manufacturing. In future work, we plan to expand the dataset with real-world fire and smoke scenes obtained under safe experimental conditions and to explore knowledge distillation and dynamic inference optimization to further improve efficiency and deployment performance on embedded devices.