1. Introduction

The development of autonomous driving technologies has elevated transportation systems to a new level, promising safer and more efficient roadways. Among the various techniques used to enable autonomous vehicles (AVs), imitation learning has emerged as a promising approach due to its ability to learn complex driving behaviors from expert demonstrations [1]. By leveraging large-scale driving datasets and deep neural networks (DNNs), imitation learning has demonstrated remarkable success in training autonomous agents to emulate human driving behaviors [2,3,4,5,6].

Despite its impressive performance, DNN-based imitation learning (DNNIL), often implemented using Convolutional Neural Networks (CNNs) and Generative Adversarial Networks (GANs) [7], inherits critical limitations from DNNs that hinder its widespread adoption in real-world autonomous systems. A prominent drawback is the lack of interpretability in the learned driving policies due to the black-box nature of DNNs [8,9], making the decision-making process of autonomous driving difficult to verify and validate. This lack of interpretability not only limits human ability to diagnose failures or errors but also hampers the trust and acceptance of autonomous systems by society and regulatory bodies [10].

Moreover, the generalizability of DNNIL driving policies remains a concern. Although these models can learn to imitate expert drivers in specific scenarios, adapting the learned policies to unseen situations can be problematic, as DNNIL is limited to behaviors observed during training [11]. Consequently, when there is a mismatch between test and training data distributions [12], the models often exhibit limited knowledge of novel situations. The rigid nature of these policies frequently leads to suboptimal or unsafe behavior when encountering unfamiliar traffic conditions. Finally, although more sample-efficient than reinforcement learning, DNNIL methods still suffer from data inefficiency, requiring millions of state-action pairs for effective learning [13,14]. These limitations call for further research aimed at addressing the transparency, generalizability, and data efficiency challenges of imitation learning.

To improve the transparency and interpretability of imitation learning, explainable AI (XAI) methods [15,16,17] typically follow one of three approaches: white-box (symbolic) models, explainable neural networks, or neurosymbolic frameworks. Among white-box models, Leech [18] proposed a learning framework that combines imitation learning with logical automata to represent problems as compact finite-state machines with human-interpretable logic states. Additionally, Bewley et al. [19] employed decision trees to interpret emulated policies using only their inputs and outputs, while [20] leveraged a hierarchical approach to ensure interpretability. Among explainable neural networks, pixel-wise CNN-based methods, which are often used in computer vision applications, capture high-level features and their implications using heatmaps [21].

Furthermore, neurosymbolic learning methods, which are considered among the most advanced, aim to combine the learning capabilities of DNNs with symbolic reasoning [22,23]. Most neurosymbolic methods employ symbolic, logic-based reasoning and extract domain-specific logical rules using various rule-generation techniques [24,25]. Because they reason over such rules, they are recognized as sample-efficient approaches that exhibit strong generalizability [26].

Each of the three aforementioned approaches has its own advantages and limitations. Neurosymbolic frameworks such as the Differentiable Logic Machine (DLM) [25,27] integrate differentiable reasoning layers into deep neural architectures, enabling end-to-end training. However, they often require large-scale labeled data and may sacrifice full symbolic transparency due to the latent nature of their learned predicates. Pixel-wise CNN-based techniques are commonly applied in visual domains and also need large-scale datasets. In contrast, white-box models can provide explicit logical expressions behind learned behaviors using limited data. Yet, models based on finite-state machines and decision trees often struggle with scalability and problem complexity in challenging tasks.

As a white-box symbolic approach, search-based heuristic methods such as Inductive Logic Programming (ILP) [28,29] have demonstrated the ability to efficiently extract abstract rules from a small number of examples when background knowledge is provided. Unlike finite-state machines, ILP-based approaches can scale better and handle more complex tasks by effectively searching for rules that satisfy the given examples. ILP can be employed to imitate human behavior using a limited number of examples; however, it has not yet been applied in the context of autonomous driving.

In this paper, we propose a novel rule-based imitation learning technique, called SIL, which is the first purely ILP-based imitation learning method for autonomous driving. This method aims to generate explainable driving policies instead of black-box, non-interpretable ones by extracting symbolic first-order rules from human-labeled driving scenarios, using basic background knowledge provided by humans. It addresses the transparency and generalizability challenges associated with current neural network-based imitation learning methods. By extracting abstract logical relationships between states and actions in autonomous highway driving, SIL aims to deliver transparent, interpretable, and adaptive driving policies that enhance the safety and reliability of autonomous driving systems.

The main contributions of this paper are:

We propose SIL, a logic-based imitation learning method that extracts the underlying logical rules governing human drivers’ actions in various scenarios. This approach enhances the transparency of learned driving policies by inducing human-readable and interpretable rules that capture essential aspects of safety, legality, and smoothness in driving behavior. Furthermore, SIL improves the generalizability of these policies, enabling AVs to handle diverse and challenging driving conditions.

We compare SIL with state-of-the-art neural-network-based imitation learning methods using the real-world HighD and NGSIM datasets, demonstrating that a symbolic approach can outperform neural methods even when trained on only a small number of synthetic scenarios labeled as examples for each action.

The remainder of the paper is organized as follows. Section 2 introduces the prerequisites of the method, and Section 3 describes the proposed approach in general. Section 4 presents the simulation environment and experimental results, while Section 5 discusses and evaluates the outcomes. Finally, Section 6 concludes the paper.

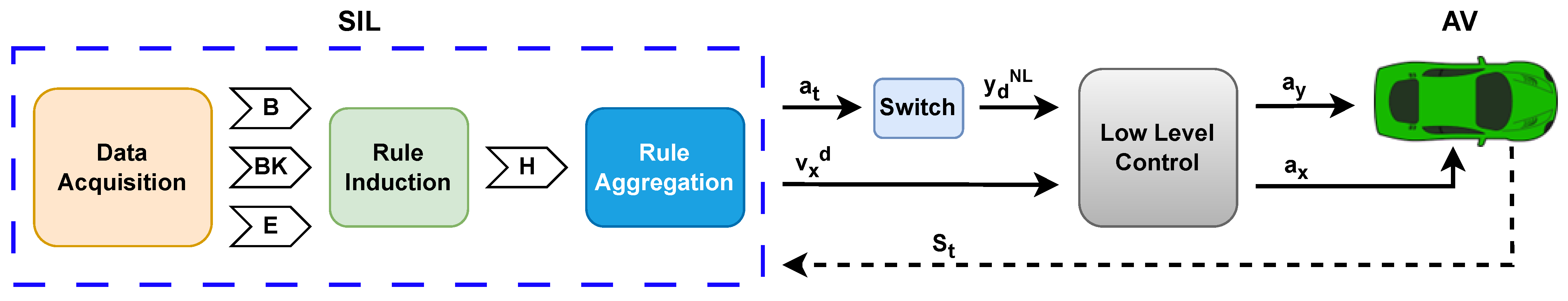

3. Methodology: Symbolic Imitation Learning

The Symbolic Imitation Learning (SIL) framework introduces a novel approach that leverages ILP to extract symbolic policies from human-generated background knowledge. The core objective of this framework is to replicate human behaviors by uncovering explicit rules that govern complex actions demonstrated by humans. As illustrated in Figure 1, SIL comprises three main components: knowledge acquisition, rule induction, and rule aggregation.

In the knowledge acquisition phase, essential inputs are provided based on prior knowledge about the environment. These include the language bias set, the background knowledge, and the set of examples (refer to Algorithm 1), which together enable the ILP system to induce a single rule during the rule induction phase. To construct a complete policy, this process is repeated for every required rule, progressively assembling the induced rules into the hypothesis set. The rule aggregation component then uses and refines these rules to infer the desired actions. Since the logical rules are derived directly from human demonstrations, the resulting actions are inherently interpretable and human-like. By capitalizing on ILP's ability to infer symbolic rules from structured examples, SIL effectively captures nuanced human behavior, an area where conventional DNNIL methods often face limitations.

One of the key advantages of SIL is its sample efficiency. It can generate a coherent and interpretable set of rules from a relatively small number of expert demonstrations. These rules not only support human-understandable decision-making but also enhance the system’s generalizability. The explicit, symbolic nature of the learned policies enables SIL-based agents to better adapt to previously unseen scenarios, outperforming black-box policies that typically lack both transparency and adaptability.

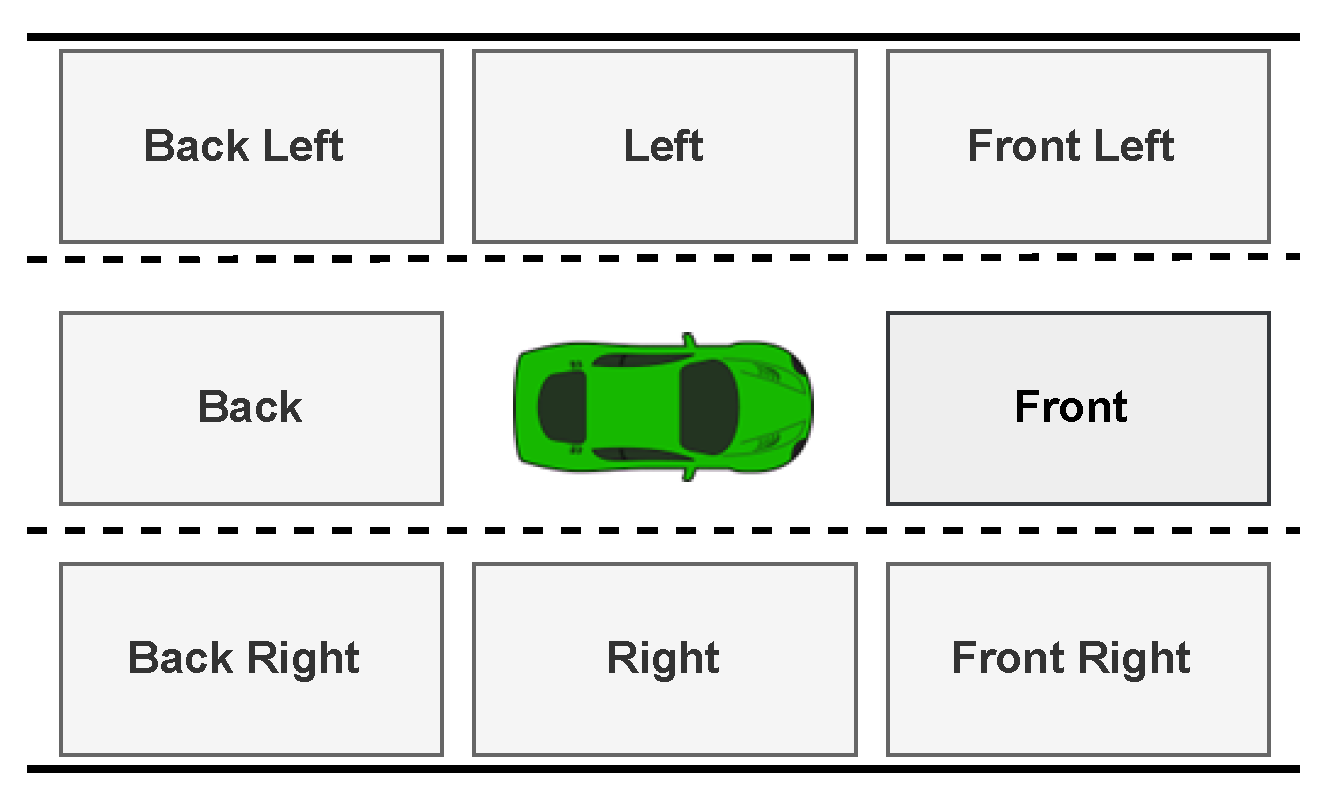

Symbolic Imitation Learning in Autonomous Driving: As a use-case scenario, SIL aims to derive unknown rules for autonomous highway driving. The primary objective of employing SIL in this context is to extract driving rules from human-derived background knowledge and use them to emulate human-like behavior. These behaviors include lane changes and adjustments to the AV's longitudinal velocity, all of which are essential for achieving safe, efficient, and smooth driving. During lane-change decisions, the AV can execute one of three discrete actions: lane keeping (LK), right lane change (RLC), or left lane change (LLC).

Background: Human drivers frequently adjust their lane position and velocity based on the positions and speeds of nearby vehicles, also referred to as target vehicles (TVs). Motivated by this principle, the proposed approach incorporates the relative positions and velocities of TVs surrounding the AV to support informed decision-making regarding lane changes and speed adjustments. To formalize this, the area surrounding the AV is partitioned into eight distinct sectors: front, frontRight, right, backRight, back, backLeft, left, and frontLeft, as shown in Figure 2. Each sector may either be occupied by a vehicle, indicated by the predicate sector_isBusy set to true, or unoccupied, in which case the predicate is set to false. For example, right_isBusy is true if there is a vehicle in the right sector; otherwise, it is set to false. Table A1 in Appendix A.1 shows all the necessary predicates used in this research with their definitions. Additional details on predicate definitions are provided in [30].
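The sector encoding can be illustrated with a minimal Python sketch that turns raw TV positions into the eight sector_isBusy truth values. The lane-offset sign convention, the helper names, and the SIDE_HALF_LENGTH_M boundary between side sectors and front/back sectors are hypothetical choices made for illustration; the sector geometry actually used in this work is the one shown in Figure 2 and described in [30].

```python
from typing import Dict, Iterable, Optional, Tuple

# Hypothetical geometry (not from the paper): a TV whose longitudinal offset
# lies within +/- SIDE_HALF_LENGTH_M of the AV is treated as alongside it.
SIDE_HALF_LENGTH_M = 5.0

SECTORS = ["front", "frontRight", "right", "backRight",
           "back", "backLeft", "left", "frontLeft"]


def sector_of(dx: float, lane_offset: int) -> Optional[str]:
    """Map a TV's position relative to the AV to one of the eight sectors.

    dx: TV longitudinal position minus AV position in metres (positive = ahead).
    lane_offset: TV lane index minus AV lane index; this sketch assumes
    -1 is the adjacent left lane and +1 the adjacent right lane.
    Returns None for TVs outside the adjacent lanes or overlapping the AV.
    """
    if abs(lane_offset) > 1:
        return None
    side = {-1: "Left", 0: "", 1: "Right"}[lane_offset]
    if dx > SIDE_HALF_LENGTH_M:
        return "front" + side        # front, frontLeft, frontRight
    if dx < -SIDE_HALF_LENGTH_M:
        return "back" + side         # back, backLeft, backRight
    return side.lower() or None      # left, right, or None (same lane, overlapping)


def busy_predicates(tvs: Iterable[Tuple[float, int]]) -> Dict[str, bool]:
    """Return the truth value of every sector_isBusy predicate for one state."""
    occupied = {sector_of(dx, lane_offset) for dx, lane_offset in tvs}
    return {f"{s}_isBusy": s in occupied for s in SECTORS}
```

For example, busy_predicates([(20.0, 0), (-10.0, 1)]) marks only front and backRight as busy. A fuller implementation would also bound the front and back sectors by a sensing range, which this sketch omits.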

To capture the relative longitudinal velocities of TVs, we compute the difference between each TV's velocity and the AV's velocity and assign predicates accordingly. Each sector is associated with three predicates reflecting this velocity difference. If the relative velocity is greater than a predefined threshold (or smaller than its negative), the isBigger (or isLower) predicate is used. The threshold ensures that velocity differences are significant rather than negligible and is set to 5 km/h in this context. When the absolute value of the relative velocity is within the threshold, the velocities are considered equal. For example, the predicates frontVel_isBigger, frontVel_isEqual, and frontVel_isLower describe the relative velocity of the TV in the front sector with respect to the AV.
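A minimal sketch of this velocity discretization follows, assuming that isLower applies when the TV is slower than the AV by more than the threshold, so that exactly one of the three predicates is true for any occupied sector. The function name is hypothetical; the 5 km/h threshold and the predicate naming pattern follow the text.

```python
from typing import Dict

THRESHOLD_KMH = 5.0  # significance threshold stated in the text


def velocity_predicates(sector: str, v_tv_kmh: float, v_av_kmh: float,
                        threshold: float = THRESHOLD_KMH) -> Dict[str, bool]:
    """Return the three relative-velocity predicates for one occupied sector.

    Exactly one is true: the TV is faster than the AV by more than the
    threshold, slower by more than the threshold, or within the threshold.
    """
    rel = v_tv_kmh - v_av_kmh
    return {
        f"{sector}Vel_isBigger": rel > threshold,
        f"{sector}Vel_isEqual": abs(rel) <= threshold,
        f"{sector}Vel_isLower": rel < -threshold,
    }
```

With an AV at 110 km/h, a front TV at 100 km/h yields frontVel_isLower, while one at 113 km/h yields frontVel_isEqual.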

In addition, AVs—like human drivers—must remain within valid road sections (i.e., highway lanes) and avoid entering off-road or restricted areas. To represent this contextual constraint, each sector is also labeled as either valid (sector_isValid is set to true) or invalid (sector_isValid is set to false).

3.1. Knowledge Acquisition

Autonomous driving systems require a variety of rules to perform effectively under diverse conditions, and each rule must be learned under a consistent setting with appropriate examples. Therefore, to extract distinct rules, it is essential to define unique configurations and provide corresponding examples for each one. We begin by specifying the scope of each desired rule through the definition of its head predicate and a set of candidate body predicates, all encapsulated within a specific bias set. The selection of candidate body predicates depends on their potential impact on the accuracy of the head predicate. Consequently, body predicates that have no effect on the head are discarded, reducing the dimensionality of the bias set and avoiding excessive computation during the learning process.

To identify the rules, we first categorize the actions into four sets: fatal, risky, and efficient lane-change actions, and smooth longitudinal velocity adjustments. Fatal actions lead to serious accidents or crashes, whereas risky actions are mildly dangerous and might lead to traffic-law violations. Efficient actions lead not only to smooth lane changes but also to a higher level of safety. These categories can be interdependent.

For each action category, we provide human-labeled datasets comprising scenarios in which an ego vehicle is surrounded by varying numbers of TVs. In each scenario, taking a given action is labeled either true or false. For example, in the fatal lane change dataset, if there is a TV in the left sector, taking the LLC action is labeled as fatal. These true/false labels define the positive and negative examples required by ILP systems.

Table 1 lists the number of positive and negative examples in each action category, together with the number of candidate body predicates for the corresponding action. In general, the goal is to extract rules associated with the aforementioned categories to ensure safe and efficient lateral lane changes and longitudinal velocity control.

In each category, the corresponding rule has a unique head predicate, declared in the bias set using Popper's head_pred declaration, and a corresponding set of candidate body predicates. These candidate body predicates include all literals that may influence the head predicate. The ILP system then searches for a combination of body predicates that covers all positive examples while rejecting all negative examples. To enable this, we define multiple possible scenarios using background knowledge and assign a unique identifier to each scenario. Based on expert understanding of the intended action in each scenario, scenarios are labeled either as positive examples using the pos/1 predicate or as negative examples using the neg/1 predicate. This process is repeated for each rule to generate sufficient knowledge-driven data for inducing previously unknown logical rules. As such, each rule induction task requires a specific configuration of bias set, background knowledge, and training examples to support effective learning.
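To make the task configuration concrete, the following hypothetical helper renders one rule-induction task in Popper's usual three-file layout: bias.pl with head_pred/body_pred declarations, bk.pl with background facts, and exs.pl with pos/1 and neg/1 examples. The head predicate name rlc_isFatal and the helper names are illustrative assumptions, not the identifiers used in the released datasets.

```python
from pathlib import Path
from typing import Dict, Iterable, Mapping, Set


def render_popper_task(head: str, body_preds: Iterable[str],
                       scenarios: Mapping[str, Set[str]],
                       labels: Mapping[str, bool]) -> Dict[str, str]:
    """Render one rule-induction task as Popper's bias.pl, bk.pl and exs.pl.

    head: unary head predicate (e.g. the hypothetical 'rlc_isFatal').
    body_preds: candidate unary body predicates, i.e. this rule's bias set.
    scenarios: scenario id -> body predicates that hold in that scenario.
    labels: scenario id -> True for a positive example, False for a negative.
    Predicate names and scenario ids must be valid lowercase Prolog atoms.
    """
    body_preds = list(body_preds)
    bias = [f"head_pred({head},1)."] + [f"body_pred({p},1)." for p in body_preds]
    # Declaring body predicates dynamic lets a predicate with no facts simply
    # fail instead of raising a Prolog existence error.
    bk = [f":- dynamic {p}/1." for p in body_preds]
    bk += [f"{p}({sid})." for sid in sorted(scenarios) for p in sorted(scenarios[sid])]
    exs = [f"{'pos' if labels[sid] else 'neg'}({head}({sid}))." for sid in sorted(labels)]
    return {name: "\n".join(lines) + "\n"
            for name, lines in (("bias.pl", bias), ("bk.pl", bk), ("exs.pl", exs))}


def write_popper_task(task_dir: str, files: Mapping[str, str]) -> Path:
    """Write the rendered files into a task directory and return its path."""
    path = Path(task_dir)
    path.mkdir(parents=True, exist_ok=True)
    for name, text in files.items():
        (path / name).write_text(text)
    return path
```

Keeping one directory per target rule mirrors the per-rule configuration described above; the directory can then be passed to Popper as described in its documentation.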

The knowledge acquisition process generates a variety of real-world scenarios, each labeled by a real human driver. Each state consists of an AV surrounded by eight spatial sections, each of which is categorized as either occupied by a TV or vacant. Accordingly, we include eight sector_isBusy literals in the candidate body predicates—one for each sector surrounding the AV.

Additionally, for each state, we incorporate the relative velocity of every TV with respect to the AV. This adds 24 relative velocity literals (three per sector) to the candidate body predicates. To ensure legal compliance in driving decisions, we also consider the validity of the right and left sections, adding two corresponding literals to represent whether these areas are drivable. Table 1 summarizes the total number of candidate body predicates associated with each head predicate.
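The arithmetic behind the candidate pool (8 occupancy + 24 relative-velocity + 2 validity literals = 34) can be checked with a short sketch that enumerates predicate names following the naming pattern used in the text. The function name is hypothetical, and the per-rule counts in Table 1 may differ from this full pool, because predicates with no effect on a head are discarded and some rules also involve a distance predicate.

```python
from typing import List

SECTORS = ["front", "frontRight", "right", "backRight",
           "back", "backLeft", "left", "frontLeft"]


def candidate_body_predicates() -> List[str]:
    """Full candidate pool: occupancy, relative velocity, and lane validity."""
    busy = [f"{s}_isBusy" for s in SECTORS]                       # 8 literals
    velocity = [f"{s}Vel_{rel}" for s in SECTORS
                for rel in ("isBigger", "isEqual", "isLower")]    # 8 x 3 = 24 literals
    validity = ["right_isValid", "left_isValid"]                  # 2 literals
    return busy + velocity + validity
```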

Once the states are defined, we assign positive or negative labels to the head predicates of the target rules based on expert driving knowledge and the behavioral outcome expected in each scenario. For example, to guide the ILP system in deriving fatal RLC rules, we label states in which taking the RLC action is considered fatal as positive examples; otherwise, such states are labeled as negative examples.

The number of predicates in each bias set, together with the numbers of positive and negative examples, significantly influences the accuracy and robustness of the induced rules. Although negative examples are optional, including them improves the generalizability and resilience of the rules across a wider range of environmental states. As indicated in Table 1, distinct values of these parameters are defined for each rule. (All knowledge-based datasets are available at https://github.com/CAV-Research-Lab/Symbolic-Imitation-Learning/tree/main/data (accessed on 10 November 2025).)

3.2. Rule Induction

After obtaining a sufficient amount of knowledge-driven data for each target rule, we proceed to the rule induction stage of the SIL framework. This stage focuses on learning interpretable rules tailored to autonomous highway driving. The rule extraction process is carried out using Popper, a state-of-the-art ILP system that integrates Answer Set Programming (ASP) [31,32] with Prolog to enhance learning efficiency and accuracy. ASP is a declarative programming paradigm well-suited for solving combinatorial and knowledge-intensive problems by encoding them as logic-based rules and constraints.

One of Popper's main advantages is its ability to learn from failures (LFF). This is achieved through a three-stage process: generating candidate rules by exploring the hypothesis space via ASP (generate stage), evaluating those candidates against the positive and negative examples using the background knowledge in Prolog (test stage), and pruning the hypothesis space based on failed hypotheses (constrain stage) [33].
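The generate-test-constrain loop can be illustrated with a deliberately simplified toy, which is not Popper: it searches only single conjunctive clauses over propositional state predicates, whereas Popper uses ASP to generate multi-clause first-order programs. The function names are hypothetical. A clause that misses a positive example is too specific, so every clause containing it (a specialization) is pruned without being tested.

```python
from itertools import combinations, product
from typing import Dict, FrozenSet, List, Optional, Sequence, Tuple

Literal = Tuple[str, bool]          # (atom, required truth value)
State = Dict[str, bool]


def covers(clause: FrozenSet[Literal], state: State) -> bool:
    """A clause covers a state when every literal agrees with the state."""
    return all(state[atom] == polarity for atom, polarity in clause)


def learn_clause(atoms: Sequence[str], pos: List[State], neg: List[State],
                 max_size: int = 3) -> Optional[FrozenSet[Literal]]:
    literals = [(a, p) for a in atoms for p in (True, False)]
    too_specific: List[FrozenSet[Literal]] = []
    for size in range(max_size + 1):                   # generate, smallest first
        for combo in combinations(literals, size):
            clause = frozenset(combo)
            if len({a for a, _ in clause}) < size:     # skip "a and not a"
                continue
            if any(bad <= clause for bad in too_specific):
                continue                               # constrain: pruned specialization
            misses_pos = not all(covers(clause, s) for s in pos)   # test
            hits_neg = any(covers(clause, s) for s in neg)
            if not misses_pos and not hits_neg:
                return clause
            if misses_pos:
                too_specific.append(clause)
            # A clause covering a negative is too general; its generalizations
            # (subsets) are smaller and were already tested in this ordering.
    return None


def all_states(atoms: Sequence[str]) -> List[State]:
    return [dict(zip(atoms, v)) for v in product([False, True], repeat=len(atoms))]
```

Labeling all eight states over front_isBusy, left_isBusy, and frontLeft_isBusy with the target "front busy, left and frontLeft clear" lets learn_clause recover exactly those three literals.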

Given the dynamic nature of decision-making in autonomous driving, a wide range of rules can be induced for different tasks. However, in this work, we focus on extracting only the essential general rules required for highway driving, specifically in the categories of safety, efficiency, and smoothness.

3.2.1. Safe Lane Changing

This section aims to identify unsafe lane-changing actions from human-labeled scenarios, thereby eliminating them from the action space and ensuring that only safe actions remain. To this end, we consider two datasets containing the following types of unsafe actions: fatal and risky lane-changing actions. The RLC and LLC actions may fall into either category depending on the surrounding traffic conditions. In contrast, LK is generally assumed to be safe, except in specific situations where it may pose potential danger.

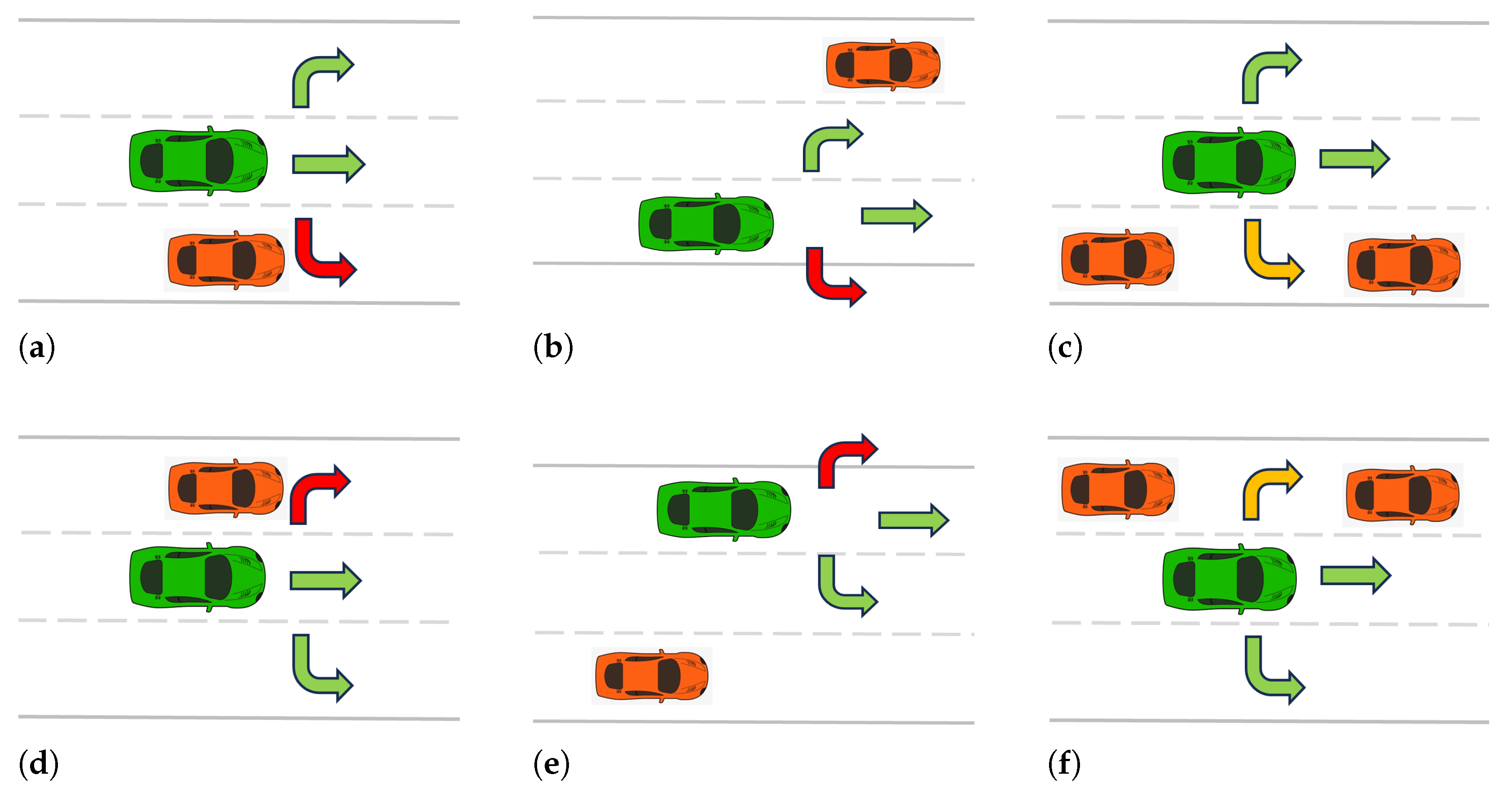

Fatal Lane Changing: The objective here is to uncover previously unknown rules that characterize situations in which executing RLC or LLC would be fatal and could lead to collisions. For instance, four such scenarios are illustrated in

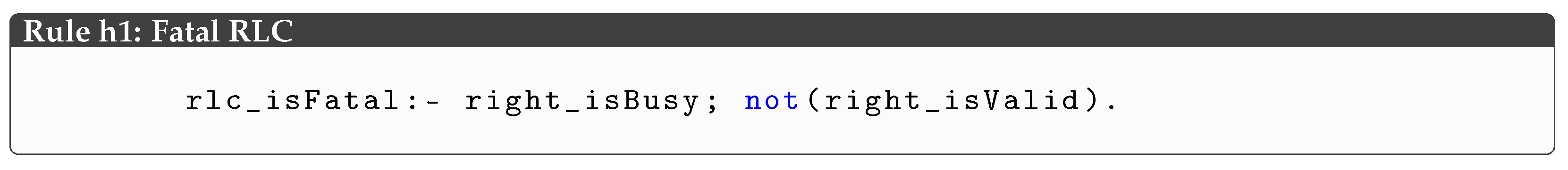

Figure 3—the top-left and top-middle subfigures correspond to fatal RLC examples, while the bottom-left and bottom-middle represent fatal LLC examples. Using ILP, we derive two rules: one for fatal RLC (

h1) and another for fatal LLC (

h2). For example, rule

h1 specifies when taking the RLC action is considered fatal:

![Applsci 15 12464 i001 Applsci 15 12464 i001]()

Here, the comma (,) denotes conjunction, the semicolon (;) represents disjunction, and not(.) indicates negation, following Prolog syntax for first-order rules. Similarly, rule h2 identifies conditions under which executing LLC is fatal:

![Applsci 15 12464 i002 Applsci 15 12464 i002]()

In summary, if a TV is present in the right (or left) section, or if that section is marked as invalid, performing an RLC (or LLC) action is deemed unsafe due to the high risk of collision. Therefore, the AV should avoid these actions in such states.
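Because the rule listings for h1 and h2 are given as images, the following sketch encodes only their textual summary as Python predicates over a state dictionary. The function names are hypothetical, and the exact literals of the induced rules may differ.

```python
from typing import Mapping, Set

State = Mapping[str, bool]


def rlc_is_fatal(s: State) -> bool:
    """Textual summary of h1: right sector occupied, or not a valid lane."""
    return s["right_isBusy"] or not s["right_isValid"]


def llc_is_fatal(s: State) -> bool:
    """Textual summary of h2: left sector occupied, or not a valid lane."""
    return s["left_isBusy"] or not s["left_isValid"]


def fatal_actions(s: State) -> Set[str]:
    """Lane-change actions to remove from the action space in state s."""
    return {a for a, is_fatal in (("RLC", rlc_is_fatal), ("LLC", llc_is_fatal))
            if is_fatal(s)}
```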

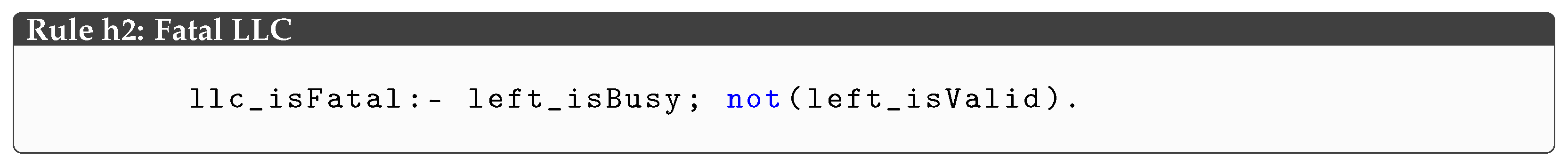

Risky Lane Changing: These actions refer to situations in which the AV selects maneuvers that, while not fatal, may still pose a risk to passenger safety. For instance, as illustrated in the top-right image of Figure 3, consider a scenario where a vehicle occupies the backRight (or backLeft) section of the AV and its velocity is higher than that of the AV. Although executing an RLC (or LLC) maneuver may be legally and physically feasible, doing so could lead to a collision with the vehicle approaching in the adjacent lane. In such cases, the action is not fatal but is considered hazardous and should ideally be avoided.

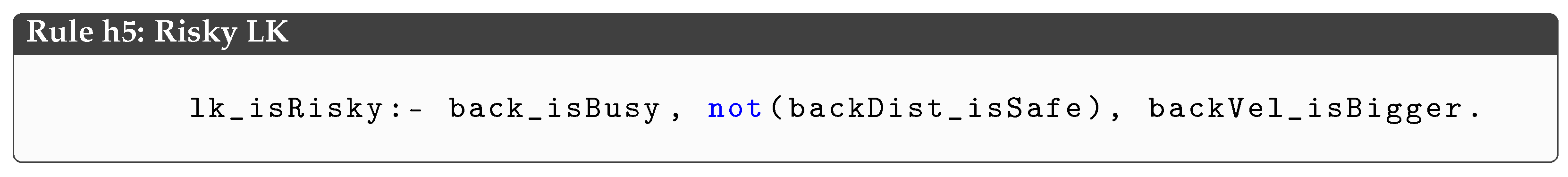

Using the Popper ILP system, we derive three rules that characterize risky actions. The first rule (h3) specifies that if there is a vehicle in the backRight section with a higher velocity than the AV, or if the frontRight section is occupied by a slower-moving vehicle, then performing an RLC maneuver is deemed risky:

![Applsci 15 12464 i003 Applsci 15 12464 i003]()

Similarly, rule h4 captures the conditions under which an LLC maneuver is risky:

![Applsci 15 12464 i004 Applsci 15 12464 i004]()

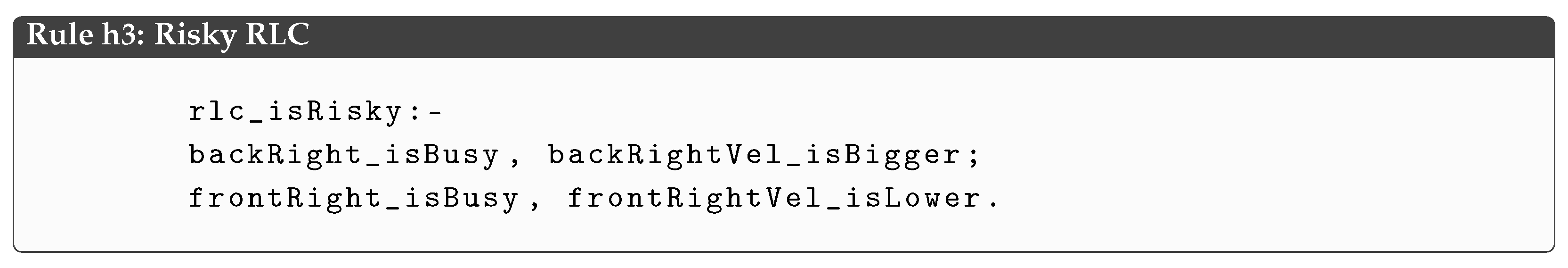

Finally, rule h5 addresses cases where the LK action may pose danger. It states that if a TV is present in the back section, the distance to the AV is unsafe, and the TV is approaching at a higher velocity, then remaining in the current lane becomes hazardous:

![Applsci 15 12464 i005 Applsci 15 12464 i005]()

This rule highlights that even lane keeping—typically considered the safest option—can be endangered when the AV is rapidly approached from behind without adequate spacing, thereby increasing the risk of rear-end collisions.
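A similar sketch for the risky rules follows, based on the textual descriptions of h3 and h5. Two assumptions are flagged: h4 is taken to mirror h3 on the left side (the text says only "similarly"), and back_isUnsafeDist is a hypothetical name for the unsafe-distance predicate used by h5. Missing predicates are treated as false, in the spirit of negation as failure.

```python
from typing import Mapping, Set

State = Mapping[str, bool]


def _holds(s: State, *preds: str) -> bool:
    """True when every listed predicate is true; missing ones count as false."""
    return all(s.get(p, False) for p in preds)


def rlc_is_risky(s: State) -> bool:
    """h3 as described: faster TV in backRight, or slower TV in frontRight."""
    return (_holds(s, "backRight_isBusy", "backRightVel_isBigger")
            or _holds(s, "frontRight_isBusy", "frontRightVel_isLower"))


def llc_is_risky(s: State) -> bool:
    """h4, assumed here to mirror h3 on the left side."""
    return (_holds(s, "backLeft_isBusy", "backLeftVel_isBigger")
            or _holds(s, "frontLeft_isBusy", "frontLeftVel_isLower"))


def lk_is_risky(s: State) -> bool:
    """h5 as described; 'back_isUnsafeDist' is a hypothetical predicate name."""
    return _holds(s, "back_isBusy", "back_isUnsafeDist", "backVel_isBigger")


def risky_actions(s: State) -> Set[str]:
    checks = (("RLC", rlc_is_risky), ("LLC", llc_is_risky), ("LK", lk_is_risky))
    return {action for action, is_risky in checks if is_risky(s)}
```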

3.2.2. Efficient Lane Changing

While safety rules identify lane-change actions that are fatal or risky in specific traffic conditions, they do not indicate which action is more efficient when none of the options poses a safety risk. To address this, we introduce a prioritization scheme that helps the AV make time-efficient decisions.

In general, minimizing driving time requires the AV to change lanes when appropriate. However, unnecessary or abrupt lane changes can compromise passenger comfort and health. Therefore, the default priority is to maintain the current lane unless a lane change is deemed necessary. When the AV needs to overtake a vehicle in the front section, we introduce a secondary prioritization: if both RLC and LLC are viable, the AV should prefer LLC, as the left lane typically supports higher traffic speeds. RLC should be considered only when LLC is not feasible. This rule-based preference allows the AV to make more efficient decisions while preserving safety and comfort.

Based on this prioritization strategy, we label scenarios from the knowledge-driven dataset to reflect the more favorable action and use ILP to learn the corresponding rules. Rule h6 identifies a situation where LLC is preferable to RLC:

![Applsci 15 12464 i006 Applsci 15 12464 i006]()

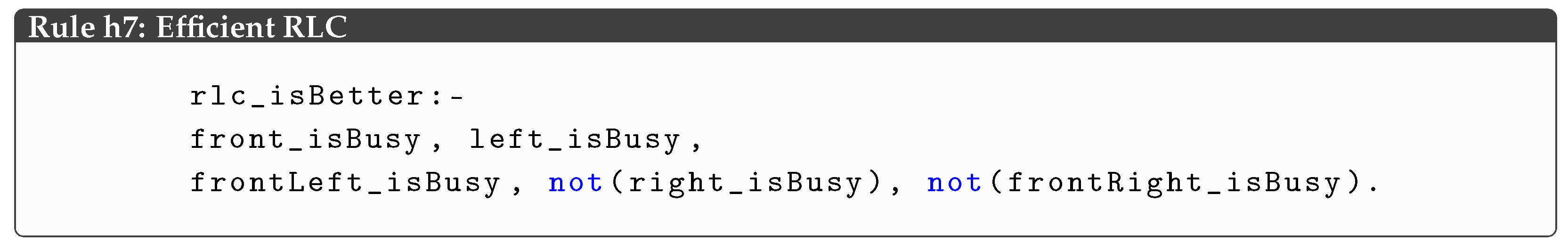

This rule specifies that when the front section is occupied and both the left and frontLeft sections are clear, the AV should prefer LLC over RLC. Conversely, rule h7 describes situations in which RLC becomes the preferred action:

![Applsci 15 12464 i007 Applsci 15 12464 i007]()

This rule applies when the front, left, and frontLeft sections are all occupied, while the right and frontRight sections are available. In such cases, RLC is the more efficient option.

Importantly, the predicates rlc_isBetter and llc_isBetter are mutually exclusive by design. If one holds true, the other cannot, thereby avoiding conflicting recommendations during decision-making.
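This mutual exclusivity can be checked exhaustively over the five occupancy predicates that the two rules mention. The sketch encodes h6 and h7 as described in the text and treats "available" as "not busy"; that reading is an assumption, since the induced rules may also include validity literals. Function names are hypothetical.

```python
from itertools import product
from typing import Dict, List, Optional

ATOMS = ["front_isBusy", "left_isBusy", "frontLeft_isBusy",
         "right_isBusy", "frontRight_isBusy"]


def llc_is_better(s: Dict[str, bool]) -> bool:
    """h6 as described: front occupied, left and frontLeft clear."""
    return s["front_isBusy"] and not s["left_isBusy"] and not s["frontLeft_isBusy"]


def rlc_is_better(s: Dict[str, bool]) -> bool:
    """h7 as described: front, left, frontLeft occupied; right and frontRight clear."""
    return (s["front_isBusy"] and s["left_isBusy"] and s["frontLeft_isBusy"]
            and not s["right_isBusy"] and not s["frontRight_isBusy"])


def all_states() -> List[Dict[str, bool]]:
    return [dict(zip(ATOMS, v)) for v in product([False, True], repeat=len(ATOMS))]


def conflicting_state() -> Optional[Dict[str, bool]]:
    """Return a state where both predicates hold, or None if they never overlap."""
    for s in all_states():
        if llc_is_better(s) and rlc_is_better(s):
            return s
    return None
```

The check returns None because h6 requires left to be clear while h7 requires it to be occupied.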

3.2.3. Smooth Longitudinal Velocity Control

To ensure passenger comfort and overall safety, an AV—like a human driver—must adjust its velocity smoothly. Human drivers typically rely on a limited set of intuitive actions to manage vehicle speed in a continuous manner. These include gradually accelerating to reach a desired cruising speed, adjusting speed to match that of a slower vehicle ahead (referred to as the front TV), and decelerating in response to unsafe following distances. In critical situations, such as when the AV is too close to the front TV while moving faster, the driver—or the AV—must apply the brake to prevent a collision. Based on these observed driving behaviors, the goal is to identify three fundamental rules that govern safe and smooth velocity adjustments.

In our previous work [30], we introduced a rule-based method for longitudinal velocity control in autonomous vehicles. The results demonstrated that the AV could avoid collisions with the front TV while ensuring smooth acceleration and deceleration transitions. This was achieved by integrating a low-level controller that eliminated discontinuities in the velocity commands. As shown in Equation (3), the proposed approach models three distinct acceleration phases, inspired by typical lane-following behavior observed in human drivers, and aligns with methods proposed in [34].

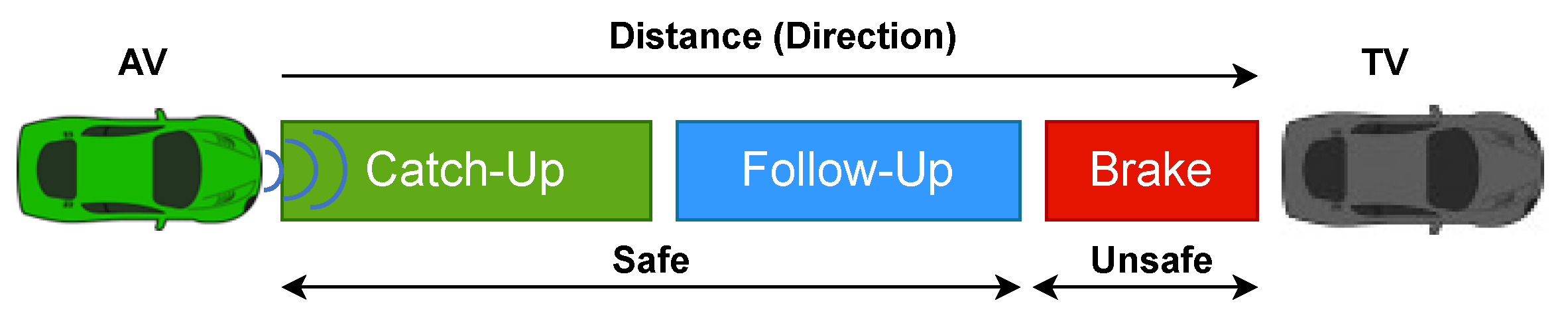

As illustrated in Figure 4, the catch-up phase applies when the front sector is unoccupied. In such cases, the AV has the flexibility to accelerate, decelerate, or maintain speed. To compute the appropriate acceleration, we define a desired longitudinal velocity, which may differ across drivers or situations, and use it to determine the acceleration required to reach this target speed. For this task, the desired longitudinal velocity is 110 km/h.

When a vehicle is present in the front section, human drivers typically adjust their speed to match that of the front TV, thereby maintaining a safe distance and avoiding collisions. This behavior corresponds to the follow-up phase, which applies when a TV occupies the front sector and the longitudinal distance between the AV and the TV is considered safe. As shown in Equation (3), the follow-up phase computes the acceleration that allows the AV to synchronize its velocity with that of the front TV while gradually reducing the separation distance D.

Finally, the brake phase applies when the AV is at an unsafe distance, specifically when D falls below a critical threshold C, and is traveling faster than the front TV. In these emergency scenarios, braking is necessary to prevent a potential collision, and the AV must decelerate promptly until a safe following distance is re-established. The three phases are illustrated in Figure 4 and formalized as the three cases of Equation (3), where D is the distance between the AV and the front TV and C is the critical braking distance, set to 15 m in this use case. The time step is 0.04 s.

As previously stated, the objective is to define logical rules that correspond to the acceleration conditions described in Equation (3). However, ILP systems are generally not designed to reason directly over continuous numerical values (beyond binary values such as 0 and 1), making it impractical to extract the complete equations symbolically. Instead, we identify which of the three control phases (catch-up, follow-up, or brake) applies in a given state. Once the appropriate phase is determined, the corresponding acceleration is computed analytically.

To induce rules for each control phase, we construct training examples by evaluating the relative distance between the AV and the front TV. Based on this evaluation, each state is labeled as a positive or negative example for the corresponding rule, as detailed in Table 1. This approach enables Popper to learn one symbolic rule per phase. For instance, the following rule specifies that when the front section is unoccupied, the AV should enter the catch-up phase to reach its desired speed:

![Applsci 15 12464 i008 Applsci 15 12464 i008]()

The second rule addresses the follow-up phase. It states that when the front section is occupied and the distance between the AV and the front TV is safe, the AV should adjust its acceleration to match the speed of the vehicle ahead:

![Applsci 15 12464 i009 Applsci 15 12464 i009]()

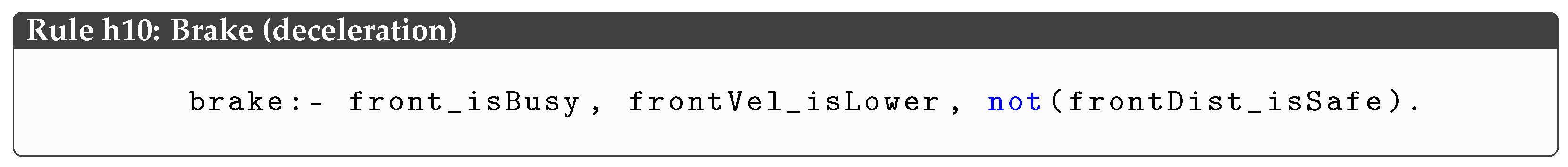

Finally, the third rule pertains to the brake phase. It specifies that when a vehicle is present in the front section, the relative distance is unsafe, and the AV is moving faster than the front TV, the AV should initiate braking to prevent a collision:

![Applsci 15 12464 i010 Applsci 15 12464 i010]()
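The three phase rules can be sketched in the same way and checked for overlaps. Here distance_isSafe is a hypothetical predicate name, and "the AV is moving faster than the front TV" is encoded with frontVel_isLower (the front TV is slower by more than the 5 km/h threshold), which is an assumption about how h10 is expressed.

```python
from itertools import product
from typing import Dict, List

ATOMS = ["front_isBusy", "distance_isSafe", "frontVel_isLower"]


def firing_phases(s: Dict[str, bool]) -> List[str]:
    """Evaluate h8-h10 independently and return every phase whose rule fires."""
    fired = []
    if not s["front_isBusy"]:                                   # h8
        fired.append("catch-up")
    if s["front_isBusy"] and s["distance_isSafe"]:              # h9
        fired.append("follow-up")
    if (s["front_isBusy"] and not s["distance_isSafe"]
            and s["frontVel_isLower"]):                         # h10: front TV slower
        fired.append("brake")
    return fired


def all_states() -> List[Dict[str, bool]]:
    return [dict(zip(ATOMS, v)) for v in product([False, True], repeat=len(ATOMS))]
```

Enumerating all eight combinations shows that at most one phase fires per state, and that one combination (front TV present, unsafe distance, front TV not slower) triggers none of the three rules as described; how the full system handles that case is not specified in this section.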

As summarized in Table 1, we evaluate the quality of each learned rule using an accuracy metric, defined as the average of precision and recall over the corresponding positive and negative examples. All induced rules achieve perfect accuracy (1.00), indicating that each extracted rule covers all of its positive examples and none of its negative examples. Because the datasets contain no mislabeled examples, it is unsurprising that an ILP system can find rules consistent with all positive and negative examples.
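Note that this accuracy is not the usual (TP + TN) / total; it is the mean of precision and recall. A minimal sketch of the computation, where predicted[i] is whether the learned rule entails example i and actual[i] is its label; the function name and the convention of returning 0.0 for an undefined ratio are assumptions.

```python
from typing import Sequence, Tuple


def rule_scores(predicted: Sequence[bool],
                actual: Sequence[bool]) -> Tuple[float, float, float]:
    """Return (precision, recall, accuracy), with accuracy = mean of the two."""
    tp = sum(p and a for p, a in zip(predicted, actual))
    fp = sum(p and not a for p, a in zip(predicted, actual))
    fn = sum(a and not p for p, a in zip(predicted, actual))
    precision = tp / (tp + fp) if tp + fp else 0.0   # 0.0 when nothing is predicted
    recall = tp / (tp + fn) if tp + fn else 0.0      # 0.0 when there are no positives
    return precision, recall, (precision + recall) / 2
```

A rule that entails exactly its positive examples scores (1.0, 1.0, 1.0), which corresponds to the 1.00 reported in Table 1.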

In addition to accuracy, we report the rule induction time for each rule in Table 1. The induction times are consistently low across all rules, demonstrating that the SIL framework enables fast and efficient rule induction. This efficiency can be attributed to Popper's structured approach, which combines symbolic reasoning with effective pruning strategies to learn concise and accurate rules.

To evaluate the robustness of the method, we intentionally flip randomly selected example labels and observe how Popper handles the resulting noise. Noise levels from 2% to 20% are considered for all datasets. As shown in Table A2 (see Appendix A.2), increasing the noise level leads to a significant decrease in both precision and recall, as well as an increase in induction time.
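A minimal sketch of such label-noise injection, assuming that exactly round(noise x n) randomly chosen labels are flipped; the text does not state whether a fixed fraction or an independent per-label flip probability was used, and the function name and seed are hypothetical.

```python
import random
from typing import List, Sequence


def flip_labels(labels: Sequence[bool], noise: float, seed: int = 0) -> List[bool]:
    """Return a copy of labels with round(noise * n) randomly chosen entries negated."""
    rng = random.Random(seed)                 # seeded for reproducible noise
    n_flip = round(noise * len(labels))
    noisy = list(labels)
    for i in rng.sample(range(len(labels)), n_flip):
        noisy[i] = not noisy[i]
    return noisy
```

Sweeping noise from 0.02 to 0.20 and re-running induction on each noisy copy reproduces the kind of experiment summarized in Table A2.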

3.3. Rule Aggregation

After rules h1 to h10 are extracted with Popper, these interpretable rules are further processed to generate coherent, human-like decisions for lane changes and velocity adjustments. This post-processing is performed by the rule aggregation component, which integrates the induced rules into a unified decision-making framework.

The primary goal of this component is to identify the best lane-change and velocity actions for each state by combining the symbolic rules with background knowledge. This component prioritizes rules based on their criticality: (1) fatal lane changing rules (h1–h2) identify deadly actions, which are eliminated from the action space; (2) risky lane changing rules (h3–h5) detect risky actions, which are removed from the action space but retained as backup options if no other actions remain; and (3) in the refined action space, efficiency rules (h6–h7) select the action leading to the most efficient path for the AV. Additionally, smoothness rules (h8–h10) adjust the longitudinal velocity to produce smooth driving.
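The priority order above can be sketched as a small selection function. Its inputs are the sets of actions flagged by the fatal (h1-h2) and risky (h3-h5) rules and the truth values of h6 and h7. The default preference of LK, then LLC, then RLC is an assumption drawn from the prioritization described in Section 3.2.2, and all names are hypothetical.

```python
from typing import Set

PREFERENCE = ["LK", "LLC", "RLC"]  # assumed default: keep lane, then prefer left


def choose_lane_action(fatal: Set[str], risky: Set[str],
                       llc_better: bool, rlc_better: bool) -> str:
    candidates = [a for a in PREFERENCE if a not in fatal]   # (1) drop fatal actions
    safe = [a for a in candidates if a not in risky]         # (2) drop risky actions
    pool = safe or candidates                                # risky ones remain as backup
    if not pool:
        return "LK"  # assumed last resort; h1-h2 as described never flag LK
    if llc_better and "LLC" in pool:                         # (3) efficiency, h6
        return "LLC"
    if rlc_better and "RLC" in pool:                         # efficiency, h7
        return "RLC"
    return pool[0]
```

For example, if only LK is not fatal but it is flagged as risky by h5, it is still returned as the backup option.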

Supplementary rules are also introduced to ensure consistent reasoning across the hypotheses, enabling seamless interpretation and coordination of the high-level symbolic outputs. These outputs are then used to control the AV via a low-level controller.

To determine the best action for a given state, the framework first applies rules h1 through h5 to eliminate actions deemed fatal or risky, narrowing the action space to safe candidates. From the remaining options, the most efficient action is selected using rules h6 and h7, which assess the relative quality and priority of the remaining lane-change options. When multiple candidate rules are triggered simultaneously, the system applies a deterministic priority order in which rules supported by a larger number of positive examples take precedence; for instance, left-lane change rules are prioritized over right-lane change rules. The final decision yields the optimal action, which can be LK, LLC, or RLC. As illustrated in Figure 1, once this action is chosen, the AV’s lane ID is updated via a switch box mechanism that maps the decision to a corresponding lateral position. A low-level proportional-integral-derivative (PID) controller, employed as in [30], then generates the lateral acceleration command. The proportional, integral, and derivative gains are 4, 1, and 0, respectively.
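The switch box and the lateral PID loop can be sketched as follows. This is a textbook discrete PID, not necessarily the controller of [30]. The lane width, the lane-centre convention for lateral positions, the sign convention that a left lane change increments the lane ID, and the 0.1 s time step are hypothetical choices; only the gains (4, 1, 0) come from the text.

```python
class PID:
    """Textbook discrete PID controller (sketch)."""

    def __init__(self, kp: float, ki: float, kd: float, dt: float) -> None:
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error = None  # no derivative term on the very first step

    def step(self, setpoint: float, measurement: float) -> float:
        error = setpoint - measurement
        self.integral += error * self.dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


LANE_WIDTH = 3.5  # hypothetical lane width in metres


def switch_box(lane_id: int, action: str) -> tuple[int, float]:
    """Hypothetical switch box: update the lane ID and return the new lane-centre position."""
    new_lane = lane_id + {"LK": 0, "LLC": 1, "RLC": -1}[action]
    return new_lane, (new_lane + 0.5) * LANE_WIDTH


lateral_pid = PID(kp=4.0, ki=1.0, kd=0.0, dt=0.1)  # gains from the text; dt is assumed
lane, y_ref = switch_box(1, "LLC")                  # target lane 2, centre at 8.75 m
a_y = lateral_pid.step(y_ref, measurement=5.25)     # lateral acceleration command
```

With the derivative gain at zero, the command reduces to a PI law on the lateral position error.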

For longitudinal velocity control, the current state is evaluated against rules h8 to h10 to determine which of the three acceleration phases (catch-up, follow-up, or brake) is applicable. Based on the selected rule and the formulation in Equation (3), the required longitudinal acceleration is computed. The desired velocity is then derived from this acceleration and passed as input to a PID controller responsible for regulating thrust. For this task, the proportional, integral, and derivative gains are 3, 1, and 0, respectively. The PID controller ensures smooth and continuous velocity control in accordance with the selected symbolic rule.
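A closed-loop sketch of the velocity channel, using the stated gains (3, 1, 0), is shown below. Equation (3) is not reproduced here, so the longitudinal acceleration is taken as an input. The extrapolation `v + a_long * horizon`, the 2 s horizon, and the point-mass plant in which the thrust command acts directly as acceleration are hypothetical simplifications, chosen only to show the loop settling on the desired velocity.

```python
def desired_velocity(v: float, a_long: float, horizon: float = 2.0) -> float:
    """Hypothetical: extrapolate the rule-derived acceleration over a short horizon."""
    return v + a_long * horizon


def track_velocity(v0: float, v_des: float, kp: float = 3.0, ki: float = 1.0,
                   kd: float = 0.0, dt: float = 0.01, duration: float = 30.0) -> float:
    """Simulate PID thrust control on a point-mass model (dv/dt = u); return the final speed."""
    v, integral = v0, 0.0
    prev_error = v_des - v0
    for _ in range(round(duration / dt)):
        error = v_des - v
        integral += error * dt
        derivative = (error - prev_error) / dt
        prev_error = error
        u = kp * error + ki * integral + kd * derivative  # thrust command
        v += u * dt  # explicit Euler update of the assumed plant
    return v


v_des = desired_velocity(25.0, 1.5)  # 28.0 m/s
print(track_velocity(25.0, v_des))   # settles close to 28.0
```

Under this assumed plant, the closed loop satisfies v'' + 3v' + v = v_des, whose poles (about -0.38 and -2.62) are both stable, so the speed converges to the set point without oscillation growing.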

In summary, the SIL framework integrates symbolic reasoning and control by mapping learned high-level hypotheses into executable actions. These actions are aligned with the safety, efficiency, and smoothness principles established throughout the rule induction process.

5. Discussion

All methods were evaluated under comparable conditions to allow a fair comparison of their overall performance. Each agent was initialized with similar positions, velocities, and driving directions. To assess the effectiveness of each approach, we defined performance metrics in three key categories: safety, efficiency, and smoothness. Safety was evaluated using two success rates, the collision-based success rate $SR_c$ and the distance-based success rate $SR_d$, which are computed as:

$$SR_c = \left(1 - \frac{N_c}{N}\right) \times 100\%, \qquad SR_d = \frac{\bar{d}}{L} \times 100\%,$$

where $N_c$ is the number of collisions and $N$ is the number of evaluation episodes. Also, $\bar{d}$ is the average traveled distance and $L$ is the total length of the driving scenario.

$SR_c$ indicates the percentage of episodes completed without a collision, while $SR_d$ indicates the traveled distance as a percentage of the total driving distance. Higher values of both metrics indicate greater safety.
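The two success rates can be computed from per-episode logs as sketched below. The sketch assumes that each collision terminates its episode, so the collision count equals the number of failed episodes; the function name and the toy inputs are illustrative and are not results from Table 2.

```python
def success_rates(n_collisions: int, n_episodes: int,
                  distances: list[float], track_length: float) -> tuple[float, float]:
    """Return (collision-based, distance-based) success rates in percent."""
    sr_c = 100.0 * (1.0 - n_collisions / n_episodes)
    mean_distance = sum(distances) / len(distances)
    sr_d = 100.0 * mean_distance / track_length
    return sr_c, sr_d


# Toy example: 40 episodes on a 2100 m track, two of which end in a collision halfway.
toy_distances = [2100.0] * 38 + [1050.0] * 2
print(success_rates(2, 40, toy_distances, 2100.0))
```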

Efficiency and smoothness were also evaluated through the number of lane changes per episode. In addition, the average agent speed was used as a composite measure of efficiency, calculated as the distance traveled divided by the mission time per episode.
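Both quantities follow directly from an episode trace. The sketch below assumes the trace records the AV's lane ID at every simulation step and counts one lane change whenever the ID differs from the previous step; the conversion from m/s to km/h by the factor 3.6 matches the units reported in the efficiency analysis. Function names are illustrative.

```python
def count_lane_changes(lane_ids: list[int]) -> int:
    """Number of steps at which the lane ID differs from the previous step."""
    return sum(1 for prev, cur in zip(lane_ids, lane_ids[1:]) if cur != prev)


def average_speed_kmh(distance_m: float, mission_time_s: float) -> float:
    """Average speed over one episode: traveled distance divided by mission time, in km/h."""
    return 3.6 * distance_m / mission_time_s


print(count_lane_changes([2, 2, 3, 3, 3, 2]))  # 2
print(average_speed_kmh(2100.0, 63.0))          # 120.0
```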

Using the HighD dataset, we tested all methods over N episodes with at least five different random seeds, each episode consisting of a driving track of length L = 2100 m. In each experiment, the AV drove in either the left-to-right (L2R) or the right-to-left (R2L) direction. All agents were trained exclusively in the L2R direction, and their generalization was assessed by evaluating performance in the R2L direction and in the NGSim environment. The results, averaged over seeds, are summarized in Table 2.

Safety Analysis: As shown in Table 2, the SIL agent achieved a 100% collision-based success rate in the L2R scenario, completing all evaluation episodes without a collision and demonstrating strong safety performance. In contrast, the corresponding success rates of DNNIL, BCMDN, and GAIL are substantially lower. Because the proposed framework encodes explicit safety rules, the SIL agent complies with them and avoids the fatal and risky lane changes that lead to collisions. In the R2L and NGSim scenarios, it also maintains its advantage over the baselines, with success rates of 98% and 96%, respectively.

In terms of the distance-based success rate, the SIL agent likewise outperforms the baselines, completing on average 100% and 99.3% of the path in the L2R and R2L directions, respectively. These results stem not only from the explicit safety rules but also from the longitudinal velocity rules, which maintain safe distances from the front and rear intruder vehicles.

Efficiency Analysis: To assess the efficiency of the agents, we examined their average speed per episode. This metric serves as a proxy for effective lane-change behavior, as faster travel generally correlates with successful overtaking. We computed the average speed in both directions for all agents and used the mean value for comparison. DNNIL, BCMDN, GAIL, and SIL achieved 109.7, 99.89, 115.07, and 116.06 km/h, respectively. The SIL agent achieved the highest average speed, although its margin over GAIL is small (116.06 vs. 115.07 km/h). This suggests that its rule-based lane changes enabled it to find free lanes efficiently, maintain higher velocities, and ultimately reduce overall travel time.
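To put these figures in proportion, the relative speed gain of SIL over each baseline follows directly from the reported averages, writing $\bar{v}$ for the average speed of an agent (notation introduced here for illustration):

```latex
\Delta = \frac{\bar{v}_{\mathrm{SIL}} - \bar{v}_{\mathrm{base}}}{\bar{v}_{\mathrm{base}}} \times 100\%
\qquad
\Delta_{\mathrm{GAIL}} = \frac{116.06 - 115.07}{115.07} \approx 0.86\%, \quad
\Delta_{\mathrm{DNNIL}} = \frac{116.06 - 109.7}{109.7} \approx 5.8\%, \quad
\Delta_{\mathrm{BCMDN}} = \frac{116.06 - 99.89}{99.89} \approx 16.2\%
```

The gain over GAIL is under 1%, so the efficiency advantage is pronounced mainly relative to DNNIL and BCMDN.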

Smoothness Analysis: To analyze smoothness, we consider the number of lane changes, which was close to zero for the DNNIL and BCMDN agents, indicating their limited ability to learn and execute lane-change maneuvers effectively. Although these agents drove smoothly, they often remained in a single lane throughout the episode. This tendency not only reduces responsiveness but may also contribute to traffic congestion through inefficient lane utilization. Despite being trained on a large volume of data, these models failed to generalize lane-change behaviors, which highlights their inherent sample inefficiency.

The GAIL agent, in contrast, performed substantially more lane changes. However, this came at the cost of a high collision rate, indicating that its behavior, while active, was not reliably safe. The SIL agent, on the other hand, changed lanes approximately once per episode, guided by explicitly learned efficient lane-changing rules. These rules enabled the AV to make strategic, context-aware lane changes, which contributed to shorter travel times without compromising safety.

Generalizability Analysis: To assess the generalizability of the SIL framework, we tested it on a different dataset, NGSim [36], which captures diverse urban highway scenarios in the United States. Among the available subsets, US-101 was chosen because its highway characteristics are broadly comparable to those of the HighD dataset, although it differs in vehicle density, lane structure, and speed distribution. Because the NGSim environment is highly congested, we reduced the number of vehicles to leave feasible driving space for the AV while preserving comparability. In addition, the state-space representation was kept consistent across both datasets by retaining the same eight-sector structure used for HighD. As shown in Table 2, the SIL agent continued to perform safely, maintaining a low number of collisions despite the changes in environment and traffic dynamics.
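Keeping the state representation identical across datasets amounts to mapping neighbouring vehicles into the same fixed sectors regardless of the source data. The sketch below is purely illustrative: the layout (three sectors ahead, two alongside, three behind), the 5 m "alongside" band, the 100 m sensing range, and the convention that a higher lane ID lies to the left are all our assumptions, not the paper's definition of the eight sectors.

```python
from typing import Optional

# Hypothetical layout: rows are ahead (+1), alongside (0), behind (-1);
# columns are left (+1), same (0), and right (-1) lane relative to the ego vehicle.
SECTORS = {
    (1, 1): "front_left", (1, 0): "front", (1, -1): "front_right",
    (0, 1): "left", (0, -1): "right",
    (-1, 1): "rear_left", (-1, 0): "rear", (-1, -1): "rear_right",
}


def sector_of(dx: float, lane_offset: int, alongside: float = 5.0) -> Optional[str]:
    """Map a neighbour's longitudinal gap and lane offset to a sector name (None if outside)."""
    if abs(lane_offset) > 1:
        return None
    row = 0 if abs(dx) <= alongside else (1 if dx > 0 else -1)
    return SECTORS.get((row, lane_offset))  # (0, 0) is the ego cell itself


def sector_state(ego_x: float, ego_lane: int, others, sensing_range: float = 100.0) -> dict:
    """Nearest longitudinal gap per sector; None marks an empty sector."""
    state = {name: None for name in SECTORS.values()}
    for x, lane in others:
        dx = x - ego_x
        if abs(dx) > sensing_range:
            continue
        name = sector_of(dx, lane - ego_lane)
        if name is not None and (state[name] is None or abs(dx) < abs(state[name])):
            state[name] = dx
    return state


# Neighbours as (longitudinal position in m, lane ID); the ego vehicle is at x = 0 in lane 2.
print(sector_state(0.0, 2, [(30.0, 2), (50.0, 2), (-12.0, 3), (2.0, 1), (10.0, 5)]))
```

Because the same fixed-size dictionary is produced for HighD and NGSim traffic, the induced rules can be queried unchanged on either dataset.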

Notably, some collisions observed in the NGSim experiments were unavoidable, primarily because surrounding vehicles in the dataset are not aware of the SIL-controlled AV and therefore do not respond to its presence. As a result, collisions often occurred from the rear, where other vehicles failed to maintain a safe following distance. In addition, the AV’s average velocity in the NGSim scenarios was lower than in HighD because of the slower overall traffic flow and higher congestion levels in the NGSim dataset. Nevertheless, the ILP-generated rules enabled the AV to adapt effectively, suggesting that the SIL framework is robust to variations in lane configuration and speed distribution.

Another advantage of the SIL framework lies in its computational efficiency. As shown in Table 1, each rule can be induced in a relatively short time, whereas training a DNNIL model on large datasets demands substantial time and computational resources. Moreover, in many real-world applications, large volumes of labeled data may not be readily available. In contrast, the SIL framework can learn effectively from a small set of human-labeled examples, making it more practical and accessible. Furthermore, the explicit, interpretable nature of the SIL rule base aligns well with established automotive functional safety standards such as ISO 26262 [40]: because each rule can be inspected and traced, the rule base may help address both functional safety and AI system assurance requirements.

Sensitivity to Label Noise: We evaluated SIL under controlled label-noise injection to assess its robustness. The model's performance degrades as noise increases: precision drops to ≈98% at 2% noise, ≈95% at 5%, ≈91% at 10%, and ≈80% at 20%. This indicates a strong dependence on clean supervision. In contrast, deep imitation learning baselines such as DNNIL are typically more tolerant of moderate annotation noise, because they optimize over large datasets and can partially smooth out inconsistent labels during training. SIL, by design, induces discrete symbolic rules that must exactly satisfy the positive and negative examples, which makes it more brittle to mislabeled samples.
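One way to run such an experiment is to flip a fixed fraction of example labels before rule induction. The sketch below assumes symmetric flipping (a randomly chosen example moves from the positive to the negative set, or vice versa) with a seeded generator; the exact injection protocol behind the reported numbers is not specified in this section, so this is an illustration rather than that procedure. The function name is hypothetical.

```python
import random


def inject_label_noise(positives: list, negatives: list, rate: float, seed: int = 0):
    """Return (noisy_positives, noisy_negatives) with round(rate * total) labels flipped."""
    rng = random.Random(seed)
    labelled = [(e, True) for e in positives] + [(e, False) for e in negatives]
    flip = set(rng.sample(range(len(labelled)), round(rate * len(labelled))))
    noisy_pos, noisy_neg = [], []
    for i, (example, is_positive) in enumerate(labelled):
        if i in flip:
            is_positive = not is_positive  # mislabel this example
        (noisy_pos if is_positive else noisy_neg).append(example)
    return noisy_pos, noisy_neg


# Example: 10% noise over 50 positive and 50 negative examples.
pos = [f"p{i}" for i in range(50)]
neg = [f"n{i}" for i in range(50)]
noisy_pos, noisy_neg = inject_label_noise(pos, neg, rate=0.10, seed=1)
```

The noisy sets would then be passed to the ILP system in place of the clean ones, and the induced rules scored on held-out clean examples.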

As summarized in Table A2 and Appendix A.2, even modest noise levels (2–10%) reduce precision and recall and increase induction time. This has a practical implication: high-quality labeling becomes critical, because noisy rule examples can directly degrade driving decisions.
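For reference, the precision and recall of an induced rule are measured on labelled examples as sketched below. Here `covers` stands in for querying whether the rule, together with background knowledge, entails an example; the gap-threshold rule in the usage line is purely hypothetical.

```python
from typing import Callable, Iterable, Tuple


def precision_recall(covers: Callable[[object], bool],
                     positives: Iterable, negatives: Iterable) -> Tuple[float, float]:
    positives, negatives = list(positives), list(negatives)
    tp = sum(1 for e in positives if covers(e))  # positives the rule entails
    fp = sum(1 for e in negatives if covers(e))  # negatives the rule wrongly entails
    fn = len(positives) - tp                     # positives the rule misses
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical rule covering examples whose gap exceeds 20 m.
print(precision_recall(lambda gap: gap > 20, positives=[25, 30, 15, 40], negatives=[10, 22, 5, 21]))
# (0.6, 0.75)
```

A mislabeled negative that the rule covers lowers precision, while a mislabeled positive it does not cover lowers recall, which is why both metrics fall as noise rises.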

In addition to sensitivity to label noise, the proposed method has several other limitations. First and most importantly, as discussed in Section 3.1, each unknown rule must be learned under a carefully defined setting, including a tailored bias set and example set, which adds considerable system design complexity and constitutes a substantial manual bottleneck. Second, all datasets must be carefully labeled to avoid the noise issues associated with classical ILP systems; incorrectly labeled positive or negative examples can significantly hinder the rule induction process. Third, the extracted rules (h1–h10) were not validated by independent experts beyond the original annotator(s); future work should include external validation by independent drivers or traffic safety experts to assess rule correctness and completeness. Finally, extracting rules from real-world human driving data is challenging, because human drivers often take different actions in similar situations due to personal preferences or unobservable knowledge, making it difficult to infer consistent rules.

Beyond these limitations, there is a minor difference between SIL and the DNN-based imitation learning baseline in longitudinal control: SIL includes rule-based longitudinal speed control (rules h8–h10), whereas the DNNIL baseline learns only lane changes, and its velocity is handled separately by rules rather than learned. This difference may favor SIL; we therefore highlight it and leave fully learned velocity control for DNNIL to future work.

Future work should aim to address these challenges by improving the noise tolerance of ILP systems and exploring semi-automated ways to configure rule learning environments. Advancing in these directions would help scale the application of symbolic imitation learning to more diverse and unstructured real-world driving scenarios.