4.1. Validation Dataset and Preprocessing

The experimental data used in this paper were selected from the WT planetary gearbox dataset, which was compiled and released by a team led by Liu Dongdong and Cui Lingli at Beijing University of Technology, in collaboration with researchers including Cheng Weidong at Beijing Jiaotong University [

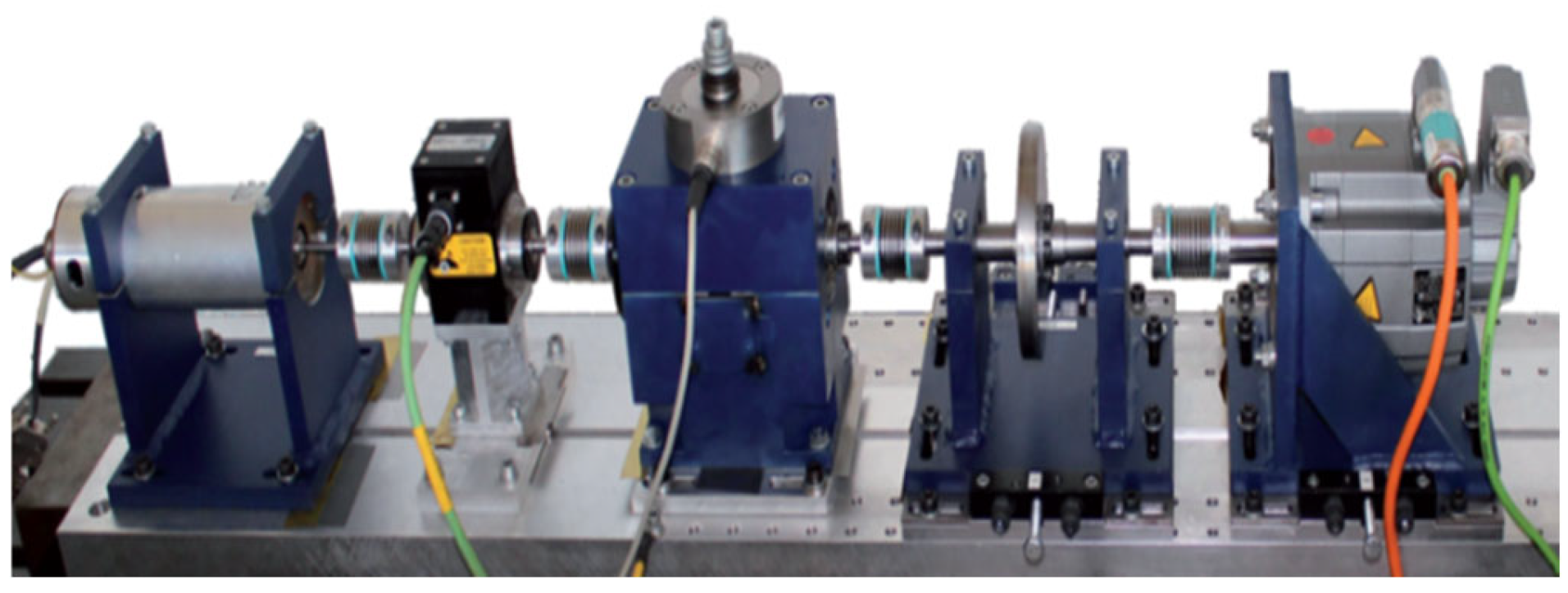

29]. The dataset comprises vibration data collected from a planetary gearbox within a *wind turbine transmission system experimental apparatus, the structure of which is depicted in

Figure 6.

The experimental setup comprises a drive motor, a planetary gearbox, a fixed shaft gearbox and a loading module. A Sinocera CA-YD-1181 accelerometer is used to collect vibration signals, while an encoder simultaneously obtains speed pulses. In the transmission experimental setup, the planetary gearbox comprises four planetary gears that rotate around a sun gear.

The WT planetary gearbox dataset from Beijing, China, contains operational data for five planetary gear health states under eight rotational speed conditions: 20 Hz, 25 Hz, 30 Hz, 35 Hz, 40 Hz, 45 Hz, 50 Hz, and 55 Hz. This dataset provides a data foundation for cross-domain diagnostic research under varying operating conditions. At a sampling frequency of 48 kHz, five minutes of data were collected for each operating state and rotational speed, ensuring sufficient data volume. Additionally, vibration signals in the x and y directions were collected simultaneously, which makes it possible to apply multi-source fusion fault diagnosis methods.

Gaussian white noise was used to test the model’s noise resistance. According to the maximum entropy theorem in Shannon’s information theory, among all sources with the same average power, a Gaussian-distributed source has the maximum entropy. Noise with this distribution therefore carries the greatest uncertainty and interferes most strongly with the signal. Gaussian white noise at signal-to-noise ratios (SNR) of −4 dB, −2 dB, 0 dB, 2 dB and 4 dB was added to the original vibration signal to simulate challenging yet realistic strong-noise scenarios in industrial conditions. This range was selected because it represents the severe noise levels realistically encountered in wind turbine operations, based on our analysis of field data and relevant literature. Testing within this range ensures the evaluation is both rigorous and practically meaningful.
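The noise injection described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function names `add_awgn` and `measured_snr_db` and the synthetic 100 Hz test tone are assumptions introduced here. The noise variance follows from the definition SNR(dB) = 10·log10(P_signal / P_noise).

```python
import math
import random


def add_awgn(signal, snr_db, rng=None):
    """Return a copy of `signal` with white Gaussian noise at the target SNR (dB)."""
    rng = rng or random.Random()
    # Average power of the clean signal.
    signal_power = sum(x * x for x in signal) / len(signal)
    # SNR_dB = 10 * log10(P_signal / P_noise)  =>  P_noise = P_signal / 10^(SNR_dB / 10)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    sigma = math.sqrt(noise_power)
    return [x + rng.gauss(0.0, sigma) for x in signal]


def measured_snr_db(clean, noisy):
    """Empirical SNR (dB) of `noisy` relative to `clean`."""
    signal_power = sum(x * x for x in clean) / len(clean)
    noise_power = sum((n - c) ** 2 for c, n in zip(clean, noisy)) / len(clean)
    return 10.0 * math.log10(signal_power / noise_power)


# Hypothetical stand-in for a vibration record: one second of a 100 Hz tone at 48 kHz.
clean = [math.sin(2 * math.pi * 100 * n / 48_000) for n in range(48_000)]
rng = random.Random(0)  # fixed seed so the noisy copies are reproducible
noisy_by_snr = {snr: add_awgn(clean, snr, rng) for snr in (-4, -2, 0, 2, 4)}
```

Because the noise power is scaled to each record's own average power, the same clean signal can be reused at every SNR level, which keeps the comparison across noise intensities consistent.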

The statistics for various operating conditions in the WT dataset are shown in Table 1.

Simulated noise environments were created by adding Gaussian white noise at the five intensities listed above. For each dataset, 300 samples of one-dimensional acceleration data were extracted, each comprising 2048 sampling points. The data comprised one healthy state and four fault states. To prevent data leakage, the entire sample set for each state and channel was divided chronologically into a training set (the first 90% of samples, i.e., 270 samples per state per channel) and a test set (the final 10%, i.e., 30 samples per state per channel), ensuring no temporal overlap between the two sets. For each operating condition, data from both the x and y channels were collected, and the two directions were treated as a single group. The WT planetary gearbox dataset was further partitioned into two subsets characterized by relatively higher and lower rotational speeds, as detailed in Table 2.
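The segmentation and chronological split can be sketched as below. This is an illustrative sketch under stated assumptions: the helper names `segment` and `chronological_split` are hypothetical, and non-overlapping windows taken from the start of the record are assumed, since the text does not state whether the windows overlap.

```python
def segment(signal, length=2048, count=300):
    """Cut `count` non-overlapping windows of `length` points from the start of `signal`."""
    needed = length * count
    if len(signal) < needed:
        raise ValueError(f"need at least {needed} points, got {len(signal)}")
    return [signal[i * length:(i + 1) * length] for i in range(count)]


def chronological_split(samples, train_fraction=0.9):
    """Split time-ordered samples: earliest part for training, latest part for testing."""
    cut = round(len(samples) * train_fraction)
    return samples[:cut], samples[cut:]


# Hypothetical record: the sample index stands in for acceleration values,
# which makes the time ordering of the two sets easy to inspect.
record = list(range(2048 * 300))
train, test = chronological_split(segment(record))
```

With 300 windows and a 0.9 fraction this yields 270 training and 30 test samples, and every training window ends before the first test window begins.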

To ensure the reproducibility and comparability of experimental results, all data partitioning processes—including the chronological split of each fault state and channel into training and testing sets—were performed using a fixed random seed. Similarly, the batch sampling order during model training was also controlled by a fixed random seed. After partitioning, Gaussian white noise was added to the original signals at various signal-to-noise ratios (SNR) to simulate different noise environments. This approach ensures that the original signal conditions remain consistent across different noise levels, thereby enhancing the comparability of model performance under varying noise intensities.

4.2. Comparative Experimental Setup

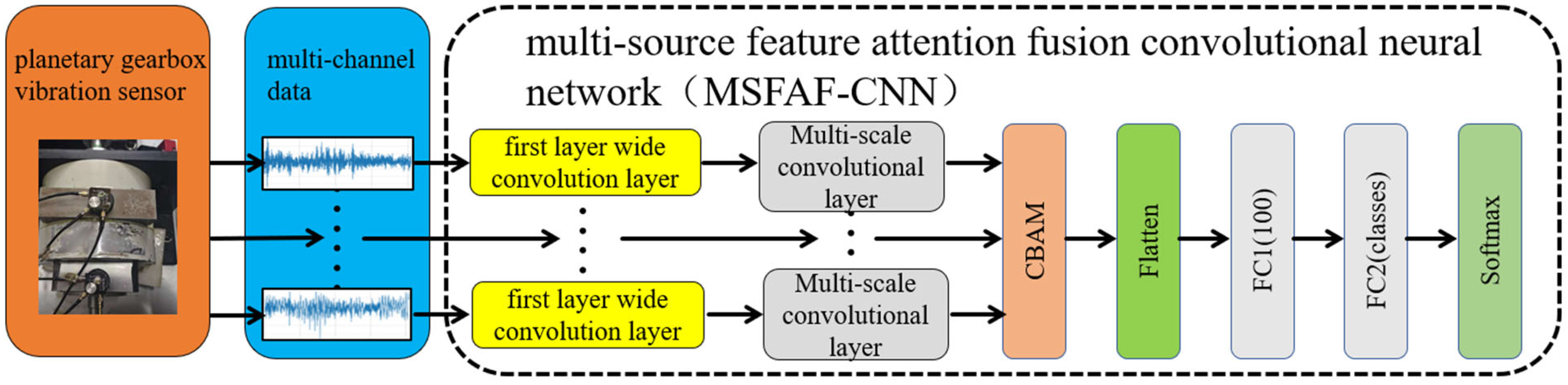

Two types of comparison experiments were set up to verify the robustness and superiority of the proposed MSFAF-CNN model and MS-LMMD method in noisy environments.

- (1)

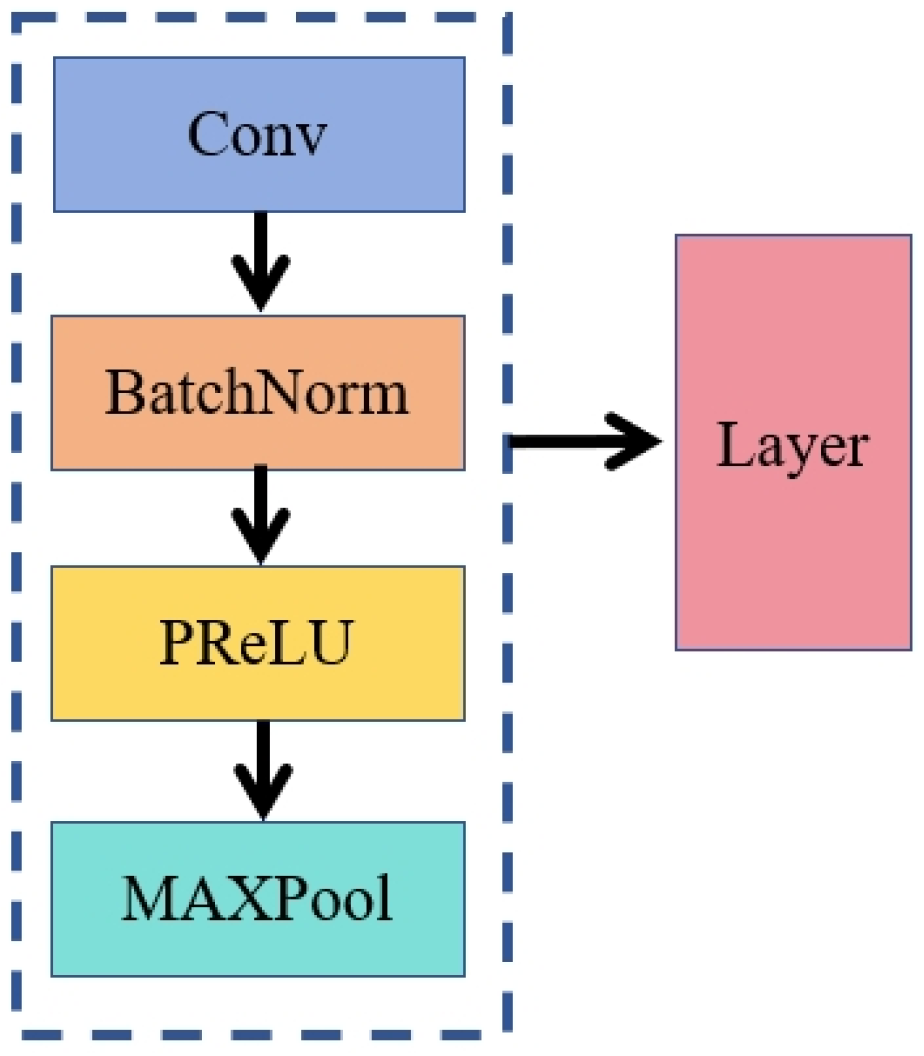

Feature extraction model comparison experiment

TICNN (Traditional Interference Convolutional Neural Network) [11]: Represents the benchmark performance of traditional convolutional neural networks in the task.

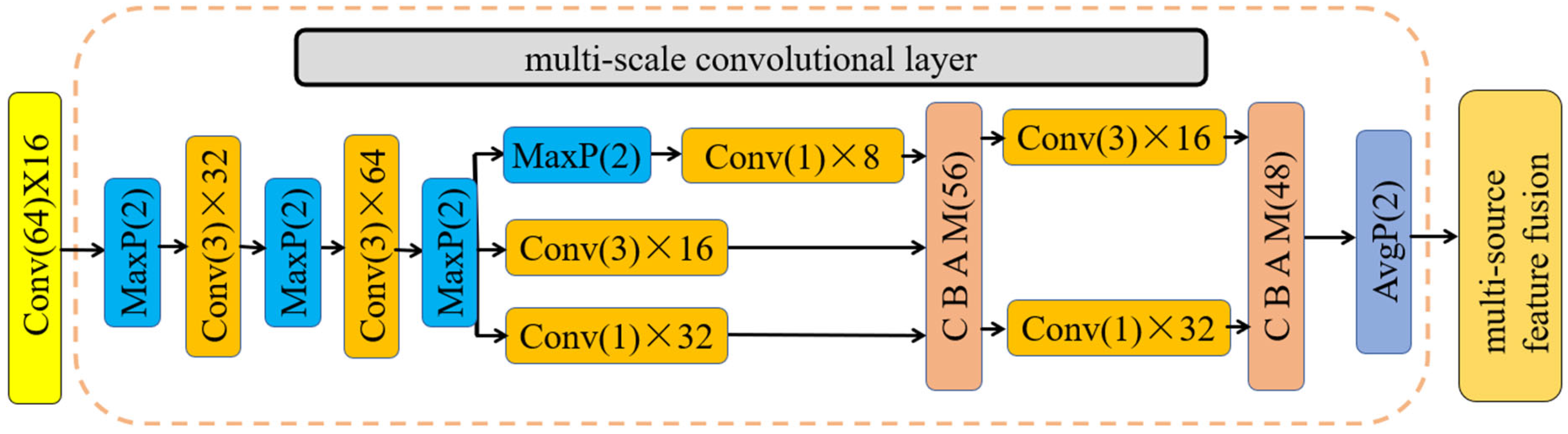

SOCNN (Structural optimisation achieved by integrating multi-scale convolutions with the broad convolutional approach) [13]: As a cutting-edge research achievement, the model has undergone structural optimization.

SOCNN-CBAM (Introducing Attention Mechanism): Based on multi-scale convolution, the CBAM attention mechanism is added after two layers of multi-scale structure.

MS-SOCNN (multi-source SOCNN): Directly performs multi-source data splicing based on SOCNN without applying the CBAM.

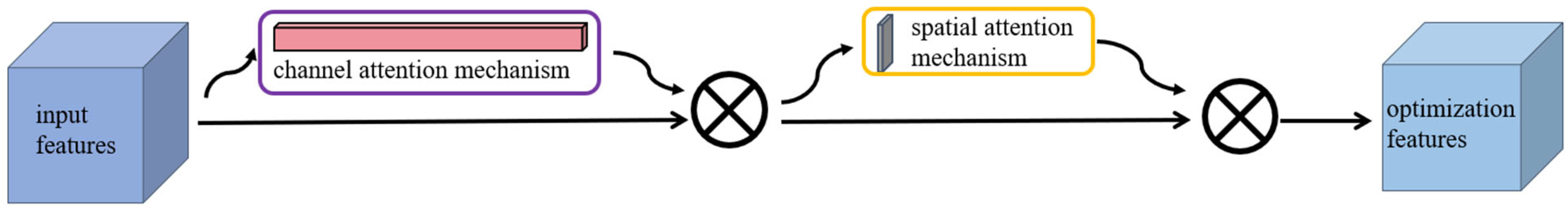

MSFAF-CNN (a novel multi-source data attention fusion network proposed in this study): By transforming data into ‘super channels’, CBAM attention is applied to perform weighted fusion of multi-source data.

- (2) Domain adaptation transfer experiment

MSFAF-CNN (CEL): Trained using cross-entropy loss.

MSFAF-CNN (LMMD): Traditional subdomain-adaptive transfer learning based on the local maximum mean discrepancy.

MSFAF-CNN (MS-LMMD): Based on the proposed multi-source data fusion structure, multi-source subdomain adaptation transfer learning is performed using an improved MS-LMMD loss function.
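For orientation, the standard LMMD term from the subdomain adaptation literature, which the LMMD variant above builds on, can be written as follows. The notation is an assumption introduced here, not taken from this paper: C is the number of classes, φ the kernel feature map, w the class weights (one-hot labels in the source domain, predicted probabilities in the target domain), and λ a trade-off weight. The multi-source modification used in MS-LMMD is not reproduced here.

```latex
\[
\hat{d}_{\mathcal{H}}(p, q) = \frac{1}{C} \sum_{c=1}^{C}
  \left\| \sum_{x_i^s \in \mathcal{D}_s} w_i^{sc}\, \phi(x_i^s)
        - \sum_{x_j^t \in \mathcal{D}_t} w_j^{tc}\, \phi(x_j^t) \right\|_{\mathcal{H}}^{2},
\qquad
w_i^{c} = \frac{y_{ic}}{\sum_{(x_j, y_j) \in \mathcal{D}} y_{jc}}
\]
\[
\mathcal{L} = \mathcal{L}_{\mathrm{CE}} + \lambda\, \hat{d}_{\mathcal{H}}(p, q)
\]
```

Under this formulation, the CEL variant corresponds to λ = 0, so only the source-domain classification loss is optimized.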

All training and testing were implemented in the PyTorch 2.6 deep learning framework with Python 3.9. The training hyperparameters were set as follows: batch size batch_size = 50, learning rate lr = 0.001, Adam optimiser, and 250 epochs.

The outcomes of the aforementioned comparison experiments not only verify the robustness and superiority of the MSFAF-CNN model in noisy environments, but also reveal the important role of the MS-LMMD domain adaptation strategy in improving its performance.

4.2.1. Comparison of Feature Extraction Model Performance

To reduce the impact of random parameter initialization on the evaluation results, each experiment was repeated in five independent runs. The hyperparameters were held fixed and the network weights were reinitialized in each run. The arithmetic mean of the five runs was taken as the final performance of the model. The results are presented in Table 3.

Among the single-channel models, the multi-scale SOCNN improves substantially on TICNN, with a 3.58% increase in accuracy on the x channel at −4 dB. SOCNN-CBAM in turn improves on the base SOCNN, and the benefit of the attention mechanism becomes more evident as the noise level increases. At −4 dB, accuracy on the x channel rose from 93.25% to 94.56% (+1.31 percentage points), and accuracy on the y channel rose from 95.24% to 96.76% (+1.52 percentage points).

MS-SOCNN attains an accuracy rate of 98.56% at −4 dB through dual-channel direct concatenation, signifying a 1.80% enhancement over the single-channel optimal SOCNN-CBAM (y-channel 96.76%), thereby underscoring the complementary value of multi-source data. Meanwhile, MSFAF-CNN employs ‘super channel’ reconstruction combined with CBAM fusion, further improving accuracy at −4 dB to 99.04%, an increase of 0.48% over MS-SOCNN, and achieving 100% stable diagnosis in conditions above 2 dB.

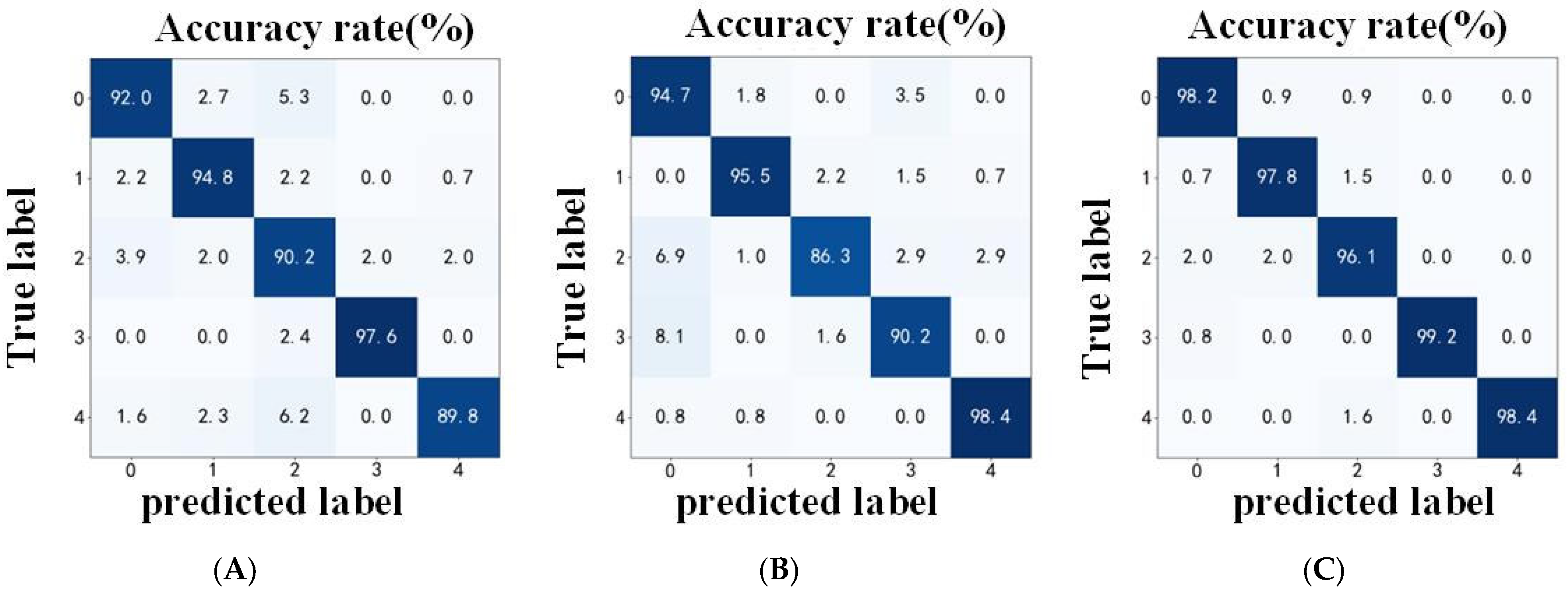

To demonstrate more intuitively the synergistic optimization effect between single-channel and multi-channel models, the confusion matrices of the two channels of SOCNN-CBAM at −4 dB are compared with those of MSFAF-CNN, as illustrated in Figure 7.

In the single-channel confusion matrices, the x channel recognizes labels 2 and 4 (tooth damage and tooth loss) with accuracies of 90.2% and 89.8%, respectively, both below its overall accuracy of 91.03%. This indicates that x-channel data presents greater recognition challenges for these two categories. On the y channel, labels 2 and 3 (tooth defects and tooth fractures) fell below the overall average accuracy of 92.04%. However, accuracy for label 4 (tooth missing) reached 98.4%, significantly outperforming the x-channel data. Thus, despite the small difference in overall average accuracy, each channel’s data clearly exhibits distinct advantages for different faults.

The high accuracy of MSFAF-CNN diagnostic results is attributable to its core technology, which suppresses noise features through channel attention, reinforces the location of key vibration segments through spatial attention, distinguishes the influence of multiple data sources in diagnosis, and applies multiple data sources for collaborative optimization, ultimately improving the model’s accuracy.

4.2.2. Performance Comparison of Transfer Learning Methods

The proposed model and method were trained and tested under the domain adaptation comparison settings outlined above, and the accuracy on the transfer learning target domain was recorded. Each experiment was run five times and the mean was calculated. The results are displayed in Table 4.

In the domain adaptation transfer experiments, the baseline cross-entropy loss (CEL) averaged below 84% accuracy at every noise level, considerably lower than the other two methods. Following the introduction of the LMMD method, the accuracy rate in the A→B direction increased from 94.27% to 98.95%, while the accuracy in the B→A direction increased from 95.78% to 98.85%. The multi-source MS-LMMD method further improved transfer performance, achieving the highest accuracy of the three methods under all noise conditions. Compared with conventional LMMD, it delivered an average improvement of 0.5% to 1.2%, indicating better noise resilience and adaptability to operating conditions.

To visualize the feature-layer output, t-SNE dimensionality reduction was applied to the transfer task from source domain A to target domain B at an SNR of −4 dB, the scenario with the lowest average transfer accuracy, as illustrated in Figure 8.

The t-SNE plot shows that when only CEL on the source domain is used, multiple labels become intermingled. This indicates that a classification loss alone cannot overcome inter-domain distribution differences. The CEL + LMMD approach achieves subdomain alignment through the local maximum mean discrepancy, significantly enhancing the model’s generalization capability and demonstrating the effectiveness of domain adaptation in matching feature distributions. The CEL + MS-LMMD model additionally incorporates a multi-source data fusion mechanism, facilitating more precise domain-invariant feature extraction across operating conditions. Its reduced-dimension features are more cohesive, with clearer class boundaries.

To verify the stability of the transfer learning method, the standard deviation of the accuracy over five training runs in the −4 dB noise scenario was calculated. The results are shown in Table 5.

Table 5 illustrates that the conventional CEL method is deficient in domain adaptation mechanisms, leading to substantial noise interference during training and a standard deviation that is considerably higher than that of domain adaptation methods. LMMD enhances model robustness through subdomain alignment constraints, but MS-LMMD, which uses multi-source optimization, performs better in terms of consistency in the direction of transfer, confirming the strengthening effect of multi-source feature fusion on training stability.
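The per-method statistics reported over five runs (mean accuracy and standard deviation) can be computed as in the sketch below. The accuracy values are hypothetical placeholders, not results from this paper, and the use of the sample standard deviation (n − 1 denominator) is an assumption, since the text does not say which estimator was used.

```python
import statistics


def summarize_runs(accuracies):
    """Return (mean, sample standard deviation) of per-run accuracies in percent."""
    return statistics.mean(accuracies), statistics.stdev(accuracies)


# Hypothetical accuracies (%) from five independent runs of one method.
runs = [98.0, 98.5, 99.0, 98.5, 98.0]
mean_acc, std_acc = summarize_runs(runs)
```

A smaller standard deviation across runs indicates that the result depends less on the random weight initialization, which is the sense in which stability is compared here.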

Using the test accuracy at −6 dB, where differences in model performance are most pronounced, as the performance metric, we analyzed the performance and computational overhead of the feature-extraction models on the laboratory dataset. The results are shown in Table 6.

Table 6 demonstrates a significant improvement of 14.81 percentage points in model accuracy, progressing from the baseline model WDCNN (84.34%) to the final proposed model MSFAF-CNN (99.15%). This incremental improvement process clearly demonstrates the contribution of each module: SOCNN yielded a marginal 0.14% gain through structural optimisation; the introduction of the CBAM attention mechanism elevated accuracy to 87.45%, validating the effectiveness of attention mechanisms in feature selection; and the incorporation of the feature fusion module enabled FAF-CNN to reach 91.15%, highlighting the critical role of multi-scale feature fusion in diagnostic performance. Notably, MSFAF-CNN further elevated accuracy to 99.15% through multi-source data fusion (channels 1, 2, and 3), fully validating the complementary advantages of multi-source information.

Beyond accuracy and training time, the memory footprint and inference speed are critical for assessing the industrial applicability of a diagnostic model. The peak GPU memory consumption during training for the proposed MSFAF-CNN model was approximately 2.8 GB with a batch size of 50. While higher than the 1.2 GB required by the single-channel WDCNN baseline, this remains well within the capabilities of modern industrial-grade GPUs, posing no barrier to development or deployment.

4.3. Comparative Experimental Dataset

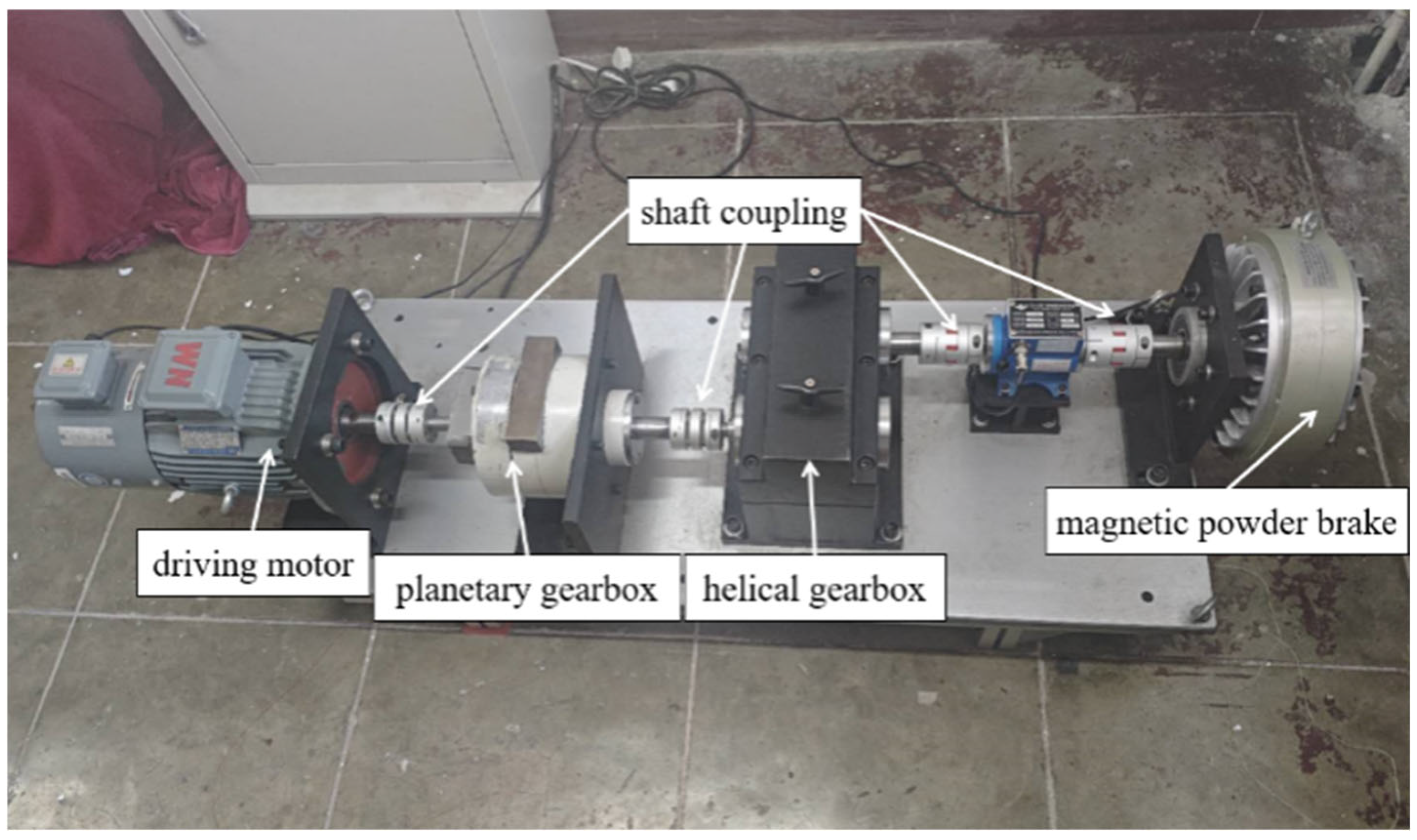

To address the need for adaptable fault diagnosis methods in wind turbine planetary gearboxes, we designed and developed a dedicated experimental data acquisition system. This system is integrated with our laboratory’s gearbox fault diagnosis test bench at North University of China in Taiyuan, China. It serves two primary purposes: to supplement the gaps in existing public datasets and to provide experimental validation for typical planetary gearbox faults.

The test bench primarily consists of a variable-speed drive motor, multiple couplings, a planetary gearbox, a helical gearbox, and a magnetic powder brake. Its structure is illustrated in Figure 9. Six typical planetary gearbox states were selected from the laboratory dataset for experimental validation: healthy state, root crack in planetary gear teeth, pitting on planetary gear tooth surfaces, wear on planetary gear tooth surfaces, sun gear tooth fracture, and complete tooth breakage in the sun gear.

To simulate the actual operating load, a CZF-10 magnetic powder brake is used to apply the load. Its rated torque is 100 N·m, and its excitation current ranges from 0 to 2.5 A. When the gearbox operates at maximum speed, excessive load may cause the brake to sinter. Therefore, an excitation current of 0 to 0.5 A is applied, corresponding to an output torque of 0 to 20 N·m, to simulate the actual working conditions.
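The current-to-torque figures above are consistent with a linear relationship between excitation current and brake torque. The sketch below makes that relationship explicit; the linearity is an assumption introduced here for illustration (a real brake should be checked against its calibration curve), and the function name `brake_torque` is hypothetical.

```python
RATED_TORQUE_NM = 100.0  # rated torque quoted in the text
MAX_CURRENT_A = 2.5      # upper end of the excitation current range quoted in the text


def brake_torque(current_a):
    """Estimate output torque (N·m) from excitation current (A), assuming linearity."""
    if not 0.0 <= current_a <= MAX_CURRENT_A:
        raise ValueError("excitation current outside the 0 to 2.5 A range")
    return RATED_TORQUE_NM * current_a / MAX_CURRENT_A


# Upper end of the current range actually used in the experiments.
torque_at_half_amp = brake_torque(0.5)
```

Under this assumption the brake delivers 40 N·m per ampere, so the 0.5 A limit corresponds to the 20 N·m maximum load quoted above.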

In the actual operation of wind turbine gearboxes, the impact of complex working conditions on diagnostic results cannot be ignored. These conditions are characterized by time-varying rotational speeds and variable loads caused by wind instability. To better simulate the real operating conditions of wind turbine gearboxes, data were collected in the laboratory under variable working conditions. In addition to planetary gear faults, sun gear faults were also included. To account for noise interference from harsh environments, the data were divided into datasets as shown in Table 7. Dataset NUC-A represents the low-speed and heavy-load condition, Dataset NUC-B corresponds to the high-speed and light-load condition, while Dataset NUC-C stands for the condition with time-varying rotational speed and medium load.

To simulate the label-scarcity scenario in real environments where only a single type of data label is available, unsupervised fault diagnosis under variable conditions was performed across three datasets with different rotational speeds and loads. For each level of additional noise, the same experiment was repeated 5 times, and the average accuracy was taken as the result, as shown in Table 8.

It can be observed from the data table that the accuracy of all methods generally increases as the noise intensity decreases, which indicates the impact of noise on diagnostic accuracy.

Based on the transfer learning experimental results presented in Table 8, the proposed CEL + 0.5 MS-LMMD + 0.5 MSFAF-CNN approach achieved optimal performance across all transfer directions and noise conditions. Particularly under the highly challenging −6 dB strong noise scenario, the diagnostic accuracy for each transfer task exceeded 94%, significantly outperforming other comparative methods. Progressive ablation analysis reveals that incorporating the LMMD loss yields a substantial improvement of approximately 15–20 percentage points, highlighting the critical role of subdomain alignment in cross-domain diagnosis. Further integration of MS-LMMD delivers an additional 0.5–1.5 percentage point gain in complex transfer tasks such as A→C and B→C, demonstrating the efficacy of multi-source local distribution alignment. The integration with MSFAF-CNN enables the model to achieve near-100% accuracy in most scenarios, validating the synergistic enhancement effect of multi-source feature fusion and multi-source domain adaptation mechanisms. Moreover, as the signal-to-noise ratio improves from −6 dB to 2 dB, the performance of all methods gradually improves. The proposed approach consistently maintains the highest accuracy and strongest robustness across various noise conditions, showcasing superior cross-operating-condition generalisation capability and engineering application value.