Deep Learning Framework for Facial Reconstruction Outcome Prediction: Integrating Image Inpainting and Depth Estimation for Computer-Assisted Surgical Planning

Abstract

1. Introduction

2. Materials and Methods

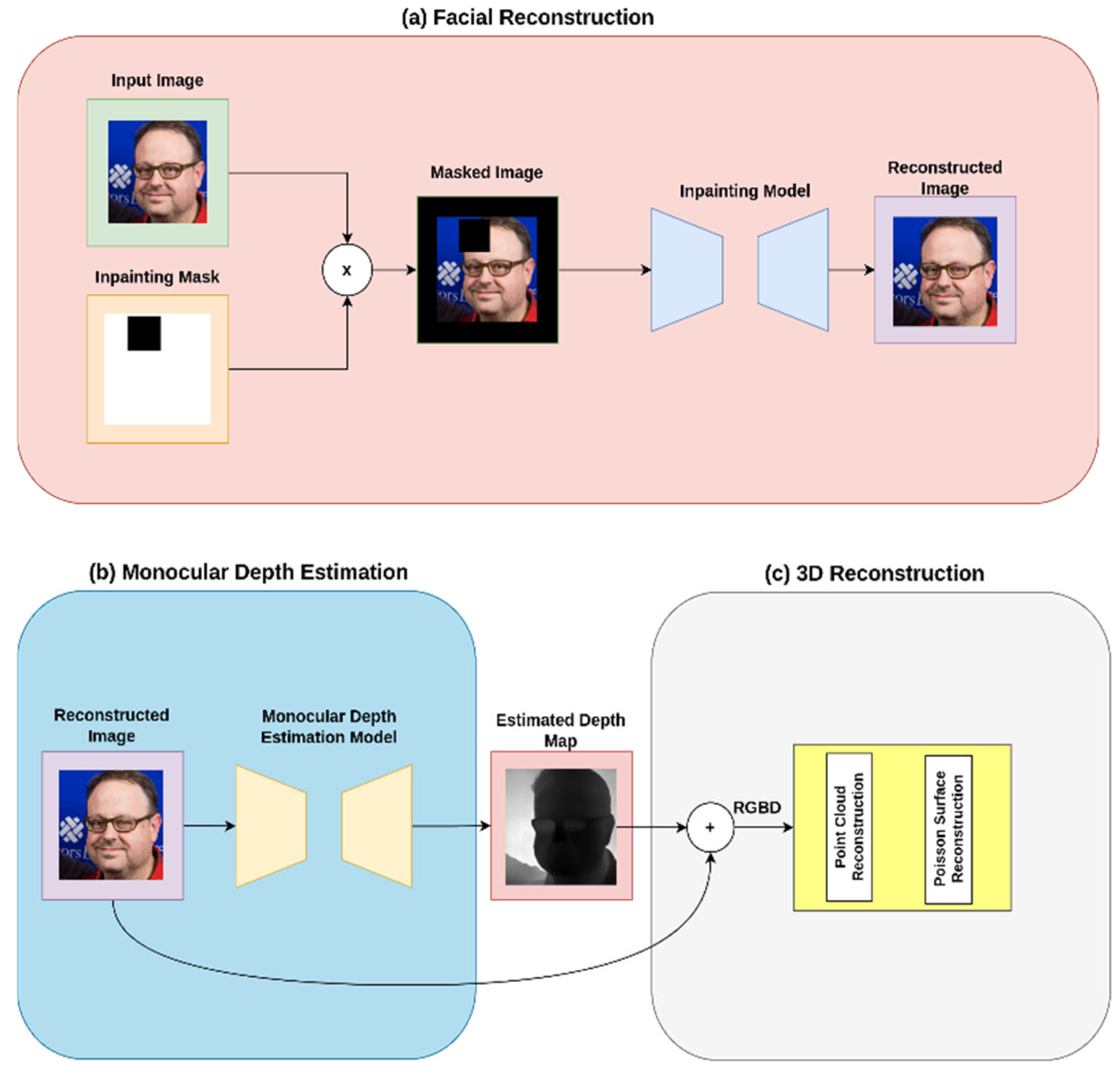

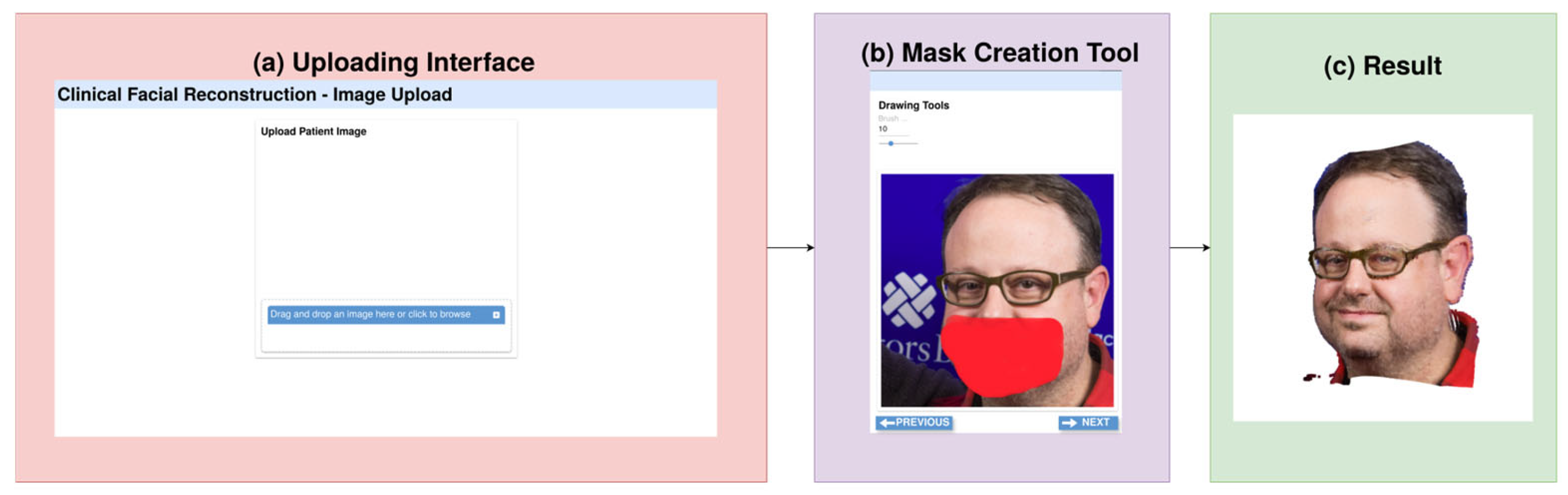

2.1. Proposed System Architecture

2.1.1. Image Acquisition and Preprocessing

2.1.2. Facial Inpainting Module

2.1.3. Depth Estimation and 3D Reconstruction Module

2.2. Model Selection

2.2.1. Facial Inpainting Models

- LaMa [10] handles large missing regions, a critical capability for facial reconstruction tasks. It does so through a Fast Fourier Convolution-based architecture [20], whose receptive field covers the whole image even in early layers. This lets the model process global image information efficiently while preserving local structural coherence.

- LGNet [11] addresses the challenge of maintaining both local and global coherence through a multi-stage refinement approach. A coarse prediction is progressively refined by stages that enforce local feature consistency and global structural coherence, respectively.

- MAT [12] introduces a transformer-based solution designed for large holes with complex mask shapes. Its attention mechanism lets the model draw on distant facial features when reconstructing missing regions, while a dynamically updated mask restricts attention to valid (unmasked) tokens to keep features consistent.
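
The global receptive field attributed to LaMa comes from the spectral branch of Fast Fourier Convolution [20]: features are transformed with a 2D FFT, mixed channel-wise at every frequency, and transformed back, so each output pixel depends on every input pixel after a single layer. The NumPy sketch below illustrates only that idea under simplifying assumptions (no batch dimension, no batch normalization, no local branch, and a plain matrix standing in for the learned 1×1 convolution). `spectral_transform` is a hypothetical helper, not LaMa's implementation.

```python
import numpy as np


def spectral_transform(x, weight, activation=True):
    """Simplified sketch of the global (Fourier) step used in FFC-style layers.

    x:      real feature map of shape (C, H, W).
    weight: (2C, 2C) matrix used as a 1x1 convolution on stacked real/imag parts
            (assumption: stands in for a learned conv; no batch norm here).
    """
    C, H, W = x.shape
    # Real 2D FFT over spatial axes: every frequency bin depends on every pixel.
    X = np.fft.rfft2(x, axes=(-2, -1))                    # (C, H, W // 2 + 1), complex
    stacked = np.concatenate([X.real, X.imag], axis=0)    # (2C, H, W // 2 + 1), real
    # Pointwise channel mixing, applied independently at each frequency bin.
    mixed = np.einsum("oc,chw->ohw", weight, stacked)
    if activation:
        mixed = np.maximum(mixed, 0.0)                    # ReLU on the spectral features
    # Reassemble the complex spectrum and return to the spatial domain.
    Y = mixed[:C] + 1j * mixed[C:]
    return np.fft.irfft2(Y, s=(H, W), axes=(-2, -1))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((4, 16, 16))
    w = rng.standard_normal((8, 8))
    x_perturbed = x.copy()
    x_perturbed[:, 0, 0] += 1.0  # change only the top-left pixel
    delta = spectral_transform(x_perturbed, w) - spectral_transform(x, w)
    # The opposite corner also changes: the receptive field is image-wide.
    print(np.abs(delta[:, -1, -1]).max() > 0)
```

With an identity `weight` and `activation=False`, the function returns its input unchanged, which is a quick sanity check that the FFT round trip is lossless; any nontrivial mixing then spreads information across the entire image.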

2.2.2. Monocular Depth Estimation Models

- ZoeDepth [13] targets metric (absolute-scale) monocular depth estimation and performs robustly across varied scenes. It combines relative-depth pretraining with metric fine-tuning: transformer-based feature extraction is followed by a specialized metric bins module that recovers the absolute scale of the scene.

- Depth Anything V2 [14] represents a recent advancement in general-purpose depth estimation, utilizing a teacher-student framework to achieve robust depth prediction. The model’s architecture leverages the semantic understanding capabilities of the DINOv2 backbone [23], enabling it to capture both coarse structure and fine details in depth estimation.

- Depth Pro [15] is a foundation model that produces high-resolution metric depth maps with sharp, high-frequency detail. Its efficient multi-scale Vision Transformer architecture yields state-of-the-art boundary accuracy, which is crucial for detailed 3D facial modeling.
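
Using a metric depth map for 3D facial modeling typically starts by back-projecting each pixel through a pinhole camera model. The sketch below assumes known camera intrinsics (focal lengths `fx`, `fy` and principal point `cx`, `cy`, all in pixels) and depth in the units desired for the point coordinates; the function name and parameters are illustrative assumptions, not the paper's pipeline.

```python
import numpy as np


def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project an (H, W) metric depth map to an (N, 3) point cloud.

    Pinhole model (assumed intrinsics): X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    Pixels with non-finite or non-positive depth are discarded.
    """
    H, W = depth.shape
    # v indexes rows (image y), u indexes columns (image x).
    v, u = np.meshgrid(np.arange(H), np.arange(W), indexing="ij")
    z = depth.astype(np.float64)
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    points = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    valid = np.isfinite(points).all(axis=1) & (points[:, 2] > 0)
    return points[valid]


if __name__ == "__main__":
    # Hypothetical 4x4 depth map of a flat surface 0.5 m from the camera.
    depth = np.full((4, 4), 0.5)
    pts = depth_to_points(depth, fx=500.0, fy=500.0, cx=2.0, cy=2.0)
    print(pts.shape)  # (16, 3)
```

A point cloud of this form is the usual input to surface reconstruction methods such as Poisson surface reconstruction [19], which is available in Open3D [18]. Errors in the depth map propagate directly into the geometry, which is why boundary accuracy matters for facial detail.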

2.3. Datasets

2.4. Evaluation Metrics

2.4.1. Inpainting Quality Metrics

2.4.2. Depth Estimation Metrics

3. Results

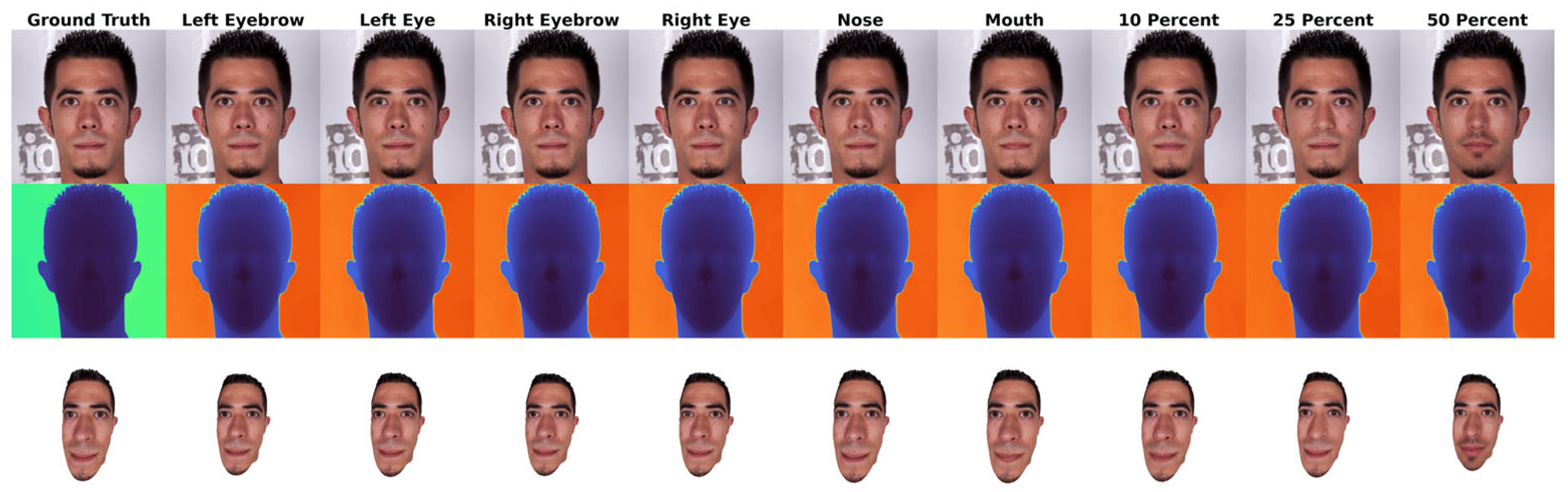

3.1. Inpainting Performance Analysis

3.2. Monocular Depth Estimation Performance Analysis

4. Discussion and Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Shaye, D.A.; Tollefson, T.T.; Strong, E.B. Use of intraoperative computed tomography for maxillofacial reconstructive surgery. JAMA Facial Plast. Surg. 2015, 17, 113–119.

2. Heiland, M.; Schulze, D.; Blake, F.; Schmelzle, R. Intraoperative imaging of zygomaticomaxillary complex fractures using a 3D C-arm system. Int. J. Oral Maxillofac. Surg. 2005, 34, 369–375.

3. Tarassoli, S.P.; Shield, M.E.; Allen, R.S.; Jessop, Z.M.; Dobbs, T.D.; Whitaker, I.S. Facial reconstruction: A systematic review of current image acquisition and processing techniques. Front. Surg. 2020, 7, 537616.

4. Afaq, S.; Jain, S.K.; Sharma, N.; Sharma, S. Acquisition of precision and reliability of modalities for facial reconstruction and aesthetic surgery: A systematic review. J. Pharm. Bioallied Sci. 2023, 15 (Suppl. S2), S849–S855.

5. Monini, S.; Ripoli, S.; Filippi, C.; Fatuzzo, I.; Salerno, G.; Covelli, E.; Bini, F.; Marinozzi, F.; Marchelletta, S.; Manni, G.; et al. An objective, markerless videosystem for staging facial palsy. Eur. Arch. Otorhinolaryngol. 2021, 278, 3541–3550.

6. Fuller, S.C.; Strong, E.B. Computer applications in facial plastic and reconstructive surgery. Curr. Opin. Otolaryngol. Head Neck Surg. 2007, 15, 233–237.

7. Scolozzi, P. Applications of 3D orbital computer-assisted surgery (CAS). J. Stomatol. Oral Maxillofac. Surg. 2017, 118, 217–223.

8. Davis, K.S.; Vosler, P.S.; Yu, J.; Wang, E.W. Intraoperative image guidance improves outcomes in complex orbital reconstruction by novice surgeons. J. Oral Maxillofac. Surg. 2016, 74, 1410–1415.

9. Luz, M.; Strauss, G.; Manzey, D. Impact of image-guided surgery on surgeons' performance: A literature review. Int. J. Hum. Factors Ergon. 2016, 4, 229–263.

10. Suvorov, R.; Logacheva, E.; Mashikhin, A.; Remizova, A.; Ashukha, A.; Silvestrov, A.; Kong, N.; Goka, H.; Park, K.; Lempitsky, V. Resolution-robust large mask inpainting with Fourier convolutions. arXiv 2021, arXiv:2109.07161.

11. Quan, W.; Zhang, R.; Zhang, Y.; Li, Z.; Wang, J.; Yan, D.-M. Image inpainting with local and global refinement. IEEE Trans. Image Process. 2022, 31, 2405–2420.

12. Li, W.; Lin, Z.; Zhou, K.; Qi, L.; Wang, Y.; Jia, J. MAT: Mask-aware transformer for large hole image inpainting. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 10748–10758.

13. Bhat, S.F.; Birkl, R.; Wofk, D.; Wonka, P.; Müller, M. ZoeDepth: Zero-shot transfer by combining relative and metric depth. arXiv 2023, arXiv:2302.12288.

14. Yang, L.; Kang, B.; Huang, Z.; Xu, X.; Feng, J.; Zhao, H. Depth Anything: Unleashing the power of large-scale unlabeled data. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024.

15. Bochkovskii, A.; Delaunoy, A.; Germain, H.; Santos, M.; Zhou, Y.; Richter, S.R.; Koltun, V. Depth Pro: Sharp Monocular Metric Depth in Less Than a Second. arXiv 2024, arXiv:2410.02073.

16. Han, J.J.; Acar, A.; Henry, C.; Wu, J.Y. Depth Anything in Medical Images: A Comparative Study. arXiv 2024, arXiv:2401.16600.

17. Manni, G.; Lauretti, C.; Prata, F.; Papalia, R.; Zollo, L.; Soda, P. BodySLAM: A Generalized Monocular Visual SLAM Framework for Surgical Applications. arXiv 2024, arXiv:2408.03078.

18. Zhou, Q.-Y.; Park, J.; Koltun, V. Open3D: A modern library for 3D data processing. arXiv 2018, arXiv:1801.09847.

19. Kazhdan, M.M.; Bolitho, M.; Hoppe, H. Poisson surface reconstruction. In Proceedings of the Eurographics Symposium on Geometry Processing, Sardinia, Italy, 26–28 June 2006.

20. Chi, L.; Jiang, B.; Mu, Y. Fast Fourier Convolution. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–12 December 2020.

21. Quan, W.; Chen, J.; Liu, Y.; Yan, D.-M.; Wonka, P. Deep Learning-Based Image and Video Inpainting: A Survey. Int. J. Comput. Vis. 2024, 132, 2367–2400.

22. Liu, Z.; Luo, P.; Wang, X.; Tang, X. Deep learning face attributes in the wild. In Proceedings of the International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

23. Oquab, M.; Darcet, T.; Moutakanni, T.; Vo, H.V.; Szafraniec, M.; Khalidov, V.; Fernandez, P.; Haziza, D.; Massa, F.; El-Nouby, A.; et al. DINOv2: Learning robust visual features without supervision. arXiv 2024, arXiv:2304.07193.

24. Karras, T.; Laine, S.; Aila, T. A style-based generator architecture for generative adversarial networks. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 4217–4228.

25. Kazemi, V.; Sullivan, J. One millisecond face alignment with an ensemble of regression trees. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1867–1874.

26. Basak, S.; Khan, F.; Javidnia, H.; Corcoran, P.; McDonnell, R.; Schukat, M. C3I-SynFace: A synthetic head pose and facial depth dataset using seed virtual human models. Data Brief 2023, 48, 109087.

27. Gómez-Rodríguez, J.J.; Lamarca, J.; Morlana, J.; Tardós, J.D.; Montiel, J.M.M. SD-DefSLAM: Semi-Direct Monocular SLAM for Deformable and Intracorporeal Scenes. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi'an, China, 30 May–5 June 2021; pp. 5170–5177.

28. Masoumian, A.; Rashwan, H.A.; Cristiano, J.; Asif, M.S.; Puig, D. Monocular Depth Estimation Using Deep Learning: A Review. Sensors 2022, 22, 5353.

29. Li, S.; Zhu, S.; Ge, Y.; Zeng, B.; Imran, M.A.; Abbasi, Q.H.; Cooper, J. Depth-guided Deep Video Inpainting. IEEE Trans. Multimed. 2023, 26, 5860–5871.

30. Zhang, F.X.; Chen, S.; Xie, X.; Shum, H.P.H. Depth-Aware Endoscopic Video Inpainting. In Proceedings of Medical Image Computing and Computer Assisted Intervention (MICCAI), Marrakesh, Morocco, 6–10 October 2024.

| Metric | Formula | Description |
|---|---|---|
| PSNR | | Peak signal-to-noise ratio in decibels, computed from the mean squared error relative to the maximum possible pixel value. Higher values indicate better reconstruction quality. |
| SSIM | | Structural similarity index measuring perceived quality through luminance, contrast, and structure comparisons. |
| RMSE-log | | Root mean square error in logarithmic space, which better handles a wide range of values and emphasizes relative differences. |
| FID | | Fréchet distance between the Inception feature distributions of real and generated images; correlates with human perception of realism. |
| LPIPS | | Learned perceptual similarity, computed as weighted distances between deep neural network features. |
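
The two purely pixel-based metrics in this table can be computed in a few lines. The sketch below uses common textbook definitions, which may differ in detail from the authors' evaluation code (value range, color handling, and the ε used to avoid log(0)); `max_val` and `eps` are assumed parameters. SSIM, FID, and LPIPS rely on windowed statistics or pretrained networks and are normally taken from dedicated libraries, so they are omitted here.

```python
import numpy as np


def psnr(reference, estimate, max_val=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(max_val**2 / MSE).

    max_val is the largest possible pixel value (assumed 1.0 for images in [0, 1]).
    """
    ref = np.asarray(reference, dtype=float)
    est = np.asarray(estimate, dtype=float)
    mse = np.mean((ref - est) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))


def rmse_log(reference, estimate, eps=1e-6):
    """RMSE between log intensities; eps (an assumption) guards against log(0)."""
    ref = np.log(np.asarray(reference, dtype=float) + eps)
    est = np.log(np.asarray(estimate, dtype=float) + eps)
    return float(np.sqrt(np.mean((ref - est) ** 2)))


if __name__ == "__main__":
    a = np.zeros((8, 8))
    print(psnr(a, a + 0.1))  # about 20.0 dB: MSE = 0.01, so 10 * log10(100)
```

For SSIM and PSNR on real images, scikit-image provides `skimage.metrics.structural_similarity` and `skimage.metrics.peak_signal_noise_ratio`, which also handle multichannel inputs.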

| Metric | Formula | Description |
|---|---|---|
| Abs. Rel. Diff. | | Mean absolute depth error divided by the true depth, so that errors at different distances are weighted comparably |
| Sq. Rel. | | Mean squared depth error divided by the true depth; squaring emphasizes larger errors, making it particularly sensitive to outliers |
| RMSE | | Square root of the mean squared depth error in metric units; heavily penalizes large deviations from the ground-truth depth |
| RMSE-log | | Root mean square error computed on log depths, better handling wide depth ranges while remaining sensitive to small depth differences |
| Accuracy (δ) | | Proportion of predictions for which max(predicted/true, true/predicted) is below a threshold δ, commonly evaluated at δ = 1.25, 1.25², and 1.25³ |
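
These depth metrics follow definitions widely used for monocular depth evaluation. The NumPy sketch below implements those standard forms, assuming predictions and ground truth are already in the same metric units and aligned pixel for pixel; the validity mask and the `min_depth` cutoff are assumptions, and any protocol-specific steps in the paper (such as scale alignment or cropping) are not reproduced.

```python
import numpy as np


def depth_metrics(pred, gt, min_depth=1e-3):
    """Standard monocular depth error and accuracy metrics over valid pixels.

    min_depth (assumed) excludes pixels with missing or near-zero ground truth.
    """
    pred = np.asarray(pred, dtype=float).ravel()
    gt = np.asarray(gt, dtype=float).ravel()
    valid = np.isfinite(gt) & (gt > min_depth) & np.isfinite(pred) & (pred > 0)
    pred, gt = pred[valid], gt[valid]

    diff = pred - gt
    # Symmetric ratio: >= 1, and exactly 1 for a perfect prediction.
    ratio = np.maximum(pred / gt, gt / pred)
    return {
        "abs_rel": float(np.mean(np.abs(diff) / gt)),
        "sq_rel": float(np.mean(diff ** 2 / gt)),
        "rmse": float(np.sqrt(np.mean(diff ** 2))),
        "rmse_log": float(np.sqrt(np.mean((np.log(pred) - np.log(gt)) ** 2))),
        "delta1": float(np.mean(ratio < 1.25)),
        "delta2": float(np.mean(ratio < 1.25 ** 2)),
        "delta3": float(np.mean(ratio < 1.25 ** 3)),
    }
```

As a worked example, `depth_metrics([2.0, 2.0], [1.0, 2.0])` gives `abs_rel = 0.5` and `delta1 = 0.5`: the first pixel is off by a factor of two (relative error 1.0, ratio 2.0, which fails even the 1.25³ ≈ 1.95 threshold), while the second is exact.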

| Masking | Model | PSNR ↑ | SSIM ↑ | RMSE-log ↓ | LPIPS ↓ | FID ↓ |
|---|---|---|---|---|---|---|
| Left Eyebrow | LaMa | 20.1925 | 0.5694 | 0.3422 | 0.0720 | 1.2552 |
| | LGNet | 25.1119 | 0.7542 | 0.2200 | 0.0696 | 2.8648 |
| | MAT | 22.9053 | 0.6943 | 0.2748 | 0.0719 | 2.6511 |
| Left Eye | LaMa | 16.7562 | 0.342 | 0.7435 | 0.0994 | 1.6457 |
| | LGNet | 20.0839 | 0.5311 | 0.5334 | 0.0708 | 2.8785 |
| | MAT | 19.4222 | 0.5228 | 0.5539 | 0.0693 | 2.9148 |
| Nose | LaMa | 19.7346 | 0.7516 | 0.3319 | 0.1481 | 1.7137 |
| | LGNet | 25.6988 | 0.8803 | 0.1925 | 0.1193 | 2.7347 |
| | MAT | 23.6441 | 0.8527 | 0.2334 | 0.1186 | 2.6879 |
| Mouth | LaMa | 17.9572 | 0.5447 | 0.4530 | 0.1985 | 1.9385 |
| | LGNet | 22.3937 | 0.7456 | 0.2922 | 0.1567 | 3.0683 |
| | MAT | 21.0938 | 0.7092 | 0.3327 | 0.1492 | 3.0341 |
| Right Eye | LaMa | 16.7474 | 0.3342 | 0.7409 | 0.0940 | 1.7031 |
| | LGNet | 19.9782 | 0.5254 | 0.5363 | 0.0694 | 2.8752 |
| | MAT | 19.4241 | 0.5210 | 0.5524 | 0.0712 | 2.8912 |
| Right Eyebrow | LaMa | 20.0869 | 0.5690 | 0.3530 | 0.0737 | 1.2399 |
| | LGNet | 24.9909 | 0.7513 | 0.2253 | 0.0711 | 2.8796 |
| | MAT | 22.6738 | 0.6896 | 0.2828 | 0.0729 | 2.6594 |

| Masking | Model | PSNR ↑ | SSIM ↑ | RMSE-log ↓ | LPIPS ↓ | FID ↓ |
|---|---|---|---|---|---|---|
| 10% | LaMa | 29.2582 | 0.9538 | 0.2671 | 0.0198 | 0.0030 |
| | LGNet | 31.4162 | 0.9587 | 0.5945 | 0.0159 | 0.0020 |
| | MAT | 11.6165 | 0.3972 | 1.4479 | 0.3565 | 0.1571 |
| 25% | LaMa | 22.7765 | 0.8666 | 0.4563 | 0.0706 | 0.0529 |
| | LGNet | 25.3864 | 0.8839 | 0.6643 | 0.0462 | 0.0105 |
| | MAT | 13.3344 | 0.5346 | 1.2856 | 0.2658 | 0.0923 |
| 50% | LaMa | 18.0442 | 0.7130 | 0.7147 | 0.1826 | 0.3642 |
| | LGNet | 20.2653 | 0.7344 | 0.7976 | 0.1153 | 0.0573 |
| | MAT | 16.9843 | 0.7276 | 0.9723 | 0.1409 | 0.0436 |

| Model | Abs. Rel. ↓ | Sq. Rel. ↓ | RMSE ↓ | RMSE-log ↓ | δ1 ↑ | δ2 ↑ | δ3 ↑ |
|---|---|---|---|---|---|---|---|
| Depth Anything V2 | 0.6453 | 0.5072 | 0.6411 | 0.6042 | 0.6697 | 0.7144 | 0.7233 |
| ZoeDepth | 0.6509 | 0.5013 | 0.8607 | 0.6061 | 0.5271 | 0.6811 | 0.7116 |
| Depth Pro | 0.1426 | 0.0773 | 0.5424 | 0.2282 | 0.8373 | 0.9778 | 0.9886 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bini, F.; Manni, G.; Marinozzi, F. Deep Learning Framework for Facial Reconstruction Outcome Prediction: Integrating Image Inpainting and Depth Estimation for Computer-Assisted Surgical Planning. Appl. Sci. 2025, 15, 12376. https://doi.org/10.3390/app152312376
