Integrating Sentiment Analysis into Agile Feedback Loops for Continuous Improvement

Abstract

1. Introduction

- Contribution to the Literature on AI Applications in Software Engineering Process Improvement: By combining a literature review with an applied case study, the work bridges gaps between theoretical AI capabilities and practical challenges faced in software project management.

- Demonstration of Sentiment Analysis as a Proactive Risk Detection Mechanism: The study highlights how sentiment trends can signal emerging issues, such as unclear tasks or team stress.

- Practitioner Validation Through Semi-Structured Interviews: The study gathers feedback from team leaders on the usability, relevance, and potential impact of AI-based sentiment analysis in managing agile software projects, providing practical perspectives that complement the technical development.

- Integration of Sentiment Analysis with Agile Project Management Practices: The proposed methodological approach provides a foundation for further exploration of AI-enhanced feedback analysis in software engineering and encourages the development of more sophisticated, context-aware sentiment analytics tools.
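To make the idea of sentiment trends as an early-warning signal more concrete, the sketch below scores free-text task feedback, averages the scores per sprint, and flags sprints whose average drops sharply. It is a minimal illustration under stated assumptions: the word lexicon, the scoring rule, and the 0.3 drop threshold are all hypothetical choices, not the sentiment model used by the authors' tool.

```python
from statistics import mean

# HYPOTHETICAL toy lexicon for illustration only; not the tool's actual sentiment model.
POSITIVE = {"clear", "helpful", "detailed", "complete"}
NEGATIVE = {"unclear", "vague", "confusing", "missing", "ambiguous"}


def score(comment: str) -> float:
    """Score a comment in [-1, 1] as (positive - negative) / matched words; 0.0 if none match."""
    words = [w.strip(".,;:!?").lower() for w in comment.split()]
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    matched = pos + neg
    return 0.0 if matched == 0 else (pos - neg) / matched


def sprint_trend(feedback_by_sprint: dict[str, list[str]]) -> dict[str, float]:
    """Average comment score per sprint, keeping the sprints in insertion order."""
    return {sprint: mean(score(c) for c in comments)
            for sprint, comments in feedback_by_sprint.items() if comments}


def flag_drops(trend: dict[str, float], threshold: float = 0.3) -> list[str]:
    """Return sprints whose average fell by more than `threshold` versus the previous sprint."""
    sprints = list(trend)
    return [cur for prev, cur in zip(sprints, sprints[1:])
            if trend[prev] - trend[cur] > threshold]


feedback = {
    "Sprint 1": ["Clear and detailed description.", "Helpful acceptance criteria."],
    "Sprint 2": ["Description was vague.", "Missing context, confusing scope."],
}
trend = sprint_trend(feedback)
print(trend)              # {'Sprint 1': 1.0, 'Sprint 2': -1.0}
print(flag_drops(trend))  # ['Sprint 2']
```

A flagged sprint does not identify a cause by itself; it only points the team toward the feedback worth reading first, for instance descriptions repeatedly called "vague" or "missing" context.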

2. Materials and Methods

2.1. Leveraging Feedback for Continuous Improvement

- RQ1—What sources of feedback are used to promote continuous improvement?

- RQ2—Does continuous improvement through feedback occur at the level of individuals, processes, teams, or all of these?

- RQ3—What individual competencies of each development team member can benefit from the feedback process?

- RQ4—In what ways do companies and software development projects using agile methodologies benefit from the feedback process?

2.1.1. Inclusion Criteria

- Address software development or the management of software development projects.

- Refer to feedback processes of any type or origin, provided they occur within software development teams.

- Refer to software development based on agile methodologies.

- Address the topic of continuous improvement in the context of software development.

- Published between 2014 and 2024.

2.1.2. Exclusion Criteria

- Studies that did not address the topic of software development or its management.

- Studies that did not address feedback from members of development teams.

- Studies that did not address agile methodologies.

- Studies that did not refer to continuous improvement within the scope of software development.

- Studies that were retracted, unavailable, or duplicated.
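Because each exclusion criterion above largely negates an inclusion criterion, the screening decision can be expressed as a single boolean check. The sketch below is illustrative only: the `Study` record and its field names are hypothetical, and in practice these judgments were made by reading titles, abstracts, and full texts rather than by reading flags.

```python
from dataclasses import dataclass


@dataclass
class Study:
    # HYPOTHETICAL screening record; field names are illustrative, not from the review protocol.
    year: int
    software_development: bool    # addresses software development or its management
    team_feedback: bool           # feedback occurring within software development teams
    agile: bool                   # based on agile methodologies
    continuous_improvement: bool  # continuous improvement in software development
    retracted_unavailable_or_duplicate: bool = False


def is_eligible(s: Study, first_year: int = 2014, last_year: int = 2024) -> bool:
    """All inclusion criteria must hold and the remaining exclusion criterion must not."""
    meets_inclusion = (s.software_development and s.team_feedback and s.agile
                       and s.continuous_improvement
                       and first_year <= s.year <= last_year)
    return meets_inclusion and not s.retracted_unavailable_or_duplicate


candidates = [
    Study(2019, True, True, True, True),    # meets every criterion
    Study(2012, True, True, True, True),    # outside the 2014-2024 window
    Study(2021, True, True, False, True),   # not about agile methodologies
    Study(2020, True, True, True, True, retracted_unavailable_or_duplicate=True),
]
kept = [s for s in candidates if is_eligible(s)]
print(len(kept))  # 1
```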

2.1.3. Data Sources

2.1.4. Selection Process

- 65 excluded after reading the title.

- 17 excluded after reading the abstract.

- 4 excluded after a full-text review.
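The exclusion counts above follow sequential screening stages, so the records remaining after each stage are a running subtraction. The sketch below computes this flow; the number of records initially identified is not stated in this section, so the value 100 used in the example is a hypothetical placeholder. The same function applies to the second review's selection process (Section 2.2.4), whose stages exclude 35, 49, 1, and 14 studies, 99 in total.

```python
def screening_flow(identified: int, exclusions: list[tuple[str, int]]) -> list[tuple[str, int]]:
    """Return (stage, records remaining) after each sequential screening stage."""
    remaining = identified
    flow = [("identified", remaining)]
    for stage, excluded in exclusions:
        if excluded > remaining:
            raise ValueError(f"stage {stage!r} excludes more records than remain")
        remaining -= excluded
        flow.append((stage, remaining))
    return flow


# Exclusion counts reported for the first review (Section 2.1.4).
REVIEW_1 = [("title", 65), ("abstract", 17), ("full text", 4)]
# Exclusion counts reported for the second review (Section 2.2.4).
REVIEW_2 = [("title", 35), ("abstract", 49), ("unavailable", 1), ("full text", 14)]

print(sum(n for _, n in REVIEW_1))  # 86
print(sum(n for _, n in REVIEW_2))  # 99

# HYPOTHETICAL placeholder: 100 is not the review's actual number of identified records.
print(screening_flow(100, REVIEW_1))
```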

2.1.5. Analysis of the Selected Studies

2.2. Leveraging AI and NLP for Enhanced Software Project Management

- RQ5—Which project management activities are addressed and intended to be improved using AI or NLP in each study?

- RQ6—What technologies are used in the development of the proposed solutions in each analyzed study?

- RQ7—What is the origin of the data used to develop the proposed solution in each study, and what are its properties?

- RQ8—What is the final product or output of the solution developed in each analyzed study?

- RQ9—What advantages do the authors of each analyzed study identify in using their proposed solutions compared to conventional approaches in software project management?

2.2.1. Inclusion Criteria

- Address at least one of the following topics: AI or NLP.

- Cover aspects and/or typical activities of software development.

- Present concrete solutions involving the use of the aforementioned technologies.

- Explore how these technologies contribute to the improvement of software project management.

- Be published after 2014.

2.2.2. Exclusion Criteria

- Studies related to areas other than software development.

- Studies published in languages other than Portuguese or English.

- Studies published more than ten years ago.

- Studies that address a technological area only generically rather than applying it to specific software project management activities.

2.2.3. Data Sources

2.2.4. Selection Process

- 35 excluded after reading the title.

- 49 excluded after reading the abstract.

- 1 excluded due to unavailability.

- 14 excluded after a full-text review.

2.2.5. Analysis of the Selected Studies

2.3. Complementary Roles of Feedback and AI in Agile Software Development

3. Team Leader Perspectives on Feedback in Software Project Management and the Role of AI in Its Analysis

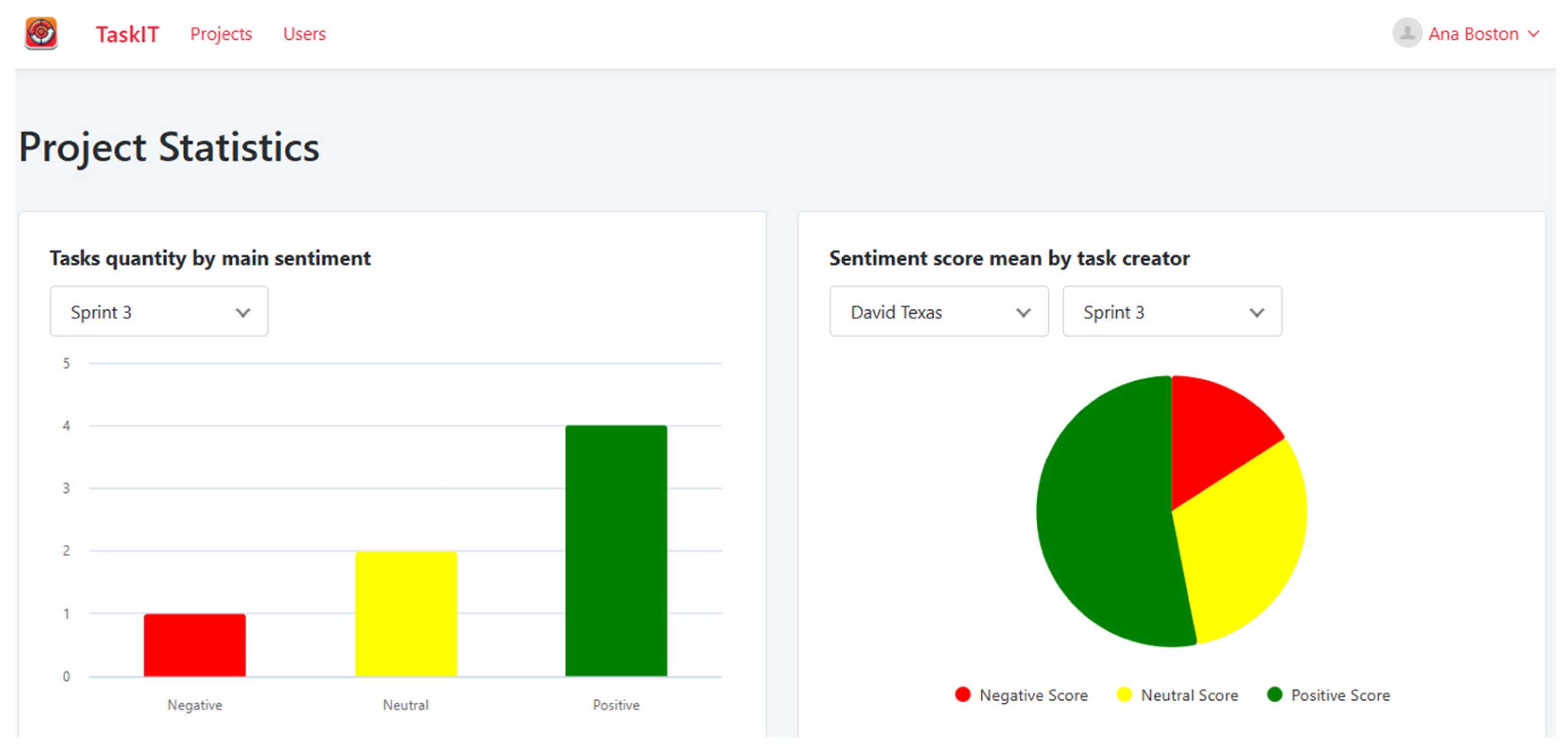

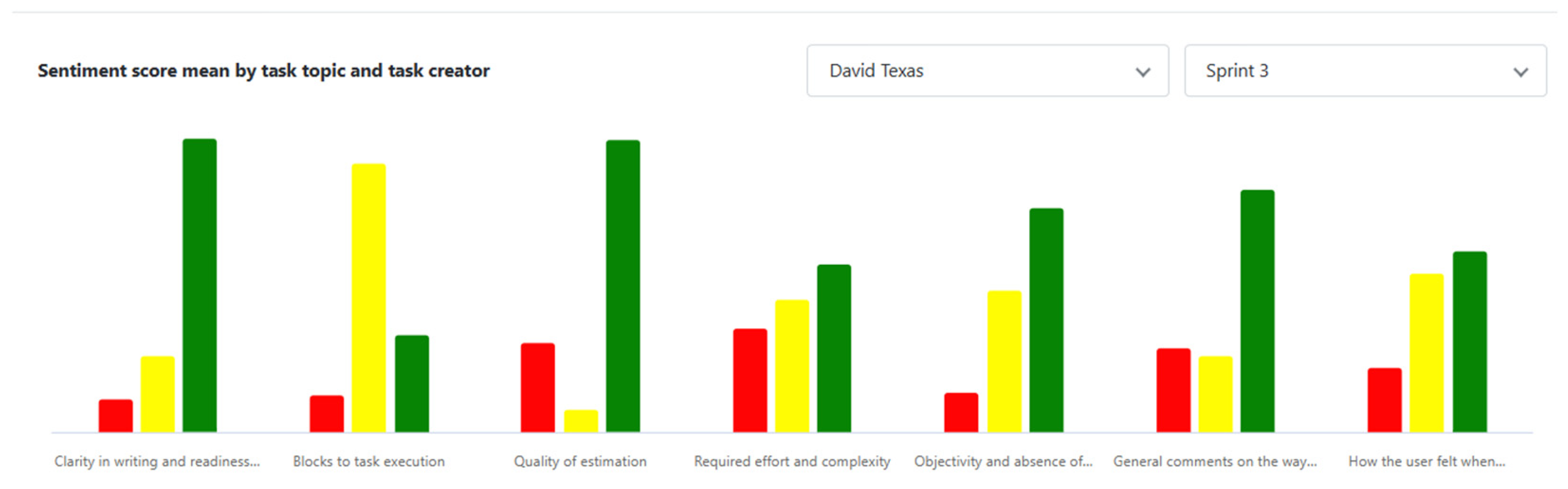

3.1. A Tool for Assessing and Tracking the Improvement of Task Description Quality

- Project Manager: Responsible for overseeing the software development project, managing user roles, configuring project settings, and tracking developer responses and the resulting analyses throughout the sprint.

- Unauthenticated User: Not logged into the system, with very limited functionality.

- Developer: Performs technical tasks such as programming. Developers can assign themselves to tasks, move them across the Kanban board to update their status, and, upon completing a task, provide feedback on the quality of its description.

- Task Creator: The only role that can create tasks, specifying each task's title, description, and estimated story points.
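The role descriptions above amount to a role-based permission model. The sketch below encodes them as a permission table plus one extra rule (developers may submit feedback only after completing a task). The enum names and the `can` helper are hypothetical, not the tool's actual code, and the unauthenticated user's "very limited functionality" is modeled here, by assumption, as having no project actions at all.

```python
from enum import Enum, auto


class Role(Enum):
    PROJECT_MANAGER = auto()
    TASK_CREATOR = auto()
    DEVELOPER = auto()
    UNAUTHENTICATED = auto()


class Action(Enum):
    MANAGE_USER_ROLES = auto()
    CONFIGURE_PROJECT = auto()
    VIEW_FEEDBACK_ANALYSIS = auto()
    CREATE_TASK = auto()
    ASSIGN_SELF_TO_TASK = auto()
    MOVE_TASK_ON_BOARD = auto()
    SUBMIT_TASK_FEEDBACK = auto()


# HYPOTHETICAL permission table derived from the role descriptions above.
PERMISSIONS: dict[Role, set[Action]] = {
    Role.PROJECT_MANAGER: {Action.MANAGE_USER_ROLES, Action.CONFIGURE_PROJECT,
                           Action.VIEW_FEEDBACK_ANALYSIS},
    Role.TASK_CREATOR: {Action.CREATE_TASK},  # the only role allowed to create tasks
    Role.DEVELOPER: {Action.ASSIGN_SELF_TO_TASK, Action.MOVE_TASK_ON_BOARD,
                     Action.SUBMIT_TASK_FEEDBACK},
    Role.UNAUTHENTICATED: set(),  # assumption: no project actions before logging in
}


def can(role: Role, action: Action, task_completed: bool = False) -> bool:
    """Check a permission; description feedback is accepted only for completed tasks."""
    if action is Action.SUBMIT_TASK_FEEDBACK and not task_completed:
        return False
    return action in PERMISSIONS[role]


print(can(Role.DEVELOPER, Action.SUBMIT_TASK_FEEDBACK))                       # False
print(can(Role.DEVELOPER, Action.SUBMIT_TASK_FEEDBACK, task_completed=True))  # True
```

Keeping the completion check in `can` rather than in the permission table separates who may act from when the action is allowed.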

3.2. Semi-Structured Interviews with Team Leaders

3.2.1. Interview Methodology

3.2.2. Results

4. Discussion and Final Remarks

4.1. Future Work

4.2. Limitations and Threats to Validity

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Macedo, H.I.L. Development of a Continuous Improvement Process for Agile Software Development Teams; Universidade do Minho: Braga, Portugal, 2019.

- Compreender a Melhoria Contínua [Understanding Continuous Improvement]. Available online: https://www.kaizen.com/pt/insights-pt/melhoria-continua-excelencia-operacional/ (accessed on 6 February 2025).

- Understanding Continuous Improvement in Software Development. Available online: https://www.teamhub.com/blog/understanding-continuous-improvement-in-software-development/ (accessed on 6 February 2025).

- What Is Continuous Improvement: Tools and Methodologies. Available online: https://www.atlassian.com/agile/project-management/continuous-improvement (accessed on 6 February 2025).

- O Que é Análise de Sentimento? [What Is Sentiment Analysis?] AWS. Available online: https://www.aws.amazon.com/what-is/sentiment-analysis/ (accessed on 19 January 2025).

- What Is Sentiment Analysis? IBM. Available online: https://www.ibm.com/think/topics/sentiment-analysis (accessed on 19 January 2025).

- What Is Sentiment Analysis? GeeksforGeeks: Noida, India. 2025. Available online: https://www.geeksforgeeks.org/what-is-sentiment-analysis/ (accessed on 19 January 2025).

- Izhar, R.; Cosh, K.; Bhatti, S.N. Enhancing Agile Software Development: A Novel Approach to Automated Requirements Prioritization. In Proceedings of the 21st International Joint Conference on Computer Science and Software Engineering (JCSSE), Phuket, Thailand, 19–22 June 2024; pp. 286–293.

- Phan, H.; Jannesari, A. Story Point Level Classification by Text Level Graph Neural Network. In Proceedings of the 1st International Workshop on Natural Language-Based Software Engineering (NLBSE), Pittsburgh, PA, USA, 8 May 2022; pp. 75–78.

- Jiménez, S.; Alanis, A.; Beltrán, C.; Juárez-Ramírez, R.; Ramírez-Noriega, A.; Tona, C. USQA: A User Story Quality Analyzer Prototype for Supporting Software Engineering Students. Comput. Appl. Eng. Educ. 2023, 31, 1014–1024.

- Tarawneh, M.; AbdAlwahed, H.; AlZyoud, F. Innovating Project Management: AI Applications for Success Prediction and Resource Optimization. In Lecture Notes in Networks and Systems; Springer: Cham, Switzerland, 2024; Volume 956 LNNS, pp. 382–391.

- Sandoval-Alfaro, O.E.; Quintero-Meza, R.R. Application of Data Analytics Techniques for Decision Making in the Retrospective Stage of the Agile Scrum Methodology. In Proceedings of the 2021 Mexican International Conference on Computer Science (ENC), Morelia, Mexico, 9–11 August 2021.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. BMJ 2021, 372, n71.

- Matthies, C. Feedback in Scrum: Data-Informed Retrospectives. In Proceedings of the 2019 IEEE/ACM 41st International Conference on Software Engineering: Companion Proceedings (ICSE-Companion), Montreal, QC, Canada, 25–31 May 2019.

- Ribeiro, A.B.C.; Alves, C.F. A Survey Research on Feedback Practices in Agile Software Development Teams. In Proceedings of the ACM International Conference Proceeding Series, Virtual Event, Brazil, 8–11 November 2021; Association for Computing Machinery: New York, NY, USA, 2022.

- Morales-Trujillo, M.; Galster, M. On Evidence-Based Feedback Practices in Software Engineering for Continuous People Improvement. In Proceedings of the Software Engineering Education Conference, Tokyo, Japan, 7–9 August 2023; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2023; Volume 2023-August, pp. 158–162.

- Siebra, C.; Sodre, L.; Quintino, J.; Da Silva, F.Q.B.; Santos, A.L.M. Collaborative Feedback and Its Effects on Software Teams. IEEE Softw. 2020, 37, 85–93.

- Ribeiro, A.B.C.; Alves, C.F. Feedback in Virtual Software Development Teams. In Proceedings of the Iberian Conference on Information Systems and Technologies (CISTI), Aveiro, Portugal, 20–23 June 2023; IEEE Computer Society: Washington, DC, USA, 2023; Volume 2023-June.

- Eren, K.K.; Ozbey, C.; Eken, B.; Tosun, A. Customer Requests Matter: Early Stage Software Effort Estimation Using k-Grams. In Proceedings of the ACM Symposium on Applied Computing, Brno, Czechia, 30 March–3 April 2020; pp. 1540–1547.

- Ebrahim, E.; Sayed, M.; Youssef, M.; Essam, H.; El-Fattah, S.A.; Ashraf, D.; Magdy, O.; Eladawi, R. AI Decision Assistant ChatBot for Software Release Planning and Optimized Resource Allocation. In Proceedings of the 5th IEEE International Conference on Artificial Intelligence Testing (AITest), Athens, Greece, 17–20 July 2023; pp. 55–60.

- Feng, Y.; Liu, Q.; Dou, M.; Liu, J.; Chen, Z. Mubug: A Mobile Service for Rapid Bug Tracking. Sci. China Inf. Sci. 2016, 59, 1–5.

- Sarwar, H.; Rahman, M. A Systematic Short Review of Machine Learning and Artificial Intelligence Integration in Current Project Management Techniques. In Proceedings of the 2024 4th IEEE International Conference on Software Engineering and Artificial Intelligence (SEAI), Xiamen, China, 21–23 June 2024; pp. 262–270.

- Mohammad, A.; Chirchir, B. Challenges of Integrating Artificial Intelligence in Software Project Planning: A Systematic Literature Review. Digital 2024, 4, 555–571.

| Study | RQ1 | RQ2 | RQ3 | RQ4 |
|---|---|---|---|---|
| [14] | Derived from user stories, tickets, commits, and team reflections during retrospective meetings. | Process. | Does not mention individual skills benefited. | Use of data generated by activities to produce more accurate feedback that can improve both the software product and the way it is developed. |
| [15] | Formal feedback provided by management and informal feedback from both management and direct work colleagues. | Individuals and their skills. | Communication, technical skills, commitment, proactivity, cooperation, and individual productivity. | Increased productivity through enhanced employee motivation. |
| [16] | Provided by management and direct work colleagues. | Individuals and their skills. | Individual productivity, technical knowledge, punctuality, management skills, communication, initiative, and commitment. | Teams motivated by positive feedback and improved skills are more productive and achieve their goals more easily. |
| [17] | Self-assessment by each individual, complemented by feedback from direct colleagues, and overseen by management. | Individuals and their skills. | Communication, productivity, quality, technical knowledge, punctuality, work management, commitment, teamwork, willingness to improve, and initiative. | Human capital with enhanced skills through accurate and personalized development processes is among the most important factors in organizations. Transparency in feedback and alignment between self-feedback and peer feedback. |
| [18] | Performance indicators, management, and team colleagues. | Individuals and their skills, and processes. | Willingness to learn, work motivation, and reduced impostor syndrome. | Feedback fosters greater collaboration and trust within teams, increasing operational performance and reducing undesired staff turnover. It also enhances the motivation of each individual. |

| Study | RQ5 | RQ6 | RQ7 (Data Source) | RQ7 (Data Fields) | RQ8 | RQ9 |
|---|---|---|---|---|---|---|
| [8] | Automated requirements prioritization. | TF-IDF and K-means. | 120 requirements written by 16 software development students. | Textual description, category, and manually assigned priority. | Requirements are automatically classified using MoSCoW rules. | Objectivity (independent of team experience), time savings, and scalability. |
| [9] | Task effort estimation in story points. | Graph Neural Network. | 23,000 issues from 16 projects. | Title, textual description, and actual story points. | Task classification within story point ranges (small, medium, large, extra-large). | Advantages over other automated methods, notably improved prediction accuracy. |
| [10] | Evaluation of use case quality. | NLP with spaCy modules, WordNet, and other Python tools. | 35 use cases created by professionals. | Textual description evaluated in terms of length, structure, etc. | Determines whether a use case is of good quality and, if not, identifies its issues. | The solution improves the quality of use case content. |
| [11] | Project success prediction based on its characteristics. | Python, Deep Neural Networks, and forecasting models. | Public datasets from real and simulated projects. | Budget, team size, qualitative risk level, among others. | Indicates whether a software project will succeed (1) or not (0). | More accurate predictions compared to empirical forecasting. |
| [12] | Analysis of project data to identify improvement opportunities for Sprint Retrospectives. | ML models for prediction and classification (KNN, linear regression). | Jira data from software projects. | Issue type, issue priority, sprint information, sprint velocity, started and completed backlog items, etc. | Forecast of workload capacity for upcoming sprints. Classification of issues (task, bug, etc.). | Improved forecasting based on historical data analysis. Conventional methods are empirical and resource-intensive. |
| [19] | Effort estimation for client/user-requested change tasks. | Classification and prediction algorithms (Random Forest, k-grams). | 1,648 change requests/tasks from 7 real clients. Real data from tools such as Jira. | Software type, project year, task description, actual task duration. | Estimated time in minutes to complete the task. | Automated effort estimation. Saves time and resources. Reduces dependency on human estimates, which may be error-prone in new projects. |
| [20] | Time estimation for development and testing tasks. Task assignment to the most qualified team member. | Chatbot, MongoDB, linear regression, XGBoost, LSTM, SVM, KNN. | Real Siemens projects tracked in Jira. | Issues: textual descriptions, associated project, priority. Professionals: name, project allocation, task history, role (developer or tester). | Time estimation (in days) to complete a task. Suggestion of first- and second-best candidates to perform the task. | Reduces errors in task estimation and assignment. These activities no longer rely solely on professional experience but on automated data analysis. |
| [21] | Management of bug reports from mobile app users. | ML and NLP. | Bug reports submitted by users via a messaging app. | Audio, screenshots, and textual bug descriptions. | Transformation of bug reports into developer-assigned tasks, including detection of duplicate reports. | Easier for users to report bugs compared to conventional platforms. |
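Several of the reviewed solutions start by turning task or requirement text into numeric features; study [8], for instance, pairs TF-IDF with K-means. The sketch below shows one common TF-IDF variant (term frequency normalized by document length, times the natural log of N divided by document frequency) in plain Python. It is an illustration of the weighting idea only: the exact variant used in [8] is not given here (libraries such as scikit-learn default to a smoothed IDF), and the K-means clustering and MoSCoW mapping steps are not shown. The sample requirement strings are hypothetical.

```python
import math
from collections import Counter


def tf_idf(docs: list[str]) -> list[dict[str, float]]:
    """Weight each term by (count / document length) * ln(N / documents containing it)."""
    tokenized = [d.lower().split() for d in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term appears at least once.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        weights.append({t: (c / len(tokens)) * math.log(n_docs / df[t])
                        for t, c in counts.items()})
    return weights


requirements = [  # HYPOTHETICAL sample requirements
    "the system must export reports",
    "the system should send alerts",
    "users could customize themes",
]
w = tf_idf(requirements)
# "must" occurs in one document and outweighs "the", which occurs in two.
print(w[0]["must"] > w[0]["the"])  # True
```

Terms that appear in every document receive a weight of zero, which is why generic words contribute nothing when such vectors are later clustered.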

| Statement | Strongly Disagree | Partially Disagree | Neither Agree nor Disagree | Partially Agree | Strongly Agree |
|---|---|---|---|---|---|
| Adequacy of task performers as feedback providers | 0% | 0% | 0% | 50% | 50% |
| Importance of continuous improvement in task specification | 0% | 0% | 0% | 0% | 100% |
| Tool capacity for rapid and automated feedback analysis | 0% | 0% | 25% | 25% | 50% |
| Clarity of graphical outputs | 0% | 0% | 25% | 0% | 75% |
| Usefulness of results for identifying improvements in task quality | 0% | 0% | 0% | 25% | 75% |
| Overall utility of the tool for supporting continuous improvement | 0% | 0% | 0% | 25% | 75% |
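One compact way to read the interview responses above is a "top-two-box" agreement score, the combined share of "Partially Agree" and "Strongly Agree" answers. The sketch below computes it from the percentages in the table. This summary measure is an illustrative addition, not one reported by the authors, and the function name `agreement` is hypothetical.

```python
LEVELS = ("Strongly Disagree", "Partially Disagree", "Neither Agree nor Disagree",
          "Partially Agree", "Strongly Agree")

# Percentages per Likert level, copied from the table above.
RESPONSES = {
    "Adequacy of task performers as feedback providers": (0, 0, 0, 50, 50),
    "Importance of continuous improvement in task specification": (0, 0, 0, 0, 100),
    "Tool capacity for rapid and automated feedback analysis": (0, 0, 25, 25, 50),
    "Clarity of graphical outputs": (0, 0, 25, 0, 75),
    "Usefulness of results for identifying improvements in task quality": (0, 0, 0, 25, 75),
    "Overall utility of the tool for supporting continuous improvement": (0, 0, 0, 25, 75),
}


def agreement(shares: tuple[int, ...]) -> int:
    """Top-two-box score: percentage answering 'Partially Agree' or 'Strongly Agree'."""
    if len(shares) != len(LEVELS) or sum(shares) != 100:
        raise ValueError("expected one percentage per Likert level, summing to 100")
    return shares[LEVELS.index("Partially Agree")] + shares[LEVELS.index("Strongly Agree")]


for statement, shares in RESPONSES.items():
    print(f"{agreement(shares):3d}%  {statement}")
```

By this measure, four statements reach full agreement, while the two tool-specific statements on automated analysis and clarity of graphical outputs reach 75%, with the remainder in the neutral category and no disagreement on any item.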

| Study | Focus of the Research | Reported Advantages | Relation to the Present Work |
|---|---|---|---|
| [9] | Story-point classification using graph neural networks | Improved accuracy in effort estimation | TaskIT similarly automates interpretation but targets qualitative feedback rather than estimation metrics |
| [10] | NLP for user-story quality assessment | Improved quality of use case content | TaskIT extends this principle to operational task descriptions, validated by practitioner interviews |
| [12] | Forecast workload capacity | Improved forecasting | TaskIT complements these analytics by integrating sentiment data for richer team reflections |
| [20] | AI-based decision assistant for task assignment | Objective, data-driven decision-making | TaskIT adds an AI-based human-centered feedback layer, assessing how task definitions affect developer experience |
| Present Study (TaskIT) | Sentiment analysis of developer feedback | Real-time, qualitative insight; supports transparency and collaboration | TaskIT bridges human-centered feedback and AI-driven automation within agile feedback loops |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Marçal, D.; Metrôlho, J.; Ribeiro, F. Integrating Sentiment Analysis into Agile Feedback Loops for Continuous Improvement. Appl. Sci. 2025, 15, 12329. https://doi.org/10.3390/app152212329