The Effect of Data Augmentation on Performance of Custom and Pre-Trained CNN Models for Crack Detection

Abstract

1. Introduction

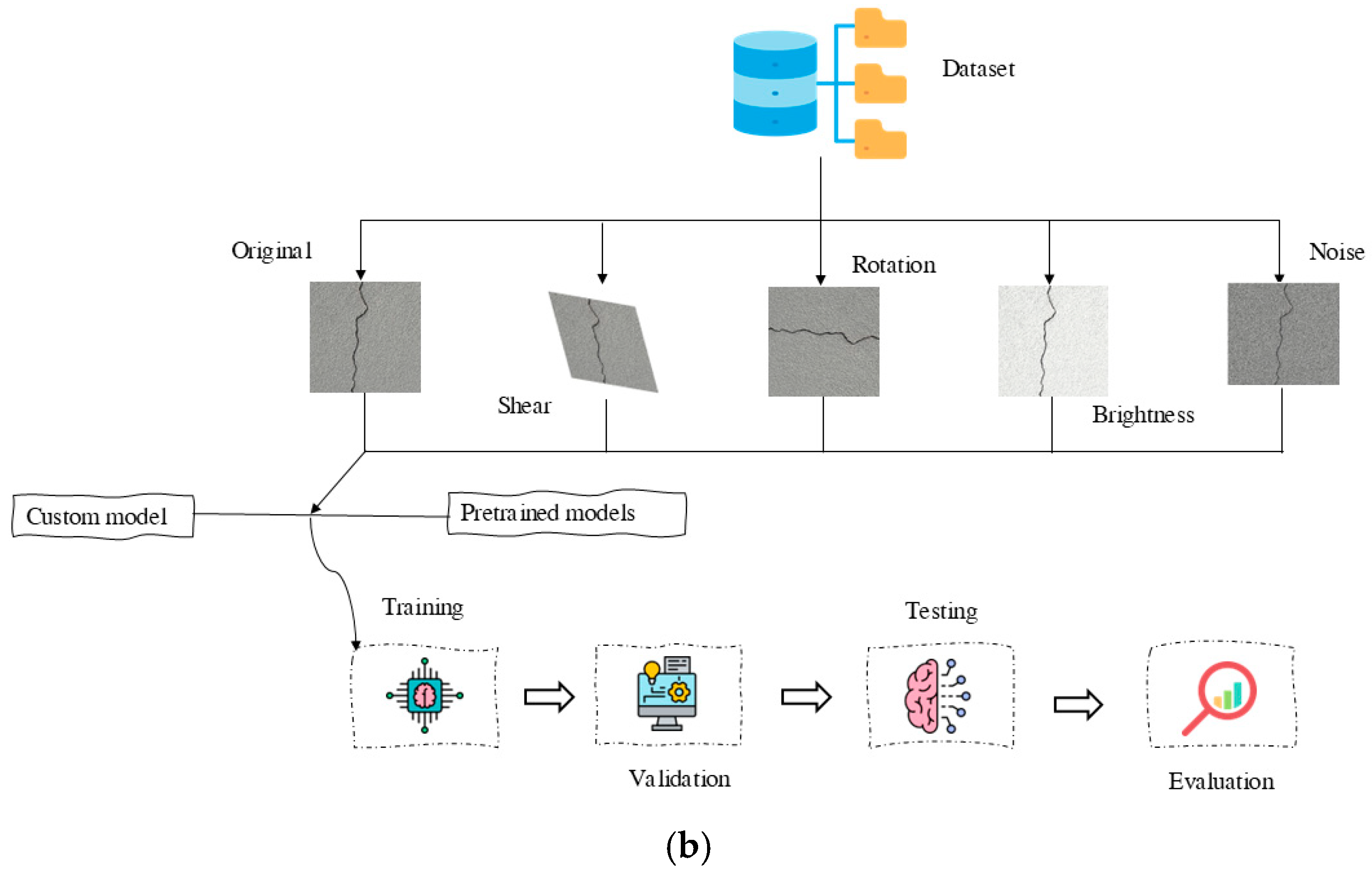

2. Materials and Methods

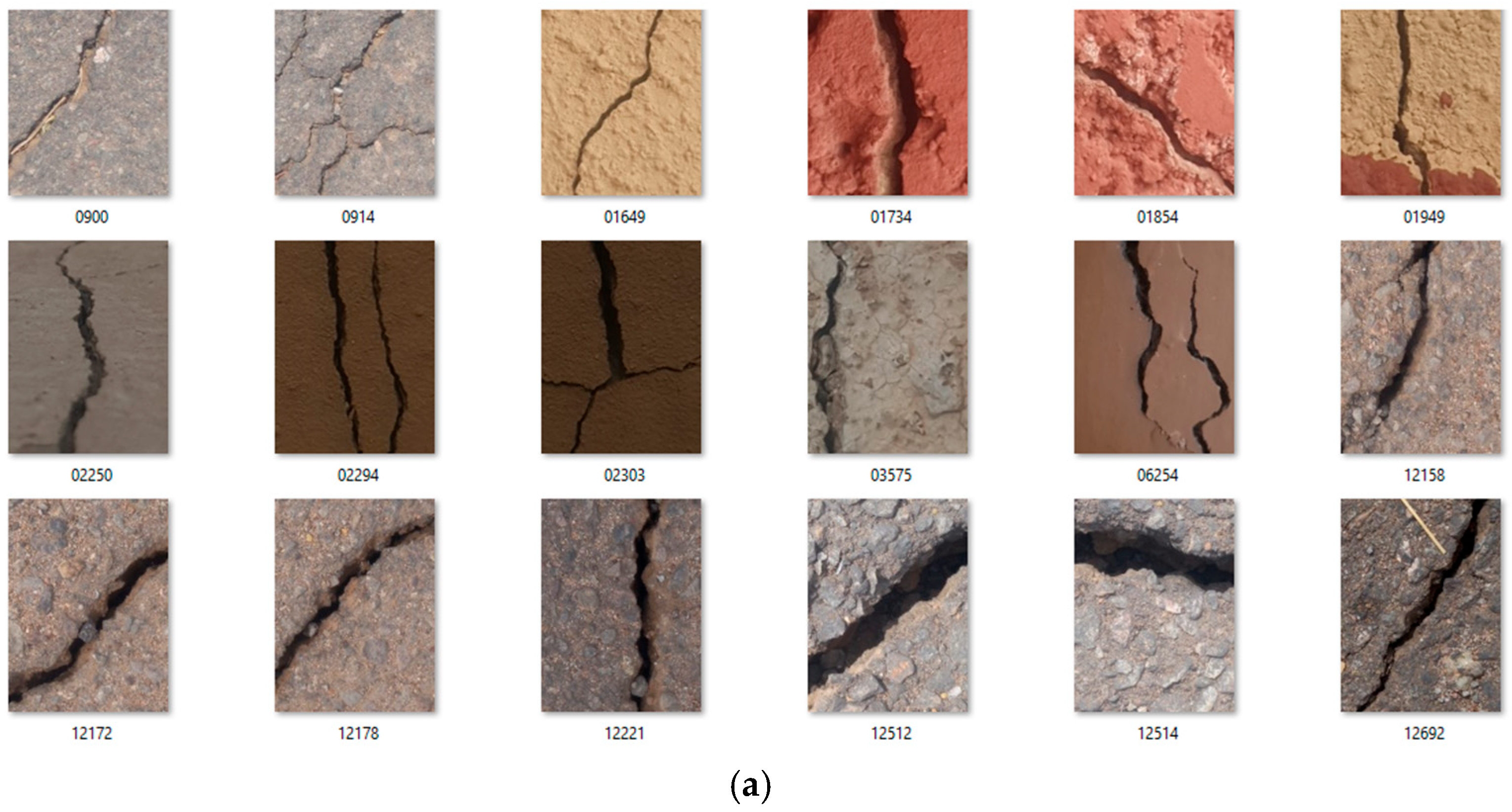

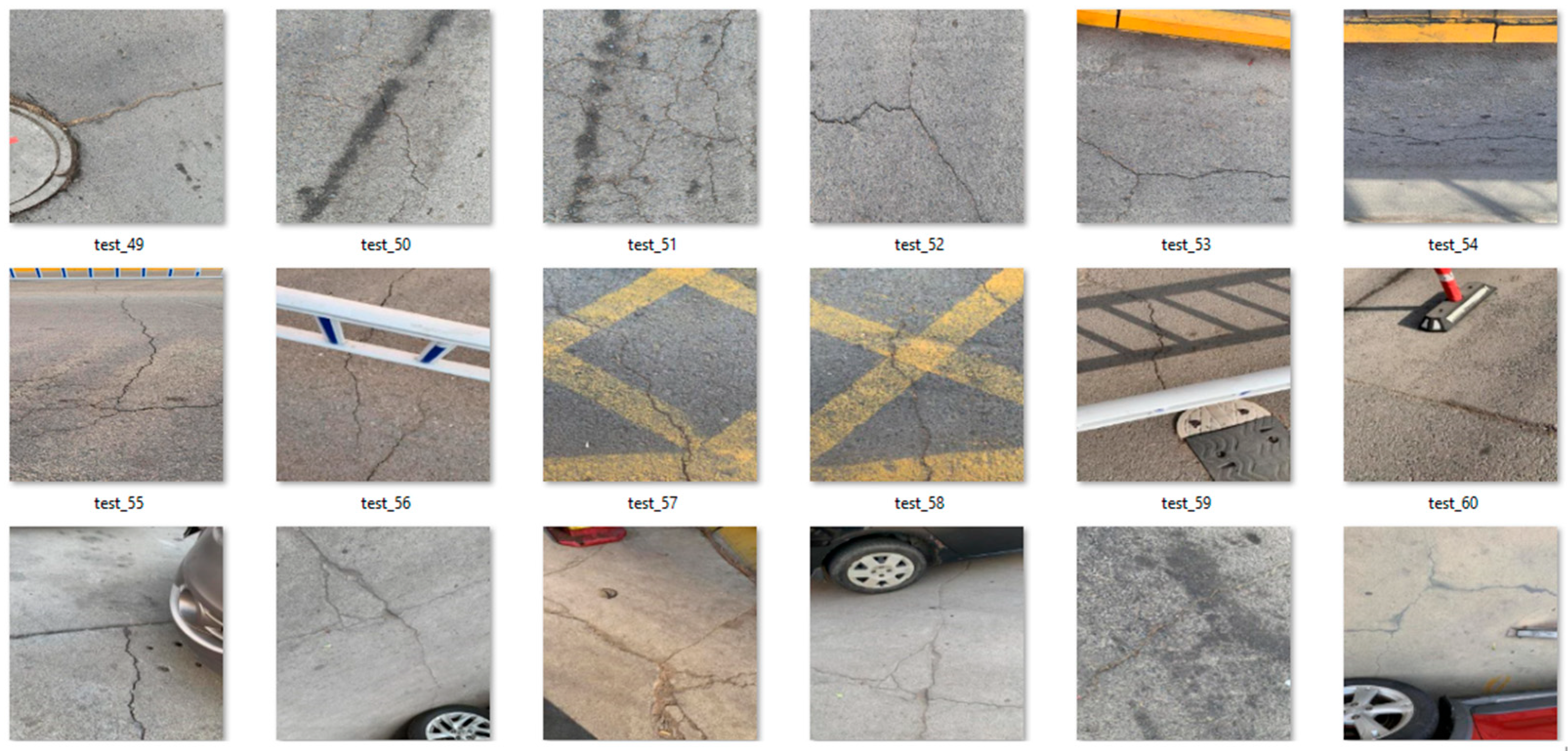

2.1. Dataset Description

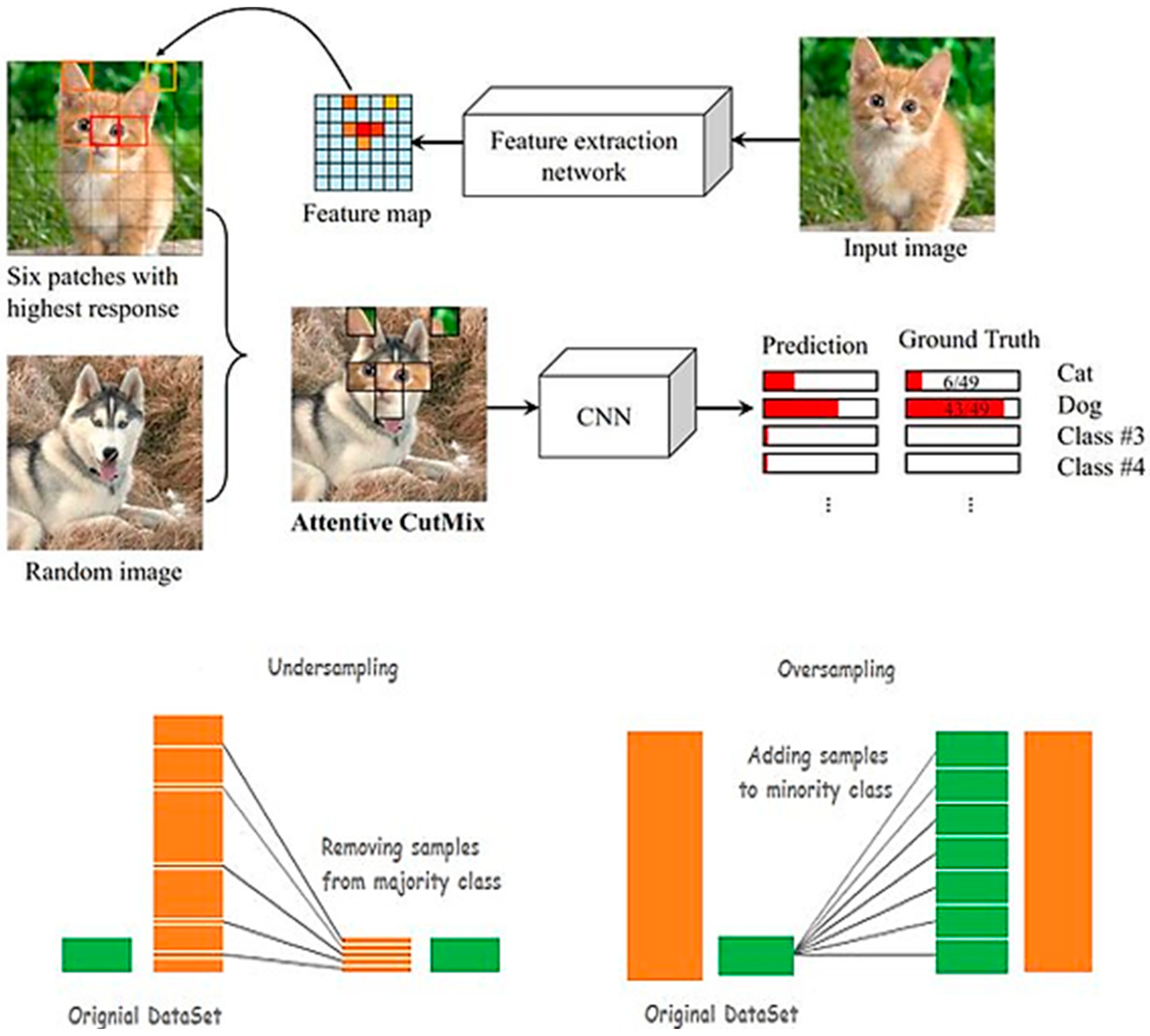

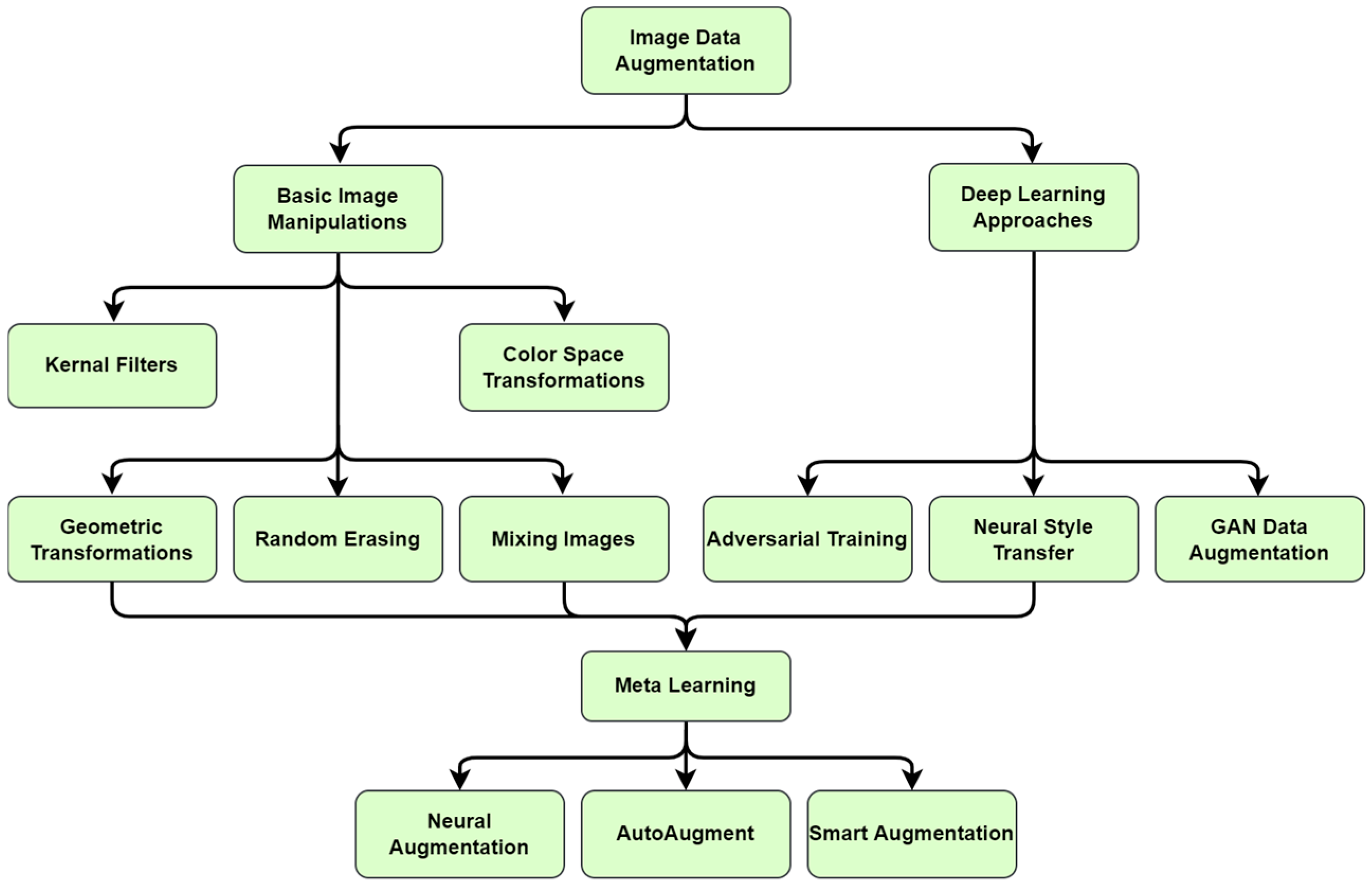

2.2. Data Augmentation
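This study compares five augmentation types: blur, brightness, noise, rotation, and shear. The following NumPy sketch is a rough illustration only. It applies each type to a grayscale image stored as a float array in [0, 1], and it is not the authors' pipeline. Several choices are assumptions made to keep the example dependency-free: a box blur instead of, for example, a Gaussian blur; the brightness factor, noise level, and shear factor; and rotation limited to 90° steps. The function names are likewise hypothetical.

```python
from typing import Optional

import numpy as np


def box_blur(img: np.ndarray, k: int = 3) -> np.ndarray:
    """Replace each pixel by the mean of its k x k neighbourhood (edge-padded)."""
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    out = np.zeros(img.shape, dtype=np.float64)
    h, w = img.shape
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)


def adjust_brightness(img: np.ndarray, factor: float = 1.3) -> np.ndarray:
    """Scale intensities by `factor` (assumed value) and clip back to [0, 1]."""
    return np.clip(img * factor, 0.0, 1.0)


def add_gaussian_noise(img: np.ndarray, sigma: float = 0.05,
                       rng: Optional[np.random.Generator] = None) -> np.ndarray:
    """Add zero-mean Gaussian noise with standard deviation `sigma`, then clip."""
    rng = np.random.default_rng() if rng is None else rng
    return np.clip(img + rng.normal(0.0, sigma, size=img.shape), 0.0, 1.0)


def rotate_quarter_turns(img: np.ndarray, turns: int = 1) -> np.ndarray:
    """Rotate counter-clockwise by 90 degrees * turns; no interpolation needed."""
    return np.rot90(img, k=turns)


def shear_horizontal(img: np.ndarray, factor: float = 0.2) -> np.ndarray:
    """Shift row y right by round(factor * y) pixels; vacated pixels become 0."""
    if factor < 0:
        raise ValueError("this sketch only supports non-negative shear factors")
    h, w = img.shape
    out = np.zeros_like(img)
    for y in range(h):
        shift = int(round(factor * y))
        if shift < w:
            out[y, shift:] = img[y, :w - shift]
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(seed=0)
    image = rng.random((64, 64))  # stand-in for a grayscale crack image
    variants = {
        "blur": box_blur(image),
        "brightness": adjust_brightness(image),
        "noise": add_gaussian_noise(image, rng=rng),
        "rotation": rotate_quarter_turns(image),
        "shear": shear_horizontal(image),
    }
    for name, aug in variants.items():
        print(f"{name:<10} shape={aug.shape} mean={aug.mean():.3f}")
```

Image libraries typically provide interpolated rotations and shears by arbitrary angles. The 90° rotation and integer-pixel shear used here only avoid the need for interpolation.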

2.3. Model Implementation and Training

2.4. Evaluation Metrics

- (i) Accuracy (A): This reflects the overall correctness of predictions and is defined as $A = \frac{TP + TN}{TP + TN + FP + FN}$, where TP = true positives, TN = true negatives, FP = false positives, and FN = false negatives.

- (ii) Precision (P): This is the proportion of correctly identified crack instances among all instances predicted as cracks: $P = \frac{TP}{TP + FP}$.

- (iii) Recall (R): Also called sensitivity, this quantifies the model’s ability to capture all actual crack instances: $R = \frac{TP}{TP + FN}$.

- (iv) F1-score: This combines precision and recall into a single score, defined as their harmonic mean: $F_1 = \frac{2 \cdot P \cdot R}{P + R}$.

- (v) Confusion matrix (CM): The confusion matrix provides a detailed breakdown of the model’s classification results, showing the counts of true positives, false positives, true negatives, and false negatives.
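These definitions map directly onto code. The sketch below computes the four metrics from raw confusion-matrix counts. The `ConfusionCounts` type and `classification_metrics` function are hypothetical names. Returning 0.0 when a denominator is zero (for example, for a model that never predicts the crack class) is an assumed convention, not one stated in this study.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class ConfusionCounts:
    tp: int  # cracks correctly predicted as cracks
    tn: int  # non-cracks correctly predicted as non-cracks
    fp: int  # non-cracks wrongly predicted as cracks
    fn: int  # cracks wrongly predicted as non-cracks


def classification_metrics(c: ConfusionCounts) -> Dict[str, float]:
    """Return accuracy, precision, recall and F1-score as fractions in [0, 1].

    Precision, recall and F1 fall back to 0.0 when their denominator is zero.
    """
    total = c.tp + c.tn + c.fp + c.fn
    accuracy = (c.tp + c.tn) / total
    precision = c.tp / (c.tp + c.fp) if (c.tp + c.fp) else 0.0
    recall = c.tp / (c.tp + c.fn) if (c.tp + c.fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


if __name__ == "__main__":
    # Assumed reading of the EfficientNet-B7 baseline counts (622, 3, 2, 623):
    # 622 detected cracks, 3 missed cracks, 2 false alarms, 623 correct non-cracks.
    m = classification_metrics(ConfusionCounts(tp=622, tn=623, fp=2, fn=3))
    for name, value in m.items():
        print(f"{name}: {100 * value:.2f}%")
```

Under that assumed reading of the counts, the example prints an accuracy of 99.60%, a precision of 99.68%, a recall of 99.52%, and an F1-score of 99.60%. The accuracy agrees with the 99.6% reported for EfficientNet-B7 in the Conclusions. If the off-diagonal counts are assigned the other way round, the precision and recall values swap.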

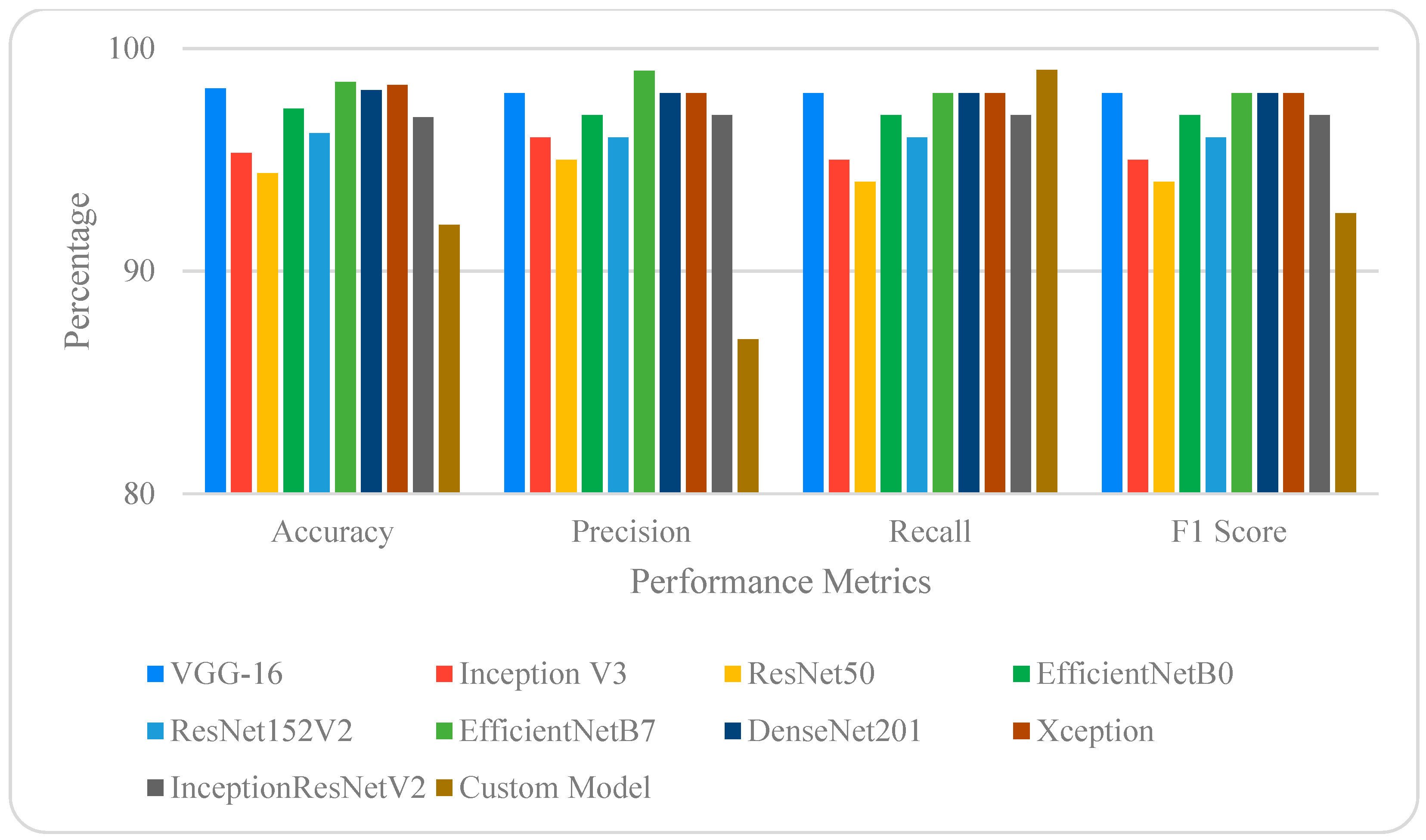

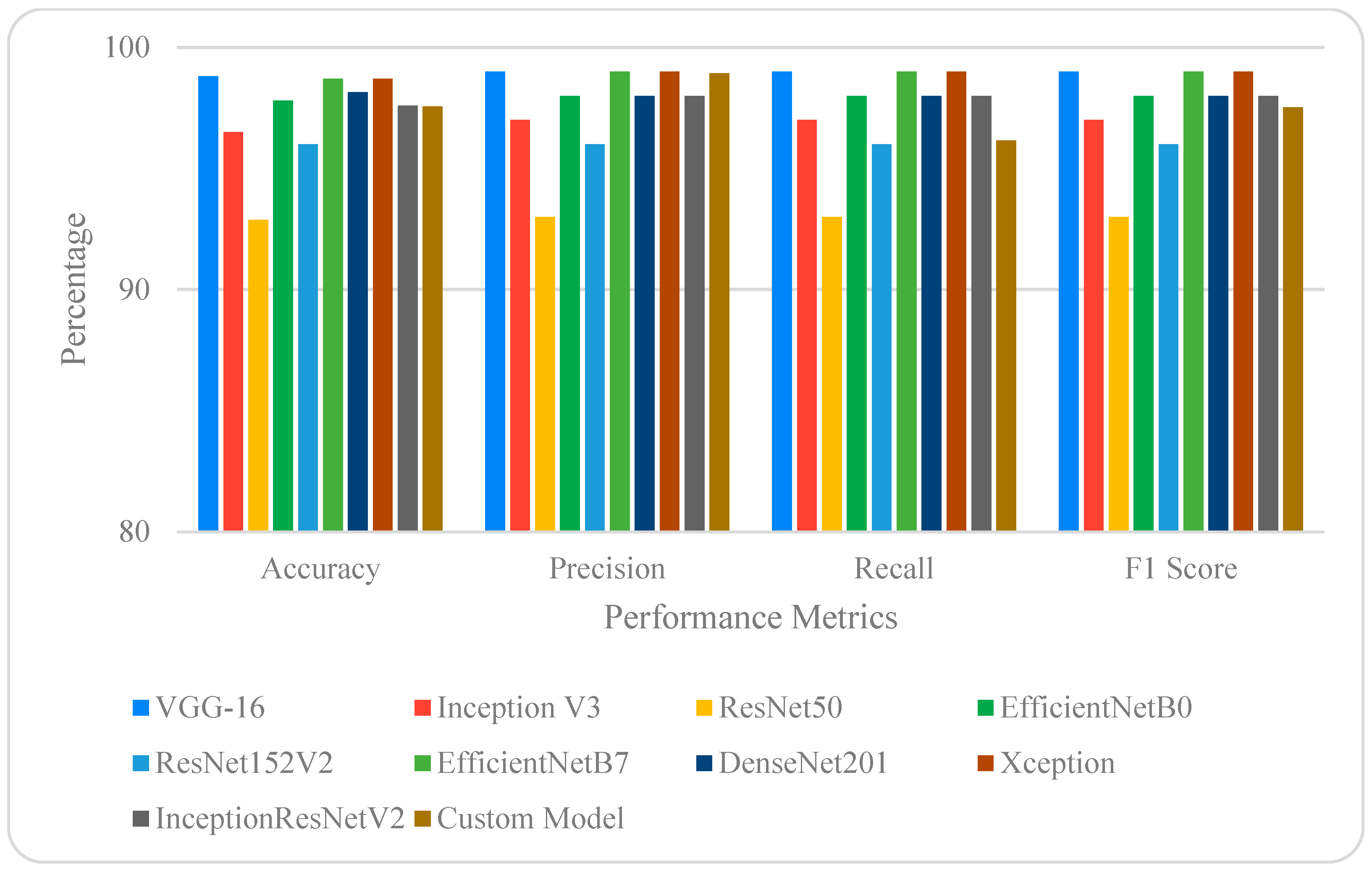

3. Results

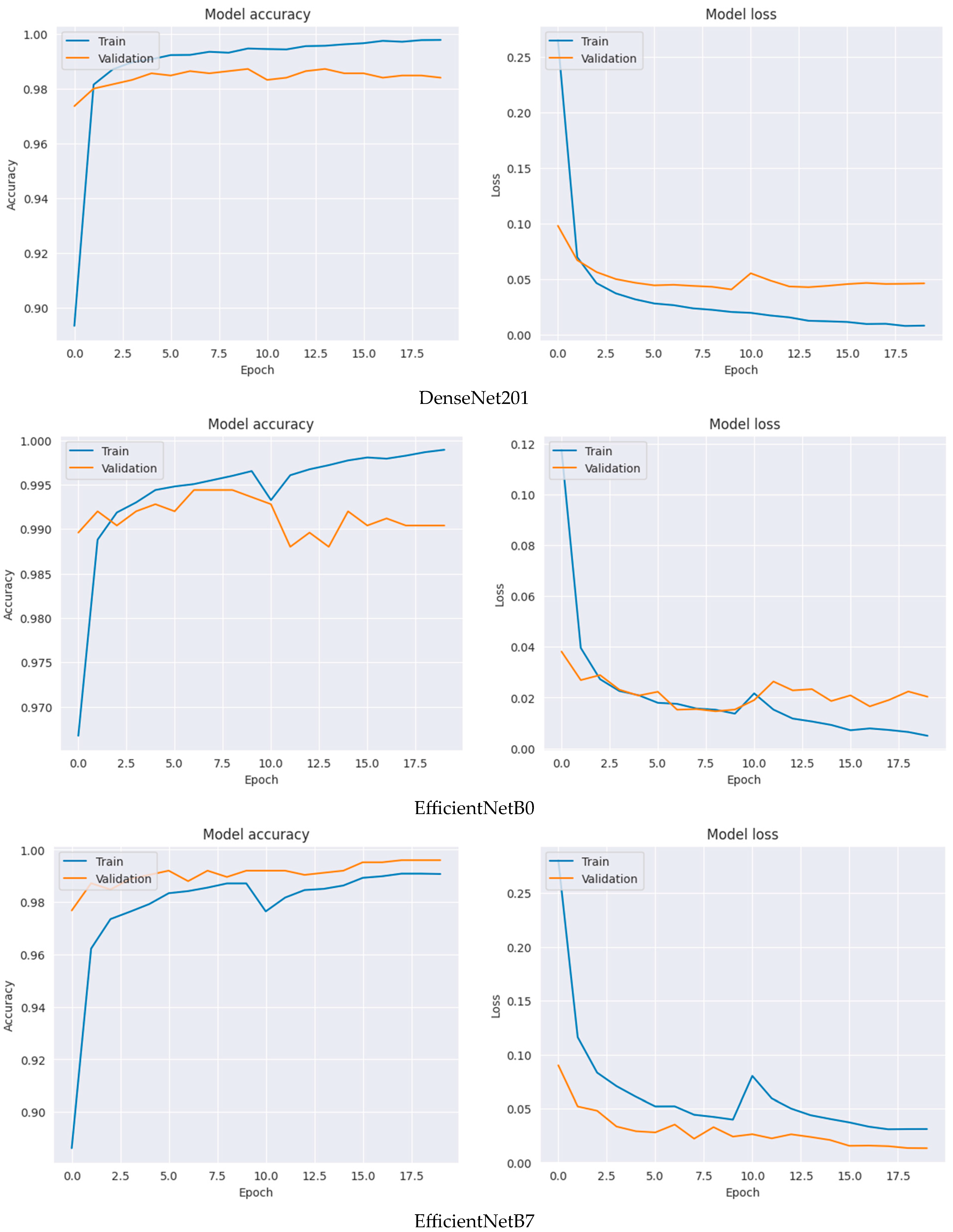

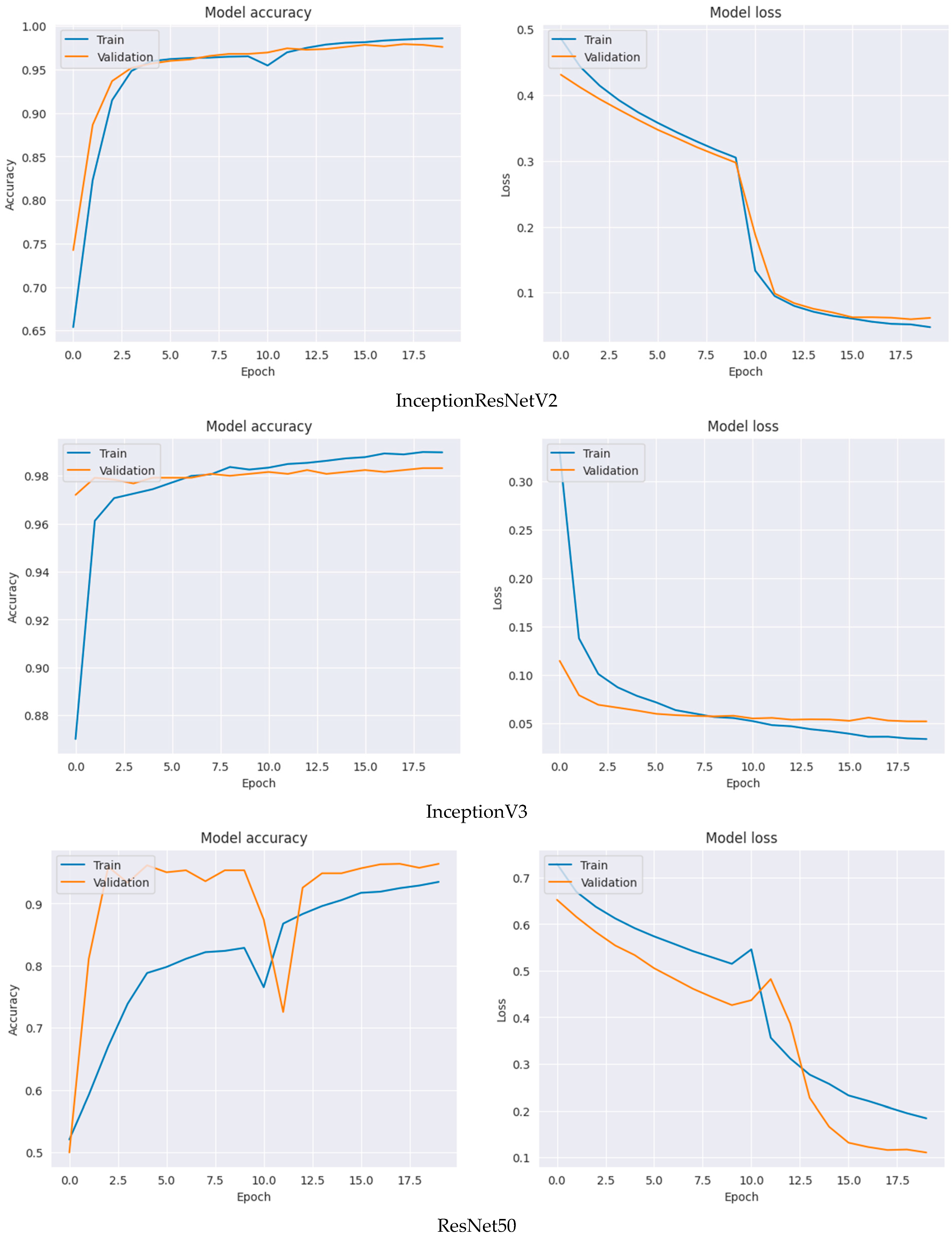

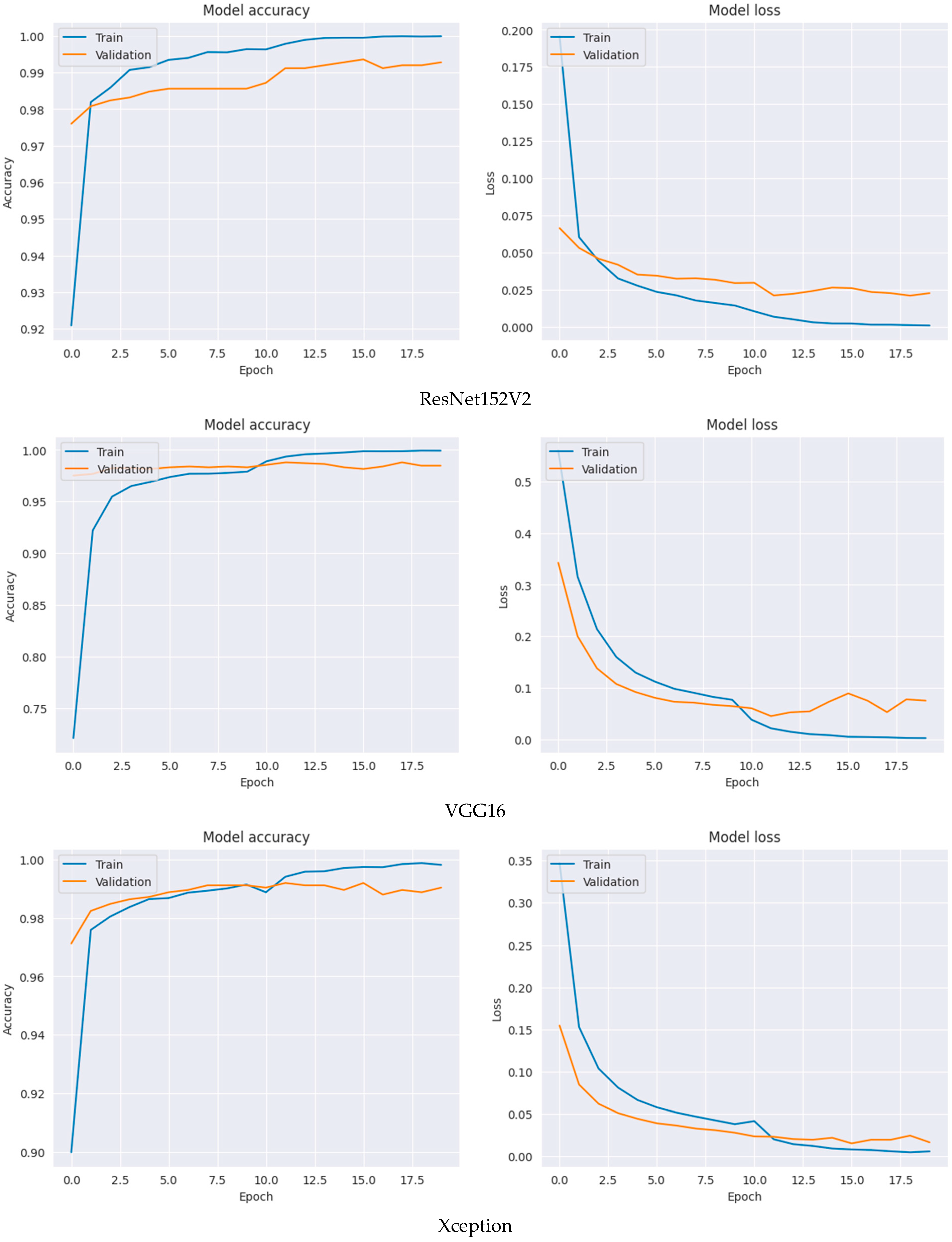

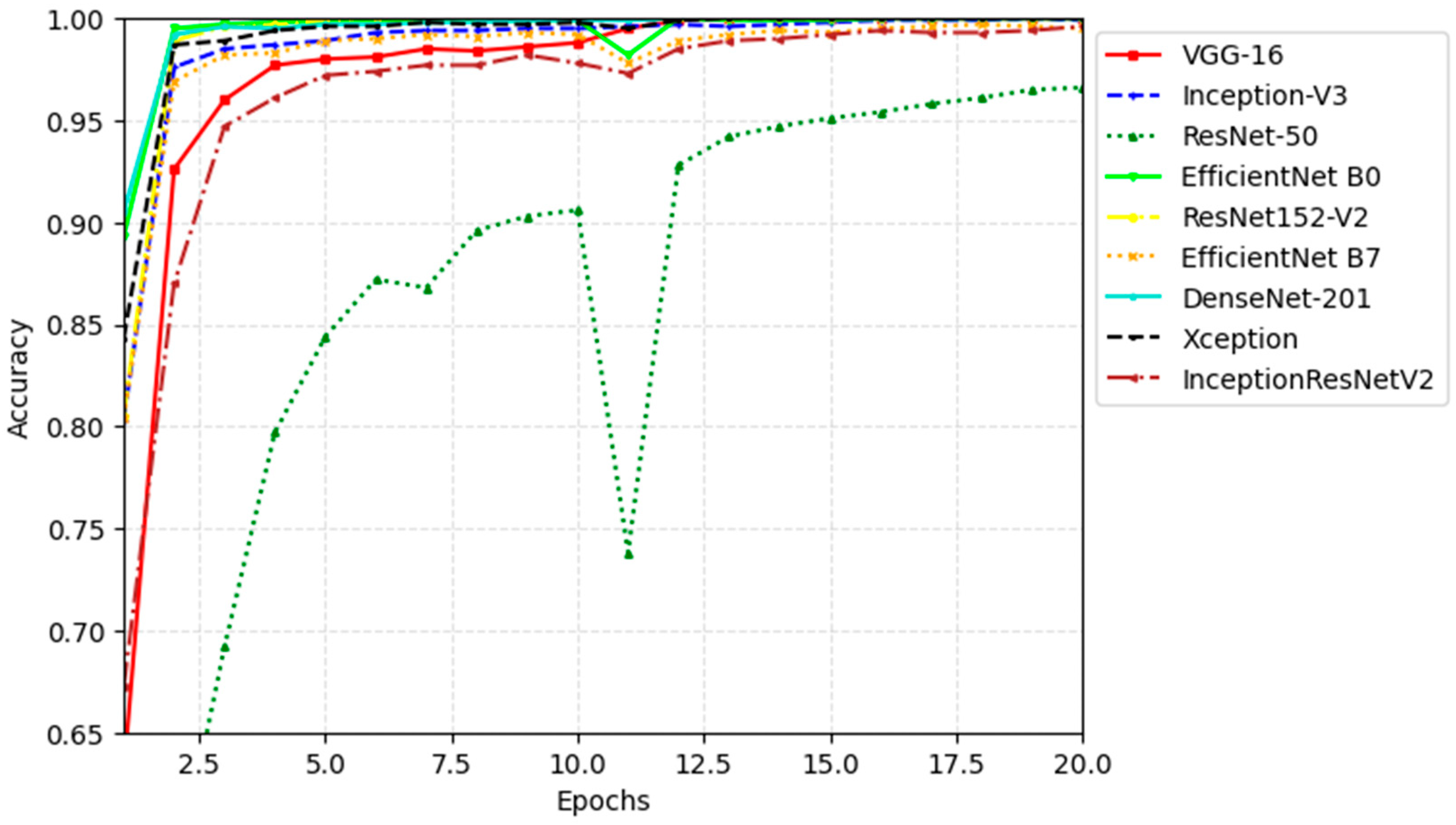

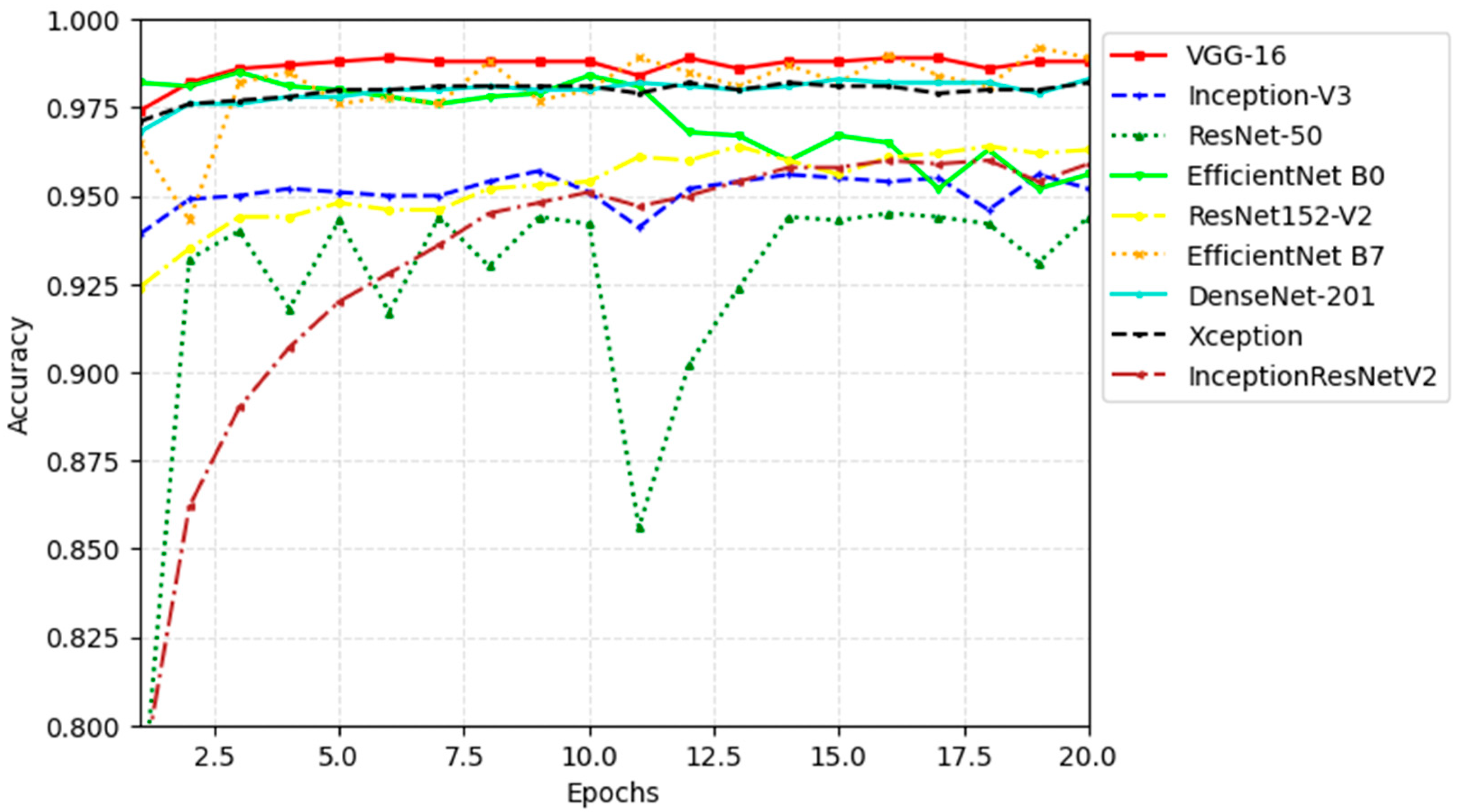

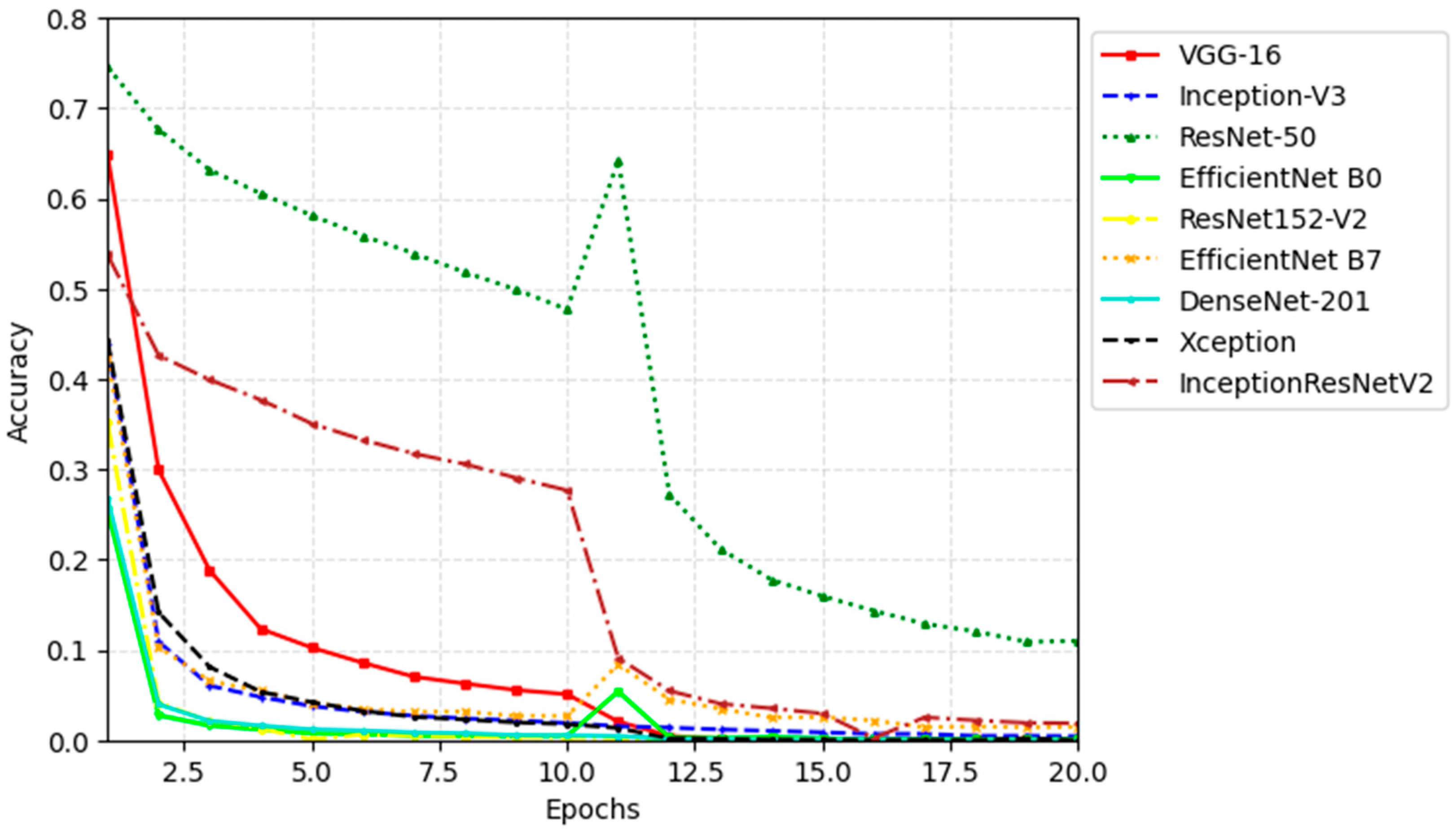

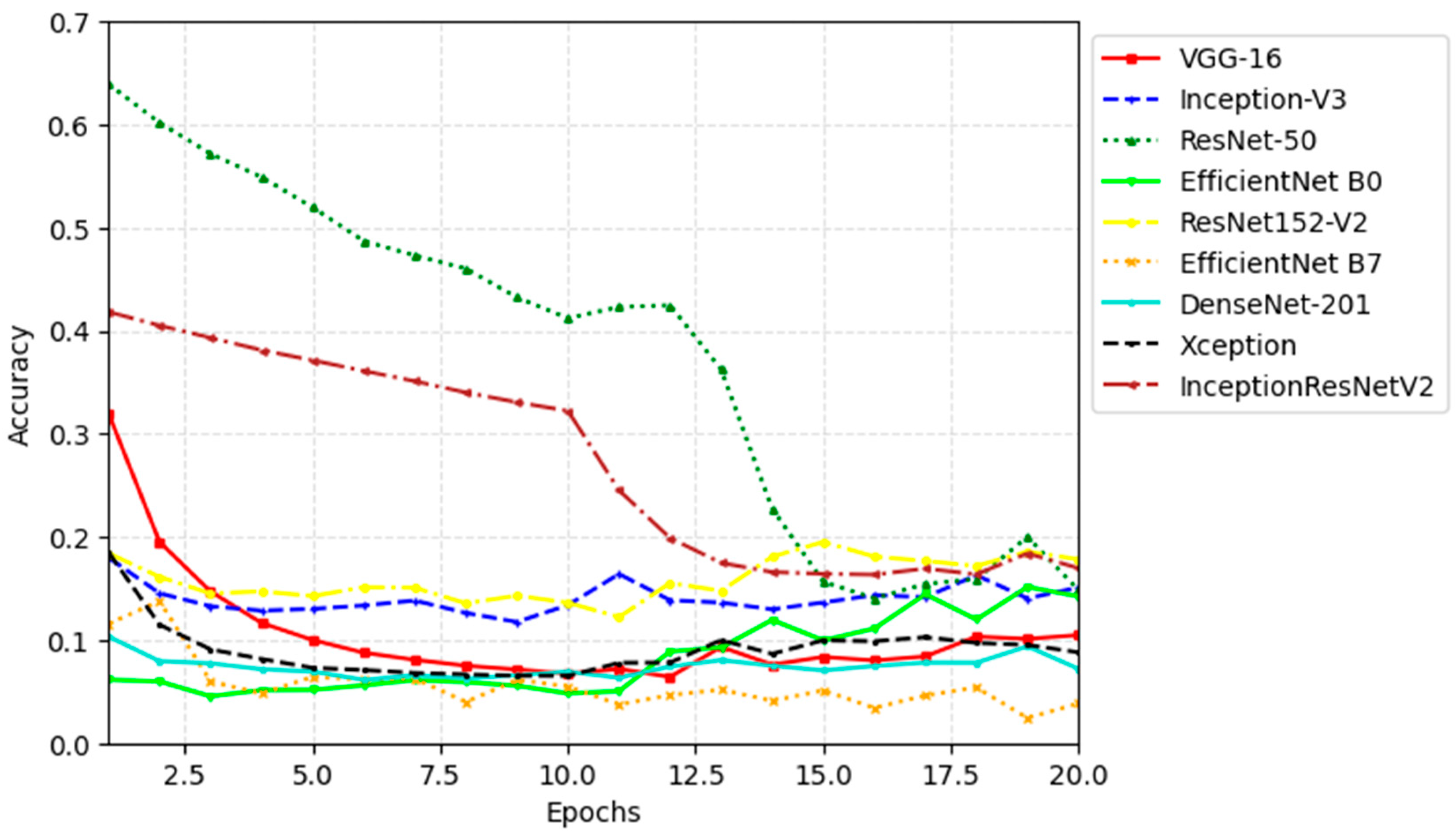

Model Training Patterns

4. Discussion

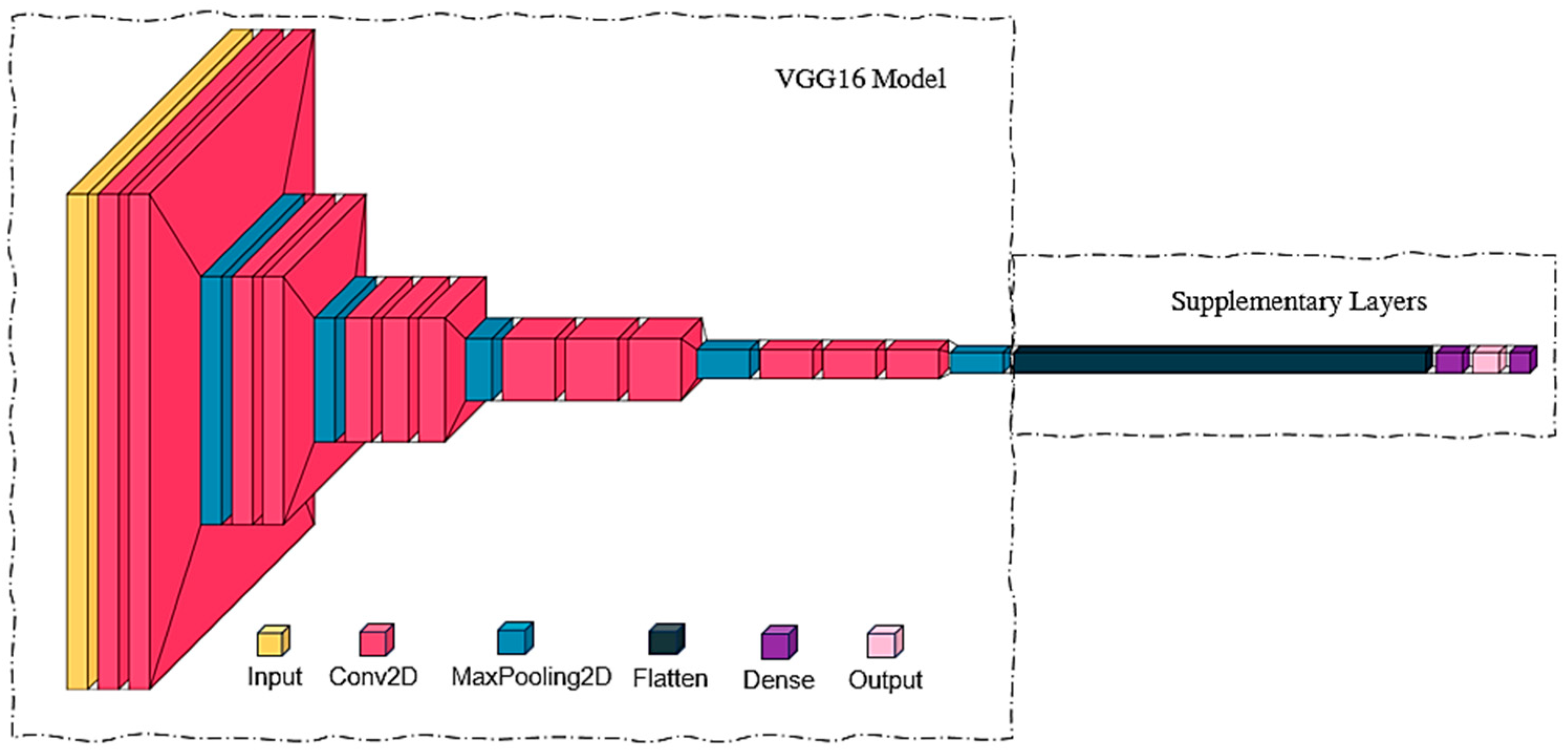

4.1. Proposed Architecture of the Custom-Built Model

4.2. Performance of Custom Model on Baseline and Augmented Datasets

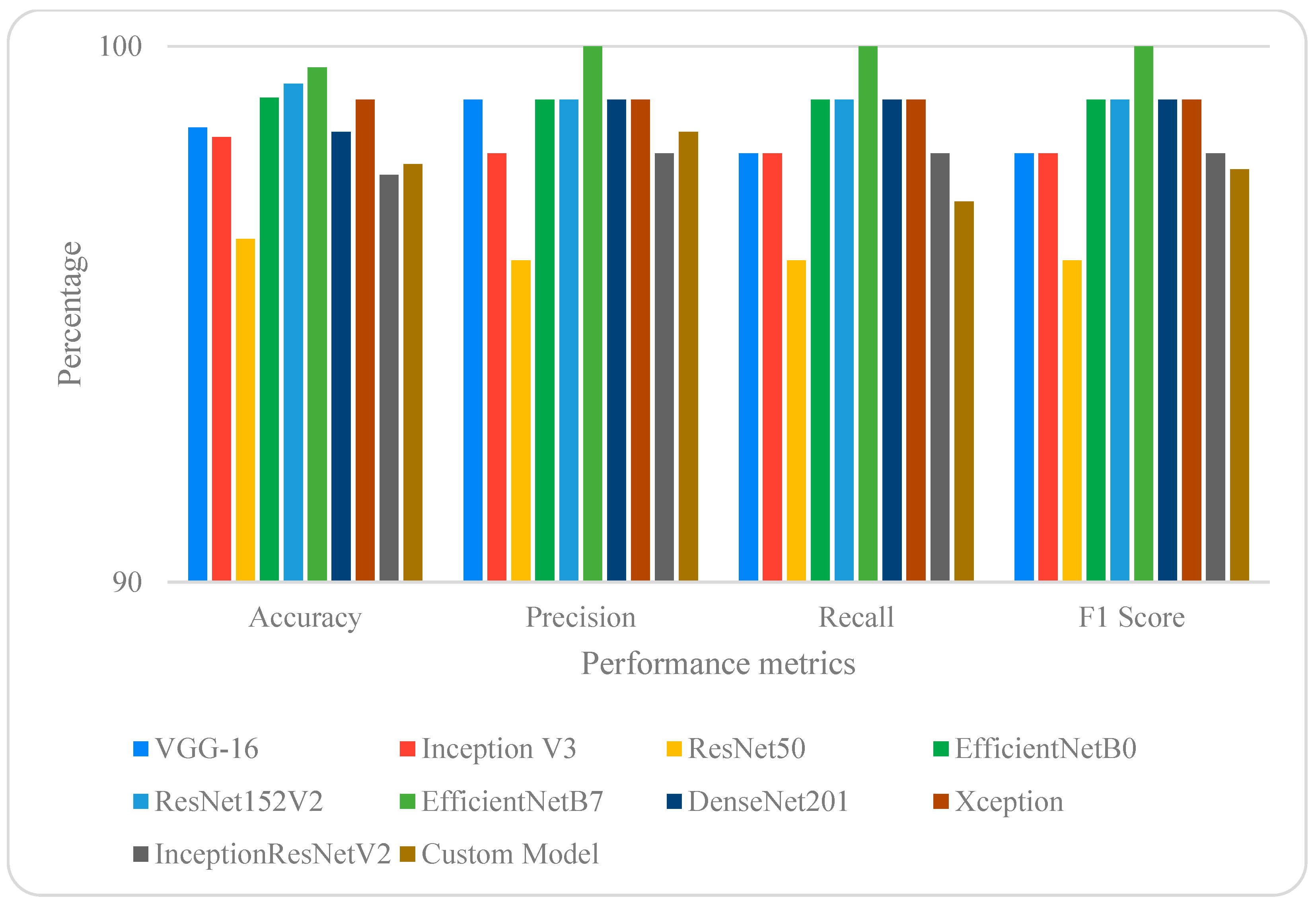

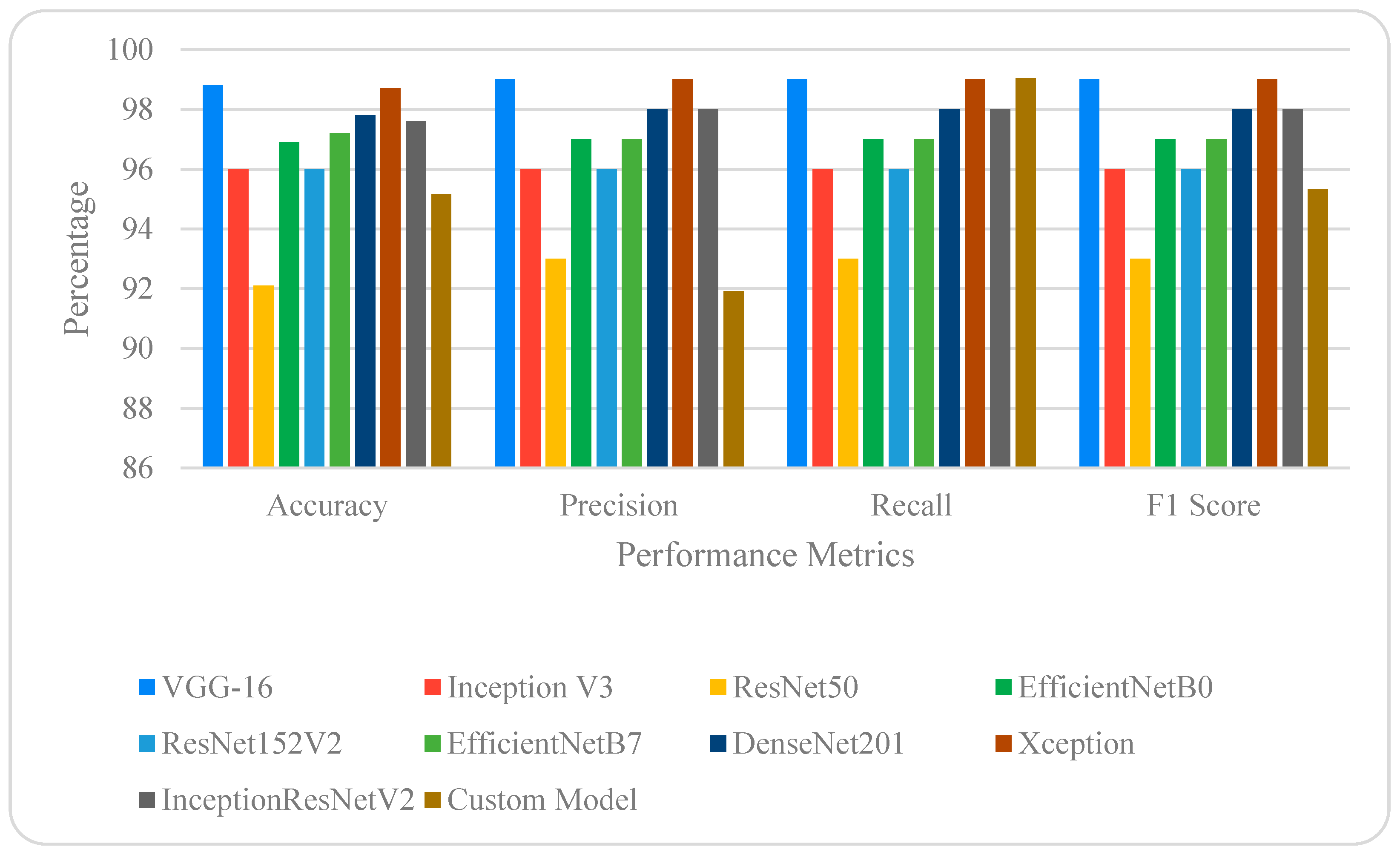

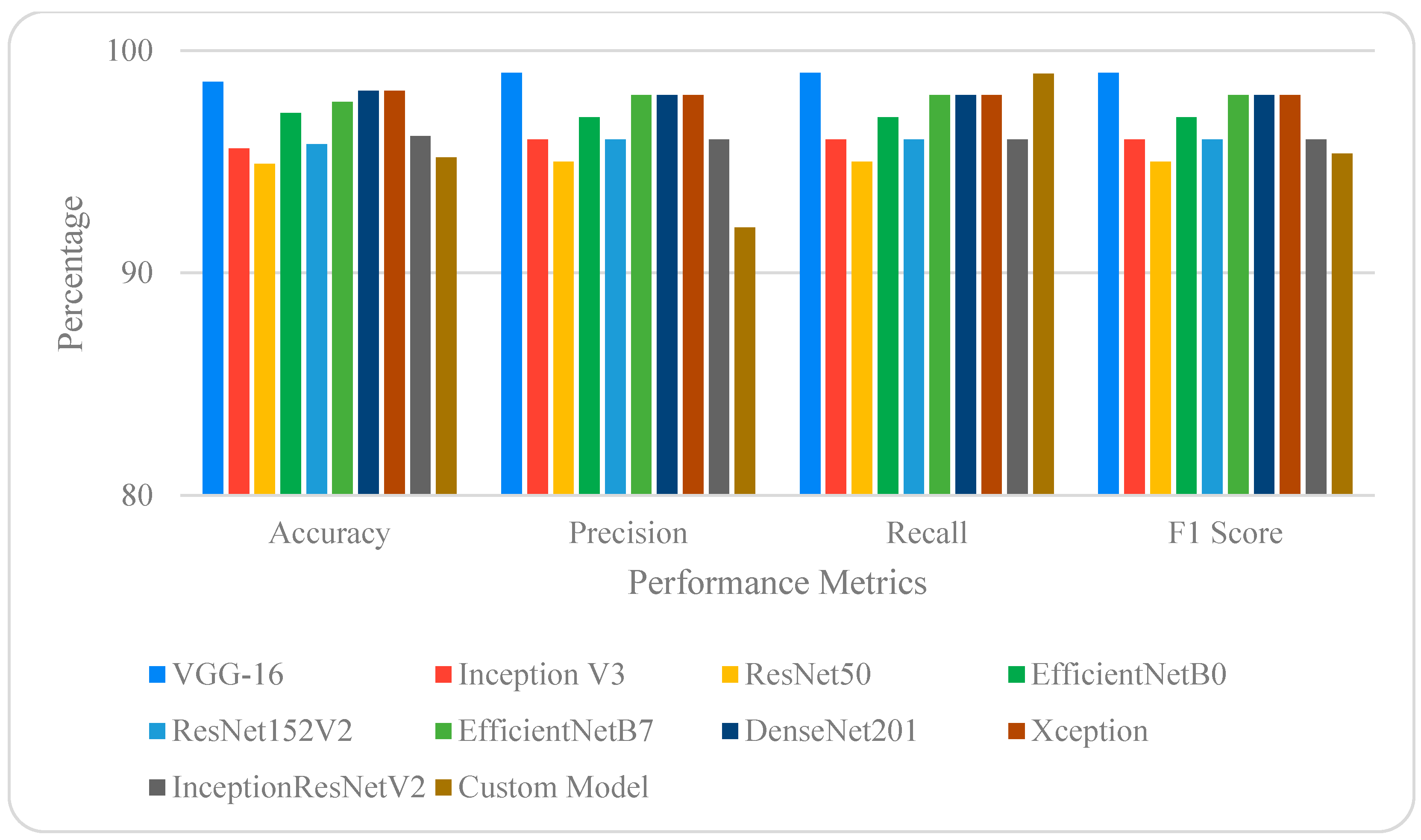

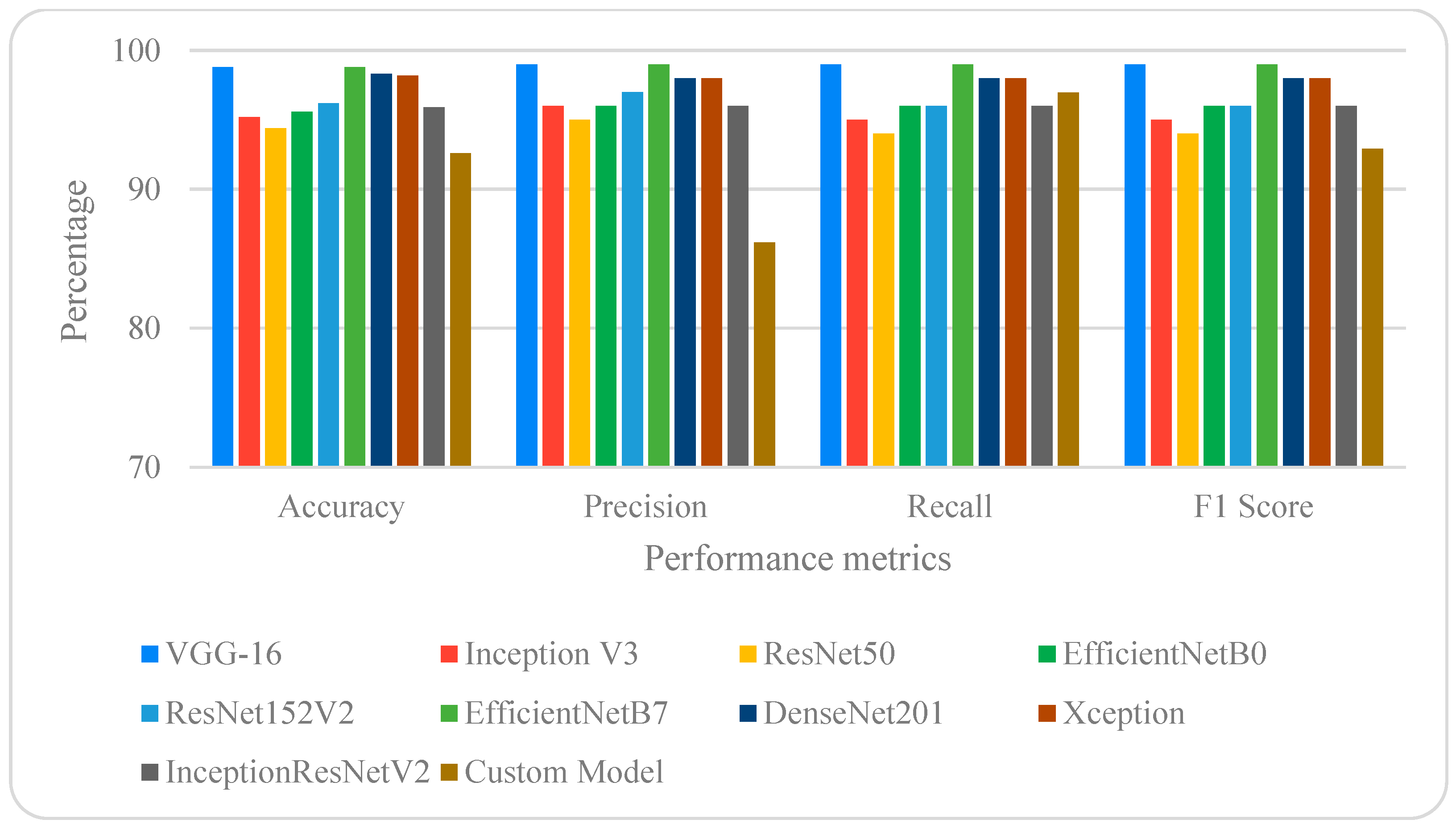

4.3. Evaluation of the Effect of Data Augmentation on Performance of Pre-Trained Models

4.4. Model Performances on Real-World Noise Datasets

4.5. Confusion Matrices

4.6. Comparison of Model Complexities and Computational Efficiency

5. Implication of the Study

6. Conclusions

- The custom CNN model achieved over 97% in accuracy, precision, recall, and F1-score on the baseline dataset. However, its performance declined when trained on augmented data, most notably with noise and brightness, which reduced precision to 86.94% and 89.18%, respectively. Shear had minimal impact, and blur and rotation produced smaller declines (precision of 92.04% and 91.91%, respectively).

- Among all models, EfficientNet-B7 performed best, achieving 99.6% accuracy and near-perfect scores (reported as 100%) on the remaining metrics for the baseline dataset. Other strong performers included Xception, EfficientNet-B0, and DenseNet-201. Except for ResNet50, all pre-trained models surpassed the custom CNN.

- Across all augmentation techniques, VGG-16, Xception, EfficientNet-B0, EfficientNet-B7, and DenseNet-201 consistently ranked highest in performance. Notably, VGG-16 maintained 99% across all key metrics regardless of the augmentation type.

- Confusion matrices revealed that these top models produced high true positive and true negative counts with minimal misclassifications, confirming their reliability and robustness in real-world crack detection tasks.

- The results demonstrate that applying targeted augmentation techniques together with pre-trained CNN models can deliver high accuracy even when training data are limited, reducing the need for large-scale datasets in crack detection applications.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional neural network |
| VI | Visual inspection |
| AI | Artificial intelligence |
| DL | Deep learning |
| NLP | Natural language processing |
| CLAHE | Contrast limited adaptive histogram equalization |
| UAV | Unmanned aerial vehicle |
| GAN | Generative adversarial network |
| SMOTE | Synthetic minority oversampling technique |
| ROC | Receiver operating characteristic |
| PR | Precision-recall |
| NF | No significant fluctuations |
| MNF | Minor and relatively small fluctuations |
| MJF | Major fluctuations |
| TP | True positive |
| TN | True negative |
| FP | False positive |
| FN | False negative |
| TPR | True positive rate |
| TNR | True negative rate |


| Pre-trained Model | Shear | Blur | Noise | Rotation |
|---|---|---|---|---|
| VGG-16 | Accuracy (MNF), Loss (NF) | Accuracy (NF), Loss (NF) | Accuracy (NF), Loss (NF) | Accuracy (NF), Loss (NF) |
| Inception V3 | Accuracy (MJF), Loss (MJF) | Accuracy (NF), Loss (NF) | Accuracy (NF), Loss (NF) | Accuracy (NF), Loss (NF) |
| ResNet50 | Accuracy (MNF), Loss (NF) | Accuracy (MJF), Loss (NF) | Accuracy (NF), Loss (NF) | Accuracy (NF), Loss (NF) |
| EfficientNetB0 | Accuracy (NF), Loss (NF) | Accuracy (MJF), Loss (MJF) | Accuracy (MJF), Loss (MJF) | Accuracy (MJF), Loss (MJF) |
| EfficientNetB7 | Accuracy (MNF), Loss (MNF) | Accuracy (MNF), Loss (MNF) | Accuracy (MNF), Loss (MNF) | Accuracy (MNF), Loss (MNF) |
| ResNet152V2 | Accuracy (NF), Loss (NF) | Accuracy (MJF), Loss (MJF) | Accuracy (MNF), Loss (MNF) | Accuracy (NF), Loss (NF) |
| DenseNet201 | Accuracy (NF), Loss (NF) | Accuracy (NF), Loss (NF) | Accuracy (MNF), Loss (MNF) | Accuracy (NF), Loss (NF) |
| Xception | Accuracy (NF), Loss (NF) | Accuracy (NF), Loss (NF) | Accuracy (MNF), Loss (MNF) | Accuracy (NF), Loss (NF) |
| InceptionResNetV2 | Accuracy (NF), Loss (NF) | Accuracy (NF), Loss (NF) | Accuracy (NF), Loss (NF) | Accuracy (NF), Loss (NF) |

| Mini-Batch Size | Learning Rate | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) | Training Time (s) | Inference Time (s) |
|---|---|---|---|---|---|---|---|
| 32 | 10⁻¹ | 50.12 | 100 | 0.24 | 0.48 | 181.74 | 2.16 |
| 32 | 10⁻² | 50.00 | 0.00 | 0.00 | 0.00 | 185.04 | 2.04 |
| 32 | 10⁻³ | 86.2 | 78.55 | 99.6 | 87.83 | 185.11 | 1.98 |
| 32 | 10⁻⁴ | 96.04 | 93.63 | 98.80 | 96.15 | 189.47 | 2.16 |
| 32 | 10⁻⁵ | 91.6 | 86.16 | 99.12 | 92.19 | 186.08 | 1.98 |
| 64 | 10⁻¹ | 93.56 | 95.56 | 91.36 | 93.42 | 170.80 | 2.72 |
| 64 | 10⁻² | 50.00 | 0.00 | 0.00 | 0.00 | 172.66 | 2.57 |
| 64 | 10⁻³ | 94.80 | 91.12 | 98.24 | 94.97 | 173.64 | 2.57 |
| 64 | 10⁻⁴ | 95.68 | 93.52 | 98.0 | 96.0 | 175.69 | 2.77 |
| 64 | 10⁻⁵ | 95.92 | 94.09 | 98.0 | 96.0 | 170.27 | 2.53 |
| 128 | 10⁻¹ | 50.00 | 0.00 | 0.00 | 0.00 | 166.05 | 2.74 |
| 128 | 10⁻² | 89.88 | 83.39 | 99.60 | 90.78 | 163.78 | 2.72 |
| 128 | 10⁻³ | 93.36 | 89.49 | 99.68 | 93.75 | 173.0 | 3.36 |
| 128 | 10⁻⁴ | 95.3 | 92.8 | 99.04 | 95.5 | 177.3 | 4.1 |
| 128 | 10⁻⁵ | 97.8 | 98.4 | 97.7 | 97.1 | 170.18 | 3.58 |

| No. of Conv. Layers | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) | Training Time (s) | Inference Time (s) |
|---|---|---|---|---|---|---|
| 1 | 68.92 | 62.29 | 95.92 | 75.53 | 102.24 | 2.61 |
| 2 | 90.56 | 86.11 | 96.72 | 91.11 | 150.48 | 2.49 |
| 3 | 97.8 | 98.4 | 97.7 | 97.1 | 170.18 | 3.58 |
| 4 | 92.52 | 87.99 | 98.48 | 92.94 | 178.57 | 2.75 |
| 5 | 78.28 | 69.74 | 99.92 | 82.14 | 183.94 | 3.75 |

| Optimizer | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) | Training Time (s) | Inference Time (s) |
|---|---|---|---|---|---|---|
| Adam | 97.8 | 98.4 | 97.7 | 97.1 | 170.18 | 3.58 |
| RMSProp | 91.32 | 91.96 | 90.56 | 91.25 | 170.16 | 6.08 |
| Adagrad | 58.54 | 58.63 | 60.08 | 59.34 | 169.10 | 4.32 |
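Adam, RMSProp, and Adagrad, which are compared in the optimizer table, differ mainly in how they scale each gradient step. The NumPy sketch below implements their standard textbook update rules on the toy objective f(w) = ½‖w‖², whose gradient is w. The learning rates, decay rates, and ε values are illustrative assumptions, not the settings used in this study. Deep-learning frameworks also differ in minor details, such as where ε enters the update.

```python
import numpy as np


def adagrad_step(w, g, state, lr=0.01, eps=1e-7):
    """Adagrad: divide by the root of the running *sum* of squared gradients."""
    state["G"] = state.get("G", np.zeros_like(w)) + g * g
    return w - lr * g / (np.sqrt(state["G"]) + eps)


def rmsprop_step(w, g, state, lr=0.001, rho=0.9, eps=1e-7):
    """RMSProp: divide by the root of an exponential moving *average* of g**2."""
    state["v"] = rho * state.get("v", np.zeros_like(w)) + (1 - rho) * g * g
    return w - lr * g / (np.sqrt(state["v"]) + eps)


def adam_step(w, g, state, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-7):
    """Adam: RMSProp-style scaling plus momentum, with bias-corrected moments."""
    t = state.get("t", 0) + 1
    m = beta1 * state.get("m", np.zeros_like(w)) + (1 - beta1) * g
    v = beta2 * state.get("v", np.zeros_like(w)) + (1 - beta2) * g * g
    state.update(t=t, m=m, v=v)
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    return w - lr * m_hat / (np.sqrt(v_hat) + eps)


def minimise(step, w0, n_steps=200, **hyper):
    """Run `step` on f(w) = 0.5 * ||w||^2, whose gradient is w itself."""
    w = np.asarray(w0, dtype=float)
    state = {}
    for _ in range(n_steps):
        w = step(w, w.copy(), state, **hyper)
    return w


if __name__ == "__main__":
    start = np.array([1.0, -2.0])
    for name, step in [("Adagrad", adagrad_step), ("RMSProp", rmsprop_step),
                       ("Adam", adam_step)]:
        w = minimise(step, start)
        print(f"{name:<8} ||w|| after 200 steps: {np.linalg.norm(w):.4f}")
```

Adagrad's accumulator only ever grows, so its effective step size keeps shrinking during training. This is one commonly cited reason Adagrad can lag behind RMSProp and Adam. The table alone, however, does not establish why it scored lowest here.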

| Kernel Size | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) | Training Time (s) | Inference Time (s) |
|---|---|---|---|---|---|---|
| 3 × 3 | 97.8 | 98.4 | 97.7 | 97.1 | 170.18 | 3.58 |
| 5 × 5 | 92.2 | 87.60 | 98.32 | 92.65 | 238.51 | 3.50 |
| 7 × 7 | 97.48 | 95.55 | 99.6 | 97.52 | 329.47 | 4.21 |
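The cost of a single convolution layer helps explain part of the growth in training time with kernel size. Both the layer's weight count and its multiply-accumulate (MAC) count scale with k². The sketch below computes both quantities. The channel counts and feature-map size are hypothetical, because the layer dimensions of the custom model are not listed here.

```python
from typing import Tuple


def conv2d_cost(k: int, c_in: int, c_out: int, h_out: int, w_out: int,
                bias: bool = True) -> Tuple[int, int]:
    """Return (parameters, multiply-accumulates) for one k x k 2-D convolution.

    Each of the c_out filters has k*k*c_in weights (plus one bias) and is
    evaluated once per output position, i.e. h_out * w_out times.
    """
    params = (k * k * c_in + (1 if bias else 0)) * c_out
    macs = k * k * c_in * c_out * h_out * w_out
    return params, macs


if __name__ == "__main__":
    # Hypothetical layer: 32 -> 64 channels on a 112 x 112 output map.
    _, base_macs = conv2d_cost(3, 32, 64, 112, 112)
    for k in (3, 5, 7):
        params, macs = conv2d_cost(k, 32, 64, 112, 112)
        print(f"{k}x{k}: {params:>7,} params, {macs / 1e9:.2f} GMACs "
              f"({macs / base_macs:.2f}x the 3x3 cost)")
```

Moving from 3 × 3 to 5 × 5 or 7 × 7 kernels raises the per-layer MAC count by factors of 25/9 ≈ 2.8 and 49/9 ≈ 5.4, respectively. The measured training times grow less steeply, from 170.18 s to 238.51 s and 329.47 s. This would be expected if convolutions account for only part of the total runtime, with data loading, dense layers, and other overheads making up the rest. FLOP counts are often quoted as roughly twice the MAC count, although conventions vary.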

| Dataset | Precision (%) | Recall (%) | Accuracy (%) | F1 Score (%) | AUC (%) |
|---|---|---|---|---|---|
| Unaugmented (baseline) | 98.4 | 97.1 | 97.8 | 97.7 | 97.8 |
| Augmented: Blur | 92.04 | 98.96 | 95.20 | 95.37 | 95.20 |
| Augmented: Brightness | 89.18 | 96.96 | 92.60 | 92.91 | 92.60 |
| Augmented: Noise | 86.94 | 92.04 | 92.08 | 92.60 | 92.08 |
| Augmented: Rotation | 91.91 | 99.04 | 95.16 | 95.34 | 95.16 |
| Augmented: Shear | 98.93 | 96.16 | 97.56 | 97.53 | 97.56 |

Model Evaluation Metrics

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) | Training Time (s) | Inference Time (s) |
|---|---|---|---|---|---|---|
| VGG-16 | 97.2 | 95 | 100 | 97 | 91 | 2 |
| Inception V3 | 88.5 | 83 | 98 | 89 | 84 | 12 |
| ResNet50 | 86.9 | 95 | 78 | 86 | 71 | 8 |
| EfficientNetB0 | 63.0 | 100 | 26 | 41 | 71 | 14 |
| ResNet152V2 | 94.6 | 90 | 100 | 95 | 120 | 20 |
| EfficientNetB7 | | | | | | |
| DenseNet201 | 98.3 | 97 | 100 | 98 | 120 | 47 |
| Xception | 97.2 | 95 | 100 | 97 | 91 | 3 |
| InceptionResNetV2 | 83.6 | 82 | 86 | 84 | 103 | 22 |
| Proposed Model | 97.6 | 100 | 95.2 | 97.6 | 27 | 2 |

| CNN Models | TP | FP | TN | FN |
|---|---|---|---|---|
| VGG-16 | 606 | 19 | 0² | 625¹ |
| Inception V3 | 612 | 13 | 8 | 617 |
| ResNet50 | 602 | 23 | 22 | 603 |
| EfficientNetB0 | 613 | 12 | 0² | 625¹ |
| EfficientNetB7 | 622¹ | 3² | 2 | 623 |
| ResNet152V2 | 618 | 7 | 2 | 623 |
| DenseNet201 | 614 | 11 | 9 | 616 |
| Xception | 616 | 9 | 3 | 622 |
| InceptionResNetV2 | 607 | 18 | 12 | 613 |
| Proposed ResNet | 615 | 10 | 18 | 607 |

| CNN Models | TP | FP | TN | FN |
|---|---|---|---|---|
| VGG-16 | 1222¹ | 28² | 2 | 1248 |
| Inception V3 | 1165 | 85 | 15 | 1235 |
| ResNet50 | 1085 | 165 | 31 | 1219 |
| EfficientNetB0 | 1173 | 77 | 0² | 1250¹ |
| EfficientNetB7 | 1182 | 68 | 1 | 1249 |
| ResNet152V2 | 1153 | 97 | 3 | 1247 |
| DenseNet201 | 1212 | 38 | 17 | 1233 |
| Xception | 1220 | 30 | 3 | 1247 |
| InceptionResNetV2 | 1210 | 40 | 20 | 1230 |
| Proposed ResNet | 1141 | 109 | 12 | 1238 |

| CNN Models | TP | FP | TN | FN |
|---|---|---|---|---|
| VGG-16 | 1219¹ | 31² | 4 | 1246 |
| Inception V3 | 1148 | 102 | 7 | 1243 |
| ResNet50 | 1167 | 83 | 43 | 1207 |
| EfficientNetB0 | 1187 | 67 | 1 | 1249 |
| EfficientNetB7 | 1193 | 57 | 0² | 1250¹ |
| ResNet152V2 | 1147 | 103 | 2 | 1248 |
| DenseNet201 | 1216 | 34 | 11 | 1239 |
| Xception | 1211 | 39 | 6 | 1244 |
| InceptionResNetV2 | 1162 | 88 | 8 | 1242 |
| Proposed ResNet | 1098 | 152 | 16 | 1234 |

| CNN Models | TP | FP | TN | FN |
|---|---|---|---|---|
| VGG-16 | 1222¹ | 28² | 2 | 1248 |
| Inception V3 | 1139 | 111 | 8 | 1242 |
| ResNet50 | 1146 | 104 | 35 | 1215 |
| EfficientNetB0 | 1139 | 111 | 0 | 1250 |
| EfficientNetB7 | 1222¹ | 28² | 0² | 1250¹ |
| ResNet152V2 | 1164 | 86 | 6 | 1244 |
| DenseNet201 | 1222 | 28 | 14 | 1236 |
| Xception | 1211 | 39 | 6 | 1244 |
| InceptionResNetV2 | 1154 | 96 | 6 | 1244 |
| Proposed ResNet | 1092 | 158 | 23 | 1227 |

| CNN Models | TP | FP | TN | FN |
|---|---|---|---|---|
| VGG-16 | 1208 | 42 | 1 | 1249 |
| Inception V3 | 1139 | 111 | 7 | 1243 |
| ResNet50 | 1152 | 98 | 41 | 1209 |
| EfficientNetB0 | 1183 | 67 | 0¹ | 1250¹ |
| EfficientNetB7 | 1217¹ | 33² | 0² | 1250¹ |
| ResNet152V2 | 1157 | 93 | 2 | 1248 |
| DenseNet201 | 1204 | 46 | 1 | 1249 |
| Xception | 1210 | 40 | 1 | 1249 |
| InceptionResNetV2 | 1180 | 70 | 7 | 1243 |
| Proposed ResNet | 1064 | 186 | 12 | 1238 |

| CNN Models | TP | FP | TN | FN |
|---|---|---|---|---|
| VGG-16 | 1223 | 27 | 3 | 1247 |
| Inception V3 | 1175 | 75 | 11 | 1239 |
| ResNet50 | 1097 | 153 | 25 | 1225 |
| EfficientNetB0 | 1197 | 53 | 0 | 1250 |
| EfficientNetB7 | 1217 | 33 | 0² | 1250¹ |
| ResNet152V2 | 1156 | 94 | 4 | 1246 |
| DenseNet201 | 1215 | 35 | 11 | 1239 |
| Xception | 1223 | 27 | 4 | 1246 |
| InceptionResNetV2 | 1205 | 45 | 15 | 1235 |
| Proposed ResNet | 1237¹ | 13² | 48 | 1202 |

Model Parameters

| Model | Parameters (millions) | Size (MB) | Depth | FLOPs | Training Time (s) |
|---|---|---|---|---|---|
| VGG-16 | 138.4 | 528 | 16 | 16 G | 839 |
| Inception V3 | 23.9 | 92 | 189 | 6 G | 331 |
| ResNet50 | 25.6 | 98 | 107 | 4 G | 482 |
| EfficientNetB0 | 5.3 | 29 | 132 | 0.39 G | 323 |
| ResNet152V2 | 60.4 | 232 | 307 | 11 G | 1003 |
| EfficientNetB7 | 66.7 | 256 | 438 | 0.37 G | 1615 |
| DenseNet201 | 20.2 | 80 | 402 | 4 G | 820 |
| Xception | 22.9 | 88 | 81 | 11 G | 694 |
| InceptionResNetV2 | 55.9 | 215 | 449 | 15.1 G | 666 |
| Proposed Model | 7.48 | 28.5 | 4 | 0.296 G | 170 |
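The size column closely tracks the parameter count. Each float32 weight occupies 4 bytes, so n parameters need about 4n/2²⁰ mebibytes. The helper below, whose name is hypothetical, computes that estimate. It reproduces the listed sizes for VGG-16 (≈528) and the proposed model (≈28.5). Other entries differ to varying degrees, most notably EfficientNetB0 (≈20 versus 29), presumably because saved model files can contain more than the raw weights.

```python
def float32_weight_mib(n_params: float) -> float:
    """Approximate size of n_params float32 weights in MiB (4 bytes each, 2**20 bytes per MiB)."""
    return n_params * 4 / 2**20


if __name__ == "__main__":
    # Parameter counts (millions) and listed sizes copied from the model-parameter table.
    listed = {
        "VGG-16": (138.4, 528),
        "ResNet50": (25.6, 98),
        "EfficientNetB0": (5.3, 29),
        "Proposed Model": (7.48, 28.5),
    }
    for name, (millions, size) in listed.items():
        est = float32_weight_mib(millions * 1e6)
        print(f"{name:<15} estimate {est:6.1f} MiB, listed {size}")
```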

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Omoniyi, T.M.; Abel, B.; Omoebamije, O.; Onimisi, Z.M.; Matos, J.C.; Tinoco, J.; Minh, T.Q. The Effect of Data Augmentation on Performance of Custom and Pre-Trained CNN Models for Crack Detection. Appl. Sci. 2025, 15, 12321. https://doi.org/10.3390/app152212321
