SmaAt-UNet Optimized by Particle Swarm Optimization (PSO): A Study on the Identification of Detachment Diseases in Ming Dynasty Temple Mural Paintings in North China

Abstract

1. Introduction

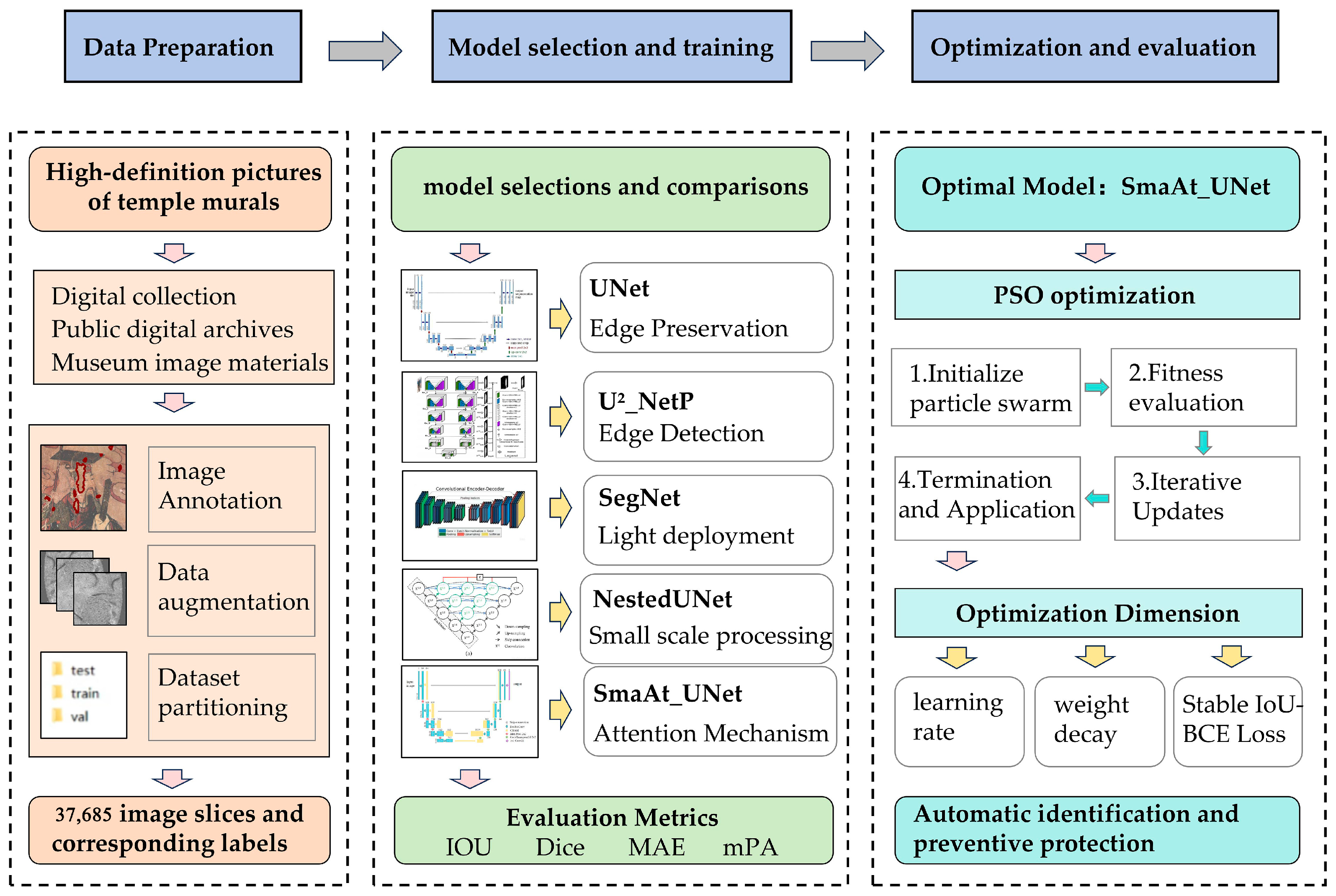

2. Materials and Methods

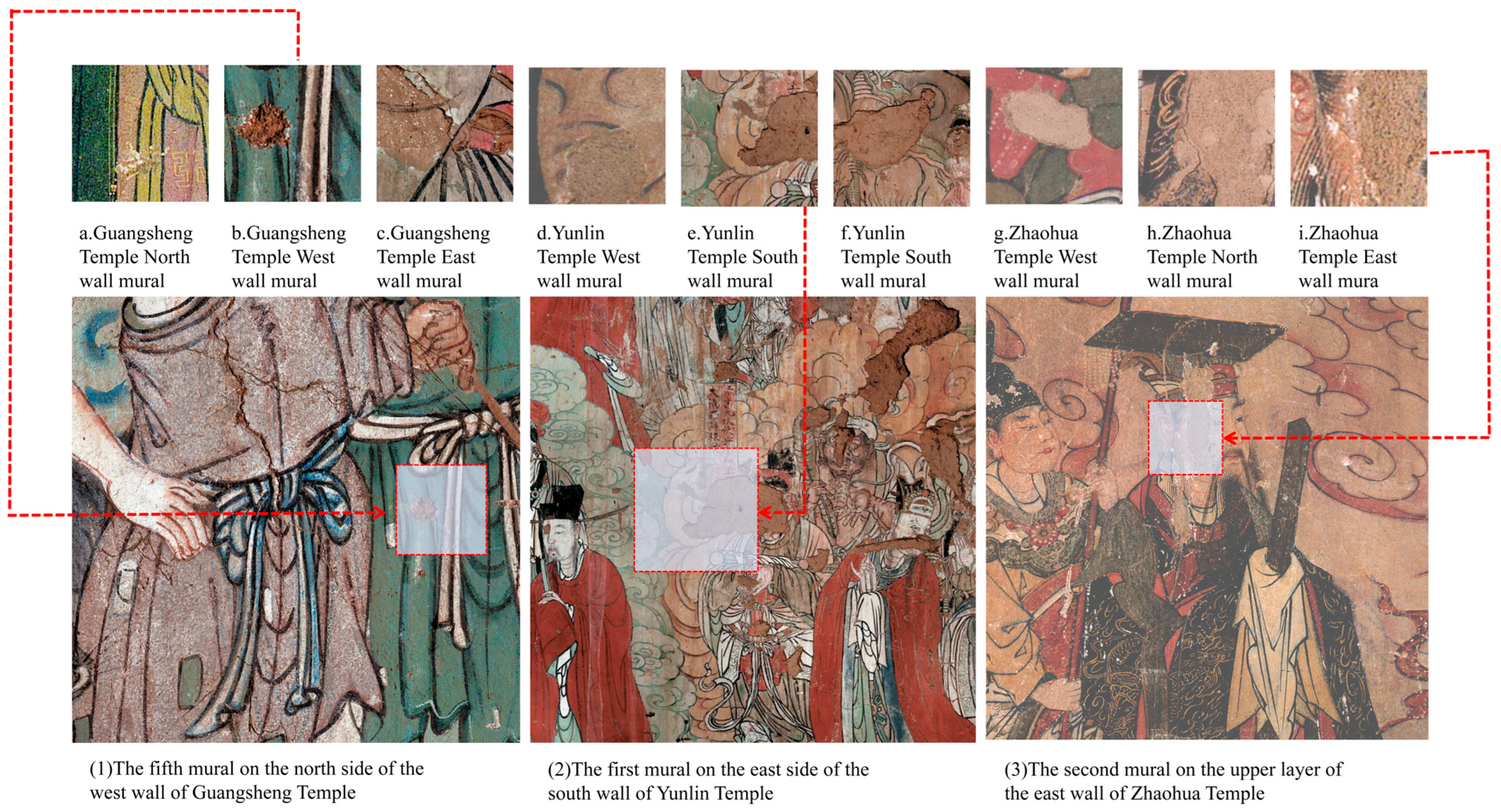

2.1. Research Objects and Data Collection

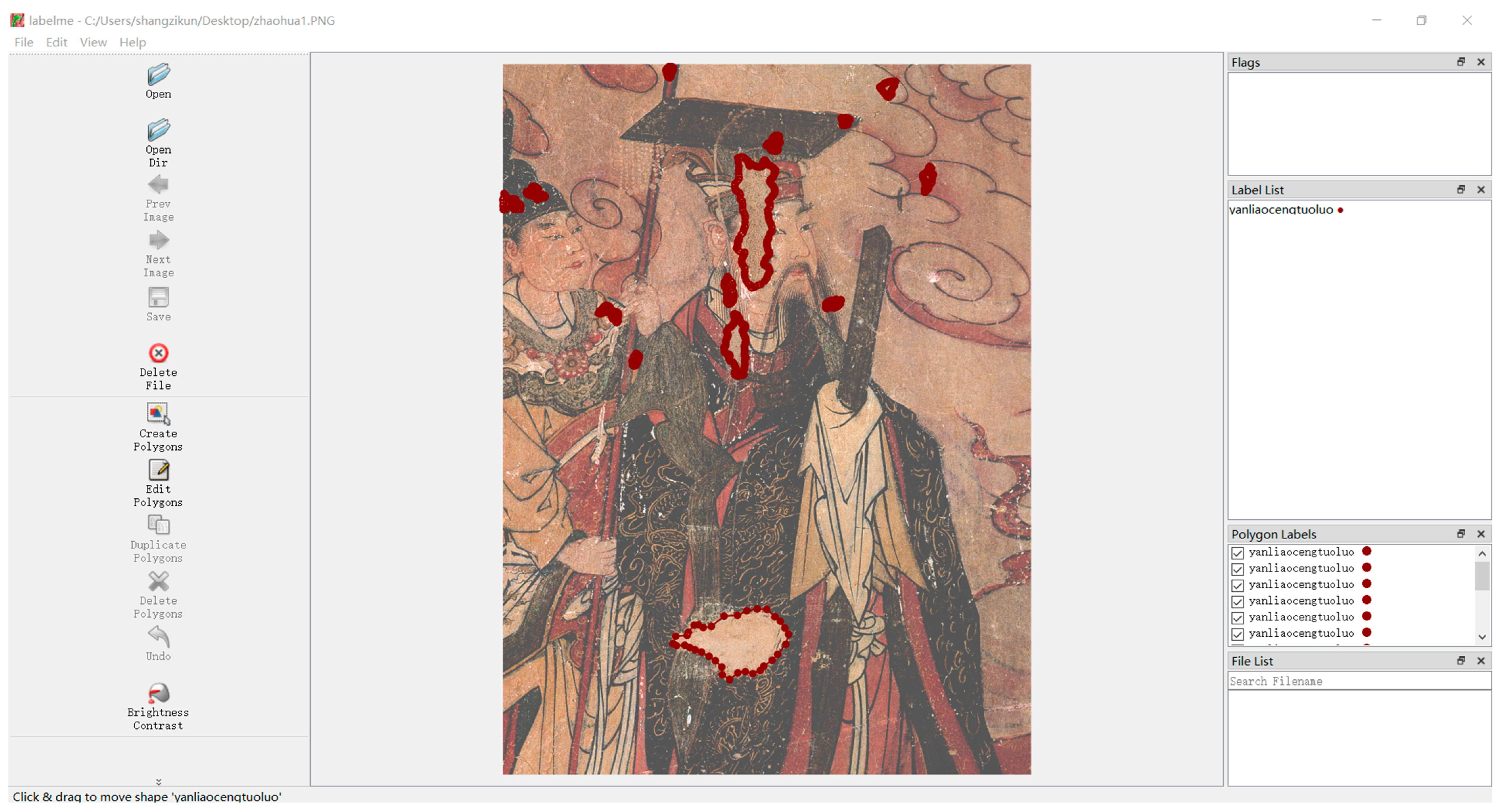

2.2. Image Annotation and Preprocessing

2.3. Segmentation Models

2.4. Hyperparameter Optimization via Particle Swarm Optimization (PSO) Algorithm

3. Experiments and Validation

3.1. Definition and Explanation of Evaluation Metrics

3.2. Loss Function

3.3. Design of Comparative Experiments

(1) Data Preparation: The preprocessed dataset of 37,685 image slices was loaded, and the same data augmentation pipeline (e.g., rotation, flipping, and brightness adjustment) was applied for every model, both to mitigate overfitting and to ensure that all models saw the same data distribution.

(2) Model Initialization: All models were implemented in the PyTorch deep learning framework and strictly followed their published architectures (e.g., the symmetric encoder–decoder with skip connections of UNet, and the spatial attention modules integrated into SmaAt-UNet). Model weights were initialized with the same random seed to eliminate initialization bias.

(3) Training: Each model was trained on the training set for multiple epochs, one epoch being a full pass over all mini-batches. The key training configurations were standardized across models:

- Batch size: 16 (chosen to balance computational efficiency and gradient stability);

- Parameter update: Model parameters were updated iteratively with a composite loss, the Stable IoU–BCE loss L = α × L_StableIoU + β × L_BCE, with α = 10 and β = 1 fixed for the comparison phase;

- Maximum epochs: 80 for all models, to eliminate performance bias caused by differing training durations and to allow each model to converge fully.

Performance monitoring and optimization were implemented consistently as follows:

- Validation frequency: every 5 epochs, to track training progress and detect overfitting;

- Learning rate scheduling: ReduceLROnPlateau strategy (patience = 10), which decreases the learning rate when the validation IoU plateaus, facilitating fine-grained parameter tuning;

- Early stopping safeguard: an early-stopping criterion (no improvement in validation IoU for 15 consecutive epochs) was monitored to capture the optimal model state; nevertheless, the full 80-epoch training was completed for every model to maintain experimental consistency.

(4) Repeated Runs: To minimize the influence of random initialization on the results, the experiments were repeated under five random seeds (42, 123, 456, 789, and 1011), with the model trained independently for each seed. All performance metrics (IoU, Dice, MAE, and mPA) were averaged over the five runs to obtain the reported values, and 95% confidence intervals were calculated to quantify the statistical stability of model performance.

(5) Evaluation: After training, the models were evaluated on an independent test set that was not involved in training or validation, to ensure unbiased results. Each model performed inference to generate pixel-level prediction masks, from which four quantitative metrics were computed for a comprehensive assessment: IoU, Dice, MAE, and mPA. To guarantee a fair comparison, all models shared the same dataset splits (training/validation/test), training protocol, data preprocessing and augmentation pipeline, and loss function.
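The augmentation in item (1) must keep each image slice and its detachment mask aligned: geometric transforms go to both, photometric ones to the image only. The NumPy sketch below shows one way to do this. The exact parameters are not given in the text, so the choices here (rotations in multiples of 90°, random horizontal and vertical flips, a multiplicative brightness factor of up to ±20%) and the helper name `augment_pair` are assumptions for illustration.

```python
import numpy as np


def augment_pair(image, mask, rng, max_brightness_shift=0.2):
    """Apply one random augmentation to an (image, mask) pair.

    Assumed conventions (not from the paper): image is a float array of shape
    (H, W, C) with values in [0, 1]; mask is a binary (H, W) array.
    """
    # Geometric transforms: applied identically to image and mask
    k = int(rng.integers(0, 4))  # number of 90-degree rotations
    image = np.rot90(image, k, axes=(0, 1))
    mask = np.rot90(mask, k, axes=(0, 1))
    if rng.random() < 0.5:  # horizontal flip
        image, mask = image[:, ::-1], mask[:, ::-1]
    if rng.random() < 0.5:  # vertical flip
        image, mask = image[::-1, :], mask[::-1, :]
    # Photometric transform: image only, so the label mask is unchanged
    factor = 1.0 + rng.uniform(-max_brightness_shift, max_brightness_shift)
    image = np.clip(image * factor, 0.0, 1.0)
    return np.ascontiguousarray(image), np.ascontiguousarray(mask)


rng = np.random.default_rng(42)
slice_img = np.random.default_rng(0).random((64, 64, 3))
slice_mask = (slice_img[..., 0] > 0.5).astype(np.uint8)
aug_img, aug_mask = augment_pair(slice_img, slice_mask, rng)
```

Seeding the generator (`rng`) makes the augmentation sequence reproducible, which matters when the same pipeline is meant to be shared across all compared models.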
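The composite loss in item (3) can be sketched as follows. This section does not define the "Stable IoU" term, so the sketch assumes a soft (differentiable) IoU loss on sigmoid probabilities with a small smoothing constant `eps`, combined with binary cross-entropy evaluated directly on logits in the numerically stable form that PyTorch's `torch.nn.BCEWithLogitsLoss` also relies on. Function names are hypothetical; during training the same expressions would be written with tensors so gradients can flow.

```python
import numpy as np


def sigmoid(x):
    # Identity sigmoid(x) = 0.5 * (1 + tanh(x / 2)); avoids overflow in exp
    return 0.5 * (1.0 + np.tanh(0.5 * x))


def stable_bce_with_logits(logits, target):
    # max(x, 0) - x*y + log(1 + exp(-|x|)) equals BCE(sigmoid(x), y) without overflow
    return float(np.mean(np.maximum(logits, 0.0) - logits * target
                         + np.log1p(np.exp(-np.abs(logits)))))


def soft_iou_loss(logits, target, eps=1e-6):
    # Soft IoU on probabilities; eps keeps the ratio defined for empty masks (assumption)
    prob = sigmoid(logits)
    intersection = np.sum(prob * target)
    union = np.sum(prob) + np.sum(target) - intersection
    return float(1.0 - (intersection + eps) / (union + eps))


def combined_loss(logits, target, alpha=10.0, beta=1.0):
    # L = alpha * L_IoU + beta * L_BCE, with the comparison-phase weights as defaults
    return alpha * soft_iou_loss(logits, target) + beta * stable_bce_with_logits(logits, target)
```

With α = 10, the overlap term dominates, which pushes the optimizer toward region-level agreement while the BCE term still supplies a per-pixel gradient.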
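The monitoring rules in item (3) interact with the validation frequency: because IoU is measured only every 5 epochs, "15 consecutive epochs without improvement" amounts to three validations in a row. The pure-Python replay below (the helper `replay_monitoring` and its inputs are hypothetical) records the best checkpoint and the epoch at which early stopping would have fired, without actually stopping. A related caveat, assuming `torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer, mode="max", patience=10)` was used: its `patience` counts calls to `step()`, so if the scheduler is stepped only at validation epochs, ten non-improving validations correspond to roughly 50 training epochs rather than 10.

```python
def replay_monitoring(val_ious, val_every=5, max_epochs=80, stop_patience=15):
    """Replay the monitoring protocol on a sequence of validation IoU scores.

    val_ious[i] is the IoU measured at epoch (i + 1) * val_every.
    Returns (best_epoch, best_iou, early_stop_epoch); early_stop_epoch is None
    if the stagnation criterion never fired. Training is never cut short here,
    since the criterion is only recorded.
    """
    best_iou, best_epoch, early_stop_epoch = float("-inf"), None, None
    for i, iou in enumerate(val_ious):
        epoch = (i + 1) * val_every
        if epoch > max_epochs:
            break
        if iou > best_iou:
            best_iou, best_epoch = iou, epoch  # a checkpoint of this state would be saved
        elif early_stop_epoch is None and epoch - best_epoch >= stop_patience:
            # With the defaults: 15 epochs = 3 validations without improvement
            early_stop_epoch = epoch
    return best_epoch, best_iou, early_stop_epoch


# Hypothetical validation curve (16 checks at epochs 5, 10, ..., 80)
curve = [0.50, 0.58, 0.62, 0.61, 0.61, 0.60, 0.64, 0.64,
         0.63, 0.63, 0.63, 0.63, 0.62, 0.62, 0.62, 0.62]
print(replay_monitoring(curve))
```

In this made-up curve the criterion fires at epoch 30, yet the best state appears later at epoch 35, which illustrates the difference between recording an early-stopping point and actually halting training.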
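The four test-set metrics in item (5) are not defined in this section, so the sketch below uses common definitions, all of which are assumptions: IoU and Dice on the prediction binarized at 0.5, MAE between the predicted probability map and the binary ground truth, and mPA as the mean of per-class pixel accuracy over the background and detachment classes. For a single mask, Dice and IoU are linked by Dice = 2·IoU/(1 + IoU). Whether the paper averages metrics per image or pools pixels over the whole test set is not stated here.

```python
import numpy as np


def segmentation_metrics(prob, target, threshold=0.5, eps=1e-7):
    """Binary segmentation metrics for one image.

    prob: predicted detachment probabilities, shape (H, W), values in [0, 1]
    target: ground-truth mask, shape (H, W), 1 = detachment, 0 = background
    """
    gt = target.astype(bool)
    pred = prob >= threshold
    tp = np.sum(pred & gt)    # detachment pixels found
    fp = np.sum(pred & ~gt)   # background predicted as detachment
    fn = np.sum(~pred & gt)   # detachment pixels missed
    tn = np.sum(~pred & ~gt)  # background correctly rejected
    iou = (tp + eps) / (tp + fp + fn + eps)
    dice = (2 * tp + eps) / (2 * tp + fp + fn + eps)
    mae = np.mean(np.abs(prob - gt.astype(float)))  # on probabilities, before thresholding
    acc_detachment = (tp + eps) / (tp + fn + eps)
    acc_background = (tn + eps) / (tn + fp + eps)
    mpa = (acc_detachment + acc_background) / 2
    return {"IoU": float(iou), "Dice": float(dice), "MAE": float(mae), "mPA": float(mpa)}


# Toy 4 x 4 example: 4 detachment pixels, 3 found, 1 missed, 1 false alarm
gt_mask = np.zeros((4, 4), dtype=np.uint8)
gt_mask[1:3, 1:3] = 1
prob_map = np.zeros((4, 4))
prob_map[1, 1] = prob_map[1, 2] = prob_map[2, 1] = 0.9
prob_map[2, 2] = 0.2
prob_map[0, 0] = 0.8
print(segmentation_metrics(prob_map, gt_mask))
```

Because mPA averages the two classes equally, it stays high when the large background class is handled well, which is one reason it can exceed IoU by a wide margin on sparse detachment masks.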

3.4. PSO Experiment Design

4. Results

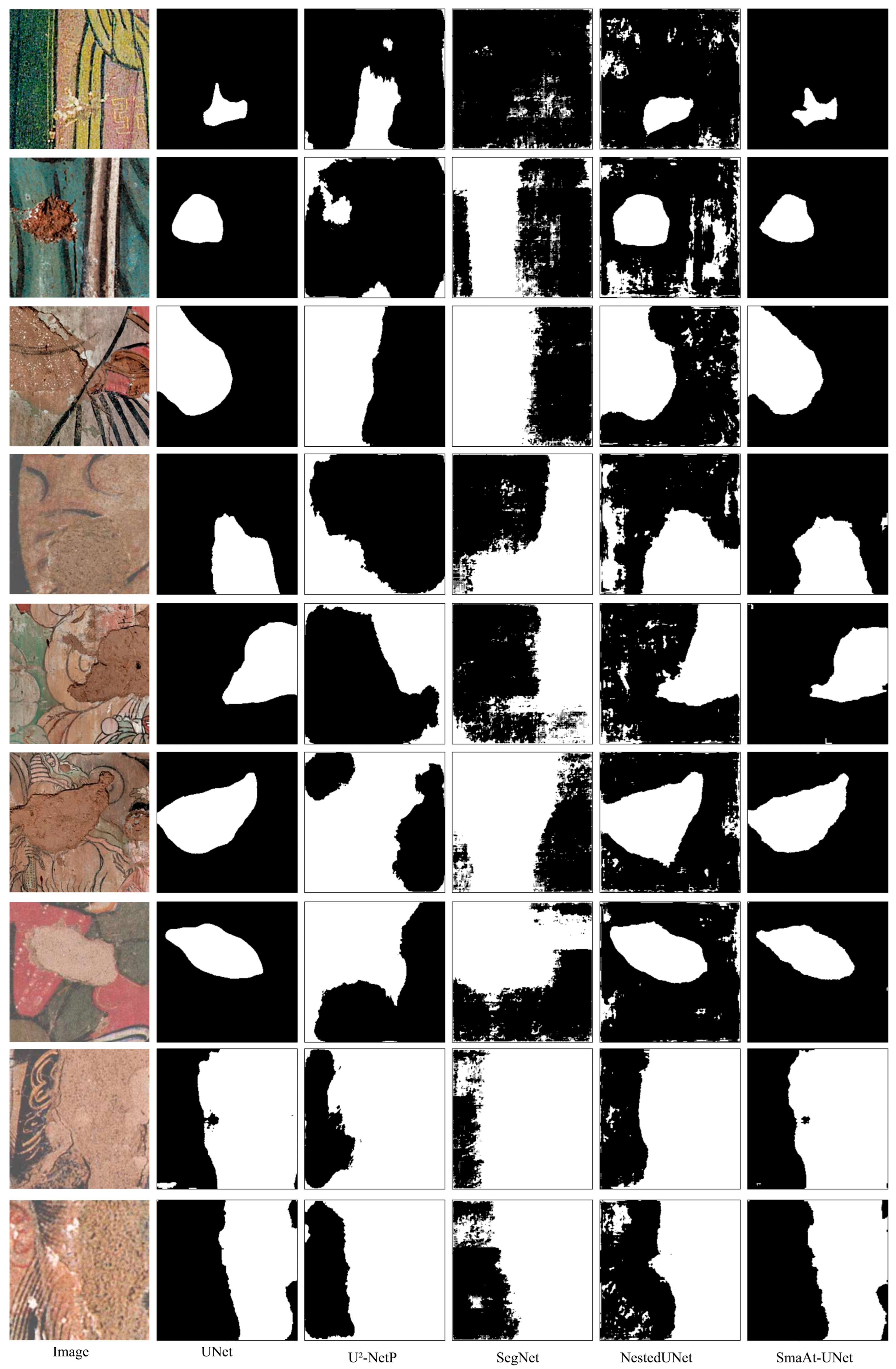

4.1. Comparison Results of the Segmentation Models

4.1.1. Segmentation Accuracy

4.1.2. Model Comparison Training and Validation Curves

4.1.3. Model Comparison Segmentation Result Visualization

4.2. Performance Results of the Optimized Model

4.2.1. Performance Metrics

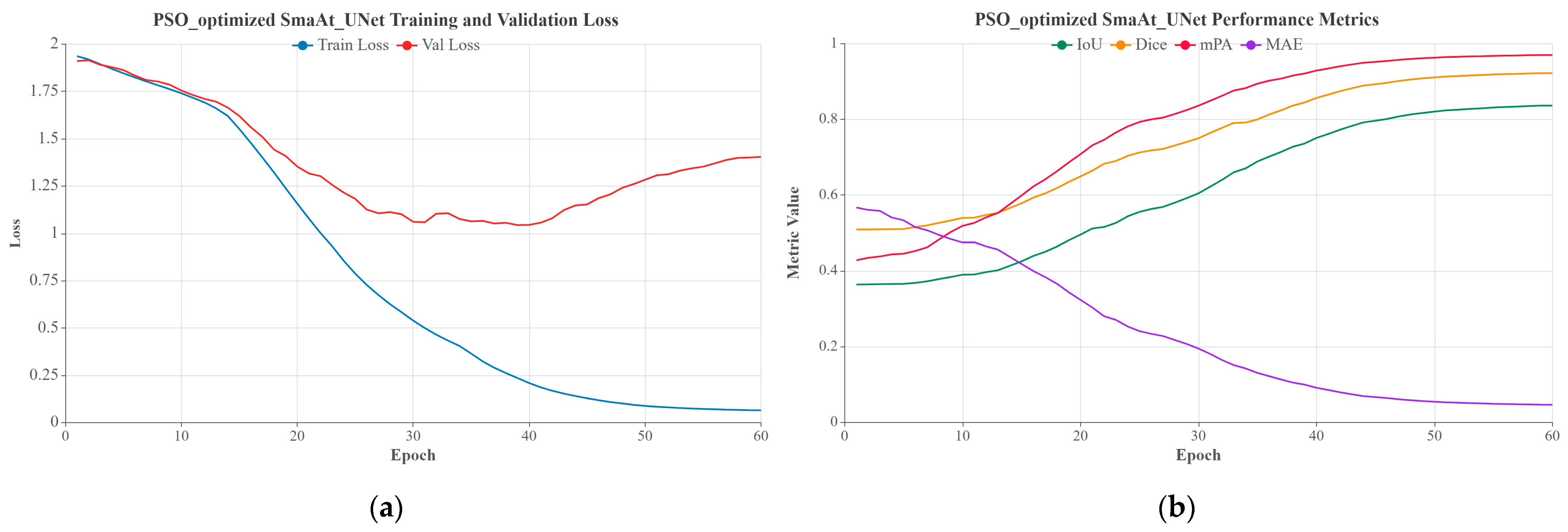

4.2.2. Model Optimization Training and Validation Curves

4.2.3. Model Optimization Segmentation Result Visualization

5. Discussion

5.1. Model Performance Analysis

- Lightweight convolutional units: Unlike the standard convolutions in UNet, the depthwise separable convolutions of SmaAt-UNet decompose a standard convolution into a spatial (depthwise) convolution applied to each channel separately and a 1 × 1 (pointwise) convolution that mixes channels, drastically reducing the parameter count and computational cost. Crucially, the model retains its multi-scale feature extraction capability, which is essential for handling mural images with intricate textures (e.g., overlapping brushstrokes) and variable deterioration morphologies (e.g., dot-like vs. sheet-like detachment);

- Attention module integration: During feature extraction, the attention modules dynamically assign higher weights to pixels corresponding to detachment regions and lower weights to background pixels. This selective attention improves the model’s discrimination at edge regions, addressing the common problem of blurry boundaries between detachment areas and the mural background, and enables more precise boundary localization.
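Both mechanisms above can be illustrated in a few lines of NumPy. The first part counts weights: a standard k × k convolution from C_in to C_out channels has k²·C_in·C_out weights, whereas a depthwise separable one has k²·C_in + C_in·C_out, a ratio of 1/C_out + 1/k². For k = 3 and 64 → 64 channels that is 36,864 versus 4,672 weights, roughly 7.9 times fewer. The second part is a CBAM-style spatial attention map (a sigmoid over a convolution of the channel-wise mean and max maps). Treating the attention as CBAM-style is an assumption here; the original SmaAt-UNet uses CBAM modules, which also include a channel-attention step omitted in this sketch. All function names are hypothetical and biases are ignored in the counts.

```python
import numpy as np


def standard_conv_params(c_in, c_out, k):
    """Weights of a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in, c_out, k):
    """Weights of a depthwise (k x k per channel) + pointwise (1 x 1) pair (bias ignored)."""
    depthwise = k * k * c_in  # one spatial filter per input channel
    pointwise = c_in * c_out  # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


def spatial_attention(x, weight, bias=0.0):
    """CBAM-style spatial attention for a feature map x of shape (C, H, W).

    weight has shape (2, k, k) with odd k and acts on the stacked channel-mean
    and channel-max maps; zero padding keeps H and W unchanged. Returns the
    reweighted feature map and the (H, W) attention map with values in (0, 1).
    """
    pooled = np.stack([x.mean(axis=0), x.max(axis=0)])  # (2, H, W)
    k = weight.shape[-1]
    p = k // 2
    padded = np.pad(pooled, ((0, 0), (p, p), (p, p)))
    h, w = x.shape[1:]
    logits = np.full((h, w), bias, dtype=float)
    # Cross-correlation (the deep-learning "convolution"), one kernel tap at a time
    for i in range(k):
        for j in range(k):
            logits += np.tensordot(weight[:, i, j], padded[:, i:i + h, j:j + w], axes=1)
    attention = 0.5 * (1.0 + np.tanh(0.5 * logits))  # sigmoid via tanh, overflow-free
    return x * attention[np.newaxis], attention


print(standard_conv_params(64, 64, 3), depthwise_separable_params(64, 64, 3))  # 36864 4672

rng = np.random.default_rng(0)
features = rng.normal(size=(8, 16, 16))
out, attn = spatial_attention(features, rng.normal(scale=0.1, size=(2, 7, 7)))
```

In a trained network the kernel `weight` is learned, so pixels whose channel statistics resemble detachment receive attention values near 1 and background pixels values near 0; the random kernel above only demonstrates shapes and value ranges.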

5.2. Model Limitations

- Limited data diversity: The study relied primarily on digital resources from specific institutions, so the data are concentrated in two key dimensions: geographical coverage (murals from a few regions) and temporal scope (a narrow historical period). This lack of diversity may limit the model’s generalization to murals from underrepresented areas or eras whose stylistic or deterioration characteristics differ;

- High cost and subjectivity of manual annotation: Detachment regions were annotated entirely by hand. Even with strict adherence to the relevant Chinese national standard, the workflow was labor-intensive. Moreover, subjective differences (e.g., in annotators’ judgment of blurred detachment boundaries) inevitably introduce annotation errors, which may propagate into model training;

- Challenges in complex scenarios: For murals featuring intricate decorative patterns (e.g., overlapping motifs) and rich color gradients, the models struggled with two common issues: false detection (misclassifying decorative elements as detachment) and missed detection (failing to identify small-scale or edge-blurred detachments). This reflects the models’ insufficient ability to distinguish between detachment and complex background textures.

5.3. Model Application and Protection Practices

- The segmentation results can be integrated into digital archives and visualization databases for cultural heritage, supporting long-term monitoring and multi-temporal comparison of mural deterioration so that damage progression can be tracked over time. When integrated with Historical Building Information Modeling (HBIM) systems, the results allow mural detachment areas to be precisely located and visualized in three-dimensional space; correlating structural information with damage distribution can improve the efficiency and accuracy of interdisciplinary collaboration [44,45].

- The model’s lightweight architecture and fast inference give it good portability and potential for field use. It could be deployed on mobile terminals or on-site inspection systems to identify mural deterioration in real time and update databases synchronously, establishing an intelligent closed loop that spans data collection, automated recognition, and restoration decision-making [46]. Such an integrated workflow can substantially improve the efficiency and responsiveness of mural conservation, providing an intelligent supplement to traditional manual inspection and shifting heritage monitoring from static documentation toward dynamic management.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Li, W.; Xie, Q.; Shi, W.; Lin, H.; He, J.; Ao, J. Cultural rituality and heritage revitalization values of ancestral temple architecture painting art from the perspective of relational sociology theory. Herit. Sci. 2024, 12, 340.

2. Kosel, J.; Kavčič, M.; Legan, L.; Retko, K.; Ropret, P. Evaluating the xerophilic potential of moulds on selected egg tempera paints on glass and wooden supports using fluorescent microscopy. J. Cult. Herit. 2021, 52, 44–54.

3. Sun, P.; Hou, M.; Lyu, S.; Wang, W.; Li, S.; Mao, J.; Li, S. Enhancement and restoration of scratched murals based on hyperspectral imaging—A case study of murals in the Baoguang Hall of Qutan Temple, Qinghai, China. Sensors 2022, 22, 9780.

4. Li, L.; Zou, Q.; Zhang, F.; Yu, H.; Chen, L.; Song, C.; Huang, X.; Wang, X.; Li, Q. Line Drawing-Guided Progressive Inpainting for Mural Damage. ACM J. Comput. Cult. Herit. 2025, 18, 1–20.

5. Wei, X.; Fan, B.; Wang, Y.; Feng, Y.; Fu, L. Progressive enhancement and restoration for mural images under low-light and defective conditions based on multi-receptive field strategy. NPJ Herit. Sci. 2025, 13, 63.

6. Wang, Y.; Wu, X. Current progress on murals: Distribution, conservation and utilization. Herit. Sci. 2023, 11, 61.

7. Zhang, H.; Tang, C.A.; Guo, Q.; Wang, Y.; Xia, Y.; Tang, S.; Zhao, L. Analysis of cracking behavior of murals in Mogao Grottoes under environmental humidity change. J. Cult. Herit. 2024, 67, 183–193.

8. Abd El-Tawab, N.; Mahran, A.; Gad, K. Conservation of the Mural Paintings of the Greek Orthodox Church Dome of Saint George, Old Cairo-Egypt. Eur. Sci. J. 2014, 10, 324–354.

9. Rivas, T.; Alonso-Villar, E.M.; Pozo-Antonio, J.S. Forms and factors of deterioration of urban art murals under humid temperate climate; influence of environment and material properties. Eur. Phys. J. Plus 2022, 137, 1257.

10. Du, P. A comprehensive automatic labeling and repair strategy for cracks and peeling conditions of literary murals in ancient buildings. J. Intell. Fuzzy Syst. 2024, 46, 3557–3568.

11. Deng, X.; Yu, Y. Automatic calibration of crack and flaking diseases in ancient temple murals. Herit. Sci. 2022, 10, 163.

12. Cao, J.; Li, Y.; Cui, H.; Zhang, Q. Improved region growing algorithm for the calibration of flaking deterioration in ancient temple murals. Herit. Sci. 2018, 6, 67.

13. Chai, B.; Xiao, D.; Su, B.; Feng, W.; Yu, Z. Development and Application of a Multispectral Digital Recognition System for Mogao Caves’ Paint Colors. Dunhuang Stud. 2018, 3, 123–130.

14. Zhang, H.; Xu, D.; Luo, H.; Yang, B. Multi-scale mural restoration method based on edge reconstruction. J. Graph. 2021, 42, 590.

15. Yang, T.; Wang, S.; Pen, H.; Wang, Z. Automatic Recognition and Repair of Cracks in Mural Images Based on Improved SOM. J. Tianjin Univ. Sci. Technol. 2020, 53, 932–938.

16. Li, B.; Chu, X.; Lin, F.; Wu, F.; Jin, S.; Zhang, K. A highly efficient tunnel lining crack detection model based on Mini-Unet. Sci. Rep. 2024, 14, 28234.

17. Zhang, Z.; He, Y.; Hu, D.; Jin, Q.; Zhou, M.; Liu, Z.; Chen, H.; Wang, H.; Xiang, X. Algorithm for pixel-level concrete pavement crack segmentation based on an improved U-Net model. Sci. Rep. 2025, 15, 6553.

18. Chen, Y.; Xia, R.; Yang, K.; Zou, K. Dual degradation image inpainting method via adaptive feature fusion and U-net network. Appl. Soft Comput. 2025, 174, 113010.

19. Zeng, Y.; Gong, Y.; Zeng, X. Controllable digital restoration of ancient paintings using convolutional neural network and nearest neighbor. Pattern Recognit. Lett. 2020, 133, 158–164.

20. Rakhimol, V.; Maheswari, P.U. Restoration of ancient temple murals using cGAN and PConv networks. Comput. Graph. 2022, 109, 100–110.

21. Shen, J.; Liu, N.; Sun, H.; Li, D.; Zhang, Y.; Han, L. An algorithm based on lightweight semantic features for ancient mural element object detection. NPJ Herit. Sci. 2025, 13, 70.

22. Yuan, Q.; He, X.; Han, X.; Guo, H. Automatic recognition of craquelure and paint loss on polychrome paintings of the Palace Museum using improved U-Net. Herit. Sci. 2023, 11, 65.

23. Wu, L.; Zhang, L.; Shi, J.; Zhang, Y.; Wan, J. Damage detection of grotto murals based on lightweight neural network. Comput. Electr. Eng. 2022, 102, 108237.

24. Zou, Z.; Zhao, P.; Zhao, X. Virtual restoration of the colored paintings on weathered beams in the Forbidden City using multiple deep learning algorithms. Adv. Eng. Inform. 2021, 50, 101421.

25. Zhang, J.; Bai, S.; Zeng, X.; Liu, K.; Yuan, H. Supporting historic mural image inpainting by using coordinate attention aggregated transformations with U-Net-based discriminator. NPJ Herit. Sci. 2025, 13, 1–12.

26. Cui, J.; Tao, N.; Omer, A.M.; Zhang, C.; Zhang, Q.; Ma, Y.; Zhang, Z.; Yang, D.; Zhang, H.; Duan, Y.; et al. Attention-enhanced U-Net for automatic crack detection in ancient murals using optical pulsed thermography. J. Cult. Herit. 2024, 70, 111–119.

27. Zhang, Y.; Xu, Y.; Li, S.; Deng, F. Study on Digital Chromatography of Fahai Temple Frescoes in Ming Dynasty Based on Visualization. In Proceedings of the 2019 IEEE Fourth International Conference on Data Science in Cyberspace (DSC), Hangzhou, China, 23–25 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 581–586.

28. Li, T.T. Exploration on the Origin of Architectures Murals—Analysis on Geographical Features of Song, Liao and Jin Dynasty in Shanxi. Adv. Mater. Res. 2014, 838, 2870–2874.

29. Pen, H.; Wang, S.; Zhang, Z. Mural image shedding diseases inpainting algorithm based on structure priority. In Proceedings of the Third International Conference on Artificial Intelligence and Computer Engineering (ICAICE 2022), Wuhan, China, 4–6 November 2022; SPIE: Bellingham, WA, USA, 2023; Volume 12610, pp. 347–352.

30. Li, J.; Zhang, H.; Fan, Z.; He, X.; He, S.; Sun, M.; Ma, Y.; Fang, S.; Zhang, H.; Zhang, B. Investigation of the renewed diseases on murals at Mogao Grottoes. Herit. Sci. 2013, 1, 31.

31. Xu, Z.; Zhang, X.; Chen, W.; Liu, J.; Xu, T.; Wang, Z. MuralDiff: Diffusion for ancient murals restoration on large-scale pre-training. IEEE Trans. Emerg. Top. Comput. Intell. 2024, 8, 2169–2181.

32. Wu, M.; Jia, M.; Wang, J. TMCrack-Net: A U-shaped network with a feature pyramid and transformer for mural crack segmentation. Appl. Sci. 2022, 12, 10940.

33. Hu, X.; Naiel, M.A.; Wong, A.; Lamm, M.; Fieguth, P. RUNet: A robust UNet architecture for image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

34. Li, X.; Fang, X.; Yang, G.; Su, S.; Zhu, L.; Yu, Z. TransU2-Net: An effective medical image segmentation framework based on transformer and U2-Net. IEEE J. Transl. Eng. Health Med. 2023, 11, 441–450.

35. Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

36. Li, K.; Li, Z.; Fang, S. Siamese NestedUNet networks for change detection of high-resolution satellite image. In Proceedings of the 2020 1st International Conference on Control, Robotics and Intelligent System, Xiamen, China, 27–29 October 2020; pp. 42–48.

37. Trebing, K.; Stańczyk, T.; Mehrkanoon, S. SmaAt-UNet: Precipitation nowcasting using a small attention-UNet architecture. Pattern Recognit. Lett. 2021, 145, 178–186.

38. De Oca, M.A.M.; Stützle, T.; Birattari, M.; Dorigo, M. Frankenstein’s PSO: A composite particle swarm optimization algorithm. IEEE Trans. Evol. Comput. 2009, 13, 1120–1132.

39. Jain, M.; Saihjpal, V.; Singh, N.; Singh, S.B. An overview of variants and advancements of PSO algorithm. Appl. Sci. 2022, 12, 8392.

40. Yeghiazaryan, V.; Voiculescu, I. Family of boundary overlap metrics for the evaluation of medical image segmentation. J. Med. Imaging 2018, 5, 015006.

41. Sudre, C.H.; Li, W.; Vercauteren, T.; Ourselin, S.; Jorge Cardoso, M. Generalised dice overlap as a deep learning loss function for highly unbalanced segmentations. In International Workshop on Deep Learning in Medical Image Analysis; Springer International Publishing: Cham, Switzerland, 2017; pp. 240–248.

42. Huang, F.; Tan, E.L.; Yang, P.; Huang, S.; Ou-Yang, L.; Cao, J.; Wang, T.; Lei, B. Self-weighted adaptive structure learning for ASD diagnosis via multi-template multi-center representation. Med. Image Anal. 2020, 63, 101662.

43. Saifullah, S.; Dreżewski, R. PSO-UNet: Particle Swarm-Optimized U-Net Framework for Precise Multimodal Brain Tumor Segmentation. arXiv 2025, arXiv:2503.19152.

44. Xu, Z.; Yang, Y.; Fang, Q.; Chen, W.; Xu, T.; Liu, J.; Wang, Z. A comprehensive dataset for digital restoration of Dunhuang murals. Sci. Data 2024, 11, 955.

45. Zhan, J.; Meng, Y.; Zhang, L.; Li, K.; Yan, F. Research on computer vision in intelligent damage monitoring of heritage conservation: The case of Yungang Cave Paintings. NPJ Herit. Sci. 2025, 13, 50.

46. Mazzetto, S. Integrating emerging technologies with digital twins for heritage building conservation: An interdisciplinary approach with expert insights and bibliometric analysis. Heritage 2024, 7, 6432–6479.

| Name | Location | Period | Type |
|---|---|---|---|
| Fahai Temple | Shijingshan District, Beijing | Mid-Ming Dynasty | Imperially Commissioned |
| Zhaohua Temple | Huaian, Hebei Province | Mid-Ming Dynasty | Imperially Commissioned |
| Pilu Temple | Shijiazhuang, Hebei Province | Mid-Ming Dynasty | Local Folk-Built |
| Yong’an Temple | Hunyuan, Shanxi Province | Late Ming Dynasty | Local Folk-Built |
| Yunlin Temple | Yanggao, Shanxi Province | Late Ming Dynasty | Imperially Commissioned |
| Gongzhu Temple | Fanshi, Shanxi Province | Mid-Ming Dynasty | Local Folk-Built |
| Duofu Temple | Taiyuan, Shanxi Province | Mid-Ming Dynasty | Local Folk-Built |
| Foguang Temple | Wutai, Shanxi Province | Early Ming Dynasty | Local Folk-Built |
| Shengmu Temple | Fenyang, Shanxi Province | Mid-Ming Dynasty | Local Folk-Built |
| Zishou Temple | Lingshi, Shanxi Province | Mid-Ming Dynasty | Local Folk-Built |
| Guangsheng Temple | Hongtong, Shanxi Province | Early Ming Dynasty | Local Folk-Built |
| Jiyi Temple | Xinjiang, Shanxi Province | Mid-Ming Dynasty | Local Folk-Built |

| Model | Structure Features | Parameter Size | Key Advantages | Applicable Scenarios |
|---|---|---|---|---|
| UNet | Symmetric encoder–decoder structure with skip connections | Medium | Good preservation of edge information and a clear structure | Medium-scale pigment detachment regions in murals |
| U2-NetP | Nested residual U-blocks (RSU) and lightweight design | Small | Strong multi-scale capability and good edge detection performance | Regions with blurred detachment boundaries and fine textures |
| SegNet | Upsampling with max-pooling indices and a compact structure | Medium | Fewer parameters and accurate recovery of spatial positions during upsampling | Lightweight deployment or fast-inference environments |
| NestedUNet | Multi-layer nested skip connections and dense feature fusion | Large | Strong feature representation and good handling of complex structures | Small-scale or multi-morphological pigment detachment regions |
| SmaAt-UNet | Attention mechanism and depthwise separable convolution | Tiny | Few parameters yet excellent performance, with strong edge preservation | Mural detachment regions that are semantically sparse but structurally critical |
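The SegNet entry's upsampling with max-pooling indices means the encoder remembers where each pooled maximum came from, and the decoder writes values back to exactly those positions (zeros elsewhere) instead of learning an upsampling. A NumPy sketch for 2 × 2 pooling on a single-channel map with even height and width follows; the function names are hypothetical, and in PyTorch the equivalent pair would be `nn.MaxPool2d(2, return_indices=True)` followed by `nn.MaxUnpool2d(2)`.

```python
import numpy as np


def max_pool_with_indices(x):
    """2 x 2 max pooling (stride 2) on an (H, W) map with even H and W.

    Returns the pooled map and, per pooled cell, the position (0..3, row-major
    within its 2 x 2 window) of the maximum.
    """
    h, w = x.shape
    # windows[a, b] holds the 4 values of the window at output position (a, b)
    windows = x.reshape(h // 2, 2, w // 2, 2).transpose(0, 2, 1, 3).reshape(h // 2, w // 2, 4)
    idx = windows.argmax(axis=-1)
    pooled = np.take_along_axis(windows, idx[..., None], axis=-1)[..., 0]
    return pooled, idx


def max_unpool(pooled, idx):
    """Place each pooled value back at its remembered position; other pixels are 0."""
    hp, wp = pooled.shape
    windows = np.zeros((hp, wp, 4), dtype=pooled.dtype)
    np.put_along_axis(windows, idx[..., None], pooled[..., None], axis=-1)
    return windows.reshape(hp, wp, 2, 2).transpose(0, 2, 1, 3).reshape(hp * 2, wp * 2)


fmap = np.array([[1, 5, 2, 0],
                 [3, 4, 8, 1],
                 [0, 2, 7, 6],
                 [9, 1, 3, 4]], dtype=float)
pooled, idx = max_pool_with_indices(fmap)
print(max_unpool(pooled, idx))
```

Only the indices need to be stored, not full encoder feature maps as in UNet's skip connections, which is the source of SegNet's memory savings; the price is a sparse upsampled map that later convolutions must densify.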

| Model | IoU (Mean ± 95% CI) | Dice (Mean ± 95% CI) | MAE (Mean ± 95% CI) | mPA (Mean ± 95% CI) |
|---|---|---|---|---|
| UNet | 0.6418 ± 0.0125 | 0.7263 ± 0.0151 | 0.0608 ± 0.0032 | 0.9287 ± 0.0084 |
| U2-NetP | 0.3527 ± 0.0213 | 0.4392 ± 0.0287 | 0.4980 ± 0.0315 | 0.6315 ± 0.0246 |
| SegNet | 0.3672 ± 0.0198 | 0.4551 ± 0.0264 | 0.4796 ± 0.0293 | 0.6683 ± 0.0221 |
| NestedUNet | 0.4368 ± 0.0176 | 0.4795 ± 0.0239 | 0.4403 ± 0.0278 | 0.8258 ± 0.0195 |
| SmaAt-UNet | 0.6633 ± 0.0281 | 0.7246 ± 0.0253 | 0.0592 ± 0.0047 | 0.9325 ± 0.0168 |
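The table reports each metric as the mean over the five seeded runs ± a 95% confidence interval. The text does not say how the interval was computed; a common choice for five runs, assumed here, is a Student-t interval with 4 degrees of freedom (critical value ≈ 2.776) whose half-width is t·s/√n, where s is the sample standard deviation. The sketch also seeds Python's and NumPy's generators for each run; a PyTorch run would additionally call `torch.manual_seed`. The helper names and the per-seed IoU values below are hypothetical and do not come from the study.

```python
import math
import random
import statistics

import numpy as np

SEEDS = [42, 123, 456, 789, 1011]  # seeds listed in the text

# Two-sided 95% Student-t critical values, indexed by degrees of freedom
T_CRIT_95 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571}


def seed_everything(seed):
    """Seed Python's and NumPy's global RNGs (PyTorch would also need torch.manual_seed)."""
    random.seed(seed)
    np.random.seed(seed)


def mean_ci95(values):
    """Return (mean, half-width of the 95% t-interval) for a small sample."""
    n = len(values)
    mean = statistics.fmean(values)
    half_width = T_CRIT_95[n - 1] * statistics.stdev(values) / math.sqrt(n)
    return mean, half_width


# Hypothetical per-seed test IoU values, for illustration only
per_seed_iou = [0.66, 0.67, 0.65, 0.68, 0.66]
mean, half = mean_ci95(per_seed_iou)
print(f"IoU = {mean:.4f} ± {half:.4f}")
```

With only five runs, the t critical value (2.776) is noticeably larger than the normal-approximation 1.96, so a t-based interval is the more conservative of the two.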

| Model | IoU (Mean ± 95% CI) | Dice (Mean ± 95% CI) | MAE (Mean ± 95% CI) | mPA (Mean ± 95% CI) |
|---|---|---|---|---|
| SmaAt-UNet | 0.6633 ± 0.0281 | 0.7246 ± 0.0253 | 0.0592 ± 0.0047 | 0.9325 ± 0.0168 |
| PSO-SmaAt-UNet | 0.7352 ± 0.0295 | 0.7936 ± 0.0238 | 0.0455 ± 0.0039 | 0.9702 ± 0.0125 |
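The absolute and relative changes between the two rows follow directly from the reported means: IoU rises by 0.0719 (about 10.8% relative), Dice by 0.0690 (about 9.5%), mPA by 0.0377 (about 4.0%), and MAE falls by 0.0137 (about 23.1%; lower MAE is better). The short script below only re-derives this arithmetic from the table and adds no new results.

```python
# Mean values copied from the table (95% CI half-widths omitted)
baseline = {"IoU": 0.6633, "Dice": 0.7246, "MAE": 0.0592, "mPA": 0.9325}   # SmaAt-UNet
optimized = {"IoU": 0.7352, "Dice": 0.7936, "MAE": 0.0455, "mPA": 0.9702}  # PSO-SmaAt-UNet


def changes(before, after):
    """Absolute change and relative change (%) of each metric."""
    result = {}
    for name, old in before.items():
        diff = after[name] - old
        result[name] = (round(diff, 4), round(100 * diff / old, 2))
    return result


for metric, (absolute, relative) in changes(baseline, optimized).items():
    print(f"{metric}: {absolute:+.4f} ({relative:+.2f}%)")
```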

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Luo, C.; Shang, Z.; Zhang, Y.; Pan, H.; Nuermaimaiti, A.; Wang, C.; Li, N.; Zhang, B. SmaAt-UNet Optimized by Particle Swarm Optimization (PSO): A Study on the Identification of Detachment Diseases in Ming Dynasty Temple Mural Paintings in North China. Appl. Sci. 2025, 15, 12295. https://doi.org/10.3390/app152212295


Chicago/Turabian Style: Luo, Chuanwen, Zikun Shang, Yan Zhang, Hao Pan, Abdusalam Nuermaimaiti, Chenlong Wang, Ning Li, and Bo Zhang. 2025. "SmaAt-UNet Optimized by Particle Swarm Optimization (PSO): A Study on the Identification of Detachment Diseases in Ming Dynasty Temple Mural Paintings in North China" Applied Sciences 15, no. 22: 12295. https://doi.org/10.3390/app152212295
