Computer Vision Methods for Vehicle Detection and Tracking: A Systematic Review and Meta-Analysis

Abstract

1. Introduction

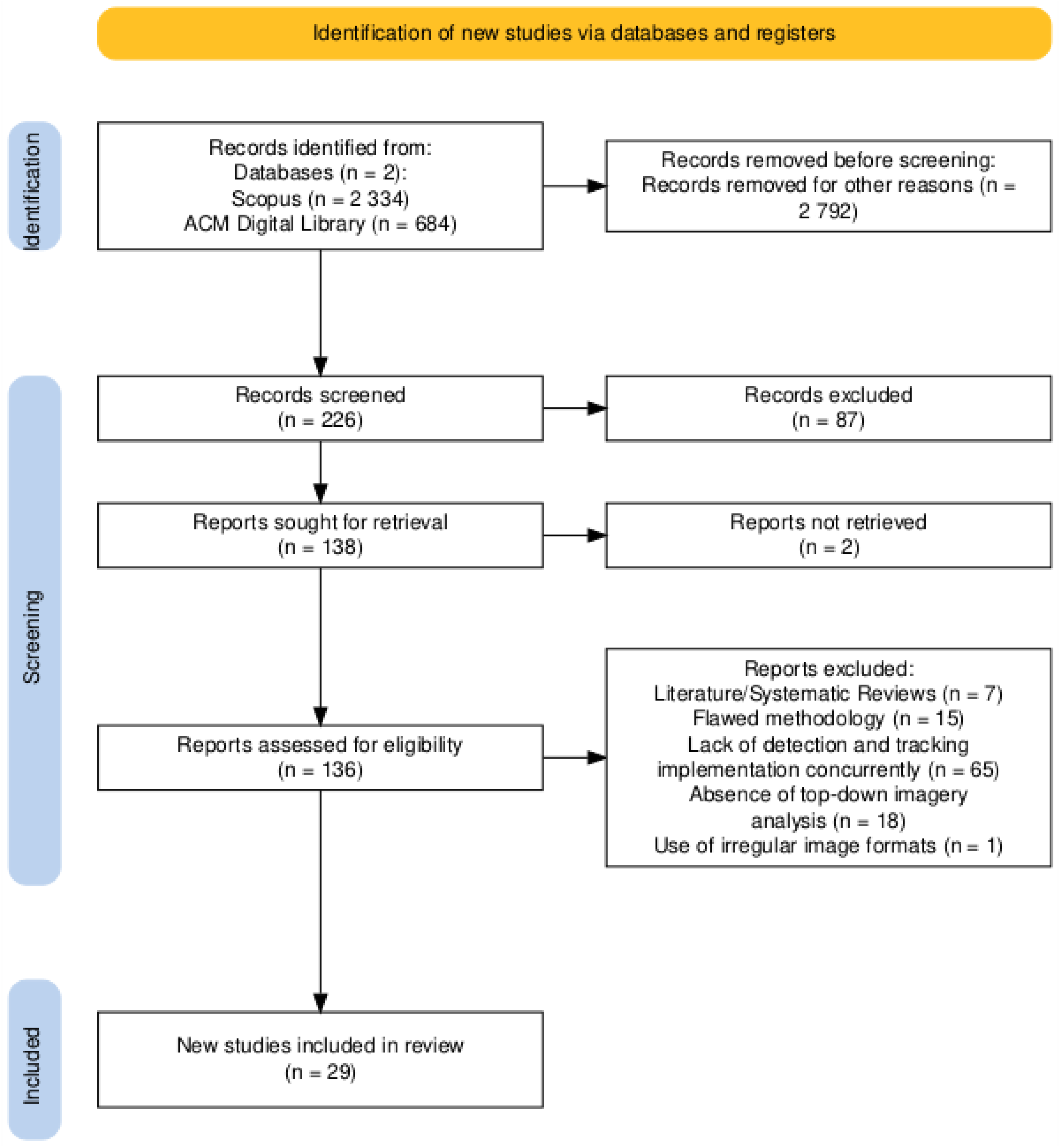

2. Methodology

2.1. Research Questions

2.2. Search Strategy

2.2.1. Sources

2.2.2. Search Query Design

2.2.3. Inclusion and Exclusion Criteria

2.2.4. Data Extraction

3. Results and Discussion

3.1. Performance Metrics

3.1.1. Detection-Related Metrics

- Precision

- Recall

- Precision–Recall Curve

- Average Precision (AP) and Average Recall (AR)

- Mean Average Precision (mAP) and Mean Average Recall (mAR)

- F1-Score

- Intersection over Union (IoU)

- Jaccard Index
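
As a minimal sketch of how the detection metrics listed above relate to one another, the following Python snippet computes IoU (equivalently, the Jaccard index) for two axis-aligned boxes and derives precision, recall and F1-score from match counts. The `(x_min, y_min, x_max, y_max)` box layout and the function names are illustrative assumptions, not taken from any of the reviewed articles.

```python
from typing import Tuple

# Assumed box layout: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over Union (Jaccard index) of two axis-aligned boxes."""
    # Width and height of the overlap, clamped at zero for disjoint boxes.
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall and F1-score from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1
```

In a typical evaluation, a detection counts as a true positive when its IoU with a ground-truth box meets a chosen threshold, which is what the `@0.5` in `mAP@0.5` denotes.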

3.1.2. Tracking-Related Metrics

- Multiple Object Tracking Accuracy (MOTA)

- Multiple Object Tracking Precision (MOTP)

- Mostly Tracked (MT) and Mostly Lost (ML)

- Identity Switches (IDSW)

- Fragmentation (FM)

- Identity F1-Score (IDF1)

- Precision–Recall Metrics (PR-MOTA, PR-MOTP, PR-MT, PR-ML, PR-IDSW, PR-FM and PR-IDF1)

- Detection Accuracy (DetA)

- Association Accuracy (AssA)

- Higher-Order Tracking Accuracy (HOTA)

- Detection Recall (DetRe)

- Detection Precision (DetPr)

- Association Recall (AssRe)

- Association Precision (AssPr)

- Localisation Accuracy (LocA)
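
The tracking metrics above can be illustrated with a short sketch under the commonly used definitions: MOTA as one minus the ratio of summed errors (false negatives, false positives and identity switches) to the number of ground-truth objects, and HOTA at a single localisation threshold as the geometric mean of DetA and AssA. The function names and the count-based interface are assumptions made for illustration only.

```python
import math


def mota(fn: int, fp: int, idsw: int, num_gt: int) -> float:
    """Multiple Object Tracking Accuracy from error counts summed over all frames."""
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    return 1.0 - (fn + fp + idsw) / num_gt


def det_a(tp: int, fn: int, fp: int) -> float:
    """Detection accuracy at one localisation threshold: TP / (TP + FN + FP)."""
    denom = tp + fn + fp
    return tp / denom if denom else 0.0


def hota_at_threshold(det_acc: float, ass_acc: float) -> float:
    """HOTA at one localisation threshold: geometric mean of DetA and AssA."""
    return math.sqrt(det_acc * ass_acc)
```

The reported HOTA score is normally the average of the per-threshold values over a range of localisation thresholds, so it need not equal the geometric mean of the averaged DetA and AssA. For instance, a row in Appendix A reports DetA 40.2% and AssA 56.41% with HOTA 47.4%, whereas `hota_at_threshold(0.402, 0.5641)` gives roughly 0.476.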

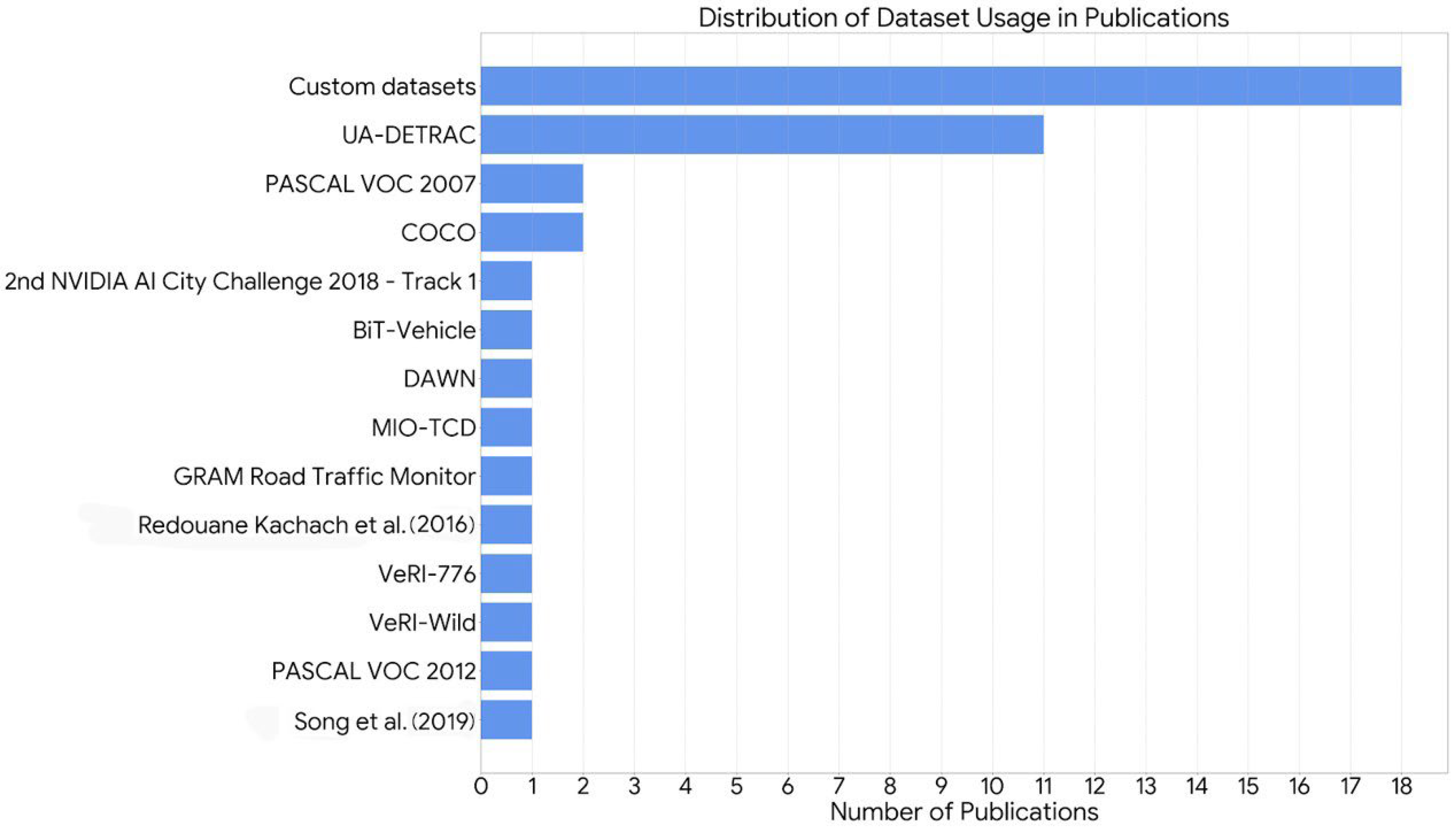

3.2. Datasets

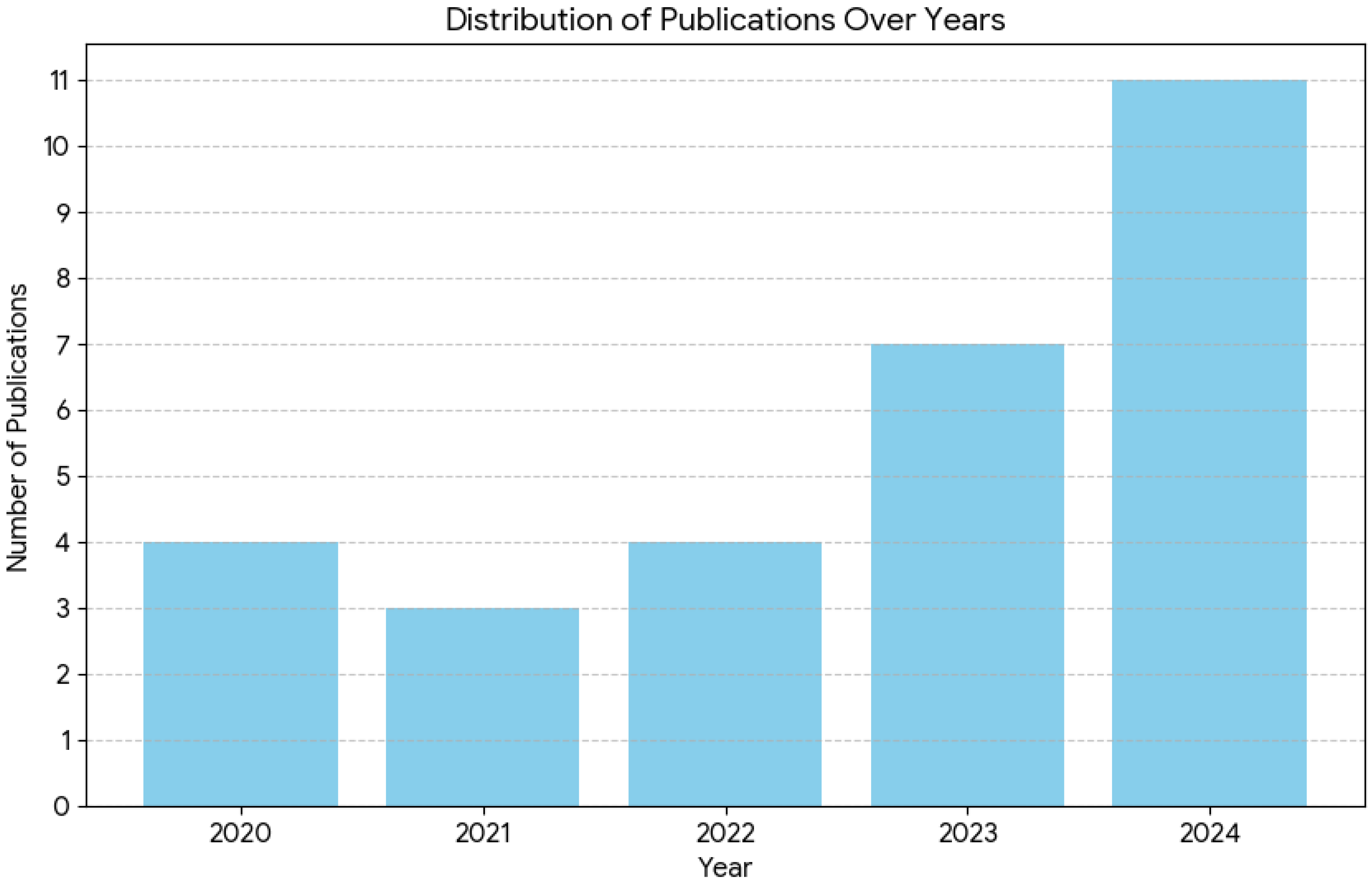

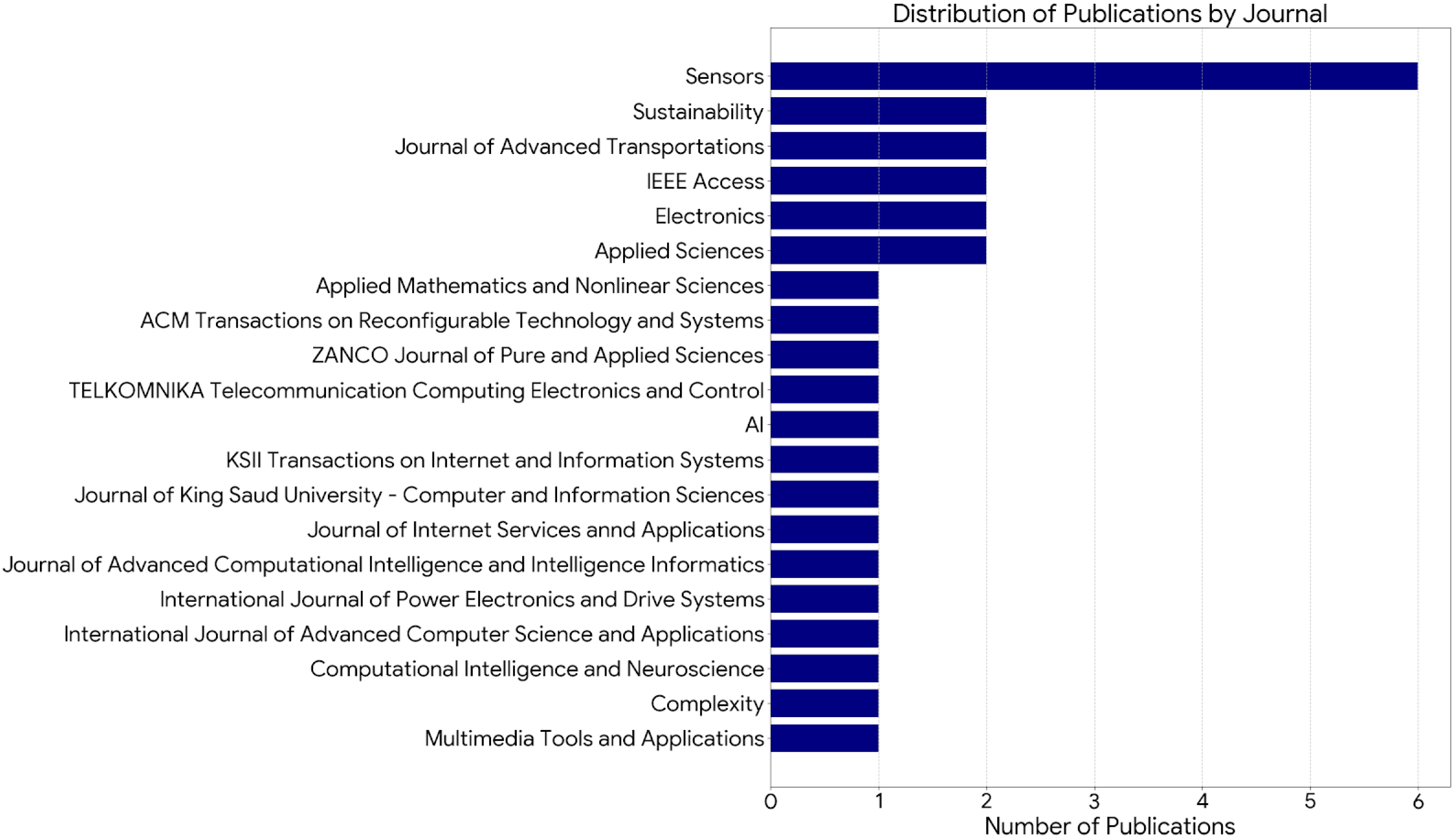

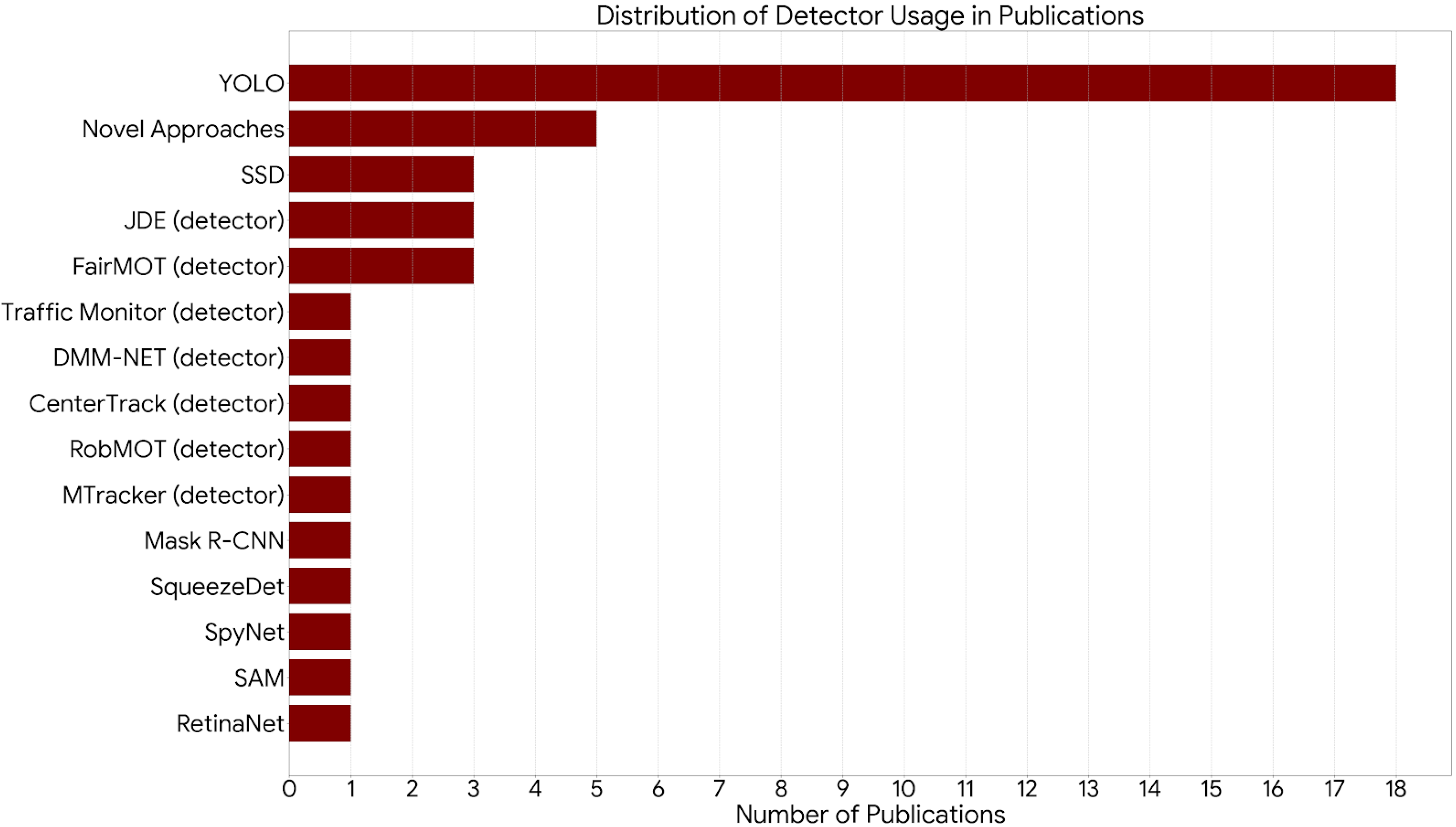

3.3. Article’s Analysis

3.4. Meta-Analysis

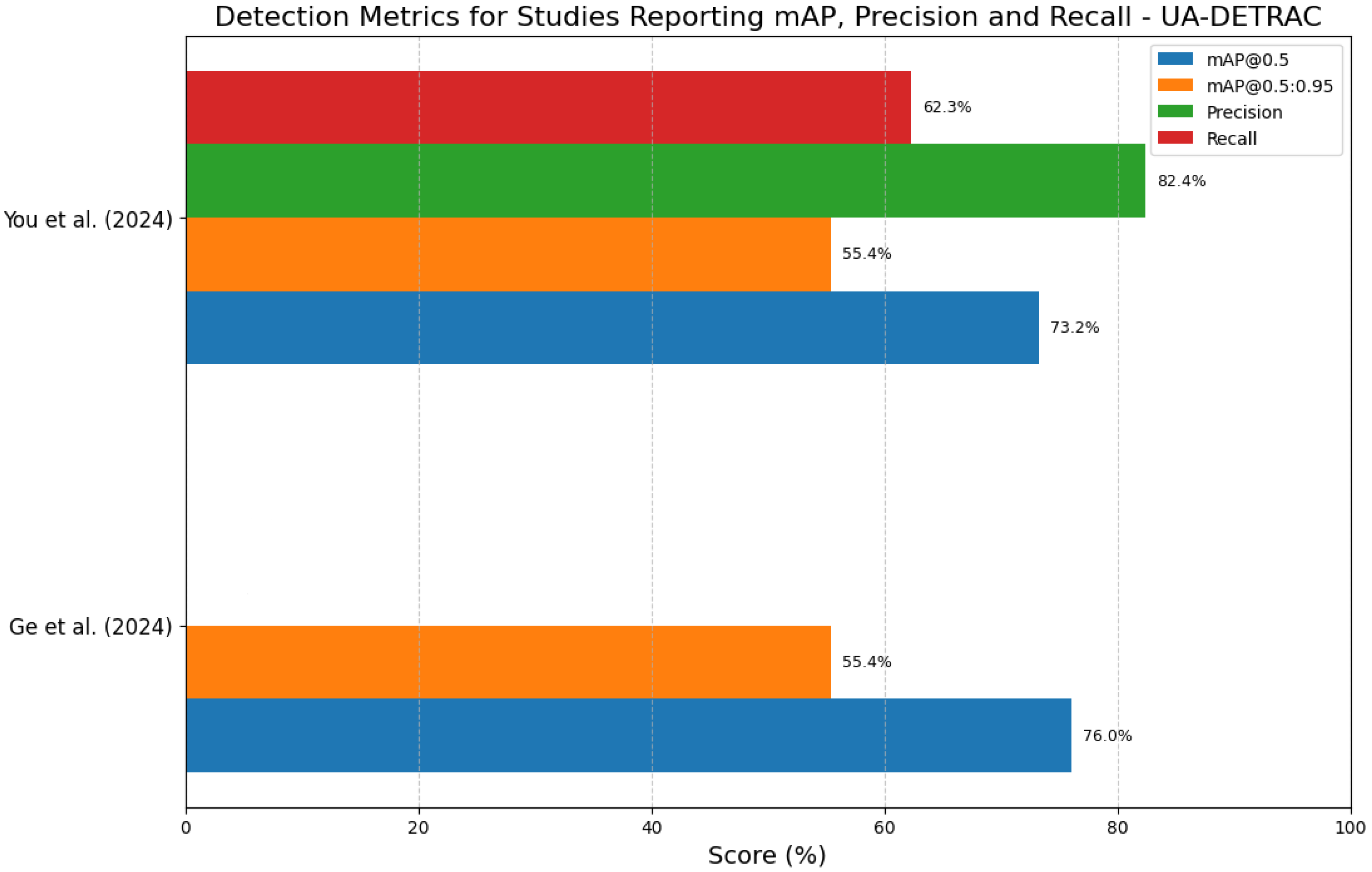

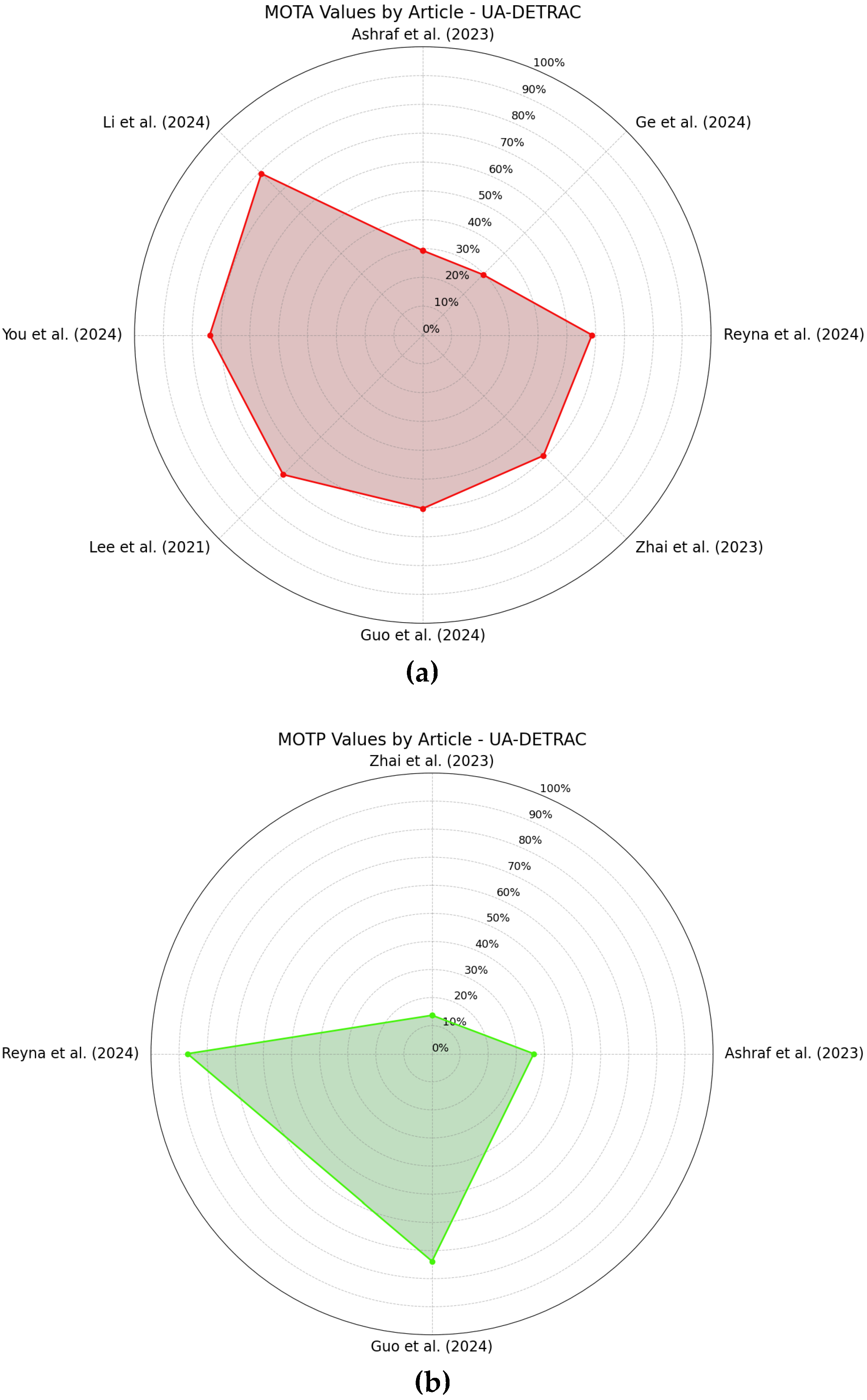

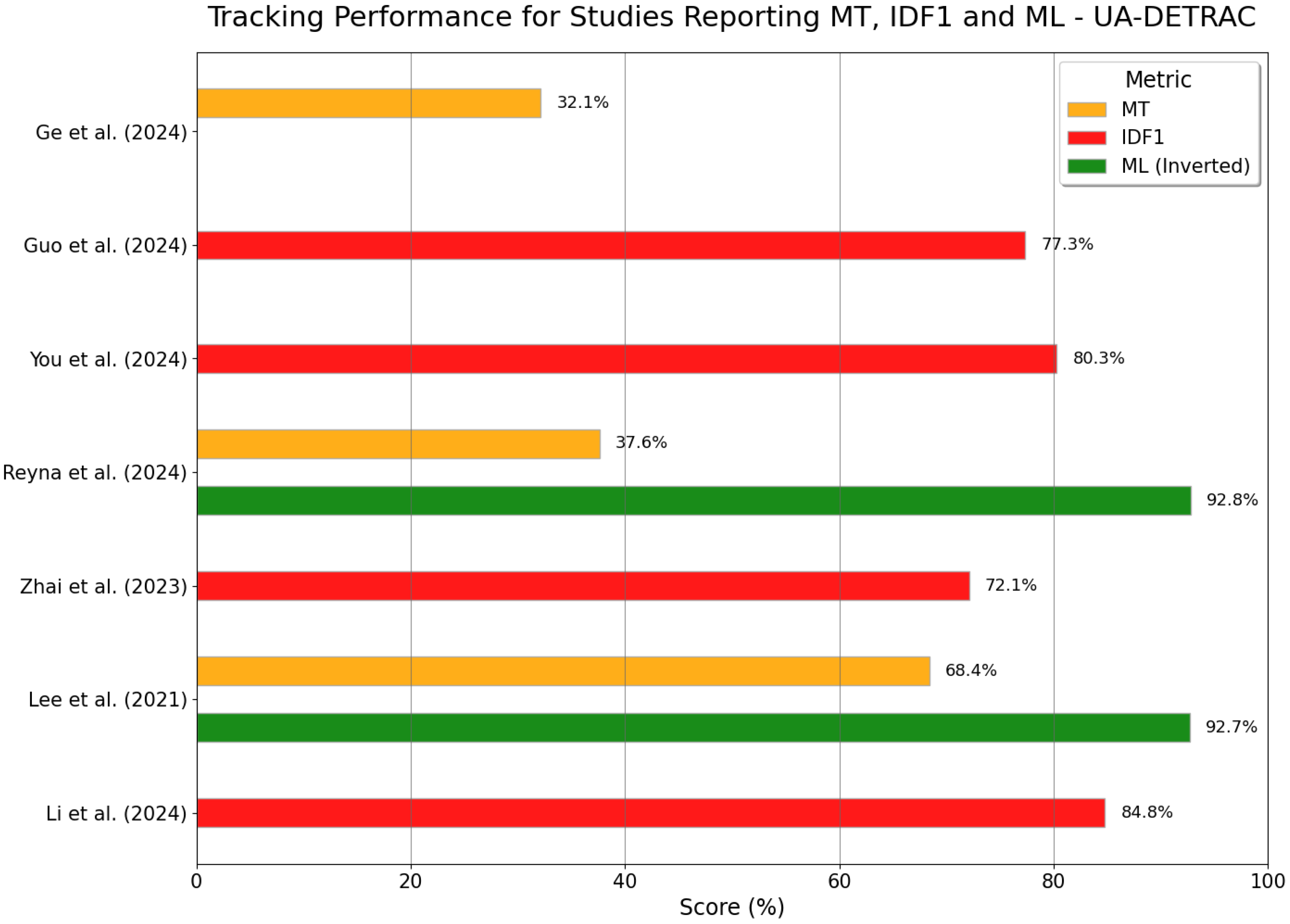

3.4.1. UA-DETRAC Analysis

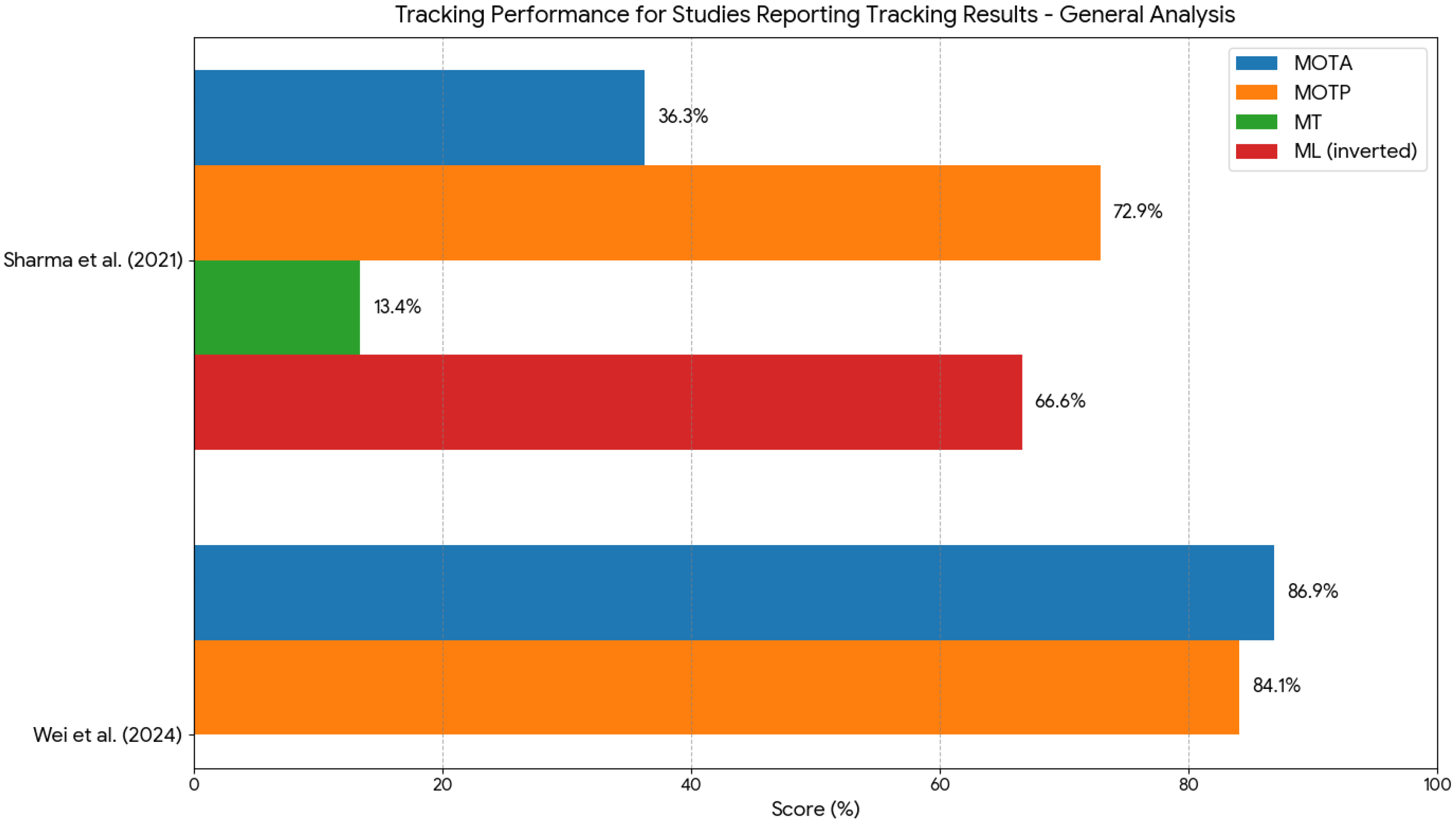

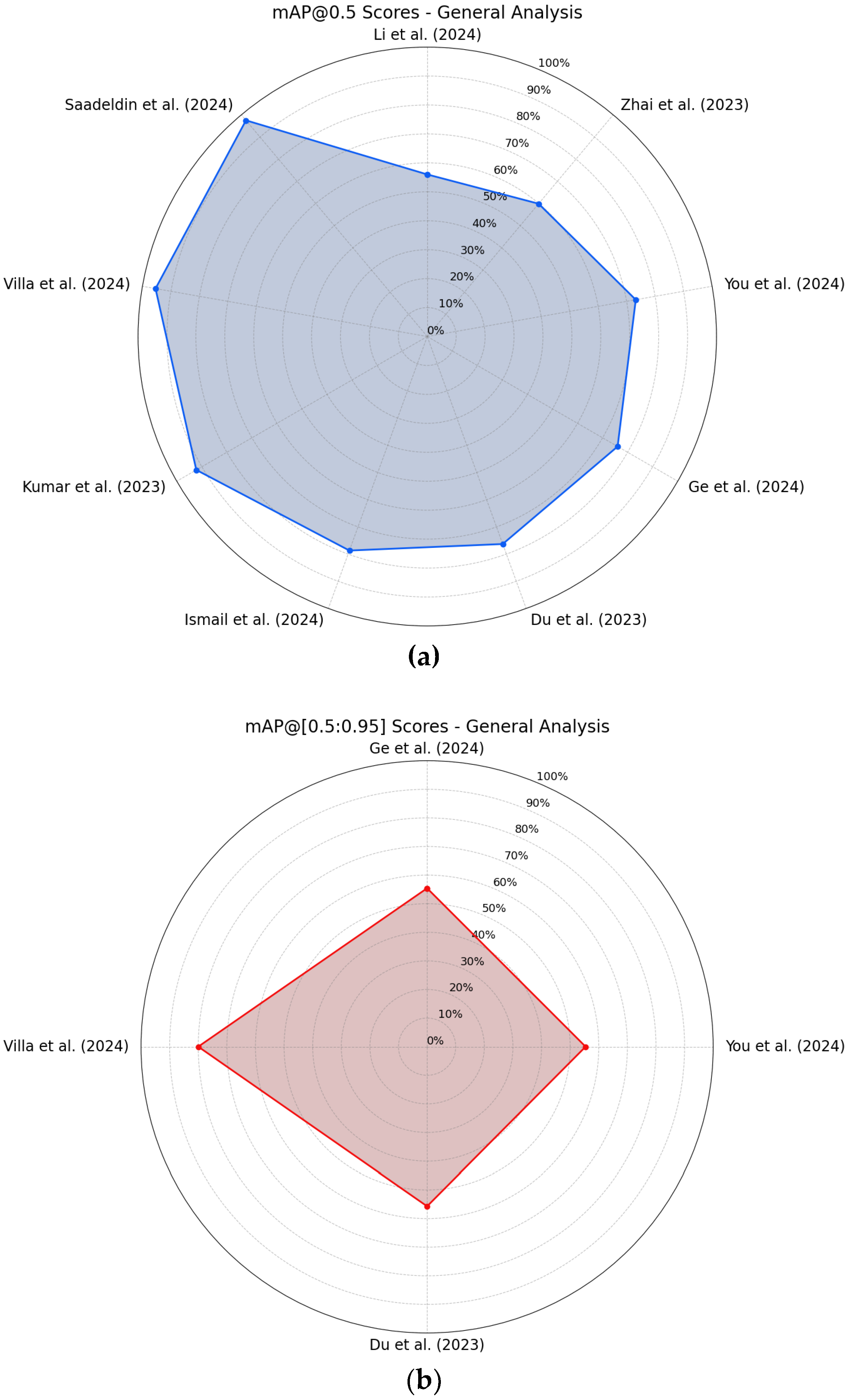

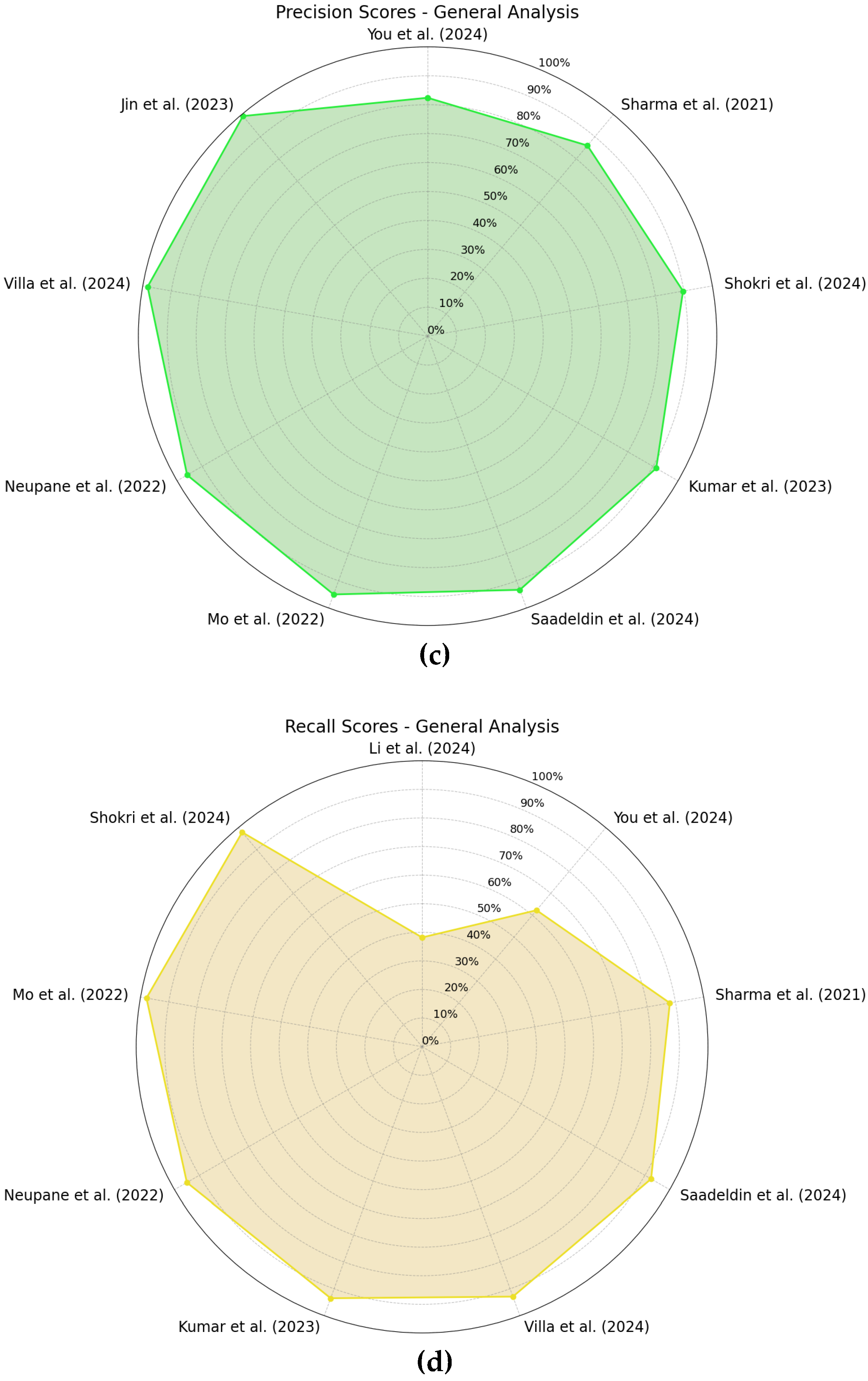

3.4.2. General Analysis

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Ref. | Testing Hardware Platform | Training/Tuning Dataset | Testing Dataset | Detection Method | Tracking Method | Results |

|---|---|---|---|---|---|---|

| [43] | CPU: 11th Gen Intel(R) Core (TM) i7-11800H @ 2.30 GHz RAM: 16 GB GPU: NVIDIA GeForce RTX3070 | IStock videos + Open-source dataset by Song et al. 2019 [31] + Custom Dataset | Custom Dataset | YOLOv5m6 | DeepSORT | Average Counting Accuracy: 95% mAP@0.5: 78.7% |

| [44] | CPU: 15 vCPU Intel(R) Xeon(R) Platinum 8358P CPU @ 2.60 GHz. GPU: NVIDIA RTX 3090 | UA-DETRAC + VeRI dataset | UA-DETRAC | YOLOv5 | DeepSORT | mAP@0.5: 70.3% mAP@[0.5:0.95]: 51.8% MOTA: 23.1% MT: 27.4% IDSW: 130 |

| DeepSORT + Input Layer Resizing + Removal of the Second Convolutional Layer + Addition of a New Residual Convolutional Layer + Replacement of Fully Connected Layers to an Average Pooling Layer | mAP@0.5: 70.3% mAP@[0.5:0.95]: 51.8% MOTA: 23.9% MT: 27.5% IDSW: 110 | |||||

| YOLOv5 + Better Bounding Box Alignment (WIoU) + Multi-scale Feature Extractor (C3_Res2) + Multi-Head Self-Attention Mechanism (MHSA) + Attention Mechanism (SGE) | DeepSORT | mAP@0.5: 76% mAP@[0.5:0.95]: 55.4% MOTA: 28.8% MT: 31.6% IDSW: 100 | ||||

| DeepSORT + Input Layer Resizing + Removal of the Second Convolutional Layer + Addition of a New Residual Convolutional Layer + Replacement of Fully Connected Layers to an Average Pooling Layer | mAP@0.5: 76% mAP@[0.5:0.95]: 55.4% MOTA: 29.6% MT: 32.1% IDSW: 94 | |||||

| [45] | GPU: GeForce RTX 3070 | Redouane Kachach dataset + GRAM Road-Traffic Monitoring + Open Online Cameras | Redouane Kachach dataset + GRAM Road-Traffic Monitoring + Open Online Cameras—good conditions | YOLOv3 | TrafficSensor (Spatial Proximity Tracker + Kanade–Lucas–Tomasi Feature Tracker) | mAP: 89.3% mAR: 90.1% |

| DeepSORT | mAP: 81.6% mAR: 86.9% | |||||

| YOLOv4 | TrafficSensor (Spatial Proximity Tracker + Kanade–Lucas–Tomasi Feature Tracker) | mAP: 90.6% mAR: 96.7% | ||||

| Redouane Kachach dataset + GRAM Road-Traffic Monitoring + Open Online Cameras—bad weather | YOLOv3 | TrafficSensor (Spatial Proximity Tracker + Kanade–Lucas–Tomasi Feature Tracker) | mAP: 99% mAR: 99.3% | |||

| DeepSORT | mAP: 98% mAR: 98.2% | |||||

| YOLOv4 | TrafficSensor (Spatial Proximity Tracker + Kanade–Lucas–Tomasi Feature Tracker) | mAP: 99% mAR: 99.5% | ||||

| Redouane Kachach dataset + GRAM Road-Traffic Monitoring + Open Online Cameras—poor quality | YOLOv3 | TrafficSensor (Spatial Proximity Tracker + Kanade–Lucas–Tomasi Feature Tracker) | mAP: 94.4% mAR: 94.4% | |||

| DeepSORT | mAP: 88.5% mAR: 89.1% | |||||

| YOLOv4 | TrafficSensor (Spatial Proximity Tracker + Kanade–Lucas–Tomasi Feature Tracker) | mAP: 99% mAR: 99.1% | ||||

| [46] | CPU: Intel Core i5-9400F 2.90 GHz RAM: 16 GB | MPI Sintel + Custom Dataset | Custom Dataset | YOLOv3 | DeepSORT | Precision: 88.6% Recall: 92.1% Rt: 82.3% Rf: 7.1% Ra: 10.6% |

| [47] | NVIDIA Jetson Nano | Highway Vehicle Dataset + Miovision Traffic Camera (MIO-TCD) Dataset + Custom Dataset | Highway Vehicle Dataset + Miovision Traffic Camera (MIO-TCD) Dataset + Custom Dataset | YOLOv8-Nano + Small Object Detection Layer + CBAM | DeepSORT | mAP@0.5: 97.5% Precision: 93.3% Recall: 92.5% Average Counting Accuracy: 96.8% |

| [48] | Not described | DRR of Thailand’s Surveillance Camera Images + Custom Dataset | Custom Dataset | YOLOv3 | Centroid-Based Tracker | Precision: 88% Recall: 92% Overall Accuracy: 90% |

| YOLOv3-Tiny | Centroid-Based Tracker | Precision: 88% Recall: 92% Overall Accuracy: 90% | ||||

| YOLOv5-Small | Centroid-Based Tracker | Precision: 85% Recall: 82% Overall Accuracy: 83% | ||||

| YOLOv5-Large | Centroid-Based Tracker | Precision: 96% Recall: 95% Overall Accuracy: 95% | ||||

| Custom Dataset—Noisy | YOLOv5-Large | Centroid-Based Tracker | Precision: 84.1% Recall: 78.8% Overall Accuracy: 81.4% | |||

| Custom Dataset—Clear | YOLOv5-Large | Centroid-Based Tracker | Precision: 94% Recall: 94% Overall Accuracy: 94% | |||

| [49] | CPU: AMD Ryzen 9 5950X GPU: NVIDIA GeForce RTX3090TI | UA-DETRAC + VeRI-776 Dataset | UA-DETRAC | YOLOv8 | DeepSORT | MOTA: 55.6% MOTP: 70.2% IDF1: 71.4% IDSW: 476 |

| YOLOv8 + FasterNet Backbone + Small Target Detection Head + SimAM Attention Mechanism + WIOUv1 Bounding Box Loss | DeepSORT | MOTA: 57.6% MOTP: 72.6% IDF1: 73.2% IDSW: 455 | ||||

| DeepSORT + OS-NET for Appearance Feature Extraction | MOTA: 58.8% MOTP: 73.2% IDF1: 73.8% IDSW: 421 | |||||

| DeepSORT + GIoU Metric | MOTA: 58.6% MOTP: 71.5% IDF1: 75.4% IDSW: 414 | |||||

| DeepSORT + OS-NET for Appearance Feature Extraction + GIoU Metric | MOTA: 60.2% MOTP: 73.8% IDF1: 77.3% IDSW: 406 | |||||

| [50] | GPU: NVIDIA GeForce RTX 4090 CPU: Intel Core i9-9900K @3.60 GHz | UA-DETRAC | UA-DETRAC | YOLOv8-Small | ByteTrack | Precision: 71.8% Recall: 58.6% mAP@0.5: 64.2% mAP@[0.5:0.95]: 46.6% mIDF1: 76.9% IDSW: 855 mMOTA: 67.2% |

| YOLOv8-Small + Context Guided Module + Dilated Reparam Block + Soft-NMS | ByteTrack | Precision: 82.4% Recall: 62.3% mAP@0.5: 73.2% mAP@[0.5:0.95]: 55.4% mIDF1: 78.2% IDSW: 785 mMOTA: 70.6% | ||||

| ByteTrack + Improved Kalman Filter | Precision: 82.4% Recall: 62.3% mAP@0.5: 73.2% mAP@[0.5:0.95]: 55.4% mIDF1: 79.7% IDSW: 717 mMOTA: 73.1% | |||||

| ByteTrack + Improved Kalman Filter + Gaussian Smooth Interpolation | Precision: 82.4% Recall: 62.3% mAP@0.5: 73.2% mAP@[0.5:0.95]: 55.4% mIDF1: 80.3% IDSW: 530 mMOTA: 73.9% | |||||

| [51] | CPU: 8-core Intel Core i7 RAM: 16 GB GPU: NVIDIA RTX-3060 card with 12 GB of video memory | UA-DETRAC | UA-DETRAC | YOLOv7-W6 | MEDAVET (Bipartite Graphs Integration + Convex Hull Filtering + QuadTree for Occlusion Handling) | MOTA: 58.7% MOTP: 87% IDSW: 636 MT: 37.6% ML: 7.2% |

| [52] | Nvidia Jetson Orin AGX 64 GB Developer Kit | Custom dataset | Custom dataset | YOLOv5-Nano | DeepSORT | Precision: 98.3% Recall: 92.9% mAP@0.5: 95.4% mAP@[0.5:0.95]: 80% |

| DAWN—Snow | YOLOv5-Nano | DeepSORT | mAP@[0.5:0.95]: 33.2% | |||

| DAWN—Fog | YOLOv5-Nano | DeepSORT | mAP@[0.5:0.95]: 40.4% | |||

| DAWN—Rain | YOLOv5-Nano | DeepSORT | mAP@[0.5:0.95]: 39.7% | |||

| [53] | Not described | Custom dataset | Custom dataset | YOLOv4 + Distance IoU | IoU-based Tracking + Kalman Filter + OSNet | Accuracy: 97.7% Precision: 99.3% |

| [54] | Not described | Custom Dataset | PASCAL VOC 2007 | YOLOv5-Small | DeepSORT | Precision: 65.7% Recall: 83.4% mAP: 81.2% |

| Custom Dataset | YOLOv5-Small | DeepSORT | Precision: 91.3% Recall: 93.5% mAP@0.5: 92.2% | |||

| [55] | CPU: Intel(R) Core(TM) i7-13620H 2.40 GHz RAM: 48 GB GPU: NVIDIA GeForce RTX 4060. CUDA cores: 3072. Max-Q Technology 8.188 MB GDDR6 | Custom Dataset (including COCO) | Custom Dataset—Sunny | YOLOR CSP X | DeepSORT | Average Accuracy@0.35: 79.9% Average Accuracy@0.55: 75% Average Accuracy@0.75: 66.3% |

| YOLOR CSP | DeepSORT | Average Accuracy@0.35: 83.8% Average Accuracy@0.55: 76.8% Average Accuracy@0.75: 54.7% | ||||

| YOLOR P6 | DeepSORT | Average Accuracy@0.35: 75% Average Accuracy@0.55: 68.2% Average Accuracy@0.75: 65.4% | ||||

| Custom Dataset—Cloudy | YOLOR CSP X | DeepSORT | Average Accuracy@0.35: 66.3% Average Accuracy@0.55: 75.6% Average Accuracy@0.75: 52.9% | |||

| YOLOR CSP | DeepSORT | Average Accuracy@0.35: 78.9% Average Accuracy@0.55: 70.2% Average Accuracy@0.75: 47.6% | ||||

| YOLOR P6 | DeepSORT | Average Accuracy@0.35: 56.7% Average Accuracy@0.55: 77.5% Average Accuracy@0.75: 46.7% | ||||

| Custom Dataset—Sunny and Class Dropping | YOLOR CSP | DeepSORT | Average Accuracy@0.35: 91% Total Accuracy@0.35: 99.3% | |||

| Custom Dataset—Cloudy and Class Dropping | YOLOR CSP | DeepSORT | Average Accuracy@0.35: 93.6% Total Accuracy@0.35: 98.5% | |||

| Custom Dataset—No Sunlight and Class Dropping | YOLOR CSP | DeepSORT | Average Accuracy@0.35: 89.6% Total Accuracy@0.35: 98% | |||

| [56] | Not described | No training | BiT-Vehicle Dataset | YOLOv4 | Kalman Filter + Expanded State Vector + Larger State Matrix + Improved Initialization + Aspect Ratio Handling | mAP: 94.4% |

| YOLOv4 + Enhanced Feature Fusion (SPP + PANet) + Deep-Separable Convolutions + Improved Loss Function | Kalman Filter + Expanded State Vector + Larger State Matrix + Improved Initialization + Aspect Ratio Handling | mAP: 95.7% | ||||

| Custom Dataset | YOLOv4 | Kalman Filter + Expanded State Vector + Larger State Matrix + Improved Initialization + Aspect Ratio Handling | MOTA: 85.9% MOTP: 83.8% IDS: 45 | |||

| YOLOv4 + Enhanced Feature Fusion (SPP + PANet) + Deep-Separable Convolutions + Improved Loss Function | Kalman Filter + Expanded State Vector + Larger State Matrix + Improved Initialization + Aspect Ratio Handling | MOTA: 86.9% MOTP: 84.1% IDS: 36 | ||||

| Custom Dataset—Daytime and sunny | YOLOv4 + Enhanced Feature Fusion (SPP + PANet) + Deep-Separable Convolutions + Improved Loss Function | Kalman Filter + Expanded State Vector + Larger State Matrix + Improved Initialization + Aspect Ratio Handling | Correct Identification Rate: 100% | |||

| Custom Dataset—Daytime and cloudy | YOLOv4 + Enhanced Feature Fusion (SPP + PANet) + Deep-Separable Convolutions + Improved Loss Function | Kalman Filter + Expanded State Vector + Larger State Matrix + Improved Initialization + Aspect Ratio Handling | Correct Identification Rate: 98.6% | |||

| Custom Dataset—Daytime and rainy | YOLOv4 + Enhanced Feature Fusion (SPP + PANet) + Deep-Separable Convolutions + Improved Loss Function | Kalman Filter + Expanded State Vector + Larger State Matrix + Improved Initialization + Aspect Ratio Handling | Correct Identification Rate: 96.1% | |||

| Custom Dataset—Nighttime and sunny | YOLOv4 + Enhanced Feature Fusion (SPP + PANet) + Deep-Separable Convolutions + Improved Loss Function | Kalman Filter + Expanded State Vector + Larger State Matrix + Improved Initialization + Aspect Ratio Handling | Correct Identification Rate: 100% | |||

| Custom Dataset—Nighttime and cloudy | YOLOv4 + Enhanced Feature Fusion (SPP + PANet) + Deep-Separable Convolutions + Improved Loss Function | Kalman Filter + Expanded State Vector + Larger State Matrix + Improved Initialization + Aspect Ratio Handling | Correct Identification Rate: 97.7% | |||

| Custom Dataset—Nighttime and rainy | YOLOv4 + Enhanced Feature Fusion (SPP + PANet) + Deep-Separable Convolutions + Improved Loss Function | Kalman Filter + Expanded State Vector + Larger State Matrix + Improved Initialization + Aspect Ratio Handling | Correct Identification Rate: 95.4% | |||

| [57] | NVIDIA Jetson Nano | Custom Dataset | Custom Dataset | YOLOv4-Tiny | FastMOT (DeepSORT + Kanade–Lucas–Tomasi Optical Flow) | mAP: 78.7% |

| [58] | Zynq-7000 | UA-DETRAC | UA-DETRAC | YOLOv3 + Pruning (85%) | DeepSORT + RE-ID Module retraining | AP@0.5: 71.1% |

| YOLOv3-Tiny + Pruning (85% + 30%) | DeepSORT + RE-ID Module retraining | AP@0.5: 59.9% | ||||

| YOLOv3 + Pruning (85%) & YOLOv3-Tiny + Pruning (85% + 30%) | DeepSORT + RE-ID Module retraining | MOTA: 59.2% MOTP: 13.7% IDF1: 72.1% IDP: 85.2% IDR: 64.6% IDSW: 25 | ||||

| UA-DETRAC—Daytime | YOLOv3 + Pruning (85%) | DeepSORT + RE-ID Module retraining | Accuracy Rate: 96.2% | |||

| YOLOv3-Tiny + Pruning (85% + 30%) | DeepSORT + RE-ID Module retraining | Accuracy Rate: 92.3% | ||||

| UA-DETRAC—Nighttime | YOLOv3 + Pruning (85%) | DeepSORT + RE-ID Module retraining | Accuracy Rate: 94% | |||

| YOLOv3-Tiny + Pruning (85% + 30%) | DeepSORT + RE-ID Module retraining | Accuracy Rate: 92% | ||||

| UA-DETRAC—Rainy | YOLOv3 + Pruning (85%) | DeepSORT + RE-ID Module retraining | Accuracy Rate: 81.8% | |||

| YOLOv3-Tiny + Pruning (85% + 30%) | DeepSORT + RE-ID Module retraining | Accuracy Rate: 75.8% | ||||

| [59] | GPU: NVIDIA GeForce GTX 1050 TI 4 GB GDDR5 and NVIDIA GeForce RTX 2080 TI 11 GB GDDR6 | Custom Dataset—Afternoon. High Camera | Custom Dataset—Afternoon. High Camera | YOLOv3 + Frame Reduction + Loss Function Adjustment | Kalman Filter | Total Accuracy: 77% |

| Centroid-Based Tracker | Total Accuracy: 84.5% | |||||

| Custom Dataset—Afternoon. Distant Camera | Custom Dataset—Afternoon. Distant Camera | YOLOv3 + Frame Reduction + Loss Function Adjustment | Kalman Filter | Total Accuracy: 81.7% | ||

| Centroid-Based Tracker | Total Accuracy: 88.4% | |||||

| Custom Dataset—Morning. Low Camera | Custom Dataset—Morning. Low Camera | YOLOv3 + Frame Reduction + Loss Function Adjustment | Kalman Filter | Total Accuracy: 65.8% | ||

| Centroid-Based Tracker | Total Accuracy: 78.7% | |||||

| Custom Dataset—Evening. Close Camera | Custom Dataset—Evening. Close Camera | YOLOv3 + Frame Reduction + Loss Function Adjustment | Kalman Filter | Total Accuracy: 81.9% | ||

| Centroid-Based Tracker | Total Accuracy: 88.5% | |||||

| Custom Dataset—Afternoon. High Angle Camera | Custom Dataset—Afternoon. High Angle Camera | YOLOv3 + Frame Reduction + Loss Function Adjustment | Kalman Filter | Total Accuracy: 70.7% | ||

| Centroid-Based Tracker | Total Accuracy: 82.9% | |||||

| [60] | Not described | UA-DETRAC | UA-DETRAC | YOLOX + Feature Adaptive Fusion Pyramid Network (FAFPN) | DeepSORT | AP@0.5: 76.3% AP@0.75: 65.7% AP@[0.5:0.95]: 55.7% |

| Ref. | Testing Hardware Platform | Training Dataset | Testing Dataset | Detection Method | Tracking Method | Results |

|---|---|---|---|---|---|---|

| [43] | CPU: 11th Gen Intel(R) Core (TM) i7-11800H @ 2.30 GHz RAM: 16 GB GPU: NVIDIA GeForce RTX3070 | IStock videos from Google + Open-source dataset by Song et al. 2019 [31] + Custom Dataset | Custom Dataset | SSD | DeepSORT | Average Counting Accuracy: 84% mAP@0.5: 83.2% |

| Mask R-CNN | DeepSORT | Average Counting Accuracy: 91% mAP@0.5: 76.5% | ||||

| [61] | NVIDIA Jetson Tx2 | UA-DETRAC + Custom Dataset | Custom Dataset | SpyNet + Background Subtraction + Fine-Tuning + Human-in-the-Loop | SORT | mAP: 54.6% |

| [46] | CPU: Intel Core i5-9400F 2.90 GHz RAM: 16 GB | MPI Sintel + Custom Dataset | Custom Dataset | Mask-SpyNet (SpyNet Optical Flow + Mask-Based Segmentation Branch) | DeepSORT | Precision: 95% Recall: 97.9% Rt: 93.1% Rf: 2% Ra: 4.9% |

| [45] | GPU: GeForce RTX 3070 graphics card | Redouane Kachach dataset + GRAM Road-Traffic Monitoring + Open Online Cameras | Redouane Kachach dataset + GRAM Road-Traffic Monitoring + Open Online Cameras—Good Conditions | Traffic Monitor | mAP: 43.7% mAR: 59.4% | |

| Redouane Kachach dataset + GRAM Road-Traffic Monitoring + Open Online Cameras—Bad Weather | Traffic Monitor | mAP: 24.1% mAR: 31.6% | ||||

| Redouane Kachach dataset + GRAM Road-Traffic Monitoring + Open Online Cameras—Poor Quality | Traffic Monitor | mAP: 44.8% mAR: 63% | ||||

| [51] | CPU: 8-core Intel Core i7 RAM: 16 GB GPU: NVIDIA RTX-3060 card with 12 GB of video memory. | UA-DETRAC | UA-DETRAC | Model2 | MOTA: 55.1% MOTP: 85.5% IDSW: 2311 MT: 47.1% ML: 6.8% | |

| JDE | MOTA: 24.5% MOTP: 68.5% IDSW: 994 MT: 28.4% ML: 41.7% | |||||

| FairMOT | MOTA: 31.7% MOTP: 82.4% IDSW: 521 MT: 36.8% ML: 36.5% | |||||

| ECCNet | MOTA: 55.5% MOTP: 86.3% IDSW: 2893 MT: 57.2% ML: 20.7% | |||||

| [62] | RAM: 16 GB DDR3 CPU: Intel(R) Core (TM) i7-4700HQ CPU @ 2.4 GHz | No training | Quebec’s Surveillance Cameras’ Images + 2nd NVIDIA AI City Challenge Track 1 | Segment Anything Model + Manual Region of Interest Selection + Motion-based Filtering + Vehicle Segment Merging | DeepSORT | Precision: 89.7% Recall: 97.9% F1-Score: 93.6% |

| [63] | CPU: Intel Xeon E5-2620. RAM: 47 GB GPU: Single NVIDIA GTX 1080 Ti | MARS Dataset | UA-DETRAC | SSD | DeepSORT | HOTA: 43.9% |

| UA-DETRAC | UA-DETRAC | SSD + Appearance Embedding | Cosine Distance + Hungarian Algorithm + Kalman Filter + Integrated Embeddings | HOTA: 43.1% | ||

| SSD + Appearance Embedding + Augmentation Module | Cosine Distance + Hungarian Algorithm + Kalman Filter + Integrated Embeddings | HOTA: 47.2% | ||||

| SSD + Appearance Embedding + Augmentation Module | Cosine Distance + Hungarian Algorithm + Kalman + Integrated Embeddings + Hyperparameter Optimization | HOTA: 47.4% DetA: 40.2% AssA: 56.41% DetRe: 55.05% DetPr: 55% AssRe: 64.61% AssPr: 75.13% LocA: 82.42% | ||||

| SSD | SORT | HOTA: 42.33% DetA: 36.35% AssA: 49.93% DetRe: 43.82% DetPr: 61.84% AssRe: 53.24% AssPr: 82.77% LocA: 82.41% | ||||

| DeepSORT | HOTA: 44.20% DetA: 37.71% AssA: 52.54% DetRe: 48.34% DetPr: 57.08% AssRe: 57.39% AssPr: 78.95% LocA: 81.14% | |||||

| JDE (576 × 320) | HOTA: 35.77% DetA: 25.18% AssA: 51.59% DetRe: 30.09% DetPr: 54.52% AssRe: 56.57% AssPr: 79.56% LocA: 79.93% | |||||

| JDE (864 × 480) | HOTA: 40.28% DetA: 28.76% AssA: 56.9% DetRe: 34.06% DetPr: 59.09% AssRe: 64.05% AssPr: 79.13% LocA: 82.37% | |||||

| RetinaNet | SORT | HOTA: 56.21% DetA: 49.86% AssA: 63.69% DetRe: 59.63% DetPr: 70.55% AssRe: 68.3% AssPr: 87.05% LocA: 87.37% | ||||

| DeepSORT | HOTA: 56.65% DetA: 49.27% AssA: 65.45% DetRe: 60.85% DetPr: 67.38% AssRe: 71.02% AssPr: 85.11% LocA: 86.41% | |||||

| RetinaNet + Appearance Embedding + Augmentation Module | Cosine Distance + Hungarian Algorithm + Kalman Filter + Integrated Embeddings + Hyperparameter Optimization | HOTA: 58.04% DetA: 51.82% AssA: 65.34% DetRe: 63.98% DetPr: 68.42% AssRe: 72.07% AssPr: 83.18% LocA: 86.82% | ||||

| UA-DETRAC—Easy | SSD | SORT | HOTA: 49.26% | |||

| DeepSORT | HOTA: 50.13% | |||||

| SSD + Appearance Embedding + Augmentation Module | Cosine Distance + Hungarian Algorithm + Kalman + Integrated Embeddings + Hyperparameter Optimization | HOTA: 49.84% | ||||

| JDE (576 × 320) | HOTA: 43.75% | |||||

| JDE (864 × 480) | HOTA: 49.99% | |||||

| RetinaNet | SORT | HOTA: 63.74% | ||||

| DeepSORT | HOTA: 62.77% | |||||

| RetinaNet + Appearance Embedding + Augmentation Module | Cosine Distance + Hungarian Algorithm + Kalman Filter + Integrated Embeddings + Hyperparameter Optimization | HOTA: 61.89% | ||||

| UA-DETRAC—Medium | SSD | SORT | HOTA: 44.35% | |||

| DeepSORT | HOTA: 46.24% | |||||

| SSD + Appearance Embedding + Augmentation Module | Cosine Distance + Hungarian Algorithm + Kalman Filter + Integrated Embeddings + Hyperparameter Optimization | HOTA: 50.09% | ||||

| JDE (576 × 320) | HOTA: 33.93% | |||||

| JDE (864 × 480) | HOTA: 38.66% | |||||

| RetinaNet | SORT | HOTA: 58.02% | ||||

| DeepSORT | HOTA: 58.35% | |||||

| RetinaNet + Appearance Embedding + Augmentation Module | Cosine Distance + Hungarian Algorithm + Kalman Filter + Integrated Embeddings + Hyperparameter Optimization | HOTA: 61.01% | ||||

| UA-DETRAC—Hard | SSD | SORT | HOTA: 31% | |||

| DeepSORT | HOTA: 33.41% | |||||

| SSD + Appearance Embedding + Augmentation Module | Cosine Distance + Hungarian Algorithm + Kalman Filter + Integrated Embeddings + Hyperparameter Optimization | HOTA: 35.96% | ||||

| JDE (576 × 320) | HOTA: 16.65% | |||||

| JDE (864 × 480) | HOTA: 18.01% | |||||

| RetinaNet | SORT | HOTA: 41.83% | ||||

| DeepSORT | HOTA: 42.74% | |||||

| RetinaNet + Appearance Embedding + Augmentation Module | Cosine Distance + Hungarian Algorithm + Kalman Filter + Integrated Embeddings + Hyperparameter Optimization | HOTA: 45.53% | ||||

| [64] | CPU: Intel Core i7-10700K (3.80 GHz) GPU: NVIDIA GeForce RTX 2080 SUPER | UA-DETRAC | UA-DETRAC | DMM-NET | AP: 20.69% MOTA: 20.3% MT: 19.9% ML: 30.3% IDSW: 498 FM: 1428 FP: 104,142 FN: 399,586 | |

| JDE | AP: 63.57% MOTA: 55.1% MT: 68.4% ML: 6.5% IDSW: 2169 FM: 4224 FP: 128,069 FN: 153,609 | |||||

| FairMOT | AP: 67.74% MOTA: 63.4% MT: 64% ML: 7.8% IDSW: 784 FM: 4443 FP: 71,231 FN: 159,523 PR-MOTA: 22.7% PR-MT: 23.7% PR-ML: 10% PR-IDSW: 347.1 PR-FM: 2992.6 PR-FP: 49,385.4 PR-FN: 123,124.5 | |||||

| UA-DETRAC—Easy | DMM-NET | AP: 31.21% | ||||

| JDE | AP: 79.84% | |||||

| FairMOT | AP: 81.33% MOTA: 84.9% MT: 86% ML: 1.4% IDSW: 121 FM: 379 FP: 9276 FN: 9468 PR-MOTA: 30.8% PR-MT: 32.3% PR-ML: 5.2% PR-IDSW: 56.8 PR-FM: 648.3 PR-FP: 10,022.7 PR-FN: 14,017.6 | |||||

| UA-DETRAC—Medium | DMM-NET | AP: 23.6% | ||||

| JDE | AP: 70.46% | |||||

| FairMOT | AP: 73.32% MOTA: 69.6% MT: 66.8% ML: 8% IDSW: 421 FM: 2056 FP: 27,159 FN: 80,934 PR-MOTA: 25.8% PR-MT: 24.3% PR-ML: 9.9% PR-IDSW: 199 PR-FM: 1613.1 PR-FP: 21,497.6 PR-FN: 64,824.3 | |||||

| UA-DETRAC—Hard | DMM-NET | AP: 14.03% | ||||

| JDE | AP: 49.88% | |||||

| FairMOT | AP: 56.78% MOTA: 52.2% MT: 47.9% ML: 13.5% IDSW: 294 FM: 1246 FP: 14,309 FN: 56,981 PR-MOTA: 16.2% PR-MT: 17.6% PR-ML: 13.4% PR-IDSW: 123.1 PR-FM: 695.8 PR-FP: 12,420.3 PR-FN: 38,018.9 | |||||

| UA-DETRAC—Cloudy Weather | DMM-NET | AP: 26.1% | ||||

| JDE | AP: 76.02% | |||||

| FairMOT | AP: 77.21% MOTA: 79.5% MT: 80% ML: 3.5% IDSW: 141 FM: 738 FP: 12,272 FN: 27,800 PR-MOTA: 30.9% PR-MT: 28.7% PR-ML: 7.6% PR-IDSW: 76 PR-FM: 925.5 PR-FP: 8481.9 PR-FN: 28,943.4 | |||||

| UA-DETRAC—Rainy Weather | DMM-NET | AP: 14.56% | ||||

| JDE | AP: 50.42% | |||||

| FairMOT | AP: 55.46% MOTA: 57.4% MT: 54.2% ML: 14.5% IDSW: 270 FM: 1413 FP: 17,300 FN: 70,055 PR-MOTA: 20.9% PR-MT: 19.6% PR-ML: 14% PR-IDSW: 122.3 PR-FM: 849.2 PR-FP: 11,493.5 PR-FN: 48,182.2 | |||||

| UA-DETRAC—Sunny | DMM-NET | AP: 36.89% | ||||

| JDE | AP: 73% | |||||

| FairMOT | AP: 75.44% MOTA: 73.1% MT: 78.1% ML: 2.4% IDSW: 95 FM: 343 FP: 8253 FN: 11,605 PR-MOTA: 22.8% PR-MT: 29.5% PR-ML: 5.4% PR-IDSW: 41.5 PR-FM: 390.4 PR-FP: 9354.7 PR-FN: 10,777.2 | |||||

| UA-DETRAC—Nighttime | DMM-NET | AP: 15.01% | ||||

| JDE | AP: 58.92% | |||||

| FairMOT | AP: 69.05% MOTA: 67.4% MT: 63.9% ML: 7.5% IDSW: 330 FM: 1187 FP: 12,929 FN: 37,923 PR-MOTA: 22.1% PR-MT: 23.9% PR-ML: 9.3% PR-IDSW: 139.2 PR-FM: 792.2 PR-FP: 14,610.5 PR-FN: 28,958 | |||||

| [65] | Not described | Custom Dataset | Custom Dataset | SSD + GAN Image Restoration | BEBLID Feature Extraction + MLESAC + Homography Transformation | mAP: 84.4% Precision: 86% Recall: 88% MOTA: 36.3% MOTP: 72.9% FAF: 1.4% MT: 13.4% ML: 33.4% FP: 140 FN: 304 IDSW: 35 Frag: 28 |

| [66] | GPU: 3 NVIDIA RTX 3060 | UA-DETRAC | COCO Dataset | FairMOT | AP: 36.4% AP@50: 53.9% AP@75: 38.8% Recall: 35.6% | |

| FairMOT + Swish Activation Function + Multi-Scale Dilated Attention + Block Efficient Module | AP: 38.1% AP@50: 56% AP@75: 40.5% Recall: 38.2% | |||||

| UA-DETRAC | FairMOT | MOTA: 77.5%/77.7% IDF1: 84.2% MT: 160 ML: 4 IDSW: 48/47 | ||||

| FairMOT + Swish Activation Function + Multi-Scale Dilated Attention + Block Efficient Module | MOTA: 79.2% IDF1: 84.8% MT: 162 ML: 3 IDSW: 50 | |||||

| FairMOT + Joint Loss | MOTA: 78.4% IDF1: 84.4% MT: 160 ML: 4 IDSW: 46 | |||||

| FairMOT + Swish Activation Function + Multi-Scale Dilated Attention + Block Efficient Module + Joint Loss | MOTA: 79% IDF1: 84.5% MT: 159 ML: 4 IDSW: 45 | |||||

| CenterTrack | MOTA: 77% IDF1: 84.6% MT: 155 ML: 4 IDSW: 50 | |||||

| RobMOT | MOTA: 76% IDF1: 83% MT: 150 ML: 5 IDSW: 53 | |||||

| MTracker | MOTA: 75.5% IDF1: 82.7% MT: 152 ML: 4 IDSW: 54 | |||||

| Ref. | Testing Hardware Platform | Training Dataset | Testing Dataset | Detection Method | Tracking Method | Results |

|---|---|---|---|---|---|---|

| [67] | NVIDIA Jetson | Not described | Custom Dataset | Feature Extraction + Hourglass-like CNN + Bounding Box Outputting | Tracking-by-Detection | Tracking Accuracy: 98.2% |

| Custom Dataset | Feature Extraction + Hourglass-like CNN + Bounding Box Outputting | Tracking-by-Detection | Detection Accuracy: 96.4% | |||

| [68] | NVIDIA Jetson | Not applicable | Custom Dataset—Good Visibility | Horn-Schunck Optical Flow + Shadow Detection and Removal | CAMSHIFT | Centroid Errors: 4.2 Target Domain Coverage Accuracy: 69.1% |

| Kalman Filter | Centroid Errors: 3.8 Target Domain Coverage Accuracy: 71.3% | |||||

| Immune Particle Filter | Centroid Errors: 2.1 Target Domain Coverage Accuracy: 93.6% | |||||

| Custom Dataset—Poor Visibility | Horn-Schunck Optical Flow + Shadow Detection and Removal | CAMSHIFT | Centroid Errors: 7.4 Target Domain Coverage Accuracy: 60.8% | |||

| Kalman Filter | Centroid Errors: 5.9 Target Domain Coverage Accuracy: 67.1% | |||||

| Immune Particle Filter | Centroid Errors: 3.5 Target Domain Coverage Accuracy: 75.3% | |||||

| [69] | CPU: Intel (R) Core(TM) i7-7700 K CPU with a maximum turbo frequency of 4.50 GHz. RAM: 32 GB GPU: NVIDIA Titan X GPU with 12.00 GB of memory | UA-DETRAC + PASCAL VOC 2007 + PASCAL VOC 2012 | UA-DETRAC | HVD-Net (First 5 DarkNet19 Layers + Dense Connection Block (DCB) and Dense Spatial Pyramid Pooling (DSPP) Multi-scale Processing + Feature Fusion + Detection Head + Loss Calculation) | SORT | mAP: 80.7% MOTA: 29.3% MOTP: 36.2% PR-IDSW: 191 PR-FP: 18,078 PR-FN: 169,219 |

| UA-DETRAC—Cloudy Weather | HVD-Net (First 5 DarkNet19 Layers + Dense Connection Block (DCB) and Dense Spatial Pyramid Pooling (DSPP) Multi-scale Processing + Feature Fusion + Detection Head + Loss Calculation) | SORT | mAP: 69.1% | |||

| UA-DETRAC—Nighttime | HVD-Net (First 5 DarkNet19 Layers + Dense Connection Block (DCB) and Dense Spatial Pyramid Pooling (DSPP) Multi-scale Processing + Feature Fusion + Detection Head + Loss Calculation) | SORT | mAP: 69.2% | |||

| UA-DETRAC—Rainy Weather | HVD-Net (First 5 DarkNet19 Layers + Dense Connection Block (DCB) and Dense Spatial Pyramid Pooling (DSPP) Multi-scale Processing + Feature Fusion + Detection Head + Loss Calculation) | SORT | mAP: 54.2% | |||

| UA-DETRAC—Sunny Weather | HVD-Net (First 5 DarkNet19 Layers + Dense Connection Block (DCB) and Dense Spatial Pyramid Pooling (DSPP) Multi-scale Processing + Feature Fusion + Detection Head + Loss Calculation) | SORT | mAP: 73.5% | |||

| PASCAL VOC 2007 + PASCAL VOC 2012 | HVD-Net (First 5 DarkNet19 Layers + Dense Connection Block (DCB) and Dense Spatial Pyramid Pooling (DSPP) Multi-scale Processing + Feature Fusion + Detection Head + Loss Calculation) | SORT | mAP: 92.6% | |||

| [64] | CPU: Intel Core i7-10700K (3.80 GHz) GPU: NVIDIA GeForce RTX 2080 SUPER | UA-DETRAC | UA-DETRAC | EfficientDet-D0 + Output Feature Fusion + Detection Heads | Re-ID Head for Appearance Embedding Extraction + Hungarian Algorithm + Kalman Filter + Tracklet Pool | AP: 69.93% MOTA: 68.5% MT: 68.4% ML: 7.3% IDSW: 836 FM: 3681 FP: 50,754 FN: 147,383 PR-MOTA: 24.5% PR-MT: 25.2% PR-ML: 9.3% PR-IDSW: 379 PR-FM: 2957.3 PR-FP: 43,940.6 PR-FN: 116,860.7 |

| UA-DETRAC—Easy | EfficientDet-D0 + Output Feature Fusion + Detection Heads | Re-ID Head for Appearance Embedding Extraction + Hungarian Algorithm + Kalman Filter + Tracklet Pool | AP: 86.19% MOTA: 82.8% MT: 81.8% ML: 1.2% IDSW: 62 FM: 498 FP: 10,159 FN: 11,304 PR-MOTA: 29.7% PR-MT: 30.8% PR-ML: 5.2% PR-IDSW: 42.7 PR-FM: 644.5 PR-FP: 10,371.5 PR-FN: 15,027.4 | |||

| UA-DETRAC—Medium | EfficientDet-D0 + Output Feature Fusion + Detection Heads | Re-ID Head for Appearance Embedding Extraction + Hungarian Algorithm + Kalman Filter + Tracklet Pool | AP: 74.26% MOTA: 65.3% MT: 61% ML: 8.7% IDSW: 445 FM: 2590 FP: 34,081 FN: 89,466 PR-MOTA: 24.3% PR-MT: 22.4% PR-ML: 10.7% PR-IDSW: 189 PR-FM: 1652.4 PR-FP: 23,031.3 PR-FN: 68,472.9 | |||

| UA-DETRAC—Hard | EfficientDet-D0 + Output Feature Fusion + Detection Heads | Re-ID Head for Appearance Embedding Extraction + Hungarian Algorithm + Kalman Filter + Tracklet Pool | AP: 59% MOTA: 42.5% MT: 46.8% ML: 14.5% IDSW: 277 FM: 1355 FP: 26,991 FN: 58,753 PR-MOTA: 12.8% PR-MT: 17.1% PR-ML: 15.1% PR-IDSW: 115.4 PR-FM: 696.7 PR-FP: 15,982.6 PR-FN: 39,624.1 | |||

| UA-DETRAC—Cloudy Weather | EfficientDet-D0 + Output Feature Fusion + Detection Heads | Re-ID Head for Appearance Embedding Extraction + Hungarian Algorithm + Kalman Filter + Tracklet Pool | AP: 79.2% MOTA: 77% MT: 71.1% ML: 3.5% IDSW: 147 FM: 1216 FP: 12,749 FN: 32,191 PR-MOTA: 29.4% PR-MT: 25.4% PR-ML: 7.8% PR-IDSW: 70.3 PR-FM: 973 PR-FP: 8485.1 PR-FN: 31,822.1 | |||

| UA-DETRAC—Nighttime | EfficientDet-D0 + Output Feature Fusion + Detection Heads | Re-ID Head for Appearance Embedding Extraction + Hungarian Algorithm + Kalman Filter + Tracklet Pool | AP: 69.32% MOTA: 60.3% MT: 64.2% ML: 6.6% IDSW: 308 FM: 1186 FP: 24,880 FN: 37,113 PR-MOTA: 20.7% PR-MT: 24.5% PR-ML: 9.6% PR-IDSW: 135.2 PR-FM: 783.9 PR-FP: 17,365.8 PR-FN: 28,406.3 | |||

| [70] | Not available | Not applicable | Custom Dataset—Near a traffic light | Background Subtraction via MoGs + Shadow Removal + Bounding Box Generation | Centroid-Based Tracking with Euclidean Distance Matching | Average Accuracy: 96.1% |

| Custom Dataset—Standard urban road | Background Subtraction via MoGs + Shadow Removal + Bounding Box Generation | Centroid-Based Tracking with Euclidean Distance Matching | Average Accuracy: 97.4% | |||

| [71] | Ultra96 | Not applicable | Custom Dataset—Inbound Traffic | REMOT (Event data processing by Attention Units) | DetA: 50.9% AssA: 58% HOTA: 54.3% | |

| Custom Dataset—Outbound Traffic | REMOT (Event data processing by Attention Units) | DetA: 39% AssA: 47.8% HOTA: 43.1% | ||||

References

- United Nations, Department of Economic and Social Affairs, Population Division. World Urbanization Prospects: The 2018 Revision (ST/ESA/SER.A/420); United Nations: New York, NY, USA, 2019; pp. 1–126. Available online: https://population.un.org/wup/assets/WUP2018-Report.pdf (accessed on 9 December 2024).

- Brook, R.D.; Rajagopalan, S.; Pope, C.A., III; Brook, J.R.; Bhatnagar, A.; Diez-Roux, A.V.; Holguin, F.; Hong, Y.; Luepker, R.V.; Mittleman, M.A.; et al. Particulate Matter Air Pollution and Cardiovascular Disease: An update to the scientific statement from the american heart association. Circulation 2010, 121, 2331–2378.

- Schrank, D. 2023 Urban Mobility Report; The Texas A&M Transportation Institute: Bryan, TX, USA, 2024.

- Taylor, A.H.; Dorn, L. Stress, Fatigue, Health, And Risk of Road Traffic Accidents Among Professional Drivers: The Contribution of Physical Inactivity. Annu. Rev. Public Health 2006, 27, 371–391.

- Ritchie, H.; Rosado, P.; Roser, M. Breakdown of Carbon Dioxide, Methane and Nitrous Oxide Emissions by Sector. Our World in Data. 2020. Available online: https://ourworldindata.org/emissions-by-sector (accessed on 9 December 2024).

- Ritchie, H. Cars, Planes, Trains: Where do CO2 Emissions from Transport Come from? Our World in Data. 2020. Available online: https://ourworldindata.org/co2-emissions-from-transport (accessed on 9 December 2024).

- Bhatt, D.; Patel, C.; Talsania, H.; Patel, J.; Vaghela, R.; Pandya, S.; Modi, K.; Ghayvat, H. CNN Variants for Computer Vision: History, Architecture, Application, Challenges and Future Scope. Electronics 2021, 10, 2470.

- Tian, H.; Wang, T.; Liu, Y.; Qiao, X.; Li, Y. Computer vision technology in agricultural automation—A review. Inf. Process. Agric. 2020, 7, 1–19.

- Saba, T. Computer vision for microscopic skin cancer diagnosis using handcrafted and non-handcrafted features. Microsc. Res. Tech. 2021, 84, 1272–1283.

- Borowiec, M.L.; Dikow, R.B.; Frandsen, P.B.; McKeeken, A.; Valentini, G.; White, A.E. Deep learning as a tool for ecology and evolution. Methods Ecol. Evol. 2022, 13, 1640–1660.

- Djahel, S.; Doolan, R.; Muntean, G.-M.; Murphy, J. A Communications-Oriented Perspective on Traffic Management Systems for Smart Cities: Challenges and Innovative Approaches. IEEE Commun. Surv. Tutorials 2014, 17, 125–151.

- Tian, B.; Morris, B.T.; Tang, M.; Liu, Y.; Yao, Y.; Gou, C.; Shen, D.; Tang, S. Hierarchical and Networked Vehicle Surveillance in ITS: A Survey. IEEE Trans. Intell. Transp. Syst. 2014, 16, 557–580.

- Liu, D.; Hui, S.; Li, L.; Liu, Z.; Zhang, Z. A Method for Short-Term Traffic Flow Forecasting Based On GCN-LSTM. In Proceedings of the 2020 International Conference on Computer Vision, Image and Deep Learning (CVIDL), Chongqing, China, 10–12 July 2020; pp. 364–368.

- Zhang, C.; Wang, X.; Yong, S.; Zhang, Y.; Li, Q.; Wang, C. An Energy-Efficient Convolutional Neural Network Processor Architecture Based on a Systolic Array. Appl. Sci. 2022, 12, 12633.

- Sun, C.; Shrivastava, A.; Singh, S.; Gupta, A. Revisiting unreasonable effectiveness of data in deep learning era. In Proceedings of the 16th IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Arif, M.U.; Farooq, M.U.; Raza, R.H.; Lodhi, Z.U.A.; Hashmi, M.A.R. A Comprehensive Review of Vehicle Detection Techniques Under Varying Moving Cast Shadow Conditions Using Computer Vision and Deep Learning. IEEE Access 2022, 10, 104863–104886.

- Bisio, I.; Garibotto, C.; Haleem, H.; Lavagetto, F.; Sciarrone, A. A Systematic Review of Drone Based Road Traffic Monitoring System. IEEE Access 2022, 10, 101537–101555.

- Dolatyabi, P.; Regan, J.; Khodayar, M. Deep Learning for Traffic Scene Understanding: A Review. IEEE Access 2025, 13, 13187–13237.

- Nigam, N.; Singh, D.P.; Choudhary, J. A Review of Different Components of the Intelligent Traffic Management System (ITMS). Symmetry 2023, 15, 583.

- Porto, J.V.d.A.; Szemes, P.T.; Pistori, H.; Menyhárt, J. Trending Machine Learning Methods for Vehicle, Pedestrian, and Traffic for Detection and Tracking Task in the Post-Covid Era: A Literature Review. IEEE Access 2025, 13, 77790–77803.

- Holla, A.; Pai, M.M.M.; Verma, U.; Pai, R.M. Vehicle Re-Identification and Tracking: Algorithmic Approach, Challenges and Future Directions. IEEE Open J. Intell. Transp. Syst. 2025, 6, 155–183.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, 71.

- Haddaway, N.R.; Page, M.J.; Pritchard, C.C.; McGuinness, L.A. PRISMA2020: An R package and Shiny app for producing PRISMA 2020-compliant flow diagrams, with interactivity for optimised digital transparency and Open Synthesis. Campbell Syst. Rev. 2022, 18, e1230.

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2009, 88, 303–338.

- Stiefelhagen, R.; Bernardin, K.; Bowers, R.; Garofolo, J.; Mostefa, D.; Soundararajan, P. The CLEAR 2006 Evaluation. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2007; pp. 1–44.

- Bernardin, K.; Stiefelhagen, R. Evaluating Multiple Object Tracking Performance: The CLEAR MOT Metrics. EURASIP J. Image Video Process. 2008, 2008, 1–10.

- Wu, B.; Nevatia, R. Tracking of Multiple, Partially Occluded Humans based on Static Body Part Detection. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition—Volume 1 (CVPR’06), New York, NY, USA, 17 June 2006; pp. 951–958.

- Ristani, E.; Solera, F.; Zou, R.; Cucchiara, R.; Tomasi, C. Performance measures and a data set for multi-target, multi-camera tracking. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Cham, Switzerland, 2016; pp. 17–35.

- Luiten, J.; Ošep, A.; Dendorfer, P.; Torr, P.; Geiger, A.; Leal-Taixé, L.; Leibe, B. HOTA: A Higher Order Metric for Evaluating Multi-object Tracking. Int. J. Comput. Vis. 2020, 129, 548–578.

- Wen, L.; Du, D.; Cai, Z.; Lei, Z.; Chang, M.C.; Qi, H.; Lim, J.; Yang, M.H.; Lyu, S. UA-DETRAC: A New Benchmark and Protocol for Multi-Object Detection and Tracking. arXiv 2015, arXiv:1511.04136v3.

- Song, H.; Liang, H.; Li, H.; Dai, Z.; Yun, X. Vision-based vehicle detection and counting system using deep learning in highway scenes. Eur. Transp. Res. Rev. 2019, 11, 1–16.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the Computer Vision—ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The PASCAL Visual Object Classes Challenge 2012 (VOC2012) Results. Pattern Anal. Stat. Model. Comput. Learn. 2011, 8, 2–5.

- Leibe, B.; Matas, J.; Sebe, N.; Welling, M. (Eds.) A Deep Learning-Based Approach to Progressive Vehicle Re-identification for Urban Surveillance. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2016; Volume 9906.

- Lou, Y.; Bai, Y.; Liu, J.; Wang, S.; Duan, L. VERI-Wild: A Large Dataset and a New Method for Vehicle Re-Identification in the Wild. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 3230–3238.

- Kachach, R.; Cañas, J.M. Hybrid three-dimensional and support vector machine approach for automatic vehicle tracking and classification using a single camera. J. Electron. Imaging 2016, 25, 33021.

- Guerrero-Gómez-Olmedo, R.; López-Sastre, R.J.; Maldonado-Bascón, S.; Fernández-Caballero, A. Vehicle Tracking by Simultaneous Detection and Viewpoint Estimation. In Proceedings of the International Work-Conference on the Interplay Between Natural and Artificial Computation; Springer: Berlin/Heidelberg, Germany, 2013; pp. 306–316.

- Luo, Z.; Branchaud-Charron, F.; Lemaire, C.; Konrad, J.; Li, S.; Mishra, A.; Achkar, A.; Eichel, J.; Jodoin, P.-M. MIO-TCD: A New Benchmark Dataset for Vehicle Classification and Localization. IEEE Trans. Image Process. 2018, 27, 5129–5141.

- Kenk, M.A.; Hassaballah, M. DAWN: Vehicle Detection in Adverse Weather Nature Dataset. Available online: https://doi.org/10.17632/766ygrbt8y.3 (accessed on 10 April 2025).

- Dong, Z.; Wu, Y.; Pei, M.; Jia, Y. Vehicle Type Classification Using a Semisupervised Convolutional Neural Network. IEEE Trans. Intell. Transp. Syst. 2015, 16, 2247–2256.

- Naphade, M.; Chang, M.-C.; Sharma, A.; Anastasiu, D.C.; Jagarlamudi, V.; Chakraborty, P.; Huang, T.; Wang, S.; Liu, M.-Y.; Chellappa, R.; et al. The 2018 NVIDIA AI City Challenge. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 53–537.

- Butler, D.J.; Wulff, J.; Stanley, G.B.; Black, M.J. A naturalistic open source movie for optical flow evaluation. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2012; pp. 611–625.

- Ismail, T.S.; Ali, A.M. Vehicle Detection, Counting, and Classification System based on Video using Deep Learning Models. ZANCO J. PURE Appl. Sci. 2024, 36, 27–39.

- Ge, X.; Zhou, F.; Chen, S.; Gao, G.; Wang, R. Vehicle detection and tracking algorithm based on improved feature extraction. KSII Trans. Internet Inf. Syst. 2024, 18, 2642–2664.

- Fernández, J.; Cañas, J.M.; Fernández, V.; Paniego, S. Robust Real-Time Traffic Surveillance with Deep Learning. Comput. Intell. Neurosci. 2021, 2021, 4632353.

- Mo, X.; Sun, C.; Zhang, C.; Tian, J.; Shao, Z. Research on Expressway Traffic Event Detection at Night Based on Mask-SpyNet. IEEE Access 2022, 10, 69053–69062.

- Saadeldin, A.; Rashid, M.M.; Shafie, A.A.; Hasan, T.F. Real-time vehicle counting using custom YOLOv8n and DeepSORT for resource-limited edge devices. TELKOMNIKA Telecommun. Comput. Electron. Control. 2024, 22, 104–112.

- Neupane, B.; Horanont, T.; Aryal, J. Real-Time Vehicle Classification and Tracking Using a Transfer Learning-Improved Deep Learning Network. Sensors 2022, 22, 3813.

- Guo, D.; Li, Z.; Shuai, H.; Zhou, F. Multi-Target Vehicle Tracking Algorithm Based on Improved DeepSORT. Sensors 2024, 24, 7014.

- You, L.; Chen, Y.; Xiao, C.; Sun, C.; Li, R. Multi-Object Vehicle Detection and Tracking Algorithm Based on Improved YOLOv8 and ByteTrack. Electronics 2024, 13, 3033.

- Reyna, A.R.H.; Farfán, A.J.F.; Filho, G.P.R.; Sampaio, S.; De Grande, R.; Nakamura, L.H.V.; Meneguette, R.I. MEDAVET: Traffic Vehicle Anomaly Detection Mechanism based on spatial and temporal structures in vehicle traffic. J. Internet Serv. Appl. 2024, 15, 25–38.

- Villa, J.; García, F.; Jover, R.; Martínez, V.; Armingol, J.M. Intelligent Infrastructure for Traffic Monitoring Based on Deep Learning and Edge Computing. J. Adv. Transp. 2024, 2024, 3679014.

- Jin, T.; Ye, X.; Li, Z.; Huo, Z. Identification and Tracking of Vehicles between Multiple Cameras on Bridges Using a YOLOv4 and OSNet-Based Method. Sensors 2023, 23, 5510.

- Kumar, S.; Singh, S.K.; Varshney, S.; Singh, S.; Kumar, P.; Kim, B.-G.; Ra, I.-H. Fusion of Deep Sort and Yolov5 for Effective Vehicle Detection and Tracking Scheme in Real-Time Traffic Management Sustainable System. Sustainability 2023, 15, 16869.

- Guzmán-Torres, J.A.; Domínguez-Mota, F.J.; Tinoco-Guerrero, G.; García-Chiquito, M.C.; Tinoco-Ruíz, J.G. Efficacy Evaluation of You Only Learn One Representation (YOLOR) Algorithm in Detecting, Tracking, and Counting Vehicular Traffic in Real-World Scenarios, the Case of Morelia México: An Artificial Intelligence Approach. AI 2024, 5, 1594–1613.

- Wei, D.; Chen, B.; Lin, Y. Automatic Identification and Tracking Method of Case-Related Vehicles Based on Computer Vision Algorithm. Appl. Math. Nonlinear Sci. 2024, 9, 1–15. Available online: https://amns.sciendo.com/article/10.2478/amns-2024-1522 (accessed on 9 December 2024).

- Suttiponpisarn, P.; Charnsripinyo, C.; Usanavasin, S.; Nakahara, H. An Autonomous Framework for Real-Time Wrong-Way Driving Vehicle Detection from Closed-Circuit Televisions. Sustainability 2022, 14, 10232.

- Zhai, J.; Li, B.; Lv, S.; Zhou, Q. FPGA-Based Vehicle Detection and Tracking Accelerator. Sensors 2023, 23, 2208.

- Azimjonov, J.; Özmen, A.; Varan, M. A vision-based real-time traffic flow monitoring system for road intersections. Multimedia Tools Appl. 2023, 82, 25155–25174.

- Du, Z.; Jin, Y.; Ma, H.; Liu, P. A Lightweight and Accurate Method for Detecting Traffic Flow in Real Time. J. Adv. Comput. Intell. Intell. Inform. 2023, 27, 1086–1095.

- Cygert, S.; Czyżewski, A. Vehicle Detection with Self-Training for Adaptative Video Processing Embedded Platform. Appl. Sci. 2020, 10, 5763.

- Shokri, D.; Larouche, C.; Homayouni, S. Proposing an Efficient Deep Learning Algorithm Based on Segment Anything Model for Detection and Tracking of Vehicles through Uncalibrated Urban Traffic Surveillance Cameras. Electronics 2024, 13, 2883.

- Mohamed, I.S.; Chuan, L.K. PAE: Portable Appearance Extension for Multiple Object Detection and Tracking in Traffic Scenes. IEEE Access 2022, 10, 37257–37268.

- Lee, Y.; Lee, S.-H.; Yoo, J.; Kwon, S. Efficient Single-Shot Multi-Object Tracking for Vehicles in Traffic Scenarios. Sensors 2021, 21, 6358.

- Sharma, D.; Jaffery, Z.A. Categorical Vehicle Classification and Tracking using Deep Neural Networks. Int. J. Adv. Comput. Sci. Appl. 2021, 12.

- Li, M.; Liu, M.; Zhang, W.; Guo, W.; Chen, E.; Zhang, C. A Robust Multi-Camera Vehicle Tracking Algorithm in Highway Scenarios Using Deep Learning. Appl. Sci. 2024, 14, 7071.

- Nikodem, M.; Słabicki, M.; Surmacz, T.; Mrówka, P.; Dołęga, C. Multi-Camera Vehicle Tracking Using Edge Computing and Low-Power Communication. Sensors 2020, 20, 3334.

- Sun, W.; Sun, M.; Zhang, X.; Li, M. Moving Vehicle Detection and Tracking Based on Optical Flow Method and Immune Particle Filter under Complex Transportation Environments. Complexity 2020, 2020, 1–15.

- Ashraf, M.H.; Jabeen, F.; Alghamdi, H.; Zia, M.; Almutairi, M.S. HVD-Net: A Hybrid Vehicle Detection Network for Vision-Based Vehicle Tracking and Speed Estimation. J. King Saud Univ. Comput. Inf. Sci. 2023, 35, 101657.

- Atouf, I.; Al Okaishi, W.Y.; Zaaran, A.; Slimani, I.; Benrabh, M. A real-time system for vehicle detection with shadow removal and vehicle classification based on vehicle features at urban roads. Int. J. Power Electron. Drive Syst. (IJPEDS) 2020, 11, 2091–2098.

- Gao, Y.; Wang, S.; So, H.K.-H. A Reconfigurable Architecture for Real-time Event-based Multi-Object Tracking. ACM Trans. Reconfigurable Technol. Syst. 2023, 16, 1–26.

- Leal-Taixé, L.; Milan, A.; Reid, I.; Roth, S.; Schindler, K. MOTChallenge 2015: Towards a Benchmark for Multi-Target Tracking. arXiv 2015, arXiv:1504.01942.

| Reference | Limitations |

|---|---|

| [16] | |

| [17] | |

| [18] | |

| [19] | |

| [20] | |

| [21] | |

| Reference | Name | Number of Images | Image Size (px) | Number of Classes | Availability | Problem Domain |

|---|---|---|---|---|---|---|

| [30] | UA-DETRAC | Over 140,000 | 960 × 540 | 4 (car, bus, van and others) | Available | Detection and Tracking |

| [31] | Song et al. 2019 | 11,129 | 1920 × 1080 | 3 (car, bus and truck) | Restricted | Detection and Tracking (limited) |

| [32] | COCO | Over 330,000 | 640 × 480 | 91 (including car, bus, bicycle, motorcycle and truck) | Available | Detection |

| [24] | PASCAL VOC 2007 | 9963 | Varying | 20 (including car, bus, bicycle and motorbike) | Available | Detection |

| [33] | PASCAL VOC 2012 | 11,540 | Varying | 20 (including car, bus, bicycle and motorbike) | Available | Detection |

| [34] | VeRI-776 | 49,360 | Varying | 1 (vehicle) | Available | Re-identification |

| [35] | VeRI-Wild | 416,314 | Varying | 1 (vehicle) | Available | Re-identification |

| [36] | Redouane Kachach et al. 2016 | 3460 | Not available | 7 (car, motorcycle, van, bus, truck, small truck and tank truck) | Restricted | Detection and Tracking (limited) |

| [37] | GRAM Road-Traffic Monitoring | 40,345 | Varying (800 × 480/1200 × 720/600 × 360) | 4 (car, truck, van and big truck) | Available | Detection and Tracking (limited) |

| [38] | Miovision Traffic Camera Dataset (MIO-TCD) | 786,702 | Varying | 11 (including articulated truck, bicycle, bus, car, motorcycle, non-motorized vehicle, pickup truck, single-unit truck and work van) | Available | Detection and Tracking (limited) |

| [39] | DAWN | 1000 | Not available | 5 (including car, bus, truck and motorcycles + bicycles) | Available | Detection |

| [40] | BiT-Vehicle | 9850 | Varying (1600 × 1200/1920 × 1080) | 6 (bus, microbus, minivan, sedan, SUV and truck) | Available | Detection and Tracking (limited) |

| [41] | 2nd NVIDIA AI City Challenge—Track 1 | 48,600 | 1920 × 1080 | 1 (vehicle) | Unavailable | Detection and Tracking (limited) |

| Name | Environmental Coverage: Sunny | Environmental Coverage: Nighttime | Environmental Coverage: Cloudy | Environmental Coverage: Rainy | Urban | Highway |

|---|---|---|---|---|---|---|

| UA-DETRAC | ✓ 1 | ✓ | ✓ | ✓ | ✓ | ✓ |

| GRAM Road-Traffic Monitoring | ✓ | ✓ | ✓ | ✓ | ||

| Miovision Traffic Camera Dataset (MIO-TCD) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

| BiT-Vehicle | ✓ | ✓ | ✓ | |||

| Statistic | MOTA | MOTP |

|---|---|---|

| Number of articles | 8 | 4 |

| Mean | 57.3% | 52.7% |

| Standard deviation (SD) | 18.7% | 22.1% |

| Range (Min–Max) | 49.9% | 73.3% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Matias, J.; Pinto, F.C.; Couto, P. Computer Vision Methods for Vehicle Detection and Tracking: A Systematic Review and Meta-Analysis. Appl. Sci. 2025, 15, 12288. https://doi.org/10.3390/app152212288
