Control and Decision-Making in Deceptive Multi-Computer Systems Based on Previous Experience for Cybersecurity of Critical Infrastructure

Abstract

1. Introduction

1.1. Motivation

1.2. Previous Works

1.3. Related Works

2. Materials and Methods

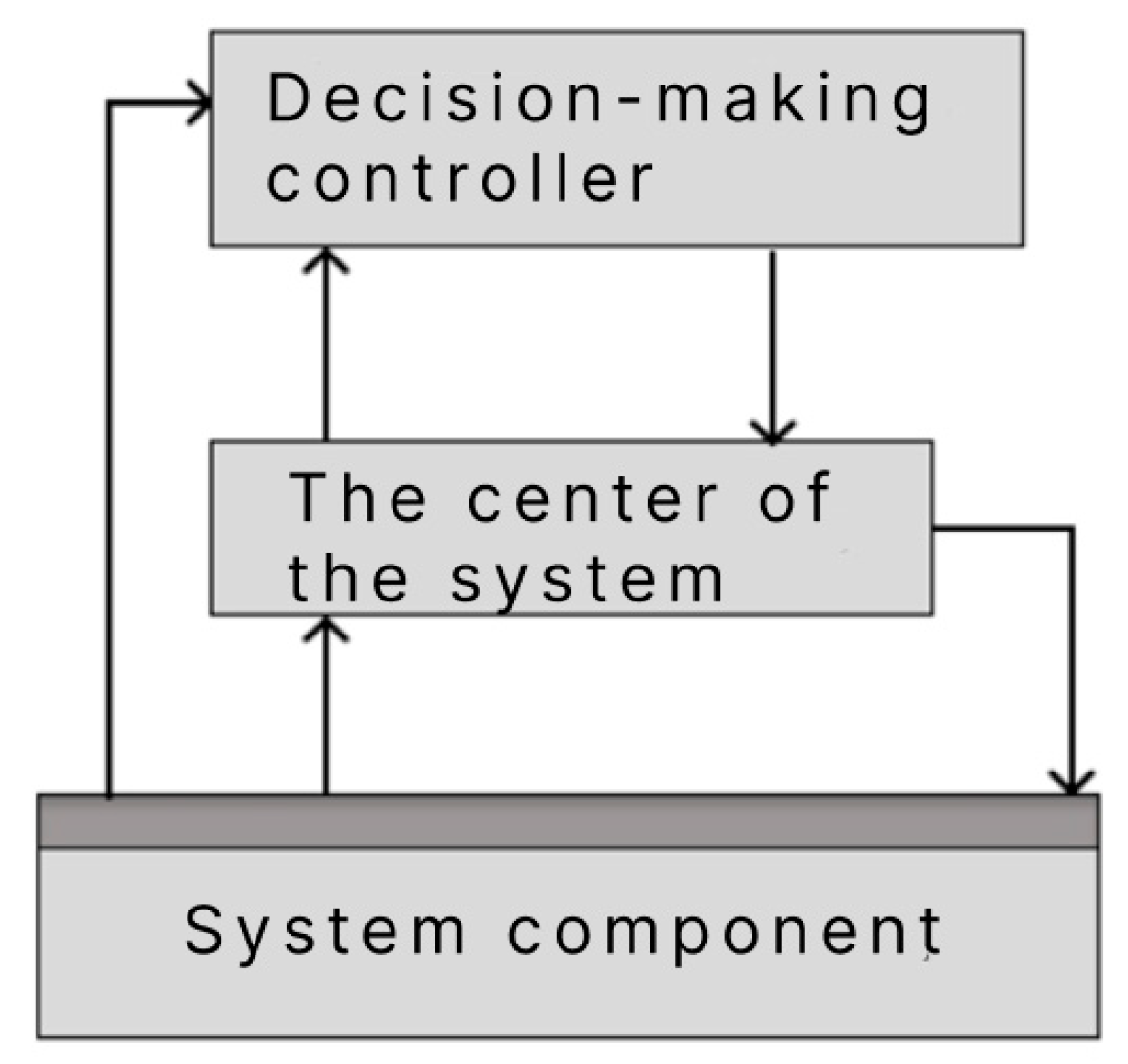

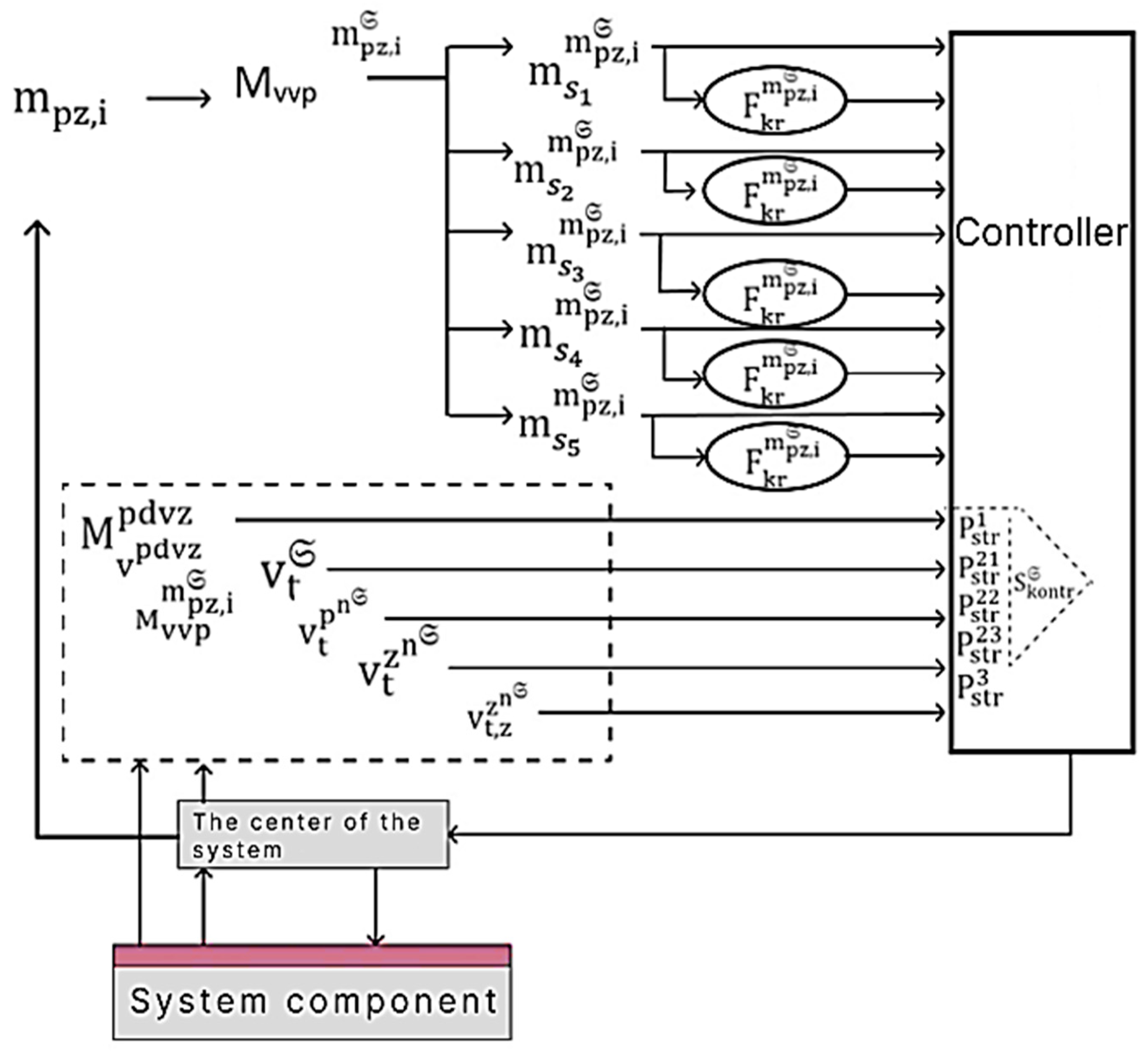

2.1. Method of Organizing the Functioning of the Decision-Making Controller

- (1) planned restructuring of the architecture of systems of this class;

- (2) restructuring of the architecture of systems of this class caused by external influences;

- (3) restructuring of the architecture of systems of this class caused by internal influences;

- (4) selecting the next centralization option in the architecture of systems of this class;

- (5) processing of events caused by external influences;

- (6) processing of events caused by internal influences;

- (7) restructuring of connections among system components outside the system center;

- (8) activation of individual baits and traps in systems of this class;

- (9) activation of combined anti-virus baits and traps in systems of this class;

- (10) disabling some components in systems of this class;

- (11) studying the results of baits and traps in systems of this class;

- (12) selection of the next components for the system center;

- (13) selection of the next components for the decision-making controller;

- (14) processing of events related to the emergency shutdown of computer stations hosting system components;

- (15) adding system components after the computer stations hosting them are turned on and assigning them the appropriate status for performing tasks;

- (16) removal of system components after the computer stations hosting them are correctly shut down and their task execution functions are transferred to other components;

- (17) formation of a system with its own center and controller in a separate, disconnected part of the entire system as a result of its disintegration;

- (18) formation of a system with a center and a controller from separate, unconnected parts of the entire system once a connection between the parts is established and the system becomes integral again;

- (19) setting the emergency state of the entire system or its parts;

- (20) setting the normal operating mode of the entire system or its parts.

- (1) reliability of the system center while the task option under consideration is in operation;

- (2) reliability of the entire system while the task option under consideration is in operation;

- (3) assessment of the quality of task performance using the option under consideration for the event that prompted its use;

- (4) assessment of the quality of all tasks performed by the system while the option under consideration was in use for the event that prompted its use.

- (1) selecting the option with the highest value of the objective function (Formula (7));

- (2) selecting the option with the lowest value of the objective function (Formula (7));

- (3) selecting the option with the second-lowest value of the objective function (Formula (7));

- (4) selecting the option with the third-lowest value of the objective function (Formula (7));

- (5) selecting the task option that has been repeated more often than the other options under consideration, according to the set of previous experience (Formula (6)) across all options;

- (6) selecting the task option that has been repeated least often among the options under consideration, according to the set of previous experience (Formula (6)) across all options;

- (7) selecting the task option with the second-highest number of repetitions among the options under consideration, according to the set of previous experience (Formula (6)) across all options;

- (8) randomly selecting one of two task options that have been repeated the same, smallest number of times among the options under consideration, according to the set of previous experience (Formula (6)) across all options;

- (9) randomly selecting one of two task options that have been repeated the same number of times among the options under consideration, according to the set of previous experience (Formula (6)) across all options;

- (10) selecting a task option that plans to involve components whose security level is higher than the system security level (Formulas (12) and (13));

- (11) selecting a task option that plans to involve components whose security level is higher than the system security level (Formulas (12) and (13)) or lower than it by no more than 10%;

- (12) selecting a task option that plans to involve components whose communication states, given by the coordinates of the vector in Formula (16), fully match those determined by the system;

- (13) selecting a task option that plans to involve components whose communication states, given by the coordinates of the vector in Formula (16), are below the values determined by the system but include at least two of the links defined in the corresponding vector coordinates;

- (14) selecting a task option that plans to involve components whose communication states, given by the coordinates of the vector in Formula (16), are below the values determined by the system but include at least half of the links defined in the corresponding vector coordinates.
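A few of the selection strategies listed above can be sketched in Python. This is only an illustration under stated assumptions: the `Option` class, the function names, and the `history` list (a stand-in for the set of previous experience) are hypothetical and do not come from the article. The objective values are those reported for event 1 in the first results table.

```python
from collections import Counter
from dataclasses import dataclass
import random


@dataclass(frozen=True)
class Option:
    """One candidate way of performing a task (hypothetical structure)."""
    number: int        # option number within the task
    objective: float   # value of the objective function for this option


def by_objective_rank(options, rank, descending=False):
    """Return the option at 0-based position `rank` when sorted by objective."""
    ordered = sorted(options, key=lambda o: o.objective, reverse=descending)
    return ordered[min(rank, len(ordered) - 1)]


def by_repeat_count(options, history, most=True):
    """Return the option chosen most (or least) often in `history`."""
    counts = Counter(history)  # missing option numbers count as 0
    pick = max if most else min
    return pick(options, key=lambda o: counts[o.number])


def random_among_least_repeated(options, history, rng):
    """Pick at random among the options tied for the fewest repetitions."""
    counts = Counter(history)
    fewest = min(counts[o.number] for o in options)
    tied = [o for o in options if counts[o.number] == fewest]
    return rng.choice(tied)


# Objective values of the five options for event 1 (first results table).
options = [Option(1, 0.0323), Option(2, 0.0281), Option(3, 0.0451),
           Option(4, 0.0386), Option(5, 0.0356)]
history = [3, 3, 1, 5, 3, 1]  # hypothetical record of earlier choices

highest = by_objective_rank(options, 0, descending=True)   # strategy (1)
lowest = by_objective_rank(options, 0)                     # strategy (2)
second_lowest = by_objective_rank(options, 1)              # strategy (3)
most_repeated = by_repeat_count(options, history)          # strategy (5)
tied_pick = random_among_least_repeated(options, history, random.Random(0))  # strategy (8)
```

With this data, strategy (1) picks option 3 and strategy (2) picks option 2, while strategy (8) draws between options 2 and 4, neither of which appears in the hypothetical history.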

- (1) Forming the decision-making controller in certain system components;

- (2) Restructuring the architecture of the decision-making controller;

- (3) Moving the decision-making controller to certain system components;

- (4) Establishing a connection between the components that host the current decision-making controller and the remaining system components;

- (5) Receiving input data from the system center and other system components: the i-th task, where i ranges over the tasks the system can perform (Formula (1)); the j-th option for performing the i-th task, where j ranges over the options available for that task (Formula (3)); the characteristics of previous experience with a task option, given by the indicators that characterize its previous use (Formula (5)); the set of previous experience (Formula (6)); the value of the objective function for the options under consideration (Formula (7)); the vector that records the current time since the start of system operation and the functional and cybersecurity levels of the computer stations hosting system components (Formula (12)); the vector of the functional and cybersecurity state of the system (Formula (13)); the vector representation of connections for each component (Formula (15)); the vector of the completeness of connections (Formula (16)); and the k-th strategy for selecting the next task option by the decision-making controller, where k ranges over the set of strategies (Formula (17));

- (6) Randomly selecting and applying one of the rules for forming a strategy for selecting the next task option by the decision-making controller (Formula (18));

- (7) Transmitting the selected task option to the system center and the remaining system components.
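Steps (5) to (7) above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the `DecisionController` class and the two strategy functions are hypothetical, and the rule of Formula (18) is reduced here to a uniform random draw over strategy numbers, which is an assumption made only for illustration.

```python
import random
from typing import Callable, Dict, List, Tuple

# (option number, objective value) pairs stand in for the input data of step (5).
Options = List[Tuple[int, float]]
Strategy = Callable[[Options], int]


def highest_objective(options: Options) -> int:
    """Return the number of the option with the highest objective value."""
    return max(options, key=lambda o: o[1])[0]


def lowest_objective(options: Options) -> int:
    """Return the number of the option with the lowest objective value."""
    return min(options, key=lambda o: o[1])[0]


class DecisionController:
    """Hypothetical controller that approves one task option per request."""

    def __init__(self, strategies: Dict[int, Strategy], seed=None):
        self.strategies = strategies
        self.rng = random.Random(seed)  # seeded only to make runs repeatable

    def decide(self, options: Options) -> dict:
        # Step (6): assumed rule, a uniform random draw of a strategy number.
        strategy_id = self.rng.choice(sorted(self.strategies))
        chosen = self.strategies[strategy_id](options)
        # Step (7): the result that would be sent to the system center.
        return {"strategy": strategy_id, "option": chosen}


controller = DecisionController({1: highest_objective, 2: lowest_objective}, seed=1)
result = controller.decide([(1, 0.0323), (2, 0.0281), (3, 0.0451)])
```

Because the strategy is drawn at random, an observer who sees the same event twice cannot rely on the system responding with the same option, which is the property the polymorphic responses aim for.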

2.2. Method of Organizing the Functioning of Multi-Computer Systems

- (1) Forming the current system (Formula (19)) from active components located in newly turned-on computer stations or in stations that were turned on earlier;

- (2) Continuously acquiring data on the operating time of the system and its components in order to form: the vector that records the current time since the start of system operation and the functional and cybersecurity levels of the computer stations hosting system components (Formula (12)); the vector of system characteristics over its entire operating time, sampled at certain time intervals (Formula (22)); the vector describing the functioning of the i-th component over the entire period of system operation, where i is the component number and ranges over the components in the system (Formula (23)); the matrix of the maximum time spent establishing a connection between two system components (Formula (24)); and the matrix of the average time spent establishing a connection between two system components (Formula (25));

- (3) Obtaining data for executing instructions from the system center and the decision-making controller in order to form the vector that records the current time since the start of system operation and the functional and cybersecurity levels of the computer stations hosting system components (Formula (12)) and the vector of the functional and cybersecurity state of the system (Formula (13));

- (4) Obtaining data for executing instructions from the system center and the decision-making controller in order to form the vector representation of connections for each component (Formula (15)) and the vector of the completeness of connections (Formula (16));

- (5) Detecting an event in the corporate network caused by external or internal influences, identifying it, and selecting tasks from the set of system tasks (Formula (1)) to respond to it;

- (6) Launching the system center to prepare options for performing the task selected in step 5;

- (7) Launching the decision-making controller to approve one of the task options prepared in step 6;

- (8) Handing over and executing the task option approved in step 7;

- (9) Removal by the system of those components for which the functional and cybersecurity levels of their computer stations (Formula (12)) and the functional and cybersecurity state of the system (Formula (13)) exceed the permissible values;

- (10) Performing steps 1 to 9 for each disconnected part of the system when the system splits;

- (11) Repeating steps 6 to 8 if step 8 fails;

- (12) Performing steps 1 to 4 if step 8 succeeds.
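The event-handling part of this procedure (prepare options, approve one, execute it, and repeat on failure) can be sketched as a small retry loop. The function `handle_event`, its callback parameters, and the `max_attempts` bound are assumptions introduced for the example. The source does not say how many repetitions are allowed.

```python
from typing import Callable, List, Optional


def handle_event(
    event: str,
    prepare_options: Callable[[str], List[int]],  # system center prepares options
    approve: Callable[[List[int]], int],          # decision-making controller approves one
    execute: Callable[[int], bool],               # execution; True means success
    max_attempts: int = 3,                        # assumed bound, not from the article
) -> Optional[int]:
    """Run prepare, approve, execute; repeat the cycle if execution fails."""
    for _ in range(max_attempts):
        options = prepare_options(event)
        chosen = approve(options)
        if execute(chosen):
            return chosen  # success: the system goes back to routine data collection
    return None            # every attempt failed


attempts = []


def flaky_execute(option: int) -> bool:
    """Hypothetical executor that fails on the first call and then succeeds."""
    attempts.append(option)
    return len(attempts) > 1


result = handle_event("external influence", lambda e: [1, 2, 3], max, flaky_execute)
```

Here the built-in `max` stands in for the controller, so option 3 is approved on both attempts and the second execution succeeds.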

3. Case Study

3.1. Methodology

- Consideration of the system’s previous operating experience and the use of execution variants when forming polymorphic responses to events;

- System stability;

- System responsiveness;

- System integrity;

- System security;

- Evaluation of the adopted decisions.

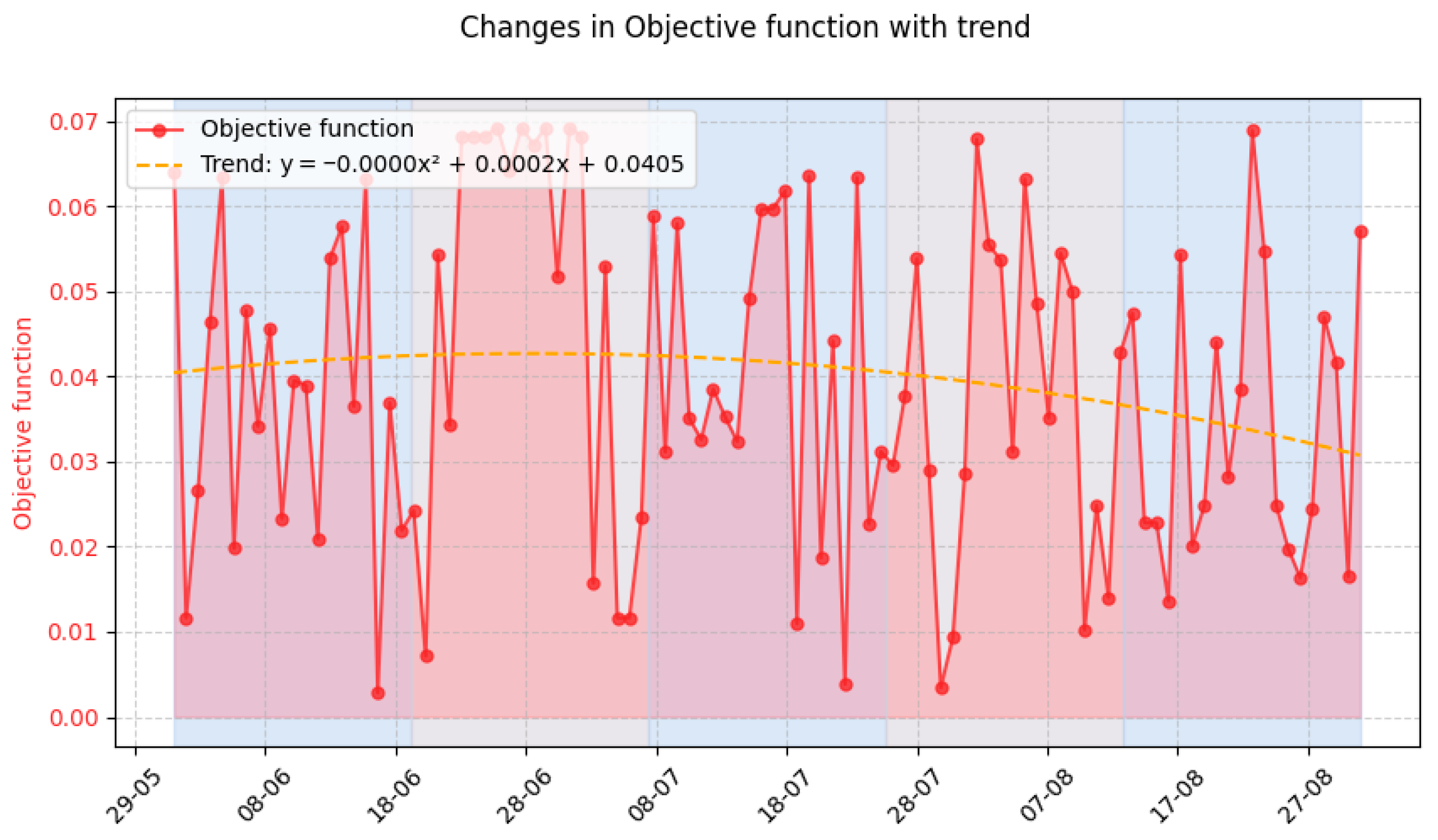

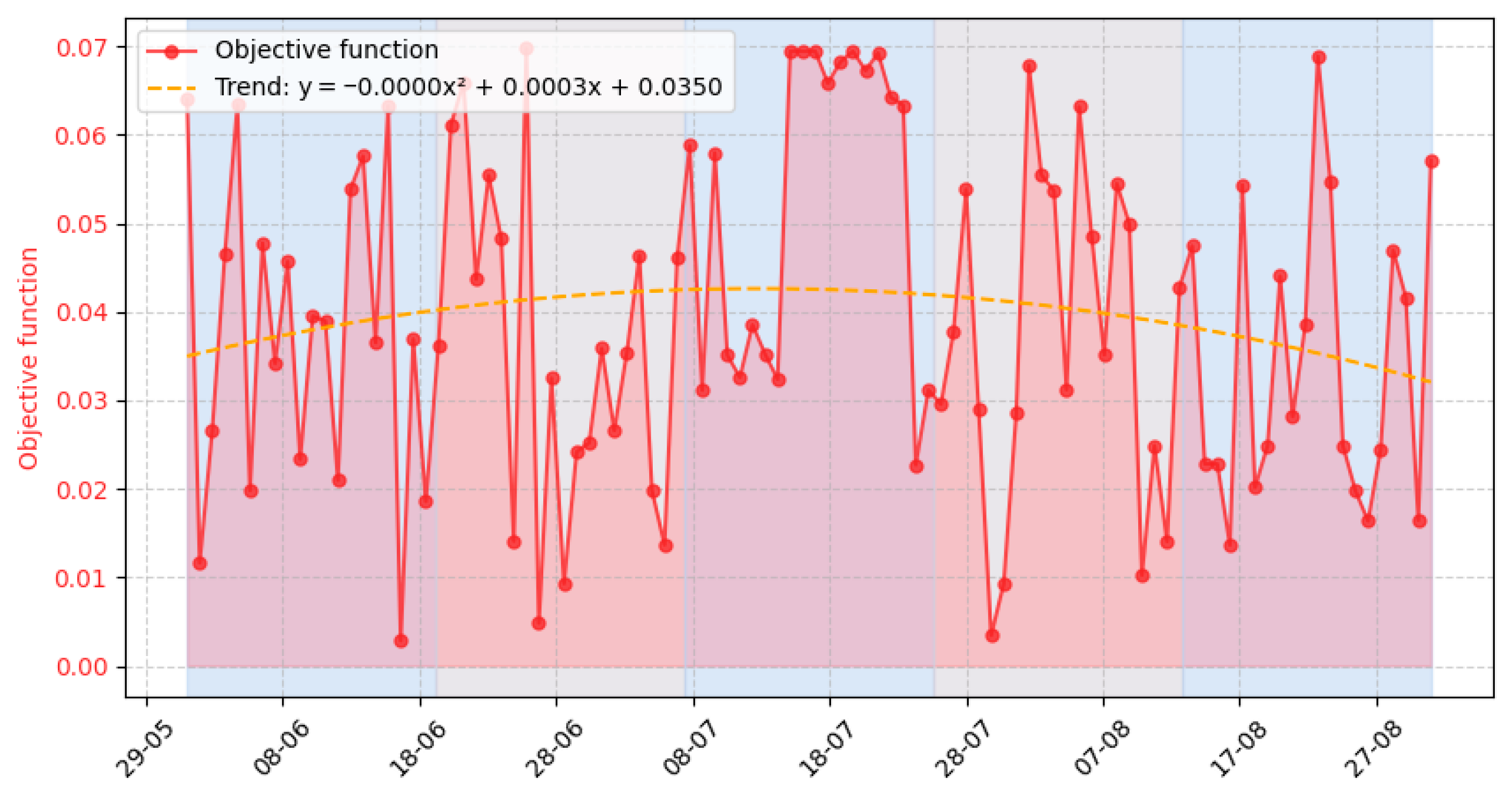

3.2. Conducting the Experiment

3.3. Experiment Results

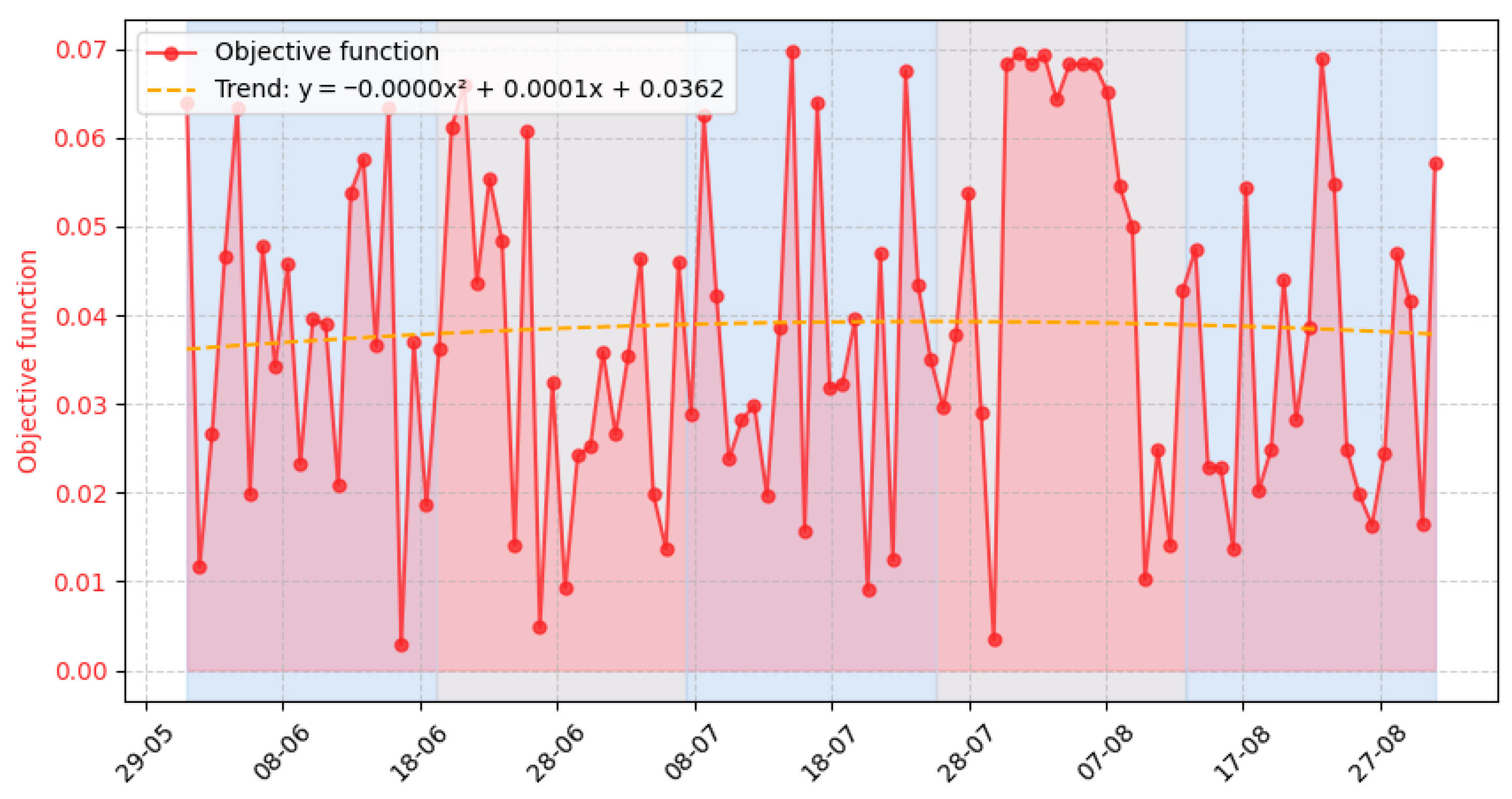

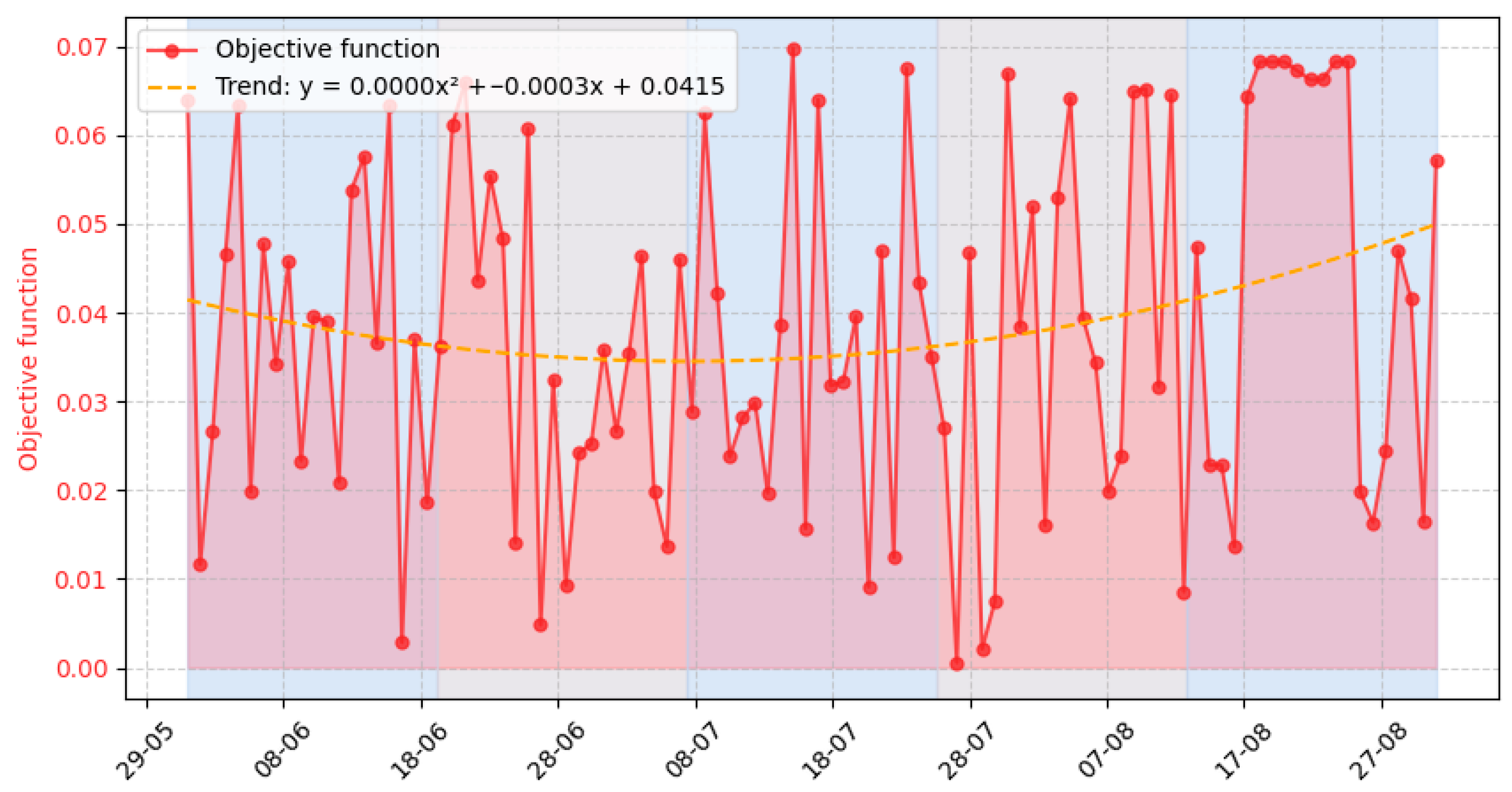

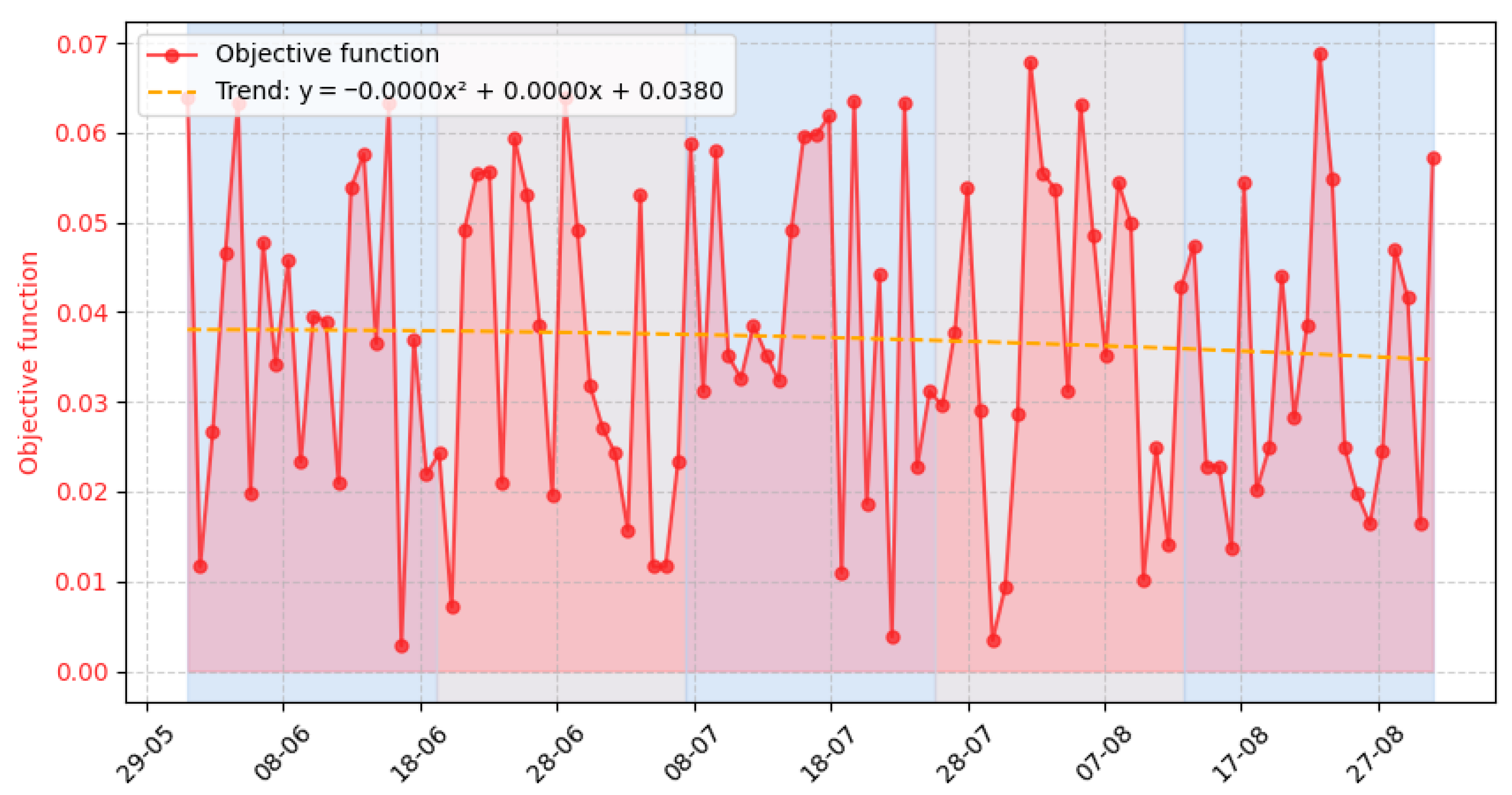

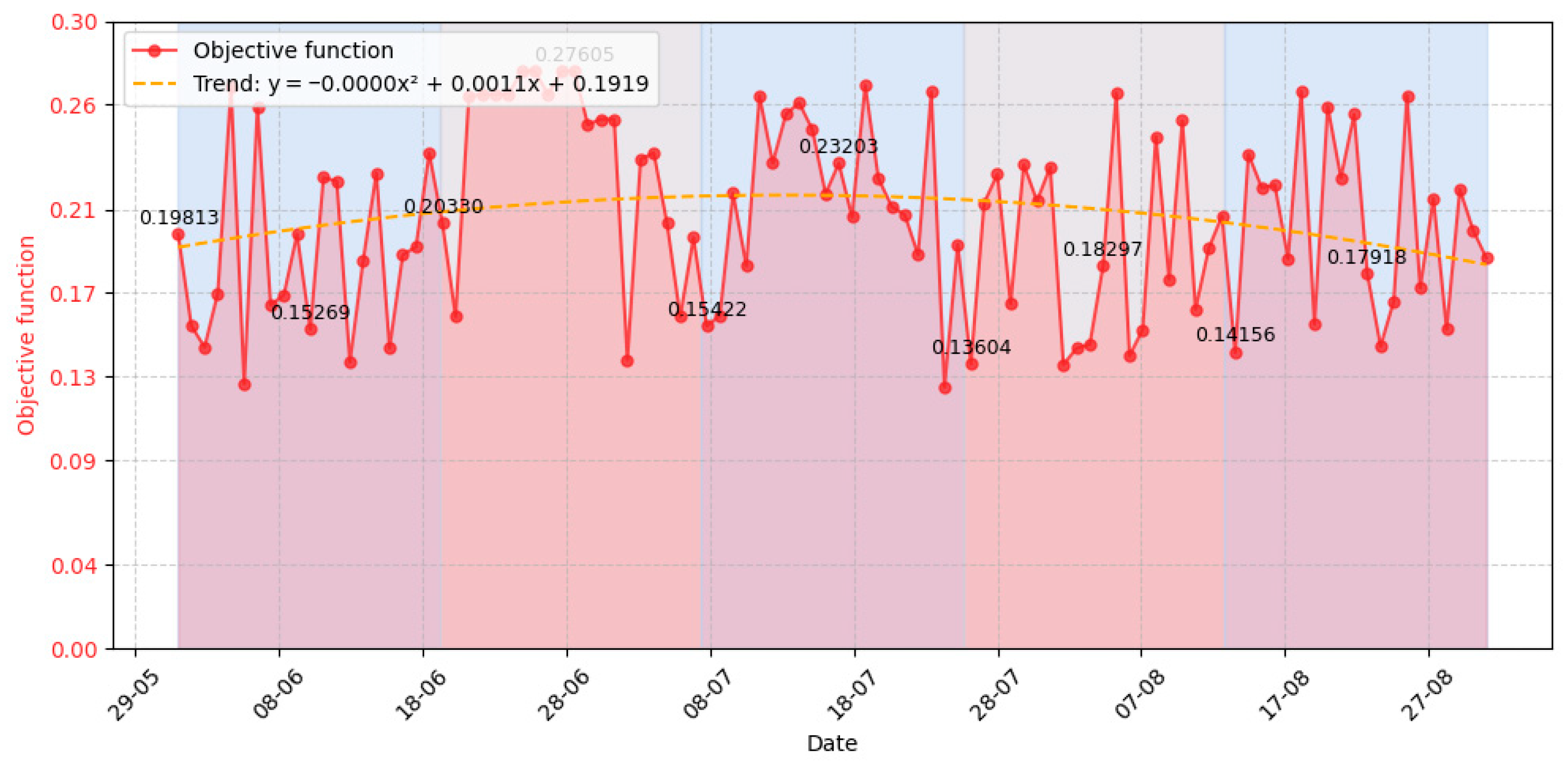

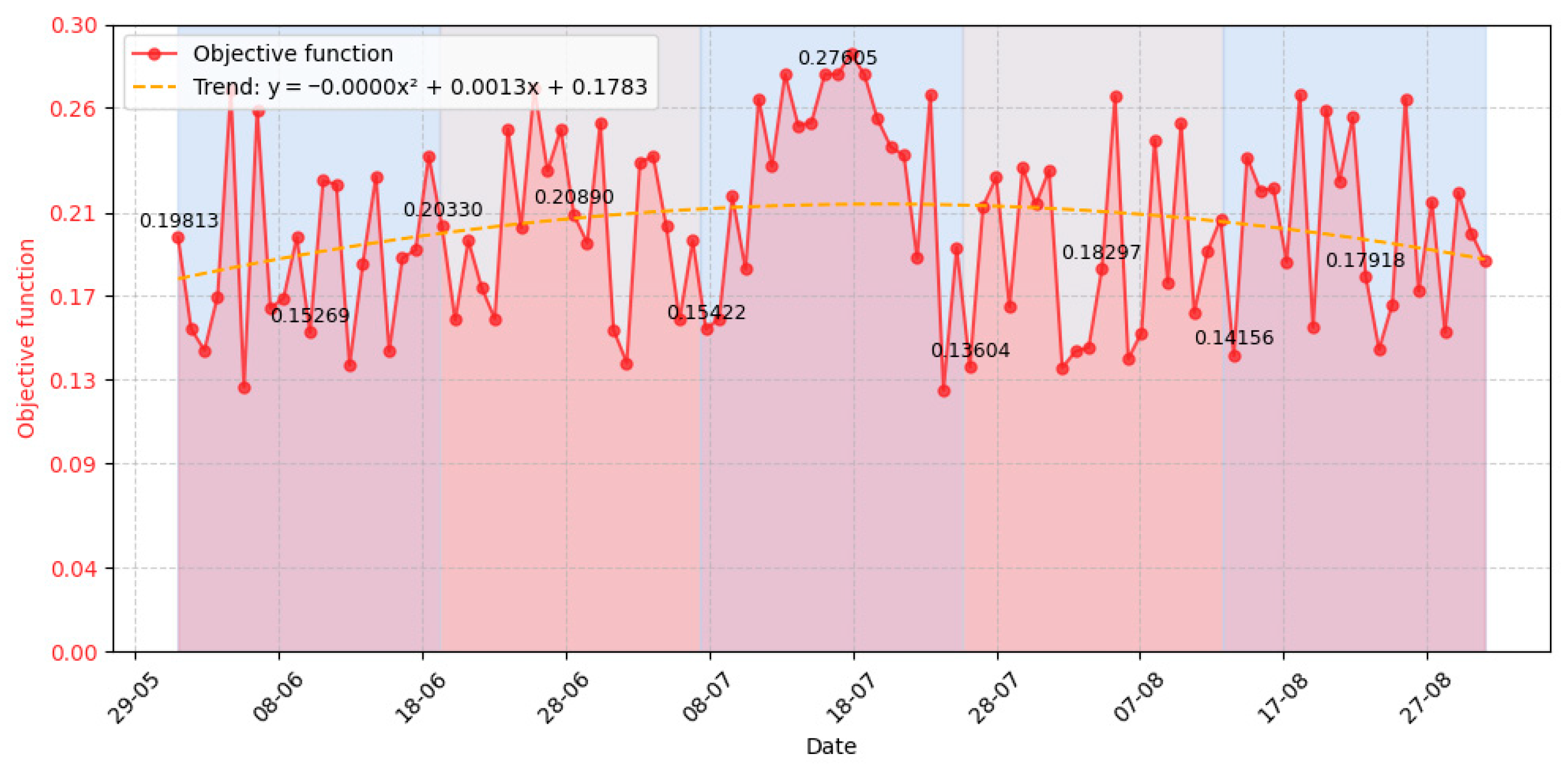

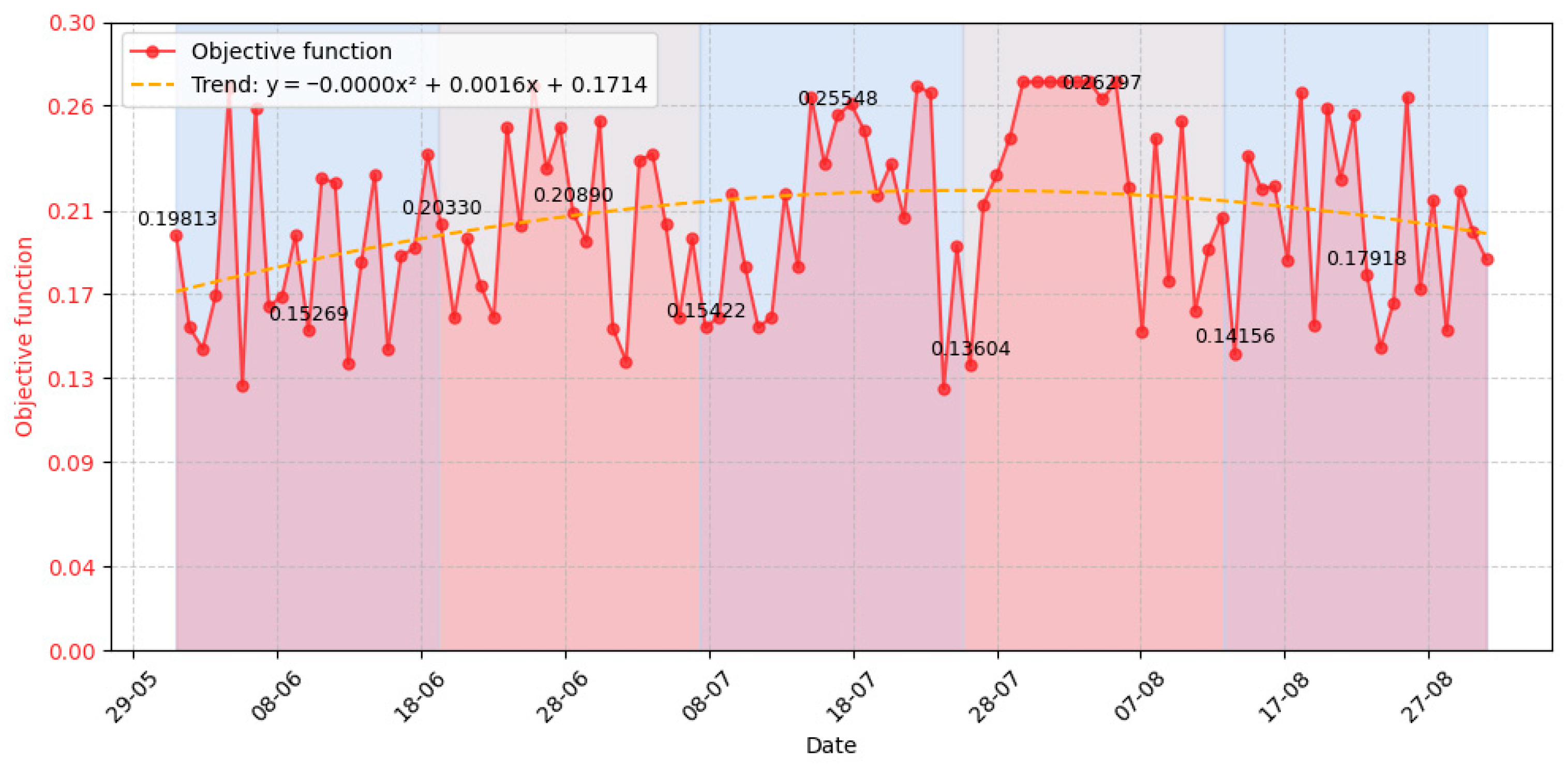

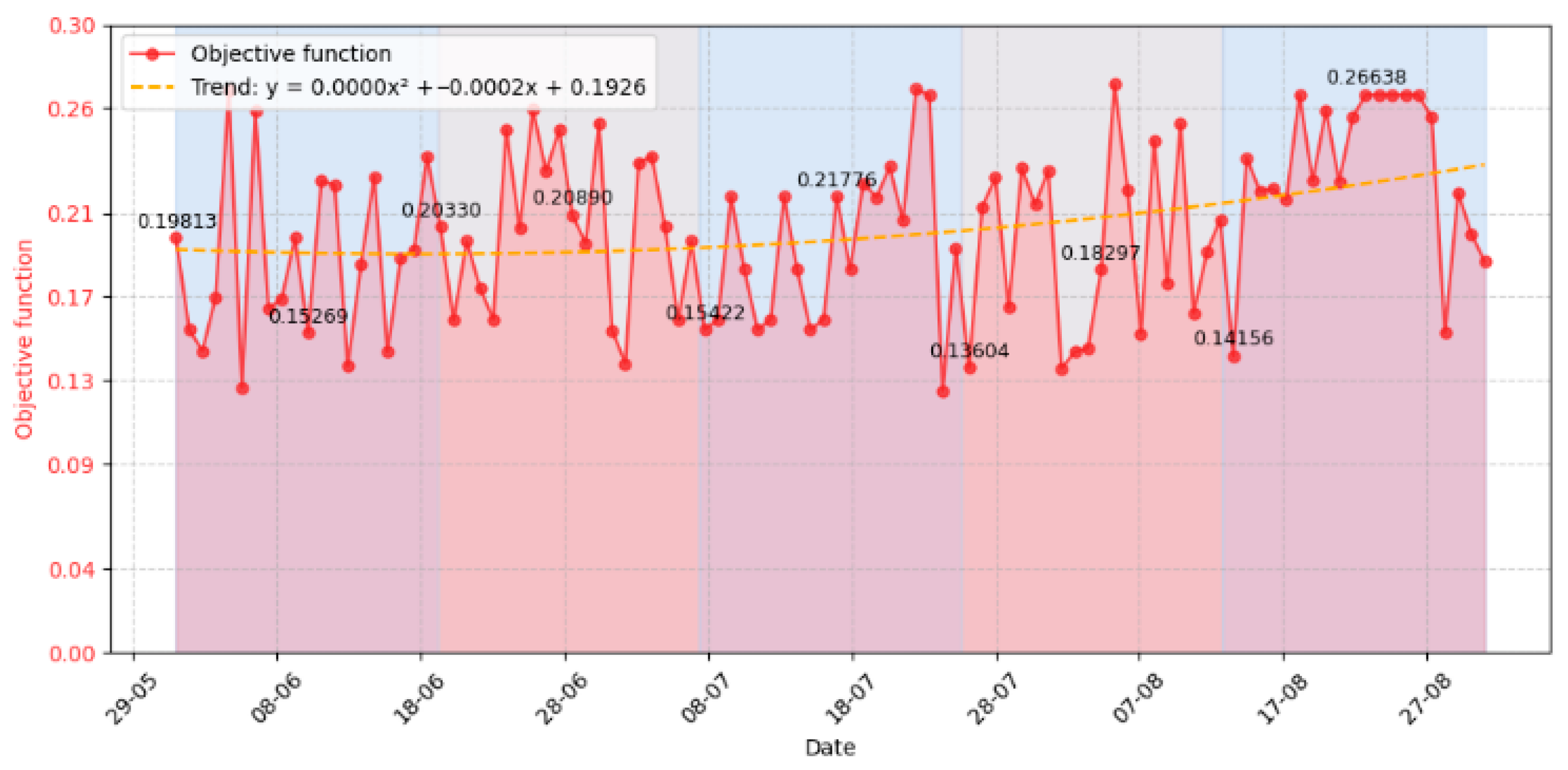

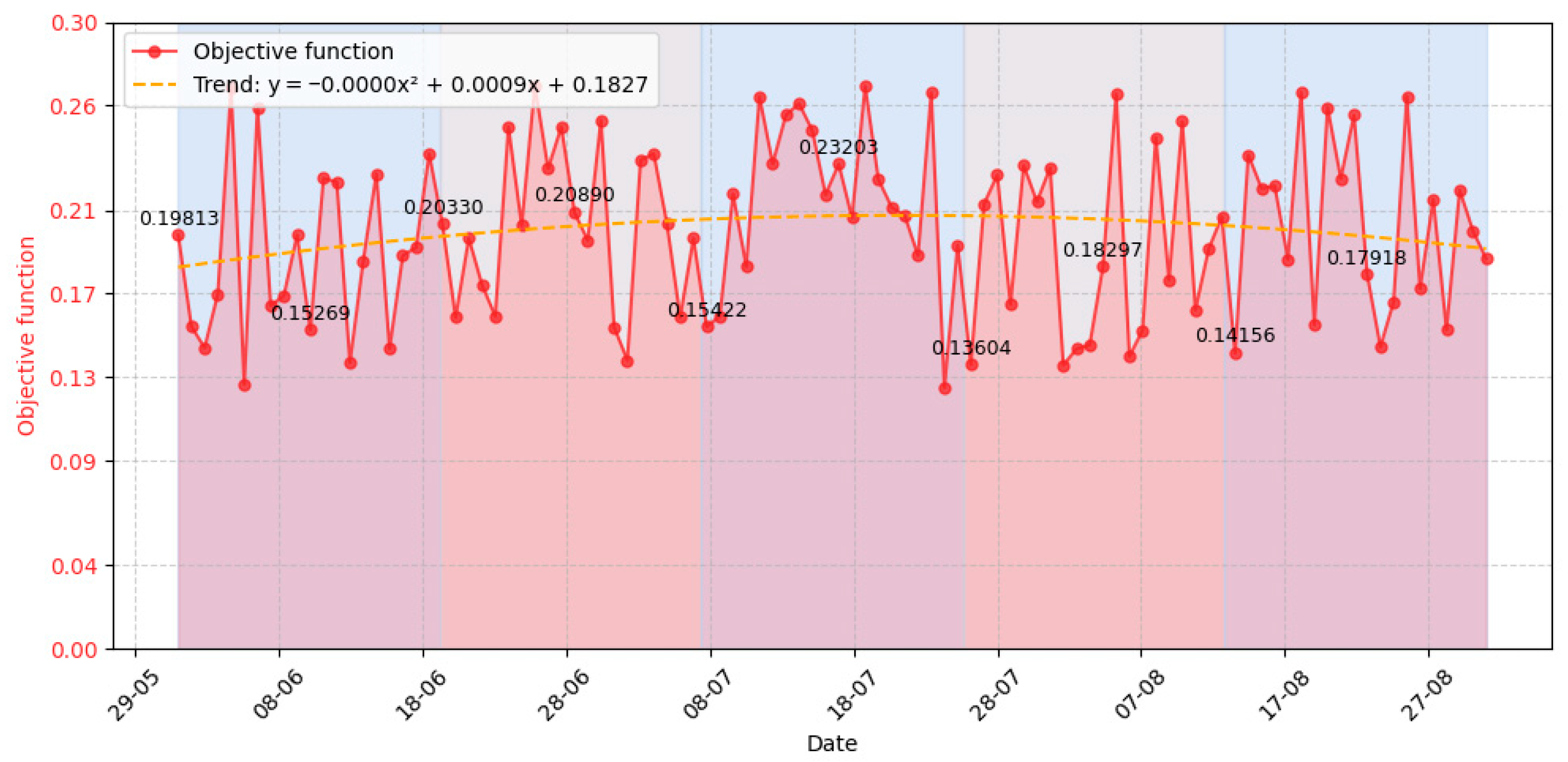

- Event number requiring the system’s response (column 1);

- Time of selection of task execution variants for responding to an event (column 2);

- Task completion time (column 3);

- Execution time (column 4);

- Task number that is called upon to respond to the specified event (column 5);

- Task execution variants determined for processing the event (column 6);

- Value of the function for the stability criterion (column 7);

- Value of the function for the responsiveness criterion (column 8);

- Value of the function for the integrity criterion (column 9);

- Value of the function for the security criterion (column 10);

- Value of the evaluation objective function for the task execution variants determined to process the event (column 11);

- Variant number of the chosen task execution variant (column 12);

- Repeat number of the task execution variant when processing repeated events over certain time intervals during system operation (column 13);

- Previous value of the objective function for the same task execution variant that recurred upon repeated occurrence of the same event (column 14);

- Execution time of the repeated task execution variant on the previous step relative to the current selection of that same variant (column 15);

- Strategy number applied to the chosen task execution variant (column 16);

- Rule number applied to the chosen task execution variant (column 17).
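The column definitions above suggest two relations that can be checked against the published rows: the execution time (column 4) equals the task completion time (column 3) minus the selection time (column 2), and, in the rows reproduced in the results tables, the objective value (column 11) matches the unweighted mean of the four criterion values (columns 7 to 10) to within rounding. The second relation is an observation about the tabulated numbers, not a statement of Formula (7). The `OptionRow` class and `is_consistent` function are hypothetical names used only for this check.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class OptionRow:
    selection_time: float    # column 2, s
    completion_time: float   # column 3, s
    execution_time: float    # column 4, s
    stability: float         # column 7
    responsiveness: float    # column 8
    integrity: float         # column 9
    security: float          # column 10
    objective: float         # column 11


def is_consistent(row: OptionRow, tol: float = 1e-4) -> bool:
    """Check both relations within a tolerance that absorbs table rounding."""
    criteria_mean = mean(
        [row.stability, row.responsiveness, row.integrity, row.security]
    )
    time_ok = abs(row.completion_time - row.selection_time - row.execution_time) < tol
    objective_ok = abs(criteria_mean - row.objective) < tol
    return time_ok and objective_ok


# Rows copied from the results tables: event 1, options 1 and 2 of the first
# table, and event 1 of the second table.
rows = [
    OptionRow(0.154, 0.599, 0.445, 0.0003, 0.0657, 0.0484, 0.0149, 0.0323),
    OptionRow(0.190, 0.346, 0.156, 0.0294, 0.0391, 0.0011, 0.0429, 0.0281),
    OptionRow(0.109, 0.312, 0.203, 0.1901, 0.2463, 0.1256, 0.1727, 0.1837),
]
all_consistent = all(is_consistent(r) for r in rows)
```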

4. Discussion

5. Conclusions and Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Denomination | Meaning |
|---|---|
| | Set of system tasks |
| | Set of potential options for performing the i-th task |
| | Total number of options for performing all tasks by the system |
| | Vector of previous experience in using a task execution option, given for each task from the set of tasks by the set of potential task options |
| | Set of vectors of options for performing the i-th task |
| | Set of previous experience in using all the options given for each task from the set of tasks |
| | Objective function for evaluating options for completing tasks |
| | Definition of criterion i |
| | Set of indicators that characterize the functional and cybersecurity of computer stations and the system components in them |
| | Set of functions for determining the level of functional and cybersecurity of a computer station and the system components in it |
| | Determining the values of functions from the set |
| | Vector of functional and cybersecurity levels of all components |
| | Vector of the functional and cybersecurity state of the system |
| | Vector of the level of communication between components in the system |
| | Vector of the completeness of the representation of connections for each component |
| | Vector for determining the completeness of connections, whose coordinates express the ratio of the existing connections of the i-th component to the number of connections determined for it by the system |
| | Set of strategies for choosing the next task option by the decision-making controller |
| | Designation of rules |
| | Vector of system characteristics during the entire time of its operation according to the definition of all vectors |
| | Vector of system characteristics during the entire time of its operation according to the definition of all vectors |
| | Vector describing the functioning of a component, indexed by component number, during the entire time of system operation |
| | Matrix of the maximum time spent establishing communication between two components of the system |
| | Matrix of the average time spent establishing communication between two components of the system |

References

- Breeden, J. 5 Top Deception Tools and How They Ensnare Attackers. CSO Online 2025. Retrieved 6 February 2025. Available online: https://www.csoonline.com/article/570063/5-top-deception-tools-and-how-they-ensnare-attackers.html (accessed on 12 September 2025).

- Labyrinth Deception Platform. Labyrinth Tech 2025. Retrieved 6 February 2025. Available online: https://labyrinth.tech/platform (accessed on 12 September 2025).

- Kashtalian, A.; Lysenko, S.; Savenko, B.; Sochor, T.; Kysil, T. Principle and method of deception systems synthesizing for malware and computer attacks detection. Radioelectron. Comput. Syst. 2023, 4, 112–151.

- Kashtalian, A.; Lysenko, S.; Savenko, O.; Nicheporuk, A.; Sochor, T.; Avsiyevych, V. Multi-computer malware detection systems with metamorphic functionality. Radioelectron. Comput. Syst. 2024, 2024, 152–175.

- Savenko, B.; Kashtalian, A.; Lysenko, S.; Savenko, O. Malware detection by distributed systems with partial centralization. In Proceedings of the 2023 IEEE 12th International Conference on Intelligent Data Acquisition and Advanced Computing Systems: Technology and Applications (IDAACS), Dortmund, Germany, 7–9 September 2023; pp. 265–270.

- Proofpoint Identity Threat Defense. Proofpoint 2025. Retrieved 6 February 2025. Available online: https://www.proofpoint.com/us/illusive-is-now-proofpoint (accessed on 12 September 2025).

- The Commvault Data Protection Platform. Commvault 2025. Retrieved 6 February 2025. Available online: https://www.commvault.com/ (accessed on 12 September 2025).

- SentinelOne. SentinelOne 2025. Retrieved 6 February 2025. Available online: https://www.sentinelone.com/surfaces/identity/ (accessed on 12 September 2025).

- Counter Craft Security. CounterCraft 2025. Retrieved 26 January 2025. Available online: https://www.countercraftsec.com/ (accessed on 12 September 2025).

- Fidelis Security. Fidelis Security 2025. Retrieved 6 February 2025. Available online: https://fidelissecurity.com/fidelis-elevate/ (accessed on 12 September 2025).

- Acosta, J.C.; Basak, A.; Kiekintveld, C.; Kamhoua, C. Lightweight on-demand honeypot deployment for cyber deception. Lect. Notes Inst. Comput. Sci. Soc. Inform. Telecommun. Eng. 2022, 441, 294–312.

- Katakwar, H.; Aggarwal, P.; Maqbool, Z.; Dutt, V. Influence of network size on adversarial decisions in a deception game involving honeypots. Front. Psychol. 2020, 11, 535803.

- Anwar, A.H.; Zhu, M.; Wan, Z.; Cho, J.-H.; Kamhoua, C.A.; Singh, M.P. Honeypot-based cyber deception against malicious reconnaissance via hypergame theory. In Proceedings of the IEEE GLOBECOM, Rio de Janeiro, Brazil, 4–8 December 2022; pp. 3393–3398.

- Wang, H.; Wu, B. SDN-based hybrid honeypot for attack capture. In Proceedings of the 2019 IEEE 3rd Information Technology, Networking, Electronic and Automation Control Conference (ITNEC), Chengdu, China, 15–17 March 2019; pp. 1602–1606.

- Zhang, L.; Thing, V.L.L. Three decades of deception techniques in active cyber defense—Retrospect and outlook. Comput. Secur. 2021, 106, 102288.

- Oluoha, U.O.; Yange, T.S.; Okereke, G.E.; Bakpo, F.S. Cutting edge trends in deception-based intrusion detection systems—A survey. J. Inf. Secur. 2021, 12, 250–269.

- Han, X.; Kheir, N.; Balzarotti, D. Deception techniques in computer security. ACM Comput. Surv. (CSUR) 2018, 51, 1–36.

- Trassare, S.T. A Technique for Presenting a Deceptive Dynamic Network Topology. Semantic Scholar 2023. Available online: https://www.semanticscholar.org/paper/A-Technique-for-Presenting-a-Deceptive-Dynamic-Trassare-Monterey/2d976496fff27f6b7d3fdd4076a353070be12342 (accessed on 12 September 2025).

- Sharma, S.; Kaul, A. A survey on intrusion detection systems and honeypot-based proactive security mechanisms in VANETs and VANET Cloud. Veh. Commun. 2018, 12, 138–164.

- Baykara, M.; Das, R. A novel honeypot-based security approach for real-time intrusion detection and prevention systems. J. Inf. Secur. Appl. 2018, 41, 103–116.

- Rajendran, P.; Thakur, R.S. Design and implementation of intelligent security framework using hybrid intrusion detection and prevention system. Comput. Electr. Eng. 2016, 56, 456–473.

- Parveen, S.; Khan, M.A.; Mirza, A.H. A comprehensive survey of honeypot-based cybersecurity threat detection and mitigation tools: A taxonomy and future directions. J. Netw. Comput. Appl. 2021, 182, 103036.

- Zhu, Y.; Yu, L.; Liu, Y.; Xu, Z. Honeypot-based moving target defense mechanism for industrial internet of things. IEEE Trans. Ind. Inform. 2023, 19, 2174–2183.

- Mitchell, R.; Chen, R. A survey of intrusion detection techniques for cyber-physical systems. ACM Comput. Surv. (CSUR) 2014, 46, 55.

- Zarpelão, J.; Miani, R.; Kawakani, C.; de Alvarenga, S. A survey of intrusion detection in Internet of Things. J. Netw. Comput. Appl. 2017, 84, 25–37.

- Modi, K.; Patel, U.; Borisaniya, B.; Patel, A.; Rajarajan, M. A survey of intrusion detection techniques in cloud. J. Netw. Comput. Appl. 2013, 36, 42–57.

- Modi, S.; Dave, V.; Shinde, S. A survey of intrusion detection techniques using machine learning. Int. J. Sci. Res. Comput. Sci. Eng. Inf. Technol. 2018, 3, 670–675.

- Zhang, Y.; Zheng, Z.; Chen, X. A survey on anomaly detection methods in industrial control systems. Comput. Secur. 2021, 106, 102280.

- Scalas, M.; Gasparini, M.; Cencetti, G.; Bondavalli, A. A review of anomaly detection systems in networked embedded systems. Sensors 2020, 20, 6490.

- Jalowski, Ł.; Zmuda, M.; Rawski, M. A Survey on Moving Target Defense for Networks: A Practical View. Electronics 2022, 11, 2886.

- Vakilinia, A.; Sharif, R.; Liu, D. Modeling and analysis of deception games using dynamic Bayesian networks. In Proceedings of the 2017 IEEE 2nd International Conference on Connected and Autonomous Driving (MetroCAD), Brussels, Belgium, 3–4 April 2017; pp. 86–91.

- Wu, K.; Tan, L.; Xia, Y.; Xie, M. Cyber deception-based defense against DDoS attack: A game theoretical approach. Comput. Secur. 2019, 87, 101580.

- Liang, X.; Xiao, Y. Game theory for network security. IEEE Commun. Surv. Tutor. 2013, 15, 472–486.

- Morić, Z.; Dakić, V.; Regvart, D. Advancing Cybersecurity with Honeypots and Deception Strategies. Informatics 2025, 12, 14.

- Liu, C.; Wang, W.; Zhang, Y.; Chen, J.; Guan, X. Moving target defense for web applications using Bayesian Stackelberg game model. Comput. Netw. 2022, 209, 108914.

- Hu, H.; Cao, L.; Liu, Y.; Wang, J. Defending against DDoS attacks based on cyber deception and game theory. IEEE Access 2020, 8, 170174–170184.

- Fu, X.; Qin, Y.; Yang, B. Cyber deception-based DDoS defense mechanism using Bayesian game theory. J. Netw. Comput. Appl. 2021, 177, 102949.

- Naghmouchi, M.; Boudriga, H.; Laurent, M. Cyber deception and defense modeling in a cloud environment: A game-theoretic approach. In Proceedings of the 2019 International Conference on Cyber Security and Protection of Digital Services (Cyber Security), Oxford, UK, 3–4 June 2019; pp. 1–6.

- Han, Z.; Marina, N.; Debbah, M.; Hjørungnes, A. Game Theory in Wireless and Communication Networks: Theory, Models, and Applications; Cambridge University Press: Cambridge, UK, 2011.

- Alpcan, T.; Başar, T. Network Security: A Decision and Game Theoretic Approach; Cambridge University Press: Cambridge, UK, 2010; p. 332.

- Manshaei, M.; Zhu, Q.; Alpcan, T.; Başar, T.; Jean-Pierre, H. Game theory meets network security and privacy. ACM Comput. Surv. (CSUR) 2013, 45, 25.

- QLiu, C.; Li, X. Game-theoretic analysis of cyber deception: Evidence-based strategies and implications. IEEE Access 2018, 6, 60101–60110.

- Yang, K.; Wu, H.; Sun, J. A survey on cyber deception: Motivation, taxonomy, and challenges. IEEE Access 2020, 8, 229555–229578.

- Wang, Y.; Liu, X.; Yu, X. Research on Joint Game-Theoretic Modeling of Network Attack and Defense Under Incomplete Information. Entropy 2025, 27, 892.

- Kashtalian, A.; Lysenko, S.; Sachenko, A.; Savenko, B.; Savenko, O.; Nicheporuk, A. Evaluation criteria of centralization options in the architecture of multicomputer systems with traps and baits. Radioelectron. Comput. Syst. 2025, 1, 264–297.

- Kashtalian, A.; Lysenko, S.; Kysil, T.; Sachenko, A.; Savenko, O.; Savenko, B. Method and Rules for Determining the Next Centralization Option in Multicomputer System Architecture. Int. J. Comput. 2025, 24, 35–51.

- Savenko, O.; Sachenko, A.; Lysenko, S.; Markowsky, G.; Vasylkiv, N. Botnet detection approach based on the distributed systems. Int. J. Comput. 2020, 19, 190–198.

- Anđelić, N.; Baressi Šegota, S.; Car, Z. Improvement of Malicious Software Detection Accuracy through Genetic Programming Symbolic Classifier with Application of Dataset Oversampling Techniques. Computers 2023, 12, 242.

- Kamdan; Pratama, Y.; Munzi, R.S.; Mustafa, A.B.; Kharisma, I.L. Static Malware Detection and Classification Using Machine Learning: A Random Forest Approach. Eng. Proc. 2025, 107, 76.

- Wang, P.; Li, H.-C.; Lin, H.-C.; Lin, W.-H.; Xie, N.-Z. A Transductive Zero-Shot Learning Framework for Ransomware Detection Using Malware Knowledge Graphs. Information 2025, 16, 458.

- Alshomrani, M.; Albeshri, A.; Alturki, B.; Alallah, F.S.; Alsulami, A.A. Survey of Transformer-Based Malicious Software Detection Systems. Electronics 2024, 13, 4677.

- Gyamfi, N.K.; Goranin, N.; Ceponis, D.; Čenys, H.A. Automated System-Level Malware Detection Using Machine Learning: A Comprehensive Review. Appl. Sci. 2023, 13, 11908.

- Komar, M.; Golovko, V.; Sachenko, A.; Bezobrazov, S. Intelligent system for detection of networking intrusion. In Proceedings of the 6th IEEE International Conference on Intelligent Data Acquisition and Advanced Computing Systems, Prague, Czech Republic, 15–17 September 2011; pp. 374–377.

- Balyk, A.; Karpinski, M.; Naglik, A.; Shangytbayeva, G.; Romanets, I. Using Graphic Network Simulator 3 for Ddos Attacks Simulation. Int. J. Comput. 2017, 16, 219–225.

| No. | Features of Deception Technologies | Source |
|---|---|---|
| 1 | Common network technologies implemented in deception systems | [1,2,13,16,17,25,27,28,33,36,37,39,44,45,46,51,52,53] |
| 2 | Use of historical data in deception systems | [3,4] |
| 3 | Providing different options for responding to repetitive actions of intruders in the architecture of systems | [3,4] |
| 4 | Providing polymorphic responses to repetitive actions of intruders in the architecture of systems | [3] |
| 5 | Use of deceptive systems of baits and traps | [3] |
| 6 | Imitation of services and context of the real part of the network | [2] |
| 7 | Dynamic traffic redirection | [11,23,38] |
| 8 | Use of deception games | [12,50] |
| 9 | Combined lures (high and low levels of interaction) | [14,19,20] |
| 10 | Moving target defense | [15] |
| 11 | Misleading topology | [18] |
| 12 | Baits for industrial control systems | [21,26,35,41] |
| 13 | Virtual network for each host of the corporate network | [24,31,34] |
| 14 | Intelligent decoy network based on software-defined networks | [30] |
| 15 | Containerization techniques for dynamically creating decoy networks | [32] |
| 16 | Web-based cyber deception system (based on Docker) | [40,43] |
| 17 | Automated decoy network deployment system for active protection of container-based cloud environments | [42] |

| № | Time for Choosing Options for Completing Tasks, s | Task Completion Time, s | Execution Time, s | Task Number | Options for Completing the Task | System Stability | System Responsiveness | System Integrity | System Security | Value of the Target Assessment Function | Option Number 1–5 | Variant Replay Number | Previous Value of the Target Function | Execution Time | Strategy | Rule |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 |
| 1 | 0.154 | 0.599 | 0.445 | 1 | 1 | 0.0003 | 0.0657 | 0.0484 | 0.0149 | 0.0323 | | | | | 8 | 1.1 |
| | 0.190 | 0.346 | 0.156 | | 2 | 0.0294 | 0.0391 | 0.0011 | 0.0429 | 0.0281 | | | | | 5 | 3.1 |
| | 0.156 | 0.568 | 0.412 | | 3 | 0.0672 | 0.0294 | 0.0289 | 0.0551 | 0.0451 | 3 | | | | 4 | 2.3 |
| | 0.168 | 0.363 | 0.195 | | 4 | 0.0696 | 0.0633 | 0.0161 | 0.0056 | 0.0386 | | | | | 11 | 2.3 |
| | 0.153 | 0.350 | 0.197 | | 5 | 0.0402 | 0.0234 | 0.0162 | 0.0625 | 0.0356 | | | | | 2 | 2.2 |
| … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … |
| 34 | 0.136 | 0.593 | 0.457 | 3 | 1 | 0.0294 | 0.0563 | 0.0353 | 0.0418 | 0.0407 | | | 0.0281 | 0.226 | 3 | 2.1 |
| | 0.154 | 0.519 | 0.365 | | 2 | 0.0181 | 0.0304 | 0.0031 | 0.0226 | 0.0185 | 2 | | 0.0398 | 0.303 | 6 | 2.2 |
| | 0.127 | 0.559 | 0.432 | | 3 | 0.0143 | 0.0154 | 0.0237 | 0.0368 | 0.0226 | 3 | | 0.0427 | 0.173 | 4 | 2.3 |
| | 0.184 | 0.473 | 0.289 | | 4 | 0.0437 | 0.0005 | 0.0673 | 0.0390 | 0.0376 | | | 0.0238 | 0.350 | 8 | 2.3 |
| | 0.147 | 0.436 | 0.289 | | 5 | 0.0497 | 0.0227 | 0.0090 | 0.0419 | 0.0308 | | | 0.0364 | 0.408 | 4 | 2.3 |
| … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … |
| 100 | 0.143 | 0.545 | 0.402 | 7 | 1 | 0.0536 | 0.0437 | 0.0352 | 0.0371 | 0.0424 | 1 | | 0.0243 | 0.263 | 14 | 3.1 |
| | 0.102 | 0.460 | 0.358 | | 2 | 0.0300 | 0.0397 | 0.0473 | 0.0670 | 0.0460 | | | 0.0107 | 0.346 | 8 | 1.1 |
| | 0.166 | 0.549 | 0.383 | | 3 | 0.0011 | 0.0029 | 0.0563 | 0.0152 | 0.0188 | | | 0.0308 | 0.395 | 5 | 2.2 |
| | 0.104 | 0.367 | 0.263 | | 4 | 0.0503 | 0.0113 | 0.0580 | 0.0122 | 0.0330 | 4 | | 0.0305 | 0.177 | 1 | 1.1 |
| | 0.134 | 0.435 | 0.301 | | 5 | 0.0525 | 0.0014 | 0.0355 | 0.0082 | 0.0244 | | | 0.0330 | 0.215 | 3 | 2.3 |

| Average Execution Time, s | Task Number–Count | Average Value of Stability | Average Value of Efficiency | Average Value of Integrity | Average Value of Security | Average Value of Objective Function | Variant Number 1–5 | Repeat Number of the Variant | Number of Selected Rules | Number of Selected Strategies–Count |
|---|---|---|---|---|---|---|---|---|---|---|
| 156 | 1–22 2–8 3–16 4–14 5–24 6–9 7–7 | 6-3-5-2-6 0-3-1-2-2 4-1-6-3-2 5-2-0-4-3 7-3-5-2-7 1-3-1-1-3 0-2-1-3-1 | 0.0683 | 0.0368 | 0.0389 | 0.0361 | 0.0359 | 1–18 2–22 3–35 4–5 5–20 | 1–18 2–22 3–34 4–5 5–20 | 1–47 2–33 3–39 4–28 5–42 6–55 7–36 8–31 9–24 10–38 11–41 12–29 13–19 14–37 |

| № | Time to Choose Options for Completing Tasks, s | Time to Complete Tasks, s | Time of Execution, s | Task Number | Options for Completing a Task | System Stability | System Responsiveness | System Integrity | System Security | Value of the Objective Function | Variant Number 1–5 | Variant Repeat Number | Previous Value of the Objective Function | Execution Time | Strategy | Rule |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 |
| 1 | 0.109 | 0.312 | 0.203 | 1 | 1 | 0.1901 | 0.2463 | 0.1256 | 0.1727 | 0.1837 | 1 | | | | 4 | 2.1 |
| … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … |
| 34 | 0.145 | 0.317 | 0.172 | 6 | 1 | 0.2024 | 0.1207 | 0.1932 | 0.1980 | 0.1786 | 1 | 1 | 0.1733 | 37.8 | 12 | 2.1 |
| … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … |
| 100 | 0.156 | 0.471 | 0.315 | 7 | 1 | 0.2164 | 0.1572 | 0.1659 | 0.2536 | 0.1983 | 1 | 1 | 0.1227 | 34.1 | 6 | 3.1 |

| Average Execution Time, s | Task Number–Count | Average Value of Stability | Average Value of Efficiency | Average Value of Integrity | Average Value of Security | Average Value of Objective Function | Variant Number 1–5 | Variant Repetition Number | Number of Selected Rules | Number of Selected Strategies |
|---|---|---|---|---|---|---|---|---|---|---|
| 0.248 | 1–18 2–12 3–15 4–13 5–20 6–11 7–11 | 0.2049 | 0.1966 | 0.1964 | 0.1916 | 0.1974 | 1–100 | 1–99 | 1–8 2–6 3–9 4–4 5–7 6–10 7–8 8–6 9–4 10–7 11–9 12–5 13–3 14–13 | 1.1–13 2.1–11 2.2–14 2.3–9 3.1–16 3.2–37 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kashtalian, A.; Ścisło, Ł.; Rucki, R.; Lysenko, S.; Sachenko, A.; Savenko, B.; Savenko, O.; Nicheporuk, A. Control and Decision-Making in Deceptive Multi-Computer Systems Based on Previous Experience for Cybersecurity of Critical Infrastructure. Appl. Sci. 2025, 15, 12286. https://doi.org/10.3390/app152212286
