Artificial Intelligence Algorithms for Epiretinal Membrane Detection, Segmentation and Postoperative BCVA Prediction: A Systematic Review and Meta-Analysis

Abstract

1. Introduction

2. Materials and Methods

2.1. Eligibility Criteria

2.2. Information Sources, Search Strategy and Study Selection

2.3. Data Extraction

2.4. Quality Assessment

2.5. Statistical Analysis

3. Results

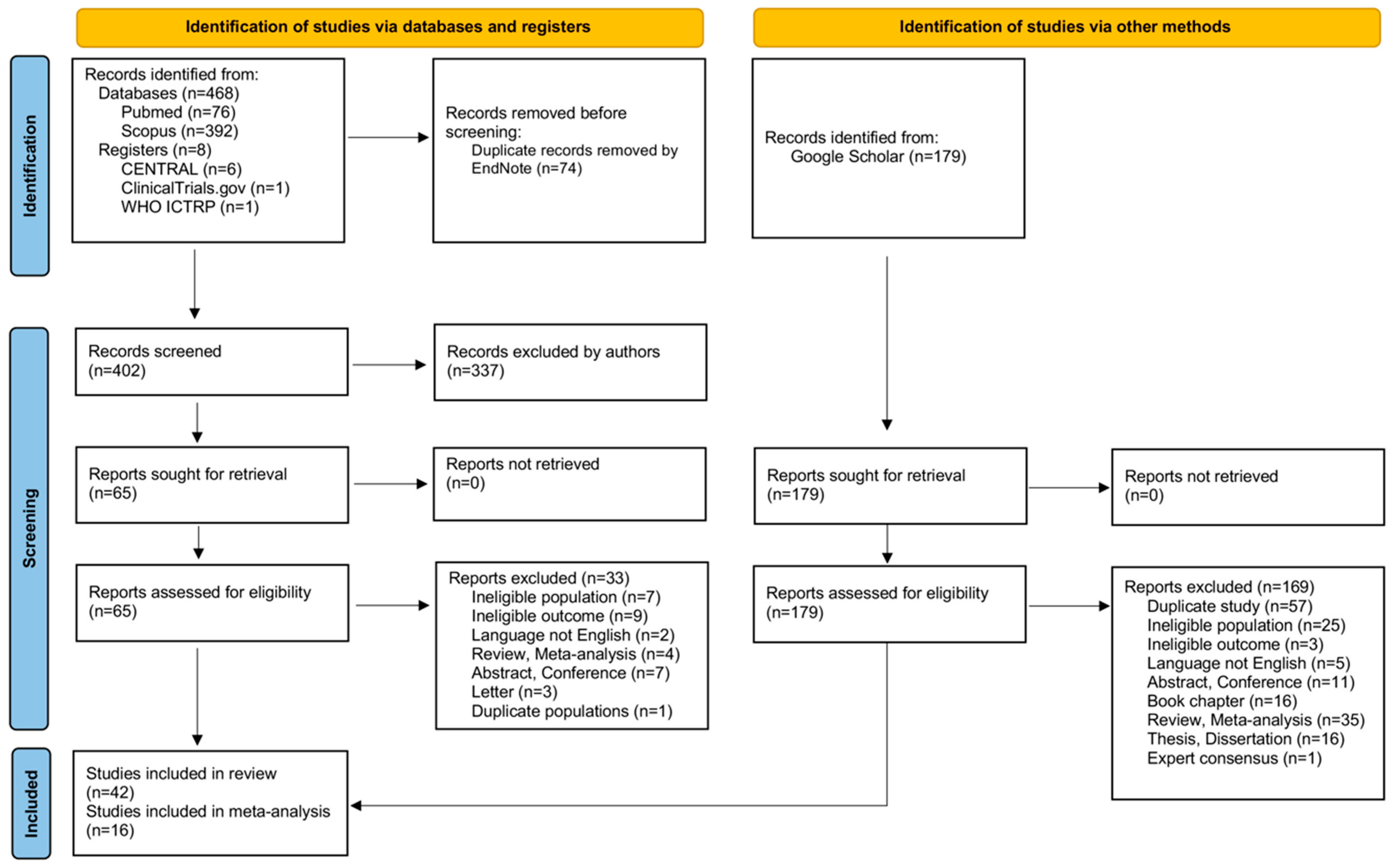

3.1. Study Selection

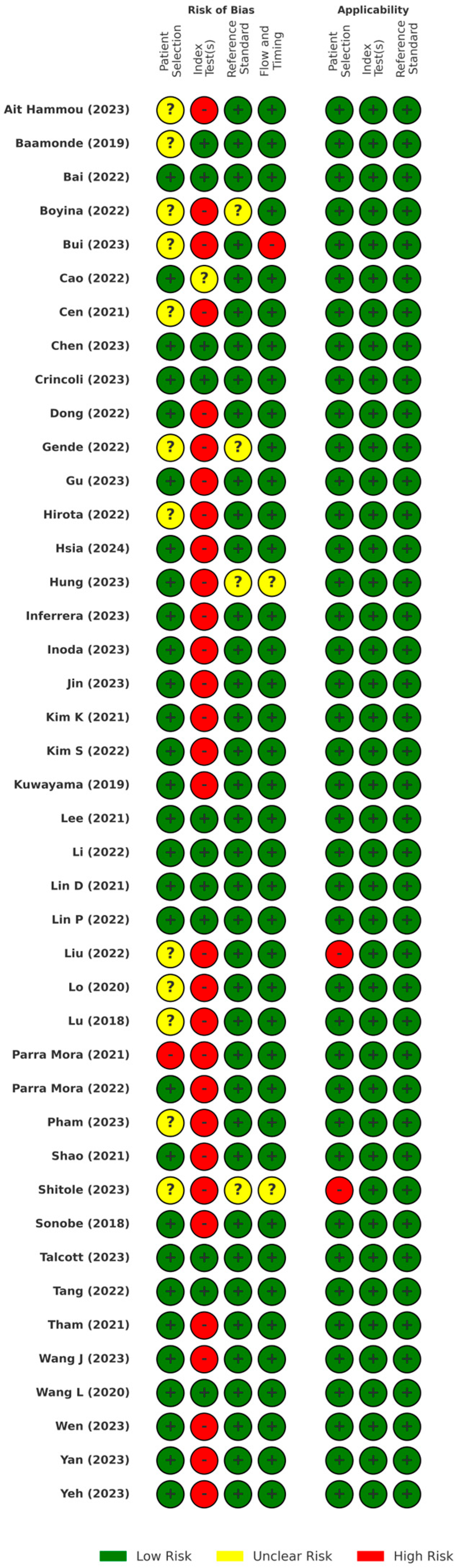

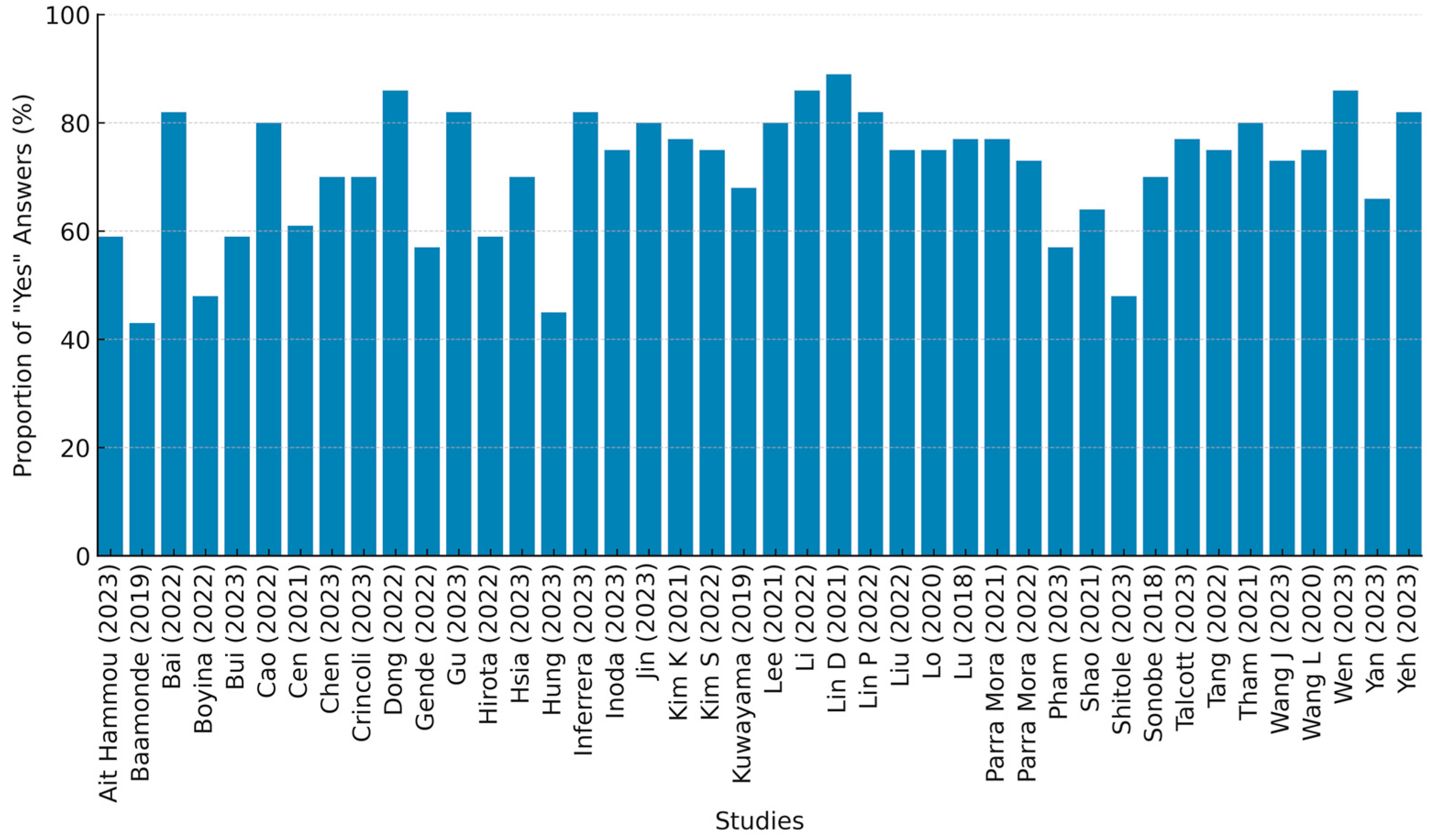

3.2. Study Quality Assessment

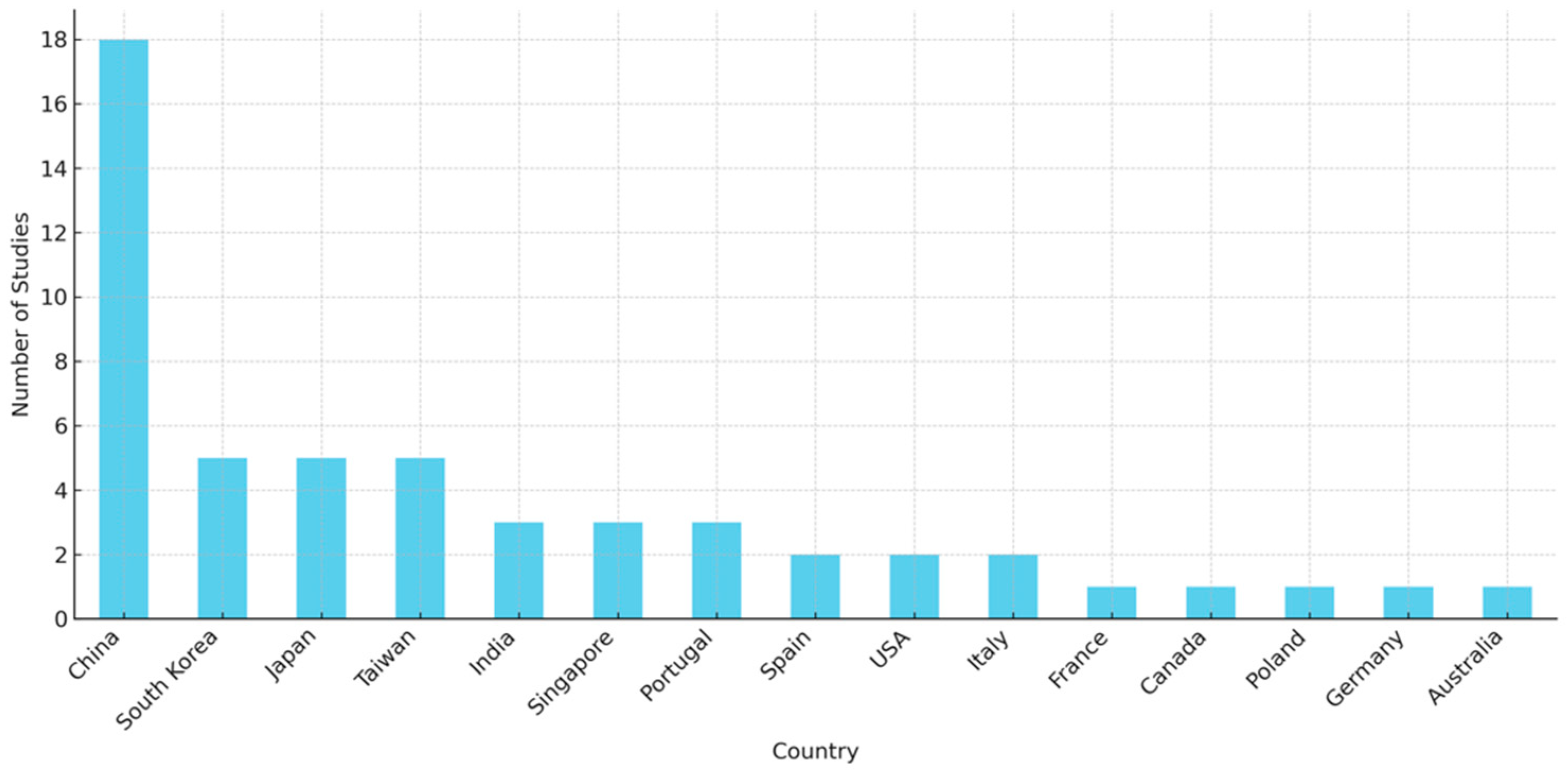

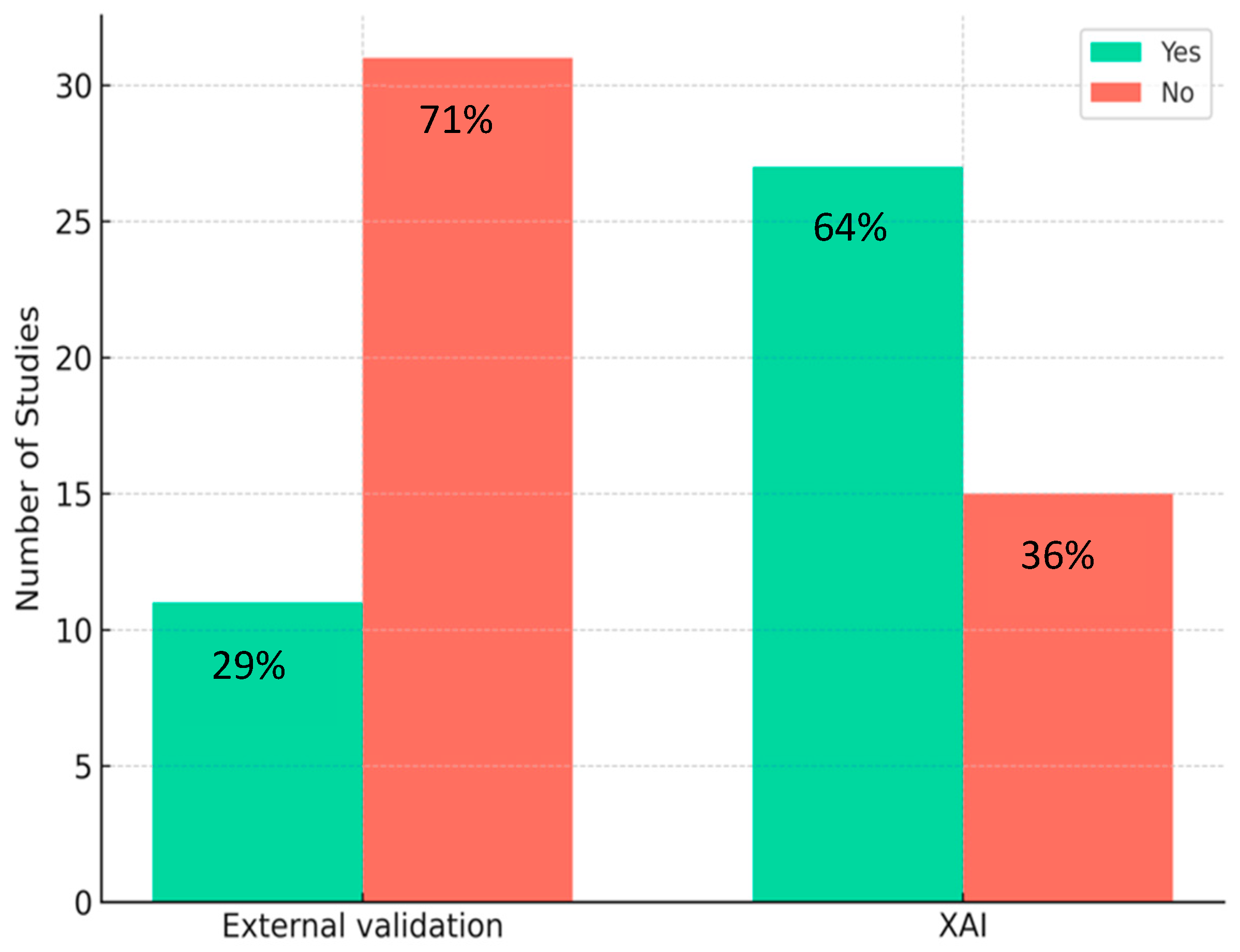

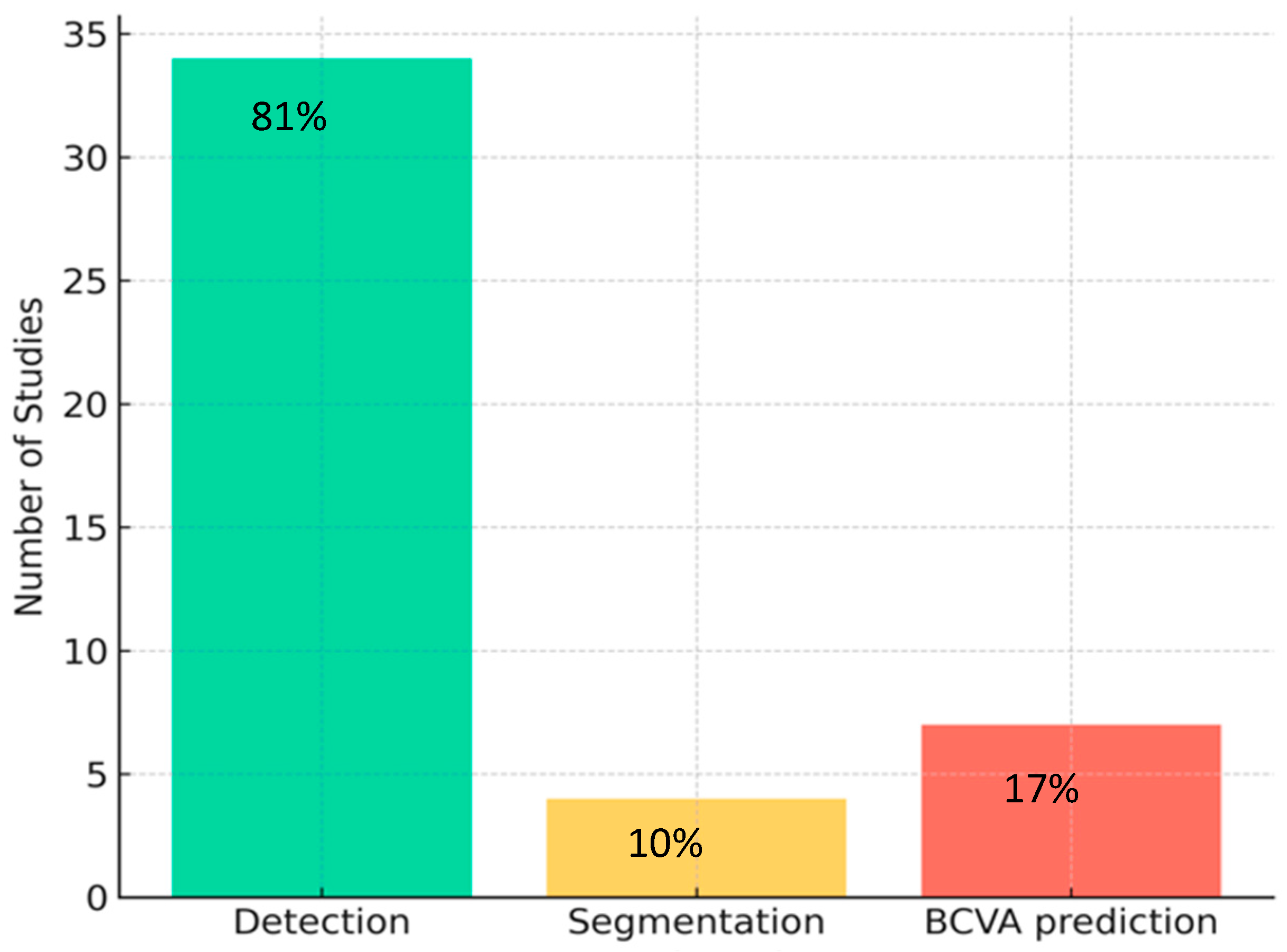

3.3. Study Characteristics

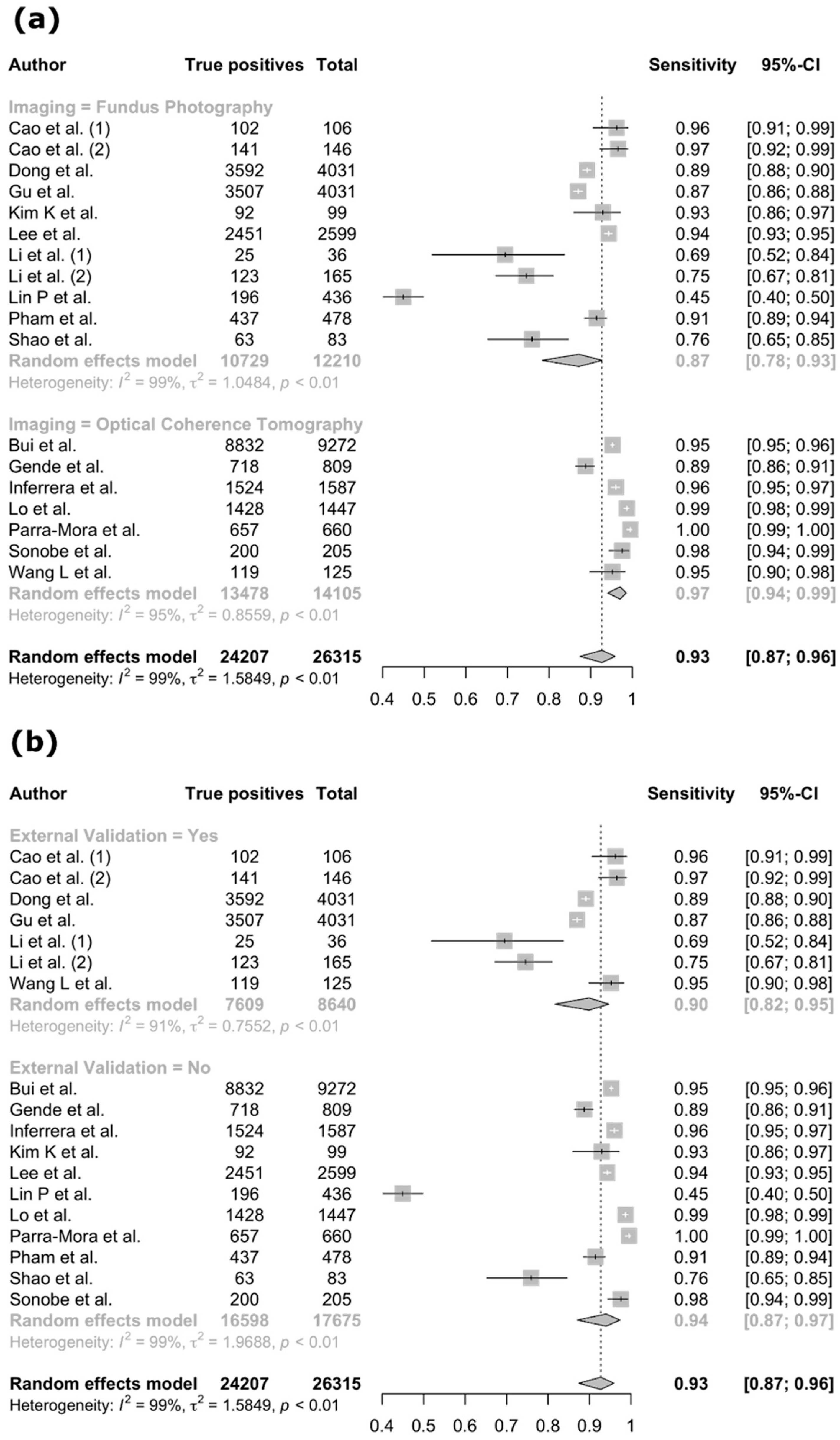

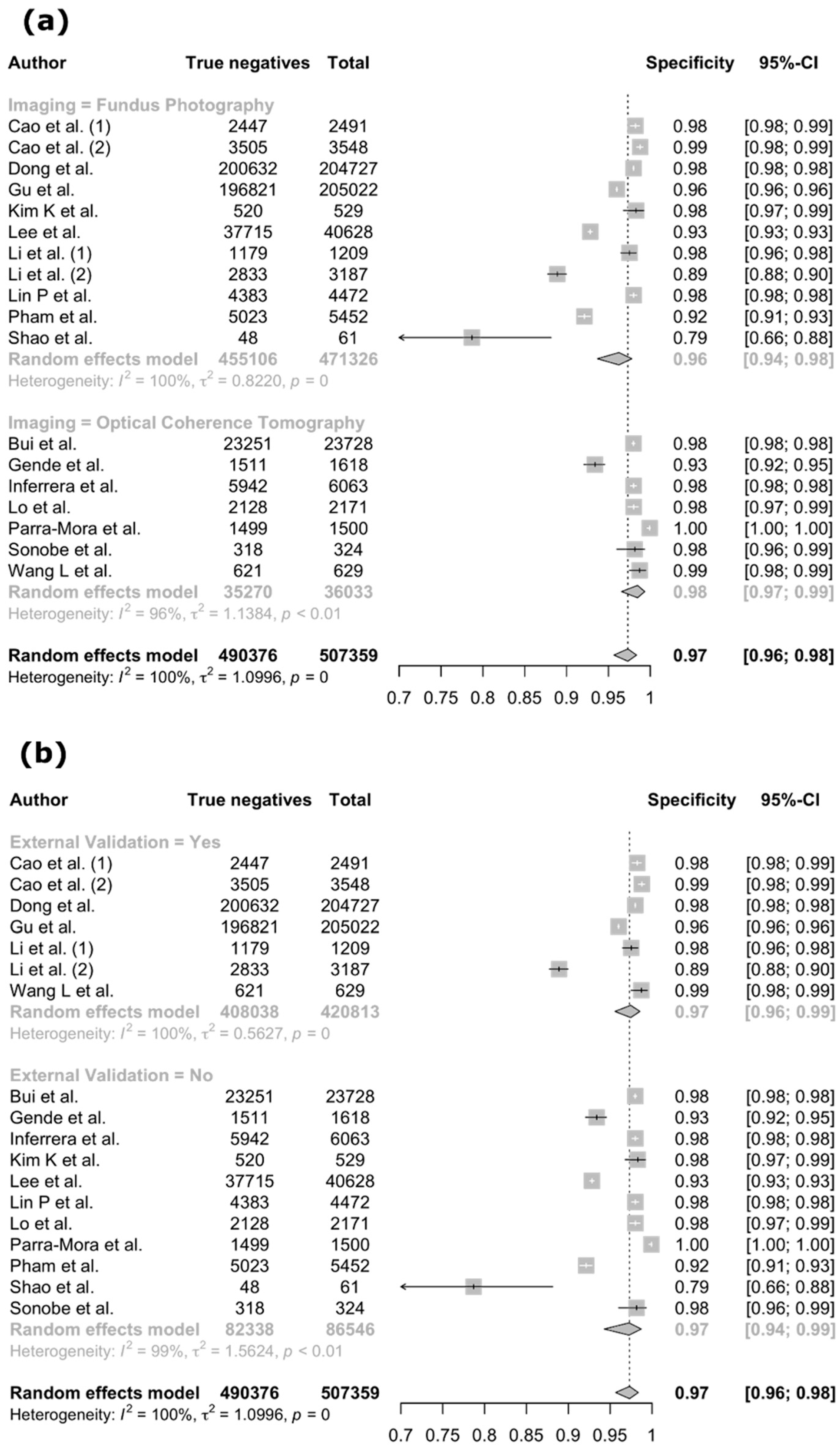

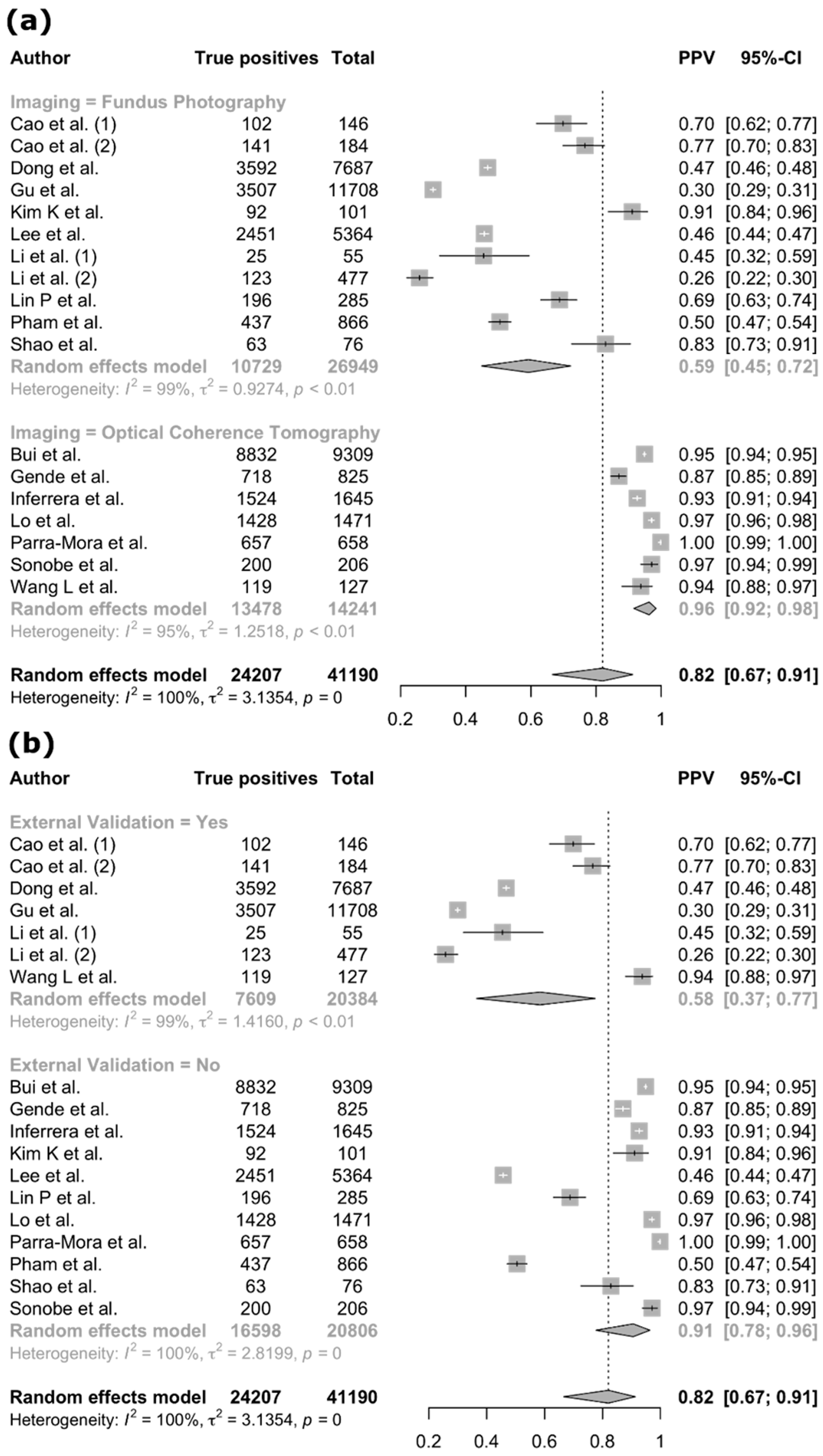

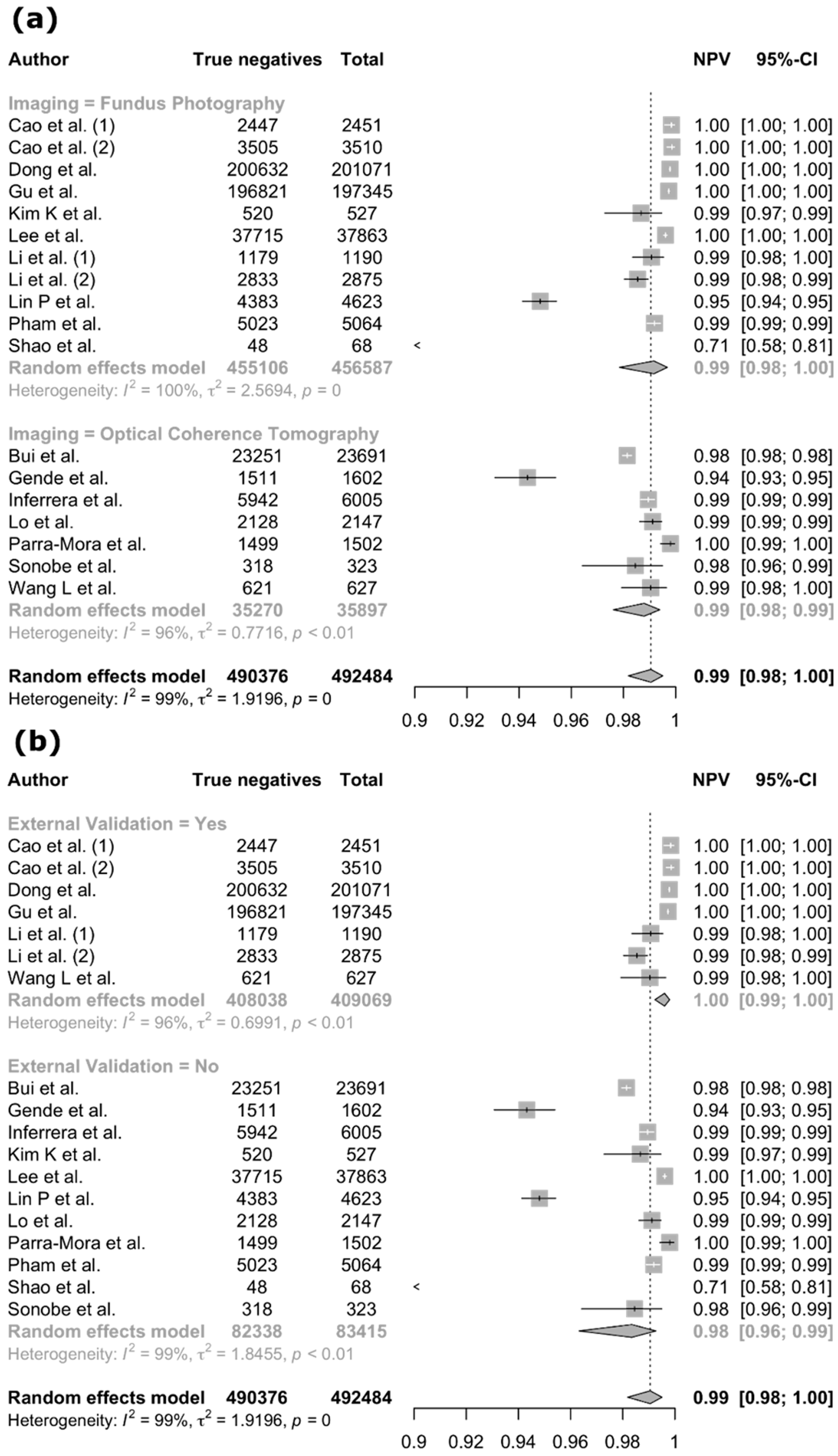

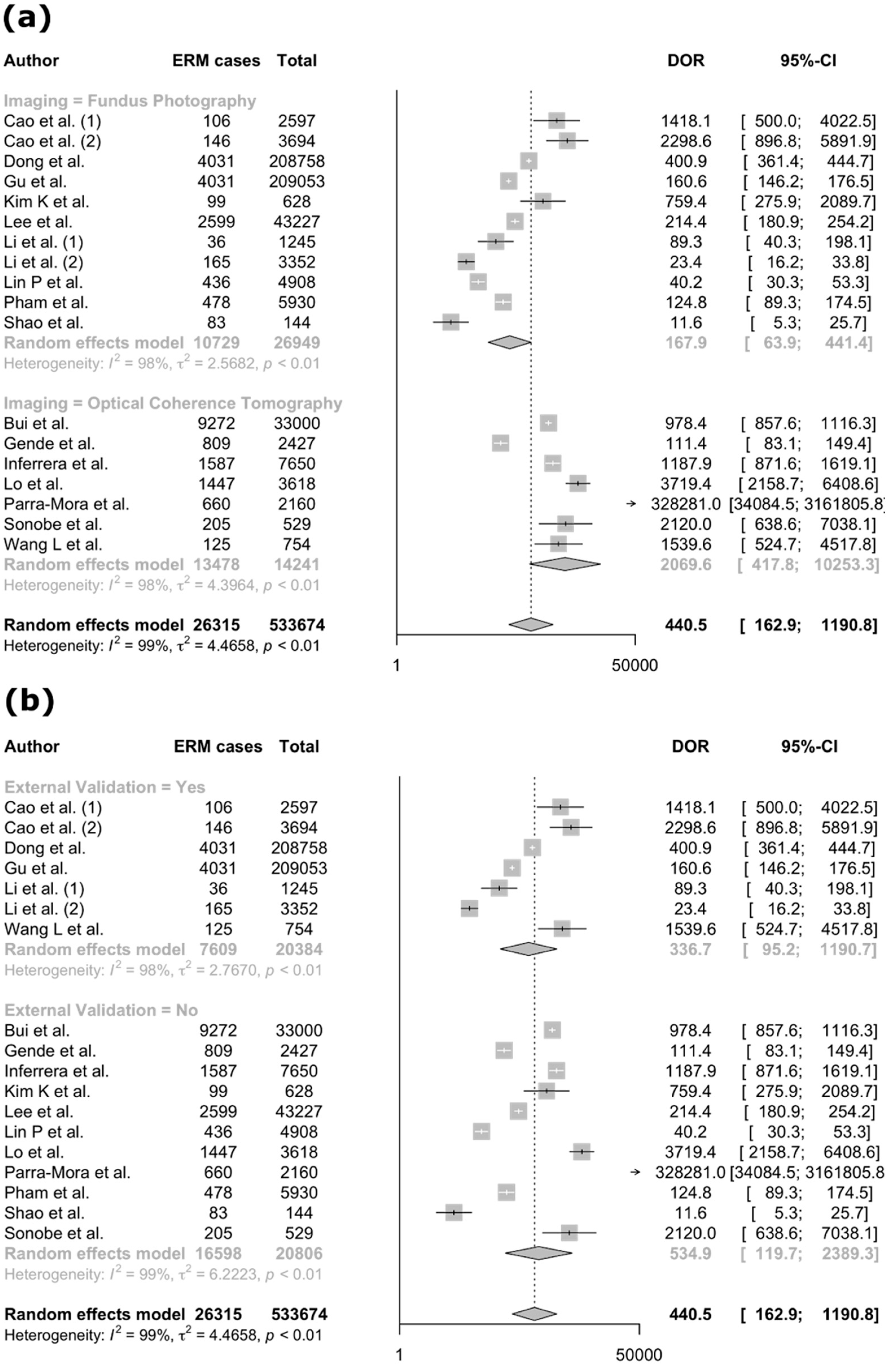

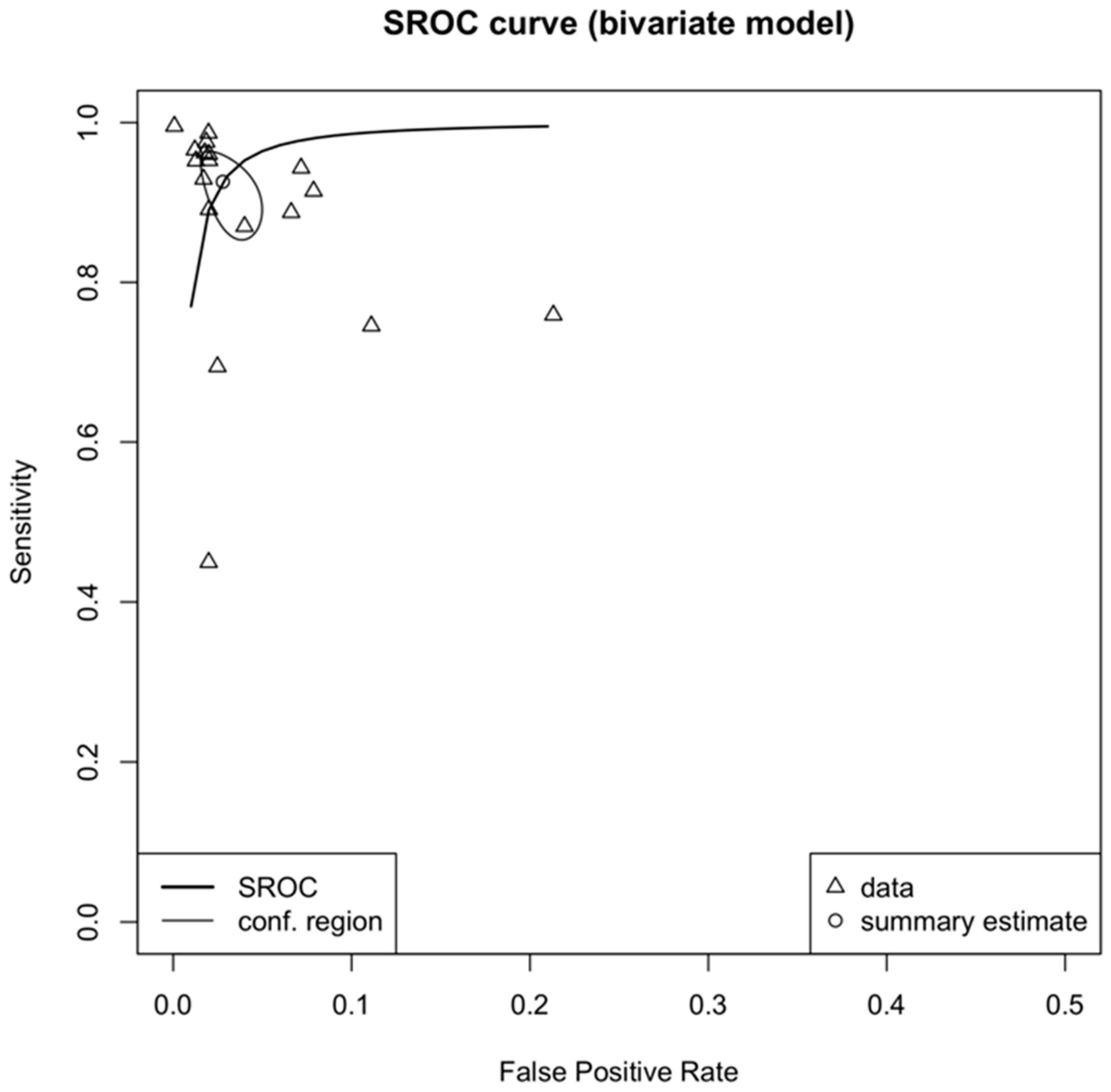

3.4. Meta-Analysis

4. Discussion

4.1. Overview and Comparison with Previous Work

4.2. AI Architecture and Model Characteristics

4.3. Model Explainability

4.4. Strengths and Limitations

4.5. QUADAS-2 and CLAIM Assessments

4.6. Approach for Future Studies

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| AMD | Age-related Macular Degeneration |
| ANN | Artificial Neural Network |
| AUC | Area Under the Curve |
| BCVA | Best-Corrected Visual Acuity |
| BRVO | Branch Retinal Vein Occlusion |
| CAM | Class Activation Map |
| CFI | Color Fundus Images |
| CI | Confidence Interval |
| CLAIM | Checklist for Artificial Intelligence in Medical Imaging |
| CNN | Convolutional Neural Network |
| DL | Deep Learning |
| DOR | Diagnostic Odds Ratio |
| ERM | Epiretinal Membrane |
| EU | European Union |
| FN | False Negative |
| FP | False Positive |
| GDPR | General Data Protection Regulation |
| Grad-CAM | Gradient-weighted Class Activation Mapping |
| HD | High-Definition |
| ICTRP | International Clinical Trials Registry Platform |
| ILM | Internal Limiting Membrane |
| LIME | Local Interpretable Model-agnostic Explanations |
| ML | Machine Learning |
| NPV | Negative Predictive Value |
| OCT | Optical Coherence Tomography |
| pAUC | Partial Area Under the Curve |
| PICOS | Population, Intervention, Comparator, Outcome, Study design |
| PPV | Positive Predictive Value |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| QUADAS-2 | Quality Assessment of Diagnostic Accuracy Studies-2 |
| RD | Retinal Detachment |
| ROC | Receiver Operating Characteristic |
| RPE | Retinal Pigment Epithelium |
| SD | Spectral-Domain |
| SROC | Summary Receiver Operating Characteristic |
| SS | Swept-Source |
| TN | True Negative |
| TP | True Positive |
| UWFI | Ultra-Widefield Fundus Images |
| WHO | World Health Organization |
| XAI | Explainable Artificial Intelligence |
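The 2×2 counts abbreviated above (TP, FP, FN, TN) determine the per-study accuracy measures the list also names (PPV, NPV, DOR), alongside sensitivity and specificity. The sketch below is a minimal illustration, assuming one 2×2 table per study; the names `TwoByTwo` and `diagnostic_metrics` and the 0.5 zero-cell correction are hypothetical choices, not the review's documented pipeline.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TwoByTwo:
    """Counts from one study's 2x2 table (hypothetical container, not from the review)."""
    tp: int  # true positives
    fp: int  # false positives
    fn: int  # false negatives
    tn: int  # true negatives


def diagnostic_metrics(t: TwoByTwo, correction: float = 0.5) -> dict[str, float]:
    """Return sensitivity, specificity, PPV, NPV and DOR for one 2x2 table.

    Assumes every total used as a denominator below is non-zero.
    Assumption: if any cell is zero, `correction` is added to all four cells
    for the DOR only, so the ratio stays finite. This is an illustrative
    convention, not necessarily the one used in this review.
    """
    for name in ("tp", "fp", "fn", "tn"):
        if getattr(t, name) < 0:
            raise ValueError(f"{name} must be non-negative")

    sensitivity = t.tp / (t.tp + t.fn)  # true positive rate
    specificity = t.tn / (t.tn + t.fp)  # true negative rate
    ppv = t.tp / (t.tp + t.fp)          # positive predictive value
    npv = t.tn / (t.tn + t.fn)          # negative predictive value

    tp, fp, fn, tn = t.tp, t.fp, t.fn, t.tn
    if 0 in (tp, fp, fn, tn):
        # Continuity correction applied to the DOR calculation only.
        tp, fp, fn, tn = (x + correction for x in (tp, fp, fn, tn))
    dor = (tp * tn) / (fp * fn)         # diagnostic odds ratio

    return {"sensitivity": sensitivity, "specificity": specificity,
            "ppv": ppv, "npv": npv, "dor": dor}


if __name__ == "__main__":
    # Hypothetical counts, for illustration only.
    print(diagnostic_metrics(TwoByTwo(tp=90, fp=5, fn=10, tn=95)))
```

With the hypothetical counts TP = 90, FP = 5, FN = 10 and TN = 95, this gives a sensitivity of 0.90, a specificity of 0.95 and a DOR of (90 × 95)/(5 × 10) = 171. Pooling such per-study values into a summary estimate or SROC curve is a separate step not shown here.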

References

1. Gende, M.; Moura, J.D.; Novo, J.; Ortega, M. End-to-End Multi-Task Learning Approaches for the Joint Epiretinal Membrane Segmentation and Screening in OCT Images. Comput. Med. Imaging Graph. 2022, 98, 102068.
2. Hsia, Y.; Lin, Y.-Y.; Wang, B.-S.; Su, C.-Y.; Lai, Y.-H.; Hsieh, Y.-T. Prediction of Visual Impairment in Epiretinal Membrane and Feature Analysis: A Deep Learning Approach Using Optical Coherence Tomography. Asia-Pac. J. Ophthalmol. 2023, 12, 21–28.
3. Kim, J.; Chin, H.S. Deep Learning-Based Prediction of the Retinal Structural Alterations after Epiretinal Membrane Surgery. Sci. Rep. 2023, 13, 19275.
4. Fung, A.T.; Galvin, J.; Tran, T. Epiretinal Membrane: A Review. Clin. Exp. Ophthalmol. 2021, 49, 289–308.
5. Crincoli, E.; Savastano, M.C.; Savastano, A.; Caporossi, T.; Bacherini, D.; Miere, A.; Gambini, G.; De Vico, U.; Baldascino, A.; Minnella, A.M.; et al. New artificial intelligence analysis for prediction of long-term visual improvement after epiretinal membrane surgery. Retina 2023, 43, 173–181.
6. Baamonde, S.; De Moura, J.; Novo, J.; Charlón, P.; Ortega, M. Automatic Identification and Characterization of the Epiretinal Membrane in OCT Images. Biomed. Opt. Express 2019, 10, 4018.
7. Yeh, T.-C.; Chen, S.-J.; Chou, Y.-B.; Luo, A.-C.; Deng, Y.-S.; Lee, Y.-H.; Chang, P.-H.; Lin, C.-J.; Tai, M.-C.; Chen, Y.-C.; et al. Predicting visual outcome after surgery in patients with idiopathic epiretinal membrane using a novel convolutional neural network. Retina 2023, 43, 767–774.
8. Saleh, G.A.; Batouty, N.M.; Haggag, S.; Elnakib, A.; Khalifa, F.; Taher, F.; Mohamed, M.A.; Farag, R.; Sandhu, H.; Sewelam, A.; et al. The Role of Medical Image Modalities and AI in the Early Detection, Diagnosis and Grading of Retinal Diseases: A Survey. Bioengineering 2022, 9, 366.
9. Pinto-Coelho, L. How Artificial Intelligence is Shaping Medical Imaging Technology: A Survey of Innovations and Applications. Bioengineering 2023, 10, 1435.
10. Bai, J.; Wan, Z.; Li, P.; Chen, L.; Wang, J.; Fan, Y.; Chen, X.; Peng, Q.; Gao, P. Accuracy and Feasibility with AI-Assisted OCT in Retinal Disorder Community Screening. Front. Cell Dev. Biol. 2022, 10, 1053483.
11. Karamanli, K.-E.; Maliagkani, E.; Petrou, P.; Papageorgiou, E.; Georgalas, I. Artificial Intelligence in Decoding Ocular Enigmas: A Literature Review of Choroidal Nevus and Choroidal Melanoma Assessment. Appl. Sci. 2025, 15, 3565.
12. Shamshirband, S.; Fathi, M.; Dehzangi, A.; Chronopoulos, A.T.; Alinejad-Rokny, H. A Review on Deep Learning Approaches in Healthcare Systems: Taxonomies, Challenges, and Open Issues. J. Biomed. Inform. 2021, 113, 103627.
13. Cabitza, F.; Campagner, A.; Soares, F.; García de Guadiana-Romualdo, L.; Challa, F.; Sulejmani, A.; Seghezzi, M.; Carobene, A. The Importance of Being External. Methodological Insights for the External Validation of Machine Learning Models in Medicine. Comput. Methods Programs Biomed. 2021, 208, 106288.
14. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. Syst. Rev. 2021, 10, 89.
15. Methley, A.M.; Campbell, S.; Chew-Graham, C.; McNally, R.; Cheraghi-Sohi, S. PICO, PICOS and SPIDER: A Comparison Study of Specificity and Sensitivity in Three Search Tools for Qualitative Systematic Reviews. BMC Health Serv. Res. 2014, 14, 579.
16. Whiting, P.F.; Rutjes, A.W.S.; Westwood, M.E.; Mallett, S.; Deeks, J.J.; Reitsma, J.B.; Leeflang, M.M.G.; Sterne, J.A.C.; Bossuyt, P.M.M.; the QUADAS-2 Group. QUADAS-2: A Revised Tool for the Quality Assessment of Diagnostic Accuracy Studies. Ann. Intern. Med. 2011, 155, 529–536.
17. Tejani, A.S.; Klontzas, M.E.; Gatti, A.A.; Mongan, J.T.; Moy, L.; Park, S.H.; Kahn, C.E., Jr.; for the CLAIM 2024 Update Panel. Checklist for Artificial Intelligence in Medical Imaging (CLAIM): 2024 Update. Radiol. Artif. Intell. 2024, 6, e240300.
18. Ait Hammou, B.; Antaki, F.; Boucher, M.-C.; Duval, R. MBT: Model-Based Transformer for Retinal Optical Coherence Tomography Image and Video Multi-Classification. Int. J. Med. Inform. 2023, 178, 105178.
19. Boyina, L.; Boddu, K.; Tankasala, Y.; Vani, K.S. Classification of Uncertain ImageNet Retinal Diseases Using ResNet Model. Int. J. Intell. Syst. Appl. Eng. 2022, 10, 35–42.
20. Bui, P.-N.; Le, D.-T.; Bum, J.; Kim, S.; Song, S.J.; Choo, H. Multi-Scale Learning with Sparse Residual Network for Explainable Multi-Disease Diagnosis in OCT Images. Bioengineering 2023, 10, 1249.
21. Cao, J.; You, K.; Zhou, J.; Xu, M.; Xu, P.; Wen, L.; Wang, S.; Jin, K.; Lou, L.; Wang, Y.; et al. A Cascade Eye Diseases Screening System with Interpretability and Expandability in Ultra-Wide Field Fundus Images: A Multicentre Diagnostic Accuracy Study. eClinicalMedicine 2022, 53, 101633.
22. Cen, L.-P.; Ji, J.; Lin, J.-W.; Ju, S.-T.; Lin, H.-J.; Li, T.-P.; Wang, Y.; Yang, J.-F.; Liu, Y.-F.; Tan, S.; et al. Automatic Detection of 39 Fundus Diseases and Conditions in Retinal Photographs Using Deep Neural Networks. Nat. Commun. 2021, 12, 4828.
23. Chen, X.; Xue, Y.; Wu, X.; Zhong, Y.; Rao, H.; Luo, H.; Weng, Z. Deep Learning-Based System for Disease Screening and Pathologic Region Detection From Optical Coherence Tomography Images. Transl. Vis. Sci. Technol. 2023, 12, 29.
24. Dong, L.; He, W.; Zhang, R.; Ge, Z.; Wang, Y.X.; Zhou, J.; Xu, J.; Shao, L.; Wang, Q.; Yan, Y.; et al. Artificial Intelligence for Screening of Multiple Retinal and Optic Nerve Diseases. JAMA Netw. Open 2022, 5, e229960.
25. Gu, C.; Wang, Y.; Jiang, Y.; Xu, F.; Wang, S.; Liu, R.; Yuan, W.; Abudureyimu, N.; Wang, Y.; Lu, Y.; et al. Application of Artificial Intelligence System for Screening Multiple Fundus Diseases in Chinese Primary Healthcare Settings: A Real-World, Multicentre and Cross-Sectional Study of 4795 Cases. Br. J. Ophthalmol. 2024, 108, 424–431.
26. Hirota, M.; Ueno, S.; Inooka, T.; Ito, Y.; Takeyama, H.; Inoue, Y.; Watanabe, E.; Mizota, A. Automatic Screening of the Eyes in a Deep-Learning–Based Ensemble Model Using Actual Eye Checkup Optical Coherence Tomography Images. Appl. Sci. 2022, 12, 6872.
27. Hung, C.-L.; Lin, K.-H.; Lee, Y.-K.; Mrozek, D.; Tsai, Y.-T.; Lin, C.-H. The Classification of Stages of Epiretinal Membrane Using Convolutional Neural Network on Optical Coherence Tomography Image. Methods 2023, 214, 28–34.
28. Inferrera, L.; Borsatti, L.; Miladinovic, A.; Marangoni, D.; Giglio, R.; Accardo, A.; Tognetto, D. OCT-Based Deep-Learning Models for the Identification of Retinal Key Signs. Sci. Rep. 2023, 13, 14628.
29. Inoda, S.; Takahashi, H.; Arai, Y.; Tampo, H.; Matsui, Y.; Kawashima, H.; Yanagi, Y. An AI Model to Estimate Visual Acuity Based Solely on Cross-Sectional OCT Imaging of Various Diseases. Graefes Arch. Clin. Exp. Ophthalmol. 2023, 261, 2775–2785.
30. Jin, K.; Yan, Y.; Wang, S.; Yang, C.; Chen, M.; Liu, X.; Terasaki, H.; Yeo, T.-H.; Singh, N.G.; Wang, Y.; et al. iERM: An Interpretable Deep Learning System to Classify Epiretinal Membrane for Different Optical Coherence Tomography Devices: A Multi-Center Analysis. J. Clin. Med. 2023, 12, 400.
31. Kim, K.M.; Heo, T.-Y.; Kim, A.; Kim, J.; Han, K.J.; Yun, J.; Min, J.K. Development of a Fundus Image-Based Deep Learning Diagnostic Tool for Various Retinal Diseases. J. Clin. Med. 2021, 11, 321.
32. Kim, S.H.; Ahn, H.; Yang, S.; Soo Kim, S.; Lee, J.H. Deep learning-based prediction of outcomes following noncomplicated epiretinal membrane surgery. Retina 2022, 42, 1465–1471.
33. Kuwayama, S.; Ayatsuka, Y.; Yanagisono, D.; Uta, T.; Usui, H.; Kato, A.; Takase, N.; Ogura, Y.; Yasukawa, T. Automated Detection of Macular Diseases by Optical Coherence Tomography and Artificial Intelligence Machine Learning of Optical Coherence Tomography Images. J. Ophthalmol. 2019, 2019, 6319581.
34. Lee, J.; Lee, J.; Cho, S.; Song, J.; Lee, M.; Kim, S.H.; Lee, J.Y.; Shin, D.H.; Kim, J.M.; Bae, J.H.; et al. Development of Decision Support Software for Deep Learning-Based Automated Retinal Disease Screening Using Relatively Limited Fundus Photograph Data. Electronics 2021, 10, 163.
35. Li, B.; Chen, H.; Zhang, B.; Yuan, M.; Jin, X.; Lei, B.; Xu, J.; Gu, W.; Wong, D.C.S.; He, X.; et al. Development and Evaluation of a Deep Learning Model for the Detection of Multiple Fundus Diseases Based on Colour Fundus Photography. Br. J. Ophthalmol. 2021, 106, 1079–1086.
36. Lin, D.; Xiong, J.; Liu, C.; Zhao, L.; Li, Z.; Yu, S.; Wu, X.; Ge, Z.; Hu, X.; Wang, B.; et al. Application of Comprehensive Artificial Intelligence Retinal Expert (CARE) System: A National Real-World Evidence Study. Lancet Digit. Health 2021, 3, e486–e495.
37. Lin, P.-K.; Chiu, Y.-H.; Huang, C.-J.; Wang, C.-Y.; Pan, M.-L.; Wang, D.-W.; Mark Liao, H.-Y.; Chen, Y.-S.; Kuan, C.-H.; Lin, S.-Y.; et al. PADAr: Physician-Oriented Artificial Intelligence-Facilitating Diagnosis Aid for Retinal Diseases. J. Med. Imag. 2022, 9, 044501.
38. Liu, X.; Zhao, C.; Wang, L.; Wang, G.; Lv, B.; Lv, C.; Xie, G.; Wang, F. Evaluation of an OCT-AI–Based Telemedicine Platform for Retinal Disease Screening and Referral in a Primary Care Setting. Transl. Vis. Sci. Technol. 2022, 11, 4.
39. Lo, Y.-C.; Lin, K.-H.; Bair, H.; Sheu, W.H.-H.; Chang, C.-S.; Shen, Y.-C.; Hung, C.-L. Epiretinal Membrane Detection at the Ophthalmologist Level Using Deep Learning of Optical Coherence Tomography. Sci. Rep. 2020, 10, 8424.
40. Lu, W.; Tong, Y.; Yu, Y.; Xing, Y.; Chen, C.; Shen, Y. Deep Learning-Based Automated Classification of Multi-Categorical Abnormalities From Optical Coherence Tomography Images. Transl. Vis. Sci. Technol. 2018, 7, 41.
41. Parra-Mora, E.; Cazanas-Gordon, A.; Proenca, R.; Da Silva Cruz, L.A. Epiretinal Membrane Detection in Optical Coherence Tomography Retinal Images Using Deep Learning. IEEE Access 2021, 9, 99201–99219.
42. Parra-Mora, E.; Da Silva Cruz, L.A. LOCTseg: A Lightweight Fully Convolutional Network for End-to-End Optical Coherence Tomography Segmentation. Comput. Biol. Med. 2022, 150, 106174.
43. Pham, V.-N.; Le, D.-T.; Bum, J.; Kim, S.H.; Song, S.J.; Choo, H. Discriminative-Region Multi-Label Classification of Ultra-Widefield Fundus Images. Bioengineering 2023, 10, 1048.
44. Shao, E.; Liu, C.; Wang, L.; Song, D.; Guo, L.; Yao, X.; Xiong, J.; Wang, B.; Hu, Y. Artificial Intelligence-Based Detection of Epimacular Membrane from Color Fundus Photographs. Sci. Rep. 2021, 11, 19291.
45. Shitole, A.; Kenchappagol, A.; Jangle, R.; Shinde, Y.; Chadha, A.S. Enhancing Retinal Scan Classification: A Comparative Study of Transfer Learning and Ensemble Techniques. Int. J. Recent Innov. Trends Comput. Commun. 2023, 11, 520–528.
46. Sonobe, T.; Tabuchi, H.; Ohsugi, H.; Masumoto, H.; Ishitobi, N.; Morita, S.; Enno, H.; Nagasato, D. Comparison between Support Vector Machine and Deep Learning, Machine-Learning Technologies for Detecting Epiretinal Membrane Using 3D-OCT. Int. Ophthalmol. 2019, 39, 1871–1877.
47. Talcott, K.E.; Valentim, C.C.S.; Perkins, S.W.; Ren, H.; Manivannan, N.; Zhang, Q.; Bagherinia, H.; Lee, G.; Yu, S.; D’Souza, N.; et al. Automated Detection of Abnormal Optical Coherence Tomography B-Scans Using a Deep Learning Artificial Intelligence Neural Network Platform. Int. Ophthalmol. Clin. 2024, 64, 115–127.
48. Tang, Y.; Gao, X.; Wang, W.; Dan, Y.; Zhou, L.; Su, S.; Wu, J.; Lv, H.; He, Y. Automated Detection of Epiretinal Membranes in OCT Images Using Deep Learning. Ophthalmic Res. 2023, 66, 238–246.
49. Tham, Y.-C.; Anees, A.; Zhang, L.; Goh, J.H.L.; Rim, T.H.; Nusinovici, S.; Hamzah, H.; Chee, M.-L.; Tjio, G.; Li, S.; et al. Referral for Disease-Related Visual Impairment Using Retinal Photograph-Based Deep Learning: A Proof-of-Concept, Model Development Study. Lancet Digit. Health 2021, 3, e29–e40.
50. Wang, J.; Zong, Y.; He, Y.; Shi, G.; Jiang, C. Domain Adaptation-Based Automated Detection of Retinal Diseases from Optical Coherence Tomography Images. Curr. Eye Res. 2023, 48, 836–842.
51. Wang, L.; Wang, G.; Zhang, M.; Fan, D.; Liu, X.; Guo, Y.; Wang, R.; Lv, B.; Lv, C.; Wei, J.; et al. An Intelligent Optical Coherence Tomography-Based System for Pathological Retinal Cases Identification and Urgent Referrals. Transl. Vis. Sci. Technol. 2020, 9, 46.
52. Wen, D.; Yu, Z.; Yang, Z.; Zheng, C.; Ren, X.; Shao, Y.; Li, X. Deep Learning-Based Postoperative Visual Acuity Prediction in Idiopathic Epiretinal Membrane. BMC Ophthalmol. 2023, 23, 361.
53. Yan, Y.; Huang, X.; Jiang, X.; Gao, Z.; Liu, X.; Jin, K.; Ye, J. Clinical Evaluation of Deep Learning Systems for Assisting in the Diagnosis of the Epiretinal Membrane Grade in General Ophthalmologists. Eye 2024, 38, 730–736.
54. Cheung, R.; Chun, J.; Sheidow, T.; Motolko, M.; Malvankar-Mehta, M.S. Diagnostic Accuracy of Current Machine Learning Classifiers for Age-Related Macular Degeneration: A Systematic Review and Meta-Analysis. Eye 2022, 36, 994–1004.
55. Senapati, A.; Tripathy, H.K.; Sharma, V.; Gandomi, A.H. Artificial Intelligence for Diabetic Retinopathy Detection: A Systematic Review. Inform. Med. Unlocked 2024, 45, 101445.
56. Prashar, J.; Tay, N. Performance of Artificial Intelligence for the Detection of Pathological Myopia from Colour Fundus Images: A Systematic Review and Meta-Analysis. Eye 2024, 38, 303–314.
57. Mikhail, D.; Gao, A.; Farah, A.; Mihalache, A.; Milad, D.; Antaki, F.; Popovic, M.M.; Shor, R.; Duval, R.; Kertes, P.J.; et al. Performance of Artificial Intelligence-Based Models for Epiretinal Membrane Diagnosis: A Systematic Review and Meta-Analysis. Am. J. Ophthalmol. 2025, 277, 420–432.
58. Chatzara, A.; Maliagkani, E.; Mitsopoulou, D.; Katsimpris, A.; Apostolopoulos, I.D.; Papageorgiou, E.; Georgalas, I. Artificial Intelligence Approaches for Geographic Atrophy Segmentation: A Systematic Review and Meta-Analysis. Bioengineering 2025, 12, 475.
59. Moradi, M.; Chen, Y.; Du, X.; Seddon, J.M. Deep Ensemble Learning for Automated Non-Advanced AMD Classification Using Optimized Retinal Layer Segmentation and SD-OCT Scans. Comput. Biol. Med. 2023, 154, 106512.
60. Hassija, V.; Chamola, V.; Mahapatra, A.; Singal, A.; Goel, D.; Huang, K.; Scardapane, S.; Spinelli, I.; Mahmud, M.; Hussain, A. Interpreting Black-Box Models: A Review on Explainable Artificial Intelligence. Cogn. Comput. 2024, 16, 45–74.
61. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. Int. J. Comput. Vis. 2020, 128, 336–359.
62. Wong, C.Y.T.; Antaki, F.; Woodward-Court, P.; Ong, A.Y.; Keane, P.A. The Role of Saliency Maps in Enhancing Ophthalmologists’ Trust in Artificial Intelligence Models. Asia-Pac. J. Ophthalmol. 2024, 13, 100087.
63. Arora, A.; Alderman, J.E.; Palmer, J.; Ganapathi, S.; Laws, E.; McCradden, M.D.; Oakden-Rayner, L.; Pfohl, S.R.; Ghassemi, M.; McKay, F.; et al. The Value of Standards for Health Datasets in Artificial Intelligence-Based Applications. Nat. Med. 2023, 29, 2929–2938.
64. Yang, F.; Zamzmi, G.; Angara, S.; Rajaraman, S.; Aquilina, A.; Xue, Z.; Jaeger, S.; Papagiannakis, E.; Antani, S.K. Assessing Inter-Annotator Agreement for Medical Image Segmentation. IEEE Access 2023, 11, 21300–21312.
65. Aboy, M.; Minssen, T.; Vayena, E. Navigating the EU AI Act: Implications for Regulated Digital Medical Products. npj Digit. Med. 2024, 7, 237.
66. European Union General Data Protection Regulation (GDPR). Available online: https://gdpr.eu (accessed on 6 October 2025).

| Author (Year) | Country | Diseases | Imaging Modality | Dataset | Reference Standard | AI Task | AI Type | AI Architecture | Internal Validation Method | External Validation | Explainable AI |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Ait Hammou (2023) [18] | Canada | ERM; normal; 5 other retinal diseases | OCT | public (one dataset); private (one image dataset and one video dataset) | experienced fellowship-trained retina specialist | detection | DL, ML | Swin Transformer; Vision Transformer; Multiscale Vision Transformer; EfficientNetB0; NasNetLarge; NasNetMobile; Xception | cross-validation | no | saliency maps |
| Baamonde (2019) [6] | Spain | ERM | SD-OCT | private | one expert clinician | detection | ML | Multilayer Perceptron; Naive Bayes; K-Nearest Neighbors; Random Forest | 10-fold cross-validation | no | no |
| Bai (2022) [10] | China | ERM; 13 other retinal diseases | SD-OCT | private (4 local communities) | 3 retina professors with more than 12 years of experience | detection | DL | Cascade R-CNN | 6:2:2 holdout validation | no | no |
| Boyina (2022) [19] | India | ERM; normal; 6 other ocular diseases | CFI | public (one dataset) | ophthalmologists | detection | DL | ResNet | 7:2:1 holdout validation | no | no |
| Bui (2023) [20] | South Korea | ERM; normal; 2 other retinal diseases | OCT | private (one hospital) | annotated by a junior doctor and verified by a senior doctor | detection | DL | Sparse Residual Network (multi-scale) | holdout validation; train–test split (80%–20%) | no | Grad-CAM |
| Cao (2022) [21] | China | ERM; 23 other ocular diseases | UWFI | private (3 hospitals) | expert ophthalmologists | detection | DL, ML | channel-attention feature pyramid network; ResNeXt-50 | train–test–validation split | yes | lesion atlas; Grad-CAM |
| Cen (2021) [22] | China, USA | ERM; 29 other ocular diseases | CFI | public (7 datasets); private (3 hospitals) | expert ophthalmologists | detection | DL | custom CNN (based on Inception-V3, Xception and InceptionResNet-V2) | split | yes | Grad-CAM; DeepSHAP |
| Chen (2023) [23] | China | ERM; 10 other retinal diseases | OCT | private (one hospital) | 2 certified ophthalmologists | detection | DL | ResNet50; YOLOv3; AlexNet; VGG16; DenseNet; InceptionV3 (ensemble learning approach) | 4:1:1 holdout validation | no | Grad-CAM |
| Crincoli (2023) [5] | Italy, France | ERM stage II | OCT | private (2 hospitals) | 2 expert graders | postoperative BCVA prediction | DL | Inception-ResNet-V2 | holdout validation | no | LIME |
| Dong (2022) [24] | China | ERM; normal; 8 other ocular diseases | CFI | private (10 healthcare centers and one hospital) | 3 examiners from a group of 40 certified ophthalmologists; discrepancies resolved by 6 senior specialists | detection | DL | YOLOv3 | holdout validation | yes | Grad-CAM |
| Gende (2022) [1] | Spain | ERM; normal | HD-OCT | private | one expert | detection and segmentation | DL | multi-scale feature pyramid network (with DenseNet-121; ResNet-18; Inception-v4) | 4-fold cross-validation (eye level) | no | no |
| Gu (2023) [25] | China | ERM; normal; 13 other ocular diseases | CFI | private (6 primary healthcare settings) | 2 retina specialists with 5–10 years of experience | detection | DL | YOLOv3; EfficientNet-B3 | 5:1 holdout validation | yes | attention heatmap |
| Hirota (2022) [26] | Japan | ERM; 9 other retinal diseases | OCT | private (3 hospitals) | 2 ophthalmologists at each hospital | detection | DL, ML | ResNet-152; DenseNet-201; EfficientNet-B7; ensemble model using Random Forest | 3-fold cross-validation | no | Grad-CAM |
| Hsia (2023) [2] | Taiwan | ERM | SD-OCT | private (one hospital) | 2 retina specialists | postoperative BCVA prediction | DL | ResNet-50; ResNet-18 | 9:1 holdout validation | no | Grad-CAM |
| Hung (2023) [27] | Taiwan, Poland | ERM | SD-OCT | private (one hospital) | ERM staging labeled by expert ophthalmologists | detection | DL | fusion network including ResNet, MobileNet, EfficientNet, Swin Transformer and MLP-Mixer | 5-fold cross-validation | no | Grad-CAM |
| Inferrera (2023) [28] | Italy | ERM; normal; 7 other retinal diseases | SD-OCT | private (one hospital) | 2 experienced retina specialists | detection | DL | VGG-16 | 9:1 holdout validation; 5-fold cross-validation for training and validation | no | Grad-CAM |
| Inoda (2023) [29] | Japan | ERM; normal; other retinal diseases | SS-OCT | private (one hospital) | one ophthalmologist and one retina specialist; BCVA measured by an optometrist | postoperative BCVA prediction | DL | GoogLeNet (Inception Net) | 10-fold cross-validation | yes | no |
| Jin (2023) [30] | China, Japan, Singapore | ERM classified into 6 severity stages (normal as stage 0) | OCT | private (9 international medical centers and one hospital) | images labeled by 4 experienced retina specialists | detection and segmentation | DL | iERM two-stage deep learning architecture; ResNet-34 backbone; segmentation model based on U-Net | train–validation–test split (7:1:2) | yes | CAM and segmentation-based feature analysis |
| Kim K (2021) [31] | South Korea | ERM; 6 other retinal diseases | CFI | private (one hospital) | one retina specialist | detection | DL | ResNet-50; VGG-19; Inception v3 | 5-fold cross-validation | no | Grad-CAM |
| Kim S (2022) [32] | South Korea | ERM | SD-OCT | private (one hospital) | ophthalmologists | postoperative BCVA prediction | DL | VGG-16 | 7:1.5:1.5 holdout validation | no | attention maps |
| Kuwayama (2019) [33] | Japan | ERM; normal; other retinal diseases | HD-OCT | private (one hospital) | one ophthalmologist | detection | DL | custom CNN | 9:1 holdout validation | no | no |
| Lee (2021) [34] | South Korea | ERM; 4 other retinal diseases | CFI | private (one hospital) | 2 retina specialists and 3 residents in their third or fourth year of training | detection | DL | ResNet-50 | stratified bootstrapping | yes | Grad-CAM |
| Li (2022) [35] | China | ERM; 10 other ocular diseases | CFI | private (3 hospitals) | 17 senior board-certified ophthalmologists | detection | DL | SE-ResNeXt50 | 4:1 holdout validation | yes | Grad-CAM |
| Lin D (2021) [36] | China | ERM; normal; 13 other ocular diseases | CFI | private (16 clinical settings) | 40 ophthalmologists; 6 retina specialists | detection | DL | Comprehensive AI Retinal Expert (CARE) system based on an InceptionResNetV2 CNN | 8:2 holdout validation | yes | attention heatmaps |
| Lin P (2022) [37] | Taiwan | ERM; normal; 3 other retinal diseases | CFI | private (one hospital) | expert-labeled fundus images | detection | DL, ML | VGG-16 | 8:2 holdout validation | no | Grad-CAM++ |
| Liu (2022) [38] | China | ERM; other ocular diseases | SD-OCT | private (4 primary care stations) | 2 ophthalmologists with more than 15 years of experience | detection | DL | Deep and Shallow Feature Fusion Network | no | no | |
| Lo (2020) [39] | Taiwan | ERM; normal; other ocular diseases | SD-OCT | private (one hospital) | senior retina specialist with more than 18 years of experience | detection | DL | ResNet-101 | 8:2 holdout validation | no | Grad-CAM |
| Lu (2018) [40] | China | ERM; normal; 3 other retinal diseases | HD-OCT | private (one hospital) | 17 licensed retina experts | detection | DL | ResNet | 10-fold cross-validation | no | no |
| Parra-Mora (2021) [41] | Portugal | ERM; non-ERM | SD-OCT | private (one hospital) | medical ophthalmology specialists | detection | DL | AlexNet; SqueezeNet; ResNet; VGGNet | 10-fold cross-validation | no | Grad-CAM |
| Parra-Mora (2022) [42] | Portugal | ERM; non-ERM | SD-OCT | public (2 datasets); private (one dataset) | 2 graders | segmentation | DL | LOCTseg (fully convolutional network) | equal split; 6-fold cross-validation; even–odd patient split | no | no |
| Pham (2023) [43] | South Korea | ERM; 5 other retinal diseases | UWFI | private (one hospital) | annotated by experienced ophthalmologists | detection | DL | Xception; ResNet50; MobileNetV3; EfficientNetB3 | train–validation split (9:1) | no | no |
| Shao (2021) [44] | China | ERM; non-ERM | CFI | private (one hospital) | 3 ophthalmologists (resident, attending physician, retina specialist) | detection | DL | combination of Inception-ResNet-v2 and Xception | not reported | no | Grad-CAM |
| Shitole (2023) [45] | India | ERM; other ocular diseases | CFI | public (one dataset) | annotated by ophthalmologists | detection | DL | DenseNet-201; ResNet152V2; XceptionNet; EfficientNet-B7; MobileNetV2; EfficientNetV2M; ensemble model | train–validation–test split (60%–20%–20%) | no | no |
| Sonobe (2018) [46] | Japan | ERM; non-ERM | 3D-OCT | private (one hospital) | 2 ophthalmologists | detection | DL, ML | Support Vector Machine; custom CNN | 8:2 holdout validation | no | no |
| Talcott (2023) [47] | USA, Germany, Portugal, Singapore | ERM; normal; other ocular diseases | HD-OCT | private (9 hospitals) | 2 ophthalmologists | detection | DL | modified ResNet-50 | 5-fold cross-validation | yes | no |
| Tang (2022) [48] | China | ERM | HD-OCT | private (one hospital) | one expert with more than 20 years of experience | detection | DL | U-Net | 9:1 holdout validation | no | no |
| Tham (2021) [49] | Singapore, China, India, Australia | ERM; other ocular diseases | CFI | public (6 datasets) | trained ophthalmologists | postoperative BCVA prediction | DL | ResNet-50 | 8:2 holdout validation | yes | Grad-CAM |
| Wang J (2023) [50] | China | ERM; normal; other ocular diseases | OCT | private (2 hospitals) | 2 specialists | detection | DL | custom model | random train–test split (target data) | no | Grad-CAM |
| Wang L (2020) [51] | China | ERM; normal; other ocular diseases | SD-OCT | private (2 hospitals) | 2 ophthalmologists and one senior retina specialist | detection | DL, ML | feature pyramid network; Random Forest | 8:2 holdout validation | yes | feature importance |
| Wen (2023) [52] | China | ERM | SD-OCT | private (one hospital) | | postoperative BCVA prediction | DL | Inception-ResNet-v2 | 6:2:2 holdout validation | no | Grad-CAM |
| Yan (2023) [53] | China | ERM; normal | SD-OCT | private (3 hospitals) | 4 retina specialists with more than 10 years of experience | detection and segmentation | DL | SegNet; ResNet | 9:1 holdout validation | no | no |
| Yeh (2023) [7] | Taiwan | ERM | SD-OCT | private (one hospital) | experts | postoperative BCVA prediction | DL | Heterogeneous Data Fusion Net (HDF-Net) | 9:1 holdout validation; 10-fold cross-validation | no | Grad-CAM |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


MDPI and ACS Style: Maliagkani, E.; Mitri, P.; Mitsopoulou, D.; Katsimpris, A.; Apostolopoulos, I.D.; Sandali, A.; Tyrlis, K.; Papandrianos, N.; Georgalas, I. Artificial Intelligence Algorithms for Epiretinal Membrane Detection, Segmentation and Postoperative BCVA Prediction: A Systematic Review and Meta-Analysis. Appl. Sci. 2025, 15, 12280. https://doi.org/10.3390/app152212280

AMA Style: Maliagkani E, Mitri P, Mitsopoulou D, Katsimpris A, Apostolopoulos ID, Sandali A, Tyrlis K, Papandrianos N, Georgalas I. Artificial Intelligence Algorithms for Epiretinal Membrane Detection, Segmentation and Postoperative BCVA Prediction: A Systematic Review and Meta-Analysis. Applied Sciences. 2025; 15(22):12280. https://doi.org/10.3390/app152212280

Chicago/Turabian Style: Maliagkani, Eirini, Petroula Mitri, Dimitra Mitsopoulou, Andreas Katsimpris, Ioannis D. Apostolopoulos, Athanasia Sandali, Konstantinos Tyrlis, Nikolaos Papandrianos, and Ilias Georgalas. 2025. "Artificial Intelligence Algorithms for Epiretinal Membrane Detection, Segmentation and Postoperative BCVA Prediction: A Systematic Review and Meta-Analysis" Applied Sciences 15, no. 22: 12280. https://doi.org/10.3390/app152212280

APA Style: Maliagkani, E., Mitri, P., Mitsopoulou, D., Katsimpris, A., Apostolopoulos, I. D., Sandali, A., Tyrlis, K., Papandrianos, N., & Georgalas, I. (2025). Artificial Intelligence Algorithms for Epiretinal Membrane Detection, Segmentation and Postoperative BCVA Prediction: A Systematic Review and Meta-Analysis. Applied Sciences, 15(22), 12280. https://doi.org/10.3390/app152212280