Multi-Chain of Thought Prompt Learning for Aspect-Based Sentiment Analysis

Abstract

1. Introduction

- (1) Drawing on human thinking patterns, this article gradually builds a complete reasoning path to uncover the implicit sentiment cues in the text.

- (2) The intermediate steps generated by single-chain-of-thought prompt learning may be of limited help to the LLM in recognizing the sentiment polarity of words. Based on the grammatical structure and semantic logic of the text, this study therefore constructs a multi-chain-of-thought prompt template from four different dimensions of text understanding and uses it for multi-dimensional sentiment-semantic analysis.

- (3) The MC-TPL model was evaluated on benchmark datasets (SemEval-2014 Restaurant and Laptop), and the experimental results demonstrate the effectiveness of multi-chain-of-thought prompt learning and of external knowledge assistance.
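The prompt template itself is not reproduced in this section. Purely as an illustration, the sketch below shows one way a multi-chain prompt could be organized: one instruction per reading perspective named in Section 3.1 (progressive, experiential, keyword-driven, analogical), each applied to the same sentence and aspect, with the per-chain polarity answers combined by majority vote. The instruction wording, the names `PERSPECTIVES`, `build_prompts`, and `aggregate`, and the voting rule are all hypothetical assumptions and not the MC-TPL implementation; majority voting is borrowed from self-consistency decoding (Wang et al.).

```python
from collections import Counter

# HYPOTHETICAL instructions for the four reading perspectives of Section 3.1.
# The wording is illustrative only; it is NOT the template used by MC-TPL.
PERSPECTIVES = {
    "progressive": "Read step by step: first find what is said about '{aspect}', "
                   "then infer the opinion, then give the polarity.",
    "experiential": "Imagine you experienced '{aspect}' as described. "
                    "How would you feel, and what is the polarity?",
    "keyword": "List the words describing '{aspect}', judge each one's sentiment, "
               "then give the overall polarity.",
    "analogical": "Recall a similar situation involving '{aspect}' "
                  "and use it to decide the polarity.",
}

POLARITIES = ("positive", "negative", "neutral")


def build_prompts(sentence: str, aspect: str) -> dict[str, str]:
    """Return one prompt per reading perspective for a (sentence, aspect) pair."""
    return {
        name: f"Given the sentence \"{sentence}\":\n" + instruction.format(aspect=aspect)
        for name, instruction in PERSPECTIVES.items()
    }


def aggregate(answers: list[str]) -> str:
    """Majority vote over per-chain polarity answers (assumed aggregation rule).

    Answers are normalized to lowercase; unknown labels are ignored.
    Counter.most_common keeps first-seen order on ties, so ties go to the
    label that appeared first.
    """
    normalized = (a.strip().lower() for a in answers)
    valid = [a for a in normalized if a in POLARITIES]
    if not valid:
        raise ValueError("no valid polarity among chain outputs")
    return Counter(valid).most_common(1)[0][0]


prompts = build_prompts("The food was great but the service was slow.", "service")
for name, prompt in prompts.items():
    print(f"--- {name} ---\n{prompt}")
print(aggregate(["negative", "Negative", "neutral", "positive"]))
```

Each prompt would be sent to the LLM separately; only the final polarity words are voted on here, which keeps the sketch independent of any particular model API.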

2. Related Work

2.1. Neural Network Methods in Sentiment Analysis

2.2. Pre-Trained Model Methods

2.3. Large Language Model Methods

2.4. Prompt Learning Methods

3. Methodology

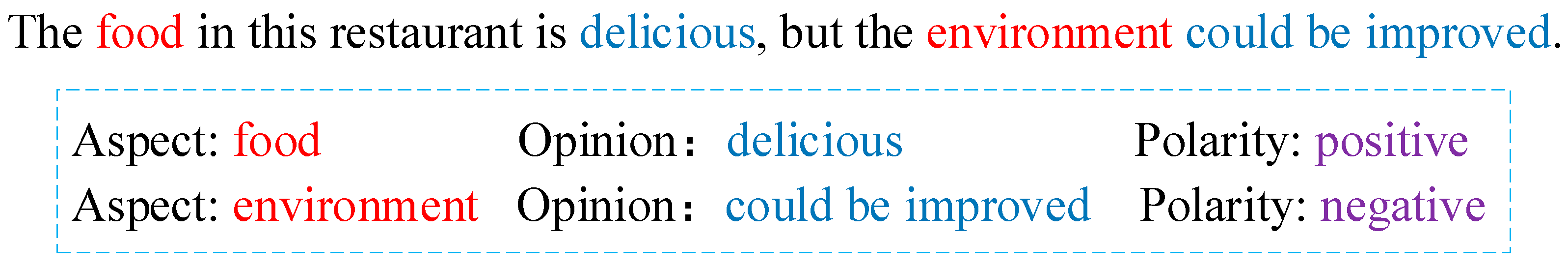

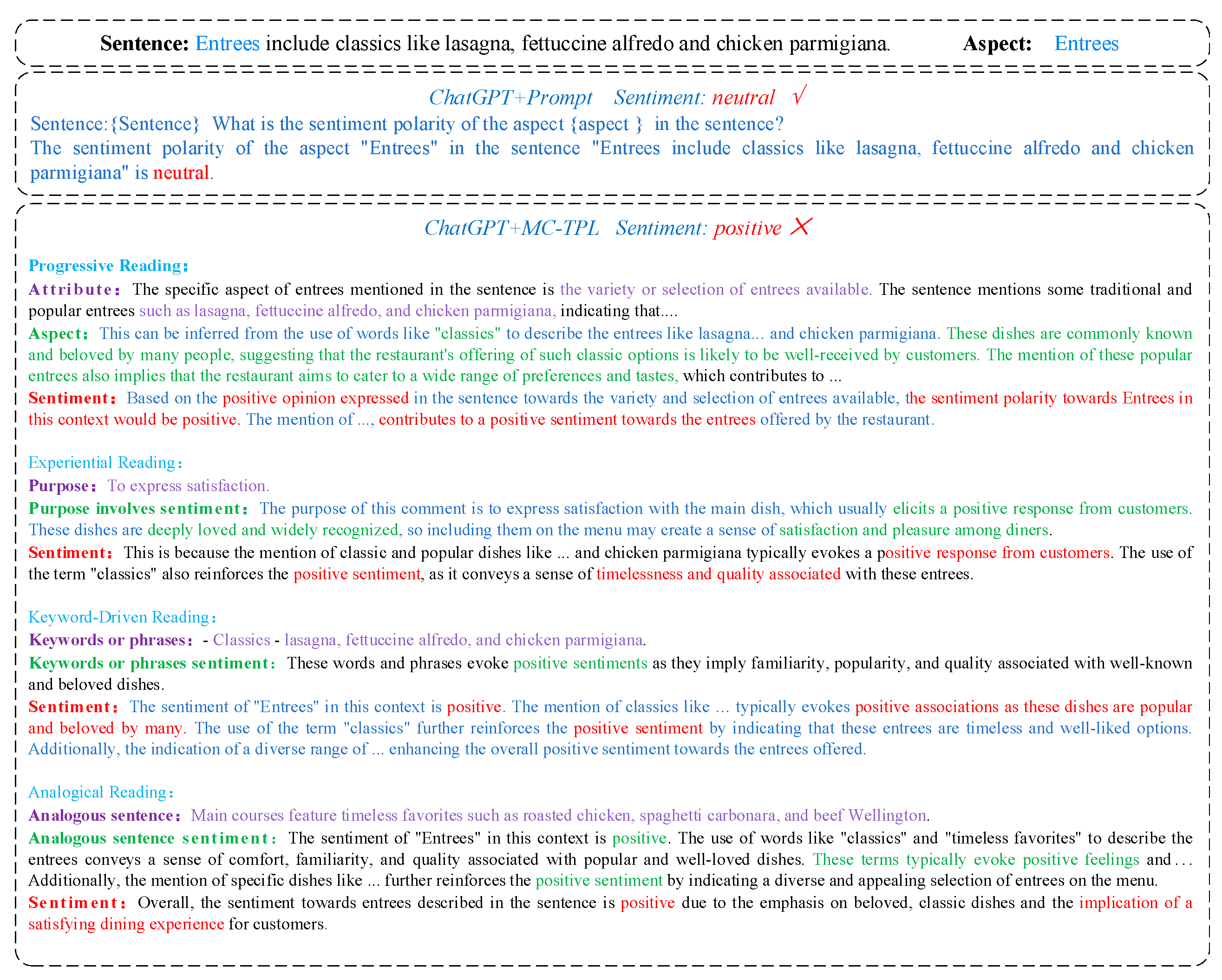

3.1. Multi-Thinking Chain Prompt Template

3.1.1. Progressive Reading

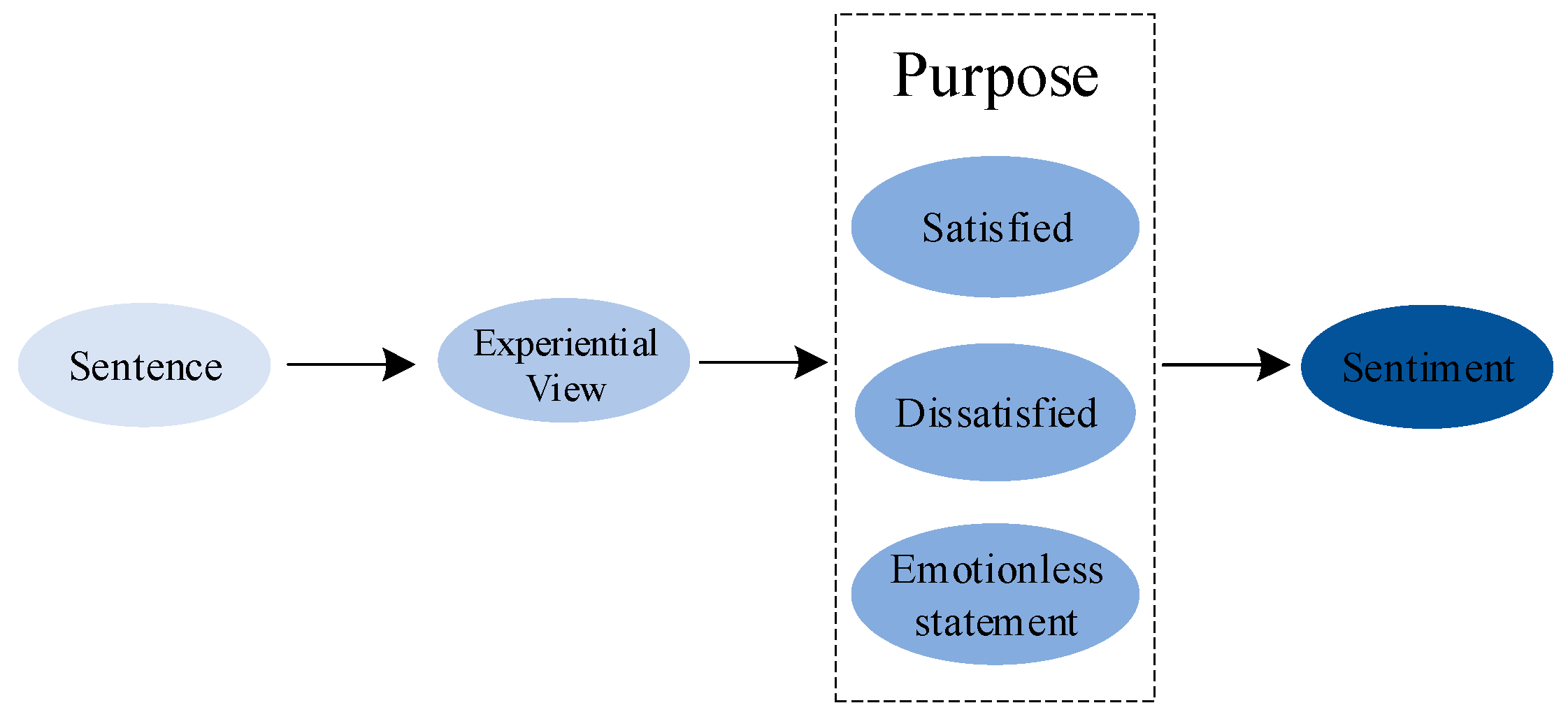

3.1.2. Experiential Reading

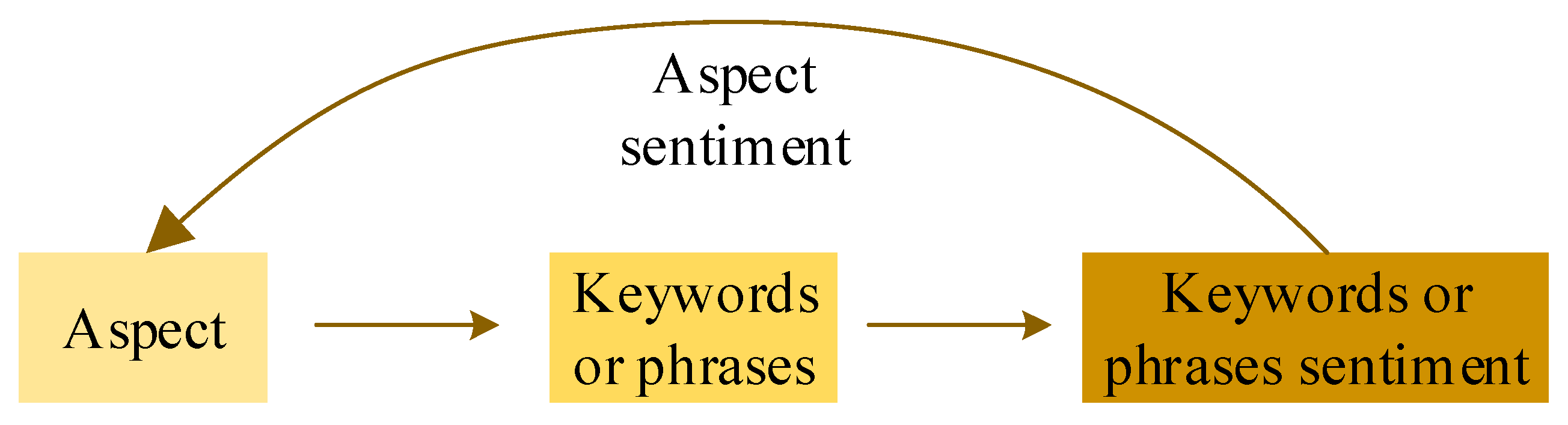

3.1.3. Keyword-Driven Reading

3.1.4. Analogical Reading

3.2. Emotional Reasoning Enhancement Strategies

4. Experiment

4.1. Relevant Parameters

4.2. Baseline Models

4.3. Comparative Experiments and Analysis

4.4. Comparative Experiments and Analysis of Implicit Sentiment Analysis

4.5. Ablation Experiment

4.6. Error Analysis

5. Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Nazir, A.; Rao, Y.; Wu, L.; Sun, L. Issues and challenges of aspect-based sentiment analysis: A comprehensive survey. IEEE Trans. Affect. Comput. 2020, 13, 845–863.

- Poria, S.; Cambria, E.; Gelbukh, A. Aspect extraction for opinion mining with a deep convolutional neural network. Knowl.-Based Syst. 2016, 108, 42–49.

- Ren, F.; Feng, L.; Xiao, D.; Cai, M.; Cheng, S. DNet: A lightweight and efficient model for aspect based sentiment analysis. Expert Syst. Appl. 2020, 151, 113393.

- Paranjape, B.; Michael, J.; Ghazvininejad, M.; Zettlemoyer, L.; Hajishirzi, H. Prompting Contrastive Explanations for Commonsense Reasoning Tasks. Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021. arXiv 2021, arXiv:2106.06823.

- Liu, J.; Liu, A.; Lu, X.; Welleck, S.; West, P.; Bras, R.L.; Hajishirzi, H. Generated knowledge prompting for commonsense reasoning. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics, Dublin, Ireland, 22–27 May 2022; pp. 3154–3169.

- Fei, H.; Li, B.; Liu, Q.; Bing, L.; Li, F.; Chua, T.S. Reasoning implicit sentiment with chain-of-thought prompting. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, Toronto, ON, Canada, 9–14 July 2023; pp. 1171–1182.

- Přibáň, P.; Šmíd, J.; Steinberger, J.; Mištera, A. A comparative study of cross-lingual sentiment analysis. Expert Syst. Appl. 2024, 247, 123247.

- Reynolds, L.; McDonell, K. Prompt programming for large language models: Beyond the few-shot paradigm. In Proceedings of the CHI Conference on Human Factors in Computing Systems, Online, 8–13 May 2021; pp. 1–7.

- Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; McGrew, B. GPT-4 technical report. arXiv 2023, arXiv:2303.08774.

- Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Xia, F.; Chi, E.; Zhou, D. Chain-of-thought prompting elicits reasoning in large language models. Adv. Neural Inf. Process. Syst. 2022, 35, 24824–24837.

- Madaan, A.; Hermann, K.; Yazdanbakhsh, A. What makes chain-of-thought prompting effective? A counterfactual study. In Proceedings of the Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023; pp. 1448–1535.

- Chen, Z.; Zhou, Q.; Shen, Y.; Hong, Y.; Sun, Z.; Gutfreund, D.; Gan, C. Visual chain-of-thought prompting for knowledge-based visual reasoning. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, Canada, 26–27 February 2024; Volume 38, pp. 1254–1262.

- Stanovich, K.E.; West, R.F.; Alder, J. Individual differences in reasoning: Implications for the rationality debate? Open peer commentary-three fallacies. Behav. Brain Sci. 2000, 23, 665.

- Evans, J.S.B. Intuition and reasoning: A dual-process perspective. Psychol. Inq. 2010, 21, 313–326.

- Sloman, S.A. The empirical case for two systems of reasoning. Psychol. Bull. 1996, 119, 3.

- Scherer, K.R.; Schorr, A.; Johnstone, T. Appraisal Processes in Emotion: Theory, Methods, Research; Oxford University Press: Oxford, UK, 2001.

- Baccianella, S.; Esuli, A.; Sebastiani, F. SentiWordNet 3.0: An enhanced lexical resource for sentiment analysis and opinion mining. In Proceedings of the International Conference on Language Resources and Evaluation, Valletta, Malta, 19–21 May 2010; Volume 10, pp. 2200–2204.

- Ding, X.; Liu, B.; Yu, P.S. A holistic lexicon-based approach to opinion mining. In Proceedings of the International Conference on Web Search and Web Data Mining, Palo Alto, CA, USA, 11–12 February 2008; pp. 231–240.

- Wang, H.; Ester, M. A sentiment-aligned topic model for product aspect rating prediction. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing, Doha, Qatar, 25–29 October 2014; pp. 1192–1202.

- Li, W.; Shao, W.; Ji, S.; Cambria, E. BiERU: Bidirectional emotional recurrent unit for conversational sentiment analysis. Neurocomputing 2022, 467, 73–82.

- Li, X.; Bing, L.; Lam, W.; Shi, B. Transformation networks for target-oriented sentiment classification. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; pp. 946–956.

- Li, W.; Zhu, L.; Shi, Y.; Guo, K.; Cambria, E. User reviews: Sentiment analysis using lexicon integrated two-channel CNN-LSTM family models. Appl. Soft Comput. 2020, 94, 106435.

- Tang, D.; Qin, B.; Feng, X.; Liu, T. Effective LSTMs for target-dependent sentiment classification. In Proceedings of the 26th International Conference on Computational Linguistics: Technical Papers, Osaka, Japan, 11–16 December 2016; pp. 3298–3307.

- Fan, F.; Feng, Y.; Zhao, D. Multi-grained attention network for aspect-level sentiment classification. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 3433–3442.

- Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. In Proceedings of the 5th International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

- Zhang, C.; Li, Q.; Song, D. Aspect-based Sentiment Classification with Aspect-specific Graph Convolutional Networks. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing, Hong Kong, 3–7 November 2019; pp. 4568–4578.

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903.

- Wang, K.; Shen, W.; Yang, Y.; Quan, X.; Wang, R. Relational Graph Attention Network for Aspect-based Sentiment Analysis. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 3229–3238.

- Li, R.; Chen, H.; Feng, F.; Ma, Z.; Wang, X.; Hovy, E. Dual graph convolutional networks for aspect-based sentiment analysis. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing, Bangkok, Thailand, 1–6 August 2021; Volume 1: Long Papers, pp. 6319–6329.

- Bai, X.; Chen, Y.; Zhang, Y. Graph Pre-training for AMR Parsing and Generation. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics, Dublin, Ireland, 22–27 May 2022; Volume 1: Long Papers, pp. 6001–6015.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 1–11.

- Schick, T.; Dwivedi-Yu, J.; Dessì, R.; Lomeli, M.; Hambro, E.; Scialom, T. Toolformer: Language models can teach themselves to use tools. Adv. Neural Inf. Process. Syst. 2023, 36, 68539–68551.

- Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Liu, P.J. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 2020, 21, 1–67.

- Yang, Z.; Einolghozati, A.; Inan, H.; Diedrick, K.; Fan, A.; Donmez, P.; Gupta, S. Improving text-to-text pre-trained models for the graph-to-text task. In Proceedings of the 3rd International Workshop on Natural Language Generation from the Semantic Web (WebNLG+), Online, 15–18 December 2020; pp. 107–116.

- Habu, R.; Ratnaparkhi, R.; Askhedkar, A.; Kulkarni, S. A hybrid extractive-abstractive framework with pre & post-processing techniques to enhance text summarization. In Proceedings of the 13th International Conference on Advanced Computer Information Technologies (ACIT), Wrocław, Poland, 21–23 September 2023; pp. 529–533.

- Yang, W.; Gu, T.; Sui, R. A faster method for generating Chinese text summaries-combining extractive summarization and abstractive summarization. In Proceedings of the 5th International Conference on Machine Learning and Natural Language Processing, Online, 23–25 December 2022; pp. 54–58.

- Deng, Y.; Zhang, W.; Pan, S.J.; Bing, L. Bidirectional generative framework for cross-domain aspect-based sentiment analysis. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, Toronto, ON, Canada, 9–14 July 2023; pp. 12272–12285.

- Zhang, W.; Li, X.; Deng, Y.; Bing, L.; Lam, W. Towards generative aspect-based sentiment analysis. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing, Bangkok, Thailand, 1–6 August 2021; pp. 504–510.

- Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving language understanding by generative pre-training. Preprint 2018.

- Wang, M.; Wang, M.; Xu, X.; Yang, L.; Cai, D.; Yin, M. Unleashing ChatGPT’s Power: A case study on optimizing information retrieval in flipped classrooms via prompt engineering. IEEE Trans. Learn. Technol. 2023, 17, 629–641.

- Ouyang, L.; Wu, J.; Jiang, X.; Almeida, D.; Wainwright, C.; Mishkin, P.; Lowe, R. Training language models to follow instructions with human feedback. Adv. Neural Inf. Process. Syst. 2022, 35, 27730–27744.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Amodei, D. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9.

- Wang, Z.; Xie, Q.; Feng, Y.; Ding, Z.; Yang, Z.; Xia, R. Is ChatGPT a good sentiment analyzer? A preliminary study. arXiv 2023, arXiv:2304.04339.

- Ping, Z.; Sang, G.; Liu, Z.; Zhang, Y. Aspect category sentiment analysis based on prompt-based learning with attention mechanism. Neurocomputing 2024, 565, 126994.

- Inaba, T.; Kiyomaru, H.; Cheng, F.; Kurohashi, S. MultiTool-CoT: GPT-3 can use multiple external tools with chain of thought prompting. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, Toronto, ON, Canada, 9–14 July 2023; pp. 1522–1532.

- Fu, Y.; Peng, H.; Sabharwal, A.; Clark, P.; Khot, T. Complexity-based prompting for multi-step reasoning. arXiv 2022, arXiv:2210.00720.

- Wang, X.; Wei, J.; Schuurmans, D.; Le, Q.V.; Chi, E.H.; Zhou, D. Self-consistency improves chain of thought reasoning in language models. arXiv 2022, arXiv:2203.11171.

- Li, Y.; Lin, Z.; Zhang, S.; Fu, Q.; Chen, B.; Lou, J.-G.; Chen, W. On the advance of making language models better reasoners. arXiv 2022, arXiv:2206.02336.

- Pontiki, M.; Galanis, D.; Pavlopoulos, J.; Papageorgiou, H.; Androutsopoulos, I.; Manandhar, S. SemEval-2014 task 4: Aspect-based sentiment analysis. In Proceedings of the 8th International Workshop on Semantic Evaluation (SemEval 2014), Dublin, Ireland, 23–24 August 2014; pp. 27–35.

- Song, Y.; Wang, J.; Jiang, T.; Liu, Z.; Rao, Y. Attentional encoder network for targeted sentiment classification. arXiv 2019, arXiv:1902.09314.

- Xu, L.; Pang, X.; Wu, J.; Cai, M.; Peng, J. Learn from structural scope: Improving aspect-level sentiment analysis with hybrid graph convolutional networks. Neurocomputing 2023, 518, 373–383.

- Wu, H.; Huang, C.; Deng, S. Improving aspect-based sentiment analysis with knowledge-aware dependency graph network. Inf. Fusion 2023, 92, 289–299.

- Li, Z.; Zou, Y.; Zhang, C.; Zhang, Q.; Wei, Z. Learning Implicit Sentiment in Aspect-based Sentiment Analysis with Supervised Contrastive Pre-Training. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Punta Cana, Dominican Republic, 7–11 November 2021; pp. 246–256.

- He, B.; Zhao, R.; Tang, D. CABiLSTM-BERT: Aspect-based sentiment analysis model based on deep implicit feature extraction. Knowl.-Based Syst. 2025, 309, 112782.

- Lawan, A.; Pu, J.; Yunusa, H.; Umar, A.; Lawan, M. Enhancing Long-range Dependency with State Space Model and Kolmogorov-Arnold Networks for Aspect-based Sentiment Analysis. In Proceedings of the 31st International Conference on Computational Linguistics, Abu Dhabi, United Arab Emirates, 19–24 January 2025; pp. 2176–2186.

- Huang, C.; Li, X.; Zheng, X.; Du, Y.; Chen, X.; Huang, D.; Fan, Y. Hierarchical and position-aware graph convolutional network with external knowledge and prompt learning for aspect-based sentiment analysis. Expert Syst. Appl. 2025, 278, 127290.

| Dataset | Positive (Train) | Positive (Test) | Negative (Train) | Negative (Test) | Neutral (Train) | Neutral (Test) |
|---|---|---|---|---|---|---|
| Restaurant | 2164 | 728 | 807 | 196 | 637 | 196 |
| Laptop | 994 | 341 | 870 | 128 | 464 | 169 |
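The split sizes and label proportions follow directly from the counts in the table above. The short script below recomputes them; the dictionary layout and the function name `split_summary` are illustrative choices, and the numbers are copied from the table.

```python
# Sentiment counts per split, copied from the dataset statistics table.
COUNTS = {
    "Restaurant": {
        "train": {"positive": 2164, "negative": 807, "neutral": 637},
        "test": {"positive": 728, "negative": 196, "neutral": 196},
    },
    "Laptop": {
        "train": {"positive": 994, "negative": 870, "neutral": 464},
        "test": {"positive": 341, "negative": 128, "neutral": 169},
    },
}


def split_summary(counts):
    """Map (dataset, split) -> (total examples, percentage share of each label)."""
    summary = {}
    for dataset, splits in counts.items():
        for split, labels in splits.items():
            total = sum(labels.values())
            shares = {label: round(100 * n / total, 1) for label, n in labels.items()}
            summary[(dataset, split)] = (total, shares)
    return summary


for (dataset, split), (total, shares) in split_summary(COUNTS).items():
    print(f"{dataset:<10} {split:<5} n={total:<5} {shares}")
```

This gives 3608/1120 training/test examples for Restaurant and 2328/638 for Laptop. The skew toward positive labels (65.0% of the Restaurant test split) is a common reason to report F1 alongside accuracy.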

| Category | Model | Restaurant14 Acc | Restaurant14 F1 | Laptop14 Acc | Laptop14 F1 |
|---|---|---|---|---|---|
| pre-train | CABiLSTM-BERT | 83.75 | 75.87 | 77.91 | 73.04 |
| | SPC-BERT | 84.46 | 76.98 | 78.99 | 75.03 |
| | RGAT-BERT | 86.33 | 81.04 | 78.21 | 74.07 |
| | HGCN | 86.45 | 80.60 | 79.59 | 76.24 |
| | KDGN | 87.01 | 81.94 | 81.32 | 77.59 |
| | MambaForGCN | 86.68 | 80.86 | 81.80 | 78.56 |
| | HPEP-GCN | 86.86 | 80.65 | 81.96 | 79.10 |
| prompt | BERT + Prompt | - | 81.34 | - | 78.58 |
| | T5-base + Prompt | - | 81.50 | - | 79.02 |
| | T5-base + CoT # | 86.16 | 79.70 | 81.03 | 77.25 |
| | T5-large + CoT # | 88.04 | 82.17 | 83.31 | 79.55 |
| ours | T5-base + MC-TPL | 87.86 | 81.99 | 83.45 | 79.62 |
| | T5-large + MC-TPL | 88.75 | 83.73 | 84.21 | 80.33 |
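The tables report accuracy (Acc) and F1. This section does not state how F1 is averaged; assuming the macro-averaged F1 over the three polarity classes that is customary for these benchmarks, both metrics can be computed as in the sketch below. The function names and the toy labels are hypothetical.

```python
LABELS = ("positive", "negative", "neutral")


def accuracy(gold, pred):
    """Fraction of aspects whose predicted polarity matches the gold label."""
    if not gold or len(gold) != len(pred):
        raise ValueError("gold and pred must be non-empty and of equal length")
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)


def macro_f1(gold, pred, labels=LABELS):
    """Unweighted mean of per-class F1 (assumed averaging scheme)."""
    if not gold or len(gold) != len(pred):
        raise ValueError("gold and pred must be non-empty and of equal length")
    scores = []
    for label in labels:
        tp = sum(g == label and p == label for g, p in zip(gold, pred))
        fp = sum(g != label and p == label for g, p in zip(gold, pred))
        fn = sum(g == label and p != label for g, p in zip(gold, pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores.append(f1)
    return sum(scores) / len(scores)


gold = ["positive", "positive", "negative", "neutral"]
pred = ["positive", "negative", "negative", "positive"]
print(accuracy(gold, pred), round(macro_f1(gold, pred), 3))
```

On the toy labels, accuracy is 0.5 while macro-F1 is about 0.389, because the neutral class is never predicted correctly and contributes an F1 of 0. This is why a model can gain on accuracy yet lag on F1, as several rows in the table show.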

| Category | Model | Restaurant14 Acc | Restaurant14 F1 | Laptop14 Acc | Laptop14 F1 |
|---|---|---|---|---|---|
| pre-train | SPC-BERT | - | 21.76 | - | 25.34 |
| | RGAT-BERT | - | 27.48 | - | 25.68 |
| | SCAPT-BERT | - | 30.02 | - | 25.77 |
| prompt | BERT + Prompt | - | 33.62 | - | 35.17 |
| | T5-base + Prompt # | 76.87 | 53.32 | 68.49 | 51.06 |
| | T5-large + Prompt # | 78.39 | 55.03 | 69.28 | 52.08 |
| | T5-base + CoT # | 77.14 | 53.58 | 68.34 | 51.34 |
| | T5-large + CoT # | 79.02 | 55.66 | 70.85 | 53.54 |
| ours | T5-base + MC-TPL | 77.67 | 54.31 | 69.43 | 51.82 |
| | T5-large + MC-TPL | 79.82 | 56.36 | 71.32 | 54.06 |
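To make the implicit-sentiment comparison concrete, the snippet below takes the CoT and MC-TPL rows for each T5 backbone from the table above and computes the per-metric difference in percentage points. The variable and function names are illustrative.

```python
METRICS = ("Restaurant14 Acc", "Restaurant14 F1", "Laptop14 Acc", "Laptop14 F1")

# Rows copied from the implicit-sentiment results table.
COT = {
    "T5-base": (77.14, 53.58, 68.34, 51.34),
    "T5-large": (79.02, 55.66, 70.85, 53.54),
}
MC_TPL = {
    "T5-base": (77.67, 54.31, 69.43, 51.82),
    "T5-large": (79.82, 56.36, 71.32, 54.06),
}


def gains(ours, baseline):
    """Absolute difference in percentage points, metric by metric."""
    return {m: round(o - b, 2) for m, o, b in zip(METRICS, ours, baseline)}


for backbone in COT:
    print(backbone, gains(MC_TPL[backbone], COT[backbone]))
```

With these rows, MC-TPL is ahead of CoT on all eight backbone/metric pairs, by between 0.47 and 1.09 points.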

| Model | Restaurant14 Acc | Restaurant14 F1 | Laptop14 Acc | Laptop14 F1 |
|---|---|---|---|---|
| Progressive | 86.25 | 79.79 | 82.28 | 78.67 |
| Experiential | 87.69 | 80.75 | 81.03 | 76.21 |
| Keyword | 86.79 | 81.67 | 81.96 | 78.25 |
| Analogical | 86.60 | 80.31 | 81.19 | 76.86 |
| MC-TPL | 87.86 | 81.99 | 83.45 | 79.62 |
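The ablation table can be read as a comparison between the combined template and the strongest single reading perspective for each metric. The sketch below picks that strongest perspective per column and reports MC-TPL's margin over it. The values are copied from the table; the helper name is hypothetical.

```python
METRICS = ("Restaurant14 Acc", "Restaurant14 F1", "Laptop14 Acc", "Laptop14 F1")

# Single-chain rows and the combined MC-TPL row from the ablation table.
SINGLE = {
    "Progressive": (86.25, 79.79, 82.28, 78.67),
    "Experiential": (87.69, 80.75, 81.03, 76.21),
    "Keyword": (86.79, 81.67, 81.96, 78.25),
    "Analogical": (86.60, 80.31, 81.19, 76.86),
}
MC_TPL = (87.86, 81.99, 83.45, 79.62)


def best_single_vs_combined(single, combined):
    """For each metric: (best single perspective, its score, combined minus best)."""
    report = {}
    for i, metric in enumerate(METRICS):
        best_name = max(single, key=lambda name: single[name][i])
        best = single[best_name][i]
        report[metric] = (best_name, best, round(combined[i] - best, 2))
    return report


for metric, row in best_single_vs_combined(SINGLE, MC_TPL).items():
    print(metric, row)
```

On these numbers no single perspective wins every column (Experiential leads Restaurant14 accuracy, Keyword leads Restaurant14 F1, Progressive leads both Laptop14 metrics), and MC-TPL exceeds the best single chain in all four, by 0.17 to 1.17 points.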

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


He, Y.; He, Z.; Gu, T.; Gu, B.; Wan, Y.; Li, M. Multi-Chain of Thought Prompt Learning for Aspect-Based Sentiment Analysis. Appl. Sci. 2025, 15, 12225. https://doi.org/10.3390/app152212225


Chicago/Turabian Style: He, Yating, Zhenzhen He, Tiquan Gu, Bowen Gu, Yaling Wan, and Min Li. 2025. "Multi-Chain of Thought Prompt Learning for Aspect-Based Sentiment Analysis" Applied Sciences 15, no. 22: 12225. https://doi.org/10.3390/app152212225
