1. Introduction

Epilepsy is one of the most common chronic neurological disorders, affecting millions of people worldwide [1, 2]. Electroencephalography (EEG), a method that measures the brain’s bioelectrical activity using electrodes placed on the scalp, plays a fundamental role in the diagnosis of this condition [3, 4]. A key aspect of EEG analysis is the identification of so-called interictal epileptiform discharges (IEDs), which are among the main indicators of epilepsy and are used, among other purposes, for localizing the epileptic focus and selecting appropriate therapy [5]. IEDs typically present as spikes (20–70 ms) or sharp waves (70–200 ms), and often appear in the form of spike-and-wave complexes where the subsequent slow wave lasts 200–500 ms [6]. Their amplitudes usually range from 50 to 300 µV. Morphologically, IEDs are characterized by a steep ascending slope and a pointed peak, which allows them to be distinguished from background activity and artifacts. Traditionally, EEG recordings are analyzed manually by experienced neurophysiologists. However, this approach is time-consuming, requires substantial expertise, and is susceptible to subjectivity and errors arising from artifacts, individual variability, or the length of the recording. Consequently, automated analysis methods based on artificial intelligence (AI), in particular deep learning, are gaining increasing importance, as they can significantly streamline and objectify the diagnostic process. Among AI techniques, convolutional neural networks (CNNs) have gained particular popularity for analyzing data with spatiotemporal structure, such as EEG signals [7, 8, 9]. CNNs are capable of automatically learning feature representations characteristic of epileptic discharges, without the need for manual design of filters or indicators. This enables the detection of complex patterns of brain activity even in the presence of substantial variability in the input data [10, 11].

In this work, we present the architecture of a lightweight CNN specifically adapted for the task of IED detection in EEG signals. The aim was to achieve a balance between classification effectiveness and computational simplicity, making the model suitable for both research applications and clinical systems.

2. Related Articles

Interictal epileptiform discharges (IEDs) are a key diagnostic marker in epilepsy [12]. They help distinguish pathological from physiological brain activity and support both the diagnosis and monitoring of the disease [13]. However, it should be emphasized that the presence of IEDs is not equivalent to a diagnosis of epilepsy. In a certain proportion of individuals, particularly children and the elderly, IEDs may appear in EEG recordings despite the absence of seizures [14]. This limits their specificity as a diagnostic marker and necessitates interpretation within the clinical context.

Detection of IEDs has significant clinical importance [15]. It facilitates the diagnosis of epilepsy, since such discharges may be visible in EEG recordings even during asymptomatic periods when the patient is not experiencing a seizure [15, 16]. Analyzing the type and location of discharges also enables the classification of epilepsy (focal vs. generalized), which directly influences the choice of therapy [17]. Detailed mapping of IED sources is further useful in planning surgical treatment, allowing precise identification of brain regions that require intervention [18, 19]. In addition, systematic monitoring of the frequency and characteristics of discharges makes it possible to evaluate the effectiveness of pharmacological treatment [20]. The detection of interictal epileptiform discharges in EEG signals has long been a challenging diagnostic task in epileptology [21, 22, 23]. The gold standard has traditionally been the subjective visual analysis of EEG by an expert, a process that is both time-consuming and susceptible to significant inter-rater variability [22]. Studies show that the agreement between experts in detecting IEDs is only moderate, and the error rate reaches as high as 30–40% depending on the type of recording and the experience of the raters [23, 24, 25].

Attempts to automate this task date back to the 1970s, ranging from pattern-matching algorithms to classical machine learning methods [26, 27]. Da Silva Lourenço et al. reviewed these classical approaches, which include Gotman’s template-matching algorithms, signal feature-based methods (e.g., time-frequency analyses), and wavelet transforms [21, 28]. These techniques have formed the foundation of spike detection for decades, though they have notable limitations in terms of generalizability [1, 28].

Contemporary approaches to the problem distinguish several main categories of IED detection methods. For example, Abdi-Sargezeh et al. [28], in a recent review, divide existing techniques into: (1) template matching, (2) signal feature-based methods (mimetic, time-frequency, non-linear), (3) matrix decompositions, (4) tensor factorizations, (5) neural networks, and (6) hybrid approaches. Traditional algorithms (categories 1–4) require expert-defined features or manually set detection thresholds. For instance, Olejarczyk et al. [1] proposed a morphological method for detecting spike-wave (SW) patterns based on shape and the synchronous presence of spikes across multiple EEG channels, achieving high sensitivity and specificity in studied patients. However, such approaches are vulnerable to inter-patient signal variability and artifacts, which hinders the development of a universal classical detector [29, 30, 31].

Deep learning, particularly convolutional neural networks (CNNs), has recently achieved a dominant position in automatic EEG analysis. CNNs can automatically learn feature representations from raw EEG signals, whereas previous algorithms required manual feature extraction [32, 33]. Within the field of automated epileptic spike detection, convolutional neural networks [34, 35, 36] and, more recently, graph convolutional networks (GCNs) [37] have emerged as some of the most extensively studied solutions. Mera-Gaona et al. [38] developed an approach combining matched filtering with a neural network for verifying detected spikes in pediatric EEG recordings. This method achieved high sensitivity and specificity. Similarly, Prasanth et al. [35] designed a CNN model that processed both raw EEG signals and band-pass filtered data, resulting in improved detection precision and a reduction in false alarms. Jing et al. [39] introduced SpikeNet, a deep neural network that exceeded expert-level performance in both IED identification and whole-recording EEG classification. The most recent work by Tong and collaborators [40] describes a transformer-based model enhanced with ICA and a clinical wave pattern database, achieving high classification accuracy while maintaining a low false detection rate. An interesting study by Lin and colleagues [29] utilized entire blocks of raw EEG signals as input to a deep convolutional neural network based on the VGG (Visual Geometry Group) architecture. Originally developed by the Visual Geometry Group at the University of Oxford for large-scale image classification, VGG consists of multiple stacked convolutional layers with small receptive fields, interleaved with pooling layers, followed by fully connected layers for classification [41]. By adapting VGG to one-dimensional EEG time-series data, the authors aimed to automatically learn discriminative temporal–spatial features relevant for the detection of interictal epileptiform discharges, achieving improved detection performance compared to traditional feature-engineering approaches.

Collectively, these studies clearly demonstrate that, in most cases, deep learning models achieve much higher accuracy, sensitivity, and specificity than classical methods. However, several challenges remain: generalization to different EEG types and populations, the need for standardized datasets, and clinical validation of models. Additionally, many of the reviewed CNN-based architectures are quite complex, featuring a large number of layers, attention mechanisms, recurrent connections, or autoencoders. While this increases their capacity to model complex EEG patterns, it also leads to longer training times, higher computational demands, and greater difficulty in implementation on portable devices. In many cases, training times are measured in hours or even days, complicating testing and deployment. Therefore, there is a growing demand for the design of lighter, optimized CNN architectures that can maintain high classification performance while reducing training time and facilitating practical application in clinical settings—including on mobile devices, bedside EEG systems, or implants. Such models may also increase clinicians’ trust due to their simpler structure and greater transparency of operation.

3. Aim of the Article

The aim of this article is to design and evaluate a lightweight convolutional neural network for the automatic detection of interictal epileptiform discharges in EEG signals. The proposed architecture has been optimized in terms of the number of trainable parameters, network depth, and the number and type of layers to provide a balance between classification performance and low computational demands. The model was tested on a large, real-world, and clinically diverse EEG dataset comprising recordings from multiple patients with various types of epileptic seizures. Its effectiveness was assessed using standard classification metrics such as AUC, precision, sensitivity, specificity, and F1-score. Special attention was given to the issue of dataset imbalance, where epochs without discharges significantly outnumber those containing IEDs.

A unique aspect of this study is the use of an optimized, simplified CNN architecture that, despite having a small number of parameters, achieves high detection performance. This makes it potentially valuable for clinical decision support systems as well as for mobile and real-time applications. Although modern computers offer high computational power and model training can be performed offline, employing a lightweight CNN architecture still has significant advantages. A reduced number of parameters results in shorter inference times, which is particularly important in applications requiring real-time analysis, such as bedside systems or ambulatory monitoring. Lower complexity also facilitates the integration of the algorithm into mobile or embedded devices and reduces the cost of computational infrastructure in long-term operation. Furthermore, simplifying the model structure enhances its stability and reduces the risk of overfitting, which is particularly important given the limited availability of high-quality medical data.

4. Materials

For the experiments, a publicly available EEG dataset published by Lin et al. [29] was used. This dataset contains 4-s EEG epochs labeled for the presence or absence of interictal epileptiform discharges. The dataset includes recordings from 84 patients: 52 patients with epilepsy who exhibited at least one IED, and 32 patients with normal EEGs. IED episodes were classified according to their location into five categories: generalized, frontal, temporal, centro-parietal, and occipital, with individual patients possibly exhibiting more than one type of discharge. Most spike and sharp wave discharges exhibited durations between 40 and 240 ms with amplitudes in the range of 16–184 µV. In contrast, spike–wave and sharp–wave complexes generally lasted longer (160–660 ms) and reached amplitudes of 26–364 µV [29]. The raw dataset comprised 25,449 four-second EEG epochs, of which 2516 contained IEDs and 22,933 were non-IEDs. For training the classifier, the dataset was stratified into training (72%), validation (18%), and test (10%) subsets, maintaining the class distribution. To address class imbalance, oversampling of the minority class (IED) was applied only in the training set, which increased its size to 32,932 epochs, while the validation and test sets contained 4570 and 2538 epochs, respectively. The recordings used the standard 19-channel 10–20 electrode placement system, along with additional EMG and ECG channels that were not included in the analysis. EEG signals were band-pass filtered between 1 and 45 Hz and resampled from 500 Hz to 100 Hz in accordance with the Nyquist theorem. The lower cutoff of 1 Hz was applied to suppress very slow components (e.g., electrode drifts, sweating artifacts, movement disturbances), while the upper cutoff of 45 Hz reduced high-frequency muscle artifacts and minimized contamination from 50 Hz power line noise. As a result, the 1–45 Hz range provided a practical balance between retaining physiologically relevant activity and ensuring robustness against artifacts. Resampling reduced the number of samples per epoch from 2000 (500 Hz × 4 s) to 400 (100 Hz × 4 s), significantly decreasing computational requirements.
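The splitting and oversampling procedure described above can be sketched as follows. This is a minimal Python illustration using only the standard library, not the published MATLAB implementation. The function names, the random seed, and the choice to oversample until both classes are exactly balanced are assumptions made for illustration, so the resulting subset sizes are close to, but not identical with, those reported in the text.

```python
import random
from collections import Counter

def stratified_split(labels, fractions=(0.72, 0.18, 0.10), seed=0):
    """Split indices into train/val/test so each subset keeps the class ratio."""
    rng = random.Random(seed)
    by_class = {}
    for idx, y in enumerate(labels):
        by_class.setdefault(y, []).append(idx)
    train, val, test = [], [], []
    for idxs in by_class.values():
        rng.shuffle(idxs)
        n_train = round(fractions[0] * len(idxs))
        n_val = round(fractions[1] * len(idxs))
        train += idxs[:n_train]
        val += idxs[n_train:n_train + n_val]
        test += idxs[n_train + n_val:]  # remainder goes to the test subset
    return train, val, test

def oversample_minority(indices, labels, seed=0):
    """Duplicate randomly chosen minority-class indices until classes are balanced
    (exact balancing is an assumption, not a detail stated in the paper)."""
    rng = random.Random(seed)
    counts = Counter(labels[i] for i in indices)
    majority_n = max(counts.values())
    out = list(indices)
    for cls, n in counts.items():
        pool = [i for i in indices if labels[i] == cls]
        out += rng.choices(pool, k=majority_n - n)
    return out

# Class counts taken from the text: 2516 IED epochs (1) and 22,933 non-IED epochs (0).
labels = [1] * 2516 + [0] * 22933
train_idx, val_idx, test_idx = stratified_split(labels)
# Oversampling touches the training subset only; validation and test stay as drawn.
train_balanced = oversample_minority(train_idx, labels)
```

Restricting the oversampling to the training indices keeps duplicated IED epochs out of the validation and test subsets, so the evaluation metrics reflect the original class distribution.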

It should be noted that examples from the same patients could appear in the training, validation, and test sets, meaning the data split was not subject-independent. Information on wake or sleep state was not considered, so the model was trained and tested on epochs originating from various physiological states of the patients. This approach makes the model robust to intra-individual variability and potentially suitable for use in diverse clinical contexts without the need for prior data segmentation.

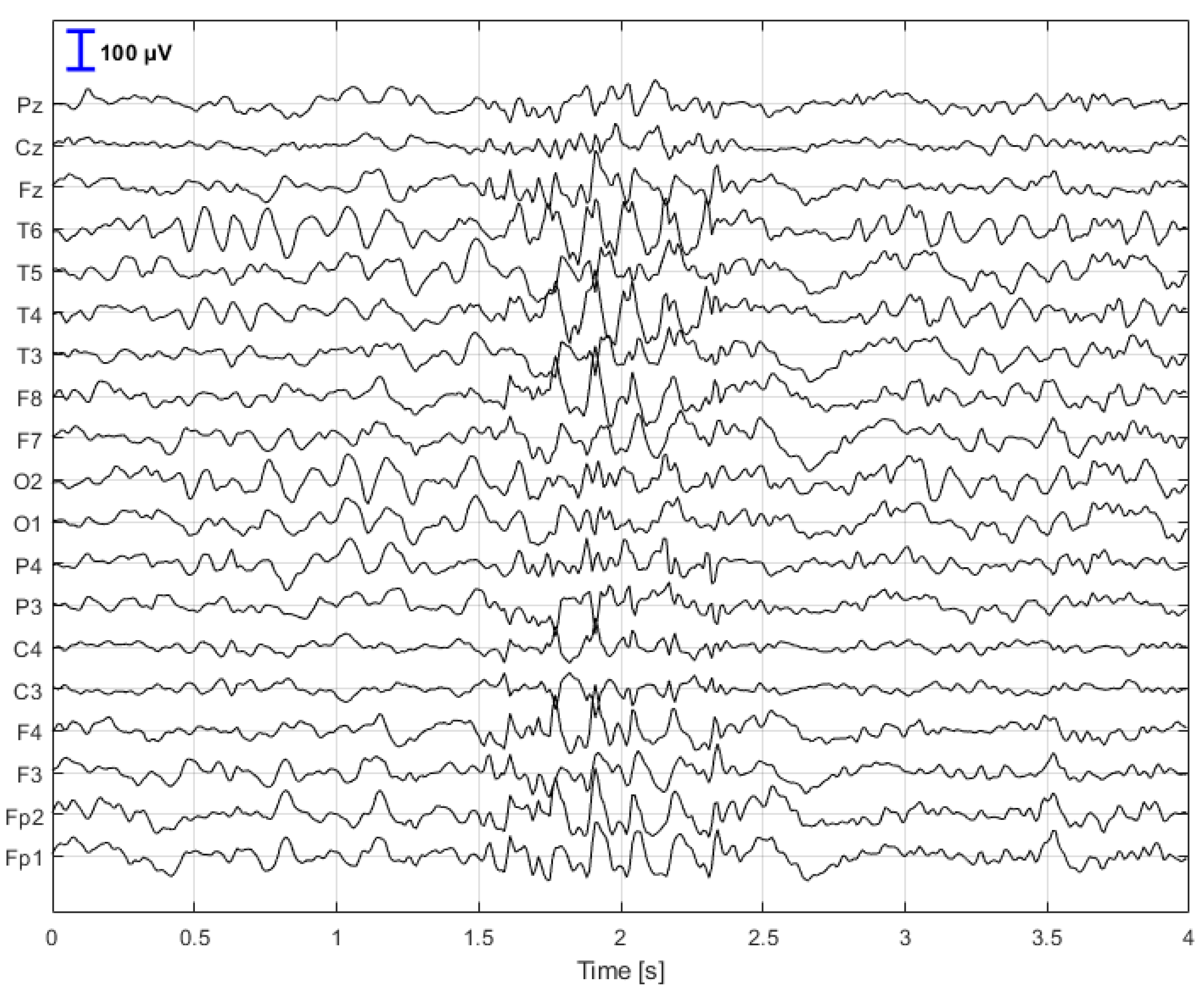

Figure 1 illustrates a sample 4-s EEG segment containing interictal epileptiform discharges, which served as the input to the CNN model.

Figure 2 depicts an example segment without interictal epileptiform discharges.

5. Methods

A convolutional neural network was employed for the detection of interictal discharges, as this type of architecture is particularly well suited for analyzing data with spatiotemporal structure, such as EEG signals. CNNs enable the automatic extraction of both local and global features from the data, eliminating the need for manual feature engineering and allowing for the detection of subtle patterns characteristic of epileptiform discharges. Moreover, a well-designed CNN can provide an effective balance between model complexity and performance, making it an attractive solution for clinical applications, including those running on devices with limited computational resources.

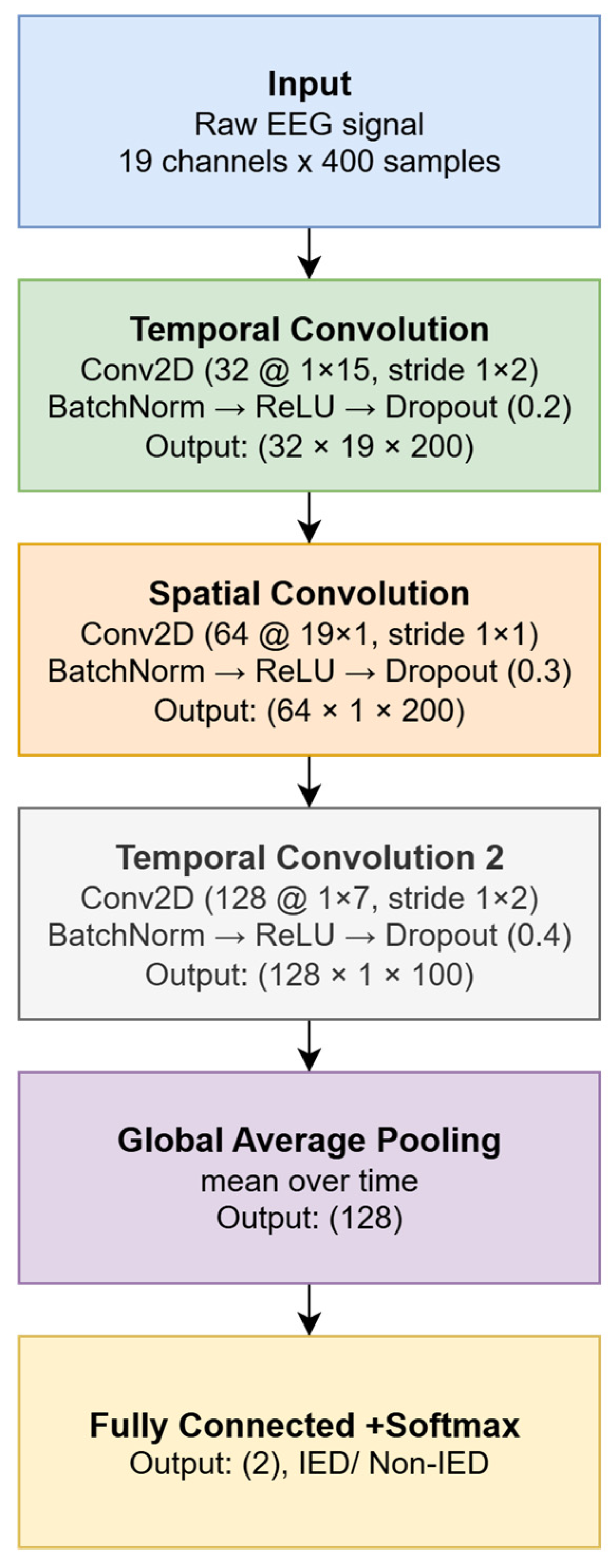

Figure 3 illustrates the block diagram of the convolutional network used to classify EEG segments as either IED or non-IED.

A simple CNN architecture was designed to process multichannel EEG signals with dimensions of 19 × 400 samples (corresponding to 4 s at a sampling rate of 100 Hz). The input layer receives normalized EEG signals, while subsequent convolutional layers extract temporal patterns and spatial relationships across channels. For this study, the input data were labeled as follows: label 0 for epochs without interictal epileptiform discharges (non-IED) and label 1 for epochs containing IEDs. These labels served as the target classes during CNN training.

The described convolutional neural network architecture was designed to enable efficient analysis of both the temporal and spatial dimensions of EEG signals. It consists of three consecutive convolutional blocks, each serving a distinct analytical function. The first block focuses on analyzing local temporal changes within individual EEG channels. It employs 32 filters of size 1 × 15 samples, moving along the time axis with a stride of 2. Appropriate padding is used to preserve the output signal length, allowing for precise detection of short-term changes characteristic of epileptic discharges. The second convolutional block performs spatial filtering, simultaneously processing all 19 EEG channels. To this end, 64 filters of size 19 × 1 are utilized, enabling the network to capture spatial dependencies between electrodes. This stage allows the model to recognize where and in what configuration characteristic patterns of brain activity occur. The third convolutional block is responsible for detecting more complex, high-level temporal patterns. Here, 128 filters of size 1 × 7 are used, with a stride of 2. This construction facilitates the aggregation of information from earlier layers and the enhancement of salient signal features typical of interictal epileptiform discharges.
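To make the layer dimensions above concrete, the following Python sketch traces the feature-map size and the number of learnable parameters through the three blocks. It rests on several assumptions that the text does not confirm: the input is treated as a single 19 × 400 feature map, the temporal convolutions use "same"-style padding so that a stride of 2 yields ceil(n / 2) time samples, the 19 × 1 spatial convolution collapses the channel axis to height 1, and each batch normalization layer has one scale and one offset per filter. The result is an estimate under these assumptions and is not meant to reproduce Table 1.

```python
import math

def conv_out_len(n, stride):
    """Output length along one axis for 'same'-style padding: ceil(n / stride)."""
    return math.ceil(n / stride)

def conv_params(kh, kw, in_ch, out_ch):
    """Weights plus one bias per filter for a 2-D convolution."""
    return kh * kw * in_ch * out_ch + out_ch

# Assumed input layout: 19 electrodes x 400 time samples x 1 feature map.
h, w, c = 19, 400, 1
layers = [
    # name, kernel height, kernel width, filters, stride along time, collapses electrode axis
    ("temporal 1x15", 1, 15, 32, 2, False),
    ("spatial 19x1", 19, 1, 64, 1, True),
    ("temporal 1x7", 1, 7, 128, 2, False),
]

total = 0
shapes = []
for name, kh, kw, filters, stride, collapse in layers:
    total += conv_params(kh, kw, c, filters)
    total += 2 * filters          # batch normalization: scale and offset per filter
    w = conv_out_len(w, stride)   # time axis shrinks with the stride
    h = 1 if collapse else h      # the 19x1 kernel spans all electrodes
    c = filters
    shapes.append((name, h, w, c))

# Global average pooling leaves one value per filter; the dense layer maps 128 -> 2.
total += c * 2 + 2

for name, hh, ww, cc in shapes:
    print(f"{name}: {hh} x {ww} x {cc}")
print("learnable parameters (estimate):", total)
```

Under these assumptions the time axis shrinks from 400 to 200 to 100 samples, and the estimate stays below one hundred thousand learnable parameters, which is consistent with the lightweight design goal.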

After each convolutional layer, batch normalization, the ReLU activation function, and dropout layers (with rates of 0.2, 0.3, and 0.4, respectively) are applied to improve the model’s generalization and reduce the risk of overfitting. At the end of the network, a global average pooling layer is used instead of the classic flatten and dense approach to limit the number of parameters. This is followed by a fully connected layer with two output neurons (for the IED and non-IED classes), a softmax layer, and a classification layer with class weighting. The final classification layer incorporates class weighting to compensate for the data imbalance between IED and non-IED epochs. The weights are set according to the following rule:

where N0 is the size of the majority class (non-IED) and N1 is the size of the minority class (IED). Assigning a weight of 1 to class 0 and increasing the weight of class 1 increases the penalty for misclassifying rare events, which in practice improves the model’s sensitivity to the IED class [42].
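The effect of class weighting on the loss can be illustrated with a short Python sketch of a class-weighted cross-entropy. The weight assigned to the IED class below is a hypothetical value chosen only for illustration and is not the weighting rule used in this study; the plain averaging over samples is likewise an assumption.

```python
import math

def weighted_cross_entropy(probs, labels, class_weights):
    """Mean class-weighted cross-entropy.

    probs  : list of [p_non_ied, p_ied] softmax outputs
    labels : list of true classes (0 = non-IED, 1 = IED)
    """
    total = 0.0
    for p, y in zip(probs, labels):
        total += -class_weights[y] * math.log(p[y])
    return total / len(labels)

# Illustrative weights only: class 0 keeps weight 1, class 1 gets a larger,
# hypothetical value. This is NOT the rule used in the paper.
weights = {0: 1.0, 1: 3.0}

# The same degree of confidence in the wrong class, once for each true class.
missed_ied = weighted_cross_entropy([[0.9, 0.1]], [1], weights)
false_alarm = weighted_cross_entropy([[0.1, 0.9]], [0], weights)
print(f"missed IED loss: {missed_ied:.3f}, false alarm loss: {false_alarm:.3f}")
```

With equal confidence in the wrong answer, the missed IED is penalized more heavily than the false alarm by exactly the ratio of the two class weights, which is the mechanism that shifts the decision balance toward the rare class.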

There are several strategies for addressing the problem of class imbalance in EEG datasets. The most commonly used approaches include methods that modify the training set (e.g., oversampling the minority class, undersampling the majority class, or generating synthetic examples using techniques such as SMOTE), as well as methods that adjust the loss function, such as focal loss, class-balanced loss, or dynamic sample weighting during training. Alternatively, data augmentation techniques can also be applied, including jittering (adding noise with controlled amplitude), amplitude scaling, time shifting, time stretching, or signal segment mixing. In this study, we combined minority-class oversampling with the use of class weights in the loss function, as this solution is relatively simple to implement, computationally stable, and allows for controlled adjustment of the model’s decision balance during training. This approach is one of the recommended methods for addressing the challenge of imbalanced datasets in machine learning, particularly in tasks where the positive class is rare and its detection is of high clinical importance.

Table 1 presents the layers and detailed parameters of each processing stage in the convolutional network architecture. The implemented CNN model operates hierarchically—initially identifying local temporal fluctuations in the EEG signal, then analyzing the spatial co-occurrence of these changes across different locations (electrodes), and finally learning more abstract and complex patterns that may indicate the presence of epileptiform discharges. This approach enables efficient extraction of diagnostic features without the need for manual feature engineering and allows for practical application of the model in clinical settings.

The network was trained using the Adam optimizer with a default learning rate of 0.001 and parameters β1 = 0.9 and β2 = 0.999. This optimizer was selected for its ability to efficiently adapt the learning rate across different layers and to achieve rapid convergence even on large and heterogeneous datasets. Training was performed for 10 epochs, with mini-batches of size 64, using validation data to monitor learning progress.

During the experiments, the training process of the convolutional network was analyzed by monitoring the loss function and classification accuracy on both the training and validation sets. The learning curves exhibited a typical pattern: a rapid decrease in error and an increase in accuracy during the initial epochs, followed by stabilization around the 8th–10th epoch. Extending the training beyond this point did not improve validation results and, in some cases, led to symptoms of overfitting, manifested by an increasing gap between training and validation errors. Based on these observations, 10 epochs were determined as the optimal training duration, providing the best compromise between classification performance and the model’s generalization ability. Extending the training to 13–15 epochs did not enhance sensitivity or AUC, whereas reducing the number of epochs to 3–7 resulted in decreased classification performance and greater instability of the learning process.

Additionally, an early stopping mechanism with a patience parameter of 5 was applied to automatically halt training if no improvement in validation metrics was observed over five consecutive evaluations. However, in the conducted experiments, this mechanism was not activated, indicating that the validation metrics never went five consecutive evaluations without improvement during the defined 10 epochs. These results suggest that the adopted architecture and set of hyperparameters ensured stable convergence of the learning process without signs of overfitting within this training budget. The fact that early stopping was never triggered is consistent with the chosen number of epochs being adequate, with no loss of sensitivity observed before training ended.
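The patience-based stopping rule can be sketched in a few lines of Python. The function name and both validation-loss curves are hypothetical and serve only to show when a patience of 5 would or would not halt training; the sketch monitors a loss that should decrease, which is an assumption about the monitored quantity.

```python
def evaluations_until_stop(val_losses, patience=5):
    """Return the 1-based evaluation at which training would stop, or None."""
    best = float("inf")
    stale = 0  # consecutive evaluations without a new best loss
    for i, loss in enumerate(val_losses, start=1):
        if loss < best:
            best = loss
            stale = 0
        else:
            stale += 1
            if stale >= patience:
                return i
    return None

# Hypothetical validation-loss curves, invented for illustration.
improving = [0.60, 0.45, 0.38, 0.33, 0.30, 0.28, 0.27, 0.265, 0.262, 0.260]
plateau = [0.60, 0.45, 0.40, 0.41, 0.42, 0.40, 0.43, 0.44, 0.45, 0.46]

print(evaluations_until_stop(improving))  # never triggers within 10 evaluations
print(evaluations_until_stop(plateau))    # triggers once five evaluations pass without a new best
```

A curve that keeps setting new minima never exhausts the patience counter, which corresponds to the situation reported above in which early stopping was not activated.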

All computations were performed in MATLAB R2024b Update 4 on a workstation equipped with an Intel(R) Core(TM) i9-14900K processor, an NVIDIA GeForce RTX 4070 SUPER graphics card, and 128 GB of RAM. The computational workload was distributed between the CPU and GPU, enabling rapid iteration and testing of various network architecture configurations. The Experiment Manager toolbox was used for network parameter selection. The MATLAB implementation of the CNN model and data preprocessing routines is publicly available at https://github.com/kolodzima/Lightweight_CNN_IED (accessed on 11 November 2025).

6. Results and Discussion

6.1. Evaluation of the Proposed CNN Performance for IED Detection

The final trained CNN was tested on a held-out test set that was not used during model training or validation. The test set consisted of EEG epochs evaluated by experts for the presence of interictal epileptiform discharges. The neural network’s prediction results were compared against reference labels assigned by specialists. Initial identification of IEDs was carried out by a single expert during routine clinical evaluation, followed by an independent review conducted by an epileptologist and an experienced EEG technician [29]. In cases of disagreement, a third expert provided the final decision, ensuring the reliability of the reference labels. This evaluation procedure allowed for an objective assessment of the model’s performance in a realistic clinical setting, while accounting for the classification challenges arising from the inherent diversity of EEG signals. The confusion matrix presented in Table 2 shows the distribution of correct and incorrect classifications made by the model relative to the expert-assigned labels. Of all epochs without IEDs, 2210 were correctly identified as negative (TN), while 77 were incorrectly classified as containing IEDs (FP). Among epochs with true IEDs, 187 were correctly detected (TP), and 64 were missed by the model (FN).

Based on this confusion matrix, several standard performance metrics for the classifier were calculated:

Accuracy = (TP + TN)/(TP + TN + FP + FN): a measure of the overall effectiveness of the classifier, indicating the percentage of all cases that were correctly classified. However, for imbalanced datasets (e.g., with a large predominance of the “non-IED” class), this metric can be misleading.

Precision = TP/(TP + FP): indicates the proportion of examples classified as positive that truly belong to the positive class. High precision means a low rate of false alarms (FP).

Recall (Sensitivity) = TP/(TP + FN): a measure indicating the percentage of actual positive cases that were correctly identified. This is especially important in medical applications, where missing, for example, an epileptic spike (FN) can have serious clinical consequences.

Specificity = TN/(TN + FP): a measure evaluating the classifier’s ability to correctly identify negative cases, i.e., those without IEDs. High specificity indicates a low number of false alarms.

F1-score = 2 × (Precision × Recall)/(Precision + Recall): the harmonic mean of precision and recall, particularly useful for imbalanced datasets. It provides an averaged assessment of the classifier’s effectiveness in detecting positive cases without ignoring FP and FN.

AUC (Area Under the ROC Curve): the area under the Receiver Operating Characteristic curve, which illustrates the trade-off between sensitivity and the false positive rate. AUC measures the model’s ability to distinguish between positive and negative classes across different decision thresholds. An AUC close to 1.0 indicates very good classification quality, while a value near 0.5 indicates random classification.
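As a worked check of the definitions above, the following Python sketch applies them to the confusion-matrix counts reported in the text (TP = 187, TN = 2210, FP = 77, FN = 64). The function name is chosen for illustration only.

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute the threshold-dependent metrics from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "specificity": tn / (tn + fp),
        "f1": 2 * precision * recall / (precision + recall),
    }

# Counts taken from the confusion matrix described in the text.
m = classification_metrics(tp=187, tn=2210, fp=77, fn=64)
for name, value in m.items():
    print(f"{name}: {100 * value:.2f}%")
```

The printed values are 94.44%, 70.83%, 74.50%, 96.63%, and 72.62% for accuracy, precision, recall, specificity, and F1-score, respectively.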

Table 3 presents a summary of the key performance metrics for the deep learning classifier used in IED detection from EEG signals. The dataset was stratified into training (72%), validation (18%), and test (10%) sets. The accuracy reached 94.44%, indicating that the model correctly classified more than 94% of all analyzed cases. High accuracy reflects strong overall performance, both in detecting IEDs and correctly identifying their absence. However, it should be noted that in imbalanced datasets (where negative cases greatly outnumber positives), accuracy alone does not provide a complete picture of model quality. The precision was 70.83%, meaning that of all the cases the model classified as containing IEDs, nearly 71% truly contained such discharges. This is a crucial parameter when assessing the effectiveness of a system for detecting potentially pathological events—a high precision reduces the number of false positives, which in clinical practice could otherwise lead to unnecessary interventions or patient anxiety.

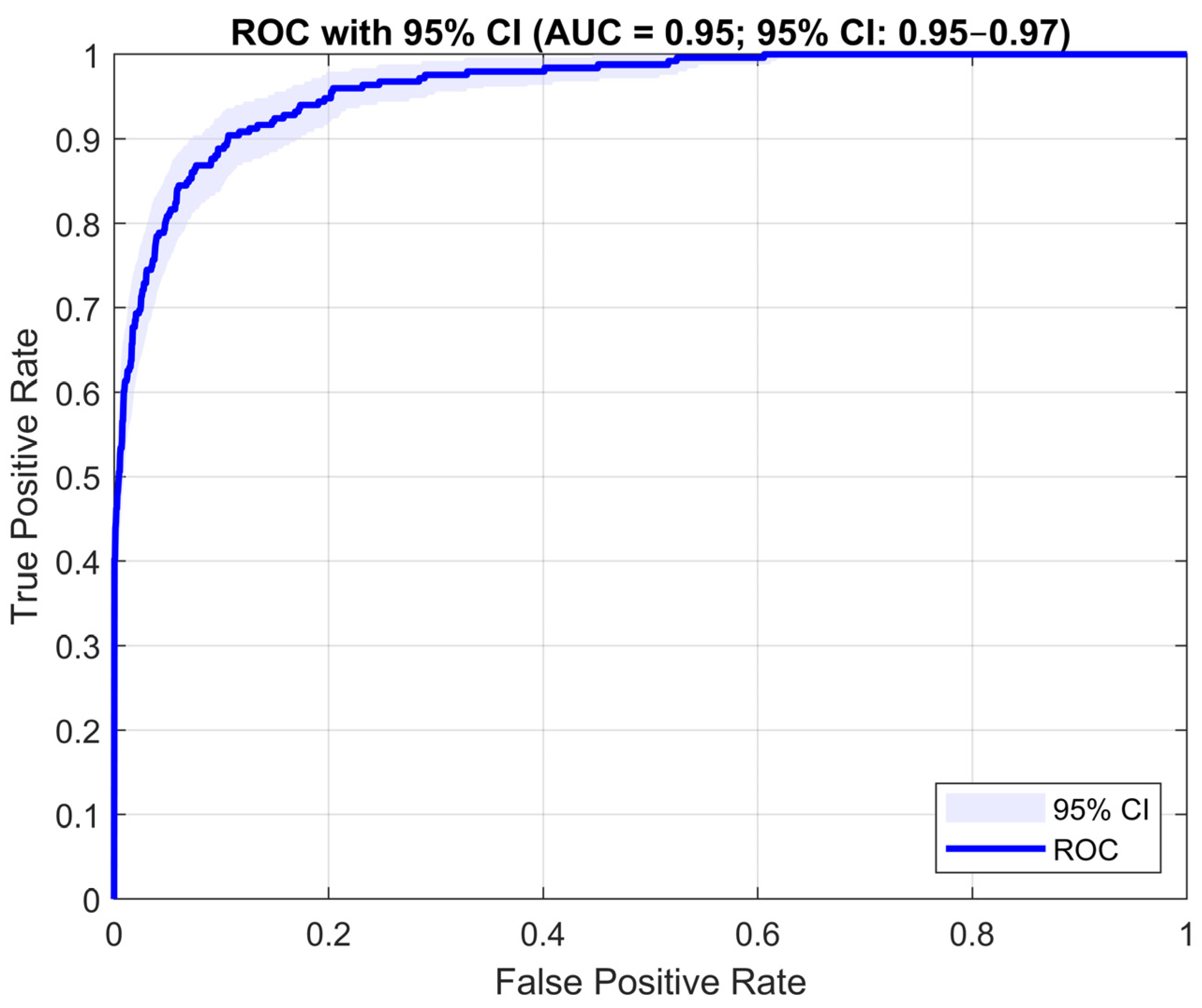

The recall (sensitivity) of 74.50% shows that the model identified nearly three-quarters of all true EEG epochs containing IEDs. High sensitivity is important for diagnostic safety, as it enables the detection of most pathological events. The specificity was 96.63%, demonstrating the model’s ability to correctly disregard signals that do not contain IEDs. This is important for minimizing false positives and increasing trust in negative predictions. The F1-score of 72.62% simultaneously reflects both precision and recall. It serves as a balanced metric, especially useful when working with imbalanced data. The obtained value suggests a stable trade-off between the ability to detect true IED cases and limiting misclassifications. The AUC reached 0.9526 (95% CI: 0.950–0.971), indicating a very strong ability of the model to distinguish between classes. The narrow confidence interval confirms the robustness of the result, and the high AUC demonstrates that the network effectively differentiates EEG signal segments containing interictal discharges from those without them, regardless of the decision threshold.
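The threshold-free character of the AUC can be seen from its rank interpretation: it equals the probability that a randomly chosen IED epoch receives a higher score than a randomly chosen non-IED epoch. The Python sketch below computes the AUC in this way on toy scores that are invented for illustration; it is not the procedure used to obtain the reported value or its confidence interval.

```python
def roc_auc(scores, labels):
    """AUC as the fraction of (positive, negative) pairs ranked correctly;
    tied scores count as one half."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

# Toy model outputs (probability of the IED class), invented for illustration.
scores = [0.95, 0.80, 0.40, 0.70, 0.30, 0.20, 0.10, 0.05]
labels = [1, 1, 1, 0, 0, 0, 0, 0]
auc = roc_auc(scores, labels)
print(f"AUC = {auc:.4f}")
```

In this toy example one IED epoch (score 0.40) is ranked below one non-IED epoch (score 0.70), so 14 of the 15 positive-negative pairs are ordered correctly.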

In summary, the designed CNN demonstrates high effectiveness in detecting epileptiform discharges in 4-s EEG segments. From a practical standpoint, the most important aspects are its high specificity (reducing the number of false alarms), adequate precision (reliability of IED detection), and a high AUC value, indicating the overall quality of the classification model.

Figure 4 presents the Receiver Operating Characteristic curve, which illustrates the relationship between sensitivity (True Positive Rate) and the false positive rate (1 − specificity) at varying decision thresholds. Although the developed algorithm demonstrates moderate sensitivity (74.5%), it remains a valuable tool in clinical applications, serving as a Clinical Decision Support System (CDSS). Rather than replacing the expert, it provides automatic preselection of EEG segments with an increased likelihood of containing interictal discharges, enabling the clinician to significantly reduce analysis time and focus on the most diagnostically relevant portions of the recording. In practice, the model classifies individual EEG signal blocks and indicates time intervals where epileptiform discharges are most likely to occur. By reviewing these suggested segments, the physician can quickly verify detection accuracy, confirm the presence of discharges, and assess their morphology and spatial extent.

In clinical diagnostics, the system can therefore act as a kind of “virtual EEG assistant” that:

automatically scans long EEG recordings for potential IEDs,

indicates the time and approximate spatial location of each detected event,

allows for visual verification of the results by a specialist,

supports clinical decision-making in identifying epileptogenic regions.

Thus, despite the limitations typical of deep learning–based algorithms, the proposed model proves useful for semi-automatic EEG analysis, serving as a support tool for clinicians rather than a replacement for their judgment. The combination of automatic detection, post-processing, and result visualization enhances clinical workflow efficiency, enabling faster, more targeted, and more objective identification of epileptic foci.

6.2. Evaluation of a Subject-Independent Approach to IED Detection

To fully evaluate the effectiveness of the IED detection system, a subject-independent approach was applied. This approach requires that the evaluation be performed using a data split in which all recordings from a given patient are assigned exclusively to either the training or the test set. Such a setup eliminates information leakage and enables a reliable assessment of the model’s generalization capability. In this study, EEG recordings were divided with respect to subject identity. Data were collected from 68 individuals, of whom 47 were randomly assigned to the training set (29 with IEDs and 18 without IEDs) and 21 were randomly assigned to the test set (13 with IEDs and 8 without IEDs). The split ratio of approximately 70% for training and 30% for testing ensured that no recordings from the same subject appeared in both sets simultaneously.
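A subject-wise split of this kind can be sketched in Python as follows. The subject identifiers and epoch counts are hypothetical, and the sketch assigns subjects purely at random without balancing the number of subjects with and without IEDs, so it does not reproduce the exact 47/21 partition described above.

```python
import random

def split_by_subject(subject_ids, test_fraction=0.3, seed=0):
    """Assign whole subjects to train or test so that no subject appears in both."""
    rng = random.Random(seed)
    subjects = sorted(set(subject_ids))
    rng.shuffle(subjects)
    n_test = round(test_fraction * len(subjects))
    test_subjects = set(subjects[:n_test])
    train_idx = [i for i, s in enumerate(subject_ids) if s not in test_subjects]
    test_idx = [i for i, s in enumerate(subject_ids) if s in test_subjects]
    return train_idx, test_idx, test_subjects

# Hypothetical data: 68 subjects, each contributing a varying number of epochs.
subject_ids = [f"S{k:02d}" for k in range(68) for _ in range(5 + k % 7)]
train_idx, test_idx, test_subjects = split_by_subject(subject_ids)

train_subjects = {subject_ids[i] for i in train_idx}
print(len(train_subjects), "training subjects,", len(test_subjects), "test subjects")
```

Because the partition is made over subject identifiers rather than over individual epochs, every epoch of a given subject lands on the same side of the split, which is what prevents information leakage between training and testing.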

The developed convolutional neural network (CNN) model was then trained to classify EEG signals into two categories: IED and Non-IED. Training was conducted using the Adam optimizer for 10 epochs with mini-batches of 64 samples, utilizing a GPU environment. As in the previous approach, class imbalance was mitigated using oversampling of IED samples and logarithmic class weighting in the loss function. The evaluation was conducted on data originating exclusively from subjects not used in the training process (30% of the dataset). The following key performance metrics were calculated: accuracy, precision, recall, specificity, F1-score, and AUC. Additionally, a per-subject analysis was carried out to assess the model’s stability and its ability to generalize under real inter-subject variability.

Table 4 presents a summary of the key performance metrics of the developed deep learning classifier used for IED detection in EEG signals. The reported results refer to all test segments combined, without differentiation by subject.

The achieved accuracy of 94.08% indicates that the model correctly classified over 94% of all analyzed segments. This high accuracy demonstrates the overall strong performance of the network, both in detecting IED discharges and in correctly identifying EEG segments without such events. The obtained precision of 70.19% indicates that among all segments classified by the model as containing IEDs, approximately 7 out of 10 indeed included such discharges. This parameter is clinically important, as high precision reduces the number of false alarms that could otherwise lead to unnecessary diagnostic interventions. The recall (sensitivity) of 66.50% shows that the model detected about two-thirds of all true IED discharges. High sensitivity is crucial for diagnostic safety, as it ensures that most pathological events in the EEG signal are captured. The specificity of 97.01% confirms that the network effectively identifies EEG segments without IEDs, minimizing the number of false detections. High specificity increases the reliability of negative predictions and reduces false alerts. The F1-score (68.30%) represents a balanced measure that combines precision and recall, reflecting a trade-off between the model’s ability to detect true IEDs and its resistance to false classifications. The AUC value of 0.93 indicates a strong ability of the model to distinguish between classes regardless of the chosen decision threshold. This high value confirms that the developed CNN effectively differentiates EEG segments containing IED discharges from those without, ensuring robust classification performance across the entire test set.

Table 5 presents the classification results from a per-subject perspective, i.e., calculated separately for each patient in the test set. The analysis reveals considerable variation in model performance across subjects, reflecting the natural variability present in EEG signals. For patients whose test sets did not contain any EEG segments with IEDs (e.g., DA00100B–DA00100N, DC11304D, DC11304I), the network achieved 100% accuracy and specificity, correctly identifying all segments as belonging to the Non-IED class. In these cases, precision and recall are reported as zero. This is expected: with no positive examples and no positive predictions, both metrics are undefined, so the zeros do not indicate detection errors.

Among patients with IED discharges present in their recordings, classification results were more varied. The highest performance was observed for cases such as DA00103G (ACC = 1.00, SPEC = 1.00, REC = 1.00), DA00102S (ACC = 0.97, PREC = 0.92, SPEC = 1.00), and DA00102T (ACC = 0.89, PREC = 0.95, REC = 0.91), confirming the model’s strong ability to correctly identify both positive and negative EEG segments. In contrast, lower results were obtained for patients such as DA00100V (ACC = 0.77, PREC = 0.30) and DA00103P (ACC = 0.94, REC = 0.15), indicating difficulties in detecting rare or atypical IED patterns. The variation in performance across patients suggests that the effectiveness of IED detection depends on individual EEG characteristics, recording quality, and the class balance within each patient’s data. Despite these differences, most cases maintained high accuracy and specificity values, confirming the overall stability and robustness of the model in subject-independent conditions.
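A per-subject evaluation of this kind can be sketched as below. The function name `per_subject_metrics`, the subject labels, and the example predictions are hypothetical; the convention of reporting 0.0 for a metric whose denominator is empty mirrors how subjects without IED segments appear in the table, and is an assumption about how those values were produced.

```python
from collections import defaultdict

def per_subject_metrics(subject_ids, y_true, y_pred):
    """Group segments by subject and compute metrics for each subject separately."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for subject, truth, pred in zip(subject_ids, y_true, y_pred):
        if truth == 1:
            key = "tp" if pred == 1 else "fn"
        else:
            key = "fp" if pred == 1 else "tn"
        counts[subject][key] += 1

    results = {}
    for subject, c in counts.items():
        predicted_pos = c["tp"] + c["fp"]
        actual_pos = c["tp"] + c["fn"]
        actual_neg = c["tn"] + c["fp"]
        results[subject] = {
            "accuracy": (c["tp"] + c["tn"]) / sum(c.values()),
            # Undefined ratios (empty denominator) are reported as 0.0.
            "precision": c["tp"] / predicted_pos if predicted_pos else 0.0,
            "recall": c["tp"] / actual_pos if actual_pos else 0.0,
            "specificity": c["tn"] / actual_neg if actual_neg else 0.0,
        }
    return results

# Hypothetical example: subject "A" has no IED segments, subject "B" has two.
subjects = ["A", "A", "A", "B", "B", "B", "B"]
y_true = [0, 0, 0, 1, 1, 0, 0]
y_pred = [0, 0, 0, 1, 0, 0, 1]
table = per_subject_metrics(subjects, y_true, y_pred)
```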

6.3. Selection of the Convolutional Network Architecture

The optimal convolutional neural network architecture was determined through an extensive search carried out with the MATLAB Experiment Manager tool. Networks consisting of two, three, or four convolutional layers were analyzed. A total of 900 configuration variants were tested, varying the number of layers (2–4), the number of filters (8–128), kernel sizes (2–15 time samples), the presence and type of pooling layers (max pooling or average pooling), dropout rates (0.2–0.4), and the use of batch normalization. Exploring these parameters in combination made it possible to analyze how each component affected classification performance.
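To illustrate how such a search space is enumerated, the sketch below builds a full factorial grid in Python. The search itself was run in MATLAB Experiment Manager; the specific values inside each range, the dictionary keys, and the function name `enumerate_configs` are assumptions made for illustration, so the resulting count does not reproduce the 900 variants reported above.

```python
from itertools import product

# Illustrative grid: only the range endpoints come from the text,
# the intermediate values are assumptions.
search_space = {
    "n_conv_layers": [2, 3, 4],
    "n_filters": [8, 16, 32, 64, 128],
    "kernel_size": [2, 3, 5, 7, 11, 15],   # in time samples
    "pooling": ["none", "max", "average"],
    "dropout": [0.2, 0.3, 0.4],
    "batch_norm": [False, True],
}

def enumerate_configs(space):
    """Return one dict per combination of hyperparameter values."""
    keys = list(space)
    return [dict(zip(keys, values)) for values in product(*(space[k] for k in keys))]

configs = enumerate_configs(search_space)

def select_best(results):
    """Pick the configuration with the highest AUC from a list of result dicts."""
    return max(results, key=lambda r: r["auc"])
```

A full grid grows multiplicatively with each added parameter, which is one reason a search of this kind is computationally expensive.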

The final architecture was selected empirically, with the Area Under the Curve (AUC) serving as the primary selection criterion. For each configuration, the AUC was calculated on the test set, and the model achieving the highest score was chosen for further analysis. It was observed that networks with a small number of filters (e.g., 8) and small convolutional kernels were unable to extract representative features from EEG signals—the training process often failed to converge or resulted in poor classification performance (AUC < 0.7). These results confirmed that both the number of filters and the kernel length are crucial for effectively capturing the complex spatiotemporal patterns characteristic of IED discharges. Additionally, an ablation study was performed, involving the gradual removal of selected network components. The analysis showed that the batch normalization layer significantly stabilized the training process, dropout improved the model’s generalization ability, and removing the global average pooling layer led to a 3–4% decrease in AUC. Based on these observations, a final three-layer “time–space–time” architecture was selected as the optimal compromise between model complexity and representational capacity, ensuring high IED detection performance while maintaining a low number of parameters and good cross-subject generalization.

Although a large number of experiments were conducted with different hyperparameter configurations, it was not feasible to present the full set of results in this work. Therefore, only the best-performing configurations, selected with AUC as the main evaluation criterion, are reported. The entire experimental process was highly time-consuming, with many tested networks requiring several days of computation for a single training run.

During model development, inspiration was drawn from existing EEG analysis frameworks, particularly the EEGNet architecture. The proposed model retains the time–space processing sequence but implements it in a simplified form consisting of three compact convolutional blocks with empirically selected receptive fields. This approach substantially reduces computational complexity and parameter count while maintaining high representational capacity and stable learning performance, even when the number of training examples is limited. Unlike deeper models from the VGGEEG family, which employ numerous layers and kernels designed for image analysis, the proposed network uses filters specifically tailored to the characteristics of EEG signals. This design more accurately represents the true spatiotemporal structure of EEG data, improves feature extraction efficiency, and facilitates neurophysiological interpretability of the results. Consequently, the developed architecture represents a deliberate balance between complexity and generalizability, capturing both the morphology and topography of IEDs while maintaining implementation simplicity, shorter training time, and strong resistance to overfitting.

6.4. Explainability and Model Interpretation

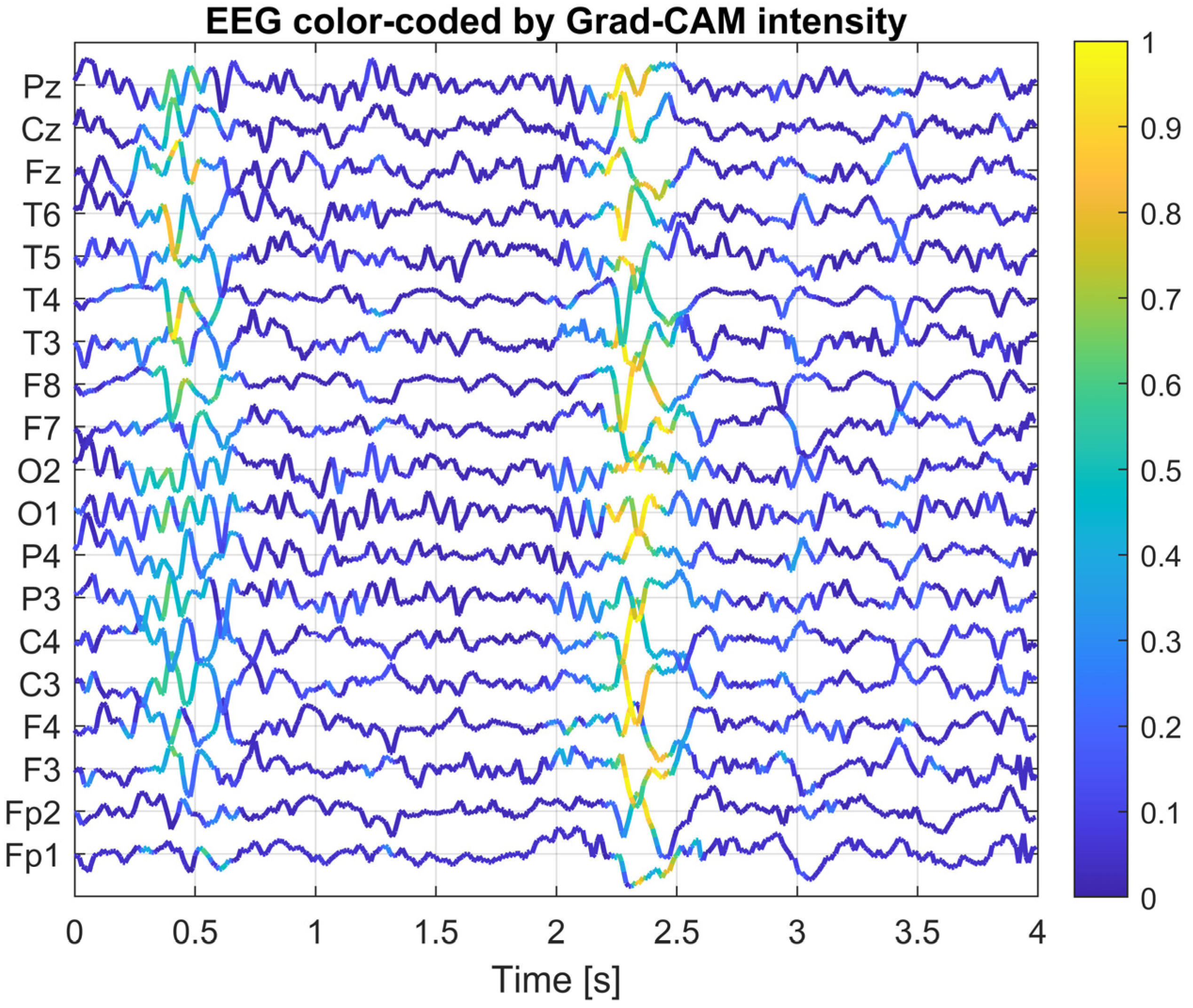

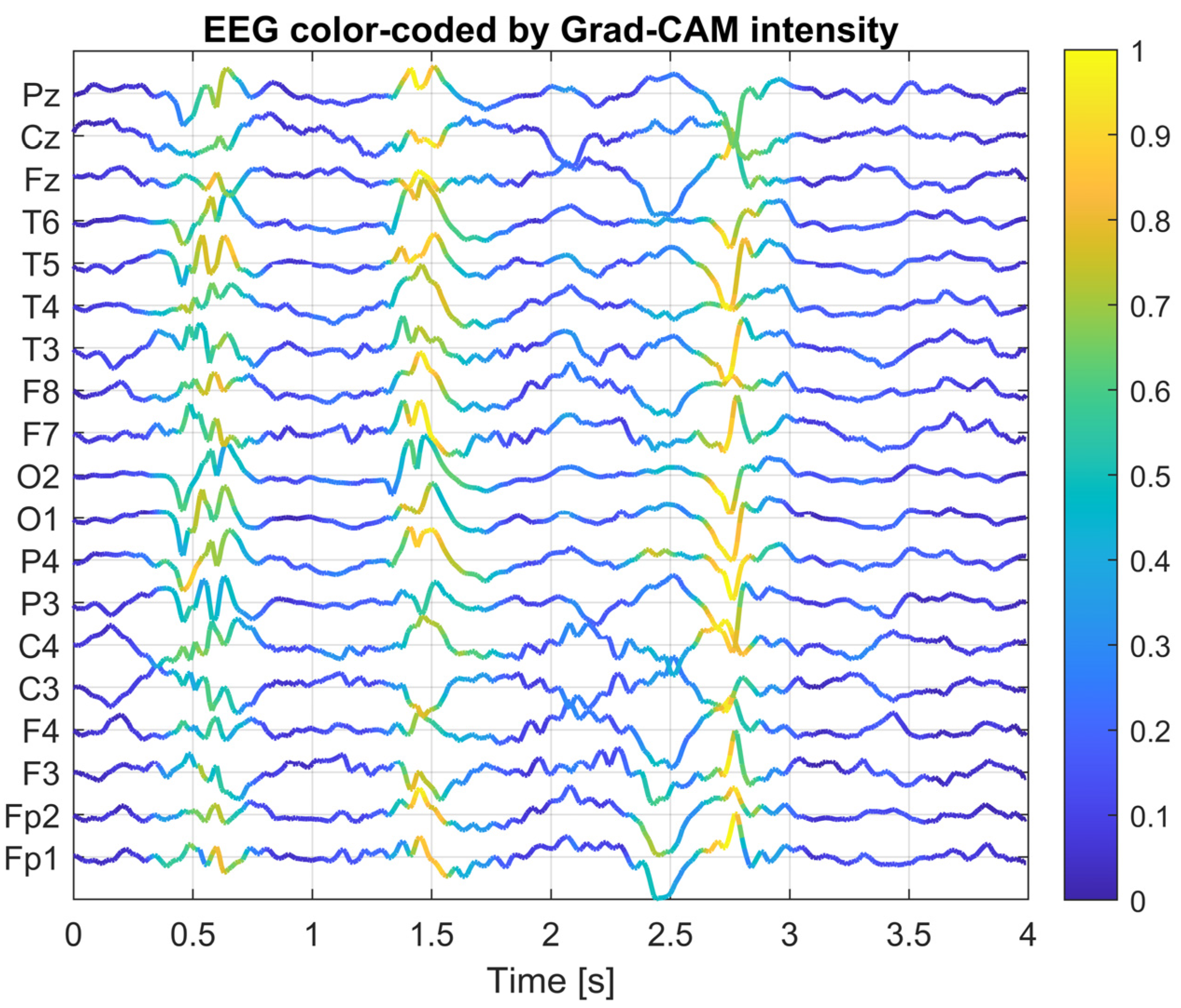

To gain a deeper understanding of how the designed convolutional neural network operates, an explainability analysis was conducted using Grad-CAM (Gradient-weighted Class Activation Mapping) and saliency map visualization methods. The goal of this analysis was to identify which portions of the EEG signal and which channels had the greatest influence on the classifier’s decision in the context of interictal epileptiform discharge (IED) detection.

The Grad-CAM method computes the local contribution of gradients from the network output (for the IED class) with respect to the activations of a selected convolutional layer. This produces a two-dimensional activation map, where the intensity of each value reflects the importance of a given location in the EEG signal for classification. In this study, the last convolutional layer of the network was chosen as the reference layer, as it is responsible for detecting complex temporal patterns after prior spatial integration. This layer has the most significant impact on the final classification outcome, as it aggregates information about the temporal dynamics and spatial topology of the signal following earlier filtering stages. For selected EEG segments, composite plots were generated, where the color intensity of each EEG channel over time was proportional to the corresponding Grad-CAM activation value. This allows for simultaneous visualization of both the EEG waveform and its relevance to the model’s decision-making process.
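The core Grad-CAM computation can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the implementation used in the study: it assumes that the activations of the reference layer and the gradients of the IED class score with respect to them have already been extracted as arrays of shape (filters, rows, time steps). The function name `grad_cam` is hypothetical, the final scaling to [0, 1] is a display convenience, and resizing the map to the input resolution is omitted.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Standard Grad-CAM map from layer activations and class-score gradients.

    activations, gradients: arrays of shape (n_filters, n_rows, n_times).
    Returns an array of shape (n_rows, n_times) scaled to [0, 1].
    """
    # One importance weight per filter: the gradient averaged over all positions.
    weights = gradients.mean(axis=(1, 2))
    # Weighted sum of the feature maps over the filter axis.
    cam = np.tensordot(weights, activations, axes=1)
    # Keep only locations that push the score toward the target class.
    cam = np.maximum(cam, 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam

# Toy example with two filters, one row, and three time steps.
acts = np.array([[[1.0, 2.0, 3.0]], [[3.0, 2.0, 1.0]]])
grads = np.array([[[1.0, 1.0, 1.0]], [[-1.0, -1.0, -1.0]]])
heatmap = grad_cam(acts, grads)  # only the last time step receives nonzero relevance
```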

Figure 5, Figure 6 and Figure 7 present Grad-CAM visualization results for three randomly selected EEG segments containing IEDs. The color of the EEG traces varies according to the Grad-CAM activation intensity at each time point. The X-axis represents time (0–4 s), while the Y-axis corresponds to EEG channels, ordered from frontal electrodes (bottom) to posterior electrodes (top). Warm colors (yellow) highlight signal fragments to which the model assigns high decision relevance, whereas cool colors (blue) indicate areas with lower importance for classification. This visualization approach enables concurrent observation of EEG morphology and the temporal–spatial locations of features that most strongly influenced the CNN’s decision, thereby facilitating interpretation and validation of the model’s behavior. The visualizations indicate that the network focuses its attention on short, sharp deflections in the EEG signal (20–200 ms), particularly within central and temporal channels, which is consistent with the typical characteristics of epileptiform activity. These methods confirm that the model’s decisions are not arbitrary, but rather based on morphologically and topographically meaningful features of the EEG signal.

The Grad-CAM and saliency map analyses thus provide interpretable insight into the CNN’s decision-making process, revealing which components of the EEG signal contribute most to IED detection. This enhances the transparency and trustworthiness of applying deep learning techniques in EEG analysis. The use of such visualization techniques has both validation and practical value. The trained CNN, combined with Grad-CAM visualization, can serve as a support tool for neurophysiologists, enabling rapid localization of potential IED foci and drawing attention to EEG fragments that require closer examination. This form of result presentation creates a bridge between traditional EEG interpretation by specialists and AI-assisted automatic analysis, improving the interpretability of algorithmic decisions and facilitating their integration into clinical workflows. It can also significantly accelerate the diagnostic process and improve its overall efficiency.

6.5. Comparison with Other Studies and Results

Since this study used exactly the same dataset as Lin et al. [29], it was possible to make a direct comparison of the classification results. In both cases, the EEG signals were segmented into 4-s epochs labeled as either containing interictal epileptiform discharges or not. The only significant modification in our pipeline was the downsampling of the signal from the original 500 Hz to 100 Hz, which reduced the number of samples and simplified the network architecture without losing essential temporal information.

Our simple CNN model achieved an accuracy of 94.44%. For comparison, in the study by Lin et al., the VGGEEG model at 80% sensitivity obtained a precision of 74.2% and a specificity of 97.0% [29]. In our case, the precision was lower (70.83% vs. 74.2%), which means a larger share of false alarms among positive predictions. Similarly, the sensitivity of our model (74.50%) was lower than the 80% reported by Lin et al., indicating that our network is more conservative and detects fewer IED cases. On the other hand, the specificity of 96.63% was very close to the 97.0% achieved by VGGEEG, confirming the high effectiveness of both approaches in recognizing normal signals. Our model also achieved an F1-score of 72.62%, which is slightly lower than the value that can be calculated for VGGEEG from its reported precision and sensitivity (~77%). Additionally, our ROC-AUC of 95.26% confirms good class separability, although Lin et al. reported only an AUPRC of 0.858, which makes direct comparison difficult. An advantage of our approach is the significantly reduced number of parameters. Calculations showed that the network contains only about 97,000 trainable parameters (including 32 filters in the first layer, 64 in the second, and 128 in the third), while the VGGEEG model consists of as many as 13 convolutional layers and exceeds 3 million parameters. Such complexity negatively impacts training time and increases the risk of overfitting, especially in settings with limited data.
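The F1 values quoted above can be checked directly from the reported precision and sensitivity figures, since F1 is their harmonic mean. The short sketch below does this arithmetic; the function name `f1_from_precision_recall` is illustrative.

```python
def f1_from_precision_recall(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

# VGGEEG (Lin et al.): precision 74.2% at 80% sensitivity.
f1_vggeeg = f1_from_precision_recall(0.742, 0.80)

# Proposed CNN: precision 70.83%, sensitivity 74.50%.
f1_ours = f1_from_precision_recall(0.7083, 0.7450)

print(f"VGGEEG F1 = {f1_vggeeg:.3f}, proposed CNN F1 = {f1_ours:.4f}")
```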

EEGNet is a compact architecture originally designed for BCI tasks (P300, SSVEP, MI), based on depthwise separable convolutions, which drastically reduces the number of parameters and supports generalization with small datasets [43]. Our network provides a higher learning capacity, using a classical convolutional architecture with a global average pooling layer, and is tailored to capture complex spatiotemporal patterns associated with IEDs, which are often characterized by variable morphology and frequent artifacts. This architectural choice promotes higher precision and specificity (fewer false alarms), at the cost of lower sensitivity. These differences align with the observed trade-off between recall and precision on imbalanced data.

As part of the tests, we also conducted experiments with the EEGNet architecture, using identical training parameters, the same output layer, and evaluation conditions comparable to those for our CNN. EEGNet achieved an accuracy of 91.84%, while our CNN architecture reached 94.44%. In terms of precision, the difference was even more pronounced (70.83% for our CNN vs. 55.82% for EEGNet), indicating a significantly lower number of false alarms in our model. On the other hand, EEGNet demonstrated higher sensitivity (84.06% vs. 74.50%), confirming its stronger tendency to detect IED events, at the expense of lower reliability of positive predictions. Our network, however, achieved better specificity (96.63% vs. 92.70%) and a higher F1-score (72.62% vs. 67.09%), indicating a more favorable balance between precision and sensitivity. Regarding global measures, EEGNet obtained a slightly higher AUC (96.21% vs. 95.26%), while the overall advantage of our architecture lies in fewer false alarms and more stable classification of non-IED cases.

It should be noted, however, that a simplified architecture may have limitations—particularly in detecting complex spatiotemporal patterns. The lack of advanced mechanisms such as attention, recurrent layers (LSTM/GRU), or autoencoders can limit the model’s flexibility. On the other hand, for mobile and embedded applications (e.g., ambulatory EEG), a lightweight CNN architecture such as the one presented in this study may offer a favorable trade-off between accuracy and computational efficiency.

6.6. Practical Implementation of CNN for Interictal Discharge Detection

The proposed method based on a convolutional neural network for the detection of interictal epileptiform discharges in EEG signals offers several advantages in terms of automation, scalability, and the potential for integration with existing diagnostic workflows. Nevertheless, its practical implementation requires careful consideration of various technical and clinical factors.

One of the key strengths of the proposed approach is its relatively simple network architecture, which translates into lower computational demands during both training and inference. The computational complexity of our network was estimated at approximately 520.6 MFLOPs per inference sample (for a 4-s EEG segment with 19 input channels). In practical tests on a computer, the average processing time per sample was 690 µs, confirming the high computational efficiency of the proposed architecture. This makes it potentially suitable for real-time systems or devices with limited processing power.
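The real-time margin implied by these figures can be made explicit with simple arithmetic. The sketch below uses only the two numbers stated above (4-s segments, 690 µs average processing time); the variable names are illustrative, and the calculation ignores preprocessing and data transfer overhead, which is an assumption.

```python
segment_duration_s = 4.0   # length of one EEG segment
latency_s = 690e-6         # average processing time per segment

# How many times faster than real time a single stream is processed.
realtime_factor = segment_duration_s / latency_s   # about 5797

# How many 4-s segments can be classified per second of compute time.
segments_per_second = 1.0 / latency_s               # about 1449

print(f"real-time factor: {realtime_factor:.0f}x, "
      f"throughput: {segments_per_second:.0f} segments/s")
```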

Table 6 presents a comparison of inference times for two neural network architectures: the deep VGGEEG network and the simplified Lightweight CNN. Both models were used to classify 4-s EEG segments but differ significantly in complexity and data processing strategy. The VGGEEG-like model consists of four convolutional blocks with 3 × 3 filters and pooling layers, processing signals sampled at 500 Hz (2000 samples). In contrast, the Light CNN operates on signals downsampled to 100 Hz (400 samples) and employs a shallower structure composed of three convolutional blocks with global feature aggregation.

The results reveal clear differences in processing time—the simplified network achieves significantly shorter inference times on both CPU and GPU, confirming the effectiveness of computational complexity reduction. The decrease in the number of layers and the lower sampling frequency resulted in a substantial acceleration of inference—approximately 60× faster on CPU and 27× faster on GPU. These findings indicate that the Light CNN can be effectively applied in real-time applications, where low processing latency is crucial for efficient EEG diagnostic system performance. Additionally, the proposed method is purely data-driven and does not rely on hand-crafted features, which simplifies the EEG preprocessing stage and may improve the model’s robustness to morphological variability in the signals.

6.7. Future Work

This study relied on a publicly available dataset and did not involve a clinical trial. Future research should therefore include prospective validation in clinical settings to confirm the applicability of the proposed method in real-world diagnostics. Moreover, our ongoing work will focus on extending the analysis to additional EEG datasets and, ultimately, to a clinical trial with patient participation. Since the model was trained and tested on a single dataset with 19 EEG channels and a specific electrode configuration, its generalizability to other datasets, montages, or acquisition systems cannot be guaranteed. For practical deployment, the following steps are necessary:

Verification that the model achieves comparable results across different EEG systems.

Investigation of the impact of signal referencing (e.g., CAR, bipolar) or channel normalization on classification performance.

Assessment of the model’s robustness to signal variability caused by artifacts.

Validation on additional datasets with varying demographics, acquisition protocols, and clinical contexts.

Another important aspect is the compatibility of EEG signal preprocessing. The way input data are prepared (e.g., filtering, resampling, epoch length, channel selection) must match the data on which the model was trained; otherwise, classification accuracy may decline. The current implementation assumes a fixed sampling rate (100 Hz) and epoch length (4 s). To enable use in real-time or multi-center environments, these preprocessing steps should either be standardized or made adaptable.
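A simple guard against mismatched input is to validate each epoch before it reaches the model. The sketch below checks the three properties stated in this section (19 channels, 100 Hz, 4 s); the function name `check_epoch` and the constant names are hypothetical, and properties such as filtering or referencing cannot be verified from the array shape alone.

```python
EXPECTED_CHANNELS = 19
EXPECTED_FS_HZ = 100
EXPECTED_EPOCH_S = 4

def check_epoch(n_channels, n_samples, fs_hz):
    """Return a list of mismatches between an input epoch and the training setup."""
    expected_samples = EXPECTED_FS_HZ * EXPECTED_EPOCH_S  # 400 samples
    problems = []
    if n_channels != EXPECTED_CHANNELS:
        problems.append(f"expected {EXPECTED_CHANNELS} channels, got {n_channels}")
    if fs_hz != EXPECTED_FS_HZ:
        problems.append(f"expected {EXPECTED_FS_HZ} Hz, got {fs_hz} Hz (resample first)")
    if n_samples != expected_samples:
        problems.append(f"expected {expected_samples} samples, got {n_samples}")
    return problems

# A 4-s epoch still at the original 500 Hz fails two checks.
issues = check_epoch(n_channels=19, n_samples=2000, fs_hz=500)
```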

The performance of the algorithm may be partially affected by common EEG artifacts, such as muscle (EMG) activity, eye movements, and electrode contact noise. In the present study, no separate analysis was conducted to assess the model’s robustness to such disturbances; however, the band-pass filtering and signal normalization applied during preprocessing helped to mitigate their impact. In future work, we plan to extend the method with a module for automatic artifact detection and rejection, which should enhance the reliability of classification and reduce the number of erroneous decisions under clinical conditions.

From a clinical perspective, the model can provide preliminary classifications, assisting neurophysiologists in analyzing lengthy EEG recordings. Such a semi-automated approach can significantly accelerate IED detection, especially in routine monitoring or large-scale analyses. Nevertheless, final verification and interpretation should always be performed by an expert, as false positives or negatives may have significant clinical consequences.

An additional advantage of the method is its potential integration with clinical decision support systems, which could help balance the workload of medical staff and increase the consistency of assessments. It is also worth emphasizing that the simplicity of the architecture promotes model transparency, which is important for clinical deployment.