1. Introduction

Human Activity Recognition (HAR) aims to identify and classify the physical activities performed by an individual or a group of individuals, depending on the specific application context. This recognition process involves understanding and interpreting various movements and actions, which can range from simple daily tasks to more complex activities. For instance, activities such as walking, running, jumping, or sitting can be performed by a single person, each involving distinct patterns of body motion and posture changes. These tasks require the system to capture variations in body movements to accurately detect and classify the activities being performed. HAR systems are designed to extract meaningful information from these movements, making it possible to monitor, analyze, and respond to human behavior in diverse scenarios. This capability is particularly valuable in fields such as healthcare, where monitoring patient mobility can be crucial, or in surveillance, where detecting suspicious actions can enhance security. By accurately recognizing and distinguishing these activities, HAR technologies contribute significantly to improving the responsiveness and efficiency of automated systems in various domains [

1,

2]. Certain activities are carried out through the movement of specific body parts, such as making gestures with one’s hands [

3,

4]. In some instances, activities involve interacting with objects, such as preparing meals in the kitchen [

5,

6]. Several surveys have comprehensively reviewed the development of wearable sensors and multimodal interfaces for human activity recognition [

7,

8,

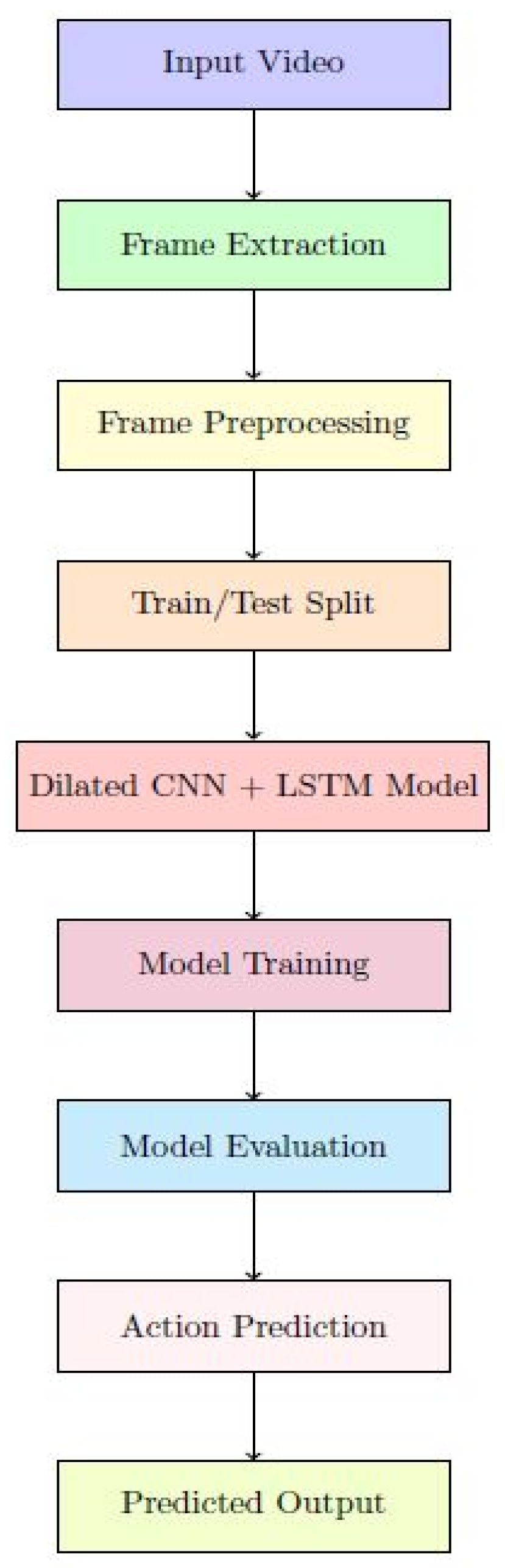

9]. The process of recognizing human activities using deep learning architectures, particularly Convolutional Neural Networks (CNNs), involves multiple critical stages that form a cohesive and complex system. A general framework for a CNN-based activity recognition. The initial phase focuses on the selection and integration of appropriate sensing devices tailored to the specific recognition tasks. Following this, data acquisition is conducted, where edge devices are utilized to capture data from various input sources. This data is then transmitted to a centralized server using communication technologies like Wi-Fi or Bluetooth.

Edge computing plays a crucial role in this architecture. It brings computational and storage capabilities closer to the data source, which enables efficient, real-time processing. Sensors deployed for data collection can transmit information directly to edge servers, which process the data promptly. Edge computing therefore yields low-latency responses, making the architecture particularly suitable for applications that require real-time activity recognition.

The authors in [8] conducted an in-depth review of HAR frameworks that use accelerometer data. Their analysis covered parameters that are critical for processing time-series data, such as sampling rates, window sizes, and overlap percentages. The review also examined feature extraction techniques and highlighted key factors that influence the effectiveness of HAR systems. Cornacchia et al. [9] emphasized the use of wearable sensors in HAR systems, covering a wide range of sensor types, including pressure sensors, accelerometers, gyroscopes, depth sensors, and hybrid modalities. They classified recent HAR studies by the sensor data processing techniques employed, particularly those leveraging machine learning algorithms.
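A short sketch can make the windowing parameters discussed in [8] concrete. The function below segments a multichannel accelerometer stream into fixed-length windows with a fractional overlap. `sliding_windows` is a hypothetical helper and does not come from any cited work. The 50 Hz rate, 128-sample window, and 50% overlap are illustrative values only.

```python
import numpy as np

def sliding_windows(signal, window_size, overlap):
    """Split a (time, channels) sensor signal into fixed-size, possibly overlapping windows.

    overlap is a fraction in [0, 1): 0.5 means consecutive windows share half their samples.
    Trailing samples that do not fill a complete window are dropped.
    """
    if not 0 <= overlap < 1:
        raise ValueError("overlap must be in [0, 1)")
    signal = np.asarray(signal)
    if len(signal) < window_size:
        return np.empty((0, window_size) + signal.shape[1:], dtype=signal.dtype)
    step = max(1, int(round(window_size * (1 - overlap))))  # hop between window starts
    starts = range(0, len(signal) - window_size + 1, step)
    return np.stack([signal[s:s + window_size] for s in starts])

# Illustrative values: 20 s of 3-axis accelerometer data sampled at 50 Hz,
# split into 128-sample windows (2.56 s) with 50% overlap.
sampling_rate_hz = 50
signal = np.random.default_rng(0).normal(size=(20 * sampling_rate_hz, 3))
windows = sliding_windows(signal, window_size=128, overlap=0.5)
print(windows.shape)  # (14, 128, 3)
```

Higher overlap produces more, highly correlated training windows. Zero overlap treats each window as an independent segment.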

Additionally, Beddiar et al. [10] provided a comprehensive survey on the latest advancements in HAR, focusing on significant features like the types of activities recognized, input data formats, validation methods, targeted body parts, and camera viewpoints used in data collection. Their review involved a comparative analysis of state-of-the-art methods based on the diversity of activities they could detect. Furthermore, they offered a detailed overview of vision-based datasets, which are crucial for advancing HAR research by providing standardized benchmarks for model evaluation. Collectively, these studies highlight the rapid advancements in HAR technologies, particularly in leveraging wearable sensors and machine learning techniques. They emphasize the need for continued innovation in sensor fusion, data processing, and feature extraction to develop robust systems capable of real-time and accurate activity recognition across diverse environments.

Moreover, incorporating edge devices reduces the dependency on cloud infrastructure by enabling localized data processing, which is vital for maintaining data privacy and reducing network congestion. Thus, the entire system is designed to handle large-scale, continuous data streams efficiently, which is essential for complex tasks like human activity recognition in dynamic environments.

In recent years, deep learning (DL) algorithms have gained significant traction because they can automatically extract features from complex data, including images and sequential time-series data. This eliminates the need for manual feature engineering, which is often time-consuming and domain-specific, and makes DL models efficient and adaptable across diverse applications. As a result, DL methods have been widely adopted in areas such as computer vision, natural language processing, and predictive analytics, where high-dimensional data is prevalent. The flexibility and robustness of DL models in capturing intricate patterns have made them a preferred choice for tasks requiring accurate, data-driven insights [11,12].

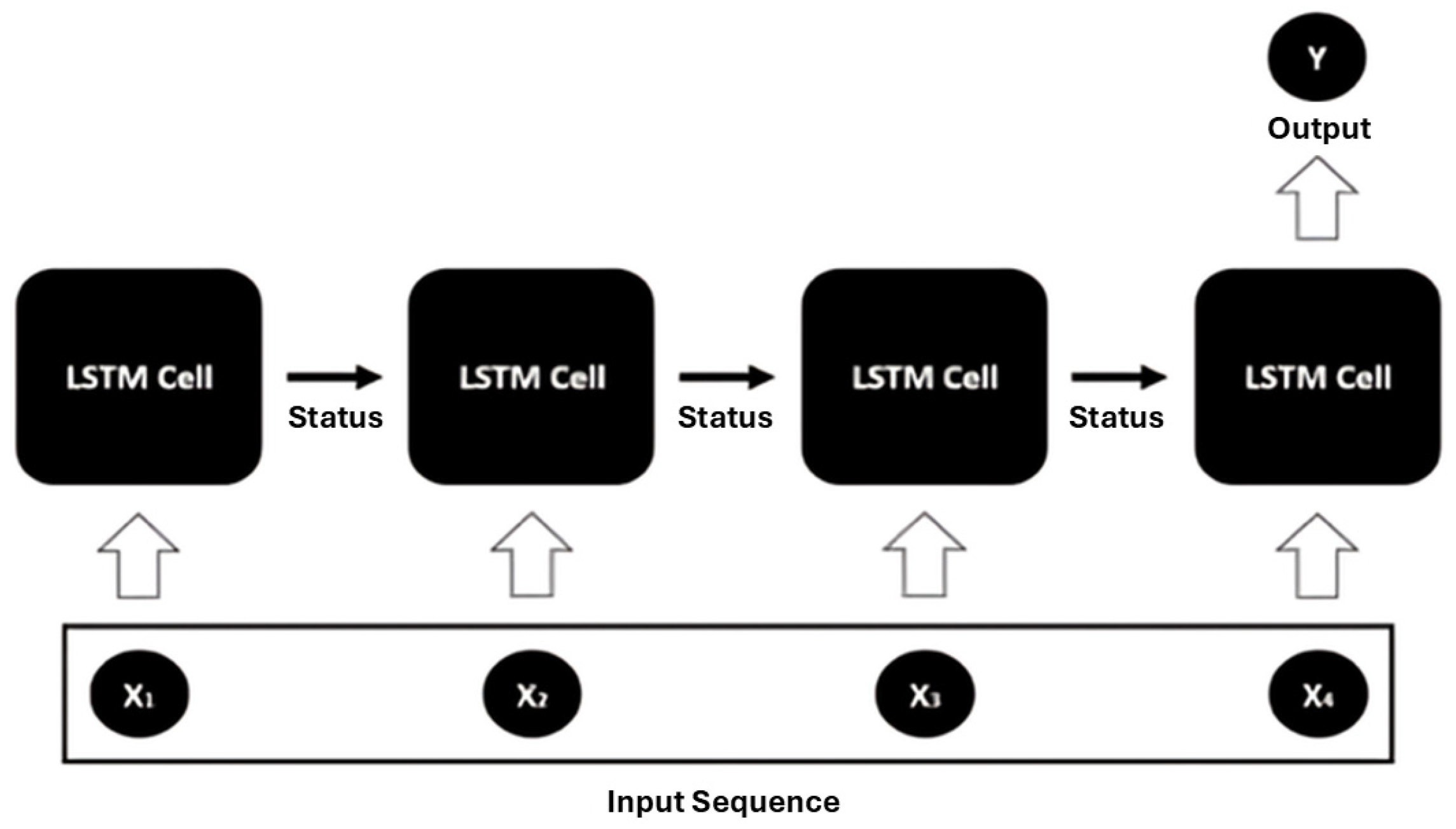

Convolutional Neural Networks (CNNs) are known to learn hierarchical features, progressing from low-level to high-level representations. Researchers have observed that CNN-extracted features tend to outperform traditional handcrafted ones. In recent years, substantial effort has gone into designing neural networks that capture the spatiotemporal characteristics of human activity. Many studies adopt deep learning in this area because it automates the extraction and learning of the hierarchical features that behavior analysis depends on, and the resulting systems have shown promising outcomes. Given the strong performance of DL techniques across diverse domains, it is unsurprising that DL-based models are increasingly applied to identification, prediction, and intention recognition. In particular, Recurrent Neural Networks (RNNs) have achieved notable success in behavior analysis, and the Long Short-Term Memory (LSTM) architecture is the most prevalent variant. LSTMs extend RNNs with gated memory cells that manage long-term temporal dependencies. This makes them well suited to the sequential, time-dependent data found in human behavior analysis.

Uddin et al. [11] proposed a HAR approach based on a distinctive feature descriptor, the Adaptive Local Motion Descriptor (ALMD), which builds on the Local Ternary Pattern (LTP). The ALMD captures human motion and appearance within video sequences, and a random forest classifier performs the recognition. The method was validated on three well-known datasets (KTH, UCF Sports, and UCF-50) and achieved high accuracy.

Similarly, Luvizon et al. [

12] proposed a skeleton-sequence–based HAR method that learns discriminative combinations of pose-derived features. Starting from per-frame joint coordinates (and their temporal variations), their deep model aggregates joint trajectories over time and automatically weights the most informative joints and motions, producing a compact spatio-temporal representation for action classification. By focusing on skeleton cues rather than appearance, the approach is robust to background and illumination changes and reports competitive results on standard skeleton-based benchmarks. Gao et al. [

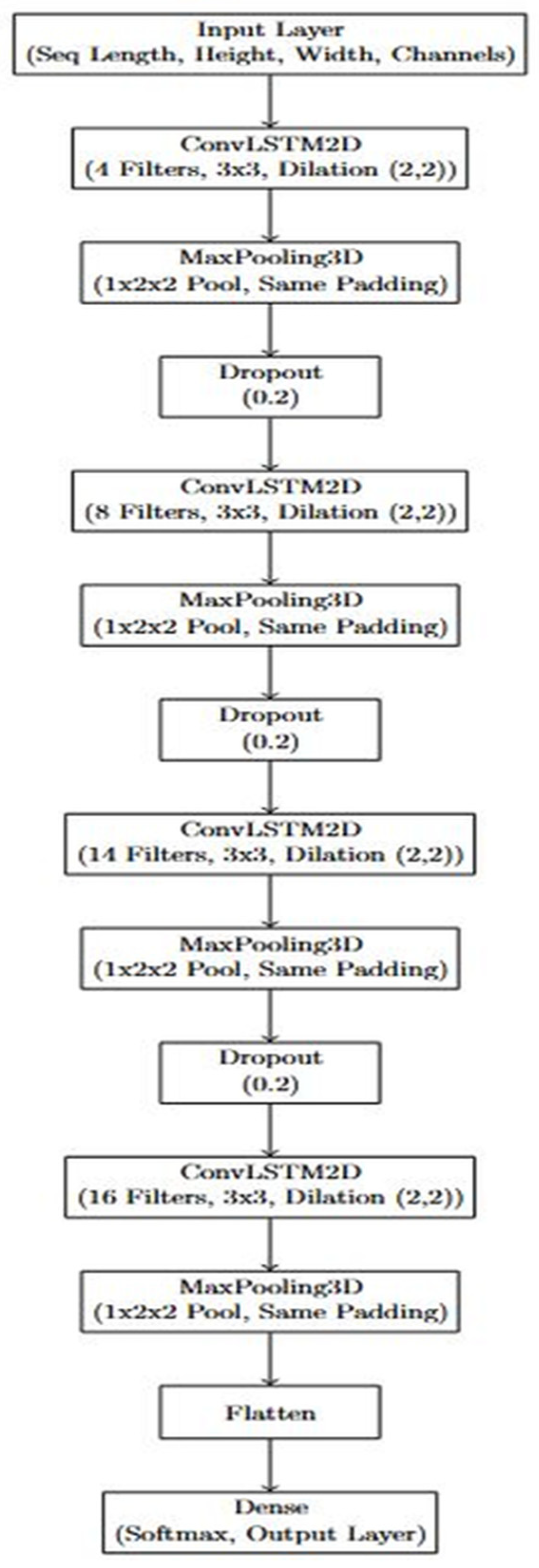

13] introduced an advanced model for multidimensional Human Activity Recognition (HAR) by leveraging image set-based analysis and group sparsity techniques. This method begins with the extraction of dense trajectory features, followed by the construction of a codebook using k-means clustering. The resulting Bag-of-Words (BoW) representation is then utilized alongside the codebook to recognize actions from multiple viewpoints. The effectiveness of this approach was evaluated across three well-known datasets: Northwestern UCLA, IXMAX, and CVS-MV-RGBD-Single. Experimental results demonstrated that this method significantly enhances recognition accuracy and achieves robust performance, especially in scenarios involving complex multi-view action sequences. By focusing on multi-dimensional feature representation, Gao et al.’s model offers a promising solution for recognizing activities across different perspectives and conditions. To enhance the performance of human activity recognition, my proposed approach integrates Convolutional Neural Networks (CNNs) with dilated convolutions alongside Long Short-Term Memory (LSTM) networks. The incorporation of dilated convolutions significantly reduces computational complexity while maintaining a broader receptive field, allowing the model to capture essential spatiotemporal features more effectively. This design not only accelerates the training process but also optimizes resource efficiency, leading to superior performance compared to traditional methods. The synergy of CNNs for spatial feature extraction, combined with LSTMs for handling temporal dependencies, results in more accurate recognition of human activities. The proposed approach demonstrates remarkable improvements in accuracy and training speed, offering a robust alternative to conventional deep learning models in this domain. Lightweight networks used in activity recognition frequently implement grouped convolution techniques.
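A minimal NumPy sketch makes the receptive-field argument concrete. It assumes single-channel inputs and stride 1. `dilated_conv2d` and `receptive_field` are illustrative helpers. The dilation rates 1, 2, 4 are example values, not the configuration used in the proposed model.

```python
import numpy as np

def dilated_conv2d(image, kernel, dilation=1):
    """Valid 2D cross-correlation (as used in CNN layers) with a dilated kernel.

    The kernel keeps its kh x kw weights; dilation inserts (dilation - 1) gaps
    between adjacent taps, so the same weights cover a larger input area.
    """
    kh, kw = kernel.shape
    eh, ew = dilation * (kh - 1) + 1, dilation * (kw - 1) + 1  # effective extent
    out_h, out_w = image.shape[0] - eh + 1, image.shape[1] - ew + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            taps = image[i:i + eh:dilation, j:j + ew:dilation]  # kh x kw sampled pixels
            out[i, j] = np.sum(taps * kernel)
    return out

def receptive_field(kernel_size, dilations):
    """Receptive field of stacked stride-1 convolutions: 1 + sum_l d_l * (k - 1)."""
    return 1 + sum(d * (kernel_size - 1) for d in dilations)

# Three stacked 3x3 layers: the same 9 weights per kernel, different coverage.
print(receptive_field(3, [1, 1, 1]))  # 7
print(receptive_field(3, [1, 2, 4]))  # 15
```

Both stacks use the same number of weights per kernel. The dilated stack, however, covers a 15 × 15 region instead of 7 × 7.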

2. Literature Review

Several factors, such as background noise, varying viewpoints, and changes in lighting, can significantly affect how human activities appear in real-time video. These factors introduce variability that obscures human actions and makes them difficult to observe and recognize. Traditional activity recognition techniques address this by extracting specific features from the video data to classify different patterns.

Deep learning approaches, particularly convolutional neural networks (CNNs), offer a more effective solution by automatically learning hierarchical features. They progressively build complex representations from simpler ones. Weight sharing and pooling layers exploit the inherent structure of images to shrink the network's search space. They also make the model more robust to changes in scale and spatial position, so recognition stays consistent across different conditions. As a result, CNNs can handle many of the challenges posed by real-world video data, leading to more reliable human activity recognition.
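Simple parameter arithmetic illustrates how weight sharing shrinks the search space. The sketch compares a 3 × 3 convolution with a fully connected layer on a hypothetical 64 × 64 RGB frame. The layer sizes are arbitrary examples, and the two layers produce outputs of different shapes. The comparison therefore only shows that sharing one kernel across all positions makes the convolution's parameter count independent of the image size.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, out_channels, bias=True):
    """Parameters of a conv layer: one kernel per (in, out) channel pair, shared across all positions."""
    return kernel_h * kernel_w * in_channels * out_channels + (out_channels if bias else 0)

def dense_params(in_features, out_features, bias=True):
    """Parameters of a fully connected layer: one weight per (input, output) pair."""
    return in_features * out_features + (out_features if bias else 0)

# Hypothetical example: a 64 x 64 RGB frame mapped to 16 feature maps / 16 units.
conv = conv2d_params(3, 3, 3, 16)
dense = dense_params(64 * 64 * 3, 16)
print(conv, dense)  # 448 196624
```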

2.1. CNN Approach for Human Activity Recognition

Zeiler and Fergus [14] showed that the filters learned by convolutional neural network (CNN) models follow a hierarchical structure. Early layers detect simple, low-level features such as edges and textures. Deeper layers respond to more abstract, high-level features such as shapes and object parts. This hierarchy demonstrates not only the flexibility of CNNs but also their effectiveness as general-purpose feature extractors across a wide range of tasks. By progressively refining features at each layer, CNNs build intricate data representations, which improves their performance in areas such as human activity recognition. Because CNNs learn both fundamental and complex patterns directly from data, they reduce the dependence on manually crafted features and are well suited to analyzing large datasets.

2.2. Deep Convolutional Neural Network for Human Activity Recognition (DCNN)

Granada et al. [15] used deep convolutional neural networks (CNNs) to extract video representations directly from raw inputs, namely RGB frames and optical flow fields, and trained their recognition model end-to-end. They fed the CNNs with both input types to generate per-class probability scores, then predicted activity labels with a fusion technique. This approach outperformed deep models that use only RGB or only optical flow inputs and, in some settings, methods that rely solely on handcrafted features. However, it did not consistently surpass handcrafted feature-based methods. The main reason was the limited availability of large-scale RGB activity recognition datasets for supervised training. This scarcity of comprehensive datasets limits the full potential of CNNs in this domain and underscores the need for more extensive and diverse data to achieve better generalization.
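Score-level fusion of the two streams can be sketched as follows. This minimal example assumes that each stream outputs unnormalized class scores and that fusion is a weighted average of softmax probabilities. The exact fusion technique of Granada et al. [15] is not specified here, and `late_fusion` and its `rgb_weight` parameter are hypothetical.

```python
import numpy as np

def softmax(logits):
    z = logits - logits.max(axis=-1, keepdims=True)  # shift for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def late_fusion(rgb_logits, flow_logits, rgb_weight=0.5):
    """Weighted average of per-class probabilities from the RGB and optical-flow streams."""
    probs = rgb_weight * softmax(rgb_logits) + (1 - rgb_weight) * softmax(flow_logits)
    return probs, probs.argmax(axis=-1)

# Hypothetical scores for 3 classes: RGB favors class 0, optical flow strongly favors class 1.
rgb_logits = np.array([2.0, 1.0, 0.0])
flow_logits = np.array([0.0, 3.0, 0.0])
probs, label = late_fusion(rgb_logits, flow_logits)
print(label)  # 1
```

In this example the fused decision differs from the RGB-only decision. The fusion weight could be tuned on validation data.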

2.3. Binary Motion Image Method for HAR

Dobhal et al. [16] proposed a human activity recognition model that represents an action in 2D by combining a sequence of images into a single image, called a Binary Motion Image (BMI). They first generate binary foreground images with a Gaussian Mixture Model (GMM) to highlight regions of motion, then combine these images to form the BMI. A convolutional neural network (CNN) is subsequently trained on the BMIs to classify activities. The authors extended their work to 3D depth maps, applying a similar feature extraction procedure to compute BMIs from depth data. This demonstrates the flexibility of the BMI representation, which efficiently captures spatiotemporal motion patterns and lets the CNN learn from both 2D visual data and 3D depth information. Using depth maps improved recognition accuracy, particularly in scenarios where RGB data alone may fall short because of lighting changes or background noise.
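A minimal sketch of the BMI idea follows. It assumes that the binary foreground masks have already been produced, for example by a GMM-based background subtractor such as OpenCV's `cv2.createBackgroundSubtractorMOG2`. It also assumes the masks are combined with a pixel-wise logical OR; the combination rule used by Dobhal et al. [16] may differ. `binary_motion_image` is a hypothetical name.

```python
import numpy as np

def binary_motion_image(foreground_masks):
    """Collapse a sequence of binary foreground masks (T, H, W) into one 2D image.

    Assumption: a pixel is set to 1 if it is foreground in any frame (logical OR).
    """
    masks = np.asarray(foreground_masks, dtype=bool)
    return np.any(masks, axis=0).astype(np.uint8)

# Toy example: a single foreground pixel moving right across three 4x4 frames.
masks = np.zeros((3, 4, 4), dtype=np.uint8)
for t in range(3):
    masks[t, 1, t] = 1
bmi = binary_motion_image(masks)
print(bmi[1])  # [1 1 1 0]
```

The resulting single image records where motion occurred over the clip. A 2D CNN can then classify it like any ordinary image.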

2.4. 3D Convolutional Neural Network for Human Activity Recognition

Ji et al. [17] introduced an approach to activity recognition based on 3D convolutional networks, which extend 2D convolutions into the temporal domain. Standard 2D CNNs operate only on the spatial dimensions. 3D convolutional networks, by contrast, use filters that span both the spatial and temporal axes. This lets them capture spatiotemporal features and the motion encoded across consecutive video frames, giving a deeper understanding of dynamic content. By incorporating temporal information directly into the learning process, these networks can better model the motion patterns essential for human activity recognition. However, to reach its best performance, the network relied on additional input channels such as optical flow. Ji et al. [17] showed experimentally that 3D convolutional networks significantly outperform 2D CNNs, particularly where understanding motion across frames is crucial. This highlights the potential of 3D convolutions to extract richer feature representations. They are well suited to complex video-based applications in which both spatial detail and temporal dynamics matter for accurate recognition.
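A 3D convolution differs from a 2D one in that the kernel also slides along the time axis. The naive NumPy sketch below illustrates this for a single channel with stride 1 and no padding. `conv3d_valid` is a hypothetical helper, not Ji et al.'s implementation. The example uses a two-frame temporal-difference kernel, which responds only to changes between consecutive frames.

```python
import numpy as np

def conv3d_valid(video, kernel):
    """Naive valid 3D cross-correlation of a (T, H, W) clip with a (kt, kh, kw) kernel.

    The kernel slides along time as well as space, so each output value mixes
    information from kt consecutive frames.
    """
    T, H, W = video.shape
    kt, kh, kw = kernel.shape
    out = np.zeros((T - kt + 1, H - kh + 1, W - kw + 1))
    for t in range(out.shape[0]):
        for i in range(out.shape[1]):
            for j in range(out.shape[2]):
                out[t, i, j] = np.sum(video[t:t + kt, i:i + kh, j:j + kw] * kernel)
    return out

# Toy clip of 7 frames whose brightness rises by 1 per frame.
video = np.stack([np.full((8, 8), float(t)) for t in range(7)])
diff = np.array([-1.0, 1.0]).reshape(2, 1, 1)  # temporal-difference kernel
response = conv3d_valid(video, diff)
print(response.shape)  # (6, 8, 8)
```

Every output value equals 1 because each frame is one unit brighter than the previous one. A static clip would produce all zeros, which a purely spatial 2D kernel cannot distinguish frame by frame.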

2.5. Slow Fusion Method for Human Activity Recognition

Karpathy et al. [18] introduced slow fusion, a technique that makes convolutional networks more aware of time. The network receives several adjacent segments of a video and processes them with a shared set of convolutional layers, progressively merging their responses in higher layers. In this way, it captures temporal information across segments and learns patterns of motion and events over time. The fused output is then processed by fully connected layers to produce a comprehensive video descriptor. Karpathy et al. [18] also proposed a multi-resolution approach in which two separate networks each handle a smaller input. This lets the network attend to the video at different resolutions, which improves recognition accuracy and also reduces the number of parameters the network must learn. Because the network processes smaller inputs in parallel streams, it trains faster and generalizes better across diverse video sequences. The approach recognizes actions with higher precision while keeping the computational cost manageable.
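On the input side, the multi-resolution design can be sketched as two views of the same frame. One is a downsampled "context" view of the whole frame. The other is a full-resolution "fovea" crop of its center. The 178 × 178 frame size and 89 × 89 stream input size below are illustrative assumptions, and downsampling is assumed to be 2 × 2 average pooling. `multires_inputs` is a hypothetical helper.

```python
import numpy as np

def multires_inputs(frame, out_size=89):
    """Split one (H, W, C) frame into a low-resolution context view and a full-resolution center crop.

    Assumption: the frame is square and exactly twice the stream input size.
    """
    h, w = frame.shape[:2]
    if h != 2 * out_size or w != 2 * out_size:
        raise ValueError("this sketch assumes a square frame twice the stream input size")
    # Context stream: the whole frame at half resolution (average of each 2x2 block).
    context = frame.reshape(out_size, 2, out_size, 2, -1).mean(axis=(1, 3))
    # Fovea stream: the central region at the original resolution.
    top = (h - out_size) // 2
    fovea = frame[top:top + out_size, top:top + out_size]
    return context, fovea

frame = np.arange(178 * 178 * 3, dtype=float).reshape(178, 178, 3)
context, fovea = multires_inputs(frame)
print(context.shape, fovea.shape)  # (89, 89, 3) (89, 89, 3)
```

Each stream sees a quarter of the original pixel count. This is the source of the efficiency gain described above.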

2.6. 3DDCNN Approach for Human Activity Recognition

Liu et al. [19] proposed a 3D convolutional deep neural network (3DDCNN) that automatically learns spatiotemporal features from raw depth sequences. The network also integrates a Joint Vector, computed from the positions and angles of skeleton joints, to improve recognition. This allows the model to capture both the spatial and temporal aspects of human motion, which are essential for activity recognition. A key advantage of the method is that the learned feature representation is both time-invariant and viewpoint-invariant. In other words, the model recognizes activities regardless of when they occur in a sequence or the viewpoint from which they are captured. As a result, the network generalizes well across scenarios and is robust to variations in camera angle and temporal shifts. The method achieves results comparable to state-of-the-art techniques. It highlights the potential of combining depth information with skeleton-based features to improve the robustness and performance of activity recognition systems.
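One way to form a per-frame joint vector from positions and angles is sketched below. It assumes 3D joint coordinates and measures each angle at a selected joint, between its two adjacent bones. This formulation is hypothetical: the exact definition of the Joint Vector in Liu et al. [19] is not given in this section. The joint indices in the example are arbitrary, and `joint_angle` and `joint_vector` are illustrative names.

```python
import numpy as np

def joint_angle(parent, joint, child):
    """Angle (radians) at `joint` between the bones joint->parent and joint->child."""
    u = np.asarray(parent, float) - np.asarray(joint, float)
    v = np.asarray(child, float) - np.asarray(joint, float)
    cos = np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v))
    return float(np.arccos(np.clip(cos, -1.0, 1.0)))  # clip guards against rounding error

def joint_vector(positions, triplets):
    """Concatenate flattened 3D joint positions with selected joint angles for one frame.

    positions: (J, 3) array; triplets: list of (parent, joint, child) index tuples.
    """
    positions = np.asarray(positions, float)
    angles = [joint_angle(positions[a], positions[b], positions[c]) for a, b, c in triplets]
    return np.concatenate([positions.ravel(), np.array(angles)])

# Hypothetical arm: shoulder (index 0), elbow (1), wrist (2) with a right angle at the elbow.
arm = np.array([[0.0, 1.0, 0.0], [0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
vec = joint_vector(arm, [(0, 1, 2)])
print(vec.shape, round(vec[-1], 4))  # (10,) 1.5708
```

Angles do not change when the whole skeleton is translated. This is one reason angle features can complement raw positions.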

2.7. 4K-Dimensional Per-Segment Descriptors Based on CNN

Ryoo et al. [20] evaluated temporal pooling strategies (average, max, and pooled time series with temporal pyramids) to summarize CNN features into ~4K-dimensional per-segment descriptors. These representations capture scene dynamics over time, improving robustness to noisy motion and enabling multi-scale temporal reasoning.

Building on the need for efficient spatio-temporal representations, recent sensor-centric HAR work explores lightweight neural designs that reduce parameters and compute while preserving accuracy. LIMUNet [21] exemplifies this direction for smartwatch data. It uses compact architectural choices tailored to resource-constrained devices and reports competitive recognition performance with a low-overhead footprint. Complementary to lightweight CNNs, sequence models remain effective for modeling temporal dependencies in dynamic activities. Hassan et al. [22] present a Deep BiLSTM approach enhanced with transfer-learning–based feature extraction. Their results show that pretrained feature encoders coupled with bidirectional temporal modeling can yield strong results on dynamic HAR benchmarks.
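The temporal pyramid pooling mentioned for Ryoo et al. [20] can be sketched as follows. The sketch assumes per-frame CNN feature vectors and applies simple max or average pooling at each pyramid level. `temporal_pyramid_pool` is a hypothetical helper that omits the other pooled-time-series operators, and the pyramid depth is an example value.

```python
import numpy as np

def temporal_pyramid_pool(frame_features, levels=3, mode="max"):
    """Summarize per-frame features (T, D) into one fixed-length descriptor.

    Level l splits the sequence into 2**l roughly equal segments; each segment is
    max- or average-pooled over time, and all pooled vectors are concatenated,
    giving D * (2**levels - 1) values regardless of T.
    """
    frame_features = np.asarray(frame_features, dtype=float)
    if len(frame_features) < 2 ** (levels - 1):
        raise ValueError("need at least 2**(levels - 1) frames so no segment is empty")
    pool = np.max if mode == "max" else np.mean
    pooled = []
    for level in range(levels):
        for segment in np.array_split(frame_features, 2 ** level, axis=0):
            pooled.append(pool(segment, axis=0))
    return np.concatenate(pooled)

# Toy input: 8 frames with 4-dimensional features each.
features = np.arange(32, dtype=float).reshape(8, 4)
descriptor = temporal_pyramid_pool(features)
print(descriptor.shape)  # (28,)
```

The coarse level summarizes the whole segment, and the finer levels preserve information about when features peak. This gives the multi-scale temporal view described above.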

Broader surveys synthesize these trends across modalities, models, and deployment settings. Gu et al. [23] review deep-learning HAR end to end, from architectures and fusion strategies to datasets and evaluation protocols, and highlight open issues such as cross-dataset generalization and domain shift. For wearable-sensor pipelines specifically, Zhang et al. [24] provide an extensive overview of deep models, preprocessing and windowing choices, and practical considerations (latency, energy, on-device inference). Wang et al. [25] survey deep learning for sensor-based HAR, detailing convolutional and recurrent formulations, feature learning for time-series signals, and challenges in real-world deployment (subject variability, annotation cost, and robustness). Together, refs. [23,24,25] position lightweight CNNs and sequence models [21,22] as complementary tools for efficient, accurate HAR under practical constraints.

4. Results and Discussions

4.1. Dataset UCF 50

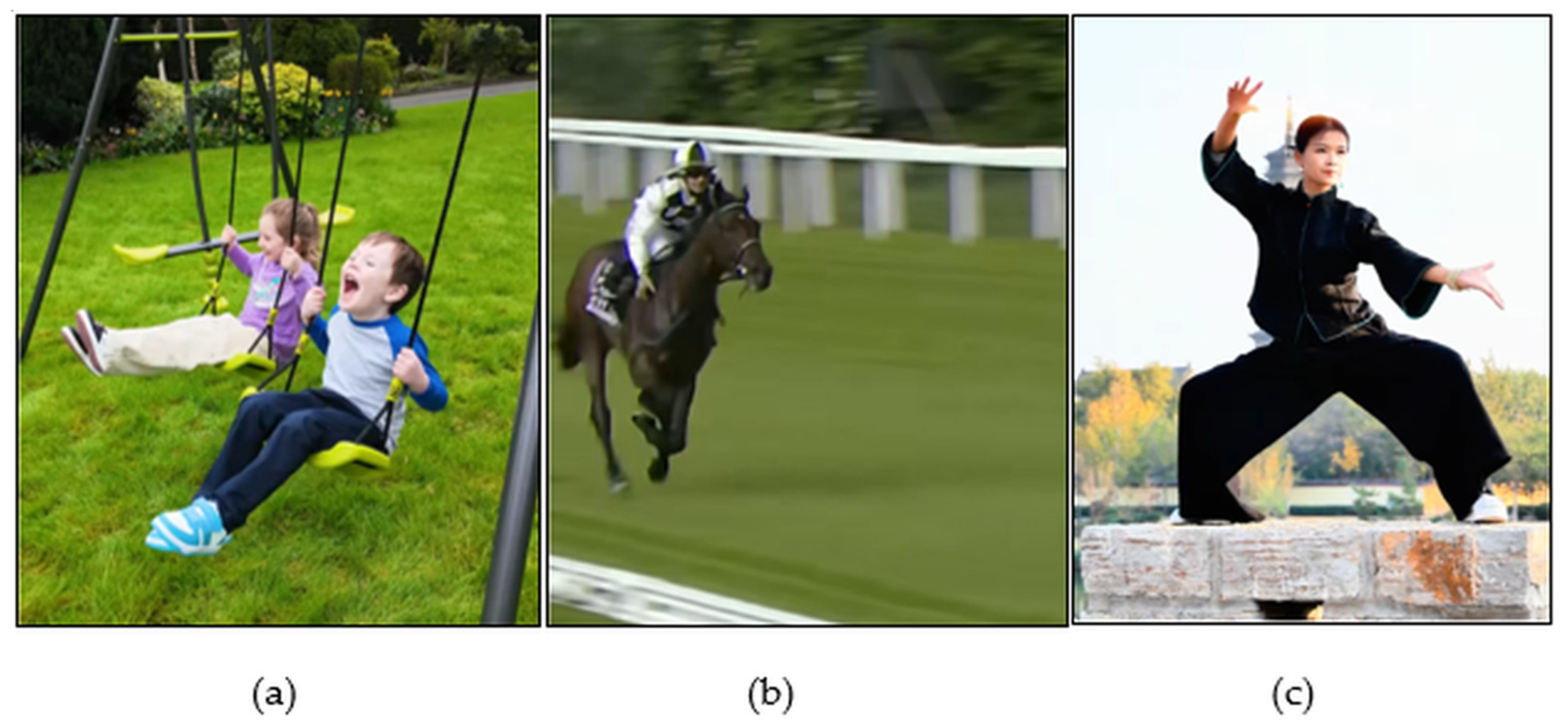

The UCF50 dataset is a comprehensive action recognition dataset with 50 action categories drawn from realistic YouTube videos. It extends the earlier YouTube Action dataset (UCF11), which covered only 11 action categories. Many existing datasets rely on staged and controlled environments, whereas UCF50 emphasizes realism, as can be seen in Figure 5. It provides the computer vision community with challenging, real-world scenarios for testing the robustness of action recognition models. A primary strength of the dataset is its diversity and complexity. The videos vary widely in camera motion, object appearance, pose, scale, and viewpoint, and they also include varying illumination and cluttered backgrounds. These variations make the dataset highly challenging and well suited to evaluating models under real-world conditions. Each of the 50 action categories is divided into 25 groups, and each group contains at least four video clips. Clips within a group often share traits such as the same individual, a similar background, or a comparable viewpoint, which tests a model's ability to generalize across different contexts. The 50 action classes span a wide range of sports and everyday activities, including Basketball Shooting, Bench Press, Billiards Shot, Drumming, Horse Riding, Kayaking, Pull-Ups, Tennis Swing, Volleyball Spiking, and Walking with a Dog. This diversity of actions captured in realistic settings makes UCF50 a valuable resource for advancing research in human activity recognition.

4.2. Dataset Preprocessing

Pre-processing the UCF50 dataset involved several steps to prepare the data for training the ConvLSTM model:

- A subset of action categories was chosen, including classes such as WalkingWithDog, TaiChi, and Swing, among others. For each selected class, the script identified all available video files in the designated directory.
- The frame extraction function performed the core of the pre-processing. It read each video with OpenCV's VideoCapture, determined the total frame count, and extracted a fixed number of frames (the sequence length, set to 20) at uniform intervals. The sampling interval was computed from the video's total frame count so that the selected frames span the clip, allowing the model to learn temporal patterns efficiently.
- Each extracted frame was resized to 256 × 256 pixels for uniformity across the dataset. Pixel values were then normalized to the range [0, 1], which improves training by stabilizing gradient updates.
- The dataset creation function brought these steps together by processing each video file, extracting its frames, and storing them in structured lists. Only videos with at least 20 frames were included, so all sequences had a consistent length.
- Finally, the resulting frames, labels, and video paths were converted into NumPy arrays for efficient handling during training.

This strategy ensured that the data was consistently formatted, allowing the ConvLSTM model to focus on learning the spatial and temporal patterns critical for accurate action recognition.
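The sampling and normalization steps described above can be sketched as follows. The function names, the `root_dir/<class_name>/<video>` directory layout, and the integer-division sampling interval are illustrative assumptions, not the authors' script. The OpenCV calls used (`cv2.VideoCapture`, `CAP_PROP_FRAME_COUNT`, `CAP_PROP_POS_FRAMES`, `cv2.resize`) are standard. OpenCV is imported inside `extract_frames` so that the index helper can be used without it.

```python
import os
import numpy as np

SEQUENCE_LENGTH = 20                   # frames per clip, as stated in the text
IMAGE_HEIGHT, IMAGE_WIDTH = 256, 256   # target frame size, as stated in the text

def sample_frame_indices(total_frames, sequence_length=SEQUENCE_LENGTH):
    """Indices of `sequence_length` frames spaced at a uniform interval across the video."""
    if total_frames < sequence_length:
        return []                      # too short: the video is skipped
    interval = total_frames // sequence_length
    return [k * interval for k in range(sequence_length)]

def extract_frames(video_path, sequence_length=SEQUENCE_LENGTH):
    """Read, resize, and normalize uniformly sampled frames; return None if the clip is unusable."""
    import cv2  # imported here so sample_frame_indices works without OpenCV installed
    cap = cv2.VideoCapture(video_path)
    total = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
    frames = []
    for idx in sample_frame_indices(total, sequence_length):
        cap.set(cv2.CAP_PROP_POS_FRAMES, idx)                    # seek to the sampled frame
        ok, frame = cap.read()
        if not ok:
            break
        frame = cv2.resize(frame, (IMAGE_WIDTH, IMAGE_HEIGHT))   # cv2 takes (width, height)
        frames.append(frame.astype(np.float32) / 255.0)          # scale pixels to [0, 1]
    cap.release()
    return frames if len(frames) == sequence_length else None

def create_dataset(root_dir, class_names):
    """Build (features, labels, paths) from root_dir/<class_name>/<video files>."""
    features, labels, paths = [], [], []
    for label, class_name in enumerate(class_names):
        class_dir = os.path.join(root_dir, class_name)
        for file_name in sorted(os.listdir(class_dir)):
            path = os.path.join(class_dir, file_name)
            frames = extract_frames(path)
            if frames is not None:     # keep only full-length sequences
                features.append(frames)
                labels.append(label)
                paths.append(path)
    return np.asarray(features), np.asarray(labels), paths
```

Seeking with `CAP_PROP_POS_FRAMES` avoids decoding every frame. Frame-accurate seeking can, however, vary by codec, so reading sequentially and keeping every `interval`-th frame is a safer alternative.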

4.3. Model Evaluation Performance Metrics

After training, the model is tested on real-world data, such as YouTube videos, in several steps. First, the test video is downloaded from YouTube: given the video's URL, it is fetched, its title is extracted, and it is saved under a meaningful filename in a designated directory. The downloaded video is then prepared for action recognition. It is read frame by frame, and frames are selected according to the sequence length required by the model. These frames are resized to the model's expected input dimensions and normalized so that pixel values lie between 0 and 1. A fixed number of preprocessed frames is then passed to the trained LRCN model, which outputs a probability distribution over the possible classes for the frame sequence. The class with the highest probability is selected as the predicted action. It is reported together with its confidence score (the probability of the predicted class), which reflects how certain the model is about its prediction. This procedure evaluates the model on unseen video data and demonstrates a real-world application of the trained action recognition model. Testing on varied and complex real-world videos also probes the model's robustness and versatility across different scenarios. This action recognition capability could be applied in a wide range of industries, from surveillance and security to sports and entertainment, highlighting its potential for practical use beyond controlled datasets.
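The final prediction step can be sketched as follows. The sketch assumes that the clip has already been sampled, resized, and normalized as described. `predict_action` is a hypothetical helper, and `predict_fn` stands in for the trained model's forward pass (for example, a Keras `model.predict`). The dummy model in the usage example exists only to show the interface.

```python
import numpy as np

def predict_action(frames, predict_fn, class_names):
    """Return (label, confidence) for one preprocessed clip.

    frames: (T, H, W, C) array already resized and scaled to [0, 1].
    predict_fn: stand-in for the trained model; maps a batch of shape
                (1, T, H, W, C) to class probabilities of shape (1, num_classes).
    """
    batch = np.expand_dims(np.asarray(frames, dtype=np.float32), axis=0)  # add batch axis
    probs = np.asarray(predict_fn(batch))[0]
    best = int(np.argmax(probs))               # class with the highest probability
    return class_names[best], float(probs[best])

# Usage with a dummy model that always returns the same distribution.
dummy_model = lambda batch: np.array([[0.1, 0.7, 0.2]])
clip = np.zeros((20, 8, 8, 3))                 # small stand-in for a 20-frame clip
label, confidence = predict_action(clip, dummy_model, ["Swing", "HorseRace", "TaiChi"])
print(label, confidence)  # HorseRace 0.7
```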

The model was tested on three YouTube videos, each depicting a distinct action: a child swinging at a playground, a horse race, and Tai Chi. In the first test (Figure 6a), the model correctly identified the activity as “Swing,” suggesting sensitivity to repetitive, cyclic motion. In the second test (Figure 6b), it predicted “Horse Race,” indicating that it can recognize high-speed activities involving multiple moving subjects. The final test (Figure 6c) featured Tai Chi, which is characterized by slow, controlled movements. The model correctly classified it as “Tai Chi,” indicating that it can capture subtle, fluid motion patterns. This ability is essential for recognizing actions that involve slower, deliberate gestures rather than abrupt movements. In all three cases, the predicted label was accompanied by a high confidence score, reflecting the model's certainty in each decision.

Table 1 summarizes the overall performance of the proposed Dilated ConvLSTM model against other baseline architectures, reporting accuracy and loss for both the training and validation phases. The Dilated ConvLSTM achieves the highest training accuracy (94.9%) and the lowest training loss (0.20). Its validation accuracy (88.34%) and validation loss (0.32) indicate good generalization. These results underline the effectiveness of the Dilated ConvLSTM model on diverse types of activities, ranging from rapid motions to slow, controlled movements.

Dilated convolutions in the CNN layers expand the model's receptive field. This lets the model capture more extensive spatial patterns without increasing the number of kernel parameters or the computation per output position. It also improves the model's ability to recognize complex spatial and temporal features across a larger context. In addition, the Long Short-Term Memory (LSTM) units learn the dynamic sequential dependencies between frames, making the model well suited to action recognition tasks. This combination of dilated convolutions and LSTMs results in superior performance compared to conventional CNN and CNN-LSTM models.
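A minimal NumPy forward pass can sketch how LSTM units accumulate evidence across frames. It assumes each frame has already been reduced to a feature vector by the dilated CNN, uses randomly initialized weights, and classifies from the final hidden state. `LSTMCell` and `classify_sequence` are hypothetical helpers for illustration, not the trained Dilated ConvLSTM.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

class LSTMCell:
    """Minimal NumPy LSTM cell (forward pass only) with randomly initialized weights."""

    def __init__(self, input_size, hidden_size, seed=0):
        rng = np.random.default_rng(seed)
        # One stacked matrix holding the input, forget, candidate, and output gate weights.
        self.W = rng.normal(0.0, 0.1, size=(4 * hidden_size, input_size + hidden_size))
        self.b = np.zeros(4 * hidden_size)
        self.hidden_size = hidden_size

    def step(self, x, h, c):
        z = self.W @ np.concatenate([x, h]) + self.b
        i, f, g, o = np.split(z, 4)
        i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)  # gates in (0, 1)
        g = np.tanh(g)                                 # candidate memory content
        c = f * c + i * g                              # forget old memory, write new memory
        h = o * np.tanh(c)                             # exposed hidden state
        return h, c

def classify_sequence(frame_features, cell, W_out, b_out):
    """Run the LSTM over per-frame features (T, D) and classify from the last hidden state."""
    h = np.zeros(cell.hidden_size)
    c = np.zeros(cell.hidden_size)
    for x in frame_features:
        h, c = cell.step(x, h, c)
    logits = W_out @ h + b_out
    e = np.exp(logits - logits.max())                  # softmax over classes
    return e / e.sum()

# Hypothetical sizes: 20 frames, 32-dimensional CNN features per frame, 5 classes.
rng = np.random.default_rng(1)
features = rng.normal(size=(20, 32))
cell = LSTMCell(input_size=32, hidden_size=16)
W_out, b_out = rng.normal(0.0, 0.1, size=(5, 16)), np.zeros(5)
probs = classify_sequence(features, cell, W_out, b_out)
print(probs.shape)  # (5,)
```

The forget gate controls how much earlier frames continue to influence the final decision. In practice, a framework layer would replace this hand-written cell and learn its weights during training.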

4.4. Plotting Accuracy vs. Validation Accuracy

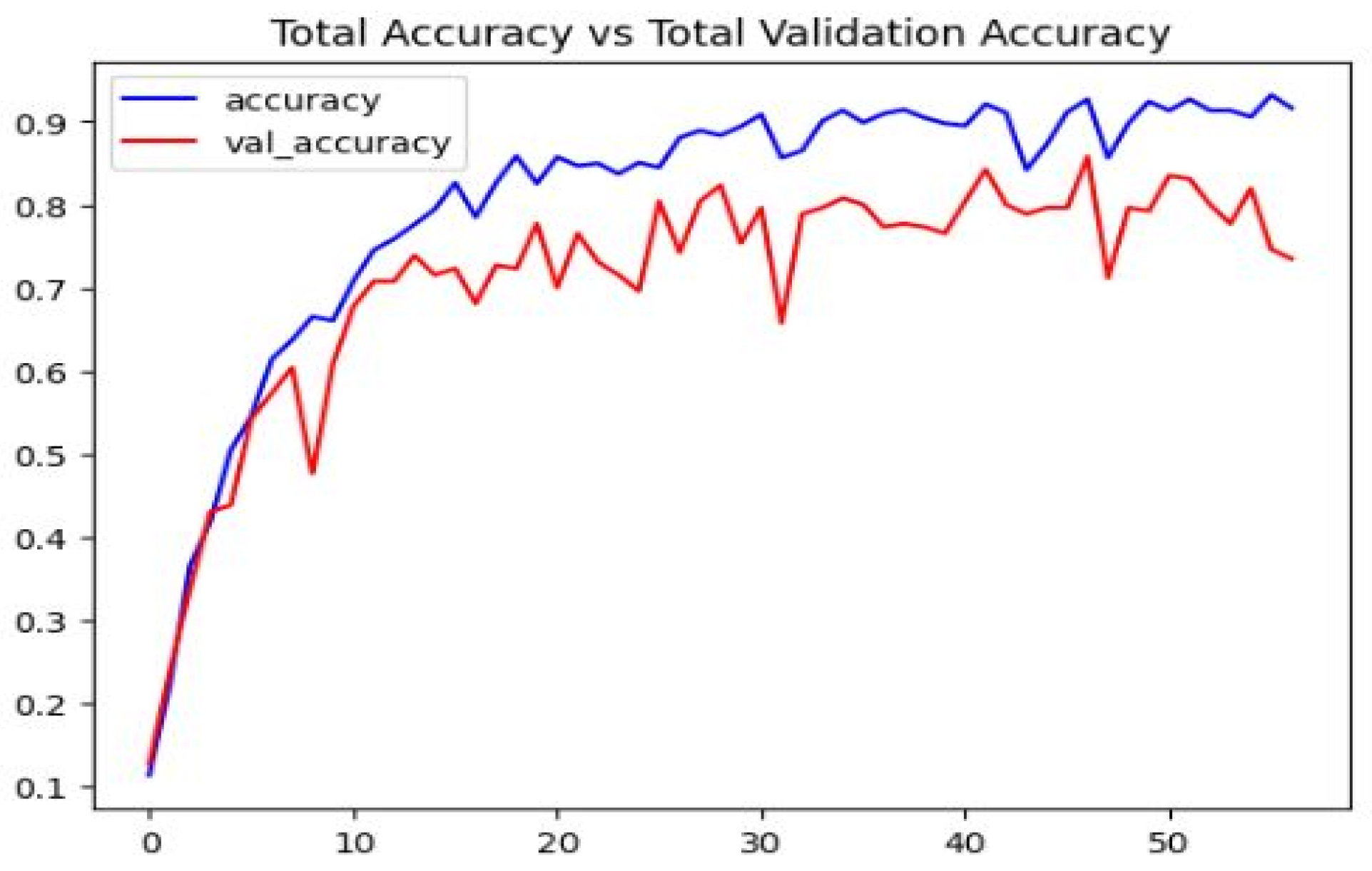

Figure 7 plots training accuracy (blue curve) and validation accuracy (red curve) over epochs for the Dilated ConvLSTM model. The model reaches a training accuracy of 94.9% and a validation accuracy of 88.34%, both higher than those of the other models. This indicates strong learning on the training data together with good generalization to unseen data, which is crucial for real-world applications. The two curves track each other closely for most of training, which supports the model's robustness across diverse inputs. However, the slight divergence in later epochs may indicate mild overfitting. Future work could mitigate this through stronger regularization or larger datasets.

4.5. Plotting Loss vs. Validation Loss

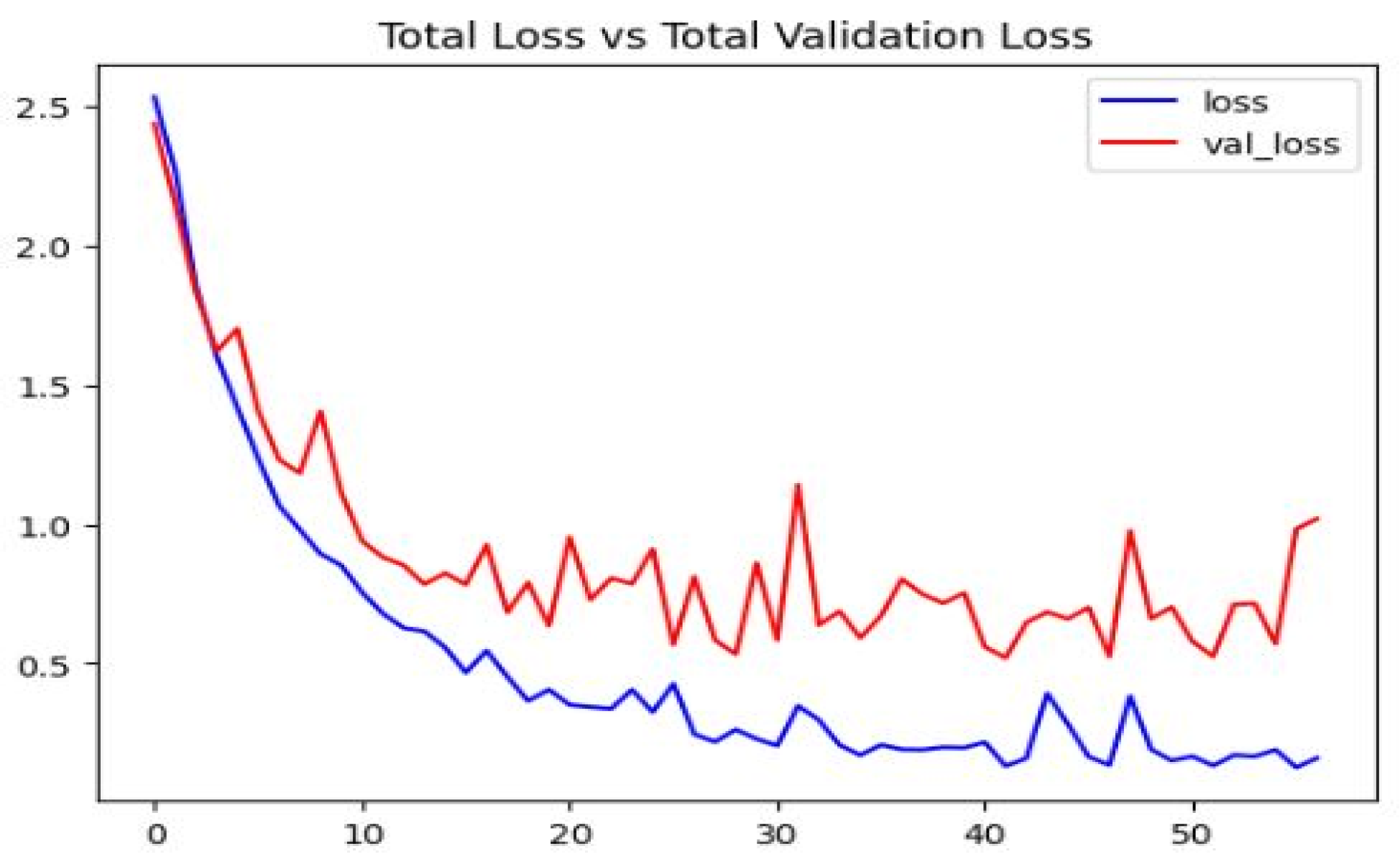

Figure 8 plots training loss (blue curve) and validation loss (red curve) over epochs and highlights the efficient training of the Dilated ConvLSTM model. The model reaches a training loss of 0.20 and a validation loss of 0.32, both lower than those of the other models. The model therefore minimizes error during training while keeping the gap between training and validation loss small. This suggests limited overfitting and strong generalization, both essential for real-world action recognition tasks. Future work could also include ablation studies on regularization strength and alternative optimization strategies to better validate robustness.

5. Conclusions

This project effectively demonstrates the application of a Dilated CNN-LSTM (Long Short-Term Memory) model for recognizing actions in video sequences. By integrating dilated convolutional layers with LSTM units, the model captures both spatial and temporal dynamics, enabling it to identify complex actions over time. The model was trained on a varied set of video data, achieving strong performance in action classification. During the testing phase, the model was successfully applied to real-world YouTube videos, accurately predicting actions. This highlights the model’s robustness and its ability to generalize across different video scenarios. Additionally, the process of downloading, preprocessing, and evaluating the model on previously unseen data, along with its assessment based on accuracy and loss metrics, further illustrates the model’s practical applicability in real-world settings. Future research could focus on enhancing the model’s performance by refining the architecture, incorporating larger, more diverse datasets, or adapting it for real-time use. Overall, the findings suggest that the Dilated CNN-LSTM model holds considerable promise for a variety of applications, such as surveillance, entertainment, and human–computer interaction.