1. Introduction

With the rapid advancement of artificial intelligence technologies and the widespread adoption of Internet of Things (IoT) applications, automatic speech recognition (ASR) is now extensively deployed on a wide range of smart and embedded devices, including smartphones, wearables, smart home systems, and industrial IoT nodes [1,2,3]. Consequently, the efficient deployment of high-performance ASR models on resource-constrained edge devices has become imperative. This capability has significant potential to enable more intelligent applications by providing greater ubiquity, enhancing real-time capabilities, and improving privacy protection [4,5,6].

However, the practical deployment of ASR models on edge devices faces two primary challenges. First, there is a fundamental tension between model complexity and resource constraints. Mainstream high-performance ASR models are typically large and computationally intensive, requiring resources that far exceed the limited processing capacity, storage space, and power of edge devices. Second, environmental noise is a major obstacle. The operational environments of edge devices are complex and unpredictable; factors such as background noise, reverberation, and far-field conditions can severely degrade speech signal quality, leading to significantly reduced recognition accuracy and model robustness. Consequently, developing ASR models that are both lightweight and noise-robust is a critical research challenge at the intersection of speech processing and embedded artificial intelligence.

Thus, we introduce and validate an efficient, robust end-to-end ASR framework that advances current speech recognition paradigms. By synergistically integrating specialized components, our framework enables accurate multi-scale speech processing in noisy environments while maintaining computational efficiency on resource-constrained edge devices.

This paper proposes OS-Denseformer, a lightweight end-to-end automatic speech recognition model, to address the challenge of robust speech recognition on edge devices operating in complex acoustic environments. The model employs a cascaded architecture comprising three key components: dilated convolutional downsampling, anti-noise feature-encoding blocks, and upsampling. This design enables multi-scale acoustic representation fusion to mitigate interference from redundant information, enhances the discriminability of acoustic features in noisy speech, and significantly reduces computational complexity. Additionally, we designed an enhanced local feature extraction module, termed OS-Conv. This module integrates a residual OS block, a densely connected depthwise separable convolution, and an ExpNorm function to improve the network’s capability to filter out non-semantic fragments. The residual OS block enables efficient receptive field modeling, while the densely connected depthwise separable convolution structure promotes feature reusability and reduces the number of parameters. Furthermore, the ExpNorm function helps reduce computational overhead during training and improve convergence stability. We employ a phased training strategy with a loss function that leverages minimum Bayes risk (MBR) training to directly align the optimization objective with the final evaluation metric, the character error rate (CER). To enhance generalization in specific noisy environments, we adopt a transfer learning strategy that involves pre-training on clean speech before fine-tuning on noisy speech.

Experimental results on public datasets—including AISHELL-1, QUT-NOISE, and Noisex92—demonstrate that OS-Denseformer achieves superior performance compared to mainstream ASR models in both noise robustness and lightweight characteristics. Furthermore, deployment tests demonstrate that OS-Denseformer maintains robust performance with a compact footprint across diverse mobile hardware platforms.

2. Related Work

2.1. End-to-End ASR

End-to-end ASR models have been widely studied in recent years and have gradually become the mainstream. Zhang et al. [7] first proposed integrating a deep convolutional neural network structure into an end-to-end ASR framework. Subsequently, Miyazaki et al. [8] unified the decoding processes of speech recognition and synthesis through a unified state-space modeling approach. Inspired by the success of the Transformer architecture in natural language processing, researchers have successfully integrated the attention mechanism into end-to-end speech recognition models [9,10,11]. Both Convolutional Neural Networks (CNNs) and the Transformer architecture have become core components of end-to-end ASR systems. Nevertheless, CNNs are limited in capturing global features, whereas the self-attention mechanism in Transformers is less proficient at capturing fine-grained local patterns. The Conformer [12] bridges this gap by combining convolutional layers with self-attention modules. This hybrid model excels in modeling both global and local features, leading to significant performance improvements.

Recent efforts to reduce computational cost and enhance performance have focused on modifying the Conformer architecture. Peng et al. [13] introduced the Branchformer, which employs a dual-path architecture with parallel branches dedicated to extracting global dependencies and local patterns, respectively. E-Branchformer [14] builds upon this foundation by integrating dynamic gating and learnable convolutional modules to improve adaptability during local modeling of different speech segments. Kim et al. [15] proposed Squeezeformer, which simplifies the Conformer’s macaron structure into a more Transformer-like block and restructures the overall framework into a U-Net architecture to reduce computational overhead. Similarly, Yao et al. [16] introduced Zipformer, which also uses a U-Net-like structure and processes information at multiple sampling rates across its layers. Gao et al. [17] developed Speech-Mamba, a model that integrates a selective state space model (Mamba) with the Transformer to more efficiently capture long-range dependencies in speech, leading to faster inference. In a different approach, Gao et al. [18] proposed Paraformer, a non-autoregressive ASR system that uses parallel decoding to accelerate inference speed.

Our work is substantially inspired by Zipformer’s multi-sampling architecture, which effectively reduces computational costs while extracting fused speech–noise features from noisy signals. However, Zipformer’s rate transformation modules are relatively simplistic: downsampling uses averaging of adjacent frames, while upsampling simply duplicates frames. This downsampling approach suffers from severe aliasing artifacts, provides limited noise robustness, and restricts the receptive field to immediate neighbors, thereby failing to capture longer-range contextual dependencies. The upsampling method lacks anti-aliasing filters, introducing significant mirror frequency components in the spectral domain—another critical limitation we address.

Notably, contemporary speech recognition systems continue to rely predominantly on the CTC + AED framework as their core optimization objective [12,13,14,15,16]. However, in high-noise environments, acoustic feature distortion disperses the probability mass of correct transcriptions across multiple noisy hypotheses, causing these models to frequently converge to suboptimal solutions that capture noise artifacts rather than essential speech characteristics. Overcoming this fundamental limitation represents a central focus of our work.

Breakthroughs in large language models (LLMs) have spurred exploration into their integration with ASR systems. For instance, Xu et al. [19] proposed FireRedASR, which cascades an ASR system with an LLM to correct recognition errors, including homophones, technical terms, and colloquial ellipses. In another approach, Yu et al. [20] compressed frame-level speech encoder features into semantic unit sequences compatible with LLMs and aligned speech with text via dynamic duration prediction. Despite their powerful contextual modeling and semantic error correction capabilities, the massive size of LLMs is incompatible with the limited memory resources of edge devices. Moreover, a cloud-edge collaborative deployment strategy for LLMs introduces network dependency, along with inherent latency and privacy concerns. Consequently, employing LLMs to assist edge ASR systems faces significant technical bottlenecks, rendering this approach unsuitable for the scenarios targeted in this study.

2.2. Noise-Robust Speech Recognition

In practical scenarios, ASR systems are often degraded by ambient noise. The complex interplay of noise and speech distorts the original speech frames, introducing significant redundant information that interferes with the acoustic model during feature extraction [21].

To mitigate this, researchers have developed noise-robust speech recognition techniques, which fall into two principal categories: signal-domain processing and model-domain processing. Signal-domain methods treat speech enhancement as an independent front-end module, aiming to isolate clean information from noisy signals. Common techniques include spectral subtraction [22], Wiener filtering [23], and deep learning-based speech enhancement [24,25,26]. However, these approaches exhibit limited generalization across diverse and non-stationary noise types and often introduce detrimental front-end distortions. They also require real-time buffering of multiple audio frames to compute spectral masks or reconstruct waveforms, imposing significant memory and computational burdens that render them unsuitable for edge devices. In contrast, model-domain methods directly improve the ASR model’s inherent robustness by adapting its architecture or training procedure, enabling it to learn noise-resistant representations implicitly. For example, Pizarro et al. [27] used slow feature analysis to enhance noise robustness, and Burchi et al. [28] employed a multimodal strategy that incorporated visual information from lip movements. Because these techniques achieve robustness through end-to-end inference within a single model, their computational characteristics are better suited for deployment on resource-constrained edge devices.

Given the above, we focus on model-domain strategies, using the Conformer as our reference model, and identify three limitations. First, its local feature extraction relies heavily on convolutional modules that use depthwise separable convolutions, a design that is suboptimal for local feature extraction in high-noise conditions. Second, the Conformer uses a uniform stack of blocks operating at a single sampling rate, which fails to capture multi-scale acoustic features in noise and can lead to the accumulation of redundant information. Third, under noisy conditions, the hybrid CTC/AED approach becomes highly susceptible to distortion of local acoustic features, leading to inefficient optimization and training instability. To overcome these drawbacks, OS-Denseformer introduces three key innovations: (1) the OS-Conv module, an enhanced replacement for the depthwise separable convolution; (2) a multi-sampling architecture for enriched feature diversity and reduced computational overhead; and (3) a phased MBR loss function for direct optimization of CER.

3. Proposed OS-Denseformer

3.1. OS-Denseformer Encoder

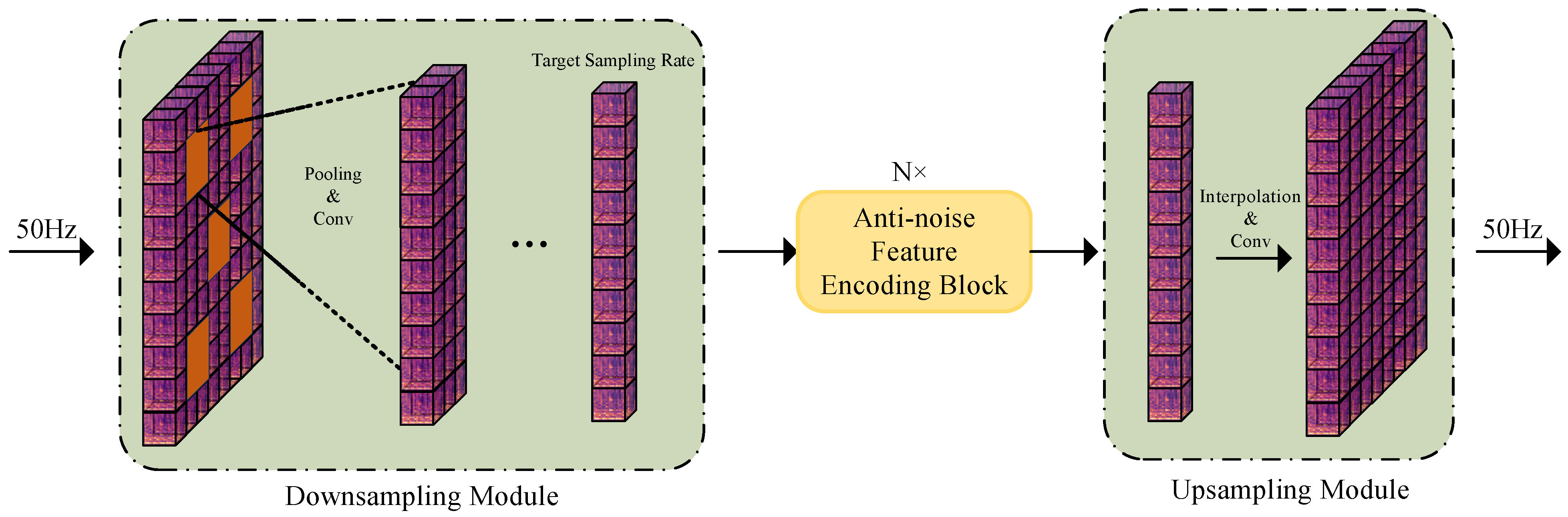

Figure 1 illustrates the overall architecture of the OS-Denseformer encoder. It uses a cascaded feature extraction module designed to effectively extract multi-scale acoustic features from noisy speech and enhance the resulting representations. The pipeline comprises three main components: dilated convolutional downsampling, an anti-noise feature-encoding block, and upsampling. Each anti-noise feature-encoding block operates on the downsampled, low-sampling-rate signals, significantly reducing the computational load during processing. In the figure, each dashed box represents a distinct feature extraction module.

The encoder employs a cascade of six feature extraction modules to capture multi-scale speech features. The first module contains two stacked anti-noise feature-encoding blocks. The remaining five modules each follow a downsampling–encoding–upsampling pattern. They contain varying numbers of anti-noise feature-encoding blocks: 2, 3, 4, 3, and 2, respectively. Their embedding dimensions are 192, 256, 384, 512, 384, and 256, respectively, with the central module having the largest dimension. This architecture places more blocks in the higher-dimensional intermediate modules, forming a symmetric distribution. This design avoids the representation saturation problem inherent in single-sampling-rate architectures like the Conformer. Each feature extraction module uses a connector to fuse its output features back to its input features at a 50 Hz sampling rate. Finally, the sampling rate is reduced from 50 Hz down to 25 Hz by a convolutional downsampling layer, yielding the encoder’s final output.
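The module layout described above can be written down as a small configuration table. The following is a minimal sketch: the block counts and embedding dimensions are taken from the text, while the dictionary layout, the `resample` flag, and the name `ENCODER_MODULES` are illustrative assumptions rather than the authors' code.

```python
# Block counts and embedding dimensions as stated in the text.
# The data layout and field names are hypothetical, for illustration only.
ENCODER_MODULES = [
    {"blocks": 2, "dim": 192, "resample": False},  # first module: two stacked blocks
    {"blocks": 2, "dim": 256, "resample": True},   # downsample -> encode -> upsample
    {"blocks": 3, "dim": 384, "resample": True},
    {"blocks": 4, "dim": 512, "resample": True},   # central module, largest dimension
    {"blocks": 3, "dim": 384, "resample": True},
    {"blocks": 2, "dim": 256, "resample": True},
]

# Total number of anti-noise feature-encoding blocks in the encoder.
total_blocks = sum(m["blocks"] for m in ENCODER_MODULES)

# The five resampling modules carry a symmetric block distribution.
resampled_counts = [m["blocks"] for m in ENCODER_MODULES if m["resample"]]
is_symmetric = resampled_counts == resampled_counts[::-1]

print(total_blocks)      # 16
print(resampled_counts)  # [2, 3, 4, 3, 2]
print(is_symmetric)      # True
```

Summing the per-module counts gives 16 encoding blocks in total, with the deepest stack placed in the 512-dimensional central module.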

3.2. Sampling Module and Connector

To improve the modeling of fused speech-noise features, we adapt an image-processing technique for sampling-rate transformation within the anti-noise feature-encoding block. In this analogy, the speech frame information is conceptualized as a grayscale image, where the single channel of the speech frame corresponds to that of a grayscale image, the FBank feature dimension (typically 80) defines the image height, and the variable number of speech frames (determined by sampling rate and utterance length) defines the image width.

The workflow of the sampling module is illustrated in Figure 2. We propose a downsampling module that integrates average pooling with dilated convolution. First, average pooling serves as an effective anti-aliasing filter by attenuating signal components above the target Nyquist frequency, thereby substantially reducing spectral aliasing in subsequent processing stages. The subsequent dilated convolution then performs feature extraction from the low-resolution representation while systematically expanding the receptive field. Crucially, unlike fixed averaging operations, the dilated convolution employs learnable kernel parameters that enable the model to adaptively discover optimal feature integration patterns directly from training data. The downsampled vectors then undergo high-level semantic feature modeling in the anti-noise feature-encoding block at this lower sampling rate.
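To make the two-step downsampling concrete, the sketch below applies average pooling followed by a dilated convolution to a single feature channel. It is a pure-Python illustration under stated assumptions: the pooling factor of 2, the dilation of 2, the three-tap kernel, and all function names are hypothetical, and in the actual model the kernel weights would be learned.

```python
def average_pool(frames, factor=2):
    """Average each group of `factor` consecutive frames (stride = factor)."""
    usable = len(frames) - len(frames) % factor
    return [sum(frames[i:i + factor]) / factor for i in range(0, usable, factor)]


def dilated_conv1d(frames, kernel, dilation=2):
    """Same-length 1-D dilated convolution with zero padding (odd kernel length)."""
    half = (len(kernel) // 2) * dilation
    padded = [0.0] * half + list(frames) + [0.0] * half
    out = []
    for t in range(len(frames)):
        acc = 0.0
        for k, w in enumerate(kernel):
            acc += w * padded[t + k * dilation]
        out.append(acc)
    return out


def downsample(frames, kernel, factor=2, dilation=2):
    """Anti-aliasing average pooling followed by a dilated convolution."""
    return dilated_conv1d(average_pool(frames, factor), kernel, dilation)


# A component at the original Nyquist frequency cannot be represented
# at half the rate. Plain decimation aliases it to a constant, whereas
# average pooling removes it before the rate is reduced.
tone = [1, -1] * 4
print(tone[::2])           # [1, 1, 1, 1]
print(average_pool(tone))  # [0.0, 0.0, 0.0, 0.0]
```

The dilated kernel with three taps and dilation 2 sees frames t-2, t, and t+2 of the pooled sequence, which corresponds to a span of roughly ten frames at the original rate.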

Finally, the sampling rate is restored to 50 Hz through an upsampling module to reestablish temporal alignment. We employ bilinear interpolation combined with CNN post-processing as the upsampling module. Bilinear interpolation inherently functions as a weak anti-imaging filter, attenuating a portion of the imaging frequencies. Subsequently, a CNN-based residual learning strategy is applied to eliminate artifacts such as residual imaging frequencies from the interpolation and recover high-frequency details.

As shown in Figure 3, the X and Y axes represent positions in the FBank, while the P axis denotes the spectral energy value. The spectral energy value at the interpolation point is calculated based on known values at four coordinates: $(x_1, y_1)$, $(x_2, y_1)$, $(x_1, y_2)$, and $(x_2, y_2)$. First, interpolation is performed along the x-direction between $(x_1, y_1)$ and $(x_2, y_1)$ and between $(x_1, y_2)$ and $(x_2, y_2)$:

$$p_1 = \frac{x_2 - x}{x_2 - x_1}\,P(x_1, y_1) + \frac{x - x_1}{x_2 - x_1}\,P(x_2, y_1)$$

$$p_2 = \frac{x_2 - x}{x_2 - x_1}\,P(x_1, y_2) + \frac{x - x_1}{x_2 - x_1}\,P(x_2, y_2)$$

where $P(\cdot,\cdot)$ is the known spectral energy at a grid point, and $p_1$ and $p_2$ denote the interpolated spectral energy values at points $(x, y_1)$ and $(x, y_2)$, respectively, as obtained from the initial x-direction interpolation. Subsequently, the final interpolated value p is calculated by performing interpolation along the y-direction between $(x, y_1)$ and $(x, y_2)$, as given by the following expression:

$$p = \frac{y_2 - y}{y_2 - y_1}\,p_1 + \frac{y - y_1}{y_2 - y_1}\,p_2$$

where p represents the final spectral energy value at the target interpolation point $(x, y)$. This bilinear interpolation process within the FBank domain upsamples the feature representation from a lower sampling rate back to 50 Hz, thereby preserving acoustic information that might be lost after aggressive downsampling. Finally, a CNN is used for post-processing to mitigate interpolation artifacts and restore high-frequency details in the upsampled features.
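The two-pass interpolation described above (first along x, then along y) can be sketched as a short function. The function and argument names below are illustrative; `p11`, `p21`, `p12`, and `p22` stand for the known spectral energy values at the four surrounding grid points.

```python
def bilinear(x, y, x1, x2, y1, y2, p11, p21, p12, p22):
    """Bilinear interpolation at (x, y) inside the cell [x1, x2] x [y1, y2].

    p11, p21, p12, p22 are the known values at (x1, y1), (x2, y1),
    (x1, y2), and (x2, y2), respectively.
    """
    tx = (x - x1) / (x2 - x1)  # fractional position along x
    ty = (y - y1) / (y2 - y1)  # fractional position along y
    # Pass 1: interpolate along x on the two rows y = y1 and y = y2.
    p_row1 = (1 - tx) * p11 + tx * p21
    p_row2 = (1 - tx) * p12 + tx * p22
    # Pass 2: interpolate along y between the two intermediate values.
    return (1 - ty) * p_row1 + ty * p_row2


# Centre of a unit cell: the result is the mean of the four corner values.
print(bilinear(0.5, 0.5, 0, 1, 0, 1, 1.0, 3.0, 5.0, 7.0))  # 4.0
```

At any corner of the cell the function returns the known corner value, so upsampling by interpolation leaves the original frames unchanged and only fills in the new positions between them.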

As shown in Figure 1, the proposed OS-Denseformer incorporates a learnable connector to fuse features. This operation is formulated as follows:

where c is a learnable parameter, x is the feature embedding after processing, y is the original feature embedding, and ⊙ denotes element-wise multiplication. This connective structure adaptively adjusts the parameter c during training to control the relative contribution of the transformed features x and the original features y, acting as a learned gating mechanism.
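As a rough illustration of such a gate, the sketch below assumes a convex combination, c ⊙ x + (1 − c) ⊙ y, with one weight per channel in [0, 1]. This specific form is an assumption made for illustration and may differ from the connector actually used in the model; the function name `connect` is likewise hypothetical.

```python
def connect(x, y, c):
    """Element-wise gated fusion of processed features x and original features y.

    Assumed form (not confirmed by the text): c * x + (1 - c) * y,
    with one gate value per channel, each expected to lie in [0, 1].
    """
    return [ci * xi + (1.0 - ci) * yi for xi, yi, ci in zip(x, y, c)]


processed = [2.0, 4.0]
original = [0.0, 0.0]
print(connect(processed, original, [0.5, 0.25]))  # [1.0, 1.0]
print(connect(processed, original, [1.0, 1.0]))   # [2.0, 4.0]
print(connect(processed, original, [0.0, 0.0]))   # [0.0, 0.0]
```

Under this assumed form, c = 1 passes only the transformed features and c = 0 bypasses the module entirely, which is what allows training to down-weight a module that is not yet useful.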

3.3. Anti-Noise Feature-Encoding Block

To capture multi-scale representations, the anti-noise feature-encoding block processes feature vectors from different sampling rates. As shown in Figure 4, each encoding block comprises a 4-head self-attention module, two two-layer feed-forward networks (FFNs), an OS-Conv module, and the ExpNorm normalization function. The self-attention mechanism projects the 512-dimensional input into four parallel heads of 128 dimensions each. The FFN uses an inner dimension of 2048 with sigmoid activation. These identical blocks (N = 16) are stacked to form the complete encoder.

The feed-forward modules apply independent nonlinear transformations to each position in the feature sequence, enhancing the model’s capacity to learn complex patterns. The multi-head self-attention module employs a self-attention mechanism with relative positional encoding to capture global dependencies within the input. The OS-Conv module handles local feature extraction through its Omni-scale block (OS block) [29] and convolutional components. Finally, the ExpNorm function normalizes the outputs to stabilize training. Given an input feature vector $x_i$ to the i-th encoding block, the output $y_i$ is computed as follows:

where $\mathrm{FFN}(\cdot)$ is the feed-forward module, $\mathrm{MHSA}(\cdot)$ is the multi-head self-attention module, $\mathrm{OSConv}(\cdot)$ is the OS-Conv module, and $\mathrm{ExpNorm}(\cdot)$ is the ExpNorm normalization function.

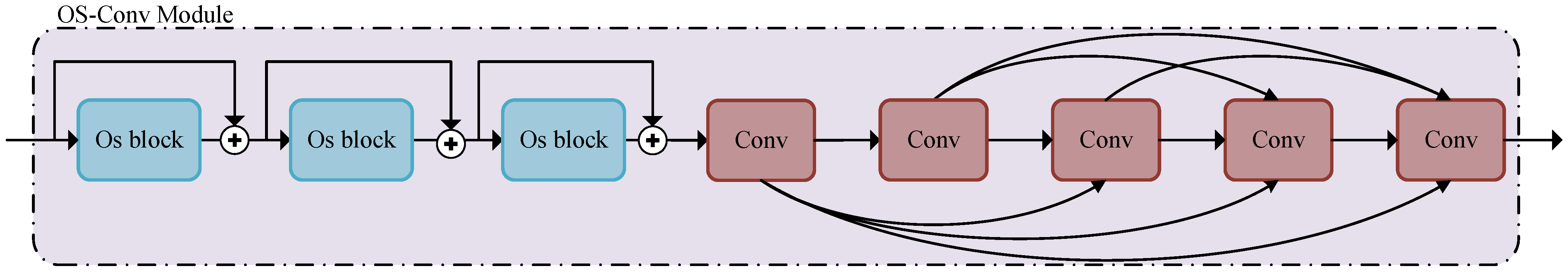

In noisy environments, the superposition of speech and noise often causes acoustic models to misclassify non-speech segments as speech. This introduces non-semantic information as valid features, degrading recognition accuracy. To address this, our OS-Denseformer incorporates an OS-Conv module to enhance local feature extraction, improving upon Conformer architectures that rely on a single convolutional module. As shown in Figure 5, this enhanced OS-Conv module consists of three OS blocks with residual connections and five densely connected depthwise separable convolution modules, collectively enhancing the network’s capacity to model complex acoustic features. When processing features from diverse noise types, the network adaptively approximates the optimal receptive field. The five depthwise separable convolution modules (kernel size = 15) enable deep abstract feature extraction. This design mitigates gradient vanishing and explosion problems caused by increasing network depth and improves feature reuse via maximized feature interactions [30].

For the normalization of the anti-noise feature-encoding block’s output, we introduce the ExpNorm function, motivated by RMSNorm, as a replacement for the standard Conformer’s LayerNorm. It reduces computational overhead and improves training stability. The mathematical definition of ExpNorm is as follows:

where x is the input, $\gamma$ is a scaling parameter, $\mathrm{RMS}(\cdot)$ is the root mean square operator, and $\epsilon$ serves as a numerical stabilizer to prevent division by zero. The key differences from LayerNorm are that ExpNorm uses the root mean square instead of the mean and variance, and it employs a single scalar $\gamma$ rather than a per-channel vector, significantly reducing computational overhead. Furthermore, we observed that during early training stages, the optimizer tends to drive $\gamma$ toward zero to mitigate the negative impact of untrained modules. This causes $\gamma$ to oscillate near zero, resulting in frequent gradient sign changes. Such inconsistent gradient directions can trap the module in poor local optima that are difficult to escape, thereby impeding training convergence. In ExpNorm, we employ an exponential parameterization for $\gamma$, which ensures the scaling parameter remains consistently positive, thus effectively circumventing the aforementioned issues.
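The behaviour described above can be illustrated with a small sketch. It assumes the form x / (RMS(x) + ε) · exp(γ), which matches the verbal description (RMS normalization, a single scalar, an exponential parameterization) but is an assumption about the exact placement of ε and γ; the function names are hypothetical.

```python
import math


def rms(x):
    """Root mean square of a feature vector."""
    return math.sqrt(sum(v * v for v in x) / len(x))


def exp_norm(x, gamma=0.0, eps=1e-8):
    """RMS-normalise x and rescale by a single positive scalar exp(gamma).

    Assumed form: x / (RMS(x) + eps) * exp(gamma). Because exp(gamma) > 0
    for every real gamma, the effective scale can shrink toward zero but
    can never change sign.
    """
    scale = math.exp(gamma)
    denom = rms(x) + eps
    return [v / denom * scale for v in x]


features = [3.0, 4.0]
normalised = exp_norm(features)           # gamma = 0 gives unit RMS output
damped = exp_norm(features, gamma=-5.0)   # small but still positive scale
print(round(rms(normalised), 6))          # 1.0
print(damped[0] > 0 and damped[1] > 0)    # True
print(exp_norm([0.0, 0.0]))               # [0.0, 0.0]
```

Compared with a directly learned scale, a strongly negative γ only shrinks the output, so the sign of the features is preserved, which is consistent with the stability argument given in the text.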

3.4. Loss Function

To overcome the limitations of conventional loss functions in noisy acoustic environments, we introduce a loss function that directly aligns model optimization with the CER, the primary evaluation metric. This alignment is achieved through a joint optimization framework based on MBR [31].

The training process is divided into two stages. First, a conventional hybrid loss $\mathcal{L}_{\text{hybrid}}$ is used for initial training, allowing the model to learn initial acoustic–linguistic mappings. This stage provides a stable starting point for subsequent MBR training, mitigating potential instability from direct MBR training from a random initialization. The Stage 1 loss function is defined as follows:

$$\mathcal{L}_{\text{hybrid}} = \lambda\,\mathcal{L}_{\text{CTC}} + (1 - \lambda)\,\mathcal{L}_{\text{AED}}$$

where $\mathcal{L}_{\text{hybrid}}$ denotes the conventional hybrid loss, comprising the connectionist temporal classification (CTC) loss $\mathcal{L}_{\text{CTC}}$, the attention-based encoder–decoder (AED) loss $\mathcal{L}_{\text{AED}}$, and a balancing hyperparameter $\lambda$.

Given that CTC’s alignment is vital for early training stability and the Attention mechanism’s semantic modeling dominates later, we employ a dynamic scheduling strategy for $\lambda$, defined as follows:

where $\lambda_{\min}$ and $\lambda_{\text{init}}$ denote the minimum and initial CTC weight coefficients, e is the current training epoch, and $E$ is the total number of epochs.

Stage 2 replaces the AED loss with MBR optimization. For each training sample $(x, y^{*})$, the warm-started decoder generates N candidate sequences $\{\hat{y}_1, \ldots, \hat{y}_N\}$ through stochastic sampling of the output distribution. The risk $R(\hat{y}_n, y^{*})$ for each candidate $\hat{y}_n$ is defined as its CER with respect to the reference $y^{*}$. The expected risk is approximated by a finite-sample weighted average over the N candidates:

where $P(\hat{y}_n \mid x)$ is the probability of candidate sequence $\hat{y}_n$ computed from input x by the AED decoder.

Because the CER-based risk $R$ is a non-differentiable function, we employ the REINFORCE algorithm to estimate the gradient of the MBR loss $\mathcal{L}_{\text{MBR}}$. The gradient estimation procedure is detailed below:

where b is the baseline used to reduce the variance of the gradient estimate. It is calculated as the average risk over all candidate sequences for all utterances in the current mini-batch, a common practice termed standard batch-average risk.

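The final Stage 2 loss combines the CTC and MBR losses:

$$\mathcal{L}_{\text{stage2}} = \lambda\,\mathcal{L}_{\text{CTC}} + (1 - \lambda)\,\mathcal{L}_{\text{MBR}}$$

where the CTC weight coefficient $\lambda$ uses the same value as defined in Stage 1.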
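The Stage 2 quantities described above (per-candidate CER risk, a probability-weighted expected risk, and a baseline-subtracted risk for REINFORCE) can be sketched in plain Python. Several details are assumptions made for illustration: candidate probabilities are renormalized over the N sampled candidates, the baseline is taken over a single utterance rather than a whole mini-batch, and all function names are hypothetical.

```python
import math


def edit_distance(hyp, ref):
    """Levenshtein distance between two character sequences."""
    prev = list(range(len(ref) + 1))
    for i, h in enumerate(hyp, 1):
        cur = [i]
        for j, r in enumerate(ref, 1):
            cur.append(min(prev[j] + 1,              # deletion
                           cur[j - 1] + 1,           # insertion
                           prev[j - 1] + (h != r)))  # substitution or match
        prev = cur
    return prev[-1]


def cer(hyp, ref):
    """Character error rate of a hypothesis against the reference."""
    return edit_distance(hyp, ref) / len(ref)


def expected_risk(candidates, log_probs, ref):
    """Probability-weighted average CER over the sampled candidates.

    Assumption: decoder log-probabilities are renormalised over the
    N candidates so that the weights sum to one.
    """
    top = max(log_probs)
    weights = [math.exp(lp - top) for lp in log_probs]
    total = sum(weights)
    risks = [cer(c, ref) for c in candidates]
    risk = sum(w / total * r for w, r in zip(weights, risks))
    return risk, risks


def advantages(risks, baseline):
    """Baseline-subtracted risks that weight each candidate's log-probability gradient."""
    return [r - baseline for r in risks]


reference = "abcd"
sampled = ["abcd", "abed", "ab"]
risk, risks = expected_risk(sampled, [-1.0, -1.0, -1.0], reference)
baseline = sum(risks) / len(risks)
print(risks)                        # [0.0, 0.25, 0.5]
print(risk)                         # 0.25
print(advantages(risks, baseline))  # [-0.25, 0.0, 0.25]
```

Candidates with below-average CER receive a negative weight, so a gradient step that lowers the loss raises their probability, while above-average candidates are pushed down.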

4. Experimental Results and Analysis

4.1. Experimental Data and Preprocessing

Clean speech data were sourced from the AISHELL-1 Mandarin speech database [32], with noise samples drawn from the QUT-NOISE [33] and Noisex92 [34] datasets. The QUT-NOISE dataset encompasses three primary acoustic scenes (domestic, office, and public and urban environments), which are further categorized into nine distinct noise types. The Noisex92 dataset includes eight noise categories, such as white noise, HF channel noise, and factory workshop noise. We selected an equal proportion of samples from each noise category to ensure balanced representation and prevent bias toward any specific noise type during training.

The noise-augmented training set was constructed by mixing clean speech with environmental noise at prescribed signal-to-noise ratios (SNRs), as outlined in Figure 6. The procedure was as follows: First, the clean speech training set was divided equally into seven subsets. Next, we randomly extracted noise segments matching the duration of the clean speech. To ensure balanced representation across all noise conditions and prevent bias toward any specific noise type, we maintained equal sampling proportions from each noise category during this process. These segments were then mixed with the corresponding clean speech subsets at SNRs of −15 dB, −10 dB, −5 dB, 0 dB, 5 dB, 10 dB, and 15 dB. Finally, all noise-mixed samples across different SNR conditions were combined to form the final noise-augmented training dataset.
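Mixing at a prescribed SNR amounts to rescaling the noise so that the speech-to-noise power ratio hits the target before the two signals are added. The following is a minimal sketch using the standard power-ratio definition of SNR; the function names and the toy waveforms are illustrative and not taken from the paper.

```python
import math

SNRS_DB = [-15, -10, -5, 0, 5, 10, 15]  # one target SNR per training subset


def power(signal):
    """Mean power of a waveform."""
    return sum(s * s for s in signal) / len(signal)


def mix_at_snr(speech, noise, snr_db):
    """Scale `noise` so that speech power / noise power matches snr_db, then add."""
    scale = math.sqrt(power(speech) / (power(noise) * 10 ** (snr_db / 10)))
    mixed = [s + scale * n for s, n in zip(speech, noise)]
    return mixed, scale


def measured_snr_db(speech, noise, scale):
    """SNR in dB actually obtained after scaling the noise."""
    return 10 * math.log10(power(speech) / (scale ** 2 * power(noise)))


speech = [1.0, -1.0, 1.0, -1.0]   # toy clean segment
noise = [0.5, 0.5, -0.5, -0.5]    # toy noise segment of equal duration
for target in SNRS_DB:
    _, gain = mix_at_snr(speech, noise, target)
    print(target, round(measured_snr_db(speech, noise, gain), 6))
```

At 0 dB the noise is scaled to the same power as the speech; at −15 dB its power is about 31.6 times that of the speech, which gives a sense of how severe the lowest-SNR condition is.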

The model was initially pre-trained for 150 epochs on the clean AISHELL-1 dataset to establish a robust baseline. Subsequently, it was fine-tuned for 50 epochs on noisy speech synthesized from AISHELL-1 and QUT-NOISE. Robustness was evaluated on two distinct noisy test sets: AISHELL-1 with QUT-NOISE noise and AISHELL-1 with Noisex92 noise. This yields two evaluation scenarios: the first tests performance on matched noise (QUT-NOISE), while the second assesses generalization to unmatched noise (Noisex92).

We emphasize that all mixed datasets were constructed by strictly following the original official data partitions of each source dataset. Crucially, speakers appearing in the official AISHELL-1 test set were excluded from both training and validation partitions. Additionally, noise segments from QUT-NOISE and Noisex92 were rigorously separated to ensure completely disjoint sets for training, validation, and testing.

4.2. Experimental Environment and Parameter Settings

All experiments were run on a server equipped with a 64-bit Ubuntu OS, an Intel Xeon Platinum 8352V CPU, an NVIDIA GeForce RTX 4090 GPU (24 GB VRAM), and 32 GB of DDR4 RAM. The models were developed in Python 3.10 with the PyTorch 2.9.0 framework. Comprehensive hyperparameter settings are listed in Table 1. To improve generalization, we employed a parameter-averaging strategy, where the parameters from the top-10 performing epochs were averaged to produce the final model.
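Parameter averaging of this kind can be sketched as follows. The example assumes that epochs are ranked by validation CER (lower is better) and represents each checkpoint as a plain dictionary of parameter lists; both choices, and the function names, are illustrative assumptions rather than details given in the text.

```python
def average_checkpoints(checkpoints):
    """Element-wise mean of parameter dictionaries with identical keys and shapes."""
    count = len(checkpoints)
    averaged = {}
    for name in checkpoints[0]:
        size = len(checkpoints[0][name])
        averaged[name] = [
            sum(ckpt[name][i] for ckpt in checkpoints) / count for i in range(size)
        ]
    return averaged


def top_k_average(history, k=10):
    """Average the k checkpoints with the lowest validation score.

    `history` is a list of (validation_cer, parameters) pairs; ranking by
    validation CER is an assumption made for this sketch.
    """
    best = sorted(history, key=lambda item: item[0])[:k]
    return average_checkpoints([params for _, params in best])


# Toy history with three epochs; the two best ones are averaged.
history = [
    (12.0, {"w": [1.0, 3.0]}),
    (15.0, {"w": [9.0, 9.0]}),
    (11.0, {"w": [3.0, 5.0]}),
]
print(top_k_average(history, k=2))  # {'w': [2.0, 4.0]}
```

Averaging nearby checkpoints tends to smooth out epoch-to-epoch fluctuations in the weights, which is the usual motivation for this step.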

4.3. Experimental Verification and Analysis

To evaluate the proposed OS-Denseformer, we conducted a comprehensive set of experiments: (1) a performance comparison with mainstream ASR models; (2) an analysis of noisy-data fine-tuning on robustness; and (3) ablation studies on key architectural components.

4.3.1. Comparison and Analysis with Mainstream Models

We evaluated OS-Denseformer by comparing it against state-of-the-art speech recognition models; results are presented in Table 2. Models were categorized by size into three groups: L (large), M (medium), and S (small).

Table 2 presents two principal findings. (1) Model Scale Enhances Robustness: Among the baseline models, noise robustness improves with scale. The Conformer illustrates this: its CER on matched noise decreases from 13.79% (S, 10.3 M) to 11.42% (M, 32.6 M) and 10.83% (L, 125.6 M), and on unmatched noise from 13.98% to 11.66% and 11.32%. This comes at a high computational cost, with GFLOPs rising from 30.5 (S) to 79.0 (M) and 294.1 (L). (2) OS-Denseformer’s Performance Superiority: OS-Denseformer achieves a lower CER than Transformer, Conformer-L, Squeezeformer-L, and Zipformer-L, while reducing parameters by 147.9 M, 12.9 M, 128.9 M, and 43.1 M and GFLOPs by 544.2, 206.6, 215.7, and 26.8, respectively. This validates OS-Denseformer’s dual strengths in efficiency and accuracy for noisy speech recognition.

While Speech-Mamba achieves competitive performance on clean speech, its CER degrades substantially more than all other models under noisy conditions. This indicates that the state-space modeling approach currently lacks the robustness of optimized CNN-Transformer hybrid architectures when processing complex, non-stationary environmental noise.

As shown in Figure A3 (Appendix A), the scatter plot of GFLOPs versus CER across model architectures illustrates that OS-Denseformer occupies a position on the Pareto frontier within the lower-left quadrant, demonstrating its optimal balance between computational efficiency and recognition accuracy.

Figure 7 presents the CER performance of various models under different SNR conditions. A performance comparison was conducted between OS-Denseformer and the large-scale Conformer, Squeezeformer, and Zipformer. As expected, a consistent trend in decreasing CER with increasing SNR is observed for all models. OS-Denseformer consistently achieves the lowest CER across the SNR range, with its superiority being most pronounced under low-SNR conditions. To quantify the performance advantage across the −15 dB to 15 dB SNR range, we computed the Area Under the Curve (AUC) for each model. OS-Denseformer achieved the lowest AUC of 407.0, reflecting marked improvements of 22.5%, 19.2%, and 12.4% over the Conformer-L (525.4), Squeezeformer-L (503.6), and Zipformer-L (464.5), respectively.
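The relative improvements quoted above follow directly from the reported AUC values, as the short check below shows. The trapezoidal integration routine is included only to illustrate how an area under a CER-versus-SNR curve can be computed; the integration rule is an assumption, and the small curve passed to it is a toy example, not data from the paper.

```python
def trapezoid_auc(snrs_db, cers):
    """Area under a CER-vs-SNR curve using the trapezoidal rule."""
    area = 0.0
    for i in range(1, len(snrs_db)):
        width = snrs_db[i] - snrs_db[i - 1]
        area += width * (cers[i] + cers[i - 1]) / 2
    return area


def relative_improvement(baseline_auc, model_auc):
    """Percentage reduction of model_auc relative to baseline_auc."""
    return (baseline_auc - model_auc) / baseline_auc * 100


# AUC values reported in the text.
OS_DENSEFORMER_AUC = 407.0
BASELINE_AUCS = {"Conformer-L": 525.4, "Squeezeformer-L": 503.6, "Zipformer-L": 464.5}

for name, auc in BASELINE_AUCS.items():
    print(name, round(relative_improvement(auc, OS_DENSEFORMER_AUC), 1))
# Conformer-L 22.5
# Squeezeformer-L 19.2
# Zipformer-L 12.4

# Toy curve (not from the paper) to show the integration itself.
print(trapezoid_auc([0, 5, 10], [4.0, 2.0, 2.0]))  # 25.0
```

Because the SNR axis spans 30 dB, an AUC of 407.0 corresponds to a mean CER of roughly 13.6% over the evaluated range.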

The performance advantage of OS-Denseformer grows as the SNR declines, highlighting its superior noise robustness. At −15 dB, the CER improvements reach 9.95%, 7.97%, and 4.85% relative to Squeezeformer-L, Conformer-L, and Zipformer-L, respectively. These findings underscore the model’s exceptional robustness in low-SNR conditions.

4.3.2. Model Fine-Tuning Results and Analysis

We assessed the effect of noise-augmented fine-tuning using the following four experimental conditions: (1) Baseline: The original pre-trained model (no fine-tuning); (2) Clean FT: Fine-tuning on clean speech; (3) Unmatched Noise FT: Fine-tuning on Noisex92; (4) Matched Noise FT: Fine-tuning on QUT-NOISE.

We assessed all four model variants on a test set constructed by mixing the AISHELL-1 dataset with QUT-NOISE noise.

As shown in Figure 8, the CER results across different SNR conditions reveal a clear performance hierarchy: the pre-trained model without fine-tuning showed the highest CER, followed by the model fine-tuned only on clean speech. The model fine-tuned with noise-augmented data achieved better performance in unmatched noise conditions and the best performance in matched noise conditions. The comparative analysis revealed that fine-tuning with noise-augmented datasets most effectively improves speech recognition accuracy under strong noise interference. Specifically, when fine-tuned on matched noise data, the model achieved CER reductions of 1.37%, 2.89%, and 4.1% at −5 dB, −10 dB, and −15 dB SNR, respectively, compared to the model fine-tuned on clean speech.

The results further confirm that OS-Denseformer maintains remarkably stable performance on unmatched noise types, with no significant degradation. Compared to matched noise conditions, the CER on unseen noise increased by only 0.59%, 1.17%, and 2.1% at −5 dB, −10 dB, and −15 dB, respectively. Despite these minor increments, the model’s CER remained substantially lower than both the baseline and the model fine-tuned only on clean speech. These findings confirm that noise-augmented fine-tuning, especially with matched noise, effectively enhances the robustness of OS-Denseformer in challenging acoustic environments.

4.3.3. Ablation Experiments and Analysis

An ablation study was conducted to assess the individual contributions of key modules in the OS-Denseformer architecture. Following a controlled-variable methodology, only one target component was altered per experiment while maintaining all other elements fixed. The corresponding results are summarized in Table 3.

Ablation results in Table 3 delineate each component’s contribution:

Multi-sampling Architecture: This design reduces CER versus a single downsampling baseline, while concurrently reducing parameters by 24.2% (from 148.6 M to 112.7 M) and GFLOPs substantially, affirming its efficient acoustic feature extraction.

Downsampling and Upsampling Module: Replacing the proposed downsampling and upsampling modules with simple frame averaging and duplication directly increases CER. This finding provides empirical validation that our sampling architecture successfully mitigates the performance degradation typically induced by spectral distortion during acoustic signal processing. As supplementary material, Table A1 in Appendix A quantifies the spectral distortion introduced by different sampling modules before and after rate transformation.

OS-Conv Module: Ablating the OS block raised CER, confirming its critical role in noisy speech modeling.

Normalization Function: Replacing ExpNorm with LayerNorm raised computational cost, validating ExpNorm’s efficiency advantage for inference. Additionally, we conducted comparative evaluations of the training convergence behavior and CER performance for models employing ExpNorm versus RMSNorm. The corresponding results are presented in Figure A1 and Figure A2 in Appendix A.

MBR Loss Function: Substituting the two-stage MBR loss with the conventional CTC + AED loss function results in a noticeable increase in CER. This result further validates that our two-stage MBR framework directly minimizes the expected edit distance between reference texts and N-best hypothesis sets. By evaluating the relative quality among hypotheses rather than relying on absolute probability estimates, MBR effectively guides the model toward noise-robust representations when acoustic features are corrupted. The online decoding process generates diverse N-best candidates, enabling information integration across multiple hypotheses. Through this ensemble-like approach, the model captures essential speech patterns even when some hypotheses contain noise artifacts, thereby reducing the detrimental effects of noise on individual predictions. Additionally, we conducted a sensitivity analysis on the hyperparameter N, with the results presented in Table A2 in Appendix A.
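The expected edit distance that the MBR objective minimizes can be illustrated with a minimal sketch. It assumes that the hypothesis posteriors are obtained by renormalizing the model's log-probabilities over the N-best list; the function names `edit_distance` and `mbr_risk` are hypothetical, and the two-stage schedule and the exact loss weighting used in this work are not reproduced here.

```python
import math


def edit_distance(ref, hyp):
    """Levenshtein distance between two character sequences."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def mbr_risk(reference, nbest, log_probs):
    """Expected edit distance of an N-best list against the reference.

    Assumption: posteriors are the log-probabilities renormalized over
    the N-best list (a softmax restricted to the listed hypotheses).
    """
    peak = max(log_probs)
    weights = [math.exp(lp - peak) for lp in log_probs]  # stable softmax
    total = sum(weights)
    posteriors = [w / total for w in weights]
    errors = [edit_distance(reference, hyp) for hyp in nbest]
    return sum(p * e for p, e in zip(posteriors, errors))
```

Because the risk depends only on the renormalized posteriors, shifting all log-probabilities by a constant leaves it unchanged, which reflects the reliance on relative rather than absolute hypothesis quality noted above.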

To quantify temporal redundancy in the multi-sampling architecture, we use cosine similarity as a metric. The similarity was measured between outputs of adjacent encoding blocks, with higher values denoting increased feature redundancy.

Figure 9 shows that the multi-sampling architecture maintains substantially lower cosine similarity compared to the single downsampling structure, particularly in deeper layers. This effect stems from the architecture’s inherent multi-scale design, which promotes diverse feature learning across sampling rates and reduces redundancy. Consequently, the design mitigates temporal redundancy, sharpens salient feature extraction, and reduces inference cost.
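The redundancy measurement described above can be sketched as follows. This is an illustrative sketch only: it assumes that adjacent blocks emit the same number of frames with the same feature dimension and that the frame-wise similarities are averaged over time. The aggregation actually used for Figure 9 is not specified here, and the function names are hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # convention for all-zero frames
    return dot / (norm_u * norm_v)


def adjacent_block_similarity(block_outputs):
    """Mean frame-wise cosine similarity for each pair of adjacent blocks.

    block_outputs: list of blocks; each block is a list of frames and
    each frame is a list of floats (assumed equal shapes across blocks).
    """
    similarities = []
    for prev, curr in zip(block_outputs, block_outputs[1:]):
        if len(prev) != len(curr):
            raise ValueError("adjacent blocks must have the same number of frames")
        frame_sims = [cosine_similarity(p, c) for p, c in zip(prev, curr)]
        similarities.append(sum(frame_sims) / len(frame_sims))
    return similarities
```

A value close to 1 for a given pair indicates that the later block changes the representation very little, which is the sense in which higher similarity denotes redundancy.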

A key observation from Figure 9 is the increase in temporal redundancy when processing noisy versus clean speech. The multi-sampling architecture, however, generates feature representations from noisy inputs that are more aligned with those from clean speech. This suggests that the multi-scale mechanism better captures the underlying speech structure amidst noise. Consequently, it is less prone to incorporating non-speech noise into features, leading to improved robustness.

5. Mobile Deployment and Performance Analysis

The resource constraints and power sensitivity of mobile devices, which differ fundamentally from server environments, necessitate thorough system-level evaluation. We deployed OS-Denseformer on smartphones and evaluated its performance using four key metrics: (1) Loading Time: The duration from loading the model into memory until it is ready for inference, affecting cold-start performance; (2) Memory Consumption: Peak memory consumption during operation; (3) Time Delay per Word: The latency for streaming inference to generate text from input audio; (4) Power Consumption: Energy used during model execution.

Our mobile end-to-end speech recognition system implements a complete low-latency pipeline that converts continuous audio signals into text output. A lightweight GRU-based Voice Activity Detection (VAD) module processes the raw 16 kHz single-channel audio stream in real time. When speech onset is detected, the VAD initiates processing and enables the downstream pipeline. Detected speech segments are forwarded to the feature extraction module to produce streaming log-Mel spectrograms. These features are fed to the acoustic model for streaming encoding, and finally, an on-device decoder incorporating a quantized MobileBERT generates recognized text using beam search.

The PyTorch-trained model was first converted to the ONNX format using ONNX Runtime Mobile. To accommodate mobile resource constraints, we applied static quantization, converting FP32 weights to INT8. The impact of quantization on storage, inference latency, and accuracy was then tested for both OS-Denseformer and Conformer on a Xiaomi 10 smartphone; the results are presented in Table 4. Within the table cells, Inference Latency and Memory Consumption are reported in the “Median/Mean/P95” format.
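For readers reproducing such measurements, the “Median/Mean/P95” cell format can be computed from repeated runs as in the following sketch. The nearest-rank definition of the 95th percentile is an assumption, since other percentile conventions exist and the one used for Table 4 is not stated; the function names are hypothetical.

```python
import statistics


def summarize(samples):
    """Return (median, mean, P95) for a list of measurements.

    Assumption: P95 uses the nearest-rank definition, i.e. the value at
    rank ceil(0.95 * n) in the sorted list.
    """
    if not samples:
        raise ValueError("at least one sample is required")
    ordered = sorted(samples)
    rank = (95 * len(ordered) + 99) // 100  # integer ceil(0.95 * n)
    p95 = ordered[rank - 1]
    return statistics.median(ordered), statistics.fmean(ordered), p95


def format_cell(samples, digits=1):
    """Format measurements as a 'Median/Mean/P95' table cell."""
    median, mean, p95 = summarize(samples)
    return f"{median:.{digits}f}/{mean:.{digits}f}/{p95:.{digits}f}"
```

Reporting P95 alongside the median and mean exposes tail latency, which averages alone can hide on devices with background load.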

Experimental results confirm that quantization reduces storage requirements by approximately 75% while significantly accelerating inference. In comparative evaluations, OS-Denseformer demonstrates significantly lower memory usage and achieves a reduced CER while cutting inference latency by more than half compared to Conformer.
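The approximately 75% storage reduction follows from replacing 4-byte FP32 weights with 1-byte INT8 values. The sketch below illustrates this with symmetric per-tensor quantization in plain Python. It is an assumption-laden illustration, not the ONNX Runtime quantization procedure: real static quantization also calibrates activation ranges and stores scale metadata, which this sketch ignores, and all function names are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to the range [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map INT8 codes back to approximate floating-point weights."""
    return [q * scale for q in quantized]


def storage_reduction(fp_bytes=4, int_bytes=1):
    """Fractional storage saving per weight, ignoring scale metadata."""
    return 1.0 - int_bytes / fp_bytes
```

Under this scheme each restored weight differs from the original by at most half a quantization step, which is the source of the small accuracy loss that accompanies the storage saving.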

The cross-platform compatibility of the OS-Denseformer system was evaluated on three smartphones: Xiaomi 10, Xiaomi 12S, and Vivo X100 Pro. The corresponding performance benchmarks are provided in Table 5. OS-Denseformer maintains stable and efficient performance across diverse mobile platforms, without introducing CER spikes or excessive power drain due to variations in hardware and software configurations.

We evaluated quantization robustness in four common noisy environments: server rooms, busy streets, subway platforms, and riverside areas. Table 6 compares the CER of OS-Denseformer and Conformer under both FP32 and INT8 precision.

Our experimental results reveal three principal findings: (1) OS-Denseformer consistently surpasses Conformer across all tested environments under both FP32 and INT8 precision, achieving superior CER performance. (2) The quantization-induced CER increase is substantially more pronounced for Conformer (+1.37% to +2.67%) than for OS-Denseformer (+1.07% to +1.88%), confirming superior quantization robustness. (3) OS-Denseformer demonstrates reduced variance across noise conditions (e.g., 15.27 ± 0.92% vs. 16.05 ± 0.98% in subway environments), indicating more stable performance.

These results demonstrate OS-Denseformer’s superior robustness in maintaining accuracy under both quantization constraints and diverse acoustic conditions, underscoring its strong suitability for deployment on resource-constrained devices where computational efficiency and environmental adaptability are paramount.

We have validated the model’s compliance with core mobile deployment requirements—accuracy, latency, and memory footprint. However, thermal stability under prolonged usage and its potential impact on sustained performance remain critical factors requiring thorough empirical evaluation in real-world product environments to ensure product-ready robustness.

6. Conclusions and Future Works

This paper introduces OS-Denseformer, a lightweight and noise-robust end-to-end model for ASR designed to tackle the persistent challenges of computational complexity and performance degradation in noisy environments. Its multi-sampling architecture achieves a favorable efficiency–robustness trade-off by hierarchically compressing speech signals into multi-scale representations, thereby reducing computational demand. The proposed OS-Conv module further enhances local feature extraction and promotes parameter efficiency through a reuse mechanism. The proposed phased MBR loss function directly integrates the CER evaluation metric into the training process, thereby enhancing parameter optimization. Comprehensive evaluations demonstrate that OS-Denseformer achieves superior recognition accuracy over leading models, including Transformer, Conformer, and Squeezeformer, with significantly lower computational and memory footprints in noisy settings.

While this study focuses on model-domain lightweight noise robustness, future work will enhance edge adaptability by (1) developing a runtime model selection strategy guided by real-time acoustic environment profiling (noise type and SNR) and (2) implementing a dynamic sub-module orchestration framework that optimally balances recognition accuracy with resource constraints. In addition, we will (3) explore synergistic integration with lightweight front-end speech enhancement modules to further improve performance in challenging acoustic conditions and (4) extend the evaluation to multilingual scenarios, particularly English, to validate the generalizability of the proposed approach.