Characterization of Upper Extremity Joint Angle Error for Virtual Reality Motion Capture Compared to Infrared Motion Capture

Abstract

1. Introduction

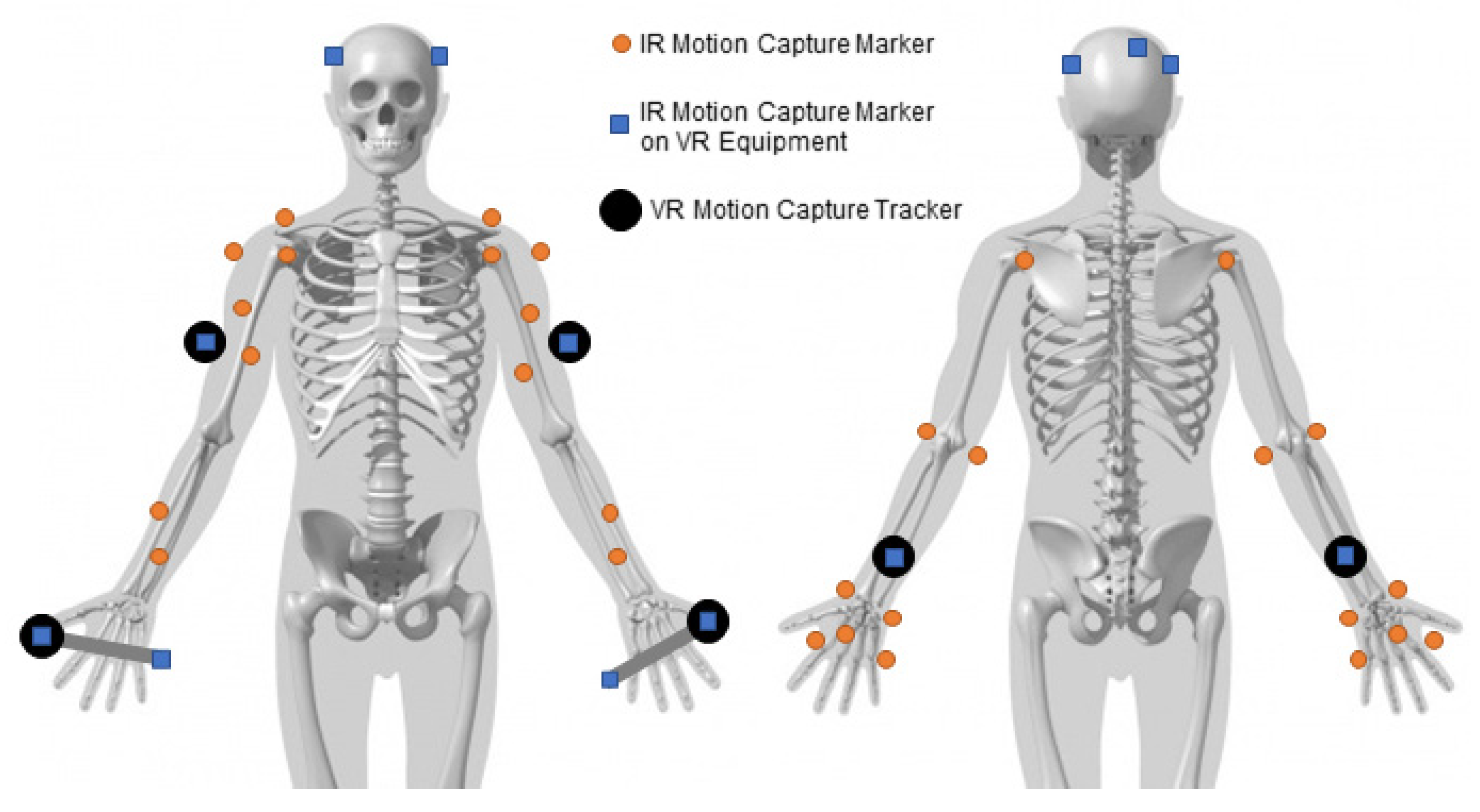

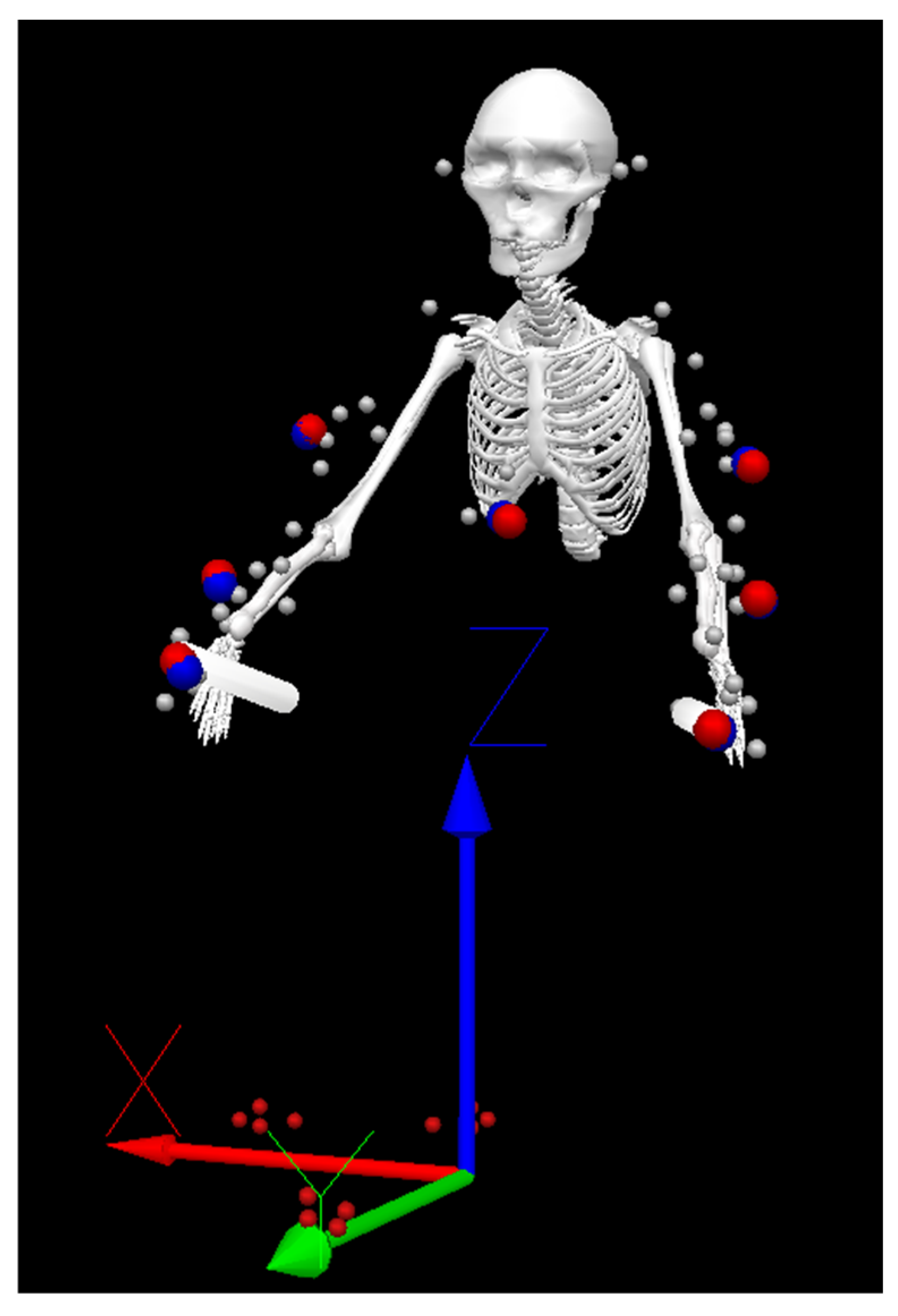

2. Materials and Methods

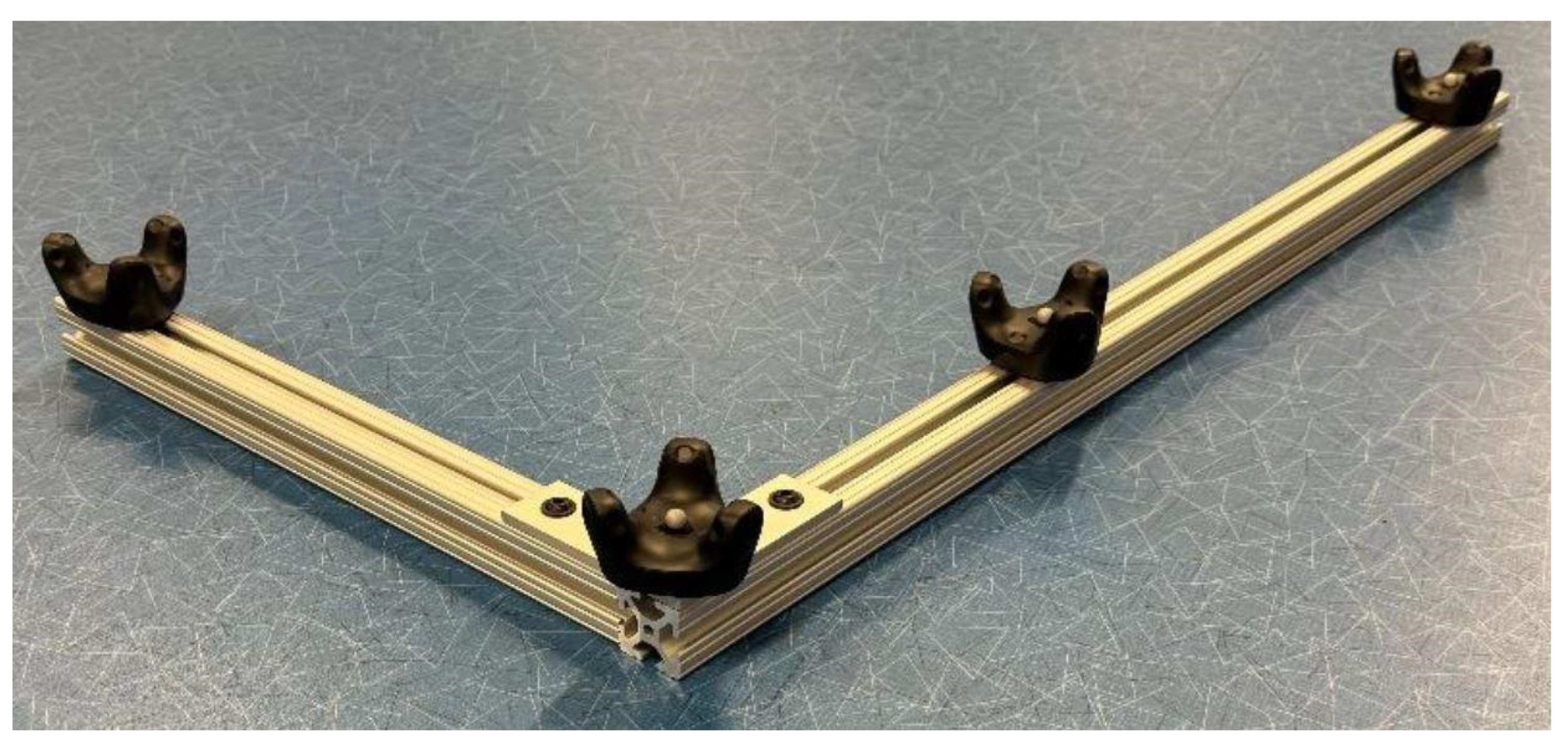

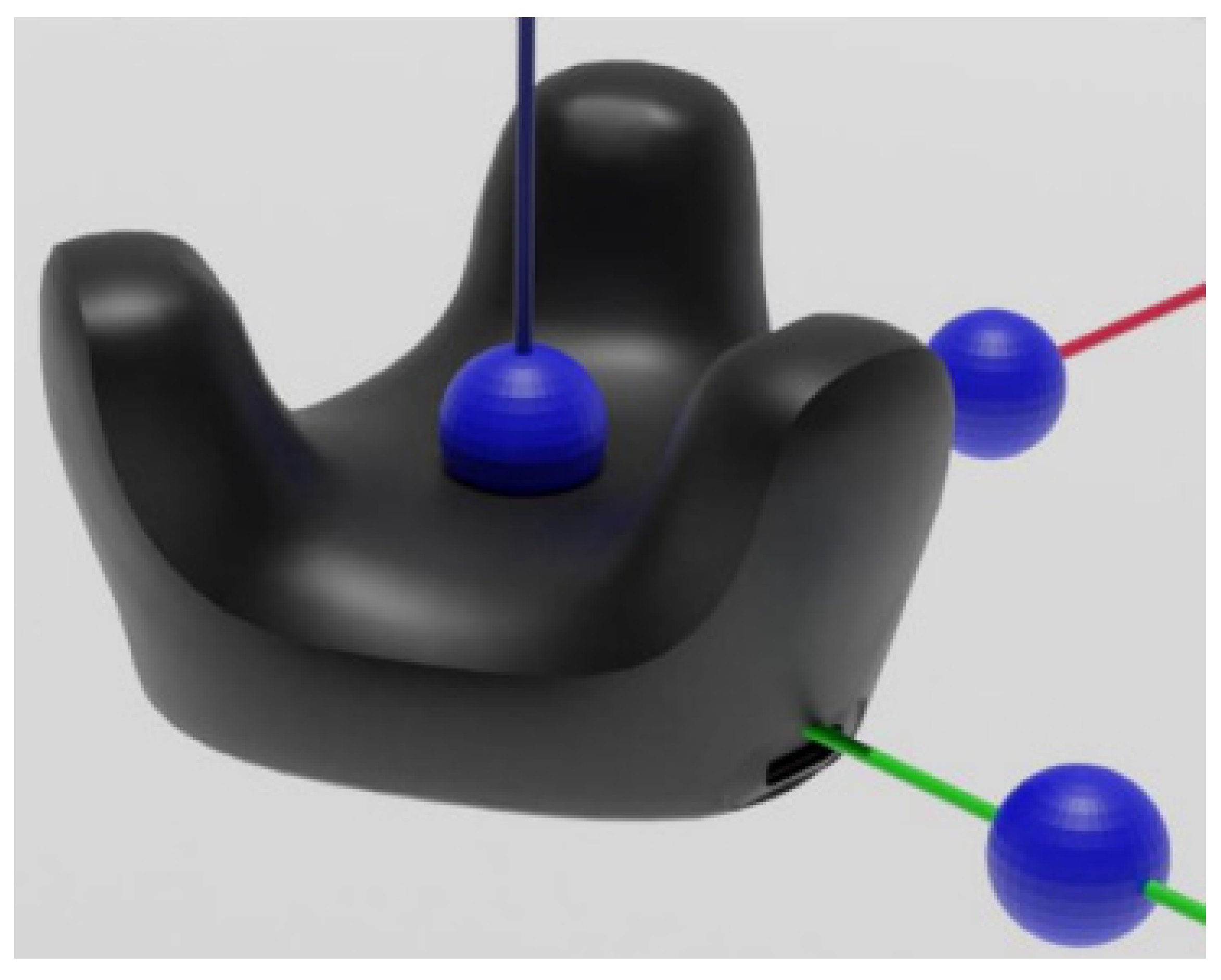

2.1. Equipment and Software

2.2. Participants

2.3. Data Collection

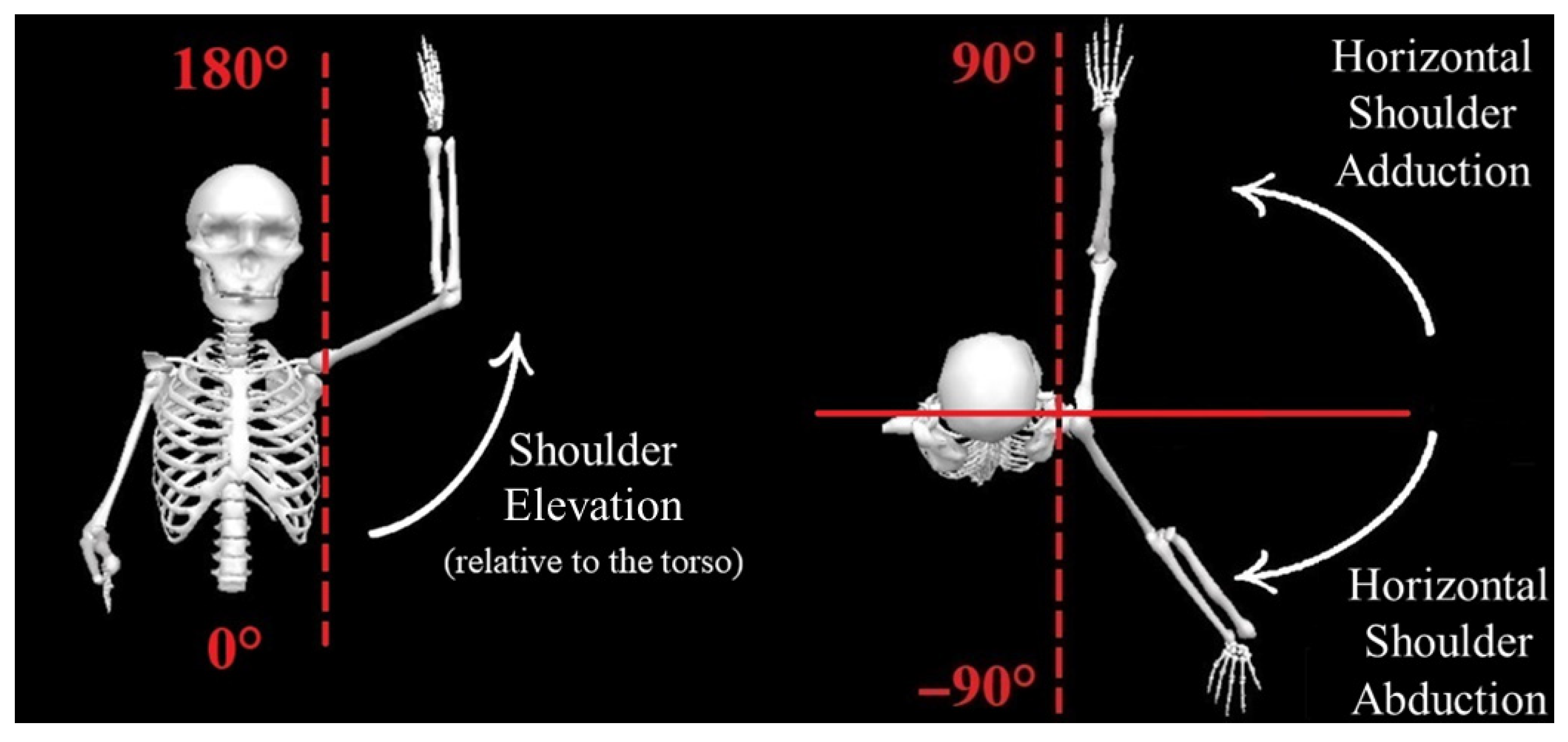

2.4. Post-Processing

2.5. Data Analysis

3. Results

3.1. Statistical Comparisons

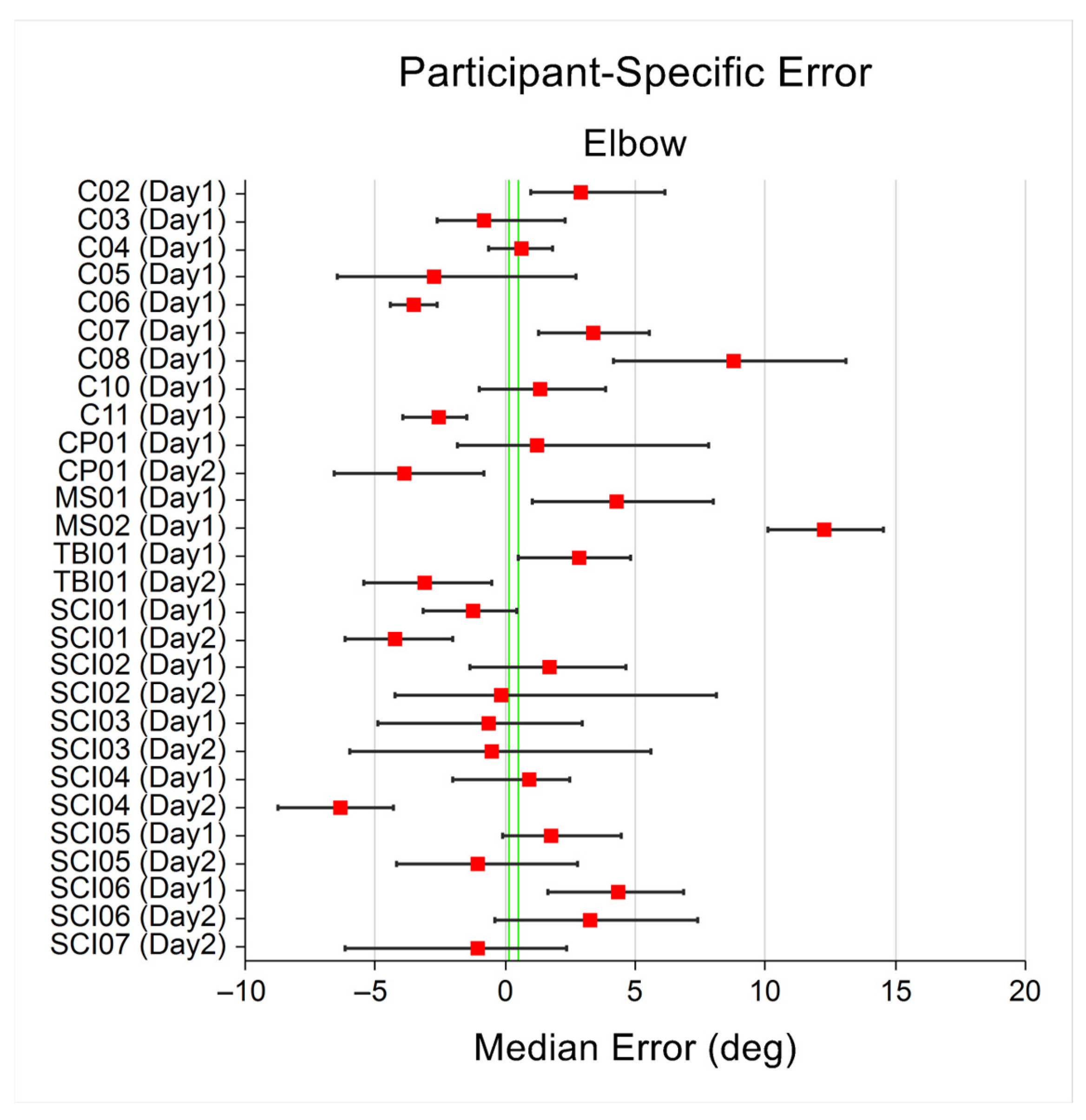

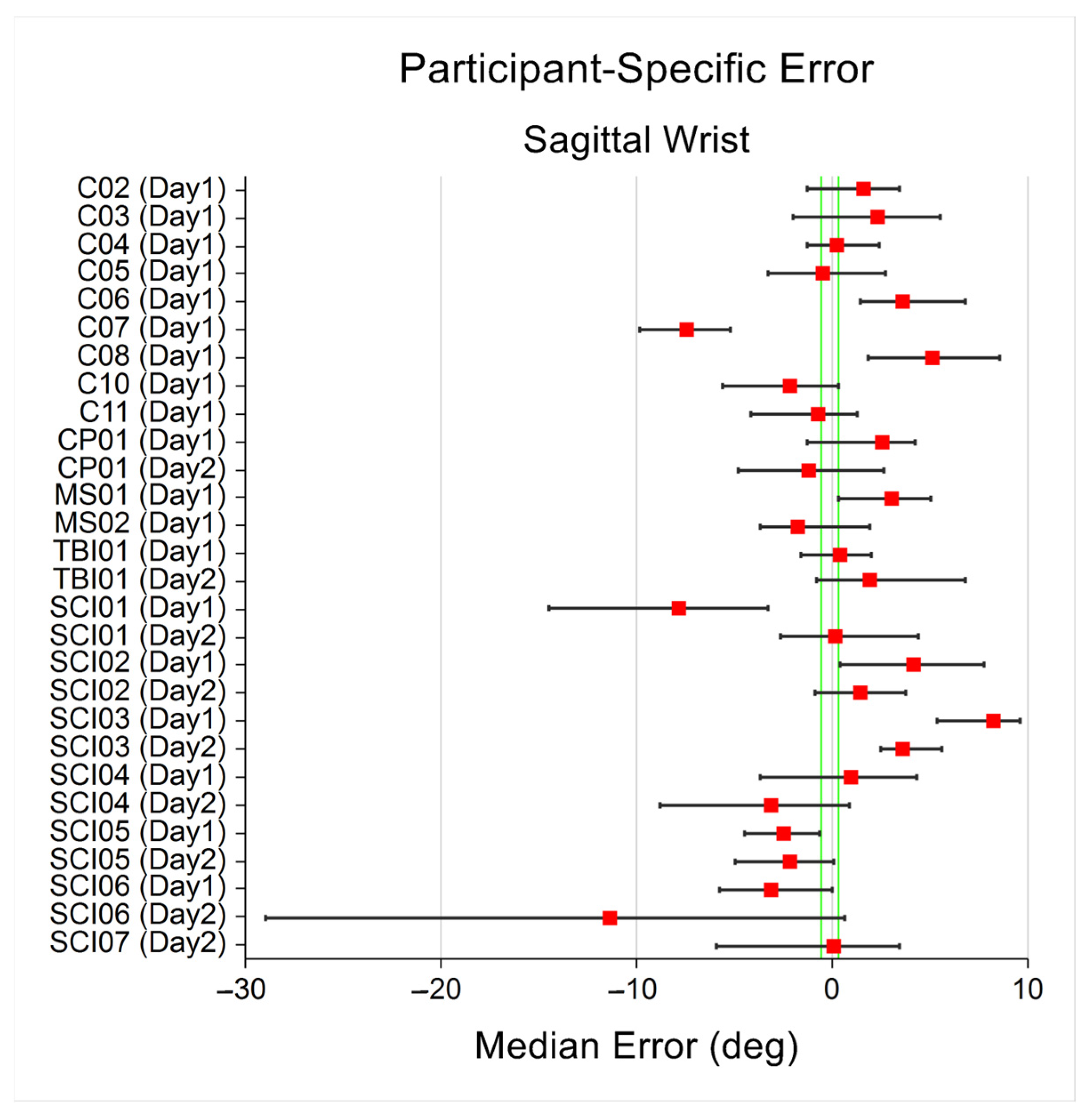

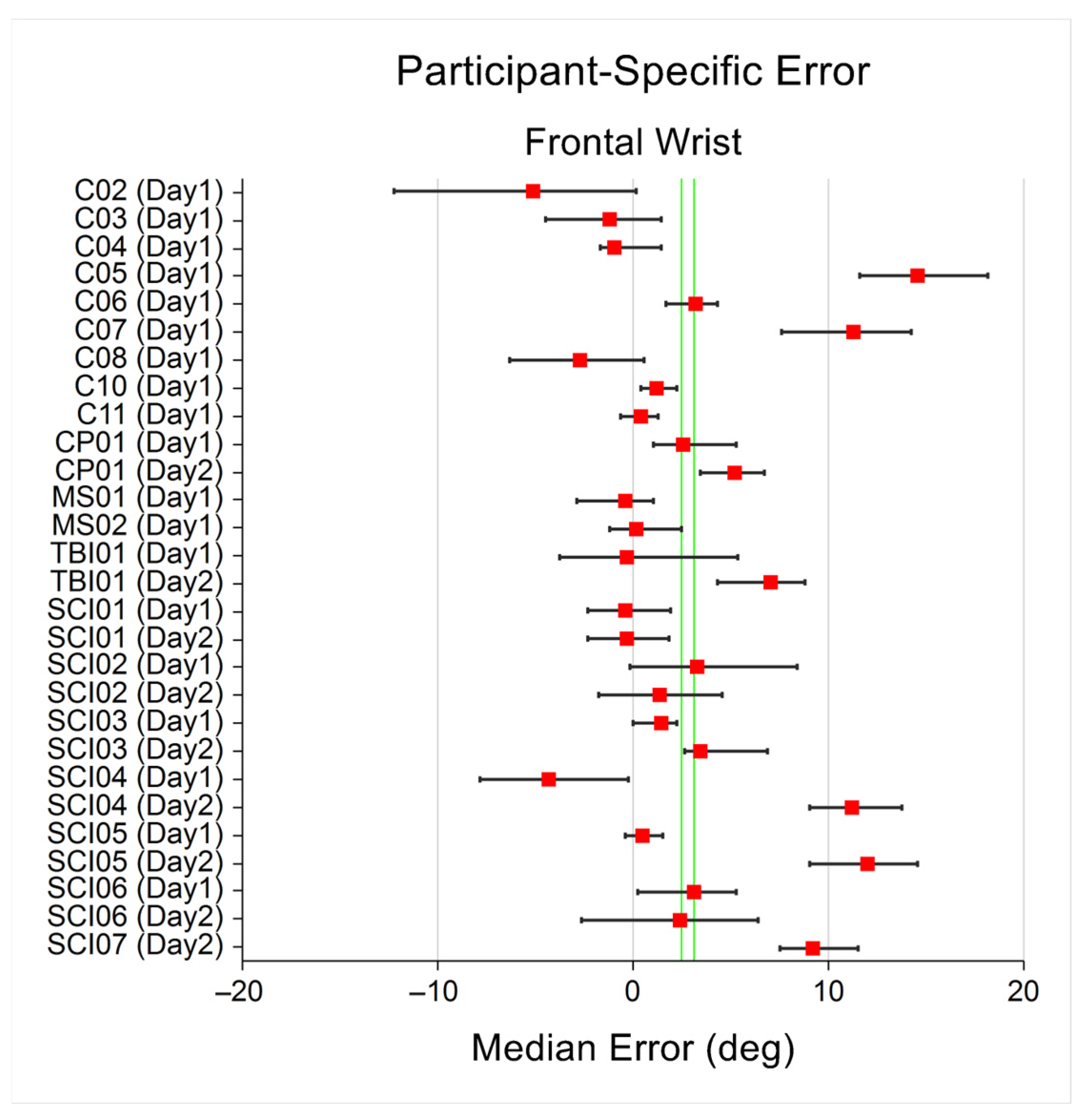

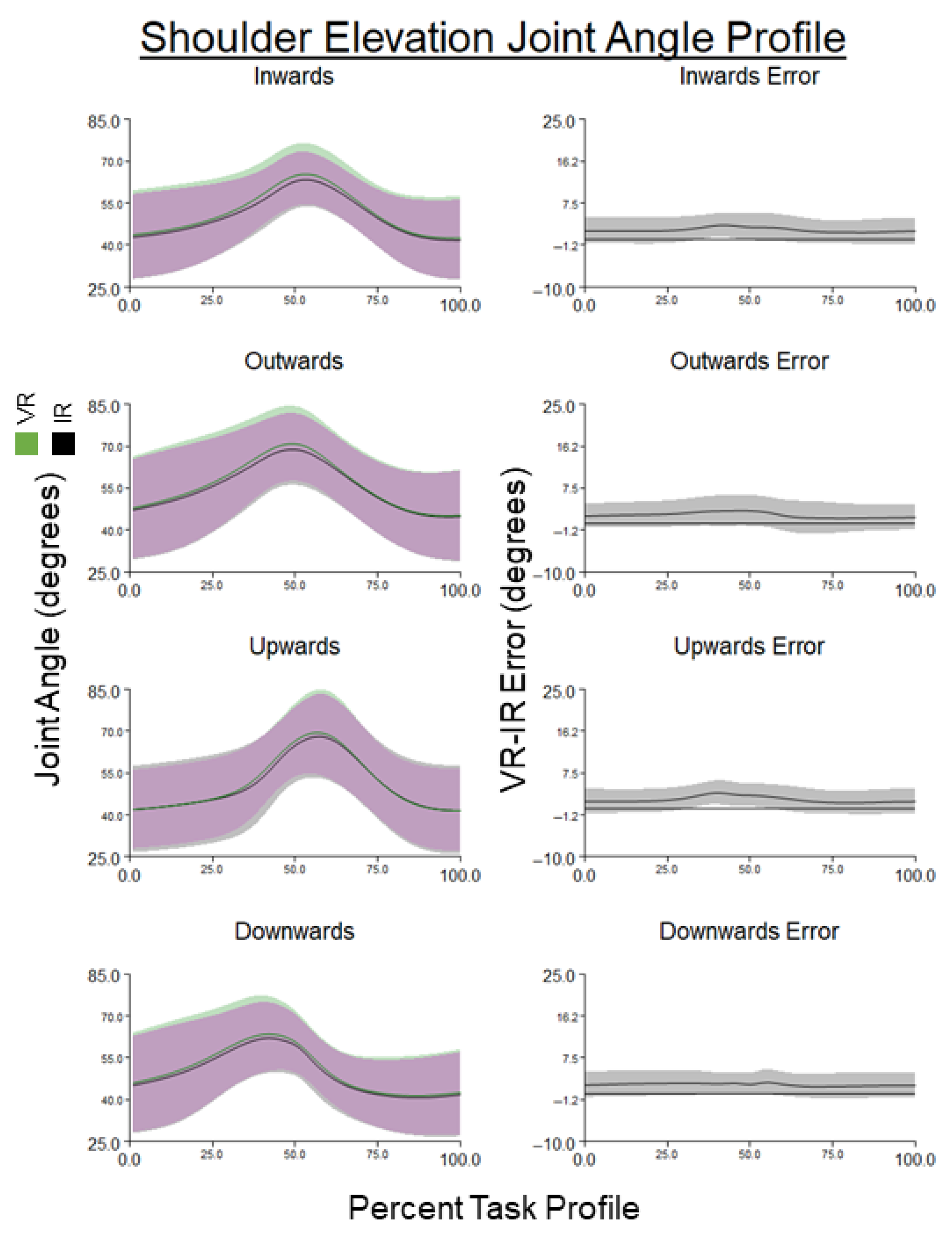

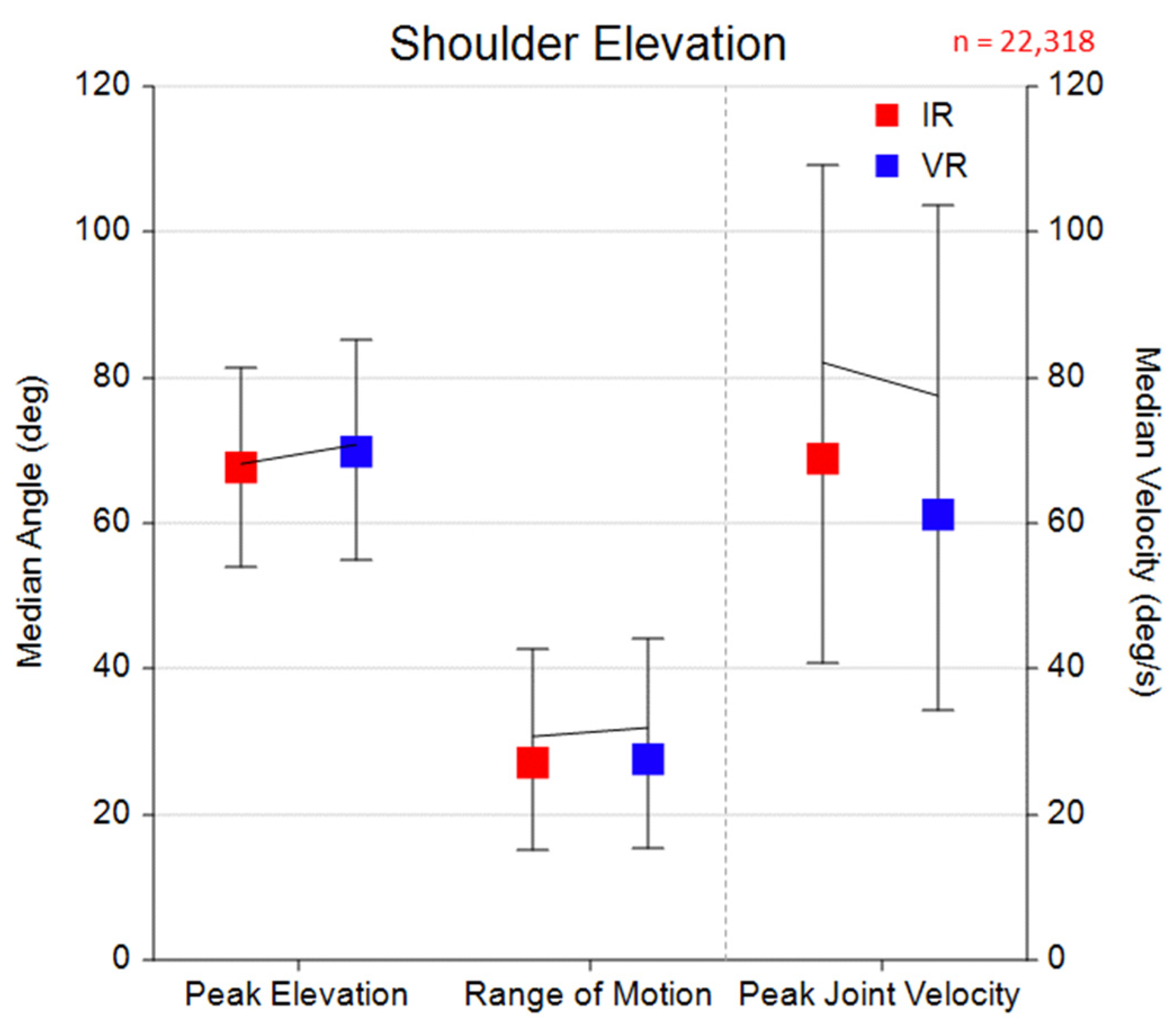

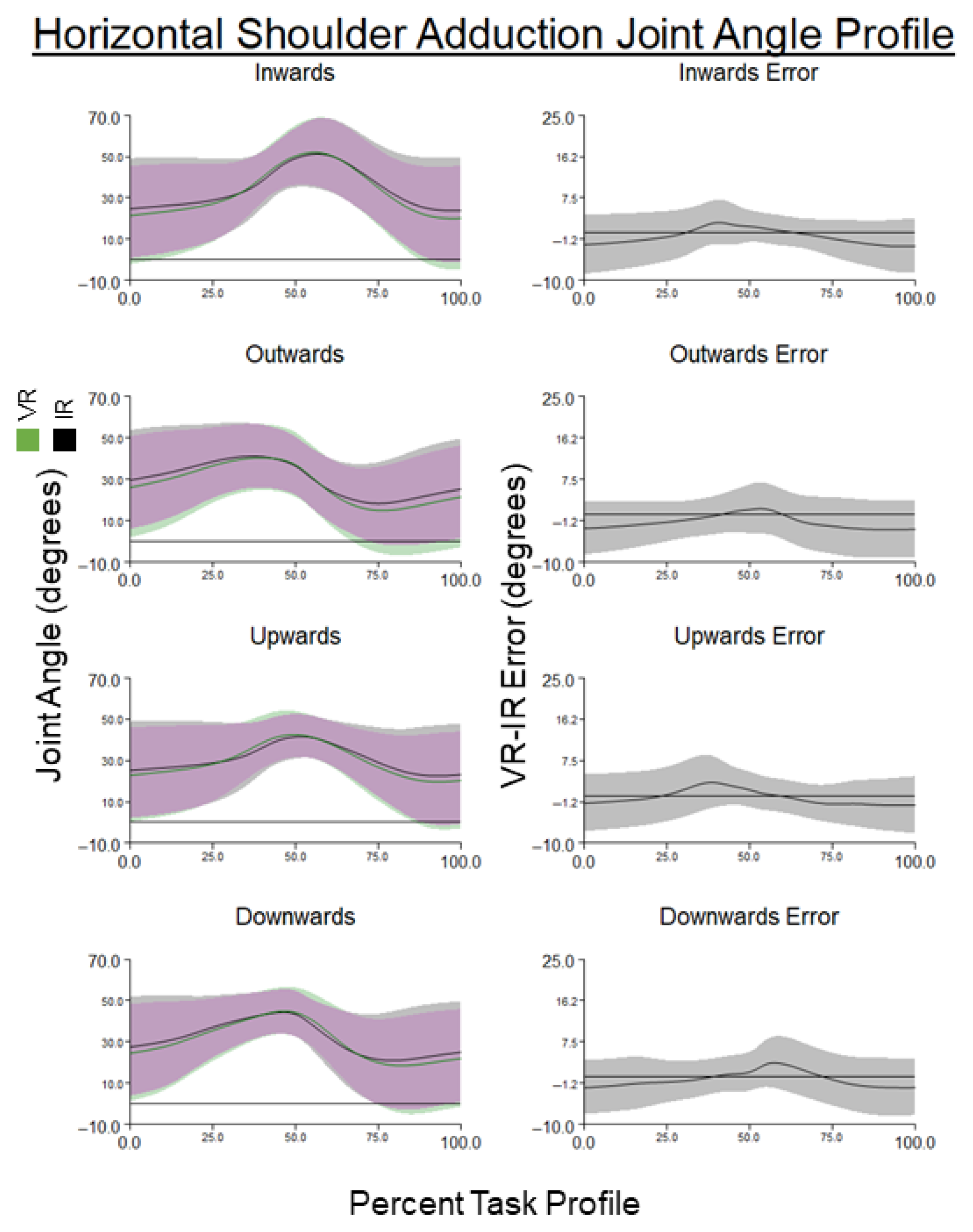

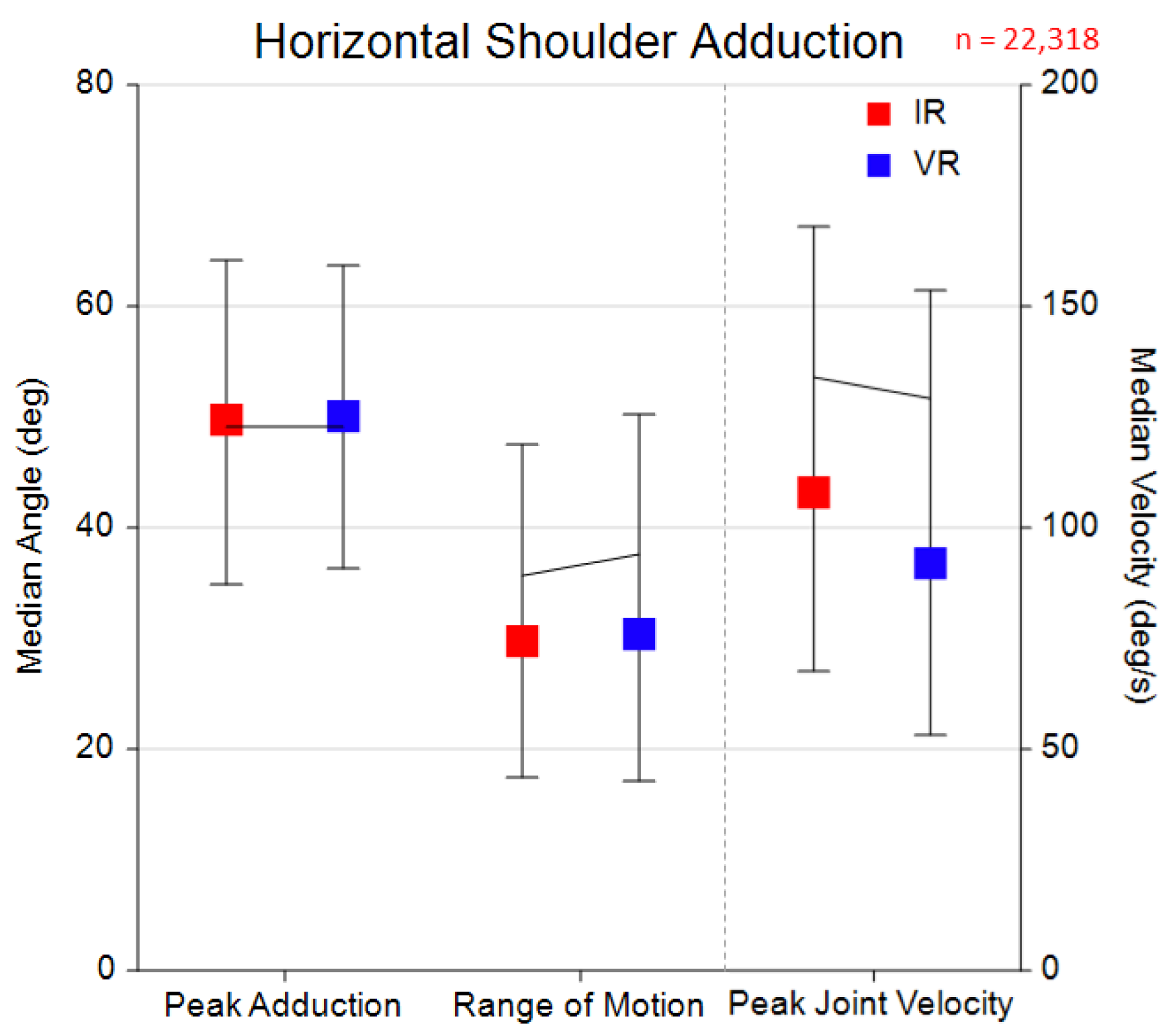

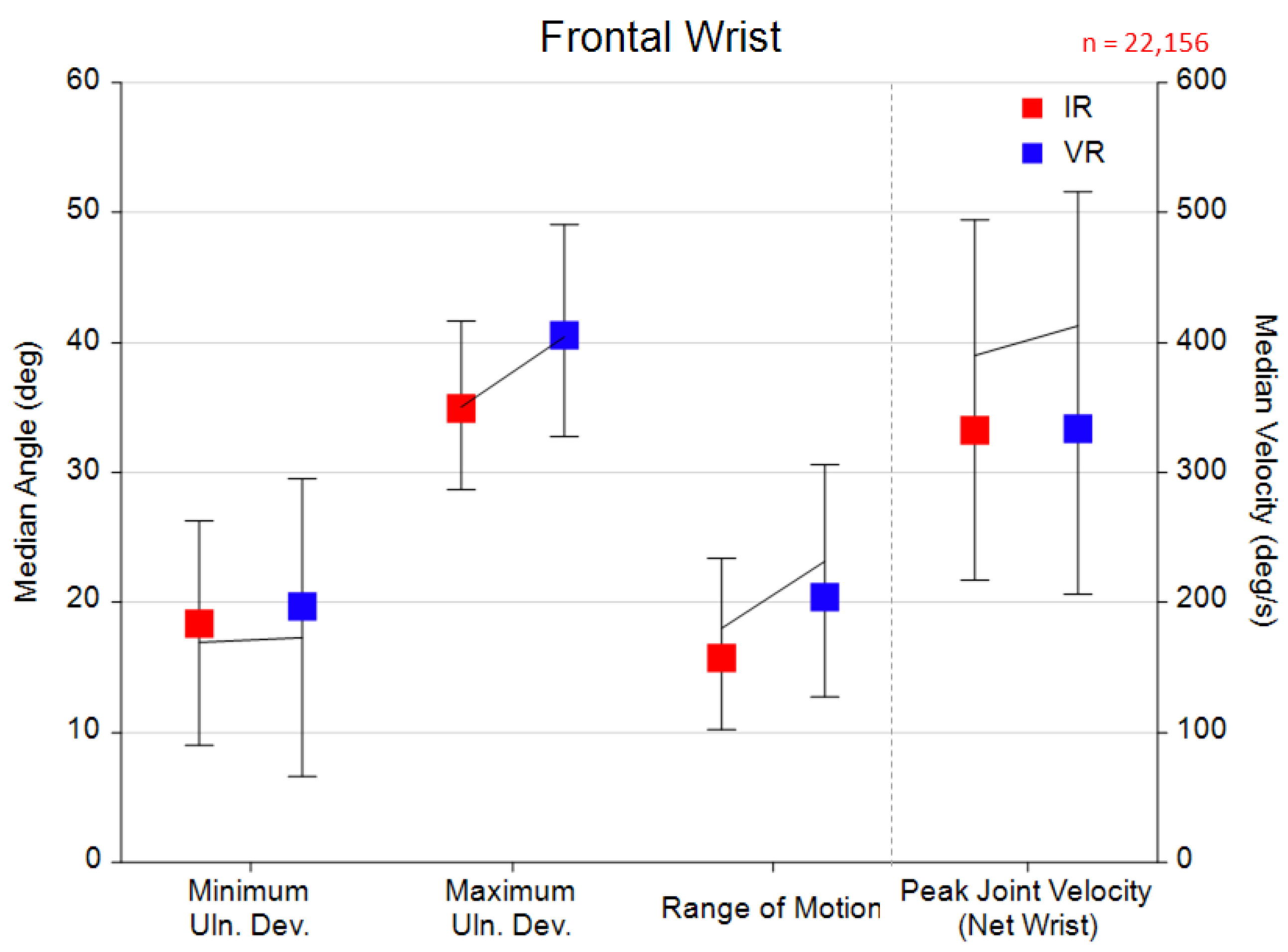

3.2. Joint Angle Error Characterizations

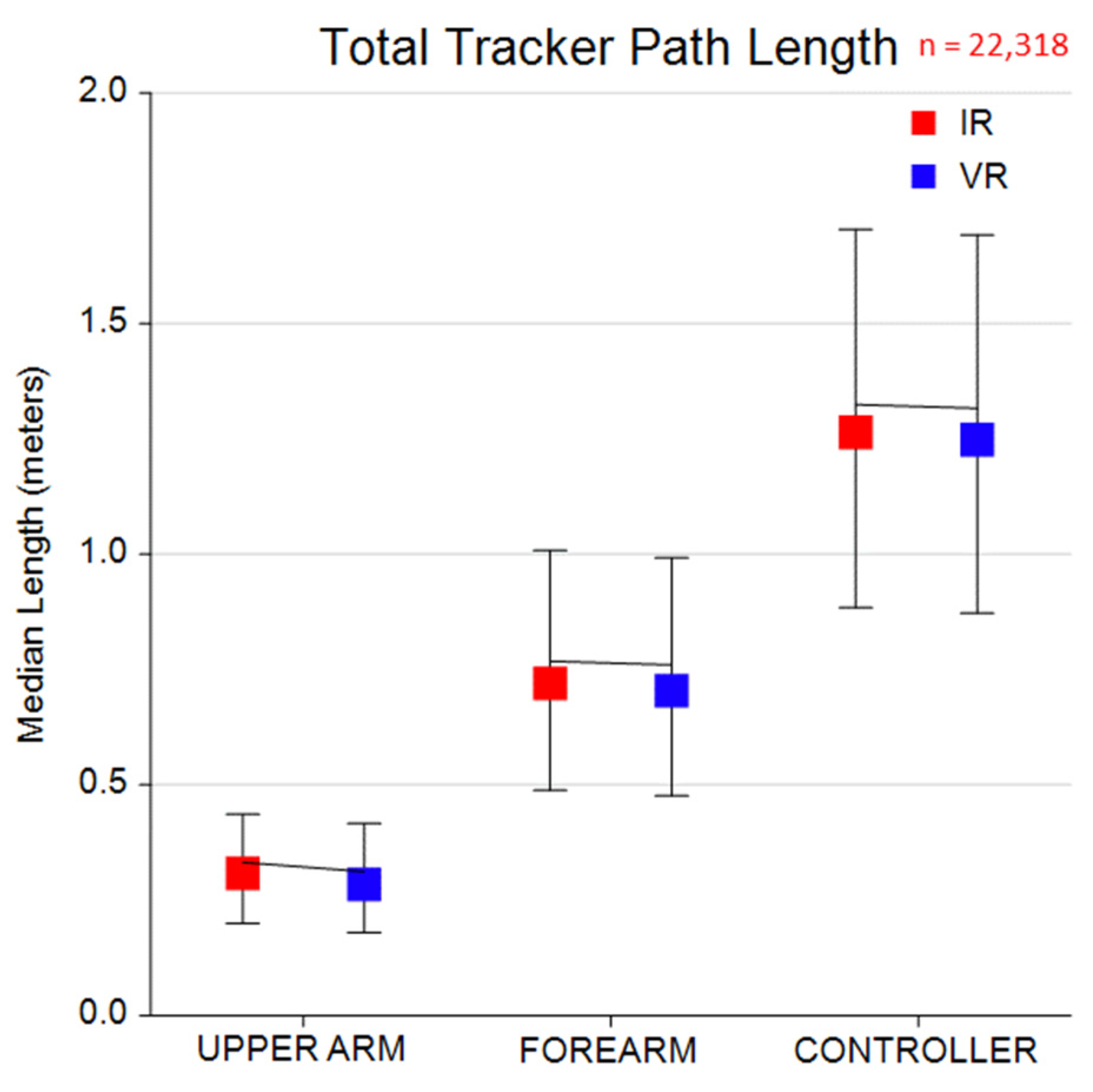

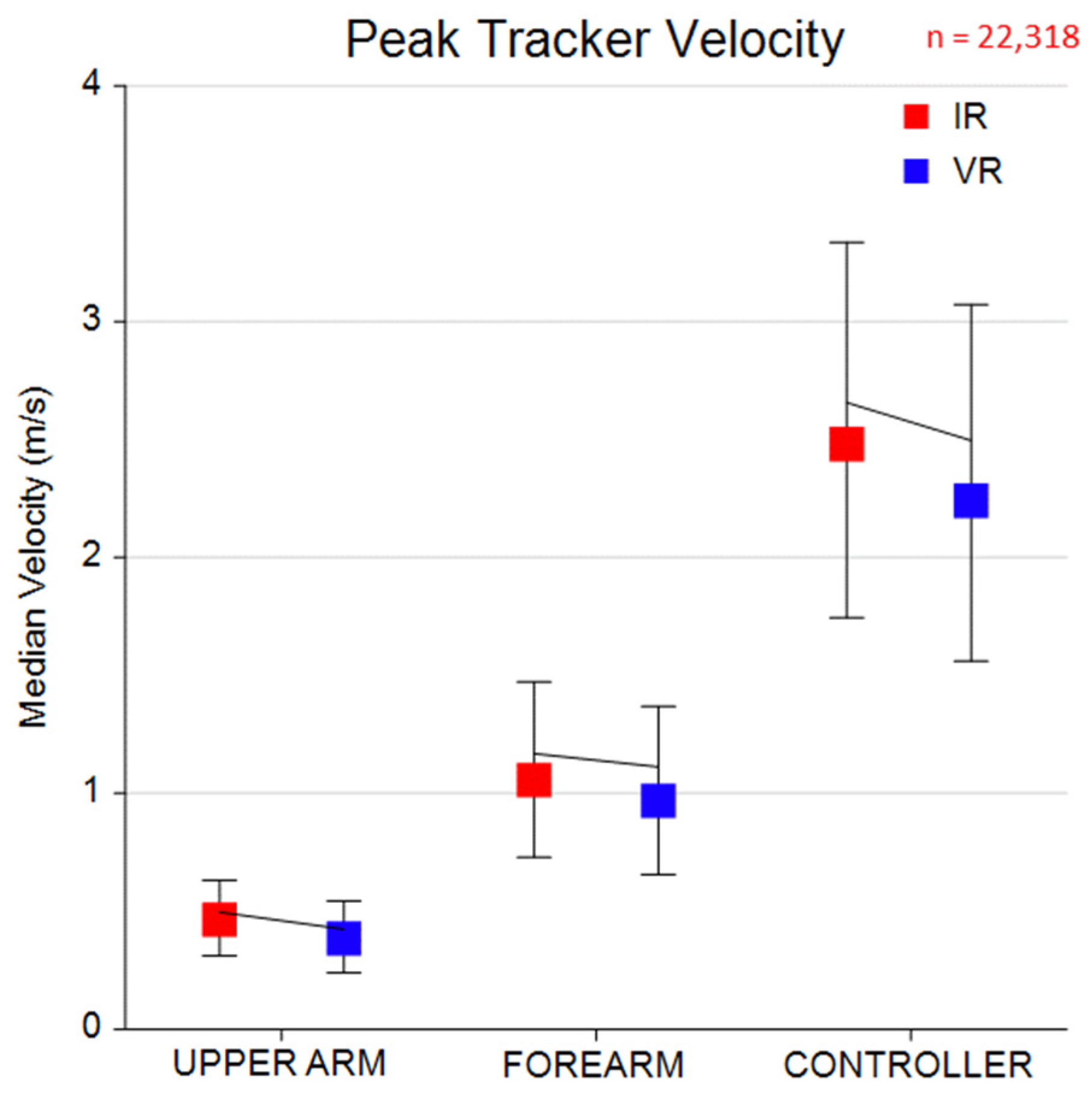

3.3. Tracker Error Characterizations

4. Discussion

4.1. Kinematic Metric Error Characterizations

4.2. Tracker Metric Error Characterizations

4.3. Limitations and Future Work

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| VR | Virtual Reality |
| IR | Infrared |
| UE | Upper Extremity |
| SCI | Spinal Cord Injury |
| CP | Cerebral Palsy |
| MS | Multiple Sclerosis |
| TBI | Post-Traumatic Brain Injury |
| ROM | Range of Motion |
| RMSE | Root Mean Squared Error |

Appendix A

| Mean (% of Median Value) | SD (% of Median Value) | Median (% of Median Value) | ||

|---|---|---|---|---|

| Shoulder Elevation | RMSE | 4.45 (14.51%) | 2.95 (15.44%) | 3.79 (13.98%) |

| Median Error | 1.76 (5.76%) | 4.45 (23.29%) | 1.54 (5.67%) | |

| Median Absolute Error | 3.92 (12.79%) | 2.93 (15.30%) | 3.20 (11.81%) | |

| Mean Error | 1.90 (6.21%) | 4.37 (22.85%) | 1.72 (6.35%) | |

| Absolute Mean Error | 3.68 (12.00%) | 3.03 (15.84%) | 2.92 (10.79%) | |

| Mean Absolute Error | 4.07 (13.28%) | 2.85 (14.89%) | 3.37 (12.44%) | |

| ROM Error | 1.23 (4.03%) | 5.24 (27.43%) | 0.47 (1.74%) | |

| Mean Absolute Participant-Level Bias | 2.32 (7.56%) | 3.56 (18.60%) | 2.25 (8.31%) | |

| Mean Absolute Participant-Level Bias of ROM | 2.12 (6.91%) | 4.35 (22.74%) | 1.70 (6.28%) | |

| Mean Absolute Participant-Level Bias at Peak Joint Angles | 3.05 (4.47%) | 4.65 (23.75%) | 2.81 (4.16%) | |

| Error at Peak Joint Angles | 2.63 (3.86%) | 5.44 (27.80%) | 2.18 (3.22%) | |

| Error at Peak Joint Velocities | 4.5 (5.52%) | 31.0 (53.99%) | 4.7 (6.77%) | |

| Mean Absolute Participant-Level Bias at Peak Joint Velocities | 7.4 (9.04%) | 25.3 (44.10%) | 6.5 (9.48%) | |

| Horizontal Shoulder Adduction | RMSE | 7.23 (20.29%) | 4.64 (19.02%) | 6.20 (20.86%) |

| Median Error | −0.83 (−2.34%) | 7.19 (29.46%) | −0.40 (−1.35%) | |

| Median Absolute Error | 6.18 (17.35%) | 4.32 (17.70%) | 5.20 (17.49%) | |

| Mean Error | −0.78 (−2.19%) | 7.13 (29.18%) | −0.36 (−1.21%) | |

| Absolute Mean Error | 5.63 (15.80%) | 4.44 (18.17%) | 4.65 (15.65%) | |

| Mean Absolute Error | 6.48 (18.18%) | 4.27 (17.49%) | 5.51 (18.53%) | |

| ROM Error | 1.96 (5.50%) | 10.32 (42.27%) | 0.53 (1.80%) | |

| Mean Absolute Participant-Level Bias | 3.27 (9.18%) | 5.60 (22.95%) | 3.24 (10.89%) | |

| Mean Absolute Participant-Level Bias of ROM | 3.83 (10.75%) | 8.31 (34.05%) | 3.31 (11.15%) | |

| Mean Absolute Participant-Level Bias at Peak Angles | 2.62 (5.33%) | 6.53 (29.78%) | 2.69 (5.41%) | |

| Error at Peak Joint Angles | −0.03 (−0.06%) | 7.58 (34.59%) | 0.04 (0.07%) | |

| Error at Peak Joint Velocities | 4.8 (3.58%) | 515.9 (182.16%) | 11.9 (11.02%) | |

| Mean Absolute Participant-Level Bias at Peak Joint Velocities | 23.0 (17.20%) | 248.7 (87.80%) | 17.5 (16.20%) | |

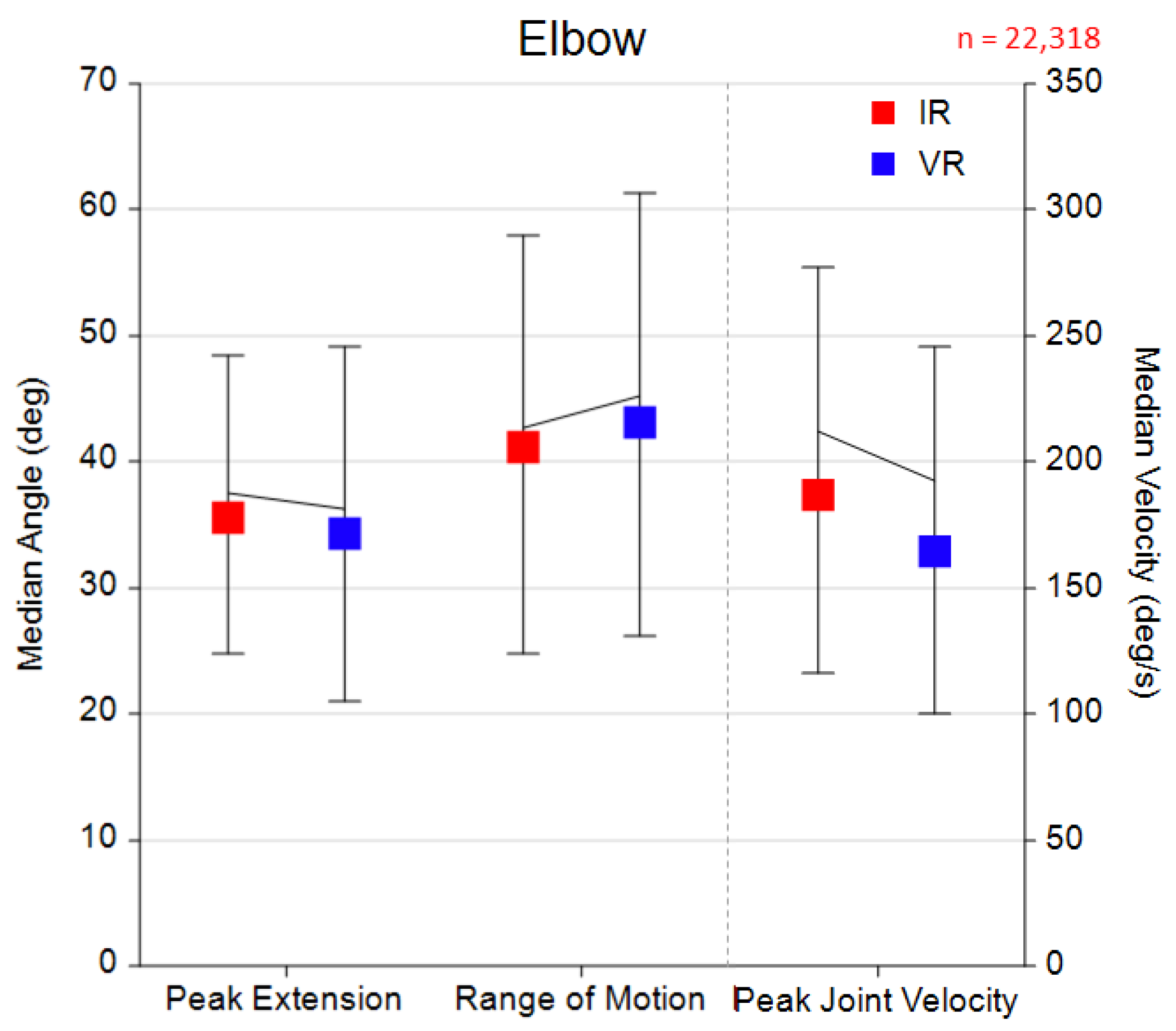

| Elbow | RMSE | 6.10 (14.29%) | 3.68 (16.41%) | 5.33 (12.94%) |

| Median Error | 0.49 (1.14%) | 6.09 (27.16%) | 0.14 (0.34%) | |

| Median Absolute Error | 5.13 (12.03%) | 3.62 (16.13%) | 4.22 (10.25%) | |

| Mean Error | 0.22 (0.52%) | 6.10 (27.21%) | −0.25 (−0.61%) | |

| Absolute Mean Error | 4.77 (11.18%) | 3.81 (16.98%) | 3.95 (9.60%) | |

| Mean Absolute Error | 5.45 (12.77%) | 3.51 (15.64%) | 4.62 (11.22%) | |

| ROM Error | 2.52 (5.89%) | 7.09 (31.61%) | 1.95 (4.74%) | |

| Mean Absolute Participant-Level Bias | 2.92 (6.85%) | 4.38 (19.55%) | 2.81 (6.82%) | |

| Mean Absolute Participant-Level Bias of ROM | 2.60 (6.10%) | 6.48 (28.88%) | 2.21 (5.36%) | |

| Mean Absolute Participant-Level Bias at Peak Joint Angles | 3.28 (8.74%) | 6.44 (35.81%) | 3.06 (8.62%) | |

| Error at Peak Joint Angles | −1.30 (−3.46%) | 7.98 (44.36%) | −1.65 (−4.66%) | |

| Error at Peak Joint Velocities | 19.6 (9.23%) | 149.1 (112.82%) | 20.4 (10.94%) | |

| Mean Absolute Participant-Level Bias at Peak Joint Velocities | 19.6 (9.23%) | 149.1 (112.82%) | 20.4 (10.94%) | |

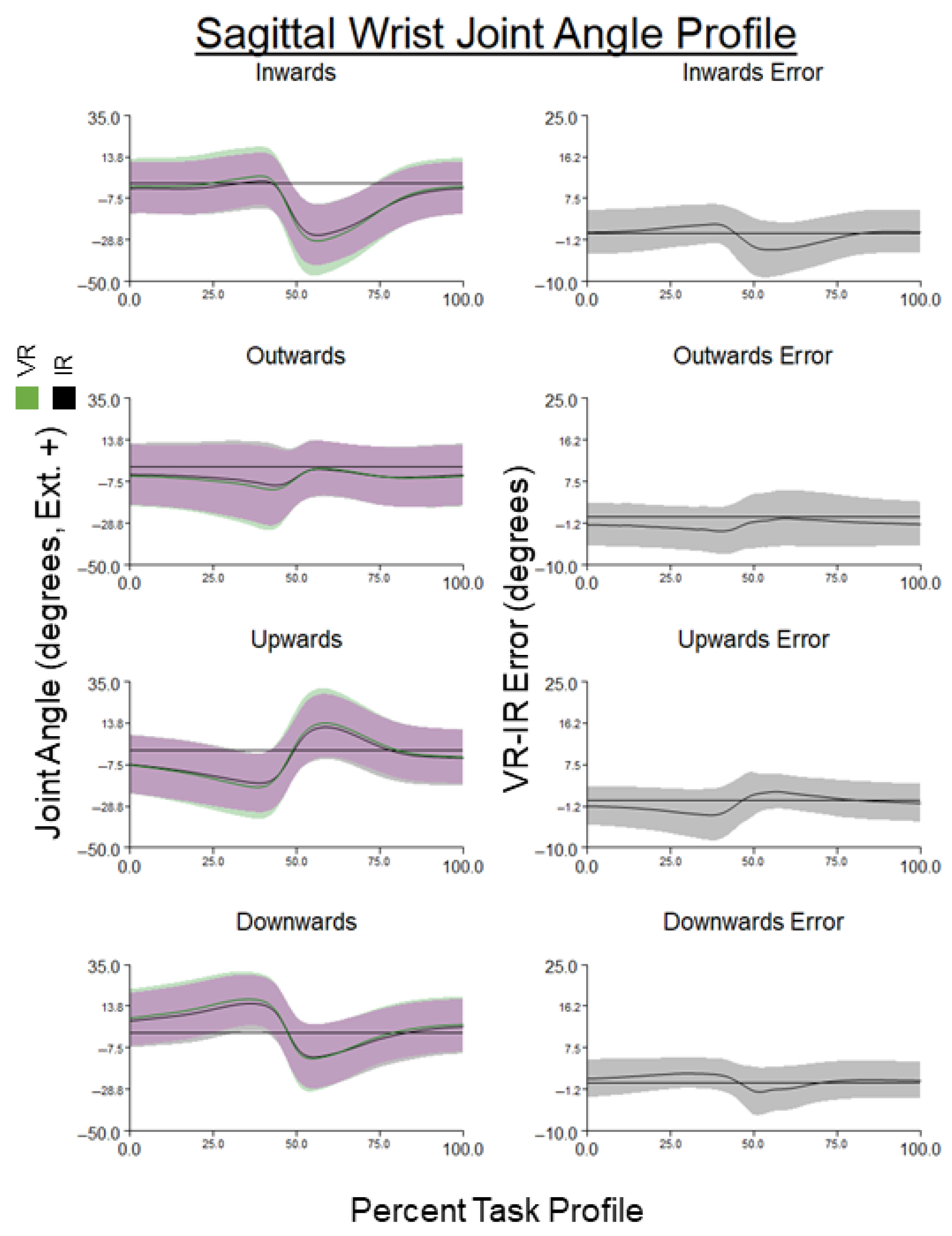

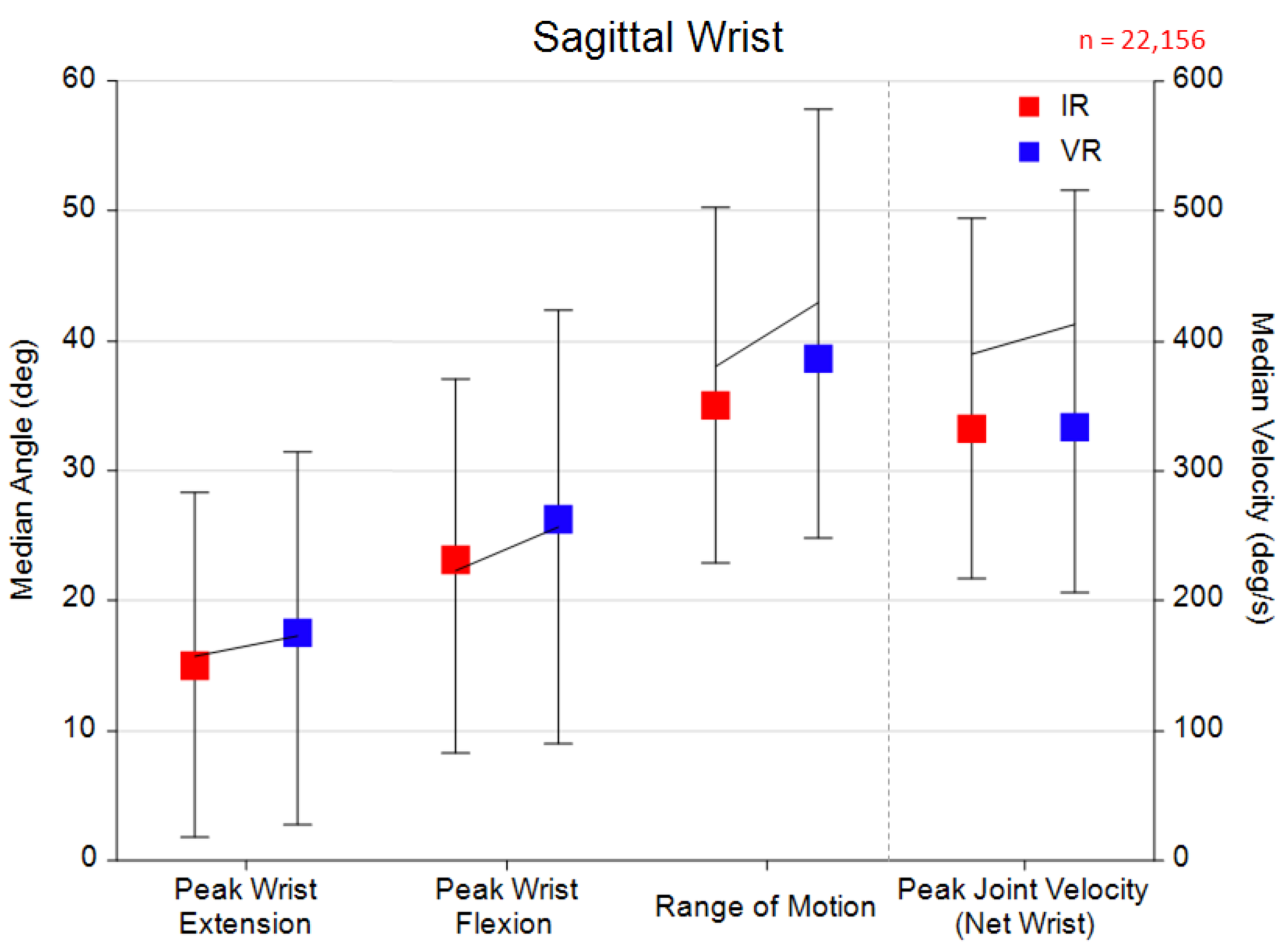

| Sagittal Wrist | RMSE | 6.04 (15.89%) | 4.94 (24.72%) | 4.88 (13.95%) |

| Median Error | −0.52 (−1.37%) | 7.04 (35.22%) | 0.33 (0.95%) | |

| Median Absolute Error | 5.27 (13.87%) | 4.86 (24.32%) | 4.03 (11.50%) | |

| Mean Error | −0.60 (−1.57%) | 6.98 (34.89%) | 0.26 (0.76%) | |

| Absolute Mean Error | 4.89 (12.87%) | 5.01 (25.06%) | 3.66 (10.45%) | |

| Mean Absolute Error | 5.54 (14.58%) | 4.92 (24.59%) | 4.31 (12.30%) | |

| ROM Error | 4.98 (13.10%) | 7.61 (38.06%) | 3.98 (11.37%) | |

| Mean Absolute Participant-Level Bias | 3.15 (8.29%) | 4.76 (23.78%) | 3.01 (8.61%) | |

| Mean Absolute Participant-Level Bias of ROM | 5.11 (13.43%) | 6.00 (29.97%) | 4.90 (14.00%) | |

| Mean Absolute Participant-Level Bias at Peak Joint Angles (Flexion) | −4.42 (−19.77%) | 6.24 (30.89%) | −4.16 (−17.92%) | |

| Mean Absolute Participant-Level Bias at Peak Joint Angles (Extension) | 3.42 (21.79%) | 5.39 (27.19%) | 3.19 (21.24%) | |

| Error at Peak Joint Angles (Flexion) | −3.35 (−15.01%) | 8.91 (44.13%) | −2.16 (−9.30%) | |

| Error at Peak Joint Angles (Extension) | 1.63 (10.37%) | 7.08 (35.73%) | 2.26 (15.04%) | |

| Error at Peak Joint Velocities | −22.7 (−5.83%) | 790.3 (133.04%) | −2.8 (−0.83%) | |

| Mean Absolute Participant-Level Bias at Peak Joint Velocities | 55.2 (14.16%) | 321.2 (54.08%) | 27.8 (8.37%) | |

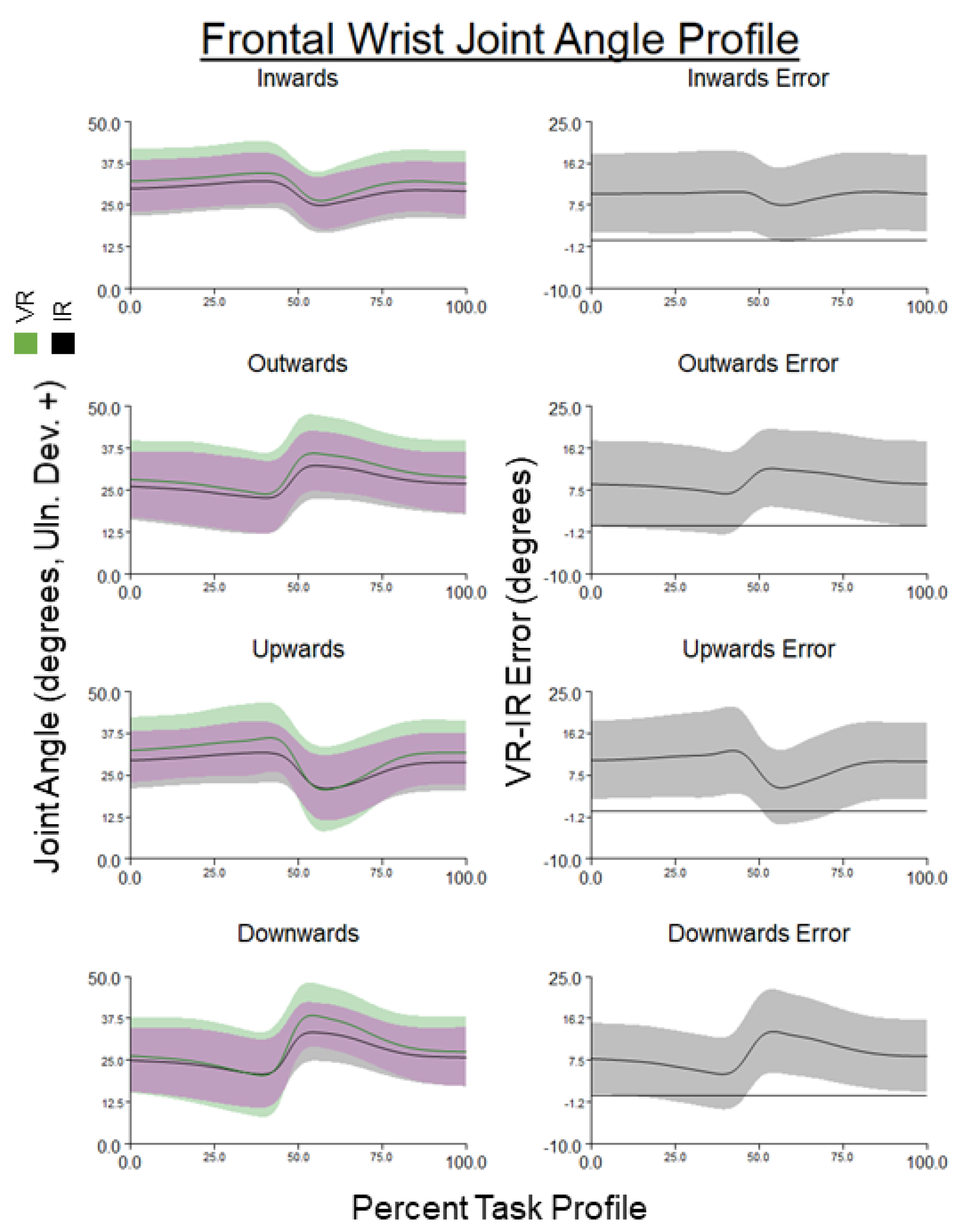

| Frontal Wrist | RMSE | 6.21 (34.40%) | 4.57 (41.63%) | 4.85 (30.80%) |

| Median Error | 3.12 (17.30%) | 6.57 (59.83%) | 2.46 (15.63%) | |

| Median Absolute Error | 5.67 (31.40%) | 4.67 (42.53%) | 4.13 (26.24%) | |

| Mean Error | 3.00 (16.59%) | 6.52 (59.35%) | 2.33 (14.81%) | |

| Absolute Mean Error | 5.39 (29.84%) | 4.74 (43.14%) | 3.92 (24.88%) | |

| Mean Absolute Error | 5.88 (32.55%) | 4.68 (42.57%) | 4.38 (27.83%) | |

| ROM Error | 5.15 (28.52%) | 5.56 (50.60%) | 4.19 (26.60%) | |

| Mean Absolute Participant-Level Bias | −3.89 (−21.57%) | 3.64 (33.13%) | −3.91 (−24.83%) | |

| Mean Absolute Participant-Level Bias of ROM | −5.23 (−28.95%) | 4.41 (40.15%) | −5.00 (−31.77%) | |

| Mean Absolute Participant-Level Bias at Peak Joint Angles (Minimum Ulnar Deviation) | −4.22 (−24.90%) | 4.80 (34.47%) | −4.07 (−22.20%) | |

| Mean Absolute Participant-Level Bias at Peak Joint Angles (Maximum Ulnar Deviation) | −5.33 (−15.22%) | 4.13 (36.66%) | −5.19 (−14.84%) | |

| Error at Peak Joint Angles (Minimum Ulnar Deviation) | 0.32 (1.89%) | 7.66 (54.97%) | 0.20 (1.07%) | |

| Error at Peak Joint Angles (Maximum Ulnar Deviation) | 5.47 (15.62%) | 6.88 (61.01%) | 4.45 (12.73%) | |

| Error at Peak Joint Velocities | −22.7 (−5.83%) | 790.3 (133.04%) | −2.8 (−0.83%) | |

| Mean Absolute Participant-Level Bias at Peak Joint Velocities | 55.2 (14.16%) | 321.2 (54.08%) | 27.8 (8.37%) | |

| Upper Arm | Path Length | −0.020 (−6.10%) | 0.024 (14.32%) | −0.019 (−6.05%) |

| Velocity Error at Peak | −0.084 (−16.82%) | 0.096 (38.56%) | −0.069 (−14.89%) | |

| Forearm | Tracker Path Length Error | −0.014 (−1.85%) | 0.036 (9.42%) | −0.012 (−1.69%) |

| Tracker Velocity Error at Peak | −0.104 (−8.84%) | 0.129 (20.21%) | −0.081 (−7.64%) | |

| Controller | Tracker Path Length Error | −0.007 (−0.50%) | 0.123 (20.74%) | −0.010 (−0.80%) |

| Tracker Velocity Error at Peak | −0.161 (−6.04%) | 0.831 (63.75%) | −0.185 (−7.43%) | |
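The tracker rows report errors in total path length and in peak velocity for the upper arm, forearm, and controller. A minimal sketch of how such quantities could be computed from 3D tracker trajectories follows. It assumes (none of this is stated in the table) that the VR and IR positions are time-synchronized, expressed in a common frame, sampled at a uniform interval `dt`, and that errors are signed as VR minus IR; the units (meters and m/s) are also an assumption. The helpers `path_length`, `peak_speed`, and `tracker_errors` are hypothetical, not the authors' code.

```python
import numpy as np


def path_length(positions):
    """Total path length of an (N, 3) trajectory, in the input's length units."""
    p = np.asarray(positions, dtype=float)
    steps = np.diff(p, axis=0)  # displacement between consecutive samples
    return float(np.linalg.norm(steps, axis=1).sum())


def peak_speed(positions, dt):
    """Largest speed along the trajectory.

    Velocity is estimated with np.gradient: central differences in the
    interior, one-sided differences at the two ends.
    """
    p = np.asarray(positions, dtype=float)
    vel = np.gradient(p, dt, axis=0)  # (N, 3) velocity estimate
    return float(np.linalg.norm(vel, axis=1).max())


def tracker_errors(vr_pos, ir_pos, dt):
    """Hypothetical helper: VR-minus-IR errors for one tracker and task profile."""
    return {
        "path_length_error": path_length(vr_pos) - path_length(ir_pos),
        "peak_velocity_error": peak_speed(vr_pos, dt) - peak_speed(ir_pos, dt),
    }


# Synthetic example: straight-line reach at 0.5 m/s for 2 s, with the
# simulated VR trajectory under-estimating displacement by 5%.
t = np.linspace(0.0, 2.0, 201)
dt = t[1] - t[0]
ir_pos = np.column_stack([0.5 * t, np.zeros_like(t), np.zeros_like(t)])
vr_pos = 0.95 * ir_pos
errs = tracker_errors(vr_pos, ir_pos, dt)
print(errs)
```

For this synthetic reach the IR path is 1.0 m long and the VR path 0.95 m, so the path-length error is about −0.05 and the peak-velocity error about −0.025 (0.475 vs 0.5 m/s). Summing segment lengths, rather than taking the straight-line distance between endpoints, keeps back-and-forth motion in the total.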

Appendix B


| Participant Code | Sex | Age | Diagnoses | SCI Level | CUE-Q | MMT |
|---|---|---|---|---|---|---|
| C02 | Male | 43 | N/A, Healthy Control | N/A | N/A | N/A |
| C03 | Male | 54 | N/A, Healthy Control | N/A | N/A | N/A |
| C04 | Male | 26 | N/A, Healthy Control | N/A | N/A | N/A |
| C05 | Male | 20 | N/A, Healthy Control | N/A | N/A | N/A |
| C06 | Male | 27 | N/A, Healthy Control | N/A | N/A | N/A |
| C07 | Male | 36 | N/A, Healthy Control | N/A | N/A | N/A |
| C08 | Male | 11 | N/A, Healthy Control | N/A | N/A | N/A |
| C10 | Female | 10 | N/A, Healthy Control | N/A | N/A | N/A |
| C11 | Female | 38 | N/A, Healthy Control | N/A | N/A | N/A |
| CP01 | Female | 42 | Cerebral Palsy | N/A | 79% | 98% |
| MS01 | Female | 44 | Multiple Sclerosis | N/A | 84% | 99% |
| MS02 | Female | 51 | Multiple Sclerosis | N/A | 95% | 100% |
| TBI01 | Male | 39 | Post-Traumatic Brain Injury | N/A | 99% | 100% |
| SCI01 | Male | 26 | Spinal Cord Injury | C6-C7 Incomplete | 64% | 98% |
| SCI02 | Male | 51 | Spinal Cord Injury | C6-C7 Incomplete | 70% | 97% |
| SCI03 | Male | 43 | Spinal Cord Injury | C5-C6 Incomplete | 29% | 83% |
| SCI04 | Male | 34 | Spinal Cord Injury | T9-T12 Incomplete | 99% | 100% |
| SCI05 | Female | 36 | Spinal Cord Injury | C3-C6 Incomplete | 82% | 98% |
| SCI06 | Female | 8 | Spinal Cord Injury | T1-T2 Complete | 87% | 98% |
| SCI07 | Male | 11 | Spinal Cord Injury | T11-T12 Complete | 97% | 80% |

| Error Metric | Definition |
|---|---|
| RMSE | RMSE across each task profile |
| Median Error | Median error across each task profile |
| Median Absolute Error | Median value of the absolute error across each task profile |
| Mean Error | Mean error across each task profile |
| Absolute Mean Error | Absolute value of the mean error across each task profile |
| Mean Absolute Error | Mean value of the absolute error across each task profile |
| ROM Error | ROM error for each task profile |
| Error at Peak Joint Angles | Error in peak joint angle across each task profile |
| Error at Peak Joint Velocities | Error in peak joint angular velocity across each task profile |
| Total Tracker Path Length Error | Error in total tracker path length across each task profile |
| Error at Peak Tracker Velocities | Error in peak tracker velocity across each task profile |
| Absolute Participant-Level Mean Bias | |
| Absolute Participant-Level Bias of ROM | |
| Absolute Participant-Level Bias at Peak Angles | |
| Absolute Participant-Level Bias at Peak Joint Velocities | |
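The per-task-profile definitions above can be made concrete with a short sketch. The Python below computes the joint-angle metrics for one joint and one task profile. It assumes, since the table does not say, that the VR and IR angle traces are already time-synchronized on a common uniform sample interval `dt`, that error is signed as VR minus IR (matching the "VR–IR Error" wording of the summary table), that ROM is the maximum minus the minimum angle, and that the peak-angle and peak-velocity errors compare each system's own peak. The function `joint_angle_error_metrics` is hypothetical and is not the authors' processing code; wrist metrics with two peaks (flexion/extension, minimum/maximum ulnar deviation) would also need a minimum-based variant.

```python
import numpy as np


def joint_angle_error_metrics(vr_deg, ir_deg, dt):
    """Hypothetical helper: error metrics for one joint and one task profile.

    vr_deg, ir_deg: 1-D joint-angle traces in degrees (assumed synchronized).
    dt: uniform sample interval in seconds (assumed).
    Errors are signed as VR minus IR (assumed convention).
    """
    vr = np.asarray(vr_deg, dtype=float)
    ir = np.asarray(ir_deg, dtype=float)
    err = vr - ir
    abs_err = np.abs(err)

    # Range of motion of each trace, taken here as max minus min.
    rom_vr = vr.max() - vr.min()
    rom_ir = ir.max() - ir.min()

    # Angular velocity in deg/s via np.gradient (central differences inside).
    vel_vr = np.gradient(vr, dt)
    vel_ir = np.gradient(ir, dt)

    return {
        "rmse": float(np.sqrt(np.mean(err ** 2))),
        "median_error": float(np.median(err)),
        "median_absolute_error": float(np.median(abs_err)),
        "mean_error": float(np.mean(err)),
        "absolute_mean_error": float(abs(np.mean(err))),
        "mean_absolute_error": float(np.mean(abs_err)),
        "rom_error": float(rom_vr - rom_ir),
        # Each system's own maximum angle, compared directly.
        "error_at_peak_angle": float(vr.max() - ir.max()),
        # Largest-magnitude angular velocity of each system, compared directly.
        "error_at_peak_velocity": float(np.abs(vel_vr).max() - np.abs(vel_ir).max()),
    }


# Synthetic example: a 0.5 Hz, ±30° movement where VR reads a constant 2° high.
t = np.arange(0.0, 4.0, 0.01)
ir = 30.0 * np.sin(2.0 * np.pi * 0.5 * t)
vr = ir + 2.0
m = joint_angle_error_metrics(vr, ir, dt=0.01)
print(m)
```

A pure offset like this one shows why several metrics are reported together: RMSE, mean error, and peak-angle error all come out near 2°, while ROM error and peak-velocity error are essentially zero, because a constant bias shifts the trace without changing its shape.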

| Setting | Goal | Error Metric | Error Summary |
|---|---|---|---|
| Research (Participant-Grouped Metrics) | Comparing tracking quality to other systems and studies | RMSE | Median RMSE is below 7° for all joint metrics and below 5° for shoulder elevation and both wrist joint metrics |
| | | VR–IR Error | Median error is below 3° for all joint metrics |
| | | Absolute VR–IR Error | Absolute median error is below 6° for all joint metrics |
| | Large motions | ROM Error | Percent median error is below 30% for frontal wrist, 15% for sagittal wrist, and 5% for all other joint metrics |
| | Fast motions | Error at Peak Joint Velocity | Percent median error is below 12% for all joint metrics, below 10% for shoulder elevation, and below 1% for net wrist |
| | Large joint angles (possibly while holding the position) | Error at Peak Joint Angle | Percent median error is below 23% for all wrist joint metrics and 5% for all other joint metrics |
| Patient-Specific (Participant-Level Bias Metrics) | Large motions | ROM Error | Percent median error is below 32% for frontal wrist and 15% for all other joint metrics |
| | Fast motions | Error at Peak Joint Velocity | Percent median error is below 20% for all joint metrics and below 10% for shoulder elevation and net wrist joint metrics |
| | Large joint angles (possibly while holding the position) | Error at Peak Joint Angle | Percent median error is below 16% for all joint metrics and below 5% for shoulder and elbow joint metrics |
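The research-setting RMSE and ROM-error statements in the summary table can be traced back to the Median column of Appendix A. The short Python check below transcribes those median values (RMSE in degrees; ROM error as the parenthesized percentage) and tests each stated threshold. It only re-checks the reported numbers; the helper `all_below` is hypothetical and nothing here recomputes the study's statistics.

```python
# Median values transcribed from Appendix A, Median column.
median_rmse_deg = {
    "Shoulder Elevation": 3.79,
    "Horizontal Shoulder Adduction": 6.20,
    "Elbow": 5.33,
    "Sagittal Wrist": 4.88,
    "Frontal Wrist": 4.85,
}
median_rom_error_pct = {
    "Shoulder Elevation": 1.74,
    "Horizontal Shoulder Adduction": 1.80,
    "Elbow": 4.74,
    "Sagittal Wrist": 11.37,
    "Frontal Wrist": 26.60,
}


def all_below(values, limit, joints=None):
    """True if |value| < limit for every listed joint (all joints by default)."""
    selected = values.keys() if joints is None else joints
    return all(abs(values[j]) < limit for j in selected)


# "Median RMSE is below 7° for all joint metrics and below 5° for
# shoulder elevation and both wrist joint metrics"
rmse_ok = all_below(median_rmse_deg, 7.0) and all_below(
    median_rmse_deg, 5.0, ["Shoulder Elevation", "Sagittal Wrist", "Frontal Wrist"]
)

# "below 30% for frontal wrist, 15% for sagittal wrist, and 5% for all others"
rom_ok = (
    all_below(median_rom_error_pct, 30.0, ["Frontal Wrist"])
    and all_below(median_rom_error_pct, 15.0, ["Sagittal Wrist"])
    and all_below(
        median_rom_error_pct,
        5.0,
        ["Shoulder Elevation", "Horizontal Shoulder Adduction", "Elbow"],
    )
)
print(rmse_ok, rom_ok)  # True True
```

Both statements hold for the transcribed medians; note that the elbow RMSE (5.33°) is the reason the 5° bound is stated only for shoulder elevation and the wrist.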

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Barclay, S.A.; Brown, T.; Hill, T.M.; Smith, A.; Reissman, T.; Kinney, A.L.; Reissman, M.E. Characterization of Upper Extremity Joint Angle Error for Virtual Reality Motion Capture Compared to Infrared Motion Capture. Appl. Sci. 2025, 15, 12081. https://doi.org/10.3390/app152212081

Barclay SA, Brown T, Hill TM, Smith A, Reissman T, Kinney AL, Reissman ME. Characterization of Upper Extremity Joint Angle Error for Virtual Reality Motion Capture Compared to Infrared Motion Capture. Applied Sciences. 2025; 15(22):12081. https://doi.org/10.3390/app152212081

Chicago/Turabian Style: Barclay, Skyler A., Trent Brown, Tessa M. Hill, Ann Smith, Timothy Reissman, Allison L. Kinney, and Megan E. Reissman. 2025. "Characterization of Upper Extremity Joint Angle Error for Virtual Reality Motion Capture Compared to Infrared Motion Capture" Applied Sciences 15, no. 22: 12081. https://doi.org/10.3390/app152212081

APA Style: Barclay, S. A., Brown, T., Hill, T. M., Smith, A., Reissman, T., Kinney, A. L., & Reissman, M. E. (2025). Characterization of Upper Extremity Joint Angle Error for Virtual Reality Motion Capture Compared to Infrared Motion Capture. Applied Sciences, 15(22), 12081. https://doi.org/10.3390/app152212081