Comparative Analysis of Self-Labeled Algorithms for Predicting MOOC Dropout: A Case Study

Abstract

1. Introduction

- We present a systematic evaluation of semi-supervised learning methods, comparing Self-Training, Co-Forest and CoBC across multiple performance metrics;

- We provide an empirical analysis of how ensemble-based methods improve classification accuracy under varying levels of labeled data availability;

- We discuss the trade-off between performance gains and computational efficiency, offering practical insights for practitioners operating under resource constraints.

2. Related Work

3. Semi-Supervised Learning

- Self-training [23] is one of the earliest and most fundamental SSL techniques and often serves as a baseline for evaluating other self-labeled approaches. The method begins by training a base classifier on a small set of labeled examples (L). The trained model then predicts labels for the unlabeled instances (U), and the most confidently classified samples are moved from U to L for retraining. This iterative process continues until a stopping criterion is met, for example when U is exhausted or a maximum number of iterations is reached. Self-training has been successfully applied in diverse domains, including text classification, bioinformatics and EDM, owing to its conceptual simplicity and its ability to leverage abundant unlabeled data. However, a key limitation of this approach is the potential propagation of labeling errors, which can degrade model performance over iterations [24].

- Setred (Self-training with editing) enhances the robustness of self-training by introducing a noise-filtering mechanism that mitigates the risk of error propagation [25]. After pseudo-labels are assigned, Setred applies an editing phase, commonly based on the k-NN algorithm, to evaluate the local consistency of pseudo-labeled samples. Instances whose labels disagree with the majority of their neighbors are removed before the next training iteration. By filtering out unreliable pseudo-labels, Setred produces cleaner training sets and more stable classifiers, which is particularly useful in noisy or imbalanced data contexts such as EDM, where dropout cases or minority outcomes can distort training.

- Co-training [26] is a seminal disagreement-based SSL method that assumes each instance can be described by two distinct and conditionally independent feature subsets (views). Two classifiers are trained on the labeled data, one per view. Each classifier then predicts labels for the unlabeled set, and the most confident predictions from one classifier are used to augment the labeled set of the other. This mutual exchange of high-confidence examples continues iteratively until a stopping criterion is reached. Co-training has inspired numerous extensions and remains a foundational paradigm for multi-view learning and ensemble-based SSL [27].

- Co-Training by Committee (CoBC) [28] extends the co-training concept by combining it with ensemble diversity. A committee of classifiers, each trained on a randomly sampled subset of the labeled data or of the feature space, iteratively collaborates to label unlabeled instances. Only pseudo-labels with high confidence and consensus among the committee members are added to the labeled dataset, reducing the risk of reinforcing incorrect predictions. This ensemble-based mechanism promotes robustness and minimizes noise propagation, making CoBC suitable for tasks involving complex, high-dimensional data representations.

- Democratic Co-Learning, introduced by Zhou and Goldman [29], is a semi-supervised ensemble learning framework that generalizes the co-training paradigm by employing multiple diverse classifiers that collaboratively label unlabeled data through a majority-voting mechanism. Unlike traditional co-training, which typically relies on two views or classifiers, Democratic Co-Learning constructs a committee of classifiers, each potentially using different learning algorithms or feature subsets, that iteratively exchange pseudo-labels based on collective confidence rather than pairwise agreement. During each iteration, unlabeled instances receiving consistent predictions from the majority of the committee are incorporated into the labeled set, reinforcing reliable patterns while mitigating the influence of noisy or biased individual models. This democratic voting strategy enhances robustness, reduces overfitting to erroneous pseudo-labels and performs effectively even when the assumption of conditional independence between feature views does not hold.

- Rasco (Random subspace co-training) [30] and Rel-Rasco (Relevant Random subspace co-training) [31] are multi-view co-training variants that exploit random subspace and feature selection strategies to create diverse learning views. In Rasco, multiple classifiers are trained on randomly generated feature subspaces, each representing a distinct view of the data. Their predictions on unlabeled instances are aggregated to determine consensus labels, facilitating complementary learning across subspaces. Rel-Rasco enhances this approach by constructing subspaces based on feature relevance scores, typically computed via mutual information between features and class labels. This systematic subspace generation increases interpretability and improves the reliability of pseudo-labels, particularly in structured and high-dimensional datasets.

- Co-Forest (Co-training Random Forest) [32] integrates the co-training framework into the ensemble learning paradigm of Random Forests [33]. Multiple decision trees are trained on different labeled subsets and, during each iteration, each tree labels a portion of the unlabeled data. High-confidence predictions are shared among trees, effectively expanding their training sets. This collaborative labeling process enhances model diversity and generalization, preserving the strengths of RF (robustness and low variance) while leveraging unlabeled data to further improve classification performance.

- Tri-training [34] is a multiple-classifier SSL approach that eliminates the need for explicit multi-view assumptions. It employs three classifiers trained on the labeled data and iteratively refines them using unlabeled examples. When two classifiers agree on the label of an unlabeled instance, that instance is pseudo-labeled and added to the training set of the third classifier. This majority-voting mechanism reduces labeling errors and encourages consensus-driven learning. Tri-training is computationally efficient and has demonstrated competitive performance in settings where data are noisy, imbalanced, or limited, conditions frequently encountered in EDM and dropout prediction tasks.

- Tri-Training with Data Editing (De-Tri-Training), proposed by Deng and Guo [35], extends the classical tri-training algorithm by introducing an additional data-cleaning mechanism to improve pseudo-label reliability and model stability. Like tri-training, the method employs three classifiers that iteratively label unlabeled data through a consensus-based strategy, where two agreeing classifiers assign pseudo-labels for retraining the third. The key innovation lies in its editing phase, during which potentially mislabeled or noisy instances are detected and removed using neighborhood-based criteria, such as k-NN consistency checks. This selective filtering prevents the accumulation of erroneous pseudo-labels that can degrade performance in later iterations.
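To make the basic self-labeling loop concrete, the following is a minimal sketch of self-training as described at the top of this list. It is not the implementation evaluated in this study. It assumes scikit-learn and synthetic data from `make_classification`; the helper name `self_train`, the depth-limited decision tree and the roughly 10% labeled split are illustrative choices. The confidence threshold of 0.75 and the cap of 10 iterations are borrowed from the Self-Training defaults listed in the configuration table.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier


def self_train(X_lab, y_lab, X_unlab, threshold=0.75, max_iter=10):
    """Hand-rolled self-training loop (illustrative sketch only)."""
    X_lab, y_lab, X_unlab = X_lab.copy(), y_lab.copy(), X_unlab.copy()
    # A depth limit keeps leaf probabilities from all being exactly 0 or 1.
    clf = DecisionTreeClassifier(max_depth=3, random_state=0).fit(X_lab, y_lab)
    for _ in range(max_iter):
        if len(X_unlab) == 0:
            break  # stopping criterion: unlabeled pool exhausted
        proba = clf.predict_proba(X_unlab)
        confident = proba.max(axis=1) >= threshold
        if not confident.any():
            break  # stopping criterion: nothing confident enough to add
        pseudo = clf.classes_[proba.argmax(axis=1)]
        # Move the confidently pseudo-labeled samples from U to L and retrain.
        X_lab = np.vstack([X_lab, X_unlab[confident]])
        y_lab = np.concatenate([y_lab, pseudo[confident]])
        X_unlab = X_unlab[~confident]
        clf = DecisionTreeClassifier(max_depth=3, random_state=0).fit(X_lab, y_lab)
    return clf, len(y_lab)


# Synthetic stand-in data; the MOOC dataset is not used here.
X, y = make_classification(n_samples=500, n_features=10, random_state=0)
rng = np.random.default_rng(0)
labeled = rng.random(len(y)) < 0.1  # roughly 10% labeled, chosen arbitrarily
model, n_train = self_train(X[labeled], y[labeled], X[~labeled])
```

The other methods in the list differ mainly in who supplies the pseudo-labels: instead of a single model's own confidence, they rely on a second view, on agreement among several classifiers, or on an additional editing step that discards suspicious pseudo-labels.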

4. Dataset Description

Dataset Attributes

- Demographics Subset: This subset comprises 10 primarily categorical variables describing participants’ backgrounds, including mother tongue, education level, employment status, current occupation, years of professional experience, working hours per day, advanced technical English skills, advanced digital skills, prior MOOC experience and average study time per week. These attributes offer valuable insights into the diversity and prior preparedness of the learner population, aligning with established findings on demographic influences in MOOC engagement and success [38].

- Performance Subset: Containing 4 numerical variables, this subset reflects participants’ early academic outcomes, such as study hours per week, Quiz1 score (Week1-Unit1), Quiz2 score (Week1-Unit2) and average grade from the first two course modules. These indicators enable the exploration of correlations between initial performance and overall course completion, a relationship frequently highlighted in LA within MOOCs [39].

- Activity Subset: This subset includes 7 numerical variables that quantify learners’ engagement on the MOOC platform, such as the number of module1 discussions, module1 posts, module1 forum views, module1 connections per day, announcements forum views, introductory forum views, discussion participations and total time (minutes) spent on learning activities in module1. These metrics serve as behavioral proxies for engagement intensity and learning strategies, which are strong predictors of persistence and dropout in online learning environments [40].

5. Experimental Design

5.1. Configuration Parameters

5.2. Evaluation Metrics

- The F1-score is particularly valuable in scenarios with class imbalance, as it emphasizes the model’s ability to correctly detect minority classes while penalizing excessive false positives or false negatives [44]. In dropout prediction, for instance, where the number of students who drop out is typically smaller than those who complete the course, the F1-score offers a more balanced assessment of model performance than accuracy alone.

- The MCC yields a value between −1 and +1, where +1 indicates perfect prediction, 0 indicates performance no better than random guessing and −1 indicates total disagreement between predictions and true labels. Unlike accuracy and F1-score, the MCC remains reliable even in highly imbalanced datasets, because it takes into account true positives, true negatives, false positives and false negatives alike. For this reason, MCC is increasingly recommended as a preferred metric in binary classification problems, particularly in EDM and health-related predictive modeling [46].
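The contrast between these metrics is easiest to see on a small worked example. The sketch below computes accuracy, F1-score and MCC directly from confusion-matrix counts using their standard definitions. The function name `binary_metrics` and the counts are hypothetical and are not taken from the study's data; returning 0 when a denominator is zero is a common convention, adopted here as an assumption.

```python
from math import sqrt


def binary_metrics(tp, fp, fn, tn):
    """Accuracy, F1 and MCC from the four confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    f1_den = 2 * tp + fp + fn
    f1 = 2 * tp / f1_den if f1_den else 0.0
    mcc_den = sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / mcc_den if mcc_den else 0.0
    return accuracy, f1, mcc


# Hypothetical cohort: 100 learners, 10 of whom drop out (positive class).
# Case 1: a model that predicts "completes" for everyone.
acc, f1, mcc = binary_metrics(tp=0, fp=0, fn=10, tn=90)

# Case 2: a model that finds 8 of the 10 dropouts with 5 false alarms.
acc2, f1_2, mcc2 = binary_metrics(tp=8, fp=5, fn=2, tn=85)
```

In the first case accuracy is 0.90 even though no dropout is detected, while F1 and MCC are both 0. In the second case accuracy rises only slightly, to 0.93, whereas F1 and MCC increase substantially, which is the behavior that motivates reporting them alongside accuracy.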

6. Results and Analysis

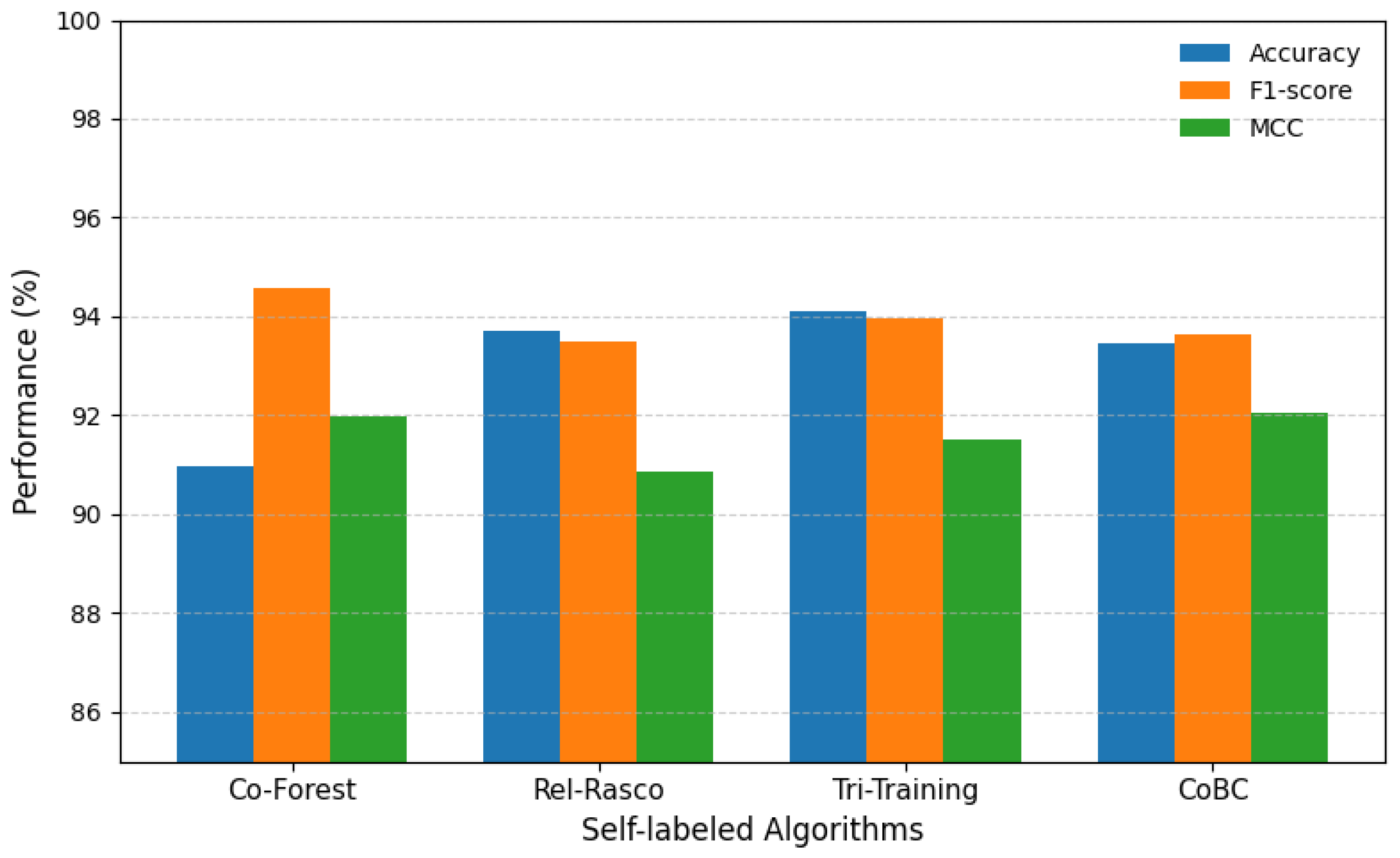

6.1. Overall Performance Trends

6.2. Accuracy Analysis

6.3. F1-Score Analysis

6.4. Matthews Correlation Coefficient (MCC) Analysis

7. Discussion

- CoBC demonstrated the most consistent performance, combining high mean accuracy with low variability. Rel-Rasco also maintained relatively stable results, though slightly below CoBC. Co-Forest, while competitive in some cases, exhibited greater variance across folds. Overall, ensemble and multi-view algorithms tend to outperform simpler approaches like Self-Training, as they can better leverage diversity across learners and feature representations [47].

- Tri-Training and CoBC adapted particularly well when labeled data were scarce, supporting the effectiveness of consensus-based and committee-driven labeling strategies in minimizing error propagation.

- Setred maintained moderate but consistent performance, indicating the value of its noise-filtering component but suggesting limited scalability benefits in this dataset’s structure.

- De-Tri-Training’s weak results emphasize that additional editing or data-pruning layers may not always be beneficial, especially when the base models already handle noise adequately.

7.1. Summary of Findings

7.1.1. Performance–Efficiency Trade-Off

7.1.2. Pedagogical Implications

7.2. Methodological Significance

8. Conclusions

8.1. Limitations

8.2. Practical Implications

8.3. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Yuan, L.; Powell, S. MOOCs and open education: Implications for Higher Education: A white paper. JISC Cetis 2013, 1–21.

- Feng, W.; Tang, J.; Liu, T.X. Understanding dropouts in MOOCs. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 517–524.

- Kizilcec, R.F.; Piech, C.; Schneider, E. Deconstructing disengagement: Analyzing learner subpopulations in massive open online courses. In Proceedings of the Third International Conference on Learning Analytics and Knowledge, Leuven, Belgium, 8–12 April 2013; pp. 170–179.

- Liyanagunawardena, T.R.; Adams, A.A.; Williams, S.A. MOOCs: A systematic study of the published literature 2008–2012. Int. Rev. Res. Open Distrib. Learn. 2013, 14, 202–227.

- Moreno-Marcos, P.M.; Alario-Hoyos, C.; Muñoz-Merino, P.J.; Estevez-Ayres, I.; Kloos, C.D. A learning analytics methodology for understanding social interactions in MOOCs. IEEE Trans. Learn. Technol. 2018, 12, 442–455.

- Khalil, H.; Ebner, M. MOOCs completion rates and possible methods to improve retention - A literature review. In Proceedings of the EdMedia 2014–World Conference on Educational Media and Technology, Tampere, Finland, 23–26 June 2014; Viteli, J., Leikomaa, M., Eds.; Association for the Advancement of Computing in Education (AACE): Waynesville, NC, USA, 2014; pp. 1305–1313.

- Chen, J.; Fang, B.; Zhang, H.; Xue, X. A systematic review for MOOC dropout prediction from the perspective of machine learning. Interact. Learn. Environ. 2024, 32, 1642–1655.

- Kloft, M.; Stiehler, F.; Zheng, Z.; Pinkwart, N. Predicting MOOC dropout over weeks using machine learning methods. In Proceedings of the EMNLP 2014 Workshop on Analysis of Large Scale Social Interaction in MOOCs, Doha, Qatar, 25–29 October 2014; pp. 60–65.

- Zhu, X.; Goldberg, A. Introduction to Semi-Supervised Learning; Morgan & Claypool Publishers: San Rafael, CA, USA, 2009.

- Goel, Y.; Goyal, R. On the effectiveness of self-training in MOOC dropout prediction. Open Comput. Sci. 2020, 10, 246–258.

- Dalipi, F.; Imran, A.S.; Kastrati, Z. MOOC dropout prediction using machine learning techniques: Review and research challenges. In Proceedings of the 2018 IEEE Global Engineering Education Conference (EDUCON), Canary Islands, Spain, 18–20 April 2018; pp. 1007–1014.

- Kostopoulos, G.; Kotsiantis, S.; Pintelas, P. Estimating student dropout in distance higher education using semi-supervised techniques. In Proceedings of the 19th Panhellenic Conference on Informatics, Athens, Greece, 1–3 October 2015; pp. 38–43.

- Li, W.; Gao, M.; Li, H.; Xiong, Q.; Wen, J.; Wu, Z. Dropout prediction in MOOCs using behavior features and multi-view semi-supervised learning. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; pp. 3130–3137.

- Zhou, S.; Cao, L.; Zhang, R.; Sun, G. M-ISFCM: A semisupervised method for anomaly detection of MOOC learning behavior. In Proceedings of the International Conference of Pioneering Computer Scientists, Engineers and Educators, Chengdu, China, 19–22 August 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 323–336.

- Cam, H.N.T.; Sarlan, A.; Arshad, N.I. A hybrid model integrating recurrent neural networks and the semi-supervised support vector machine for identification of early student dropout risk. PeerJ Comput. Sci. 2024, 10, e2572.

- Psathas, G.; Chatzidaki, T.K.; Demetriadis, S.N. Predictive modeling of student dropout in MOOCs and self-regulated learning. Computers 2023, 12, 194.

- Chi, Z.; Zhang, S.; Shi, L. Analysis and prediction of MOOC learners’ dropout behavior. Appl. Sci. 2023, 13, 1068.

- Chapelle, O.; Scholkopf, B.; Zien, A. Semi-Supervised Learning (Chapelle, O. et al., Eds.; 2006) [Book reviews]. IEEE Trans. Neural Netw. 2009, 20, 542.

- Gui, J.; Chen, T.; Zhang, J.; Cao, Q.; Sun, Z.; Luo, H.; Tao, D. A survey on self-supervised learning: Algorithms, applications, and future trends. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 9052–9071.

- Kostopoulos, G.; Kotsiantis, S. Exploiting semi-supervised learning in the education field: A critical survey. In Advances in Machine Learning/Deep Learning-Based Technologies: Selected Papers in Honour of Professor Nikolaos G. Bourbakis–Vol. 2; Springer: Cham, Switzerland, 2021; pp. 79–94.

- Triguero, I.; García, S.; Herrera, F. Self-labeled techniques for semi-supervised learning: Taxonomy, software and empirical study. Knowl. Inf. Syst. 2015, 42, 245–284.

- Arazo, E.; Ortego, D.; Albert, P.; O’Connor, N.E.; McGuinness, K. Pseudo-labeling and confirmation bias in deep semi-supervised learning. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–8.

- Yarowsky, D. Unsupervised word sense disambiguation rivaling supervised methods. In Proceedings of the 33rd Annual Meeting of the Association for Computational Linguistics, Cambridge, MA, USA, 26–30 June 1995; pp. 189–196.

- Zou, Y.; Yu, Z.; Liu, X.; Kumar, B.; Wang, J. Confidence regularized self-training. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 5982–5991.

- Li, M.; Zhou, Z.H. SETRED: Self-training with editing. In Proceedings of the Pacific-Asia Conference on Knowledge Discovery and Data Mining, Hanoi, Vietnam, 18–20 May 2005; Springer: Berlin/Heidelberg, Germany, 2005; pp. 611–621.

- Blum, A.; Mitchell, T. Combining labeled and unlabeled data with co-training. In Proceedings of the Eleventh Annual Conference on Computational Learning Theory, Madison, WI, USA, 24–26 July 1998; pp. 92–100.

- Zhou, Z.H.; Li, M. Semi-supervised learning by disagreement. Knowl. Inf. Syst. 2010, 24, 415–439.

- Hady, M.F.A.; Schwenker, F. Co-training by committee: A new semi-supervised learning framework. In Proceedings of the 2008 IEEE International Conference on Data Mining Workshops, Pisa, Italy, 15–19 December 2008; pp. 563–572.

- Zhou, Y.; Goldman, S. Democratic co-learning. In Proceedings of the 16th IEEE International Conference on Tools with Artificial Intelligence, Boca Raton, FL, USA, 15–17 November 2004; pp. 594–602.

- Wang, J.; Luo, S.W.; Zeng, X.H. A random subspace method for co-training. In Proceedings of the 2008 IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence), Hong Kong, China, 1–8 June 2008; pp. 195–200.

- Yaslan, Y.; Cataltepe, Z. Co-training with relevant random subspaces. Neurocomputing 2010, 73, 1652–1661.

- Li, M.; Zhou, Z.H. Improve computer-aided diagnosis with machine learning techniques using undiagnosed samples. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2007, 37, 1088–1098.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Zhou, Z.H.; Li, M. Tri-training: Exploiting unlabeled data using three classifiers. IEEE Trans. Knowl. Data Eng. 2005, 17, 1529–1541.

- Deng, C.; Guo, M.Z. Tri-training and data editing based semi-supervised clustering algorithm. In Proceedings of the Mexican International Conference on Artificial Intelligence, Apizaco, Mexico, 13–17 November 2006; Springer: Berlin/Heidelberg, Germany, 2006; pp. 641–651.

- Panagiotakopoulos, T.; Lazarinis, F.; Stefani, A.; Kameas, A. A competency-based specialization course for smart city professionals. Res. Pract. Technol. Enhanc. Learn. 2024, 19, 13.

- Panagiotakopoulos, T.; Kotsiantis, S.; Kostopoulos, G.; Iatrellis, O.; Kameas, A. Early dropout prediction in MOOCs through supervised learning and hyperparameter optimization. Electronics 2021, 10, 1701.

- Kizilcec, R.F.; Halawa, S. Attrition and achievement gaps in online learning. In Proceedings of the Second (2015) ACM Conference on Learning @ Scale, Vancouver, BC, Canada, 14–18 March 2015; pp. 57–66.

- Joksimović, S.; Poquet, O.; Kovanović, V.; Dowell, N.; Mills, C.; Gašević, D.; Dawson, S.; Graesser, A.C.; Brooks, C. How do we model learning at scale? A systematic review of research on MOOCs. Rev. Educ. Res. 2018, 88, 43–86.

- Crossley, S.; Dascalu, M.; McNamara, D.S.; Baker, R.; Trausan-Matu, S. Predicting Success in Massive Open Online Courses (MOOCs) Using Cohesion Network Analysis; International Society of the Learning Sciences: Philadelphia, PA, USA, 2017.

- Romero, C.; Ventura, S. Educational data mining and learning analytics: An updated survey. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2020, 10, e1355.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Garrido-Labrador, J.L.; Maudes-Raedo, J.M.; Rodríguez, J.J.; García-Osorio, C.I. SSLearn: A semi-supervised learning library for Python. SoftwareX 2025, 29, 102024.

- Sokolova, M.; Lapalme, G. A systematic analysis of performance measures for classification tasks. Inf. Process. Manag. 2009, 45, 427–437.

- He, H.; Garcia, E.A. Learning from imbalanced data. IEEE Trans. Knowl. Data Eng. 2009, 21, 1263–1284.

- Chicco, D.; Jurman, G. The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation. BMC Genom. 2020, 21, 6.

- Kostopoulos, G.; Fazakis, N.; Kotsiantis, S.; Dimakopoulos, Y. Enhancing Semi-Supervised Learning in Educational Data Mining Through Synthetic Data Generation Using Tabular Variational Autoencoder. Algorithms 2025, 18, 663.

- Tarvainen, A.; Valpola, H. Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Sohn, K.; Berthelot, D.; Carlini, N.; Zhang, Z.; Zhang, H.; Raffel, C.A.; Cubuk, E.D.; Kurakin, A.; Li, C.L. Fixmatch: Simplifying semi-supervised learning with consistency and confidence. Adv. Neural Inf. Process. Syst. 2020, 33, 596–608.

- Berthelot, D.; Carlini, N.; Goodfellow, I.; Papernot, N.; Oliver, A.; Raffel, C.A. Mixmatch: A holistic approach to semi-supervised learning. In Proceedings of the 33rd Conference on Neural Information Processing Systems (NeurIPS 2019), Vancouver, BC, Canada, 8–14 December 2019.

- Song, Z.; Yang, X.; Xu, Z.; King, I. Graph-based semi-supervised learning: A comprehensive review. IEEE Trans. Neural Netw. Learn. Syst. 2022, 34, 8174–8194.

| Method | Default Base Estimator(s) | Key Hyperparameters (Defaults) |
|---|---|---|
| Self-Training | DecisionTreeClassifier * | threshold = 0.75; criterion = threshold; max_iter = 10 |
| Setred | KNeighborsClassifier (k = 3) | max_iterations = 40; poolsize = 0.25; rejection_threshold = 0.05 |
| Co-Training | DecisionTreeClassifier * | max_iterations = 30; poolsize = 75; threshold = 0.5; force_second_view = True |
| CoBC | BaggingClassifier | max_iterations = 100; poolsize = 100; min_instances_for_class = 3 |
| Democratic Co-Learning | DecisionTreeClassifier *, GaussianNB, KNeighborsClassifier (k = 3) | alpha = 0.95; q_exp = 2; expand_only_mislabeled = True |
| Rasco | DecisionTreeClassifier * | max_iterations = 10; n_estimators = 30; subspace_size = None |
| Rel-Rasco | DecisionTreeClassifier * | max_iterations = 10; n_estimators = 30; subspace_size = None |
| Co-Forest | DecisionTreeClassifier * | n_estimators = 7; threshold = 0.75; bootstrap = True |
| Tri-Training | DecisionTreeClassifier * | collection_size = 3; max_iterations = None |
| De-Tri-Training | DecisionTreeClassifier * (uses KNeighborsClassifier (k = 3) internally) | same as Tri-Training + depuration step |

| SSL Algorithms | 2% | 3% | 4% | 20% | 40% | 100% |
|---|---|---|---|---|---|---|
| Self-Training | 87.36% | 89.90% | 92.04% | 94.14% | 94.25% | 91.43% |
| Setred | 88.73% | 88.94% | 89.75% | 94.99% | 94.88% | 90.30% |
| Co-Training | 87.32% | 90.21% | 93.50% | 93.82% | 94.46% | 92.76% |
| CoBC | 90.46% | 92.60% | 93.46% | 95.10% | 95.20% | 94.83% |
| Democratic Co-Learning | 80.21% | 82.38% | 84.32% | 92.32% | 94.78% | 88.11% |
| Rasco | 86.91% | 91.73% | 93.05% | 94.56% | 94.46% | 93.82% |
| Rel-Rasco | 89.29% | 91.83% | 93.71% | 95.20% | 95.31% | 93.13% |
| Co-Forest | 90.97% | 90.72% | 93.76% | 95.20% | 94.99% | 94.03% |
| Tri-Training | 87.52% | 91.53% | 94.11% | 94.14% | 94.14% | 93.82% |
| De-Tri-Training | 72.83% | 72.83% | 72.88% | 72.70% | 72.80% | 72.75% |

| SSL Algorithms | 2% | 3% | 4% | 20% | 40% | 100% |
|---|---|---|---|---|---|---|
| Self-Training | 85.17% | 89.04% | 92.75% | 94.04% | 94.25% | 94.40% |
| Setred | 88.99% | 88.94% | 90.13% | 95.00% | 94.88% | 95.27% |
| Co-Training | 88.77% | 90.29% | 92.92% | 93.72% | 94.14% | 94.48% |
| CoBC | 88.02% | 91.65% | 93.64% | 94.84% | 95.00% | 95.55% |
| Democratic Co-Learning | 80.85% | 83.83% | 85.82% | 93.10% | 94.68% | 94.41% |
| Rasco | 87.69% | 91.86% | 93.45% | 94.47% | 94.73% | 95.57% |
| Rel-Rasco | 88.11% | 92.79% | 93.51% | 95.11% | 95.31% | 95.41% |
| Co-Forest | 89.07% | 91.92% | 94.58% | 95.11% | 94.74% | 94.81% |
| Tri-Training | 89.45% | 89.64% | 93.96% | 94.47% | 94.26% | 94.67% |
| De-Tri-Training | 69.68% | 70.03% | 69.67% | 70.29% | 70.34% | 69.61% |

| SSL Algorithms | 2% | 3% | 4% | 20% | 40% | 100% |
|---|---|---|---|---|---|---|
| Self-Training | 81.07% | 86.30% | 89.64% | 91.50% | 92.31% | 91.96% |
| Setred | 83.97% | 84.25% | 85.77% | 93.15% | 92.98% | 93.20% |
| Co-Training | 82.29% | 87.23% | 88.05% | 91.48% | 91.95% | 92.04% |
| CoBC | 84.55% | 87.59% | 92.04% | 93.25% | 93.50% | 93.87% |
| Democratic Co-Learning | 72.42% | 76.32% | 80.24% | 91.10% | 92.67% | 92.25% |
| Rasco | 84.42% | 87.84% | 90.36% | 92.89% | 93.38% | 93.28% |
| Rel-Rasco | 83.94% | 88.56% | 90.88% | 93.47% | 93.90% | 93.39% |
| Co-Forest | 86.16% | 88.07% | 91.99% | 93.09% | 93.03% | 92.83% |
| Tri-Training | 82.33% | 86.88% | 91.50% | 92.16% | 92.01% | 92.13% |
| De-Tri-Training | 59.08% | 58.82% | 58.65% | 60.37% | 60.33% | 58.88% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Raftopoulos, G.; Kostopoulos, G.; Davrazos, G.; Panagiotakopoulos, T.; Kotsiantis, S.; Kameas, A. Comparative Analysis of Self-Labeled Algorithms for Predicting MOOC Dropout: A Case Study. Appl. Sci. 2025, 15, 12025. https://doi.org/10.3390/app152212025