Artificial Intelligence in Medicine and Healthcare: A Complexity-Based Framework for Model–Context–Relation Alignment

Abstract

1. Introduction

1.1. AI in Medicine and Health Sciences

- AI for Medicine, oriented toward the individual patient, diagnosis, therapy, and precision medicine;

- AI for Healthcare, focused on population health, prevention, service planning, and the sustainability of healthcare systems.

1.2. Complexity and Adaptive Systems in Medicine and Healthcare

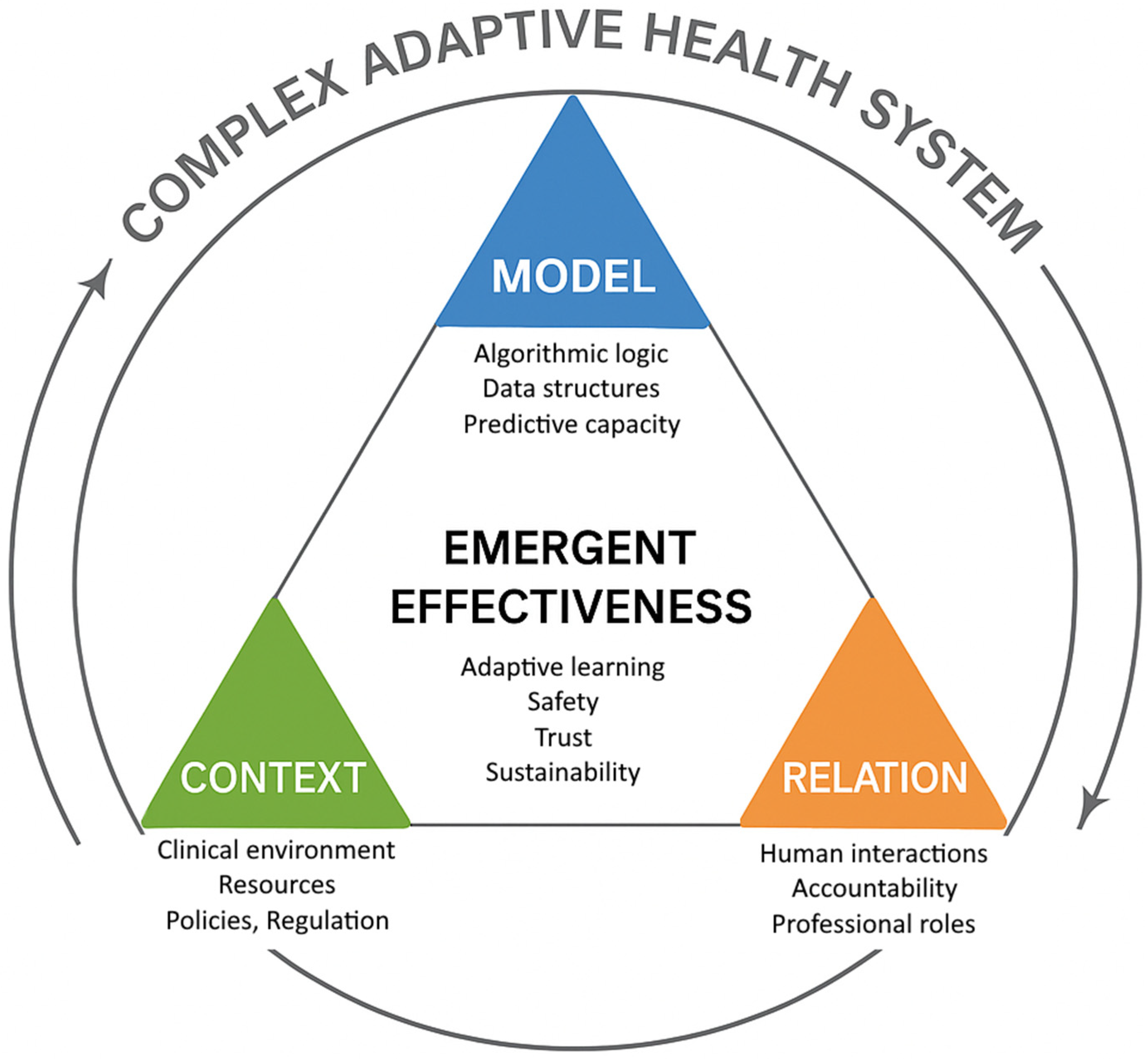

- Model: the algorithmic representation and computational output;

- Context: the operational, regulatory, and organizational environment of application;

- Relation: the human and institutional interactions that guide its use.

1.3. Toward a Medicine of Complexity

1.4. The Revision of the Semiological Paradigm: Epistemological Foundation of the M–C–R Framework

- Model—Instrumental Semiotics. Algorithms are understood as cognitive instruments that expand the perceptual field of the physician, transforming raw data into structured digital signs. Their validity depends on their capacity to preserve interpretability and traceability, not merely statistical accuracy.

- Context—Situated Meaning. Every clinical or organizational environment provides the situational matrix within which digital signs acquire significance. The same algorithm may yield different meanings and values depending on workflow, infrastructure, and regulatory setting.

- Relation—Interpretive Mediation. Diagnosis and decision-making occur through the interaction among human actors (clinicians, patients, and institutional actors) who assign meaning to algorithmic outputs. Relation is therefore the ethical and epistemic bridge that keeps computation embedded within the human act of care.

2. Materials and Methods

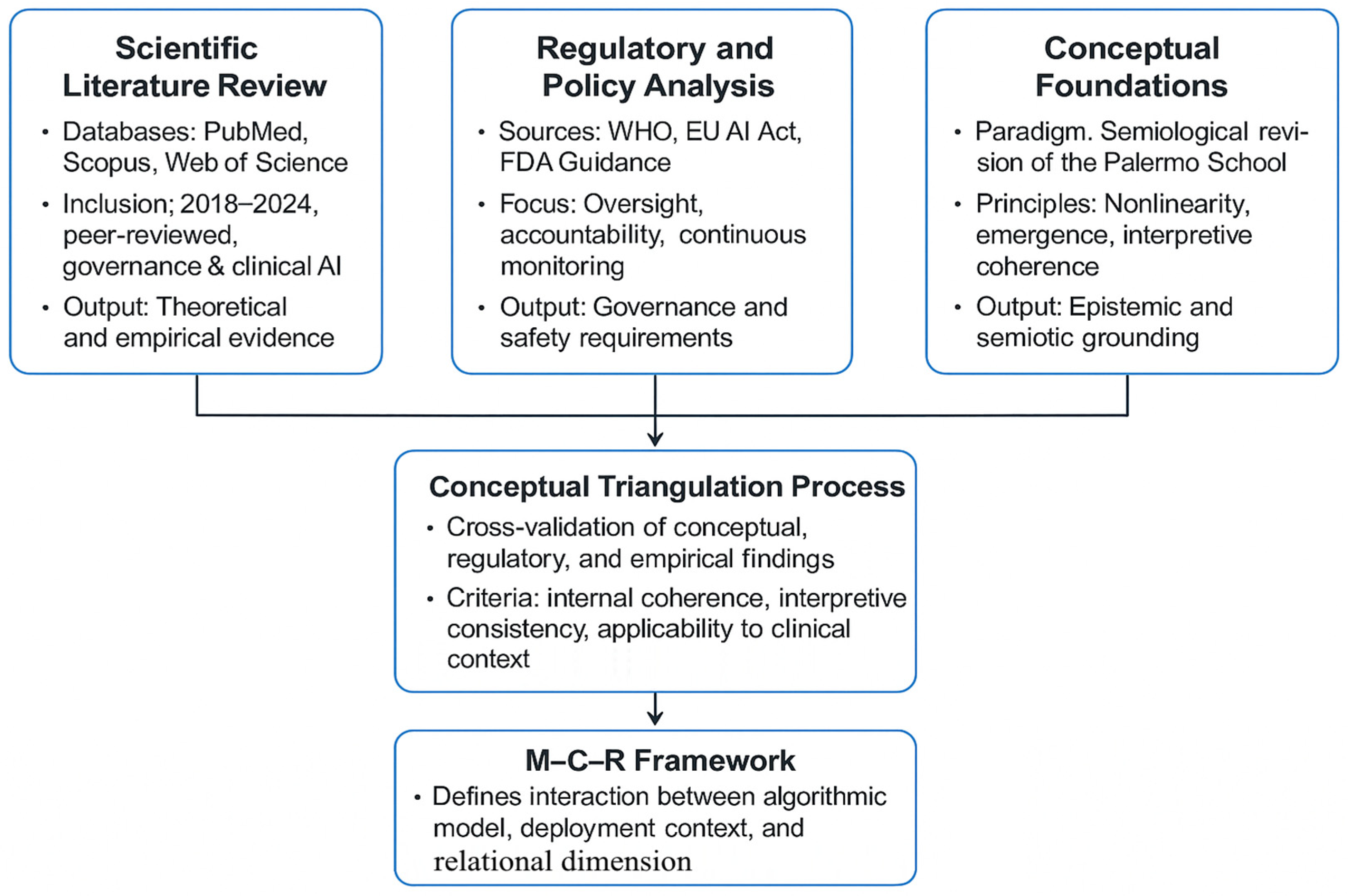

2.1. General Methodological Approach

- Paradigmatic analysis, grounded in the Revision of the Semiological Paradigm developed by the Palermo School, to situate AI within biomedical reasoning as a cognitive and semiotic extension;

- Comparative regulatory analysis, integrating institutional guidelines (WHO, European Union, FDA) to derive criteria for safety, accountability, and adaptive governance;

- Systemic synthesis, applying the M–C–R framework as an interpretive lens to map the emergent properties of AI across clinical and organizational domains.

2.2. Search Strategy and Source Identification

2.3. Inclusion and Exclusion Criteria

- Peer-reviewed papers addressing the implementation, governance, or ethical implications of AI in medicine or healthcare systems;

- Regulatory and policy documents from WHO, EU, and FDA concerning AI-based health technologies;

- Conceptual and epistemological works on complexity science and medical semiotics relevant to clinical reasoning.

- Studies exclusively focused on algorithmic performance or technical architecture without reference to clinical deployment or human oversight;

- Sources in languages other than English or Italian for which no accessible translation was available;

- Non–peer-reviewed material unless produced by recognized health authorities.

2.4. Screening, Synthesis, and Conceptual Triangulation

- Nonlinearity—acknowledgment of feedback loops and interdependent interactions;

- Emergence—identification of system-level properties not reducible to individual components;

- Adaptive feedback—evidence of learning or contextual adjustment within socio-technical systems.

2.5. Methodological Limitations and Reflexivity

3. Discussion

3.1. Rethinking Artificial Intelligence Within the Biomedical Paradigm: From the Myth of Substitution to Integrated Semiotics

- In perception, AI acts as a sensory amplifier, capable of detecting weak patterns invisible to the human eye;

- In interpretation, it provides statistical correlations or probabilistic inferences that orient clinical reasoning;

3.2. Applications of Artificial Intelligence in Medicine

- Prediction of Multimorbidity and Personalized Risk. Transformer architectures and Large Language Models (LLMs) applied to longitudinal clinical databases estimate the combined probability of multiple chronic diseases, adapting flexibly to different healthcare contexts [24].

3.3. AI for Healthcare: Systemic Intelligence and Public Health

- Epidemiological Forecasting and Surveillance—neural networks and Bayesian models are used to anticipate epidemic trends and the spread of chronic diseases [27].

- Resource Optimization and Healthcare Logistics—reinforcement learning algorithms support hospital flow management and reduction in systemic inefficiencies [19].

- Analysis of Social Determinants of Health (SDH)—machine learning models integrate health, environmental, and socio-economic data to identify disparities [28].

- Service Planning and Economic Sustainability—predictive simulations assess the potential impact of public health interventions, guiding policy and financial decision-making [5].

- Systemic Observation—AI systems reveal complex interaction patterns among resources, regulations, and outcomes, making the healthcare system increasingly self-learning [29].

3.4. AI and Complexity: Medicine as an Adaptive System

3.4.1. Positioning of the M–C–R Framework

- Epistemic grounding in clinical semiotics. By anchoring AI within the semiological process of perception–interpretation–decision, M–C–R redefines the algorithm not as an autonomous agent but as a semiotic amplifier that extends clinical perception and reasoning. This establishes a direct continuity between computational modeling and medical sense-making.

- Co-equality of human and algorithmic components. Whereas many implementation frameworks treat “human oversight” as an external safeguard added post-design, M–C–R conceptualizes Relation, the network of physicians, care teams, and institutions, as a co-constitutive dimension of AI effectiveness, on par with the Model and Context. This shift formalizes accountability and interpretive responsibility as intrinsic properties of system performance.

- Emergent and contextual evaluation of value. Unlike metrics-based assessments centered on accuracy or AUROC, M–C–R frames AI value as an emergent outcome of its adaptive alignment with the clinical and organizational environment. Effectiveness is thus not a static attribute of the algorithm but a dynamic property of the socio-technical ecosystem in which it operates.

3.4.2. Limitations of Existing Complexity Approaches and Added Value of the M–C–R Framework

- Lack clear epistemic grounding in clinical reasoning and semiotics;

- Focus on organizational adaptiveness rather than interpretive reliability;

- Offer limited criteria for evaluating alignment between algorithmic behavior, clinical context, and human judgment.

3.5. International Regulatory Framework

- Life-cycle approach, covering the entire lifespan of the system, from design to decommissioning;

- Risk management, proportional to the system’s impact on health and safety;

3.6. Ethical, Social, and Organizational Dimensions

- Interpersonal, concerning clinician–patient communication and shared decision-making;

- Interprofessional, encompassing collaboration among healthcare workers, data experts, and administrators;

- Institutional, involving regulatory oversight, ethical governance, and social participation.

3.7. Conceptual Validation of the M–C–R Framework: The Case of Early Sepsis Diagnosis

- Model refers to the neural network generating risk scores from real-time electronic health record data;

- Context denotes the hospital infrastructure, interoperability, staffing, and local protocols governing alert management;

- Relation captures the trust and coordination among clinicians, nurses, and data scientists who interpret and act on alerts.

3.8. Applicative Example and Operational Implications: Predictive Management of Hospital Flows

- The Model represents predictive engines trained on operational and epidemiological data;

- The Context includes hospital logistics, regulatory constraints, and available human resources;

- The Relation encompasses communication among management teams, clinical departments, and regional authorities that translate predictions into actionable decisions.

4. Conclusions

4.1. Limitations and Future Perspectives

4.2. Proposed Study Designs for Empirical Validation of the M–C–R Framework

- Model (M): external validation present (yes/no); calibration (Brier score or calibration slope); AUROC; drift monitoring in place (yes/no); explainability artifacts available (docs/dashboards, yes/no).

- Context (C): EHR interoperability score; alert routing latency (sec); protocol availability for escalation (yes/no); staffing adequacy index; regulatory/QA procedures embedded (yes/no).

- Relation (R): proportion of staff trained (%); role clarity index (RACI completed, yes/no); compliance with alerts (% acted within protocol time); perceived trust/usability (Likert 1–5); presence of governance/ethics oversight (yes/no).
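Two of the Model indicators listed above, the Brier score and AUROC, can be computed directly from predicted risks and observed outcomes. The following is a minimal sketch in plain Python, not the authors' evaluation pipeline; the function names and the six-case sample are hypothetical and serve only to show the arithmetic.

```python
from typing import Sequence


def brier_score(y_true: Sequence[int], y_prob: Sequence[float]) -> float:
    """Mean squared difference between predicted probability and outcome (0/1)."""
    if len(y_true) != len(y_prob) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((p - y) ** 2 for y, p in zip(y_true, y_prob)) / len(y_true)


def auroc(y_true: Sequence[int], y_prob: Sequence[float]) -> float:
    """Probability that a random positive case outranks a random negative one.

    Every positive/negative pair is compared; ties count as 0.5.
    """
    pos = [p for y, p in zip(y_true, y_prob) if y == 1]
    neg = [p for y, p in zip(y_true, y_prob) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical validation sample: 1 = sepsis confirmed, 0 = no sepsis.
outcomes = [1, 0, 1, 0, 0, 1]
risk_scores = [0.9, 0.2, 0.6, 0.4, 0.7, 0.8]

print(f"Brier score: {brier_score(outcomes, risk_scores):.3f}")
print(f"AUROC: {auroc(outcomes, risk_scores):.3f}")
```

A lower Brier score indicates better-calibrated and sharper probabilities, while AUROC captures ranking ability only. This is one reason the indicator list pairs discrimination with a calibration measure. The pairwise AUROC is quadratic in sample size, so a rank-based implementation would be preferable for large cohorts.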

- Time-to-antibiotics (hours) from first qualifying alert;

- Sepsis-related mortality (in-hospital or 28-day).

- Lead time before clinician suspicion (hours);

- Positive predictive value (PPV) and alert burden; alert-fatigue rate (% of alerts ignored);

- Protocol adherence (% alerts followed within X minutes);

- Length of stay (LOS); intensive care unit (ICU) transfer within 24–48 h.
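Several of the sepsis outcomes above reduce to simple proportions and medians over an alert log. The sketch below assumes a hypothetical `Alert` record; its field names, the 60-minute protocol window, and the four sample alerts are illustrative assumptions, not taken from any real EHR schema or from the study design itself.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class Alert:
    """One sepsis alert; all field names are hypothetical."""
    true_sepsis: bool                      # sepsis later confirmed
    acknowledged: bool                     # a clinician responded to the alert
    minutes_to_action: Optional[float]     # None if no protocol action followed
    hours_to_antibiotics: Optional[float]  # None if no antibiotics were given


def summarize(alerts: list[Alert], protocol_minutes: float) -> dict[str, float]:
    """Compute PPV, alert-fatigue rate, protocol adherence, and time-to-antibiotics."""
    n = len(alerts)
    if n == 0:
        raise ValueError("no alerts to summarize")
    true_pos = [a for a in alerts if a.true_sepsis]
    acted_in_time = [
        a for a in alerts
        if a.minutes_to_action is not None and a.minutes_to_action <= protocol_minutes
    ]
    abx_times = [
        a.hours_to_antibiotics for a in true_pos if a.hours_to_antibiotics is not None
    ]
    return {
        "ppv": len(true_pos) / n,
        "alert_fatigue_rate": sum(not a.acknowledged for a in alerts) / n,
        "protocol_adherence": len(acted_in_time) / n,
        "median_hours_to_antibiotics": median(abx_times) if abx_times else float("nan"),
    }


# Hypothetical alert log with an assumed 60-minute protocol window.
alerts = [
    Alert(True, True, 20, 1.5),
    Alert(False, False, None, None),
    Alert(True, True, 75, 3.0),
    Alert(False, True, 30, None),
]
summary = summarize(alerts, protocol_minutes=60)
print(summary)
```

Here protocol adherence is computed over all alerts, including false positives. A study could reasonably restrict the denominator to confirmed cases, and that choice should be fixed in the protocol before data collection.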

- Emergency department (ED) boarding time (median, in minutes);

- Bed-turnover efficiency (% beds available within target time).

- Elective-surgery cancelations;

- Variability of occupancy (standard deviation of the occupancy rate, %);

- Staff overtime hours;

- Time-to-placement for high-acuity patients.
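Three of the hospital-flow outcomes above can be derived from routine operational records. The sketch below uses only the Python standard library; the function name, argument names, sample values, and the 45-minute bed-readiness target are all hypothetical. It uses the population standard deviation (`pstdev`), which is one possible reading of "variability of occupancy".

```python
from statistics import median, pstdev
from typing import Sequence


def flow_summary(
    boarding_minutes: Sequence[float],
    daily_occupancy_pct: Sequence[float],
    bed_ready_minutes: Sequence[float],
    target_minutes: float,
) -> dict[str, float]:
    """Summarize three flow indicators from raw operational records."""
    if not (boarding_minutes and daily_occupancy_pct and bed_ready_minutes):
        raise ValueError("each input series must contain at least one value")
    # Count beds that became available within the target time.
    on_time = sum(m <= target_minutes for m in bed_ready_minutes)
    return {
        "median_boarding_minutes": median(boarding_minutes),
        "occupancy_sd_pct_points": pstdev(daily_occupancy_pct),
        "bed_turnover_efficiency_pct": 100.0 * on_time / len(bed_ready_minutes),
    }


# Hypothetical records for one ward over a short observation window.
flow = flow_summary(
    boarding_minutes=[30, 45, 120, 60, 90],
    daily_occupancy_pct=[80, 90, 85, 95],
    bed_ready_minutes=[20, 40, 70, 25],
    target_minutes=45,
)
print(flow)
```

Comparing these summaries before and after deployment, or across sites with different M–C–R alignment profiles, is one way the proposed designs could turn the framework into measurable contrasts.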

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| CAS | Complex Adaptive System |
| EHR | Electronic Health Record |
| FDA | Food and Drug Administration |
| LLM | Large Language Model |
| M–C–R | Model–Context–Relation framework |
| OECD | Organisation for Economic Co-operation and Development |
| SaMD | Software as a Medical Device |
| SDH | Social Determinants of Health |
| WHO | World Health Organization |

References

- Saghiri, M.A.; Vakhnovetsky, J.; Nadershahi, N. Scoping review of artificial intelligence and immersive digital tools in dental education. J. Dent. Educ. 2022, 86, 736–750.

- Schwendicke, F.; Göstemeyer, G.; Krois, J. Artificial Intelligence in Dentistry: Chances and Challenges. J. Dent. Res. 2020, 99, 769–774.

- Sciarra, F.M.; Caivano, G.; Cacioppo, A.; Messina, P.; Cumbo, E.M.; Di Vita, E.; Scardina, G.A. Dentistry in the Era of Artificial Intelligence: Medical Behavior and Clinical Responsibility. Prosthesis 2025, 7, 95.

- Briganti, G.; Le Moine, O. Artificial Intelligence in Medicine: Today and Tomorrow. Front. Med. 2020, 7, 27.

- Nutbeam, D.; Milat, A.J. Artificial intelligence and public health: Prospects, hype and challenges. Public Health Res. Pract. 2025, 35, PU24001.

- Johnson, K.B.; Wei, W.Q.; Weeraratne, D.; Frisse, M.E.; Misulis, K.; Rhee, K.; Zhao, J.; Snowdon, J.L. Precision Medicine, AI, and the Future of Personalized Health Care. Clin. Transl. Sci. 2021, 14, 86–93.

- Kwong, J.C.C.; Nickel, G.C.; Wang, S.C.Y.; Kvedar, J.C. Integrating artificial intelligence into healthcare systems: More than just the algorithm. npj Digit. Med. 2024, 7, 52.

- Feng, J.; Phillips, R.V.; Malenica, I.; Bishara, A.; Hubbard, A.E.; Celi, L.A.; Pirracchio, R. Clinical artificial intelligence quality improvement: Towards continual monitoring and updating of AI algorithms in healthcare. npj Digit. Med. 2022, 5, 66.

- Soenksen, L.R.; Ma, Y.; Zeng, C.; Boussioux, L.; Villalobos Carballo, K.; Na, L.; Wiberg, H.M.; Li, M.L.; Fuentes, I.; Bertsimas, D. Integrated multimodal artificial intelligence framework for healthcare applications. npj Digit. Med. 2022, 5, 149.

- Crossnohere, N.L.; Elsaid, M.; Paskett, J.; Bose-Brill, S.; Bridges, J.F.P. Guidelines for Artificial Intelligence in Medicine: Literature Review and Content Analysis of Frameworks. J. Med. Internet Res. 2022, 24, e36823.

- Alowais, S.A.; Alghamdi, S.S.; Alsuhebany, N.; Alqahtani, T.; Alshaya, A.I.; Almohareb, S.N.; Aldairem, A.; Alrashed, M.; Bin Saleh, K.; Badreldin, H.A.; et al. Revolutionizing healthcare: The role of artificial intelligence in clinical practice. BMC Med. Educ. 2023, 23, 689.

- Cacioppo, A.; Caivano, G.; Sciarra, F.M.; Cumbo, E.; Messina, P.; Argo, A.; Zerbo, S.; Albano, D.; Scardina, G.A. Digital dentistry: Clinical, ethical and medico-legal aspects in the use of new technologies. Odontoiatria digitale: Aspetti clinici, etici e medico-legali nell’utilizzo delle nuove tecnologie. Dent. Cadmos 2025, 93, 40–55.

- Organisation for Economic Co-operation and Development (OECD). Artificial Intelligence in Society; OECD Publishing: Paris, France, 2019.

- European Parliament. EU AI Act: First Regulation on Artificial Intelligence; European Parliament: Brussels, Belgium, 2023. Available online: https://www.europarl.europa.eu/topics/en/article/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence (accessed on 20 August 2025).

- World Health Organization (WHO). WHO Outlines Considerations for Regulation of Artificial Intelligence for Health; WHO: Geneva, Switzerland, 2023. Available online: https://www.who.int/news/item/19-10-2023-who-outlines-considerations-for-regulation-of-artificial-intelligence-for-health (accessed on 20 August 2025).

- Ulnicane, I. Artificial intelligence in the European Union: Policy, ethics and regulation. In The Routledge Handbook of European Integrations, 1st ed.; Hoerber, T., Weber, G., Cabras, I., Eds.; Routledge: London, UK, 2022; pp. 254–269, ISBN (print): 978-0-367-20307-8; ISBN (electronic): 978-0-429-26208-1.

- Keskinbora, K.H. Medical ethics considerations on artificial intelligence. J. Clin. Neurosci. 2019, 64, 277–282.

- Avanzo, M.; Stancanello, J.; Pirrone, G.; Drigo, A.; Retico, A. The Evolution of Artificial Intelligence in Medical Imaging: From Computer Science to Machine and Deep Learning. Cancers 2024, 16, 3702.

- Secinaro, S.; Calandra, D.; Secinaro, A.; Muthurangu, V.; Biancone, P. The role of artificial intelligence in healthcare: A structured literature review. BMC Med. Inform. Decis. Mak. 2021, 21, 125.

- Cacioppo, A.; Sciarra, F.M.; Caivano, G.; Cumbo, E.; Messina, P.; Scardina, G.A. Autonomy and competence of the dentist in radiology: The missing link. Autonomia e competenza dell’odontoiatra in ambito radiologico: L’anello mancante. Dent. Cadmos 2025, 93, 358–367.

- Ahmed, Z. Practicing precision medicine with intelligently integrative clinical and multi-omics data analysis. Hum. Genom. 2020, 14, 35.

- Lipkova, J.; Chen, R.J.; Chen, B.; Lu, M.Y.; Barbieri, M.; Shao, D.; Vaidya, A.J.; Chen, C.; Zhuang, L.; Williamson, D.F.K.; et al. Artificial intelligence for multimodal data integration in oncology. Cancer Cell 2022, 40, 1095–1110.

- Albano, D.; Argo, A.; Bilello, G.; Cumbo, E.; Lupatelli, M.; Messina, P.; Sciarra, F.M.; Sessa, M.; Zerbo, S.; Scardina, G.A. Oral Squamous Cell Carcinoma: Features and Medico-Legal Implications of Diagnostic Omission. Case Rep. Dent. 2024, 2024, 2578271.

- Amann, J.; Blasimme, A.; Vayena, E.; Frey, D.; Madai, V.I.; Precise4Q Consortium. Explainability for artificial intelligence in healthcare: A multidisciplinary perspective. BMC Med. Inform. Decis. Mak. 2020, 20, 310.

- Alhaidry, H.M.; Fatani, B.; Alrayes, J.O.; Almana, A.M.; Alfhaed, N.K. ChatGPT in Dentistry: A Comprehensive Review. Cureus 2023, 15, e38317.

- Cheng, S.L.; Tsai, S.J.; Bai, Y.M.; Ko, C.H.; Hsu, C.W.; Yang, F.C.; Tsai, C.K.; Tu, Y.K.; Yang, S.N.; Tseng, P.T.; et al. Comparisons of Quality, Correctness, and Similarity Between ChatGPT-Generated and Human-Written Abstracts for Basic Research: Cross-Sectional Study. J. Med. Internet Res. 2023, 25, e51229.

- Brunello, G.H.V.; Nakano, E.Y. A Bayesian Measure of Model Accuracy. Entropy 2024, 26, 510.

- Kelkar, A.H.; Hantel, A.; Koranteng, E.; Cutler, C.S.; Hammer, M.J.; Abel, G.A. Digital Health to Patient-Facing Artificial Intelligence: Ethical Implications and Threats to Dignity for Patients with Cancer. JCO Oncol. Pract. 2024, 20, 314–317.

- Zhang, J.; Zhang, Z.M. Ethics and governance of trustworthy medical artificial intelligence. BMC Med. Inform. Decis. Mak. 2023, 23, 7.

- Caivano, G.; Sciarra, F.M.; Messina, P.; Cumbo, E.M.; Caradonna, L.; Di Vita, E.; Nigliaccio, S.; Fontana, D.A.; Scardina, A.; Scardina, G.A. Antimicrobial Resistance and Causal Relationship: A Complex Approach Between Medicine and Dentistry. Medicina 2025, 61, 1870.

- Jonkisz, A.; Karniej, P.; Krasowska, D. SERVQUAL method as an “old new” tool for improving the quality of medical services: A literature review. Int. J. Environ. Res. Public Health 2021, 18, 10758.

- Thabit, A.K.; Aljereb, N.M.; Khojah, O.M.; Shanab, H.; Badahdah, A. Towards Wiser Prescribing of Antibiotics in Dental Practice: What Pharmacists Want Dentists to Know. Dent. J. 2024, 12, 345.

- Tekkeşin, A.İ. Artificial Intelligence in Healthcare: Past, Present and Future. Anatol. J. Cardiol. 2019, 22 (Suppl. S2), 8–9.

- Morley, J.; Machado, C.C.V.; Burr, C.; Cowls, J.; Joshi, I.; Taddeo, M.; Floridi, L. The ethics of AI in health care: A mapping review. Soc. Sci. Med. 2020, 260, 113172.

- Volkman, R.; Gabriels, K. AI Moral Enhancement: Upgrading the Socio-Technical System of Moral Engagement. Sci. Eng. Ethics 2023, 29, 11.

- U.S. Food and Drug Administration (FDA). Artificial Intelligence in Software as a Medical Device; FDA: Silver Spring, MD, USA, 2025. Available online: https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-software-medical-device (accessed on 20 August 2025).

- European Union. Regulation (EU) 2024/1689—Article 6: Classification Rules for High-Risk AI Systems; European Union: Brussels, Belgium, 2024. Available online: https://artificialintelligenceact.eu/article/6/ (accessed on 20 August 2025).

- Felländer-Tsai, L. AI ethics, accountability, and sustainability: Revisiting the Hippocratic path. Acta Orthop. 2020, 91, 1–2.

- Char, D.S.; Shah, N.H.; Magnus, D. Implementing Machine Learning in Health Care—Addressing Ethical Challenges. N. Engl. J. Med. 2018, 378, 981–983.

- Luxton, D.D. Recommendations for the ethical use and design of artificial intelligent care providers. Artif. Intell. Med. 2014, 62, 1–10.

- Adams, R.; Henry, K.E.; Sridharan, A.; Soleimani, H.; Zhan, A.; Rawat, N.; Johnson, L.; Hager, D.N.; Cosgrove, S.E.; Markowski, A.; et al. Prospective, multi-site study of patient outcomes after implementation of the TREWS machine learning-based early warning system for sepsis. Nat. Med. 2022, 28, 1455–1460.

- Moor, M.; Rieck, B.; Horn, M.; Jutzeler, C.R.; Borgwardt, K. Early Prediction of Sepsis in the ICU Using Machine Learning: A Systematic Review. Front. Med. 2021, 8, 607952.

- Reddy, S.; Rogers, W.; Makinen, V.P.; Coiera, E.; Brown, P.; Wenzel, M.; Weicken, E.; Ansari, S.; Mathur, P.; Casey, A.; et al. Evaluation framework to guide implementation of AI systems into healthcare settings. BMJ Health Care Inform. 2021, 28, e100444.

- Wulff, A.; Montag, S.; Marschollek, M.; Jack, T. Clinical Decision-Support Systems for Detection of Systemic Inflammatory Response Syndrome, Sepsis, and Septic Shock in Critically Ill Patients: A Systematic Review. Methods Inf. Med. 2019, 58, e43–e57.

- Topol, E.J. High-performance medicine: The convergence of human and artificial intelligence. Nat. Med. 2019, 25, 44–56.

- Choi, R.Y.; Coyner, A.S.; Kalpathy-Cramer, J.; Chiang, M.F.; Campbell, J.P. Introduction to Machine Learning, Neural Networks, and Deep Learning. Transl. Vis. Sci. Technol. 2020, 9, 14.

- Ankolekar, A.; Eppings, L.; Bottari, F.; Pinho, I.F.; Howard, K.; Baker, R.; Nan, Y.; Xing, X.; Walsh, S.L.; Vos, W.; et al. Using artificial intelligence and predictive modelling to enable learning healthcare systems (LHS) for pandemic preparedness. Comput. Struct. Biotechnol. J. 2024, 24, 412–419.

- Fontaine, P.; Ross, S.E.; Zink, T.; Schilling, L.M. Systematic review of health information exchange in primary care practices. J. Am. Board Fam. Med. 2010, 23, 655–670.

| Domain | Focus | Typical Applications | Key Opportunities | Main Challenges |
|---|---|---|---|---|
| AI for Medicine | Individual patient, diagnosis, therapy, precision medicine | Digital pathology, oncology, predictive modeling, clinical decision support | Personalized care, improved accuracy, multimodal data integration | Validation, interpretability, professional accountability |
| AI for Health Systems | Population health, prevention, organization, sustainability | Epidemiological forecasting, resource optimization, health inequity analysis | Efficiency, equity, systemic learning | Data governance, policy alignment, ethical oversight |
| AI and Complexity | Adaptive interaction among people, technologies, and institutions | System-level intelligence, feedback-based learning, relational modeling | Co-evolution of human and artificial reasoning, emergent knowledge | Managing uncertainty, maintaining trust, balancing automation and human judgment |

| Framework | Scope and Orientation | Key Principles | Main Limitations in Healthcare AI | Added Value of M–C–R |
|---|---|---|---|---|
| Socio-technical models | Integration of people, technology, and organization | Interaction between human and technical components | Often descriptive; weak epistemic link to clinical reasoning; accountability treated as ex-post | Embeds interpretive responsibility within the system; defines “Relation” as co-constitutive of AI performance |
| Learning Health Systems (LHS) | Continuous data-driven improvement cycles | Feedback, adaptation, learning loops | Focus on data feedback, not on meaning interpretation or semiotics; governance remains managerial | Adds semiotic and ethical layers that connect feedback to interpretive coherence and clinical reasoning |
| Complex Adaptive Systems (CAS) | Health systems as dynamic, nonlinear networks | Emergence, interdependence, adaptation | Lacks explicit human interpretive agency; limited operational guidance for governance of AI tools | Couples complexity dynamics with epistemic accountability and relational supervision |
| Regulatory risk frameworks (WHO/EU/FDA) | Safety, transparency, and human oversight of AI systems | Traceability, monitoring, post-market control | Normative, not explanatory; limited conceptual integration with epistemology or organizational practice | M–C–R provides a theoretical rationale linking regulation to semiotic and clinical dimensions |
| M–C–R Framework (proposed) | Clinically grounded governance of AI as semiotic system | Model–Context–Relation alignment; interpretive accountability; emergent value | — | Offers a testable, epistemically grounded, and ethically aligned model bridging technical, organizational, and human domains |

| Regulatory Principle | WHO (2023) | EU AI Act (2024–2025) | FDA (2025) | Corresponding M–C–R Element |
|---|---|---|---|---|
| Transparency and explainability | Traceability and documentation of data provenance and model logic | Mandatory technical documentation and explainability requirements for “high-risk” AI systems (Art. 13) | Algorithmic transparency and labeling within SaMD framework | Model—ensures interpretability and auditability of the algorithm |
| Human oversight and control | Requirement for human-in-the-loop supervision | Mandatory human-in-the-loop and human-on-the-loop safeguards (Art. 14) | Adaptive AI control plans and human review of outputs | Relation—guarantees interpretive responsibility and ethical mediation |
| Risk management and life-cycle monitoring | Continuous monitoring across the AI life cycle | Risk management system proportional to impact on health (Annex III) | Total product life-cycle (TPLC) approach for SaMD | Context—embeds safety and quality management into operational environments |
| Equity, inclusiveness, and societal participation | Promotion of equitable access and avoidance of bias | Non-discrimination and fairness requirements | Public transparency and stakeholder feedback in post-market evaluation | Relation/Context—ensures fairness and participatory governance |
| Post-market surveillance and adaptability | Ongoing evaluation of AI behavior and outcomes | Continuous performance assessment and compliance with technical standards | Periodic algorithm updates and real-world performance monitoring | Model/Context—supports adaptive and context-aware validation |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Di Vita, E.; Caivano, G.; Sciarra, F.M.; Lo Bianco, S.; Messina, P.; Cumbo, E.M.; Caradonna, L.; Nigliaccio, S.; Fontana, D.A.; Scardina, A.; et al. Artificial Intelligence in Medicine and Healthcare: A Complexity-Based Framework for Model–Context–Relation Alignment. Appl. Sci. 2025, 15, 12005. https://doi.org/10.3390/app152212005


APA Style: Di Vita, E., Caivano, G., Sciarra, F. M., Lo Bianco, S., Messina, P., Cumbo, E. M., Caradonna, L., Nigliaccio, S., Fontana, D. A., Scardina, A., & Scardina, G. A. (2025). Artificial Intelligence in Medicine and Healthcare: A Complexity-Based Framework for Model–Context–Relation Alignment. Applied Sciences, 15(22), 12005. https://doi.org/10.3390/app152212005