An Integrated Rule-Based and Deep Learning Method for Automobile License Plate Image Generation with Enhanced Geometric and Radiometric Details

Abstract

1. Introduction

- (1) This paper introduces a novel framework for generating high-quality automobile license plate images by integrating rule-based constraints with deep learning. The framework preserves both the geometric and radiometric properties of the generated images and performs robustly under challenging conditions such as extreme viewing angles and partial occlusion.

- (2) To address inaccurate license plate vertices, we propose a module that combines the YOLOv5 detector with a character endpoint feature matching strategy. The method uses filtered character contours and their endpoints to rectify the license plate vertices within the expanded license plate region detected by YOLOv5.

- (3) To enhance the radiometric properties of the generated license plate, we propose a two-stage radiometric property learning module. It employs a region-specific, channel-wise strategy for character mask extraction and background inpainting, followed by a pre-trained TET-GAN model that maintains consistency with the original character styles.

- (4) A comprehensive evaluation methodology that combines a novel physical validation and evaluation method with standard image quality metrics is adopted to assess the generated images. Experimental results indicate that the proposed framework is superior in both geometric accuracy and radiometric fidelity, demonstrating its value for intelligent transportation systems.

2. Related Work

2.1. Automobile License Plate Detection Methods

2.2. Automobile License Plate Image Generation Methods

3. Method

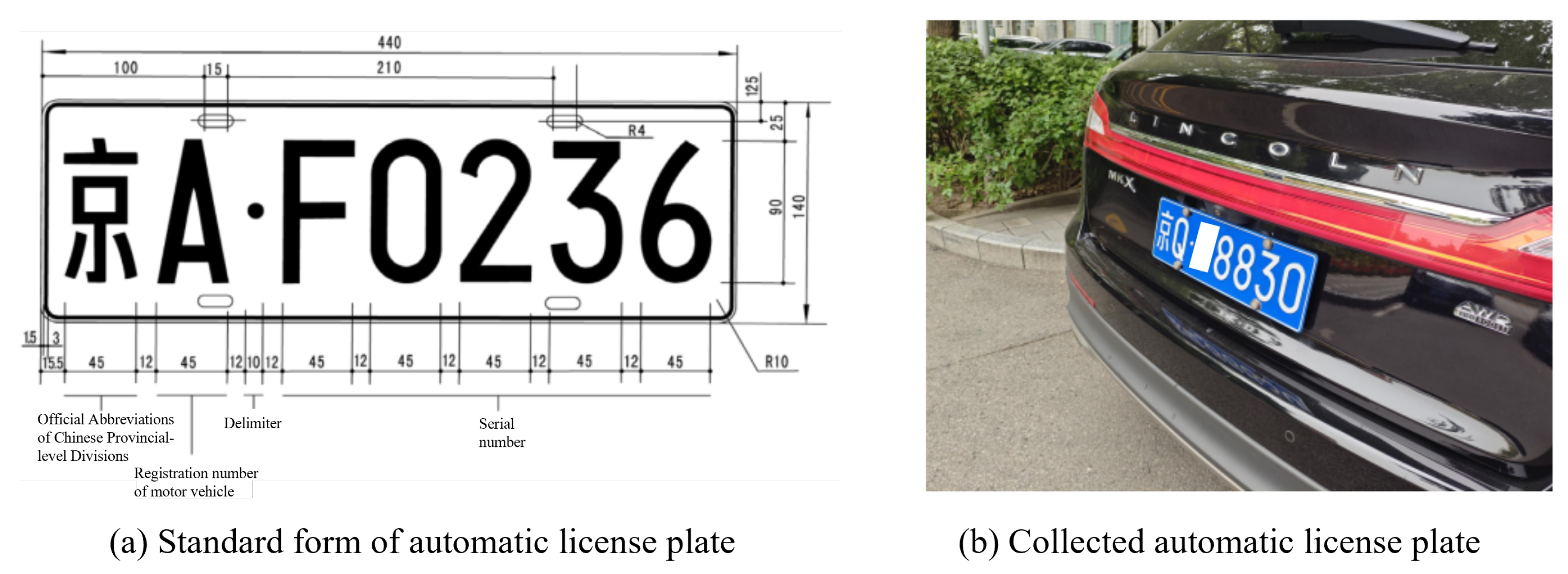

3.1. Problem Description

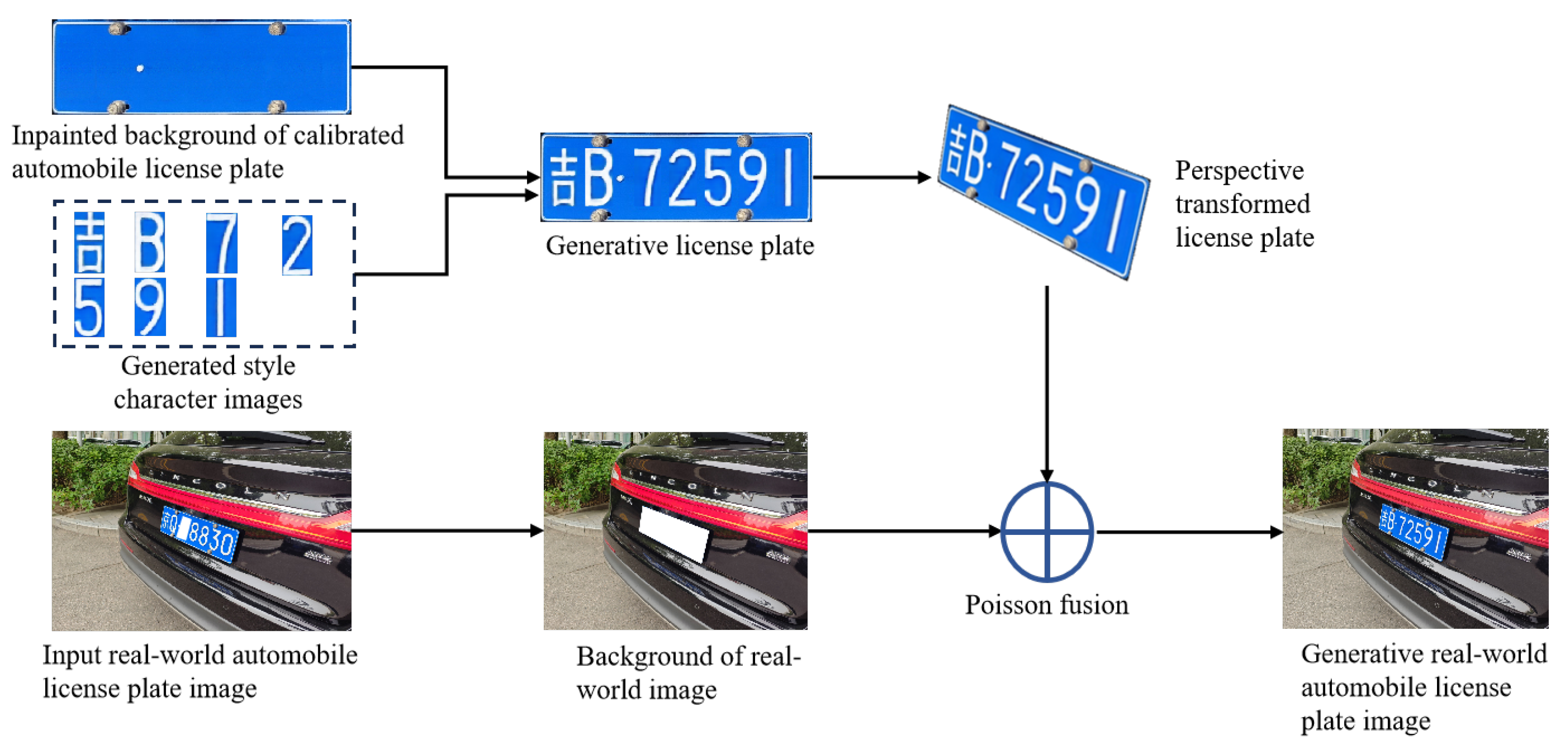

3.2. Radiometric Enhancement Generation Framework

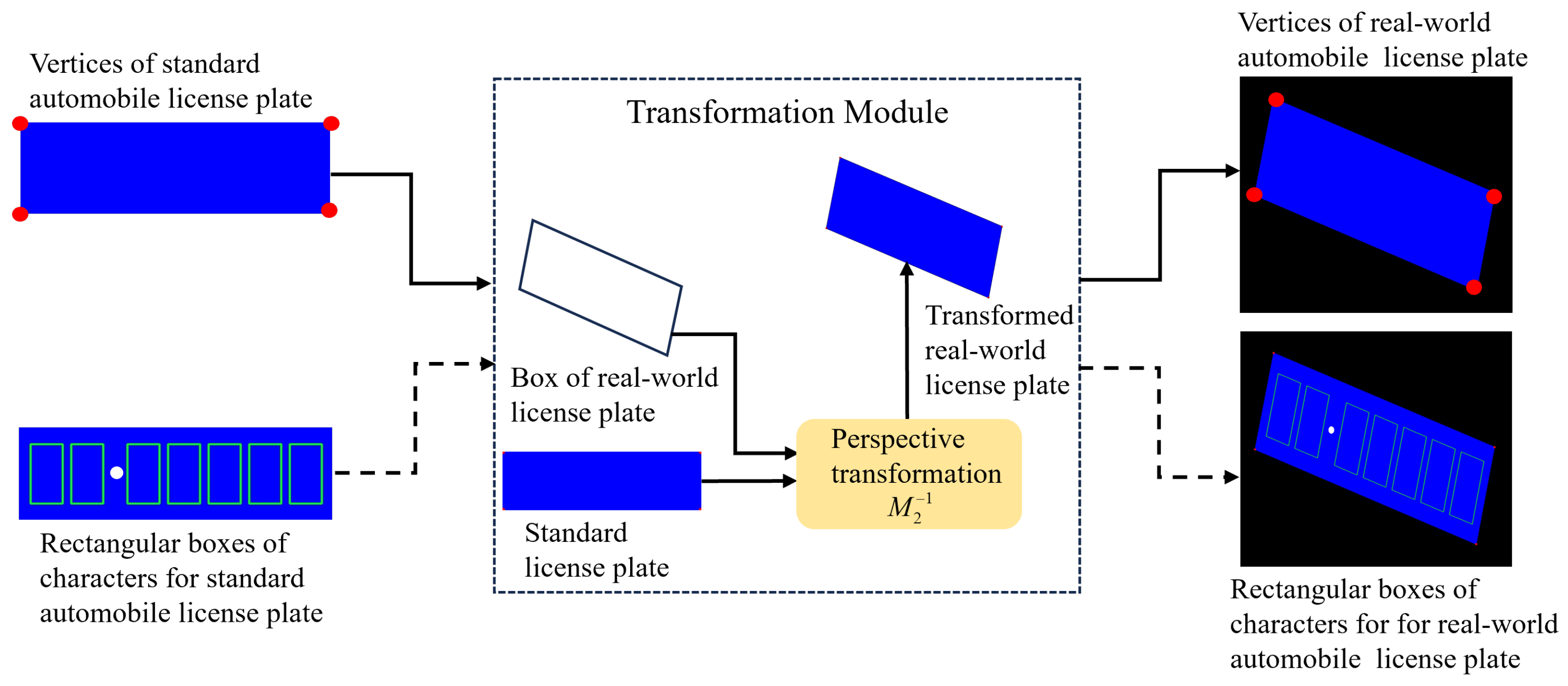

3.2.1. Precise Geometric Rectification Module

Automobile License Plate Detection and Recognition

Precise Geometric Rectification Method Based on Character Endpoint Feature Matching

- (1) Dual-Arc Endpoints: When both top endpoints exhibit arc-like geometric properties, a spatial convex optimization approach is employed. The minimal-area tangent method is applied, in which the line connecting the highest points of the two characters serves as the initial tangent. The slope and intercept of this tangent are iteratively optimized to minimize the area enclosed between the tangent and the character contours; this enclosed area is the optimization objective function F. The intersection points of the optimized tangent with the character contours are output as the refined vertex coordinates.

- (2) Dual-Corner Endpoints: When both top endpoints are identified as corners, the Shi-Tomasi algorithm is employed directly for corner detection. A sub-pixel refinement step then improves the localization precision of the corner coordinates [35].

- (3) Hybrid Endpoints (Arc-Corner Combination): For cases involving one arc endpoint and one corner endpoint, a hybrid approach is used. The arc endpoint is processed with the minimal-area tangent optimization, while the corner endpoint is computed with the Shi-Tomasi method and sub-pixel refinement. The resulting coordinates are combined to form the final vertex pair, ensuring consistency and accuracy across the two geometric types.
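The minimal-area tangent step for arc endpoints can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the representation of each character top as sampled `(x, y)` contour points with uniform x spacing, the search span, and the ternary search over the slope are all hypothetical choices. For a fixed slope, the tightest intercept is the one at which the line just touches the contours from above; the summed vertical gap is then a convex function of the slope, so a simple bracketing search is enough.

```python
def tangent_area(k, pts):
    """Summed vertical gap between the line y = k*x + b and the points.

    Image coordinates are assumed (y grows downward), so the line sits
    above the characters. b is chosen so the line touches the top-most
    point and no point lies above it.
    """
    residuals = [y - k * x for x, y in pts]
    b = min(residuals)
    return sum(r - b for r in residuals), b


def fit_min_area_tangent(left, right, span=1.0, iters=100):
    """Return (slope, intercept, left_vertex, right_vertex).

    left/right: top-contour samples of the two characters (assumed input).
    The initial slope comes from the line joining the highest points.
    """
    pts = left + right
    x1, y1 = min(left, key=lambda p: p[1])
    x2, y2 = min(right, key=lambda p: p[1])
    k0 = (y2 - y1) / (x2 - x1)

    # Ternary search: valid because the objective is convex in k.
    lo, hi = k0 - span, k0 + span
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if tangent_area(m1, pts)[0] < tangent_area(m2, pts)[0]:
            hi = m2
        else:
            lo = m1
    k = (lo + hi) / 2.0
    _, b = tangent_area(k, pts)

    # The contact point on each character is the sample closest to the line.
    def touch(contour):
        return min(contour, key=lambda p: p[1] - k * p[0])

    return k, b, touch(left), touch(right)
```

The two returned contact points play the role of the refined vertex pair. A real contour would need resampling to uniform x spacing before the gap sum approximates an area.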

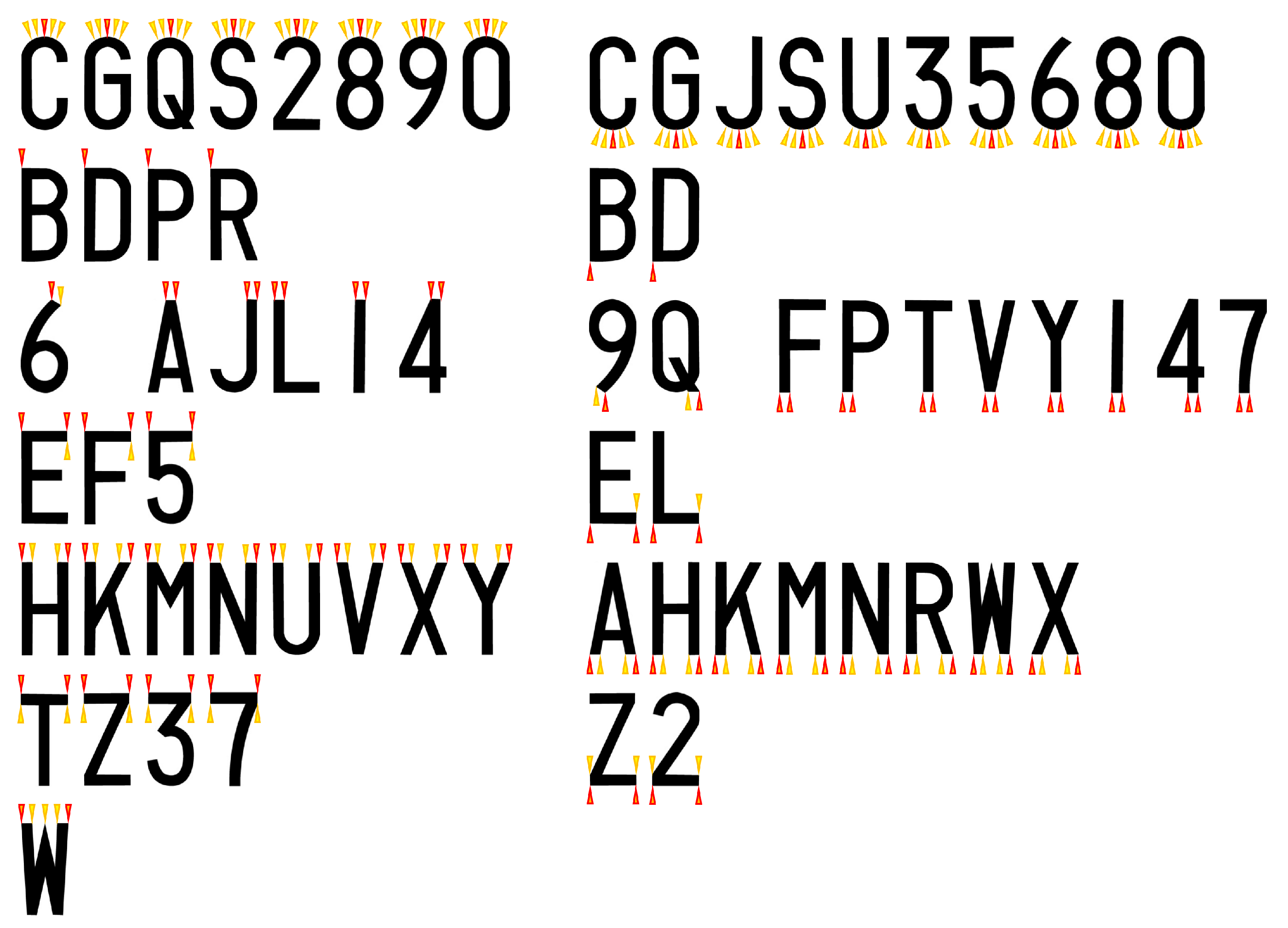

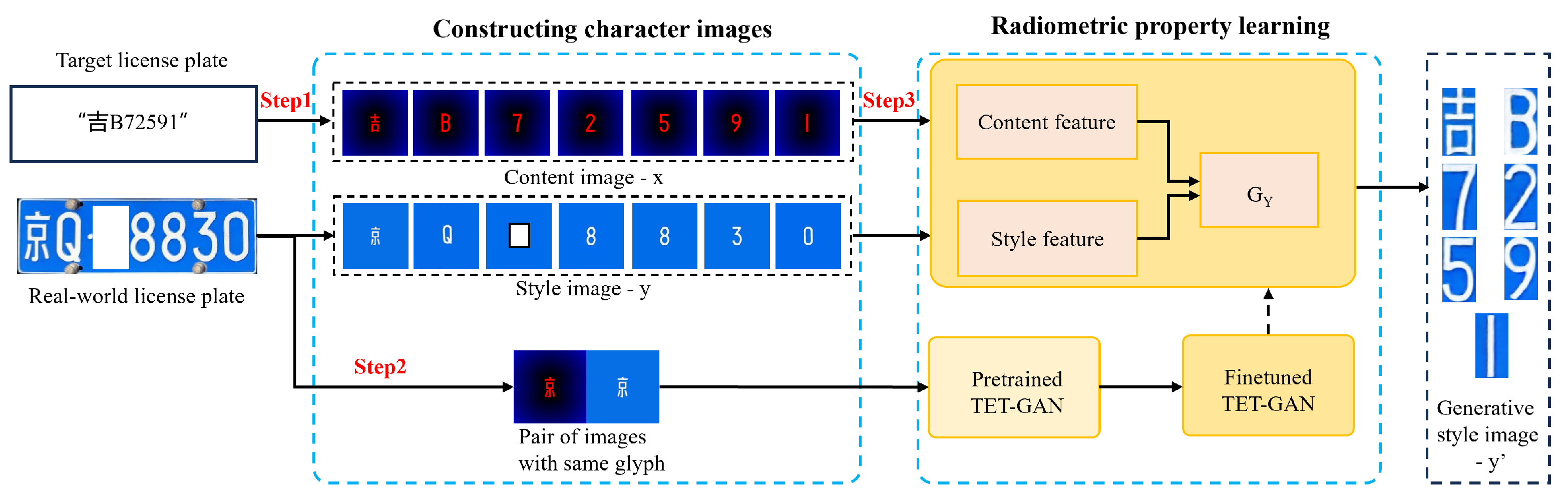
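For the corner branch of the rectification described above, the Shi-Tomasi criterion scores each pixel by the smaller eigenvalue of its local gradient structure tensor. The sketch below computes that response in plain Python on a small grayscale grid. The function names, the central-difference gradients, and the 3×3 window are illustrative assumptions, and the sub-pixel refinement step is not shown. In practice, OpenCV's `cv2.goodFeaturesToTrack` and `cv2.cornerSubPix` cover detection and refinement.

```python
import math


def shi_tomasi_response(img, win=1):
    """Min-eigenvalue corner response for a 2D list of gray values."""
    h, w = len(img), len(img[0])
    gx = [[0.0] * w for _ in range(h)]
    gy = [[0.0] * w for _ in range(h)]
    # Central-difference gradients; border pixels keep a zero gradient.
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            gy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0

    resp = [[0.0] * w for _ in range(h)]
    for y in range(win, h - win):
        for x in range(win, w - win):
            a = b = c = 0.0
            # Accumulate the structure tensor [[a, b], [b, c]] over the window.
            for dy in range(-win, win + 1):
                for dx in range(-win, win + 1):
                    ix, iy = gx[y + dy][x + dx], gy[y + dy][x + dx]
                    a += ix * ix
                    b += ix * iy
                    c += iy * iy
            # Smaller eigenvalue of the symmetric 2x2 tensor.
            resp[y][x] = (a + c) / 2.0 - math.sqrt(((a - c) / 2.0) ** 2 + b * b)
    return resp


def strongest_corner(resp):
    """Return (x, y) of the pixel with the largest response."""
    best = max(
        (resp[y][x], x, y)
        for y in range(len(resp))
        for x in range(len(resp[0]))
    )
    return best[1], best[2]
```

Along a straight edge one eigenvalue is close to zero, so only pixels where gradients in two directions meet score highly, which is what makes the measure suitable for locating corner-type character endpoints.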

3.2.2. Radiometric Property Learning Module

Automobile License Plate Background Image Inpainting

| Algorithm 1 Pixel-Difference Dynamic Threshold (PDDT) algorithm |
|---|
| Require: The rectified image and the pixel values of its three channel images; the perturbation cutoff ratios for the entire image and for each row. |
| Ensure: The image with characters removed. |

| Algorithm 2 Baseline fitting and fluctuation compensation inpainting algorithm |
|---|
| Require: The character mask and the background image with characters removed. |
| Ensure: The inpainted background image. |

Style Transfer Learning Method Based on TET-GAN

4. Experiments and Results

4.1. Dataset

4.2. Evaluation Metrics

- (1) Peak Signal-to-Noise Ratio (PSNR): This metric quantifies the quality of a generated image by measuring the ratio between the maximum possible signal power and the power of the corrupting noise. A higher PSNR value indicates a smaller difference between the generated image and the real-world image, reflecting better image quality.

  $$\mathrm{PSNR} = 10 \log_{10}\left(\frac{MAX_I^{2}}{MSE}\right)$$

  where $MAX_I$ is the maximum possible pixel value of the image and $MSE$ is the mean square error between the two images.

- (2) Structural Similarity Index Measurement (SSIM): The SSIM metric assesses the perceptual similarity between the generated and real-world images through three aspects: luminance, contrast, and structural similarity, which are estimated from the mean intensity, standard deviation, and covariance, respectively [39]. The SSIM value typically ranges from 0 to 1. Higher SSIM values correspond to better perceptual quality of the generated image [30].

  $$\mathrm{SSIM}(x, y) = \frac{(2\mu_x \mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^{2} + \mu_y^{2} + C_1)(\sigma_x^{2} + \sigma_y^{2} + C_2)}$$

  where $\mu_x$ and $\mu_y$ represent the mean intensities of images $x$ and $y$, respectively, $\sigma_x^{2}$ and $\sigma_y^{2}$ denote their variances, and $\sigma_{xy}$ is the covariance between the two images. The terms $C_1$ and $C_2$ are constants included to stabilize the division when the denominators are close to zero.

- (3)

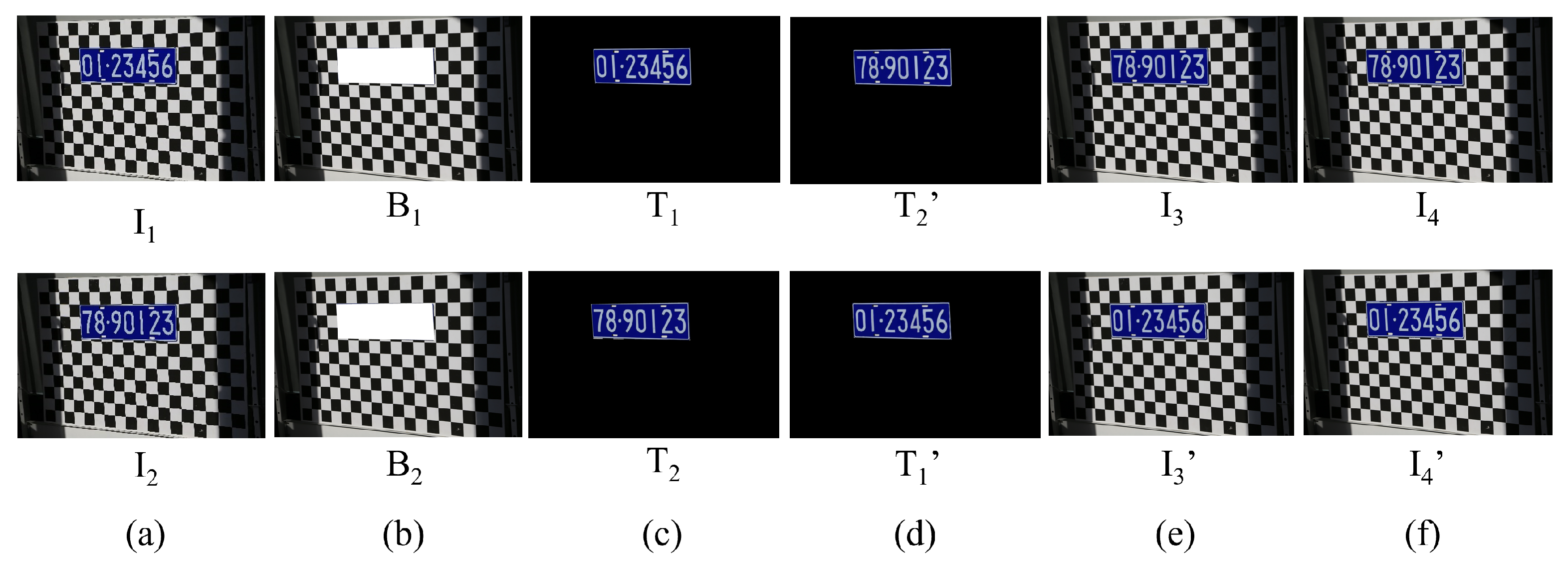

- Physical Validation and Evaluation Method: Two customized automobile license plate images are captured as the first spatio-temporal image and the second spatio-temporal image . Here, consists of the background and the target region , while consists of the background and target region . To evaluate the radiometric enhancement method, two matching rules are employed to calculate sub-scores, respectively, which are then weighted and fused into a composite score. This comprehensive scoring mechanism provides an objective assessment of the method’s performance. By leveraging collected data and multi-rule integration, this method effectively addresses the limitation of lacking ground truth in conventional evaluation metrics.
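The two standard metrics in the list above, PSNR and SSIM, can be computed as in the following sketch. It is an illustration under assumptions rather than the evaluation code used in the paper: images are plain 2D lists of gray values, the function names are hypothetical, and SSIM is evaluated once over the whole image instead of being averaged over local windows as in common implementations. The constants `k1 = 0.01` and `k2 = 0.03` are the usual defaults from the SSIM literature.

```python
import math


def _flatten(img):
    """Turn a 2D list of pixel values into a flat list of floats."""
    return [float(v) for row in img for v in row]


def psnr(generated, reference, max_val=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    g, r = _flatten(generated), _flatten(reference)
    mse = sum((a - b) ** 2 for a, b in zip(g, r)) / len(g)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(max_val ** 2 / mse)


def ssim_global(x_img, y_img, max_val=255.0, k1=0.01, k2=0.03):
    """Single-window SSIM computed from global image statistics."""
    x, y = _flatten(x_img), _flatten(y_img)
    n = len(x)
    mu_x, mu_y = sum(x) / n, sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    # Stabilizing constants C1 and C2.
    c1, c2 = (k1 * max_val) ** 2, (k2 * max_val) ** 2
    num = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return num / den
```

Both functions take the generated image first and the real-world image second; for PSNR and this SSIM form the order does not change the result.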

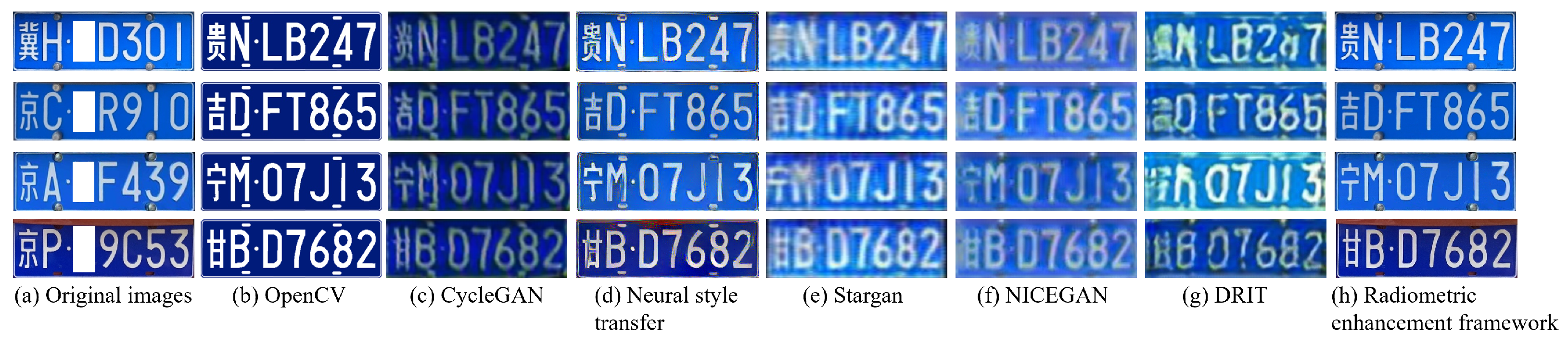

4.3. Comparative Results for Generated Automobile License Plate Images

4.3.1. Comparative Results of Geometric Rectification

4.3.2. Comparative Results of Radiometric Property Learning

4.4. Results of Physical Validation and Evaluation Method

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Wu, C.; Xu, S.; Song, G.; Zhang, S. How many labeled license plates are needed? In Proceedings of the Chinese Conference on Pattern Recognition and Computer Vision (PRCV), Guangzhou, China, 23–26 November 2018; pp. 334–346.

- [2] Liu, Q.; Chen, S.L.; Chen, Y.X.; Yin, X.C. Improving license plate recognition via diverse stylistic plate generation. Pattern Recognit. Lett. 2024, 183, 117–124.

- [3] Whang, S.E.; Roh, Y.; Song, H.; Lee, J.G. Data collection and quality challenges in deep learning: A data-centric ai perspective. VLDB J. 2023, 32, 791–813.

- [4] Zha, D.; Bhat, Z.P.; Lai, K.H.; Yang, F.; Jiang, Z.; Zhong, S.; Hu, X. Data-centric artificial intelligence: A survey. ACM Comput. Surv. 2025, 57, 1–42.

- [5] Habeeb, D.; Alhassani, A.; Abdullah, L.N.; Der, C.S.; Alasadi, L.K.Q. Advancements and Challenges: A Comprehensive Review of GAN-based Models for the Mitigation of Small Dataset and Texture Sticking Issues in Fake License Plate Recognition. Eng. Technol. Appl. Sci. Res. 2024, 14, 18401–18408.

- [6] Izidio, D.M.; Ferreira, A.P.; Medeiros, H.R.; Barros, E.N.d.S. An embedded automatic license plate recognition system using deep learning. Des. Autom. Embed. Syst. 2020, 24, 23–43.

- [7] Björklund, T.; Fiandrotti, A.; Annarumma, M.; Francini, G.; Magli, E. Robust license plate recognition using neural networks trained on synthetic images. Pattern Recognit. 2019, 93, 134–146.

- [8] Silva, S.M.; Jung, C.R. License plate detection and recognition in unconstrained scenarios. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 580–596.

- [9] Wang, X.; Man, Z.; You, M.; Shen, C. Adversarial generation of training examples: Applications to moving vehicle license plate recognition. arXiv 2017, arXiv:1707.03124.

- [10] Wu, S.; Zhai, W.; Cao, Y. PixTextGAN: Structure aware text image synthesis for license plate recognition. IET Image Process. 2019, 13, 2744–2752.

- [11] Zhang, L.; Wang, P.; Li, H.; Li, Z.; Shen, C.; Zhang, Y. A robust attentional framework for license plate recognition in the wild. IEEE Trans. Intell. Transp. Syst. 2020, 22, 6967–6976.

- [12] Shvai, N.; Hasnat, A.; Nakib, A. Multiple auxiliary classifiers GAN for controllable image generation: Application to license plate recognition. IET Intell. Transp. Syst. 2023, 17, 243–254.

- [13] Shpir, M.; Shvai, N.; Nakib, A. License Plate Images Generation with Diffusion Models. In Proceedings of the European Conference on Artificial Intelligence ECAI, Santiago de Compostela, Spain, 19–24 October 2024.

- [14] Yang, S.; Liu, J.; Wang, W.; Guo, Z. TET-GAN: Text effects transfer via stylization and destylization. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 1238–1245.

- [15] Peng, Y.; Xu, M.; Jin, J.S.; Luo, S.; Zhao, G. Cascade-based license plate localization with line segment features and haar-like features. In Proceedings of the 2011 Sixth International Conference on Image and Graphics, Hefei, China, 12–15 August 2011; pp. 1023–1028.

- [16] Silvano, G.; Ribeiro, V.; Greati, V.; Bezerra, A.; Silva, I.; Endo, P.T.; Lynn, T. Synthetic image generation for training deep learning-based automated license plate recognition systems on the Brazilian Mercosur standard. Des. Autom. Embed. Syst. 2021, 25, 113–133.

- [17] Kim, T.G.; Yun, B.J.; Kim, T.H.; Lee, J.Y.; Park, K.H.; Jeong, Y.; Kim, H.D. Recognition of vehicle license plates based on image processing. Appl. Sci. 2021, 11, 6292.

- [18] Asif, M.R.; Chun, Q.; Hussain, S.; Fareed, M.S.; Khan, S. Multinational vehicle license plate detection in complex backgrounds. J. Vis. Commun. Image Represent. 2017, 46, 176–186.

- [19] Yuan, Y.; Zou, W.; Zhao, Y.; Wang, X.; Hu, X.; Komodakis, N. A Robust and Efficient Approach to License Plate Detection. IEEE Trans. Image Process. 2017, 26, 1102–1114.

- [20] Zhao, Y.; Gu, J.; Liu, C.; Han, S.; Gao, Y.; Hu, Q. License Plate Location Based on Haar-Like Cascade Classifiers and Edges. In Proceedings of the 2010 Second WRI Global Congress on Intelligent Systems, Wuhan, China, 16–17 December 2010; Volume 3, pp. 102–105.

- [21] Dlagnekov, L. License Plate Detection Using Adaboost; Computer Science and Engineering Department, UC San Diego: San Diego, CA, USA, 2004.

- [22] Selmi, Z.; Ben Halima, M.; Alimi, A.M. Deep Learning System for Automatic License Plate Detection and Recognition. In Proceedings of the 2017 14th IAPR International Conference on Document Analysis and Recognition (ICDAR), Kyoto, Japan, 9–15 November 2017; Volume 1, pp. 1132–1138.

- [23] Laroca, R.; Severo, E.; Zanlorensi, L.A.; Oliveira, L.S.; Goncalves, G.R.; Schwartz, W.R.; Menotti, D. A Robust Real-Time Automatic License Plate Recognition Based on the YOLO Detector. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–10.

- [24] Zou, Y.; Zhang, Y.; Yan, J.; Jiang, X.; Huang, T.; Fan, H.; Cui, Z. License plate detection and recognition based on YOLOv3 and ILPRNET. Signal Image Video Process. 2022, 16, 473–480.

- [25] Wang, Y.; Bian, Z.P.; Zhou, Y.; Chau, L.P. Rethinking and designing a high-performing automatic license plate recognition approach. IEEE Trans. Intell. Transp. Syst. 2021, 23, 8868–8880.

- [26] Bala, R.; Zhao, Y.; Burry, A.; Kozitsky, V.; Fillion, C.; Saunders, C.; Rodríguez-Serrano, J. Image simulation for automatic license plate recognition. In Proceedings of the Visual Information Processing and Communication III, Burlingame, CA, USA, 24–26 January 2012; Volume 8305, pp. 294–302.

- [27] Bulan, O.; Kozitsky, V.; Ramesh, P.; Shreve, M. Segmentation-and annotation-free license plate recognition with deep localization and failure identification. IEEE Trans. Intell. Transp. Syst. 2017, 18, 2351–2363.

- [28] Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27, 1–9.

- [29] Han, B.G.; Lee, J.T.; Lim, K.T.; Choi, D.H. License plate image generation using generative adversarial networks for end-to-end license plate character recognition from a small set of real images. Appl. Sci. 2020, 10, 2780.

- [30] Maltsev, A.; Lebedev, R.; Khanzhina, N. On realistic generation of new format license plate on vehicle images. Procedia Comput. Sci. 2021, 193, 190–199.

- [31] Li, C.; Yang, X.; Wang, G.; Zheng, A.; Tan, C.; Tang, J. Disentangled generation network for enlarged license plate recognition and a unified dataset. Comput. Vis. Image Underst. 2024, 238, 103880.

- [32] Ministry of Public Security of the People’s Republic of China. License Plates of Motor Vehicles of the People’s Republic of China; Ministry of Public Security of the People’s Republic of China: Beijing, China, 2018.

- [33] Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- [34] Jocher, G.; Stoken, A.; Borovec, J.; Changyu, L.; Hogan, A.; Diaconu, L.; Poznanski, J.; Yu, L.; Rai, P.; Ferriday, R.; et al. YOLOv5; Zenodo: Geneva, Switzerland, 2020.

- [35] Shi, J. Good features to track. In Proceedings of the 1994 IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 21–23 June 1994; pp. 593–600.

- [36] Pérez, P.; Gangnet, M.; Blake, A. Poisson image editing. ACM Trans. Graph. (TOG) 2003, 22, 313–318.

- [37] Pérez, P.; Gangnet, M.; Blake, A. Poisson image editing. In Seminal Graphics Papers: Pushing the Boundaries; Association for Computing Machinery: New York, NY, USA, 2023; Volume 2, pp. 577–582.

- [38] Xu, Z.; Yang, W.; Meng, A.; Lu, N.; Huang, H.; Ying, C.; Huang, L. Towards end-to-end license plate detection and recognition: A large dataset and baseline. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 255–271.

- [39] Wang, Z. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- [40] Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III; pp. 234–241.

- [41] Zheng, Y. License Plate Generator. 2019. Available online: https://github.com/zheng-yuwei/license-plate-generator (accessed on 18 April 2019).

- [42] Choi, Y.; Choi, M.; Kim, M.; Ha, J.W.; Kim, S.; Choo, J. Stargan: Unified generative adversarial networks for multi-domain image-to-image translation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8789–8797.

- [43] Chen, R.; Huang, W.; Huang, B.; Sun, F.; Fang, B. Reusing discriminators for encoding: Towards unsupervised image-to-image translation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 8168–8177.

- [44] Lee, H.Y.; Tseng, H.Y.; Huang, J.B.; Singh, M.; Yang, M.H. Diverse image-to-image translation via disentangled representations. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 35–51.

| Comparative Methods | Average RMSE ↓ |
|---|---|
| U-Net [40] vs. Manual | 14.81 |
| YOLOv5 [34] vs. Manual | 35.83 |
| Radiometric enhancement framework vs. Manual | 6.78 |

| Comparative Methods | PSNR (dB) ↑ | SSIM ↑ |
|---|---|---|
| OpenCV [41] | 9.19 | 0.32 |
| CycleGAN [11] | 10.61 | 0.27 |
| Neural style transfer [30] | 12.89 | 0.36 |
| StarGAN [42] | 11.45 | 0.21 |
| NICEGAN [43] | 12.46 | 0.27 |
| DRIT [44] | 11.52 | 0.22 |
| Radiometric enhancement framework | 13.83 | 0.57 |

| Pair of Images | OpenCV | CycleGAN | Neural Style Transfer | StarGAN | NICEGAN | DRIT | Radiometric Enhancement Framework |
|---|---|---|---|---|---|---|---|
| | 0.96 | 0.96 | 0.97 | 0.95 | 0.95 | 0.95 | 0.98 |
| | 0.72 | 0.67 | 0.77 | 0.44 | 0.50 | 0.58 | 0.77 |
| | 0.96 | 0.97 | 0.97 | 0.98 | 0.98 | 0.96 | 0.98 |
| | 0.71 | 0.68 | 0.74 | 0.44 | 0.45 | 0.60 | 0.76 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dong, Y.; Peng, Z.; Liu, W.; Gan, H. An Integrated Rule-Based and Deep Learning Method for Automobile License Plate Image Generation with Enhanced Geometric and Radiometric Details. Appl. Sci. 2025, 15, 11990. https://doi.org/10.3390/app152211990
