Enhancing Object Detection with Shape-IoU and Scale–Space–Task Collaborative Lightweight Path Aggregation

Abstract

1. Introduction

- (1)

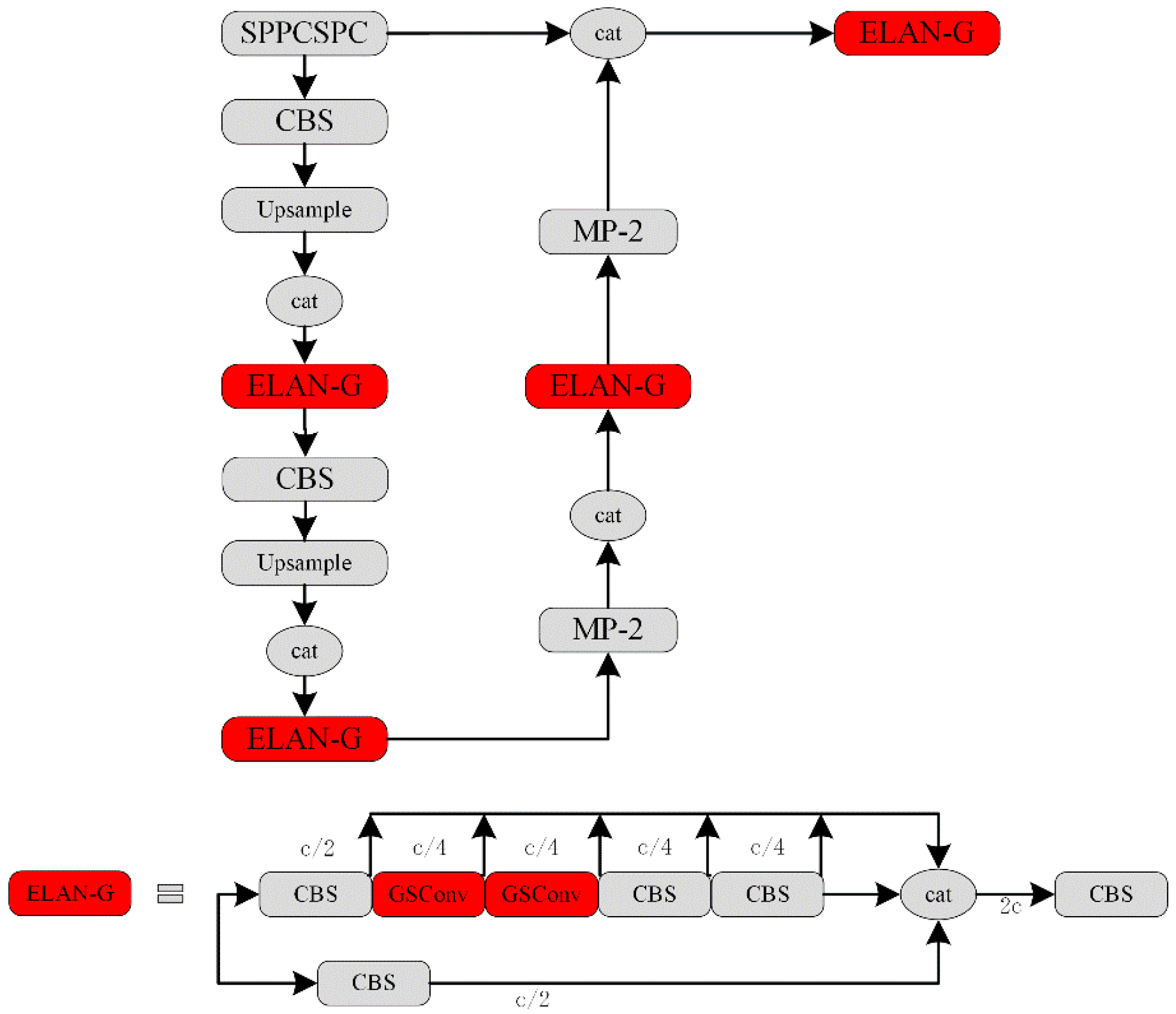

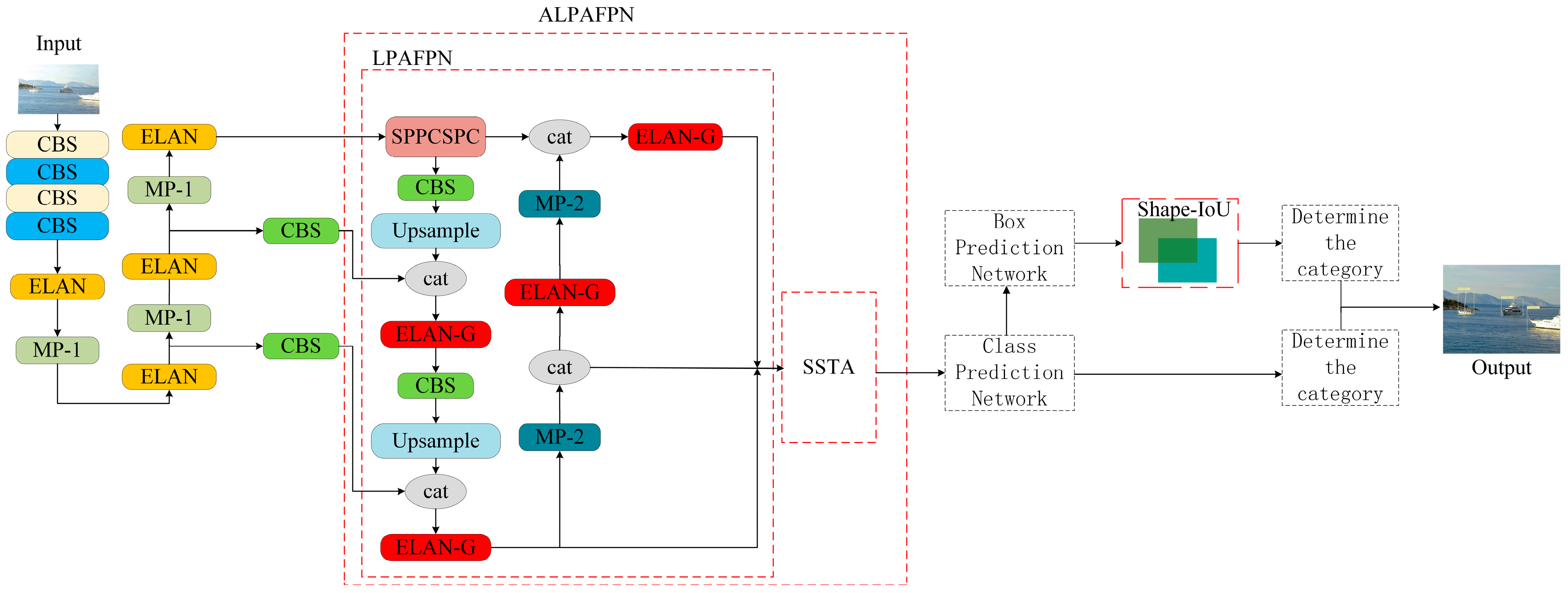

- We construct Lightweight Path Aggregation Feature Pyramid Network (LPAFPN), which integrates and shuffles information from standard convolutions and depth-wise separable convolutions to reduce Parameter Count.

- (2)

- We design Lightweight Path Aggregation Feature Pyramid Network with scale–space–task collaborative enhancement (ALPAFPN), which enhances the perceptual capabilities of the model by synergistically processing features from distinct feature layers, spatial locations, and task-specific channels.

- (3)

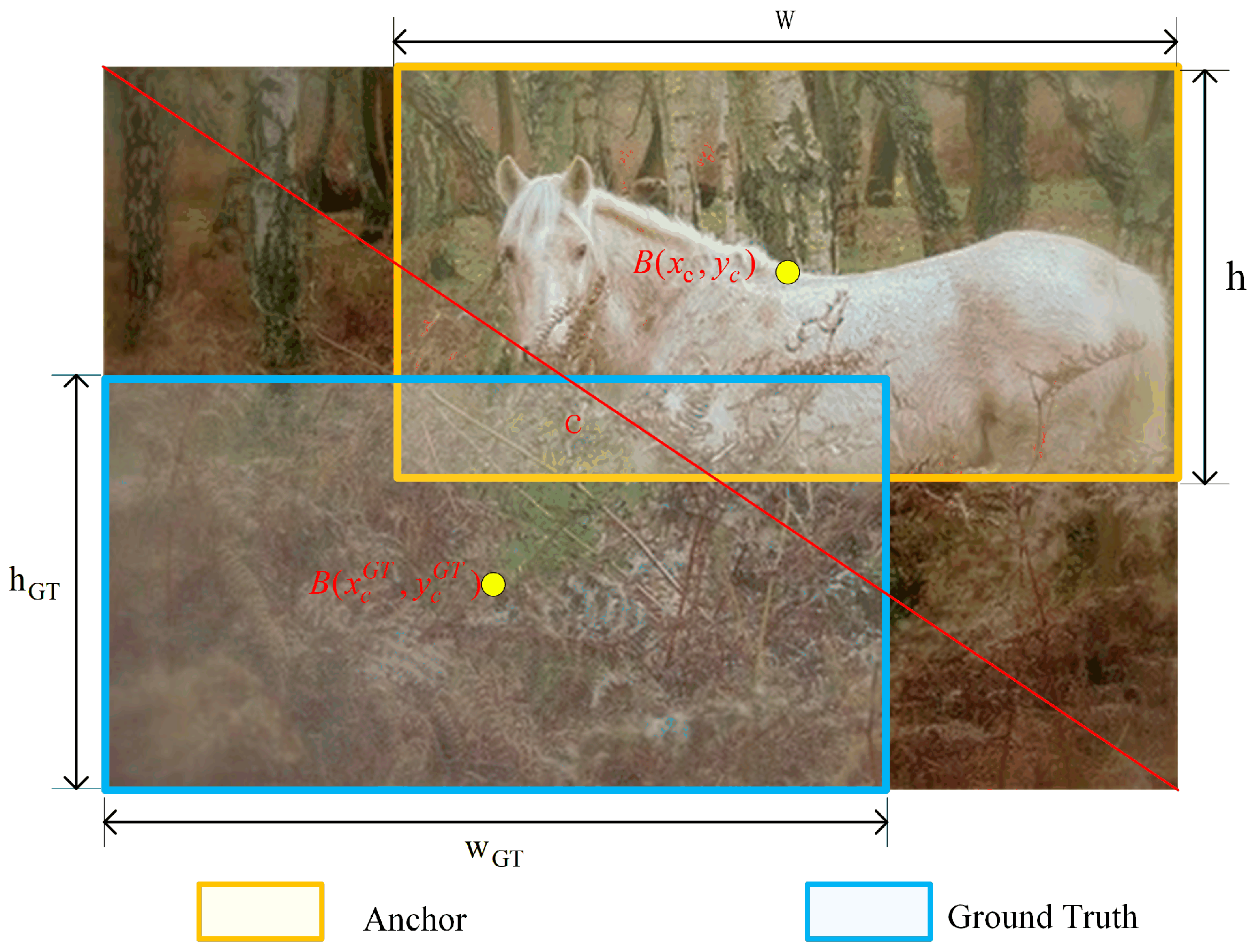

- We introduce a shape-scale bounding box regression loss method that incorporates target shape attributes to optimize the regression loss function, thereby improving the detection accuracy of the model.

- (4)

- We conduct extensive experiments on the Pascal VOC dataset and VisDrone2019-DET dataset, and the experimental results reveal that the F1 score, Precision, and Mean Average Precision of LSCA are superior to those of the state-of-the-art methods.
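The parameter saving claimed for LPAFPN in contribution (1) can be illustrated by simple weight counting. The sketch below assumes a GSConv-style block (GSConv is the variant named in the ablation tables): a standard k×k convolution produces half of the output channels, a depth-wise convolution (5×5 here, an assumption) produces the other half from them, and the two halves are interleaved by a ShuffleNet-style channel shuffle with two groups. All function names are hypothetical, biases are ignored, and this is not the authors' implementation.

```python
def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a standard k x k convolution (bias omitted)."""
    return c_in * c_out * k * k


def depthwise_params(channels: int, k: int) -> int:
    """Weights of a depth-wise k x k convolution: one k x k filter per channel."""
    return channels * k * k


def gsconv_style_params(c_in: int, c_out: int, k: int = 3, dw_k: int = 5) -> int:
    """Hypothetical GSConv-style block: a standard conv to c_out // 2 channels,
    then a depth-wise conv on those channels; the halves are concatenated.
    The channel shuffle that follows has no weights."""
    half = c_out // 2
    return conv_params(c_in, half, k) + depthwise_params(half, dw_k)


def channel_shuffle(channels: list, groups: int = 2) -> list:
    """ShuffleNet-style shuffle: view as (groups, n // groups), transpose, flatten."""
    per_group = len(channels) // groups
    return [channels[g * per_group + i] for i in range(per_group) for g in range(groups)]


print(conv_params(256, 256, 3))          # 589824
print(gsconv_style_params(256, 256))     # 294912 + 3200 = 298112
# "s*" = standard-conv channels, "d*" = depth-wise channels; they end up interleaved.
print(channel_shuffle(["s0", "s1", "s2", "d0", "d1", "d2"]))  # ['s0', 'd0', 's1', 'd1', 's2', 'd2']
```

For a single 256-to-256-channel 3×3 layer this roughly halves the weight count. The whole-model reduction reported in the tables (37.6 M to 35.9 M) is much smaller, since such a block only affects the layers it replaces.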

2. Related Work

2.1. CNN-Based Methods

2.2. Path Aggregation Feature Pyramid Network

2.3. Loss Function

3. Method

3.1. Lightweight Path Aggregation Feature Pyramid Network

3.2. Scale–Space–Task Co-Enhanced Lightweight Path Aggregation Feature Pyramid Network

3.2.1. Scale-Aware Attention Mechanism

3.2.2. Spatial-Aware Attention Mechanism

3.2.3. Task-Aware Attention Mechanism

3.3. Shape-IoU Loss

3.4. Overall Architecture of LSCA

4. Experimental Results and Analysis

4.1. Dataset and Evaluation Metrics

4.2. Experimental Platform and Training Details

4.3. Quantitative Analysis

4.3.1. Quantitative Analysis on Pascal VOC Dataset

4.3.2. Quantitative Analysis on VisDrone2019-DET Dataset

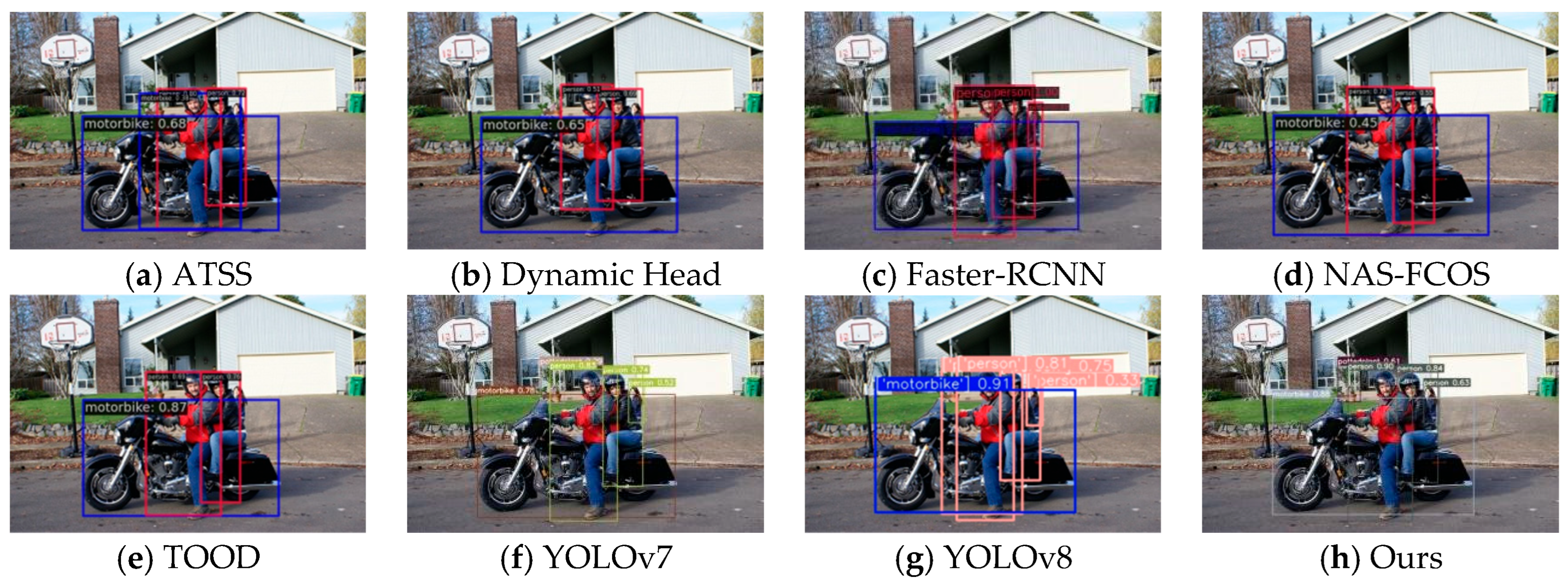

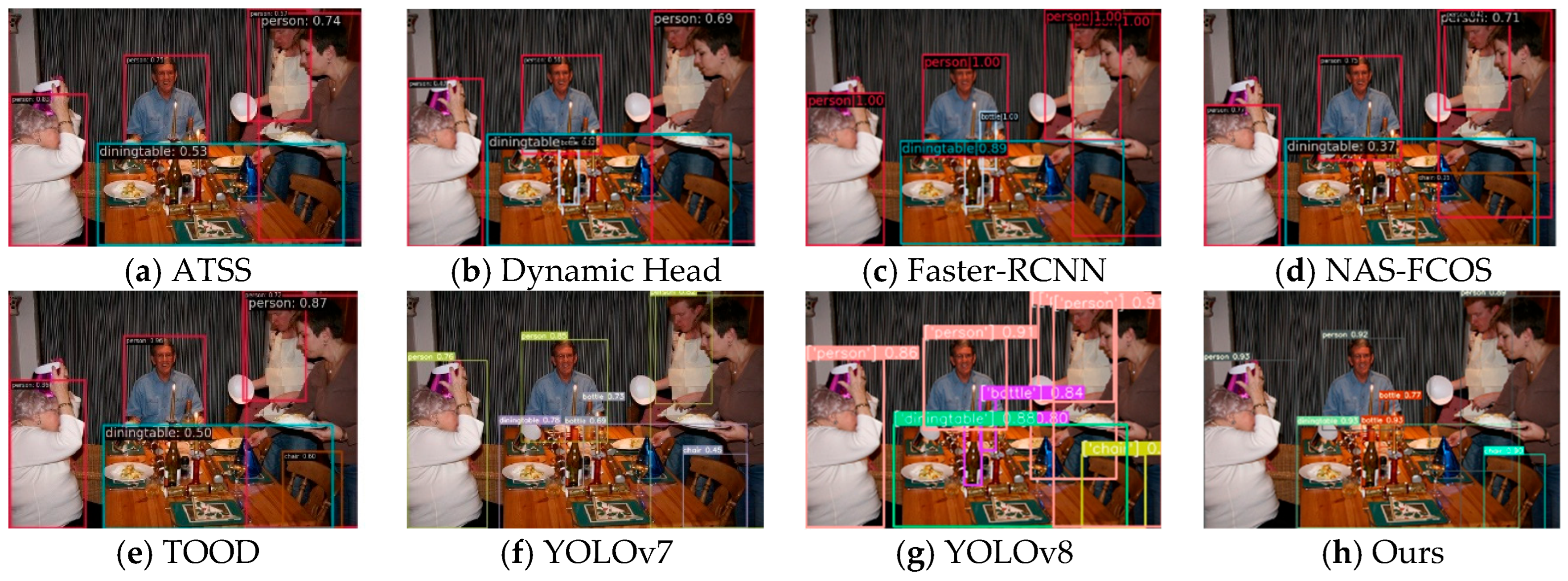

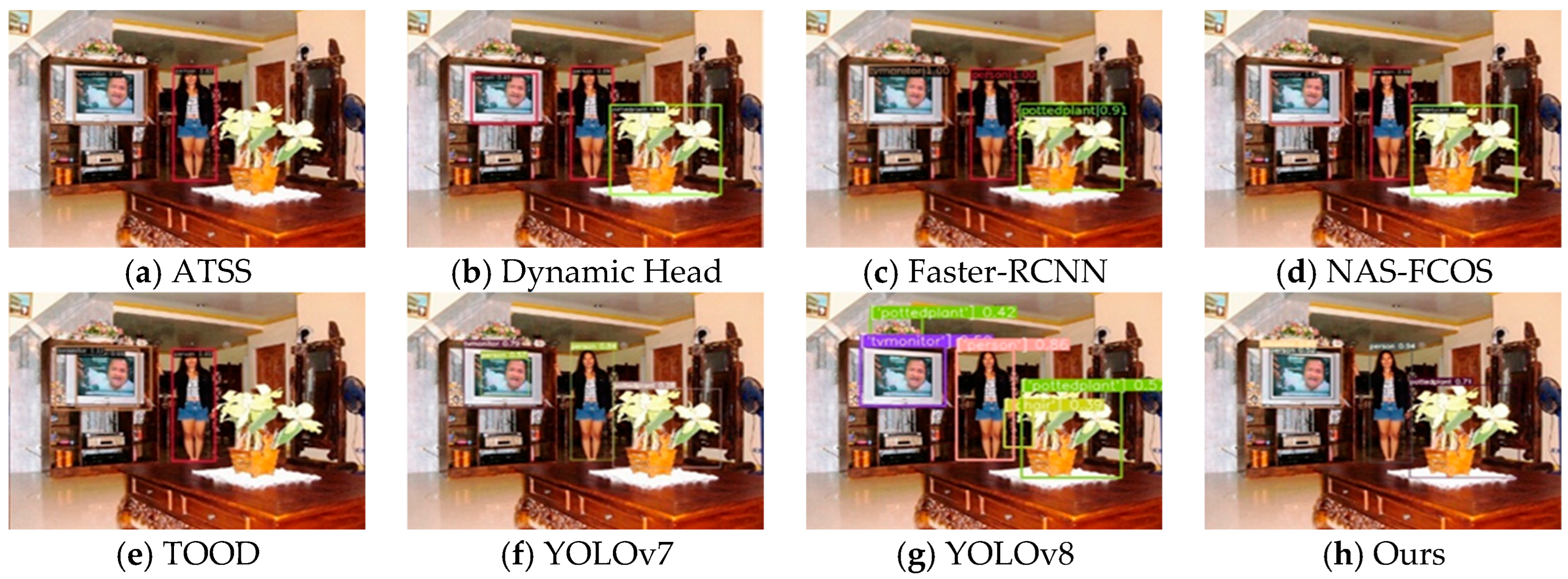

4.4. Qualitative Analysis

4.5. Ablation Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Per-class AP (%) on the 20 Pascal VOC classes:

| Method | mAP (%) | Aero | Bicycle | Bird | Boat | Bottle | Bus | Car | Cat | Chair | Cow | D-table | Dog | Horse | M-bike | Person | P-plant | Sheep | Sofa | Train | Tv |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ATSS | 74.08 | 70.78 | 83.55 | 74.89 | 62.37 | 51.64 | 82.36 | 79.56 | 89.10 | 59.20 | 80.06 | 70.59 | 85.37 | 83.71 | 79.15 | 76.58 | 48.21 | 75.21 | 72.08 | 80.89 | 76.21 |
| TOOD | 75.03 | 68.88 | 85.14 | 74.16 | 61.78 | 50.30 | 85.32 | 79.91 | 87.74 | 62.75 | 81.53 | 70.09 | 84.62 | 86.05 | 83.33 | 77.74 | 50.04 | 73.01 | 77.68 | 83.94 | 76.59 |
| Faster R-CNN | 73.20 | 73.31 | 83.90 | 73.30 | 60.59 | 53.20 | 83.21 | 83.40 | 86.71 | 42.61 | 78.80 | 68.91 | 84.72 | 82.01 | 76.61 | 69.92 | 31.81 | 70.12 | 74.80 | 80.41 | 70.42 |
| Dynamic Head | 72.41 | 79.13 | 71.78 | 80.40 | 51.32 | 46.40 | 79.77 | 66.31 | 90.08 | 56.01 | 78.54 | 71.20 | 85.95 | 76.48 | 77.73 | 75.88 | 51.61 | 77.42 | 73.78 | 83.52 | 74.04 |
| NAS-FCOS | 75.19 | 74.48 | 82.29 | 77.43 | 64.33 | 49.42 | 84.98 | 79.43 | 87.02 | 62.24 | 82.13 | 72.46 | 87.21 | 83.76 | 79.43 | 75.20 | 54.85 | 74.38 | 72.92 | 84.69 | 75.12 |
| YOLOv8 | 77.51 | 85.47 | 79.91 | 68.30 | 68.26 | 67.55 | 85.78 | 83.12 | 91.70 | 59.93 | 78.41 | 66.79 | 83.50 | 89.77 | 87.61 | 86.05 | 58.14 | 76.82 | 75.46 | 88.30 | 68.88 |
| YOLOv7 | 82.98 | 92.47 | 77.38 | 91.13 | 67.64 | 66.17 | 91.60 | 88.65 | 95.59 | 71.48 | 88.71 | 77.64 | 94.28 | 83.01 | 86.27 | 87.74 | 64.06 | 88.90 | 79.48 | 88.94 | 82.96 |
| LSCA | 84.12 | 93.31 | 77.44 | 92.18 | 70.44 | 66.04 | 93.28 | 88.11 | 96.36 | 70.43 | 90.07 | 82.01 | 94.80 | 83.72 | 88.27 | 87.93 | 65.20 | 90.70 | 79.39 | 91.68 | 81.04 |
| YOLOv9-c | 83.07 | 92.31 | 79.03 | 89.64 | 68.52 | 64.10 | 92.17 | 89.97 | 96.31 | 68.49 | 89.51 | 80.05 | 94.10 | 84.13 | 88.24 | 87.82 | 60.50 | 88.78 | 78.36 | 89.78 | 80.98 |
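Pascal VOC mAP is the unweighted mean of the per-class APs. As a quick consistency check, the sketch below copies the 20 per-class values of the LSCA row and averages them; it reproduces the reported 84.12%. It is a checking aid only, not an evaluation pipeline, and `mean_ap` is a hypothetical helper name.

```python
from statistics import fmean

# Per-class AP (%) of LSCA on the 20 Pascal VOC classes, copied from the table above.
LSCA_AP = {
    "Aero": 93.31, "Bicycle": 77.44, "Bird": 92.18, "Boat": 70.44, "Bottle": 66.04,
    "Bus": 93.28, "Car": 88.11, "Cat": 96.36, "Chair": 70.43, "Cow": 90.07,
    "D-table": 82.01, "Dog": 94.80, "Horse": 83.72, "M-bike": 88.27, "Person": 87.93,
    "P-plant": 65.20, "Sheep": 90.70, "Sofa": 79.39, "Train": 91.68, "Tv": 81.04,
}


def mean_ap(per_class_ap: dict) -> float:
    """VOC-style mAP: the unweighted mean of the per-class AP values."""
    return fmean(per_class_ap.values())


print(f"{mean_ap(LSCA_AP):.2f}")  # 84.12
```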

| Method | mAP (%) | Params (M) | F1 Score (%) | Precision (%) | Recall (%) | FPS |
|---|---|---|---|---|---|---|
| LSCA | 84.12 | 35.10 | 80.03 | 81.16 | 80.78 | 68.1 |
| ATSS | 74.08 | 31.93 | 72.01 | 60.83 | 88.16 | 49 |
| TOOD | 75.03 | 31.84 | 68.97 | 56.51 | 88.60 | 22.8 |
| Faster R-CNN | 73.20 | 41.16 | 63.04 | 49.21 | 87.50 | 7 |
| Dynamic Head | 72.41 | 38.79 | 76.89 | 67.01 | 90.49 | 46.5 |
| NAS-FCOS | 75.19 | 32.08 | 78.1 | 70.08 | 87.18 | 42.1 |
| YOLOv8 | 77.51 | 105.97 | 75.93 | 80.95 | 68.79 | 143.2 |
| YOLOv7 | 82.98 | 37.62 | 79.21 | 78.91 | 79.68 | 62.3 |
| YOLOv9-c | 83.07 | 51.04 | 77.87 | 79.84 | 77.60 | 99.7 |
| LiteYOLO-ID | 78.48 | 3.76 | 75.35 | 78.26 | 72.63 | 137.2 |
| YOLO-RACE | 77.31 | 3.2 | 73.02 | 76.44 | 69.87 | 153.6 |
| MLCA | 56.59 | 3.01 | 57.17 | 60.37 | 54.31 | 82.1 |
| EfficientDet-D0 | 30.18 | 3.84 | 21.36 | 62.33 | 13.43 | 128.4 |

| Method | mAP (%) | F1 Score (%) | Precision (%) | Recall (%) | Params (M) | FPS |
|---|---|---|---|---|---|---|
| Faster R-CNN | 32.9 | 39.17 | 44.9 | 34.2 | 41.39 | 28.5 |
| SSD | 24.1 | 26.03 | 20.8 | 35.9 | 28.37 | 93.7 |
| YOLOv5l | 38.7 | 39.98 | 44.2 | 36.1 | 44.82 | 31.5 |
| YOLOv7 | 42.0 | 48.07 | 57.2 | 41.9 | 36.5 | 38.3 |
| YOLOv8s | 40.9 | 45.1 | 52.6 | 39.6 | 11.1 | 124.1 |
| YOLOv9c | 39.7 | 46.12 | 52.7 | 40.7 | 8.04 | 53.4 |
| YOLOv10l | 43.8 | 46.97 | 55.8 | 41.6 | 25.7 | 50.6 |
| YOLOv11l | 44.3 | 48.08 | 55.6 | 42.3 | 25.2 | 63.4 |
| YOLO-RACE | 31.67 | 36.47 | 41.95 | 32.11 | 3.2 | 103.8 |
| YOLO-MMS | 27.16 | 45.11 | 51.08 | 40.14 | 3.28 | 100.5 |
| LSCA | 44.7 | 49.12 | 58.1 | 43.1 | 33.9 | 47.3 |

| Group | GSConv | SSTA | Shape-IoU | mAP (%) | Params (M) |
|---|---|---|---|---|---|
| 1 | | | | 82.98 | 37.6 |
| 2 | √ | | | 82.94 | 35.9 |
| 3 | | √ | | 84.06 | 36.9 |
| 4 | | | √ | 83.74 | 37.6 |
| 5 | √ | √ | | 83.44 | 35.1 |
| 6 | √ | | √ | 83.17 | 35.9 |
| 7 | | √ | √ | 84.21 | 36.6 |
| 8 | √ | √ | √ | 84.12 | 35.1 |

| Method | Params (M) | mAP (%) |
|---|---|---|
| Conv | 37.6 | 82.98 |
| PConv | 35.7 | 82.18 |
| DySnakeConv | 41.8 | 82.87 |
| GSConv | 35.9 | 82.94 |

| Dynamic Head blocks | Params (M) | mAP (%) |
|---|---|---|
| 0 | 37.61 | 82.98 |
| 1 | 34.54 | 82.90 |
| 2 | 36.88 | 84.06 |
| 3 | 38.33 | 83.91 |
| 4 | 40.29 | 83.71 |
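The table above varies how many scale–space–task attention blocks are stacked. Assuming, as the Dynamic Head naming suggests, that these blocks follow the Dynamic Head formulation, the sketch below shows two of the three attentions on a tiny nested-list tensor indexed as `[level][position][channel]`: scale-aware attention weights each pyramid level by a hard sigmoid of its mean activation, and task-aware attention is a per-channel dynamic ReLU. The spatial-aware attention (a deformable convolution in Dynamic Head) is omitted. The affine stand-in `a * x + b` and the directly supplied ReLU coefficients are hypothetical placeholders for learned layers; this is not the authors' code.

```python
from typing import List, Sequence

Tensor3 = List[List[List[float]]]  # feat[level][position][channel]


def hard_sigmoid(x: float) -> float:
    """Hard sigmoid as used in Dynamic Head: max(0, min(1, (x + 1) / 2))."""
    return max(0.0, min(1.0, (x + 1.0) / 2.0))


def scale_aware(feat: Tensor3, a: float = 1.0, b: float = 0.0) -> Tensor3:
    """Scale every pyramid level by one weight computed from that level's mean
    activation. f(x) = a * x + b is a hypothetical stand-in for a learned 1x1 conv."""
    out = []
    for level in feat:
        values = [v for pos in level for v in pos]
        weight = hard_sigmoid(a * sum(values) / len(values) + b)
        out.append([[weight * v for v in pos] for pos in level])
    return out


def task_aware(feat: Tensor3, alpha1: Sequence[float], beta1: Sequence[float],
               alpha2: Sequence[float], beta2: Sequence[float]) -> Tensor3:
    """Per-channel dynamic ReLU: max(alpha1[c] * x + beta1[c], alpha2[c] * x + beta2[c]).
    Dynamic Head predicts these coefficients with a small hyper-network; here they
    are passed in directly."""
    return [
        [
            [max(alpha1[c] * x + beta1[c], alpha2[c] * x + beta2[c]) for c, x in enumerate(pos)]
            for pos in level
        ]
        for level in feat
    ]


# Two levels x two positions x two channels.
feat = [[[1.0, -1.0], [0.5, 0.5]], [[-2.0, -2.0], [-2.0, -2.0]]]
scaled = scale_aware(feat)  # level 0 weight 0.625, level 1 weight 0.0
# alpha1 = 1, beta1 = 0, alpha2 = 0, beta2 = 0 reduces the task-aware step to a plain ReLU.
out = task_aware(scaled, [1.0, 1.0], [0.0, 0.0], [0.0, 0.0], [0.0, 0.0])
print(out)
```

In the sketch, a level with a strongly negative mean is switched off entirely by the hard sigmoid, which shows how scale-aware attention can emphasise some pyramid levels over others.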

| Method | mAP (%) |
|---|---|
| Faster R-CNN | 73.20 |
| Faster R-CNN + SSTA | 74.12 |
| ATSS | 74.08 |
| ATSS + SSTA | 75.01 |
| YOLOv7 | 82.98 |
| YOLOv7 + SSTA | 84.06 |
| YOLOv8 | 77.51 |
| YOLOv8 + SSTA | 78.43 |
| NAS-FCOS | 75.19 |
| NAS-FCOS + SSTA | 76.03 |
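The plug-in results above can be summarised as per-detector mAP gains. The short calculation below uses only the values in that table; the dictionary layout is an illustrative choice.

```python
# (baseline mAP, mAP with SSTA) in %, copied from the table above.
RESULTS = {
    "Faster R-CNN": (73.20, 74.12),
    "ATSS": (74.08, 75.01),
    "YOLOv7": (82.98, 84.06),
    "YOLOv8": (77.51, 78.43),
    "NAS-FCOS": (75.19, 76.03),
}

# Round to two decimals to remove floating-point noise from the subtraction.
gains = {name: round(after - before, 2) for name, (before, after) in RESULTS.items()}
mean_gain = sum(gains.values()) / len(gains)

for name, gain in gains.items():
    print(f"{name:>12}: +{gain:.2f} mAP")
print(f"mean gain: +{mean_gain:.2f}")  # mean gain: +0.94
```

Every detector gains between 0.84 and 1.08 points, with the largest gain on YOLOv7.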

| Group | Method | Scale | mAP (%) |
|---|---|---|---|
| 1 | Shape-IoU | 0 | 83.41 |
| 2 | Shape-IoU | 0.5 | 83.55 |
| 3 | Shape-IoU | 1.0 | 83.53 |
| 4 | Shape-IoU | 1.2 | 83.74 |
| 5 | Shape-IoU | 1.3 | 83.56 |
| 6 | Shape-IoU | 1.4 | 83.44 |
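The Scale column presumably corresponds to the scale exponent in the publicly described Shape-IoU loss. That loss derives two weights (ww, hh) from the ground-truth width and height, uses them to reweight the centre-distance and shape-mismatch penalties, and falls back to equal weights when scale = 0. The sketch below follows that published definition as we understand it, with θ = 4 for the shape cost. Box format, function names and the `eps` guard are our assumptions, and this is not the authors' training code.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1 and y2 > y1


def shape_weights(gt_w: float, gt_h: float, scale: float) -> Tuple[float, float]:
    """Shape weights (ww, hh) from the ground-truth box; scale = 0 gives (1, 1)."""
    w_s, h_s = gt_w ** scale, gt_h ** scale
    return 2 * w_s / (w_s + h_s), 2 * h_s / (w_s + h_s)


def shape_iou_loss(pred: Box, gt: Box, scale: float = 0.0,
                   theta: float = 4.0, eps: float = 1e-7) -> float:
    """Shape-IoU loss: 1 - IoU + shape-weighted centre distance + 0.5 * shape cost."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt
    pw, ph = px2 - px1, py2 - py1
    gw, gh = gx2 - gx1, gy2 - gy1

    # Standard IoU between the two boxes.
    inter = max(0.0, min(px2, gx2) - max(px1, gx1)) * max(0.0, min(py2, gy2) - max(py1, gy1))
    iou = inter / (pw * ph + gw * gh - inter + eps)

    ww, hh = shape_weights(gw, gh, scale)

    # Centre offsets weighted by the GT shape, normalised by the squared
    # diagonal of the smallest box enclosing both boxes.
    cw = max(px2, gx2) - min(px1, gx1)
    ch = max(py2, gy2) - min(py1, gy1)
    dx = (px1 + px2 - gx1 - gx2) / 2
    dy = (py1 + py2 - gy1 - gy2) / 2
    distance = (hh * dx ** 2 + ww * dy ** 2) / (cw ** 2 + ch ** 2 + eps)

    # Shape cost penalising width and height mismatch.
    omega_w = hh * abs(pw - gw) / max(pw, gw, eps)
    omega_h = ww * abs(ph - gh) / max(ph, gh, eps)
    shape_cost = (1 - math.exp(-omega_w)) ** theta + (1 - math.exp(-omega_h)) ** theta

    return 1 - iou + distance + 0.5 * shape_cost


gt = (0.0, 0.0, 40.0, 10.0)                        # a wide ground-truth box
print(shape_weights(40.0, 10.0, 1.2))               # ww > 1 > hh for a wide box
print(shape_iou_loss((2.0, 0.0, 42.0, 10.0), gt, scale=1.2))
```

With scale = 0 the weights are equal and the loss behaves like a DIoU-style loss plus a shape term. Larger scales tilt the weighting according to the ground-truth aspect ratio, which is the knob the ablation above sweeps.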

| Group | Method | mAP (%) |
|---|---|---|
| 1 | CIoU | 82.98 |
| 2 | DIoU | 83.22 |
| 3 | Wise-IoU | 83.34 |
| 4 | SIoU | 83.65 |
| 5 | Shape-IoU | 83.74 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, G.; Zhao, X.; Dang, D.; Wang, J.; Chen, Y. Enhancing Object Detection with Shape-IoU and Scale–Space–Task Collaborative Lightweight Path Aggregation. Appl. Sci. 2025, 15, 11976. https://doi.org/10.3390/app152211976