Data Mining to Identify University Student Dropout Factors

Abstract

1. Introduction

2. Materials and Methods

2.1. Methodology

2.2. Data Collection

2.3. Data Analysis

3. Results

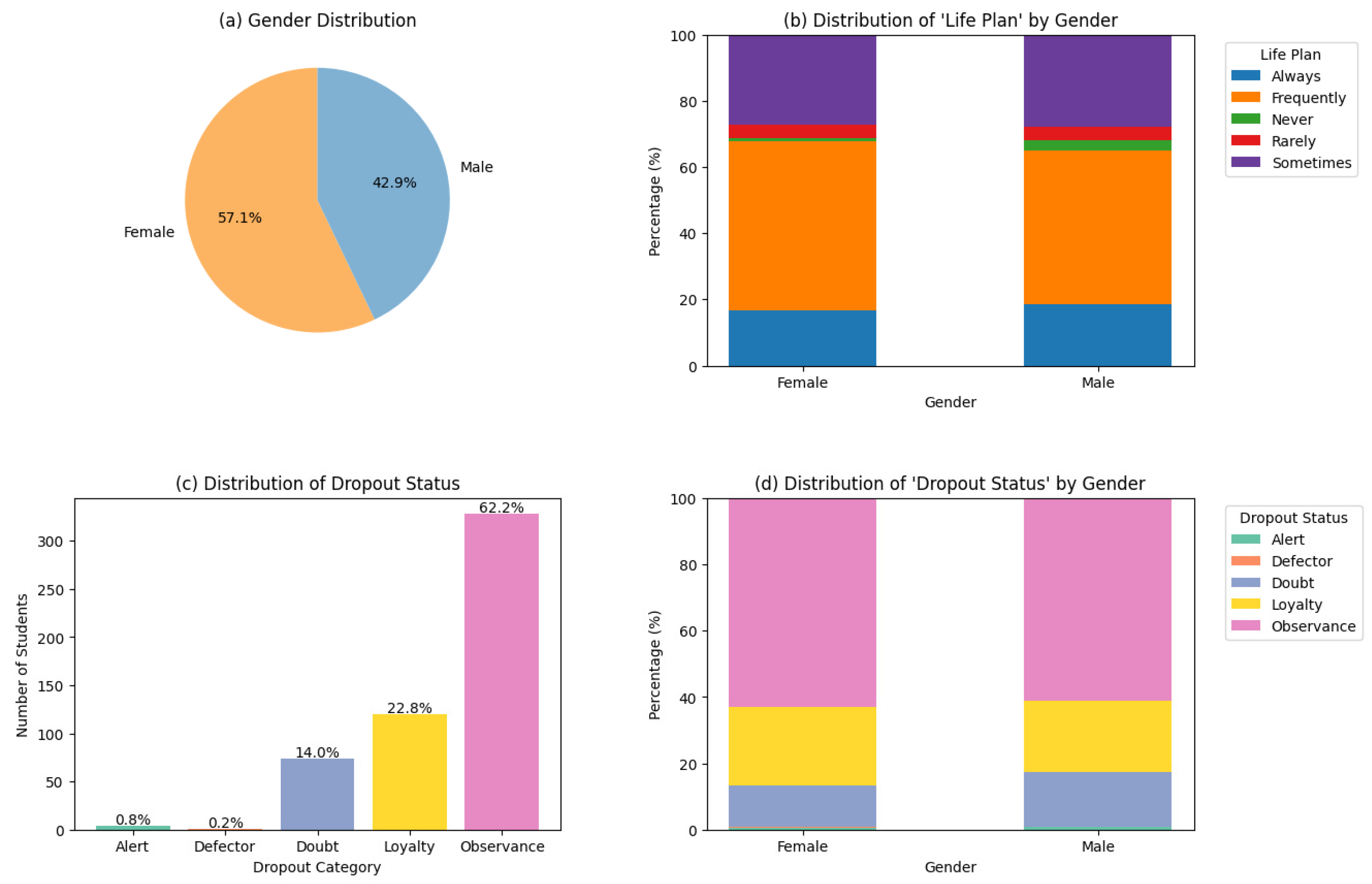

3.1. Descriptive Data

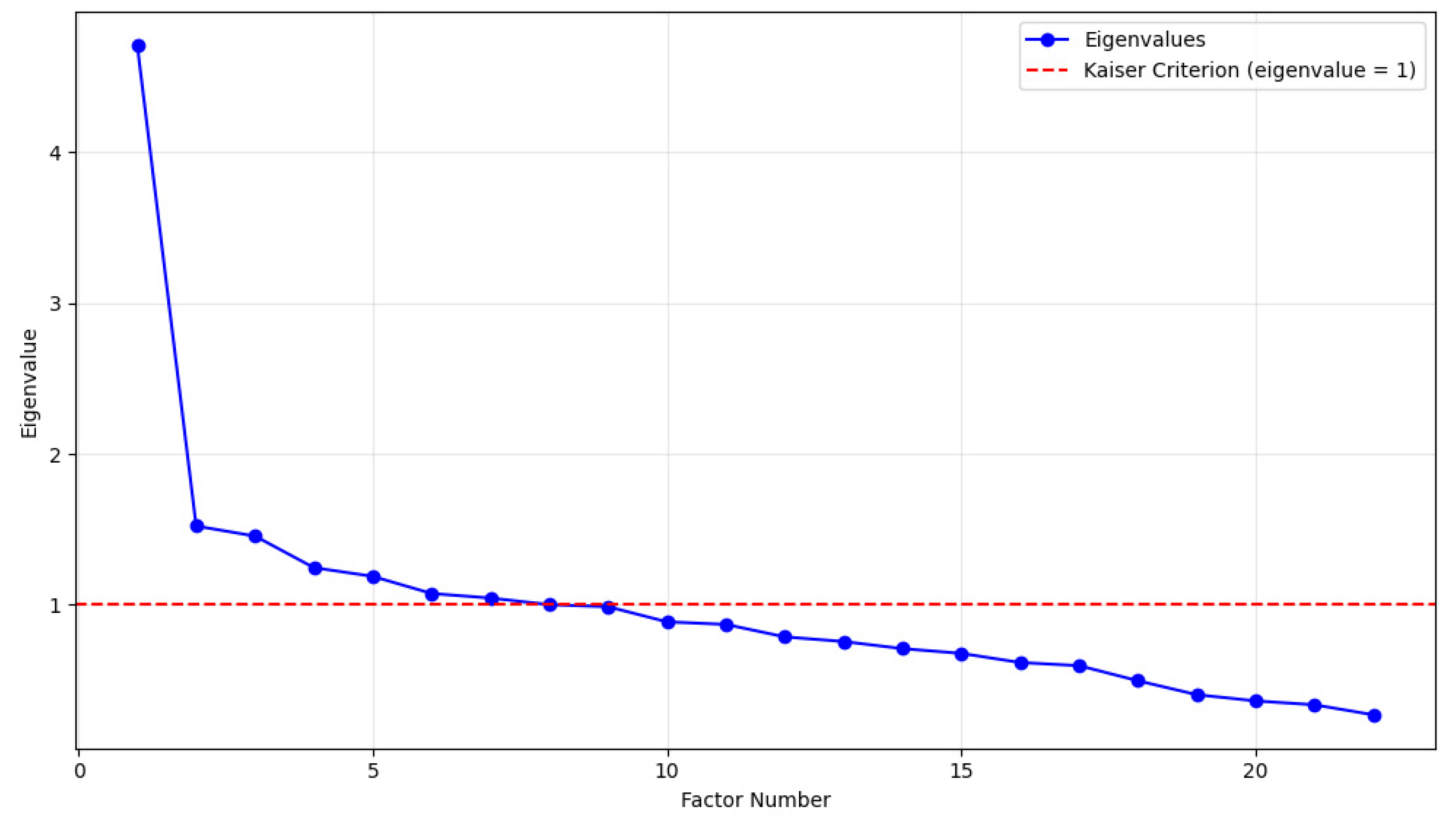

3.2. Attribute Identification

3.3. Correlation of Variables

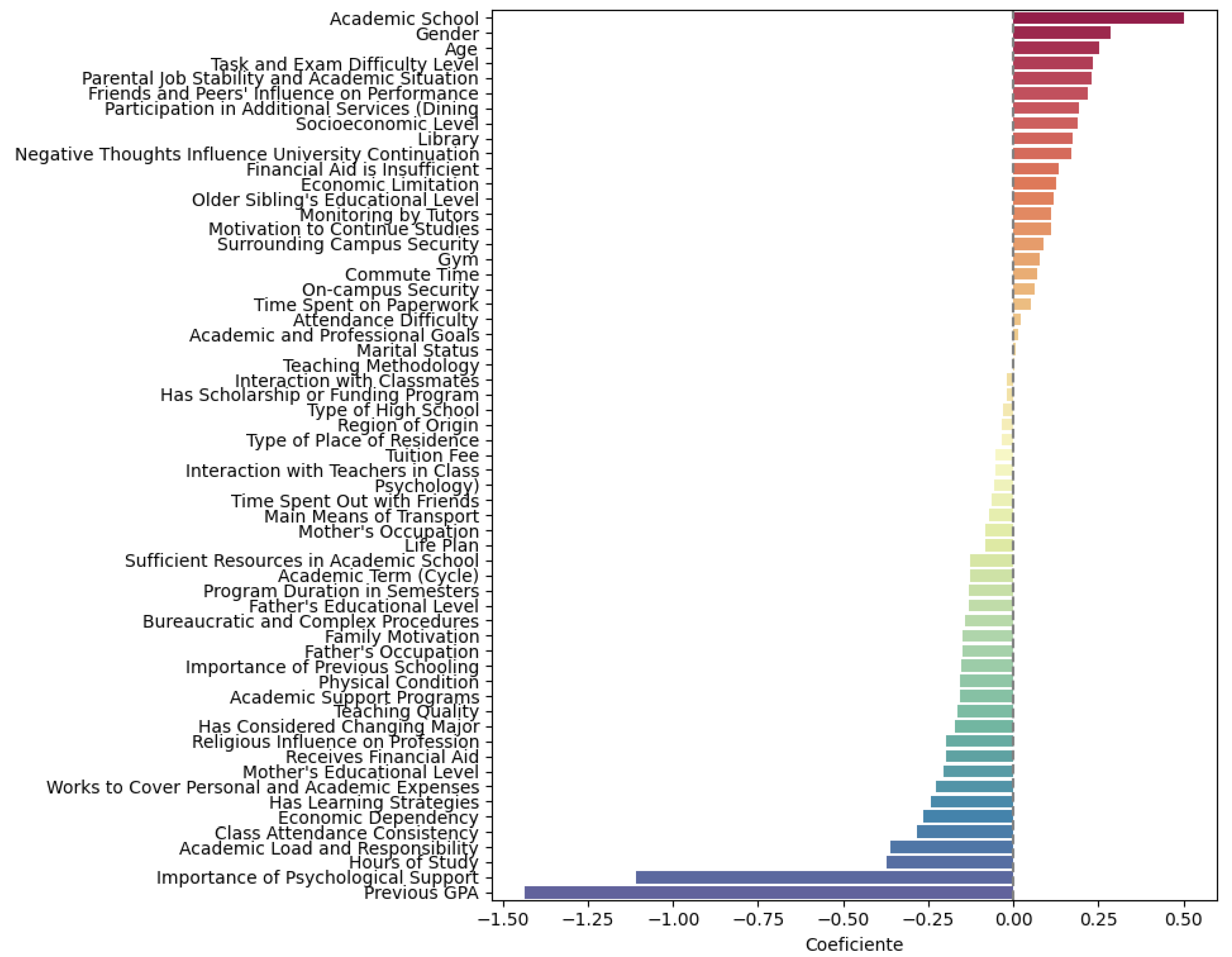

3.4. Importance Analysis

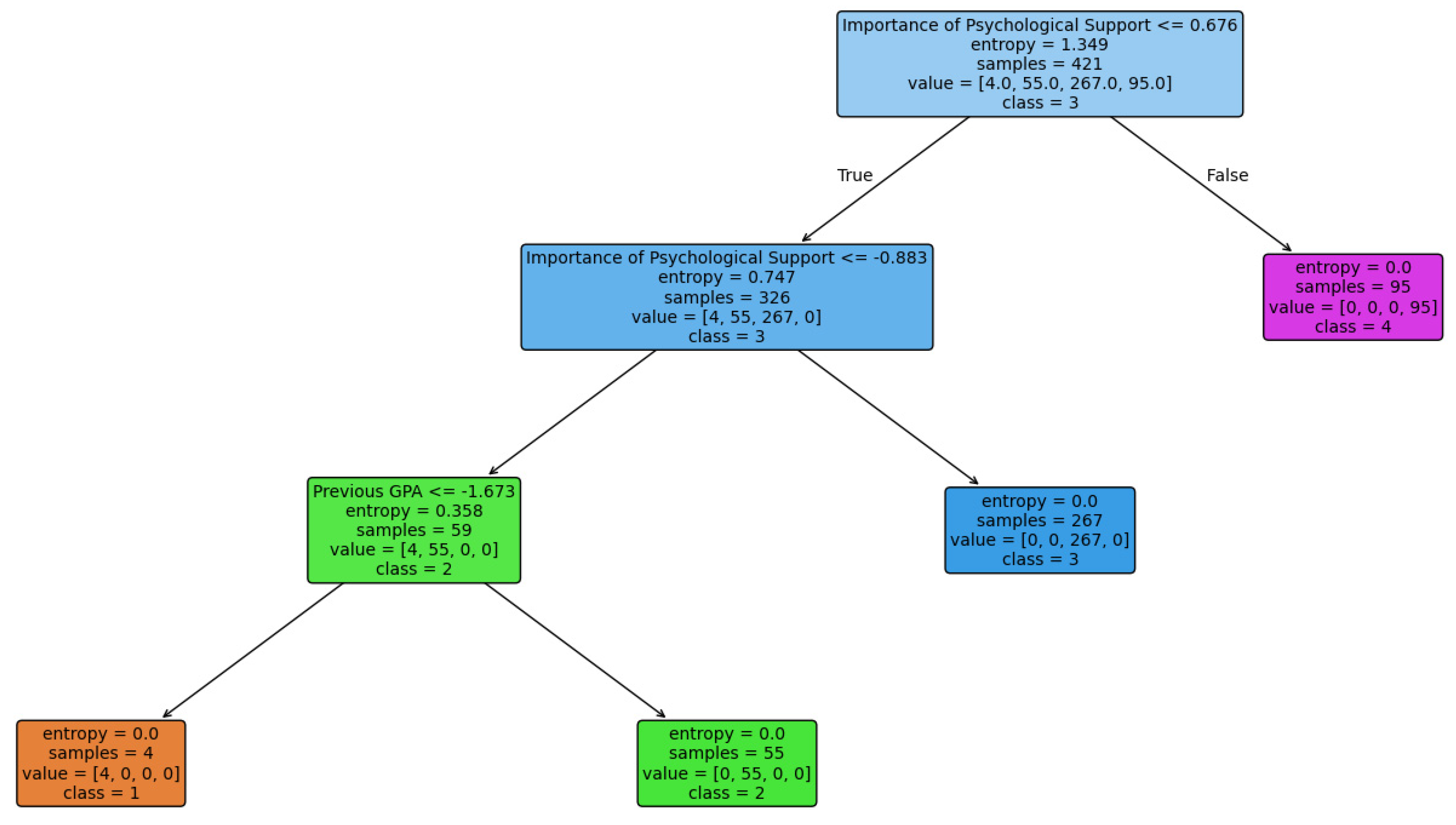

3.5. Decision Tree

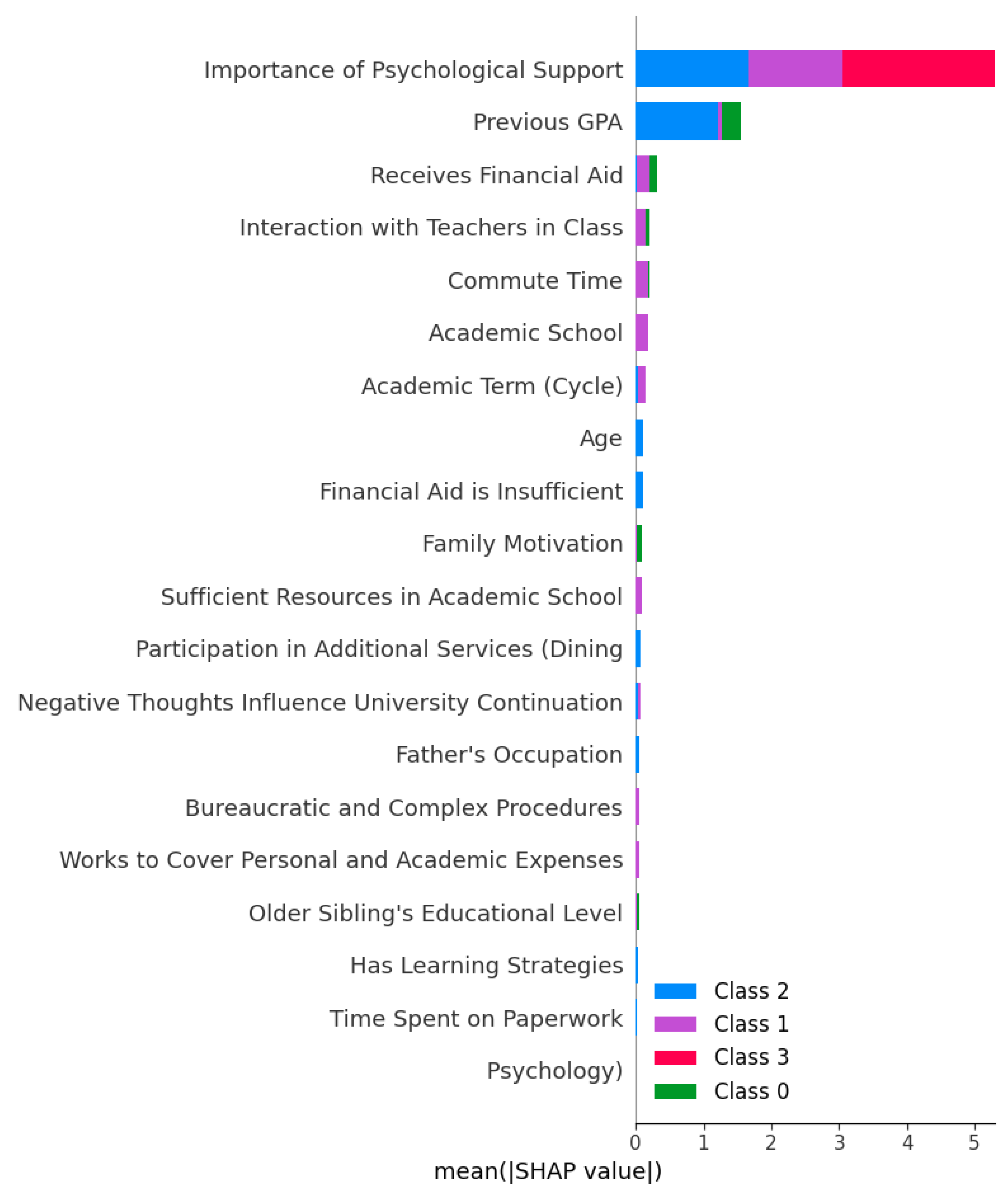

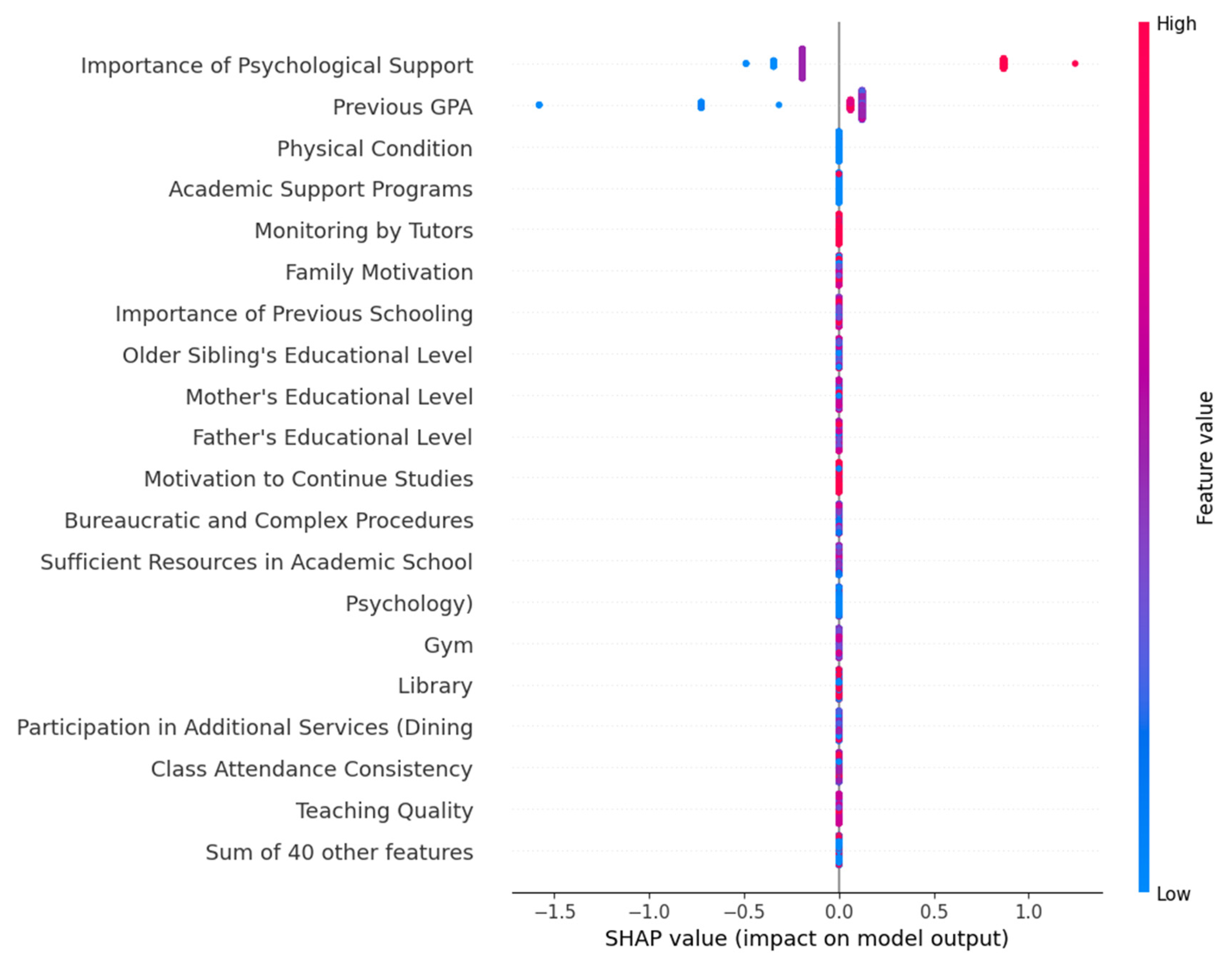

3.6. SHAP Analysis

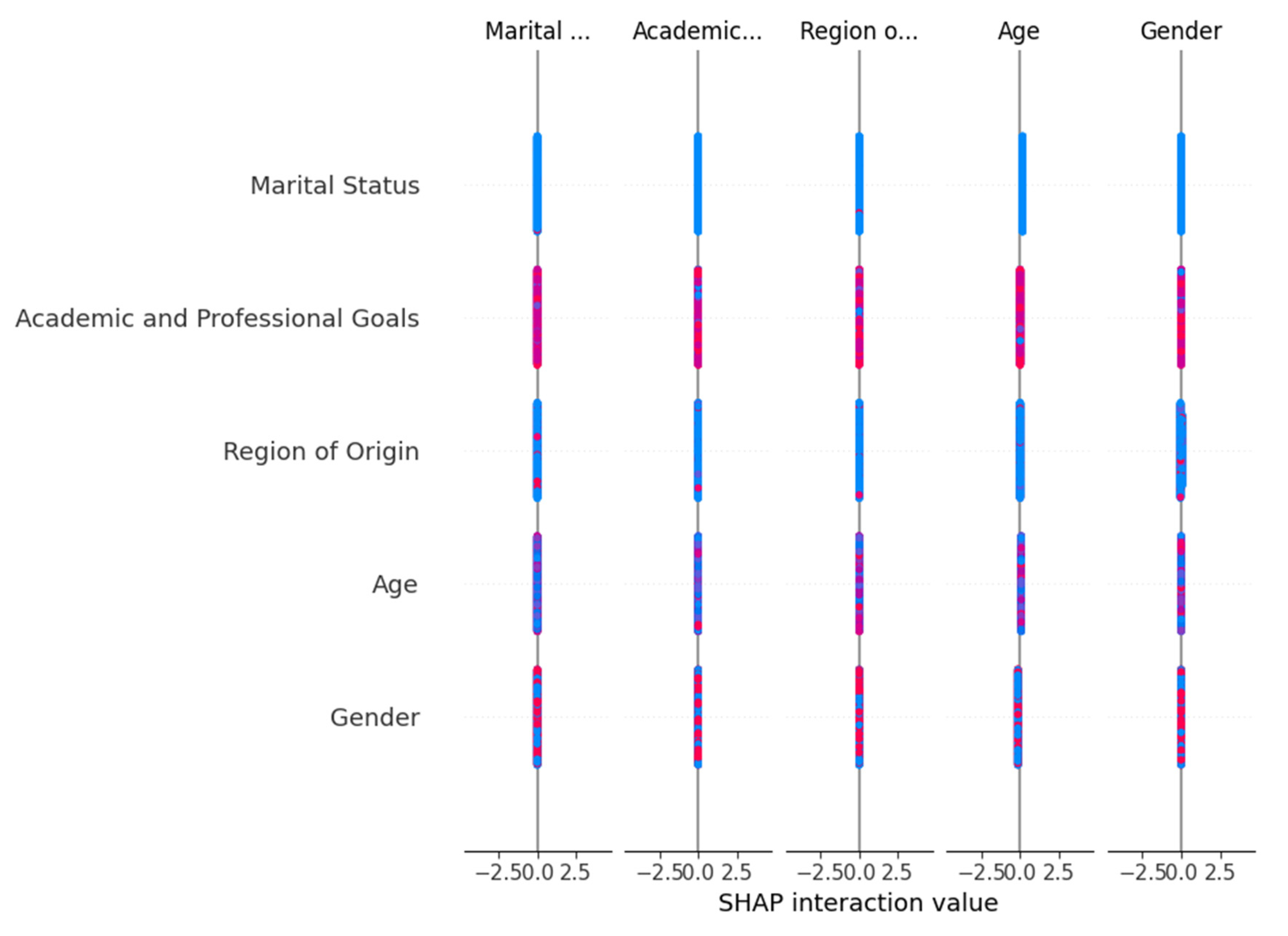

3.7. Sensitivity Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Escalante, J.; Medina, C.; Vásquez, A. La deserción universitaria: Un problema no resuelto en el Perú. Hacedor—AIAPAEC 2023, 7, 60–72. [Google Scholar] [CrossRef]

- OECD. Education at a Glance 2020; OECD: Paris, France, 2020. [Google Scholar] [CrossRef]

- Sotomayor, P.; Rodríguez, D. Factores explicativos de la deserción académica en la Educación Superior Técnico Profesional: El caso de un centro de formación técnica. Rev. Estud. Exp. Educ. 2020, 19, 199–223. [Google Scholar] [CrossRef]

- Ortiz, F. El Problema del Abandono Escolar: Un Análisis de los Factores de Deserción en el Tesis para Optar por el Grado de Magíster en Educación. Master’s Thesis, Universidad Nacional de La Plata, La Plata, Argentina, 2024. Available online: https://sedici.unlp.edu.ar/bitstream/handle/10915/178147/Documento_completo.pdf-PDFA.pdf?sequence=1&isAllowed=y (accessed on 17 May 2025).

- Villegas, B.; Núñez, L. Factores asociados a la deserción estudiantil en el ámbito universitario. Una revisión sistemática 2018–2023. RIDE Rev. Iberoam. Para Investig. Desarro. Educ. 2024, 14, e671. [Google Scholar] [CrossRef]

- UNESCO. La Educación Superior en América Latina y el Caribe: Avances y Retos; Documentos de Apoyo Para la CRES+5. Available online: https://unesdoc.unesco.org/ark:/48223/pf0000392578.locale=en (accessed on 17 May 2025).

- Herreño, M.; Romero, J.; Mejía, J.; Román, W. Deserción estudiantil en educación superior. Tendencias y oportunidades en la era post pandemia. Rev. Arbitr. Interdiscip. Koinonía 2024, 9, 156–177. [Google Scholar] [CrossRef]

- Londoño, L. Facteurs de risque présents dans la désertion d’étudiants dans la Corporation Universitaire Lasallista. Rev. Virtual Univ. Católica Norte 2013, 1, 183–194. Available online: https://bit.ly/1OnjEwM (accessed on 4 March 2025).

- Zamora-Vélez, G.A.; Bermúdez-Cevallos, L.D.R. Socioeconomic factors and university student dropout. Int. J. Soc. Sci. 2024, 7, 103–112. [Google Scholar] [CrossRef]

- Reyes, I. Deserción Universitaria: Causas y Cómo la Educación Virtual Puede Ayudar a Reducirla. Available online: https://cognosonline.com/desercion-universitaria/ (accessed on 17 May 2025).

- Gómez, L.; Moreno, G.; Zapata, S. La pandemia del COVID-19 y su impacto en la deserción estudiantil. Rev. Cienc. Humanidades 2022, 38, 352–374. [Google Scholar] [CrossRef]

- Urbina, A.; Camino, J.; Cruz, R. Deserción escolar universitaria: Patrones para prevenirla aplicando minería de datos educativa. RELIEVE—Rev. Electrónica Investig. Evaluación Educ. 2020, 26, 1–19. [Google Scholar] [CrossRef]

- Aulakh, K.; Kumar, R.; Kaushal, M. E-learning enhancement through educational data mining with COVID-19 outbreak period in backdrop: A review. Int. J. Educ. Dev. 2023, 101, 102814. [Google Scholar] [CrossRef] [PubMed]

- Barbeiro, L.; Gomes, A.; Correia, F.; Bernardino, J. A Review of Educational Data Mining Trends. Procedia Comput. Sci. 2024, 237, 88–95. [Google Scholar] [CrossRef]

- Cerezo, R.; Lara, J.; Azevedo, R.; Romero, C. Reviewing the differences between learning analytics and educational data mining: Towards educational data science. Comput. Hum. Behav. 2024, 154, 108155. [Google Scholar] [CrossRef]

- Maniyan, S.; Ghousi, R.; Haeri, A. Data mining-based decision support system for educational decision makers: Extracting rules to enhance academic efficiency. Comput. Educ. Artif. Intell. 2024, 6, 100242. [Google Scholar] [CrossRef]

- Rabelo, A.; Zárate, L. A model for predicting dropout of higher education students. Data Sci. Manag. 2025, 8, 72–85. [Google Scholar] [CrossRef]

- Feng, G.; Fan, M. Research on learning behavior patterns from the perspective of educational data mining: Evaluation, prediction and visualization. Expert Syst. Appl. 2024, 237, 121555. [Google Scholar] [CrossRef]

- Peña, A. Educational data mining: A survey and a data mining-based analysis of recent works. Expert Syst. Appl. 2014, 41, 1432–1462. [Google Scholar] [CrossRef]

- Chalaris, M.; Gritzalis, S.; Maragoudakis, M.; Sgouropoulou, C.; Tsolakidis, A. Improving Quality of Educational Processes Providing New Knowledge Using Data Mining Techniques. Procedia Soc. Behav. Sci. 2014, 147, 390–397. [Google Scholar] [CrossRef]

- Mustofa, S.; Emon, Y.; Mamun, S.; Akhy, S.; Ahad, M. A novel AI-driven model for student dropout risk analysis with explainable AI insights. Comput. Educ. Artif. Intell. 2025, 8, 100352. [Google Scholar] [CrossRef]

- Vaarma, M.; Li, H. Predicting student dropouts with machine learning: An empirical study in Finnish higher education. Technol. Soc. 2024, 76, 102474. [Google Scholar] [CrossRef]

- Martínez, J.; Castillo, D. Prediction of student dropout using Artificial Intelligence algorithms. Procedia Comput. Sci. 2024, 251, 764–770. [Google Scholar] [CrossRef]

- Chytas, K.; Tsolakidis, A.; Triperina, E.; Karanikolas, N.; Skourlas, C. Academic data derived from a university e-government analytic platform: An educational data mining approach. Data Brief 2023, 49, 109357. [Google Scholar] [CrossRef]

- Shaik, T.; Tao, X.; Dann, C.; Xie, H.; Li, Y.; Galligan, L. Sentiment analysis and opinion mining on educational data: A survey. Nat. Lang. Process. J. 2023, 2, 100003. [Google Scholar] [CrossRef]

- Lemay, D.J.; Baek, C.; Doleck, T. Comparison of learning analytics and educational data mining: A topic modeling approach. Comput. Educ. Artif. Intell. 2021, 2, 100016. [Google Scholar] [CrossRef]

- Romero, C.; Ventura, S. Educational Data Mining: A Review of the State of the Art. IEEE Trans. Syst. Man Cybern. Part C (Appl. Rev.) 2010, 40, 601–618. [Google Scholar] [CrossRef]

- Sarker, S.; Kumar, M.; Sheikh, T.; Al Mehedi, M. Analyzing students’ academic performance using educational data mining. Comput. Educ. Artif. Intell. 2024, 7, 100263. [Google Scholar] [CrossRef]

- Cardoso, R.; Brito, K.; Leitão, P. A data mining framework for reporting trends in the predictive contribution of factors related to educational achievement. Expert Syst. Appl. 2023, 221, 119729. [Google Scholar] [CrossRef]

- Alam, M.; Raza, M. Analyzing energy consumption patterns of an educational building through data mining. J. Build. Eng. 2021, 44, 103385. [Google Scholar] [CrossRef]

- Dol, S.; Jawandhiya, P. Classification Technique and its Combination with Clustering and Association Rule Mining in Educational Data Mining—A survey. Eng. Appl. Artif. Intell. 2023, 122, 106071. [Google Scholar] [CrossRef]

- Rodrigues, M.; Isotani, S.; Zárate, L. Educational Data Mining: A review of evaluation process in the e-learning. Telemat. Inform. 2018, 35, 1701–1717. [Google Scholar] [CrossRef]

- Aldowah, H.; Al-Samarraie, H.; Mohamad, W. Educational data mining and learning analytics for 21st century higher education: A review and synthesis. Telemat. Inform. 2019, 37, 13–49. [Google Scholar] [CrossRef]

- Mohamad, S.K.; Tasir, Z. Educational Data Mining: A Review. Procedia Soc. Behav. Sci. 2013, 97, 320–324. [Google Scholar] [CrossRef]

- Asif, R.; Merceron, A.; Ali, S.A.; Haider, N.G. Analyzing undergraduate students’ performance using educational data mining. Comput. Educ. 2017, 113, 177–194. [Google Scholar] [CrossRef]

- Aina, C.; Baici, E.; Casalone, G.; Pastore, F. The determinants of university dropout: A review of the socio-economic literature. Socioecon. Plan. Sci. 2022, 79, 101102. [Google Scholar] [CrossRef]

- Phan, M.; De Caigny, A.; Coussement, K. A decision support framework to incorporate textual data for early student dropout prediction in higher education. Decis. Support. Syst. 2023, 168, 113940. [Google Scholar] [CrossRef]

- Abarca, A.; Sánchez, A. La deserción estudiantil en la educación superior: El caso de la universidad de Costa Rica. Rev. Electrónica Actual. Investig. Educ. 2005, 5, 1–22. Available online: https://bit.ly/35TVeLE (accessed on 3 March 2025).

- Cabrera, L.; Bethencourt, J.; Alvarez, P.; González, M. El problema del abandono de los estudios universitarios. RELIEVE—Rev. Electrónica Investig. Evaluación Educ. 2014, 12, 171–203. [Google Scholar] [CrossRef]

- Ruíz, L. Deserción en la Educación Superior Recinto las Minas. Período 2001–2007. Cienc. Intercult. 2009, 4, 30–46. [Google Scholar] [CrossRef]

- Núñez, D. Modelo Predictivo basado en Aprendizaje Automático para la retención Estudiantil en Educación Superior. Eur. Public Soc. Innov. Rev. 2025, 10, 1–21. [Google Scholar] [CrossRef]

- Pierrakeas, C.; Koutsonikos, G.; Lipitakis, A.; Kotsiantis, S.; Xenos, M.; Gravvanis, G. The Variability of the Reasons for Student Dropout in Distance Learning and the Prediction of Dropout-Prone Students. In Machine Learning Paradigms; Springer: Cham, Switzerland, 2020; pp. 91–111. [Google Scholar] [CrossRef]

- Utami, S.; Winarni, I.; Handayani, S.; Zuhauri, F. When and Who Dropouts from Distance Education? Turk. Online J. Distance Educ. 2020, 21, 141–152. [Google Scholar] [CrossRef]

- Fozdar, B.; Kumar, L.; Kannan, S. A Survey of a Study on the Reasons Responsible for Student Dropout from the Bachelor of Science Programme at Indira Gandhi National Open University. Int. Rev. Res. Open Distance Learn. 2006, 7. [Google Scholar] [CrossRef]

- Marczuk, A. Is it all about individual effort? The effect of study conditions on student dropout intention. Eur. J. High. Educ. 2023, 13, 509–535. [Google Scholar] [CrossRef]

- Barragán, S.; González, L. Complexities of student dropout in higher education: A multidimensional analysis. Front. Educ. 2024, 9, 1461650. [Google Scholar] [CrossRef]

- Stinebrickner, T.R.; Stinebrickner, R. Learning about academic ability and the college drop-out decision. J. Labor Econ. 2012, 30, 707–748. [Google Scholar] [CrossRef]

- Bardach, L.; Lüftenegger, M.; Oczlon, S.; Spiel, C.; Schober, B. Context-related problems and university students’ dropout intentions—The buffering effect of personal best goals. Eur. J. Psychol. Educ. 2020, 35, 477–493. [Google Scholar] [CrossRef]

- Bargmann, C.; Thiele, L.; Kauffeld, S. Motivation matters: Predicting students’ career decidedness and intention to drop out after the first year in higher education. High. Educ. 2022, 83, 845–861. [Google Scholar] [CrossRef]

- Geisler, S. What role do students’ beliefs play in a successful transition from school to university mathematics? Int. J. Math. Educ. Sci. Technol. 2023, 54, 1458–1473. [Google Scholar] [CrossRef]

- Wild, S.; Schulze, L. Student dropout and retention: An event history analysis among students in cooperative higher education. Int. J. Educ. Res. 2020, 104, 101687. [Google Scholar] [CrossRef]

- Anttila, S.; Lindfors, H.; Hirvonen, R.; Määttä, S.; Kiuru, N. Dropout intentions in secondary education: Student temperament and achievement motivation as antecedents. J. Adolesc. 2023, 95, 248–263. [Google Scholar] [CrossRef]

- Parr, A.K.; Bonitz, V.S. Role of Family Background, Student Behaviors, and School-Related Beliefs in Predicting High School Dropout. J. Educ. Res. 2015, 108, 504–514. [Google Scholar] [CrossRef]

- Álvarez, N.; Castellanos, S.; Niño, R. Cuestionario variables de riesgo de deserción universitaria: Comprensión y pertinencia del instrumento. Rastros Rostros 2023, 26, 1–21. [Google Scholar] [CrossRef]

- Archambault, I.; Janosz, M.; Dupéré, V.; Brault, M.; Andrew, M.M. Individual, social, and family factors associated with high school dropout among low-SES youth: Differential effects as a function of immigrant status. Br. J. Educ. Psychol. 2017, 87, 456–477. [Google Scholar] [CrossRef]

- Piepenburg, J.; Beckmann, J. The relevance of social and academic integration for students’ dropout decisions. Evidence from a factorial survey in Germany. Eur. J. High. Educ. 2022, 12, 255–276. [Google Scholar] [CrossRef]

- Rumberger, R.; Ghatak, R.; Poulos, G.; Ritter, P.L.; Dornbusch, S.M. Family Influences on Dropout Behavior in One California High School. Sociol. Educ. 1990, 63, 283. [Google Scholar] [CrossRef]

- Sweet, R. Student dropout in distance education: An application of Tinto’s model. Distance Educ. 1986, 7, 201–213. [Google Scholar] [CrossRef]

- Stoessel, K.; Ihme, T.A.; Barbarino, M.; Fisseler, B.; Stürmer, S. Sociodemographic Diversity and Distance Education: Who Drops Out from Academic Programs and Why? Res. High. Educ. 2015, 56, 228–246. [Google Scholar] [CrossRef]

- Bardales, E.; Carrasco, A.; Marín, Y.; Caro, O.; Fernández, M.; Santos, R. Determinants of academic desertion: A case study in a Peruvian university. Power Educ. 2025. [Google Scholar] [CrossRef]

- Carrasco, A.; Bardales, E.; Marín, Y.; Caro, O.; Santos, R.; Rubio, Y.d.C.M. Comprehensive Wellness in University Life: An Analysis of Student Services and Their Impact on Quality of Life. J. Educ. Soc. Res. 2024, 14, 514. [Google Scholar] [CrossRef]

- Kocsis, Z.; Pusztai, G. Student Employment as a Possible Factor of Dropout. Acta Polytech. Hung. 2020, 17, 183–199. Available online: https://acta.uni-obuda.hu/Kocsis_Pusztai_101.pdf (accessed on 15 April 2025). [CrossRef]

- Lenon, M.; Majid, M. Student Dropouts and their Economic Impact in the Post-Pandemic Era: A Systematic Literature Review. Int. J. Acad. Res. Bus. Soc. Sci. 2024, 14, 2142–2161. [Google Scholar] [CrossRef] [PubMed]

- Villanueva, N.; Rios, S.; Meneses, B. Exploration of theoretical conceptualizations of the causes of college dropout. Semin. Med. Writ. Educ. 2022, 1, 15. [Google Scholar] [CrossRef]

- Katel, N.; Katel, K.P. Factors Influencing Students’ Dropout in Bachelor’s Level. Sotang Yrly. Peer Rev. J. 2024, 6, 69–84. [Google Scholar] [CrossRef]

- Chen, R. Institutional Characteristics and College Student Dropout Risks: A Multilevel Event History Analysis. Res. High. Educ. 2012, 53, 487–505. [Google Scholar] [CrossRef]

- De Silva, L.M.H.; Chounta, I.; Rodríguez, M.; Roa, E.; Gramberg, A.; Valk, A. Toward an Institutional Analytics Agenda for Addressing Student Dropout in Higher Education. J. Learn. Anal. 2022, 9, 179–201. [Google Scholar] [CrossRef]

- Gubbels, J.; van der Put, C.; Assink, M. Risk Factors for School Absenteeism and Dropout: A Meta-Analytic Review. J. Youth Adolesc. 2019, 48, 1637–1667. [Google Scholar] [CrossRef]

- Cuevas, M.; Díaz, F.; Díaz, M.; Vicente, M. Prediction analysis of academic dropout in students of the Pablo de Olavide University. Front. Educ. 2023, 7, 1083923. [Google Scholar] [CrossRef]

- Nurmalitasari, A.; Faizuddin, M. Factors Influencing Dropout Students in Higher Education. Educ. Res. Int. 2023, 2023, 7704142. [Google Scholar] [CrossRef]

- Lee, Y.; Choi, J.; Kim, T. Discriminating factors between completers of and dropouts from online learning courses. Br. J. Educ. Technol. 2013, 44, 328–337. [Google Scholar] [CrossRef]

- Alencar, A.; Fernandes, M.A.; Vedana, K.G.G.; Lira, J.A.C.; Barbosa, N.S.; Rocha, E.P.; Cunha, K.R.F. Mental health and university dropout among nursing students: A cross-sectional study. Nurse Educ. Today 2025, 147, 106571. [Google Scholar] [CrossRef]

- Košir, S.; Aslan, M.; Lakshminarayanan, R. Application of school attachment factors as a strategy against school dropout: A case study of public school students in Albania. Child. Youth Serv. Rev. 2023, 152, 107085. [Google Scholar] [CrossRef]

- Deleña, R.; Dia, N.J.; Sacayan, R.R.; Sieras, J.C.; Khalid, S.A.; Macatotong, A.H.T.; Gulam, S.B. Predicting student retention: A comparative study of machine learning approach utilizing sociodemographic and academic factors. Syst. Soft Comput. 2025, 7, 200352. [Google Scholar] [CrossRef]

- Alwarthan, S.; Aslam, N.; Khan, I.U. An Explainable Model for Identifying At-Risk Student at Higher Education. IEEE Access 2022, 10, 107649–107668. [Google Scholar] [CrossRef]

- Vijayalakshmi, V.; Venkatachalapathy, K. Comparison of Predicting Student’s Performance using Machine Learning Algorithms. Int. J. Intell. Syst. Appl. 2019, 11, 34–45. [Google Scholar] [CrossRef]

- Almalawi, A.; Soh, B.; Li, A.; Samra, H. Predictive Models for Educational Purposes: A Systematic Review. Big Data Cogn. Comput. 2024, 8, 187. [Google Scholar] [CrossRef]

- Costa, T.; Falcão, B.; Mohamed, M.; Annuk, A.; Marinho, M. Employing machine learning for advanced gap imputation in solar power generation databases. Sci. Rep. 2024, 14, 23801. [Google Scholar] [CrossRef]

- Tyagi, A. ¿Qué es el algoritmo XGBoost? Analytics Vidhya. Available online: https://www.analyticsvidhya.com/blog/2018/09/an-end-to-end-guide-to-understand-the-math-behind-xgboost/ (accessed on 30 July 2025).

- Carvajal, P.; Trejos, Á. Revisión de estudios sobre deserción estudiantil en educación superior en Latinoamérica bajo la perspectiva de Pierre Bourdieu. In Proceedings of the Congresos CLABES, Quito, Ecuador, 9–11 November 2016. [Google Scholar]

- Vélez, A.; López, D. Estrategias para vencer la deserción universitaria. Educ. Educ. 2004, 7, 177–203. Available online: https://bit.ly/39MgeEJ (accessed on 3 March 2025).

- Urbina, A.; Téllez, A.; Cruz, R. Patrones que identifican a estudiantes universitarios desertores aplicando minería de datos educativa. Rev. Electrónica Investig. Educ. 2021, 23, e1507. [Google Scholar] [CrossRef]

- Toscano, B.; Margain, L.; Ponce, J.; López, R. Aplicación de Minería de Datos para la Identificación de Factores de Riesgo Asociados a la Muerte Fetal. In Proceedings of the VIII Congreso Internacional en Ciencias Computacionales—CICOMP 2016, Ensenada, Mexico, 9–11 November 2016; Available online: https://www.researchgate.net/publication/310797578 (accessed on 10 October 2025).

- Amrita; Ahmed, P. A Hybrid-Based Feature Selection Approach for IDS. In Networks and Communications (NetCom2013); Lecture Notes in Electrical Engineering; Springer: Cham, Switzerland, 2014; Volume 284, pp. 195–211. [Google Scholar] [CrossRef]

- Murcia, A.; Salazar, J. Predictive and Visual Analytics Models Applied in a Stylistic and Technological Analysis of Spindle Whorls: A Case Study. Cirex-ID. 2018. Available online: https://www.researchgate.net/publication/351512849 (accessed on 10 October 2025).

- Badache, I. 2SRM: Learning social signals for predicting relevant search results. Web Intell. 2020, 18, 15–33. [Google Scholar] [CrossRef]

- Kononenko, I. Estimating Attributes: Analysis and Extensions of RELIEF. In Proceedings of the European Conference on Machine Learning, Catania, Italy, 6–8 April 1994; pp. 171–182. [Google Scholar]

- Kira, K.; Rendell, L.A. A Practical Approach to Feature Selection. In Machine Learning Proceedings 1992; Morgan Kaufmann: San Francisco, CA, USA, 1992; pp. 249–256. [Google Scholar] [CrossRef]

- Córdova, D.; Terven, J.; Romero-González, J.-A.; Córdova-Esparza, K.-E.; López-Martínez, R.-E.; García-Ramírez, T.; Chaparro-Sánchez, R. Predicting and Preventing School Dropout with Business Intelligence: Insights from a Systematic Review. Information 2025, 16, 326. [Google Scholar] [CrossRef]

- Song, Z.; Sung, S.; Park, D.; Park, B. All-Year Dropout Prediction Modeling and Analysis for University Students. Appl. Sci. 2023, 13, 1143. [Google Scholar] [CrossRef]

- Leelaluk, S.; Tang, C.; Švábenský, V.; Shimada, A. Knowledge Distillation in RNN-Attention Models for Early Prediction of Student Performance. In Proceedings of the ACM Symposium on Applied Computing, Catania, Italy, 31 March–4 April 2025; Association for Computing Machinery: New York, NY, USA, 2025; pp. 64–73. [Google Scholar] [CrossRef]

- Dia, N.; Sieras, J.C.; Khalid, S.A.; Macatotong, A.H.T.; Mondejar, J.M.; Genotiva, E.R.; Delena, R.D. EduGuard RetainX: An advanced analytical dashboard for predicting and improving student retention in tertiary education. SoftwareX 2025, 29, 102057. [Google Scholar] [CrossRef]

- Roslan, N.; Jamil, J.M.; Shaharanee, I.; Alawi, S. Prediction of Student Dropout in Malaysian’s Private Higher Education Institute using Data Mining Application. J. Adv. Res. Appl. Sci. Eng. Technol. 2025, 45, 168–176. [Google Scholar] [CrossRef]

| Factors | Description | Authors |
|---|---|---|
| Beliefs | It measures the student’s personal perceptions and goals, as well as the clarity of their academic and professional objectives. | Carvajal & Trejos [80] |
| Distance and transportation | It refers to the distance between the student’s home and the university, as well as the cost of and time spent on transportation. | Vélez & López [81]; Abarca & Sánchez [38] |
| Academic factors | It covers the student’s academic performance, the perceived difficulty of the courses, the learning and teaching strategies used, the length of the degree program, and the time dedicated to study. | Ruiz [40]; Londoño [8]; Fozdar et al. [44]; Carvajal & Trejos [80] |
| Economic factors | It includes key economic aspects such as the cost of tuition and materials, available scholarships and agreements, the student’s financial dependency, their socioeconomic status, and financial limitations. | Vélez & López [81]; Ruiz [40]; Cabrera et al. [39]; Carvajal & Trejos [80] |
| Personal/family factors | It refers to the student’s family background, including the educational level of the parents or guardians, and the motivational support the student receives from their family. | Fozdar et al. [44]; Carvajal & Trejos [80]; Cabrera et al. [39] |
| Social factors | It covers the student’s interactions with friends, classmates, and teachers. | Vélez & López [81]; Ruiz [40]; Cabrera et al. [39]; Carvajal & Trejos [80] |
| Institutional factors | It covers the quality of teaching offered by the institution, teachers’ attendance and commitment, the infrastructure available for learning, and access to additional services such as libraries and psychological support. | Ruiz [40]; Abarca & Sánchez [38]; Carvajal & Trejos [80]; Fozdar et al. [44] |
| Lack of orientation | It refers to the lack of academic and emotional support that the student may experience during their university studies. | Abarca & Sánchez [38] |
| Security | It relates to the perception of security on and off the university campus. | Vélez & López [81] |
| Vocation for the career | It measures the student’s interest in, motivation for, and commitment to their career and studies. | Abarca & Sánchez [38] |
| Health situation | It refers to any physical or mental condition that may affect the student’s academic continuity. | Abarca & Sánchez [38] |
| Employment status | It measures the student’s financial need to work to cover personal and academic expenses, as well as the parents’ employment status. | Ruiz [40] |

| Item | Factor 1 | Factor 2 | Factor 3 | Factor 4 | Factor 5 |
|---|---|---|---|---|---|
| Academic and Professional Goals | 0.180 | 0.000 | 0.007 | 0.091 | 0.602 |
| Life Plan | 0.146 | −0.027 | 0.086 | 0.096 | 0.720 |
| Negative Thoughts Influence University Continuation | −0.320 | 0.076 | 0.426 | −0.179 | −0.306 |
| Religious Influence on Profession | −0.166 | 0.369 | 0.238 | −0.109 | 0.409 |
| On-campus Security | 0.296 | 0.091 | −0.006 | 0.767 | 0.165 |
| Surrounding Campus Security | 0.269 | 0.089 | 0.047 | 0.792 | 0.117 |
| Attendance Difficulty | −0.018 | −0.099 | 0.333 | −0.278 | −0.350 |
| Tuition Fee | 0.332 | −0.122 | −0.168 | 0.277 | 0.005 |
| Friends and Peers’ Influence on Performance | −0.170 | 0.542 | 0.242 | 0.139 | −0.330 |
| Interaction with Teachers in Class | 0.725 | −0.018 | −0.015 | 0.054 | 0.276 |
| Interaction with Classmates | 0.523 | 0.151 | 0.125 | 0.170 | 0.263 |
| Task and Exam Difficulty Level | 0.120 | 0.015 | 0.529 | −0.182 | −0.084 |
| Teaching Methodology | 0.722 | 0.282 | −0.015 | 0.017 | 0.120 |
| Teaching Quality | 0.770 | 0.169 | 0.066 | 0.174 | 0.038 |
| Class Attendance Consistency | 0.700 | −0.006 | 0.099 | 0.233 | 0.002 |
| Participation in Additional Services (dining, library, etc.) | 0.022 | 0.453 | −0.091 | 0.370 | 0.038 |
| Bureaucratic and Complex Procedures | −0.041 | −0.070 | 0.602 | 0.069 | 0.078 |
| Importance of Previous Schooling | 0.078 | 0.134 | 0.159 | −0.136 | 0.123 |
| Family Motivation | 0.115 | −0.088 | 0.485 | 0.240 | 0.203 |
| Monitoring by Tutors | 0.218 | 0.216 | −0.114 | 0.250 | 0.318 |
| Academic Support Programs | 0.387 | 0.662 | −0.120 | −0.031 | 0.080 |
| Importance of Psychological Support | 0.376 | 0.648 | −0.207 | 0.026 | 0.130 |

| | Factor 1 | Factor 2 | Factor 3 | Factor 4 | Factor 5 |
|---|---|---|---|---|---|
| Explained Variance | 3.2594 | 1.7484 | 1.4573 | 1.8819 | 1.7711 |
| Proportion of Variance | 0.1482 | 0.0795 | 0.0662 | 0.0855 | 0.0805 |
| Cumulative Variance | 0.1482 | 0.2276 | 0.2939 | 0.3794 | 0.4599 |
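
The two factor tables above fit together under the standard definitions. A factor's explained variance is the sum of its squared loadings over the 22 items. The proportion of variance is that sum divided by 22, and the cumulative variance is the running total of the proportions. The sketch below recomputes the summary from the published three-decimal loadings. It assumes these textbook definitions, because the source does not name the software or rotation used to obtain the solution. Since the loadings are rounded, the recomputed values agree with the reported ones only to within about 0.002.

```python
"""Recompute the variance summary of a factor solution from its loadings.

Assumed (standard) definitions, not stated in the source:
  explained variance of factor j = sum over items of loading**2
  proportion of variance         = explained variance / number of items
  cumulative variance            = running sum of the proportions
"""
from itertools import accumulate

# Loadings transcribed from the item-by-factor table (22 items x 5 factors).
LOADINGS = {
    "Academic and Professional Goals": (0.180, 0.000, 0.007, 0.091, 0.602),
    "Life Plan": (0.146, -0.027, 0.086, 0.096, 0.720),
    "Negative Thoughts Influence University Continuation": (-0.320, 0.076, 0.426, -0.179, -0.306),
    "Religious Influence on Profession": (-0.166, 0.369, 0.238, -0.109, 0.409),
    "On-campus Security": (0.296, 0.091, -0.006, 0.767, 0.165),
    "Surrounding Campus Security": (0.269, 0.089, 0.047, 0.792, 0.117),
    "Attendance Difficulty": (-0.018, -0.099, 0.333, -0.278, -0.350),
    "Tuition Fee": (0.332, -0.122, -0.168, 0.277, 0.005),
    "Friends and Peers' Influence on Performance": (-0.170, 0.542, 0.242, 0.139, -0.330),
    "Interaction with Teachers in Class": (0.725, -0.018, -0.015, 0.054, 0.276),
    "Interaction with Classmates": (0.523, 0.151, 0.125, 0.170, 0.263),
    "Task and Exam Difficulty Level": (0.120, 0.015, 0.529, -0.182, -0.084),
    "Teaching Methodology": (0.722, 0.282, -0.015, 0.017, 0.120),
    "Teaching Quality": (0.770, 0.169, 0.066, 0.174, 0.038),
    "Class Attendance Consistency": (0.700, -0.006, 0.099, 0.233, 0.002),
    "Participation in Additional Services": (0.022, 0.453, -0.091, 0.370, 0.038),
    "Bureaucratic and Complex Procedures": (-0.041, -0.070, 0.602, 0.069, 0.078),
    "Importance of Previous Schooling": (0.078, 0.134, 0.159, -0.136, 0.123),
    "Family Motivation": (0.115, -0.088, 0.485, 0.240, 0.203),
    "Monitoring by Tutors": (0.218, 0.216, -0.114, 0.250, 0.318),
    "Academic Support Programs": (0.387, 0.662, -0.120, -0.031, 0.080),
    "Importance of Psychological Support": (0.376, 0.648, -0.207, 0.026, 0.130),
}


def variance_summary(loadings):
    """Return (explained, proportion, cumulative) lists, one entry per factor."""
    rows = list(loadings.values())
    n_items = len(rows)
    n_factors = len(rows[0])
    # Column-wise sum of squared loadings.
    explained = [sum(row[j] ** 2 for row in rows) for j in range(n_factors)]
    proportion = [ev / n_items for ev in explained]
    cumulative = list(accumulate(proportion))
    return explained, proportion, cumulative


if __name__ == "__main__":
    explained, proportion, cumulative = variance_summary(LOADINGS)
    for j, (ev, p, c) in enumerate(zip(explained, proportion, cumulative), start=1):
        print(f"Factor {j}: explained={ev:.4f} proportion={p:.4f} cumulative={c:.4f}")
```

Small differences in the fourth decimal are expected from the rounding of the loadings. A larger mismatch for one factor would suggest a transcription error in one of its loadings.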

| CorrelationAttributeEval score | Factor | GainRatioAttributeEval score | Factor | InfoGainAttributeEval score | Factor | OneRAttributeEval score | Factor | ReliefFAttributeEval score | Factor | SymmetricalUncertAttributeEval score | Factor |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0.428 | Previous GPA | 0.944 | Previous GPA | 1.308 | Previous GPA | 97.723 | Previous GPA | 0.263 | Previous GPA | 0.946 | Previous GPA |
| 0.111 | Program Duration Semesters | 0.076 | Marital Status | 0.170 | Academic School | 62.998 | Time Spent on Paperwork | 0.068 | Academic Term | 0.067 | Academic School |
| 0.102 | Academic Term | 0.046 | Father’s Occupation | 0.148 | Father’s Occupation | 62.239 | Surrounding Campus Security | 0.060 | Academic School | 0.065 | Father’s Occupation |
| 0.087 | Time Spent Out with Friends | 0.046 | Academic School | 0.128 | Academic Term | 62.239 | Motivation Continue Studies | 0.039 | Participation Additional Services | 0.057 | Academic Term |
| 0.073 | Physical Condition | 0.041 | Academic Term | 0.075 | Mother’s Occupation | 62.239 | Works to Cover Expenses | 0.032 | Teaching Methodology | 0.040 | Mother’s Occupation |
| 0.071 | Academic School | 0.037 | Program Duration Semesters | 0.056 | Mother’s Educational Level | 62.239 | Parental Job Stability Academic Situation | 0.032 | Father’s Educational Level | 0.031 | Region Origin |
| 0.068 | Academic Support Programs | 0.034 | Region Origin | 0.040 | Hours of Study | 62.239 | Life Plan | 0.031 | Economic Dependency | 0.028 | Mother’s Educational Level |
| 0.065 | Age | 0.032 | Mother’s Occupation | 0.039 | Region Origin | 62.239 | Main Means of Transport | 0.030 | Gender | 0.025 | Program Duration Semesters |
| 0.064 | Has Scholarship Funding Program | 0.021 | Mother’s Educational Level | 0.035 | Father’s Educational Level | 62.239 | Academic Professional Goals | 0.029 | Father’s Occupation | 0.020 | Hours of Study |
| 0.060 | Motivation Continue Studies | 0.021 | Has Considered Changing Major | 0.032 | Participation Additional Services | 62.239 | Religious Influence Profession | 0.028 | Teaching Quality | 0.019 | Time Spent Out with Friends |
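
Three of the six evaluators in the ranking table (InfoGainAttributeEval, GainRatioAttributeEval and SymmetricalUncertAttributeEval) score an attribute A against the class C with entropy-based measures. They compute information gain IG = H(C) − H(C | A), gain ratio = IG / H(A), and symmetrical uncertainty SU = 2·IG / (H(C) + H(A)). The following is a minimal sketch of those formulas and not the Weka implementation. It assumes attributes are already nominal, whereas Weka discretizes numeric attributes such as a GPA before scoring them. The toy records and variable names (`status`, `gpa_band`, `transport`) are hypothetical and do not come from the study's data.

```python
"""Entropy-based attribute scores for a nominal attribute against a nominal class."""
from collections import Counter
from math import log2


def entropy(values):
    """Shannon entropy, in bits, of a sequence of discrete labels."""
    n = len(values)
    return -sum((c / n) * log2(c / n) for c in Counter(values).values())


def conditional_entropy(classes, attribute):
    """H(C | A): class entropy within each attribute value, weighted by its frequency."""
    n = len(classes)
    groups = {}
    for a, c in zip(attribute, classes):
        groups.setdefault(a, []).append(c)
    return sum(len(g) / n * entropy(g) for g in groups.values())


def info_gain(classes, attribute):
    return entropy(classes) - conditional_entropy(classes, attribute)


def gain_ratio(classes, attribute):
    # Normalizes IG by the attribute's own entropy (its "split information").
    h_a = entropy(attribute)
    return info_gain(classes, attribute) / h_a if h_a > 0 else 0.0


def symmetrical_uncertainty(classes, attribute):
    denom = entropy(classes) + entropy(attribute)
    return 2 * info_gain(classes, attribute) / denom if denom > 0 else 0.0


# Hypothetical toy records: a discretized GPA band that separates the classes
# perfectly, and a transport attribute that carries no information about them.
status = ["drop", "drop", "stay", "stay", "stay", "stay"]
gpa_band = ["low", "low", "high", "high", "high", "high"]
transport = ["bus", "walk", "bus", "walk", "bus", "walk"]

if __name__ == "__main__":
    for name, attr in [("gpa_band", gpa_band), ("transport", transport)]:
        print(name,
              round(info_gain(status, attr), 3),
              round(gain_ratio(status, attr), 3),
              round(symmetrical_uncertainty(status, attr), 3))
    # gpa_band 0.918 1.0 1.0
    # transport 0.0 0.0 0.0
```

Gain ratio and symmetrical uncertainty both normalize information gain, which on its own favors attributes with many distinct values. This is one reason the evaluators can order the lower-ranked attributes differently even when they agree on the top one.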

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Reina Marín, Y.; Quiñones Huatangari, L.; Cruz Caro, O.; Maicelo Guevara, J.L.; Alva Tuesta, J.N.; Sánchez Bardales, E.; Chávez Santos, R. Data Mining to Identify University Student Dropout Factors. Appl. Sci. 2025, 15, 11911. https://doi.org/10.3390/app152211911

Reina Marín Y, Quiñones Huatangari L, Cruz Caro O, Maicelo Guevara JL, Alva Tuesta JN, Sánchez Bardales E, Chávez Santos R. Data Mining to Identify University Student Dropout Factors. Applied Sciences. 2025; 15(22):11911. https://doi.org/10.3390/app152211911

Chicago/Turabian Style: Reina Marín, Yuri, Lenin Quiñones Huatangari, Omer Cruz Caro, Jorge Luis Maicelo Guevara, Judith Nathaly Alva Tuesta, Einstein Sánchez Bardales, and River Chávez Santos. 2025. "Data Mining to Identify University Student Dropout Factors" Applied Sciences 15, no. 22: 11911. https://doi.org/10.3390/app152211911

APA Style: Reina Marín, Y., Quiñones Huatangari, L., Cruz Caro, O., Maicelo Guevara, J. L., Alva Tuesta, J. N., Sánchez Bardales, E., & Chávez Santos, R. (2025). Data Mining to Identify University Student Dropout Factors. Applied Sciences, 15(22), 11911. https://doi.org/10.3390/app152211911