Big Data Management and Quality Evaluation for the Implementation of AI Technologies in Smart Manufacturing

Abstract

1. Introduction

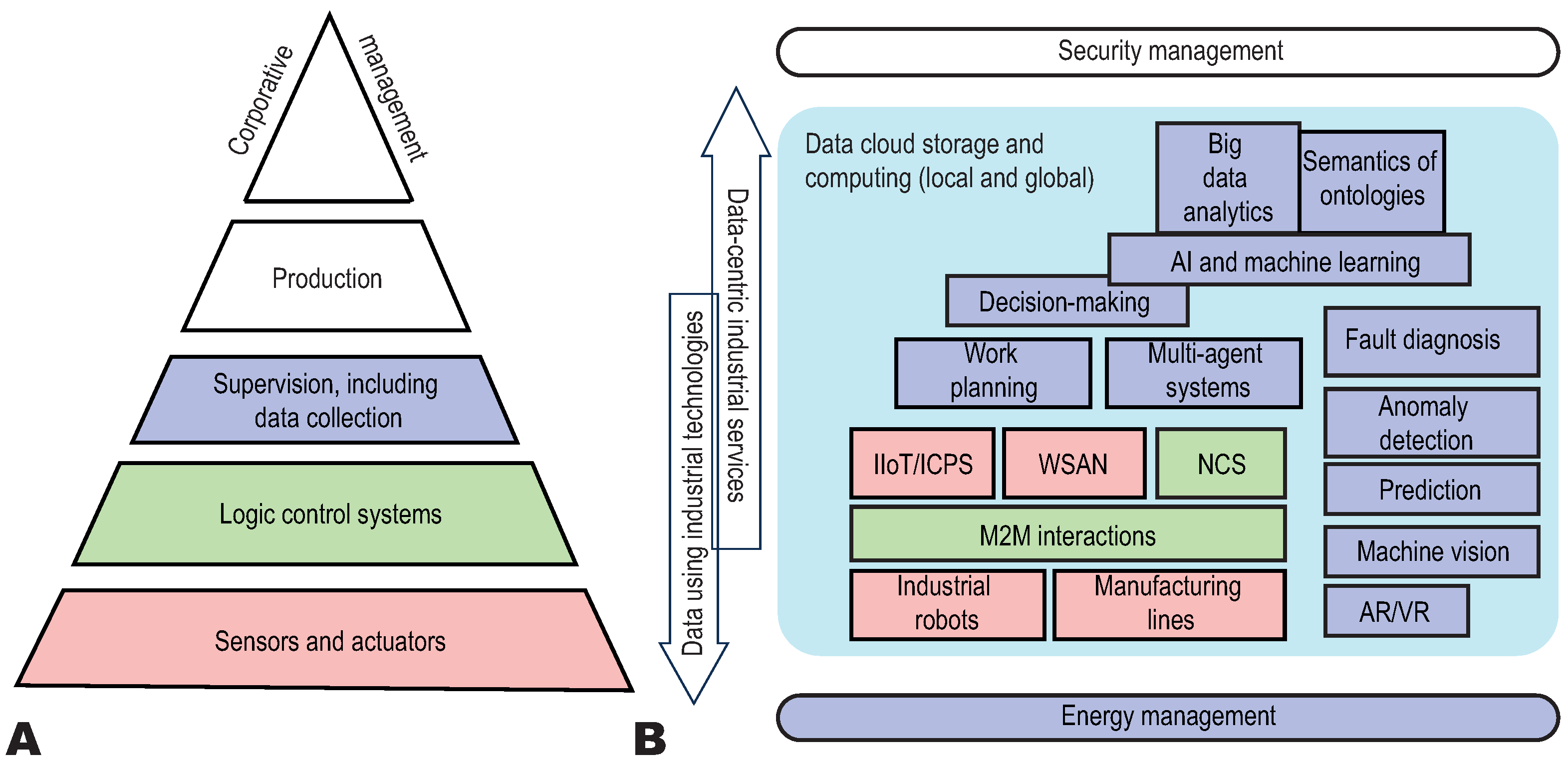

2. The Role of Industrial Data in Smart Manufacturing Infrastructure

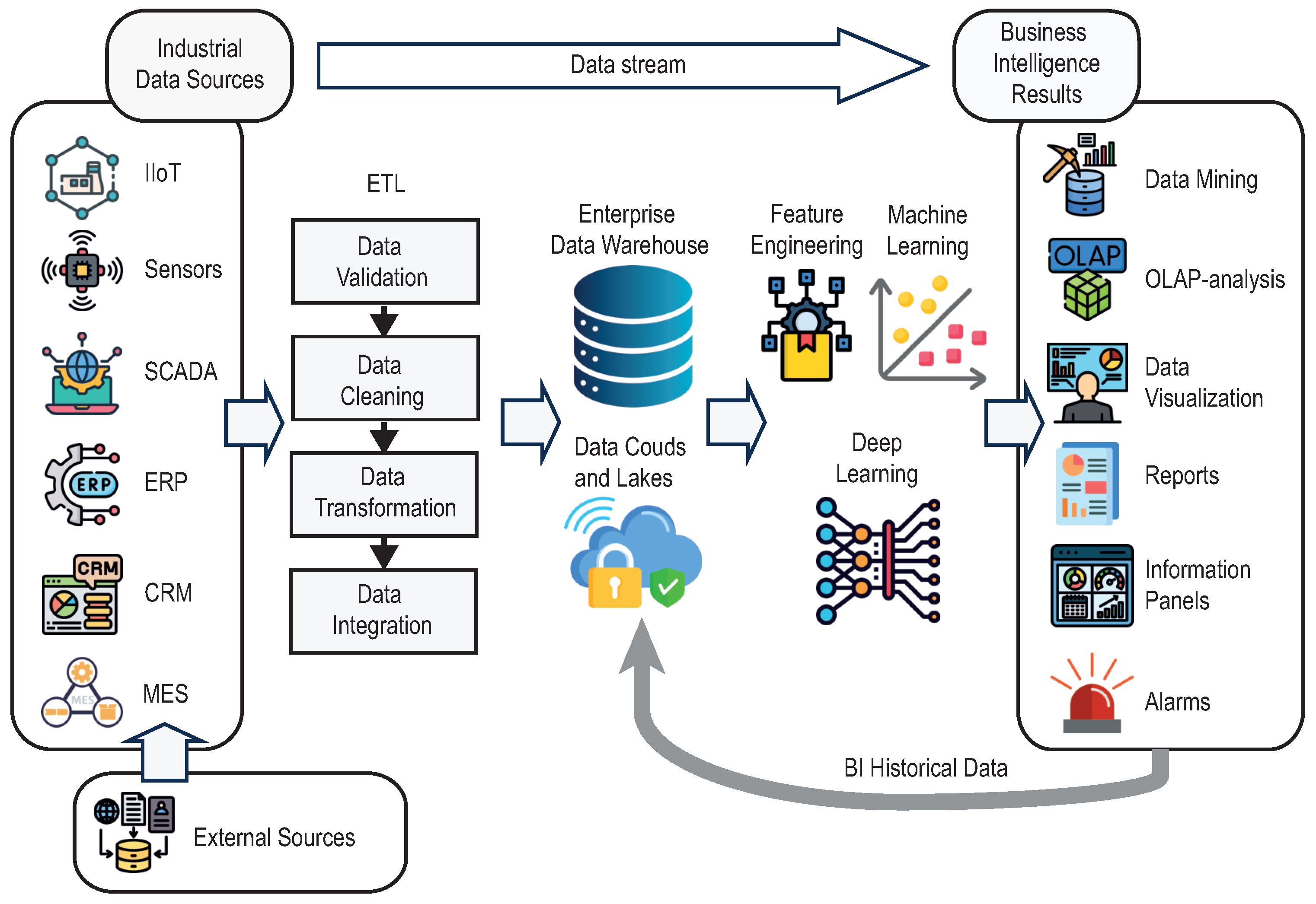

3. Data Sources in Industry

3.1. Sensors and IIoT Devices

- The sampling update rate of the data can be very high ( Hz), generating large amounts of information [36].

- Collected data can be both numerical (temperature, pressure) and categorical (equipment status), which fundamentally requires multimodal data processing [37].

- Errors and omissions may occur due to equipment or data transmission failures, which requires data preprocessing, error correction, and gap filling [17].

3.2. Enterprise Resource Planning Systems

- Data in ERP systems are stored in a structured manner, usually in relational databases (e.g., SQL). This means that information is organized into tables where each row represents a record and each column represents an attribute (e.g., product name, quantity, price). Importantly, modern ERP systems provide an application programming interface (API) to access the data, allowing integration with other applications such as business intelligence or AI systems. This greatly simplifies data analysis due to the clear structure of data collection and presentation, as well as the ability to use standard database tools (e.g., SQL queries).

- Data are updated less frequently than data from sensors or IIoT devices. For example, inventory data may be updated once a day, while financial reports may be updated once a week or month. Many processes in ERP systems, such as financial reporting, are performed in batch processing, which causes delays in data updates, and data are often manually entered into ERP systems, which also slows down data accumulation and processing. On the one hand, low update frequency simplifies data management, as real-time processing is not required, but on the other hand, it makes it difficult to make quick decisions. Also, ERP systems have limited applicability for tasks that require real-time data (e.g., production line management).

- ERP systems rarely operate in isolation. They integrate with other enterprise systems such as manufacturing execution systems (MES), customer relationship management (CRM), and supply chain management. To integrate ERP with MES or supervisory control and data acquisition (SCADA), message-oriented middleware such as Apache Kafka or MQTT brokers is often used to provide real-time data transfer [42]. Unified standards such as Open Platform Communications Unified Architecture (OPC UA) and electronic data interchange have been developed to support such integration [43].

- ERP systems are designed to process large volumes of data, making them suitable for large enterprises. However, this requires significant computing resources. By storing historical data, these systems enable trend analysis, which in turn supports long-term strategic decision-making.
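As a minimal sketch of the kind of standard SQL query mentioned in the list above, the following uses Python's built-in `sqlite3` module with an in-memory database. The table name `inventory`, its columns, the sample rows, and the helper `low_stock` are hypothetical stand-ins for an ERP table; a real ERP system would expose its own schema or API.

```python
import sqlite3

# Hypothetical ERP-style table: each row is a record, each column an attribute.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE inventory (product_name TEXT, quantity INTEGER, price REAL)"
)
conn.executemany(
    "INSERT INTO inventory VALUES (?, ?, ?)",
    [("bearing", 120, 4.50), ("gasket", 15, 1.20), ("valve", 8, 32.00)],
)


def low_stock(connection, threshold):
    """Return (product_name, quantity) rows whose quantity is below threshold."""
    cursor = connection.execute(
        "SELECT product_name, quantity FROM inventory "
        "WHERE quantity < ? ORDER BY quantity",
        (threshold,),
    )
    return cursor.fetchall()


rows = low_stock(conn, 20)
print(rows)
```

Because the data are already tabular, the same query result could be passed directly to a business intelligence or AI pipeline without further parsing.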

3.3. SCADA Systems

- SCADA systems operate in real time, which means that data from sensors, transducers, and other devices are continuously being collected and processed. This allows operators to react instantly to changes in production processes.

- SCADA systems collect data at a high level of detail, which means there are a large number of parameters for each device or process. For example, for a pump, parameters such as pressure, temperature, vibration, rotational speed, and energy consumption can be monitored. Integrating data from a large number of sensors and devices requires powerful computing resources for processing. To manage such data streams, a ‘tagging’ system is commonly used, meaning that each parameter in the SCADA system is identified by a unique label (‘tag’), allowing data to be easily tracked and analyzed.

- Data in SCADA systems are often redundant, containing duplicate or irrelevant information. This redundancy stems from the large number of data sources and the high frequency of data updates. For instance, pipeline pressure might be measured by two independent sensors, creating duplicates. Furthermore, not all collected data are useful for analysis; ambient temperature readings, for example, may have no impact on the core process but are still logged by default. Consequently, data post-processing is essential to eliminate this redundancy using methods such as averaging, interpolation, and duplicate removal.
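The redundancy handling described above can be sketched as follows, assuming that redundant sensors share a tag prefix and differ only in the last dot-separated part of the tag. The tag names, the readings, and this naming convention are hypothetical; actual SCADA tag schemes vary by vendor and site.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical tagged readings: (timestamp in seconds, tag, value).
readings = [
    (0, "PIPE1.PRESSURE.A", 5.0),
    (0, "PIPE1.PRESSURE.B", 5.2),
    (0, "PIPE1.PRESSURE.A", 5.0),  # exact duplicate record
    (1, "PIPE1.PRESSURE.A", 5.4),
    (1, "PIPE1.PRESSURE.B", 5.6),
]


def drop_duplicates(records):
    """Remove exact duplicate records while preserving their order."""
    seen = set()
    unique_records = []
    for record in records:
        if record not in seen:
            seen.add(record)
            unique_records.append(record)
    return unique_records


def average_redundant(records):
    """Average sensors that measure the same quantity at the same timestamp.

    Assumption: the quantity is the tag without its last dot-separated part.
    """
    groups = defaultdict(list)
    for timestamp, tag, value in records:
        quantity = tag.rsplit(".", 1)[0]
        groups[(timestamp, quantity)].append(value)
    return {key: mean(values) for key, values in sorted(groups.items())}


unique = drop_duplicates(readings)
merged = average_redundant(unique)
print(merged)
```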

3.4. Other Sources of Industrial Data

- MES systems, which provide information about the production process, including data on product quality and lead times [47].

- CRM systems, which contain data about customers, orders, and sales that are useful for demand forecasting [48].

- A variety of external data sources that are not directly industrial: market data, weather, or logistics data that can influence production processes [49].

4. Challenges of Industrial Data Collection, Processing, and Storage at Industrial Facilities

4.1. Data Collection and Preparation for AI Applications

- Integration of data from different sources. Data can come from sensors, IIoT devices, ERP and SCADA systems, which requires them to be combined into a unified system [2]. For example, in a chemical plant, data from sensors that monitor pressure and temperature are integrated with data from an ERP system that manages raw material inventory. This allows the production process to be optimized and costs to be reduced.

- Real-time and stream processing. Stream processing technologies such as Apache Flink or Apache Storm [55] are used to process real-time data. For example, in a factory, data from assembly robots are processed in real time to detect defects early in the production process. This minimizes scrap losses and increases product quality. Edge computing is also being applied to real-time data processing [56]. In this approach, computations are performed closer to the data source (at the ‘edge’ of the network) rather than on centralized cloud servers. This reduces latency, lowers network load and transmission costs, and improves reliability and security by limiting the data sent over the Internet. As a result, system performance improves, especially in environments where data processing speed is critical. However, edge computing has disadvantages: computing resources on edge devices are usually limited, and a distributed network of edge devices requires complex management and synchronization.

- Ensuring data quality. During the data acquisition phase, it is important to ensure data accuracy and completeness, which requires the use of calibrated sensors and reliable data transfer protocols. This raises the issue of regularly checking the devices and the industrial data itself for accuracy and errors [57].
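To illustrate the integration of sources with different update rates described in the list above, the following sketch attaches to each sensor reading the most recent ERP record at or before its timestamp (an "as-of" lookup). The lot identifiers, timestamps, temperatures, and the function `attach_latest_lot` are hypothetical; this is one simple way to combine the two sources, not a prescribed method.

```python
from bisect import bisect_right

# Hypothetical ERP batch records: (timestamp in s, raw-material lot), sorted by time.
erp_lots = [(0, "LOT-A"), (100, "LOT-B"), (250, "LOT-C")]

# Hypothetical sensor readings: (timestamp in s, reactor temperature in deg C).
sensor_readings = [(20, 71.5), (100, 72.0), (180, 74.1), (300, 73.3)]


def attach_latest_lot(readings, lots):
    """For each reading, attach the most recent ERP record at or before it.

    Readings that precede the first ERP record get None as their lot.
    """
    lot_times = [timestamp for timestamp, _ in lots]
    joined = []
    for timestamp, value in readings:
        index = bisect_right(lot_times, timestamp) - 1
        lot = lots[index][1] if index >= 0 else None
        joined.append((timestamp, value, lot))
    return joined


unified = attach_latest_lot(sensor_readings, erp_lots)
for row in unified:
    print(row)
```

The binary search keeps the lookup cheap even when the slowly updated source holds a long history.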

4.2. Data Preprocessing

- Data validation and cleaning involves fixing data gaps and filtering out noise in the data. Missing values can be filled in using interpolation or ML techniques, e.g., regression or the k-nearest neighbor method. For example, reactor temperature data may contain missing values due to sensor failures. Interpolation can recover missing values and ensure data continuity. Data filtering typically uses techniques such as moving averages, digital filters, or wavelet transforms that help remove noise and improve data quality. For example, equipment vibration data may contain noise due to external influences. Filtering helps to isolate useful signals to analyze the condition of the equipment.

- Data transformation includes both normalization and standardization. Normalization brings the data to a single range, e.g., $[0, 1]$ or $[-1, 1]$, to eliminate the effects of data scale on the performance of AI models. This is particularly relevant when processing data from sensors at different scales and of different physical nature. For example, a manufacturing facility may collect temperature and pressure data inside the plant, which have different scales. Normalization allows them to be used in the same AI model. Standardization rescales the data to zero mean and unit variance. This is also important when building ML models. In the previous example, temperature and pressure data can be standardized for use in clustering algorithms. One of the simplest ways to perform this conversion is the standard score procedure, which is well known in statistics. Given a set of measurements $x_i$, $i = 1, \dots, N$, where $N$ is the number of measurements, the standardized score is calculated as $z_i = (x_i - \mu)/\sigma$, where $\mu$ is the mean and $\sigma$ is the standard deviation of the set of measurements $x_i$.
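The preprocessing steps above can be sketched in a few lines of standard-library Python: linear interpolation of gaps, a moving average filter, min-max normalization, and the standard score conversion. The reactor temperature values are hypothetical. Two assumptions are made for illustration: missing samples are marked with `None`, and the population standard deviation (`pstdev`) is used, since the text does not say whether the population or sample form is intended.

```python
from statistics import mean, pstdev


def interpolate_gaps(values):
    """Fill None entries by linear interpolation between known neighbors.

    Leading or trailing gaps have no neighbor on one side and stay None.
    """
    filled = list(values)
    known = [i for i, v in enumerate(filled) if v is not None]
    for left, right in zip(known, known[1:]):
        step = (filled[right] - filled[left]) / (right - left)
        for i in range(left + 1, right):
            filled[i] = filled[left] + step * (i - left)
    return filled


def moving_average(values, window):
    """Trailing moving average; early outputs use the shorter available history."""
    smoothed = []
    for i in range(len(values)):
        chunk = values[max(0, i - window + 1): i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


def min_max(values):
    """Scale values to the range [0, 1]; assumes the series is not constant."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


def z_score(values):
    """Standard score: subtract the mean, divide by the standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]


# Hypothetical reactor temperatures with two samples lost to a sensor failure.
temperature = [350.0, None, None, 356.0, 358.0]
filled = interpolate_gaps(temperature)
smoothed = moving_average(filled, window=2)
normalized = min_max(filled)
standardized = z_score(filled)
print(filled, smoothed, normalized, standardized, sep="\n")
```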

4.3. Data Integration

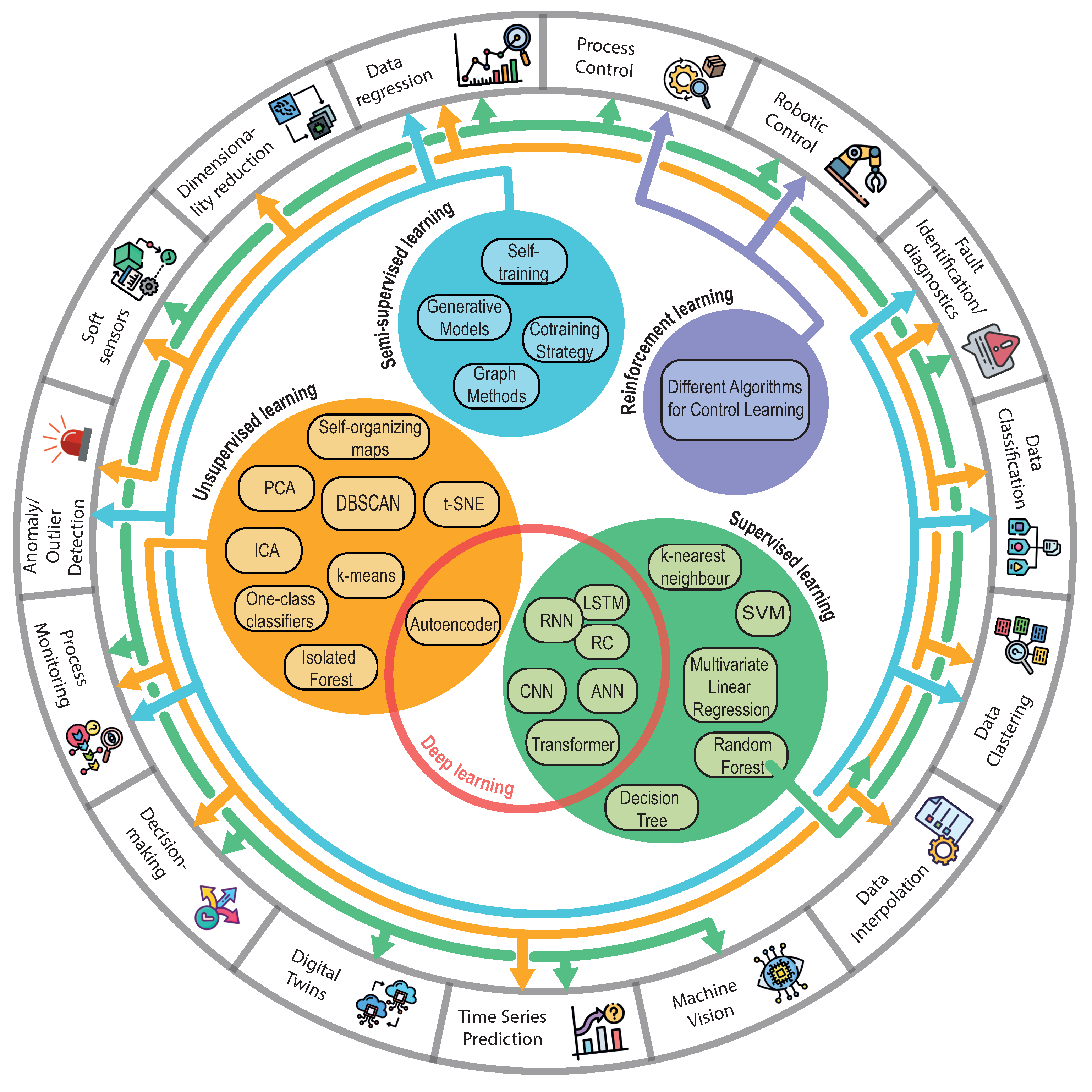

4.4. Machine Learning in Industrial Data Analysis

- Supervised learning is used when all accumulated data are labeled and the desired outcome or goal is known. The problem is then defined as a classification or regression task, such as determining the state of equipment (operational/faulty) or predicting parameters (temperature, pressure) based on collected labeled historical data [62].

- Unsupervised learning is applied to data that have no labels (tags) and is used to find hidden structures in data, such as clustering to detect anomalies or analyzing relationships between parameters of manufacturing processes [63].

- Reinforcement learning (RL) uses a trial-and-error approach, learning by direct interaction with the environment [64]. It needs neither supervision nor a predefined dataset (labeled or unlabeled) and is applied to tasks in dynamic environments that require real-time decision-making, allowing adaptation to changing conditions. RL is therefore most effective for tasks requiring interaction with a changing environment, e.g., manufacturing process optimization, where the model learns to control parameters (e.g., conveyor speed) to maximize efficiency, robot motion control, or the coordination of several linked machines in a serial production line [65].

- Semi-supervised learning (SSL) is increasingly applied in industrial data processing tasks where labeling data samples is expensive or time-consuming [66]. The main difference between semi-supervised and fully supervised ML is that the latter can only be trained on fully labeled datasets, while the former uses both labeled and unlabeled data samples during training. For example, determining the fault type of detected faulty samples is a difficult, labor-intensive task for engineers. As a consequence, most faulty samples remain unlabeled, but they still contain important process information. If these unlabeled samples can be put to good use, the efficiency of the fault classification system can be greatly improved. SSL methods modify or augment a supervised algorithm, the so-called base learner, to incorporate information from unlabeled examples. The labeled data are used to ground the predictions of the base learner and add structure (e.g., the number of existing classes and the main characteristics of each class) to the learning task. In this case, semi-supervised models trained on both labeled and unlabeled samples yield an improved fault classification model compared to a model that depends only on a small fraction of labeled data samples.

- Feature extraction. Creating new features from existing data, such as calculating derivatives or integrals for time series, can reveal underlying dynamics that are not apparent in the raw signal. For instance, methods inspired by the analysis of complex systems can help identify synchronized patterns or coherent structures within chaotic-looking industrial data [67]. For example, at a wind farm, wind speed data can be converted into features such as average speed over the last hour, which helps to improve prediction of power generation without creating redundant information.

- Feature selection or data dimensionality reduction. Redundant or irrelevant features are removed using techniques such as Principal Component Analysis (PCA), Independent Component Analysis (ICA), or Lasso regression. For example, in manufacturing, data on thousands of sensor-measured parameters can be reduced to a few key attributes, which can be combinations of the original measured quantities.

- Encoding categorical data numerically using one-hot encoding or label encoding. For example, in logistics, cargo type data (e.g., “fragile,” “dangerous,” etc.) can be encoded for use in route optimization models.

- Artificial neural networks (ANNs) are the foundational architecture of deep learning, consisting of interconnected layers of neurons that can model complex relationships in data. ANNs are applied in a wide range of industries, including retail for demand forecasting, and in logistics for optimizing supply chain operations. Their versatility makes them suitable for both regression and classification tasks across diverse domains.

- Recurrent neural networks (RNNs), including long short-term memory (LSTM) and reservoir computing (RC), for time series prediction and digital twin creation. These are particularly useful in industries like finance for stock price forecasting, in energy for predicting electricity demand, and in manufacturing for predictive maintenance.

- Convolutional neural networks (CNNs) for visual inspection tasks such as product quality control. CNNs are widely used in the automotive industry for detecting defects in car parts and in retail for automated checkout systems.

- Autoencoders (AEs) for automatic feature extraction which is particularly useful in vibration or sound analysis tasks for fault diagnosis. AEs are applied in industries like aerospace for monitoring aircraft engine health and in manufacturing for anomaly detection in machinery.

- Transformers, originally developed for natural language processing (NLP), have become a cornerstone in sequence modeling due to their ability to handle long-range dependencies efficiently. Transformers are used in industries for applications such as automated customer support (chatbots) and document summarization in legal and financial sectors. Their self-attention mechanism makes them highly effective in tasks requiring context-aware decision-making.
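Returning to the feature extraction and categorical encoding items in the list above, the following sketch derives a finite-difference derivative and a trapezoidal cumulative integral from a time series, and one-hot encodes a categorical column. The wind speed values, the sampling interval `dt`, and the cargo category "standard" are hypothetical additions for illustration.

```python
def derivative(values, dt):
    """Finite-difference derivative; the output is one element shorter."""
    return [(b - a) / dt for a, b in zip(values, values[1:])]


def integral(values, dt):
    """Cumulative integral by the trapezoidal rule, starting at 0."""
    total = 0.0
    cumulative = [0.0]
    for a, b in zip(values, values[1:]):
        total += 0.5 * (a + b) * dt
        cumulative.append(total)
    return cumulative


def one_hot(labels):
    """Return the sorted category list and one 0/1 row per input label."""
    categories = sorted(set(labels))
    rows = [[1 if label == c else 0 for c in categories] for label in labels]
    return categories, rows


# Hypothetical wind speed samples (m/s) taken every 2 seconds.
wind_speed = [4.0, 6.0, 5.0, 9.0]
rate_of_change = derivative(wind_speed, dt=2.0)
accumulated = integral(wind_speed, dt=2.0)

# Hypothetical cargo types for a logistics model.
categories, encoded = one_hot(["fragile", "dangerous", "fragile", "standard"])
print(rate_of_change, accumulated, categories, encoded, sep="\n")
```

One-hot encoding avoids implying an order between categories, which label encoding would introduce; which of the two is preferable depends on the downstream model.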

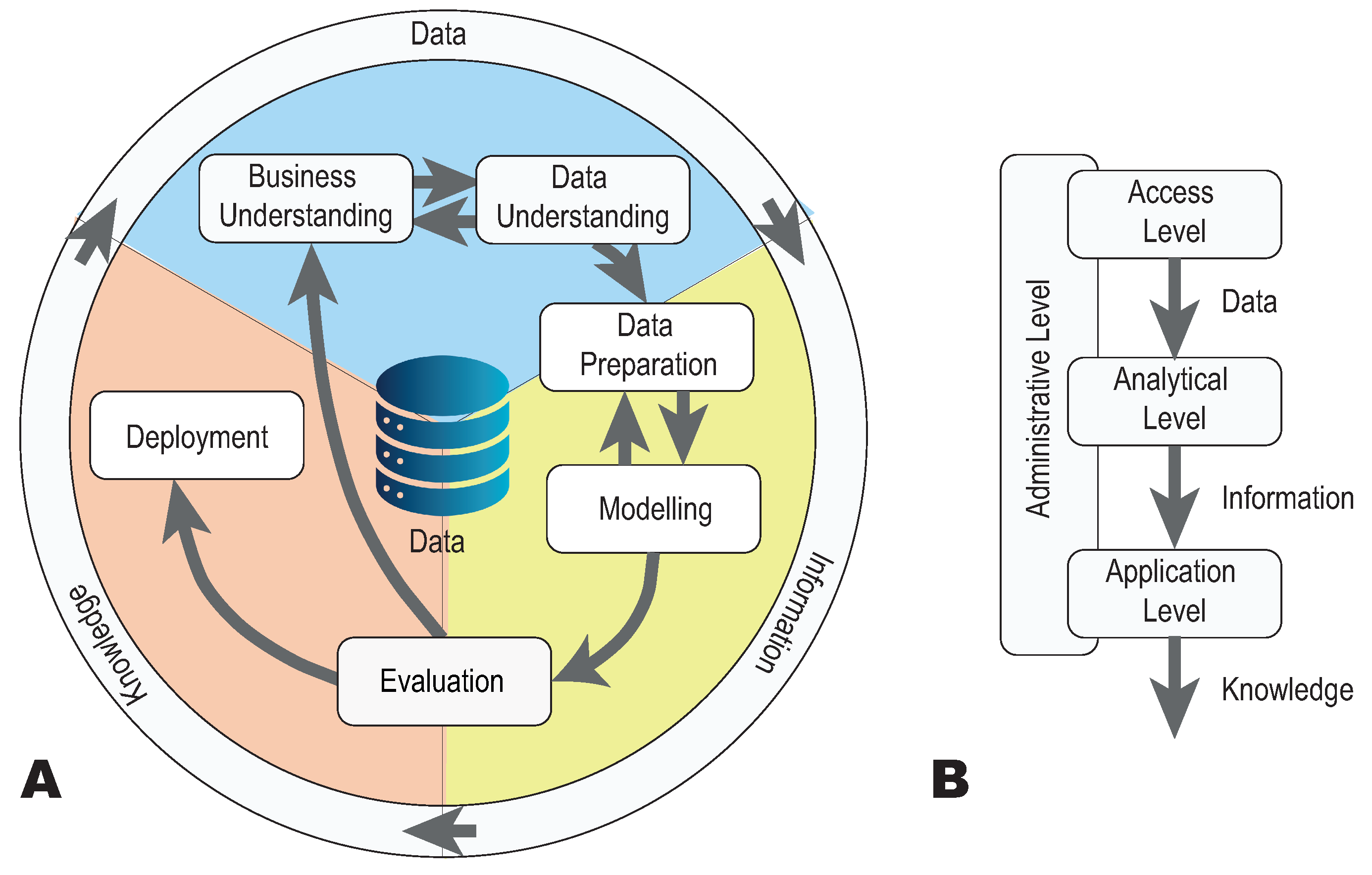

5. Assessing the Quality of Industrial Data

5.1. Steps in Assessing the Quality of Industrial Data

- Data access and provisioning. The first step is to provide access to the necessary data sources, including selecting relevant sources, filling in missing data, and preparing data for analysis. This step corresponds to the initial stages of CRISP-DM, ‘Business Understanding’ and ‘Data Understanding’ (see Figure 4A).

- Data analysis. In the second stage, data are analyzed to extract useful information. Various tools and techniques are used, including data science algorithms. This stage covers the ‘Data Preparation’ and ‘Modeling’ stages in CRISP-DM.

- Application of information. The third stage is the utilization of the information obtained in industrial processes. This includes both one-off analyses and long-term monitoring. In CRISP-DM, this corresponds to the ‘Deployment’ stage, where knowledge of the problem and subject matter knowledge help to extract the necessary knowledge from the data.

- Process management. The fourth stage covers the management of peripheral processes such as long-term data management, responsibility allocation, security, and ethical use of data. This step has no direct analogue in CRISP-DM, but is critical for industrial applications.

5.2. Level of Assessing the Quality of Industrial Data

- Access level covers the collection of the necessary data according to the defined analysis objectives at the stage of understanding the processes in the industrial system. This level addresses aspects related to the quality of the raw data and relevant business processes. The criteria shown in Table 2 support the objectives of collecting and preparing data for analysis.

- Analytical level refers to the quality of data analysis and is primarily concerned with information. This corresponds to the second and third steps of CRISP-DM (see Figure 4A), ‘Data Preparation’ and ‘Modeling’. At this level, the accessed data must be placed in the context of a specific use case or problem definition. The context guides the processing steps, which use at least one specific analysis method. The criteria presented in Table 2 assess the quality of the data analysis.

- Application level concerns the assessment of data quality during the application phase in industrial settings, which is related to the ‘Evaluation’ and ‘Deployment’ phases of CRISP-DM (see Figure 4A). The criteria for this level, presented in Table 2, aim to establish high quality of data analysis results. They specifically target the inclusion of personnel not previously involved in the analyses as future users of deployment solutions.

5.3. Developing Recommendations for Preparing Big Data for AI Implementation

5.3.1. Industrial Data Collection

- Integration of Heterogeneous Data Sources. Industrial data are inherently multi-source, originating from systems such as SCADA (Supervisory Control and Data Acquisition), ERP (Enterprise Resource Planning), and IoT sensors. Integrating these disparate streams into a unified data platform is essential to form a holistic view of operations, combining equipment status, process parameters, and management data. Technologies like Apache Kafka or MQTT middleware are commonly employed to facilitate this real-time data integration and synchronization.

- Real-time Data Acquisition and Processing. For time-sensitive industrial processes, the ability to acquire and process data in real time is critical. This capability enables rapid response to anomalies, minimizing production scrap and downtime. Implementing stream processing frameworks (e.g., Apache Flink, Apache Storm) allows for continuous data analysis as it is generated. Furthermore, leveraging edge computing architectures processes data closer to source, significantly reducing latency and alleviating network load.

- Proactive Data Quality Assurance. The accuracy and reliability of AI models are directly contingent upon the quality of the input data. To mitigate the risks of erroneous predictions caused by poor data, a proactive approach to quality assurance is mandatory. This involves the regular maintenance and calibration of sensors, the use of robust and reliable data transmission protocols, and the implementation of automated data quality monitoring systems to detect and correct errors and omissions at the point of collection.

5.3.2. Data Processing

- Data Cleansing and Denoising. Industrial datasets frequently contain noise, outliers, and missing values that can severely degrade model performance. To mitigate this, a suite of signal processing techniques must be applied. This includes using interpolation methods (e.g., linear or spline) to impute missing points and digital filters (e.g., moving average filters or wavelet transforms) to suppress noise and isolate meaningful signals.

- Normalization and Standardization. The heterogeneous nature of industrial sensors results in data with varying scales and units. To ensure stable and efficient model convergence, these data must be brought to a common, dimensionless scale. Normalization (e.g., scaling to a fixed range) and standardization (e.g., scaling to zero mean and unit variance) are essential preprocessing steps, particularly for machine learning models trained with gradient-based optimizers, which are highly sensitive to input feature magnitudes.

- Data Structuring and Feature Engineering. Processed data streams must be structured into a coherent format suitable for model ingestion. This involves aligning time-series data from disparate sources, handling different sampling frequencies, and creating derived features that enhance predictive power. While ETL or ELT pipelines are instrumental in this structuring, the focus at this stage is on the transformational logic: aggregating, windowing, and engineering features to create a curated training dataset. Cloud platforms such as AWS IoT or Microsoft Azure should be used to integrate data from different sources; encryption and authentication protocols such as OPC UA should be used to ensure data security.
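As a rough sketch of the alignment and windowing step described above, the following aggregates streams with different sampling frequencies into fixed time windows and keeps only the windows present in every stream. The stream names, sample values, window length, and the choice of the mean as the aggregate are assumptions made for illustration.

```python
from collections import defaultdict
from statistics import mean


def window_mean(samples, window_s):
    """Aggregate (timestamp, value) samples into fixed windows of window_s seconds.

    Returns a dict mapping each window start time to the mean of its values.
    """
    buckets = defaultdict(list)
    for timestamp, value in samples:
        start = (timestamp // window_s) * window_s
        buckets[start].append(value)
    return {start: mean(values) for start, values in sorted(buckets.items())}


def align(streams, window_s):
    """Build one row per window that is present in every stream."""
    aggregated = {name: window_mean(s, window_s) for name, s in streams.items()}
    common = set.intersection(*(set(a) for a in aggregated.values()))
    return [
        {"window_start": start,
         **{name: aggregated[name][start] for name in aggregated}}
        for start in sorted(common)
    ]


# Hypothetical streams: vibration sampled every second, temperature every 2 s.
streams = {
    "vibration": [(0, 1.0), (1, 3.0), (2, 2.0), (3, 4.0), (4, 6.0)],
    "temperature": [(0, 70.0), (2, 72.0)],
}
training_rows = align(streams, window_s=2)
for row in training_rows:
    print(row)
```

Dropping windows that are missing from any stream is the simplest policy; interpolating the slower stream instead would keep more rows at the cost of introducing estimated values.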

5.3.3. Data Governance and Management

- Data Security and Access Control. Industrial data assets often comprise sensitive operational intelligence and must be protected against unauthorized access and cyber threats. A comprehensive security framework is essential, incorporating encryption (e.g., AES-256) and authentication protocols (e.g., OPC UA) for data in transit and at rest. This must be supplemented with rigorous role-based access control systems and consistently updated security patches to mitigate evolving risks.

- Long-term Data Storage and Lifecycle Management. The continuous refinement of AI models depends on the systematic storage and management of historical data. Implementing scalable, cloud-based data warehouses (e.g., Amazon S3, Google BigQuery, or Azure Data Lake) enables cost-effective long-term archiving and facilitates efficient analysis of large historical datasets. A defined data lifecycle policy ensures that data are retained, archived, and purged according to value, maintaining system performance and relevance for future model retraining.

5.3.4. Data Quality Assessment for ML-Based Models

5.4. Utilizing ML-Based Techniques

5.4.1. Supervised Learning for Predictive Modeling

- Classification: For tasks such as equipment state determination or part inspection, algorithms like Support Vector Machines (SVMs), Adaptive Neuro-Fuzzy Inference Systems (ANFIS), and Random Forests are highly effective. Researchers often combine such classifiers to boost their performance. A common approach is to pair them with low-cost sensors, including RGB cameras, accelerometers, and gyroscopes. This combination, especially when powered by DL algorithms like CNNs, enables sophisticated visual monitoring. To enhance the accuracy and robustness of fault detection and diagnosis in an industrial steam turbine, Salahshoor et al. [90] developed a hybrid framework fusing an ANFIS and an SVM via an ordered weighted averaging operator. This data fusion strategy capitalized on the complementary strengths of both classifiers, yielding a system that outperformed either classifier used in isolation. The results demonstrated tangible improvements, including a reduction in diagnosis time for key faults (for example, from 32 to 13 samples for a thermocouple fault) and the elimination of specific misclassifications, thereby increasing overall diagnostic reliability and reducing the potential for false alarms. Another example is study [91], where a low-cost IMU sensor (MPU6050) capturing three-axis accelerometer and gyroscope data was used to monitor the condition of a computer fan. Instead of relying on expensive or specialized hardware, the authors transformed the multi-axis vibration signals into time-frequency spectrograms using the Short-Time Fourier Transform. These spectrograms were then combined into a single RGB image, effectively converting vibration analysis into a visual recognition task. This RGB image was processed using a CNN, which classified the fan’s operational state (such as normal, idle, or faulty) with high accuracy. This approach demonstrates how low-cost sensors, when paired with DL techniques like CNNs, can enable sophisticated visual monitoring and fault diagnosis without the need for complex or expensive sensing systems.

- Regression: For predicting continuous variables (e.g., temperature), methods like linear regression and tree-based algorithms are standard. A critical application is in accurate fault diagnosis, where surveys indicate SVM-based algorithms (39% of studies) and ANN-based DL (34% of studies) are most prevalent [26]. The practical implementation and enhancement of these methodologies are well illustrated in recent research on tree-based approaches, which demonstrate both robust predictive capability for continuous parameters and strong performance in diagnostic frameworks. The application of tree-based models for forecasting continuous parameters is demonstrated by Tran et al. [92], who employed Regression Trees for one-step-ahead prediction of vibration amplitude in a low methane compressor. Their work shows the viability of tree-based models in time-series forecasting, a critical step for anticipating machine degradation. Furthermore, the flexibility of these models enables their enhancement and integration into sophisticated diagnostic frameworks. For instance, Li et al. [93] developed an improved Decision Tree-based method incorporating virtual sensor-based fault indicators for diagnosing faults in variable refrigerant flow systems. Their hybrid approach, combining physical knowledge with data-driven learning, provided more reliable diagnosis results compared to several other tree-based data-driven models, demonstrating a pathway to augment diagnostic capability. The robustness and high accuracy achievable with tree-based ensembles are further emphasized by Noura et al. [94]. Their bi-phase framework, leveraging an ensemble of tree-based classifiers including Random Forest and XGBoost, achieved perfect accuracy in both detection and diagnosis of faults in a diesel engine system. Notably, their feature importance analysis revealed that optimal performance could be attained with a minimal feature set, underscoring the method’s efficiency and interpretability. These findings collectively demonstrate that tree-based algorithms constitute a versatile toolkit, capable of addressing the interconnected challenges of continuous state prediction and discrete fault classification with high precision, thereby enriching the ecosystem of machine learning techniques in industrial prognostics.
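For readers unfamiliar with the ordered weighted averaging (OWA) operator mentioned in the classification item above, the following is a generic sketch of how it can fuse per-class scores from two classifiers. It is not the configuration used in [90]: the classifier outputs, the class names, and the weight vector are hypothetical. The defining property of OWA is that weights are applied to the scores after sorting them in descending order, not to specific classifiers.

```python
def owa(scores, weights):
    """Ordered weighted averaging: weights apply to scores sorted descending."""
    if len(scores) != len(weights):
        raise ValueError("scores and weights must have the same length")
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    ordered = sorted(scores, reverse=True)
    return sum(w * s for w, s in zip(weights, ordered))


def fuse(classifier_outputs, weights):
    """Fuse per-class scores from several classifiers.

    Returns the class with the highest fused score and all fused scores.
    """
    classes = classifier_outputs[0].keys()
    fused = {
        c: owa([output[c] for output in classifier_outputs], weights)
        for c in classes
    }
    return max(fused, key=fused.get), fused


# Hypothetical per-class scores from two classifiers for one sample.
scores_a = {"normal": 0.6, "fault": 0.4}
scores_b = {"normal": 0.3, "fault": 0.7}

# Hypothetical weights that emphasize the larger of the two scores.
label, fused_scores = fuse([scores_a, scores_b], weights=[0.7, 0.3])
print(label, fused_scores)
```

With weights `[1, 0]` the operator reduces to the maximum, with `[0, 1]` to the minimum, and with equal weights to the arithmetic mean, which is what makes it a tunable fusion rule.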

5.4.2. Unsupervised Learning for Data Exploration

- Clustering: Algorithms such as k-means and DBSCAN are used to group similar data points, identifying natural patterns or operational regimes. These methods are particularly valuable in industrial process monitoring, where they help uncover underlying structures in high-dimensional sensor data without requiring pre-labeled datasets. For instance, Thomas et al. [95] evaluated various clustering techniques, including k-means, DBSCAN, Balanced Iterative Reducing and Clustering using Hierarchies (BIRCH), and mean shift, combined with dimensionality reduction methods like PCA and ICA. Their study demonstrated that DBSCAN effectively identified fault states in the Tennessee Eastman Process and an industrial separation tower, even when fault labels were unknown a priori. Similarly, Bagherzade et al. [96] proposed an ensemble clustering approach to detect operational modes in industrial gas turbines. By aggregating multiple partitions from diverse algorithms (e.g., k-means, hierarchical clustering, DBSCAN, and others) and applying a consensus function, their method identified consistent clusters corresponding to distinct operational states such as idle, partial load, and full load. This ensemble strategy improved robustness and enabled the discovery of sub-operational modes, providing a scalable framework for real-time process monitoring and knowledge discovery in complex industrial systems. The systematic review by Chaudhry et al. [97] provided a comprehensive analysis of unsupervised clustering algorithms, evaluating their strengths and weaknesses for pattern identification in complex data. This overview is particularly valuable for industrial applications, offering guidance on selecting appropriate algorithms like DBSCAN and k-means for process monitoring and operational regime detection in high-dimensional sensor data.

- Anomaly Detection: Techniques like one-class SVM, isolation forest, and AEs are vital for identifying rare events or deviations from normal operation, which is crucial for predictive maintenance. These one-class methods are particularly valuable in industrial settings where labeled fault data are scarce, allowing models to be trained exclusively on normal operating data to effectively detect anomalies [82,84]. For instance, AEs achieve high detection performance with F1-scores up to 93% on industrial datasets like UNSW-NB15, making them suitable for complex pattern recognition in sensor data and images [82]. Variational Autoencoders (VAEs) further enhance this capability by learning probabilistic representations, improving generalization on diverse operational data [84]. In contrast, Isolation Forest offers superior computational efficiency with inference times as low as 1.3 ms per sample, ideal for real-time monitoring applications despite slightly lower accuracy (F1-score: 91%) [82]. One-Class SVM provides a balanced approach with a 92% F1-score and moderate latency (2.8 ms), effective for boundary-based anomaly detection in multidimensional sensor data [82]. The choice of method depends on operational constraints: deep learning models (AEs/VAEs) for accuracy-critical applications versus lightweight methods (Isolation Forest) for resource-constrained environments.
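As a small illustration of the clustering item above, the following is a plain-Python sketch of k-means (Lloyd's algorithm) applied to hypothetical gas turbine operating points. The data, the two features (load in % and exhaust temperature in deg C), and the caller-supplied initial centroids are assumptions; the features are left unscaled here for readability, whereas in practice they would normally be standardized first. This is not the ensemble method of [96].

```python
import math


def kmeans(points, centroids, iterations=10):
    """Lloyd's algorithm with caller-supplied initial centroids."""
    centroids = [tuple(c) for c in centroids]
    labels = []
    for _ in range(iterations):
        # Assignment step: nearest centroid by Euclidean distance.
        labels = [
            min(range(len(centroids)), key=lambda k: math.dist(p, centroids[k]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for k, old in enumerate(centroids):
            members = [p for p, label in zip(points, labels) if label == k]
            if members:
                new_centroids.append(
                    tuple(sum(axis) / len(members) for axis in zip(*members))
                )
            else:
                new_centroids.append(old)  # keep the centroid of an empty cluster
        if new_centroids == centroids:
            break  # converged: centroids no longer move
        centroids = new_centroids
    return labels, centroids


# Hypothetical operating points: (load in %, exhaust temperature in deg C).
points = [(5, 300), (7, 310), (50, 450), (55, 460), (95, 600), (98, 610)]
initial = [(0, 300), (50, 450), (100, 600)]  # assumed starting guesses
labels, centroids = kmeans(points, initial)
print(labels)
print(centroids)
```

The three resulting groups can be read as idle, partial load, and full load regimes, found without any labels.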

5.4.3. Deep Learning for Complex Pattern Recognition

- Time-Series Analysis: RNNs, particularly LSTM, and RC networks are standard for analyzing temporal data like sensor streams [98,99]. For example, Lei et al. [76] used a novel self-supervised deep LSTM network for industrial temperature prediction in aluminum processing, which achieved a testing Root Mean Square Error (RMSE) as low as 0.0078 and a prediction accuracy of up to 89.5% in the middle temperature zone, demonstrating effective performance with limited labeled data. In another example, Zhang et al. [78] used an LSTM network optimized with orthogonal experimental design and feature engineering, which reduced the prediction RMSE by up to 97.6% compared to the autoregressive integrated moving average (ARIMA) model on sensor data from an industrial pump. These LSTM-based methods thus delivered a substantial, not merely incremental, gain in the accuracy of predicting the condition of industrial equipment on real data, which indicates their practical value for predictive maintenance in I4.0.

- Complex Signal Processing: DL is particularly powerful for tasks involving vibration, sound, and image analysis, where raw data contain intricate patterns [100]. For example, in [79], a CNN-based method for online weld defect detection achieved a mean classification accuracy of 99.38%, outperforming the authors’ previous audio-based method (87.16% accuracy). In [81], a Deep CNN with data augmentation was used for weld defect detection, achieving 99.01% accuracy and reducing overfitting on a dataset of 9680 images.
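Since the time-series results above are reported as RMSE values and relative RMSE reductions, the following sketch shows how both quantities are computed. All numbers are hypothetical and chosen only to make the arithmetic easy to follow; they are unrelated to the results in [76] or [78].

```python
import math


def rmse(actual, predicted):
    """Root mean square error between two equally long sequences."""
    if len(actual) != len(predicted):
        raise ValueError("sequences must have the same length")
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))


def relative_reduction(baseline_error, model_error):
    """Percentage by which model_error is lower than baseline_error."""
    return 100.0 * (baseline_error - model_error) / baseline_error


# Hypothetical measurements and two sets of predictions.
actual = [10.0, 12.0, 14.0, 16.0]
baseline_pred = [12.0, 14.0, 16.0, 18.0]  # every prediction is off by 2.0
model_pred = [10.5, 11.5, 14.5, 15.5]     # every prediction is off by 0.5

baseline_rmse = rmse(actual, baseline_pred)
model_rmse = rmse(actual, model_pred)
reduction = relative_reduction(baseline_rmse, model_rmse)
print(baseline_rmse, model_rmse, reduction)
```

Note that RMSE carries the units of the predicted quantity, so values from different studies are comparable only when the targets are scaled the same way.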

5.4.4. Semi-Supervised Learning for Industrial Fault Detection and Diagnosis

5.4.5. Reinforcement Learning in Industrial Process Optimization

5.4.6. Specialized Applications: Soft Sensors and Dimensionality Reduction

- Soft Sensors: These are mathematical models that estimate parameters which are difficult to measure directly (e.g., real-time chemical concentration) using data from other, more affordable sensors (e.g., temperature, pressure). Data-driven soft sensors, which build models using regression, ANNs, or SVM to relate input data to a target parameter, are a promising area [112,113,114]. This is closely related to the problem of system identification, where ML also shows significant promise [100,115]. For example, Curreri et al. [116] used transfer learning with RNN and LSTM-based soft sensors, achieving near-optimal performance on a target industrial process while reducing design time by over 100 h compared to full re-training.

- Dimensionality Reduction: Industrial processes often generate vast amounts of data. Dimensionality reduction simplifies models, accelerates training, and improves interpretability by preserving key information while reducing the number of features. Methods like PCA, t-distributed stochastic neighbor embedding (t-SNE), and AEs are used for sensor data analysis, failure prediction, and quality control, as they help extract relevant parameters and reduce noise.
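The idea of a data-driven soft sensor described above can be sketched with the simplest of the listed model families, a linear regression fitted by least squares. The sketch assumes a hypothetical process in which a hard-to-measure concentration depends on temperature and pressure. All names, readings, and coefficients are invented for illustration, and a real soft sensor would need validation on plant data.

```python
def fit_linear_soft_sensor(inputs, targets):
    """Least-squares fit of target ~ b0 + b1*x1 + ... via the normal equations."""
    rows = [[1.0] + list(x) for x in inputs]  # prepend the intercept term
    n = len(rows[0])
    # Augmented matrix [X^T X | X^T y].
    a = [
        [sum(r[i] * r[j] for r in rows) for j in range(n)]
        + [sum(r[i] * y for r, y in zip(rows, targets))]
        for i in range(n)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("inputs are collinear; cannot fit")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(n):
            if r != col:
                factor = a[r][col] / a[col][col]
                a[r] = [v - factor * p for v, p in zip(a[r], a[col])]
    return [a[i][n] / a[i][i] for i in range(n)]


def predict(coeffs, x):
    """Estimate the target from cheap sensor readings x."""
    return coeffs[0] + sum(c * v for c, v in zip(coeffs[1:], x))


# Hypothetical (temperature, pressure) readings and lab-measured concentrations.
readings = [(60.0, 1.0), (65.0, 1.2), (70.0, 1.1), (75.0, 1.5), (80.0, 1.3)]
concentration = [2.0 + 0.05 * t - 1.0 * p for t, p in readings]  # synthetic, noise-free

coeffs = fit_linear_soft_sensor(readings, concentration)
estimate = predict(coeffs, (72.0, 1.4))
print(coeffs, estimate)
```

Because the synthetic targets are noise-free, the fit recovers the generating coefficients. With real data, the same structure applies, but the model class (ANN, SVM, RNN) and the validation procedure matter far more than the fitting routine.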

6. Limitations and Prospects for the Use of AI Data in Industry

6.1. Limitations of the Use of Data for AI in Industry and Possible Ways to Overcome Them

6.2. Prospects for the Use of AI Data in Industry

- Start with a Critical Asset: Focus on a single, high-value piece of equipment rather than a full production line to demonstrate value and manage scope.

- Ensure Foundational Data Quality: Prioritize the collection of clean, consistent, and time-synchronized data from a few critical sensors over amassing large volumes of unstructured data.

- Establish Robust Data Integration: Build a simple, reliable, and automated pipeline from the asset to a central data store (e.g., a cloud database) to ensure the digital twin receives a live, trustworthy data feed.
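The requirement for time-synchronized data from a few critical sensors can be illustrated with a small alignment routine. This is a sketch under stated assumptions: timestamps are integer seconds, each stream is sorted by time, and a reading older than `max_age` counts as stale. The stream names, values, and the `max_age` rule are hypothetical choices rather than a prescribed method.

```python
from bisect import bisect_right


def latest_before(samples, t, max_age):
    """Most recent value at or before time t, or None if missing or stale."""
    times = [ts for ts, _ in samples]
    i = bisect_right(times, t) - 1
    if i < 0 or t - times[i] > max_age:
        return None
    return samples[i][1]


def synchronize(streams, start, stop, step, max_age):
    """Align several (timestamp, value) streams onto one common time grid."""
    rows = []
    t = start
    while t <= stop:
        values = {name: latest_before(s, t, max_age) for name, s in streams.items()}
        # Keep a grid point only when every sensor has a fresh reading.
        if all(v is not None for v in values.values()):
            rows.append({"t": t, **values})
        t += step
    return rows


# Hypothetical streams sampled at different, irregular instants.
streams = {
    "temperature": [(0, 70.1), (2, 70.4), (4, 70.9), (6, 71.3)],
    "vibration": [(1, 0.12), (3, 0.15), (9, 0.40)],
}
rows = synchronize(streams, start=0, stop=8, step=2, max_age=2)
for row in rows:
    print(row)
```

Dropping grid points with stale readings trades data volume for consistency, which matches the priority of clean, synchronized data over large unstructured volumes.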

7. Conclusions

- Proliferation of Digital Twins: The evolution from static models to dynamic, self-learning digital twins will enable real-time simulation, predictive what-if analysis, and autonomous optimization of physical assets.

- Rise of Knowledge Automation and Generative AI: Beyond predictive analytics, AI systems will increasingly codify expert knowledge and generate novel process optimizations, shifting their role from decision-support to proactive decision-making.

- Ubiquitous Cloud-Edge Integration: The maturation of hybrid cloud-edge architectures will seamlessly distribute computational load, facilitating scalable AI deployment while ensuring low-latency control for critical operations.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AE | Autoencoder |
| AI | Artificial Intelligence |
| ANFIS | Adaptive Neuro-Fuzzy Inference System |
| BIRCH | Balanced Iterative Reducing and Clustering using Hierarchies |
| CRM | Customer Relationship Management |
| CRISP-DM | Cross-industry Standard Process for Data Mining |
| DBSCAN | Density-based Spatial Clustering of Applications with Noise |
| DDPG | Deep Deterministic Policy Gradient |
| DL | Deep Learning |
| DQN | Deep Q-Network |
| ELT | Extract, Load, and Transform |
| ERP | Enterprise Resource Planning |
| ETL | Extract, Transform, and Load |
| I4.0 | Industry 4.0 |
| IoT | Internet of Things |
| IIoT | Industrial Internet of Things |
| ICA | Independent Component Analysis |
| LSTM | Long Short-Term Memory |
| MES | Manufacturing Execution System |
| ML | Machine Learning |
| NCS | Networked Control System |
| OPC UA | Open Platform Communications Unified Architecture |
| PCA | Principal Component Analysis |
| PPO | Proximal Policy Optimization |
| RL | Reinforcement Learning |
| RL-RTO | RL-Based Real-Time Optimization |
| RC | Reservoir Computing |
| RMSE | Root Mean Square Error |
| RNN | Recurrent Neural Network |
| SCADA | Supervisory Control and Data Acquisition |
| SME | Small and Medium-sized Enterprise |
| SMB | Small and Medium-sized Business |
| SSL | Semi-supervised Learning |
| SVM | Support Vector Machine |
| TD3 | Twin Delayed DDPG |
| t-SNE | t-distributed Stochastic Neighbor Embedding |
| VAE | Variational Autoencoder |
| WSAN | Wireless Sensor and Actuator Network |

References

- Lu, Y. Industry 4.0: A survey on technologies, applications and open research issues. J. Ind. Inf. Integr. 2017, 6, 1–10. [Google Scholar] [CrossRef]

- Lee, J.; Bagheri, B.; Kao, H.A. A cyber-physical systems architecture for industry 4.0-based manufacturing systems. Manuf. Lett. 2015, 3, 18–23. [Google Scholar] [CrossRef]

- Jardim-Goncalves, R.; Romero, D.; Grilo, A. Factories of the future: Challenges and leading innovations in intelligent manufacturing. Int. J. Comput. Integr. Manuf. 2017, 30, 4–14. [Google Scholar]

- Indri, M.; Grau, A.; Ruderman, M. Guest editorial special section on recent trends and developments in industry 4.0 motivated robotic solutions. IEEE Trans. Ind. Inform. 2018, 14, 1677–1680. [Google Scholar] [CrossRef]

- Qiu, J.; Gao, H.; Chow, M.Y. Networked control and industrial applications [special section introduction]. IEEE Trans. Ind. Electron. 2015, 63, 1203–1206. [Google Scholar] [CrossRef]

- Caiado, R.G.G.; Machado, E.; Santos, R.S.; Thomé, A.M.T.; Scavarda, L.F. Sustainable I4.0 integration and transition to I5.0 in traditional and digital technological organisations. Technol. Forecast. Soc. Change 2024, 207, 123582. [Google Scholar] [CrossRef]

- Hlophe, M.C.; Maharaj, B.T. From cyber–physical convergence to digital twins: A review on edge computing use case designs. Appl. Sci. 2023, 13, 13262. [Google Scholar] [CrossRef]

- Raptis, T.P.; Passarella, A.; Conti, M. Data management in industry 4.0: State of the art and open challenges. IEEE Access 2019, 7, 97052–97093. [Google Scholar] [CrossRef]

- Conti, M.; Das, S.K.; Bisdikian, C.; Kumar, M.; Ni, L.M.; Passarella, A.; Roussos, G.; Tröster, G.; Tsudik, G.; Zambonelli, F. Looking ahead in pervasive computing: Challenges and opportunities in the era of cyber–physical convergence. Pervasive Mob. Comput. 2012, 8, 2–21. [Google Scholar] [CrossRef]

- Qi, Q.; Tao, F. Digital twin and big data towards smart manufacturing and industry 4.0: 360 degree comparison. IEEE Access 2018, 6, 3585–3593. [Google Scholar] [CrossRef]

- Lai, Y.C. Digital twins of nonlinear dynamical systems: A perspective. Eur. Phys. J. Spec. Top. 2024, 233, 1391–1399. [Google Scholar] [CrossRef]

- Hramov, A.E.; Kulagin, N.; Andreev, A.V.; Pisarchik, A.N. Forecasting coherence resonance in a stochastic Fitzhugh–Nagumo neuron model using reservoir computing. Chaos Solitons Fractals 2024, 178, 114354. [Google Scholar] [CrossRef]

- Kong, L.W.; Weng, Y.; Glaz, B.; Haile, M.; Lai, Y.C. Reservoir computing as digital twins for nonlinear dynamical systems. Chaos Interdiscip. J. Nonlinear Sci. 2023, 33, 033111. [Google Scholar] [CrossRef]

- Andreev, A.V.; Pisarchik, A.N.; Kulagin, N.; Jaimes-Reátegui, R.; Huerta-Cuellar, G.; Badarin, A.A.; Hramov, A.E. Stochastic cloning of dynamical systems with hidden variables. Phys. Rev. E 2025, 112, 015303. [Google Scholar] [CrossRef]

- Human, C.; Basson, A.H.; Kruger, K. A design framework for a system of digital twins and services. Comput. Ind. 2023, 144, 103796. [Google Scholar] [CrossRef]

- Schluse, M.; Rossmann, J. From simulation to experimentable digital twins: Simulation-based development and operation of complex technical systems. In Proceedings of the 2016 IEEE International Symposium on Systems Engineering (ISSE), Edinburgh, UK, 3–5 October 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–6. [Google Scholar]

- Wang, L.; Törngren, M.; Onori, M. Current status and advancement of cyber-physical systems in manufacturing. J. Manuf. Syst. 2015, 37, 517–527. [Google Scholar] [CrossRef]

- Qin, S.J.; Badgwell, T.A. A survey of industrial model predictive control technology. Control Eng. Pract. 2003, 11, 733–764. [Google Scholar] [CrossRef]

- Cupek, R.; Ziebinski, A.; Zonenberg, D.; Drewniak, M. Determination of the machine energy consumption profiles in the mass-customised manufacturing. Int. J. Comput. Integr. Manuf. 2018, 31, 537–561. [Google Scholar] [CrossRef]

- Sisinni, E.; Saifullah, A.; Han, S.; Jennehag, U.; Gidlund, M. Industrial internet of things: Challenges, opportunities, and directions. IEEE Trans. Ind. Inform. 2018, 14, 4724–4734. [Google Scholar] [CrossRef]

- Dean-Leon, E.; Ramirez-Amaro, K.; Bergner, F.; Dianov, I.; Cheng, G. Integration of robotic technologies for rapidly deployable robots. IEEE Trans. Ind. Inform. 2017, 14, 1691–1700. [Google Scholar] [CrossRef]

- Zhu, J.; Zou, Y.; Zheng, B. Physical-layer security and reliability challenges for industrial wireless sensor networks. IEEE Access 2017, 5, 5313–5320. [Google Scholar] [CrossRef]

- Raza, S.; Faheem, M.; Guenes, M. Industrial wireless sensor and actuator networks in industry 4.0: Exploring requirements, protocols, and challenges—A MAC survey. Int. J. Commun. Syst. 2019, 32, e4074. [Google Scholar] [CrossRef]

- Pang, Z.; Luvisotto, M.; Dzung, D. Wireless high-performance communications: The challenges and opportunities of a new target. IEEE Ind. Electron. Mag. 2017, 11, 20–25. [Google Scholar] [CrossRef]

- Otto, B.; Jürjens, J.; Schon, J.; Auer, S.; Menz, N.; Wenzel, S.; Cirullies, J. Industrial Data Space. Digital Souvereignity Over Data; Fraunhofer-Gesellschaft: Munich, Germany, 2016. [Google Scholar]

- Ge, Z.; Song, Z.; Ding, S.X.; Huang, B. Data mining and analytics in the process industry: The role of machine learning. IEEE Access 2017, 5, 20590–20616. [Google Scholar] [CrossRef]

- Wang, D. Building value in a world of technological change: Data analytics and industry 4.0. IEEE Eng. Manag. Rev. 2018, 46, 32–33. [Google Scholar] [CrossRef]

- Folgado, F.J.; Calderón, D.; González, I.; Calderón, A.J. Review of Industry 4.0 from the perspective of automation and supervision systems: Definitions, architectures and recent trends. Electronics 2024, 13, 782. [Google Scholar] [CrossRef]

- ISA–95; Enterprise—Control System Integration. International Society of Automation (ISA): Research Triangle Park, NC, USA, 1995.

- IEC 62264; Enterprise—Control System Integration. International Electrotechnical Commission (IEC): Geneva, Switzerland, 2013.

- Lucizano, C.; de Andrade, A.A.; Facó, J.F.B.; de Freitas, A.G. Revisiting the Automation Pyramid for the Industry 4.0. In Proceedings of the 2023 15th IEEE International Conference on Industry Applications (INDUSCON), São Bernardo do Campo, Brazil, 22–24 November 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1195–1198. [Google Scholar]

- Jirkovskỳ, V.; Obitko, M.; Mařík, V. Understanding data heterogeneity in the context of cyber-physical systems integration. IEEE Trans. Ind. Inform. 2016, 13, 660–667. [Google Scholar] [CrossRef]

- Trentesaux, D.; Borangiu, T.; Thomas, A. Emerging ICT concepts for smart, safe and sustainable industrial systems. Comput. Ind. 2016, 81, 1–10. [Google Scholar] [CrossRef]

- Lechevalier, D.; Narayanan, A.; Rachuri, S.; Foufou, S. A methodology for the semi-automatic generation of analytical models in manufacturing. Comput. Ind. 2018, 95, 54–67. [Google Scholar] [CrossRef]

- Cardin, O.; Ounnar, F.; Thomas, A.; Trentesaux, D. Future industrial systems: Best practices of the intelligent manufacturing and services systems (IMS2) French Research Group. IEEE Trans. Ind. Inform. 2016, 13, 704–713. [Google Scholar] [CrossRef]

- Gubbi, J.; Buyya, R.; Marusic, S.; Palaniswami, M. Internet of Things (IoT): A vision, architectural elements, and future directions. Future Gener. Comput. Syst. 2013, 29, 1645–1660. [Google Scholar] [CrossRef]

- Tsanousa, A.; Bektsis, E.; Kyriakopoulos, C.; González, A.G.; Leturiondo, U.; Gialampoukidis, I.; Karakostas, A.; Vrochidis, S.; Kompatsiaris, I. A review of multisensor data fusion solutions in smart manufacturing: Systems and trends. Sensors 2022, 22, 1734. [Google Scholar] [CrossRef]

- de Castro-Cros, M.; Velasco, M.; Angulo, C. Machine-learning-based condition assessment of gas turbines—A review. Energies 2021, 14, 8468. [Google Scholar] [CrossRef]

- Majhi, A.A.K.; Mohanty, S. A comprehensive review on internet of things applications in power systems. IEEE Internet Things J. 2024, 11, 34896–34923. [Google Scholar] [CrossRef]

- Fast, Efficient, Reliable: Artificial Intelligence in BMW Group Production. Retrieved 15 July 2019. Available online: https://www.press.bmwgroup.com/middle-east/article/detail/T0299271EN/fast-efficient-reliable:-artificial-intelligence-in-bmw-group-production?language=en (accessed on 26 October 2025).

- Shehab, E.; Sharp, M.; Supramaniam, L.; Spedding, T.A. Enterprise resource planning: An integrative review. Bus. Process Manag. J. 2004, 10, 359–386. [Google Scholar] [CrossRef]

- Dingorkar, S.; Kalshetti, S.; Shah, Y.; Lahane, P. Real-Time Data Processing Architectures for IoT Applications: A Comprehensive Review. In Proceedings of the 2024 First International Conference on Technological Innovations and Advance Computing (TIACOMP), Bali, Indonesia, 29–30 June 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 507–513. [Google Scholar]

- Khan, A.A.; Abonyi, J. Information sharing in supply chains–Interoperability in an era of circular economy. Clean. Logist. Supply Chain 2022, 5, 100074. [Google Scholar] [CrossRef]

- Gerig, I. Standardization and Automation as the Basis for Digitalization in Controlling at Siemens Building Technologies. In The Digitalization of Management Accounting: Use Cases from Theory and Practice; Springer: Berlin/Heidelberg, Germany, 2023; pp. 193–215. [Google Scholar]

- Powell, D. ERP systems in lean production: New insights from a review of lean and ERP literature. Int. J. Oper. Prod. Manag. 2013, 33, 1490–1510. [Google Scholar] [CrossRef]

- Pliatsios, D.; Sarigiannidis, P.; Lagkas, T.; Sarigiannidis, A.G. A survey on SCADA systems: Secure protocols, incidents, threats and tactics. IEEE Commun. Surv. Tutor. 2020, 22, 1942–1976. [Google Scholar] [CrossRef]

- Shojaeinasab, A.; Charter, T.; Jalayer, M.; Khadivi, M.; Ogunfowora, O.; Raiyani, N.; Yaghoubi, M.; Najjaran, H. Intelligent manufacturing execution systems: A systematic review. J. Manuf. Syst. 2022, 62, 503–522. [Google Scholar] [CrossRef]

- Buttle, F.; Maklan, S. Customer Relationship Management: Concepts and Technologies; Routledge: London, UK, 2019. [Google Scholar]

- Ikegwu, A.C.; Nweke, H.F.; Anikwe, C.V.; Alo, U.R.; Okonkwo, O.R. Big data analytics for data-driven industry: A review of data sources, tools, challenges, solutions, and research directions. Clust. Comput. 2022, 25, 3343–3387. [Google Scholar] [CrossRef]

- Manjunath, T.; Pushpa, S.; Hegadi, R.S.; Ananya Hathwar, K. A study on big data engineering using cloud data warehouse. In Data Engineering and Data Science: Concepts and Applications; Wiley: Hoboken, NJ, USA, 2023; pp. 49–69. [Google Scholar]

- Acosta, J.N.; Falcone, G.J.; Rajpurkar, P.; Topol, E.J. Multimodal biomedical AI. Nat. Med. 2022, 28, 1773–1784. [Google Scholar] [CrossRef] [PubMed]

- Karpov, O.E.; Pitsik, E.N.; Kurkin, S.A.; Maksimenko, V.A.; Gusev, A.V.; Shusharina, N.N.; Hramov, A.E. Analysis of publication activity and research trends in the field of ai medical applications: Network approach. Int. J. Environ. Res. Public Health 2023, 20, 5335. [Google Scholar] [CrossRef]

- Khorev, V.; Kiselev, A.; Badarin, A.; Antipov, V.; Drapkina, O.; Kurkin, S.; Hramov, A. Review on the use of AI-based methods and tools for treating mental conditions and mental rehabilitation. Eur. Phys. J. Spec. Top. 2025, 234, 4139–4158. [Google Scholar] [CrossRef]

- Steger, C.; Ulrich, M.; Wiedemann, C. Machine Vision Algorithms and Applications; John Wiley & Sons: Hoboken, NJ, USA, 2018. [Google Scholar]

- Davidson, G.P.; Ravindran, D.D. Technical review of apache flink for big data. Int. J. Aquat. Sci. 2021, 12, 3340–3346. [Google Scholar]

- Kumar, R.; Agrawal, N. Analysis of multi-dimensional Industrial IoT (IIoT) data in Edge–Fog–Cloud based architectural frameworks: A survey on current state and research challenges. J. Ind. Inf. Integr. 2023, 35, 100504. [Google Scholar] [CrossRef]

- Goknil, A.; Nguyen, P.; Sen, S.; Politaki, D.; Niavis, H.; Pedersen, K.J.; Suyuthi, A.; Anand, A.; Ziegenbein, A. A Systematic Review of Data Quality in CPS and IoT for Industry 4.0. ACM Comput. Surv. 2023, 55, 327. [Google Scholar] [CrossRef]

- Rahimi, M.; Jafari Navimipour, N.; Hosseinzadeh, M.; Moattar, M.H.; Darwesh, A. Toward the efficient service selection approaches in cloud computing. Kybernetes 2022, 51, 1388–1412. [Google Scholar] [CrossRef]

- Asim, M.; Wang, Y.; Wang, K.; Huang, P.Q. A review on computational intelligence techniques in cloud and edge computing. IEEE Trans. Emerg. Top. Comput. Intell. 2020, 4, 742–763. [Google Scholar] [CrossRef]

- Jardine, A.K.; Lin, D.; Banjevic, D. A review on machinery diagnostics and prognostics implementing condition-based maintenance. Mech. Syst. Signal Process. 2006, 20, 1483–1510. [Google Scholar] [CrossRef]

- Kim, W.; Sung, M. Standalone OPC UA wrapper for industrial monitoring and control systems. IEEE Access 2018, 6, 36557–36570. [Google Scholar] [CrossRef]

- Mowbray, M.; Vallerio, M.; Perez-Galvan, C.; Zhang, D.; Chanona, A.D.R.; Navarro-Brull, F.J. Industrial data science–a review of machine learning applications for chemical and process industries. React. Chem. Eng. 2022, 7, 1471–1509. [Google Scholar]

- Frey, C.W. A hybrid unsupervised learning strategy for monitoring complex industrial manufacturing processes. In Proceedings of the IECON 2023-49th Annual Conference of the IEEE Industrial Electronics Society, Singapore, 16–19 October 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–8. [Google Scholar]

- Barto, A.G. Reinforcement Learning: An Introduction. By Richard S. Sutton. SIAM Rev. 2021, 6, 423. [Google Scholar]

- Panzer, M.; Bender, B. Deep reinforcement learning in production systems: A systematic literature review. Int. J. Prod. Res. 2022, 60, 4316–4341. [Google Scholar] [CrossRef]

- Ramírez-Sanz, J.M.; Maestro-Prieto, J.A.; Arnaiz-González, Á.; Bustillo, A. Semi-supervised learning for industrial fault detection and diagnosis: A systemic review. ISA Trans. 2023, 143, 255–270. [Google Scholar] [CrossRef]

- Hramov, A.E.; Koronovskii, A.A.; Kurovskaya, M.K.; Moskalenko, O.I. Synchronization of spectral components and its regularities in chaotic dynamical systems. Phys. Rev. E Stat. Nonlinear Soft Matter Phys. 2005, 71, 056204. [Google Scholar] [CrossRef]

- Juran, J.M.; Gryna, F.M. Quality Planning and Analysis: From Product Development Through Usage; McGraw-Hill: New York, NY, USA, 1970. [Google Scholar]

- Eppler, M.J. Managing Information Quality: Increasing the Value of Information in Knowledge-Intensive Products and Processes; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2006. [Google Scholar]

- Wang, R.Y.; Strong, D.M. Beyond accuracy: What data quality means to data consumers. J. Manag. Inf. Syst. 1996, 12, 5–33. [Google Scholar] [CrossRef]

- West, N.; Gries, J.; Brockmeier, C.; Göbel, J.C.; Deuse, J. Towards integrated data analysis quality: Criteria for the application of industrial data science. In Proceedings of the 2021 IEEE 22nd International Conference on Information Reuse and Integration for Data Science (IRI), Las Vegas, NV, USA, 10–12 August 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 131–138. [Google Scholar]

- Chapman, P.; Clinton, J.; Kerber, R.; Khabaza, T.; Reinartz, T.; Shearer, C.; Wirth, R. CRISP-DM 1.0: Step-by-Step Data Mining Guide; SPSS Inc.: Chicago, IL, USA, 2000; Volume 9, pp. 1–73. [Google Scholar]

- North, K.; Kumta, G. Knowledge Management: Value Creation Through Organizational Learning; Springer: Berlin/Heidelberg, Germany, 2018. [Google Scholar]

- Wang, R.Y. A product perspective on total data quality management. Commun. ACM 1998, 41, 58–65. [Google Scholar] [CrossRef]

- Zhang, W.; Yang, D.; Wang, H. Data-driven methods for predictive maintenance of industrial equipment: A survey. IEEE Syst. J. 2019, 13, 2213–2227. [Google Scholar] [CrossRef]

- Lei, Y.; Karimi, H.R.; Chen, X. A novel self-supervised deep LSTM network for industrial temperature prediction in aluminum processes application. Neurocomputing 2022, 502, 177–185. [Google Scholar] [CrossRef]

- Wahid, A.; Breslin, J.G.; Intizar, M.A. Prediction of machine failure in industry 4.0: A hybrid CNN-LSTM framework. Appl. Sci. 2022, 12, 4221. [Google Scholar] [CrossRef]

- Zhang, W.; Guo, W.; Liu, X.; Liu, Y.; Zhou, J.; Li, B.; Lu, Q.; Yang, S. LSTM-based analysis of industrial IoT equipment. IEEE Access 2018, 6, 23551–23560. [Google Scholar] [CrossRef]

- Zhang, Z.; Wen, G.; Chen, S. Weld image deep learning-based on-line defects detection using convolutional neural networks for Al alloy in robotic arc welding. J. Manuf. Process. 2019, 45, 208–216. [Google Scholar] [CrossRef]

- Chen, Y.; Wang, J.; Wang, G. Intelligent welding defect detection model on improved r-cnn. IETE J. Res. 2023, 69, 9235–9244. [Google Scholar] [CrossRef]

- Madhav, M.; Ambekar, S.S.; Hudnurkar, M. Weld defect detection with convolutional neural network: An application of deep learning. Ann. Oper. Res. 2025, 350, 579–602. [Google Scholar] [CrossRef]

- Paolini, D.; Dini, P.; Soldaini, E.; Saponara, S. One-Class Anomaly Detection for Industrial Applications: A Comparative Survey and Experimental Study. Computers 2025, 14, 281. [Google Scholar] [CrossRef]

- ismail Hossain, M.; Sanim, S.; Kamruzzaman, S.; Yesmin, M.; Sayem, M.S.; Shufian, A. Anomaly Detection in Industrial Machinery Using Machine Learning and Deep Learning Techniques with Vibration Data for Predictive Maintenance. In Proceedings of the 2025 2nd International Conference on Advanced Innovations in Smart Cities (ICAISC), Jeddah, Saudi Arabia, 9–11 February 2025; IEEE: Piscataway, NJ, USA, 2025; pp. 1–6. [Google Scholar]

- Liso, A.; Cardellicchio, A.; Patruno, C.; Nitti, M.; Ardino, P.; Stella, E.; Renò, V. A review of deep learning-based anomaly detection strategies in industry 4.0 focused on application fields, sensing equipment, and algorithms. IEEE Access 2024, 12, 93911–93923. [Google Scholar] [CrossRef]

- Mahadevan, S.; Theocharous, G. Optimizing Production Manufacturing using Reinforcement Learning. In Proceedings of the AAAI Eleventh International FLAIRS Conference, Sanibel Island, FL, USA, 18–20 May 1998. [Google Scholar]

- Powell, K.M.; Machalek, D.; Quah, T. Real-time optimization using reinforcement learning. Comput. Chem. Eng. 2020, 143, 107077. [Google Scholar] [CrossRef]

- Khdoudi, A.; Masrour, T.; El Hassani, I.; El Mazgualdi, C. A Deep-Reinforcement-Learning-Based Digital Twin for Manufacturing Process Optimization. Systems 2024, 12, 38. [Google Scholar] [CrossRef]

- Rai, R.; Tiwari, M.K.; Ivanov, D.; Dolgui, A. Machine learning in manufacturing and industry 4.0 applications. Int. J. Prod. Res. 2021, 59, 4773–4778. [Google Scholar] [CrossRef]

- Yildirim, P.; Birant, D.; Alpyildiz, T. Data mining and machine learning in textile industry. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2018, 8, e1228. [Google Scholar] [CrossRef]

- Salahshoor, K.; Kordestani, M.; Khoshro, M.S. Fault detection and diagnosis of an industrial steam turbine using fusion of SVM (support vector machine) and ANFIS (adaptive neuro-fuzzy inference system) classifiers. Energy 2010, 35, 5472–5482. [Google Scholar] [CrossRef]

- Łuczak, D.; Brock, S.; Siembab, K. Cloud Based Fault Diagnosis by Convolutional Neural Network as Time–Frequency RGB Image Recognition of Industrial Machine Vibration with Internet of Things Connectivity. Sensors 2023, 23, 3755. [Google Scholar] [CrossRef] [PubMed]

- Tran, V.T.; Yang, B.S.; Oh, M.S.; Tan, A.C.C. Machine condition prognosis based on regression trees and one-step-ahead prediction. Mech. Syst. Signal Process. 2008, 22, 1179–1193. [Google Scholar] [CrossRef]

- Li, G.; Chen, H.; Hu, Y.; Wang, J.; Guo, Y.; Liu, J.; Li, H.; Huang, R.; Lv, H.; Li, J. An improved decision tree-based fault diagnosis method for practical variable refrigerant flow system using virtual sensor-based fault indicators. Appl. Therm. Eng. 2018, 129, 1292–1303. [Google Scholar] [CrossRef]

- Noura, H.N.; Allal, Z.; Salman, O.; Chahine, K. An optimized tree-based model with feature selection for efficient fault detection and diagnosis in diesel engine systems. Results Eng. 2025, 27, 106619. [Google Scholar] [CrossRef]

- Thomas, M.C.; Zhu, W.; Romagnoli, J.A. Data mining and clustering in chemical process databases for monitoring and knowledge discovery. J. Process Control 2018, 67, 160–175. [Google Scholar] [CrossRef]

- Bagherzade Ghazvini, M.; Sanchez-Marre, M.; Bahlio, E.; Angulo, C. Operational Modes Detection in Industrial Gas Turbines Using an Ensemble of Clustering Methods. Sensors 2021, 21, 8047. [Google Scholar] [CrossRef]

- Chaudhry, M.; Shafi, I.; Mahnoor, M.; Ramírez Vargas, D.L.; Thompson, E.B.; Ashraf, I. A Systematic Literature Review on Identifying Patterns Using Unsupervised Clustering Algorithms: A Data Mining Perspective. Symmetry 2023, 15, 1679. [Google Scholar] [CrossRef]

- Hüsken, M.; Stagge, P. Recurrent neural networks for time series classification. Neurocomputing 2003, 50, 223–235. [Google Scholar] [CrossRef]

- Butcher, J.B.; Verstraeten, D.; Schrauwen, B.; Day, C.R.; Haycock, P.W. Reservoir computing and extreme learning machines for non-linear time-series data analysis. Neural Netw. 2013, 38, 76–89. [Google Scholar] [CrossRef]

- Chiuso, A.; Pillonetto, G. System identification: A machine learning perspective. Annu. Rev. Control. Robot. Auton. Syst. 2019, 2, 281–304. [Google Scholar] [CrossRef]

- Javaid, M.; Haleem, A.; Suman, R. Digital twin applications toward industry 4.0: A review. Cogn. Robot. 2023, 3, 71–92. [Google Scholar] [CrossRef]

- Soleimani, M.; Campean, F.; Neagu, D. Diagnostics and prognostics for complex systems: A review of methods and challenges. Qual. Reliab. Eng. Int. 2021, 37, 3746–3778. [Google Scholar] [CrossRef]

- Cheng, S.; Quilodrán-Casas, C.; Ouala, S.; Farchi, A.; Liu, C.; Tandeo, P.; Fablet, R.; Lucor, D.; Iooss, B.; Brajard, J.; et al. Machine learning with data assimilation and uncertainty quantification for dynamical systems: A review. IEEE/CAA J. Autom. Sin. 2023, 10, 1361–1387. [Google Scholar] [CrossRef]

- Hramov, A.E.; Kulagin, N.; Pisarchik, A.N.; Andreev, A.V. Strong and weak prediction of stochastic dynamics using reservoir computing. Chaos Interdiscip. J. Nonlinear Sci. 2025, 35, 033140. [Google Scholar] [CrossRef]

- Zheng, S.; Zhao, J. A self-adaptive temporal-spatial self-training algorithm for semisupervised fault diagnosis of industrial processes. IEEE Trans. Ind. Inform. 2022, 18, 6700–6711. [Google Scholar] [CrossRef]

- He, Y.; Song, K.; Dong, H.; Yan, Y. Semi-supervised defect classification of steel surface based on multi-training and generative adversarial network. Opt. Lasers Eng. 2019, 122, 294–302. [Google Scholar] [CrossRef]

- Jiang, L.; Ge, Z.; Song, Z. Semi-supervised fault classification based on dynamic Sparse Stacked auto-encoders model. Chemom. Intell. Lab. Syst. 2017, 168, 72–83. [Google Scholar] [CrossRef]

- Razavi-Far, R.; Hallaji, E.; Farajzadeh-Zanjani, M.; Saif, M.; Kia, S.H.; Henao, H.; Capolino, G.A. Information fusion and semi-supervised deep learning scheme for diagnosing gear faults in induction machine systems. IEEE Trans. Ind. Electron. 2019, 66, 6331–6342. [Google Scholar] [CrossRef]

- Jia, X.; Tian, W.; Li, C.; Yang, X.; Luo, Z.; Wang, H. A dynamic active safe semi-supervised learning framework for fault identification in labeled expensive chemical processes. Processes 2020, 8, 105. [Google Scholar] [CrossRef]

- Xu, M.; Wang, Y. An imbalanced fault diagnosis method for rolling bearing based on semi-supervised conditional generative adversarial network with spectral normalization. IEEE Access 2021, 9, 27736–27747. [Google Scholar] [CrossRef]

- Liu, W.; Wang, P.; You, Y. Ensemble-based semi-supervised learning for milling chatter detection. Machines 2022, 10, 1013. [Google Scholar] [CrossRef]

- Jiang, Y.; Yin, S.; Dong, J.; Kaynak, O. A review on soft sensors for monitoring, control, and optimization of industrial processes. IEEE Sens. J. 2020, 21, 12868–12881. [Google Scholar] [CrossRef]

- Shokry, A.; Vicente, P.; Escudero, G.; Pérez-Moya, M.; Graells, M.; Espuña, A. Data-driven soft-sensors for online monitoring of batch processes with different initial conditions. Comput. Chem. Eng. 2018, 118, 159–179. [Google Scholar] [CrossRef]

- Sun, Q.; Ge, Z. A survey on deep learning for data-driven soft sensors. IEEE Trans. Ind. Inform. 2021, 17, 5853–5866. [Google Scholar] [CrossRef]

- Li, Z.; Andreev, A.; Hramov, A.; Blyuss, O.; Zaikin, A. Novel efficient reservoir computing methodologies for regular and irregular time series classification. Nonlinear Dyn. 2025, 113, 4045–4062. [Google Scholar] [CrossRef] [PubMed]

- Curreri, F.; Patanè, L.; Xibilia, M.G. RNN-and LSTM-based soft sensors transferability for an industrial process. Sensors 2021, 21, 823. [Google Scholar] [CrossRef]

- Yasin, A.; Pang, T.Y.; Cheng, C.T.; Miletic, M. A roadmap to integrate digital twins for small and medium-sized enterprises. Appl. Sci. 2021, 11, 9479. [Google Scholar] [CrossRef]

- Burinskienė, A.; Nalivaikė, J. Digital and sustainable (twin) transformations: A case of SMEs in the European Union. Sustainability 2024, 16, 1533. [Google Scholar] [CrossRef]

- Abolghasem, S.; Carpitella, S.; Mohan, G.T. Digital Twin Implementation in Small and Medium Size Enterprises: A Case Study. In Analytics Modeling in Reliability and Machine Learning and Its Applications; Springer: Berlin/Heidelberg, Germany, 2025; pp. 321–341. [Google Scholar]

- Pisarchik, A.N.; Maksimenko, V.A.; Andreev, A.V.; Frolov, N.S.; Makarov, V.V.; Zhuravlev, M.O.; Runnova, A.E.; Hramov, A.E. Coherent resonance in the distributed cortical network during sensory information processing. Sci. Rep. 2019, 9, 18325. [Google Scholar] [CrossRef]

- Pisarchik, A.N.; Hramov, A.E. Coherence resonance in neural networks: Theory and experiments. Phys. Rep. 2023, 1000, 1–57. [Google Scholar] [CrossRef]

- Peres, R.S.; Jia, X.; Lee, J.; Sun, K.; Colombo, A.W.; Barata, J. Industrial artificial intelligence in industry 4.0-systematic review, challenges and outlook. IEEE Access 2020, 8, 220121–220139. [Google Scholar] [CrossRef]

- Canese, L.; Cardarilli, G.C.; Di Nunzio, L.; Fazzolari, R.; Giardino, D.; Re, M.; Spanò, S. Multi-agent reinforcement learning: A review of challenges and applications. Appl. Sci. 2021, 11, 4948. [Google Scholar] [CrossRef]

- Bahrpeyma, F.; Reichelt, D. A review of the applications of multi-agent reinforcement learning in smart factories. Front. Robot. AI 2022, 9, 1027340. [Google Scholar] [CrossRef]

| Aspect | ETL (Extract, Transform, Load) | ELT (Extract, Load, Transform) |
|---|---|---|
| Processing Sequence | Data transformation occurs before loading into target system | Data transformation occurs after loading into target system |
| Transformation Location | Separate processing server/staging area | Within target data warehouse/lake |
| Data Volume Handling | Suitable for moderate volumes of structured data | Optimized for large volumes of structured and unstructured data |
| Flexibility | Limited flexibility; transformations are predefined | High flexibility; transformations can be modified post-loading |
| Real-time Processing | Challenging due to preprocessing requirements | More adaptable to real-time and streaming scenarios |
| Infrastructure Requirements | Requires substantial intermediate processing resources | Demands powerful target system with computational capacity |
| Data Latency | Higher latency due to staging transformations | Lower latency for raw data availability |
| Implementation Complexity | Moderate complexity with well-defined transformation rules | Higher complexity in managing transformations within target system |
| Cost Considerations | Higher intermediate infrastructure costs | Higher target system and storage costs |
| Typical Use Cases | Data warehousing, structured business intelligence | Big data analytics, data lakes, exploratory analysis |
| Industrial Applicability | Mature processes with stable data schemas | Evolving processes requiring analytical flexibility |
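The difference in processing sequence between ETL and ELT compared in the table above can be made concrete with a small sketch. It uses Python's built-in `sqlite3` module as a stand-in for the target system. The table names, column names, and sample readings are hypothetical, and an in-memory SQLite database is only an illustrative substitute for a real warehouse or data lake.

```python
import sqlite3

# Hypothetical raw sensor export; one reading is malformed on purpose.
raw_records = [("pump-1", "71.5"), ("pump-1", "n/a"), ("pump-2", "68.0")]


def run_etl(conn, records):
    """ETL: clean and convert in application code, then load only the result."""
    cleaned = []
    for asset, raw_value in records:
        try:
            cleaned.append((asset, float(raw_value)))
        except ValueError:
            continue  # malformed reading is discarded before loading
    conn.execute("CREATE TABLE etl_readings (asset TEXT, temp_c REAL)")
    conn.executemany("INSERT INTO etl_readings VALUES (?, ?)", cleaned)


def run_elt(conn, records):
    """ELT: load the raw text unchanged, then transform inside the target."""
    conn.execute("CREATE TABLE raw_readings (asset TEXT, raw_value TEXT)")
    conn.executemany("INSERT INTO raw_readings VALUES (?, ?)", records)
    # The transformation is a view, so it can be redefined after loading.
    conn.execute(
        "CREATE VIEW elt_readings AS "
        "SELECT asset, CAST(raw_value AS REAL) AS temp_c "
        "FROM raw_readings WHERE raw_value GLOB '[0-9]*'"
    )


conn = sqlite3.connect(":memory:")
run_etl(conn, raw_records)
run_elt(conn, raw_records)

query = "SELECT asset, temp_c FROM {} ORDER BY asset"
etl_rows = conn.execute(query.format("etl_readings")).fetchall()
elt_rows = conn.execute(query.format("elt_readings")).fetchall()
raw_count = conn.execute("SELECT COUNT(*) FROM raw_readings").fetchone()[0]
print(etl_rows, elt_rows, raw_count)
```

Both paths yield the same cleaned rows here, but only the ELT path keeps the malformed record in the target, which reflects the flexibility and storage-cost rows of the table.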

| Criterion | Description | Measurement/Evaluation |
|---|---|---|
| Access level | | |
| Accessibility | The data must be available through defined interfaces for further processing. | Evaluated as binary: available/not available. |
| Relevance | The data must be relevant to the purpose of the analysis. | Evaluated at three levels: insufficient, ideal, excessive. |
| Timeliness | The data should be available at the right time. | Evaluated as binary: timely/not timely. |
| Uniqueness | The data should be free of technical duplicates and redundancy, which is ensured by basic data integration [see Section 4.3]. | Number of duplicate and redundant records identified during analysis by duplicate-detection algorithms. |
| Consistency | The data should be consistent over time and between different sources, which is ensured by basic data integration [see Section 4.3]. | Checked by verifying that data from different systems do not conflict, are up to date, and contain no time gaps or anomalies. The number of inconsistencies between sources and time periods is assessed. |
| Validity | The data must conform to established rules (format, value ranges) and must not contain inconsistencies. | Evaluated as binary: valid/not valid. |
| Analytical level | | |
| Accuracy | The data must match the reference values. | Measured as the proportion of correct values in the total data. |
| Completeness | The data should be complete, with no omissions. | Measured as the proportion of non-missing (non-null) values. |
| Error-free | The data should be free of logical inconsistencies, as defined by the objectives of the analysis. | Evaluated through logical consistency checks, proportion of data passing validation, number of inconsistencies with reference data, automated tests, and anomaly analysis. |
| Value Added | Data should enable the creation of new information useful for analysis. | Evaluated through the cost-benefit ratio of the data. |
| Application level | | |
| Cost-effectiveness | Solutions must be economically justifiable. | Evaluated through the ratio of costs to value achieved. |
| Conciseness of presentation | The results of the analysis should be compact and understandable. | Evaluated through user surveys on ease of comprehension, the share of visualized data in the total data volume, and the number of key indicators in reports compared to the total data volume. |
| Consistency of presentation | Solutions should be homogeneous and compatible with previous data. | Evaluated through the number of errors or inconsistencies in data structure and the proportion of data validated against data homogeneity metrics. |
| Interpretability | Results should be presented in understandable terms and units. | Evaluated qualitatively through surveys. |
| Understandability | Decisions should be easily understandable for operational decision-making. | Evaluated through ad hoc interviews with experts. |
| Administrative level | | |
| Accessibility | The data must be available through defined interfaces for further processing. | Evaluated as binary: available/not available. |
| Relevance | The data must be relevant to the purpose of the analysis. | Evaluated at three levels: insufficient, ideal, excessive. |
| Timeliness | The data should be available at the right time. | Evaluated as binary: timely/not timely. |
| Uniqueness | The data should be free of technical duplicates and redundancy, which is ensured by basic data integration [see Section 4.3]. | Number of duplicate and redundant records identified during analysis by duplicate-detection algorithms. |
| Consistency | The data should be consistent over time and between different sources, which is ensured by basic data integration [see Section 4.3]. | Checked by verifying that data from different systems do not conflict, are up to date, and contain no time gaps or anomalies. The number of inconsistencies between sources and time periods is assessed. |
| Validity | The data must conform to established rules (format, value ranges) and must not contain inconsistencies. | Evaluated as binary: valid/not valid. |
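
Several of the quantitative criteria in the table can be computed directly from records. The following is a minimal sketch under stated assumptions: the sample records, the reference values, and the valid range are invented for illustration, completeness is taken as the proportion of values that are present (not `None`), and accuracy is computed only over records that have a reference value. None of the function names come from the article.

```python
from collections import Counter

# Hypothetical temperature records; None marks a missing value.
RECORDS = [
    {"id": 1, "temp_c": 21.5},
    {"id": 2, "temp_c": None},
    {"id": 3, "temp_c": 0.0},
    {"id": 3, "temp_c": 0.0},    # technical duplicate of the previous record
    {"id": 4, "temp_c": 480.0},  # outside the assumed valid range
]
REFERENCE = {1: 21.5, 3: 0.0, 4: 48.0}  # assumed reference values for the accuracy check
VALID_RANGE = (-40.0, 125.0)            # assumed rule for the validity check


def completeness(records, field):
    """Proportion of records whose field is present (not None)."""
    return sum(r[field] is not None for r in records) / len(records)


def duplicate_count(records):
    """Number of records that repeat an earlier, identical record."""
    counts = Counter(tuple(sorted(r.items())) for r in records)
    return sum(n - 1 for n in counts.values())


def is_valid(records, field, low, high):
    """Binary validity: True only if every present value lies within [low, high]."""
    return all(low <= r[field] <= high for r in records if r[field] is not None)


def accuracy(records, field, reference, tol=1e-6):
    """Proportion of reference-checked values that match the reference within tol."""
    checked = [r for r in records if r["id"] in reference and r[field] is not None]
    correct = sum(abs(r[field] - reference[r["id"]]) <= tol for r in checked)
    return correct / len(checked)


print("completeness:", completeness(RECORDS, "temp_c"))
print("duplicates:", duplicate_count(RECORDS))
print("valid:", is_valid(RECORDS, "temp_c", *VALID_RANGE))
print("accuracy:", accuracy(RECORDS, "temp_c", REFERENCE))
```

The record with `temp_c` equal to `0.0` counts as present in this sketch, since a zero reading is a legitimate measurement and only `None` is treated as an omission.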

| Industrial Task | ML Category | Key Methods | Strengths | Notes [Sources] |
|---|---|---|---|---|
| Predictive Maintenance | Supervised Learning | SVM, Random Forest, ANFIS | High accuracy in failure prediction | Prediction of equipment failures based on historical data from sensors (e.g., vibration, temperature, and other bearing data) [62,75] |
| | Deep Learning | RNN, LSTM, RC | Automated feature extraction from images; High precision | Sufficient increase in the accuracy of predicting the condition of industrial equipment [76,77,78] |
| Quality Control | Deep Learning | CNN, AE | Multivariate time-series forecasting | CNNs for defect detection outperformed traditional computer vision [79,80,81] |
| Anomaly Detection | Unsupervised Learning | One-Class SVM, Isolation Forest, AE & VAE | Effective with unlabeled data; Identifies novel failure modes | Improving industrial fault detection with one-class deep learning models trained solely on normal data, without needing labeled anomalies [63,82,83,84] |
| Process Optimization | Reinforcement Learning | DDPG, TD3, PPO, Q-learning & DQN | Autonomous real-time decision-making; No need for precise physical models; Handles complex state-action spaces | Enables self-improving systems through trial-and-error learning in simulation environments (digital twins); Reduces online computation by 87.7% compared to traditional optimization [85,86,87] |
| Soft Sensor | Semi-supervised Learning | Label Propagation, Semi-supervised AE | Reduces need for expensive labeled data; Leverages unlabeled process data | Boosting fault diagnosis accuracy by leveraging unlabeled data to augment scarce labeled examples [66] |
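
As an illustration of the trial-and-error learning listed for process optimization, the sketch below applies tabular Q-learning to a toy setpoint-tracking problem. Everything in it is an assumption made for illustration: the five discrete process levels, the target level, the reward (negative distance to the target), the hyperparameters, and the `step` function, which stands in for a simulator or digital twin. It is not the method of any cited study.

```python
import random

LEVELS = 5            # discretized process states 0..4 (hypothetical)
TARGET = 2            # desired operating level (hypothetical setpoint)
ACTIONS = (-1, 0, 1)  # decrease, hold, increase


def step(state, action):
    """Toy deterministic process model standing in for a simulator or digital twin."""
    next_state = min(LEVELS - 1, max(0, state + action))
    reward = -abs(next_state - TARGET)  # penalize distance from the setpoint
    return next_state, reward


def train(episodes=500, steps=20, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn a Q-table with epsilon-greedy exploration and the Q-learning update."""
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(LEVELS)]
    for _ in range(episodes):
        state = rng.randrange(LEVELS)
        for _ in range(steps):
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))  # explore
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[state][i])  # exploit
            next_state, reward = step(state, ACTIONS[a])
            # Q-learning update: bootstrap from the best action in the next state.
            q[state][a] += alpha * (reward + gamma * max(q[next_state]) - q[state][a])
            state = next_state
    return q


def greedy_policy(q):
    """Best known action for each state according to the learned Q-table."""
    return [ACTIONS[max(range(len(ACTIONS)), key=lambda i: row[i])] for row in q]


policy = greedy_policy(train())
print(policy)
```

With these settings the learned greedy policy should increase the level below the target, hold at the target, and decrease above it. Industrial settings with continuous states and actions would call for the function-approximation methods named in the table (e.g., DQN, DDPG, TD3, PPO) rather than a lookup table.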

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hramov, A.E.; Pisarchik, A.N. Big Data Management and Quality Evaluation for the Implementation of AI Technologies in Smart Manufacturing. Appl. Sci. 2025, 15, 11905. https://doi.org/10.3390/app152211905
