Abstract

The widespread use of deepfake technologies has increased the demand for accurate and effective detection methods. This study presents a novel deepfake detection framework that utilizes meta-heuristic feature selection to enhance classification performance. The performance of the Artificial Hummingbird Algorithm (AHA), Polar Lights Optimization (PLO), and their hybrid model, AHA-PLO, is investigated. The hybrid model aims to conduct a more effective search in the feature space by combining AHA’s global exploration ability with PLO’s local exploitation precision. Experimental evaluations conducted on two benchmark datasets, FaceForensics++ (FF++) and Celeb-DF (CDF), demonstrate that the proposed AHA-PLO model consistently outperforms its individual components, achieving state-of-the-art AUC scores of 99.36% on FF++ and 98.78% on CDF. These findings support the hybrid model’s potential as a robust and generalizable solution for deepfake video detection.

1. Introduction

1.1. Background and Motivation

Recent rapid developments in deepfake technology have created significant threats in fields such as cybersecurity, digital media, and digital forensics. The increasing sophistication of the artificial intelligence-based methods used to produce deepfakes makes fake content harder to detect and increases the need for reliable verification mechanisms [1]. Most deepfake detection strategies rely heavily on deep hierarchical feature learning and transfer learning, employing well-established architectures such as Xception, ResNet50, and Inception [2]. However, these methods struggle to generalize across datasets, and their high computational cost limits their applicability [3]. This situation highlights the need for more effective and computationally efficient feature selection methods in deepfake detection.

Meta-heuristic optimization algorithms are widely used for feature selection in high-dimensional datasets and can help balance model accuracy against computational cost [4]. In this context, the artificial hummingbird algorithm (AHA) [5] and polar lights optimization (PLO) [6] have performed well on complex optimization problems. However, when applied individually, either algorithm may fail to balance exploration and exploitation adequately in high-dimensional feature spaces and may converge prematurely to suboptimal solutions. The primary motivation of this study is to enhance the accuracy and generalization capability of deepfake detection systems by integrating AHA and PLO into a hybrid framework. This integration aims to provide a more balanced and efficient feature selection mechanism that better differentiates manipulated content. Furthermore, the study provides a comprehensive analysis of the proposed method’s efficacy in distinguishing synthetic media from authentic content.

1.2. Related Works

Optimization is regarded as a subfield of mathematics and computer science that investigates methods and strategies for finding the optimal solution to engineering problems [7]. Priya et al. proposed a hybrid model, the competitive swarm and sunflower optimization algorithm (CSSFOA), for fake content detection [8]. In their study, faces were detected using the Viola-Jones algorithm, and manipulations within the detected faces were then identified through a random multimodel deep learning (RMDL) approach. The RMDL model was trained using the proposed hybrid CSSFOA framework, enhancing its ability to detect fraudulent content. Another study on the hybrid use of meta-heuristic algorithms combined particle swarm optimization (PSO) and ant colony optimization (ACO) [9]. The resulting hybrid model (ACO-PSO) was used for feature extraction and was specifically designed to determine the orientation and scale of images. In this framework, PSO and the genetic algorithm (GA) were applied for orientation estimation, while ACO was used for scale determination. The extracted hybrid features were subsequently classified using a deep learning model, enhancing the robustness and accuracy of the classification process.

Leveraging the global optimization capabilities of the PSO algorithm, Cunha et al. proposed a hybrid model combining EfficientNet and EfficientNet-GRU for fake content detection [10]. In the proposed framework, hyperparameter selection was performed using PSO alongside other meta-heuristic algorithms to enhance the model’s performance and generalization capability.

Adwan et al. employed the PSO algorithm for hyperparameter optimization in their study [11]. A hybrid convolutional neural network-recurrent neural network (CNN-RNN) model was utilized, where feature extraction was performed using a CNN to obtain discriminative image representations. The extracted features were then optimized using PSO to achieve the best possible performance. After feature dimensionality reduction, classification was carried out using an RNN with long short-term memory (LSTM) units, enhancing the model’s ability to capture temporal dependencies within the data. Al-Qazzaz et al. utilized the Xception architecture, a CNN-based model, for feature extraction from fake images [12]. To enhance the accuracy of the proposed approach, the authors optimized the parameter values of the Xception model using the snake optimization algorithm (SOA), ensuring improved performance in fake image detection.

In another study where meta-heuristic algorithms were employed for hyperparameter selection, the artificial rabbits optimization (ARO) algorithm was utilized [13]. The study leveraged the DarkNet model for feature extraction, ensuring robust representation learning. For deepfake detection, the weighted regularized extreme learning machine method was applied, with hyperparameter selection performed to enhance classification accuracy and model efficiency. Krishnan et al. proposed the efficient skip connections-based residual network (ESkip-ResNet) architecture for fake content detection [14]. This architecture introduces a hybrid model by integrating skip connections within the ResNet framework. To optimize the performance of the proposed model, parameter values related to batch normalization and downsampling were fine-tuned using the coot bird optimization (CBO) algorithm, ensuring improved feature representation and classification accuracy.

The literature review shows that the use of meta-heuristic algorithms in deepfake detection is a rapidly growing research area. It also indicates that no single optimization algorithm addresses all challenges in deepfake detection with absolute superiority, which highlights the importance of developing more effective strategies. In this study, we propose a hybrid AHA-PLO model, which combines the strengths of two meta-heuristic approaches to enhance feature selection and improve detection performance.

1.3. Key Contributions

The essential contributions of this research can be summarized as follows:

- The study presents a new hybrid model by combining AHA and PLO, which have not been used before in deepfake detection.

- A hybrid feature vector was obtained with the AHA-PLO model for deepfake detection.

- The AHA-PLO hybrid model increases ACC and AUC values by optimizing the feature selection process in high-dimensional datasets.

- The proposed approach is systematically compared with baseline methods and current literature, showing competitive or superior results, thereby positioning AHA-PLO as a strong candidate for future deepfake detection research.

1.4. Organization of the Article

This paper consists of six main sections. Following the introduction, which presents the background and motivation, related works, and key contributions, Section 2 defines the problem. Section 3 summarizes the optimization algorithms used in the study. Section 4 describes the proposed AHA-PLO hybrid model. Section 5 presents the experimental settings and the performance of the algorithms used. Section 6 interprets the results of the study and suggests directions for future work.

2. Definition of Problem

2.1. Datasets

The FF++ dataset is a widely used benchmark dataset for deepfake and facial manipulation detection [15]. In this study, 4 or 5 frames were extracted from each video, resulting in a total of 9527 images; of these, 4585 were derived from real videos, while 4942 were generated from fake videos.

The CDF dataset is an imbalanced dataset containing 590 authentic videos and 5639 synthetically manipulated videos [16]. A total of 2704 frames were extracted from the real videos, while 5639 fake frames were obtained by selecting one frame from each fake video.

2.2. Frame Extraction

In this study, the image dataset was obtained by applying several preprocessing steps to the video data. First, frames were extracted from the videos. For face detection, the Multi-Task Cascaded Convolutional Networks (MTCNN) [17] method, which performs well in face detection and tracking, was used. MTCNN located the face region in the video frames, and the detected faces were extracted to prepare the dataset for modeling. After face detection, a limited number of representative frames was extracted from each video.

For the FF++ dataset, 4–5 evenly spaced frames per video were selected to ensure temporal diversity without introducing redundancy. In the CDF dataset, because real videos are far fewer than fake ones, 4–5 frames were taken from each real video, whereas one frame was taken from each fake video to reduce the class imbalance in the overall dataset. The total frame counts in Table 1 are the sums of the frames extracted under these criteria for both the training and test sets.
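The frame selection rule can be illustrated with a small sketch. It is not the authors' code: the helper names are hypothetical, "evenly spaced" is interpreted as the midpoint of each of k equal-length segments, and k is drawn as 4 or 5 at random because the text does not state how the count was chosen per video. MTCNN face cropping is omitted.

```python
import random


def evenly_spaced_indices(num_frames, k):
    """Return up to k frame indices spread evenly over a video of num_frames frames.

    Each index is the midpoint of one of k equal-length segments, so the
    chosen frames are far apart and near-duplicate neighbours are avoided.
    """
    if num_frames <= 0 or k <= 0:
        return []
    k = min(k, num_frames)
    step = num_frames / k
    return [int(step * j + step / 2) for j in range(k)]


def frames_per_video(dataset, is_real, rng):
    """Hypothetical policy mirroring the text: one frame per fake CDF video,
    otherwise 4 or 5 frames per video."""
    if dataset == "CDF" and not is_real:
        return 1
    return rng.choice([4, 5])


rng = random.Random(0)
k = frames_per_video("FF++", is_real=True, rng=rng)
print(k, evenly_spaced_indices(num_frames=300, k=k))
```

Indices are zero-based; for a 300-frame clip with k = 5, the function returns frames 30, 90, 150, 210, and 270.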

Table 1.

Frame numbers in both datasets.

In this study, we chose frame-level rather than video-level feature extraction, because deepfake manipulations often reveal spatial inconsistencies within individual frames, such as facial edge distortions, color transition errors, and skin texture distortions. Many fundamental studies in the literature have similarly adopted frame-based analysis. Selecting a limited number of frames from each video reduces computational cost and increases representational diversity by avoiding the repetitive information in consecutive frames. This helps the model retain its generalizability while reducing the risk of overfitting.

2.3. Feature Extraction

In this study, the Xception [18] and ResNet50 [19] models were employed for deep feature extraction. These models enabled the acquisition of high-level representational features by processing the input images, which were preprocessed to ensure compatibility with the network architectures.

3. Optimization Algorithms for Feature Selection

This study presents a novel AHA-PLO hybrid model developed for deepfake detection. The primary objective of the proposed methodology is to optimize feature vectors extracted from CNN architectures so that real and fake video content can be distinguished more effectively. The methodology consists of four fundamental stages: frame extraction, feature extraction, feature selection using the meta-heuristic algorithms and the AHA-PLO hybrid model, and classification. The algorithms used for feature selection are described in the following subsections.

3.1. AHA

AHA is inspired by the flight skills and foraging strategies of hummingbirds [5]. The foraging strategies incorporated in the algorithm are territorial, guided, and migration foraging, which enable efficient exploration and exploitation of the search space. In addition, three flight mechanisms, namely diagonal, axial, and omnidirectional flight, are modeled to enhance adaptability and maneuverability during the optimization process. To model the memory function of hummingbirds, a visit table is used; it tracks the visited food sources, which improves search accuracy and reduces unnecessary evaluations.

3.2. PLO

The PLO algorithm is a meta-heuristic optimization approach modeled after the aurora phenomenon, also known as the polar lights [6]. Auroras appear as brilliant displays in the sky, resulting from the interaction of charged solar wind particles with Earth’s magnetosphere and atmospheric gases. The PLO algorithm mimics the motion dynamics of particles influenced by these interactions. In this model, gyration motion is used for local exploitation, while the aurora oval walk is used for global exploration, providing an effective balance between exploitation and exploration in the optimization process.

3.3. Fitness Function

In this study, a fitness function based on the ROC-AUC score was utilized to evaluate the effectiveness of the feature selection process. The dataset is filtered according to the selected features and a multilayer perceptron (MLP) model is trained. AUC is an important metric measuring the discriminative performance of the model and is obtained by calculating the area under the ROC curve.

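$$\mathrm{AUC} = \sum_{i=1}^{n-1} \left(\mathrm{FPR}_{i+1} - \mathrm{FPR}_{i}\right) \cdot \frac{\mathrm{TPR}_{i} + \mathrm{TPR}_{i+1}}{2} \qquad (1)$$

In Equation (1), $\mathrm{TPR}_i$ and $\mathrm{FPR}_i$ denote the true positive rate and false positive rate at the $i$-th threshold, respectively, with thresholds ordered so that the FPR is non-decreasing. The AUC value is computed as the sum of the trapezoidal areas under the ROC curve, where each segment is determined by consecutive pairs of $(\mathrm{FPR}_i, \mathrm{TPR}_i)$ points, and $n$ represents the total number of thresholds evaluated. This approach provides a numerical approximation of the area under the ROC curve, quantifying the overall discriminative ability of the classifier.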
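Equation (1) can be checked with a short, dependency-free sketch: it builds the ROC points by sweeping the decision threshold from the highest score to the lowest (tied scores form a single threshold) and then sums the trapezoids. The function names are ours; in practice a library routine such as scikit-learn's `roc_auc_score` would normally be used.

```python
def roc_points(labels, scores):
    """ROC points (FPR, TPR) obtained by lowering the threshold step by step.

    labels: 1 for the positive class, 0 for the negative class.
    Tied scores are grouped so that each distinct threshold yields one point.
    """
    pos = sum(labels)
    neg = len(labels) - pos
    pairs = sorted(zip(scores, labels), key=lambda p: p[0], reverse=True)
    tp = fp = 0
    points = [(0.0, 0.0)]
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / neg, tp / pos))
    return points


def trapezoidal_auc(points):
    """Equation (1): sum of trapezoids between consecutive ROC points."""
    area = 0.0
    for (fpr0, tpr0), (fpr1, tpr1) in zip(points, points[1:]):
        area += (fpr1 - fpr0) * (tpr0 + tpr1) / 2
    return area


labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
print(trapezoidal_auc(roc_points(labels, scores)))
```

For these toy scores the result is 8/9, which is also the fraction of (positive, negative) pairs in which the positive sample receives the higher score.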

4. Proposed AHA-PLO Hybrid Model

In this study, a hybrid feature selection model named AHA-PLO is proposed by combining the AHA and PLO to improve deepfake detection performance. The core motivation for this hybridization is to balance exploration and exploitation in the feature selection process. While AHA demonstrates strong exploration capabilities through biologically inspired flight behaviors, PLO complements this by focusing on fine-grained exploitation using magnetically guided search dynamics. The sequential combination of these two algorithms aims to overcome the limitations of using either one alone.

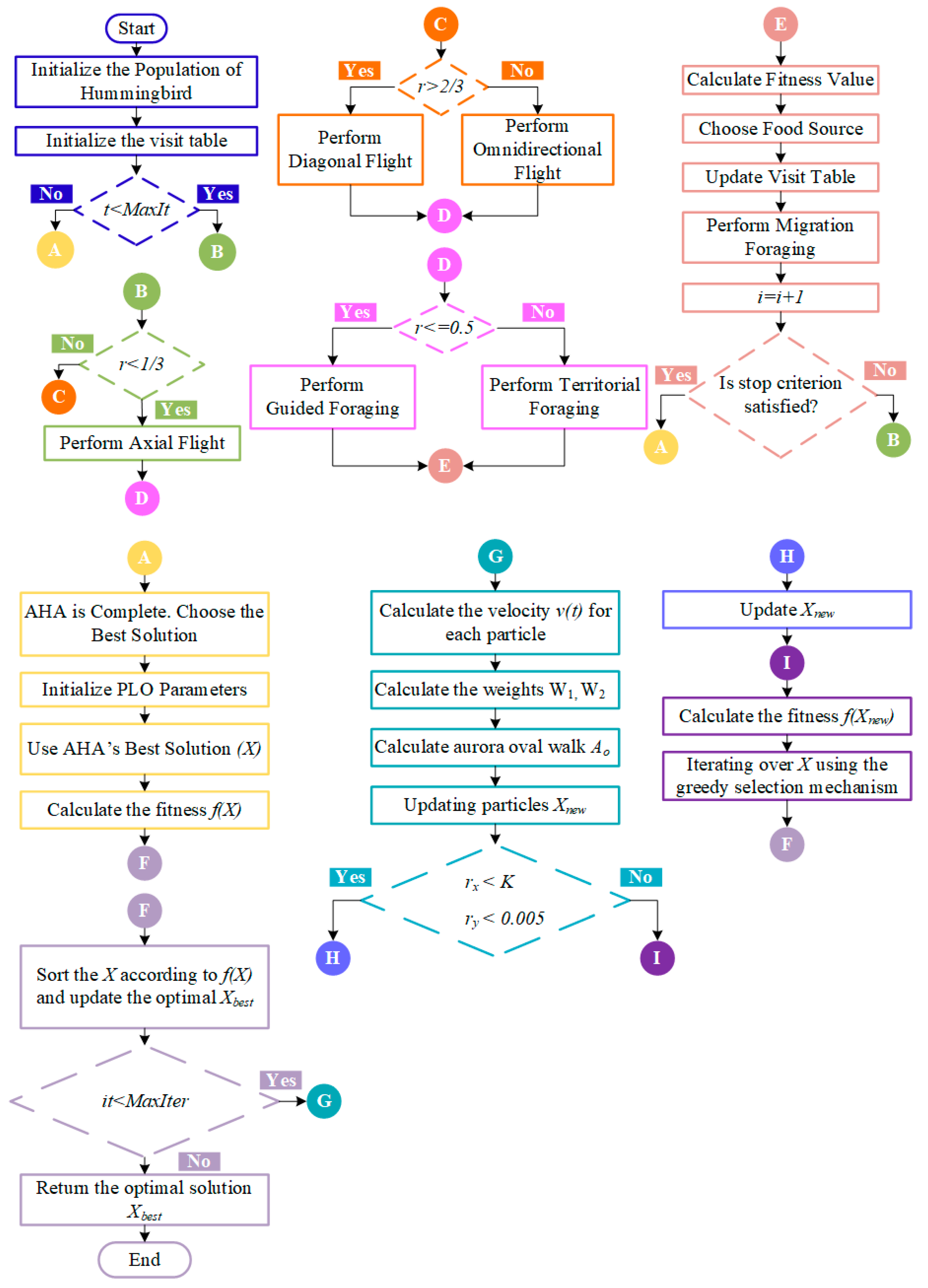

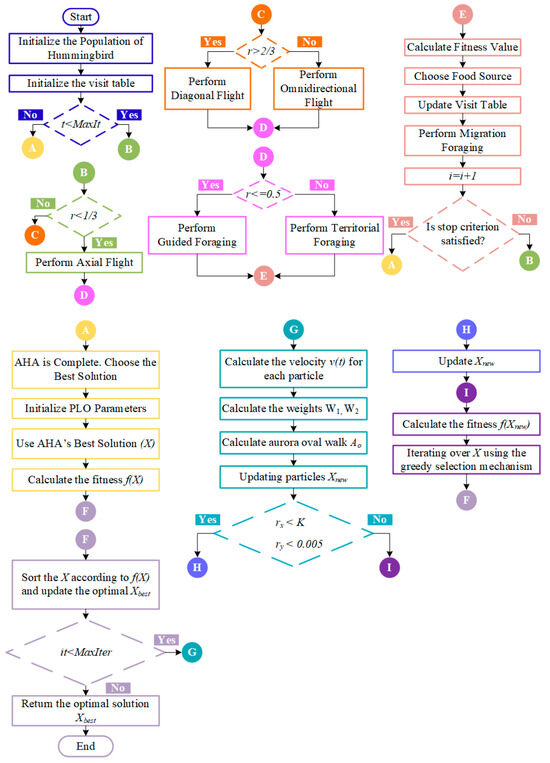

The overall workflow of the proposed AHA-PLO hybrid feature selection model is presented in Figure 1. In the first stage, AHA is executed to explore the high-dimensional feature space and identify multiple promising regions. In AHA, Equations (2)–(4) define axial, diagonal, and omnidirectional flight, which regulate the exploration–exploitation dynamics during the guided foraging process. This phase lasts for the first 50% of the total iterations.

Figure 1.

Flowchart of the proposed AHA–PLO hybrid feature selection model.

After this phase, the best-performing individuals obtained from AHA (ranked by their AUC scores) are passed to the PLO algorithm, which takes over the remaining 50% of the iterations. PLO operates as a local search mechanism, refining the solutions received from AHA by simulating the dynamic behavior of particles influenced by polar magnetic fields. Equation (9) models the gyration motion for local refinement, while Equations (10), (11), (14), and (15) describe the aurora oval walk mechanism, which enhances global search through expansion–contraction dynamics. Equation (13) defines the particle position update, integrating the effects of gyration and auroral motion. This structured transition allows the model to first explore broadly and then exploit intensively.

No weighted averaging or probabilistic blending is applied between the algorithms. However, the handover is dynamic and informed, as PLO uses the best outputs from AHA as its initialization. This sequential cooperation enables a complementary interaction, where AHA’s global diversity prevents premature convergence, and PLO’s precision accelerates convergence toward optimal feature subsets.
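The sequential handover can be sketched as a two-phase driver. This is a sketch under stated assumptions, not the authors' implementation: `aha_step` and `plo_step` are hypothetical callables standing in for one iteration of each algorithm, the toy bit-flip step exists only to make the example run, the fitness (AUC) is maximised here (the `<` comparisons in the pseudocode correspond to minimising a quantity such as 1 − AUC), and `n_handover`, the number of AHA solutions that seed PLO, is not specified in the text.

```python
import random


def hybrid_search(population, fitness, aha_step, plo_step, max_iter=30, n_handover=None):
    """Sequential AHA -> PLO schedule with a 50/50 iteration split.

    fitness is maximised (e.g. AUC). aha_step / plo_step are placeholders
    for one iteration of each algorithm: they take a population (list of
    solutions) and the fitness function and return the updated population.
    """
    switch = max_iter // 2  # the first 50% of the iterations belong to AHA
    for _ in range(switch):
        population = aha_step(population, fitness)
    # Handover: rank AHA's solutions by fitness; the best ones initialise PLO.
    population = sorted(population, key=fitness, reverse=True)
    if n_handover is not None:
        population = population[:n_handover]
    for _ in range(switch, max_iter):
        population = plo_step(population, fitness)
    return max(population, key=fitness)


_rng = random.Random(0)


def toy_step(population, fitness):
    """Stand-in for a real AHA/PLO iteration: flip one random bit, keep it if better."""
    new_population = []
    for solution in population:
        candidate = list(solution)
        j = _rng.randrange(len(candidate))
        candidate[j] = 1 - candidate[j]
        new_population.append(candidate if fitness(candidate) > fitness(solution) else solution)
    return new_population


start = [[0] * 8 for _ in range(5)]
best = hybrid_search(start, fitness=sum, aha_step=toy_step, plo_step=toy_step, n_handover=3)
print(best, sum(best))
```

Because no blending is applied, the only coupling between the phases is the ranked population passed at the switch point, which mirrors the description above.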

The AHA consists of three main components: guided, territorial and migration foraging [20].

4.1. Guided Foraging

In the AHA, axial, diagonal and omnidirectional flights are simulated using a direction change vector during feeding. This vector controls which directions are active in d-dimensional space and governs three different flight behaviors.

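$$D^{(i)} = \begin{cases} 1, & i = \mathrm{randi}([1, d]) \\ 0, & \text{otherwise} \end{cases}, \quad i = 1, \ldots, d \qquad (2)$$

$$D^{(i)} = \begin{cases} 1, & i = P(j),\; j \in [1, k],\; P = \mathrm{randperm}(k),\; k \in \left[2, \lceil r_1 (d - 2) \rceil + 1\right] \\ 0, & \text{otherwise} \end{cases}, \quad i = 1, \ldots, d \qquad (3)$$

$$D^{(i)} = 1, \quad i = 1, \ldots, d \qquad (4)$$

Equations (2), (3), and (4) define axial, diagonal, and omnidirectional flight, respectively. $\mathrm{randi}([1, d])$ returns a random integer between 1 and $d$, and $\mathrm{randperm}(k)$ returns a random permutation of the integers from 1 to $k$. Here, $d$ represents the dimensionality of the feature space, which corresponds to the total number of extracted deep features; in this study, $d = 2048$ for both the Xception and ResNet50 backbone networks used during feature extraction. The parameter $r_1$ is a random number uniformly distributed in [0, 1] that determines the number of active flight directions during diagonal flight, and $k$ specifies the number of dimensions to be updated, ensuring diversity in directional movement during the foraging process.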
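The direction vector D can be generated as in the following sketch. It assumes that the k active dimensions of diagonal flight are drawn uniformly from all d dimensions, and it follows the branch order written in Algorithm 4 (r < 1/3 selects Equation (2), r > 2/3 selects Equation (3)); the function names are ours.

```python
import math
import random


def axial(d, rng):
    """Equation (2): exactly one randomly chosen dimension is active."""
    D = [0] * d
    D[rng.randrange(d)] = 1
    return D


def diagonal(d, rng):
    """Equation (3): k active dimensions with k in [2, ceil(r1 * (d - 2)) + 1]."""
    r1 = rng.random()
    k = rng.randint(2, max(2, math.ceil(r1 * (d - 2)) + 1))
    D = [0] * d
    for i in rng.sample(range(d), k):  # assumption: active dimensions drawn from all d
        D[i] = 1
    return D


def omnidirectional(d):
    """Equation (4): every dimension is active."""
    return [1] * d


def direction_vector(d, rng):
    """Flight choice as written in Algorithm 4 (requires d >= 2)."""
    r = rng.random()
    if r < 1 / 3:
        return axial(d, rng)
    if r > 2 / 3:
        return diagonal(d, rng)
    return omnidirectional(d)


rng = random.Random(42)
D = direction_vector(2048, rng)
print(sum(D), "of", len(D), "dimensions active")
```

With d = 2048, axial flight moves along a single feature dimension, omnidirectional flight moves along all of them, and diagonal flight falls in between.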

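Equation (5) defines the guided foraging behavior.

$$v_i(t+1) = x_{i,tar}(t) + a \cdot D \cdot \left(x_i(t) - x_{i,tar}(t)\right), \quad a \sim N(0, 1) \qquad (5)$$

Here, $x_i(t)$ denotes the position of the $i$-th food source at time $t$, $x_{i,tar}(t)$ denotes the position of the target food source that the $i$-th hummingbird intends to visit, and $v_i(t+1)$ is the resulting candidate position. The guided factor is represented by $a$, which follows the standard normal distribution. The pseudocode for guided foraging is given in Algorithm 1.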

| Algorithm 1. Pseudocode of Guided Foraging |

| For i = 1 : n |

| Perform Equation (5) |

| If f (vi (t + 1)) < f (xi (t)) |

| xi (t + 1) = vi (t + 1) |

| For j = 1: n (j ≠ tar, i) |

| VisitTable (i, j) = VisitTable(i, j) + 1 |

| End For |

| VisitTable (i, tar) = 0 |

| For j = 1: n |

| VisitTable (j, i) = max_{l ≠ j} (VisitTable (j, l)) + 1 |

| End For |

| Else |

| For j = 1: n (j ≠ tar, i) |

| VisitTable (i, j) = VisitTable (i, j)+1 |

| End For |

| VisitTable (i, tar) = 0 |

| End If |

| End For |
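Algorithm 1 can be expressed as a runnable sketch for a single hummingbird. Two points are assumptions not stated in this section: the target is chosen as the source unvisited for the longest time (ties broken by the best objective value), as in the original AHA description, and positions are continuous vectors (the mapping to a binary feature mask is a separate step). Lower objective values are better, matching the comparisons in the pseudocode.

```python
import random


def guided_foraging(X, fit, visit, i, D, objective, rng):
    """One guided-foraging move of hummingbird i: Equation (5) plus Algorithm 1.

    X: list of positions, fit: objective values (lower is better, as in
    Algorithm 1), visit: n x n visit table, D: direction vector.
    Returns the index of the target food source.
    """
    n = len(X)
    # Target choice (assumption, following the original AHA description): the
    # source unvisited for longest, ties broken by the best objective value.
    longest = max(visit[i][j] for j in range(n) if j != i)
    tar = min((j for j in range(n) if j != i and visit[i][j] == longest), key=lambda j: fit[j])
    a = rng.gauss(0.0, 1.0)  # guided factor a ~ N(0, 1)
    v = [xt + a * dk * (xi - xt) for xi, xt, dk in zip(X[i], X[tar], D)]
    fv = objective(v)
    for j in range(n):
        if j not in (i, tar):
            visit[i][j] += 1
    visit[i][tar] = 0
    if fv < fit[i]:
        # Greedy replacement; source i then gets the highest visit level for every other bird.
        X[i], fit[i] = v, fv
        for j in range(n):
            if j != i:
                visit[j][i] = max(visit[j][l] for l in range(n) if l != j) + 1
    return tar


def sphere(x):
    return sum(t * t for t in x)


X = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
fit = [sphere(x) for x in X]
visit = [[0] * 3 for _ in range(3)]
tar = guided_foraging(X, fit, visit, i=2, D=[1, 1], objective=sphere, rng=random.Random(0))
print(tar, X[2], visit)
```

The visit table plays the role of the hummingbirds' memory: counters grow for sources that are not visited, and a freshly improved source becomes the most attractive target for the others.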

4.2. Territorial Foraging

After extracting nectar from a chosen flower, a hummingbird instinctively searches its own territory for a new food source, which may prove to be a better alternative to the current one. Equation (6) models this local food search behavior:

$$v_i(t+1) = x_i(t) + b \cdot D \cdot x_i(t), \quad b \sim N(0, 1) \qquad (6)$$

where $b$ is the territorial factor. The pseudocode for territorial foraging is given in Algorithm 2.
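Equation (6) and the visit-table bookkeeping of Algorithm 2 can be sketched as follows. The function name is ours, positions are assumed to be continuous vectors, and lower objective values are better, as in the pseudocode.

```python
import random


def territorial_foraging(X, fit, visit, i, D, objective, rng):
    """One territorial-foraging move of hummingbird i: Equation (6) plus Algorithm 2.

    Returns True if the candidate replaced the current food source.
    """
    n = len(X)
    b = rng.gauss(0.0, 1.0)  # territorial factor b ~ N(0, 1)
    v = [xi + b * dk * xi for xi, dk in zip(X[i], D)]
    fv = objective(v)
    for j in range(n):
        if j != i:
            visit[i][j] += 1
    improved = fv < fit[i]
    if improved:
        X[i], fit[i] = v, fv
        for j in range(n):
            if j != i:
                visit[j][i] = max(visit[j][l] for l in range(n) if l != j) + 1
    return improved


def sphere(x):
    return sum(t * t for t in x)


X = [[1.0, 1.0], [2.0, 2.0]]
fit = [sphere(x) for x in X]
visit = [[0, 0], [0, 0]]
print(territorial_foraging(X, fit, visit, i=0, D=[1, 1], objective=sphere, rng=random.Random(3)))
```

Unlike guided foraging, the candidate is built around the bird's own position, so the move stays local to its current territory.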

| Algorithm 2. Pseudocode of Territorial Foraging |

| For i = 1 : n |

| Perform Equation (6) |

| If f (vi (t + 1)) < f (xi (t)) |

| xi (t + 1) = vi (t + 1) |

| For j = 1: n (j ≠ i) |

| VisitTable (i, j) = VisitTable(i, j) + 1 |

| End For |

| For j = 1: n |

| VisitTable (j, i) = max_{l ≠ j} (VisitTable (j, l)) + 1 |

| End For |

| Else |

| For j = 1: n (j ≠ i) |

| VisitTable (i, j) = VisitTable (i, j) + 1 |

| End For |

| End If |

| End For |

4.3. Migration Foraging

Once the food in a region where hummingbirds regularly feed becomes scarce, they typically migrate to a more distant location to feed. This behavior is modeled with a migration coefficient: whenever the iteration counter reaches a multiple of this coefficient (2n in Algorithm 3), the hummingbird at the food source with the lowest nectar-refilling rate abandons it and moves to a new source generated at random over the entire search space. The visit table that tracks the feeding movements of the hummingbirds is updated accordingly. Equation (8) gives the mathematical expression of this migration from the least productive source to a randomly generated one.

$$x_{wor}(t+1) = Low + r \cdot (Up - Low) \qquad (8)$$

Here, $x_{wor}$ denotes the food source with the worst fitness value in the population, $Low$ and $Up$ are the lower and upper bounds of the search space, and $r$ is a random vector in [0, 1]. After the AHA phase was completed, the best solutions were selected and assigned as the initial values of the PLO algorithm. The pseudocode for migration foraging is given in Algorithm 3.

| Algorithm 3. Pseudocode of Migration Foraging |

| If mod (t, 2n) == 0 |

| Perform Equation (8) |

| For j = 1: n (j ≠ wor) |

| VisitTable (wor, j) = VisitTable(wor, j) + 1 |

| End For |

| For j = 1: n |

| VisitTable (j, wor) = max_{l ≠ j} (VisitTable (j, l)) + 1 |

| End For |

| End If |
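Algorithm 3 can be sketched as follows, assuming continuous positions bounded by per-dimension lower and upper limits (`low`, `up`); the function name is ours. Because lower objective values are better in the pseudocode, the "worst" source is the one with the largest value.

```python
import random


def migration_foraging(X, fit, visit, t, low, up, objective, rng):
    """Algorithm 3: every 2n iterations the worst food source is replaced (Equation (8)).

    Returns the index of the migrated hummingbird, or None if no migration occurs.
    """
    n = len(X)
    if t % (2 * n) != 0:
        return None
    wor = max(range(n), key=lambda j: fit[j])  # worst source (largest objective value)
    X[wor] = [lo + rng.random() * (hi - lo) for lo, hi in zip(low, up)]
    fit[wor] = objective(X[wor])
    for j in range(n):
        if j != wor:
            visit[wor][j] += 1
    for j in range(n):
        if j != wor:
            visit[j][wor] = max(visit[j][l] for l in range(n) if l != j) + 1
    return wor


def square(x):
    return x[0] ** 2


X = [[0.0], [5.0]]
fit = [0.0, 25.0]
visit = [[0, 0], [0, 0]]
wor = migration_foraging(X, fit, visit, t=4, low=[-1.0], up=[1.0], objective=square, rng=random.Random(7))
print(wor, X, visit)
```

With n = 2, migration happens at iterations that are multiples of 4; at any other iteration the function leaves the population unchanged.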

4.4. Gyration Motion

The gyration (rotational) motion in PLO enables particles to perform efficient local searches within the current search region, so that promising solutions are explored more precisely. This mechanism improves solution quality and speeds up the convergence of the algorithm. Based on the natural behavior of charged particles under magnetic influence, PLO represents the particle velocity by Equation (9):

$$v(t) = C \cdot e^{\frac{(qB - \alpha)\, t}{m}} \qquad (9)$$

The values of $C$, $q$, and $B$ are set to 1, while the mass $m$ is set to 100. $\alpha$ is a random value in [1, 1.5].
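With these constants, qB − α lies in [−0.5, 0], so v(t) starts at C = 1 and decays as t grows; the gyration steps therefore shrink over the run. The sketch below treats t as the iteration counter, which is an assumption, and the function name is ours.

```python
import math
import random


def gyration_velocity(t, rng, C=1.0, q=1.0, B=1.0, m=100.0):
    """Equation (9): v(t) = C * exp((q*B - alpha) * t / m) with alpha ~ U[1, 1.5]."""
    alpha = rng.uniform(1.0, 1.5)
    return C * math.exp((q * B - alpha) * t / m)


rng = random.Random(0)
for t in (0, 10, 100, 500):
    print(t, round(gyration_velocity(t, rng), 4))
```

Because α is redrawn on each call, v(t) is stochastic, but it always stays between exp(−0.5 t / 100) and 1.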

4.5. Aurora Oval Walk

The aurora oval walk reflects the interaction of energetic particles, simulated by Lévy flight (LF), with geomagnetic activity and atmospheric effects, reproducing the contraction of the aurora oval toward the pole and its expansion toward the equator. In PLO, this mechanism improves the global exploration capability of particles by allowing them to move freely within the search space and investigate promising solution regions. The mathematical expression of this process is given in Equation (10).

In the LF term of Equation (10), one parameter adjusts the stability of the flight, another determines its step size, and a coefficient represents the adjustment amount of the system; the LF exponent that defines the step length is commonly fixed at 1.5. $X_{avg}$ represents the average position of the particle swarm, and $r_1$ is randomly generated within the interval [0, 1].

$$X_{new} = X + r_2 \cdot \left(W_1 \cdot v(t) + W_2 \cdot A_o\right) \qquad (13)$$

$X_{new}$ represents the new position of the energetic particle after the update. $r_2$ simulates the influence of uncontrollable environmental factors on the particle and takes a random value in [0, 1]. The algorithm uses two adaptive weights, $W_1$ and $W_2$, to balance local exploitation and global exploration at each iteration. These weights are calculated using Equations (14) and (15):

$$W_1 = \frac{2}{1 + e^{-2 (t/T)^4}} - 1 \qquad (14)$$

$$W_2 = e^{-(2t/T)^3} \qquad (15)$$

where $t$ and $T$ are the current and maximum iteration counts. $W_1$ therefore grows from 0 toward about 0.76, while $W_2$ decays from 1 toward almost 0, shifting the search from global exploration to local exploitation.
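The oval walk and position update can be sketched as follows. Several assumptions are involved: the LF steps use Mantegna's algorithm with β = 1.5 (the exact form of Equations (11) and (12) is not reproduced here), the bound term is placed inside the Lévy product as one possible reading of Equation (10), the weights use W1 = 2/(1 + e^(−2(t/T)^4)) − 1 and W2 = e^(−(2t/T)^3) as in the original PLO formulation as we understand it, and v is the scalar gyration velocity of Equation (9). Function names are ours.

```python
import math
import random


def levy(d, rng, beta=1.5):
    """Levy-flight steps via Mantegna's algorithm (assumed form of the LF term)."""
    sigma = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
             / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)
    return [rng.gauss(0.0, 1.0) * sigma / abs(rng.gauss(0.0, 1.0)) ** (1 / beta) for _ in range(d)]


def adaptive_weights(t, T):
    """W1 rises from 0 (local weight), W2 decays from 1 (global weight)."""
    w1 = 2 / (1 + math.exp(-2 * (t / T) ** 4)) - 1
    w2 = math.exp(-((2 * t / T) ** 3))
    return w1, w2


def plo_update(x, x_avg, v, lb, ub, t, T, rng):
    """Aurora oval walk (one reading of Equation (10)) combined as in Equation (13)."""
    step = levy(len(x), rng)
    ao = [s * (xa - xi + (lo + rng.random() * (hi - lo)) / 2)
          for s, xa, xi, lo, hi in zip(step, x_avg, x, lb, ub)]
    w1, w2 = adaptive_weights(t, T)
    return [xi + rng.random() * (w1 * v + w2 * a) for xi, a in zip(x, ao)]


rng = random.Random(0)
x_new = plo_update([0.2, 0.8, 0.5], x_avg=[0.5, 0.5, 0.5], v=0.9,
                   lb=[0.0] * 3, ub=[1.0] * 3, t=5, T=30, rng=rng)
print(x_new)
```

Early in the run the Lévy-driven oval walk dominates (W2 close to 1); near the end the gyration term dominates, which matches the exploration-to-exploitation shift described above.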

4.6. Particle Collision

The concept of particle collision is used in the PLO algorithm to enhance exploration capability and prevent early entrapment in local optima, inspired by high-energy physics processes. Particles can generate new positions by performing chaotic collisions with each other while moving in random directions. This process is similar to energetic particles in the aurora oval creating continuously changing forms through interaction with the atmosphere. The updated positions are mathematically modeled by Equation (16).

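$$X_{new}(i,j) = X(i,j) + \sin(r \pi) \cdot \left(X(i,j) - X(\alpha,j)\right) \qquad (16)$$

Here, $X(\alpha,j)$ represents the $j$-th dimension of a randomly selected particle $\alpha$, $K$ denotes the collision probability, and $r$, $r_x$, and $r_y$ are random values within the range [0, 1]; as shown in Algorithm 4, the collision update is applied only when $r_x < K$ and $r_y$ falls below a small threshold.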
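The collision step can be sketched as follows. The schedule for K is not specified in this section, so it is passed in as an argument; the default threshold of 0.005 mirrors the r_y test in Algorithm 4; and the partner particle is drawn uniformly, so it may coincide with particle i, in which case nothing changes. The function name is ours.

```python
import math
import random


def particle_collision(X_new, X, K, rng, threshold=0.005):
    """Equation (16): dimension-wise collisions with a randomly chosen partner.

    K is the collision probability; X_new is modified in place and returned.
    """
    n = len(X)
    for i in range(n):
        alpha = rng.randrange(n)  # randomly selected partner particle
        for j in range(len(X[i])):
            if rng.random() < K and rng.random() < threshold:
                X_new[i][j] = X[i][j] + math.sin(rng.random() * math.pi) * (X[i][j] - X[alpha][j])
    return X_new


X = [[0.1, 0.9], [0.4, 0.6], [0.7, 0.3]]
X_new = particle_collision([row[:] for row in X], X, K=0.8, rng=random.Random(5))
print(X_new)
```

Since sin(rπ) lies in [0, 1], a collision pushes a coordinate away from the partner's coordinate by at most their current difference, which adds diversity without large jumps.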

Together, these equations establish the mathematical framework of the proposed AHA–PLO hybrid optimization model summarized in Algorithm 4.

| Algorithm 4. Pseudocode of AHA-PLO hybrid feature selection model |

| Stage 1: AHA |

| Initialize parameters: n, d, f, MaxIt |

| Create a set of initial solutions (P) and the VisitTable |

| While t ≤ MaxIt |

| For the ith hummingbird from 1 to n Do |

| If r < 1/3 Then perform Equation (2) |

| Else if r > 2/3 Then perform Equation (3) |

| Else perform Equation (4) |

| End If |

| If rand < 0.5 Then perform guided foraging |

| Else perform territorial foraging |

| End If |

| End For |

| If mod (t, 2n) == 0 Then perform migration foraging |

| End If |

| t = t + 1 |

| End While |

| AHA is complete: choose the best solutions (X) |

| Stage 2: PLO |

| Initialize parameters: t, FEs, MaxIter |

| Use AHA’s best solutions (X) as the initial population |

| Calculate the fitness value f(X) |

| Update the current optimum solution Xbest |

| While t ≤ MaxIter |

| Calculate the velocity v(t) using Equation (9) |

| Calculate the oval walk Ao using Equation (10) |

| Calculate the weights W1 and W2 using Equations (14) and (15) |

| For each energetic particle Do |

| Update the particle Xnew using Equation (13) |

| If rx < K and ry < 0.005 |

| Particle collision strategy: update the particle Xnew using Equation (16) |

| End If |

| Calculate the fitness f (Xnew) |

| FEs = FEs + 1 |

| End For |

| If f (Xnew) < f(X) |

| Update X using the greedy selection mechanism |

| End If |

| Sort X according to f(X) |

| Update the optimal solution Xbest |

| t = t + 1 |

| End While |

| Return Xbest |

5. Experimental Results and Discussion

5.1. Experimental Settings

Google Colab, a cloud-based platform with GPU and TPU support in a Python3 Jupyter Notebook environment, was used to conduct the experiments. In the experimental study, the metaheuristic algorithms AHA and PLO, which have recently attracted significant attention in the optimization literature, were utilized for feature selection. The parameter configurations of these algorithms were determined based on both literature recommendations and computational efficiency considerations. Specifically, a population size of 30 and a maximum iteration limit of 30 were adopted to maintain a balanced trade-off between convergence performance, exploration capability, and runtime cost. Given the high dimensionality of the extracted deep features, increasing these values would substantially raise computational complexity and memory usage without yielding meaningful improvements in accuracy. The detailed configuration of control parameters for AHA and PLO is presented in Table 2.

Table 2.

Control parameters of algorithms.

Since metaheuristic optimization inherently involves stochastic elements, random initialization was controlled through fixed random seeds, and the consistency of optimization outcomes was verified during preliminary trials. The observed variations across runs were negligible, confirming that the proposed optimization process yields stable and reproducible results.

For classification, an MLP model was employed, with the number of iterations set to 100 and the learning rate initialized at 0.005. Because the CDF dataset exhibits an imbalance between real and fake samples, the parameter class_weight = ‘balanced’ was used in the MLP classifier to compensate for this proportional difference during training. This strategy increases the contribution of the minority class to the loss function and reduces bias toward the majority class. Furthermore, random undersampling experiments were conducted to verify stability; they yielded similar results, confirming that the class-weighting approach effectively mitigated imbalance effects.
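The effect of the ‘balanced’ setting can be made concrete with a short calculation. The sketch uses the convention that scikit-learn documents for this option, weight_c = n_samples / (n_classes × count_c), and applies it to the whole-dataset CDF frame counts from Section 2.1 for illustration; the weights on the actual 80% training split would differ slightly. The function name is ours.

```python
from collections import Counter


def balanced_class_weights(labels):
    """'balanced' heuristic: weight_c = n_samples / (n_classes * count_c)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {c: n_samples / (n_classes * count) for c, count in counts.items()}


# Frame counts of the whole CDF dataset (Section 2.1): 2704 real (1), 5639 fake (0).
labels = [1] * 2704 + [0] * 5639
weights = balanced_class_weights(labels)
print({c: round(w, 4) for c, w in weights.items()})
```

Each class then contributes the same total weight (n_samples / 2), so the real frames receive roughly twice the per-sample weight of the fake frames (about 1.54 versus 0.74).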

Each solution in the population is encoded as a binary vector of length d, where d is the total number of extracted deep features (2048 in this study). A value of ‘1’ denotes that the corresponding feature is selected, and ‘0’ that it is excluded. At each iteration, the fitness of a candidate solution is evaluated by training an MLP classifier on the training data and computing the AUC score on the fixed test set. This AUC value serves as the fitness function that guides the search toward optimal feature subsets. The same 80%/20% train-test split was maintained across all runs to ensure comparability.
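The fitness evaluation of a binary feature mask can be sketched as follows. To keep the example self-contained, a nearest-centroid scorer is swapped in for the MLP used in the study; the 0.5 threshold in `decode_mask`, which turns a continuous AHA/PLO position into a mask, is a hypothetical rule because the text does not state how positions are binarized; and an empty subset is given the worst fitness. The rank-based AUC used here equals the trapezoidal AUC of Equation (1). All names are ours.

```python
def decode_mask(position, threshold=0.5):
    """Hypothetical rule turning a continuous position into a 0/1 feature mask."""
    return [1 if p > threshold else 0 for p in position]


def rank_auc(labels, scores):
    """AUC as the share of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


def centroid_scorer(X_train, y_train, X_test):
    """Stand-in for the MLP: higher score = closer to the class-1 (real) centroid."""
    def centroid(label):
        rows = [r for r, y in zip(X_train, y_train) if y == label]
        return [sum(col) / len(rows) for col in zip(*rows)]

    def sq_dist(r, c):
        return sum((a - b) ** 2 for a, b in zip(r, c))

    c0, c1 = centroid(0), centroid(1)
    return [sq_dist(r, c0) - sq_dist(r, c1) for r in X_test]


def subset_fitness(mask, X_train, y_train, X_test, y_test, train_and_score=centroid_scorer):
    """Fitness of a feature subset: AUC of a model trained only on the selected columns."""
    cols = [j for j, bit in enumerate(mask) if bit]
    if not cols:
        return 0.0  # an empty subset gets the worst possible fitness

    def select(rows):
        return [[row[j] for j in cols] for row in rows]

    scores = train_and_score(select(X_train), y_train, select(X_test))
    return rank_auc(y_test, scores)


X_train = [[0, 5], [0, -5], [1, 5], [1, -5]]
y_train = [0, 0, 1, 1]
X_test = [[0, 3], [1, -3], [0, -3], [1, 3]]
y_test = [0, 1, 0, 1]
print(subset_fitness(decode_mask([0.9, 0.1]), X_train, y_train, X_test, y_test))
```

In the toy data, only the first feature separates the classes: keeping it gives an AUC of 1.0, keeping only the noise feature gives 0.5.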

5.2. Performance Evaluation Criteria

Various performance metrics were utilized to analyze the model’s success. This study utilized a binary classification model where real data was labeled as ‘1’ and fake data as ‘0’. AUC was selected as the primary benchmark metric to measure the model’s accuracy and discriminative power. AUC is a robust metric that indicates how well the model can distinguish between classes and is preferred as a reliable performance measure, particularly in imbalanced datasets. Additionally, ACC, F1-score (F1), precision (P) and recall (R) metrics were also evaluated to enable a more comprehensive analysis of different aspects of the model.
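The per-class metrics can be computed from predictions as in the sketch below, with each class treated in turn as the positive class, matching the separate reporting for classes ‘1’ (real) and ‘0’ (fake). The function names and toy labels are ours.

```python
def accuracy(y_true, y_pred):
    """Share of samples whose predicted label matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def class_metrics(y_true, y_pred, positive):
    """Precision, recall and F1 when `positive` is treated as the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


y_true = [1, 1, 1, 0, 0, 0, 0, 1]  # 1 = real, 0 = fake
y_pred = [1, 1, 0, 0, 0, 1, 0, 0]
print("ACC", accuracy(y_true, y_pred))
for c in (1, 0):
    print(c, class_metrics(y_true, y_pred, c))
```

For these toy labels, the real class has precision 2/3 and recall 0.5, while the fake class has precision 0.6 and recall 0.75, which shows why the two classes are reported separately.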

5.3. Results of Feature Selection

This section presents the performance evaluation of the proposed hybrid AHA-PLO model for deepfake video detection, with AUC as the primary benchmark metric. In addition to AUC, ACC, F1, P, R, and the number of selected features are also considered. To provide a comprehensive and detailed analysis, the results for the ‘1’ (real) and ‘0’ (fake) classes are reported separately. The proposed model was run on two different datasets, and the optimization outputs and performance metrics are presented accordingly.

5.3.1. Results Without Feature Selection

Before applying meta-heuristic feature selection, baseline experiments were conducted using the raw deep features extracted from the Xception and ResNet50 backbones. These results provide an initial benchmark that highlights the benefits of the subsequent feature selection process. Table 3 reports the classification performance (AUC, ACC, F1, precision, and recall) obtained without feature selection. As expected, both backbones perform slightly worse than the feature-selected models, which we attribute mainly to redundant and irrelevant features in the raw representations. Nevertheless, these baseline results serve as an important reference point for the improvements achieved by the proposed hybrid AHA-PLO approach in later sections.

Table 3.

Baseline classification performance without feature selection on the FF++ and CDF datasets.

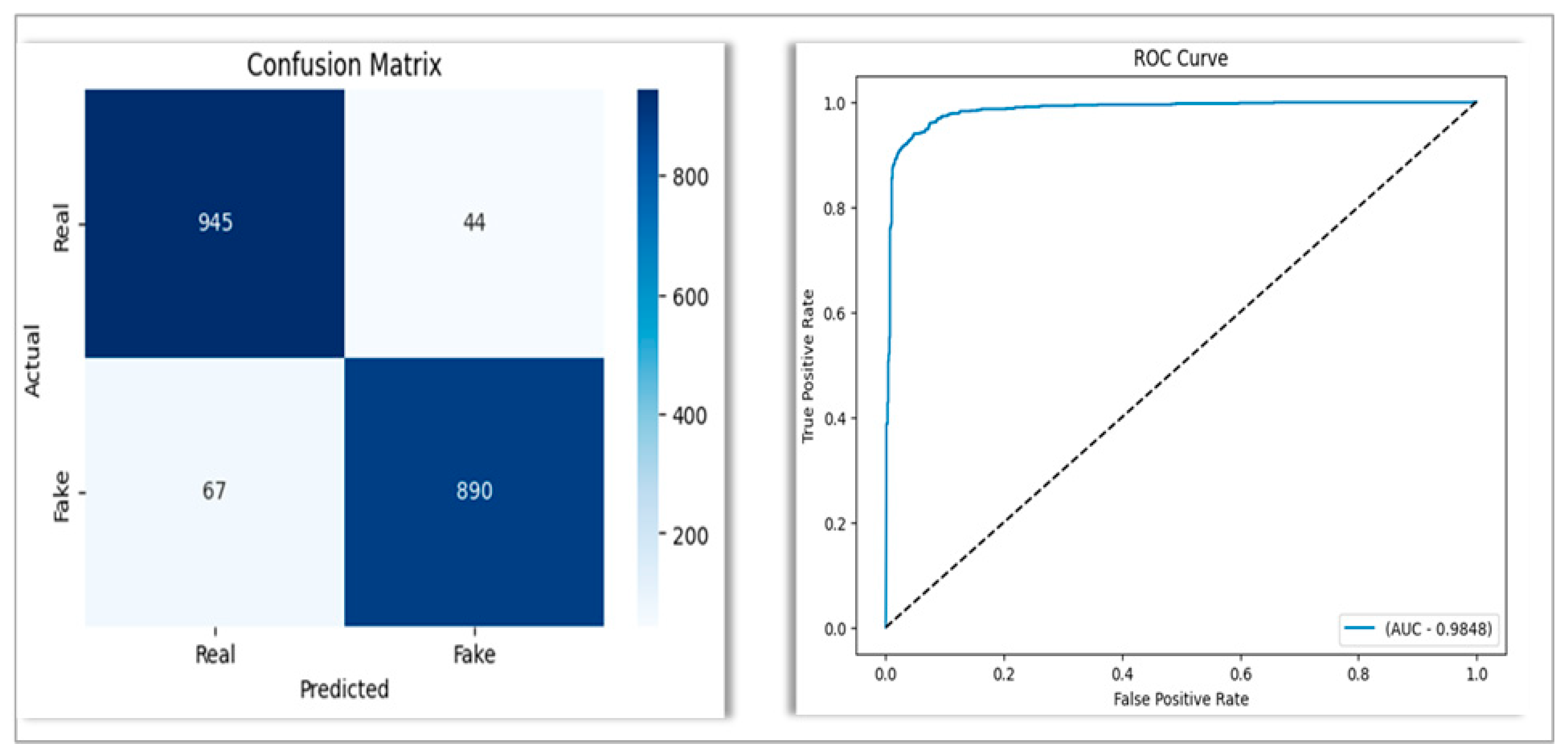

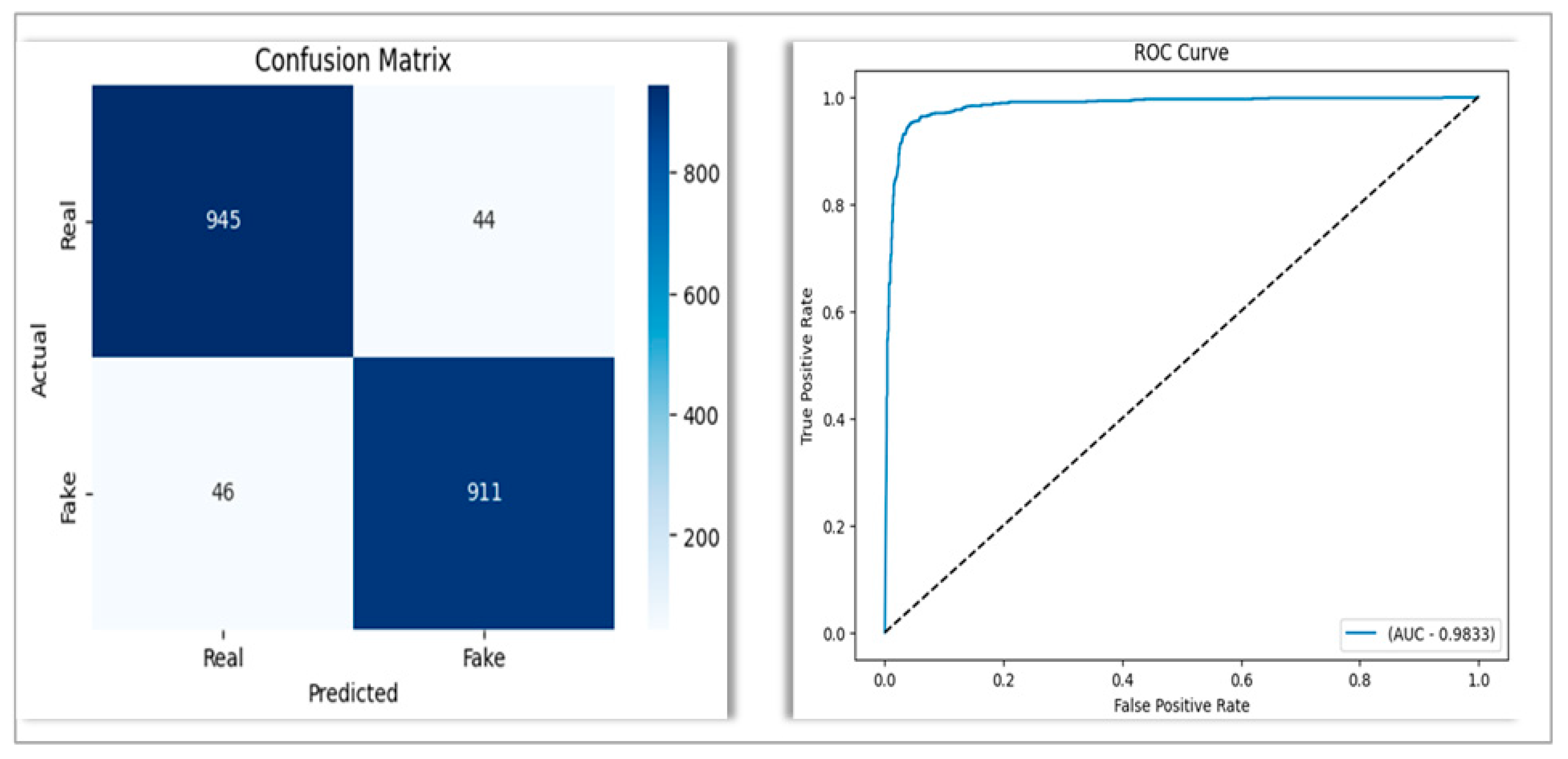

On the FF++ dataset, both feature extractors achieve high AUC values, with Xception reaching 98.48% and ResNet50 98.33%. Although the difference is marginal, the slightly higher AUC obtained with Xception suggests a marginally better ability to distinguish between real and fake instances on this dataset. The remaining metrics (ACC, F1, P, R) are comparable between the two backbones, with ResNet50 showing slightly higher classification accuracy and better class-wise balance.

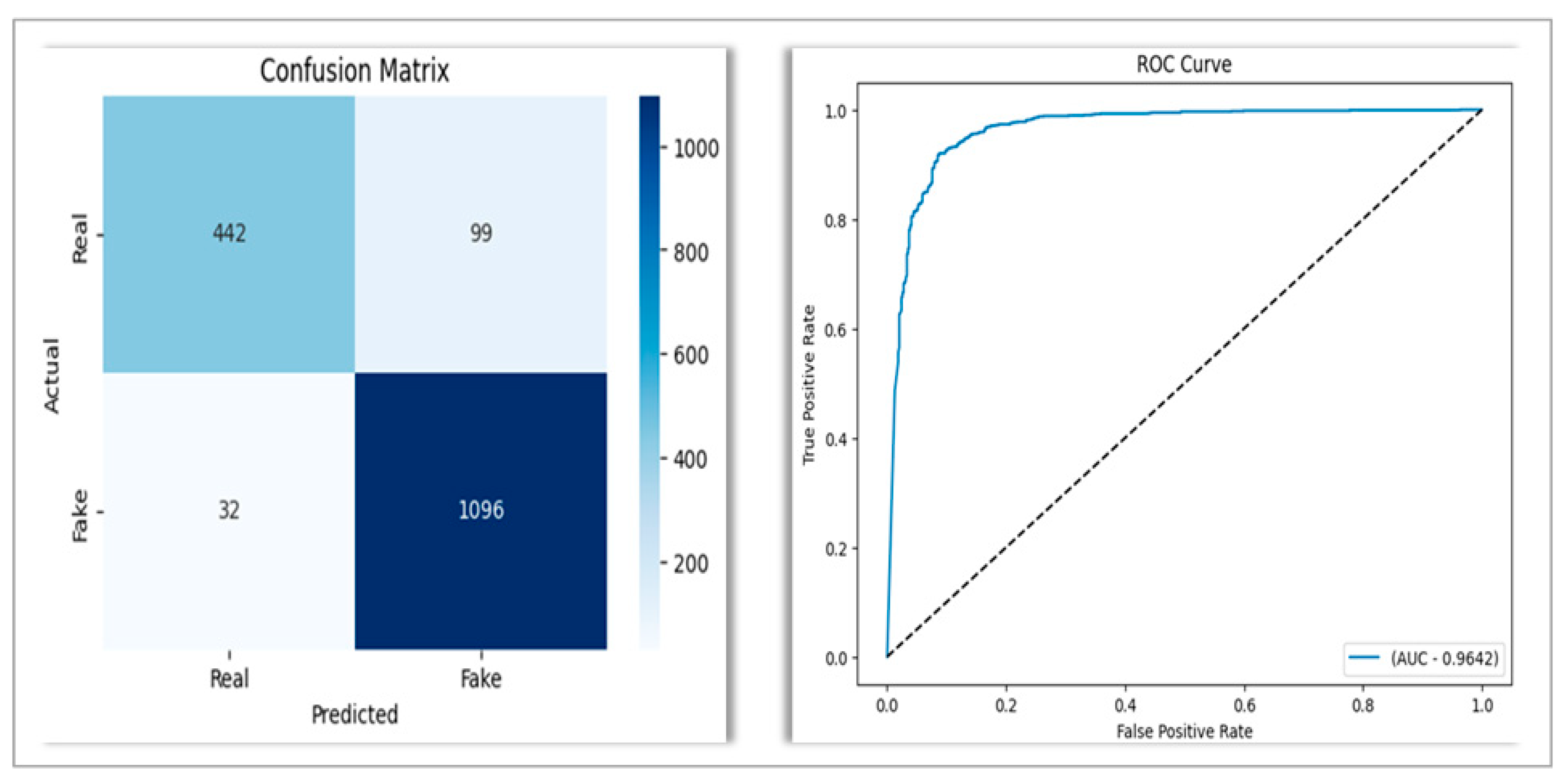

In the case of the Celeb-DF dataset, the performance gap in AUC becomes more noticeable. The ResNet50 model attains 97.65%, whereas Xception reaches 96.42%. This may indicate that ResNet50 features are somewhat more generalizable when applied to unseen data.

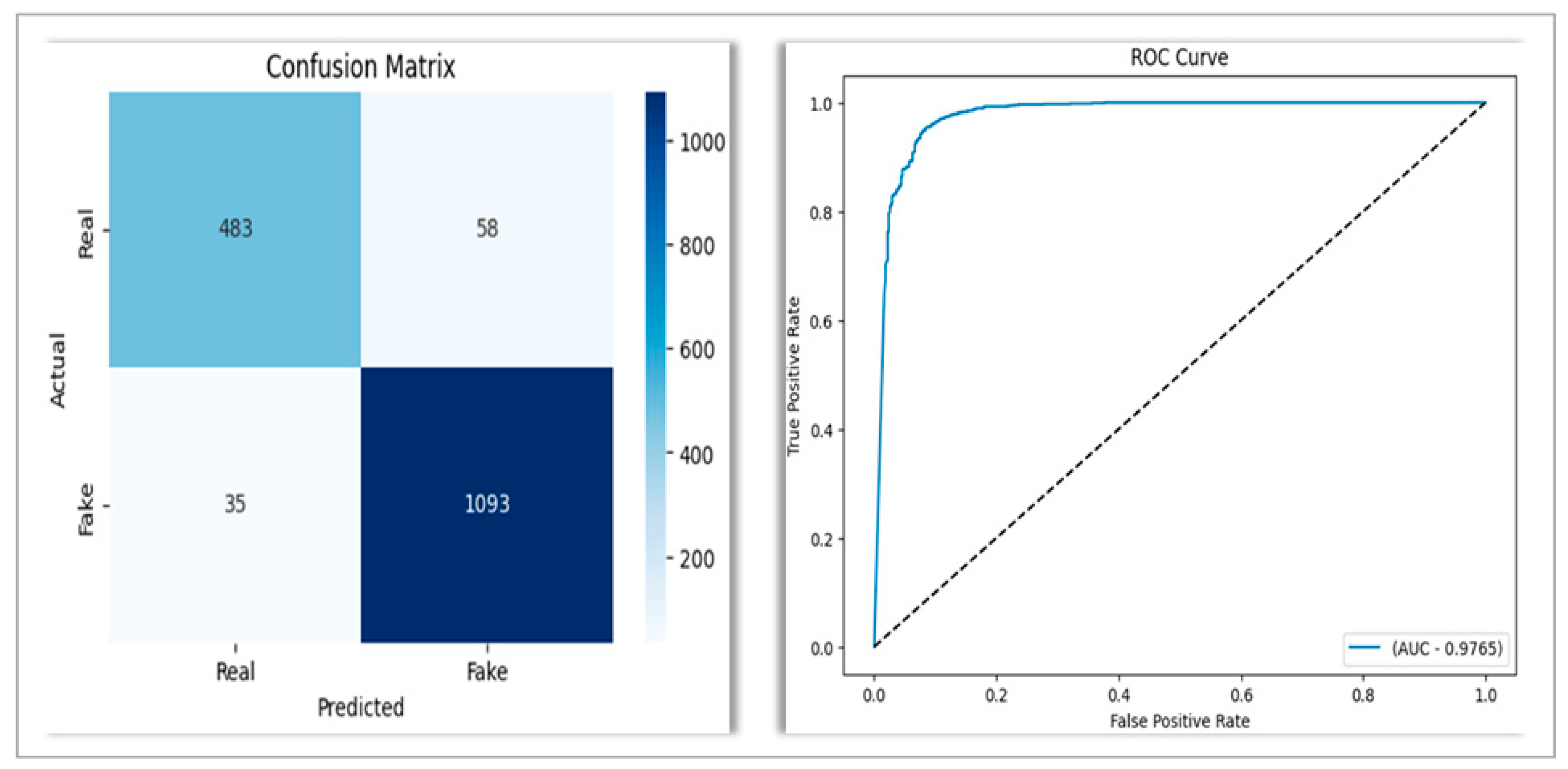

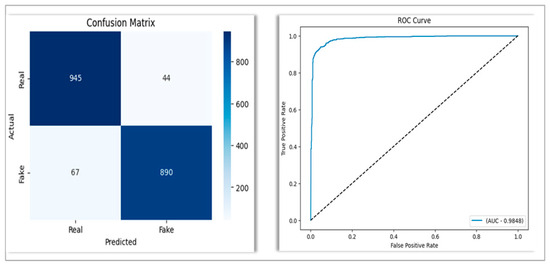

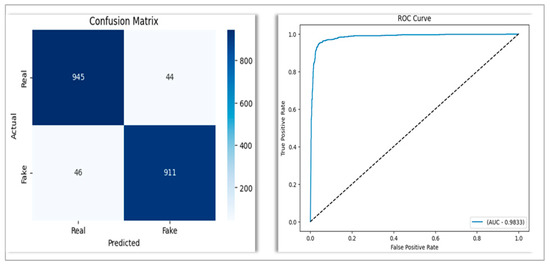

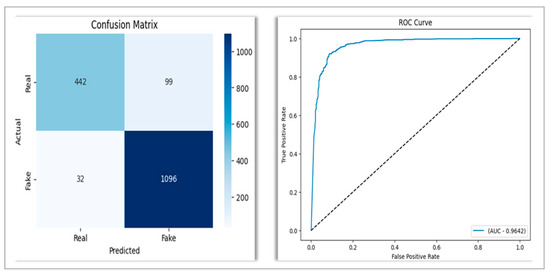

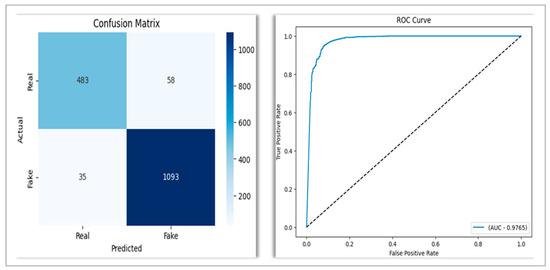

To further interpret these results, Figure 2, Figure 3, Figure 4 and Figure 5 present the corresponding confusion matrices and ROC curves for each case. The confusion matrices in Figure 2 and Figure 3 show that both Xception and ResNet50 models can distinguish between real and fake videos with relatively low misclassification rates. The majority of real (class 1) and fake (class 0) instances are correctly classified. The ROC curves further confirm this by presenting high separability, with AUC values exceeding 98% for both models.

Figure 2.

Confusion matrix and ROC curve obtained on the FF++ dataset using Xception features without feature selection.

Figure 3.

Confusion matrix and ROC curve obtained on the FF++ dataset using ResNet50 features without feature selection.

Figure 4.

Confusion matrix and ROC curve obtained on the CDF dataset using Xception features without feature selection.

Figure 5.

Confusion matrix and ROC curve obtained on the CDF dataset using ResNet50 features without feature selection.

Figure 4 and Figure 5 illustrate the classification performance of the models on the Celeb-DF dataset. Although both models maintain high true positive rates for real videos (class 1), ResNet50 shows fewer misclassifications for fake instances (class 0), resulting in better overall balance. This is reflected in the ROC curve of ResNet50, which displays a smoother and more dominant curve than that of Xception.

While both backbones perform well, the results suggest that ResNet50 features are more robust on the more challenging Celeb-DF dataset, whereas Xception provides marginally stronger discrimination on FF++. The following sections show how applying the proposed AHA-PLO hybrid feature selection model leads to even higher AUC values by eliminating redundant and less informative features from these raw representations.

5.3.2. Evaluation on FF++ Dataset

The classification results obtained after applying the feature selection algorithms to the FF++ dataset are summarized in Table 4. The proposed AHA-PLO hybrid model achieved the highest AUC scores for both backbone architectures, highlighting its ability to select discriminative features. In particular, the hybrid model attained an AUC of 99.36% with the Xception backbone, an improvement over both individual algorithms.

Table 4.

Performance metrics (%) and feature number for the FF++ dataset.

Table 4 summarizes the classification performance of three meta-heuristic feature selection methods, AHA, PLO, and AHA-PLO, on the FF++ dataset using deep features extracted via Xception and ResNet50 architectures.

Focusing on the Xception-based results, the proposed AHA-PLO hybrid model achieves the highest AUC (99.36%), slightly outperforming both AHA (99.12%) and PLO (99.25%). This improvement is further supported by robust F1-scores and high recall values for both classes, suggesting that the hybrid model maintains a strong balance between true positive and true negative rates. While PLO yields marginally better accuracy (96.29%) than AHA-PLO (96.15%), the hybrid approach offers a more consistent trade-off across all metrics, particularly recall and AUC, which are critical for minimizing false negatives in deepfake detection.

In the ResNet50-based setting, AHA-PLO also delivers the highest AUC (98.80%) while selecting 1029 features, far fewer than AHA (1776) and comparable to PLO (1015), indicating a compact feature subset without loss of performance.

Overall, the hybrid method demonstrates a synergistic effect, especially when applied to Xception features, highlighting its advantage in selecting more discriminative feature subsets for the FF++ dataset.

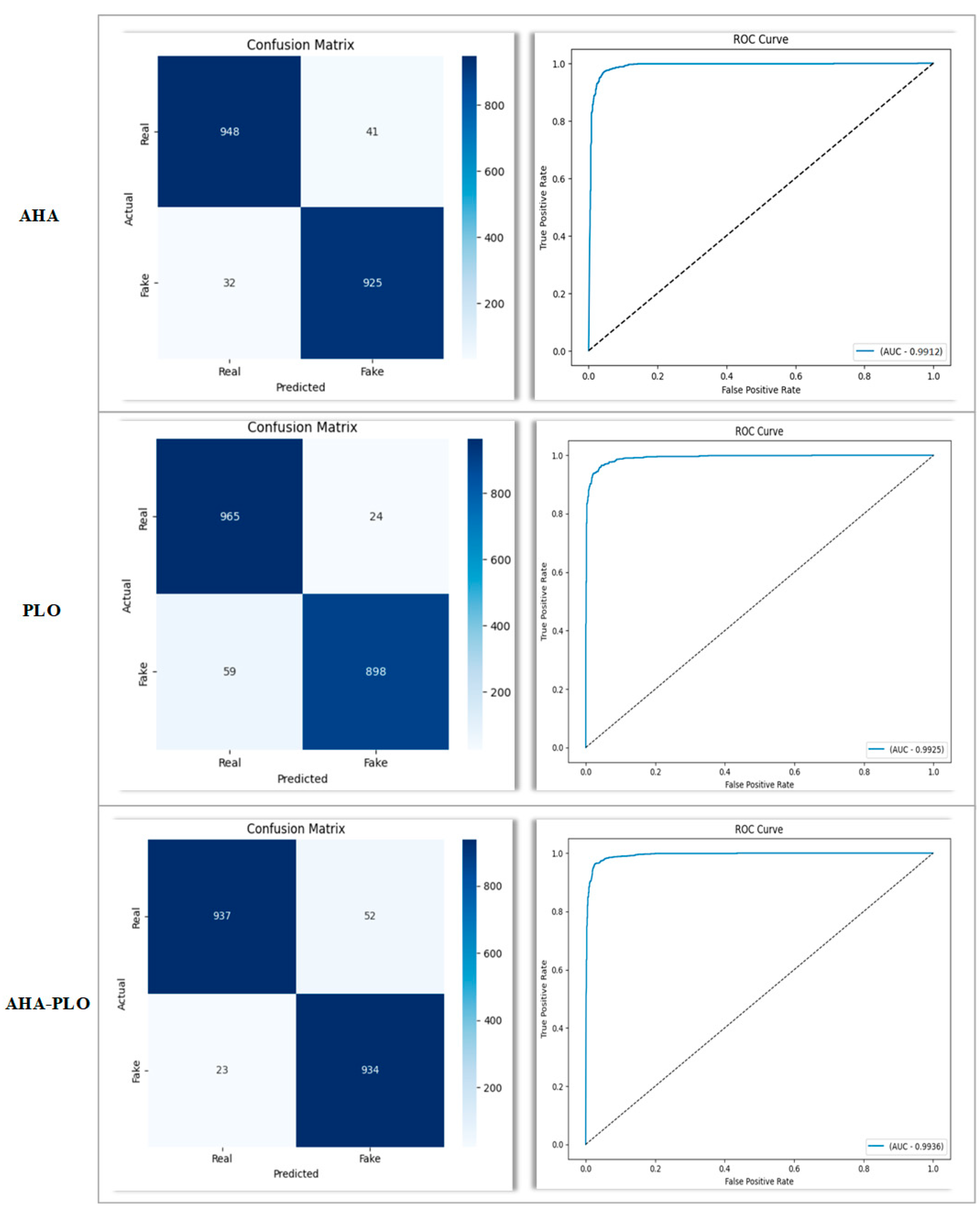

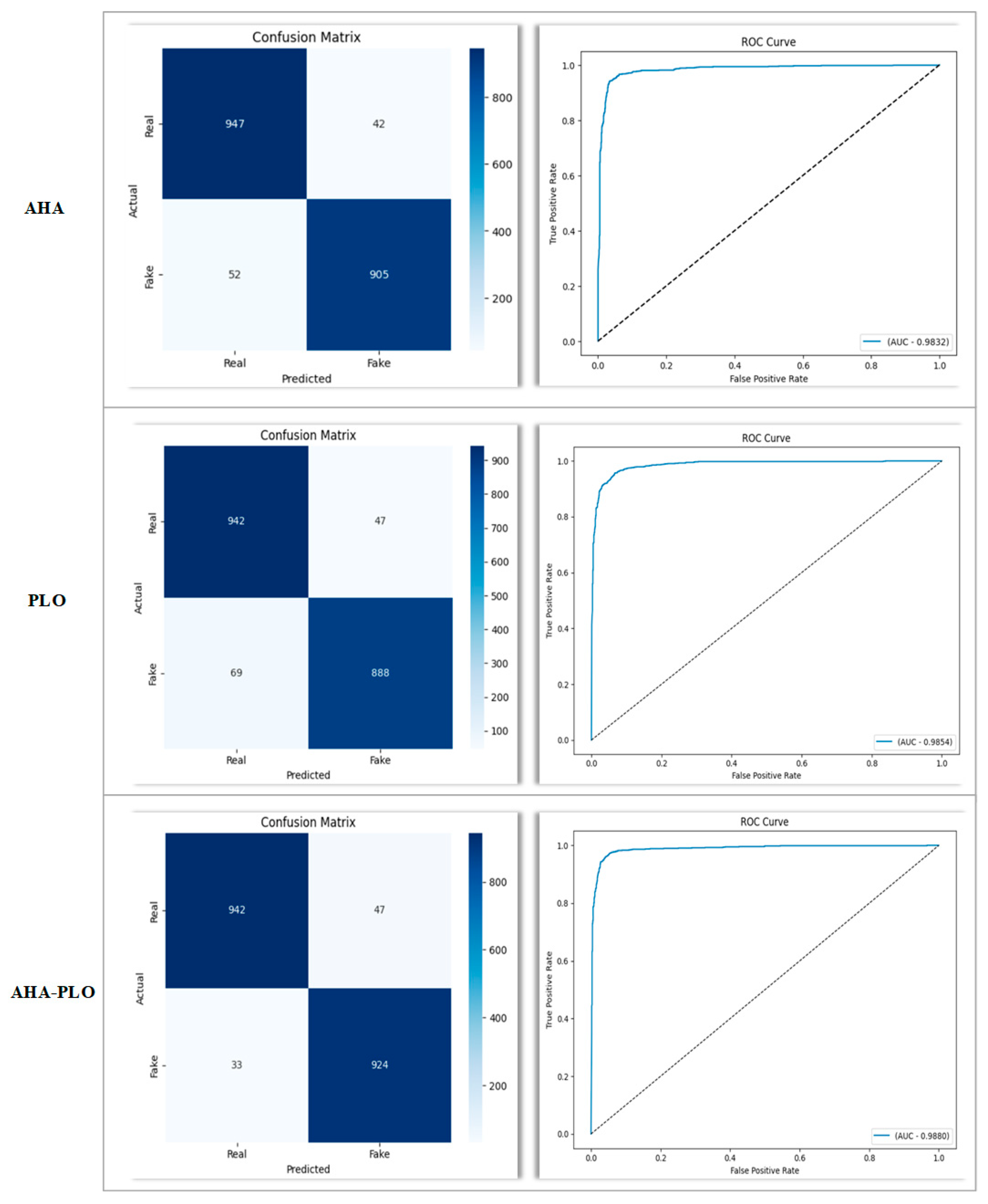

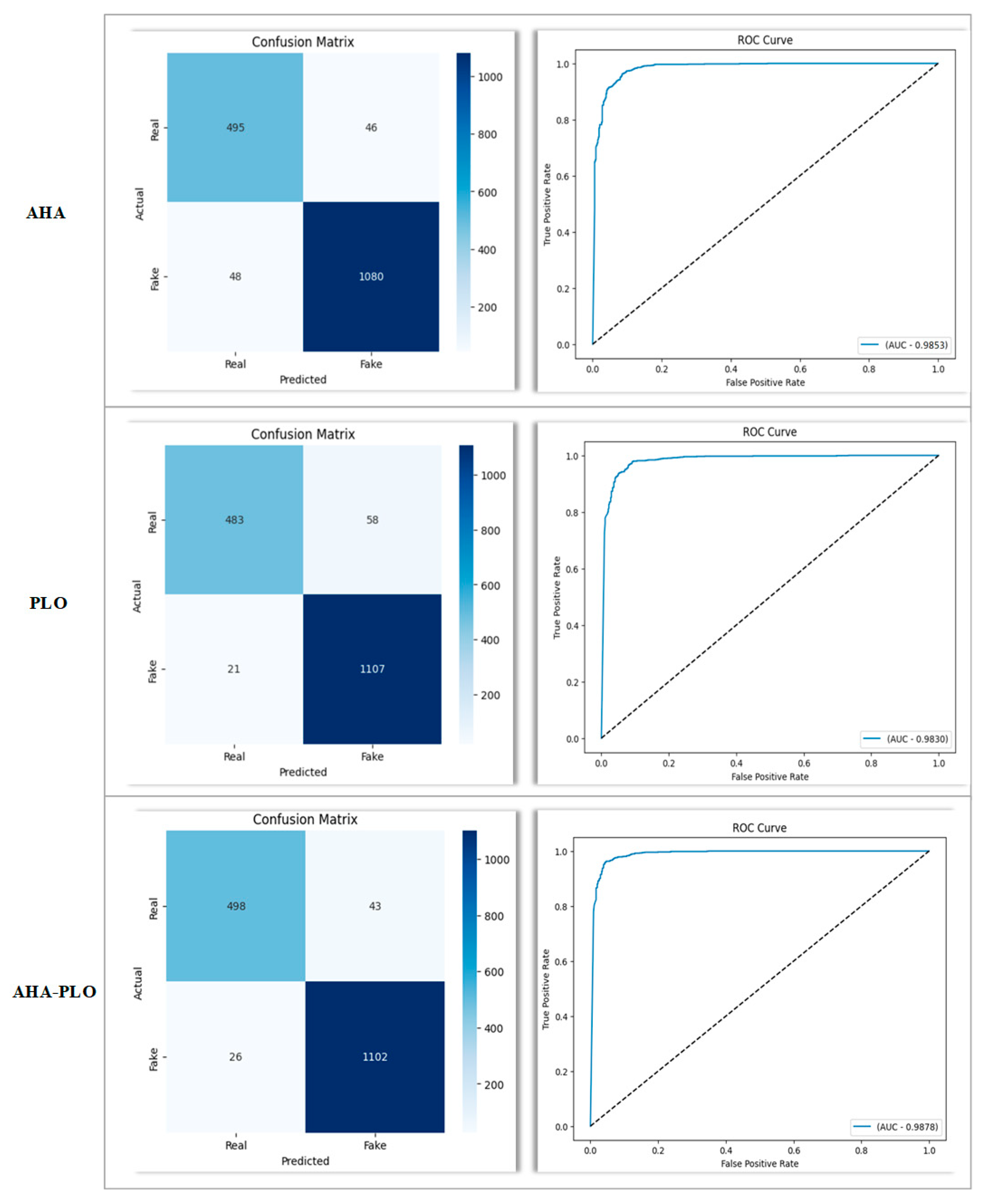

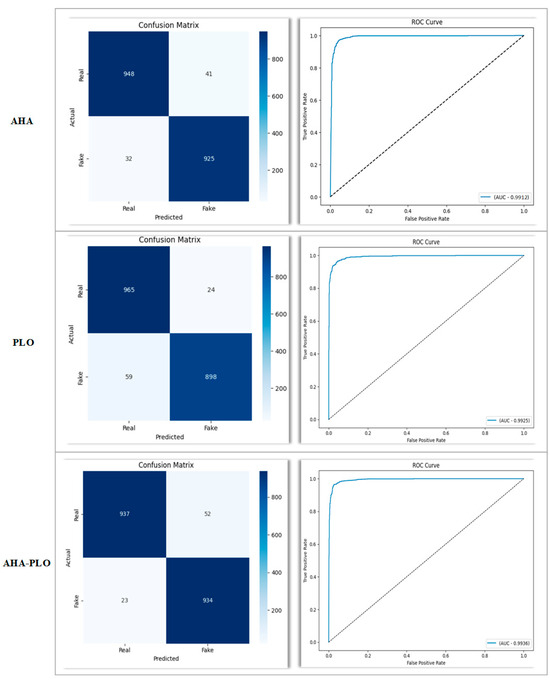

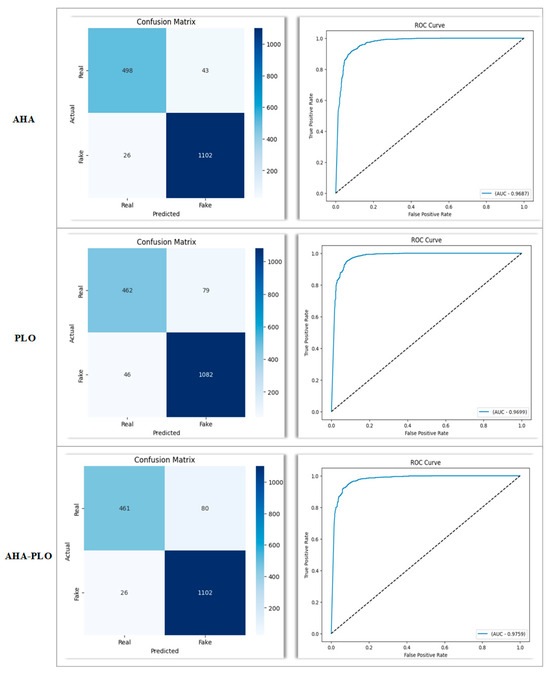

Figure 6 and Figure 7 further illustrate the performance via confusion matrices and ROC curves, confirming the hybrid model’s effectiveness in reducing misclassifications and increasing overall detection accuracy.

Figure 6.

Comparative performance of AHA, PLO, and AHA-PLO on the FF++ dataset using Xception-based features.

Figure 7.

Comparative performance of AHA, PLO, and AHA-PLO on the FF++ dataset using ResNet50-based features.

Figure 6 illustrates the confusion matrices and ROC curves for the AHA, PLO, and AHA-PLO algorithms applied to the FF++ dataset using Xception-extracted features. Among the three, the hybrid AHA-PLO model exhibits the highest AUC score, 99.36%, indicating superior overall classification capability. Additionally, it achieves a lower false positive rate and false negative rate compared to its individual components. While AHA shows a balanced detection performance with relatively few misclassifications, PLO yields a higher number of false negatives. The combined strengths of AHA and PLO enable the hybrid model to maintain both strong generalization and precise decision boundaries.

Figure 7 presents the confusion matrices and ROC curves for AHA, PLO, and their hybrid AHA-PLO algorithm on the FF++ dataset using ResNet50-based features. The AHA-PLO hybrid model achieves the highest AUC value, 98.80%, reflecting improved detection capability compared to its individual components. Notably, it substantially reduces false negatives relative to PLO and demonstrates a better balance between sensitivity and specificity. While AHA and PLO each show competitive performance, their combination leads to a synergistic improvement, particularly in distinguishing fake samples more accurately.

5.3.3. Evaluation on CDF Dataset

To further assess the generalizability and robustness of the proposed AHA-PLO framework, experiments were conducted on the CDF dataset. The classification performance of AHA, PLO, and the hybrid AHA-PLO model was evaluated using both Xception and ResNet50-based feature representations.

To isolate the contribution of the hybridization strategy, an ablation study was conducted by applying the AHA and PLO algorithms individually and comparing their results with those of the hybrid AHA-PLO model on the CDF dataset. As presented in Table 5, the AHA-PLO model outperforms both standalone algorithms on almost all evaluation metrics, most notably achieving the highest AUC of 98.78% with ResNet50-based features.

Table 5.

Performance metrics (%) and feature number for the CDF dataset.

Although AHA tends to select a larger number of features and PLO achieves slightly higher precision values in some classes, the hybrid model offers a more balanced trade-off between feature compactness and classification performance. This performance gain supports the synergistic integration of AHA’s global exploration capacity and PLO’s local exploitation strength, leading to a more discriminative and optimized feature subset. Overall, these results provide empirical evidence for the operational complementarity of the two metaheuristic components within the proposed hybrid framework.
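The division of labor described above (global exploration followed by local exploitation) can be illustrated with a generic hybrid search over continuous positions that are thresholded into feature masks. This is a hedged sketch only: the exploration move (a step relative to a random partner plus uniform noise) and the exploitation move (a Gaussian step around the best solution) are simple placeholders standing in for the roles of AHA and PLO, not their actual update equations, and the linearly decreasing exploration probability, the 0.5 threshold, and all parameter values are assumptions.

```python
import random
from typing import Callable, List

Fitness = Callable[[List[int]], float]  # lower is better


def binarize(position: List[float], threshold: float = 0.5) -> List[int]:
    """Threshold transfer: feature j is selected when position[j] > threshold."""
    return [1 if x > threshold else 0 for x in position]


def clip01(x: float) -> float:
    """Keep a coordinate inside the [0, 1] search box."""
    return min(1.0, max(0.0, x))


def hybrid_search(fitness: Fitness, dim: int, pop_size: int = 10,
                  iters: int = 30, step: float = 0.1, seed: int = 0) -> List[int]:
    rng = random.Random(seed)
    pop = [[rng.random() for _ in range(dim)] for _ in range(pop_size)]
    scores = [fitness(binarize(p)) for p in pop]
    b = min(range(pop_size), key=lambda k: scores[k])
    best, best_score = pop[b][:], scores[b]

    for t in range(iters):
        # Assumed schedule: favor exploration early and exploitation late.
        explore_prob = 1.0 - t / iters
        for i in range(pop_size):
            x = pop[i]
            if rng.random() < explore_prob:
                # Exploration placeholder (role attributed to AHA): move relative
                # to a random partner, plus uniform noise.
                y = pop[rng.randrange(pop_size)]
                cand = [clip01(xj + rng.uniform(-1.0, 1.0) * (yj - xj)
                               + rng.uniform(-step, step))
                        for xj, yj in zip(x, y)]
            else:
                # Exploitation placeholder (role attributed to PLO): small
                # Gaussian step around the best solution found so far.
                cand = [clip01(bj + rng.gauss(0.0, step)) for bj in best]
            s = fitness(binarize(cand))
            if s <= scores[i]:  # greedy replacement keeps the better solution
                pop[i], scores[i] = cand, s
                if s < best_score:
                    best, best_score = cand[:], s
    return binarize(best)


if __name__ == "__main__":
    # Toy check: fitness is the Hamming distance to a hidden target mask.
    target = [1, 0, 1, 1, 0, 0, 1, 0]
    print(hybrid_search(lambda m: sum(a != b for a, b in zip(m, target)), dim=8))
```

In a real feature selection run the toy Hamming fitness would be replaced by a wrapper criterion evaluated on the deep features; the greedy replacement guarantees that the returned mask is never worse than the best initial one.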

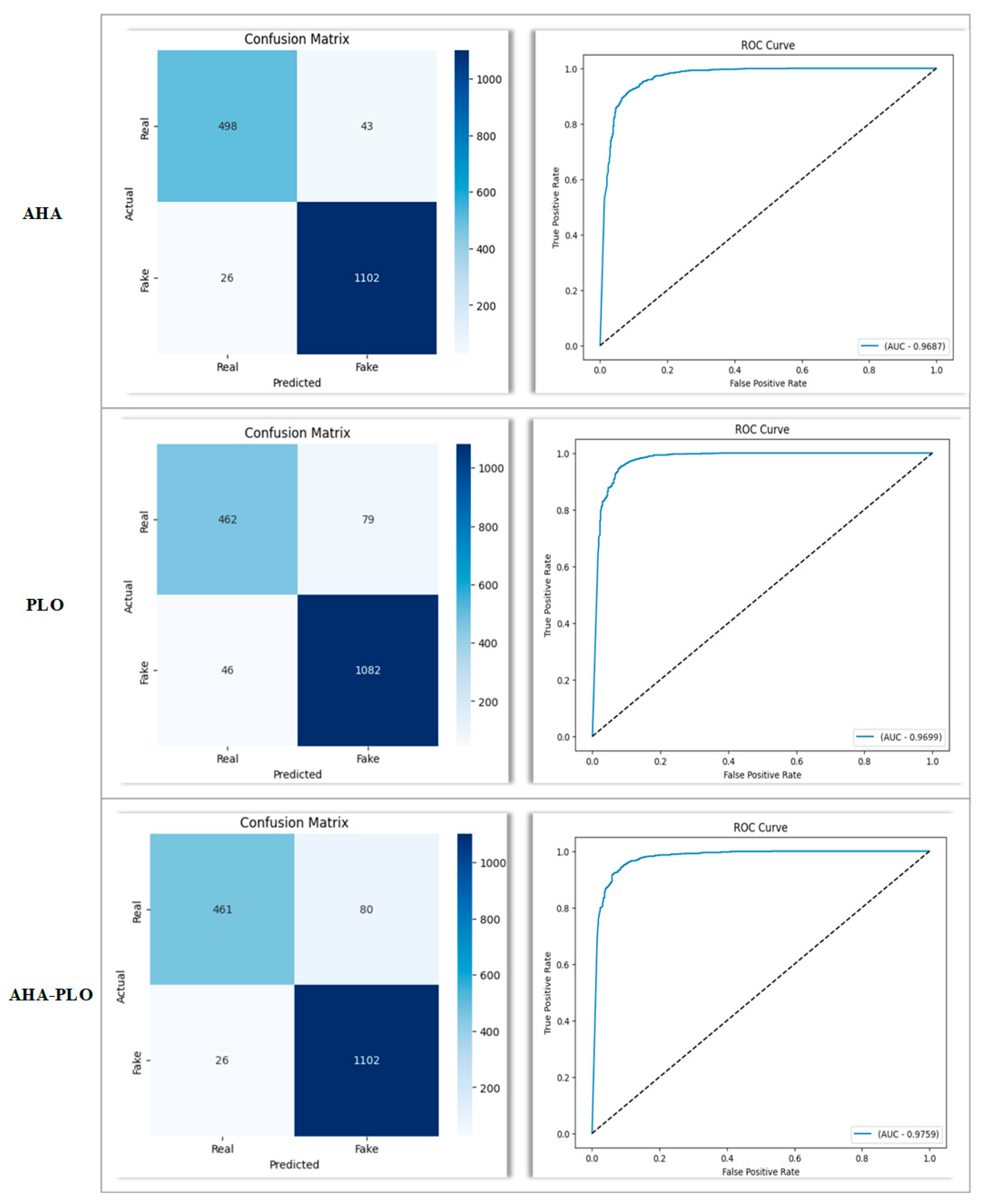

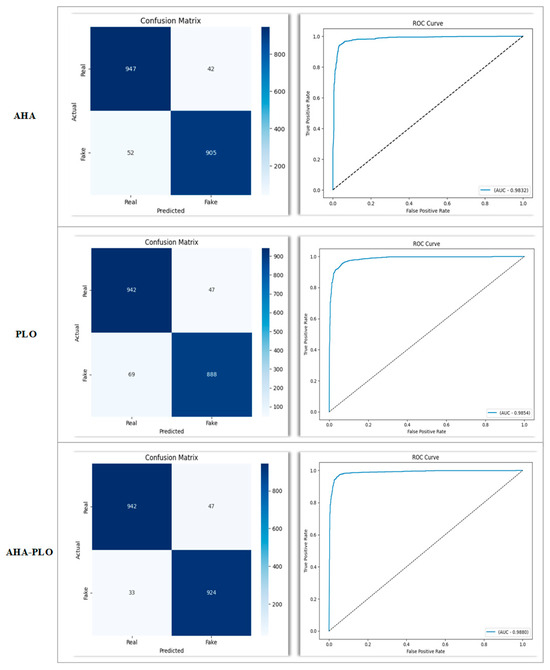

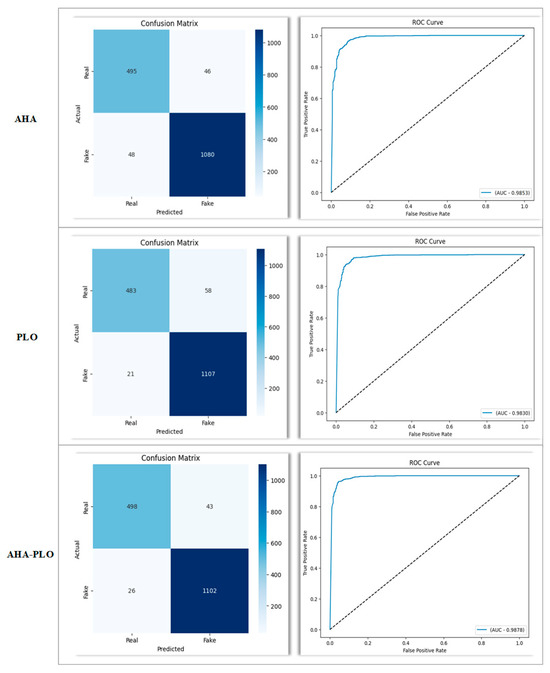

The classification performance of the proposed methods on the CDF dataset is further illustrated through visual analysis. Figure 8 presents the confusion matrices and ROC curves obtained using Xception features, while Figure 9 demonstrates the corresponding results based on ResNet50 features. These visualizations offer additional insights into the comparative behavior of AHA, PLO, and AHA-PLO algorithms.

Figure 8.

Comparative performance of AHA, PLO, and AHA-PLO on the CDF dataset using Xception-based features.

Figure 9.

Comparative performance of AHA, PLO, and AHA-PLO on the CDF dataset using ResNet50-based features.

As illustrated in Figure 8, the classification performance of the individual and hybrid metaheuristic algorithms on the CDF dataset is evaluated using Xception-based deep features. The AHA-PLO hybrid model exhibits the strongest discriminative capability, achieving the highest AUC of 97.59%, compared with 96.67% for AHA and 96.99% for PLO. In terms of the confusion matrix, AHA-PLO demonstrates a more balanced classification between real and fake samples, correctly identifying 1102 fake samples with only 26 false negatives and outperforming both AHA and PLO in minimizing the misclassification of fake samples.

As shown in Figure 9, the classification results obtained using ResNet50-based features on the CDF dataset reveal the performance differences between the standalone AHA and PLO algorithms and the hybrid AHA-PLO model. The AHA-PLO model stands out with the highest AUC score of 98.78%, surpassing both AHA (98.53%) and PLO (98.30%).

The confusion matrix further supports this finding. AHA-PLO successfully detects 1102 fake samples, with only 26 false negatives, matching AHA in fake detection accuracy but outperforming it in the classification of real samples. Compared to PLO, which misclassifies 58 real samples, AHA-PLO also achieves better balance in both classes, indicating a more robust generalization capability.
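Treating fake as the class of interest, the counts read from the confusion matrix translate directly into the recall of the fake class, that is, the fraction of fake samples that are detected; the total of 1128 fake test samples used here is simply the sum of the two reported counts.

```latex
\mathrm{Recall}_{\text{fake}}
  = \frac{TP_{\text{fake}}}{TP_{\text{fake}} + FN_{\text{fake}}}
  = \frac{1102}{1102 + 26}
  = \frac{1102}{1128}
  \approx 0.977
```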

These observations indicate that the hybrid strategy not only preserves the strengths of both AHA and PLO but also mitigates their weaknesses. In particular, AHA-PLO minimizes false negatives, a critical error type in deepfake detection, without a trade-off in false positives, suggesting its potential as a reliable detector on more challenging datasets such as CDF.

6. Discussion

This section discusses the experimental findings by focusing on two main aspects: the impact of feature selection on model performance and the comparison of the proposed hybrid framework with state-of-the-art deepfake detection methods. First, the results obtained before and after applying metaheuristic-based feature selection are examined to highlight how redundant features influence performance and how the proposed algorithms improve classification robustness. Next, the performance of the hybrid AHA-PLO model is compared with existing approaches in the literature, providing insights into the strengths and competitiveness of the proposed method across different datasets.

6.1. Evaluating Feature Selection Before and After Optimization

In this section, the results obtained before and after feature selection are discussed. Considering the FF++ dataset, feature selection with metaheuristic algorithms, and particularly the hybrid AHA-PLO model, led to clear improvements over the baseline without feature selection.

- AUC values improved after feature selection, rising from 98.48% (Xception) and 98.33% (ResNet50) to 99.36% and 98.80%, respectively, with AHA-PLO.

- Accuracy also increased, most notably for Xception, where it rose from 94.30% to 96.15%, indicating more reliable predictions.

- Recall for real samples showed clear gains with AHA-PLO, reaching 97.60% and thereby reducing the number of real instances misclassified as fake.

- Precision for fake samples was enhanced, with PLO achieving 97.39%, which lowered the number of real instances misclassified as fake.

- Feature dimensionality was substantially reduced, especially with ResNet50, where the feature count decreased from 2048 to about 1029 while maintaining high classification performance.

- Overall, the AHA-PLO hybrid model delivered the most balanced improvements and consistently outperformed standalone AHA and PLO.
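The per-class precision and recall values discussed above follow from the binary confusion matrix, and a single error type affects two metrics at once: a real video predicted as fake lowers both the recall of the real class and the precision of the fake class. The small sketch below makes this explicit using the class coding of the figures (1 = real, 0 = fake); the function name and toy labels are hypothetical.

```python
from typing import Dict, Sequence


def per_class_metrics(y_true: Sequence[int], y_pred: Sequence[int],
                      cls: int) -> Dict[str, float]:
    """Precision, recall and F1 for one class of a binary problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == cls and p == cls)
    fp = sum(1 for t, p in pairs if t != cls and p == cls)  # other class predicted as cls
    fn = sum(1 for t, p in pairs if t == cls and p != cls)  # cls predicted as other class
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    REAL, FAKE = 1, 0
    y_true = [1, 1, 1, 1, 0, 0, 0, 0]
    # Two real videos predicted as fake, one fake video predicted as real.
    y_pred = [1, 1, 0, 0, 0, 0, 0, 1]
    print("real:", per_class_metrics(y_true, y_pred, REAL))  # real recall = 2/4
    print("fake:", per_class_metrics(y_true, y_pred, FAKE))  # fake precision = 3/5
```

In the toy example, the same two real-as-fake errors reduce real recall to 0.5 and fake precision to 0.6.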

When the CDF dataset is considered, applying metaheuristic-based feature selection leads to clear performance improvements over the baseline, with the hybrid AHA-PLO model providing the most consistent gains across metrics.

- AUC values improved with feature selection: with AHA-PLO, Xception increased from 96.42% to 97.59%, and ResNet50 rose from 97.65% to 98.78%.

- Accuracy also showed notable gains, especially for ResNet50, where AHA-PLO raised accuracy from 94.43% to 95.87%.

- Recall for real samples improved substantially with AHA-PLO, reaching 92.05% with ResNet50 and reducing the misclassification of real videos as fake.

- Precision for fake samples was strengthened, reaching 96.24% with ResNet50 and reducing the misclassification of real videos as fake.

- Feature dimensionality was reduced while classification power was preserved or enhanced; with ResNet50, for example, the feature count decreased from 2048 to around 1523, roughly a quarter fewer features.

- Overall, the AHA-PLO hybrid model achieved the best trade-off between compactness and performance, consistently surpassing standalone AHA and PLO.
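The gains and reductions listed in Section 6.1 can be recomputed from the reported values. The sketch below only re-derives percentage-point differences and the share of the 2048-dimensional ResNet50 feature vector that was discarded; it introduces no new results, and the dictionary layout and function names are illustrative.

```python
from typing import Dict, Tuple

FULL_DIM = 2048  # dimensionality of the ResNet50 feature vector before selection

# (dataset, backbone, metric) -> (baseline without selection, AHA-PLO), in %
REPORTED: Dict[Tuple[str, str, str], Tuple[float, float]] = {
    ("FF++", "Xception", "AUC"): (98.48, 99.36),
    ("FF++", "ResNet50", "AUC"): (98.33, 98.80),
    ("FF++", "Xception", "Accuracy"): (94.30, 96.15),
    ("CDF", "Xception", "AUC"): (96.42, 97.59),
    ("CDF", "ResNet50", "AUC"): (97.65, 98.78),
    ("CDF", "ResNet50", "Accuracy"): (94.43, 95.87),
}

# Approximate number of ResNet50 features selected by AHA-PLO
SELECTED = {"FF++": 1029, "CDF": 1523}


def gain_pp(before: float, after: float) -> float:
    """Absolute improvement in percentage points."""
    return round(after - before, 2)


def reduction_pct(selected: int, full: int = FULL_DIM) -> float:
    """Share of the original features that were discarded, in %."""
    return round(100.0 * (1 - selected / full), 2)


if __name__ == "__main__":
    for (ds, bb, metric), (before, after) in REPORTED.items():
        print(f"{ds:5s} {bb:9s} {metric:8s} +{gain_pp(before, after):.2f} pp")
    for ds, k in SELECTED.items():
        print(f"{ds:5s} ResNet50: {k}/{FULL_DIM} kept, {reduction_pct(k):.2f}% removed")
```

Running it shows that the ResNet50 subsets discard about 49.8% of the features on FF++ and about 25.6% on CDF.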

6.2. Comparison with State-of-the-Art

We compared the effectiveness of the proposed AHA-PLO hybrid model with state-of-the-art deepfake video detection models [10,21,22,23,24,25,26]. Because the studies listed in Table 6 were evaluated on the same datasets used in this work, their reported results can be compared with ours. In particular, studies [10,26] also employed meta-heuristic algorithms for deepfake detection.

Table 6.

Comparison of the proposed AHA-PLO hybrid model with state-of-the-art deepfake detection approaches.

In addition to the performance-oriented comparison given in Table 6, Table 7 presents an extended summary of MHS methods that have been applied for deepfake detection across various datasets. While only one MHS study in Table 7 reported the AUC metric under conditions directly comparable to the present work, several other hybrid metaheuristic approaches have employed different datasets and evaluation metrics such as accuracy, F1-score, or precision.

Table 7.

Overview of MHS methods applied to deepfake video detection.

As shown in Table 7, various MHS approaches have been proposed for deepfake detection, typically integrating swarm or evolutionary algorithms with deep learning architectures. Most existing studies focus on hyperparameter optimization or feature extraction enhancement rather than direct feature selection. The Domain column indicates the model on which each hybrid was implemented (such as RMDL, EfficientNet, CNN-RNN, or Xception), showing that metaheuristic optimization has predominantly been applied to tuning at the classifier or feature extraction level. In contrast, the proposed AHA–PLO hybrid model operates explicitly within the feature selection domain, aiming to reduce redundancy in the high-dimensional feature spaces extracted from deepfake datasets.

6.3. Cross-Dataset Evaluation

To further evaluate the generalization capability of the proposed AHA–PLO hybrid model, an additional cross-dataset experiment was conducted. In this experiment, the model trained on the FF++ dataset was tested on two unseen datasets, namely WildDeepfake (WDF) [27] and CDF, to assess its ability to adapt to different data distributions and manipulation characteristics. The results of these experiments are presented in Table 8.

Table 8.

Cross-dataset generalization results of the proposed AHA–PLO hybrid model.

As shown in Table 8, the cross-dataset evaluation reveals how well the proposed AHA–PLO hybrid model generalizes when trained on the FF++ dataset and tested on the unseen WDF and CDF datasets.

In the experiment where the model trained on FF++ was evaluated on WDF, the hybrid AHA–PLO model achieved the highest AUC of 74.97%, outperforming the standalone AHA and PLO models as well as the baseline model without feature selection. This improvement suggests that combining the exploration capability of AHA with the exploitation-oriented refinement of PLO enhances the model’s ability to generalize to new data domains.

When the same model trained on FaceForensics++ was tested on the CDF dataset, all configurations produced much lower AUC values, between approximately 51% and 52%, which is close to chance level. We attribute this drop to the larger domain gap between the two datasets, including differences in video compression and manipulation artifacts.

Despite the overall drop in performance, the AHA–PLO hybrid model maintained the most stable behavior across both unseen datasets, suggesting comparatively better robustness under domain shift, although the near-chance results on CDF show that cross-dataset generalization remains challenging.

7. Conclusions

In this study, a deepfake detection approach is introduced that leverages meta-heuristic algorithms for feature selection to improve detection performance. The proposed AHA-PLO hybrid model achieves the highest AUC values among the compared methods. To assess the contribution of hybridization, AHA, PLO, and the hybrid AHA-PLO algorithms are evaluated extensively, showing a clear benefit of the hybrid strategy. Experimental results on the FF++ and CDF datasets, which are among the most comprehensive and widely used benchmarks in deepfake detection, confirm that the proposed method not only surpasses baseline approaches but also outperforms current state-of-the-art techniques. On the FF++ dataset, the AHA-PLO model achieves an AUC of 99.36% while maintaining a competitive accuracy of 96.15%. On the CDF dataset, the proposed model achieves an AUC of 98.78% and an accuracy of 95.87%, supporting its robustness across different datasets. These findings highlight the value of hybrid meta-heuristic algorithms as a tool for optimizing feature selection in deep learning-based deepfake detection. In future work, we plan to refine the approach with novel meta-heuristic optimization strategies and to validate its effectiveness on a wider range of deepfake datasets.

Author Contributions

Conceptualization, A.K. and M.A.; methodology, A.K.; software, A.K.; validation, formal analysis, investigation, resources, data curation, writing—original draft preparation, writing—review and editing, visualization, project administration, M.A. and A.K.; supervision, M.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Hariprasad, Y.; Iyengar, S.; Subramanian, N. Deepfake video detection using lip region analysis with advanced artificial intelligence based anomaly detection technique. Hilar. Clin. J. 2024. Authorea Preprints.

- Qureshi, S.M.; Saeed, A.; Almotiri, S.H.; Ahmad, F.; Al Ghamdi, M.A. Deepfake forensics: A survey of digital forensic methods for multimodal deepfake identification on social media. PeerJ Comput. Sci. 2024, 10, e2037.

- Tay, W.X.; Na Chua, H.; Jasser, M.B.; Issa, B.; Wong, R.T. DeepDect: A Facial Deepfake Video Detection Application using Ensemble Learning. In Proceedings of the 2024 IEEE 12th Conference on Systems, Process & Control (ICSPC), Malacca, Malaysia, 7 December 2024; pp. 310–315.

- Al-Shalif, S.A.; Senan, N.; Saeed, F.; Ghaban, W.; Ibrahim, N.; Aamir, M.; Sharif, W. A systematic literature review on meta-heuristic based feature selection techniques for text classification. PeerJ Comput. Sci. 2024, 10, e2084.

- Zhao, W.; Wang, L.; Mirjalili, S. Artificial hummingbird algorithm: A new bio-inspired optimizer with its engineering applications. Comput. Methods Appl. Mech. Eng. 2022, 388, 114194.

- Yuan, C.; Zhao, D.; Heidari, A.A.; Liu, L.; Chen, Y.; Chen, H. Polar lights optimizer: Algorithm and applications in image segmentation and feature selection. Neurocomputing 2024, 607, 128427.

- Rezk, H.; Olabi, A.G.; Wilberforce, T.; Sayed, E.T. Metaheuristic optimization algorithms for real-world electrical and civil engineering application: A review. Results Eng. 2024, 23, 102437.

- Priya, G.N.; Kishore, B.; Ganeshan, R.; Cristin, R. Video forgery detection using competitive swarm sun flower optimization algorithm based deep learning. Wirel. Netw. 2024, 31–49.

- Alhaji, H.S.; Celik, Y.; Goel, S. An approach to deepfake video detection based on ACO-PSO features and deep learning. Electronics 2024, 13, 2398.

- Cunha, L.; Zhang, L.; Sowan, B.; Lim, C.P.; Kong, Y. Video deepfake detection using Particle Swarm Optimization improved deep neural networks. Neural Comput. Appl. 2024, 36, 8417–8453.

- Al-Adwan, A.; Alazzam, H.; Al-Anbaki, N.; Alduweib, E. Detection of Deepfake Media Using a Hybrid CNN–RNN Model and Particle Swarm Optimization (PSO) Algorithm. Computers 2024, 13, 99.

- Al-Qazzaz, A.S.; Salehpour, P.; Aghdasi, H.S. Robust deepfake face detection leveraging xception model and novel snake optimization technique. J. Robot. Control. (JRC) 2024, 5, 1444–1456.

- Alazwari, S.; Alsamri, M.O.J.; Alamgeer, M.; Alabdan, R.; Alzahrani, I.; Rizwanullah, M.; Osman, A.E. Artificial rabbits optimization with transfer learning based deepfake detection model for biometric applications. Ain Shams Eng. J. 2024, 15, 103057.

- Krishnan, V.G.; Vadivel, R.; Sankar, K.; Sathyamoorthy, K.; Laxmi, B.P. Coot Bird Optimization-Based ESkip-ResNet Classification for Deepfake Detection. J. Comput. Cogn. Eng. 2022.

- Rössler, A.; Cozzolino, D.; Verdoliva, L.; Riess, C.; Thies, J.; Nießner, M. FaceForensics++: Learning to detect manipulated facial images. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1–11.

- Li, Y.; Yang, X.; Sun, P.; Qi, H.; Lyu, S. Celeb-DF: A large-scale challenging dataset for deepfake forensics. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3207–3216.

- Zhang, K.; Zhang, Z.; Li, Z.; Qiao, Y. Joint face detection and alignment using multitask cascaded convolutional networks. IEEE Signal Process. Lett. 2016, 23, 1499–1503.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Elaziz, M.A.; Dahou, A.; El-Sappagh, S.; Mabrouk, A.; Gaber, M.M. AHA-AO: Artificial hummingbird algorithm with Aquila optimization for efficient feature selection in medical image classification. Appl. Sci. 2022, 12, 9710.

- Zhao, H.; Wei, T.; Zhou, W.; Zhang, W.; Chen, D.; Yu, N. Multi-attentional deepfake detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 2185–2194.

- Qian, Y.; Yin, G.; Sheng, L.; Chen, Z.; Shao, J. Thinking in frequency: Face forgery detection by mining frequency-aware clues. In Proceedings of the European Conference on Computer Vision, Virtual Event, 23–28 August 2020; pp. 86–103.

- Cozzolino, D.; Rössler, A.; Thies, J.; Nießner, M.; Verdoliva, L. ID-Reveal: Identity-aware deepfake video detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 15108–15117.

- Wang, Y.; Sun, Q.; Rong, D.; Geng, R. Multi-domain awareness for compressed deepfake videos detection over social networks guided by common mechanisms between artifacts. Comput. Vis. Image Underst. 2024, 247, 104072.

- Liu, H.; Li, X.; Zhou, W.; Chen, Y.; He, Y.; Xue, H.; Zhang, W.; Yu, N. Spatial-phase shallow learning: Rethinking face forgery detection in frequency domain. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 772–781.

- Zhang, L.; Zhao, D.; Lim, C.P.; Asadi, H.; Huang, H.; Yu, Y.; Gao, R. Video deepfake classification using particle swarm optimization-based evolving ensemble models. Knowl.-Based Syst. 2024, 289, 111461.

- Zi, B.; Chang, M.; Chen, J.; Ma, X.; Jiang, Y.G. WildDeepfake: A challenging real-world dataset for deepfake detection. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 2382–2390.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).