Unpaired Image Captioning via Cross-Modal Semantic Alignment

Abstract

1. Introduction

- (1) A multi-level joint feature representation module based on CLIP’s cross-modal alignment capability is proposed. It automatically extracts semantic entities from images and fuses them with image features to construct rich cross-modal joint features. These features guide the language model to generate descriptions that are consistent with the image content and semantically precise.

- (2) A joint reward function that integrates image-text semantic alignment and language fluency is designed. It is combined with a progressive multi-stage optimization strategy to improve the semantic accuracy and naturalness of the generated text.

- (3) A progressive multi-stage optimization framework is developed. It combines initialization with reinforcement learning to ensure training stability and to improve cross-modal adaptability under unpaired conditions.
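Contribution (1) describes entity extraction and fusion only in outline. One way to picture the idea is the minimal sketch below. It assumes that the image and the candidate entity words have already been embedded into a shared CLIP space [10], and it uses toy orthogonal vectors in place of real CLIP features. The top-k retrieval, the softmax-weighted pooling, the concatenation-based fusion, and the function names (`retrieve_entities`, `fuse_features`) are hypothetical illustrations, not the module described in Section 3.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale vectors along the last axis to unit length so dot products are cosines."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def retrieve_entities(image_emb, entity_embs, entity_names, k=3):
    """Return the k entity words whose embeddings are most similar to the image.

    image_emb:   (d,) image embedding (stand-in for a CLIP image feature)
    entity_embs: (n, d) embeddings of candidate entity words (stand-in for CLIP text features)
    """
    sims = l2_normalize(entity_embs) @ l2_normalize(image_emb)  # (n,) cosine similarities
    top = np.argsort(-sims)[:k]                                   # indices, best match first
    return [entity_names[i] for i in top], sims[top], top


def fuse_features(image_emb, entity_embs, top, top_sims, temperature=0.1):
    """Hypothetical fusion: softmax-weighted pooling of the retrieved entity
    embeddings, concatenated with the normalized image embedding."""
    logits = (top_sims - top_sims.max()) / temperature  # shift by max for numerical stability
    weights = np.exp(logits) / np.exp(logits).sum()
    pooled = weights @ l2_normalize(entity_embs[top])   # (d,) weighted mean of entity vectors
    return np.concatenate([l2_normalize(image_emb), pooled])  # (2d,) joint feature


# Toy example: orthonormal "word embeddings" and an image vector that is mostly
# aligned with "dog", partly with "frisbee", and slightly with "grass".
names = ["dog", "frisbee", "grass", "car", "kitchen"]
entity_embs = np.eye(5, 8)
image_emb = np.array([0.9, 0.5, 0.1, 0.0, 0.0, 0.0, 0.0, 0.0])

picked, sims, top = retrieve_entities(image_emb, entity_embs, names, k=2)
joint = fuse_features(image_emb, entity_embs, top, sims)
print(picked, joint.shape)  # ['dog', 'frisbee'] (16,)
```

With real CLIP features, the entity vocabulary would be much larger and k would be tuned. The joint vector then serves as the conditioning input to the caption decoder.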
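Contributions (2) and (3) name the reward terms and the two training stages but do not give their exact form. The following is a minimal sketch under our own assumptions. The alignment term follows the CLIPScore definition of [25], w · max(cos, 0) with w = 2.5. The fluency score is taken as a precomputed number in [0, 1] from some language model or discriminator. The reinforcement-learning stage uses the self-critical baseline of [16]. The weight `lam` and all function names are hypothetical; this is not the authors' published formulation.

```python
import numpy as np


def clip_alignment(image_emb, text_emb, w=2.5):
    """CLIPScore-style alignment [25]: w * max(cos(image, text), 0)."""
    cos = float(image_emb @ text_emb / (np.linalg.norm(image_emb) * np.linalg.norm(text_emb)))
    return w * max(cos, 0.0)


def joint_reward(image_emb, text_emb, fluency, lam=0.5):
    """Hypothetical joint reward: weighted sum of alignment and a fluency score in [0, 1]."""
    return lam * clip_alignment(image_emb, text_emb) + (1.0 - lam) * fluency


def self_critical_loss(sample_log_probs, sample_reward, greedy_reward):
    """REINFORCE loss with the greedy caption's reward as the baseline (SCST [16]).

    sample_log_probs: per-token log-probabilities of the sampled caption.
    """
    advantage = sample_reward - greedy_reward            # > 0 when sampling beats greedy decoding
    return -advantage * float(np.sum(sample_log_probs))  # minimizing raises the sample's likelihood if advantage > 0


# Toy embeddings: the sampled caption is closer to the image than the greedy one.
image = np.array([1.0, 0.0, 0.0])
sampled_text = np.array([0.8, 0.6, 0.0])  # cosine with image = 0.8
greedy_text = np.array([0.6, 0.8, 0.0])   # cosine with image = 0.6

r_sample = joint_reward(image, sampled_text, fluency=0.9)  # ≈ 0.5 * 2.0 + 0.5 * 0.9 = 1.45
r_greedy = joint_reward(image, greedy_text, fluency=0.7)   # ≈ 0.5 * 1.5 + 0.5 * 0.7 = 1.10
loss = self_critical_loss(np.log([0.5, 0.4, 0.8]), r_sample, r_greedy)
print(round(r_sample, 2), round(r_greedy, 2), round(loss, 3))
```

Because the baseline is the model's own greedy output, only sampled captions that score higher than what the model would produce at test time are reinforced. In a progressive scheme, this stage would typically follow a cross-entropy initialization stage.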

2. Related Work

2.1. Unpaired Image Captioning

2.2. Reinforcement Learning

3. Method

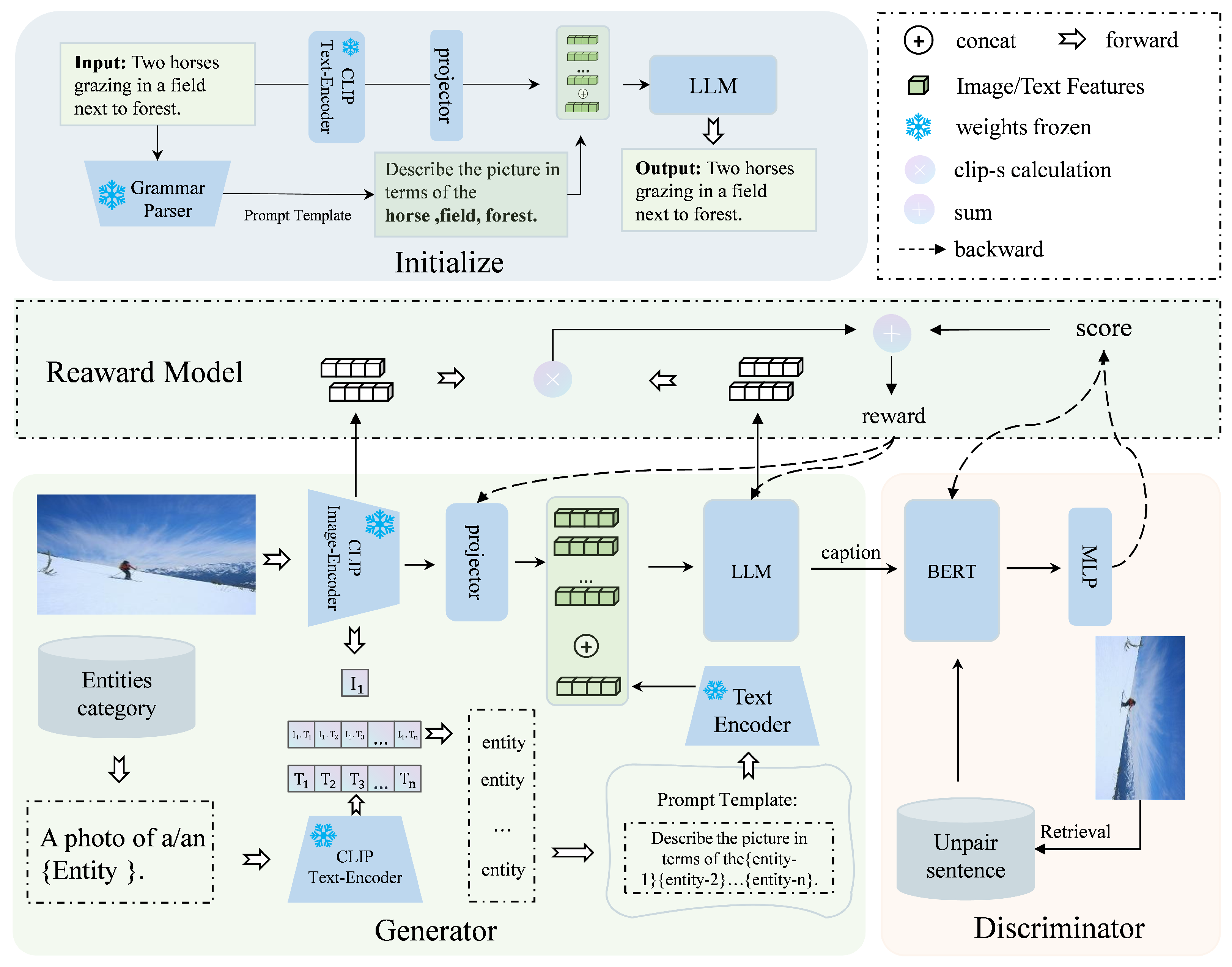

3.1. Initialization

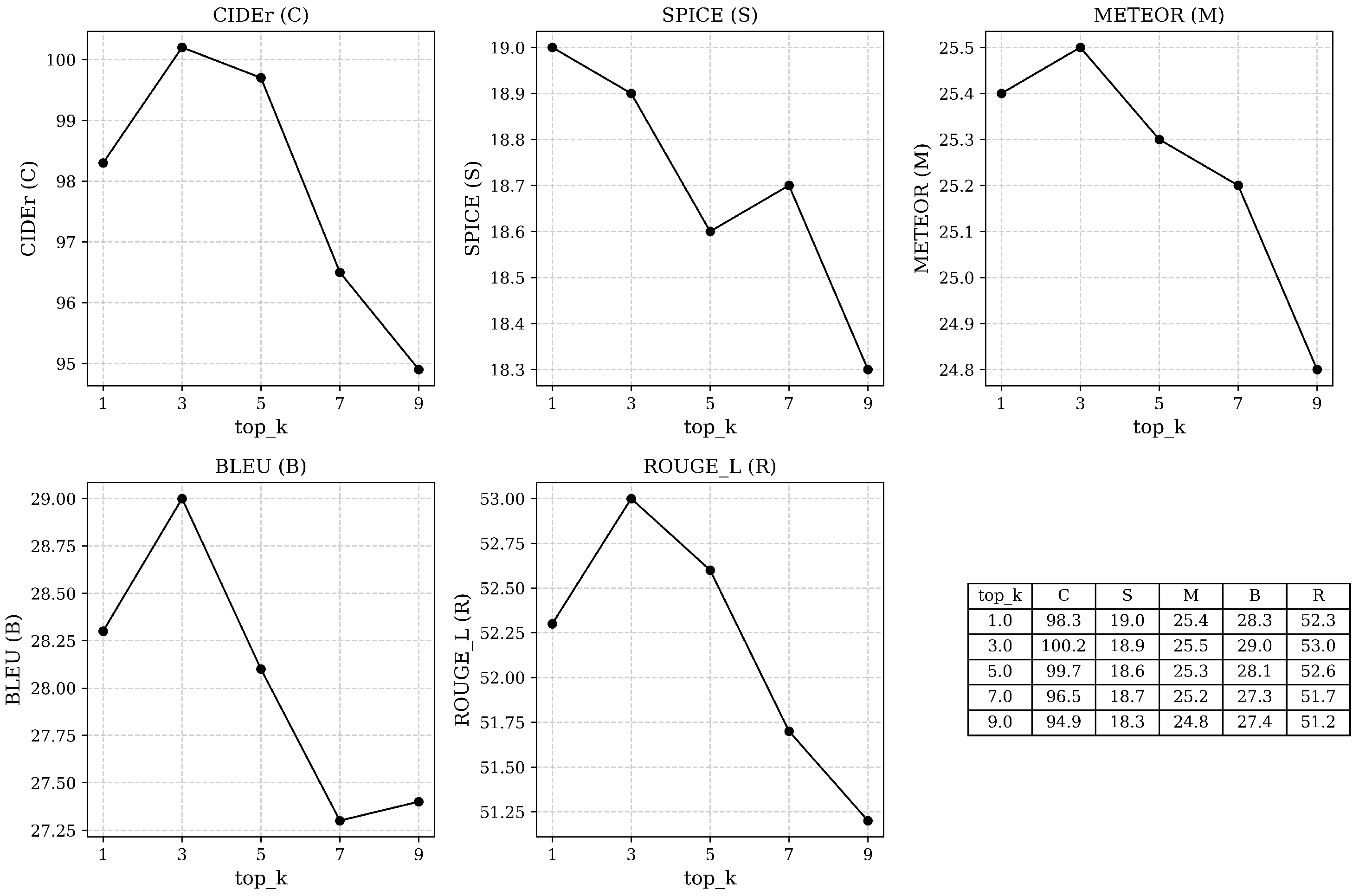

3.2. Generator

3.3. Discriminator

3.4. Reward

4. Experiments

4.1. Dataset

4.2. Evaluation Metrics

4.3. Implementation Details

4.4. Comparison on Benchmarks

4.5. Overall Performance Evaluation of the Model

4.6. Cross-Domain Generalization Performance Analysis

4.7. Ablation Studies

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Huang, L.; Wang, W.; Chen, J.; Wei, X.Y. Attention on attention for image captioning. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4634–4643.

2. Changpinyo, S.; Sharma, P.; Ding, N.; Soricut, R. Conceptual 12M: Pushing web-scale image-text pre-training to recognize long-tail visual concepts. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 3558–3568.

3. Wang, Z.; Yu, J.; Yu, A.W.; Dai, Z.; Tsvetkov, Y.; Cao, Y. SimVLM: Simple visual language model pretraining with weak supervision. arXiv 2021, arXiv:2108.10904.

4. Gu, S.; Clark, C.; Kembhavi, A. I can’t believe there’s no images! Learning visual tasks using only language supervision. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–3 October 2023; pp. 2672–2683.

5. Tewel, Y.; Shalev, Y.; Schwartz, I.; Wolf, L. ZeroCap: Zero-shot image-to-text generation for visual-semantic arithmetic. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 17918–17928.

6. Nukrai, D.; Mokady, R.; Globerson, A. Text-only training for image captioning using noise-injected CLIP. arXiv 2022, arXiv:2211.00575.

7. Li, W.; Zhu, L.; Wen, L.; Yang, Y. DeCap: Decoding CLIP latents for zero-shot captioning via text-only training. arXiv 2023, arXiv:2303.03032.

8. Qiu, L.; Ning, S.; He, X. Mining fine-grained image-text alignment for zero-shot captioning via text-only training. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 4605–4613.

9. Fei, J.; Wang, T.; Zhang, J.; He, Z.; Wang, C.; Zheng, F. Transferable decoding with visual entities for zero-shot image captioning. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–3 October 2023; pp. 3136–3146.

10. Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 8748–8763.

11. Feng, Y.; Ma, L.; Liu, W.; Luo, J. Unsupervised Image Captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 4125–4134.

12. Zhu, P.; Wang, X.; Luo, Y.; Sun, Z.; Zheng, W.S.; Wang, Y.; Chen, C. Unpaired image captioning by image-level weakly-supervised visual concept recognition. IEEE Trans. Multimed. 2022, 25, 6702–6716.

13. Ben, H.; Pan, Y.; Li, Y.; Yao, T.; Hong, R.; Wang, M.; Mei, T. Unpaired image captioning with semantic-constrained self-learning. IEEE Trans. Multimed. 2021, 24, 904–916.

14. Ben, H.; Wang, S.; Wang, M.; Hong, R. Pseudo Content Hallucination for Unpaired Image Captioning. In Proceedings of the 2024 International Conference on Multimedia Retrieval (ICMR), Phuket, Thailand, 10–14 June 2024; pp. 320–329.

15. Su, Y.; Lan, T.; Liu, Y.; Liu, F.; Yogatama, D.; Wang, Y.; Kong, L.; Collier, N. Language models can see: Plugging visual controls in text generation. arXiv 2022, arXiv:2205.02655.

16. Rennie, S.J.; Marcheret, E.; Mroueh, Y.; Ross, J.; Goel, V. Self-critical sequence training for image captioning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 7008–7024.

17. Vedantam, R.; Zitnick, C.L.; Parikh, D. CIDEr: Consensus-based image description evaluation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 4566–4575.

18. Karpathy, A.; Fei-Fei, L. Deep visual-semantic alignments for generating image descriptions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3128–3137.

19. Banerjee, S.; Lavie, A. METEOR: An automatic metric for MT evaluation with improved correlation with human judgments. In Proceedings of the ACL Workshop on Intrinsic and Extrinsic Evaluation Measures for Machine Translation and/or Summarization, Ann Arbor, MI, USA, 29 June 2005; pp. 65–72.

20. Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A robustly optimized BERT pretraining approach. arXiv 2019, arXiv:1907.11692.

21. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

22. Mao, A.; Mohri, M.; Zhong, Y. Cross-entropy loss functions: Theoretical analysis and applications. In Proceedings of the International Conference on Machine Learning, PMLR, Honolulu, HI, USA, 23–29 July 2023; pp. 23803–23828.

23. Yu, J.; Li, H.; Hao, Y.; Zhu, B.; Xu, T.; He, X. CgT-GAN: CLIP-guided text GAN for image captioning. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 2252–2263.

24. Zhang, L.; Sung, F.; Liu, F.; Xiang, T.; Gong, S.; Yang, Y.; Hospedales, T.M. Actor-critic sequence training for image captioning. arXiv 2017, arXiv:1706.09601.

25. Hessel, J.; Holtzman, A.; Forbes, M.; Bras, R.L.; Choi, Y. CLIPScore: A reference-free evaluation metric for image captioning. arXiv 2021, arXiv:2104.08718.

26. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

27. Plummer, B.A.; Wang, L.; Cervantes, C.M.; Caicedo, J.C.; Hockenmaier, J.; Lazebnik, S. Flickr30k Entities: Collecting region-to-phrase correspondences for richer image-to-sentence models. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 2641–2649.

28. Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. BLEU: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 6–12 July 2002; pp. 311–318.

29. Lin, C.Y. ROUGE: A package for automatic evaluation of summaries. In Proceedings of the Workshop on Text Summarization Branches Out, Barcelona, Spain, 25–26 July 2004; pp. 74–81.

30. Anderson, P.; Fernando, B.; Johnson, M.; Gould, S. SPICE: Semantic propositional image caption evaluation. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 382–398.

31. Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9.

32. Zhu, P.; Wang, X.; Zhu, L.; Sun, Z.; Zheng, W.S.; Wang, Y.; Chen, C. Prompt-based learning for unpaired image captioning. IEEE Trans. Multimed. 2023, 26, 379–393.

33. Yu, Y.; Chung, J.; Yun, H.; Hessel, J.; Park, J.; Lu, X.; Ammanabrolu, P.; Zellers, R.; Le Bras, R.; Kim, G.; et al. Multimodal knowledge alignment with reinforcement learning. arXiv 2022, arXiv:2205.12630.

B-4: BLEU-4 [28]; M: METEOR [19]; R: ROUGE-L [29]; C: CIDEr [17]; S: SPICE [30].

| Method | B-4 | M | R | C | S |
|---|---|---|---|---|---|
| ZeroCap [5] | 7.0 | 15.4 | - | 34.5 | 9.2 |
| MAGIC [15] | 12.9 | 17.4 | - | 49.3 | - |
| UIC-GAN [11] | 18.6 | 17.9 | 43.1 | 54.9 | 11.1 |
| WS-UIC [12] | 22.6 | 20.9 | 46.6 | 69.2 | 14.1 |
| MacCap [8] | 17.4 | 22.3 | 45.9 | 69.7 | 15.7 |
| SCS [13] | 22.8 | 21.4 | 47.7 | 74.7 | 15.1 |
| PCH [14] | 24.2 | 22.5 | 48.8 | 77.2 | 16.3 |
| PL-UIC [32] | 25.0 | 22.6 | 49.4 | 77.9 | 15.1 |
| ESPER-Style [33] | 21.9 | 21.9 | - | 78.2 | - |
| DeCap [7] | 24.7 | 25.0 | - | 91.2 | 18.7 |
| CapDec [6] | 26.4 | 25.1 | - | 91.8 | 18.2 |
| ViECap [9] | 27.2 | 24.8 | - | 92.9 | 18.2 |
| CMSA (Ours) | 29.0 | 25.5 | 53.0 | 100.2 | 18.9 |

| Method | B-4 | M | R | C | S |
|---|---|---|---|---|---|
| ZeroCap [5] | 5.4 | 11.8 | - | 16.8 | 6.2 |
| MAGIC [15] | - | - | - | - | - |
| UIC-GAN [11] | 10.8 | 14.2 | 33.4 | 15.4 | - |
| WS-UIC [12] | - | - | - | - | - |
| MacCap [8] | - | - | - | - | - |
| SCS [13] | 14.3 | 15.6 | 38.5 | 20.5 | - |
| PCH [14] | - | - | - | - | - |
| PL-UIC [32] | 21.4 | 20.1 | - | 8.8 | 13.6 |
| ESPER-Style [33] | - | - | - | - | - |
| DeCap [7] | 21.2 | 21.8 | - | 56.7 | 15.2 |
| CapDec [6] | 17.7 | 20.0 | 43.9 | 39.1 | - |
| ViECap [9] | 21.4 | 20.1 | - | 47.9 | 13.6 |
| CMSA (Ours) | 22.7 | 21.3 | 48.3 | 58.2 | 14.5 |

| Method | B-4 | M | R | C | S |
|---|---|---|---|---|---|
| MAGIC [15] | 5.2 | 12.5 | - | 18.3 | 5.7 |
| DeCap [7] | 12.1 | 18.0 | - | 44.4 | 10.9 |
| CapDec [6] | 9.2 | 16.3 | - | 27.3 | 17.2 |
| ViECap [9] | 12.6 | 19.3 | - | 54.2 | 12.5 |
| CMSA (Ours) | 21.8 | 20.9 | 47.8 | 60.8 | 14.5 |

| Method | B-4 | M | R | C | S |
|---|---|---|---|---|---|
| MAGIC [15] | 6.2 | 12.2 | - | 17.5 | 5.9 |
| DeCap [7] | 16.3 | 17.9 | - | 35.7 | 11.1 |
| CapDec [6] | 17.3 | 18.6 | - | 35.7 | - |
| ViECap [9] | 17.4 | 18.0 | - | 38.4 | 11.2 |
| CMSA (Ours) | 17.4 | 17.7 | 42.3 | 39.2 | 13.8 |

| Modules (I-F, T-F, C-R, B-R) | B-4 | M | R | C | S |
|---|---|---|---|---|---|
| ✓ ✓ ✓ | 23.1 | 20.8 | 47.3 | 82.5 | 14.2 |
| ✓ ✓ ✓ | 26.7 | 23.1 | 49.5 | 85.4 | 15.8 |
| ✓ ✓ ✓ | 25.9 | 24.3 | 51.2 | 78.6 | 17.2 |
| ✓ ✓ ✓ ✓ | 29.0 | 25.5 | 53.0 | 100.2 | 18.9 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, Y.; Zhou, K.; Ren, G. Unpaired Image Captioning via Cross-Modal Semantic Alignment. Appl. Sci. 2025, 15, 11588. https://doi.org/10.3390/app152111588
