From Benchmarking to Optimisation: A Comprehensive Study of Aircraft Component Segmentation for Apron Safety Using YOLOv8-Seg

Abstract

1. Introduction

1.1. Motivation and Research Questions

- What are the essential characteristics of a benchmark dataset designed to effectively train and validate deep learning models for the high-fidelity detection of individual aircraft components in diverse apron environments?

- How do state-of-the-art object detection and segmentation architectures compare in terms of accuracy, computational efficiency, and practical robustness when systematically benchmarked for aircraft component identification?

- What constitutes a systematic optimisation framework for a state-of-the-art segmentation model (YOLOv8-Seg) to enhance its performance for the specific demands of apron safety, and what is the quantifiable and qualitative impact of such a framework?

1.2. Key Contributions

- We developed and publicly released a novel hybrid dataset of 1112 images featuring detailed, pixel-level annotations for five critical aircraft components. This resource directly addresses the critical gap of coarse-labelled and proprietary datasets in aviation research, enabling reproducible and fine-grained analysis.

- We conducted a systematic benchmark of twelve state-of-the-art detection and segmentation models, spanning three distinct architectural paradigms. This analysis provides a definitive performance comparison using critical metrics (mAP, Recall, F1-Score, FPS), establishing a clear hierarchy of model suitability for apron safety.

- We introduce a systematic and reproducible optimisation framework for YOLOv8-Seg. The framework is validated through an eight-step ablation study that first quantifies the individual impact of each technique, from data augmentation to architectural scaling, and then demonstrates the cumulative effect of combining the most effective strategies. The final optimised model achieves a gain of 8.04 percentage points (p.p.) in mAP@0.5:0.95 and enhanced robustness, bridging the gap between benchmark accuracy and operational reliability.

1.3. Structure of the Paper

2. Related Work

3. Methodology

3.1. Dataset Development

3.2. Selection of Deep Learning Models

3.3. Experimental Configuration for Model Comparison

3.4. Performance Evaluation Metrics
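
As a minimal sketch of the overlap measure that underlies the mAP metrics reported in this study, the snippet below computes IoU for two axis-aligned boxes and lists the ten IoU thresholds conventionally averaged for mAP@0.5:0.95 in COCO-style evaluation. The function name `box_iou` and the `(x1, y1, x2, y2)` box layout are illustrative assumptions, not the evaluation code used in the paper.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    # Corners of the intersection rectangle (may be empty).
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# IoU thresholds averaged in mAP@0.5:0.95: 0.50, 0.55, ..., 0.95.
COCO_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]
```

A prediction counts as a true positive at a given threshold only if its IoU with a matching ground-truth instance reaches that threshold, which is why mAP@0.5:0.95 is a stricter measure than mAP@0.5.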

3.5. Methodological Validation: Statistical and Visual Analysis

- Statistical evaluation through k-fold cross-validation; and

- Quantitative failure mode and qualitative visual analysis under various apron scenarios.

3.5.1. Statistical Evaluation Using K-Fold Cross-Validation

3.5.2. Quantitative Failure Mode and Visual Scenario-Based Analysis

3.6. Systematic Optimisation Framework for YOLOv8-Seg

- Loss Function Modification: To mitigate the class imbalance between large fuselages and smaller components (wings, tails, and noses), a custom function, v8WeightedSegmentationLoss, was used in place of the standard loss function. It combines class-weighted cross-entropy with geometric terms such as IoU and Dice to improve boundary accuracy on elongated structures such as wings. All other hyperparameters were kept constant.

- Inference Efficiency Optimisation: In this step, YOLOv8-Seg inference was run under torch.inference_mode(). Gradient calculations were thereby disabled during forward propagation to improve computational efficiency at deployment. This eliminated unnecessary computation during feature extraction, with the aim of reducing memory usage and latency so that the selected model can perform faster, more resource-efficient real-time inference for apron surveillance.

- Mixed-Precision Computing: In this optimisation step, we enabled Automatic Mixed Precision (AMP) via the torch.cuda.amp.autocast() context manager. This allowed some operations to run in FP16 while keeping precision-critical operations in FP32, with the aim of reducing memory usage and speeding up inference. In addition, a callback mechanism was added to the prediction function.

- Increasing Input Resolution: In this step, only the model’s input resolution was changed, while all other hyperparameters were held constant. The input size was increased from 640 × 640 pixels to 1024 × 1024 pixels. This adjustment was intended to enable the model to process finer spatial details of components such as the fuselage, wings, and tail. It was anticipated that using higher resolution would enable the convolution layers to capture more local texture and boundary information, particularly for thin and long geometric structures like wings.

- Epoch Count Adjustment: In this control experiment, only the training duration was changed. The epoch count was increased from 130 to 170 to give the network more iterations for weight optimisation. The aim was for the model to learn more complex spatial patterns more reliably across different classes and lighting conditions. To prevent overfitting, the early stopping and validation-based monitoring mechanisms were retained, so the longer training schedule served solely to enhance representation learning. To measure the individual impact of this change, all other parameters (resolution, learning rate, etc.) were kept the same as in the baseline model.

- Adjusting the Learning Rate: In this step, the learning rate (LR) was slightly adjusted from 0.00111 to 0.001 to examine its impact on the final performance of the model. This minor modification tested the sensitivity of the model’s training dynamics to small variations in the learning rate and whether a slightly lower rate yields a more stable weight update trajectory. This experiment was also run in isolation, with all other hyperparameters unchanged.

- Model Scaling: The YOLOv8-Seg model was scaled from its nano configuration to a larger version. This step increased the model’s depth and parameter count. This scaling allowed the model to handle more complex geometric features and generate more detailed segmentation masks for aircraft components.

- Data Augmentation and Expansion: The dataset was expanded 3.5-fold through augmentation techniques applied exclusively to the training split, as detailed in Table 4. This expansion is intended to make feature learning more robust while maintaining evaluation integrity, as the validation and test sets contained no augmented samples.
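
The loss modification in the first step above can be illustrated with a small, dependency-free sketch. The source does not give the implementation of v8WeightedSegmentationLoss, so everything below is an assumption made for illustration: the function names, the class weights, and the equal weighting of the Dice and IoU terms are hypothetical. The sketch only shows how a class-weighted cross-entropy term can be combined with soft Dice and soft IoU terms on a flattened mask.

```python
import math


def weighted_bce(pred, target, weight, eps=1e-7):
    """Class-weighted binary cross-entropy, averaged over mask pixels."""
    total = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total -= t * math.log(p) + (1 - t) * math.log(1.0 - p)
    return weight * total / len(pred)


def soft_dice_loss(pred, target, eps=1e-6):
    """1 - Dice coefficient computed on soft (probabilistic) masks."""
    inter = sum(p * t for p, t in zip(pred, target))
    return 1.0 - (2.0 * inter + eps) / (sum(pred) + sum(target) + eps)


def soft_iou_loss(pred, target, eps=1e-6):
    """1 - IoU computed on soft (probabilistic) masks."""
    inter = sum(p * t for p, t in zip(pred, target))
    union = sum(pred) + sum(target) - inter
    return 1.0 - (inter + eps) / (union + eps)


# Hypothetical weights: smaller components weighted above the fuselage.
CLASS_WEIGHTS = {"fuselage": 1.0, "wing": 2.0, "tail": 2.0, "nose": 2.0}


def weighted_seg_loss(pred, target, cls, w_dice=1.0, w_iou=1.0):
    """Sum of class-weighted BCE and the two overlap-based terms."""
    return (
        weighted_bce(pred, target, CLASS_WEIGHTS[cls])
        + w_dice * soft_dice_loss(pred, target)
        + w_iou * soft_iou_loss(pred, target)
    )
```

With these assumed weights, the same pixel-level error costs more on a wing mask than on a fuselage mask, which is the intended effect of class weighting under imbalance.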

4. Results

4.1. Model Performance Comparison

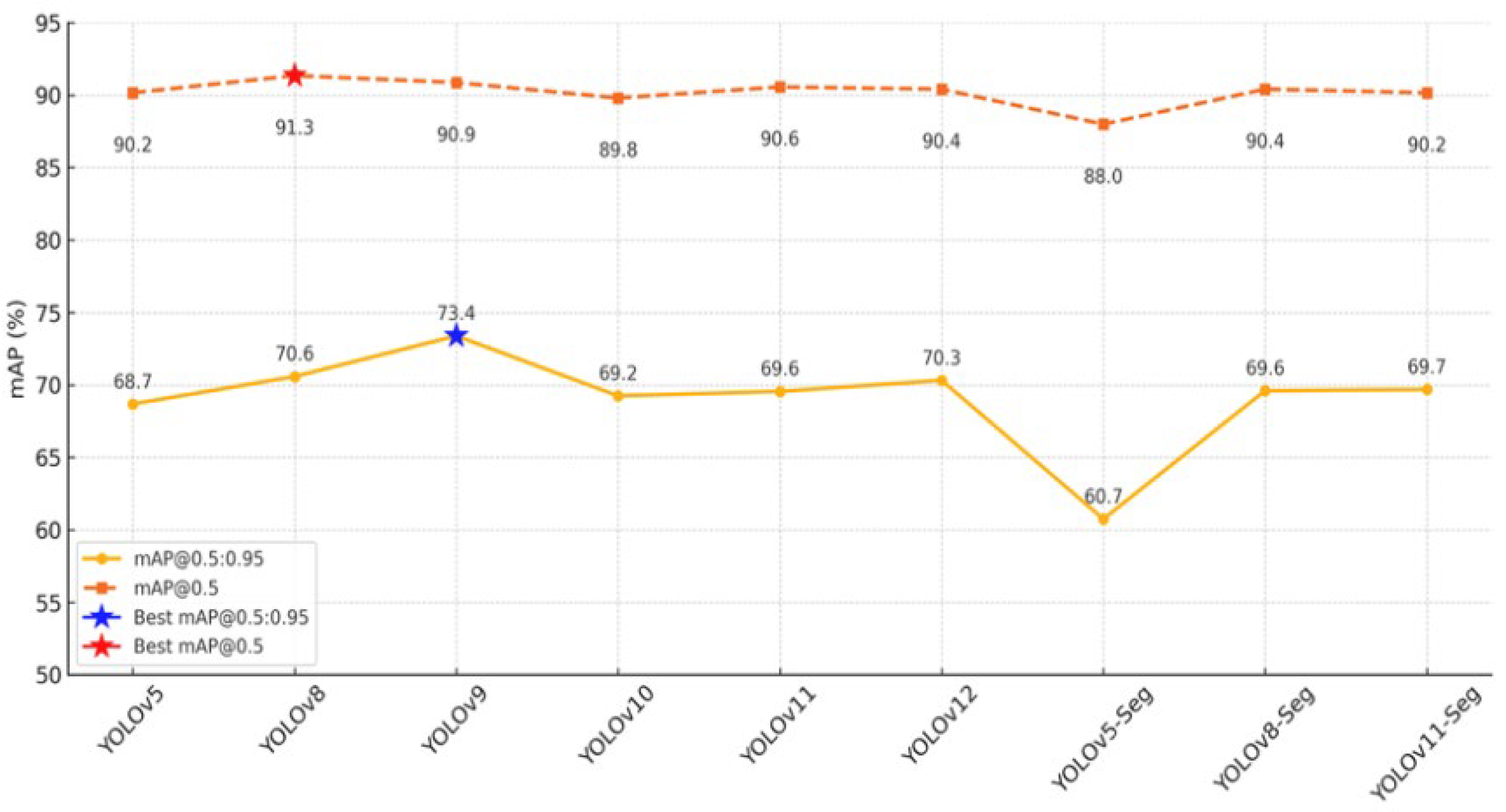

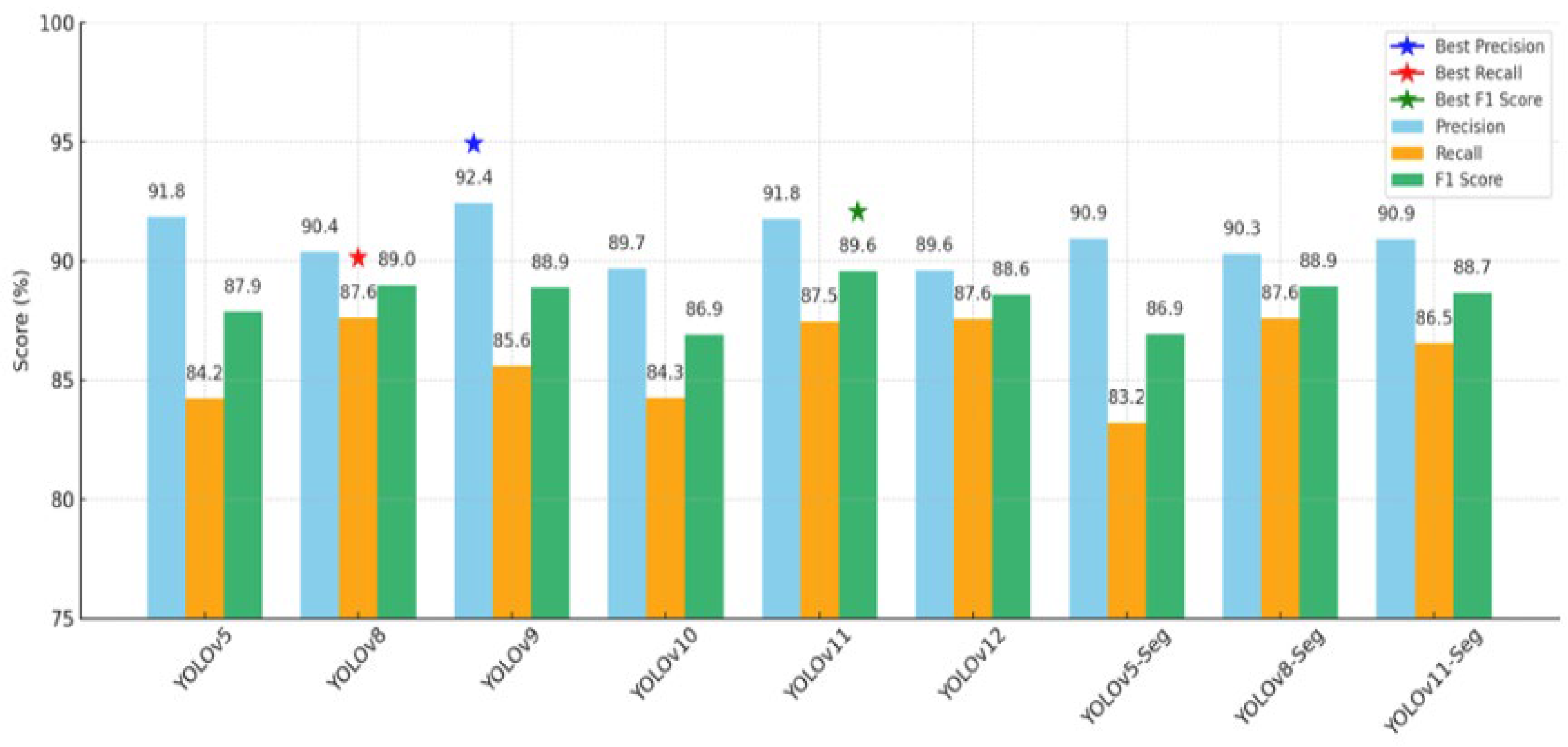

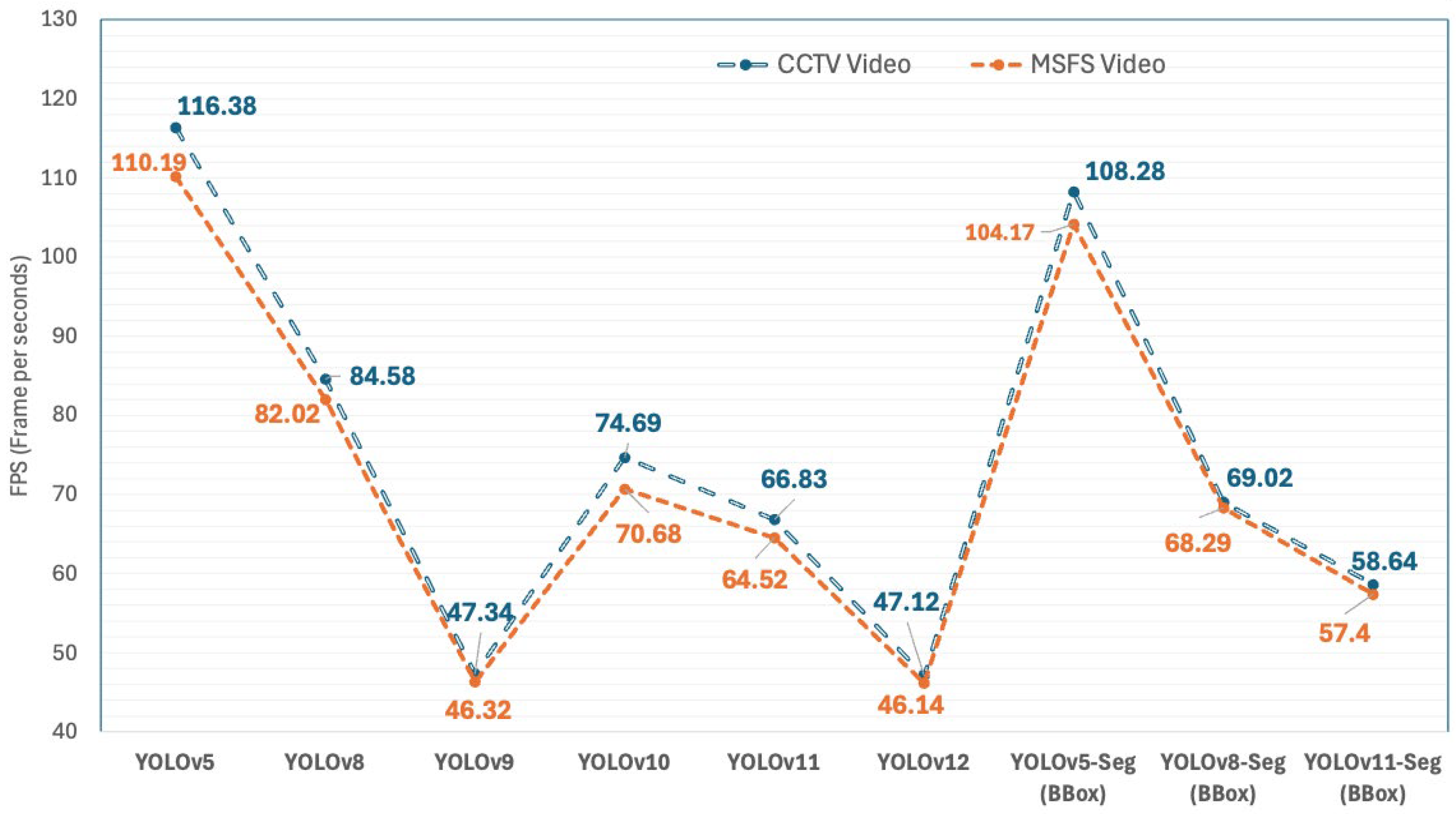

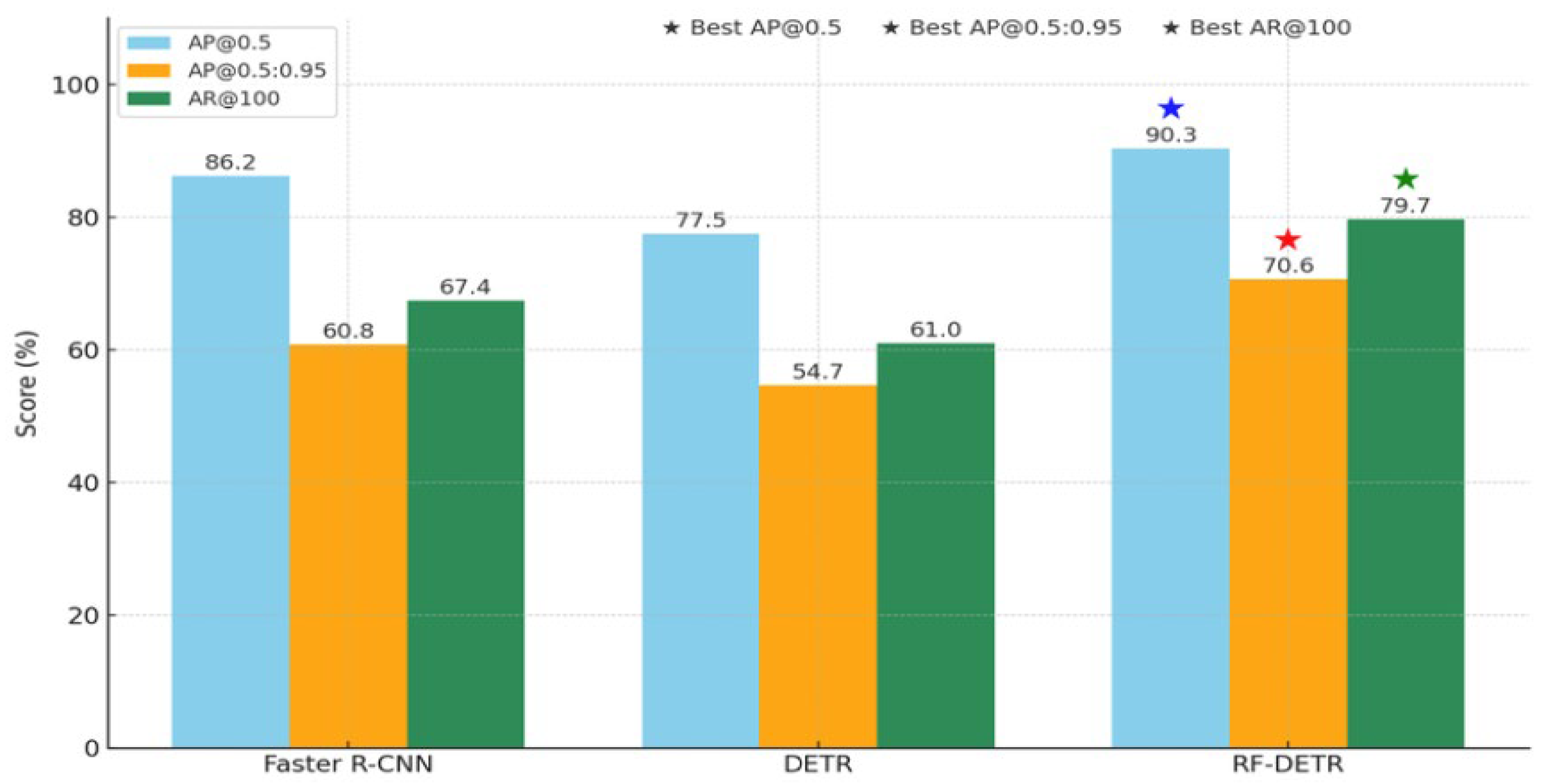

4.1.1. Performance of the Evaluated Models

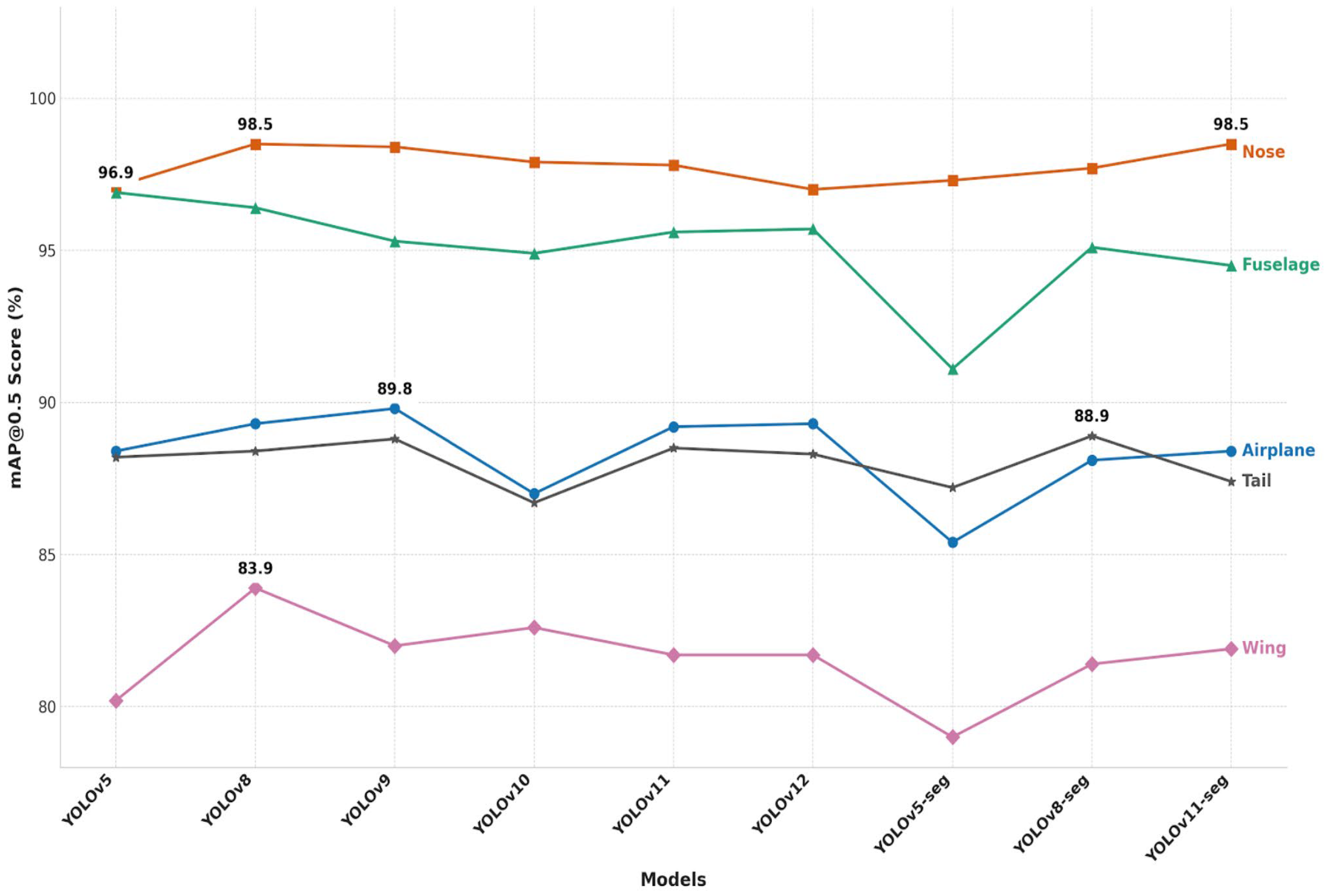

4.1.2. Class-Specific BBox Accuracy

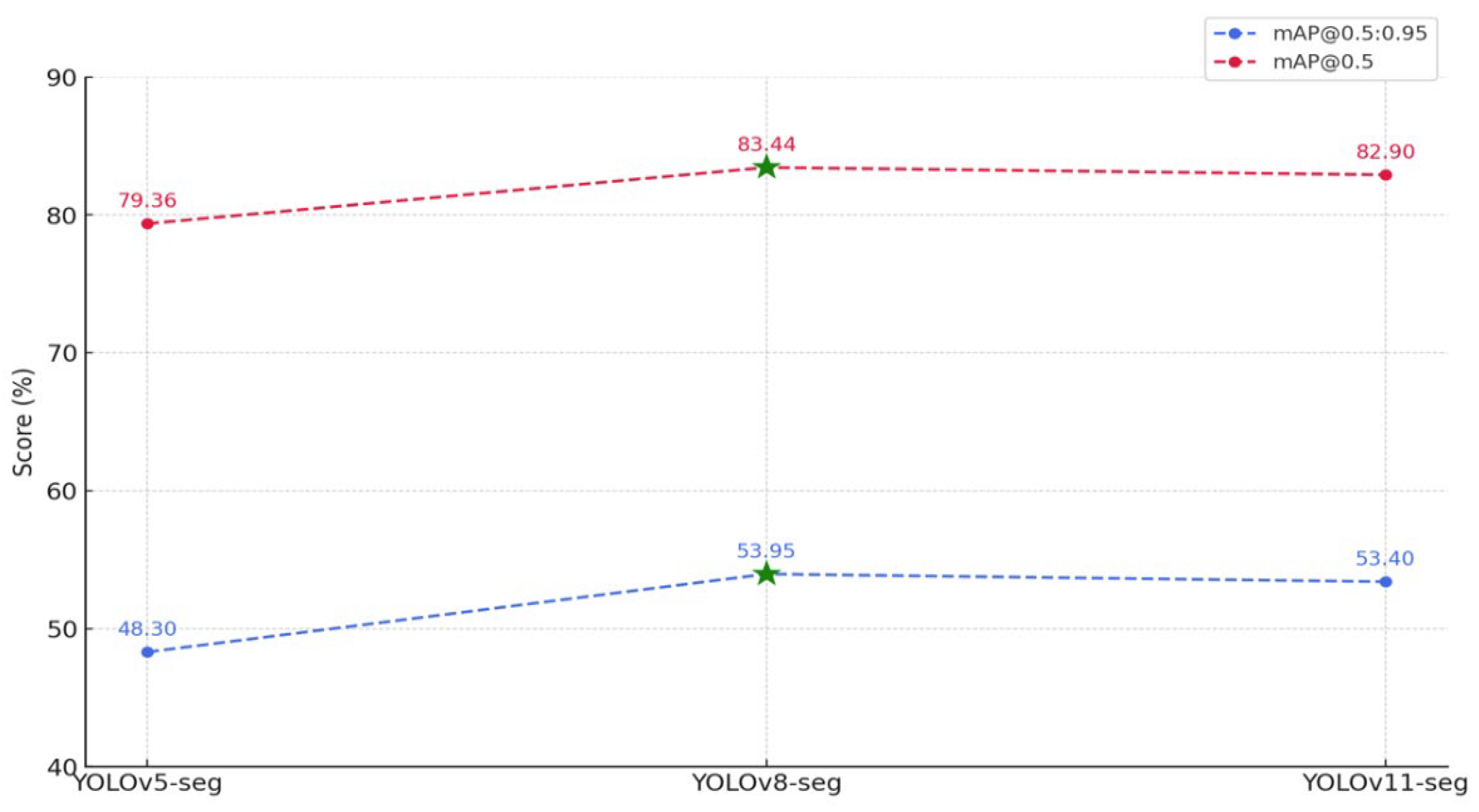

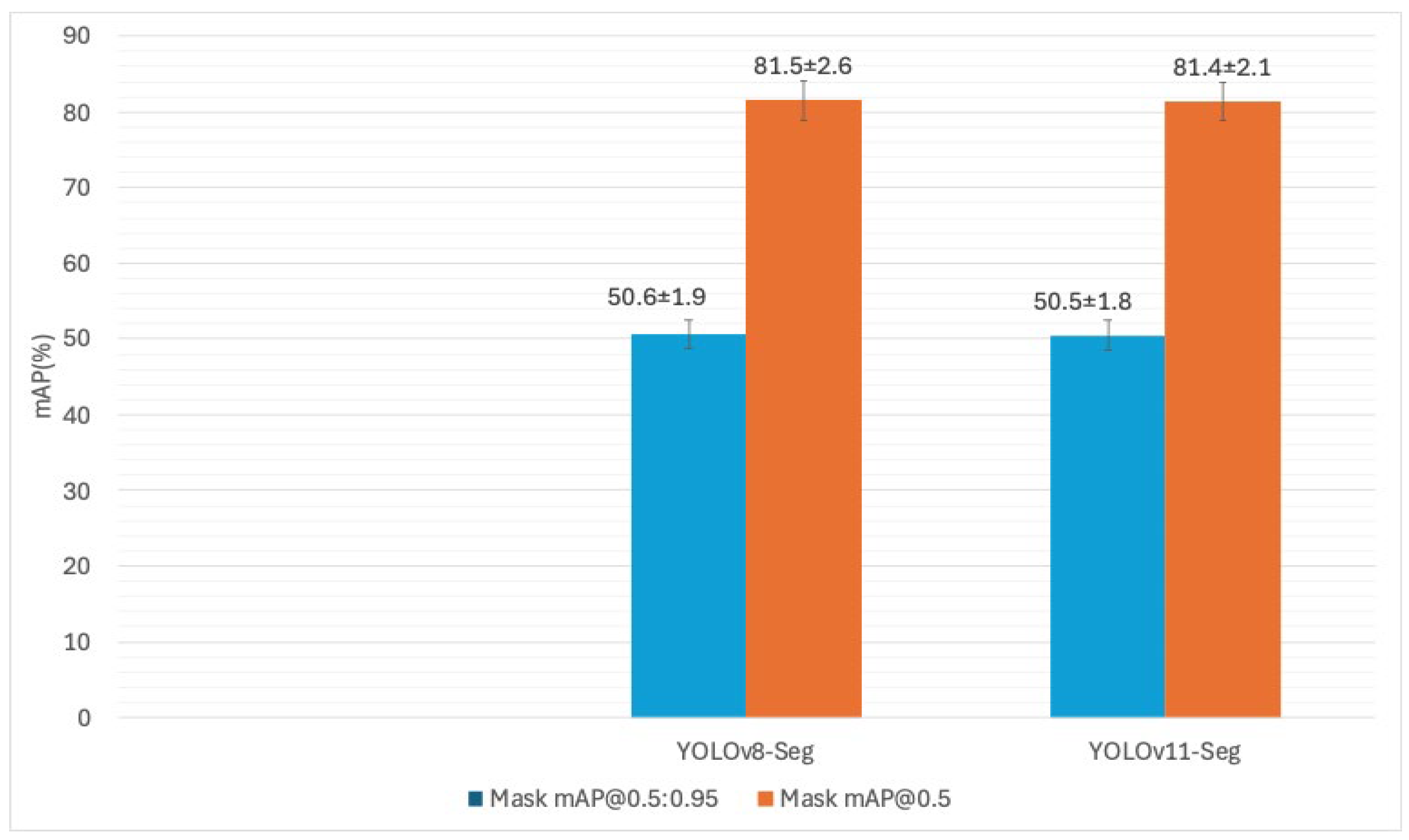

4.1.3. Mask Performance of Segmentation Models

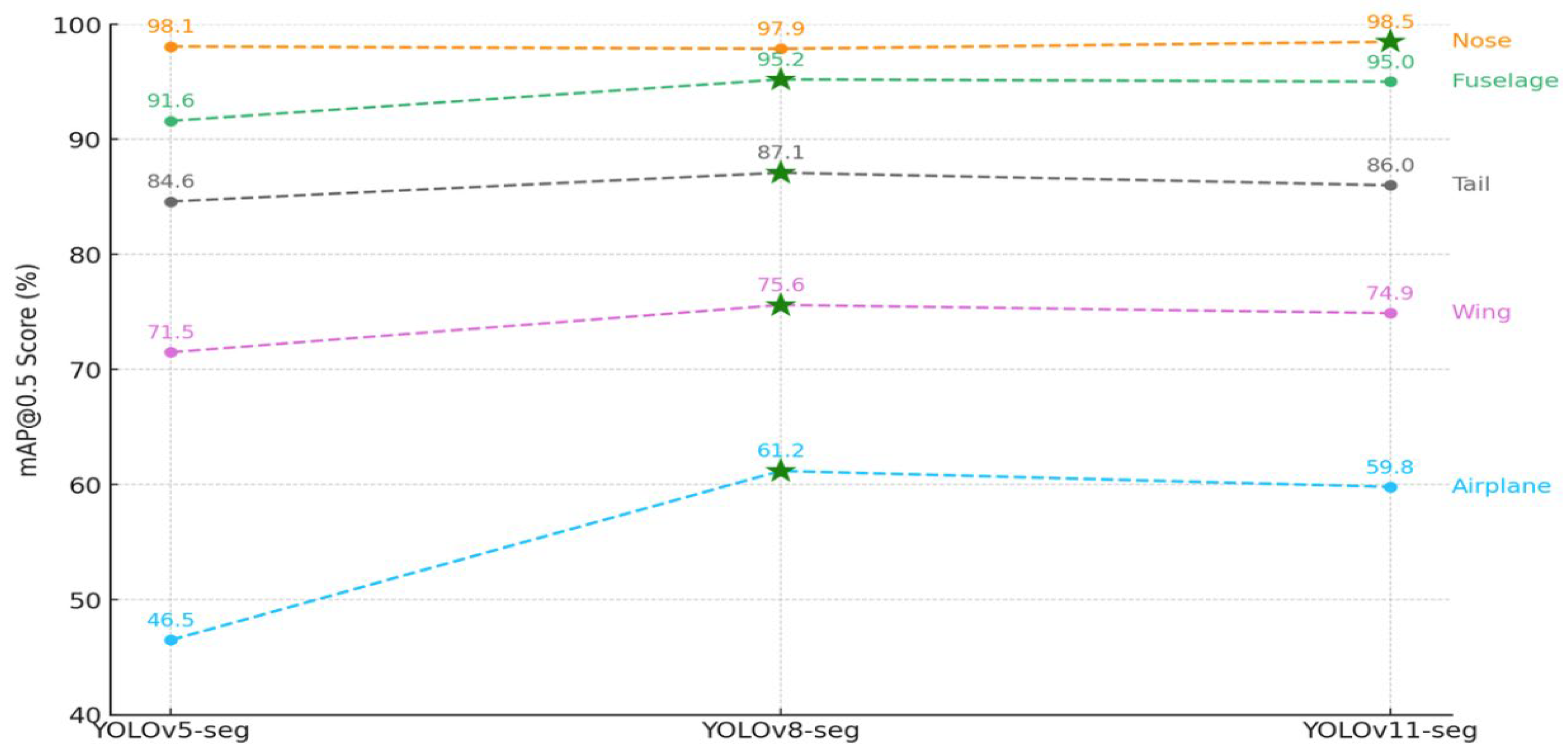

4.1.4. Class-Specific Segmentation Mask Accuracy

4.1.5. Failure Mode Analysis: FP/FN Comparison on Wing and Tail Classes

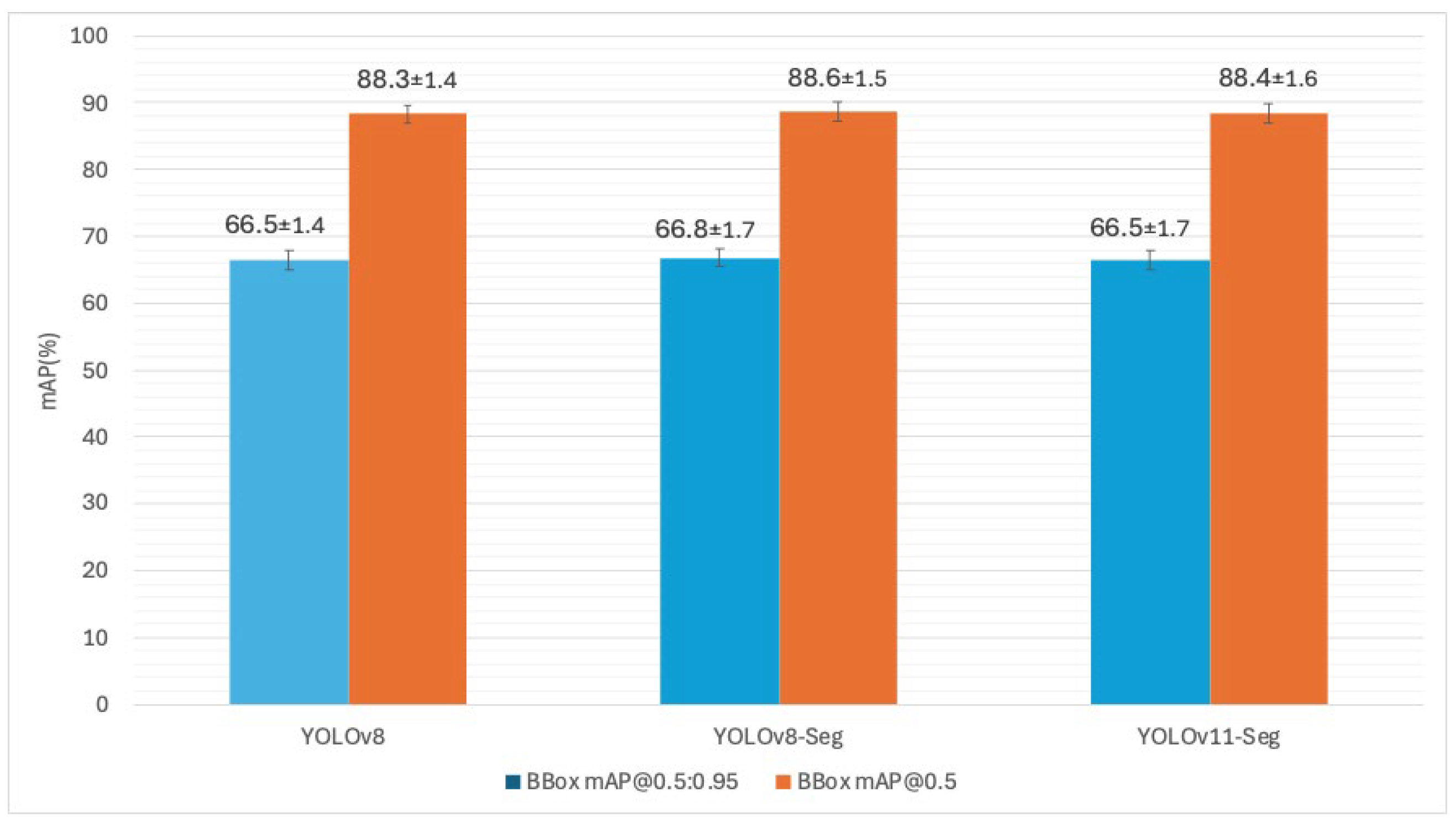

4.1.6. Statistical Robustness Analysis via Repeated 10-Fold Cross-Validation

4.1.7. Qualitative Performance Evaluation in Challenging Apron Scenarios

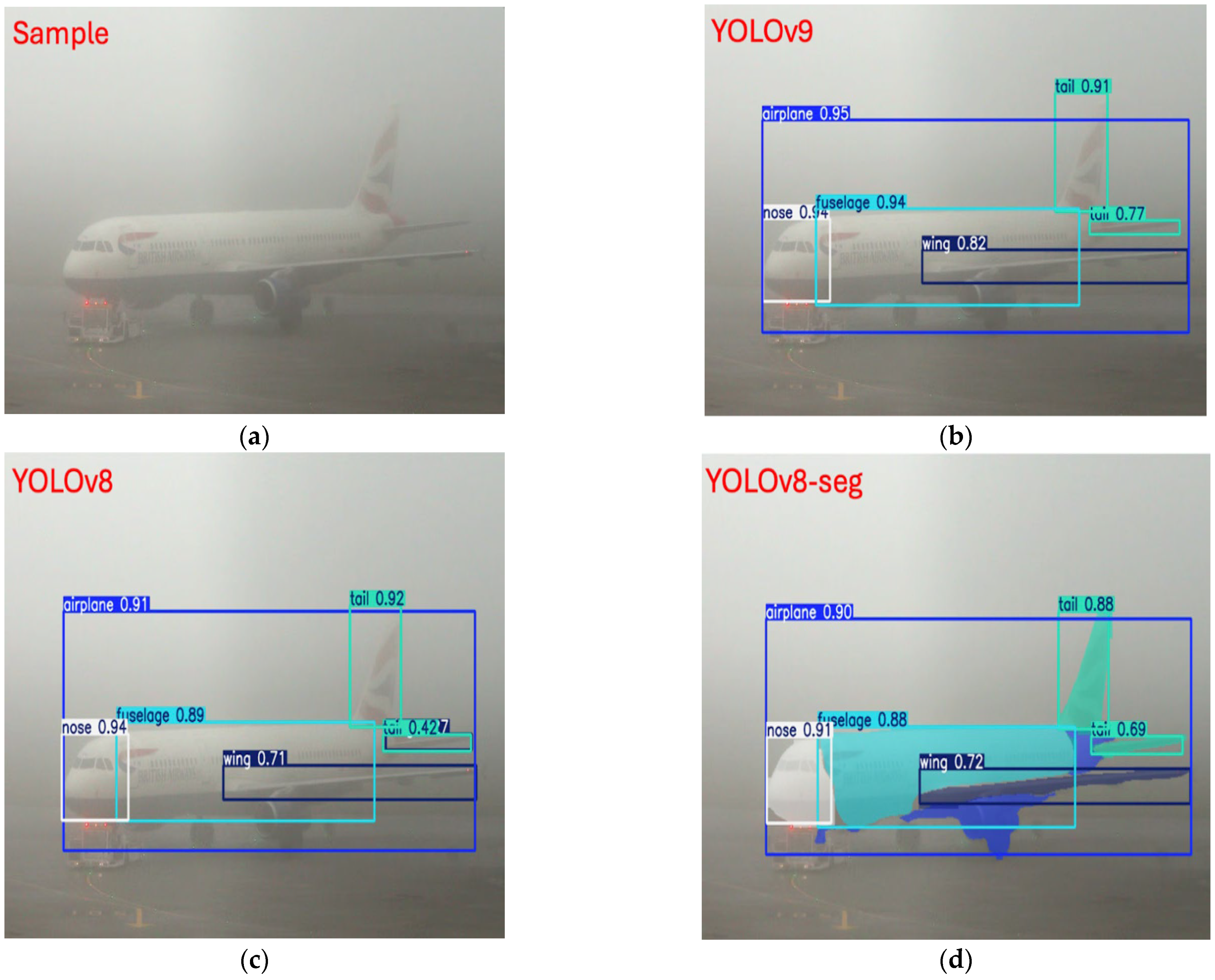

Scenario 1: Detection Under Foggy Apron Conditions

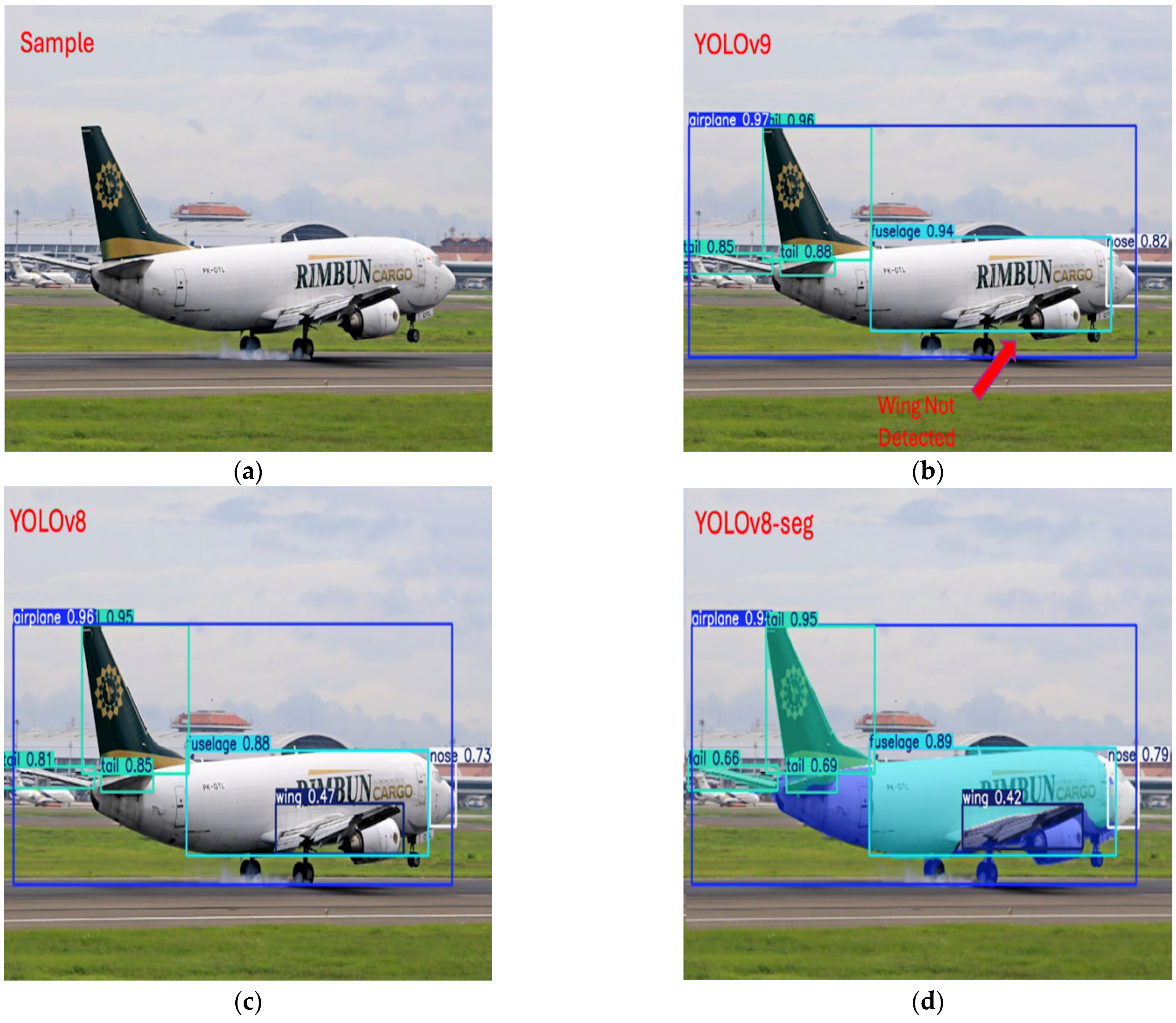

Scenario 2: Detection Under Clear Visibility Conditions

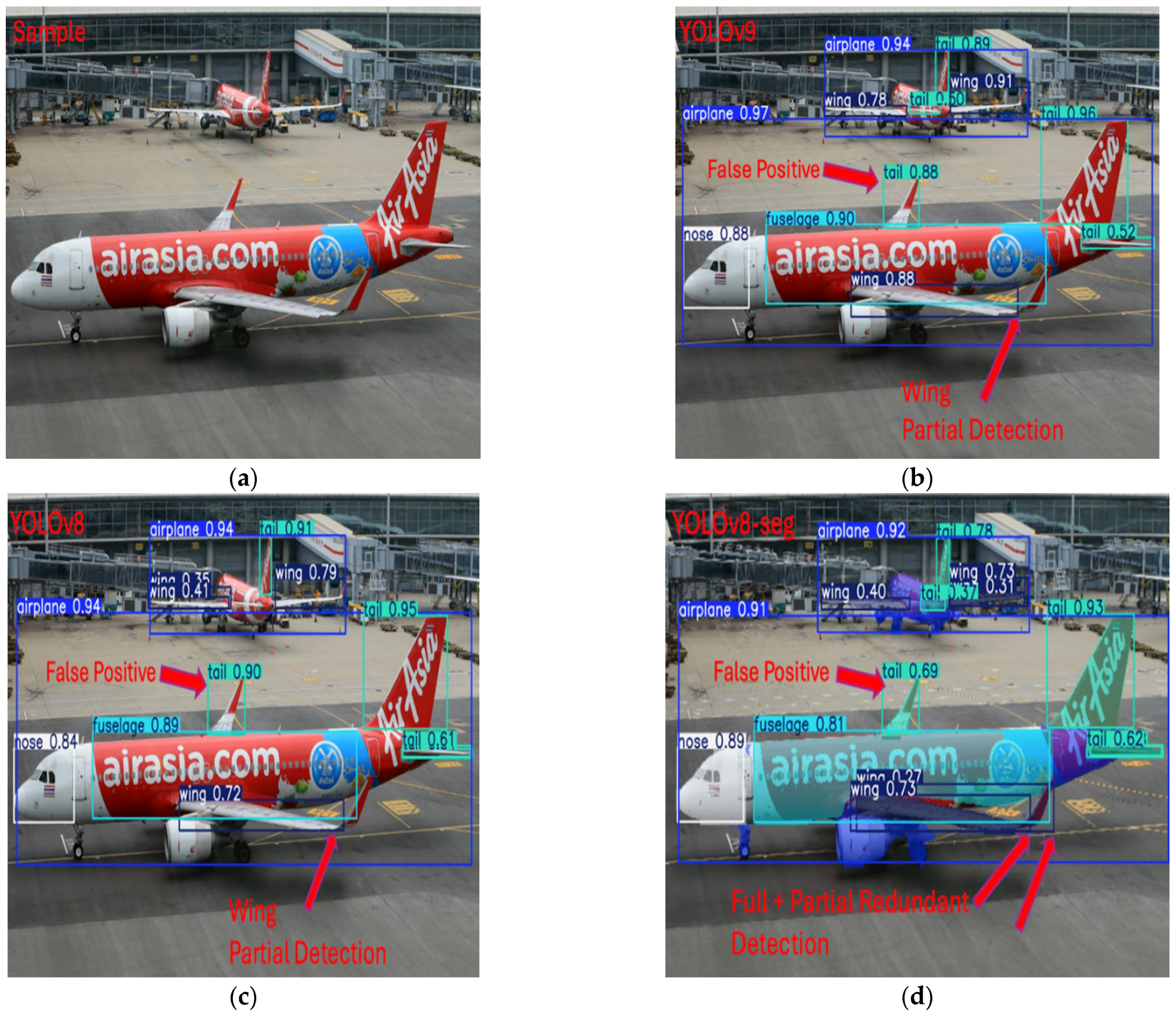

Scenario 3: Detection Under Complex Background and Geometric Challenges

4.2. Ablation Study on YOLOv8-Seg
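
The one-factor-at-a-time design of the isolated ablation steps can be sketched as a set of configuration overrides. The values come from Section 3.6 (input size 640 to 1024, epochs 130 to 170, learning rate 0.00111 to 0.001). The key names `imgsz`, `epochs`, and `lr0` follow Ultralytics training-argument naming and are assumed here to match the authors' setup; the step names and the helper `build_run_configs` are hypothetical.

```python
# Baseline settings as stated in Section 3.6.
BASELINE = {"imgsz": 640, "epochs": 130, "lr0": 0.00111}

# Each isolated ablation step overrides exactly one baseline setting.
ISOLATED_STEPS = {
    "input_resolution": {"imgsz": 1024},
    "epoch_count": {"epochs": 170},
    "learning_rate": {"lr0": 0.001},
}


def build_run_configs(baseline, steps):
    """Return one full config per step, differing from baseline in one key."""
    return {name: {**baseline, **override} for name, override in steps.items()}


configs = build_run_configs(BASELINE, ISOLATED_STEPS)
```

Keeping every other key at its baseline value is what allows a change in mAP to be attributed to the single modified parameter.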

4.2.1. Bounding-Box Detection Performance After Optimisation

4.2.2. Segmentation Performance After Optimisation

4.2.3. Class-Specific Segmentation Mask Accuracy After Optimisation

4.2.4. Comparative Summary of Baseline and Optimised YOLOv8-Seg Models

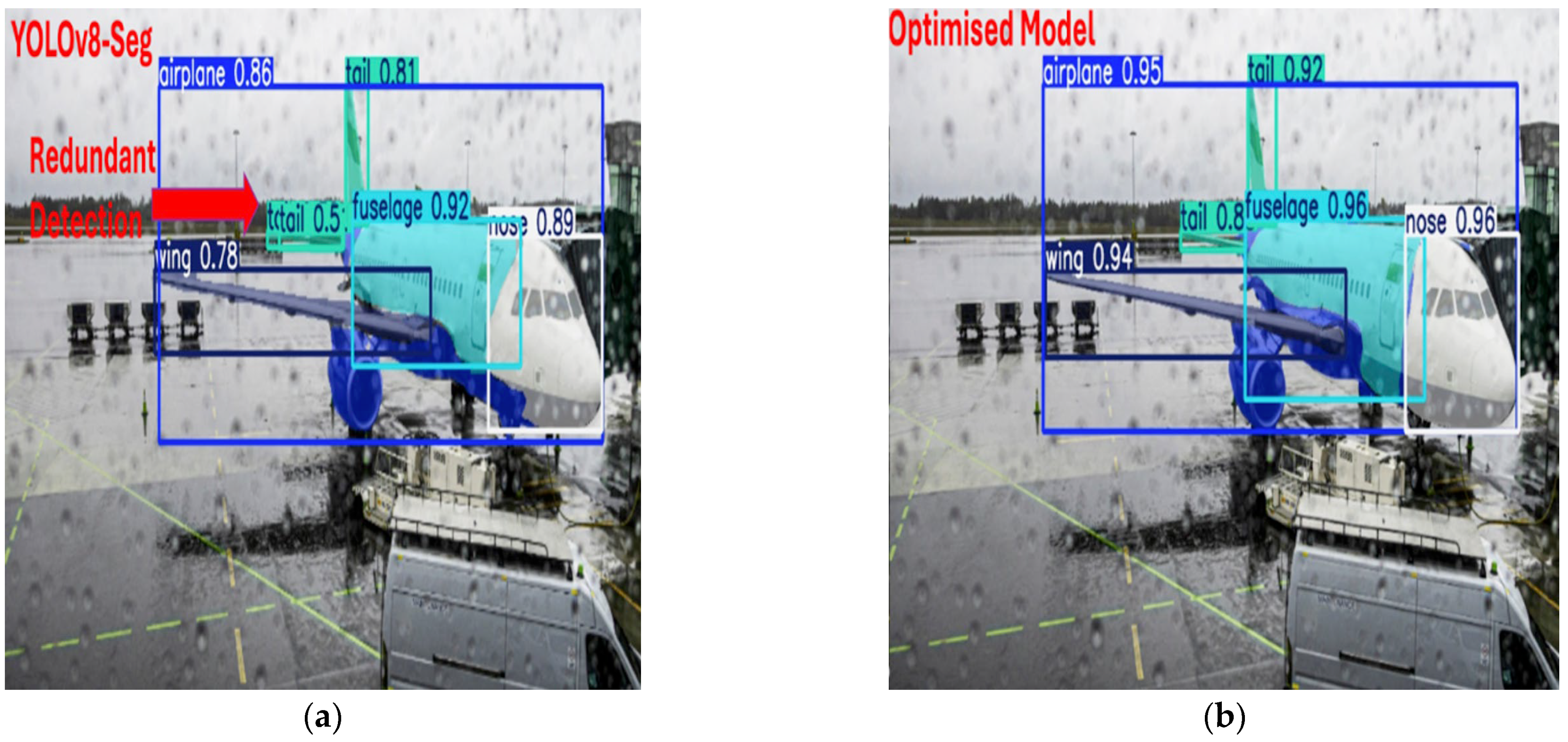

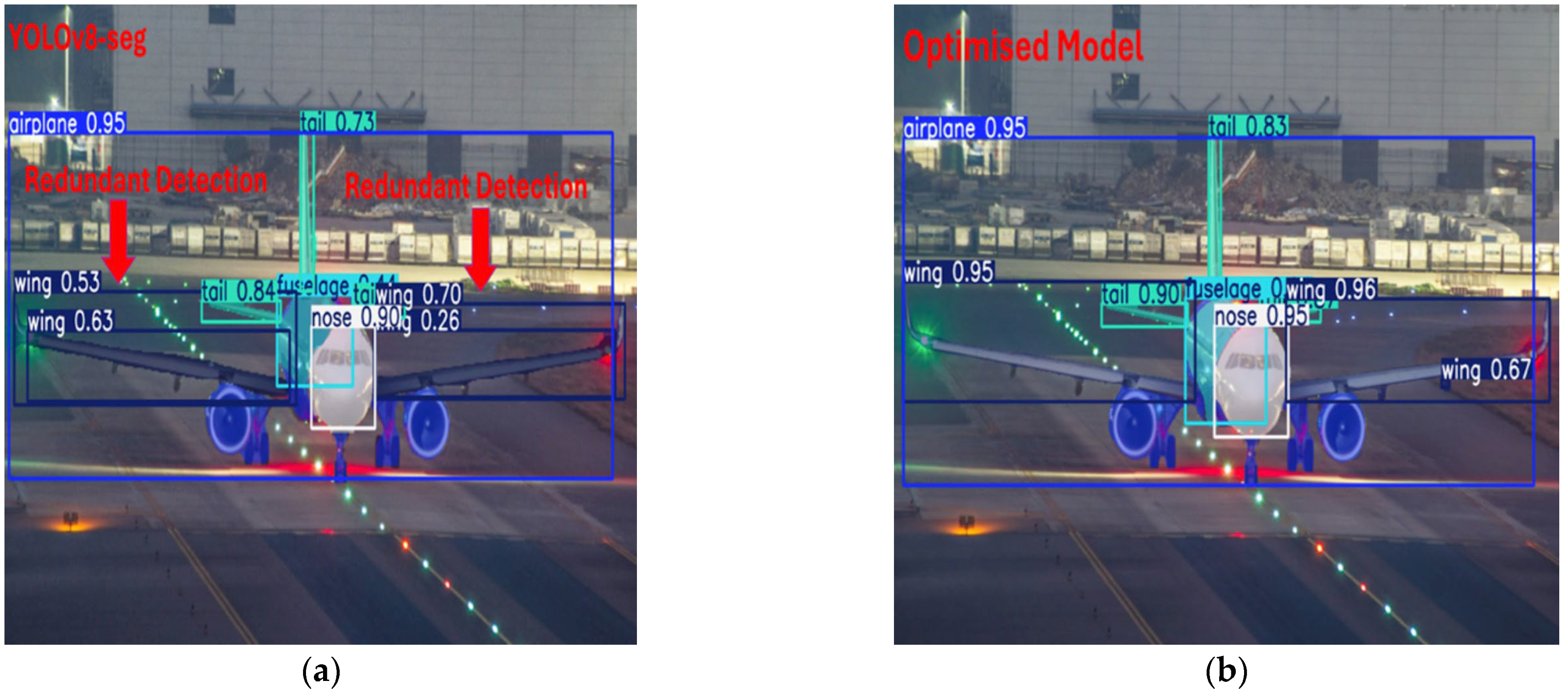

4.2.5. Qualitative Comparison: Baseline vs. Optimised Model

Scenario 4: Detection Reliability Under Optimal Conditions

Scenario 5: Robustness Under Low-Visibility and Sensor Noise

Scenario 6: Stability Under Low-Light, Glare, and HDR Conditions

5. Discussion

5.1. Model Benchmarking, Error Characterisation, and Statistical Reliability

5.1.1. Model Performance and Architectural Comparison

5.1.2. Class-Wise Error Trends and Confusion Matrix Insights

5.1.3. Statistical Robustness via 10-Fold Cross-Validation

5.1.4. Qualitative Scenario-Based Discussion Under Apron Conditions

5.2. Interpretation of Optimisation Efficacy

5.2.1. Implications of the Quantitative Findings

5.2.2. Qualitative Validation Under Realistic Apron Scenarios

5.3. Practical Implications for Apron Safety

5.4. Limitations and Future Work Directions

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| ADS-B | Automatic Dependent Surveillance–Broadcast |

| AI | Artificial Intelligence |

| AMP | Automatic Mixed Precision |

| AP | Average Precision |

| CCTV | Closed-Circuit Television |

| COCO | Common Objects in Context |

| CV | Computer Vision |

| DETR | DEtection TRansformer |

| DOTA | Dataset for Object deTection in Aerial Images |

| FOD | Foreign Object Debris |

| FP | Floating-Point (as in FP16/FP32) |

| FPS | Frames Per Second |

| GPU | Graphics Processing Unit |

| IATA | International Air Transport Association |

| IoU | Intersection over Union |

| LIDAR | Light Detection and Ranging |

| mAP | mean Average Precision |

| mIoU | mean Intersection over Union |

| MSFS | Microsoft Flight Simulator |

| MOT | Multi-Object Tracking |

| PGI | Programmable Gradient Information |

| PR | Precision–Recall |

| R-CNN | Region-based Convolutional Neural Network |

| RF-DETR | Real-time DEtection TRansformer |

| RSOD | Remote Sensing Object Detection |

| SMR | Surface Movement Radar |

| SSD | Single Shot Detector |

| YOLO | You Only Look Once |

References

- International Air Transport Association (IATA). 2023 Industry Statistics Fact Sheet; International Air Transport Association (IATA): Montreal, QC, Canada, 2023; Available online: https://www.iata.org/en/iata-repository/publications/economic-reports/industry-statistics-fact-sheet-december-2023/ (accessed on 1 October 2025).

- International Civil Aviation Organization. First ICAO Global Air Cargo Summit. Available online: https://www.icao.int/Meetings/IACS/Pages/default.aspx (accessed on 11 May 2025).

- O’Kelly, M.E. Transportation Security at Hubs: Addressing Key Challenges across Modes of Transport. J. Transp. Secur. 2025, 18, 4.

- Flight Safety Foundation (FSF). 2022 Safety Report; Flight Safety Foundation (FSF): Alexandria, VA, USA, 2023; p. 11.

- Abdulaziz, A.; Yaro, A.; Ahmad, A.A.; Namadi, S. Surveillance Radar System Limitations and the Advent of the Automatic Dependent Surveillance Broadcast System for Aircraft Monitoring. ATBU J. Sci. Technol. Educ. (JOSTE) 2019, 7, 15. Available online: http://www.atbuftejoste.com.ng/index.php/joste/article/view/683 (accessed on 7 April 2019).

- Thai, P.; Alam, S.; Lilith, N.; Nguyen, B.T. A Computer Vision Framework Using Convolutional Neural Networks for Airport-Airside Surveillance. Transp. Res. Part C Emerg. Technol. 2022, 137, 103590.

- Chen, X.; Gao, Z.; Chai, Y. The Development of Air Traffic Control Surveillance Radars in China. In Proceedings of the 2017 IEEE Radar Conference, RadarConf 2017, Seattle, WA, USA, 8–12 May 2017; pp. 1776–1784.

- Galati, G.; Leonardi, M.; Cavallin, A.; Pavan, G. Airport Surveillance Processing Chain for High Resolution Radar. IEEE Trans. Aerosp. Electron. Syst. 2010, 46, 1522–1533.

- Lukin, K.; Mogila, A.; Vyplavin, P.; Galati, G.; Pavan, G. Novel Concepts for Surface Movement Radar Design. Int. J. Microw. Wirel. Technol. 2009, 1, 163–169.

- Skybrary Surface Movement Radar (SMR). Available online: https://skybrary.aero/articles/surface-movement-radar (accessed on 15 April 2024).

- Ding, M.; Ding, Y.-Y.; Wu, X.-Z.; Wang, X.-H.; Xu, Y.-B. Action Recognition of Individuals on an Airport Apron Based on Tracking Bounding Boxes of the Thermal Infrared Target. Infrared Phys. Technol. 2021, 117, 103859.

- Lee, S. M-ABCNet: Multi-Modal Aircraft Motion Behavior Classification Network at Airport Ramps. IEEE Access 2024, 12, 133982–133993.

- Štumper, M.; Kraus, J. Thermal Imaging in Aviation. MAD-Mag. Aviat. Dev. 2015, 3, 13.

- Rivera Velázquez, J.M.; Khoudour, L.; Saint Pierre, G.; Duthon, P.; Liandrat, S.; Bernardin, F. Analysis of Thermal Imaging Performance Under Extreme Foggy Conditions: Applications to Autonomous Driving. J. Imaging 2022, 8, 306.

- Brassel, H.; Zouhar, A.; Fricke, H. 3D Modeling of the Airport Environment for Fast and Accurate LiDAR Semantic Segmentation of Apron Operations. In Proceedings of the 2020 AIAA/IEEE 39th Digital Avionics Systems Conference (DASC), San Antonio, TX, USA, 11–15 October 2020.

- Atlioğlu, M.C.; Gökhan, K.O.Ç. An AI Powered Computer Vision Application for Airport CCTV Users. J. Data Sci. 2021, 4, 21–26.

- Munyer, T.; Brinkman, D.; Huang, C.; Zhong, X. Integrative Use of Computer Vision and Unmanned Aircraft Technologies in Public Inspection: Foreign Object Debris Image Collection. In Proceedings of the 22nd Annual International Conference on Digital Government Research, Omaha, NE, USA, 9–11 June 2021; pp. 437–443.

- ICAO. International Civil Aviation Organization FOD Management Programme. Available online: https://www2023.icao.int/ESAF/Documents/meetings/2024/Aerodrome%20Certification%20Worksljop%20Luanda%20Angola%2013-17%20May%202024/Presentations/FOD%20Management%20Programme.pdf (accessed on 19 June 2024).

- Shan, J.; Miccinesi, L.; Beni, A.; Pagnini, L.; Cioncolini, A.; Pieraccini, M. A Review of Foreign Object Debris Detection on Airport Runways: Sensors and Algorithms. Remote Sens. 2025, 17, 225.

- Mo, Y.; Wang, L.; Hong, W.; Chu, C.; Li, P.; Xia, H. Small-Scale Foreign Object Debris Detection Using Deep Learning and Dual Light Modes. Appl. Sci. 2024, 14, 2162.

- Kucuk, N.S.; Aygun, H.; Dursun, O.O.; Toraman, S. Detection and Classification of Foreign Object Debris (FOD) with Comparative Deep Learning Algorithms in Airport Runways. Signal Image Video Process 2025, 19, 316.

- Friederich, N.; Specker, A.; Beyerer, J. Security Fence Inspection at Airports Using Object Detection. In Proceedings of the 2024 IEEE Winter Conference on Applications of Computer Vision Workshops, WACVW 2024, Waikoloa, HI, USA, 1–6 January 2024; pp. 310–319.

- Bahrudeen, A.A.A.; Bajpai, A. Attire-Based Anomaly Detection in Restricted Areas Using YOLOv8 for Enhanced CCTV Security. arXiv 2024, arXiv:2404.00645.

- Kheta, K.; Delgove, C.; Liu, R.; Aderogba, A.; Pokam, M.-O. Vision-Based Conflict Detection Within Crowds Based on High-Resolution Human Pose Estimation for Smart and Safe Airport. arXiv 2022, arXiv:2207.00477.

- Yıldız, S.; Aydemir, O.; Memiş, A.; Varlı, S. A Turnaround Control System to Automatically Detect and Monitor the Time Stamps of Ground Service Actions in Airports: A Deep Learning and Computer Vision Based Approach. Eng. Appl. Artif. Intell. 2022, 114, 105032.

- Muecklich, N.; Sikora, I.; Paraskevas, A.; Padhra, A. The Role of Human Factors in Aviation Ground Operation-Related Accidents/Incidents: A Human Error Analysis Approach. Transp. Eng. 2023, 13, 100184.

- Said Hamed Alzadjail, N.; Balasubaramainan, S.; Savarimuthu, C.; Rances, E.O. A Deep Learning Framework for Real-Time Bird Detection and Its Implications for Reducing Bird Strike Incidents. Sensors 2024, 24, 5455.

- Mendonca, F.A.C.; Keller, J. Enhancing the Aeronautical Decision-Making Knowledge and Skills of General Aviation Pilots to Mitigate the Risk of Bird Strikes: A Quasi-Experimental Study. Coll. Aviat. Rev. Int. 2022, 40, 7.

- Dat, N.N.; Richardson, T.; Watson, M.; Meier, K.; Kline, J.; Reid, S. WildLive: Near Real-Time Visual Wildlife Tracking Onboard UAVs. arXiv 2025, arXiv:2504.10165.

- Zeng, B.; Ming, D.; Ji, F.; Yu, J.; Xu, L. Top-Down Aircraft Detection in Large-Scale Scenes Based on Multi-Source Data and FEF-R-CNN. Int. J. Remote Sens. 2022, 43, 1108–1130.

- Zhou, L.; Yan, H.; Shan, Y.; Zheng, C.; Liu, Y. Aircraft Detection for Remote Sensing Images Based on Deep Convolutional Neural Networks. J. Electr. Comput. Eng. 2021, 2021, 4685644.

- Tahir, A.; Adil, M.; Ali, A. Rapid Detection of Aircrafts in Satellite Imagery Based on Deep Neural Networks. arXiv 2021, arXiv:2104.11677.

- Yang, Y.; Xie, G.; Qu, Y. Real-Time Detection of Aircraft Objects in Remote Sensing Images Based on Improved YOLOv4. In Proceedings of the 2021 IEEE 5th Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chongqing, China, 12–14 March 2021; pp. 1156–1164.

- Wang, Y.Y.; Wu, H.; Shuai, L.; Peng, C.; Yang, Z. Detection of Plane in Remote Sensing Images Using Super-Resolution. PLoS ONE 2022, 17, e0265503.

- Flight Safety Foundation Ground Accident Prevention (GAP). Available online: https://flightsafety.org/toolkits-resources/past-safety-initiatives/ground-accident-prevention-gap/ (accessed on 1 October 2025).

- Van Phat, T.; Alam, S.; Lilith, N.; Tran, P.N.; Binh, N.T. Deep4Air: A Novel Deep Learning Framework for Airport Airside Surveillance. In Proceedings of the 2021 IEEE International Conference on Multimedia and Expo Workshops, ICMEW 2021, Shenzhen, China, 5–9 July 2021.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 39, 1137–1149.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot Multibox Detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 42, 318–327.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Jocher, G. YOLOv5 by Ultralytics. 2020. Available online: http://github.com/ultralytics/yolov5 (accessed on 29 May 2025).

- Ultralytics YOLOv8 Models. Available online: https://docs.ultralytics.com/models/yolov8/ (accessed on 23 December 2024).

- Wang, C.-Y.; Yeh, I.-H.; Liao, H.-Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. In Proceedings of the 18th European Conference on Computer Vision (ECCV), Milan, Italy, 29 September–4 October 2024; pp. 1–18.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z. YOLOv10: Real-Time End-to-End Object Detection. arXiv 2024, arXiv:2405.14458. Available online: https://arxiv.org/abs/2405.14458 (accessed on 12 February 2025).

- Ultralytics Ultralytics YOLO11. Available online: https://docs.ultralytics.com/models/yolo11/ (accessed on 12 February 2025).

- Tian, Y.; Ye, Q.; Doermann, D. YOLOv12: Attention-Centric Real-Time Object Detectors. arXiv 2025, arXiv:2502.12524.

- Ultralytics_Team. Introducing Instance Segmentation in Ultralytics YOLOv5 v7.0. Available online: https://www.ultralytics.com/blog/introducing-instance-segmentation-in-yolov5-v7-0 (accessed on 19 April 2023).

- Ultralytics Instance Segmentation—Ultralytics YOLO Docs. Available online: https://docs.ultralytics.com/tasks/segment/ (accessed on 23 April 2024).

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable Transformers for End-To-End Object Detection. In Proceedings of ICLR 2021, the 9th International Conference on Learning Representations, Virtual, 3–7 May 2021.

- Robicheaux, P.; Gallagher, J.; Nelson, J.; Robinson, I. RF-DETR: A SOTA Real-Time Object Detection Model. Available online: https://blog.roboflow.com/rf-detr/ (accessed on 31 May 2025).

- He, Z.; He, Y.; Lv, Y. DT-YOLO: An Improved Object Detection Algorithm for Key Components of Aircraft and Staff in Airport Scenes Based on YOLOv5. Sensors 2025, 25, 1705.

- Huang, B.; Ding, Y.; Liu, G.; Tian, G.; Wang, S. ASD-YOLO: An Aircraft Surface Defects Detection Method Using Deformable Convolution and Attention Mechanism. Measurement 2024, 238, 115300.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P. Microsoft COCO: Common Objects in Context. In Computer Vision–ECCV 2014, Proceedings of the 13th European Conference, Zurich, Switzerland, 6–12 September 2014; LNCS; Springer: Cham, Switzerland, 2014; Volume 8693, pp. 740–755.

- Zhou, W.; Cai, C.; Zheng, L.; Li, C.; Zeng, D. ASSD-YOLO: A Small Object Detection Method Based on Improved YOLOv7 for Airport Surface Surveillance. Multimed. Tools Appl. 2023, 83, 55527–55548.

- Zhou, W.; Cai, C.; Li, C.; Xu, H.; Shi, H. AD-YOLO: A Real-Time YOLO Network with Swin Transformer and Attention Mechanism for Airport Scene Detection. IEEE Trans. Instrum. Meas. 2024, 73, 5036112.

- Lyu, Z.; Luo, J. A Surveillance Video Real-Time Object Detection System Based on Edge-Cloud Cooperation in Airport Apron. Appl. Sci. 2022, 12, 128.

- Zhou, R.; Li, M.; Meng, S.; Qiu, S.; Zhang, Q. Aircraft Objection Detection Method of Airport Surface Based on Improved YOLOv5. J. Electr. Syst. 2024, 20, 16–25.

- Xu, Y.; Liu, Y.; Shi, K.; Wang, X.; Li, Y.; Chen, J. An Airport Apron Ground Service Surveillance Algorithm Based on Improved YOLO Network. Electron. Res. Arch. 2024, 32, 3569–3587.

- CAPTAIN-WHU DOTA: Dataset for Object DeTection in Aerial Images. Available online: https://captain-whu.github.io/DOTA/index.html (accessed on 10 September 2024).

- RSIA-LIESMARS-WHU Remote Sensing Object Detection Dataset (RSOD-Dataset). Available online: https://github.com/RSIA-LIESMARS-WHU/RSOD-Dataset- (accessed on 20 February 2024).

- Utomo, S.; Sulistyaningrum, D.R.; Setiyono, B.; Nasution, A.H.I. Image Augmentation For Aircraft Parts Detection Using Mask R-CNN. In Proceedings of the 2024 International Conference on Smart Computing, IoT and Machine Learning, SIML 2024, Surakarta, Indonesia, 6–7 June 2024; pp. 186–192.

- Thomas, J.; Kuang, B.; Wang, Y.; Barnes, S.; Jenkins, K. Advanced Semantic Segmentation of Aircraft Main Components Based on Transfer Learning and Data-Driven Approach. Vis. Comput. 2025, 41, 4703–4722.

- Yilmaz, B.; Karsligil, M.E. Detection of Airplane and Airplane Parts from Security Camera Images with Deep Learning. In Proceedings of the 2020 28th Signal Processing and Communications Applications Conference, SIU 2020, Gaziantep, Turkey, 5–7 October 2020; pp. 21–24.

- Bakirman, T.; Sertel, E. A Benchmark Dataset for Deep Learning-Based Airplane Detection: HRPlanes. Int. J. Eng. Geosci. 2023, 8, 212–223.

- Maji, S.; Rahtu, E.; Kannala, J.; Blaschko, M.; Vedaldi, A. Fine-Grained Visual Classification of Aircraft. arXiv 2013, arXiv:1306.5151.

- Ultralytics YOLO12: Attention-Centric Object Detection. Available online: https://docs.ultralytics.com/models/yolo12/ (accessed on 20 May 2025).

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the 16th European Conference, Glasgow, UK, 23–28 August 2020.

- Padilla, R.; Passos, W.L.; Dias, T.L.B.; Netto, S.L.; Da Silva, E.A.B. A Comparative Analysis of Object Detection Metrics with a Companion Open-Source Toolkit. Electronics 2021, 10, 279.

- Padilla, R.; Netto, S.L.; Da Silva, E.A.B. A Survey on Performance Metrics for Object-Detection Algorithms. In Proceedings of the International Conference on Systems, Signals, and Image Processing, Niteroi, Brazil, 1–3 July 2020; pp. 237–242.

- Ultralytics YOLO Performance Metrics—COCO Metrics Evaluation. Available online: https://docs.ultralytics.com/guides/yolo-performance-metrics/#coco-metrics-evaluation (accessed on 23 May 2024).

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 39, 640–651.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P. COCO: Common Objects in Context. Available online: https://cocodataset.org/#home (accessed on 16 January 2022).

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Kohavi, R. A Study of Cross-Validation and Bootstrap for Accuracy Estimation and Model Selection. In Proceedings of the International Joint Conference on Artificial Intelligence (IJCAI), Montreal, QC, Canada, 20–25 August 1995; pp. 1137–1143.

- Zhang, Y.; Zhou, B.; Zhao, X.; Song, X. Enhanced Object Detection in Low-Visibility Haze Conditions with YOLOv9s. PLoS ONE 2025, 20, e0317852.

| Dataset/Authors | Labelling Type | Images/Objects | Domain | Openness | Key Focus |

|---|---|---|---|---|---|

| HRPlanes [67] | BBox (YOLO/VOC) | 3092 Google Earth images, 18,477 airplanes | Satellite (Google Earth) | Private | Aircraft detection in high-resolution satellite imagery |

| (DOTA) [62] | Oriented BBox | 2806 images/188 k objects | Aerial/Remote sensing | Restricted/Academic | Multi-class aerial object detection including aircraft |

| (RSOD) [63] | BBox (PASCAL VOC) | 976 images (446 for aircraft) | Remote sensing | Public | Aircraft and airport object detection in satellite imagery |

| (AAD) [54] | BBox | 8643 images/6 classes | Apron CCTV | Private | Monitoring of aircraft, ground staff, and ground support equipment |

| (COCO) [56] | BBox + Instance Segmentation | 328,000 images/80 categories | Generic scenes (incl. aircraft) | Public | General object detection and segmentation (person, car, cat, stop sign, etc.) |

| (FGVC) [68] | BBox | 10,000 images/102 aircraft models | Airport/Spotting | Public/Research only | Fine-grained aircraft model classification |

| Yilmaz and Karsligil [66] | Instance Segmentation (Mask R-CNN) PASCAL VOC | 1000 Images/2 classes | Apron Security Camera | Private (Turkish Airlines R&D Centre) | Detection and segmentation of aircraft parts (tail and doors) from apron CCTV |

| Our Proposed Dataset | BBox + Instance Segmentation | 1112 images/7420 labels, 5 classes | Hybrid (Real, CCTV + Synthetic) | Public (Planned) | Aircraft and Component-level segmentation for apron safety |

| Feature | Value |

|---|---|

| Number of images | 1112 |

| Number of annotations | 7420 |

| Average annotations per image | 6.7 |

| Number of classes | 5 |

| Average image size, MP * | 0.97 |

| Min image size MP * | 0.09 |

| Max image size MP * | 44.76 |

| Median resolution (px) | 1200 × 822 |

| Annotation type | Segmentation |

| Model | Year | Architecture Type | Key Strength |

|---|---|---|---|

| YOLOv5 | 2020 | Single-Stage | Lightweight, real-time detection |

| YOLOv8 | 2023 | Single-Stage | Improved backbone, strong accuracy |

| YOLOv9 | 2024 | Single-Stage | Enhanced bounding-box accuracy |

| YOLOv10 | 2024 | Single-Stage | Speed–accuracy trade-off |

| YOLOv11 | 2024 | Single-Stage | Extended segmentation capability |

| YOLOv12 | 2025 | Single-Stage | Latest YOLO variant, stability focus |

| YOLOv5-Seg | 2020 | Single-Stage (Seg) | Pixel-level segmentation |

| YOLOv8-Seg | 2023 | Single-Stage (Seg) | Best segmentation accuracy |

| YOLOv11-Seg | 2024 | Single-Stage (Seg) | Newer segmentation variant |

| Faster R-CNN | 2015 | Two-Stage | High accuracy, region proposals |

| DETR | 2020 | Transformer-Based | Anchor-free, attention reasoning |

| RF-DETR | 2025 | Transformer-Based | Refined DETR, faster convergence |

| Data Augmentations | Rates |

|---|---|

| Rotation | Between −15° and +15° |

| Saturation changes | Up to 18% |

| Brightness adjustment | Up to 22% |

| Exposure changes | Up to 15% |

| Blur | Up to 1.2 pixels |

| Adding Random Noise | 2.2% |
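
To make the ranges in the augmentation table concrete, the following sketch draws one random set of augmentation parameters per generated image. Only the numeric limits come from the table. The uniform sampling, the symmetric interpretation of "up to" (for example, saturation varied between −18% and +18%), the fixed noise fraction, and the name `sample_augmentation` are assumptions made for illustration and do not describe the authors' augmentation tool.

```python
import random


def sample_augmentation(rng):
    """Draw one augmentation setting within the limits of the table above."""
    return {
        "rotation_deg": rng.uniform(-15.0, 15.0),      # between -15 and +15 degrees
        "saturation_delta": rng.uniform(-0.18, 0.18),  # assumed symmetric, up to 18%
        "brightness_delta": rng.uniform(-0.22, 0.22),  # assumed symmetric, up to 22%
        "exposure_delta": rng.uniform(-0.15, 0.15),    # assumed symmetric, up to 15%
        "blur_px": rng.uniform(0.0, 1.2),              # blur of up to 1.2 pixels
        "noise_fraction": 0.022,                       # 2.2% noise, assumed fixed
    }


# A seeded generator keeps the sampled settings reproducible across runs.
rng = random.Random(0)
params = [sample_augmentation(rng) for _ in range(3)]
```

For segmentation data, any geometric transform such as rotation would also have to be applied to the annotation masks so that labels stay aligned with the image.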

| Model | mAP@0.5:0.95 | mAP@0.5 | Precision | Recall | F1 Score | FPS CCTV | FPS MSFS | Val Box Loss | Val Cls Loss | Val DFL Loss |

|---|---|---|---|---|---|---|---|---|---|---|

| YOLOv5 | 68.679 | 90.157 | 91.836 | 84.210 | 87.858 | 116.38 | 110.19 | 0.946 | 0.635 | 1.149 |

| YOLOv8 | 70.578 | 91.341 | 90.370 | 87.619 | 88.973 | 84.58 | 82.02 | 0.882 | 0.579 | 1.107 |

| YOLOv9 | 73.378 | 90.862 | 92.430 | 85.590 | 88.879 | 47.34 | 46.32 | 0.842 | 0.553 | 1.212 |

| YOLOv10 | 69.243 | 89.797 | 89.686 | 84.254 | 86.885 | 74.69 | 70.68 | 1.900 | 1.263 | 2.238 |

| YOLOv11 | 69.552 | 90.558 | 91.769 | 87.453 | 89.558 | 66.83 | 64.52 | 0.902 | 0.605 | 1.113 |

| YOLOv12 | 70.314 | 90.407 | 89.594 | 87.574 | 88.574 | 47.12 | 46.14 | 0.900 | 0.581 | 1.137 |

| YOLOv5-Seg (BBox) | 60.746 | 87.980 | 90.939 | 83.212 | 86.919 | 108.28 | 104.17 | 0.032 | 0.006 | - |

| YOLOv8-Seg (BBox) | 69.599 | 90.407 | 90.300 | 87.593 | 88.926 | 69.02 | 68.29 | 0.890 | 0.589 | 1.118 |

| YOLOv11-Seg (BBox) | 69.694 | 90.154 | 90.902 | 86.533 | 88.664 | 58.64 | 57.40 | 0.904 | 0.628 | 1.123 |
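
The F1 Score column in the table above is the harmonic mean of the Precision and Recall columns. A minimal sketch, using the tabulated YOLOv5 and YOLOv8 values as inputs (the function name `f1_score` is illustrative):

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall (%) taken from the YOLOv5 and YOLOv8 rows.
yolov5_f1 = f1_score(91.836, 84.210)
yolov8_f1 = f1_score(90.370, 87.619)
```

Because the harmonic mean is pulled towards the smaller of the two values, a model with high precision but weaker recall, such as YOLOv5 here, ends up with a lower F1 than a more balanced model.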

| Model | AP@0.5:0.95 | AP@0.5 | AR@100 | AP-Small | AP-Medium | AP-Large |

|---|---|---|---|---|---|---|

| Faster R-CNN | 60.80 | 86.2 | 67.4 | 18.1 | 41.7 | 65.3 |

| DETR | 54.70 | 77.5 | 61.0 | 3.8 | 17.0 | 65.3 |

| RF-DETR | 70.60 | 90.3 | 79.7 | 14.8 | 47.8 | 75.6 |

| Model | Airplane | Nose | Fuselage | Wing | Tail | All Class |

|---|---|---|---|---|---|---|

| YOLOv5 | 88.4 | 97.1 | 96.9 | 80.2 | 88.2 | 90.2 |

| YOLOv8 | 89.3 | 98.5 | 96.4 | 83.9 | 88.4 | 91.3 |

| YOLOv9 | 89.8 | 98.4 | 95.3 | 82.0 | 88.8 | 90.9 |

| YOLOv10 | 87.0 | 97.9 | 94.9 | 82.6 | 86.7 | 89.8 |

| YOLOv11 | 89.2 | 97.8 | 95.6 | 81.7 | 88.5 | 90.6 |

| YOLOv12 | 89.3 | 97.0 | 95.7 | 81.7 | 88.3 | 90.4 |

| YOLOv5-Seg (BBox) | 85.4 | 97.3 | 91.1 | 79.0 | 87.2 | 88.0 |

| YOLOv8-Seg (BBox) | 88.1 | 97.7 | 95.1 | 81.4 | 88.9 | 90.3 |

| YOLOv11-Seg (BBox) | 88.4 | 98.5 | 94.5 | 81.9 | 87.4 | 90.1 |

| Model | mAP@0.5:0.95 | mAP@0.5 | Precision | Recall | FPS CCTV | FPS MSFS | F1 Score | Val Seg Loss |
|---|---|---|---|---|---|---|---|---|
| YOLOv5-Seg (Mask) | 48.299 | 79.363 | 84.946 | 78.632 | 108.28 | 104.17 | 81.66 | 0.029 |
| YOLOv8-Seg (Mask) | 53.953 | 83.435 | 85.034 | 82.810 | 69.02 | 68.29 | 83.90 | 1.523 |
| YOLOv11-Seg (Mask) | 53.395 | 82.902 | 85.741 | 81.991 | 58.64 | 57.40 | 83.82 | 1.563 |

| Model | Airplane | Nose | Fuselage | Wing | Tail | All-Class |
|---|---|---|---|---|---|---|
| YOLOv5-Seg (Mask) | 46.5 | 98.1 | 91.6 | 71.5 | 84.6 | 78.4 |
| YOLOv8-Seg (Mask) | 61.2 | 97.9 | 95.2 | 75.6 | 87.1 | 83.4 |
| YOLOv11-Seg (Mask) | 59.8 | 98.5 | 95.0 | 74.9 | 86.0 | 82.8 |

| Model | Class | TP | FP | FN | Precision (%) | Recall (%) |
|---|---|---|---|---|---|---|
| YOLOv8 | Tail | 292 | 47 | 58 | 86.1 | 83.5 |
| YOLOv8 | Wing | 190 | 36 | 52 | 84.1 | 78.5 |
| YOLOv8-Seg | Tail | 285 | 54 | 35 | 84.1 | 89.1 |
| YOLOv8-Seg | Wing | 189 | 37 | 73 | 83.6 | 72.1 |
| YOLOv9 | Tail | 266 | 73 | 32 | 78.5 | 89.3 |
| YOLOv9 | Wing | 189 | 37 | 66 | 83.6 | 74.1 |
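The Precision and Recall columns above follow from the TP, FP and FN counts. A minimal sketch of that arithmetic, assuming the standard definitions precision = TP / (TP + FP) and recall = TP / (TP + FN); the function name `precision_recall` is hypothetical, and only a subset of the table's rows is reproduced.

```python
def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Return (precision, recall) in percent from detection counts."""
    precision = 100.0 * tp / (tp + fp) if (tp + fp) else 0.0
    recall = 100.0 * tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# (TP, FP, FN) counts copied from the error-analysis table above.
counts = {
    ("YOLOv8", "Wing"): (190, 36, 52),
    ("YOLOv8-Seg", "Wing"): (189, 37, 73),
    ("YOLOv9", "Tail"): (266, 73, 32),
}

for (model, cls), (tp, fp, fn) in counts.items():
    p, r = precision_recall(tp, fp, fn)
    print(f"{model:<11} {cls:<5} precision={p:.1f}%  recall={r:.1f}%")
```

Read this way, the Wing rows show nearly identical precision across models, while the number of false negatives drives the spread in recall.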

| Model | Task | Metric | Mean (μ) ± Std. Dev. (σ) | 95% CI (t-dist) | 95% CI (Bootstrap) |
|---|---|---|---|---|---|
| YOLOv8 | BBox | mAP@0.5:0.95 | 66.5 ± 1.4% | [65.6, 67.6] | [65.7, 67.4] |
| YOLOv8 | BBox | mAP@0.5 | 88.3 ± 1.38% | [87.3, 89.3] | [87.5, 89.1] |
| YOLOv8-Seg | BBox | mAP@0.5:0.95 | 66.8 ± 1.7% | [65.6, 68.0] | [65.8, 67.7] |
| YOLOv8-Seg | BBox | mAP@0.5 | 88.6 ± 1.5% | [87.5, 89.7] | [87.7, 89.4] |
| YOLOv8-Seg | Mask | mAP@0.5:0.95 | 50.6 ± 1.9% | [49.3, 52.0] | [49.5, 51.7] |
| YOLOv8-Seg | Mask | mAP@0.5 | 81.5 ± 2.6% | [79.6, 83.3] | [79.9, 82.9] |
| YOLOv11-Seg | BBox | mAP@0.5:0.95 | 66.5 ± 1.7% | [65.3, 67.6] | [65.5, 67.4] |
| YOLOv11-Seg | BBox | mAP@0.5 | 88.4 ± 1.6% | [87.2, 89.6] | [87.5, 89.4] |
| YOLOv11-Seg | Mask | mAP@0.5:0.95 | 50.5 ± 1.8% | [49.2, 51.7] | [49.4, 51.5] |
| YOLOv11-Seg | Mask | mAP@0.5 | 81.4 ± 2.1% | [79.9, 82.9] | [80.2, 82.7] |
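The table above reports two kinds of 95% confidence interval for each mean. The sketch below illustrates how such intervals are commonly obtained; it is not the authors' procedure. It assumes a percentile bootstrap of the mean, and the ten per-run scores are hypothetical placeholders (the paper's per-run values and number of runs are not given in this section). The critical value 2.262 is the two-sided 95% Student's t quantile for 9 degrees of freedom, matching the ten assumed runs.

```python
import random
import statistics

# Hypothetical per-run mAP@0.5:0.95 scores in percent; NOT the paper's data.
scores = [64.9, 66.1, 67.8, 65.2, 68.3, 66.7, 64.5, 67.0, 66.2, 68.1]

T_CRIT_DF9 = 2.262  # two-sided 95% t critical value for 9 degrees of freedom


def t_interval(values, t_crit):
    """Confidence interval for the mean: mean +/- t * s / sqrt(n)."""
    mean = statistics.mean(values)
    sem = statistics.stdev(values) / len(values) ** 0.5
    return mean - t_crit * sem, mean + t_crit * sem


def bootstrap_interval(values, n_resamples=10_000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for the mean (resampling with replacement)."""
    rng = random.Random(seed)
    n = len(values)
    means = sorted(sum(rng.choices(values, k=n)) / n for _ in range(n_resamples))
    lower = means[int((alpha / 2) * n_resamples)]
    upper = means[int((1 - alpha / 2) * n_resamples) - 1]
    return lower, upper


t_lo, t_hi = t_interval(scores, T_CRIT_DF9)
b_lo, b_hi = bootstrap_interval(scores)
print(f"mean = {statistics.mean(scores):.2f}, std = {statistics.stdev(scores):.2f}")
print(f"95% CI (t-dist):    [{t_lo:.2f}, {t_hi:.2f}]")
print(f"95% CI (bootstrap): [{b_lo:.2f}, {b_hi:.2f}]")
```

The t-based interval assumes approximately normal run-to-run variation, whereas the bootstrap makes no distributional assumption, which is one reason to report both.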

| Optimisation Steps | mAP@0.5:0.95 | mAP@0.5 | Precision | Recall | F1 Score | Val Box Loss | Val Cls Loss | Val Dfl Loss |
|---|---|---|---|---|---|---|---|---|
| Base YOLOv8-Seg (BBox) | 69.599 | 90.40 | 90.30 | 87.59 | 88.92 | 0.89 | 0.589 | 1.12 |
| Loss Function | 70.22 | 90.07 | 91.51 | 86.64 | 89.01 | 0.89 | 0.596 | 1.10 |
| Inference Optimisation | 70.33 | 90.08 | 90.68 | 87.25 | 88.93 | 0.89 | 0.590 | 1.11 |
| AMP | 70.90 | 90.88 | 91.72 | 87.65 | 89.63 | 0.92 | 0.591 | 1.12 |
| Resolution (1024 × 1024) | 69.21 | 90.75 | 91.41 | 87.02 | 89.17 | 0.95 | 0.643 | 1.18 |
| Epochs (130→170) | 69.86 | 90.09 | 91.25 | 85.79 | 88.43 | 0.90 | 0.609 | 1.14 |
| Learning Rate (0.00111→0.001) | 69.87 | 90.49 | 90.16 | 87.61 | 88.86 | 0.90 | 0.587 | 1.12 |
| Model Scaling (N→L) | 74.37 | 91.75 | 89.84 | 87.96 | 88.89 | 0.80 | 0.517 | 1.16 |
| Data Augmentation (3.5×) | 71.61 | 90.63 | 92.74 | 85.09 | 88.74 | 0.88 | 0.614 | 1.19 |
| Optimised Model | 75.77 | 92.28 | 93.156 | 87.24 | 90.06 | 0.79 | 0.51 | 1.41 |

| Optimisation Steps | mAP@0.5:0.95 | mAP@0.5 | Precision | Recall | F1 Score | Val Loss |
|---|---|---|---|---|---|---|
| Base YOLOv8-Seg (Mask) | 53.953 | 83.43 | 85.03 | 82.81 | 83.91 | 1.52 |
| Loss Function | 53.86 | 83.18 | 86.59 | 81.13 | 83.77 | 1.48 |
| Inference Optimisation | 53.76 | 83.65 | 86.05 | 82.53 | 84.25 | 1.54 |
| AMP | 54.49 | 83.88 | 86.94 | 82.87 | 84.86 | 1.50 |
| Resolution (1024 × 1024) | 55.68 | 84.24 | 85.81 | 83.14 | 84.46 | 1.18 |
| Epochs (130→170) | 55.06 | 83.47 | 83.82 | 82.32 | 83.07 | 1.52 |
| Learning Rate (0.00111→0.001) | 54.12 | 84.72 | 88.13 | 81.16 | 84.52 | 1.52 |
| Model Scaling (N→L) | 57.51 | 85.89 | 87.69 | 83.91 | 85.76 | 1.61 |
| Data Augmentation (3.5×) | 56.39 | 85.43 | 89.13 | 80.58 | 84.64 | 2.07 |
| Optimised Model | 61.986 | 88.17 | 89.32 | 83.29 | 86.18 | 2.15 |

| Optimisation Steps | Airplane | Nose | Fuselage | Wing | Tail | All-Class |
|---|---|---|---|---|---|---|
| Base YOLOv8-Seg (Mask) | 61.2 | 97.9 | 95.2 | 75.6 | 87.1 | 83.4 |
| Loss Function | 59.5 | 98.2 | 94.8 | 76.2 | 87.0 | 83.2 |
| Inference Optimisation | 62.2 | 98.0 | 95.4 | 74.9 | 87.7 | 83.7 |
| AMP | 58.3 | 98.0 | 96.3 | 79.7 | 87.2 | 83.9 |
| Resolution (1024 × 1024) | 60.3 | 97.5 | 95.3 | 75.5 | 88.6 | 83.4 |
| Epochs (130→170) | 62.2 | 98.8 | 94.8 | 75.3 | 86.4 | 83.5 |
| Learning Rate (0.00111→0.001) | 65.6 | 98.2 | 94.3 | 78.0 | 86.2 | 84.5 |
| Model Scaling (N→L) | 67.2 | 96.8 | 94.6 | 80.7 | 89.9 | 85.9 |
| Data Augmentation (3.5×) | 70.8 | 99.1 | 95.7 | 75.0 | 86.3 | 85.4 |
| Optimised Model | 72.7 | 98.4 | 95.5 | 85.5 | 88.7 | 88.2 |

| Metric | Baseline (BBox) | Optimised (BBox) | Δ (p.p.) | Baseline (Mask) | Optimised (Mask) | Δ (p.p.) |
|---|---|---|---|---|---|---|
| mAP@0.5:0.95 | 69.60 | 75.77 | +6.17 | 53.95 | 61.99 | +8.04 |
| mAP@0.5 | 90.41 | 92.28 | +1.87 | 83.44 | 88.18 | +4.74 |
| Precision | 90.30 | 93.16 | +2.86 | 85.03 | 89.33 | +4.30 |
| Recall | 87.59 | 87.24 | −0.35 | 82.81 | 83.29 | +0.48 |
| F1 Score | 88.93 | 90.06 | +1.13 | 83.91 | 86.18 | +2.27 |
| Val. Loss | 0.89 | 0.79 | −0.10 | 1.52 | 2.15 | +0.63 |
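The Δ columns above are given in percentage points (p.p.), that is, as absolute differences between two percentages rather than relative improvements. A minimal sketch of the distinction, using mask values from the table; the helper names `delta_pp` and `relative_gain` are hypothetical.

```python
def delta_pp(baseline: float, optimised: float) -> float:
    """Absolute change between two percentages, in percentage points."""
    return optimised - baseline


def relative_gain(baseline: float, optimised: float) -> float:
    """Change expressed as a percentage of the baseline value."""
    return 100.0 * (optimised - baseline) / baseline


# (baseline, optimised) mask metrics copied from the summary table above.
mask_metrics = {
    "mAP@0.5:0.95": (53.95, 61.99),
    "mAP@0.5": (83.44, 88.18),
    "Recall": (82.81, 83.29),
}

for name, (base, opt) in mask_metrics.items():
    print(
        f"{name:<13} {delta_pp(base, opt):+.2f} p.p. "
        f"({relative_gain(base, opt):+.1f}% relative to baseline)"
    )
```

Reporting percentage points avoids the ambiguity of relative figures, which look larger when the baseline is low, as it is for mask mAP@0.5:0.95.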

| Class (Mask) | Baseline mAP (%) | Optimised mAP (%) | Δ (p.p.) |
|---|---|---|---|
| Airplane | 61.2 | 72.7 | +11.5 |
| Nose | 97.9 | 98.4 | +0.5 |
| Fuselage | 95.2 | 95.5 | +0.3 |
| Wing | 75.6 | 85.5 | +9.9 |
| Tail | 87.1 | 88.7 | +1.6 |
| All-Class Mean | 83.4 | 88.2 | +4.8 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bingol, E.C.; Al-Raweshidy, H. From Benchmarking to Optimisation: A Comprehensive Study of Aircraft Component Segmentation for Apron Safety Using YOLOv8-Seg. Appl. Sci. 2025, 15, 11582. https://doi.org/10.3390/app152111582