1. Introduction

The lungs are among the most important organs in the body, and lung diseases often have a substantial impact on overall health. Pneumonia is an inflammation of the lungs that can be broadly classified as infectious or non-infectious. Infectious pneumonia is further subdivided into cases caused by bacteria, viruses, mycoplasma species, or Chlamydia. The most prevalent pathogens causing viral pneumonia are influenza virus, respiratory syncytial virus (RSV), and SARS-CoV-2, while Streptococcus pneumoniae is the most common cause of bacterial pneumonia [1]. X-ray imaging has long been used to examine the chest, as well as the head, teeth, and bones, and helps clinicians identify anatomical abnormalities and skeletal injuries. Although chest radiographs are relatively inexpensive and carry limited information, they remain a valuable tool for detecting abnormalities and are commonly used in diagnosing pneumonia. However, even for experienced radiologists, diagnosing pneumonia from chest X-ray images can be challenging: radiographic findings in pneumonia are often nonspecific and easily confused with those of other conditions, which leads to subjective interpretation and diagnostic variability. Consequently, there is a need for computer-assisted diagnostic tools that support radiologists in diagnosing pneumonia.

In recent years, Artificial Intelligence (AI), especially its subfields Machine Learning (ML) and Deep Learning (DL), has demonstrated advantages in visual tasks and plays an increasingly pivotal role in fields such as materials science [2,3]. AI has been applied to the early computer-aided diagnosis of various diseases, such as lung disease prediction, diabetic retinopathy, and the coronavirus disease (COVID-19) that emerged in 2019 [4], and it has also been successfully applied to pneumonia detection from X-ray images. Given the difficulty of accurately identifying pneumonia from chest X-rays, developing efficient and highly accurate automated diagnostic methods is crucial for early detection and for reducing mortality. Meanwhile, attention mechanisms have become a key advancement in current computer vision tasks, increasingly complementing or replacing traditional CNN models [5]. Conventional CNNs tend to process all features within an input image uniformly, which can lead to the accumulation of superfluous information and to false-negative predictions. Attention mechanisms, in contrast, can selectively focus on valuable features and suppress redundant information, thereby enhancing model interpretability and classification performance without a substantial increase in computational cost. Kaya et al. [6] proposed an ensemble CNN model that combines the model yielding the highest accuracy with the model yielding the fewest false negatives; the optimal CNN models and their combination weight ratios were determined with a genetic algorithm (GA). On public datasets, the model achieved an accuracy of 97.23% and an F1 score of 97.45. Lafraxo et al. [7] proposed a combined deep learning framework that uses median filtering for image enhancement, followed by a regularized convolutional neural network and a long short-term memory (LSTM) network for feature extraction and classification, achieving accuracies of 99.91% and 88.86% on the Kermany and RSNA datasets, respectively. Sunil Kumar et al. [8] proposed an ensemble model, Efficient-VGG16, for the rapid and precise classification of patients with confirmed pneumonia caused by the novel coronavirus (SARS-CoV-2) from chest X-ray images. This method combines the advantages of two models and was compared with traditional machine learning and transfer learning methods. The study used the COVID-Xray-5k dataset with three training/testing split ratios (80:20, 75:25, and 70:30) and achieved an accuracy of 99.46% and an F1 score of 98.41%. Mamalakis et al. [9] developed a transfer learning pipeline named DenResCov-19, which combines the DenseNet-121 and ResNet-50 architectures for chest X-ray analysis, targeting the classification of pneumonia, COVID-19, tuberculosis, and normal cases. The model attained an AUC of 96.51%, an F1 score of 87.29%, an accuracy of 85.28%, and an overall recall of 89.38%. The hybrid model proposed by Ukwuoma et al. [10] combined a CNN and a Transformer encoder, using two ensemble models for feature extraction in different scenarios. On the Mendeley and Chest X-ray-15k datasets, the model attained an overall accuracy of 99.21% and an F1 score of 99.21% for binary classification, and an accuracy of 98.19% and an F1 score of 97.29% for multi-class classification.

In ensemble learning research, Rajasekar et al. [11] proposed a Generative Autoencoder with Attention Mechanism (GAME) that integrates ensemble learning, unsupervised learning, and attention mechanisms. By incorporating attention into generative autoencoders, it accurately localizes and extracts features within lung images while reducing the demand for high-quality data, achieving an F1 score of 0.95 and an accuracy of 0.95 on the CheXpert dataset. Yanar et al. [12] introduced a deep learning framework, PELM (Pneumonia Ensemble Learning Model), which sequentially integrates four high-performance pre-trained models: InceptionV3, ResNet50, VGG16, and Vision Transformer. This approach achieved a recall of 91% and an accuracy of 96% on a large dataset compiled from four distinct sources. Prasath et al. [13] improved pneumonia detection accuracy by 20.7% and recall by 21.8% through image preprocessing with region-aware neural graph collaborative filtering (RNGCF), feature extraction via the wavelet transform, and final optimization with the Hunter Prey Optimization Algorithm (HPOA).

Transfer learning, applied appropriately to various CNN architectures, has been shown to enhance the feature extraction ability of machine learning models. Abbas et al. [14] trained a DeTraC model with a ResNet18 backbone to detect COVID-19 using a dataset of 196 images (105 COVID-19, 80 normal, and 11 SARS cases). The model achieved an accuracy of 95.12%, a sensitivity of 97.91%, a specificity of 91.87%, and an overall accuracy of 93.36%. Lamouadene et al. [15] combined a ResNet18 model with an SVM, applied transfer learning to a set of 21,165 chest X-ray images, and compared optimizers, obtaining classification accuracies of 94% with Adagrad, 96% with RMSProp, and 97% with Adam. Kurt et al. [16] emphasized the importance of image preprocessing and suggested a semi-automated process to improve image quality; they proposed a transfer learning approach based on the EfficientNet model and obtained an accuracy of 97.93% through fine-tuning. Montalbo et al. [17] proposed Fused-DenseNet-Tiny, a lightweight DCNN built by truncating and concatenating a densely connected neural network (DenseNet); through transfer learning and feature fusion, it achieved an accuracy of 97.99%. Hussain et al. [18] introduced CoroDet, a CNN for automatic COVID-19 detection from raw chest X-ray and CT images; evaluated against ten existing techniques on what the authors describe as the largest X-ray dataset, it achieved accuracies of 99.1% (2-class), 94.2% (3-class), and 91.2% (4-class), outperforming state-of-the-art methods. Gifani et al. [19] proposed an ensemble of pre-trained models based on a majority voting strategy; trained and evaluated on a CT dataset containing 349 COVID-19-positive and 397 negative cases, it achieved an accuracy of 85%. Mostafiz et al. [20] selected the best features from a mixture of features using minimum redundancy maximum relevance and recursive feature elimination, and then classified the chest X-rays with a random-forest-based bagging method, achieving an overall accuracy above 98.5%. Nasiri et al. [21] extracted features from the X-ray images with DenseNet-169 and used them as inputs to an XGBoost classifier, achieving accuracies of 98.23% for the two-class task and 89.70% for the three-class task. Aslan et al. [22] used features extracted by a CNN model, applied Bayesian optimization to tune the hyperparameters of machine learning classifiers, and used ANN-based image segmentation; on a COVID-19 X-ray dataset, this approach achieved an accuracy of 96.29% and an F1 score of 94.53%. In another work, Jangam et al. [23] combined VGG-16 with DenseNet-169 to construct a stacking ensemble model for COVID-19 detection from CT or chest X-ray images. Their evaluation showed that this hybrid approach performed best in SARS-CoV-2 identification, achieving an accuracy of 91.5% and a sensitivity of 95.5%.

In this study, we propose a novel approach that integrates deep learning with transfer learning. Specifically, three pre-trained models, DenseNet-121, ResNet-50, and VGG-19, are fine-tuned by adding task-specific classification layers. The performance of each model is evaluated using accuracy, precision, recall, and F1 score on binary, three-class, and four-class classification tasks. Furthermore, a multi-head attention mechanism is introduced to fuse the feature representations from these models, yielding an ensemble framework that improves both predictive performance and stability across all evaluation metrics.

The experiments are conducted on three distinct datasets from the public Kaggle repository. The study is structured around three classification tasks: a binary classification distinguishing normal and pneumonia cases; a three-class classification that extends the binary task by adding COVID-19 as a separate category; and a four-class classification that further adds lung opacity (non-COVID lung infection) as a category. The principal contributions of this work are summarized as follows:

- We propose an ensemble model leveraging transfer learning based on DenseNet-121, ResNet-50, and VGG-19 to address classification tasks across varied datasets.
- A more extensive and balanced dataset is used to undertake more challenging multi-class classification tasks, leading to more stable and reliable model performance.

The remainder of this paper is organized as follows. Section 2 describes the datasets, the pre-trained models, and the proposed ensemble method. Section 3 presents the experimental results. Finally, Section 4 and Section 5 provide the discussion and conclusions.

2. Materials and Methods

This section outlines the data preprocessing procedures used in our study, presents the pre-trained deep learning models and the transfer learning framework, and describes the ensemble learning method built on them. Finally, we describe the evaluation metrics used to assess the proposed models.

2.1. Description of the Dataset

Three chest radiograph datasets are used with the deep learning models proposed in this paper: a binary (two-class) dataset [24], a three-class dataset [25], and a four-class dataset [26]. The images in dataset [24] were selected from retrospective cohorts of pediatric patients aged one to five years at Guangzhou Women and Children's Medical Center, Guangzhou. Dataset [25] was compiled from dataset [24] and various publicly available resources published on the Kaggle website. Dataset [26] was created by researchers from Qatar University in Doha, Qatar, and the University of Dhaka in Bangladesh, in collaboration with doctors and partners from Pakistan and Malaysia. Dataset [24] contains two classes (normal and pneumonia), and dataset [25] contains normal chest images, viral pneumonia, and COVID-19. Finally, dataset [26] is the most diverse, containing normal chest images, viral pneumonia, lung opacity images (non-COVID lung infection), and COVID-19. In total, the three publicly available datasets comprised 13,949 normal images, 4308 COVID-19 images, 6473 viral pneumonia images, and 6012 lung opacity images (non-COVID lung infection) prior to data analysis.

Figure 1 provides examples.

Because the chest radiographs have varying resolutions, all images were resized to 224 × 224 pixels before training and augmentation, ensuring a consistent input size compatible with the pre-trained models. Paths to the training and test sets were specified, and the data loading function loaded the images of all categories in the training set and counted the total number of training images. Stratified sampling was used when splitting the data so that the proportion of each class in the training, validation, and test sets matches that of the overall dataset, preserving minority-class representation even in smaller subsets.
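The stratified split described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' code: the function name `stratified_split` and the 70/15/15 split fractions are hypothetical, since the paper does not state its split ratios.

```python
import numpy as np

def stratified_split(labels, fractions=(0.7, 0.15, 0.15), seed=0):
    """Hypothetical helper: split sample indices into train/val/test so that
    each subset keeps (approximately) the class proportions of the whole set."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    splits = [[], [], []]
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)       # indices of this class only
        rng.shuffle(idx)
        n = len(idx)
        n_train = int(round(fractions[0] * n))
        n_val = int(round(fractions[1] * n))
        splits[0].extend(idx[:n_train])
        splits[1].extend(idx[n_train:n_train + n_val])
        splits[2].extend(idx[n_train + n_val:])   # remainder goes to test
    return tuple(np.sort(np.array(s, dtype=int)) for s in splits)

labels = np.array([0] * 80 + [1] * 20)            # made-up imbalanced labels (20 % minority)
train, val, test = stratified_split(labels)
print(len(train), len(val), len(test))
print(labels[train].mean(), labels[val].mean(), labels[test].mean())
```

Because each class is split separately, the minority class keeps its 20% share in every subset, including the small validation and test sets.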

Data augmentation is an important part of the training pipeline, because deep learning models generally require large amounts of data to perform well. An image data generator was created to implement data augmentation, using the following techniques:

- Rescaling and zooming: pixel values are normalized to [0, 1] (i.e., multiplied by 1.0/255), and a random zoom rescales the image within a ±10% range.
- Random horizontal flipping: images are randomly mirrored about the vertical axis to simulate lateral pose variations; vertical flipping is avoided to preserve the anatomical orientation of the thoracic cavity.
- Random rotation: images are randomly rotated by an angle within [−15°, 15°].
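A dependency-free NumPy sketch of the augmentation steps listed above follows. It is an illustration under stated assumptions: grayscale input, nearest-neighbour resampling, and zero fill outside the image are our choices, and `augment` is a hypothetical helper. In Keras, a roughly comparable configuration would be `ImageDataGenerator(rescale=1./255, zoom_range=0.1, horizontal_flip=True, rotation_range=15)`; that this matches the authors' exact settings is an assumption.

```python
import numpy as np

def augment(image, rng, zoom=0.10, max_angle_deg=15.0):
    """Hypothetical helper: rescale to [0, 1], then apply a random zoom,
    horizontal flip and rotation using nearest-neighbour inverse mapping.
    Output pixels that map outside the source image are filled with 0."""
    img = image.astype(np.float64) / 255.0                 # rescale to [0, 1]
    h, w = img.shape
    scale = rng.uniform(1.0 - zoom, 1.0 + zoom)            # +/-10 % zoom by default
    theta = np.deg2rad(rng.uniform(-max_angle_deg, max_angle_deg))
    if rng.random() < 0.5:                                 # left-right mirror only,
        img = img[:, ::-1]                                 # never upside-down
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    yy, xx = np.mgrid[0:h, 0:w]
    # For every output pixel, find its source location in the input image:
    # undo the zoom, then rotate about the image centre.
    y0, x0 = (yy - cy) / scale, (xx - cx) / scale
    cos_t, sin_t = np.cos(theta), np.sin(theta)
    sy = np.rint(cos_t * y0 - sin_t * x0 + cy).astype(int)
    sx = np.rint(sin_t * y0 + cos_t * x0 + cx).astype(int)
    valid = (sy >= 0) & (sy < h) & (sx >= 0) & (sx < w)
    out = np.zeros_like(img)
    out[valid] = img[sy[valid], sx[valid]]
    return out

rng = np.random.default_rng(42)
xray = rng.integers(0, 256, size=(224, 224)).astype(np.uint8)  # stand-in for a resized CXR
aug = augment(xray, rng)
print(aug.shape, float(aug.min()), float(aug.max()))
```

Setting `zoom=0.0` and `max_angle_deg=0.0` reduces the transform to rescaling plus an optional mirror, which is a convenient sanity check.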

By combining these preprocessing and augmentation techniques, we enable our model to adapt to variations in image quality, size, and perspective, which improves the accuracy of pneumonia detection.

2.2. Pre-Trained Deep Learning Model

In this study, the selected pre-trained backbone models are VGG-19, ResNet-50, and DenseNet-121. Owing to pre-training on the ImageNet dataset, these backbones have strong representational capabilities for low-level visual features such as edges and spatial structure. All three models are available in the TensorFlow and Keras frameworks. Here they serve as base models, each of which is fine-tuned to the characteristics of the different target datasets.

VGG-19 [27]: VGG-19 adopts a stacked structure of consecutive 3 × 3 convolution layers: the first two convolution blocks each contain two convolution layers, and the subsequent three blocks each contain four convolution layers, with 2 × 2 max pooling following each block. After the last convolution block, three fully connected (FC) layers and a softmax output complete the architecture.
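As a quick check on the VGG-19 description, the layer arithmetic can be written out. The sketch assumes a 224 × 224 input; it counts the 16 convolution layers plus 3 FC layers that give the network its name, and tracks how five 2 × 2 poolings shrink the spatial size.

```python
VGG19_CONV_BLOCKS = [2, 2, 4, 4, 4]          # conv layers per block
VGG19_FILTERS = [64, 128, 256, 512, 512]     # output channels per block
NUM_FC = 3                                   # fully connected layers at the top

def vgg19_summary(input_size=224):
    conv_layers = sum(VGG19_CONV_BLOCKS)
    size = input_size
    for _ in VGG19_CONV_BLOCKS:
        size //= 2                           # each 2x2 max pool (stride 2) halves H and W
    return conv_layers, conv_layers + NUM_FC, (size, size, VGG19_FILTERS[-1])

print(vgg19_summary())  # (16, 19, (7, 7, 512))
```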

DenseNet [28]: This architecture employs dense connections, in which each layer receives the concatenated feature maps of all preceding layers within the same dense block. Transition layers between blocks use 1 × 1 convolutions followed by pooling to reduce feature dimensions. A global average pooling layer is typically applied after the final dense block, before the softmax classifier, which removes the need for large fully connected layers while maintaining classification accuracy.
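The effect of dense connectivity on the channel count can be traced with simple arithmetic. The sketch assumes the standard DenseNet-121 configuration (dense blocks of 6, 12, 24, and 16 layers, growth rate 32, 64 stem channels, and a transition compression factor of 0.5); these values come from the original DenseNet design rather than from this paper.

```python
def densenet_channels(block_layers=(6, 12, 24, 16), growth_rate=32,
                      init_channels=64, compression=0.5):
    """Channel count at the end of each dense block.

    Inside a block every layer sees the concatenation of all earlier outputs,
    so each layer adds `growth_rate` channels; transition layers (1x1 conv +
    pooling) then shrink the channel count by `compression`.
    """
    channels = init_channels
    history = []
    for i, n_layers in enumerate(block_layers):
        channels += n_layers * growth_rate          # concatenation grows the channels
        history.append(channels)
        if i < len(block_layers) - 1:               # no transition after the last block
            channels = int(channels * compression)
    return history

print(densenet_channels())  # [256, 512, 1024, 1024]

# Each dense layer = 1x1 bottleneck conv + 3x3 conv; add the stem conv,
# 3 transition convs and the final classifier layer.
depth = 2 * sum((6, 12, 24, 16)) + 1 + 3 + 1
print(depth)  # 121
```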

ResNet [29]: This milestone model, proposed by He et al. in 2015, addresses the difficulty of training very deep networks, including the vanishing gradient problem, through residual learning. Its core component is the residual block: the input bypasses the convolution layers through a shortcut connection and is added directly to their output, so the network learns residual functions (the "difference" from the input) rather than direct mappings, which stabilizes the training of networks with more than a thousand layers. The ResNet-50 model was used in this study.
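A minimal NumPy sketch of a residual (bottleneck) block follows. It is a simplification rather than ResNet-50 itself: dense matrices stand in for the 1 × 1 and 3 × 3 convolutions, batch normalization is omitted, and the widths (256 and 64) are illustrative. It shows why the shortcut makes identity mappings easy to learn: if the residual branch outputs zero, the block passes its input through unchanged.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def bottleneck_block(x, w_reduce, w_mid, w_expand):
    """Simplified bottleneck on feature vectors: y = ReLU(F(x) + x)."""
    h = relu(x @ w_reduce)      # stands in for the 1x1 "reduce" conv
    h = relu(h @ w_mid)         # stands in for the 3x3 conv
    f = h @ w_expand            # stands in for the 1x1 "expand" conv
    return relu(f + x)          # identity shortcut added before the final ReLU

rng = np.random.default_rng(0)
d, b = 256, 64                                  # illustrative input and bottleneck widths
x = relu(rng.normal(size=(4, d)))               # non-negative, like a post-ReLU activation
ws = [rng.normal(scale=0.01, size=s) for s in [(d, b), (b, b), (b, d)]]
y = bottleneck_block(x, *ws)

# With an all-zero residual branch the block is exactly the identity.
zeros = [np.zeros_like(w) for w in ws]
print(np.allclose(bottleneck_block(x, *zeros), x))  # True

# ResNet-50 depth: (3 + 4 + 6 + 3) bottlenecks x 3 convs + stem conv + FC layer.
resnet50_depth = (3 + 4 + 6 + 3) * 3 + 1 + 1
print(resnet50_depth)  # 50
```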

2.3. Transfer Learning

Transfer learning is a machine learning technique that takes models pre-trained on one problem and adapts them to new tasks through fine-tuning. It can reduce training time and improve generalization. Taking CNNs as an example, training a model from scratch usually requires large labeled datasets and substantial computing resources, whereas transfer learning from pre-trained weights accelerates convergence and improves performance, especially when data are limited. A typical example is a benchmark model for the ImageNet image recognition task, which is pre-trained on over a million images. In the initial stage of the feature extraction experiment, we removed the network head (the final classification layers) of each pre-trained model, which was originally trained on the ImageNet dataset. This step is necessary because the pre-trained models are optimized for a different classification task: removing the classifier head discards the weights and biases associated with the original class scores, and the removed portion is replaced with newly initialized layers suited to the target task. For VGG-19, the main architectural modifications are as follows: the average pooling size is set to 4 × 4, the fully connected layers have 512 and 256 units, and the dropout rate is set to 0.5, as shown in Figure 2. The final layer is a classification head whose number of outputs matches the classes of each dataset, up to the four categories of normal, COVID-19, viral pneumonia, and lung opacity. The other two models are fine-tuned in much the same way as VGG-19.
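The modified VGG-19 head can be sketched as a NumPy forward pass. Several details are assumptions, not taken from the paper: the backbone output is taken to be the standard 7 × 7 × 512 VGG-19 feature map for a 224 × 224 input; the 4 × 4 average pooling uses stride 4 with "valid" padding (the Keras `AveragePooling2D` default), which gives a 1 × 1 × 512 output; and dropout is placed after the 256-unit layer. The weights are random placeholders, so only the shapes are meaningful.

```python
import numpy as np

def avg_pool(fmap, k):
    """Non-overlapping k x k average pooling (stride k, 'valid' padding)."""
    h, w, c = fmap.shape
    ho, wo = h // k, w // k
    trimmed = fmap[:ho * k, :wo * k, :]                   # drop rows/cols that do not fit
    return trimmed.reshape(ho, k, wo, k, c).mean(axis=(1, 3))

def softmax(z):
    e = np.exp(z - z.max())                               # shift for numerical stability
    return e / e.sum()

def head_forward(fmap, params, training=False, drop=0.5, rng=None):
    """Hypothetical head: 4x4 avg pool -> FC 512 -> FC 256 -> dropout -> softmax."""
    x = avg_pool(fmap, 4).reshape(-1)                     # pool and flatten
    x = np.maximum(x @ params["W1"] + params["b1"], 0.0)  # Dense(512) + ReLU
    x = np.maximum(x @ params["W2"] + params["b2"], 0.0)  # Dense(256) + ReLU
    if training:                                          # inverted dropout, rate 0.5
        keep = rng.random(x.shape) >= drop
        x = x * keep / (1.0 - drop)
    return softmax(x @ params["W3"] + params["b3"])       # one probability per class

rng = np.random.default_rng(0)
fmap = rng.random((7, 7, 512))       # assumed VGG-19 conv output for a 224x224 input
n_classes = 4                        # e.g. normal, COVID-19, viral pneumonia, lung opacity
dims = [512, 512, 256, n_classes]
params = {}
for i in range(3):
    params[f"W{i + 1}"] = rng.normal(scale=0.05, size=(dims[i], dims[i + 1]))
    params[f"b{i + 1}"] = np.zeros(dims[i + 1])
probs = head_forward(fmap, params)
print(probs.shape, float(probs.sum()))
```

Only `n_classes` changes between the two-, three-, and four-class tasks; the rest of the head is shared.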

2.4. Multi-Head Attention Mechanism

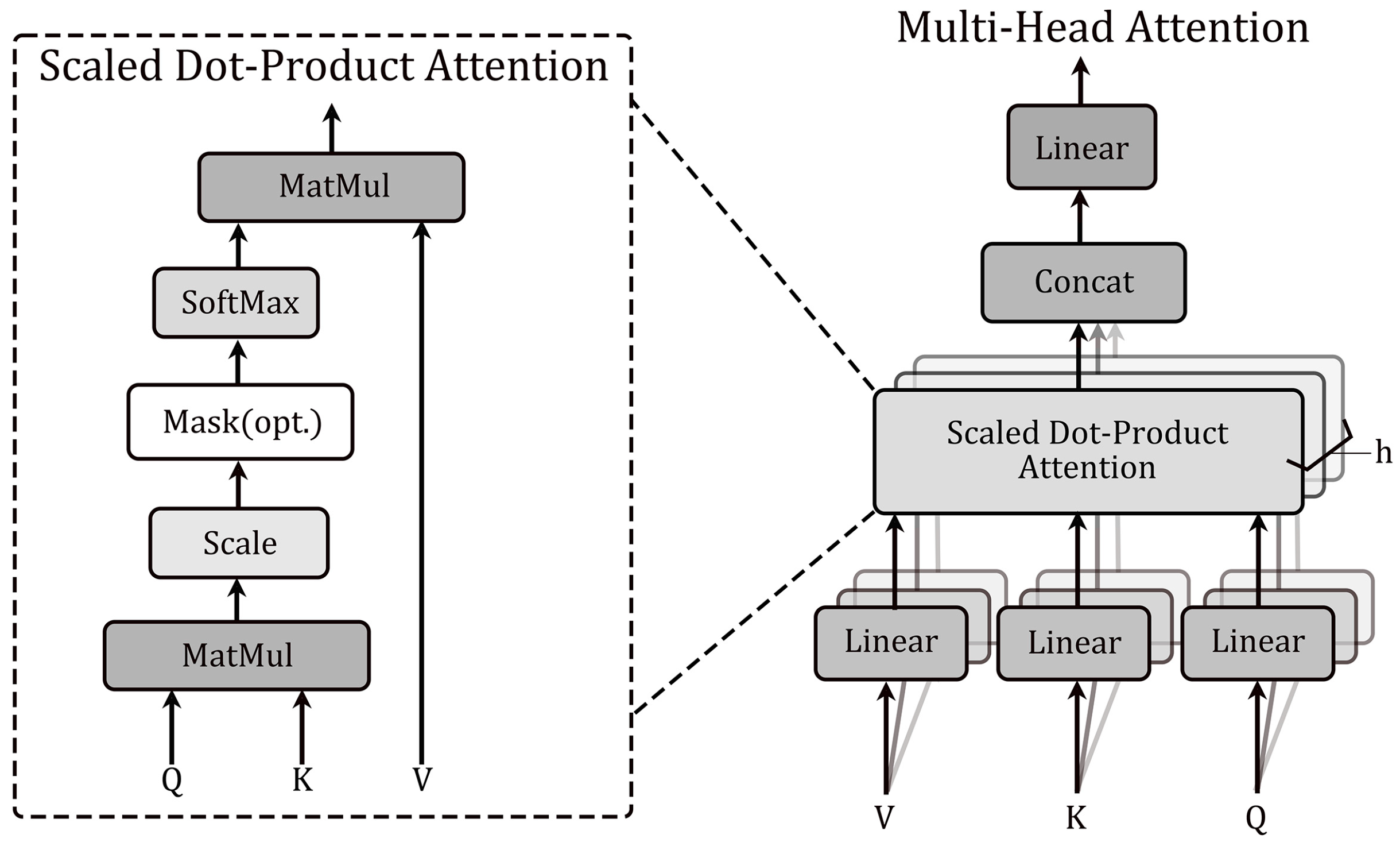

The attention mechanism is a central concept in deep learning that mimics human visual attention and forms a core component of the Transformer architecture. In essence, it computes a weighted sum of the "Values", where the weights are derived from the similarity between each "Query" and the "Keys". In the self-attention layer, the attention function is implemented as scaled dot-product attention, whose inputs are queries and keys of dimension $d_k$ and values of dimension $d_v$. The similarity between "Query" and "Key" vectors is computed as a dot product and divided by $\sqrt{d_k}$, and a softmax is applied to obtain the weight of each value. The self-attention output is therefore

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V$$
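Scaled dot-product attention can be written directly in NumPy. This is a generic sketch of the standard formula, with random matrices standing in for learned queries, keys, and values.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """Attention(Q, K, V) = softmax(Q K^T / sqrt(d_k)) V."""
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)           # (n_queries, n_keys) similarities
    weights = softmax(scores, axis=-1)        # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
Q = rng.normal(size=(3, 8))    # 3 queries, d_k = 8
K = rng.normal(size=(5, 8))    # 5 keys
V = rng.normal(size=(5, 4))    # 5 values, d_v = 4
out, w = scaled_dot_product_attention(Q, K, V)
print(out.shape, np.allclose(w.sum(axis=1), 1.0))  # (3, 4) True
```

If all keys are identical, every value receives the same weight and the output is simply the mean of the values, which illustrates how the softmax turns similarities into a weighting.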

Multi-head attention extends self-attention by computing several attention heads in parallel, each on a different learned projection of the input, which enables the model to learn diverse features from different representation subspaces and to capture multiple types of dependencies. With multiple heads, the model can flexibly capture both local and global dependencies in the input sequence, improving its performance and representational ability. Because each head operates on a lower-dimensional projection of the input, the total computational cost is similar to that of single-head attention with full dimensionality, while the model gains the ability to attend to relevant features in several subspaces at once, which improves its perceptual ability.

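Figure 3 shows the structure of scaled dot-product attention and multi-head attention, which are computed as follows:

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\,W^{O}$$

$$\mathrm{head}_i = \mathrm{Attention}(QW_i^{Q}, KW_i^{K}, VW_i^{V})$$

where $h$ is the number of heads, $W_i^{Q}$, $W_i^{K}$, and $W_i^{V}$ are the learned projection matrices of head $i$, and $W^{O}$ is the output projection.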
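A NumPy sketch of multi-head self-attention follows, again with random placeholder weights. For simplicity, each head uses a slice of full-width projection matrices, which is equivalent to separate per-head projection matrices of size d_model × d_model/h; the function name and sizes are illustrative.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_self_attention(X, Wq, Wk, Wv, Wo, num_heads):
    """Concat(head_1, ..., head_h) W^O with Q = K = V = X (self-attention).

    Wq, Wk, Wv, Wo are (d_model, d_model). Head i uses columns
    [i*d_head, (i+1)*d_head) of each projection, so the projection parameter
    count (4 * d_model**2) does not depend on the number of heads.
    """
    n, d_model = X.shape
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    d_head = d_model // num_heads
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    heads = []
    for i in range(num_heads):
        s = slice(i * d_head, (i + 1) * d_head)           # this head's subspace
        scores = Q[:, s] @ K[:, s].T / np.sqrt(d_head)
        heads.append(softmax(scores) @ V[:, s])
    return np.concatenate(heads, axis=-1) @ Wo            # (n, d_model)

rng = np.random.default_rng(0)
n_tokens, d_model, h = 6, 32, 4
X = rng.normal(size=(n_tokens, d_model))
Wq, Wk, Wv, Wo = (rng.normal(scale=d_model ** -0.5, size=(d_model, d_model)) for _ in range(4))
Y = multi_head_self_attention(X, Wq, Wk, Wv, Wo, num_heads=h)
print(Y.shape)  # (6, 32)
```

With `num_heads=1`, the function reduces to the single-head formula followed by the output projection.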

2.5. Multi-Model Ensemble Learning

Deep neural networks are highly flexible nonlinear models, which makes them prone to overfitting when trained on small or sparse datasets. They are trained with stochastic algorithms, so each training run produces somewhat different weights and therefore different predictions; that is, they have high variance. To reduce this variance, ensemble learning combines two, three, or more different deep neural network models and uses them jointly to predict the final result. In pneumonia recognition from medical images, most existing studies focus on improving the performance of a single deep convolutional architecture, and multi-model collaborative frameworks designed specifically for pneumonia recognition remain comparatively underexplored. In this study, we propose an ensemble learning approach based on a multi-head attention mechanism that analyzes datasets from multiple sources, fuses features from several pre-trained models, and constructs a hierarchical feature fusion mechanism to improve feature extraction, classification robustness, and overall performance.
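The variance argument can be illustrated with a toy simulation. This sketch assumes, purely for illustration, that each independently trained model outputs a fixed value plus independent Gaussian noise; real models' errors are correlated, so the reduction is smaller in practice. Simple averaging is the generic prediction-level ensemble, not the feature-fusion method proposed here.

```python
import numpy as np

rng = np.random.default_rng(0)
true_prob = 0.7              # hypothetical "ideal" output for one test image
n_runs, n_models = 10_000, 3

# Each training run of a model = ideal output + run-to-run noise (assumed independent).
single = true_prob + rng.normal(0.0, 0.1, size=n_runs)
members = true_prob + rng.normal(0.0, 0.1, size=(n_runs, n_models))
ensemble = members.mean(axis=1)          # average the three models' outputs

print(round(single.var(), 4), round(ensemble.var(), 4))
# With independent errors, the averaged output's variance is about 1/3 of a single model's.
```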

In the ensemble model, the workflow begins with data preprocessing, followed by fine-tuning each of the three pre-trained models. The features from the final convolutional layers of each model are then extracted, and the feature vectors from the three models are concatenated to form a new feature sequence. Next, a first multi-head attention layer applies self-attention with multiple heads to extract important information from the combined features. Residual connections add the attention outputs to the input features, which mitigates vanishing gradients and helps preserve information during training. Layer normalization and dropout are then applied to stabilize training and reduce overfitting. The output is subsequently flattened and reshaped to serve as input to a second multi-head attention layer, where the same sequence of operations is repeated. Finally, the resulting representation is passed through a dense (fully connected) layer with softmax activation to produce the final classification, as shown in Figure 4.
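The fusion pipeline described above can be sketched end to end in NumPy, with random weights standing in for trained ones. The paper does not specify how feature vectors of different widths (1024 for DenseNet-121, 2048 for ResNet-50, and 512 for VGG-19 after global pooling) become a sequence; here we assume, hypothetically, that each is linearly projected to a common width `d_model` and the three projections are stacked as a three-token sequence. The head count, `d_model`, and the omission of dropout at inference time are also assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

def softmax(z, axis=-1):
    e = np.exp(z - z.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def mha(X, W, num_heads):
    """Multi-head self-attention; W maps 'q', 'k', 'v', 'o' to (d, d) matrices."""
    d = X.shape[1]
    dh = d // num_heads
    Q, K, V = X @ W["q"], X @ W["k"], X @ W["v"]
    heads = []
    for i in range(num_heads):
        s = slice(i * dh, (i + 1) * dh)
        heads.append(softmax(Q[:, s] @ K[:, s].T / np.sqrt(dh)) @ V[:, s])
    return np.concatenate(heads, axis=-1) @ W["o"]

def layer_norm(X, eps=1e-6):
    mu = X.mean(axis=-1, keepdims=True)
    return (X - mu) / np.sqrt(X.var(axis=-1, keepdims=True) + eps)

def attention_block(X, W, num_heads):
    # Residual connection, then layer normalization. Dropout is omitted
    # because this sketch only runs inference.
    return layer_norm(X + mha(X, W, num_heads))

def init_mha(d):
    return {k: rng.normal(scale=d ** -0.5, size=(d, d)) for k in "qkvo"}

# Hypothetical globally pooled features of one image from each fine-tuned backbone.
feats = {"densenet121": rng.random(1024), "resnet50": rng.random(2048), "vgg19": rng.random(512)}
d_model, num_heads, n_classes = 128, 4, 4

# Assumption: project each vector to d_model and stack them as a 3-token sequence.
proj = {name: rng.normal(scale=v.size ** -0.5, size=(v.size, d_model)) for name, v in feats.items()}
seq = np.stack([feats[name] @ proj[name] for name in feats])   # (3, d_model)

h = attention_block(seq, init_mha(d_model), num_heads)   # first attention layer
h = h.reshape(-1).reshape(seq.shape)   # flatten + reshape (shape-preserving under our assumption)
h = attention_block(h, init_mha(d_model), num_heads)     # second attention layer
W_out = rng.normal(scale=0.05, size=(h.size, n_classes))
probs = softmax(h.reshape(-1) @ W_out)                   # dense layer + softmax
print(seq.shape, probs.shape, float(probs.sum()))
```

Treating each backbone as one token lets the attention weights express how much each architecture's features should inform the others, which is the balancing role the text assigns to the attention layers.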

2.6. Performance Evaluation Metrics

A range of performance evaluation metrics is used to assess the proposed model, including accuracy, precision, recall, and F1 score, defined as follows:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

$$\mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

In the equations above, TP stands for true positives (cases that are truly positive and correctly predicted as positive by the model), TN stands for true negatives (cases that are truly negative and correctly predicted as negative), FP stands for false positives (cases that are actually negative but mistakenly predicted as positive), and FN stands for false negatives (cases that are actually positive but mistakenly predicted as negative). They form the basis for metrics such as accuracy, recall, specificity, and precision, which help assess overall correctness, the model's ability to detect positive cases, and its tendency to produce false alarms.
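The metric definitions translate directly into code. For the three- and four-class tasks, this sketch assumes one-vs-rest counts per class and an unweighted (macro) average of precision, recall, and F1; the paper does not state which averaging it used, so treat this as an assumption.

```python
import numpy as np

def binary_metrics(tp, tn, fp, fn):
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1

def macro_metrics(cm):
    """Multi-class metrics from a confusion matrix (rows = true, cols = predicted):
    overall accuracy plus macro-averaged one-vs-rest precision, recall and F1."""
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    per_class = []
    for k in range(cm.shape[0]):
        tp = cm[k, k]
        fp = cm[:, k].sum() - tp      # predicted k but actually another class
        fn = cm[k, :].sum() - tp      # actually k but predicted another class
        tn = total - tp - fp - fn
        per_class.append(binary_metrics(tp, tn, fp, fn))
    per_class = np.array(per_class)
    accuracy = np.trace(cm) / total   # fraction of all images classified correctly
    return accuracy, *per_class[:, 1:].mean(axis=0)

print(binary_metrics(tp=8, tn=5, fp=2, fn=1))  # approx. (0.8125, 0.8, 0.889, 0.842)
print(macro_metrics([[5, 1, 0], [2, 6, 2], [0, 1, 3]]))
```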

4. Discussion

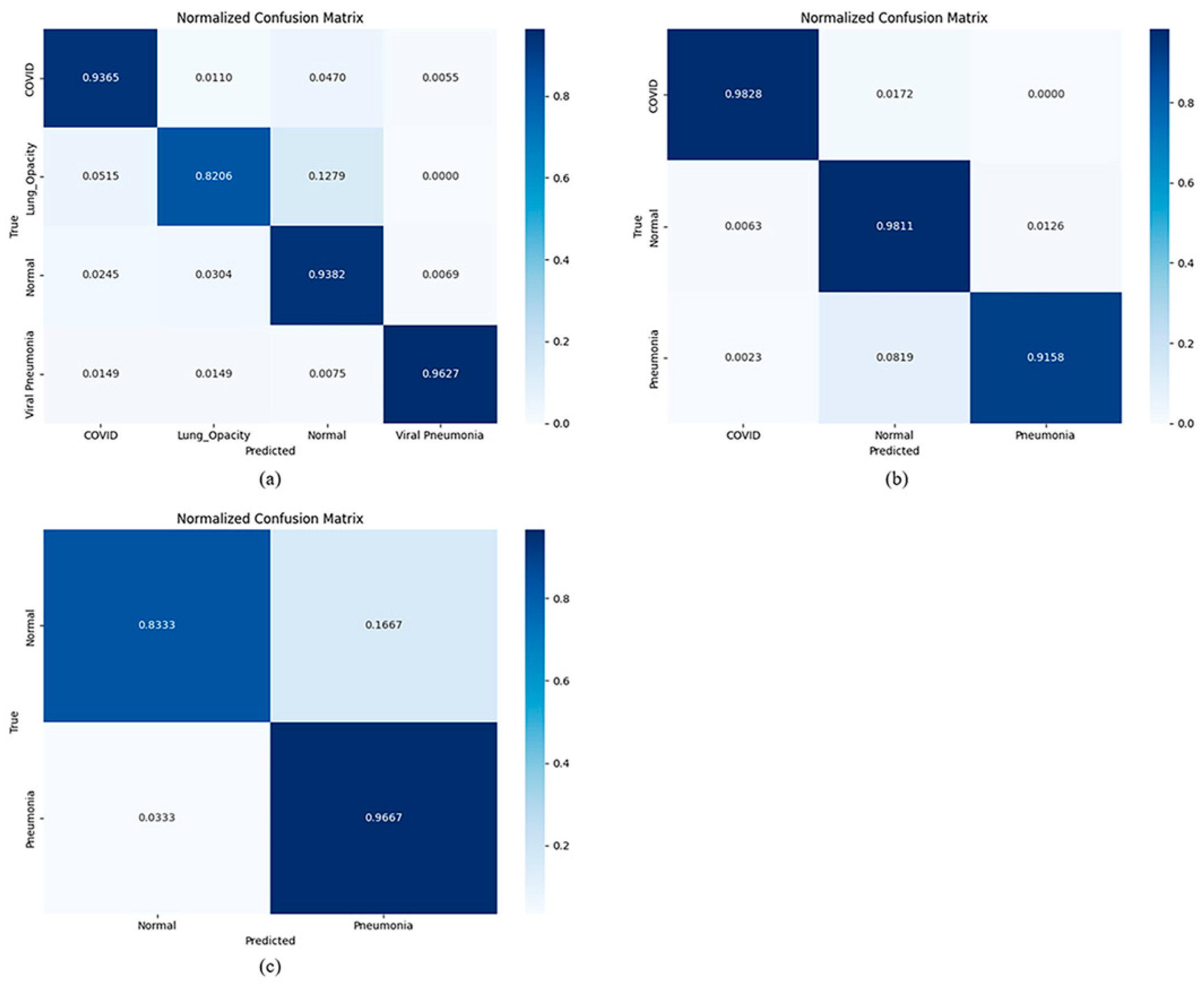

We compared our work with recent literature; the results are presented in Table 5, where the numbers in the first column indicate the two-class, three-class, and four-class classification tasks. The results show that the proposed ensemble model performs well across different types of datasets, achieving good results on multiple large, balanced datasets, which suggests that the proposed model is of practical value.

The ensemble model in this study is built on a multi-head attention mechanism. Because it integrates three pre-trained networks, users can flexibly combine prediction models according to the quality of the datasets or of the pre-trained models. Extracting deep features from multiple trained models helps preserve the distinct, complementary information learned by each model during integration. Comparisons among Table 1, Table 2, and Table 3 indicate that the ensemble model generally outperforms the individual deep CNN architectures, with higher accuracy, recall, and robustness, and that it also generalizes well. Ensemble modeling combines three pre-trained deep CNNs, enabling the meta-learner to base its predictions on the distinct features learned by the base models. Unlike traditional ensemble learning models, which aggregate the final predictions of the base models to produce ensemble forecasts, deep ensemble learning models use the deep feature vectors or feature maps of trained models to train shallow or deep meta-learners. In future work, we plan to augment the dataset with additional data resources and to explore other deep learning models, comparing their performance with the models used in this study.
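The contrast between prediction-level and feature-level ensembles can be made concrete. In this sketch the softmax outputs and feature vectors are made-up placeholders. Majority voting and soft voting combine only the final predictions, whereas feature-level fusion passes the concatenated deep features (1024 + 2048 + 512 = 3584 dimensions, assuming globally pooled backbone outputs) to a meta-learner.

```python
import numpy as np

rng = np.random.default_rng(0)
n_classes = 4

# Hypothetical softmax outputs of the three fine-tuned models for one image.
p = {"densenet121": np.array([0.6, 0.2, 0.1, 0.1]),
     "resnet50":    np.array([0.3, 0.5, 0.1, 0.1]),
     "vgg19":       np.array([0.5, 0.3, 0.1, 0.1])}

# (a) Prediction-level ensembles: only the final outputs are combined.
hard_votes = np.bincount([int(v.argmax()) for v in p.values()], minlength=n_classes)
majority_class = int(hard_votes.argmax())                       # majority voting
soft_class = int(np.mean(list(p.values()), axis=0).argmax())    # soft voting

# (b) Feature-level ensemble: the meta-learner sees the deep features themselves.
feats = [rng.random(1024), rng.random(2048), rng.random(512)]   # placeholder pooled features
fused = np.concatenate(feats)                                   # input to the meta-learner
print(majority_class, soft_class, fused.shape)  # 0 0 (3584,)
```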

Typically, multi-head attention mechanisms are applied within a single model (e.g., in Vision Transformers) to capture complex relationships and patterns among features in different regions of the same X-ray image. In our research, the multi-head attention mechanism is instead employed as a meta-classifier within the ensemble framework. Its input is not the raw pixels or local features of the original image, but the high-level abstract features extracted by the pre-trained models (DenseNet-121, VGG-19, and ResNet-50). Its core task is to dynamically learn and balance the importance of, and relationships among, these heterogeneous features from different architectures. In addition, residual connections link the attention outputs to the inputs and are combined with layer normalization and dropout, which facilitates stable training and preserves the original feature information. This design enables the attention module to refine the fused features without losing their original discriminative power.

5. Conclusions

As detailed in Table 5, we evaluate our method alongside the latest top-performing techniques. However, caution must be exercised when interpreting these cross-study comparisons. A significant limitation is that the cited studies used datasets that differ in scale, class distribution, and image sources.

These variations limit the validity of direct comparisons of accuracy metrics. For instance, a model trained on a small, balanced dataset may report high accuracy that fails to generalize to more challenging, large-scale datasets closer to real-world distributions. This reflects an unresolved issue in current deep learning research: no unified, large-scale public benchmark dataset is currently available, and the objectives of different studies are not entirely consistent. Therefore, the comparisons in Table 5 should be viewed as indicative trends that situate our work within a broader academic context rather than as evidence of absolute superiority. We strongly recommend that future research be conducted on unified public benchmark datasets to enable fairer and more meaningful comparisons.

Predicting COVID-19 from chest X-rays can enable faster detection of the virus and support timely intervention. In this study, we used transfer learning to train, validate, and test three widely used deep learning models, DenseNet-121, VGG-19, and ResNet-50, as pre-trained models for classifying chest X-ray (CXR) images of pneumonia. The results showed that the DenseNet-121 pre-trained model achieved the best performance of the three. Additionally, this study used pneumonia datasets collected from three different sources, comprising in total 13,949 normal images, 6012 lung opacity images, 6473 viral pneumonia images, and 4308 COVID-19 chest X-ray images. Furthermore, the proposed multi-head attention-based ensemble model performed consistently across the different datasets and achieved strong results. These results can assist medical experts in the early identification of pneumonia types from chest X-rays, thereby supporting faster clinical decision-making. Future work will focus on expanding the dataset as more open-source data become available and on incorporating chest X-ray images of other thoracic diseases.