Abstract

Three-dimensional medical images, such as those obtained from MRI scans, offer a comprehensive view that aids in understanding complex shapes and abnormalities better than 2D images, such as X-ray, mammogram, ultrasound, and 2D CT slices. However, MRI machines are often inaccessible in certain regions due to their high cost, space and infrastructure requirements, a lack of skilled technicians, and safety concerns regarding metal implants. A viable alternative is generating 3D images from 2D scans, which can enhance medical analysis and diagnosis and also offer earlier detection of tumors and other abnormalities. This systematic review is focused on Generative Adversarial Networks (GANs) for 3D medical image analysis over the last three years, due to their dominant role in 3D medical imaging, offering unparalleled flexibility and adaptability for volumetric medical data, as compared to other generative models. GANs offer a promising solution by generating high-quality synthetic medical images, even with limited data, improving disease detection and classification. The existing surveys do not offer an up-to-date overview of the use of GANs in 3D medical imaging. This systematic review focuses on advancements in GAN technology for 3D medical imaging, analyzing studies, particularly from the recent years 2022–2025, and exploring applications, datasets, methods, algorithms, challenges, and outcomes. It affords particular focus to the modern GAN architectures, datasets, and codes that can be used for 3D medical imaging tasks, so readers looking to use GANs in their research could use this review to help them design their study. Based on PRISMA standards, five scientific databases were searched, including IEEE, Scopus, PubMed, Google Scholar, and Science Direct. A total of 1530 papers were retrieved on the basis of the inclusion criteria. 
The exclusion criteria were then applied, and after title, abstract, and full-text screening, a total of 56 papers were selected and carefully studied. An overview of the various datasets that are used in 3D medical imaging is also presented. This paper concludes with a discussion of possible future work in this area.

1. Introduction

The significant increase in medical imaging procedures and advancements in computational capabilities have driven the rapid evolution of artificial intelligence (AI) in medical imaging. AI plays a critical role across all types of medical imaging, regardless of the imaging technique or the organs being examined. AI has the potential to be integrated into every stage of diagnostic imaging, including data acquisition, reconstruction, analysis, and reporting [1]. It significantly influences all aspects of the daily workflow of radiologists, who are increasingly burdened by rising workloads, inevitably leading to errors due to intense pressure [2]. The advancement of AI in this context is motivated by a desire to improve the effectiveness and efficiency of clinical practices. AI has emerged as a robust tool in image analysis, increasingly adopted by radiologists for early disease detection, minimizing diagnostic inaccuracies in preventive healthcare, and supporting the decision-making process [3,4]. By accurately detecting and segmenting tumors and other abnormal formations in organs, the early detection and precise diagnosis of diseases can be achieved, additionally supporting the development of personalized treatment plans for patients [5,6]. Computer-aided diagnosis for medical image analysis includes several key tasks: segmentation (separating the relevant parts of the image from the background), detection (locating and counting specific areas of interest), denoising (removing irrelevant or noisy pixels), reconstruction (converting lower-dimensional data like 2D images into higher-dimensional formats like 3D), and classification (assigning labels to images based on their content). Each of these tasks plays an important role and poses significant challenges in developing automated systems for medical imaging diagnostics [7,8].

A 3D image consists of an image along with its volume component. Viewing the entire volume at once gives a broad perspective, which helps in better understanding shapes and abnormalities that may be unfamiliar, when compared to viewing 2D images only [9]. Working with 3D volumetric medical data is especially challenging because of the complexity [10,11] caused by variations in anatomy, pathology, and imaging techniques. Compared to 2D medical images, 3D images are larger and more complex, making them harder to analyze. As a result, research focused on 3D volumetric data has become increasingly popular [12].

MRI-obtained images are mostly referred to as 3D or volumetric images. However, MRI machines are not accessible in some regions due to a number of reasons, a few of which are listed below:

- Their high cost, which involves the price of equipment, installation costs, and maintenance or operation costs [13].

- Lack of significant infrastructure and space, as MRI machines require a large amount of physical space to be installed [14].

- Limited access in underfunded or rural regions that cannot afford the cost of MRI machines. Another issue is the unavailability of skilled technicians to operate these machines [14].

- Performing an MRI can be dangerous if there are ferromagnetic objects nearby or if a patient has metal implants [15].

- Unavailability of large amounts of datasets due to privacy concerns [16,17].

- Exposure to a high volume of harmful radiation [18].

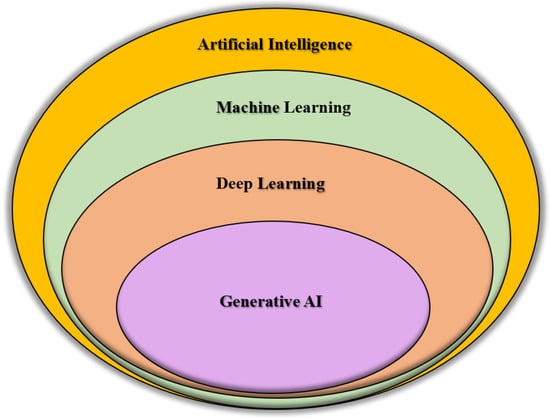

In the above-mentioned scenarios, since the cost of directly producing multimodal images from medical devices is high [19], a practical solution is the generation of 3D images from 2D images, as this will improve the analysis as well as diagnosis in healthcare settings. Ref. [20] suggests that reconstructing 3D images from X-rays is an ideal method for visualizing a patient’s anatomy for clinical analysis. This approach is cost-effective, widely accessible, and minimizes the patient’s exposure to radiation compared to other imaging techniques. The solution lies in the use of Generative AI models; these are a form of machine learning models that create new data based on patterns and structures they learn from existing data/training data. These generative models have enriched the existing medical datasets in recent years by producing realistic synthetic data [21]. Generative AI has had a significant impact across various fields, including image generation, text creation, music composition, drug discovery, and healthcare [22], and is also very effective in the early diagnosis of underlying conditions [23]. Most popular among these are GANs and Transformers. To visualize the hierarchy and relationship between AI, Machine Learning (ML), Deep Learning (DL), and Generative AI, a Venn Diagram is presented in Figure 1. AI represents the broad field of creating intelligent systems, while ML is a subset of AI that enables machines to learn from data. Within ML, DL is a more specific approach involving neural networks with multiple layers. Generative AI falls under DL and ML, focusing on models that can generate new data resembling the training data. In recent years, Generative AI has received significant attention due to the huge amounts of data and the increasing sophistication of the computing technologies [24,25]. 
Figure 1 provides readers with a conceptual hierarchy, giving a clearer understanding of where Generative AI stands in the overall landscape. It establishes a logical progression towards this paper’s main focus: the use of Generative AI in 3D medical imaging.

Figure 1.

Venn diagram visualizing the hierarchical and overlapping relationships between AI, ML, DL, and Generative AI. AI represents the broad field of creating intelligent systems, while ML is a subset of AI that enables machines to learn from data. Within ML, DL is a more specific approach involving neural networks with multiple layers. Generative AI falls under DL and ML, focusing on models that can generate new data resembling the training data.

While Generative AI comprises different models, including diffusion models and transformers, this systematic review focuses on the role of GANs in 3D medical imaging for several reasons, including the following:

- The extensive use, applications, and proven effectiveness of GANs in 3D medical imaging for data generation, super-resolution, denoising, cross-modality translation, segmentation, and reconstruction [26,27,28];

- GANs offer flexible architecture for handling 3D volumes, including multi-resolution generators and discriminators [29];

- GANs offer faster computing speeds, the potential for real-time synthesis when applied in clinical settings [30], and greater efficiency compared to other generative AI models, e.g., transformers and diffusion models [31,32].

The GAN was introduced by Goodfellow and his colleagues [33] in 2014, and since then, it has evolved and transformed medical imaging by generating high-quality images, even when datasets are limited, leading to improved diagnostic accuracy and enhanced image quality. GANs stand out for their ability to learn patterns and data, in order to generate images or to translate images to other modalities [34,35,36,37]. GANs are used for the generation of 3D medical images, covering key techniques like anomaly detection, complex data synthesis, denoising, reconstruction, segmentation, classification, and image translation [38,39,40,41,42,43]. Recently, GANs have been used in 3D generation techniques in medical imaging, such as image reconstruction, synthesis, and high-precision tumor detection, to name a few [44].

While the existing surveys [45,46,47,48,49,50,51] explore the role of GANs in 3D medical imaging, they do not offer an up-to-date overview of the advancements of GANs in 3D medical imaging, as they explore the use of GANs for 3D medical imaging only up to the year 2022. A recent survey [52] covers the latest research but only focuses on image enhancement. Therefore, this review offers the first comprehensive synthesis that is exclusively focused on 3D medical image analysis, specifically focusing on the recent research articles from the years 2022–2025 to provide an up-to-date review of the recent advancements in Generative AI in 3D medical imaging. The distinction is critical as 3D medical imaging analysis introduces unique computational, architectural, and clinical challenges. By systematically categorizing 3D GAN models, focusing on the different applications of GANs, the datasets that are commonly used, any pre-processing methods that are applied, the algorithms, their challenges and the findings, evaluating the model’s performance across volumetric tasks, and identifying limitations and challenges, this review fills a crucial gap in the research, making this paper a valuable addition to the existing body of knowledge. This review provides a roadmap for future research and clinical implementation in this rapidly evolving field, helping to push forward the cutting-edge advancements in 3D medical imaging.

1.1. Generative Models for Synthetic Data Generation—Generative Adversarial Networks (GANs)

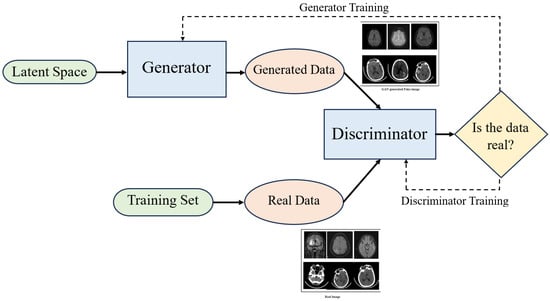

In medical image analysis, DL models are applied across various tasks such as registration, detection, classification, image-to-image translation, segmentation, and video-based applications. Deep learning techniques built on artificial neural networks, such as GANs, have significant implications in radiology. GANs consist of two neural networks: a generator that creates synthetic images resembling real ones and a discriminator that distinguishes between synthetic and real images [46,53,54]. In the context of radiology, the generator model can replicate images consistent with its training data and generate new images with similar features. Meanwhile, the discriminator is trained to classify images as real or synthetic. By choosing an appropriate loss function and through repeated training, the generated images become more realistic and closer in distribution to real images. The operation of a GAN is illustrated in Figure 2. GANs are widely used for generating synthetic images. The latest advancements in GANs involve a style-based generation approach, where style vectors from a mapping network control the image generation process. Researchers have been drawn to GANs because of their impressive ability to generate images, which has led to their widespread adoption in medical image augmentation.

Figure 2.

Conceptual overview of the GAN architecture, illustrating the adversarial interplay between the generator and discriminator. Synthetic images are iteratively refined through the feedback loop. The generated and real images have been taken from [55].
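The adversarial training described above can be sketched with the binary cross-entropy losses of the original GAN formulation [33]. The snippet below is a minimal NumPy illustration and not the loss used by any particular reviewed study: the function names and the example discriminator outputs are hypothetical, and the generator uses the commonly adopted non-saturating variant of the loss.

```python
import numpy as np

def bce(probs, target):
    """Mean binary cross-entropy between predicted probabilities and a constant 0/1 target."""
    probs = np.clip(probs, 1e-7, 1 - 1e-7)  # avoid log(0)
    return float(-np.mean(target * np.log(probs) + (1 - target) * np.log(1 - probs)))

def discriminator_loss(d_real, d_fake):
    # The discriminator should output 1 for real images and 0 for synthetic ones.
    return bce(d_real, 1.0) + bce(d_fake, 0.0)

def generator_loss(d_fake):
    # Non-saturating form: the generator is rewarded when the discriminator
    # labels its synthetic images as real.
    return bce(d_fake, 1.0)

# Hypothetical discriminator outputs (probability that each image is real).
d_real = np.array([0.9, 0.8, 0.95])
d_fake = np.array([0.1, 0.3, 0.2])
print("discriminator loss:", discriminator_loss(d_real, d_fake))
print("generator loss:", generator_loss(d_fake))
```

In a full training loop, the two losses would be minimized alternately with respect to the discriminator and generator parameters. Many 3D medical GANs replace or extend these terms (for example with Wasserstein or reconstruction losses), so this should be read only as the baseline objective.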

GANs offer a promising solution for creating synthetic medical images, which helps address the problems of having limited labeled data and imbalanced class distributions. This, in turn, boosts the effectiveness of disease classification and detection models. By leveraging GAN technology, researchers strive to enhance diagnostic processes, treatment planning, and overall patient care through improved medical imaging techniques. However, current methods face challenges like interpretability issues, limited data, overfitting, unstable training, domain adaptation, and ethical concerns. Researchers are thus exploring new GAN architectures and methods to address these issues and improve the quality and reliability of generated medical images.

GANs are used for image-to-image translation, enabling the conversion of medical images from one modality to another, such as transforming CT scans into MRI images or creating synthetic X-ray images from CT data. This is particularly useful when certain imaging modalities are expensive or involve ionizing radiation. GANs also improve image quality by denoising medical images, aiding in accurate analysis. Furthermore, they help in anomaly detection by differentiating between normal and abnormal cases, which supports early disease detection. GANs have revolutionized image enhancement and diagnostic accuracy by producing high-quality images from the limited datasets [28]. GANs play a vital role in tackling imbalanced datasets by generating synthetic samples of rare medical conditions, thereby creating more balanced training sets. Innovative GAN technologies can greatly improve image quality, broaden applications, and evolve the GAN framework. Image generation provides promising solutions for improving the image quality, translating it into other modalities, and also for modeling the progression of diseases [56].

1.1.1. GANs for 2D Medical Imaging

This paper focuses on surveying the use of GANs in 3D medical images; however, background on 2D medical images is provided, since a great deal of research has been done on 2D medical imaging and numerous 2D GAN models lay the foundation for 3D medical imaging GAN models, both architecturally and conceptually. GANs generate realistic synthetic images, which is especially helpful in expanding small labeled datasets, enhancing image quality by creating higher-resolution versions of lower-quality images, improving diagnostic accuracy, and enabling cross-modal image conversions [57,58]. Overall, the realistic and varied images produced by GANs are helping to improve image analysis, segmentation, and clinical decision-making in healthcare [59,60]. GAN models for 2D medical images have become a stepping stone for GANs that deal with 3D medical images. The demand for 3D medical images is increasing because they offer a clear three-dimensional overview of the whole volume, which helps in understanding unfamiliar shapes or abnormalities and leads to improved disease diagnosis, as well as better patient monitoring and treatment planning [51,61].

1.1.2. GANs for 3D Medical Imaging

While 2D images are useful for many purposes, 3D images offer greater insight into the shape and structure of tumors. Understanding the 2D and 3D geometry of a tumor is crucial for assessing its growth patterns, which can aid in improving surgical planning and drug delivery strategies. GAN-based methods often face challenges such as memory limitations and stability problems. As a result, most GAN models have been trained on low-resolution 3D images [62,63,64]. It is only recently that they have been able to generate full-resolution images by learning smaller parts, or sub-volumes, of the image [65]. Three-dimensional generative models require an extremely long training time due to the large number of parameters, features, and model complexity. The segmentation and visualization of 3D medical images allows for a better understanding of the condition and helps with better treatment planning [5].
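The idea of learning from sub-volumes rather than whole scans can be illustrated with a simple patch extractor. This is a generic sketch and not the procedure of [65]: the function name, the cubic patch shape, and the 64-voxel synthetic volume are assumptions chosen for illustration.

```python
import numpy as np

def extract_subvolumes(volume, size, stride):
    """Yield (corner, patch) pairs covering a 3D volume with cubic patches."""
    d, h, w = volume.shape
    for z in range(0, d - size + 1, stride):
        for y in range(0, h - size + 1, stride):
            for x in range(0, w - size + 1, stride):
                yield (z, y, x), volume[z:z + size, y:y + size, x:x + size]

# Synthetic stand-in for a scan; a real volume would be loaded from disk.
volume = np.random.default_rng(0).random((64, 64, 64), dtype=np.float32)
patches = list(extract_subvolumes(volume, size=32, stride=32))
print(len(patches), patches[0][1].shape)
```

Each patch holds one eighth of the voxels of the full volume in this example, which is what reduces the memory needed per training step. A stride smaller than the patch size would produce overlapping patches.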

The progress in 3D medical image research is slow, mainly because of the lack of large-scale 3D medical image datasets [66]. This scarcity is due to the complexity involved in data collection, the need for expert annotation, privacy issues, and obtaining patient consent. To address this, GANs have been widely used to generate synthetic images that replicate real medical data. However, most GAN-based methods focus on 2D image generation. When it comes to 3D medical imaging, there are three major challenges:

- Insufficient availability of 3D medical images to train effective models, due to high annotation costs, patient consent issues, and the difficulty of expert annotations, making it hard to train 3D medical models effectively [67];

- The use of 3D convolutional layers introduces a large number of parameters, slowing down the training process and increasing the risk of overfitting because the number of parameters is disproportionately large compared to the small dataset size [68];

- Three-dimensional modeling for medical imaging is computationally intensive, as it requires long training hours and significant memory and hardware due to the complexity of generative architectures and volumetric data [68].
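The growth in parameters and memory noted in the last two points can be made concrete with a short calculation. The layer sizes below (64 input channels, 128 output channels, a kernel of width 3, and a 128-voxel grid) are illustrative assumptions rather than values taken from any reviewed model.

```python
def conv_params(in_ch, out_ch, kernel, dims):
    """Weights plus biases of one convolutional layer with a square or cubic kernel."""
    return out_ch * (in_ch * kernel ** dims + 1)

def feature_map_bytes(channels, side, dims, bytes_per_value=4):
    """Memory of one float32 feature map with `side` samples along each spatial axis."""
    return channels * side ** dims * bytes_per_value

p2d = conv_params(64, 128, 3, dims=2)
p3d = conv_params(64, 128, 3, dims=3)
print("2D conv parameters:", p2d)
print("3D conv parameters:", p3d)

m2d = feature_map_bytes(64, 128, dims=2)
m3d = feature_map_bytes(64, 128, dims=3)
print("2D feature map MiB:", m2d / 2**20)
print("3D feature map MiB:", m3d / 2**20)
```

Under these assumptions, the 3D layer has roughly three times as many parameters as its 2D counterpart, while a single 3D feature map needs 128 times more memory. In this sketch, activation memory grows much faster than the parameter count.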

1.2. Systematic Review Objectives

In this review, intensive research has been carried out on the existing literature. Past reviews give valuable insights into the 3D medical imaging sector up until the year 2022 [45,46,47,48,49,50,51]. A recent survey [52] covers the latest research works, but its main focus is on image enhancement only, overlooking the broader coverage of GANs across multiple image processing tasks like 3D image generation, segmentation, reconstruction, and clinical translation. A research gap lies in exploring the works that have been published from 2022 till now regarding 3D medical imaging that covers generation, segmentation, enhancement, translation, and reconstruction. It is important to study new research from after the year 2022 for a number of reasons:

- The field of GANs in 3D medical imaging has evolved rapidly since 2022, introducing new models, increased clinical applicability, and new tasks;

- Earlier surveys may be outdated now, since there has been significant advancement in this field in recent years;

- Some breakthrough 3D GAN models that efficiently enhance the image fidelity have also been introduced since 2022, such as multi-resolution GANs [27], memory-efficient CRF-Guided GANs [69], and hybrid frameworks like HA-GAN [70], to name a few.

Therefore, this systematic review focuses on the existing research (published between 2022 and 2025) that covers the multiple medical image processing tasks, i.e., generation, segmentation, reconstruction, translation, and enhancement. The older studies are consciously excluded, and only the recent studies are included to avoid any sort of redundancy. The innovations in GAN architectures, their evaluation strategies, and clinical applicability are highlighted, offering a comprehensive and comparative analysis. By focusing on the latest advancements in GANs and their multiple imaging application tasks, this systematic review provides a comprehensive resource for researchers and clinicians who are navigating this fast-evolving field.

To facilitate the readers’ and researchers’ understanding, the following questions form the primary focus of this work:

- What are the applications of GANs for generating 3D medical images?

- Which methods are most common and most effective for this purpose?

- What datasets have been used for each work?

- What evaluation metrics have been used for comparisons and results?

- Are there any pre-processing techniques used?

- What are the accuracy and limitations of each method?

- What could be the possible future work to pursue with this technology?

While this review focuses exclusively on the use of GANs for 3D medical image analysis, we acknowledge the impact of diffusion models in 3D medical imaging. We encourage the researchers and readers seeking broader coverage to consult emerging reviews dedicated to diffusion model methods.

2. Survey Methodology

In this review, a thorough and comprehensive search is carried out following the PRISMA guidelines [71], which are established standards for conducting and reporting systematic reviews and meta-analyses.

2.1. Databases and Search Strategy

A comprehensive literature search was carried out across these scientific databases: IEEE, Science Direct, Google Scholar, Scopus, and PubMed. All the relevant studies that were published between 2022 and 2025 were collected by using targeted search queries. The sorting was based on relevance and citation count, where feasible. The search tags included “Generative AI”, “3D Medical Imaging”, “Three-Dimensional Imaging”, and “GANs”. The database and search strategy are summarized in Table 1.

Table 1.

Table depicting database and search strategy for study selection.

This approach and these databases are sufficient for achieving the aims of this paper for the following reasons:

- Limiting the search years to 2022–2025 ensures that the latest developments in this field are captured. This time window aligns with the emergence of benchmark datasets like CT-ORG, UDPET, VerSe, and GLIS-RT, and also the use of hybrid GAN architectures.

- The targeted tags, “Generative AI”, “3D Medical Imaging”, “Three-Dimensional Imaging”, and “GANs”, ensure that the retrieved studies align with the concept of this systematic review paper.

- The databases used are IEEE, Science Direct, Google Scholar, Scopus, PubMed. These provide influential, relevant, and cutting-edge research papers, thus offering a comprehensive view of advancements in the generative AI field.

- Highly relevant studies are prioritized, which helps to ensure that impactful research is selected.

Anomaly Detection Screening: Anomaly detection is an advancing area. A targeted search using the queries “anomaly detection”, “3D GANs”, and “3D medical imaging” for the years 2022–2025 returned some publications; however, none combined GANs and 3D medical imaging for anomaly detection. Therefore, no 3D GAN anomaly detection studies are included.

For a detailed analysis of the retrieved studies regarding the Database and Search Strategy, date of search, search tags, and hit count, refer to Supplementary Table S1.

2.2. Eligibility Criteria

This section discusses the inclusion criteria (Section 2.2.1), exclusion criteria (Section 2.2.2), and risk of bias (Section 2.3) for the publications.

2.2.1. Inclusion Criteria

The selected studies were based on the chosen topic and research questions, meeting the following key requirements:

- Involve the use of Generative AI: GANs.

- Focused on 3D or volumetric medical imaging.

- Provide enough information to answer at least one of the research questions, which are listed in Section 1.2.

- The publications chosen were mainly conference papers and journal articles to ensure methodological rigor and peer-reviewed quality.

- To ensure the most current trends and methods, the studies chosen were published between 2022 and 2025.

- Only the studies published in the English language were selected.

- Only the studies with full-text accessibility were included.

Studies that explicitly implemented 3D volumetric GANs were included. Meanwhile, 2.5D, patch-wise, or stacked-2D approaches were excluded unless they performed full-volume synthesis or evaluation. To highlight true 3D innovation, borderline cases, e.g., pseudo-3D stacking, were excluded.

2.2.2. Exclusion Criteria

Studies with the following characteristics were not selected:

- Studies focusing on 2D medical images only;

- Duplicate entries;

- Extended abstracts;

- Studies in languages other than English;

- Articles that were not relevant to this study, where relevance is determined by reference to the medical imaging domain (such as image generation, segmentation, reconstruction, or transformation to other modalities), the use of GAN models for processing, and a focus on 3D medical imaging or 3D/2D medical imaging;

- Articles related to non-medical study;

- Studies that were published before 2022;

- Studies that were reviews.

Duplicates were removed from the retrieved studies, and the titles and abstracts were screened, followed by full-text screening to assess each study’s eligibility for inclusion in this systematic review. Any disagreements were resolved through discussion between the authors. The final selection resulted in a total of 56 research papers, as illustrated in the PRISMA flow diagram in Figure 3.

Figure 3.

PRISMA diagram showing the literature review process, where 56 publications were selected from a total of 1530 collected from the five databases.

2.3. Risk of Bias

The risk of bias assessment was conducted for this systematic review using the Risk of Bias in Systematic Reviews (ROBIS) tool [72], focusing on the four main domains: (1) eligibility criteria of the studies, (2) identification and selection of studies, (3) data extraction and outcome evaluation, and (4) results and interpretation of findings. Each domain was rated with ROBIS response options (Yes, Probably Yes, Probably No, No, No Information), allowing for the final classification of risk of bias (high, low, or unclear). If all the domains are rated Yes or Probably Yes, the overall risk of bias is taken as Low; if any domain is rated No or Probably No, the overall risk of bias is set as High; and only if there is insufficient information for a judgement is the risk of bias marked as Unclear [72]. The outcome of the ROBIS risk of bias assessment is summarized in Table 2.
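The decision rule stated above can be written as a small function. This is only a sketch of the rule as described in this section, not part of the ROBIS tool itself; the function name and the example ratings are hypothetical.

```python
def overall_risk(domain_ratings):
    """Combine per-domain ROBIS responses into an overall risk-of-bias judgement."""
    ratings = [r.strip().lower() for r in domain_ratings]
    # Any negative response makes the overall judgement High.
    if any(r in {"no", "probably no"} for r in ratings):
        return "High"
    # All positive responses give Low.
    if all(r in {"yes", "probably yes"} for r in ratings):
        return "Low"
    # Otherwise at least one domain lacks information.
    return "Unclear"

# Hypothetical ratings for the four assessed domains of three studies.
print(overall_risk(["Yes", "Probably Yes", "Yes", "Yes"]))
print(overall_risk(["Yes", "No Information", "Yes", "Probably Yes"]))
print(overall_risk(["Yes", "Probably No", "No Information", "Yes"]))
```

The three calls return Low, Unclear, and High respectively. A negative rating takes precedence over missing information, following the order in which the rule is stated.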

Table 2.

Risk of bias assessment across included studies. Each study was independently reviewed and assessed to ensure transparency and reproducibility. Assessed domains include (1) eligibility criteria of the studies, (2) identification and selection of studies, (3) data extraction and outcome evaluation, (4) results and interpretation of findings.

For this systematic review, the overall risk of bias is assessed as ‘low’ because a transparent methodology was used for the selection of literature, data extraction, and the interpretation of results. This review clearly abides by the inclusion/exclusion criteria, with publications extracted from reputable databases and limited to recent studies from the years 2022–2025. This reduces selection and reporting bias. Some of the included papers were rated as high or unclear risk due to certain limitations. The unclear papers had insufficient detail on evaluation metrics, characteristics of the model used, or the dataset used. The high-risk papers used private datasets or had no quantitative comparisons.

3. Results of Survey

The database search returned a total of 1530 papers, as illustrated in Figure 3. Considering the eligibility criteria from Section 2.2, the duplicates were removed, followed by title screening, abstract screening, and full-text screening. A total of 56 publications were retrieved for detailed review regarding the use of generative AI in 3D medical imaging, which are presented in Section 3.4, after careful review.

Based on the collected publications, this review has been organized to elaborate these research works from several perspectives, including medical image modality (Section 3.1), medical applications (Section 3.2), 2D medical images (Section 3.3), 3D medical images (Section 3.4), public datasets (Section 3.5), code availability (Section 3.6), and evaluation metrics (Section 3.7). Table 3 summarizes how each section contributes to the aims of this survey, so that readers can identify the parts most relevant to them.

Table 3.

Table explaining how each divided section contributes to the aims of this survey.

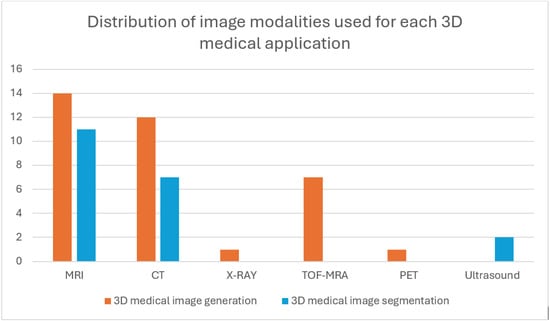

3.1. Medical Image Modality

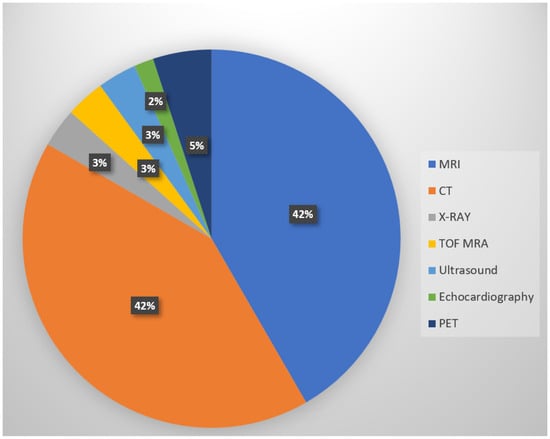

In this section, the modalities of the medical images used in the reviewed publications are summarized and presented in Figure 4. The majority of the reviewed studies used MRI and CT; a potential reason for this is the greater number of publicly available datasets for these modalities. Each modality is discussed below.

Figure 4.

Types and distribution of medical image modalities used in reviewed studies. The majority of the reviewed papers use MRI or CT due to their widespread clinical use and availability in the public datasets.

- MRI: Among all the reviewed papers, the most popular image modality is magnetic resonance imaging, or MRI, which covered 42% of the publications. MRI utilizes strong magnetic fields and magnetic field gradients along with radio waves to capture images of organs [126]. It is a non-invasive and radiation-free imaging technique, providing promising results [127,128].

- CT: In total, 42% of the reviewed publications used the computed tomography (CT) image modality, making it as popular as MRI. A CT scan involves radiation exposure, as it uses X-rays to generate high-resolution cross-sectional images of the body [129,130].

- PET: Positron emission tomography (PET) was used in 5% of the reviewed papers.

- X-ray: About 3% of the reviewed papers focused on the use of X-ray.

- TOF MRA: TOF MRA was used in 3% of the reviewed publications.

- Ultrasound: In total, 3% of the reviewed papers worked with ultrasound images.

- Echocardiography: Echocardiography was used in 2% of the reviewed papers.

Most of the research work focuses on MRI and CT scans since they produce high-resolution 3D volumes, with MRI offering rich soft-tissue contrast [131] and CT providing detailed information regarding bone and density [132]. Their dominance in the reviewed literature is also explained by the ready availability of standardized, publicly accessible datasets (e.g., BraTS, LIDC, UK Biobank), which makes them suitable for training GANs [133]. MRI and CT scans are predominantly used for diagnosis and treatment planning in oncology, neurology, and cardiology, which are areas of strong research interest [134]. This could potentially lead to the other modalities lagging behind in AI advancements.

3.2. Medical Applications

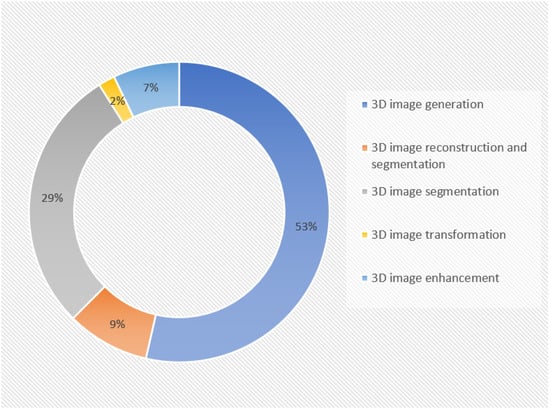

In this section, we have summarized the medical applications that have been focused on in the reviewed papers. This distribution is illustrated in Figure 5.

Figure 5.

Distribution of medical applications in reviewed papers. The figure characterizes the primary research and clinical applications of GANs in 3D medical image analysis, as seen in the included research studies, showing that 3D image generation and 3D image segmentation are more prevalent applications.

Three-dimensional image generation: About 58% of the reviewed publications performed experiments only on the generation of three-dimensional medical images. One of the major challenges in applying deep learning in medical research is the limited availability of data [135,136]. GANs can generate entirely new data that does not correspond to any actual person, which helps in avoiding privacy and anonymity concerns in clinical applications. Traditional data augmentation methods, such as the translation, rotation, scaling, and flipping of existing samples, are commonly used to create synthetic data. These methods face limitations in the medical field because medical images cannot be significantly altered in terms of their shape or color without affecting the anatomical features, which can lead to misdiagnosis and inaccurate treatment planning. GANs overcome this by producing entirely new scans that closely resemble real patient images, allowing for the expansion of datasets without distorting the original medical data [137,138].
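The traditional augmentations mentioned above can be sketched as follows. This is a minimal illustration, not code from any reviewed paper; the function name `augment_volume`, the toy volume size, and the 2-voxel shift limit are assumptions. It shows that such transforms only rearrange existing voxels and therefore cannot add new anatomical content.

```python
import numpy as np

def augment_volume(volume, rng):
    """Apply a random flip, 90-degree rotation, and small translation to a 3D volume."""
    out = volume
    # Random flip along one of the three axes.
    if rng.random() < 0.5:
        out = np.flip(out, axis=int(rng.integers(0, 3)))
    # Random in-plane rotation by a multiple of 90 degrees (axes 1 and 2).
    out = np.rot90(out, k=int(rng.integers(0, 4)), axes=(1, 2))
    # Random translation by up to 2 voxels per axis (wrap-around shift, an assumption).
    shift = tuple(int(s) for s in rng.integers(-2, 3, size=3))
    return np.roll(out, shift=shift, axis=(0, 1, 2))

rng = np.random.default_rng(0)
volume = rng.random((16, 32, 32)).astype(np.float32)  # toy stand-in for a scan
augmented = augment_volume(volume, rng)
```

The augmented volume contains exactly the same voxel values as the input, only in different positions, which is why these methods cannot increase anatomical diversity.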

Three-dimensional image segmentation: The second most common task performed in these reviewed papers was the segmentation of three-dimensional medical images, accounting for 29%. Image segmentation helps in delineating the pathological regions, supporting image-guided interventions, and surgical planning [139].

Three-dimensional image reconstruction and segmentation: In total, 9% of the reviewed publications focused on both medical image generation and segmentation.

Three-dimensional image transformation: Three-dimensional image transformation was performed in 2% of the reviewed publications. GANs can convert images from one modality to another, such as transforming CT images into MR or PET images, a process known as cross-modality synthesis. They can also generate new images within the same modality, like converting MRI images from T1-weighted sequences to T2-weighted ones, a form of image-to-image translation. This helps in significantly reducing acquisition times, lowering radiation exposure, and sparing patients from undergoing multiple scans [140]. Another important process is denoising, where GAN architectures, such as conditional GANs, CycleGANs, and super-resolution GANs (SRGANs), have been successfully applied to reduce noise in low-dose CT (LDCT) scans [141]. In low- to high-dose conversion, GANs generate enhanced image reconstructions that retain structural integrity, which enables safer imaging protocols and supports clinical decision-making without compromising patient safety [142,143].

Three-dimensional image enhancement involves tasks like image quality improvement, super-resolution, and artifact correction.

Table 4 shows the arrangement of these reviewed publications regarding the medical applications performed.

Table 4.

Research publications organized according to the medical applications performed. This representation enables a clear understanding of how the GAN-based approaches are being applied in practice across the various clinical tasks.

3.3. Literature Survey Regarding 2D Medical Imaging

This review paper focuses on GANs in 3D medical imaging; however, it is very important to provide a brief insight into the 2D GAN models, to establish a technical and conceptual background, and help better understand the recent advancements of GANs in 3D medical imaging.

- Much research has been carried out on the use of GANs in 2D medical imaging, and a few state-of-the-art studies form the foundation from which the later research on 3D imaging has been derived. For example, the 2D models CycleGAN, pix2pix, and StyleGAN were extended and adapted to 3D GAN architectures: with a few modifications and the addition of a third dimension, these 2D imaging models were used to generate volumetric images.

- The evolution of 3D GANs can be contextualized by understanding these 2D architectural roots.

- Two-dimensional GANs offer insights into the data augmentation, training stability, and also evaluation metrics that are helpful for three-dimensional implementation.

Figure 6 represents a taxonomy of the use of GANs in 2D medical imaging, explaining the applications, modalities, basic structure, and training strategies.

Figure 6.

Taxonomy diagram of GANs in 2D medical image analysis, organized by architecture type, clinical applications, training strategies, and image modalities used.

Alqushaibi et al., 2024 [144] use Pix2PixGAN augmented with Attention U-Net generator and a PatchGAN discriminator, guided by a sine cosine algorithm (SCA) for enhancing the selection of optimal hyperparameters in GANs for the synthesis and segmentation of medical images, outperforming baseline methods in terms of Dice, IoU, similarity index, and MAE.
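For reference, the Dice and IoU overlap metrics cited here can be computed on binary masks as in the sketch below. The function name `dice_and_iou`, the smoothing term `eps`, and the toy masks are illustrative assumptions, not details from the cited study.

```python
import numpy as np

def dice_and_iou(pred, target, eps=1e-8):
    """Return (Dice, IoU) for two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    intersection = np.logical_and(pred, target).sum()
    union = np.logical_or(pred, target).sum()
    dice = 2.0 * intersection / (pred.sum() + target.sum() + eps)
    iou = intersection / (union + eps)
    return float(dice), float(iou)

pred = np.zeros((4, 4), dtype=bool)
pred[1:3, 1:3] = True        # 4 predicted pixels
target = np.zeros((4, 4), dtype=bool)
target[1:3, 1:4] = True      # 6 ground-truth pixels, 4 of them overlapping
dice, iou = dice_and_iou(pred, target)  # Dice = 8/10, IoU = 4/6
```

The same function applies unchanged to 3D masks, since it only counts overlapping voxels.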

Abdollahi et al., 2023 [145] use GAN and incorporate vision transformers (ViT). The model is trained end-to-end in two stages: super-resolution (to reconstruct high-resolution image from low-resolution input) and realistic modality translation (to map images between different domains). The model provides a framework for medical image enhancement with perceptually realistic and detailed outputs, but lacks comparison with other methods.

Liu et al., 2023 [146] use MVI-Wise GAN, which is trained on paired liver CT-MR images and can then generate MRIs from CT images, eliminating the requirement for liver MRIs that use contrast agent (CA) injections. The performance results show better FID and KID scores than baseline GAN models, with a tumor detection accuracy of 92.3% in the synthetic images. This approach could be extended to 3D medical imaging by adding a volumetric (depth) dimension.

Tripathi et al., 2023 [147] use U-Net segmentation to extract a segmentation mask, followed by DCGAN to generate synthetic images (using both this extracted mask and noise as input). The synthetic mammograms closely resemble real mammograms in terms of visual appearance and relevant features, thus claiming to provide the research community with diverse and realistic synthetic mammograms, transcending data scarcity.

Ju et al., 2024 [70] lay the foundation of a hybrid augmented generative adversarial network (HAGAN), which contains three modules: Attention Mixed (AttnMix) Generator, Hierarchical Discriminator, and Reverse Skip Connection between Discriminator and Generator. HAGAN demonstrates the best FID score compared to six other baseline methods.

Yu et al., 2023 [148] use a Fuzzy Self-Guided Structure Retention GAN (FS-GAN), which includes a Self-Guided Structure Retention Module (SSRM) that enables the generator to learn features better and retain the correct neural fiber structure. Additionally, an Illumination Distribution Correction Module (IDCM) regulates the illumination distribution of the enhanced image, making it more consistent with human perception. A comparison with the traditional methods CLAHE and DCP and six DL methods (NST, MSG-Net, EnlightenGAN, CycleGAN, StillGAN, and SynDiff) shows that FS-GAN achieves the best results in AvG, BRISQUE, and PIQE.

Onakpojeruo et al., 2024 [149] use DCGAN to synthesize data; a grid-search optimization strategy is then used to tune a Conditional Deep Convolutional Neural Network (C-DCNN) model for a classification task to detect tumors and differentiate brain tumors from kidney tumors. A thorough comparison of the performance of the novel C-DCNN model with SOTA models is carried out, showing that the proposed model achieved accuracy, precision, recall, and F1-scores of 99% on both synthetic and augmented images, outperforming the comparative models. The success of synthetic data in improving classification performance suggests potential applications in other medical imaging tasks, potentially revolutionizing disease prediction and diagnosis.

Alauthman et al., 2024 [150] use Boruta feature selection followed by LS-GAN for augmentation, with the primary goal of generating synthetic data that closely resembles the real data. This GAN-based augmentation improved the stability and generalization of the classifiers for classification applications, reducing overfitting issues commonly associated with small datasets.

Sravani et al., 2024 [151] use a GAN with an Adam Optimizer, followed by post-processing, where a Gaussian kernel is applied to smooth the generated images for improved resolution (ESRGAN). The real and generated images are compared using SSIM score, resulting in a promising solution to generate synthetic medical data instead of preparing original medical data, particularly for brain tumor diagnosis.

3.4. Literature Survey Regarding 3D Medical Imaging

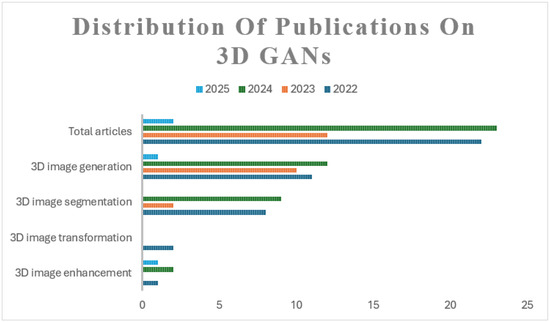

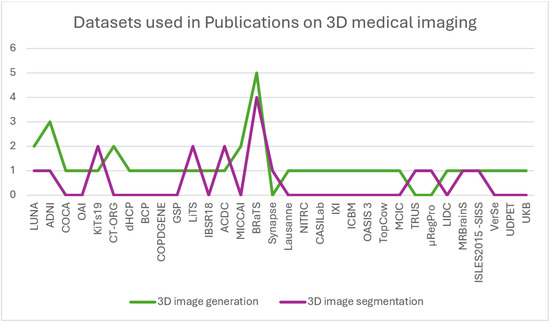

A summary of the distribution of the total publications in accordance with the years they were published in and the medical imaging applications they perform is outlined in Figure 7. The distribution of these publications according to the datasets used is represented in Figure 8, and their distribution according to the image modalities used to perform the imaging applications is represented in Figure 9.

Figure 7.

The distribution of reviewed publications on the use of GANs in 3D medical imaging applications characterized by clinical tasks and publication year. The distribution shows the total number of publications that are reviewed from each year, and then further divides them by the medical imaging task performed.

Figure 8.

The distribution of publications on GANs in 3D medical imaging organized by the datasets used.

Figure 9.

Distribution of image modalities used for each of the 3D medical imaging applications. The figure displays trends in modality–task alignment, offering insights into the current research trends.

3D Image Generation: The publications featuring 3D medical image generation are explained. Kim et al. [73] propose a 3D data-guided generative adversarial network (3D-DGGAN) where features (reference codes) are extracted from CT/MR images, which are then validated through a decoding process to ensure their accuracy. These reference codes are combined with Gaussian noise and passed through the generator to create 3D images. The discriminator is divided into 3 components: volume, slab, and slice. The volume discriminator analyzes the entire set of 3D image slices, the slab discriminator focuses on consecutive slices, and the slice discriminator evaluates individual randomly selected slices. This combination of discriminators allows for detailed evaluation of both specific slices and the continuity between adjacent slices. The volume discriminator enhances the ability to capture fine details in 3D images, leading to higher fidelity in the generated images. However, no analysis for memory usage is provided.
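The three discriminator views described above can be illustrated with a short sketch. It only shows how a volume, a slab of consecutive slices, and a single random slice might be extracted from the same 3D array; the function name `discriminator_inputs`, the slab depth, and the toy volume size are assumptions, not details of 3D-DGGAN.

```python
import numpy as np

def discriminator_inputs(volume, slab_depth, rng):
    """Return the full volume, a slab of consecutive slices, and one random slice."""
    depth = volume.shape[0]
    # Slab: a block of `slab_depth` consecutive slices at a random position.
    start = int(rng.integers(0, depth - slab_depth + 1))
    slab = volume[start:start + slab_depth]
    # Slice: a single randomly selected 2D slice.
    slice_2d = volume[int(rng.integers(0, depth))]
    return volume, slab, slice_2d

rng = np.random.default_rng(0)
volume = rng.random((32, 64, 64))  # toy (depth, height, width) volume
full, slab, single = discriminator_inputs(volume, slab_depth=8, rng=rng)
```

Each view would feed a separate discriminator, so that global structure, inter-slice continuity, and per-slice detail are judged independently.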

Sun et al. [74] claim that previous works that used 3D GANs generated low-resolution images (128 × 128 × 128 or smaller) due to memory constraints during training, so the authors propose a Hierarchical Amortized GAN (HA-GAN), where different configurations are used for training and inference. During training, HA-GAN generates both a low-resolution image and a randomly selected sub-volume of a high-resolution image. An encoder is used to extract features from images and stabilize training, preventing mode collapse. This sub-volume approach reduces memory requirements while preserving fine details in the high-resolution image. The low-resolution image ensures that the overall anatomical structure is consistent. During inference, the full high-resolution image can be generated without the need for sub-volume selection. The addition of a low-resolution branch helps the model learn the global structure, while the encoder improves performance. HA-GAN produces sharper images compared to other baseline methods, particularly at a higher resolution of 256 × 256 × 256. As future directions, this architecture could be expanded to other imaging modalities, and removing blank axial slices of training images could reduce the gap between the generated and real images.
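The memory saving of the training configuration can be sketched with array shapes alone. The example below is an illustration under stated assumptions: the sub-volume is taken as a slab of consecutive slices, the low-resolution copy is obtained by strided subsampling, and `training_targets` and all sizes are hypothetical choices rather than the HA-GAN implementation.

```python
import numpy as np

def training_targets(volume, sub_depth, factor, rng):
    """Return a low-resolution copy of the whole volume and a random high-resolution slab."""
    # Low-resolution view of the full anatomy (strided subsampling, an assumption).
    low_res = volume[::factor, ::factor, ::factor]
    # Randomly positioned high-resolution sub-volume along the first axis.
    start = int(rng.integers(0, volume.shape[0] - sub_depth + 1))
    sub_volume = volume[start:start + sub_depth]
    return low_res, sub_volume

rng = np.random.default_rng(0)
volume = rng.random((64, 64, 64))
low_res, sub_volume = training_targets(volume, sub_depth=8, factor=4, rng=rng)
# Voxels handled per training step versus the full-resolution volume.
voxels_per_step = low_res.size + sub_volume.size  # 4096 + 32768
```

In this toy setting a training step touches 36,864 voxels instead of 262,144, roughly 14% of the full volume, while the full volume is still produced at inference time.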

Hwang et al. [76] use a GAN incorporating CutMix and GRAF. CutMix involves cutting and mixing knee MRI image patches to create diverse training samples. Two generators and two discriminators were used for translating images between X-ray and MRI. Neural Radiance Fields (NeRF), known for generating high-fidelity 3D images, model the radiance field and depth of a scene to capture fine details in 3D space. GRAF, a hybrid of NeRF and GANs, is used to generate 3D MRIs with enhanced realism by combining volumetric scene representation with GAN-based refinement. The use of cycle consistency loss helps minimize information loss during translation, and CutMix enhances the model’s ability to differentiate between the knee and background. However, this study can be extended to other organ images.
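The CutMix operation can be sketched as below. This follows the commonly used CutMix recipe (a random box whose area is set by a Beta-distributed mixing ratio); whether Hwang et al. use exactly these settings is not stated here, so the function `cutmix`, the `alpha` value, and the toy images are assumptions.

```python
import numpy as np

def cutmix(image_a, image_b, rng, alpha=1.0):
    """Paste a random rectangular patch of image_b into a copy of image_a."""
    h, w = image_a.shape
    lam = rng.beta(alpha, alpha)                 # fraction of image_a to keep
    cut_h = int(h * np.sqrt(1.0 - lam))
    cut_w = int(w * np.sqrt(1.0 - lam))
    cy, cx = int(rng.integers(0, h)), int(rng.integers(0, w))
    # Clip the box to the image borders.
    y0, y1 = max(cy - cut_h // 2, 0), min(cy + cut_h // 2, h)
    x0, x1 = max(cx - cut_w // 2, 0), min(cx + cut_w // 2, w)
    mixed = image_a.copy()
    mixed[y0:y1, x0:x1] = image_b[y0:y1, x0:x1]
    return mixed, (y0, y1, x0, x1)

rng = np.random.default_rng(0)
image_a = np.zeros((64, 64))   # toy stand-ins for two MRI slices
image_b = np.ones((64, 64))
mixed, box = cutmix(image_a, image_b, rng)
```

Because the patch comes from a different sample, each mixed image presents the network with a new combination of foreground and background regions.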

Liu et al. [67] introduce a 3D Split-and-Shuffle-GAN, which incorporates StyleGAN, designed to efficiently generate high-quality 3D medical images. The training strategy uses the available 2D image slices to train a 2D GAN model; the 2D weights are then inflated to initialize the 3D GAN model to generate detailed 3D images. The channel Split-and-Shuffle modules are also introduced, which reduce the number of parameters in both the generator and discriminator networks while maintaining performance. These modules help avoid overfitting, especially due to limited 3D medical data. Experiments with five different weight-inflation strategies and several network designs are performed on both the heart (COCA) and brain (ADNI) datasets. This model outperforms other baseline methods significantly on FID (across axial, sagittal, and coronal planes), PSNR, and MS-SSIM, and t-SNE shows a similar distribution to real images, confirming that this method produces diverse, high-quality 3D medical images. A possible direction for future work is the exploration of network weight initialization strategies beyond inflation.
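One simple form of weight inflation is sketched below: a 2D convolution kernel is repeated along a new depth axis and rescaled so that the summed response is preserved. This is only one possible strategy and is not claimed to be among the five evaluated by the authors; the function name `inflate_kernel` and the kernel shapes are assumptions.

```python
import numpy as np

def inflate_kernel(kernel_2d, depth):
    """Inflate a (out_ch, in_ch, kh, kw) kernel to (out_ch, in_ch, depth, kh, kw)."""
    # Repeat the 2D kernel along a new depth axis and divide by the depth,
    # so that summing over depth recovers the original 2D kernel.
    return np.repeat(kernel_2d[:, :, None, :, :], depth, axis=2) / depth

rng = np.random.default_rng(0)
kernel_2d = rng.standard_normal((8, 4, 3, 3))  # toy pretrained 2D weights
kernel_3d = inflate_kernel(kernel_2d, depth=3)
```

With this scaling, a 3D input that is constant along the depth axis produces the same response as the original 2D layer, which gives the 3D network a sensible starting point.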

Hu et al. [77] propose a hierarchical shape-perception network (HSPN), designed to reconstruct 3D point clouds (PC) from a single incomplete MRI image, specifically for brain surgery applications. HSPN consists of an encoder–decoder architecture, where the encoder, which uses a predictor based on GAN combined with PointNet++ blocks, extracts features, and the decoder, which has multiple layers, rebuilds the 3D shape. A hierarchical attention pipeline is employed to transfer feature information between the encoder and decoder stages. The generator, which contains multiple graph convolutional networks (GCNs), refines the incomplete point clouds, while a discriminator similar to WGAN-GP ensures the accuracy of the generated 3D structures. This model is designed for real-time feedback, to enable surgeons to quickly receive critical 3D information about local brain structures. HSPN outperforms other models in terms of visual quality, quantitative analysis, and classification performance, as evaluated by Chamfer distance (CD) and point-cloud-to-point-cloud (PC-to-PC) error. This study can be advanced beyond brain MRI.
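The Chamfer distance used for evaluation can be sketched as follows. Definitions vary across papers (squared or unsquared distances, sums or means); the version below uses mean squared nearest-neighbor distances in both directions, which is an assumption rather than the exact variant used by the authors.

```python
import numpy as np

def chamfer_distance(points_a, points_b):
    """Symmetric Chamfer distance between point clouds of shape (N, 3) and (M, 3)."""
    # Pairwise squared Euclidean distances, shape (N, M).
    diff = points_a[:, None, :] - points_b[None, :, :]
    d2 = np.sum(diff ** 2, axis=-1)
    # Mean nearest-neighbor distance from a to b plus from b to a.
    return float(d2.min(axis=1).mean() + d2.min(axis=0).mean())

rng = np.random.default_rng(0)
cloud = rng.random((100, 3))
same = chamfer_distance(cloud, cloud)  # identical clouds
unit = chamfer_distance(np.array([[0.0, 0.0, 0.0]]),
                        np.array([[1.0, 0.0, 0.0]]))  # two points one unit apart
```

Identical clouds give a distance of zero, and the value grows as the reconstructed points drift away from the reference shape.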

Rezaei et al. [79] employ GAN in three stages: lung segmentation, tumor segmentation, and 3D lung tumor reconstruction. Lung segmentation is performed using snake optimization, which also reduces the dimensions. Tumor segmentation is achieved using Gustafson–Kessel (GK) clustering. For the reconstruction phase, features are first extracted from 2D CT scans of tumors using a pre-trained VGG model, and are then fed into a long short-term memory (LSTM) network, which outputs compressed features. These compressed features are used as input for the GAN generator, which reconstructs the 3D image of the lung tumor. Transfer learning from 2D images is also employed, which speeds up the training process. A possible extension is the application of this model to other medical applications, such as COVID-19 diagnosis.

Safari et al. [81] use MedFusionGAN, which is an unsupervised GAN designed for medical image fusion. Its goal is to merge CT scans, which capture bone structures, with high-resolution 3D T1-Gd MRI, known for soft tissue contrast. This fusion produces images that better delineate tumor regions and reduce the time required for radiotherapy planning. MedFusionGAN employs a generator to blend the information from MRI and CT images and a PatchGAN discriminator to distinguish between the original and fused images. This model outperforms other approaches and enhances treatment accuracy by reducing the radiation exposure to healthy organs, improving auto-segmentation algorithms, radiotherapy planning, and tumor delineation. To fill a possible gap, this approach can be extended to other organs.

Tudosiu et al. [84] propose a model using VQ-VAE and transformer, using Voxel-Based Morphometry (VBM) and Geodesic Information Flows (GIF), which demonstrates that the synthetic data retains the morphological features of real data. The model uses VQ-VAE, which compresses high-resolution images into a latent space, and a transformer that captures relationships within the compressed representations. Compared to a baseline VAE model, the proposed VQ-VAE significantly outperforms by generating realistic brain images. The model captures both healthy and diseased brain structures accurately. Future work should focus on adding conditioning mechanisms, enhancing diversity, and extending the model to include disease progression and privacy preservation features.

Poonkodi et al. [87] propose 3D-MedTranCSGAN, a 3D medical image transformation system that combines non-adversarial loss components with a Cyclic Synthesized GAN (CSGAN). The generator uses 3DCascadeNet, which refines image transformations by combining encoding-decoding pairs (which improve the visual output) with skip links for better resolution and smoother results. A PatchGAN discriminator assesses the difference between the original and synthesized images, while non-adversarial losses such as content, perception, and style transfer losses enhance the perceptual quality of the transformed images. The 3D-MedTranCSGAN performs multiple tasks without modifying its core design, such as transforming PET to CT images, reconstructing CT to PET, correcting motion artifacts in MR images, and denoising PET images. The model’s performance was tested on various tasks and compared with other GAN-based models like pix2pix, PAN, Fila-sGAN, CycleGAN, and MedGAN, consistently outperforming them in accuracy and quality. The model’s outputs are not intended for clinical diagnostics, as they do not provide significant investigative information; instead, the model is more suited for post-processing tasks. Future improvements could include incorporating hinge loss and Wasserstein loss to enhance adversarial training and exploring the use of transformed images for diagnostic tasks.

Jung et al. [88] present a novel cGAN with a 3D discriminator that uses an attention-based 2D generator to create realistic 2D image slices, a 2D discriminator to ensure these slices meet the target condition, and a 3D discriminator to evaluate the continuity and structural coherence of consecutive 2D slices, simulating a full 3D volume. This allows the model to account for 3D structure without the computational burden typically associated with 3D cGANs. The 3D discriminator checks groups of 2D slices generated in the same mini-batch to ensure continuous and consistent output across all directions. Future work can apply this model to earlier time points in longitudinal datasets to examine its accuracy in predicting brain deformations in specific subjects. It can also be extended to multiple datasets, including OASIS, to analyze the entire brain region, including subcortical structures, and detect broader brain deformations.

Aydin et al. [90] adapt the StyleGANv2 architecture to work with 3D data to generate synthetic Time-of-Flight Magnetic Resonance Angiography (TOF MRA) volumes of the Circle of Willis (CoW), highlighting the potential of this approach for broader medical imaging applications. This model generated realistic and diverse TOF MRA volumes of CoW when analyzed visually.

King et al. [91] use -SN-GAN, consisting of a 3D DCGAN with spectral normalization regularization and an additional encoder. Spectral normalization regularization counteracts the vanishing gradients problem that occurs for small sample sizes, and the encoder alleviates mode collapse. The model produces synthetic images with the highest level of quality and variety, as demonstrated through both visual assessments and numerical evaluations. These synthetic images improve the accuracy of the diagnostic classifier.
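Spectral normalization divides a layer's weights by an estimate of their largest singular value, usually obtained by power iteration. The sketch below illustrates the idea on a toy 3D convolution kernel; the function name `spectral_normalize`, the number of iterations, and the kernel shape are assumptions and do not reproduce the cited model.

```python
import numpy as np

def spectral_normalize(weight, n_iter=100, seed=0):
    """Scale `weight` so that its largest singular value is approximately 1."""
    rng = np.random.default_rng(seed)
    w = weight.reshape(weight.shape[0], -1)   # flatten to (out_ch, everything else)
    u = rng.standard_normal(w.shape[0])
    for _ in range(n_iter):
        # Power iteration: alternate between right and left singular vectors.
        v = w.T @ u
        v /= np.linalg.norm(v) + 1e-12
        u = w @ v
        u /= np.linalg.norm(u) + 1e-12
    sigma = float(u @ w @ v)                  # estimated largest singular value
    return weight / sigma, sigma

rng = np.random.default_rng(1)
weight = rng.standard_normal((8, 4, 3, 3, 3))  # toy 3D convolution kernel
normalized, sigma = spectral_normalize(weight)
```

In practice, deep learning frameworks keep the vector `u` between training steps and run only one iteration per step; the many iterations here simply make the estimate accurate in a single call.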

Zhou et al. [92] introduce 3D Vector-Quantization GAN (3D-VQGAN) with a transformer using masked token modeling to generate high-resolution, diverse 3D brain tumor ROIs, which are used to enhance the classification of brain tumors. The model combines CNN with an auto-regressive transformer, preventing mode collapse and enabling high-resolution image generation. The CNN-based autoencoder extracts local features and the transformer captures long-term interrelations, resulting in competitive performance and improved classification across different brain tumor types. The authors highlight that the synthetic data generated can be directly used in tumor classification tasks, validating the superiority of their method. This approach achieves significant performance improvements, surpassing baseline models.

Zhou et al. [94] present 3D-VQGAN-cond using a class-conditioned masked transformer. This framework generates high-resolution and diverse 3D ROIs of brain tumors for both low-grade and high-grade gliomas (LGG/HGG). A temporal-agnostic masking strategy is used to help the model learn relationships between semantic tokens in the latent space. To generate the ROIs, they start with a class token (0 for LGG or 1 for HGG) and have the transformer complete the remaining indices. The generated LGG and HGG ROIs from this method are compared with baseline models, training a classification model to confirm that the proposed 3D-VQGAN-cond model improves the ability to distinguish between LGG and HGG tumors, achieving better results compared to baseline models.

Corona et al. [95] propose a method built on a Swin UNEt-TRansformer (Swin UNETR) backbone that concatenates 2D views into higher-channel 3D volumes. This turns the 3D reconstruction task into a straightforward 3D-to-3D generative modeling problem, avoiding more complex approaches while retaining key information from the 2D inputs, and neural optimal transport is used for fast, stable training. This approach integrates signals across multiple views without requiring precise alignment, producing 3D reconstructions with limited training. Compared to other models, Swin UNETR produced the highest-quality outputs. Treating the 2D-to-3D task as a 3D-to-3D problem also improves the correlation between the generated 3D volumes and the 2D inputs. However, this method performs a non-linear transformation in a single step, which introduces uncertainty and blurriness in the outputs, so an iterative, multi-step approach, such as a probabilistic diffusion model, could improve performance. Additionally, while the method is somewhat invariant to input alignment, optimizing the alignment, especially for high-frequency details, could enhance accuracy.

Kim et al. [97] propose Volumetric Imitation GAN (VI-GAN), with a generator incorporating 3D U-Net and ResNet framework for feature extraction and up-sampling, and a 3D convolution-based Local Feature Fusion Block (LFFB) to handle features at multiple scales. The discriminator uses 3D convolution and assesses how closely the generated volumes match real anatomical data. VI-GAN reduces errors in the relationships between neighboring slices, producing more accurate tomographic images that closely resemble the ground truth, particularly for complex anatomical structures like the lumbar vertebra, hip bone, and liver, outperforming other methods across various metrics.

Sun et al. [98] propose DU-CycleGAN, with a U-Net generator and a U-Net-like discriminator, incorporating an encoder and decoder, ensuring a pixel-by-pixel correspondence between input and output image. These two components are connected via skip connections, which improve the resolution of the discriminator. A Content-Aware Re-Assembly of Features (CARAFE) is incorporated into both generator and discriminator, consisting of two parts; one part calculates a reassembly kernel based on the content of specific target areas, while the other part recombines features using those kernels. By stacking adjacent 2D slices into a 2.5D slice, the model captures 3D information without needing the memory-heavy 3D convolutions typically required for 3D image generation. DU-CycleGAN excels in both 2D and 3D image generation, and the model is capable of converting MRI images to CT images using poorly matched data pairs and still produces better results compared to existing methods.
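The 2.5D input construction can be sketched as follows: each slice is stacked with its neighbors along a channel axis so that a 2D network sees some through-plane context. The function name `stack_adjacent_slices`, the edge padding, and the use of one neighbor on each side are illustrative assumptions.

```python
import numpy as np

def stack_adjacent_slices(volume, context=1):
    """Turn a (D, H, W) volume into (D, 2*context+1, H, W) stacks of neighboring slices."""
    depth = volume.shape[0]
    # Repeat the first and last slices so that border slices also have neighbors.
    padded = np.pad(volume, ((context, context), (0, 0), (0, 0)), mode="edge")
    # Channel i holds the slice at offset (i - context) from the center slice.
    windows = [padded[i:i + depth] for i in range(2 * context + 1)]
    return np.stack(windows, axis=1)

rng = np.random.default_rng(0)
volume = rng.random((10, 32, 32))
stacks = stack_adjacent_slices(volume, context=1)
```

Each stack can be processed with ordinary 2D convolutions, which is where the memory saving relative to full 3D convolutions comes from.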

Mensing et al. [99] propose a GAN model largely based on FastGAN. Linear conditioning is applied in both the generator and discriminator. The discriminator reduces feature map resolution by half using strided convolutions and applies the Leaky ReLU activation function after each convolution. Both the generator and discriminator utilize Skip-Layer-Excitation layers, which connect blocks at different network depths, aiding in error propagation to the earlier layers of the model. This model outperforms 3D-StyleGAN.
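The halving of resolution by a strided convolution follows from the standard output-size formula, sketched below together with Leaky ReLU. The kernel size of 4, padding of 1, starting resolution of 64, and negative slope of 0.2 are common choices assumed here for illustration, not values reported for this model.

```python
import numpy as np

def conv_output_size(size, kernel=4, stride=2, padding=1):
    """Spatial output size of a convolution along one axis."""
    return (size + 2 * padding - kernel) // stride + 1

def leaky_relu(x, negative_slope=0.2):
    """Leaky ReLU: identity for non-negative inputs, small slope for negative inputs."""
    return np.where(x >= 0, x, negative_slope * x)

# Resolution along one axis after each of four stride-2 discriminator blocks.
sizes = [64]
for _ in range(4):
    sizes.append(conv_output_size(sizes[-1]))

activations = leaky_relu(np.array([-1.0, 2.0]))
```

With these settings the resolution follows 64, 32, 16, 8, 4, and negative activations are scaled down rather than zeroed, which keeps gradients flowing through the discriminator.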

Chithra et al. [106] combine different GANs (DCGAN, Pix2Pix GAN, and WGAN) with style transfer techniques. The synthetic MRI images produced by GANs are passed into a style neural network, which applies texture-based transformations. The original 3D MRI images from the dataset are input as content images to the style neural network. This network uses a pre-trained VGG model with 16 layers and 5 pooling layers to transfer textures from one image to another while preserving the core information of the original image. This technique produces synthetic MRI images with high accuracy.

Gao et al. [107] introduce 3DSRNet using GAN, where the generator uses an encoder–decoder network architecture. The model integrates a CNN and a transformer model in a framework called Spine Reconstruction (SRCT). The CNN focuses on capturing the detailed surface information of spine, while the transformer captures the global structure of the skeleton, for more accurate spine reconstruction. The texture extraction (SRTE) method is used to capture low-level texture details from the spine images, improving the model’s ability to reconstruct the 3D spine, outperforming many existing algorithms, making it a useful tool for assisting orthopedic surgeons during diagnosis.

Xue et al. [109] propose a Classification-Guided GAN with Super Resolution Refinement (CG-3DSRGAN), comprising three components: a multi-task reconstruction network (ML-Net), which uses a 3D U-Net structure for image reconstruction, with an additional classification head sharing the same encoder, to generate an initial prediction of the synthetic PET image along with dose reduction level. A super resolution network (Contextual-Net) refines the initial result to preserve high-dimensional features and contextual details. A discriminator based on pix2pix architecture using 3D operations is used to verify the authenticity of the refined image. CG-3DSRGAN generates high-quality synthetic PET images with reduced tracer doses, making it a valuable tool for enhancing PET imaging in clinical practice.

Zhang et al. [110] propose a Pyramid Transformer Network (PTNet3D), which incorporates transformer/performer layers, skip connections, and multi-scale pyramid representation. The transformer block, used in the bottleneck layer, leverages self-attention to capture global dependencies across the latent features. The performer-based encoder (PFE) and decoder (PFD) reduce computational complexity, enabling the model to handle high-resolution 3D blocks. The pyramid representation layer avoids information loss by retaining fine structural details of the brain, resulting in better accuracy and efficiency in MRI synthesis tasks.

Pradhan et al. [111] pre-process the input data for noise removal, resampling, rescaling, and normalization, followed by augmentation. Noise includes air, soft tissues, and fat. Resampling and rescaling convert images to a uniform format. Normalization is used to standardize pixel values, optimize the learning process, and reduce the computation costs. Data augmentation techniques, such as angle and axis rotations, are applied to expand the dataset. A customized CGAN is proposed, inspired by the U-Net architecture, with a three-path design, comprising contracting, bottleneck, and expanding paths. The model can predict views from all angles (0° to 360°), providing a comprehensive 3D representation of bones and joints.
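A minimal sketch of the intensity clipping and normalization step is given below. It assumes CT values in Hounsfield units and a bone-oriented window; the window limits and the function name `window_and_normalize` are illustrative assumptions, not the settings used by the authors.

```python
import numpy as np

def window_and_normalize(volume_hu, lower=-200.0, upper=1000.0):
    """Clip intensities to [lower, upper] and rescale them to the range [0, 1]."""
    # Values below `lower` (e.g., air) and above `upper` are saturated.
    clipped = np.clip(volume_hu, lower, upper)
    return (clipped - lower) / (upper - lower)

sample = np.array([-1000.0, 400.0, 2000.0])   # air, mid-window value, dense material
normalized = window_and_normalize(sample)     # 0.0, 0.5, 1.0
```

Bringing all scans to the same intensity range is what allows the network to treat images from different acquisitions uniformly.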

Xia et al. [112] propose a collaborative consent GAN named AwCPM-Net, with a generator with two branches (one for cardiac phase retrieval (CPR) and the other for membrane border extraction (MBE)) and a dual-task discriminator that evaluates both tasks together. The CPR branch uses a self-supervised learning approach, does not require labeled data, and focuses on detecting inter-frame deformation fields to identify cardiac phases. The MBE branch is semi-supervised and handles 3D segmentation, requiring fewer annotations than traditional models. These two tasks are connected through a warming-up connection so that each enhances the other’s performance. AwCPM-Net simultaneously performs both CPR and MBE in a single step. The GAN improves dual-task learning by aligning predicted outcomes with ground-truth data using high-dimensional feature matching. This model outperforms existing CPR methods in capturing motion signals and cardiac phases and detects arterial wall structures better than current MBE techniques. The reconstructed 3D artery anatomy allows for accurate localization and assessment of vessel stenosis.

Xing et al. [120] propose DP-GAN+B to reconstruct 3D CT volumes from 2D X-ray images. This network uses an encoder–decoder structure, where extracted features are integrated and processed through a novel sampling decoder to obtain the 3D CT output, outperforming its baseline model. Fujita et al. [121] use two GAN-based models, CycleGAN and X2CT-GAN, to generate 3D CT images from X-ray images, successfully achieving the 3D reconstruction and exhibiting exceptional PSNR and SSIM. Touati et al. [122] use a dual CT-synthesis GAN model, composed of a dual-branch 2D and multi-planar generator network integrating dual feature representation learning, and a discriminator network. This model not only considers 2D query image features, but also captures the 3D information by modeling different 2D planar views of the volumetric input data.
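For reference, PSNR between a reference volume and a reconstruction can be computed as in the sketch below. The function name `psnr` and the assumption that intensities are scaled to a data range of 1.0 are illustrative choices.

```python
import numpy as np

def psnr(reference, reconstruction, data_range=1.0):
    """Peak signal-to-noise ratio in decibels."""
    mse = np.mean((reference - reconstruction) ** 2)
    if mse == 0:
        return float("inf")   # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))

reference = np.zeros((8, 16, 16))
reconstruction = reference + 0.1            # uniform error of 0.1, so MSE = 0.01
value = psnr(reference, reconstruction)     # 10 * log10(1 / 0.01) = 20 dB
```

Higher values indicate a closer match, but PSNR is purely voxel-wise, which is why it is usually reported alongside a structural metric such as SSIM.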

Bazangani et al. [124] propose a separable convolution-based Elicit GAN (E-GAN), where the discriminator is a 3D fully convolutional network and the generator features an encoder and decoder. The encoder uses two major components: Elicit network (extracts spatial information from FDG-PET) and Sobel filter (detects edges and boundaries between different tissues). This model produces a high-quality 3D T1-weighted MRI exhibiting good performance compared to SOTA methods.
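The edge detection performed by a Sobel filter can be sketched in 2D as follows. This is a plain NumPy illustration on a toy image; the function name `sobel_magnitude`, the edge padding, and the 2D setting are assumptions and do not reproduce the E-GAN encoder.

```python
import numpy as np

def sobel_magnitude(image):
    """Gradient magnitude of a 2D image using 3x3 Sobel kernels."""
    kx = np.array([[-1.0, 0.0, 1.0],
                   [-2.0, 0.0, 2.0],
                   [-1.0, 0.0, 1.0]])   # horizontal gradient kernel
    ky = kx.T                           # vertical gradient kernel
    h, w = image.shape
    padded = np.pad(image, 1, mode="edge")
    gx = np.zeros((h, w))
    gy = np.zeros((h, w))
    # Accumulate the weighted, shifted copies of the image (cross-correlation).
    for i in range(3):
        for j in range(3):
            patch = padded[i:i + h, j:j + w]
            gx += kx[i, j] * patch
            gy += ky[i, j] * patch
    return np.hypot(gx, gy)

image = np.zeros((8, 8))
image[:, 4:] = 1.0                      # vertical boundary between two "tissues"
edges = sobel_magnitude(image)
```

The response is zero in the flat regions and peaks in the two columns adjacent to the boundary, which is the kind of tissue-boundary cue the encoder can exploit.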

Three-Dimensional Image Generation and Segmentation: The publications featuring 3D medical image generation and segmentation are explained. Prakash et al. [75] propose SculptorGAN with Weight Pruning U-Net (WP-UNet), where 3D images are reconstructed from 2D slices, after processing and interpolation, maintaining spatial and contextual continuity through weight pruning. Kidney and kidney tumors are segmented using another WP-UNet model specifically tuned for this task. This model performs voxel-wise classification, extracting detailed features from the reconstructed 3D images. This approach leads to a 35% reduction in reconstruction time and a 20% improvement in segmentation accuracy, setting a new benchmark in medical imaging by improving both 3D reconstruction and segmentation precision, demonstrating the potential to significantly enhance diagnostic and therapeutic applications. Through depthwise separable convolutions and pruning, the approach ensures both computational efficiency and detailed feature extraction, enabling high-accuracy identification of renal tissues and tumors. However, it needs to be extended to other modalities and beyond renal imaging.

Subramaniam et al. [82] claim that this is the first work to present GANs that generate realistic 3D TOF-MRA volumes along with segmentation labels. Their approach involves four variants of 3D Wasserstein GANs (WGAN), including gradient penalty (GP), spectral normalization (SN), and mixed precision models (SN-MP and c-SN-MP). The models using mixed precision yielded the best results, with the lowest FID scores (measuring image quality) and optimal PRD curves (capturing data quality and variety). A key innovation is their ability to generate both 3D image patches and corresponding labels for brain vessel segmentation, which enables training deep learning models like 3D U-Nets in an end-to-end framework. The c-SN-MP model led to the best segmentation performance based on the DSC and bAVD metrics. The study used TOF-MRA data from 137 patients with cerebrovascular disease from two datasets: PEGASUS and 1000Plus. The results demonstrate the benefits of mixed precision for generating realistic 3D volumes and labels. This research paves the way for better sharing of labeled 3D medical data, which could improve deep learning model generalizability and advance medical research in cerebrovascular diseases.
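For reference, the Dice similarity coefficient (DSC) used to score the segmentations can be computed on binary 3D masks as in the minimal sketch below. The function name and the toy masks are illustrative assumptions; the bAVD metric is not shown.

```python
import numpy as np


def dice_coefficient(pred, target):
    """Dice similarity coefficient between two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    denominator = pred.sum() + target.sum()
    if denominator == 0:
        return 1.0  # both masks empty: treated as perfect agreement
    intersection = np.logical_and(pred, target).sum()
    return float(2.0 * intersection / denominator)


# Toy masks: two 4x4x4 cubes offset by one voxel along the first axis.
target_mask = np.zeros((8, 8, 8), dtype=bool)
target_mask[2:6, 2:6, 2:6] = True
pred_mask = np.zeros((8, 8, 8), dtype=bool)
pred_mask[3:7, 2:6, 2:6] = True
print(dice_coefficient(pred_mask, target_mask))  # 48 shared voxels out of 64 + 64
```

A value of 1 means perfect overlap and 0 means none; thin structures such as vessels are penalized heavily by small misalignments, which is why a distance-based metric is reported alongside it.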

Zi et al. [83] design a DL model built on an attention-based U-Net with an encoder–decoder structure, which enhances segmentation and reconstruction by dynamically prioritizing critical image regions. The model is trained on large public datasets such as ACDC (cardiac MRI), BraTS (brain tumor MRI), and LiTS (liver tumor CT). Pre-processing steps such as resampling and data augmentation (adjusting brightness, contrast, rotations, etc.) are applied, and pixel values are normalized to improve model stability and accelerate convergence during training. The model achieved strong results, and the integration of the self-attention mechanism significantly improved both the segmentation and reconstruction tasks.
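The pre-processing steps mentioned above can be sketched as follows. This is a generic example, assuming z-score normalization and a simple brightness, contrast, and 90-degree rotation augmentation; the exact operations and ranges used by Zi et al. are not given here, so all parameter values are hypothetical.

```python
import numpy as np


def zscore_normalize(volume, eps=1e-8):
    """Rescale a volume to zero mean and (approximately) unit variance."""
    volume = np.asarray(volume, dtype=np.float64)
    return (volume - volume.mean()) / (volume.std() + eps)


def augment(volume, rng, brightness=0.1, contrast=0.1):
    """Random contrast gain and brightness shift, then a random in-plane 90-degree rotation."""
    gain = 1.0 + rng.uniform(-contrast, contrast)
    shift = rng.uniform(-brightness, brightness)
    jittered = gain * volume + shift
    quarter_turns = int(rng.integers(0, 4))
    return np.rot90(jittered, k=quarter_turns, axes=(1, 2))


# Hypothetical scan with square in-plane slices, so rotation preserves the shape.
rng = np.random.default_rng(0)
scan = rng.random((4, 16, 16)) * 100.0
normalized = zscore_normalize(scan)
augmented = augment(normalized, rng)
print(normalized.mean().round(6), normalized.std().round(6), augmented.shape)
```

Normalizing before augmentation keeps the jitter ranges independent of the scanner's intensity scale.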

Tiago et al. [102] propose a conditional GAN based on the Pix2pix model, which is extended to 3D by incorporating a 3D U-Net as the generator. This translates anatomical label data into echocardiography-like images, enabling the model to perform paired domain translation. This approach introduces an automatic data augmentation pipeline that uses the 3D GAN model to generate additional synthetic 3D echocardiography images and labels. These GAN-generated datasets are valuable for training deep learning models, particularly for tasks like heart segmentation, providing a useful resource for cardiac imaging when real patient data is limited.

Sun et al. [105] propose three models (Per-CycleGAN-CACNN, DualCMP-GAN-CACNN, and DualCMP-GAN-3D ResU) for brain tumor and stroke image generation and lesion segmentation using GAN and 3D ResU-Net architectures. Per-CycleGAN-CACNN generates the corresponding 2D target images and recombines the slices into a 3D image, which is then segmented using the CACNN network, producing effective segmentation results. DualCMP-GAN-CACNN focuses on both global and local image details to generate high-precision images by maintaining consistency at different scales, enhancing image quality and lesion segmentation accuracy. DualCMP-GAN-3D ResU uses real data from two modalities and simulated data from DualCMP-GAN to perform lesion segmentation with a 3D Residual U-Net. It shows superior performance, especially in the segmentation of stroke lesions. These three models offer valuable support for clinical decision-making and treatment by providing clear and precise images.

Three-Dimensional Image Segmentation: The publications featuring 3D medical image segmentation are explained. Elloumi et al. [80] use PGGAN combined with VGG16+U-Net and ResNet50+U-Net, which are applied in the generator for image segmentation. The discriminator is constructed using pix2pix PGGAN, which helps characterize images more accurately. A DCNN is also used to further enhance the performance of this hybrid architecture, leading to highly accurate segmentation results. This study can be extended to other organs.

Bui et al. [85] use SAM3D, which combines SAM’s transformer-based encoder with a lightweight 3D CNN decoder to handle volumetric data more efficiently. The decoder includes 3D convolutional blocks with skip connections, offering an efficient approach to 3D medical image segmentation. Unlike methods that prioritize precision at the cost of increased complexity and training time, SAM3D is simple and computationally efficient while still achieving high segmentation performance. The model uses a frozen SAM encoder to extract features and a custom 3D decoder to capture depth relationships, addressing challenges like weak boundaries in medical images.

Tyagi et al. [86] address two major challenges in medical image segmentation: data scarcity and class imbalance, which can lead to overfitting and poor performance. They propose a novel method CSE-GAN based on a 3D CGAN for lung nodule segmentation. The generator is modeled with a concurrent spatial and channel squeeze and excitation (CSE) module, improving the segmentation performance. It learns features from input patches using ground truth as a reference during training. The discriminator is a simple classification network that uses a spatial squeeze and channel excitation (sScE) module to differentiate between real and fake segmentation masks. This helps in the channel-wise recalibration of feature maps and improves the classification accuracy. To avoid overfitting, they use patch-based training. Back-propagation is applied to both networks to update weights, improving their performance over time. The CSE-GAN outperforms other tested network architectures, such as various U-Net models and R2UNet, highlighting its effectiveness in lung nodule segmentation, also proving its generalizability.
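The idea of concurrent spatial and channel squeeze and excitation can be illustrated with a forward pass in NumPy. The sketch below is a generic version of the technique, not the CSE-GAN code: the weight shapes, the reduction ratio, and the choice to merge the two branches by element-wise summation are assumptions made for illustration.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_se(features, w1, w2):
    """Squeeze over space, then gate each channel. features: (C, D, H, W)."""
    squeezed = features.mean(axis=(1, 2, 3))      # (C,)
    hidden = np.maximum(w1 @ squeezed, 0.0)       # (C // r,), ReLU
    gates = sigmoid(w2 @ hidden)                  # (C,)
    return features * gates[:, None, None, None]


def spatial_se(features, w):
    """Squeeze over channels with a 1x1x1 projection, then gate each voxel."""
    gates = sigmoid(np.einsum("c,cdhw->dhw", w, features))  # (D, H, W)
    return features * gates[None]


def concurrent_se(features, w1, w2, w):
    """Recalibrate along channels and space concurrently (branches summed here)."""
    return channel_se(features, w1, w2) + spatial_se(features, w)


# Hypothetical feature map: 8 channels on a 4x4x4 patch, reduction ratio r = 4.
rng = np.random.default_rng(0)
feature_map = rng.normal(size=(8, 4, 4, 4))
w1 = rng.normal(size=(2, 8))
w2 = rng.normal(size=(8, 2))
w = rng.normal(size=8)
recalibrated = concurrent_se(feature_map, w1, w2, w)
print(recalibrated.shape)
```

In a trained network the three weight arrays are learned, so the gates emphasize informative channels and voxels rather than the random ones produced here.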

Ge et al. [89] propose an average super-resolution generative adversarial network (ASRGAN). The generator is a 3D convolutional network with three multi-path average blocks of convolutional layers, followed by instance normalization and a rectified linear unit (ReLU) activation function. It produces thin-slice CT images, with feature extraction and image reconstruction learned end-to-end at the same time. The discriminator contains five convolutional layers followed by a ReLU activation function and instance normalization, where features are captured by convolutional layers with different kernel sizes and strides, ensuring realistic reconstructions. The network also uses a segmentation process that focuses on both rough and detailed segmentation stages, similar to the 3D U-Net structure. ASRGAN demonstrates strong generalization across different CT scanner models without requiring extra retraining, outperforming other methods.

Liu et al. [93] propose a 3D Edge-aware Attention GAN (3D EAGAN), which pairs an edge-aware segmentation network (EASNet) with a discriminator network that distinguishes between predicted and real prostates. The EASNet is built on a U-Net encoder–decoder backbone and incorporates several components: a detail compensation module (DCM), four 3D spatial and channel attention modules (3D SCAM), an edge enhancement module (EEM), and a global feature extractor (GFE). The proposed method significantly improved performance metrics compared to SOTA segmentation methods.

Çelik et al. [96] propose Vol2SegGAN. Pre-processing involves steps such as brain region extraction, dataset label editing, MNI152 registration, and sampling. Segmentation is then performed using a generator incorporating Attention Context Fusion (ACFP) and a Position Attention Mechanism (PAM), and a discriminator that distinguishes between real and fake data. The model performed best in segmenting cerebrospinal fluid, gray matter, and white matter. It shows potential for use in the training of medical professionals.

Vagni et al. [100] adapted Vox2Vox GAN, originally proposed by Cirillo et al. [152]. The Vox2Vox generator uses a combination of U-Net and ResNet architectures. It consists of an encoder–decoder structure connected by skip connections at each level, with a bottleneck containing four residual blocks. The encoder processes 3D input images through convolutional layers to extract hierarchical features, while the decoder upscales the features back to their original spatial dimensions using up-convolutions. The discriminator is a CNN classifier following a PatchGAN style, which classifies each patch of an image as real or fake. This model successfully performed auto-segmentation of the bladder, rectum, and femoral heads from pelvic MRIs with high accuracy, indicating that it could be a robust tool for medical segmentation.
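A PatchGAN-style discriminator scores overlapping patches rather than the whole image, and the patch size follows from the receptive field of its convolution stack. The sketch below computes that receptive field and the size of the output score grid along one axis. The layer settings are those of the widely used 70-pixel PatchGAN from the 2D pix2pix work and are assumed here for illustration; they are not necessarily the settings used by Vagni et al.

```python
def receptive_field(layers):
    """Receptive field along one axis for (kernel, stride) layers in input-to-output order."""
    field = 1
    for kernel, stride in reversed(layers):
        field = (field - 1) * stride + kernel
    return field


def output_size(size, layers, padding=1):
    """Length of the output score grid along one axis."""
    for kernel, stride in layers:
        size = (size + 2 * padding - kernel) // stride + 1
    return size


# Assumed stack: three stride-2 and two stride-1 convolutions, all with kernel size 4.
PATCHGAN_LAYERS = [(4, 2), (4, 2), (4, 2), (4, 1), (4, 1)]
print(receptive_field(PATCHGAN_LAYERS))   # patch extent seen by each output score
print(output_size(256, PATCHGAN_LAYERS))  # scores per axis for a 256-voxel axis
```

With these settings each output score judges a 70-voxel extent along every axis, and a 256-voxel axis yields 30 scores; in 3D the same arithmetic applies independently to depth, height, and width.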

Kanakatte et al. [101] implement a 3D GAN with a generator that includes three main components: an encoder, a decoder with skip connections, and a bottleneck. The bottleneck consists of 3D convolutional layers that reduce the number of parameters and improve feature representation. The feature maps from each encoder layer are concatenated with the respective bottleneck layers. Skip connections between the encoder and decoder help recover features lost during down-sampling, which is crucial for preserving important medical imaging details. The discriminator incorporates 3D convolution layers and follows the PatchGAN architecture. The model achieves high accuracy and, by effectively incorporating 3D contextual information, matches the performance of 2D models for some classes.

Elloumi et al. [103] use Pix2pix GAN to generate artificial medical images and semi-supervised DCGAN for 3D lung segmentation. The generator follows a U-Net design with one convolution layer in both the encoder and decoder blocks to conserve GPU memory. An improved 3D U-Net network is integrated as the discriminator. Patient data is safeguarded through a watermarking technique using the Schur vector. The simulation results demonstrate that this approach effectively combines deep learning through GANs for medical image segmentation while simultaneously securing the images with an appropriate watermarking algorithm.

Sharaby et al. [104] present a modified Pix2Pix GAN model, where the generator uses a residual U-Net-based model with convolutional blocks for feature extraction and transformation. The encoder–decoder structure captures image features during downsampling and reconstructs the image during upsampling. The discriminator, with convolutional layers, downscales the inputs to focus on high-level features and distinguish between real and generated images, improving the segmentation performance, which is particularly effective in renal diagnosis.

Kermi et al. [108] use a 3D GAN where the generator employs a U-Net-based encoder–decoder architecture, enhanced with a bottleneck block, and the discriminator is structured as a PatchGAN, to segment HGG and LGG glioma sub-regions in 3D brain MRI.

He et al. [113] embed a 3D U-Net into a DCGAN framework to create a semi-supervised 3D liver segmentation algorithm. The 3D U-Net acts as the discriminator to differentiate real from generated images and produce the final segmentation. DCCN is used to generate synthetic images by restoring feature maps from real images. Data pre-processing is also emphasized for better training, and the U-Net model, originally designed for 2D medical image segmentation, is extended to 3D by modifying all layers to handle 3D data.

Amran et al. [117] use a brain-vessels generative-adversarial-network (BV-GAN) to segment brain blood vessels, a task that typically suffers from memory and imbalance-related issues. The approach shortens the analysis time and improves the diagnosis of cerebrovascular vessel disorders.

Three-Dimensional Image Transformation: The publications featuring 3D medical image transformation are explained. Zhou et al. [78] use a Segmentation Guided Style-based Generative Adversarial Network (SGSGAN), which incorporates a style-based generator. Style modulation controls and adjusts the hierarchical features during image translation to generate realistic textures. Because different features carry varying levels of importance, the model adjusts them accordingly after each convolutional layer. The discriminator conducts adversarial training with the generator. A segmentation-guided strategy is used to enhance the quality of the images, especially in clinically relevant regions of interest (ROIs). The segmentation network generates masks of specific regions in the image (e.g., liver, brain, kidney, and bladder), which guide the generator to improve the key anatomical regions in the generated image. This method could be extended to other medical imaging tasks, such as CT and MRI image translation, and could be enhanced with patch-level synthesis to make better use of style information.

Joseph et al. [114] use a supervised 3D CycleGAN model consisting of two generators (G1, G2), using U-Net with residual connections, and two discriminators (D1, D2) using CNN. G1 maps CBCT to pseudo-FBCT images, and G2 maps pseudo-FBCT images back to CBCT. U-Net includes long skip connections to preserve the structural similarities between CBCT and FBCT images. The model incorporates identity loss and a gradient difference loss (GDL) to improve the accuracy of the transformation. D1 and D2 evaluate these respective images. Results indicate that the proposed CycleGAN model performs well with pseudo-FBCT images closely resembling the real FBCT images.
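The non-adversarial loss terms named above can be sketched as follows. This is an illustrative NumPy version under stated assumptions: the generators are stand-in Python callables, the gradient difference loss uses first-order finite differences with an L1 penalty, and the weights (10, 5, and 1) are hypothetical defaults rather than the values used by Joseph et al. The adversarial terms from D1 and D2 are omitted.

```python
import numpy as np


def l1(a, b):
    """Mean absolute error between two volumes."""
    return float(np.mean(np.abs(a - b)))


def gradient_difference_loss(pred, target):
    """Compare absolute finite-difference gradients along every axis."""
    loss = 0.0
    for axis in range(pred.ndim):
        grad_pred = np.abs(np.diff(pred, axis=axis))
        grad_target = np.abs(np.diff(target, axis=axis))
        loss += float(np.mean(np.abs(grad_pred - grad_target)))
    return loss


def generator_side_losses(cbct, fbct, g1, g2,
                          lambda_cycle=10.0, lambda_identity=5.0, lambda_gdl=1.0):
    """Cycle, identity, and gradient difference terms for paired CBCT/FBCT volumes."""
    pseudo_fbct = g1(cbct)   # G1: CBCT -> pseudo-FBCT
    pseudo_cbct = g2(fbct)   # G2: FBCT -> pseudo-CBCT
    cycle = l1(g2(pseudo_fbct), cbct) + l1(g1(pseudo_cbct), fbct)
    identity = l1(g1(fbct), fbct) + l1(g2(cbct), cbct)
    gdl = gradient_difference_loss(pseudo_fbct, fbct)  # needs paired (supervised) data
    total = lambda_cycle * cycle + lambda_identity * identity + lambda_gdl * gdl
    return {"cycle": cycle, "identity": identity, "gdl": gdl, "total": total}


# Toy paired data in which FBCT is CBCT plus a constant intensity offset.
rng = np.random.default_rng(0)
cbct = rng.random((4, 8, 8))
fbct = cbct + 0.1
losses = generator_side_losses(cbct, fbct, lambda x: x + 0.1, lambda x: x - 0.1)
print({name: round(value, 6) for name, value in losses.items()})
```

In this toy case the stand-in generators invert each other exactly, so the cycle and gradient terms vanish while the identity term penalizes the constant offset each generator applies to inputs already in its target domain.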

Three-Dimensional Image Enhancement: The publications featuring 3D medical image enhancement are explained. Dong et al. [115] improve the quality of 3D MRI by using a Denoising CycleGAN and Enhancement CycleGAN. The Denoising CycleGAN denoises the cine images and the Enhancement CycleGAN enhances the spatial resolution and contrast. This framework enhances the image quality significantly with high computational efficiency.

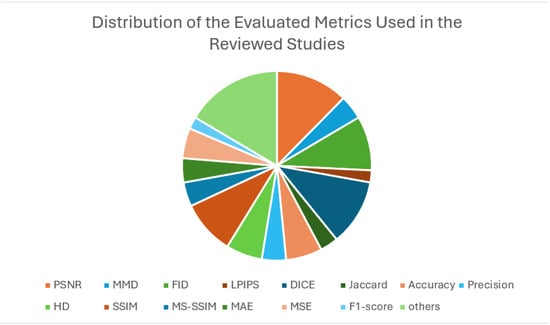

Zhang et al. [116] propose a Super-resolution Optimized Using Perceptual-tuned Generative Adversarial Network (SOUP-GAN), which produces high-resolution, thinner image slices with anti-aliasing and deblurring. In both qualitative and quantitative comparisons, it outperforms conventional resolution-enhancement methods and previous SR work on medical images.
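For context, a conventional resolution-enhancement baseline of the kind such models are compared against is linear interpolation between neighboring slices. The sketch below is one such baseline, written under the assumption that slices are stacked along the first axis; it is not part of SOUP-GAN, and the function name is hypothetical.

```python
import numpy as np


def interpolate_slices(volume, factor):
    """Insert linearly interpolated slices along axis 0 (through-plane direction)."""
    volume = np.asarray(volume, dtype=np.float64)
    depth = volume.shape[0]
    positions = np.linspace(0.0, depth - 1, (depth - 1) * factor + 1)
    lower = np.floor(positions).astype(int)
    upper = np.minimum(lower + 1, depth - 1)
    weight = (positions - lower)[:, None, None]
    return (1.0 - weight) * volume[lower] + weight * volume[upper]


# Toy thick-slice volume whose intensity rises linearly from slice to slice.
thick = np.arange(4, dtype=np.float64)[:, None, None] * np.ones((4, 2, 2))
thin = interpolate_slices(thick, factor=2)
print(thin.shape, thin[:, 0, 0])
```

Interpolation of this kind cannot restore detail lost between slices and tends to blur fine structures, which is the gap that learned super-resolution aims to close.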