Real-Time Deep-Learning-Based Recognition of Helmet-Wearing Personnel on Construction Sites from a Distance

Abstract

1. Introduction

1.1. Hardware-Based Helmet Detection and Worker Identification

1.2. Visual-Based Helmet Detection

1.3. Face-Based Identification

1.4. QR-Based Identification

1.5. Traffic Signs and Symbol Detection

1.6. Human Detection and Tracking

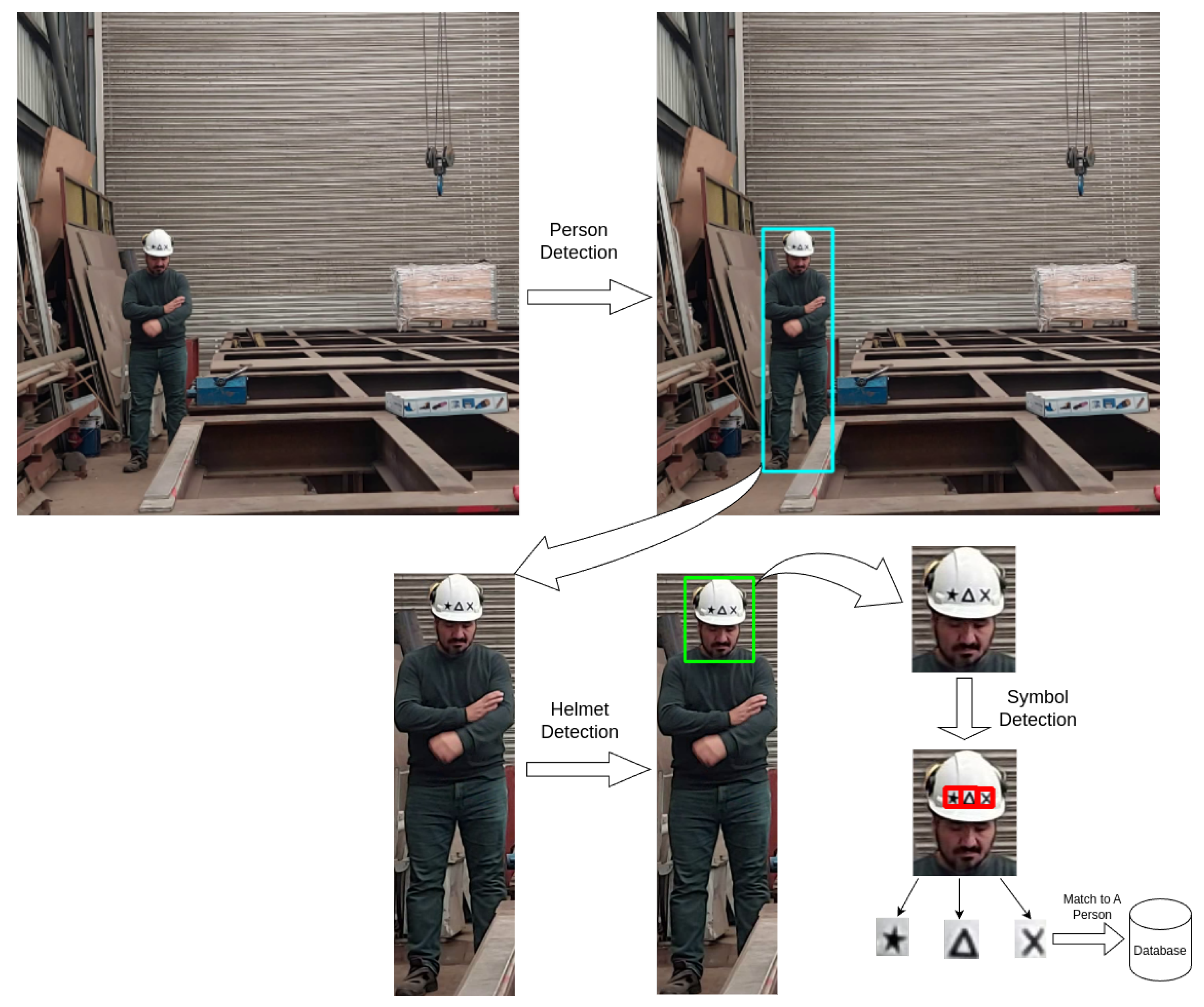

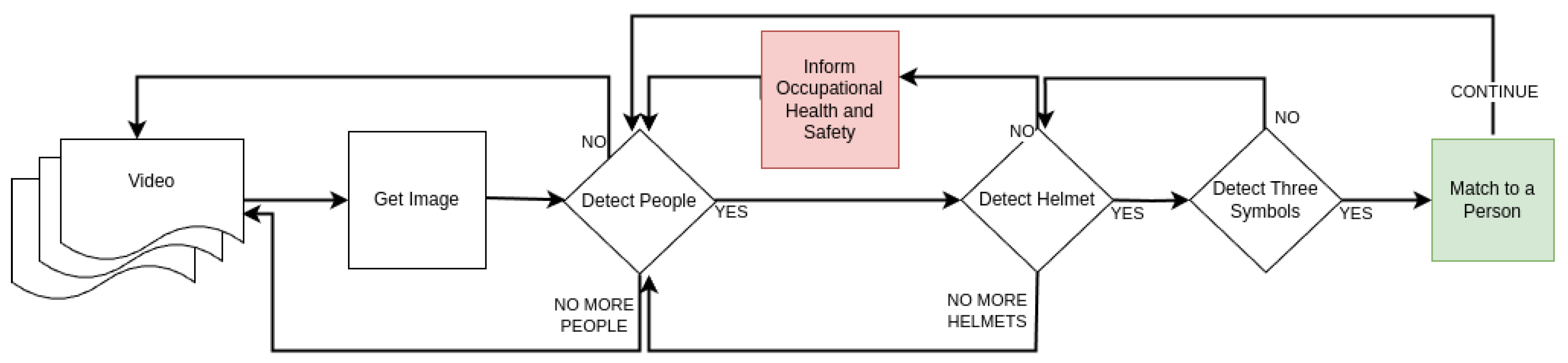

- First, models are trained on publicly available personnel and helmet datasets, and small crops ("mini boxes") of the detected helmets are extracted from the images in order to search for the symbols that encode a worker's identity.

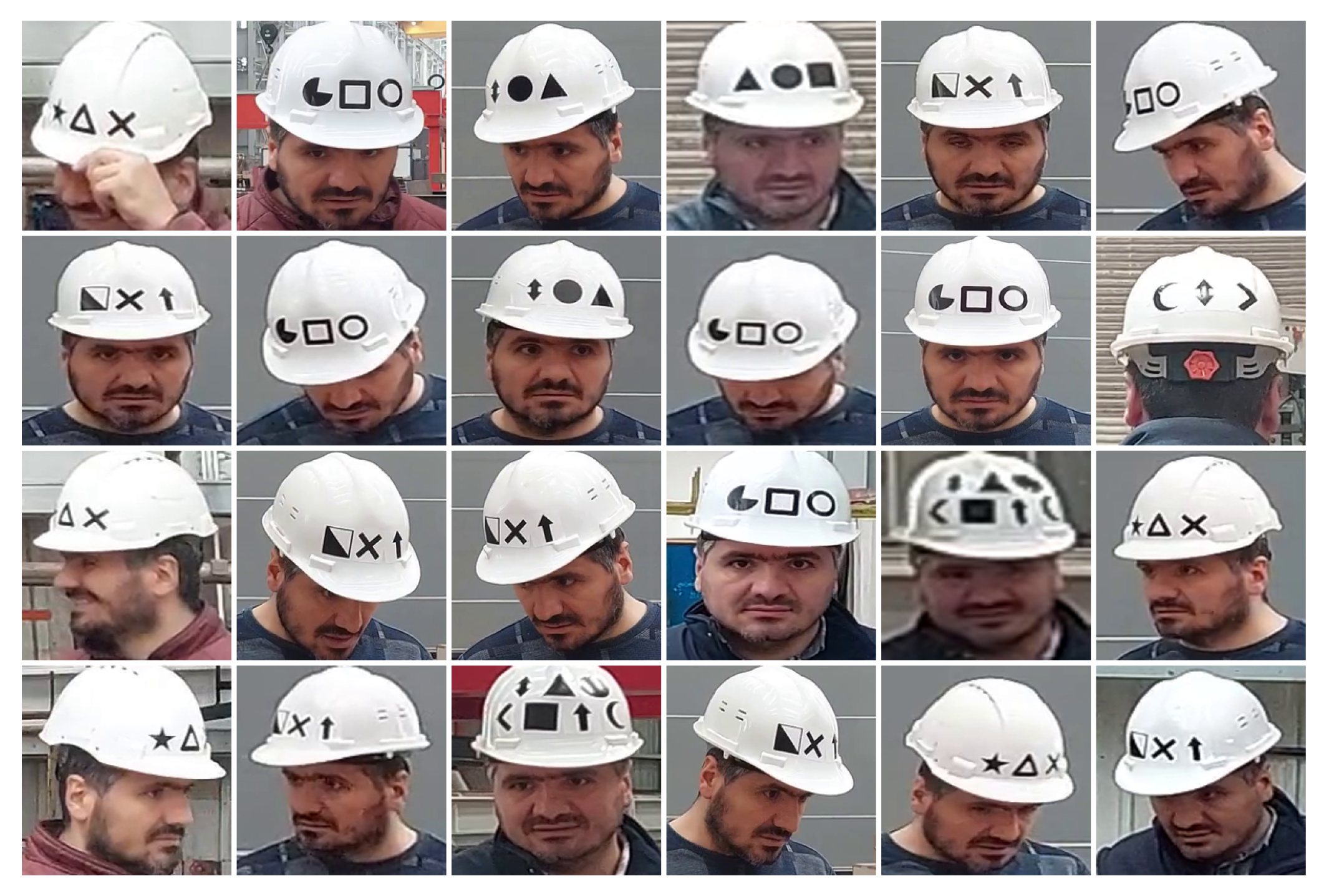

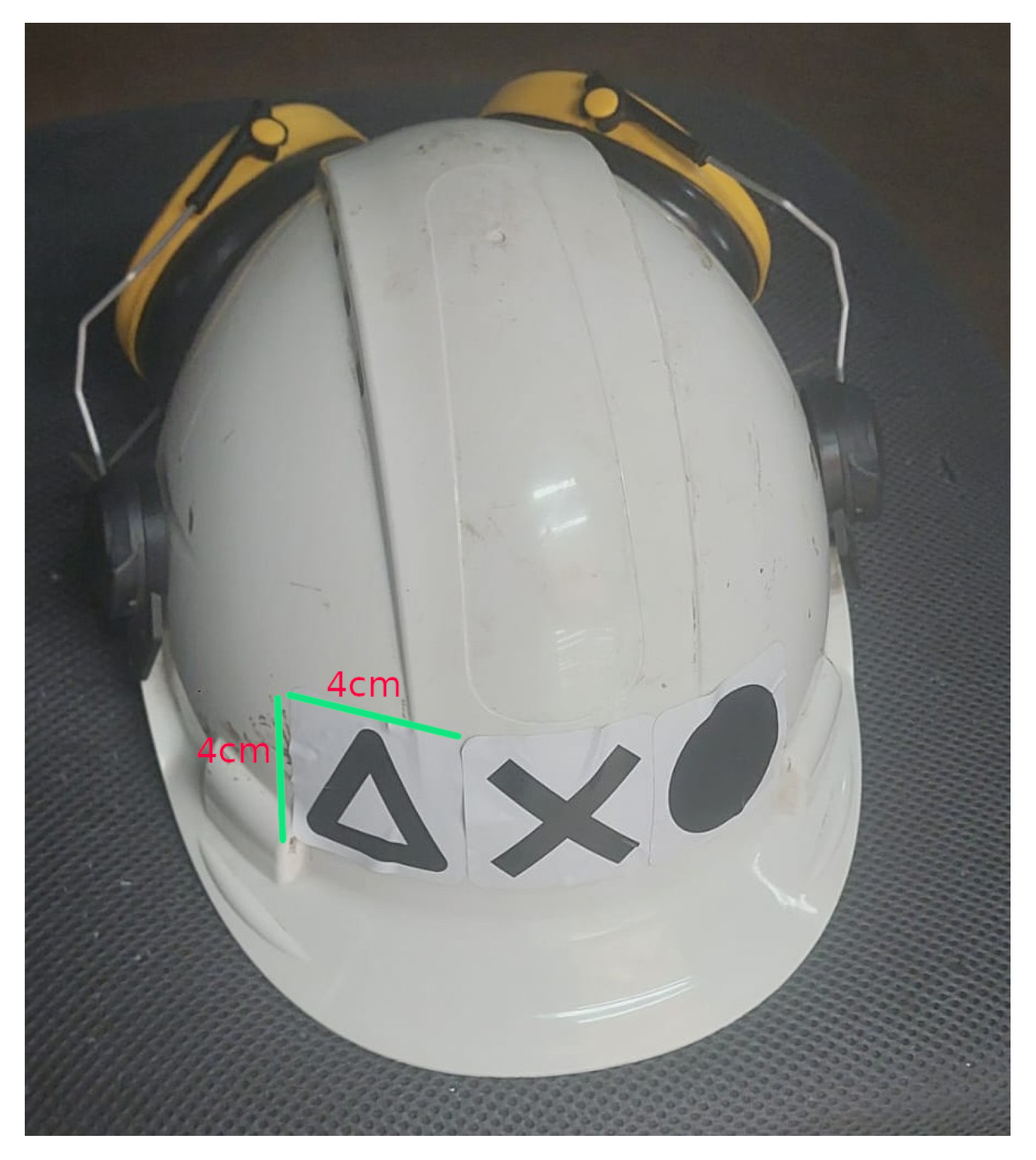

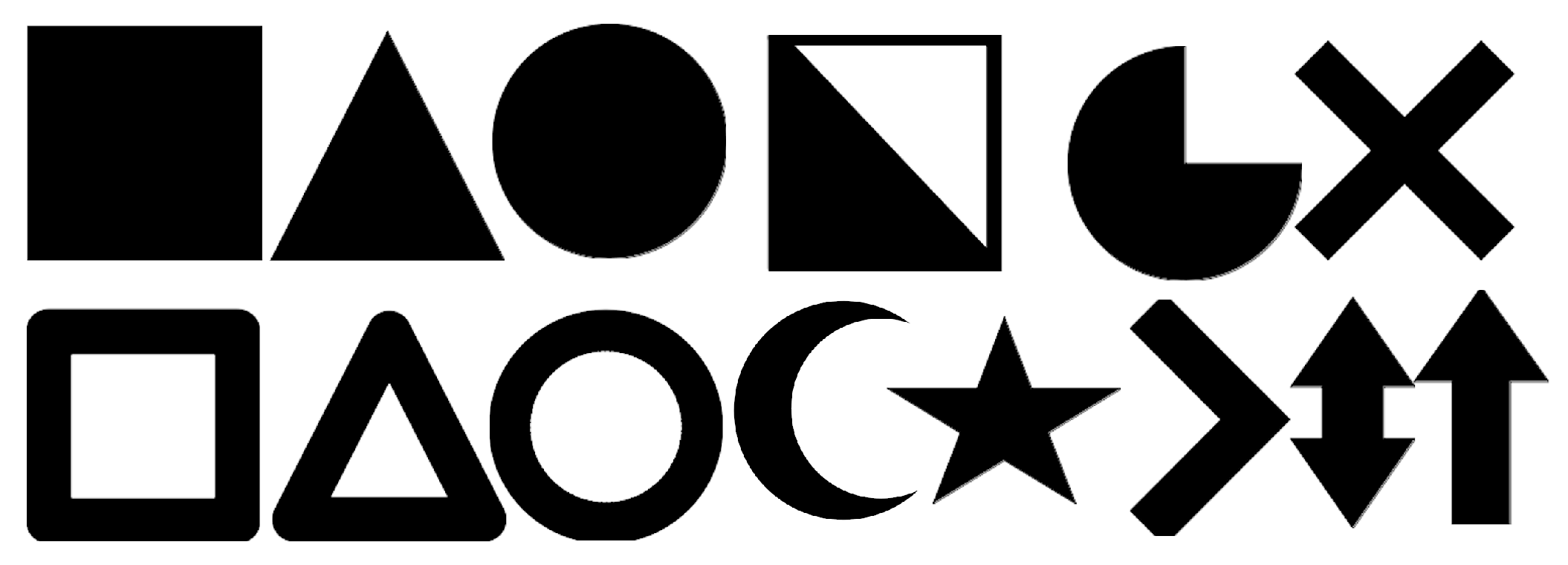

- For symbol recognition, 14 distinct symbols are designed and placed on helmets in different combinations. For this purpose, 11,243 images are captured at a construction site and annotated.

- Then, detection models for the personnel, helmet, and symbol datasets are trained with two methods, YOLOv5 and YOLOv8, using different hyperparameters in order to compare the results.

- A location-based symbol ordering algorithm is proposed. Since the detector returns symbols in no spatial order (at inference time, detections are ranked by confidence), the symbols are read from left to right.

- A test dataset recorded at different distances is generated in order to measure accuracy as a function of distance. According to the results, accurate recognition of three symbols per helmet is obtained at distances of up to 10 m.

- Apart from hardware-based means, which involve battery and management issues, there is no feasible solution for worker safety and tracking at construction sites. This study primarily proposes passive symbol detection that requires no installation on workers and uses cameras already in place.
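The first step above is a detection cascade: the person and helmet detectors run on the full frame, each helmet box is cut out as a small sub-image, and the symbol detector then runs on that crop. A minimal sketch of the box bookkeeping for such a cascade follows, assuming pixel boxes in `(x1, y1, x2, y2)` form; the function names and the 10% crop margin are hypothetical, and the detectors themselves are left out.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels
IntBox = Tuple[int, int, int, int]


def helmet_crop(helmet: Box, img_w: int, img_h: int, margin: float = 0.1) -> IntBox:
    """Expand a helmet box by a relative margin on each side and clip it to the image.

    The 10% margin is a hypothetical choice that keeps symbols near the helmet
    edge inside the crop; it is not a value reported in the paper.
    """
    x1, y1, x2, y2 = helmet
    dx, dy = (x2 - x1) * margin, (y2 - y1) * margin
    return (
        max(0, math.floor(x1 - dx)),
        max(0, math.floor(y1 - dy)),
        min(img_w, math.ceil(x2 + dx)),
        min(img_h, math.ceil(y2 + dy)),
    )


def to_frame_coords(symbol: Box, crop: IntBox) -> Box:
    """Shift a symbol box detected inside a crop back to full-frame pixel coordinates."""
    ox, oy = crop[0], crop[1]
    x1, y1, x2, y2 = symbol
    return (x1 + ox, y1 + oy, x2 + ox, y2 + oy)


if __name__ == "__main__":
    crop = helmet_crop((100, 50, 200, 130), img_w=1920, img_h=1080)
    print(crop)                                    # (90, 42, 210, 138)
    print(to_frame_coords((10, 5, 30, 25), crop))  # (100, 47, 120, 67)
```

Mapping symbol boxes back to frame coordinates lets the subsequent left-to-right ordering work on either the crop or the full frame.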

2. Material and Methods

2.1. Datasets

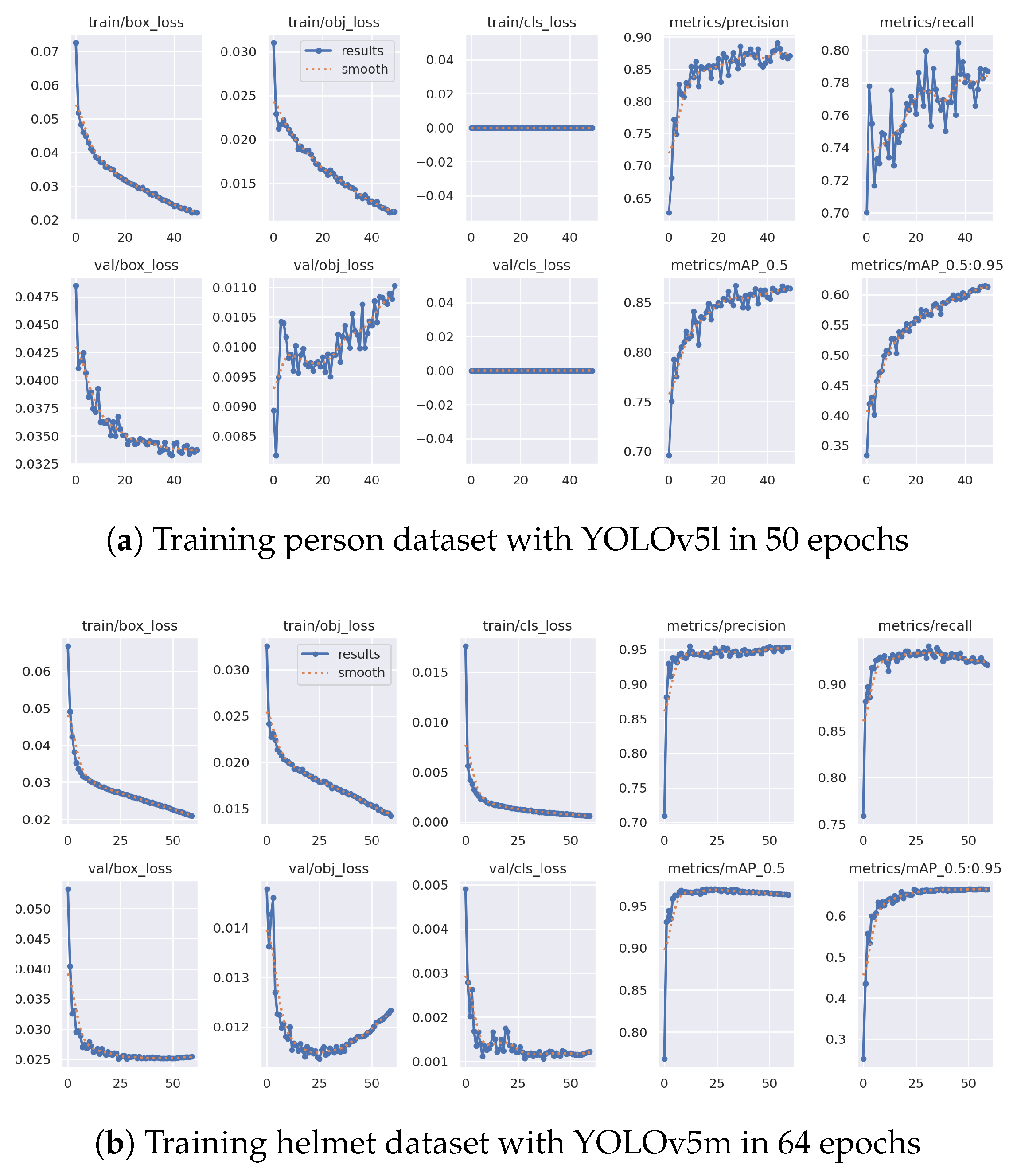

2.1.1. Personnel Dataset

2.1.2. Helmet Dataset

2.1.3. Symbol Dataset

2.2. Deep Learning

2.2.1. Convolutional Neural Networks

2.2.2. YOLO: You Only Look Once

2.3. Proposed Method

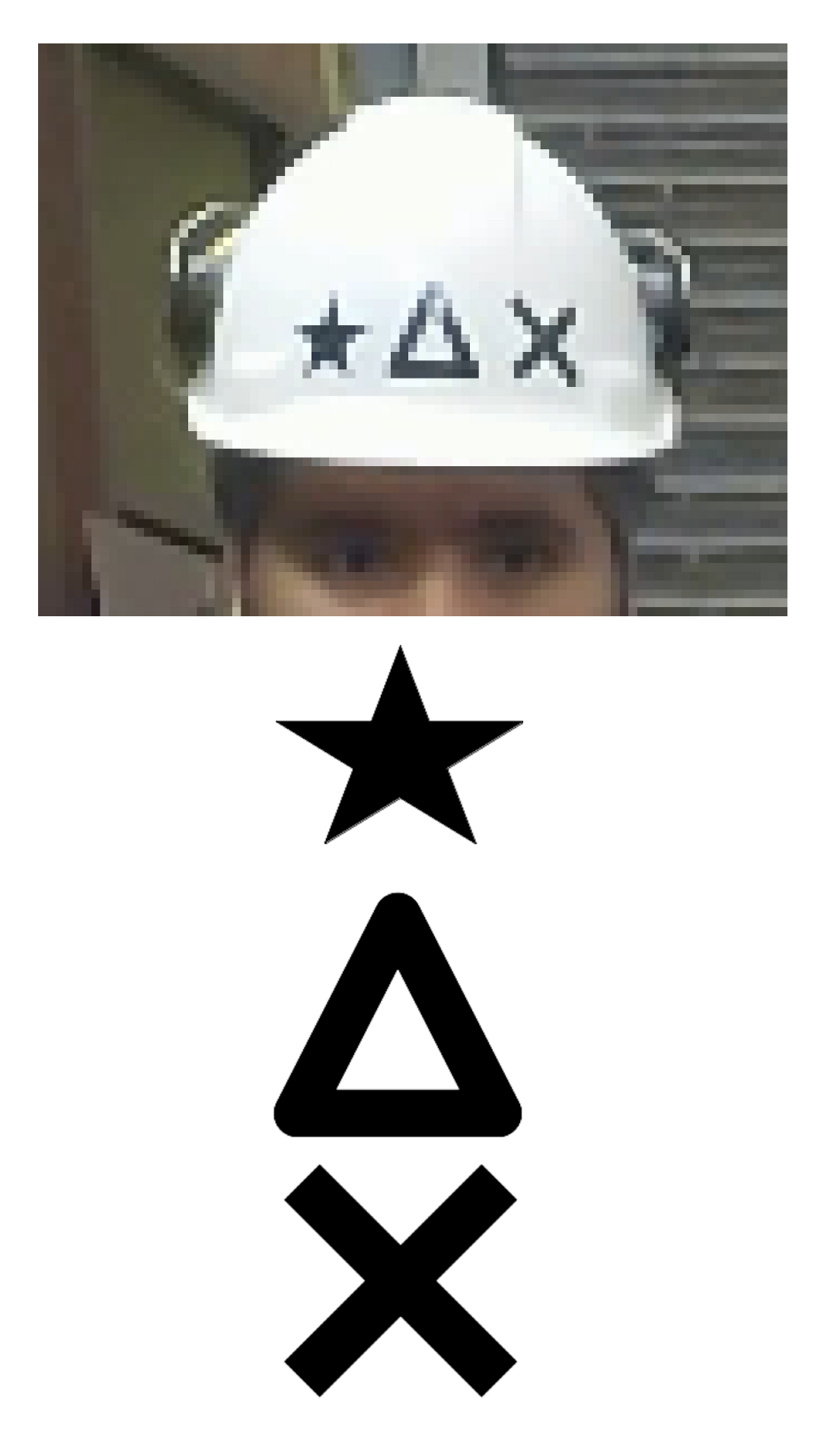

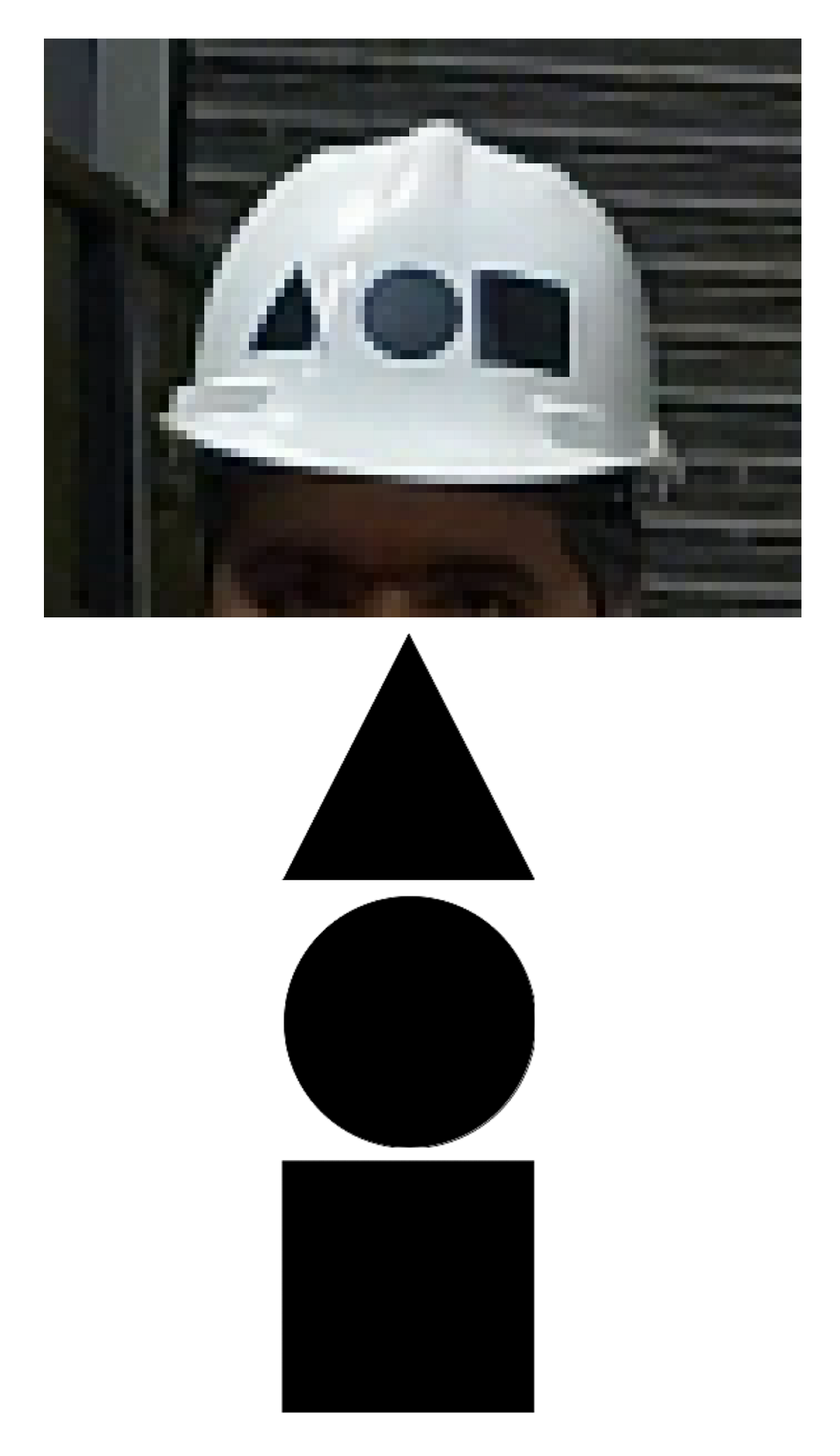

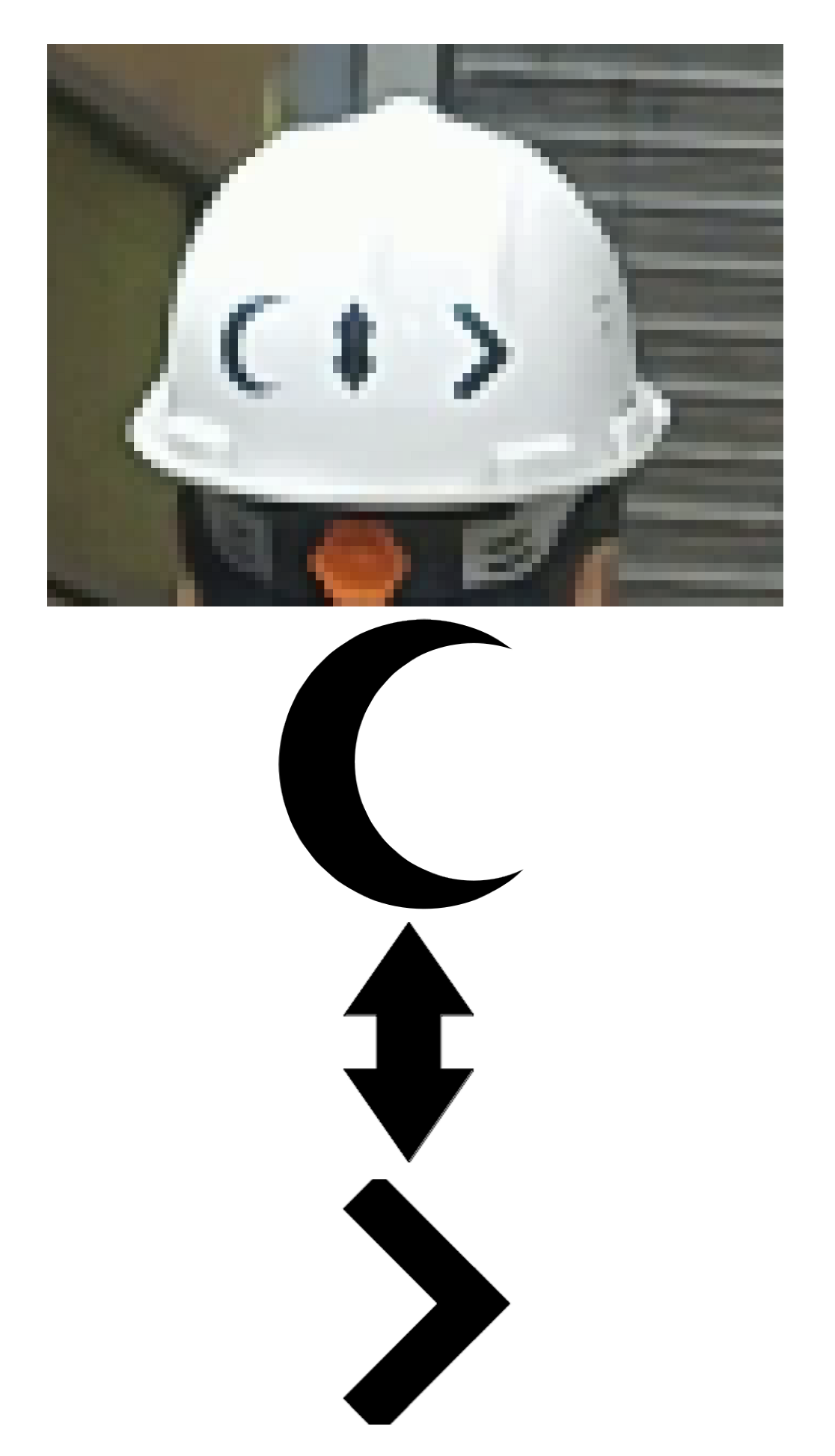

2.3.1. Symbols

- Having a generic shape, such as a triangle, square, star, or circle.

- Being distinguishable by the human eye from an acceptable distance, and therefore by a computer.

- Not being easily confused with one another.
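Because several of the 14 symbols are combined on each helmet and read from left to right, the size of the symbol set bounds how many workers can be told apart. A minimal sketch of that count follows, assuming ordered codes of three symbols per helmet (as in the distance tests). The text does not state whether a symbol may appear twice on the same helmet, so both cases are shown; the function names are hypothetical.

```python
from math import perm


def code_capacity(n_symbols: int, per_helmet: int = 3, repeats: bool = True) -> int:
    """Number of distinct left-to-right symbol codes for one helmet."""
    if repeats:
        return n_symbols ** per_helmet       # any symbol in any position
    return perm(n_symbols, per_helmet)       # n * (n - 1) * ..., no symbol reused


def min_symbols(workers: int, per_helmet: int = 3, repeats: bool = True) -> int:
    """Smallest symbol set that still gives every worker a unique code."""
    n = 1
    while code_capacity(n, per_helmet, repeats) < workers:
        n += 1
    return n


if __name__ == "__main__":
    print(code_capacity(14), code_capacity(14, repeats=False))  # 2744 2184
    print(min_symbols(500), min_symbols(500, repeats=False))    # 8 9
```

With 14 symbols, this gives 14^3 = 2744 codes if repeats are allowed and 14 × 13 × 12 = 2184 otherwise; conversely, a hypothetical site with 500 workers would need at least 8 or 9 symbols, respectively.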

2.3.2. Deep Learning-Based Symbol Detection Method

2.3.3. Symbol Ordering and Matching to Database

Algorithm 1. Ordering Points by Most-Left Rule Recursively.

- Get the list of points P, each representing the top-left corner of a bounding box.

- Take the first point in the list as the pivot.

- Split the remaining points into Left and Right lists by x-coordinate: points with a smaller x than the pivot go to Left, all others go to Right.

- Recursively order the Left and Right lists.

- Combine the results in the following order: Left list, pivot point, Right list.

- Return the ordered list.
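Algorithm 1 is, in effect, a quicksort on the x-coordinate of each box's top-left corner. A minimal Python sketch of it, followed by a lookup of the ordered symbol names in a database, is shown below. The detection tuple layout, the `WORKERS` dictionary and its worker IDs, and the rule that a helmet is identified only when exactly three symbols are read are illustrative assumptions rather than the authors' implementation; the class names are those of the symbol dataset.

```python
from typing import Dict, List, Optional, Sequence, Tuple

# A detection reduced to the top-left corner of its box plus its class name.
Detection = Tuple[float, float, str]  # (x, y, class_name)


def order_most_left(dets: Sequence[Detection]) -> List[Detection]:
    """Algorithm 1: order detections left to right by recursive pivoting on x."""
    if len(dets) <= 1:
        return list(dets)
    pivot, rest = dets[0], dets[1:]
    left = [d for d in rest if d[0] < pivot[0]]    # smaller x -> Left
    right = [d for d in rest if d[0] >= pivot[0]]  # all others -> Right
    return order_most_left(left) + [pivot] + order_most_left(right)


# Hypothetical worker database: ordered symbol code -> worker ID.
WORKERS: Dict[Tuple[str, ...], str] = {
    ("triangle", "x", "circle_empty"): "worker-017",
    ("star", "arrow", "square_half"): "worker-042",
}


def identify(dets: Sequence[Detection], database: Dict[Tuple[str, ...], str],
             expected: int = 3) -> Optional[str]:
    """Return the worker ID for one helmet, or None if the code is incomplete or unknown."""
    if len(dets) != expected:  # assumption: a code is valid only when all symbols are read
        return None
    code = tuple(label for _, _, label in order_most_left(dets))
    return database.get(code)


if __name__ == "__main__":
    helmet = [(140, 30, "circle_empty"), (20, 32, "triangle"), (80, 28, "x")]
    print(identify(helmet, WORKERS))  # worker-017
```

The recursive form mirrors the steps listed above. In Python, the same left-to-right order (with ties kept in input order) could also be obtained with `sorted(dets, key=lambda d: d[0])`.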

3. Results

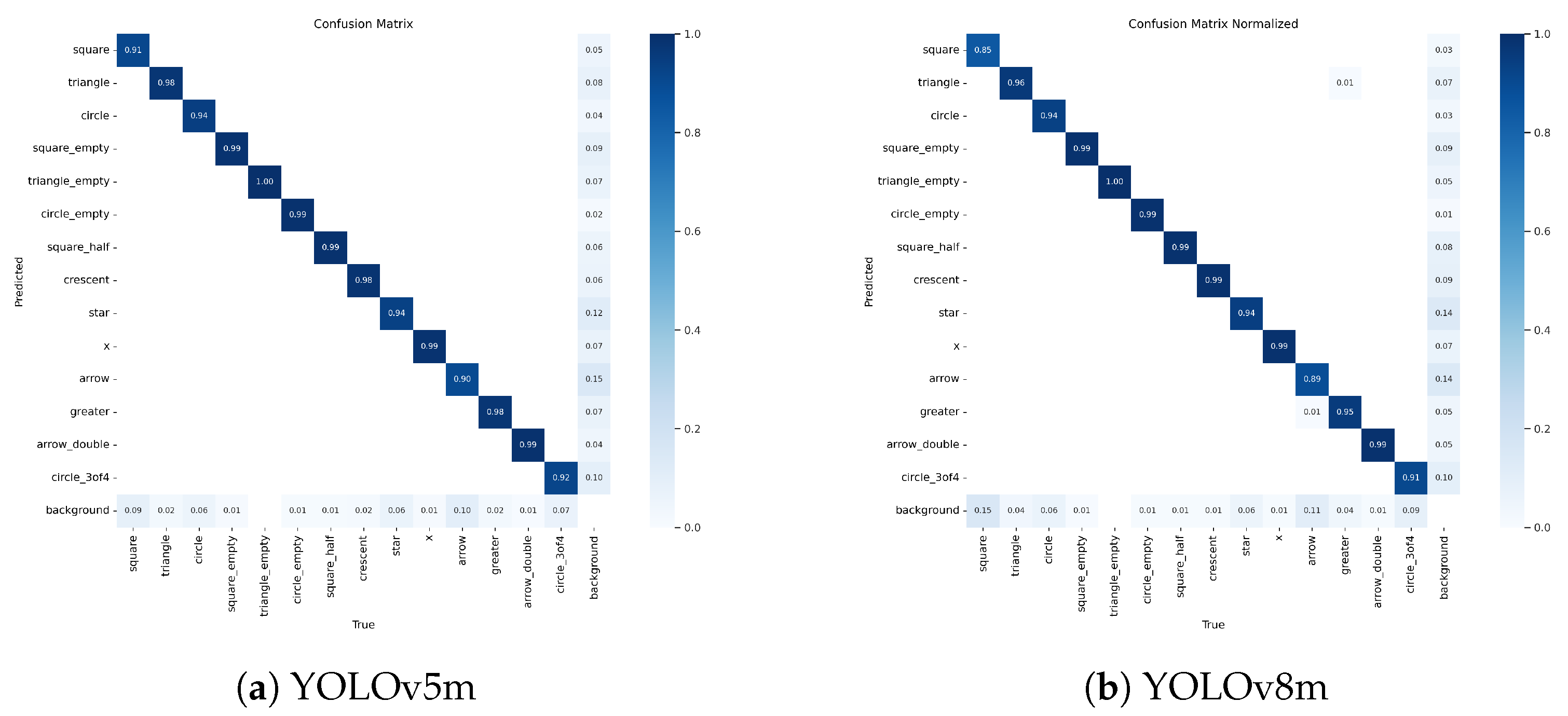

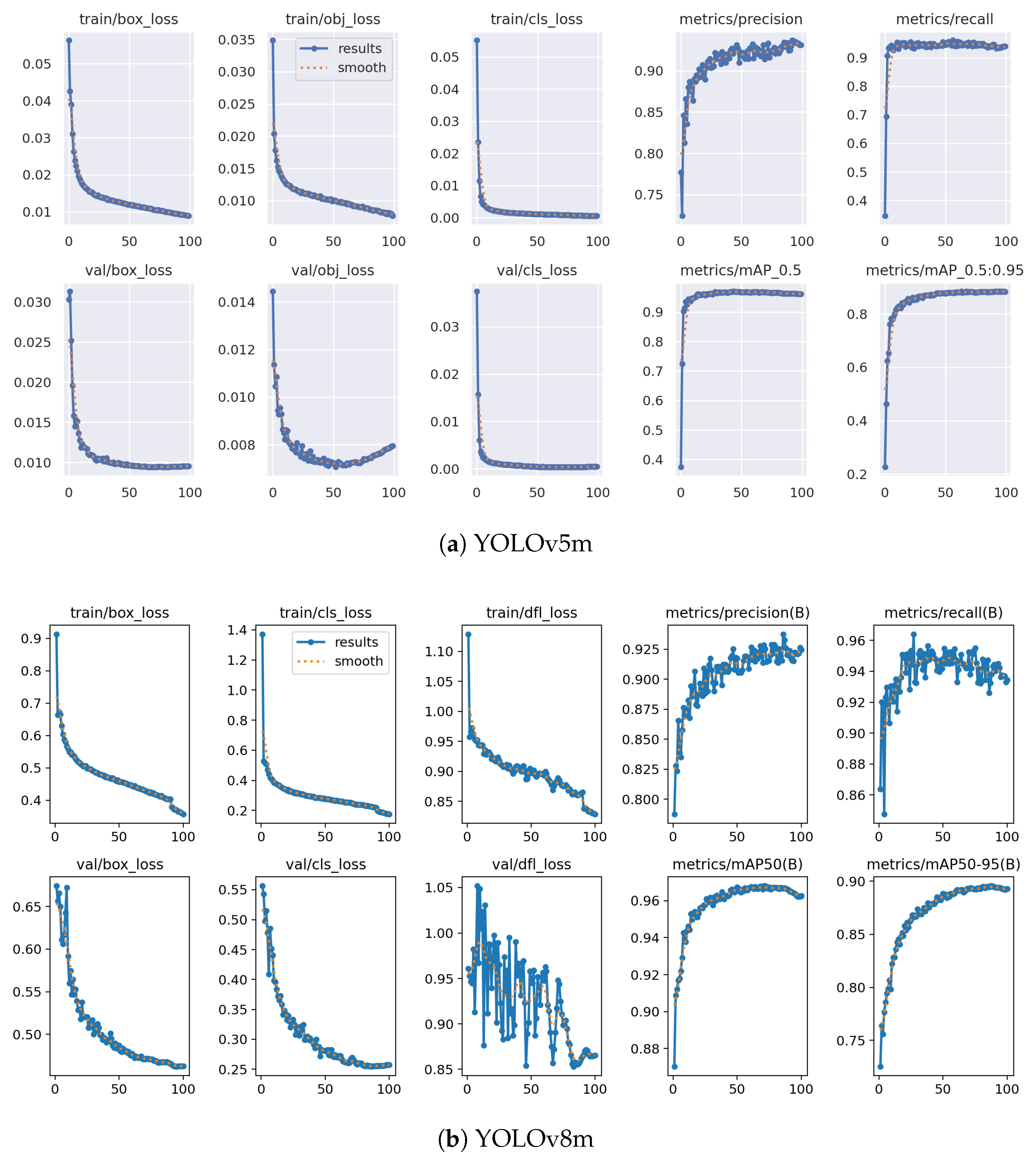

3.1. Symbol Training Results

3.1.1. YOLOv8m Training Results

3.1.2. YOLOv5m Training Results

- YOLOv5m achieves slightly higher precision and recall than YOLOv8m on average (0.924 vs. 0.923 and 0.951 vs. 0.943), whereas YOLOv8m achieves a higher mAP@50-95 (0.898 vs. 0.884).

- Since precision measures the correctness of positive predictions, the highest precision for both methods is achieved by x, circle_empty, triangle_empty, and square_half, in that order, followed by crescent for YOLOv5m and arrow_double for YOLOv8m. In contrast, the symbols circle_3of4, arrow, and square have the lowest precision values.

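- Since recall measures the ability of the model to avoid missing positive instances, triangle_empty was never missed (recall of 1.000 for both models), followed by circle_empty, x, square_empty, and square_half (for YOLOv8m, arrow_double also ranks above square_empty). Square and arrow are the only symbols with recall below 90% for both models (0.872/0.814 and 0.877/0.862), and circle also falls just below 90% for YOLOv8m (0.896).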
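For reference, the metrics in the symbol training table follow the standard object-detection definitions, where TP, FP, and FN count true-positive, false-positive, and false-negative boxes at a given IoU threshold, C = 14 is the number of symbol classes, and AP_c(t) is the area under the precision-recall curve of class c at IoU threshold t. That the table was produced with the default YOLOv5/YOLOv8 validation, which averages mAP@50-95 over ten IoU thresholds, is an assumption.

```latex
P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}

\mathrm{mAP@50} = \frac{1}{C}\sum_{c=1}^{C} AP_c(0.50), \qquad
\mathrm{mAP@50\text{-}95} = \frac{1}{10}\sum_{t \in \{0.50,\,0.55,\,\ldots,\,0.95\}} \frac{1}{C}\sum_{c=1}^{C} AP_c(t)
```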

3.2. Distance Test Results for Symbol Detection

4. Conclusions and Future Work

- A large symbol training dataset of 11,243 annotated construction-site images is generated.

- A test dataset recorded at different distances is generated in order to measure accuracy as a function of distance. According to the results, accurate recognition is obtained at distances of up to 10 m.

- A location-based symbol ordering algorithm is proposed. Since the detector returns symbols in no spatial order (at inference time, detections are ranked by confidence), the symbols are read from left to right.

- Apart from hardware-based means, which involve battery and management issues, there is no feasible solution for worker safety and tracking at construction sites. This study proposes a passive symbol detection method that requires no installation on workers and uses cameras already in place.

- Other deep learning approaches could also be used for training and testing, although YOLO is known for its real-time inference speed.

- Only white helmets are studied. Although helmets of other colors are detected in the detection experiments, they are not tested for symbol recognition.

- The person and helmet datasets taken from online repositories could be extended, even though the models trained on them already achieve high accuracy in testing.

- The symbol dataset should also be extended with images taken under exceptional lighting and viewing-angle conditions in order to increase accuracy at long distances. This is expected to raise the confidence values of the detections.

- Since not every frame allows all three symbols to be detected, a sequential people-tracking system can be run simultaneously with the proposed method. Once a person is identified, all helmet detections previously associated with that person's track can be retroactively assigned to them.

- Since the target number of workers to be identified determines the number of symbols required, the symbol selection and generation processes can be studied independently.

- Finally, the identification process must be tested in a crowded environment where more than two people wear helmets.
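The suggestion above to run a person tracker alongside symbol detection, and to assign an identity retroactively to earlier helmet detections, could be sketched as a per-track buffer. This is a hypothetical illustration: the external multi-object tracker that supplies `track_id` values, the majority vote over frames in which the full code was read, and the `min_votes` threshold are all assumptions, not part of the proposed method.

```python
from collections import Counter, defaultdict
from typing import DefaultDict, Dict, List, Optional


class TrackIdentifier:
    """Hypothetical helper that labels a tracked person retroactively."""

    def __init__(self, min_votes: int = 3) -> None:
        self.min_votes = min_votes  # assumed number of agreeing reads to confirm an ID
        self.frames: DefaultDict[int, List[int]] = defaultdict(list)
        self.votes: DefaultDict[int, Counter] = defaultdict(Counter)
        self.identity: Dict[int, str] = {}

    def update(self, frame: int, track_id: int, worker: Optional[str]) -> None:
        """Store one helmet detection; `worker` is None if the symbol code was not read."""
        self.frames[track_id].append(frame)
        if worker is None or track_id in self.identity:
            return
        self.votes[track_id][worker] += 1
        best, count = self.votes[track_id].most_common(1)[0]
        if count >= self.min_votes:
            self.identity[track_id] = best

    def labelled_frames(self, track_id: int) -> Dict[int, Optional[str]]:
        """All frames stored for a track, each labelled with the track's identity (or None)."""
        who = self.identity.get(track_id)
        return {f: who for f in self.frames.get(track_id, [])}


if __name__ == "__main__":
    tracker = TrackIdentifier(min_votes=2)
    for frame, worker in [(0, None), (1, "worker-017"), (2, None), (3, "worker-017")]:
        tracker.update(frame, track_id=7, worker=worker)
    print(tracker.labelled_frames(7))
```

Frames 0 and 2, in which the code could not be read, are labelled once the track is confirmed at frame 3.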

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Class | Instances | P (v5/v8) | R (v5/v8) | mAP@50 (v5/v8) | mAP@50-95 (v5/v8) |
|---|---|---|---|---|---|
| all | 7212 | 0.924/0.923 | 0.951/0.943 | 0.968/0.967 | 0.884/0.898 |
| square | 78 | 0.809/0.864 | 0.872/0.814 | 0.923/0.908 | 0.901/0.892 |
| triangle | 336 | 0.902/0.907 | 0.970/0.955 | 0.967/0.957 | 0.879/0.884 |
| circle | 154 | 0.917/0.917 | 0.931/0.896 | 0.970/0.971 | 0.912/0.924 |
| square_empty | 412 | 0.912/0.915 | 0.983/0.985 | 0.982/0.982 | 0.913/0.924 |
| triangle_empty | 1103 | 0.979/0.983 | 1.000/1.000 | 0.991/0.991 | 0.904/0.919 |
| circle_empty | 438 | 0.984/0.985 | 0.989/0.991 | 0.992/0.991 | 0.920/0.938 |
| square_half | 581 | 0.961/0.944 | 0.978/0.981 | 0.990/0.989 | 0.926/0.939 |
| crescent | 474 | 0.952/0.924 | 0.970/0.978 | 0.989/0.987 | 0.894/0.909 |
| star | 515 | 0.920/0.900 | 0.916/0.909 | 0.953/0.963 | 0.837/0.859 |
| x | 1783 | 0.985/0.985 | 0.985/0.987 | 0.993/0.994 | 0.906/0.921 |
| arrow | 398 | 0.876/0.855 | 0.877/0.862 | 0.929/0.926 | 0.797/0.814 |
| greater | 370 | 0.938/0.938 | 0.957/0.949 | 0.983/0.982 | 0.883/0.897 |
| arrow_double | 342 | 0.946/0.939 | 0.977/0.986 | 0.983/0.982 | 0.886/0.904 |
| circle_3of4 | 228 | 0.849/0.861 | 0.917/0.908 | 0.899/0.914 | 0.818/0.844 |
| Average | 515 | 0.924/0.923 | 0.952/0.943 | 0.967/0.967 | 0.884/0.898 |

| Symbols | Distance (m) | # Frames | # Frames with Person Detected Correctly | # Frames with Helmet Detected Correctly | # Frames Identified Correctly (YOLOv5) | # Frames Identified Correctly (YOLOv8) | Confidence Average (YOLOv5) | Confidence Average (YOLOv8) |
|---|---|---|---|---|---|---|---|---|
|  | 5 | 300 | 300 | 300 | 228 | 193 | 0.78 | 0.75 |
|  | 6 | 510 | 510 | 510 | 472 | 350 | 0.87 | 0.79 |
|  | 7 | 360 | 360 | 360 | 338 | 326 | 0.87 | 0.82 |
|  | 8 | 390 | 390 | 390 | 389 | 361 | 0.91 | 0.85 |
|  | 9 | 390 | 390 | 390 | 329 | 235 | 0.84 | 0.81 |
|  | 10 | 420 | 420 | 420 | 361 | 269 | 0.85 | 0.79 |
|  | 11 | 420 | 420 | 420 | 277 | 149 | 0.82 | 0.75 |
|  | 12 | 420 | 420 | 420 | 222 | 72 | 0.8 | 0.69 |
|  | 13 | 450 | 450 | 450 | 86 | 65 | 0.69 | 0.7 |
|  | 14 | 420 | 420 | 420 | 122 | 67 | 0.74 | 0.69 |
|  | 15 | 480 | 480 | 480 | 23 | 15 | 0.62 | 0.65 |
|  | 5 | 510 | 510 | 510 | 263 | 10 | 0.69 | 0.72 |
|  | 6 | 464 | 464 | 464 | 14 | 0 | 0.58 | 0 |
|  | 7 | 522 | 522 | 522 | 65 | 13 | 0.68 | 0.57 |
|  | 8 | 493 | 493 | 493 | 0 | 0 | 0 | 0 |
|  | 9 | 464 | 464 | 464 | 1 | 0 | 0.53 | 0 |
|  | 10 | 435 | 435 | 435 | 0 | 25 | 0 | 0.59 |
|  | 11 | 522 | 522 | 522 | 0 | 0 | 0 | 0 |
|  | 12 | 551 | 551 | 551 | 0 | 0 | 0 | 0 |
|  | 13 | 493 | 493 | 493 | 0 | 0 | 0 | 0 |
|  | 14 | 493 | 493 | 493 | 0 | 0 | 0 | 0 |
|  | 15 | 522 | 508 | 505 | 0 | 0 | 0 | 0 |
|  | 5 | 464 | 464 | 464 | 203 | 113 | 0.73 | 0.64 |
|  | 6 | 551 | 551 | 551 | 251 | 267 | 0.76 | 0.67 |
|  | 7 | 551 | 551 | 551 | 170 | 178 | 0.72 | 0.62 |
|  | 8 | 493 | 493 | 493 | 126 | 50 | 0.73 | 0.63 |
|  | 9 | 493 | 493 | 493 | 52 | 1 | 0.72 | 0.49 |
|  | 10 | 551 | 551 | 551 | 7 | 0 | 0.67 | 0 |
|  | 11 | 493 | 493 | 493 | 0 | 16 | 0 | 0.57 |
|  | 12 | 522 | 522 | 522 | 6 | 0 | 0.62 | 0 |
|  | 13 | 522 | 522 | 522 | 0 | 1 | 0 | 0.48 |
|  | 14 | 522 | 519 | 519 | 0 | 0 | 0 | 0 |
|  | 15 | 570 | 553 | 553 | 0 | 2 | 0 | 0.54 |
|  | 5 | 510 | 510 | 510 | 52 | 54 | 0.76 | 0.79 |
|  | 6 | 480 | 480 | 480 | 42 | 27 | 0.71 | 0.74 |
|  | 7 | 480 | 480 | 480 | 0 | 4 | 0 | 0.72 |
|  | 8 | 480 | 480 | 480 | 0 | 0 | 0 | 0 |
|  | 9 | 450 | 450 | 450 | 0 | 0 | 0 | 0 |
|  | 10 | 450 | 450 | 450 | 0 | 0 | 0 | 0 |
|  | 11 | 480 | 480 | 480 | 0 | 0 | 0 | 0 |
|  | 12 | 450 | 450 | 450 | 0 | 0 | 0 | 0 |
|  | 13 | 480 | 480 | 480 | 0 | 0 | 0 | 0 |
|  | 14 | 450 | 450 | 450 | 13 | 2 | 0.56 | 0.41 |
|  | 15 | 540 | 526 | 526 | 158 | 2 | 0.52 | 0.48 |
|  | 5 | 510 | 510 | 510 | 508 | 495 | 0.93 | 0.9 |
|  | 6 | 390 | 390 | 390 | 390 | 389 | 0.93 | 0.9 |
|  | 7 | 420 | 420 | 420 | 413 | 417 | 0.89 | 0.85 |
|  | 8 | 450 | 450 | 450 | 414 | 436 | 0.83 | 0.77 |
|  | 9 | 480 | 480 | 480 | 446 | 413 | 0.81 | 0.73 |
|  | 10 | 480 | 480 | 480 | 451 | 417 | 0.81 | 0.69 |
|  | 11 | 510 | 510 | 510 | 403 | 342 | 0.77 | 0.67 |
|  | 12 | 450 | 450 | 450 | 295 | 68 | 0.69 | 0.54 |
|  | 13 | 510 | 510 | 510 | 278 | 182 | 0.69 | 0.56 |
|  | 14 | 450 | 450 | 450 | 7 | 2 | 0.5 | 0.5 |
|  | 15 | 450 | 450 | 450 | 4 | 0 | 0.53 | 0 |
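The identification counts are easier to compare across distances as rates, since the number of frames differs per distance. The sketch below converts the first symbol combination in the distance-test table (its first eleven rows, values copied from the table) into the share of frames identified correctly by each model; the variable and function names are illustrative.

```python
from typing import Dict, List, Tuple

# (distance_m, frames, yolov5_correct, yolov8_correct) for the first symbol
# combination in the distance-test table
FIRST_COMBINATION: List[Tuple[int, int, int, int]] = [
    (5, 300, 228, 193), (6, 510, 472, 350), (7, 360, 338, 326),
    (8, 390, 389, 361), (9, 390, 329, 235), (10, 420, 361, 269),
    (11, 420, 277, 149), (12, 420, 222, 72), (13, 450, 86, 65),
    (14, 420, 122, 67), (15, 480, 23, 15),
]


def identification_rates(rows: List[Tuple[int, int, int, int]]) -> Dict[int, Tuple[float, float]]:
    """Map each distance to the share of frames identified correctly by YOLOv5 and YOLOv8."""
    return {d: (v5 / n, v8 / n) for d, n, v5, v8 in rows}


if __name__ == "__main__":
    for d, (r5, r8) in identification_rates(FIRST_COMBINATION).items():
        print(f"{d:>2} m   YOLOv5 {r5:6.1%}   YOLOv8 {r8:6.1%}")
```

For this combination, the YOLOv5 rate stays above 84% from 6 to 10 m and falls to about 66% at 11 m.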

| Model | Average Time (ms) |
|---|---|
| YOLOv5m | 11.11 |
| YOLOv8m | 13.88 |
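Assuming the reported values are average per-frame inference times (the table does not state whether they include the person and helmet stages), they translate into throughput as follows:

```latex
\mathrm{FPS} = \frac{1000}{t_{\mathrm{ms}}}, \qquad
\mathrm{FPS}_{\mathrm{YOLOv5m}} = \frac{1000}{11.11} \approx 90.0, \qquad
\mathrm{FPS}_{\mathrm{YOLOv8m}} = \frac{1000}{13.88} \approx 72.0
```

At these rates, either model would keep pace with a 30 fps video stream.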

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Aslan, F.; Becerikli, Y. Real-Time Deep-Learning-Based Recognition of Helmet-Wearing Personnel on Construction Sites from a Distance. Appl. Sci. 2025, 15, 11188. https://doi.org/10.3390/app152011188
