Loop-MapNet: A Multi-Modal HDMap Perception Framework with SDMap Dynamic Evolution and Priors

Abstract

1. Introduction

2. Related Work

2.1. Online HDMap Construction

2.2. SDMap Construction

2.3. SDMap-Prior-Aided Mapping

2.4. Dynamic Map Update

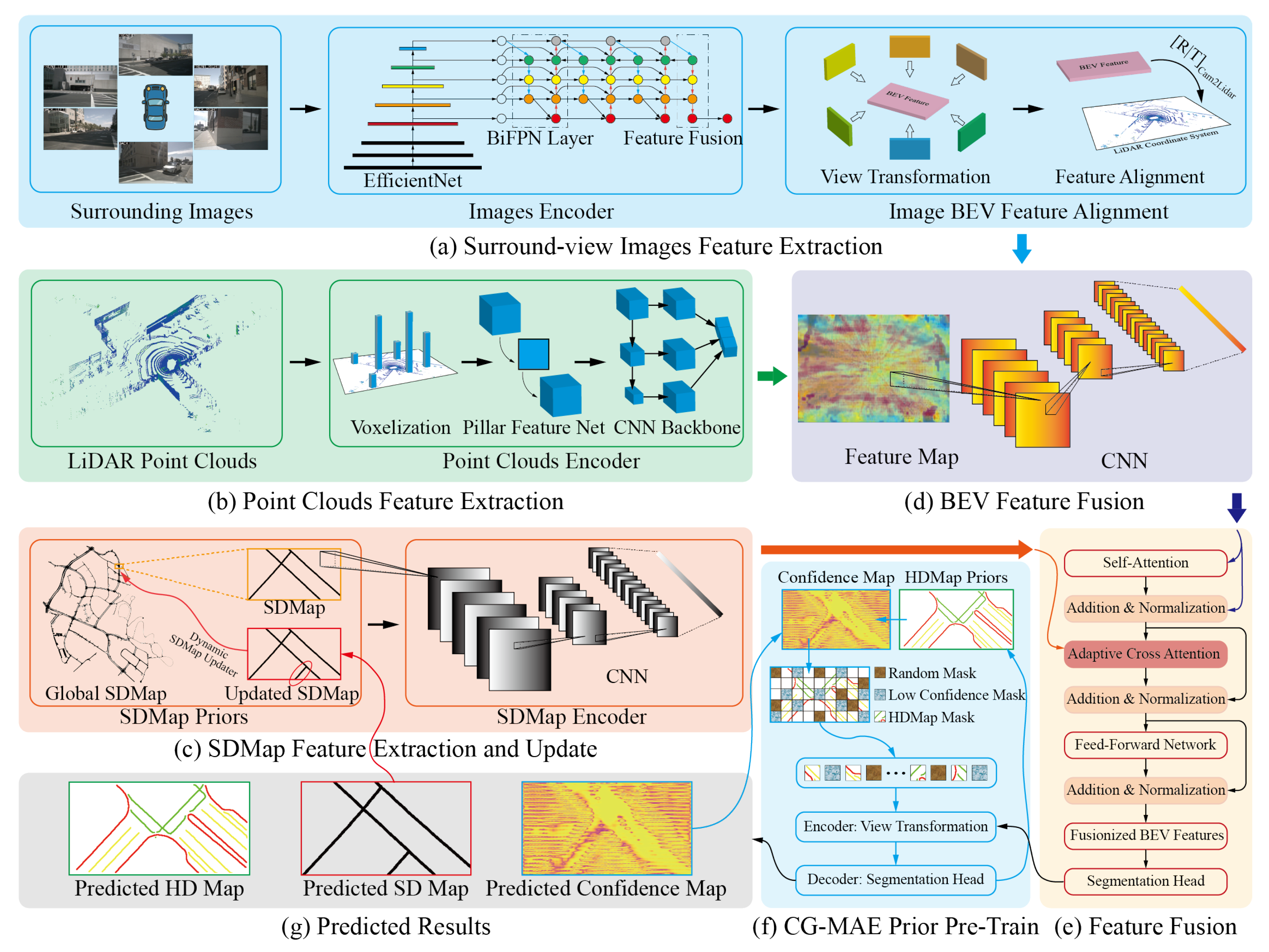

3. Method

3.1. Feature Extraction

3.1.1. Surround-View Image Feature Extraction

3.1.2. Point Cloud Feature Extraction

3.1.3. SDMap Feature Extraction

3.2. Cross-Modal Feature Fusion

3.2.1. Sensor Feature Fusion

3.2.2. Bidirectional Adaptive Cross-Attention Mechanism

3.3. Multi-Task Inference Framework

3.3.1. Multi-Task Feature Fusion and Encoding

3.3.2. Multi-Task Optimization

3.4. Confidence-Guided Masked Autoencoder (CG-MAE)

3.4.1. Feature Embedding and Confidence-Guided Masking Generation

3.4.2. Transformer Encoding and Feature Reconstruction

3.4.3. Decoding, Optimization and Training Strategy

3.5. Dynamic SDMap Update Strategy

4. Experiments and Discussion

4.1. Implementation Details

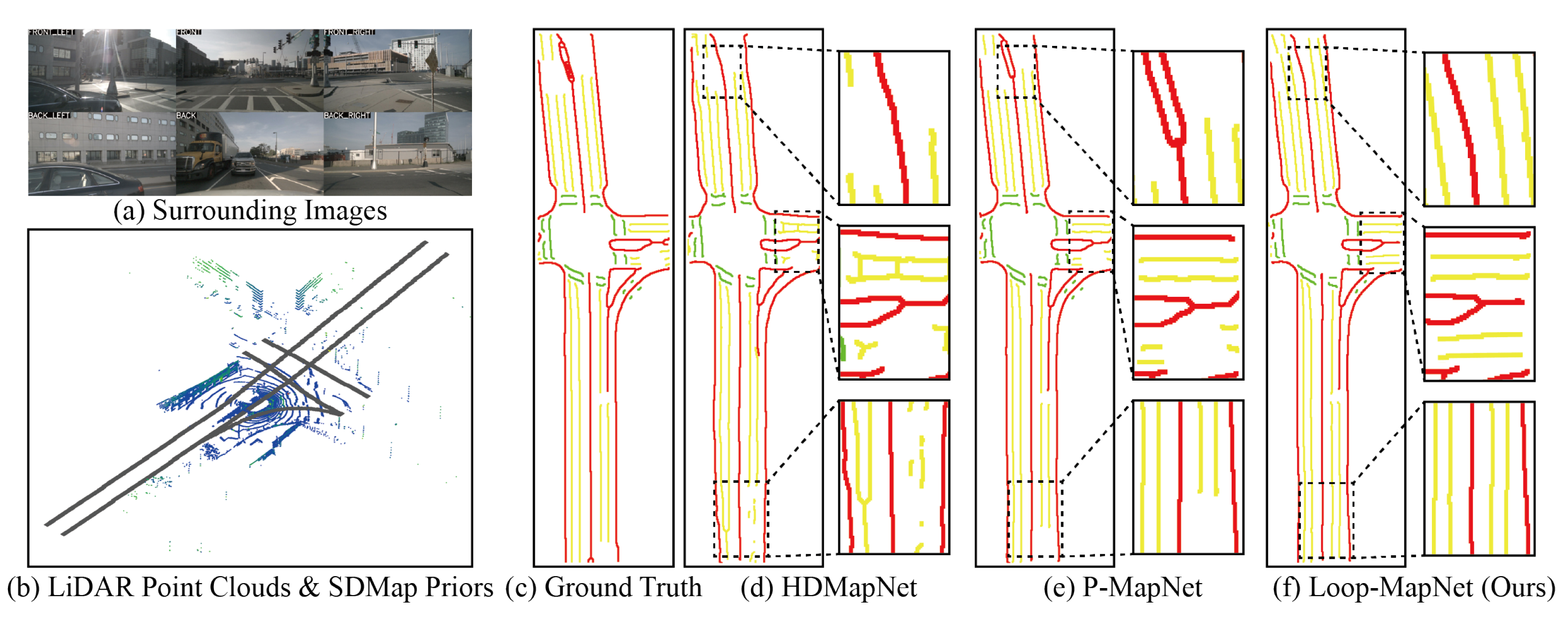

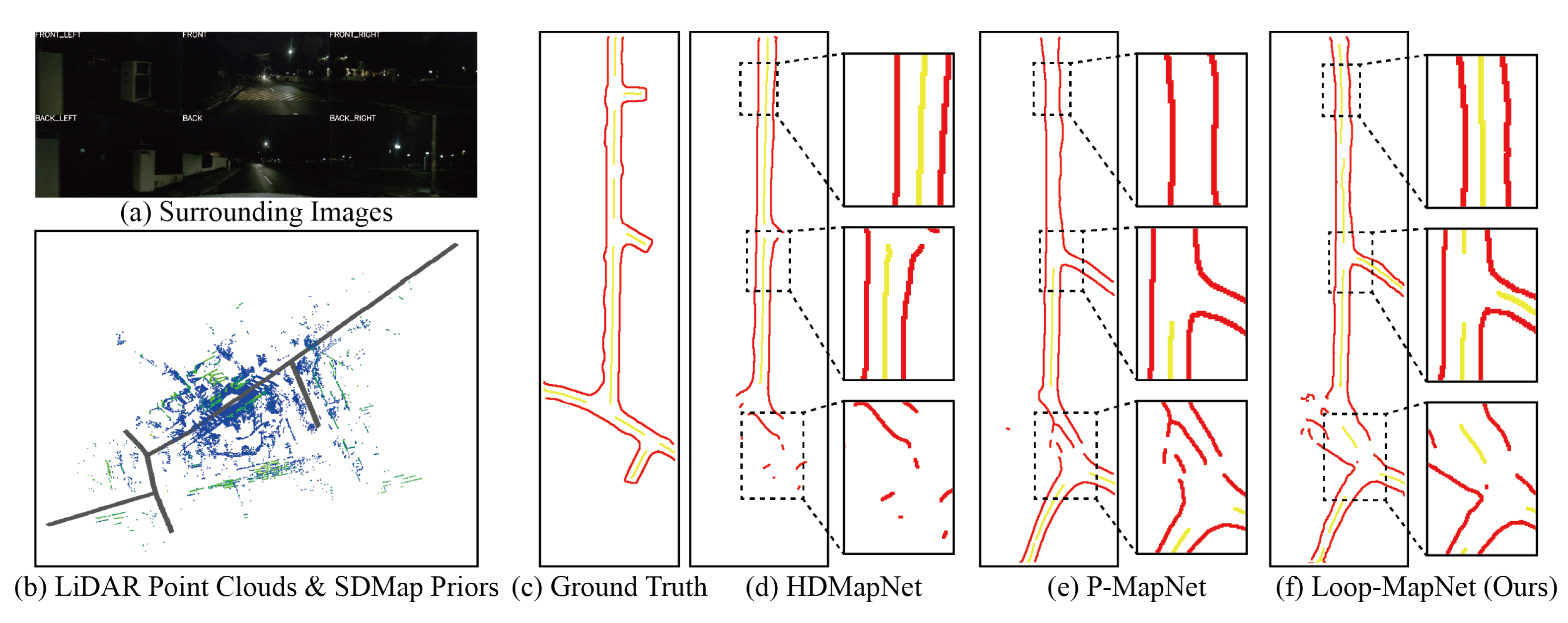

4.2. Far-Range Perception Experiments

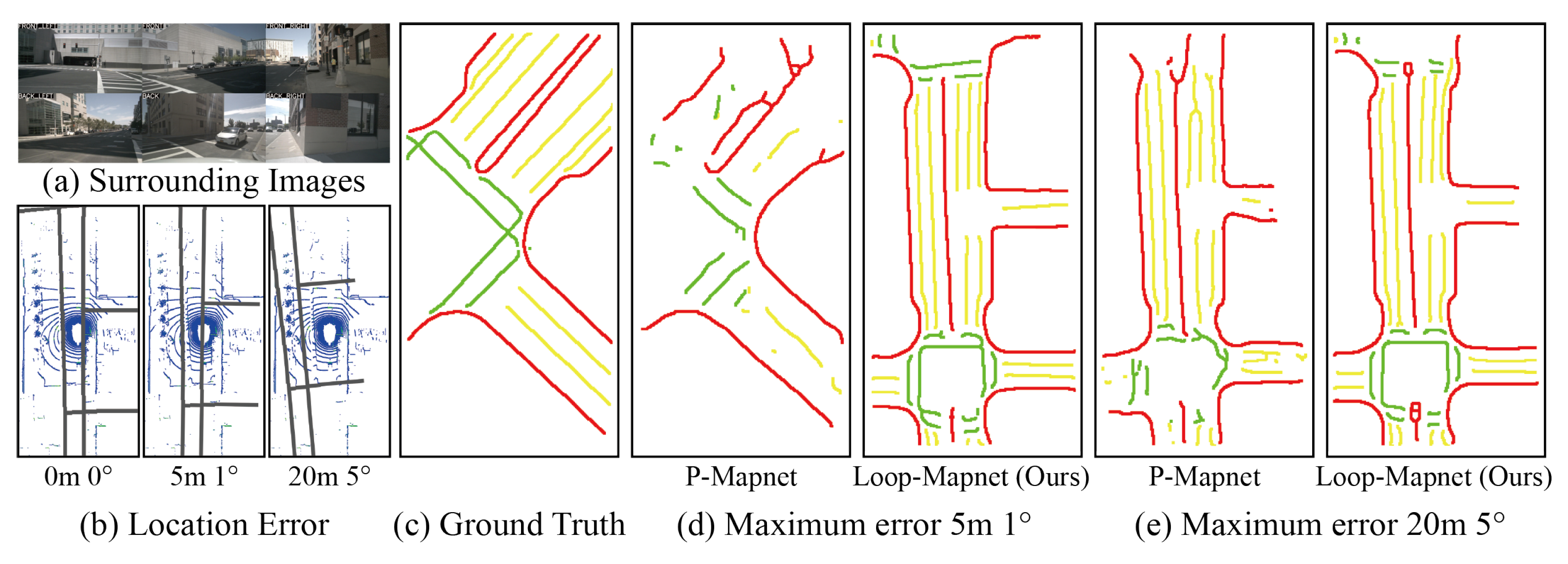

4.3. Robustness to SDMap Prior Alignment Errors

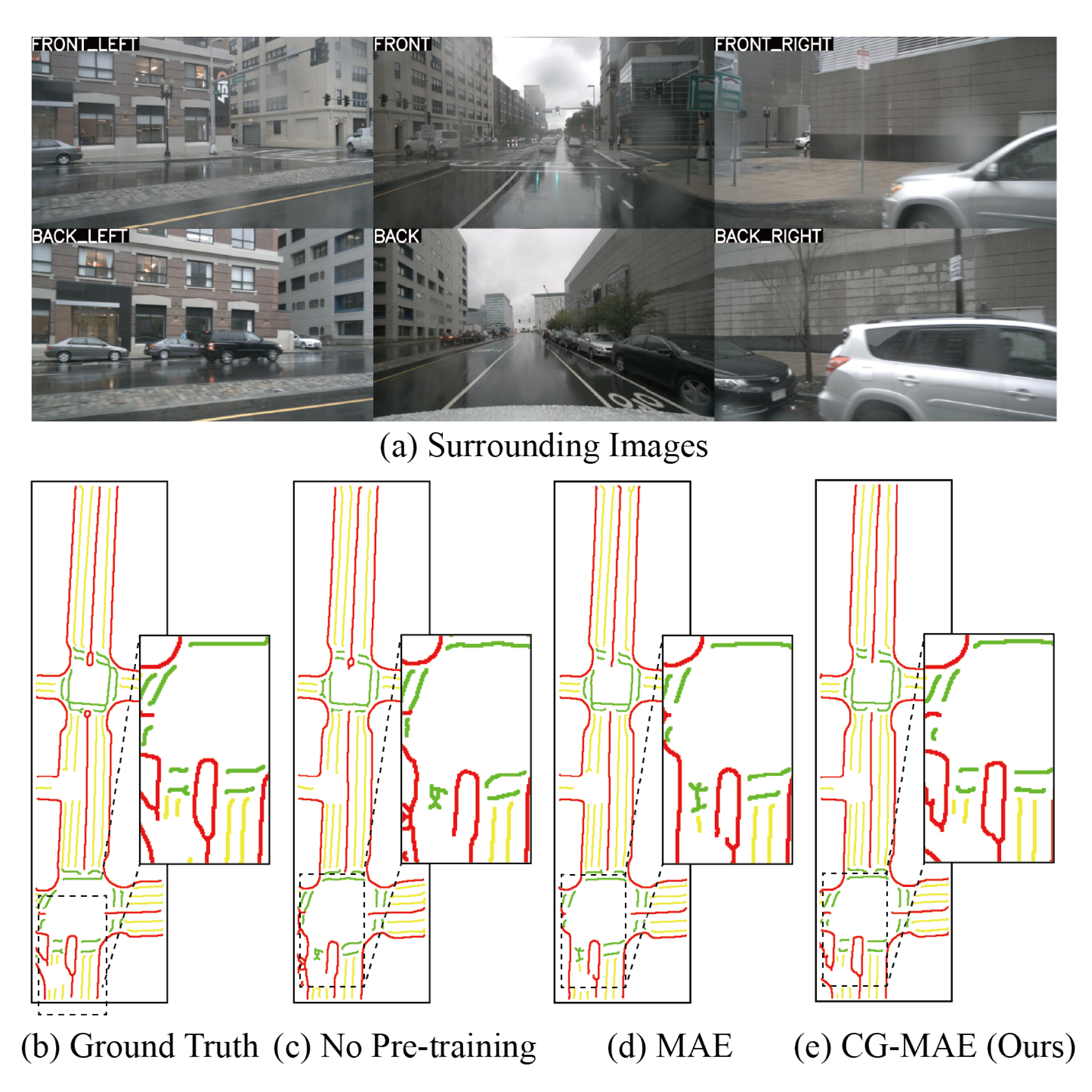

4.4. Ablation Study: CG-MAE Pre-Training Models

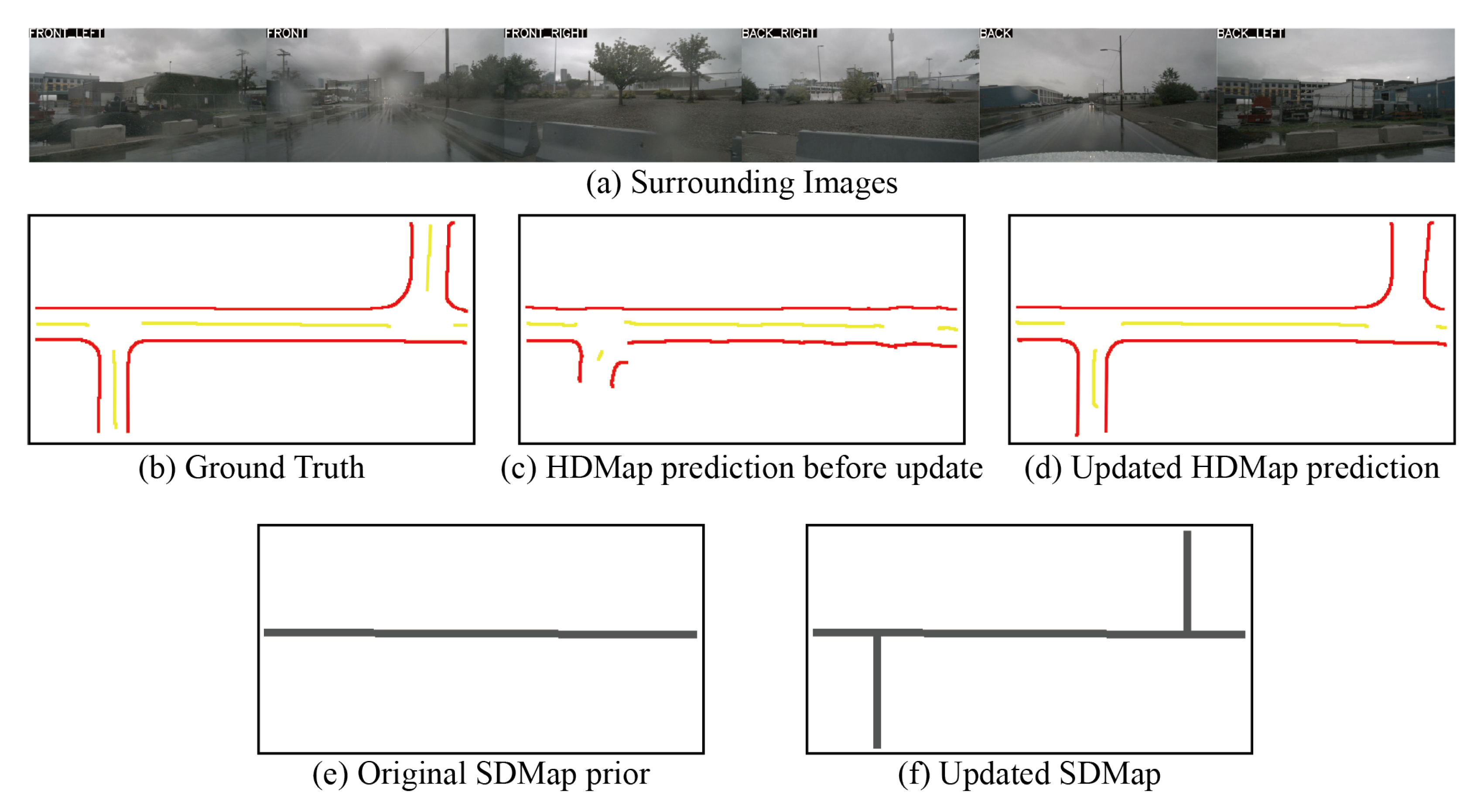

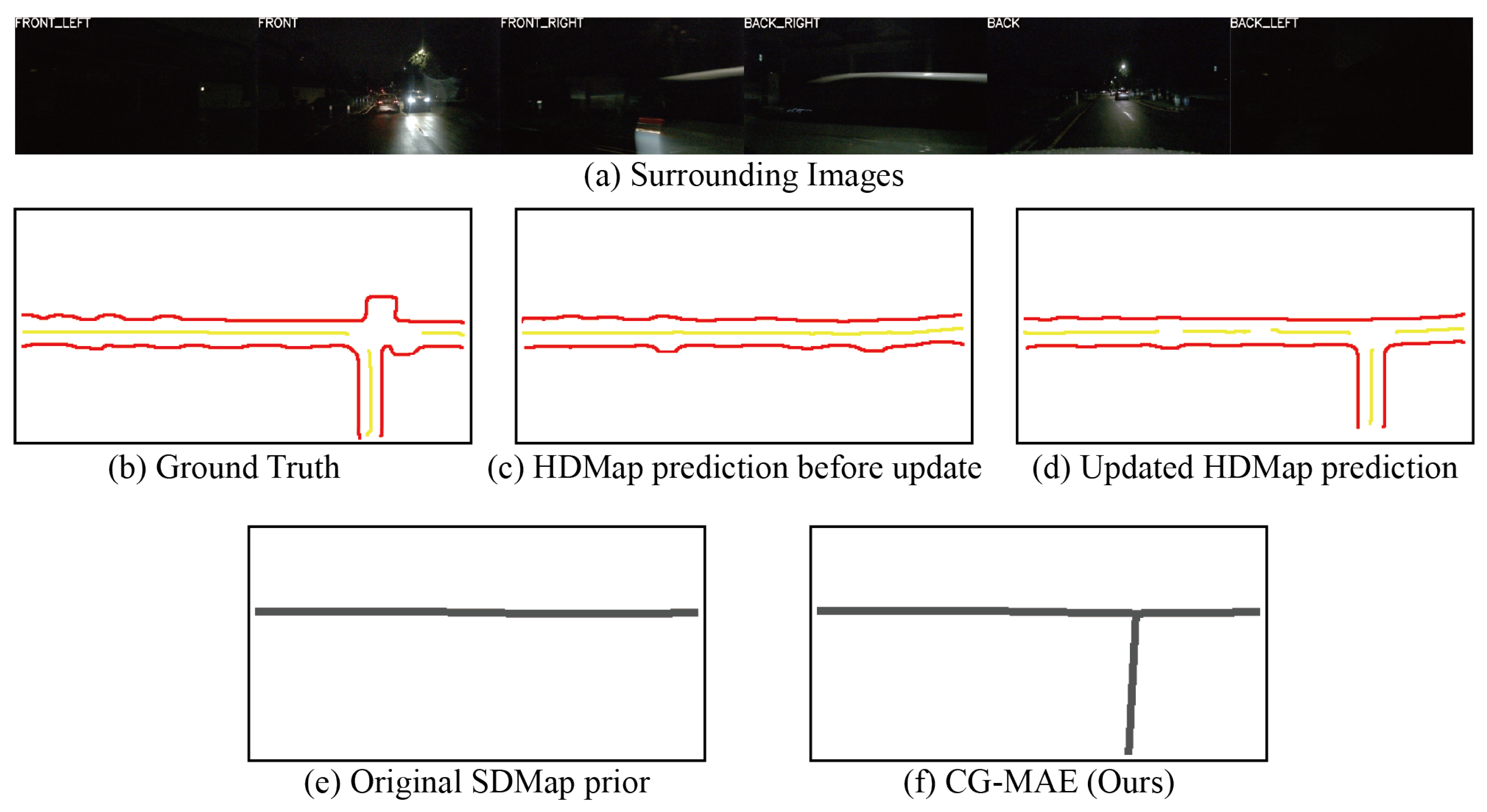

4.5. Impact of HDMap Closed-Loop Updates on SDMap Perception Performance Under Road Network Structure Changes

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Term | Description |
|---|---|
| HDMap | A high-definition map with centimeter-level geometry and rich semantics supporting localization and planning. |
| SDMap | A low-cost prior providing road topology and coarse geometry to assist online perception. |
| OpenStreetMap (OSM) | A community-curated SD map source used as prior for topology and geometry. |
| BEV | A top-down representation on the ground plane aligned with the map frame. |
| Bidirectional Adaptive Cross-Attention | A cross-modal alignment module predicting inter-modal correlation and spatial offsets. |
| SimilarityNet | A network predicting inter-modal correlation weights for fusion. |
| OffsetNet | A network predicting spatial offsets to align SDMap features. |
| GridSample | A differentiable sampling operator applying learned offsets for alignment. |
| CG-MAE | A confidence-guided masked autoencoder that reconstructs low-confidence regions to enhance details. |
| Confidence Gating | A fusion scheme combining CG-MAE output with the main prediction based on confidence. |
| Spatiotemporal Consistency | A constraint enforcing temporal and spatial coherence during SDMap updates. |
| Similarity Loss | A cross-modal alignment loss enforcing consistency between BEV and SDMap features. |
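The Confidence Gating entry above describes a fusion of the CG-MAE output with the main prediction, weighted by confidence. The exact gating formula is not given in this excerpt, so the following is only a minimal sketch under an assumed form: a per-cell convex blend in which high confidence keeps the main prediction and low confidence leans on the CG-MAE reconstruction. The function name `confidence_gate` and the plain nested-list BEV grids are hypothetical and chosen purely for illustration.

```python
def confidence_gate(main_pred, mae_pred, confidence):
    """Blend two BEV grids cell by cell (assumed form, not the paper's formula).

    fused = c * main + (1 - c) * mae, with c in [0, 1] per cell.
    All three inputs are equally shaped nested lists of floats.
    """
    fused = []
    for main_row, mae_row, conf_row in zip(main_pred, mae_pred, confidence):
        fused.append([
            c * m + (1.0 - c) * r
            for m, r, c in zip(main_row, mae_row, conf_row)
        ])
    return fused


if __name__ == "__main__":
    main = [[0.9, 0.2]]   # main-branch scores
    mae = [[0.5, 0.8]]    # CG-MAE reconstructed scores
    conf = [[1.0, 0.0]]   # fully trusted cell, fully distrusted cell
    print(confidence_gate(main, mae, conf))
```

With a confidence of 1 the cell keeps the main prediction unchanged, and with a confidence of 0 it takes the CG-MAE value; intermediate confidences interpolate linearly between the two.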

References

- Wang, C.; Aouf, N. Explainable deep adversarial reinforcement learning approach for robust autonomous driving. IEEE Trans. Intell. Veh. 2024, 10, 2551–2563.

- Reda, M.; Onsy, A.; Haikal, A.Y.; Ghanbari, A. Path planning algorithms in the autonomous driving system: A comprehensive review. Robot. Auton. Syst. 2024, 174, 104630.

- Xiao, Z.; Yang, D.; Wen, T.; Jiang, K.; Yan, R. Monocular localization with vector HD map (MLVHM): A low-cost method for commercial IVs. Sensors 2020, 20, 1870.

- Bao, Z.; Hossain, S.; Lang, H.; Lin, X. A review of high-definition map creation methods for autonomous driving. Eng. Appl. Artif. Intell. 2023, 122, 106125.

- Liu, R.; Wang, J.; Zhang, B. High definition map for automated driving: Overview and analysis. J. Navig. 2020, 73, 324–341.

- Tang, K.; Cao, X.; Cao, Z.; Zhou, T.; Li, E.; Liu, A.; Zou, S.; Liu, C.; Mei, S.; Sizikova, E.; et al. THMA: Tencent HD map AI system for creating HD map annotations. In Proceedings of the Thirty-Seventh AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 15585–15593.

- Chen, S.; Zhang, Y.; Liao, B.; Xie, J.; Cheng, T.; Sui, W.; Zhang, Q.; Huang, C.; Liu, W.; Wang, X. VMA: Divide-and-conquer vectorized map annotation system for large-scale driving scene. arXiv 2023, arXiv:2304.09807.

- Chen, Z.; Deng, L.; Luo, Y.; Li, D.; Junior, J.M.; Gonçalves, W.N.; Nurunnabi, A.A.M.; Li, J.; Wang, C.; Li, D. Road extraction in remote sensing data: A survey. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102833.

- Alshehhi, R.; Marpu, P.R. Hierarchical graph-based segmentation for extracting road networks from high-resolution satellite images. ISPRS J. Photogramm. Remote Sens. 2017, 126, 245–260.

- Zheng, S.; Wang, J.; Rizos, C.; Ding, W.; El-Mowafy, A. SLAM for autonomous driving: Concept and analysis. Remote Sens. 2023, 15, 1156.

- Batra, A.; Singh, S.; Pang, G.; Basu, S.; Jawahar, C.; Paluri, M. Improved road connectivity by joint learning of orientation and segmentation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Li, J.; Jia, P.; Chen, J.; Liu, J.; He, L.; Li, K. Local Map Construction with SDMap: A Comprehensive Survey. arXiv 2024, arXiv:2409.02415.

- Jiang, Z.; Zhu, Z.; Li, P.; Gao, H.-A.; Yuan, T.; Shi, Y.; Zhao, H.; Zhao, H. P-MapNet: Far-seeing map generator enhanced by both SDMap and HDMap priors. IEEE Robot. Autom. Lett. 2024, arXiv:2403.10521.

- Xiang, C.; Feng, C.; Xie, X.; Shi, B.; Lu, H.; Lv, Y.; Yang, M.; Niu, Z. Multi-sensor fusion and cooperative perception for autonomous driving: A review. IEEE Intell. Transp. Syst. Mag. 2023, 15, 36–58.

- Liao, B.; Chen, S.; Wang, X.; Cheng, T.; Zhang, Q.; Liu, W.; Huang, C. MapTR: Structured modeling and learning for online vectorized HD map construction. arXiv 2022, arXiv:2208.14437.

- Li, Q.; Wang, Y.; Chen, L. HDMapNet: An Online HD Map Construction and Evaluation Framework. IEEE T-ITS 2022, 23, 2105–2118.

- Wu, H.; Zhang, Z.; Lin, S.; Qin, T.; Pan, J.; Zhao, Q.; Xu, C.; Yang, M. BLOS-BEV: Navigation map enhanced lane segmentation network, beyond line of sight. In Proceedings of the 2024 IEEE Intelligent Vehicles Symposium (IV), Jeju Island, Republic of Korea, 2–5 June 2024.

- Liao, B.; Chen, S.; Zhang, Y.; Jiang, B.; Zhang, Q.; Liu, W.; Huang, C.; Wang, X. MapTRv2: An end-to-end framework for online vectorized HD map construction. Int. J. Comput. Vis. 2025, 133, 1352–1374.

- Tang, X.; Jiang, K.; Yang, M.; Liu, Z.; Jia, P.; Wijaya, B.; Wen, T.; Cui, L.; Yang, D. High-definition maps construction based on visual sensor: A comprehensive survey. IEEE Trans. Intell. Veh. 2024, 9, 5973–5994.

- Zhu, X.; Cao, X.; Dong, Z.; Zhou, C.; Liu, Q.; Li, W.; Wang, Y. NeMo: Neural map growing system for spatiotemporal fusion in bird’s-eye view and BDD-Map benchmark. arXiv 2023, arXiv:2306.04540.

- Liu, Y.; Yuan, T.; Wang, Y.; Wang, Y.; Zhao, H. VectorMapNet: End-to-end vectorized HD map learning. In Proceedings of the 2023 International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; pp. 22352–22369.

- Tang, L.; Deng, Y.; Ma, Y.; Huang, J.; Ma, J. SuperFusion: A versatile image registration and fusion network with semantic awareness. IEEE/CAA J. Autom. Sin. 2022, 9, 2121–2137.

- Bastani, F.; He, S.; Abbar, S.; Alizadeh, M.; Balakrishnan, H.; Chawla, S.; Madden, S.; DeWitt, D. RoadTracer: Automatic extraction of road networks from aerial images. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 4720–4728.

- He, S.; Bastani, F.; Jagwani, S.; Alizadeh, M.; Balakrishnan, H.; Chawla, S.; Elshrif, M.M.; Madden, S.; Sadeghi, A. Sat2Graph: Road graph extraction through graph-tensor encoding. In Proceedings of the Computer Vision—ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 51–67.

- Van Etten, A.; Lindenbaum, D.; Bacastow, T.M. SpaceNet: A remote sensing dataset and challenge series. arXiv 2018, arXiv:1807.01232.

- Mai, G.; Huang, W.; Sun, J.; Song, S.; Mishra, D.; Liu, N.; Gao, S.; Liu, T.; Cong, G.; Hu, Y.; et al. Opportunities and challenges of foundation models for GeoAI. ACM T-SAS 2024, 10, 1–46.

- Qin, T.; Zheng, Y.; Chen, T.; Chen, Y.; Su, Q. A light-weight semantic map for visual localization towards autonomous driving. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 11248–11254.

- Chen, W.; Shang, G.; Ji, A.; Zhou, C.; Wang, X.; Xu, C.; Li, Z.; Hu, K. An overview on visual SLAM: From tradition to semantic. Remote Sens. 2022, 14, 3010.

- Alsadik, B.; Karam, S. The SLAM: An overview. Surv. Geospat. Eng. J. 2021, 1, 1–12.

- Mooney, P.; Minghini, M. A review of OpenStreetMap data. In Mapping and the Citizen Sensor; Ubiquity Press: London, UK, 2017; pp. 37–59.

- Zhang, H.; Paz, D.; Guo, Y.; Das, A.; Huang, X.; Haug, K.; Christensen, H.I.; Ren, L. Enhancing online road network perception and reasoning with standard definition maps. In Proceedings of the 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Abu Dhabi, United Arab Emirates, 14–18 October 2024; pp. 1086–1093.

- Plachetka, C.; Maier, N.; Fricke, J.; Termohlen, J.-A.; Fingscheidt, T. Terminology and analysis of map deviations in urban domains. In Proceedings of the 2020 IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 19 October–13 November 2020; pp. 63–70.

- Xu, H.; Xiao, Y.; Li, W.; Hu, Y. Generating Synthetic Deviation Maps for Prior-Enhanced Vectorized HD Map Construction. In Proceedings of the 2025 IEEE Intelligent Vehicles Symposium (IV), Cluj-Napoca, Romania, 22–25 June 2025; pp. 419–426.

- Biagioni, J.; Eriksson, J. Map inference in the face of noise and disparity. In Proceedings of the SIGSPATIAL ’12: Proceedings of the 20th International Conference on Advances in Geographic Information Systems, Redondo Beach, CA, USA, 6–9 November 2012; pp. 79–88.

- Zhang, M.; Zhang, Y.; Zhang, L.; Liu, C.; Khurshid, S. DeepRoad: GAN-based metamorphic testing and input validation for ADS. In Proceedings of the 2018 33rd IEEE/ACM International Conference on Automated Software Engineering (ASE), Montpellier, France, 3–7 September 2018.

- Xia, D.; Zhang, W.; Liu, X.; Zhang, W.; Gong, C.; Tan, X.; Huang, J.; Yang, M.; Yang, D. LDMapNet-U: An End-to-End System for City-Scale Lane-Level Map Updating. arXiv 2025, arXiv:2501.02763.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

- Philion, J.; Fidler, S. Lift, Splat, Shoot: Encoding images by implicitly unprojecting to 3D. In Proceedings of the Computer Vision—ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020.

- Koonce, B. EfficientNet. In CNNs with Swift for TensorFlow; Apress: Berkeley, CA, USA, 2021; pp. 109–123.

- Bertozzi, M.; Broggi, A.; Fascioli, A. Stereo inverse perspective mapping: Theory and applications. Image Vis. Comput. 1998, 16, 585–590.

- Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. PointPillars: Fast encoders for object detection from point clouds. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep learning on point sets for 3D classification and segmentation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Huang, J.; Huang, G.; Zhu, Z.; Ye, Y.; Du, D. BEVDet: High-performance multi-camera 3D object detection in BEV. arXiv 2021, arXiv:2112.11790.

- Targ, S.; Almeida, D.; Lyman, K. ResNet in ResNet: Generalizing residual architectures. arXiv 2016, arXiv:1603.08029.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- He, K.; Chen, X.; Xie, S.; Li, Y.; Dollár, P.; Girshick, R. Masked autoencoders are scalable vision learners. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022.

| Range | Method | Epoch | Modal | Div. | Ped. | Bound. | mIoU | FPS |
|---|---|---|---|---|---|---|---|---|
| 60 × 30 | HDMapNet [16] | 30 | C + L | 45.13 | 30.76 | 56.05 | 43.98 | 21.62 |
| 60 × 30 | P-MapNet [13] | 30 | C + L + SD | 53.35 | 39.81 | 63.36 | 52.17 | 9.65 |
| 60 × 30 | Ours | 30 | C + L + SD | 54.26 | 40.13 | 63.97 | 52.79 | 8.10 |
| 120 × 60 | HDMapNet [16] | 30 | C + L | 54.18 | 38.03 | 57.92 | 50.04 | 21.55 |
| 120 × 60 | P-MapNet [13] | 30 | C + L + SD | 63.64 | 50.25 | 66.95 | 60.28 | 9.92 |
| 120 × 60 | Ours | 30 | C + L + SD | 64.26 | 51.32 | 67.58 | 61.05 | 7.53 |
| 240 × 60 | HDMapNet [16] | 30 | C + L | 39.69 | 26.42 | 43.53 | 36.55 | 13.58 |
| 240 × 60 | P-MapNet [13] | 30 | C + L + SD | 52.46 | 41.83 | 53.56 | 49.28 | 6.92 |
| 240 × 60 | Ours | 30 | C + L + SD | 53.26 | 42.14 | 54.37 | 49.92 | 6.50 |
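The mIoU column in the table above can be reproduced as the arithmetic mean of the three per-class IoU columns. The short script below is a sanity check on the reported numbers, not part of the authors' evaluation code. Reading Div., Ped., and Bound. as divider, pedestrian crossing, and boundary is an assumption based on the column abbreviations, and the names `RESULTS`, `mean_iou`, `is_consistent`, and `gain_over_pmapnet` are hypothetical.

```python
# Per-class IoU and reported mIoU (all in %), copied from the table above.
# Key: (range, method); value: (divider, ped_crossing, boundary, reported_miou).
RESULTS = {
    ("60 × 30", "HDMapNet"): (45.13, 30.76, 56.05, 43.98),
    ("60 × 30", "P-MapNet"): (53.35, 39.81, 63.36, 52.17),
    ("60 × 30", "Ours"): (54.26, 40.13, 63.97, 52.79),
    ("120 × 60", "HDMapNet"): (54.18, 38.03, 57.92, 50.04),
    ("120 × 60", "P-MapNet"): (63.64, 50.25, 66.95, 60.28),
    ("120 × 60", "Ours"): (64.26, 51.32, 67.58, 61.05),
    ("240 × 60", "HDMapNet"): (39.69, 26.42, 43.53, 36.55),
    ("240 × 60", "P-MapNet"): (52.46, 41.83, 53.56, 49.28),
    ("240 × 60", "Ours"): (53.26, 42.14, 54.37, 49.92),
}


def mean_iou(divider, ped_crossing, boundary):
    """Unweighted mean of the three per-class IoU values."""
    return (divider + ped_crossing + boundary) / 3.0


def is_consistent(row, tol=0.006):
    """True if the reported mIoU matches the mean up to two-decimal rounding."""
    *per_class, reported = row
    return abs(mean_iou(*per_class) - reported) <= tol


def gain_over_pmapnet(map_range):
    """mIoU margin (in points) of 'Ours' over P-MapNet at one range."""
    return RESULTS[(map_range, "Ours")][3] - RESULTS[(map_range, "P-MapNet")][3]


if __name__ == "__main__":
    for key, row in RESULTS.items():
        print(key, round(mean_iou(*row[:3]), 2), is_consistent(row))
    for map_range in ("60 × 30", "120 × 60", "240 × 60"):
        print(map_range, round(gain_over_pmapnet(map_range), 2))
```

On these numbers every row is consistent with the mean, and the mIoU margin over P-MapNet is 0.62, 0.77, and 0.64 points at the three ranges, while the FPS column shows the proposed model running slower than P-MapNet at every range.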

| Max Pos. | Max Yaw | Method | Div. | Ped. | Bound. | mIoU |
|---|---|---|---|---|---|---|
| 0 m | 0° | P-MapNet [13] | 63.64 | 50.25 | 66.95 | 60.28 |
| 0 m | 0° | Ours | 64.26 | 51.32 | 67.58 | 61.05 |
| 5 m | 1° | P-MapNet [13] | 63.31 | 49.87 | 66.03 | 59.74 |
| 5 m | 1° | Ours | 64.07 | 51.43 | 67.45 | 60.98 |
| 20 m | 5° | P-MapNet [13] | 60.75 | 47.63 | 62.37 | 56.92 |
| 20 m | 5° | Ours | 63.96 | 51.05 | 66.38 | 60.46 |
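One way to read the robustness table above is as the mIoU lost relative to the noise-free setting (0 m, 0°). The sketch below computes that drop for each method from the reported values only; the names `ROBUSTNESS` and `miou_drop` are hypothetical, and the script is a worked arithmetic example rather than the authors' evaluation procedure.

```python
# (max position error in m, max yaw error in deg, method, reported mIoU in %),
# copied from the table above.
ROBUSTNESS = [
    (0, 0, "P-MapNet", 60.28),
    (0, 0, "Ours", 61.05),
    (5, 1, "P-MapNet", 59.74),
    (5, 1, "Ours", 60.98),
    (20, 5, "P-MapNet", 56.92),
    (20, 5, "Ours", 60.46),
]


def miou_drop(rows, method):
    """Map each noisy setting (pos, yaw) to the mIoU lost vs. the clean setting."""
    baseline = next(
        miou for pos, yaw, name, miou in rows
        if name == method and (pos, yaw) == (0, 0)
    )
    return {
        (pos, yaw): round(baseline - miou, 2)
        for pos, yaw, name, miou in rows
        if name == method and (pos, yaw) != (0, 0)
    }


if __name__ == "__main__":
    for method in ("P-MapNet", "Ours"):
        print(method, miou_drop(ROBUSTNESS, method))
```

At the largest perturbation (20 m, 5°) P-MapNet loses 3.36 mIoU points while the proposed model loses 0.59; at (5 m, 1°) the drops are 0.54 and 0.07 points respectively.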

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tang, Y.; Hu, J.; Zhang, D.; Xu, W.; Zhao, F.; Cheng, X. Loop-MapNet: A Multi-Modal HDMap Perception Framework with SDMap Dynamic Evolution and Priors. Appl. Sci. 2025, 15, 11160. https://doi.org/10.3390/app152011160
