BMIT: A Blockchain-Based Medical Insurance Transaction System

Abstract

1. Introduction

- Selection of underlying architecture: The FISCO BCOS consortium blockchain is adopted as the base platform. Its distributed ledger, permission management, and optimized PBFT consensus mechanism allow multiple entities, such as hospitals, patients, and insurance companies, to maintain data collaboratively while keeping transactions traceable and immutable.

- Design of roles and processes: The functional boundaries of the four parties are clarified. Hospitals are responsible for collecting medical data and generating 3D coordinate hash values for on-chain storage through an improved Bloom filter. Patients control data access permissions through private keys and unique verification parameters (keys). Insurance companies verify insurance eligibility and claim conditions based on on-chain Bloom filters. The blockchain network stores encrypted core data (such as user public keys, Bloom filter coordinates, timestamps, etc.), achieving “data availability without visibility.”

- Innovation in privacy protection: To address the false positive problem of traditional Bloom filters, the filter is upgraded to a 3D structure that stores only the 3D coordinates of medical data rather than the original information. Combined with user-specific hash functions and key control, this design reduces storage costs (only 1.27 KB for 100 records) and lowers the error rate to nearly 0 (a reduction compared to the traditional scheme when processing 10,000 data entries).

- Support from smart contracts: Registration contracts, medical information data contracts, and other contracts are developed to automate identity authentication, on-chain data storage, and verification. In a 4-node consortium chain test, the system achieved a throughput of 736 TPS with an overall computational overhead of approximately 181.7 ms per process, meeting the needs of large-scale applications.

2. Related Work

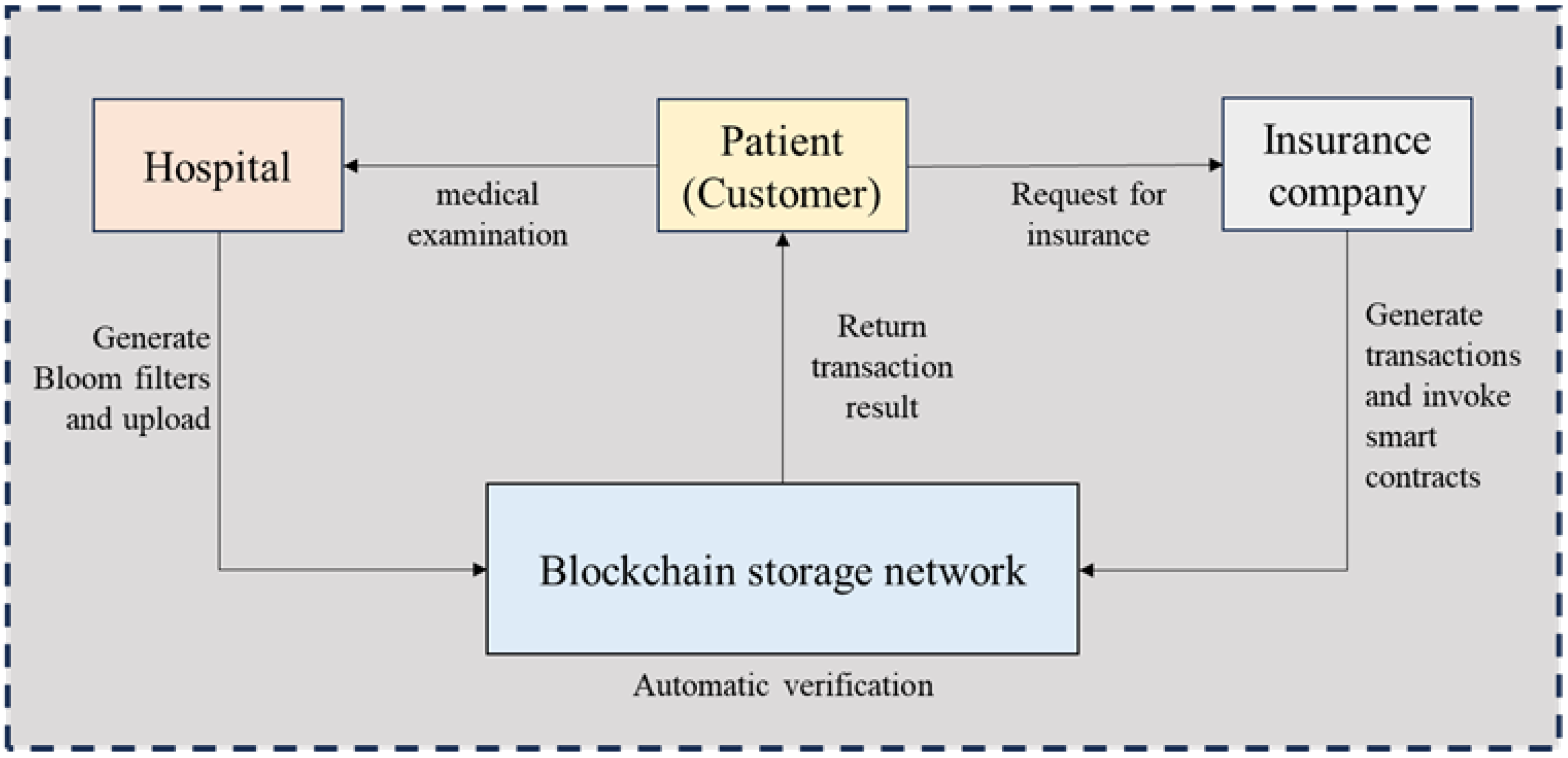

3. Design of Blockchain-Based Medical Insurance Transaction Model

3.1. Design Goal

- Fraud Prevention and Transparency Enhancement: Transaction data should be recorded on the blockchain in chronological order, leveraging blockchain and cryptographic technologies to ensure data transparency, immutability, traceability, verifiability, and censorship resistance. This guarantees the tamper-proof nature of health data and transaction records, preventing insurance companies or hospitals from falsifying data;

- Privacy Protection and Data Minimization: Bloom filters store hashes of health information rather than raw sensitive data (e.g., hypertension diagnoses) on the blockchain, retaining only the capability for existence verification. This reduces the risk of privacy leakage by minimizing the exposure of sensitive information;

- Efficient Querying: Leveraging the low storage requirements and fast verification of Bloom filters, smart contracts return query results rapidly, support high-concurrency transaction processing, and meet the needs of large user bases.

3.2. Why Blockchain Is Needed

3.3. System Model

- Hospitals: Responsible for collecting patients’ health data (e.g., hypertension; diabetes), processing it through a hash function, storing it in a Bloom filter, and uploading the data digest to the blockchain;

- Patients (Customers): Entities that assume different roles when interacting with different parties, each holding a unique private key and a unique verification parameter:
  - As patients: After working with hospitals to collect health data, they provide the unique verification parameter to help the hospital process the data, then sign the result with their unique private key so that the hospital can upload the processed data to the blockchain;
  - As customers of insurance companies: They provide the unique verification parameter to help insurance companies validate eligibility criteria;

- Blockchain Network: Serving as the core layer, it stores the set of 3D coordinates processed by the improved Bloom filter and provides an environment for data verification and transaction execution through smart contracts;

- Insurance Companies: Initiate query requests via smart contracts, using the Bloom filter to determine whether patients’ health conditions meet the criteria for insurance application/claim settlement.

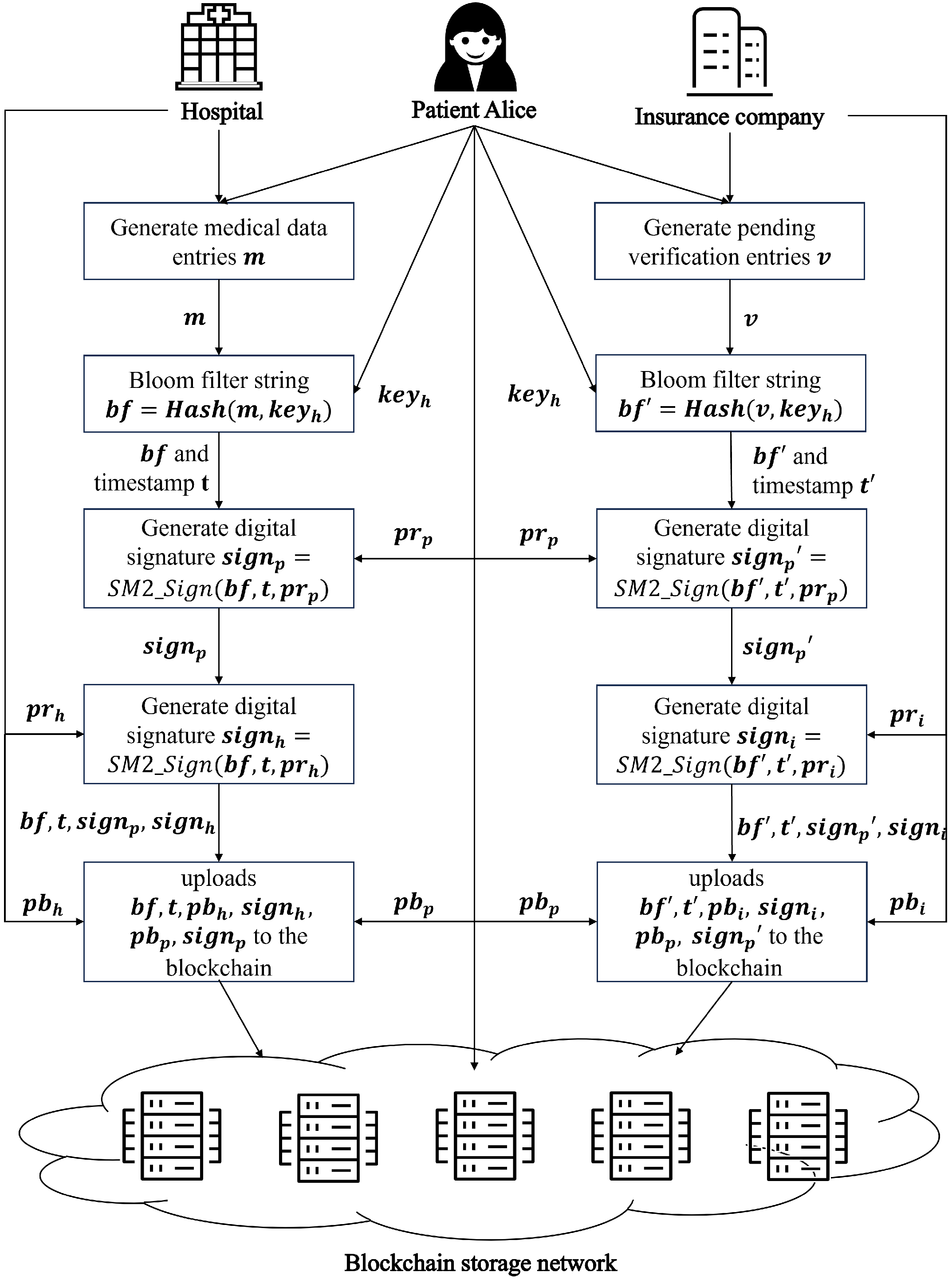

- Registration of Roles in the Blockchain Network: First, patients, hospitals, and insurance companies register in the blockchain network. The blockchain network assigns each role a unique public key address pb and a unique private key pr. The public key serves as the role’s unique account, while each role stores its private key independently. For users, a unique verification parameter keyh is also generated during registration, encrypted with the user’s public key, and stored on the blockchain network to prevent malicious tampering. Because only the user’s private key can decrypt this ciphertext, only the user can recover keyh.

- Interaction Between Patients and Hospitals: Patients (customers) undergo health checks at hospitals in daily life, and each check generates a medical data term m (e.g., “hypertension”). The process is as follows:

  - (a) The hospital and the patient (customer) jointly retrieve the unique verification parameter keyh stored in the blockchain network;
  - (b) The term m is processed through a specialized Bloom filter based on keyh, generating a string bf containing the 3D coordinate set of the health check term;
  - (c) The patient (customer) uses their unique private key and a timestamp t to produce an SM2 digital signature on bf, obtaining the patient’s signature;
  - (d) The hospital uses its unique private key and the timestamp t to sign bf again, generating the second (hospital) signature;
  - (e) The hospital uploads bf, the two signatures, and the public keys of the patient and the hospital to the blockchain network;
  - (f) The blockchain network first checks the timestamp t: if t is earlier than the stored timestamp, the request is rejected as invalid or outdated; if t is later, the network verifies both signatures using the corresponding public keys. Upon successful verification, bf and t are saved under the patient’s unique blockchain account.

- Interaction Between Patients and Insurance Companies:
  - Insurance Purchase: When a patient (customer) applies for insurance, the insurance company:
    - Works with the user through a smart contract to retrieve keyh and the 3D coordinate set string bf from the blockchain;
    - Generates health data parameter terms v based on the policy requirements and checks them against bf using the user’s specialized Bloom filter. For example, if a query for “hypertension” returns a match in bf, the insurance application is rejected; otherwise, an electronic policy is issued.
  - Claims Settlement: When a patient (customer) files a claim, they do not need to provide medical records manually. The insurance company checks the Bloom filter stored on the blockchain: if the required medical condition exists in bf, the claim is approved; otherwise, it is rejected.
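The ledger-side check in step (f) of the patient-hospital interaction can be sketched as follows. This is a minimal Python sketch under stated assumptions, not BMIT’s contract code: SM2 is not in the Python standard library, so signature verification is injected as a hypothetical `verify(public_key, message, signature)` callable; both parties are assumed to sign `bf` bound to `t`; and an upload whose `t` equals the stored timestamp is treated as stale, a case the text does not specify. `UploadRequest`, `Ledger`, and `submit` are illustrative names.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical interface: verify(public_key, message, signature) -> bool.
# In BMIT this would be an SM2 signature check.
Verifier = Callable[[str, bytes, bytes], bool]


@dataclass
class UploadRequest:
    patient_pk: str
    hospital_pk: str
    bf: str              # serialized 3D coordinate set
    t: int               # timestamp, e.g. Unix epoch milliseconds
    patient_sig: bytes   # step (c)
    hospital_sig: bytes  # step (d)


@dataclass
class Ledger:
    verify: Verifier
    # patient public key -> accepted (bf, t) versions, oldest first
    records: Dict[str, List[Tuple[str, int]]] = field(default_factory=dict)

    def submit(self, req: UploadRequest) -> bool:
        history = self.records.get(req.patient_pk, [])
        # Step (f): reject uploads that are not newer than the stored version.
        if history and req.t <= history[-1][1]:
            return False
        # Assumed signed message: bf bound to the timestamp t.
        message = f"{req.bf}|{req.t}".encode()
        if not self.verify(req.patient_pk, message, req.patient_sig):
            return False
        if not self.verify(req.hospital_pk, message, req.hospital_sig):
            return False
        self.records.setdefault(req.patient_pk, []).append((req.bf, req.t))
        return True
```

Keeping every accepted (bf, t) pair, rather than overwriting the previous one, mirrors the use of t to identify data versions in Section 3.4; a contract could equally keep only the latest version.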

3.4. BMIT Data Storage Solution

- Rigid Storage Cost Growth: For Amazon EC2 nodes, storing 1 TB of blockchain data costs USD 276 per year. A network with tens of thousands of nodes would incur total storage expenses exceeding hundreds of millions of US dollars.

- Node Synchronization Efficiency Degradation: Bitcoin’s median block propagation delay reaches 12.6 s, while new nodes require 72 h to complete initial block synchronization (under 10 Mbps bandwidth conditions).

- State Verification Performance Bottlenecks: Ethereum’s global state tree contains 140 million account nodes, with average query latency increasing from 8 ms in 2016 to 53 ms currently.

- Patient public key: A unique public key issued to the patient upon registration in the blockchain system. It serves as the primary key for on-chain data storage, enabling data retrieval. As a public key, it also validates the SM2 digital signatures uploaded by users (e.g., verifying whether the signature on user-uploaded data was generated by the legitimate user);

- Bloom Filter String bf: This core data component stores the 3D coordinate values of medical terms processed by the 3D Bloom filter and deliberately avoids storing the complete filter structure. The design exploits the sparsity of real-world data: an individual patient typically activates fewer than 100 markers in the filter space. By storing only populated coordinates, the system greatly reduces storage while preserving the essential verification capability;

- Timestamp t: A timestamp (e.g., Unix epoch time with millisecond precision) recorded when the user uploads the Bloom filter string at the hospital, enabling chronological identification of data versions;

- Encrypted verification parameter: The ciphertext of the user’s unique verification parameter, produced by encrypting it directly with the user’s public key. Only the holder of the corresponding private key can decrypt this ciphertext to recover the original parameter. Patients then provide the decrypted parameter to hospitals or insurance providers, enabling these entities to generate the corresponding Bloom filter string from the patient’s medical data;

- Hospital public key: A unique public key issued to the hospital upon blockchain registration. Together with the timestamp t, it forms a composite identifier for medical examination records, facilitating future data audits.

4. Blockchain Network Platform

4.1. Classification of Blockchain Platforms

- Public Chain Platforms: Represented by Ethereum, they support the deployment of smart contracts and the development of decentralized applications (DApps) and are the most active blockchain networks in the global developer ecosystem. As the earliest public chain, Bitcoin remains the cryptocurrency network with the highest market value, with its security and degree of decentralization widely recognized. Additionally, emerging public chains such as Solana and Avalanche have risen rapidly in decentralized finance (DeFi) and non-fungible token (NFT) fields, relying on their high TPS (transactions per second) performance;

- Consortium Chain Platforms: Managed jointly by multiple pre-selected organizations, nodes require authorization to join, and read/write permissions are customized according to alliance rules, making them suitable for inter-institutional collaboration (e.g., finance and supply chains). For example, Hyperledger Fabric—led by the Linux Foundation—is specifically designed for enterprise-level applications, supporting permission management and flexible consensus mechanisms, and is widely used in scenarios such as supply chains and cross-border trade. R3 Corda focuses on the financial services sector and has become the preferred solution for inter-bank settlement, insurance claims, and other scenarios through its unique “peer-to-peer transaction privacy protection” technology. China’s consortium chain platforms, such as Ant Chain and WeBank’s FISCO BCOS, have implemented numerous practical applications in fields such as government services and digital copyright;

- Private Blockchain Platforms: Controlled by a single organization, with highly centralized node access and permissions, these platforms suit scenarios such as internal enterprise audits and government management (e.g., the digital currency systems of some central banks).

4.2. Advantages of Consortium Blockchains

- In terms of permissions: The core feature of public blockchains is “full decentralization”: any node can freely join or leave the network, and data is visible to all participants. While this ensures extreme decentralization and transparency, it increases the risk of intrusion by malicious nodes from unknown entities and fails to meet institutions’ requirements for data privacy (e.g., corporate business data; sensitive government information). Private blockchains, by contrast, are completely closed, with all nodes controlled by a single institution, which yields high data privacy but centralized permissions. This sacrifices blockchain’s core value of “multi-node collaboration,” making them more akin to traditional distributed databases. Consortium blockchains require nodes to be authorized by consortium institutions (e.g., an inter-bank consortium blockchain only allows member banks to access it), so unauthorized nodes can neither participate in ledger maintenance nor view sensitive data. Meanwhile, consortium nodes jointly maintain the ledger, avoiding the “single-entity control risk” of private blockchains while addressing the “privacy leakage risks” of public blockchains through permission management. This makes them particularly suitable for multi-institutional collaboration scenarios (e.g., upstream and downstream enterprises in supply chains; cross-departmental government collaboration);

- In terms of performance and efficiency: To ensure decentralization and security, public blockchains typically adopt inefficient consensus mechanisms such as “Proof of Work (PoW)” (e.g., Bitcoin supports only about 7 transactions per second). In addition, all nodes must synchronize the full data, leading to slow transaction confirmation and high costs (e.g., soaring transaction fees during Ethereum network congestion). Consortium blockchains significantly improve performance by optimizing consensus mechanisms (e.g., adopting “Practical Byzantine Fault Tolerance (PBFT)” or “RAFT”). Because the nodes are limited in number and trusted (consortium institutions are usually known and reputable), no computing-power competition is needed to validate transactions. Transaction confirmation time can be shortened to seconds or even milliseconds, with throughput reaching thousands of transactions per second (TPS) or more (e.g., Hyperledger Fabric can achieve tens of thousands of TPS). Furthermore, consortium blockchains can flexibly design data storage strategies (e.g., synchronizing only key transaction information instead of the full ledger), further reducing node burdens and meeting the needs of high-frequency transaction scenarios (e.g., cross-border payments; real-time updates of supply chain logistics information);

- In terms of operation and maintenance: Public blockchains rely on “miners” or “validator nodes,” and their incentive mechanisms (e.g., Bitcoin mining rewards) result in high operational costs (e.g., electricity and hardware investments). Moreover, the threshold for ordinary users to participate in ledger maintenance is extremely high (requiring meeting computing power or token staking requirements). Consortium blockchains have a limited number of nodes (usually dozens to hundreds) with operational costs jointly borne by consortium institutions, without reliance on external incentives. Hardware and network investments for nodes can be flexibly configured according to consortium needs, significantly lowering the technical threshold for multi-institutional collaboration (e.g., small and medium-sized enterprises joining industry consortium blockchains do not need to build complex infrastructure themselves);

- In terms of rules: Public blockchain rules (e.g., consensus mechanisms; smart contract logic) are difficult to modify once deployed and require “hard forks” for upgrades—demanding consensus from all network nodes, resulting in extremely poor flexibility. Consortium blockchains can flexibly customize technical rules based on the joint needs of consortium institutions: for example, adjusting consensus mechanisms to adapt to different trust environments (e.g., using PBFT for low-trust scenarios and RAFT for high-trust scenarios); designing differentiated data permissions (e.g., core transaction data visible only to key consortium nodes, while basic logistics information is public to all nodes); and even supporting dynamic upgrades of smart contracts (without forking). This customization capability allows them to precisely match industry scenarios (e.g., medical consortium blockchains need to protect patient privacy while enabling hospitals to share diagnostic data).

- First, the precise division of access permissions is the primary safeguard of system information security. Because the data processed by the system may include hospital diagnosis records, patients’ personal health information, and insurance companies’ business data, all of which are highly sensitive and private, any leakage could cause serious losses to the parties involved. Access permissions must therefore be divided strictly and precisely. Specifically, only hospitals, patients, and insurance companies that have formally registered and completed identity verification are granted system access. This blocks unauthorized access at the source and minimizes the risk of privacy leaks. For example, hospitals must submit qualification documents during registration to confirm their legitimate medical service qualifications; patients must complete real-name registration and identity verification to protect their legal rights to their health information; and insurance companies must provide business license documents to ensure that their access complies with industry norms and legal regulations;

- Second, the system’s operational efficiency is critical to meeting large-scale application scenarios. With the accelerating digitalization of the medical industry and the continuous expansion of insurance services, the system will inevitably face massive transaction processing demands in the future, placing high requirements on its transactions per second (TPS). While public blockchains offer decentralization and transparency, their consensus mechanisms often result in slow processing speeds when handling large-scale transactions, failing to meet high-concurrency business needs. Consortium blockchains, by contrast—managed jointly by pre-selected nodes—maintain a certain degree of decentralization while significantly improving transaction efficiency through optimized consensus mechanisms, achieving higher TPS. This enables consortium blockchains to better handle potential large-scale transaction scenarios, ensuring stable operation even under high loads. Therefore, considering the system’s efficiency requirements, consortium blockchains are more suitable than public blockchains for this system.

4.3. FISCO BCOS

- Security: It fully adopts national cryptographic algorithms such as SM2 and SM3, strictly complies with national cryptographic standards, and provides high-strength encryption protection for the entire process of data transmission, storage, and transactions. It is particularly suitable for fields with extremely high security requirements, such as healthcare and finance;

- Performance: Through technological innovations such as the optimized PBFT consensus mechanism and parallel computing, it can support high-concurrency transaction processing, effectively meeting the efficiency needs of large-scale business scenarios;

- Flexibility: It provides rich smart contract interfaces and development tools, allowing developers to quickly build and deploy applications according to actual business needs. It also supports dynamic node expansion and flexible permission configuration, adapting to collaboration among organizations of different scales;

- Cost advantage: Because FISCO BCOS is fully open source (with code hosted on GitHub), enterprises do not need to pay licensing fees, and its supporting full-link toolchain significantly lowers the development threshold;

- Ecological and implementation capabilities: It has one of the most active consortium blockchain ecosystems in China. A community of over 100,000 developers provides detailed documents, tutorials, and offline training support. To date, it has been implemented in over 300 cases across fields such as finance (supply chain finance; cross-border payment), government affairs (electronic certificates; judicial evidence storage), and public welfare (medical data sharing; charity traceability), verifying its adaptability in actual business.

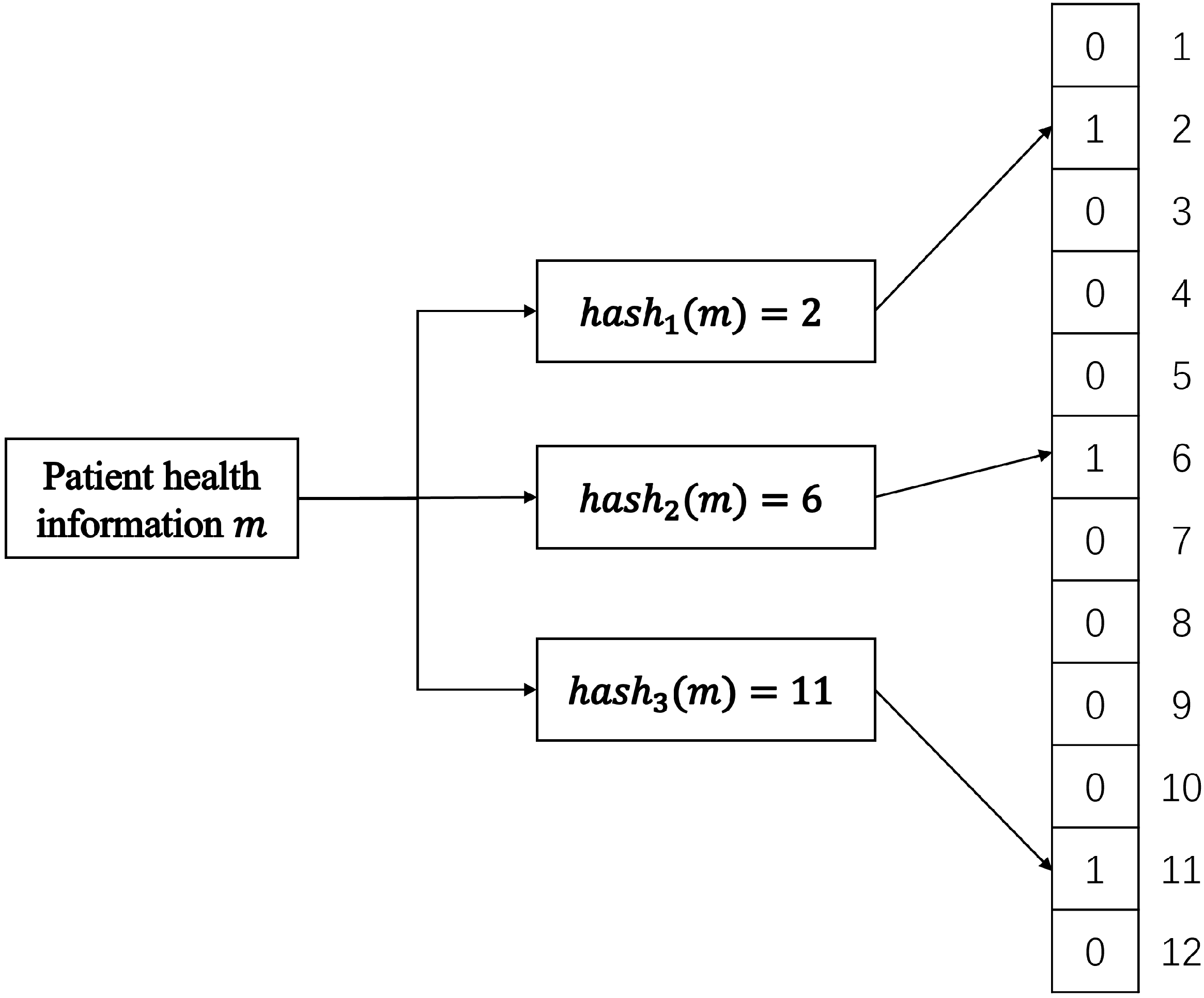

5. Bloom Filter in BMIT

5.1. The Traditional Bloom Filter

- Adding Elements: To add an element to the Bloom filter, k distinct hash functions are applied to it. Each hash function produces an index value between 0 and m-1, and the corresponding bits at these index positions in the bit array are set to 1. For example, if the first hash function yields index 10 and the second yields index 25, bits 10 and 25 in the array are set to 1. This process uses multiple hash functions to map the element onto the bit array from different perspectives, creating a distributed representation;

- Querying Elements: To check whether an element is present, the same k hash functions are applied to it, yielding k index values. The Bloom filter then checks whether all the bits at these index positions are set to 1:
  - If all corresponding bits are 1, the Bloom filter considers the element possibly present in the set;
  - If any bit is 0, the element is definitely not in the set.
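The add and query operations above can be sketched in Python as follows. This is an illustrative sketch, not BMIT’s code: the k hash functions are derived by salting SHA-256 with the function index, a common construction assumed here, and `BloomFilter` and `might_contain` are hypothetical names.

```python
import hashlib


class BloomFilter:
    """Conventional Bloom filter: an m-bit array and k hash functions."""

    def __init__(self, m: int, k: int) -> None:
        self.m = m
        self.k = k
        self.bits = bytearray((m + 7) // 8)  # m bits, initially all 0

    def _indexes(self, item: str):
        # Derive k indexes in [0, m-1] by salting SHA-256 with the function number.
        for i in range(self.k):
            digest = hashlib.sha256(f"{i}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.m

    def add(self, item: str) -> None:
        # Set the bit at each of the k index positions to 1.
        for idx in self._indexes(item):
            self.bits[idx // 8] |= 1 << (idx % 8)

    def might_contain(self, item: str) -> bool:
        # True  -> possibly present (false positives are possible)
        # False -> definitely absent (at least one bit is 0)
        return all(self.bits[idx // 8] & (1 << (idx % 8))
                   for idx in self._indexes(item))
```

A false result is always correct, while a true result may come from bits set by other elements; this asymmetry is what the improved filter in Section 5.2 targets.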

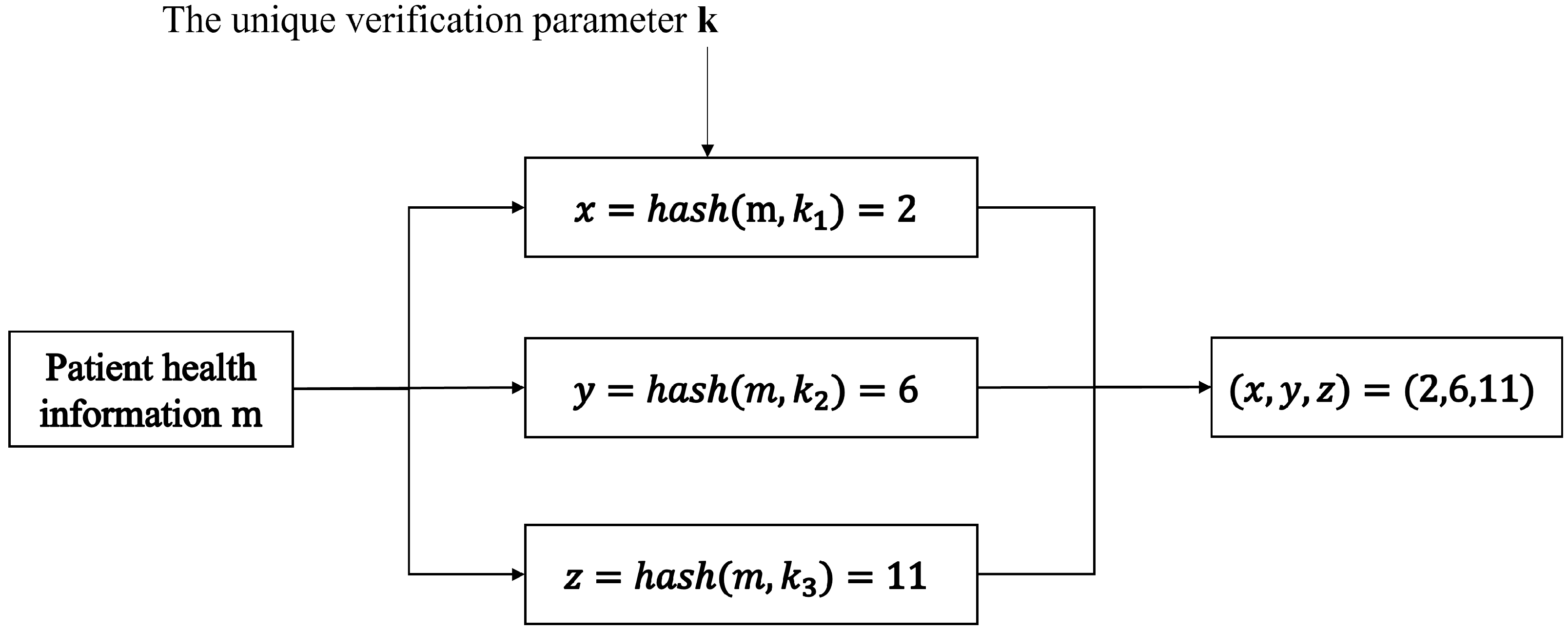

5.2. Improved Bloom Filter for BMIT

- Since we are maintaining medical information for individual patients, a single patient cannot have an excessive number of disease terms. Therefore, an extremely large indexing space is unnecessary;

- Given the involvement of insurance claims, insurance companies naturally desire the lowest possible false positive rate, prioritizing accuracy over retrieval speed.

- From Bit Array to Multidimensional Array: We replace the one-dimensional bit array with a multidimensional array structure. Considering practical needs, we specifically adopt a three-dimensional array. This structure significantly reduces the mutual influence between different elements, thereby greatly lowering the probability of false positives during retrieval;

- Storing Array Coordinates on the Blockchain: Instead of storing the entire Bloom filter array, we store only the coordinates within the three-dimensional array on the blockchain. This approach is motivated by the observation that most patients in reality do not suffer from a large number of diseases. Storing coordinates drastically reduces storage requirements compared to storing the entire multidimensional Bloom filter space;

- User-Specific Hash Functions with Key Control: Each user is assigned dedicated hash functions. The user retains the associated key, and only possession of this key allows generation and retrieval of that user’s Bloom filter. This transfers control of the data to the user via the key, significantly enhancing user privacy and security. Furthermore, since only coordinate values are stored on the blockchain, hackers who obtain these values cannot map them back to medical terms without the user’s key, which protects user privacy.
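A minimal sketch of the three improvements follows, assuming that each of the user’s three primes drives one polynomial hash of the kind defined in Section 5.4 and that each hash yields one axis of the coordinate. The class and method names, and the modulus used in the example below, are hypothetical.

```python
from typing import Set, Tuple

Coord = Tuple[int, int, int]


def poly_hash(s: str, p: int, modulus: int) -> int:
    """Polynomial hash of s with base p, reduced mod `modulus` (Horner's rule)."""
    h = 0
    for ch in s:
        h = (h * p + ord(ch)) % modulus
    return h


class CoordinateBloomFilter:
    """Hypothetical 3D Bloom filter that keeps only occupied coordinates.

    `primes` plays the role of the user's key k: one prime per axis, so only
    a holder of k can compute the coordinate of a given term.
    """

    def __init__(self, primes: Tuple[int, int, int], modulus: int) -> None:
        self.primes = primes
        self.modulus = modulus           # side length of the virtual 3D array
        self.coords: Set[Coord] = set()  # the only state that would go on-chain

    def coordinate(self, term: str) -> Coord:
        x, y, z = (poly_hash(term, p, self.modulus) for p in self.primes)
        return (x, y, z)

    def add(self, term: str) -> None:
        # Adding a term marks exactly one cell of the virtual M x M x M array.
        self.coords.add(self.coordinate(term))

    def might_contain(self, term: str) -> bool:
        # A hit means "possibly recorded": two terms may share one coordinate.
        return self.coordinate(term) in self.coords
```

For example, with `modulus=1024` a full 3D array would hold 1024^3 bits (128 MiB), whereas the sketch keeps one coordinate per recorded term; this is the sparsity argument behind storing coordinates on-chain.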

5.3. Operation of the Improved Bloom Filter in the BMIT System

- User Registration:
  - k-value generation: During registration, the user is assigned three large random prime numbers, which collectively serve as the user’s Unique Verification Parameter k. For computational efficiency, these primes are randomly selected from a pre-generated prime database rather than generated on the fly for each use;
  - Integrity Verification: To ensure the integrity and authenticity of the assigned k value throughout its lifecycle, a digital signature over k is generated with the user’s private key;
  - Verification Process: Whenever the validity of a user-provided k value needs to be confirmed (e.g., by a hospital or insurer), the verifier performs the following steps:
    - (a) Compute the hash value of the provided k;
    - (b) Verify this hash against the digital signature stored on the blockchain using the user’s public key;
    - (c) A successful verification confirms that the k value is correct and has not been tampered with.

- Adding Elements (Medical Term Creation):
  - When a medical term (e.g., a diagnosis) is generated for the user at a hospital, the user provides their k value to the hospital;
  - The hospital first verifies the authenticity of the provided k using the digital signature stored on the blockchain, as described under User Registration;
  - Upon successful verification, the hospital generates a new element for the user’s Bloom filter, represented as a three-dimensional coordinate;
  - Coordinate Generation: The coordinate is computed algorithmically from the user’s verified k value and the specific medical term being recorded;
  - Storage: The hospital then uploads the newly generated three-dimensional coordinate to the blockchain system, where it is stored as part of the user’s Bloom filter representation.

- Retrieving Elements (Insurance Application):
  - When a user applies for insurance with a company, they provide their k value to the insurer;
  - The insurer verifies the authenticity of the provided k using the digital signature stored on the blockchain, following the same process as the hospital;
  - Upon successful verification, the insurer generates a set of three-dimensional coordinates for the medical terms (e.g., pre-existing conditions; specific diagnoses) relevant to the policy being applied for;
  - Coordinate Generation: These query coordinates are computed algorithmically from the verified k and the specific medical terms the insurer needs to check;
  - Comparison and Decision:
    - (a) The insurer retrieves the user’s stored Bloom filter coordinates from the blockchain;
    - (b) The insurer compares the generated query coordinates against the retrieved coordinates (the user’s actual record);
    - (c) Based on the comparison results (e.g., presence or absence of matches for specific query coordinates), the insurer determines whether the user qualifies for the policy and under what terms. This confirms relevant medical history without accessing raw medical data.
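The insurer-side steps, from checking k to deciding on an application or claim, can be sketched as below. Several parts are assumptions rather than details from the text: SHA-256 stands in for the hash applied to k, signature verification is an injected hypothetical `verify` callable (SM2 in BMIT), k is encoded as comma-separated primes, and bf is parsed from a hypothetical `x,y,z;x,y,z` format. The query coordinates are taken as already computed from the verified k. The decision helpers encode the rules of Section 3.3: a purchase is rejected if any excluded condition matches, and a claim is approved if the claimed condition matches.

```python
import hashlib
from typing import Callable, Dict, Set, Tuple

Coord = Tuple[int, int, int]
# Hypothetical interface: verify(public_key, digest, signature) -> bool (SM2 in BMIT).
Verifier = Callable[[str, bytes, bytes], bool]


def k_digest(k: Tuple[int, int, int]) -> bytes:
    # Assumed canonical encoding of the three primes; SHA-256 is a stand-in hash.
    return hashlib.sha256(",".join(map(str, k)).encode()).digest()


def verify_k(k: Tuple[int, int, int], user_pk: str, stored_sig: bytes,
             verify: Verifier) -> bool:
    """Steps (a)-(c): hash the provided k and check it against the on-chain signature."""
    return verify(user_pk, k_digest(k), stored_sig)


def parse_bf(bf: str) -> Set[Coord]:
    """Parse a hypothetical 'x,y,z;x,y,z' serialization of the stored coordinates."""
    coords: Set[Coord] = set()
    for item in filter(None, bf.split(";")):
        x, y, z = map(int, item.split(","))
        coords.add((x, y, z))
    return coords


def matched_terms(query: Dict[str, Coord], bf: str) -> Set[str]:
    """Policy terms whose query coordinate appears in the user's stored bf."""
    stored = parse_bf(bf)  # set membership is O(1) on average
    return {term for term, coord in query.items() if coord in stored}


def approve_purchase(excluded_matches: Set[str]) -> bool:
    # Insurance purchase: reject if any excluded condition is present.
    return not excluded_matches


def approve_claim(condition: str, matches: Set[str]) -> bool:
    # Claims settlement: approve only if the claimed condition is present.
    return condition in matches
```

As with any Bloom filter, a match can in principle be a collision between distinct terms, which is why the collision rate measured in Section 7.1 matters for these decisions.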

5.4. The Hash Function of the Improved Bloom Filter in the BMIT System

- s is the input string;

- $s_i$ denotes the ASCII value of the i-th character in the string;

- p represents the chosen prime base;

- n is the string length;

- M is the modulus.
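The equation itself is not reproduced in this text. One common polynomial (rolling) hash consistent with the definitions above, writing $s_i$ for the ASCII value of the $i$-th character (0-indexed), is shown below with a small worked example. The exponent ordering in the authors’ equation may differ, and p = 31, M = 1000 are illustrative values only; in BMIT each of the three hash functions would use one of the user’s three primes as p.

```latex
\begin{aligned}
H(s) &= \left( \sum_{i=0}^{n-1} s_i \, p^{\,n-1-i} \right) \bmod M \\
H(\text{"ab"}) &= \left(97 \cdot 31^{1} + 98 \cdot 31^{0}\right) \bmod 1000 = 3105 \bmod 1000 = 105
\end{aligned}
```

Evaluating the sum with Horner’s rule, $h \leftarrow (h \cdot p + s_i) \bmod M$, gives the same result while keeping intermediate values small.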

6. Smart Contract Design

6.1. Registry Contract

6.2. Medical Information Data Contract

7. Simulation Results and Discussion

- Processor: Intel Core i7-14700HX 2.10 GHz;

- Memory: 32 GB RAM at 5600 MHz;

- Operating Systems: Windows 11 and Ubuntu 20.04 (dual-boot configuration);

- Bloom Filter Testing: Implemented in Java using IntelliJ IDEA 2021.1 and JDK 1.8.0;

- Smart Contracts: Authored in Solidity.

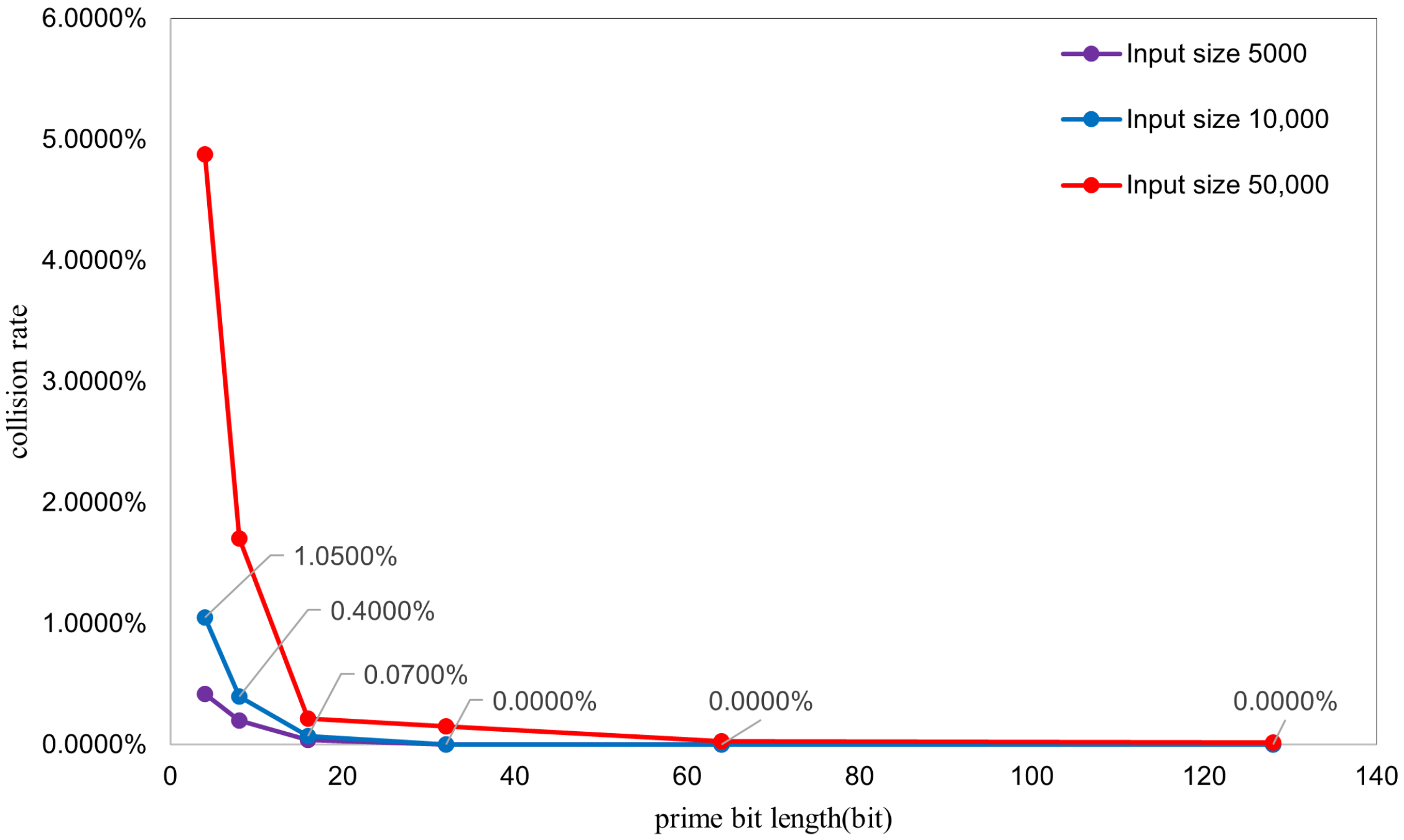

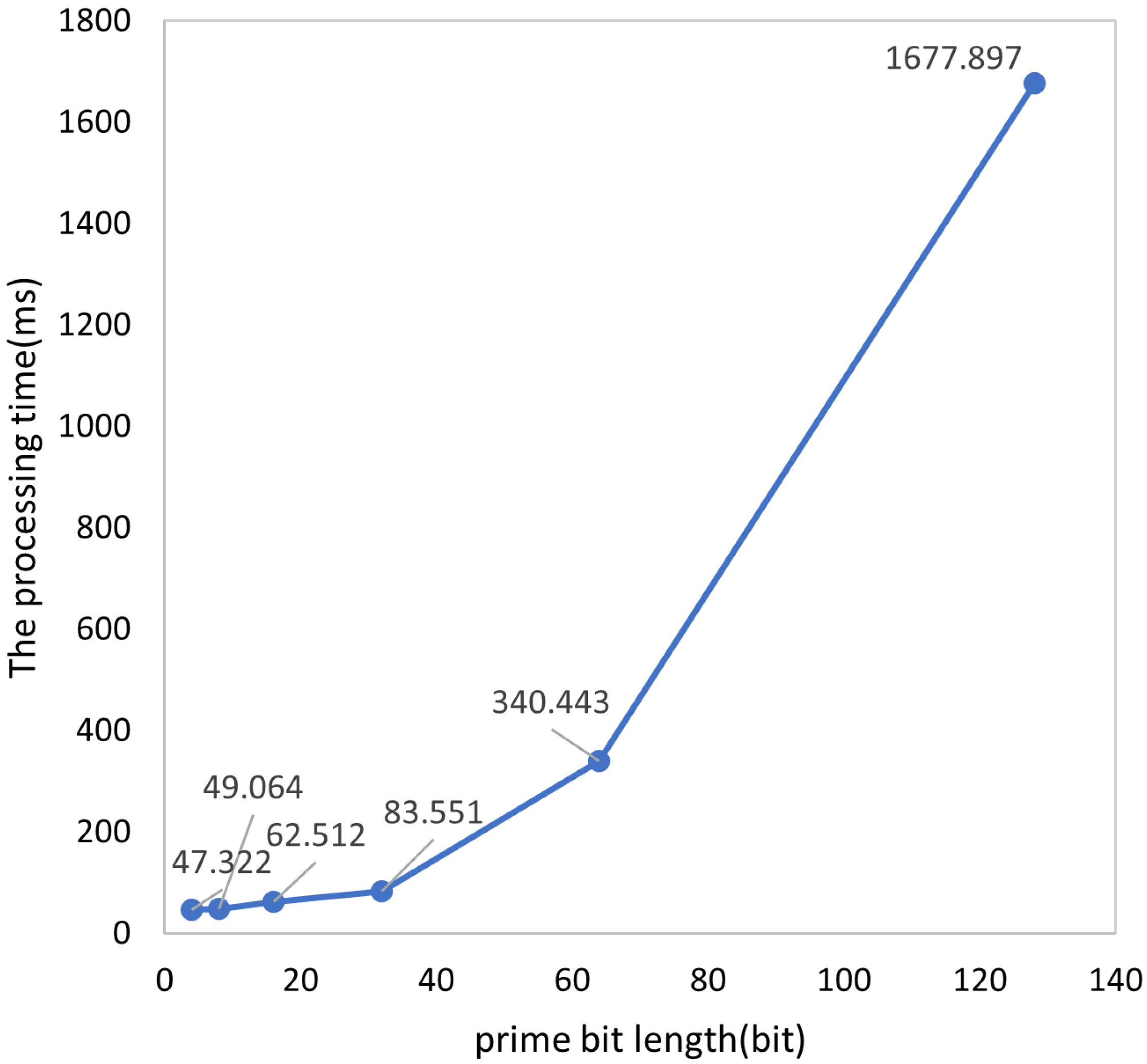

7.1. Performance Testing of the Enhanced Bloom Filter

- Collision Rate: Calculated as $R_c = N_c / N$, where $N_c$ is the number of collided strings and $N$ is the total number of input strings. If multiple strings map to the same coordinate, all except the first are counted as collided, so this rate reflects the proportion of strings that land on a coordinate already occupied by another string. It differs from the conventional hash collision probability (the likelihood that two random strings collide), as it measures collisions among all inputs hashed in a single run;

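- Average Processing Time: Derived as $\bar{T} = T_{\text{total}} / N$, i.e., the total processing time divided by the number of input strings.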

- Increased Collision Probability with Larger Inputs: As the input size increases, collision probability rises significantly. This aligns with the theoretical expectation for Bloom filters, where a fixed element space inevitably leads to higher collision probabilities as more elements are inserted.

- Decreased Collision Rate with Larger Prime Bit Lengths: Higher prime bit lengths correlate with reduced collision rates, because larger primes yield outputs closer to an ideal uniform distribution. The underlying mechanism involves polynomial calculations in an expansive integer space (determined by the prime p) before the modulo M operation. When larger primes are used:
  - Polynomial values occupy a broader numerical range before modulo reduction;
  - The larger base disperses hash values more widely across different strings;
  - Hash values cluster less, which lowers the collision probability.

Specifically, the three distinct hash functions use three separate large primes. Small primes increase collision susceptibility because of the following:

- Repetitive cyclic patterns inherent in polynomial computations with small primes;

- Weakened independence between hash functions.

Conversely, larger prime bit lengths enhance inter-function independence because of the following:

- The primes themselves exhibit greater mutual independence;

- They lack small common factors.
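The collision-rate definition in this section can be computed directly from the list of coordinates produced for a batch of inputs. A minimal sketch follows; `collision_rate` and `average_time_ms` are hypothetical helper names, and the average-time formula assumes total time divided by input count.

```python
from collections import Counter
from typing import Hashable, Sequence


def collision_rate(coords: Sequence[Hashable]) -> float:
    """Fraction of inputs that collided, as defined in Section 7.1.

    For every coordinate hit by c inputs, the first input is not counted and
    the remaining c - 1 are counted as collided.
    """
    if not coords:
        return 0.0
    counts = Counter(coords)
    collided = sum(c - 1 for c in counts.values())
    return collided / len(coords)


def average_time_ms(total_ms: float, n_inputs: int) -> float:
    """Average processing time per input: total elapsed time / number of inputs."""
    return total_ms / n_inputs
```

Because the first string on each coordinate is never counted, the collided count equals the number of inputs minus the number of distinct coordinates.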

7.2. Comparative Analysis with Conventional Bloom Filters

- Storage Efficiency: After optimization, the entire data structure no longer needs to be stored; only a series of spatial coordinates is kept. A single coordinate consists of three integers (12 bytes), so storing coordinates for n elements requires 12n bytes. Because the system stores medical-insurance-related entries (e.g., genetic diseases; infectious diseases) for individual patients, real-world data typically contain up to about 100 entries per patient. We therefore calculate the storage for 100 medical records, using the collision probability measured in the prior experiments. Since coordinates are stored as 13-character ASCII strings (1 byte per character), 100 records require 1300 bytes (1.27 KB). In practice, most patients have single-digit condition counts and few reach double digits, which reduces typical storage to about 100 bytes.

  For the conventional Bloom filter, the size can be derived from the standard false positive rate formula, $p \approx \left(1 - e^{-kn/m}\right)^{k}$, where p is the false positive rate, k the number of hash functions, n the number of elements, and m the bit array size. For a direct comparison with the enhanced Bloom filter, we construct a conventional Bloom filter with three hash functions (k = 3) and an ultra-low false positive rate matching the performance characteristics of the enhanced system. Solving the formula for m with n = 100 yields a required bit array size of m = 13,781 bits, i.e., a storage requirement of 1723 bytes. Because this filter maintains a complete representation of the data set, its storage footprint stays fixed at 1723 bytes regardless of actual operating conditions.

- Error Rates: Because the two filters operate on different principles, the enhanced filter cannot be compared directly with a conventional filter. To enable a valid comparison, both filters were constrained to the same storage allocation. When processing 10,000 inputs, the enhanced Bloom filter requires 12 KB for coordinate storage, so the conventional filter’s bit array was set to the same size, 12 KB (96,000 bits), for the performance simulation. The experimental results were as follows:

- Conventional filter’s false positive rate: ;

- Enhanced filter’s collision rate: .

- Element Insertion: For the enhanced Bloom filter, which stores a set of 3D coordinates, a new element is added by appending a fresh 3D coordinate to the existing set, in O(1) time. For the conventional Bloom filter, which maintains a complete bit array, insertion sets the corresponding k bit positions to 1, which is also O(1) for a fixed k.

- Query Performance: For the enhanced Bloom filter, a query searches the stored set of 3D coordinates. Although this set is stored as a string, if the time to convert it into a queryable data structure is excluded (both implementations require such a string-to-structure conversion), a hash-table lookup takes O(1) expected time. For the conventional Bloom filter, a query checks the corresponding bits in the bit array; again disregarding conversion overhead, this is also O(1).
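The storage figures in the Storage Efficiency comparison can be checked with a few lines of Python. This sketch assumes the standard approximation $p \approx (1 - e^{-kn/m})^k$ and the 13-characters-per-coordinate encoding stated in the text; the function names are hypothetical. Evaluated at k = 3, n = 100, m = 13,781, the approximation gives p of roughly $1.0 \times 10^{-5}$.

```python
import math


def conventional_fp_rate(m_bits: int, n: int, k: int) -> float:
    """Standard approximation p = (1 - e^(-k n / m))^k."""
    return (1.0 - math.exp(-k * n / m_bits)) ** k


def conventional_bits_for(p: float, n: int, k: int) -> int:
    """Invert the approximation for m, given a target false positive rate p."""
    return math.ceil(-k * n / math.log(1.0 - p ** (1.0 / k)))


def coordinate_storage_bytes(n_records: int, chars_per_coord: int = 13) -> int:
    """Coordinate storage as described: one 13-character ASCII string per record."""
    return n_records * chars_per_coord


# 13,781 bits -> ceil(13781 / 8) = 1723 bytes for the conventional filter,
# versus 100 * 13 = 1300 bytes (about 1.27 KB) for 100 stored coordinates.
```

The conventional size is fixed once m is chosen, whereas `coordinate_storage_bytes` grows only with the number of recorded terms.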

7.3. Smart Contract Testing

7.4. Main Computational Overhead of the BMIT System

- SM2 encryption/decryption targets the unique verification parameter k, so the simulated data size is set to 1 KB.

- SM2 signing/verification primarily handles request information, with a simulated data size of 10 KB.

- The time consumption of the optimized Bloom filter is taken from the previous section: a single operation costs 83.6 ms under extreme conditions.

- Three registration operations;

- One SM2 encryption;

- Two SM2 decryptions;

- Four SM2 signatures;

- Four SM2 signature verifications;

- Two optimized Bloom filter calculations.
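The listed operation counts can be combined with the per-operation timings in the SM2 and optimized Bloom filter timing table to see where the overall cost comes from. The following Python sketch performs that arithmetic. The dictionary keys are illustrative names. The three registration operations are left out because the table gives no per-registration time.

```python
# Per-operation timings (ms) from the SM2 / optimized Bloom filter timing table.
TIMINGS_MS = {
    "sm2_encrypt": 1.1,
    "sm2_decrypt": 1.5,
    "sm2_sign": 1.0,
    "sm2_verify": 1.1,
    "bloom_filter": 83.6,  # single calculation, extreme case
}

# Operation counts for one complete process, taken from the list above.
# Registration is omitted: the table gives no per-registration time.
PROCESS_COUNTS = {
    "sm2_encrypt": 1,
    "sm2_decrypt": 2,
    "sm2_sign": 4,
    "sm2_verify": 4,
    "bloom_filter": 2,
}


def process_overhead_ms(counts: dict, timings: dict) -> float:
    """Sum count * per-operation time over all listed operations."""
    return sum(n * timings[op] for op, n in counts.items())


if __name__ == "__main__":
    total = process_overhead_ms(PROCESS_COUNTS, TIMINGS_MS)
    bloom = PROCESS_COUNTS["bloom_filter"] * TIMINGS_MS["bloom_filter"]
    print(f"{total:.1f} ms excluding registration")  # 179.7 ms excluding registration
    print(f"{bloom / total:.0%} spent in the Bloom filter")  # 93% spent in the Bloom filter
```

The two optimized Bloom filter calculations account for 167.2 ms of the 179.7 ms, so the filter, not the SM2 primitives, dominates the cost. Compared with the overall figure of about 181.7 ms reported in the Introduction, this leaves roughly 2.0 ms for the three registration operations.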

8. Challenges in Practical Deployment

8.1. Interoperability Challenges

8.2. Regulatory Compliance Challenges

8.3. Implementation Cost Challenges

8.3.1. Cost Advantages of the FISCO BCOS Consortium Blockchain

- Open-source Features Reduce Upfront Investment: FISCO BCOS is an open-source consortium blockchain platform whose core code is freely available. The project therefore pays no third-party software licensing fees, which directly lowers its upfront technology adoption costs. The platform also has an active open-source developer community. When the BMIT system encounters technical problems (e.g., smart contract vulnerabilities or node communication failures), community resources can help resolve them quickly, reducing the trial-and-error and time costs of research and development.

- High-performance Design Reduces Resource Consumption: FISCO BCOS improves transaction throughput (TPS) and processing efficiency by optimizing its consensus mechanism (e.g., parallel processing in PBFT consensus) and its transaction pipeline (e.g., pre-execution). This allows it to handle peak volumes of reimbursement applications in medical insurance scenarios. High performance also reduces reliance on hardware resources. For example, 100,000 medical insurance transactions per day correspond to an average of only about 1.2 transactions per second, far below the 736 TPS measured in the four-node test. Frequent server upgrades are therefore unnecessary, which indirectly lowers hardware procurement, operation, and maintenance costs.

8.3.2. Cost Pressures in Practical Deployment

8.3.3. Balance Between Costs and Benefits

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| BMIT | Blockchain-Based Medical Insurance Transaction |
| NS3 | Network Simulator 3 |
| IoT | Internet of Things |
| pb | Public key address |
| pr | Private key |
| bf | Bloom filter string |
|  | The patient’s public key address |
|  | The patient’s private key |
|  | The patient’s digital signature |
|  | The hospital’s public key address |
|  | The hospital’s private key |
|  | The hospital’s digital signature |
|  | The insurance company’s public key address |
|  | The insurance company’s private key |
|  | The insurance company’s digital signature |
| t | Timestamp |
|  | The ciphertext of a user’s unique verification parameters |

References

- Elangovan, D.; Long, C.S.; Bakrin, F.S.; Tan, C.S.; Goh, K.W.; Yeoh, S.F.; Loy, M.J.; Hussain, Z.; Lee, K.S.; Idris, A.C.; et al. The use of blockchain technology in the health care sector: Systematic review. JMIR Med. Inform. 2022, 10, e17278.

- Tomar, K.; Sharma, S. A proposed artificial intelligence and blockchain technique for solving health insurance challenges. In Data-Driven Technologies and Artificial Intelligence in Supply Chain; CRC Press: Boca Raton, FL, USA, 2023; pp. 31–57.

- Najar, A.V.; Alizamani, L.; Zarqi, M.; Hooshmand, E. A global scoping review on the patterns of medical fraud and abuse: Integrating data-driven detection, prevention, and legal responses. Arch. Public Health 2025, 83, 43.

- Tiwari, A. Blockchain technology for health insurance. In Sensor Networks for Smart Hospitals; Elsevier: Amsterdam, The Netherlands, 2025; pp. 389–410.

- Radanović, I.; Likić, R. Opportunities for use of blockchain technology in medicine. Appl. Health Econ. Health Policy 2018, 16, 583–590.

- Kar, A.K.; Navin, L. Diffusion of blockchain in insurance industry: An analysis through the review of academic and trade literature. Telemat. Inform. 2021, 58, 101532.

- Rahimi, N.; Gudapati, S.S.V. Emergence of blockchain technology in the healthcare and insurance industries. In Blockchain Technology Solutions for the Security of IoT-Based Healthcare Systems; Elsevier: Amsterdam, The Netherlands, 2023; pp. 167–182.

- Antwi, M.; Adnane, A.; Ahmad, F.; Hussain, R.; ur Rehman, M.H.; Kerrache, C.A. The case of HyperLedger Fabric as a blockchain solution for healthcare applications. Blockchain Res. Appl. 2021, 2, 100012.

- Ismail, L.; Zeadally, S. Healthcare insurance frauds: Taxonomy and blockchain-based detection framework (Block-HI). IT Prof. 2021, 23, 36–43.

- Liu, W.; Yu, Q.; Li, Z.; Li, Z.; Su, Y.; Zhou, J. A blockchain-based system for anti-fraud of healthcare insurance. In Proceedings of the 2019 IEEE 5th International Conference on Computer and Communications (ICCC), Chengdu, China, 6–9 December 2019; pp. 1264–1268.

- Saldamli, G.; Reddy, V.; Bojja, K.S.; Gururaja, M.K.; Doddaveerappa, Y.; Tawalbeh, L. Health care insurance fraud detection using blockchain. In Proceedings of the 2020 Seventh International Conference on Software Defined Systems (SDS), Paris, France, 30 June–3 July 2020; pp. 145–152.

- Mishra, A.S. Study on blockchain-based healthcare insurance claim system. In Proceedings of the 2021 Asian Conference on Innovation in Technology (ASIANCON), Pune, India, 27–29 August 2021; pp. 1–4.

- Zheng, H.; You, L.; Hu, G. A novel insurance claim blockchain scheme based on zero-knowledge proof technology. Comput. Commun. 2022, 195, 207–216.

- Zhou, L.; Wang, L.; Sun, Y. MIStore: A blockchain-based medical insurance storage system. J. Med. Syst. 2018, 42, 149.

- Al Amin, M.; Shah, R.; Tummala, H.; Ray, I. Utilizing Blockchain and Smart Contracts for Enhanced Fraud Prevention and Minimization in Health Insurance through Multi-Signature Claim Processing. In Proceedings of the 2024 International Conference on Emerging Trends in Networks and Computer Communications (ETNCC), Windhoek, Namibia, 23–25 July 2024; pp. 1–9.

- Purswani, P. Blockchain-based parametric health insurance. In Proceedings of the 2021 IEEE Symposium on Industrial Electronics & Applications (ISIEA), Langkawi Island, Malaysia, 10–11 July 2021; pp. 1–5.

- Alnuaimi, A.; Alshehhi, A.; Salah, K.; Jayaraman, R.; Omar, I.A.; Battah, A. Blockchain-based processing of health insurance claims for prescription drugs. IEEE Access 2022, 10, 118093–118107.

- Namitha, T.S.; Rai, B.K.; Pallavi, P.; Pavana, R.; Pooja, M. Blockchain Based Health Insurance System. In Proceedings of the 2025 3rd International Conference on Disruptive Technologies (ICDT), Greater Noida, India, 7–8 March 2025; pp. 1216–1221.

- Liang, X.; Zhao, J.; Shetty, S.; Liu, J.; Li, D. Integrating blockchain for data sharing and collaboration in mobile healthcare applications. In Proceedings of the 2017 IEEE 28th Annual International Symposium on Personal, Indoor, and Mobile Radio Communications (PIMRC), Montreal, QC, Canada, 8–13 October 2017; pp. 1–5.

- Raza, A.A.; Arora, B.; Irfan, M.T. Securing Health Insurance Claims with Decentralization and Transparency: A blockchain-based Approach. Procedia Comput. Sci. 2025, 259, 1918–1926.

- Kapadiya, K.; Ramoliya, F.; Gohil, K.; Patel, U.; Gupta, R.; Tanwar, S.; Rodrigues, J.J.; Alqahtani, F.; Tolba, A. Blockchain-assisted healthcare insurance fraud detection framework using ensemble learning. Comput. Electr. Eng. 2025, 122, 109898.

- Joginipalli, S.K. Leveraging blockchain for secure and transparent insurance claim processing. J. Comput. Anal. Appl. 2022, 30, 12.

- Alnavar, K.; Babu, C.N. Blockchain-based smart contract with machine learning for insurance claim verification. In Proceedings of the 2021 5th International Conference on Electrical, Electronics, Communication, Computer Technologies and Optimization Techniques (ICEECCOT), Mysuru, India, 10–11 December 2021; pp. 247–252.

- El-Samad, W.; Atieh, M.; Adda, M. Transforming health insurance claims adjudication with blockchain-based solutions. Procedia Comput. Sci. 2023, 224, 147–154.

- Kapadiya, K.; Patel, U.; Gupta, R.; Alshehri, M.D.; Tanwar, S.; Sharma, G.; Bokoro, P.N. Blockchain and AI-empowered healthcare insurance fraud detection: An analysis, architecture, and future prospects. IEEE Access 2022, 10, 79606–79627.

- Elhence, A.; Goyal, A.; Chamola, V.; Sikdar, B. A blockchain and ML-based framework for fast and cost-effective health insurance industry operations. IEEE Trans. Comput. Soc. Syst. 2022, 10, 1642–1653.

- Alhasan, B.; Qatawneh, M.; Almobaideen, W. Blockchain technology for preventing counterfeit in health insurance. In Proceedings of the 2021 International Conference on Information Technology (ICIT), Amman, Jordan, 14–15 July 2021; pp. 935–941.

- Guerar, M.; Migliardi, M.; Russo, E.; Khadraoui, D.; Merlo, A. SSI-MedRx: A fraud-resilient healthcare system based on blockchain and SSI. Blockchain Res. Appl. 2025, 6, 100242.

- Chouhan, G.K.; Singh, S.; Jaduvansy, A.; Rai, P.; Rath, A. A Blockchain Based Decentralized Identifiers for Entity Authentication in Electronic Health Records. In Proceedings of the 2025 International Conference on Intelligent and Cloud Computing (ICoICC), Bhubaneswar, India, 2–3 May 2025; pp. 1–6. Available online: https://ieeexplore.ieee.org/abstract/document/11052007 (accessed on 14 October 2025).

| Methods | Key Outcomes | Limitations |
|---|---|---|
| Directly storing all medical data a | Supports storage of diverse unstructured data; blockchain ensures immutability | Excessive on-chain data storage; storing plaintext cannot protect user privacy |
| Introduce cutting-edge cryptographic technologies b | zk-SNARK, Homomorphic Encryption, Schnorr Protocol, and Three-Phase Multi-Signature Claim Process | Significant progress in privacy protection, but excessive on-chain data remains an issue |
| Introduce IPFS c | Integrates with IPFS for collaborative on-chain and off-chain storage, reducing the burden on the blockchain | Solves the problem of excessive on-chain data, but IPFS cannot guarantee data persistence |
| Introduce AI algorithm models d | Improves fraud detection accuracy through ensemble learning, machine learning, etc. | Highly dependent on datasets; mainly provides fraud identification and does not leverage the advantages of blockchain |
| Adapt the consensus mechanism to medical insurance e | Proposes a consortium blockchain consensus algorithm based on FIFO and result length | Relies on pre-selected verification nodes, which risks node collusion; its lightweight design does not clarify how to balance decentralization and security |
| Introduce distributed digital identity f | Decentralized Identifiers (DIDs), SSI-MedRx System | DIDs enable permission control, but the approach lacks encryption to protect privacy and does not process on-chain data |

| Metric | Enhanced Filter | Conventional Filter |
|---|---|---|
| Storage Consumption (n = 100) | 1.27 KB | 1.68 KB |
| False Positive Rate (n = 10,000) |  |  |
| Insertion Complexity | O(1) | O(1) |
| Query Complexity | O(1) | O(1) |

| Component | Operation | Time Consumption |
|---|---|---|
| SM2 Cryptographic Module | Key Generation | 0.8 ms |
|  | Encryption | 1.1 ms |
|  | Decryption | 1.5 ms |
|  | Signing | 1.0 ms |
|  | Verification | 1.1 ms |
| Optimized Bloom Filter | Single Calculation (extreme case) | 83.6 ms |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fei, J.; Ling, L. BMIT: A Blockchain-Based Medical Insurance Transaction System. Appl. Sci. 2025, 15, 11143. https://doi.org/10.3390/app152011143
