The Influence of Information Redundancy on Driving Behavior and Psychological Responses Under Different Fog and Risk Conditions: An Analysis of AR-HUD Interface Designs

Abstract

1. Introduction

2. Literature Review and Hypotheses

2.1. The Design of AR-HUD Warnings

2.2. Risky Driving

2.3. Information Redundancy

3. Materials and Methods

3.1. Participants

3.2. Apparatus

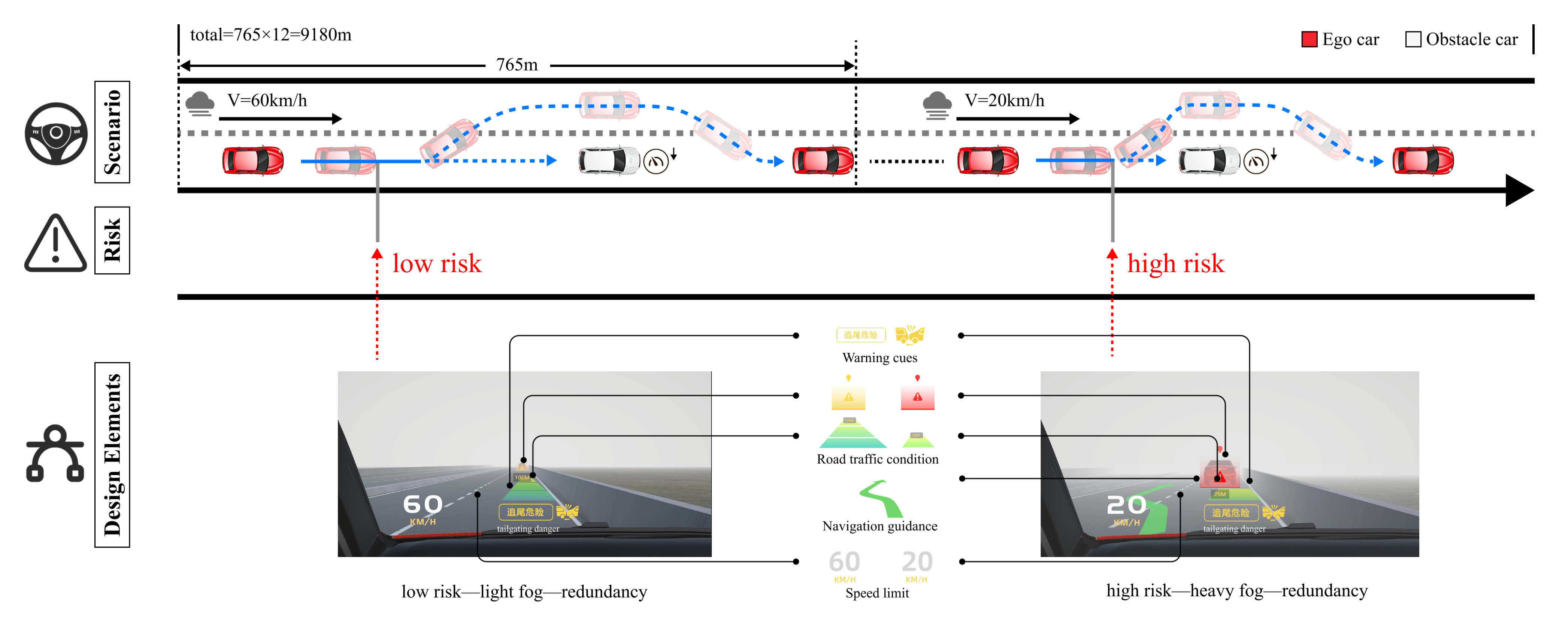

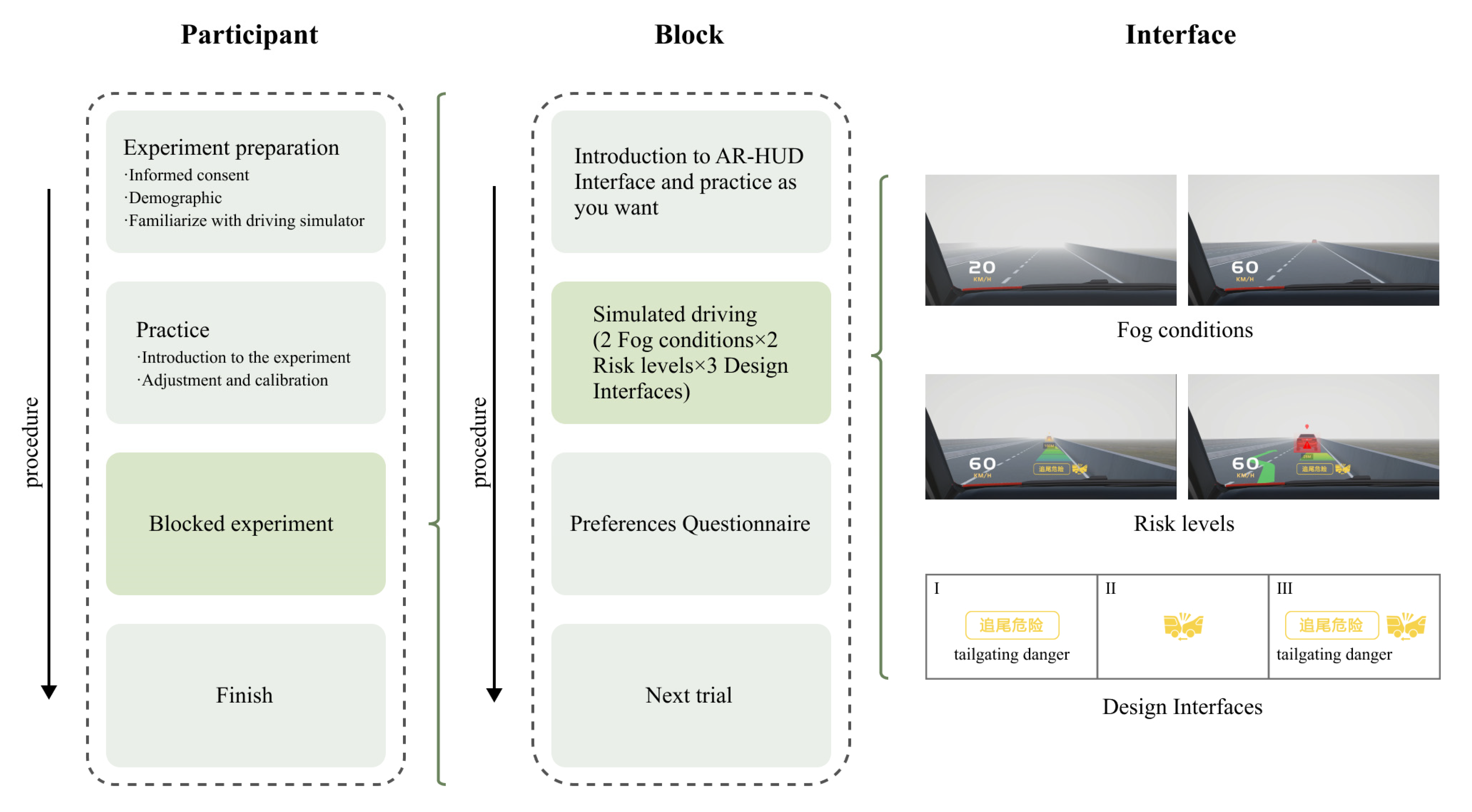

3.3. Experimental Variables and Procedure

3.3.1. Experimental Procedure

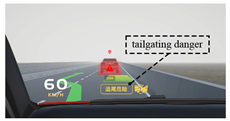

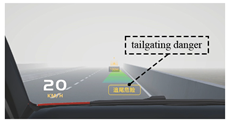

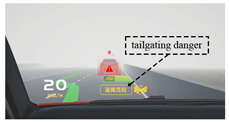

3.3.2. AR-HUD Interface Design

- Condition 1: Light fog with low risk

- Condition 2: Light fog with high risk

- Condition 3: Heavy fog with low risk

- Condition 4: Heavy fog with high risk
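The four conditions cross two fog levels with two risk levels, and each condition is shown with the three interface variants in the interface table at the end of the document, giving 12 cells in total. The minimal Python sketch below enumerates that design. The constant and function names are hypothetical, and this is not the authors' code.

```python
from itertools import product

# Factor levels taken from the condition list and the interface table.
# The names below are illustrative, not the authors' code.
FOG_LEVELS = ("Light", "Heavy")
RISK_LEVELS = ("Low", "High")
INTERFACES = ("Words only", "Symbols only", "Words + Symbols")


def build_design():
    """Return every fog x risk x interface cell as a dict."""
    return [
        {"fog": fog, "risk": risk, "interface": interface}
        for fog, risk, interface in product(FOG_LEVELS, RISK_LEVELS, INTERFACES)
    ]


if __name__ == "__main__":
    design = build_design()
    print(len(design))  # 2 x 2 x 3 cells
    for cell in design[:3]:
        print(cell)
```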

4. Results

4.1. Driving Performance

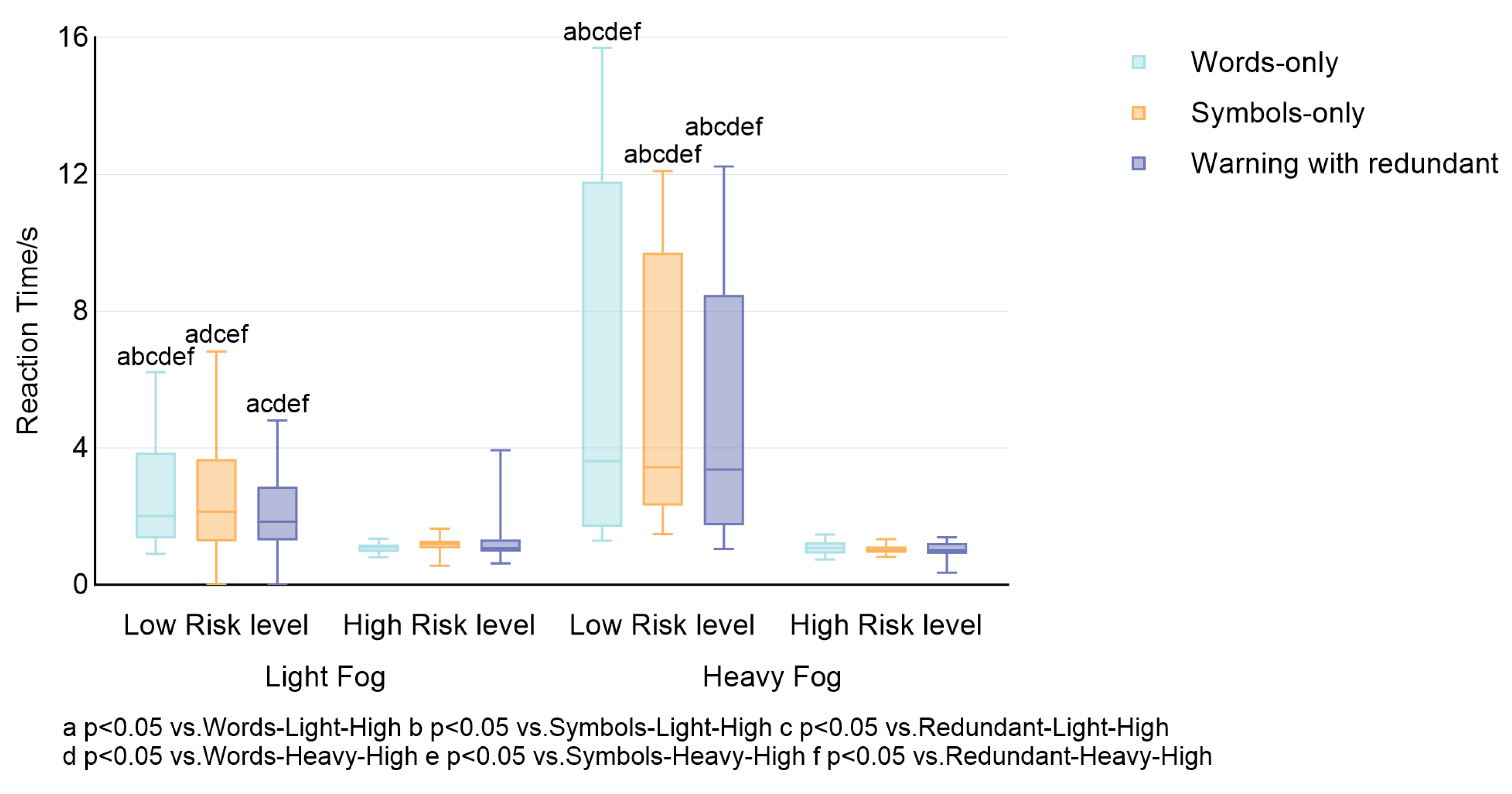

4.1.1. Reaction Time

| Interfaces | Fog | Risk Levels | Median (s) | Z | H | p |
|---|---|---|---|---|---|---|
| Words only | Light | Low | 2.020 | 2.758 | 115.923 | < 0.001 |
| | | High | 1.110 | −4.086 | | |
| | Heavy | Low | 3.615 | 5.555 | 160.442 | < 0.001 |
| | | High | 1.075 | −3.917 | | |
| Symbols only | Light | Low | 2.140 | 1.965 | 72.808 | 0.238 |
| | | High | 1.190 | −2.333 | | |
| | Heavy | Low | 3.410 | 5.261 | 173.096 | < 0.001 |
| | | High | 1.010 | −4.958 | | |
| Words + Symbols | Light | Low | 1.910 | 2.019 | 93.077 | 0.013 |
| | | High | 1.075 | −3.476 | | |
| | Heavy | Low | 4.545 | 5.633 | 170.308 | < 0.001 |
| | | High | 1.015 | −4.421 | | |
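Statistics labelled H are conventionally Kruskal-Wallis statistics. Assuming that is the rank-based test used here (the section does not say), the minimal pure-Python sketch below computes a tie-corrected Kruskal-Wallis H. The function names and the demo data are hypothetical. A p-value would come from the chi-square distribution with k − 1 degrees of freedom (for example `scipy.stats.chi2.sf(h, k - 1)`); that step is left out to keep the block standard-library only.

```python
from collections import Counter, defaultdict


def average_ranks(values):
    """Rank values from 1 to N, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # 0-based positions i..j -> 1-based mean rank
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis(groups):
    """Tie-corrected Kruskal-Wallis H for a dict mapping group label -> values."""
    labels, pooled = [], []
    for label, vals in groups.items():
        labels.extend([label] * len(vals))
        pooled.extend(vals)
    n = len(pooled)
    rank_sums = defaultdict(float)
    for label, r in zip(labels, average_ranks(pooled)):
        rank_sums[label] += r
    h = 12 / (n * (n + 1)) * sum(
        rank_sums[g] ** 2 / len(vals) for g, vals in groups.items()
    ) - 3 * (n + 1)
    # Divide by the tie correction 1 - sum(t^3 - t) / (n^3 - n);
    # this is undefined (division by zero) if every observation is tied.
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    return h / (1 - ties / (n ** 3 - n))


if __name__ == "__main__":
    # Hypothetical reaction times (s) for three cells; not the study's data.
    demo = {
        "Words only / Light / Low": [2.1, 1.9, 2.4, 2.0],
        "Words only / Light / High": [1.1, 1.2, 1.0, 1.3],
        "Words only / Heavy / Low": [3.6, 3.2, 3.9, 3.5],
    }
    print(round(kruskal_wallis(demo), 3))
```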

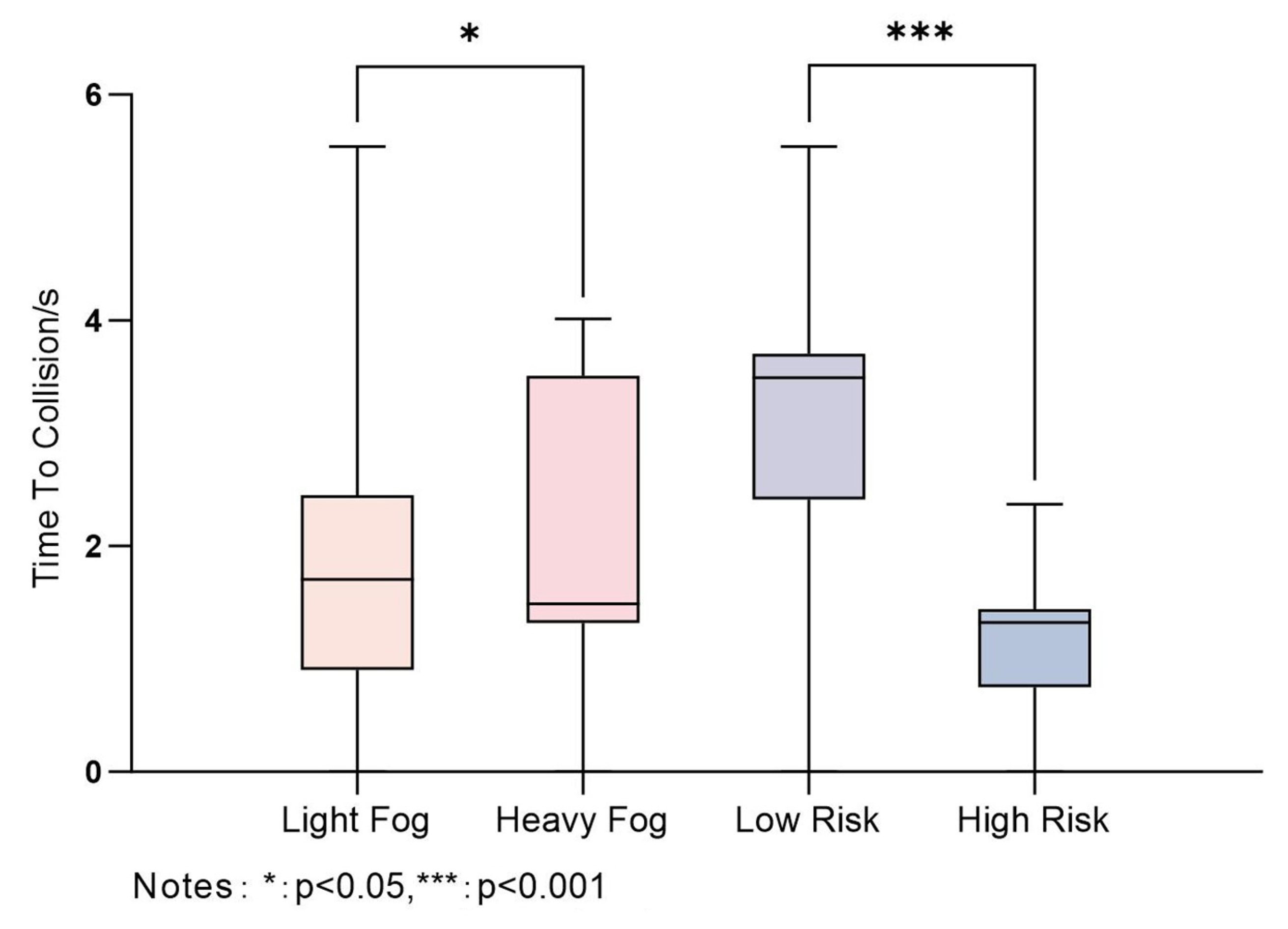

4.1.2. Time to Collision

| Independent Variable | Levels | Median (s) | Z | p | η² |
|---|---|---|---|---|---|
| Fog | Light | 1.705 (0.905, 2.447) | −2.074 | 0.038 | 0.017 |
| | Heavy | 1.490 (1.320, 3.510) | | | |
| Risk levels | Low | 3.490 (2.412, 3.702) | −12.167 | < 0.001 | 0.506 |
| | High | 1.325 (0.752, 1.440) | | | |
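This table compares the two levels of each factor and reports Z, p, and an effect size, but the section does not name the test or the effect-size formula. One common choice for two independent samples is the Mann-Whitney U test with a normal approximation, and a widely used effect size is r = Z/√N, whose square Z²/N is sometimes reported in an η²-like role. The sketch below assumes both. It is not guaranteed to reproduce the reported η² values, and if the two levels were measured within the same participants, a paired test such as the Wilcoxon signed-rank test would apply instead. Function names and demo data are hypothetical.

```python
import math
from collections import Counter


def average_ranks(values):
    """Rank values from 1 to N, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def mann_whitney(x, y):
    """Mann-Whitney U with a tie-corrected normal approximation (no continuity correction).

    Returns (U, Z, two-sided p, Z**2 / N). U is the smaller of U1 and U2,
    so Z is never positive.
    """
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    var_u = n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1)))
    z = (u - n1 * n2 / 2) / math.sqrt(var_u)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return u, z, p, z ** 2 / n


if __name__ == "__main__":
    # Hypothetical time-to-collision values (s); not the study's data.
    low_risk = [3.4, 3.6, 2.9, 3.7, 3.1]
    high_risk = [1.2, 1.4, 0.9, 1.3, 1.5]
    u, z, p, effect = mann_whitney(low_risk, high_risk)
    print(u, round(z, 3), round(p, 4), round(effect, 3))
```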

| Interfaces | Fog | Risk Levels | Median (s) | Z | H | p |
|---|---|---|---|---|---|---|
| Words only | Light | Low | 2.515 | 3.044 | 96.192 | 0.008 |
| | | High | 1.455 | −2.635 | | |
| | Heavy | Low | 3.515 | 4.598 | 149.558 | < 0.001 |
| | | High | 1.320 | −4.231 | | |
| Symbols only | Light | Low | 2.440 | 1.497 | 95.615 | 0.009 |
| | | High | 0.981 | −4.147 | | |
| | Heavy | Low | 3.510 | 5.621 | 157.25 | < 0.001 |
| | | High | 1.335 | −3.663 | | |
| Words + Symbols | Light | Low | 2.445 | 1.423 | 73.788 | 0.210 |
| | | High | 1.355 | −2.934 | | |
| | Heavy | Low | 3.510 | 5.825 | 173.173 | < 0.001 |
| | | High | 1.265 | −4.398 | | |
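In both interface tables, each H and p value is reported once per low-risk/high-risk pair, which is how pairwise post-hoc comparisons are often laid out. One standard post-hoc procedure after a Kruskal-Wallis test is Dunn's test; the sketch below assumes Dunn's test with a Bonferroni adjustment, neither of which the section confirms. With 12 fog × risk × interface cells, comparing every pair would mean 12 × 11 / 2 = 66 comparisons. The function name and demo data are hypothetical.

```python
import math
from collections import Counter


def average_ranks(values):
    """Rank values from 1 to N, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def dunn_pair(groups, a, b, n_comparisons):
    """Dunn's test for groups a and b, ranking all groups jointly.

    Returns (mean-rank difference, z, Bonferroni-adjusted two-sided p).
    """
    labels, pooled = [], []
    for label, vals in groups.items():
        labels.extend([label] * len(vals))
        pooled.extend(vals)
    n = len(pooled)
    ranks = average_ranks(pooled)

    def mean_rank(g):
        rs = [r for lab, r in zip(labels, ranks) if lab == g]
        return sum(rs) / len(rs)

    # Standard error of the mean-rank difference, with tie adjustment.
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    se = math.sqrt(
        (n * (n + 1) / 12 - ties / (12 * (n - 1)))
        * (1 / len(groups[a]) + 1 / len(groups[b]))
    )
    diff = mean_rank(a) - mean_rank(b)
    z = diff / se
    p = math.erfc(abs(z) / math.sqrt(2))
    return diff, z, min(1.0, p * n_comparisons)


if __name__ == "__main__":
    # Hypothetical TTC values (s) for three cells; not the study's data.
    demo = {
        "Low": [3.5, 3.4, 3.6, 3.2],
        "High": [1.3, 1.4, 1.2, 1.5],
        "Other": [2.4, 2.5, 2.2, 2.6],
    }
    print(dunn_pair(demo, "Low", "High", n_comparisons=3))
```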

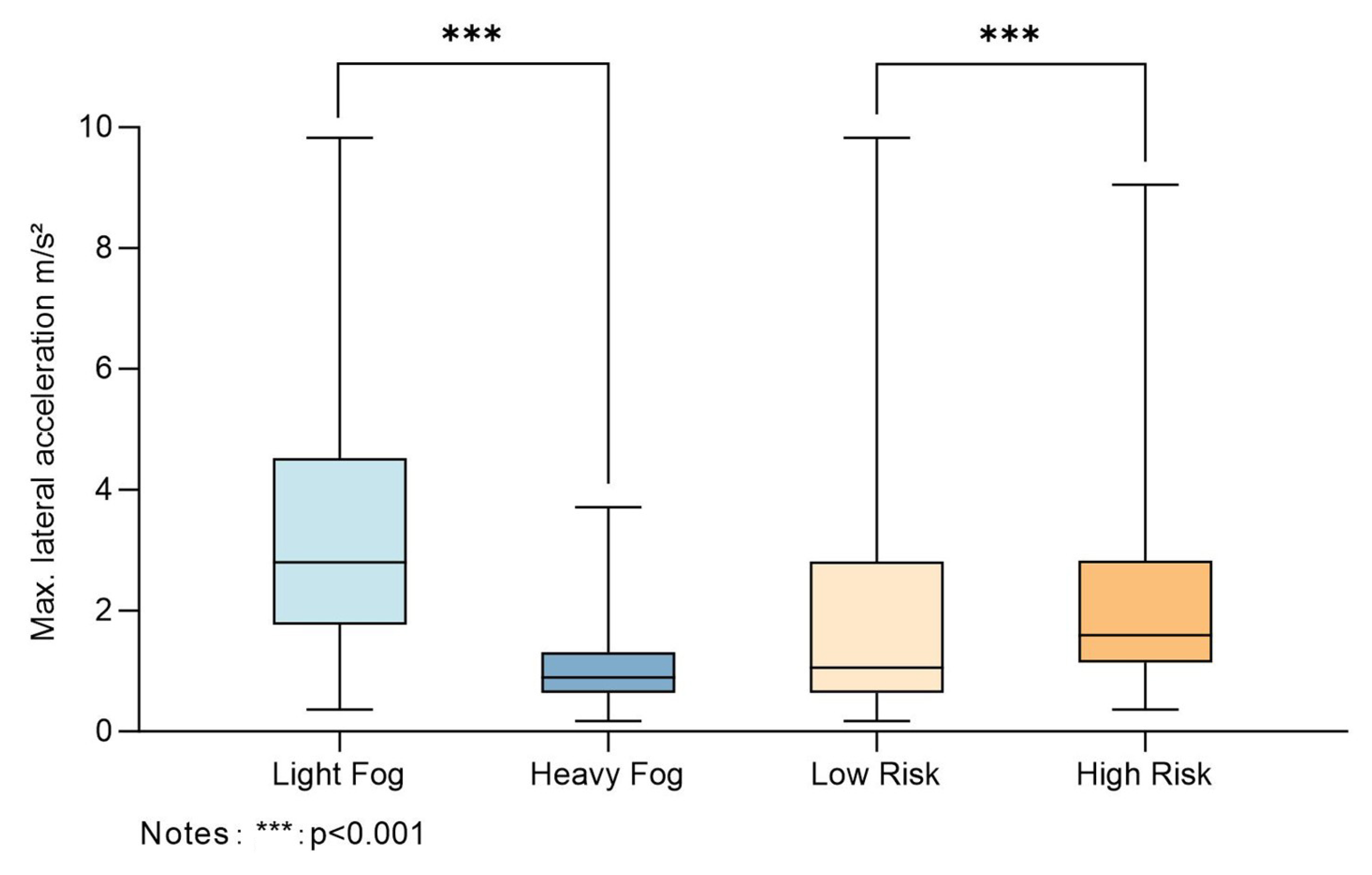

4.1.3. Maximum Lateral Acceleration

| Independent Variable | Levels | Median (m/s²) | Z | p | η² |
|---|---|---|---|---|---|
| Fog | Light | 2.800 (1.770, 4.517) | −12.589 | < 0.001 | 0.363 |
| | Heavy | 0.890 (0.640, 1.307) | | | |
| Risk levels | Low | 1.055 (0.640, 2.810) | −4.226 | < 0.001 | 0.014 |
| | High | 1.590 (1.142, 2.827) | | | |
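The medians in this table and in the time-to-collision table are followed by two bracketed values. Assuming these are the first and third quartiles (the tables do not label them), the small helper below produces the same "median (Q1, Q3)" format. Quartile definitions vary between statistics packages; `method="exclusive"` is the default of Python's `statistics.quantiles` and is only one option. The helper name, the quartile method, and the demo data are all assumptions.

```python
import statistics


def median_iqr(values, method="exclusive"):
    """Format a sample as 'median (Q1, Q3)' with three decimals."""
    q1, _, q3 = statistics.quantiles(values, n=4, method=method)
    median = statistics.median(values)
    return f"{median:.3f} ({q1:.3f}, {q3:.3f})"


if __name__ == "__main__":
    # Hypothetical maximum lateral accelerations (m/s^2); not the study's data.
    light_fog = [1.6, 2.1, 2.8, 3.3, 4.4, 4.9, 2.5]
    print(median_iqr(light_fog))
```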

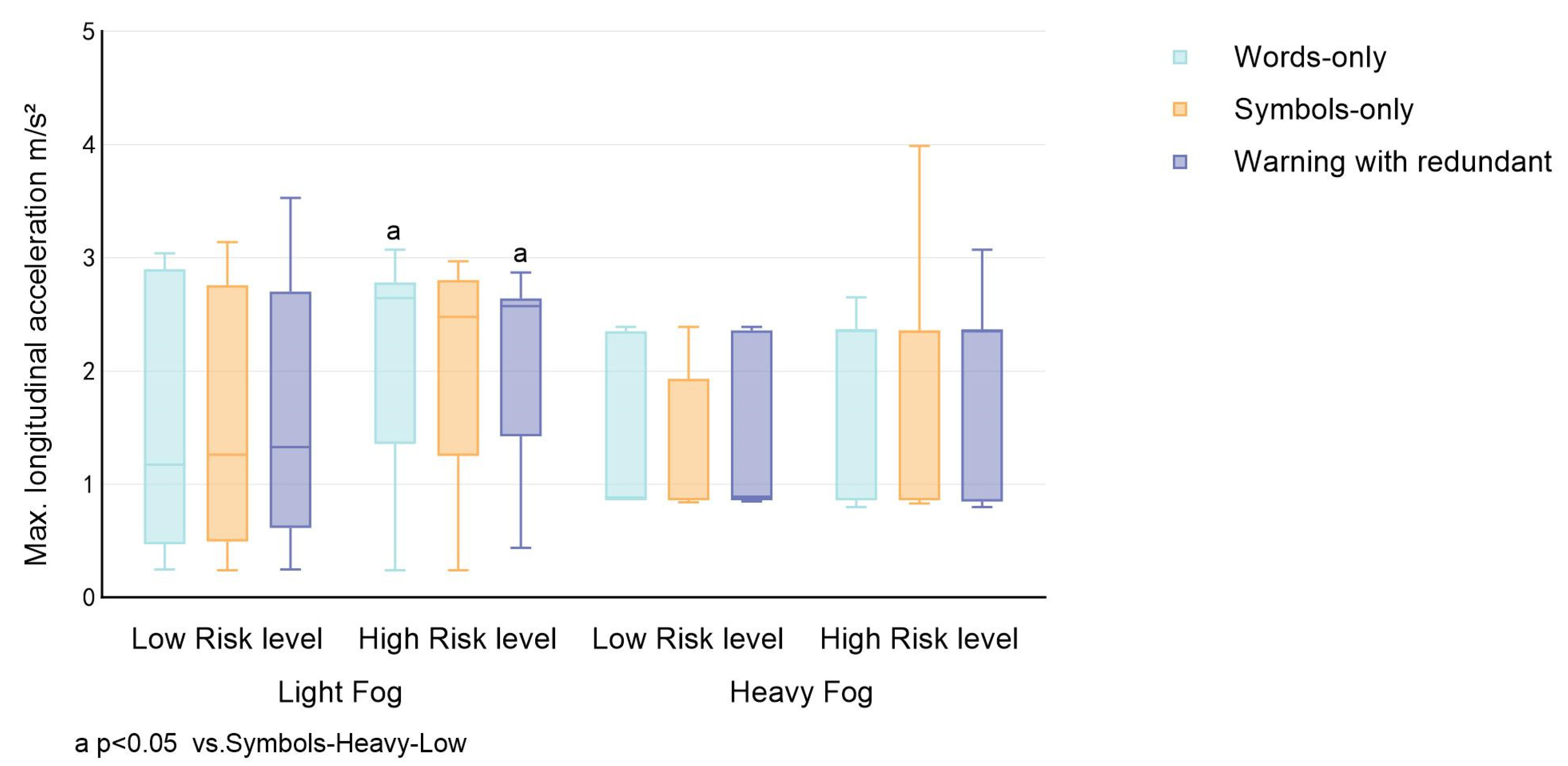

4.1.4. Maximum Longitudinal Acceleration

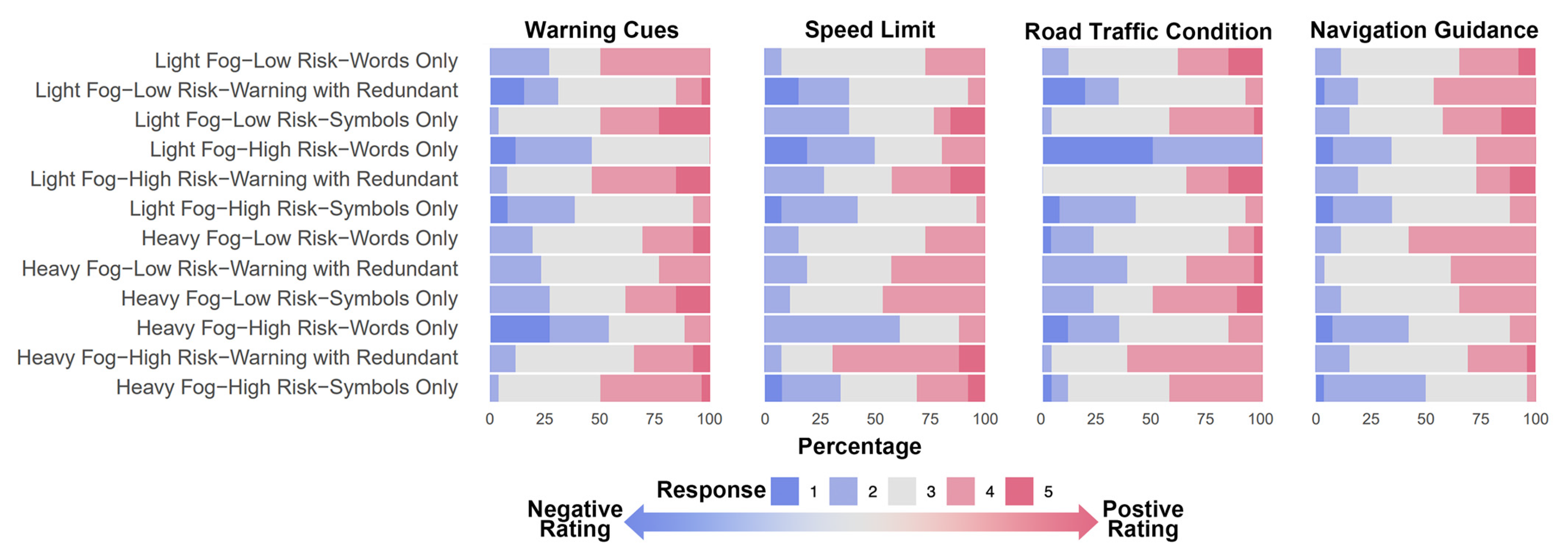

4.2. User Preferences

4.2.1. Usability

| Sources of Variation | F Value | η² |
|---|---|---|
| Risk Levels (RL) | 0.769 | 0.003 |
| Fog Conditions (FC) | 3.427 | 0.011 |
| AR-HUD Interfaces (AR) | 12.306 *** | 0.076 |
| RL × FC | 0.009 | 0.000 |
| RL × AR | 10.181 *** | 0.064 |
| FC × AR | 1.172 | 0.008 |
| RL × FC × AR | 3.248 * | 0.021 |
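The table reports F values and η² but no degrees of freedom. If the η² column is partial η² (an assumption), each value can be recovered from its F statistic as partial η² = F × df_effect / (F × df_effect + df_error). With three interfaces, the AR effect has df_effect = 2; the error df used below is hypothetical and chosen only for illustration. For example, F = 12.306 with df = (2, 300) gives about 0.076.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


if __name__ == "__main__":
    # df_error = 300 is a hypothetical value, not reported in the source.
    print(round(partial_eta_squared(12.306, 2, 300), 3))  # AR main effect
    print(round(partial_eta_squared(3.427, 1, 300), 3))   # FC main effect
```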

4.2.2. Personal Preference

5. Discussion

5.1. Summary of Main Findings

5.2. Limitations and Future Research Directions

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Indicators | Questions |
|---|---|
| Usability | |
| | |
| | |
| | |
| | |
| | |
| | |
| | |
| | |
| | |
| Preference | |
| | |
| | |
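The usability block of this questionnaire appears to hold ten items. If those are the ten standard System Usability Scale (SUS) items, which the table as extracted cannot confirm, each participant's responses would be scored as in the sketch below: odd (positively worded) items contribute response − 1, even (negatively worded) items contribute 5 − response, and the sum is multiplied by 2.5 to give a score from 0 to 100. The function name is hypothetical.

```python
def sus_score(responses):
    """Standard SUS score (0 to 100) from ten 1-5 Likert responses, item 1 first."""
    if len(responses) != 10:
        raise ValueError("SUS expects exactly ten item responses")
    if any(not 1 <= r <= 5 for r in responses):
        raise ValueError("responses must be on a 1-5 scale")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5


if __name__ == "__main__":
    # Hypothetical responses from one participant; not the study's data.
    print(sus_score([4, 2, 5, 1, 4, 2, 4, 2, 5, 1]))
```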

| Condition/Interface | Words Only | Symbols Only | Redundant Warnings (Words + Symbols) |
|---|---|---|---|
| Light fog with low risk | | | |
| Light fog with high risk | | | |
| Heavy fog with low risk | | | |
| Heavy fog with high risk | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, J.; Chen, K.; Chen, M. The Influence of Information Redundancy on Driving Behavior and Psychological Responses Under Different Fog and Risk Conditions: An Analysis of AR-HUD Interface Designs. Appl. Sci. 2025, 15, 11072. https://doi.org/10.3390/app152011072

Li J, Chen K, Chen M. The Influence of Information Redundancy on Driving Behavior and Psychological Responses Under Different Fog and Risk Conditions: An Analysis of AR-HUD Interface Designs. Applied Sciences. 2025; 15(20):11072. https://doi.org/10.3390/app152011072

Chicago/Turabian Style: Li, Junfeng, Kexin Chen, and Mo Chen. 2025. "The Influence of Information Redundancy on Driving Behavior and Psychological Responses Under Different Fog and Risk Conditions: An Analysis of AR-HUD Interface Designs" Applied Sciences 15, no. 20: 11072. https://doi.org/10.3390/app152011072

APA Style: Li, J., Chen, K., & Chen, M. (2025). The Influence of Information Redundancy on Driving Behavior and Psychological Responses Under Different Fog and Risk Conditions: An Analysis of AR-HUD Interface Designs. Applied Sciences, 15(20), 11072. https://doi.org/10.3390/app152011072