Collaborative Multi-Agent Platform with LIDAR Recognition and Web Integration for STEM Education

Abstract

1. Introduction

2. Background

3. Materials and Methods

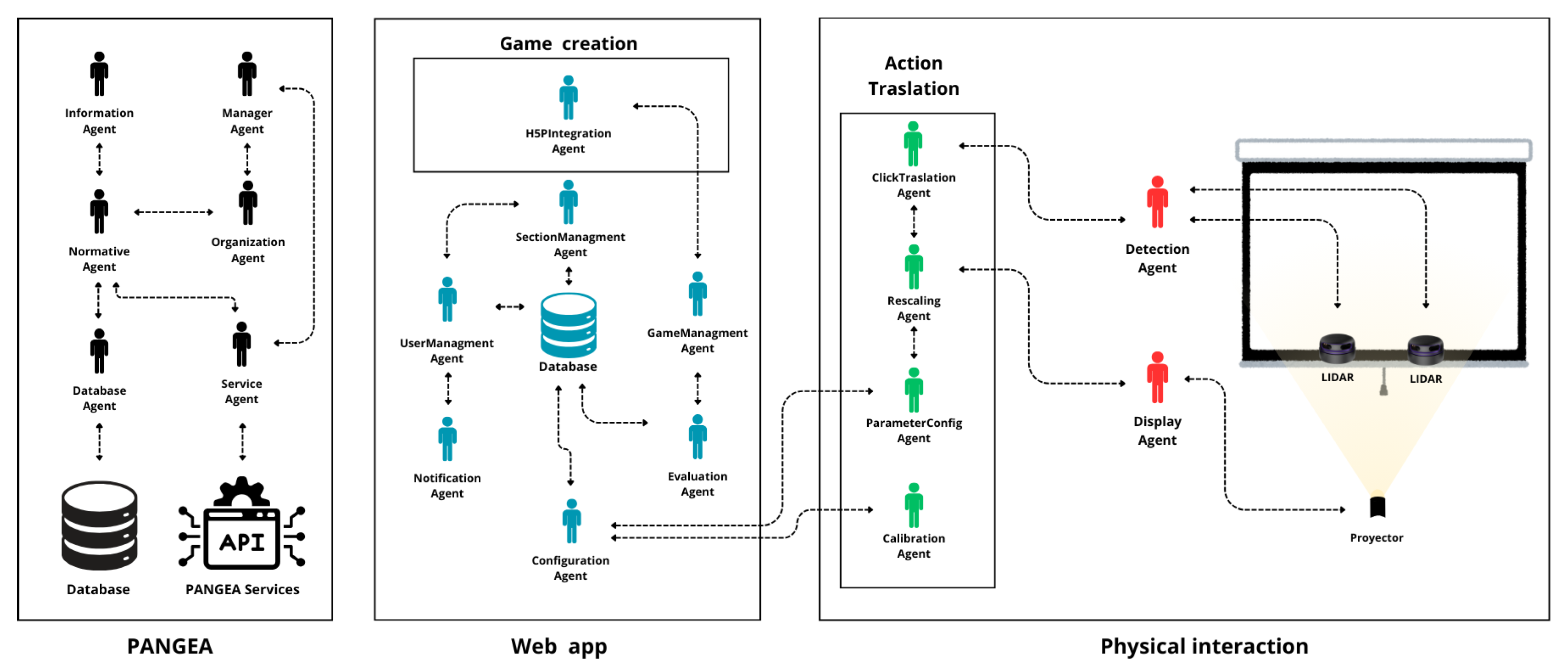

3.1. Multiagent System Architecture

3.1.1. PANGEA Organization

- Manager Agent: coordinates the internal agents and oversees external interactions with other organizations.

- Organization Agent: maintains the organizational structure and defines the roles of the different agents.

- Normative Agent: is in charge of the rules that other agents must comply with, ensuring consistency and compliance with policies.

- Information Agent: manages the distribution and processing of information between agents and organizations.

- Database Agent: handles the connection with the database, guaranteeing access to and persistence of information.

- Service Agent: acts as an interface between the PANGEA organization and external services (APIs), allowing integration with external applications.

3.1.2. Web Platform Organization

- User Management Agent: manages the user lifecycle (registration, authentication, and permissions).

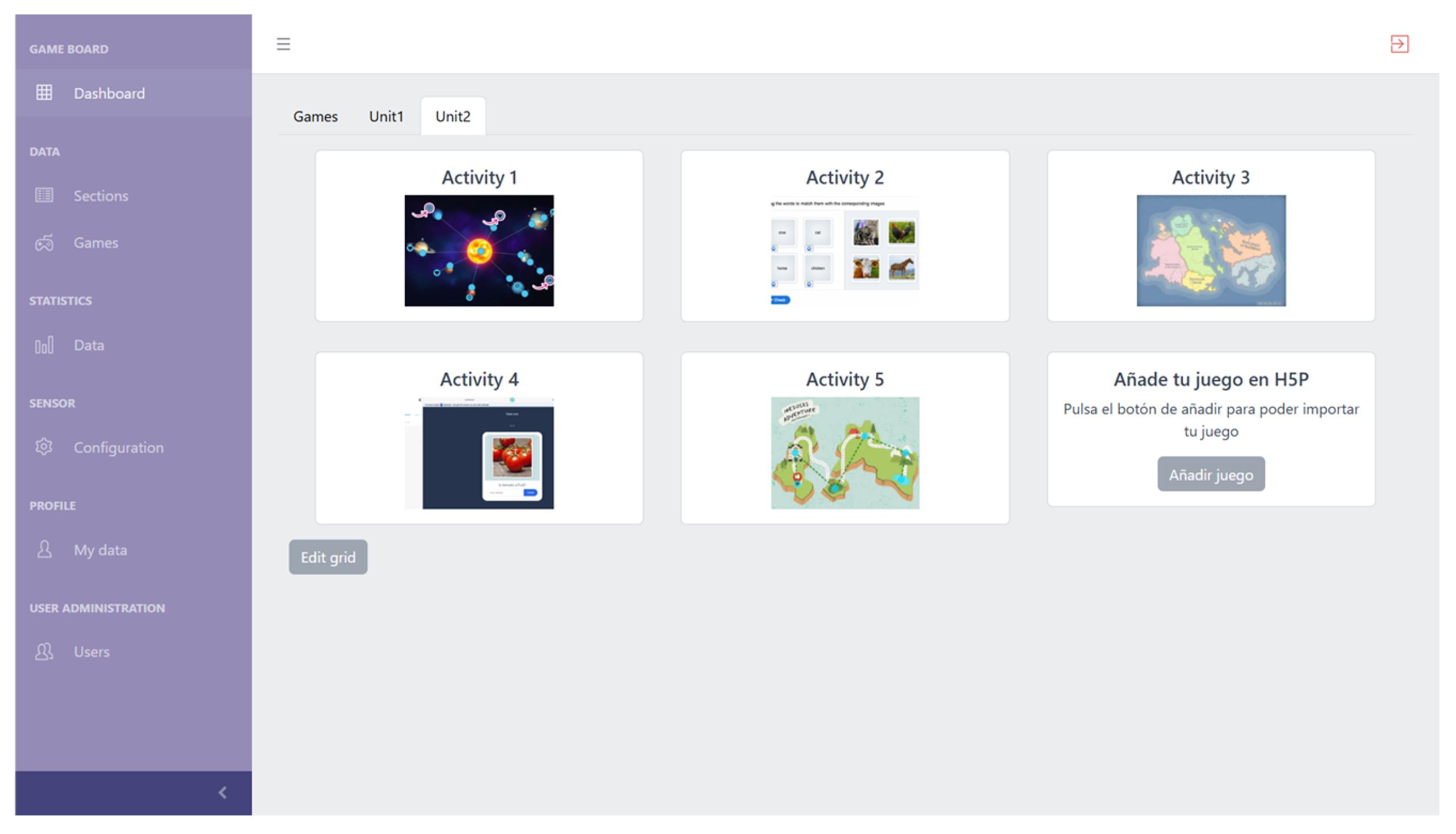

- Section Management Agent: organizes games into sections or groups according to users, making activities easy to customize.

- Game Management Agent: manages the creation, storage, and execution of interactive games.

- Evaluation Agent: processes interaction data and generates evaluations of user interaction.

- Notification Agent: sends relevant messages, reminders, or alerts to users.

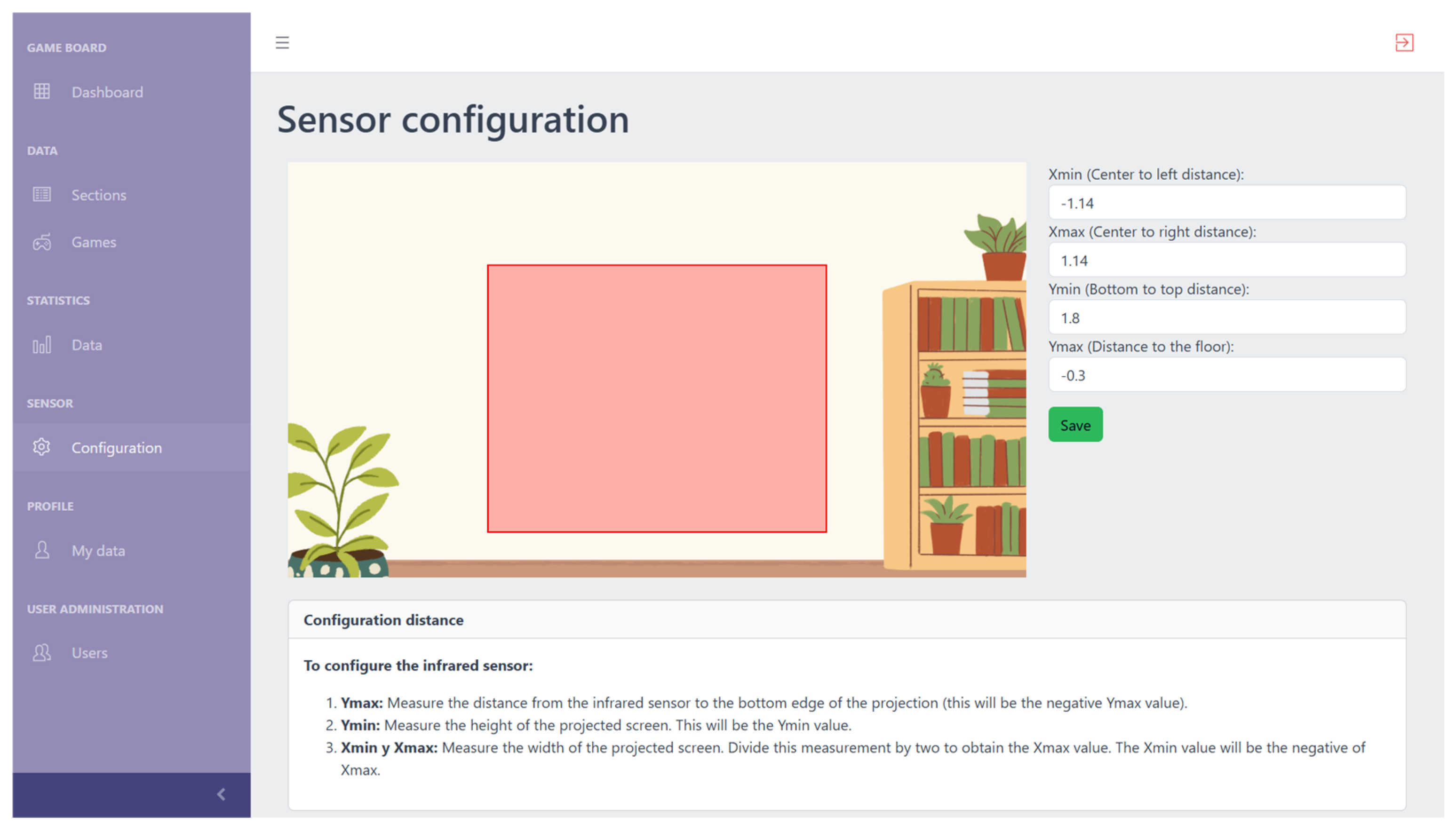

- Configuration Agent: adjusts general application parameters, such as screen size and detection area, to suit different usage contexts.

3.1.3. Physical Interaction Organization

- Detection Agent: detects variations in the physical environment from the LIDAR sensors and translates them into events that other agents can process (e.g., balls hitting the wall or pointer contacts).

- Display Agent: manages visual output through a projector, displaying information or games to users.

- Click Translation Agent: converts the physical actions detected by the Detection Agent into virtual clicks that behave like those of a physical computer mouse.

- Rescaling Agent: scales the interactions translated by the Click Translation Agent to match the size of the visualization, based on the configured parameters.

- Parameter Config Agent: configures the parameters used to translate actions, in coordination with system calibration, so that rescaling can be adapted to any display system.

- Calibration Agent: adjusts and calibrates the physical devices according to the established parameters and the size of the visualization, ensuring accurate detection and visual response.
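The chain Detection → Click Translation → Rescaling described above can be illustrated with a minimal publish/subscribe sketch. It assumes an in-process message bus; the `MessageBus` class, the topic names (`detection`, `raw_click`, `screen_click`), and the fixed scale factors are hypothetical and are not the PANGEA API. A plain scale factor stands in for the full coordinate mapping.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable


@dataclass
class Message:
    topic: str
    payload: dict


class MessageBus:
    """Hypothetical in-process stand-in for inter-agent messaging (not the PANGEA API)."""

    def __init__(self) -> None:
        self._subscribers = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[Message], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: dict) -> None:
        for handler in self._subscribers[topic]:
            handler(Message(topic, payload))


class DetectionAgent:
    def __init__(self, bus: MessageBus) -> None:
        self.bus = bus

    def on_lidar_point(self, x: float, y: float) -> None:
        # Wrap a raw LIDAR detection as an event that other agents can consume.
        self.bus.publish("detection", {"x": x, "y": y})


class ClickTranslationAgent:
    def __init__(self, bus: MessageBus) -> None:
        self.bus = bus
        bus.subscribe("detection", self.handle)

    def handle(self, msg: Message) -> None:
        # Treat every detection as a left click, still in sensor coordinates.
        self.bus.publish("raw_click", {**msg.payload, "button": "left"})


class RescalingAgent:
    def __init__(self, bus: MessageBus, scale_x: float, scale_y: float) -> None:
        self.bus = bus
        # Hypothetical fixed factors, standing in for values from the Parameter Config Agent.
        self.scale_x, self.scale_y = scale_x, scale_y
        bus.subscribe("raw_click", self.handle)

    def handle(self, msg: Message) -> None:
        p = msg.payload
        self.bus.publish("screen_click", {"x": p["x"] * self.scale_x,
                                          "y": p["y"] * self.scale_y,
                                          "button": p["button"]})


# Wire the chain and record what would be handed to the Display Agent.
bus = MessageBus()
detector = DetectionAgent(bus)
ClickTranslationAgent(bus)
RescalingAgent(bus, scale_x=1000.0, scale_y=800.0)
clicks = []
bus.subscribe("screen_click", lambda m: clicks.append(m.payload))
detector.on_lidar_point(0.5, 0.25)
print(clicks)  # [{'x': 500.0, 'y': 200.0, 'button': 'left'}]
```

Decoupling the roles through topics means, for example, that the Rescaling Agent can be reconfigured for a new projector without touching the Detection Agent.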

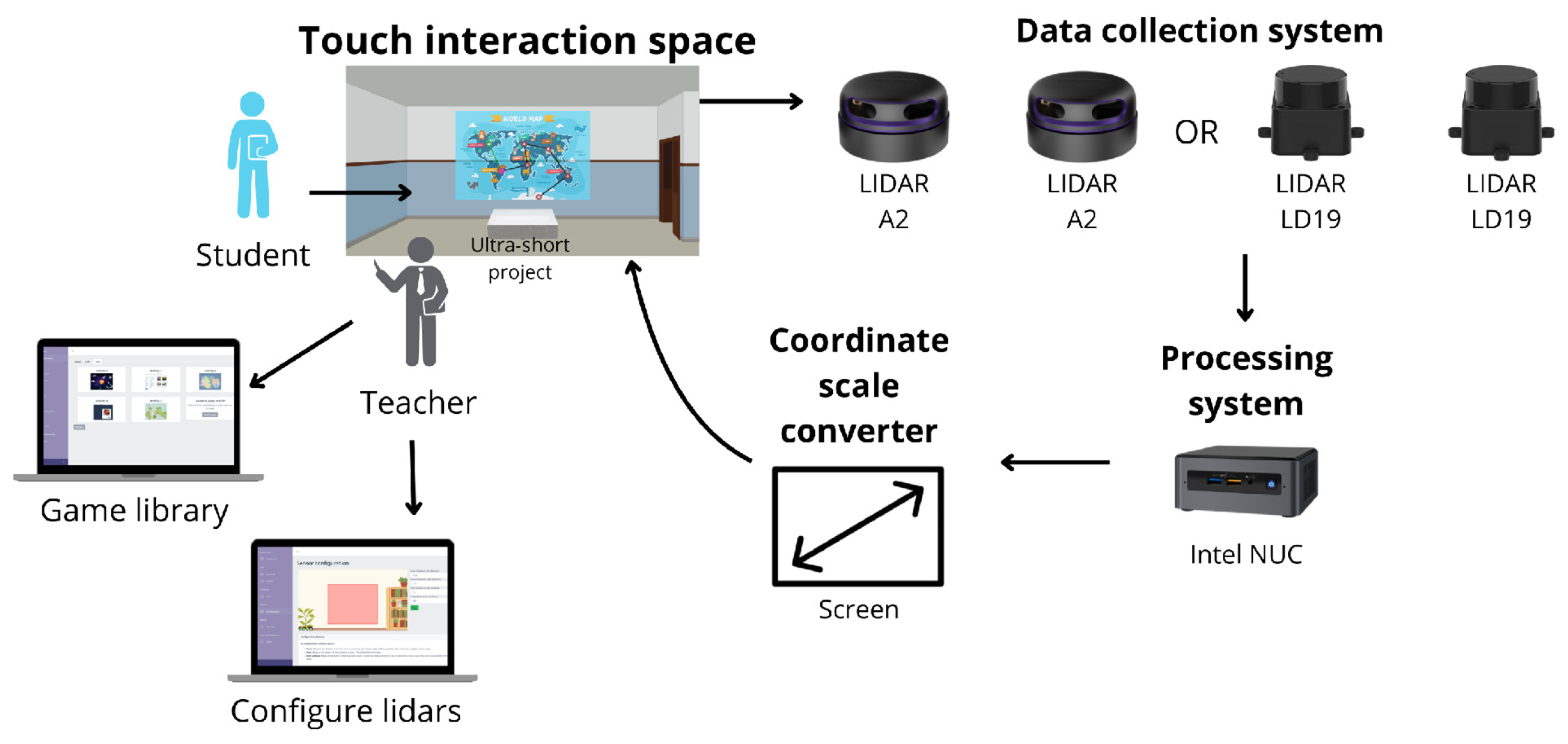

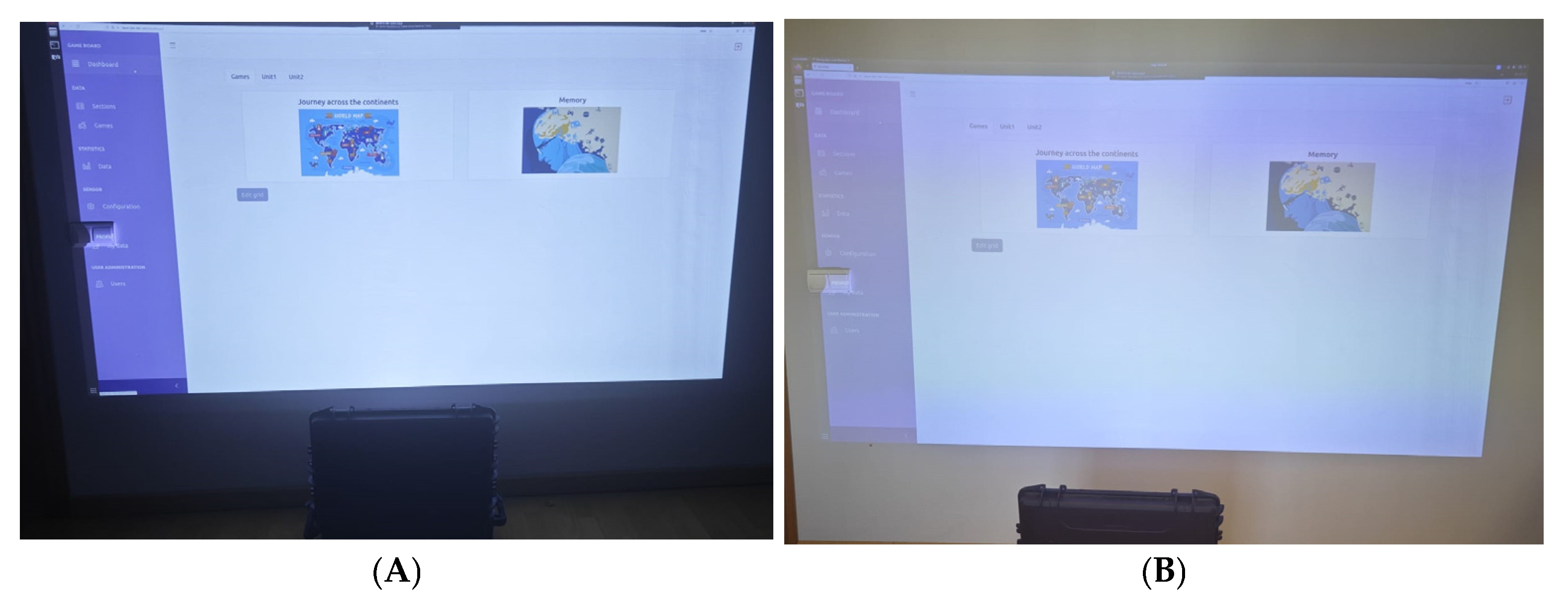

3.2. Implementation of the Prototype

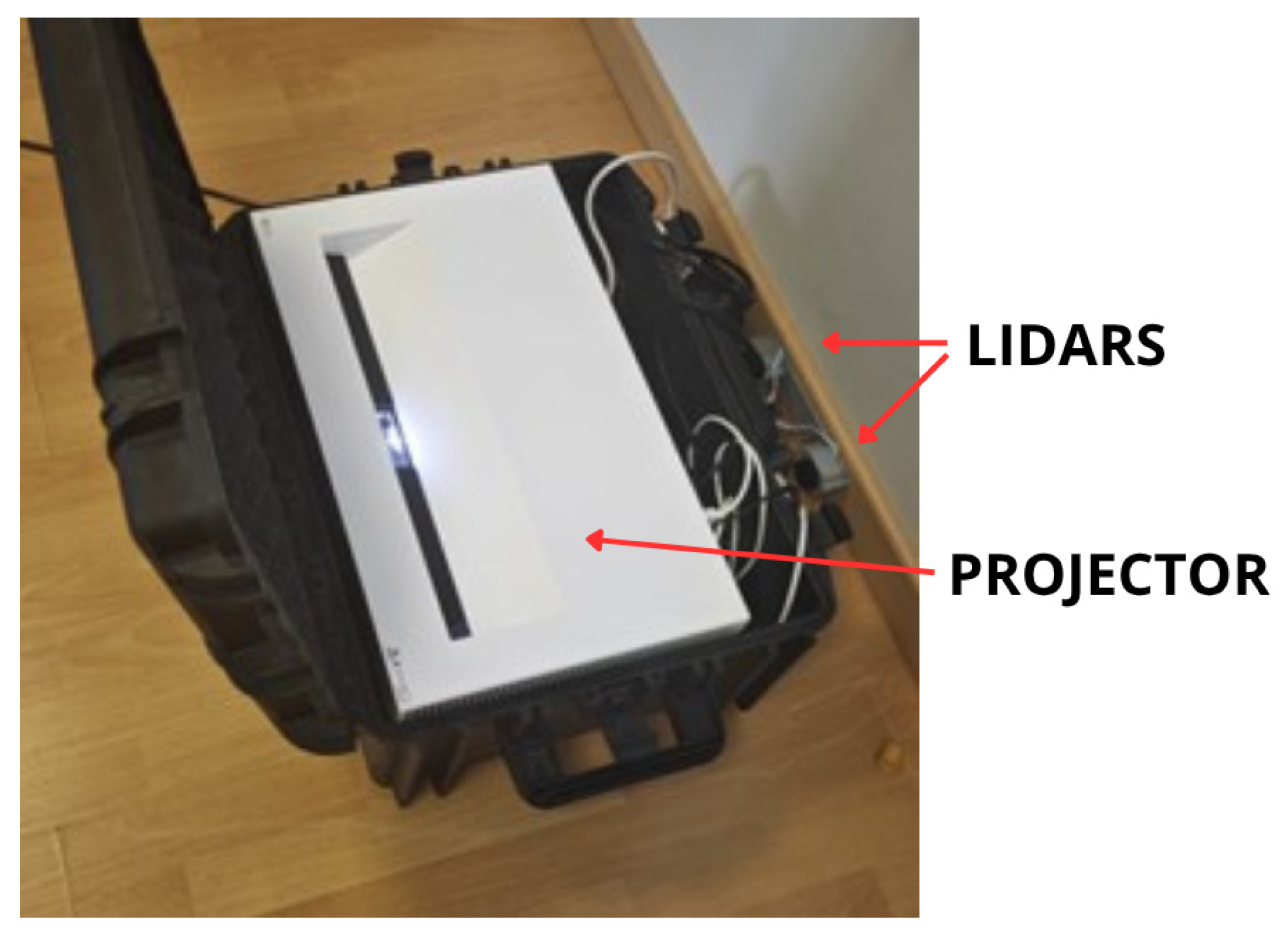

3.2.1. Physical Design and Arrangement of Components

3.2.2. Data Processing

3.2.3. Information Processing Flow

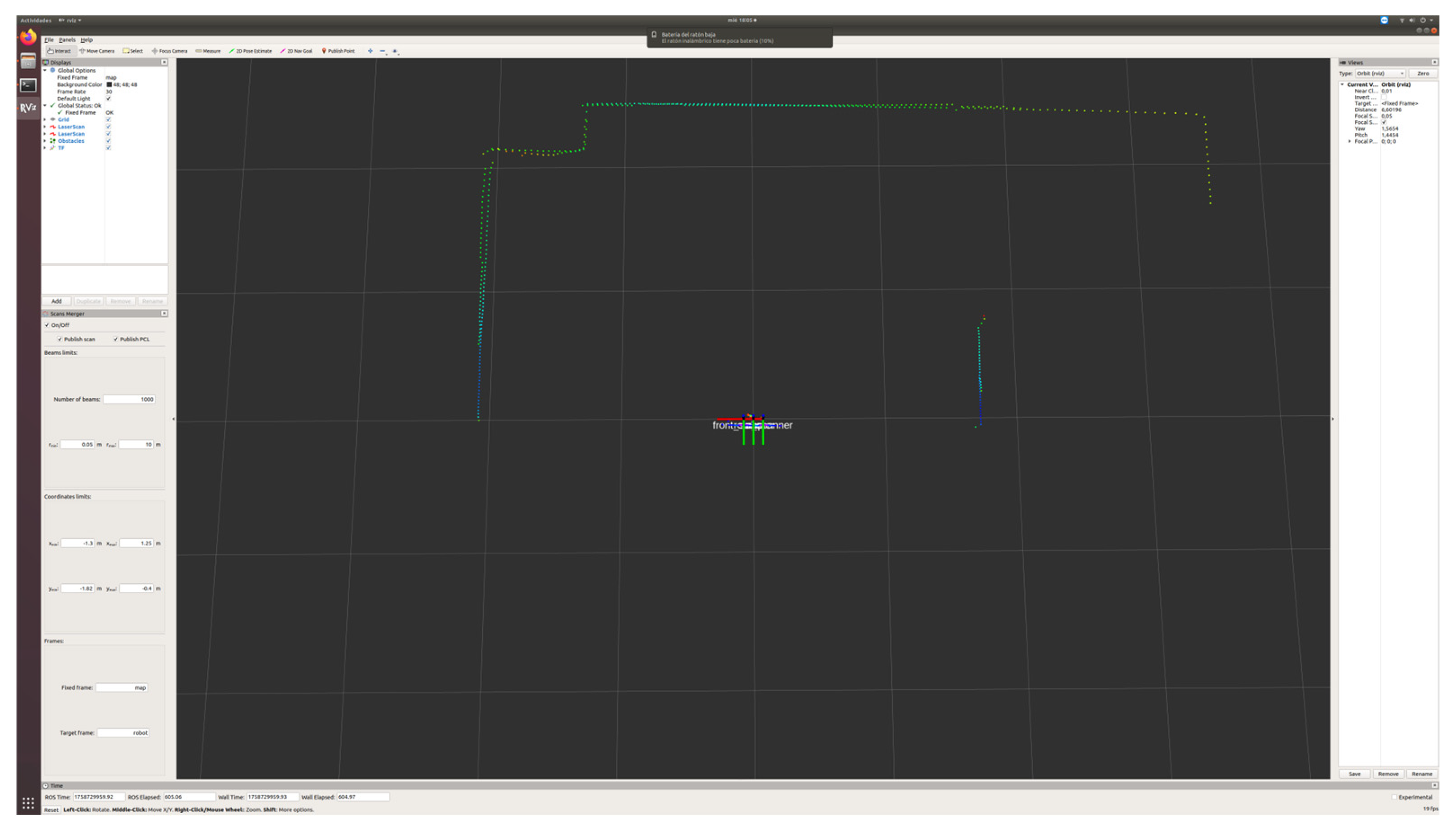

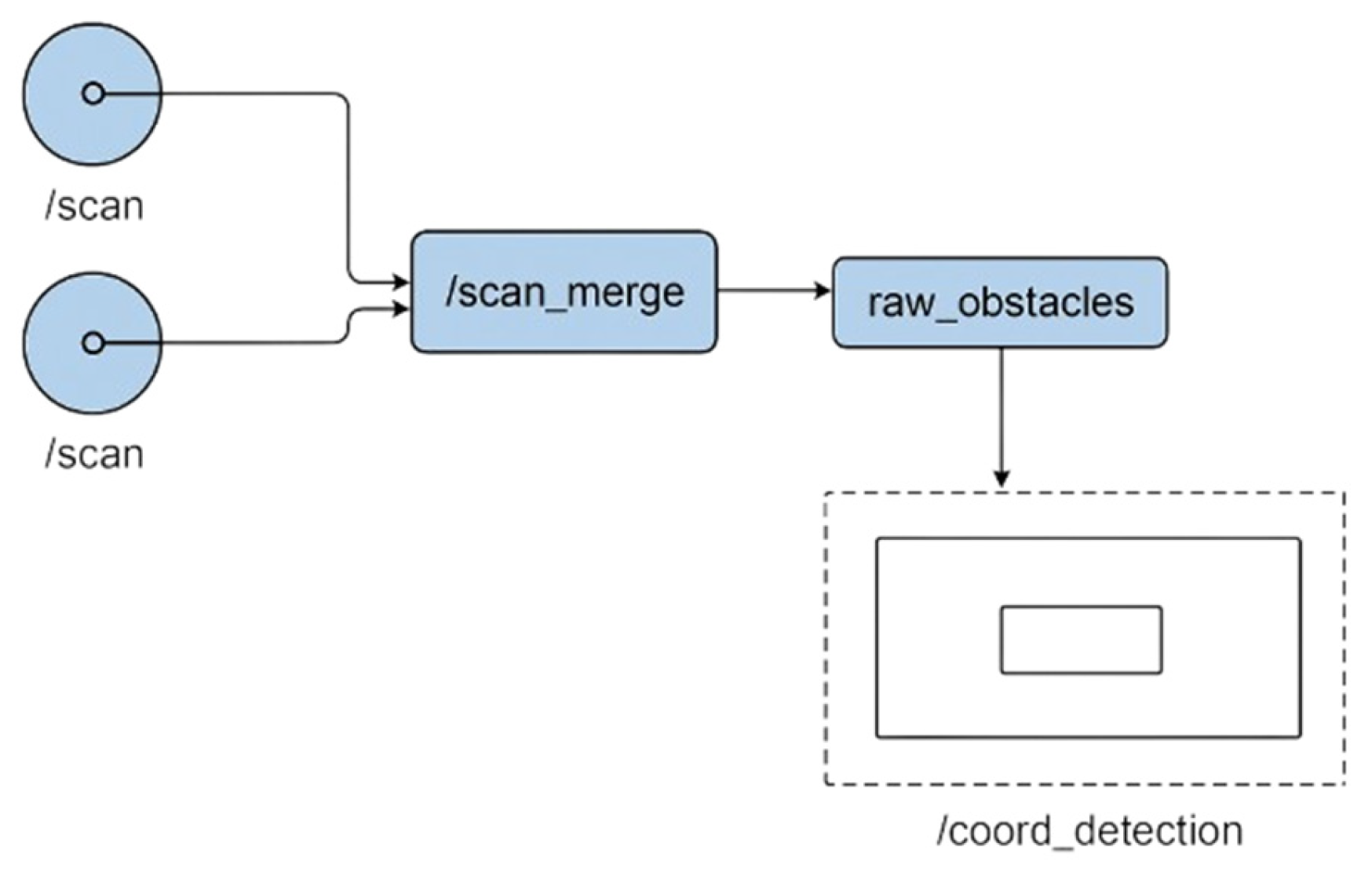

3.2.4. Data Detection and Fusion Logic with ROS

- Area of interest boundaries: The detection is constrained to a rectangular area defined by xmin, xmax, ymin, and ymax. These values are dynamically configured through our web platform to match the dimensions of the projected screen, effectively creating the virtual detection plane.

- Minimum cluster size (min_points): This parameter is set to 4. This threshold ensures that small, spurious detections (e.g., electronic noise or dust particles) are filtered out, and only clusters with at least four points, typical of an intentional interaction, are considered valid.

- Clustering distance (cluster_dist_euclidean): This is set to 0.05 m. This value defines the maximum distance between two points for them to be considered part of the same cluster, which is effective for grouping points generated by a hand or a small object.

- Frame ID: All detections are processed within a unified coordinate frame, typically base_link, ensuring consistency between the two LIDAR sensors.
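A plain-Python sketch of how these parameters could interact: points are first restricted to the area of interest and then grouped by Euclidean (single-linkage) clustering, so that two points share a cluster when a chain of neighbours no more than `cluster_dist_euclidean` apart connects them. This is an illustrative assumption, not the ROS node used in the prototype; the function names and the centroid output are hypothetical, and the points are assumed to be already expressed in `base_link`.

```python
import math

# Parameter values quoted in the text.
MIN_POINTS = 4        # min_points
CLUSTER_DIST = 0.05   # cluster_dist_euclidean, in metres


def in_area(p, xmin, xmax, ymin, ymax):
    """True if point p = (x, y) lies inside the configured detection rectangle."""
    x, y = p
    return xmin <= x <= xmax and ymin <= y <= ymax


def euclidean_clusters(points, max_dist=CLUSTER_DIST, min_points=MIN_POINTS):
    """Group points linked by neighbour distances <= max_dist, then drop
    clusters with fewer than min_points members (noise, dust)."""
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        frontier, members = [seed], [seed]
        while frontier:
            i = frontier.pop()
            neighbours = [j for j in unvisited
                          if math.dist(points[i], points[j]) <= max_dist]
            for j in neighbours:
                unvisited.remove(j)
                frontier.append(j)
                members.append(j)
        if len(members) >= min_points:
            clusters.append([points[k] for k in members])
    return clusters


def detect_contacts(points, area):
    """Filter a merged scan to the area of interest and return the centroid
    of every valid cluster."""
    inside = [p for p in points if in_area(p, *area)]
    contacts = []
    for cluster in euclidean_clusters(inside):
        cx = sum(x for x, _ in cluster) / len(cluster)
        cy = sum(y for _, y in cluster) / len(cluster)
        contacts.append((cx, cy))
    return contacts


# Example: a hand (4 close points), a 2-point speck of noise, and a point outside the area.
scan = [(1.00, 0.50), (1.01, 0.50), (1.02, 0.51), (1.03, 0.50),
        (0.30, 0.30), (0.31, 0.30),
        (5.00, 5.00)]
area = (0.0, 2.0, 0.0, 1.0)  # hypothetical xmin, xmax, ymin, ymax
print(detect_contacts(scan, area))  # one centroid, approximately (1.015, 0.5025)
```

Because the linkage is single-link, a row of points spaced 0.04 m apart forms one cluster even though its end points are farther than 0.05 m from each other.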

3.2.5. Coordinate Mapping

- The input coordinates are the x and y positions published on /coord_detection.

- The output coordinates are the equivalent positions in the projected interface, i.e., the screen coordinates where the click will be made.

- xmin, xmax, ymin, and ymax are the boundaries of the detection rectangle defined in raw_obstacles, i.e., they delimit the area monitored by the sensor.

- The screen (or projector) resolution gives the width and height of the output area.
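Assuming the mapping is the linear normalization implied by these definitions (and by the linear transformation mentioned in Section 5.7), a detection is converted by scaling its relative offset within the detection rectangle by the screen resolution. In the sketch below, the function name, the optional `flip_y` axis inversion, and the clamping to the last pixel are hypothetical additions for illustration.

```python
def detection_to_screen(x, y, xmin, xmax, ymin, ymax, width, height, flip_y=False):
    """Linearly map a detection (sensor frame) to a pixel on the projected screen.

    u = (x - xmin) / (xmax - xmin) * width
    v = (y - ymin) / (ymax - ymin) * height
    """
    u = (x - xmin) / (xmax - xmin) * width
    v = (y - ymin) / (ymax - ymin) * height
    if flip_y:
        # Hypothetical option: the sensor y axis may point opposite to the screen's.
        v = height - v
    # Keep the click on screen even if a detection sits on (or past) the boundary.
    u = min(max(u, 0.0), width - 1)
    v = min(max(v, 0.0), height - 1)
    return round(u), round(v)


area = dict(xmin=0.0, xmax=2.0, ymin=0.0, ymax=1.0)   # hypothetical rectangle, metres
screen = dict(width=1920, height=1080)                # hypothetical projector resolution
print(detection_to_screen(0.5, 0.25, **area, **screen))               # (480, 270)
print(detection_to_screen(0.5, 0.25, **area, **screen, flip_y=True))  # (480, 810)
```

A linear map of this kind is only exact when the scanning plane is parallel to the projection surface, which is the limitation the homography calibration in Section 5.7 is meant to remove.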

3.2.6. Transforming Detections into User Actions

3.3. Materials Used

Component Selection Criteria

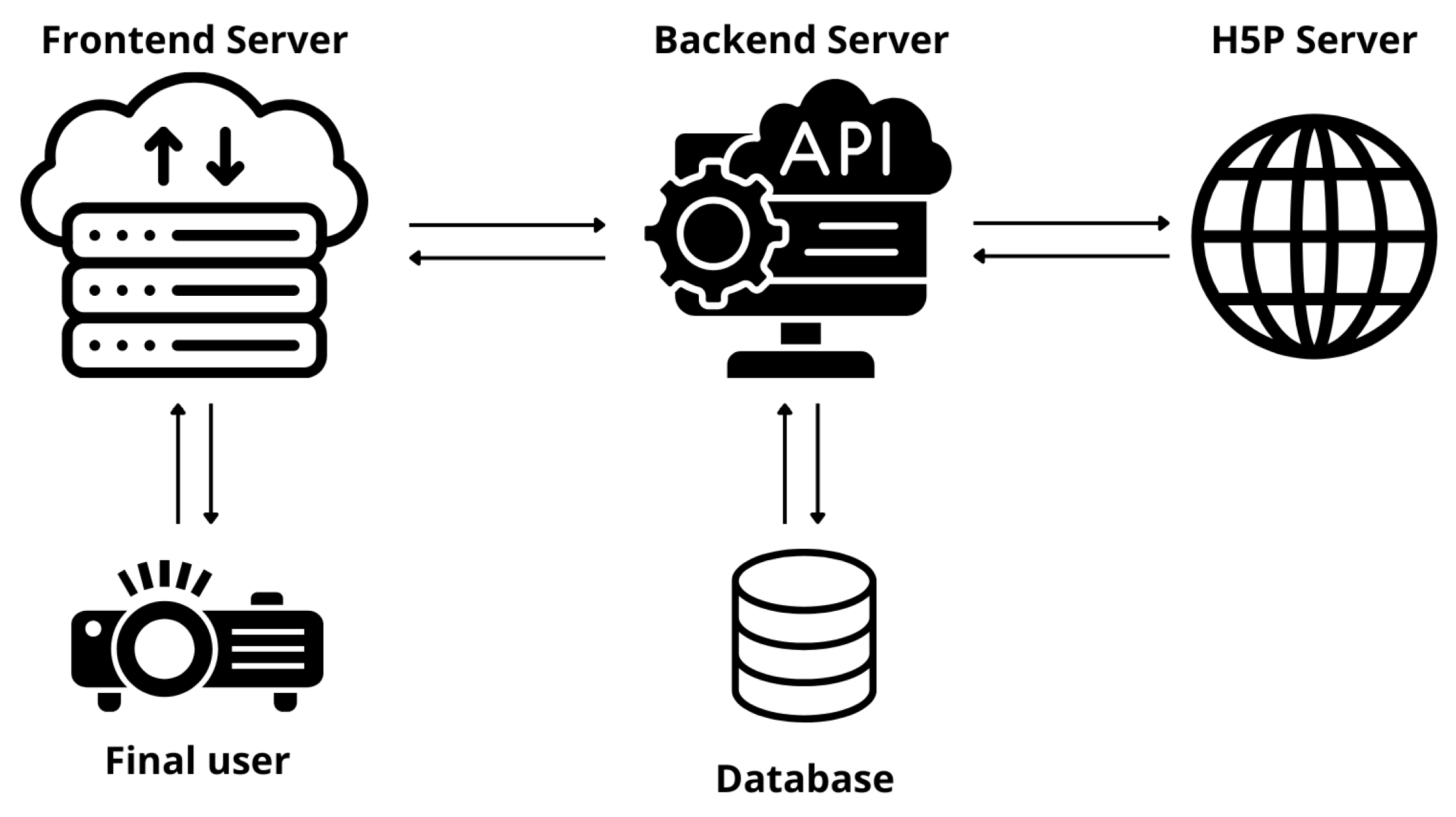

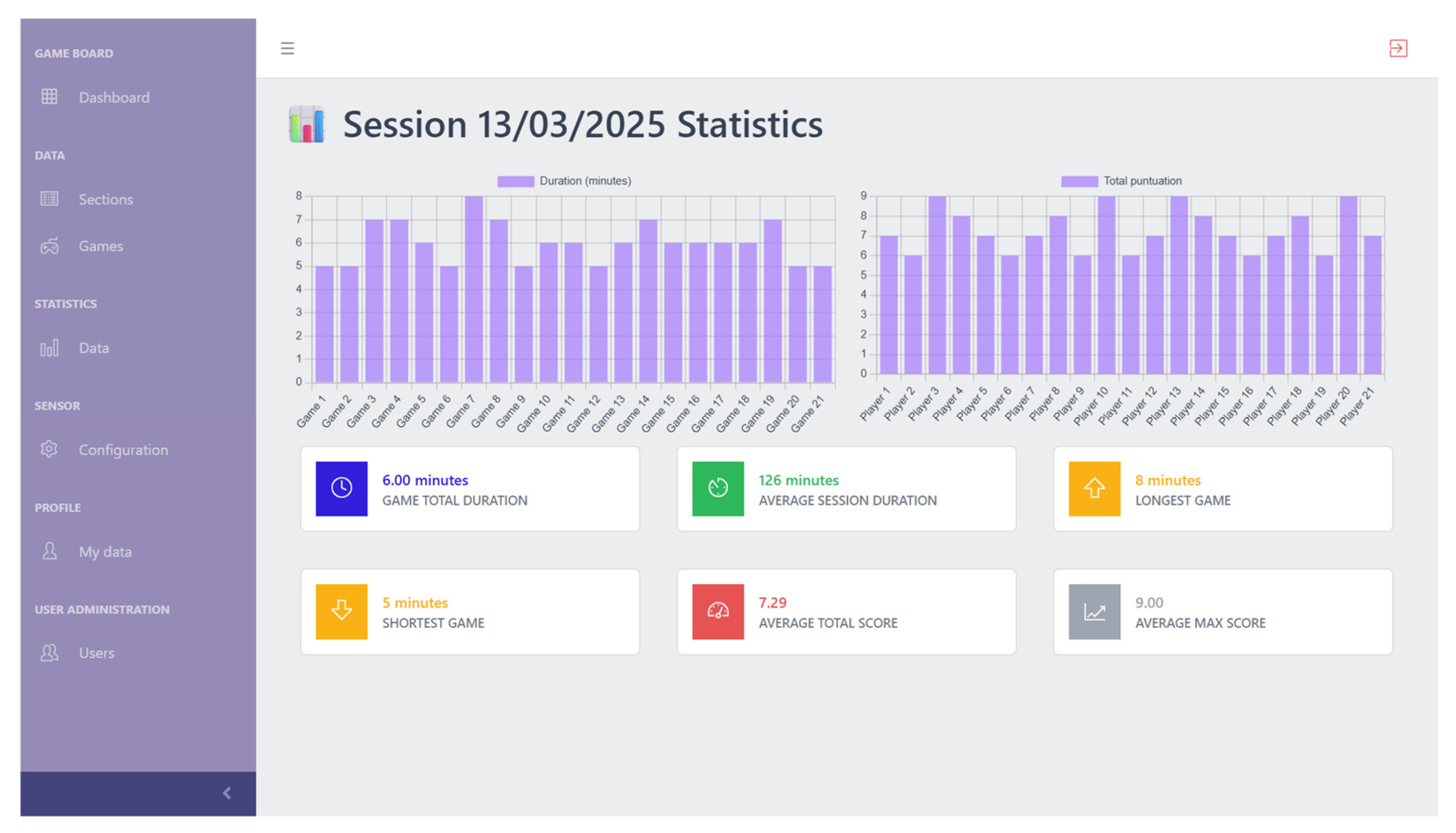

3.4. Web Platform

3.4.1. Web Platform and Activity Management

3.4.2. Frontend Server

3.4.3. Backend Server

4. Results

4.1. Preliminary Evaluation of the Prototype

4.1.1. Validation Methodology

- Stability in different lighting conditions, with tests carried out both in the absence of ambient light and under intense lighting.

- Robustness of detection, verifying the response of the system to varied interactions and the presence of multiple users.

- Diversity in the forms of interaction, including slow approaches, direct contacts, and rapid gestures on the projected surface.

4.1.2. Robustness Under Variable Lighting Conditions

4.1.3. Estimated Interaction Latency

4.1.4. System Stability in Prolonged Use

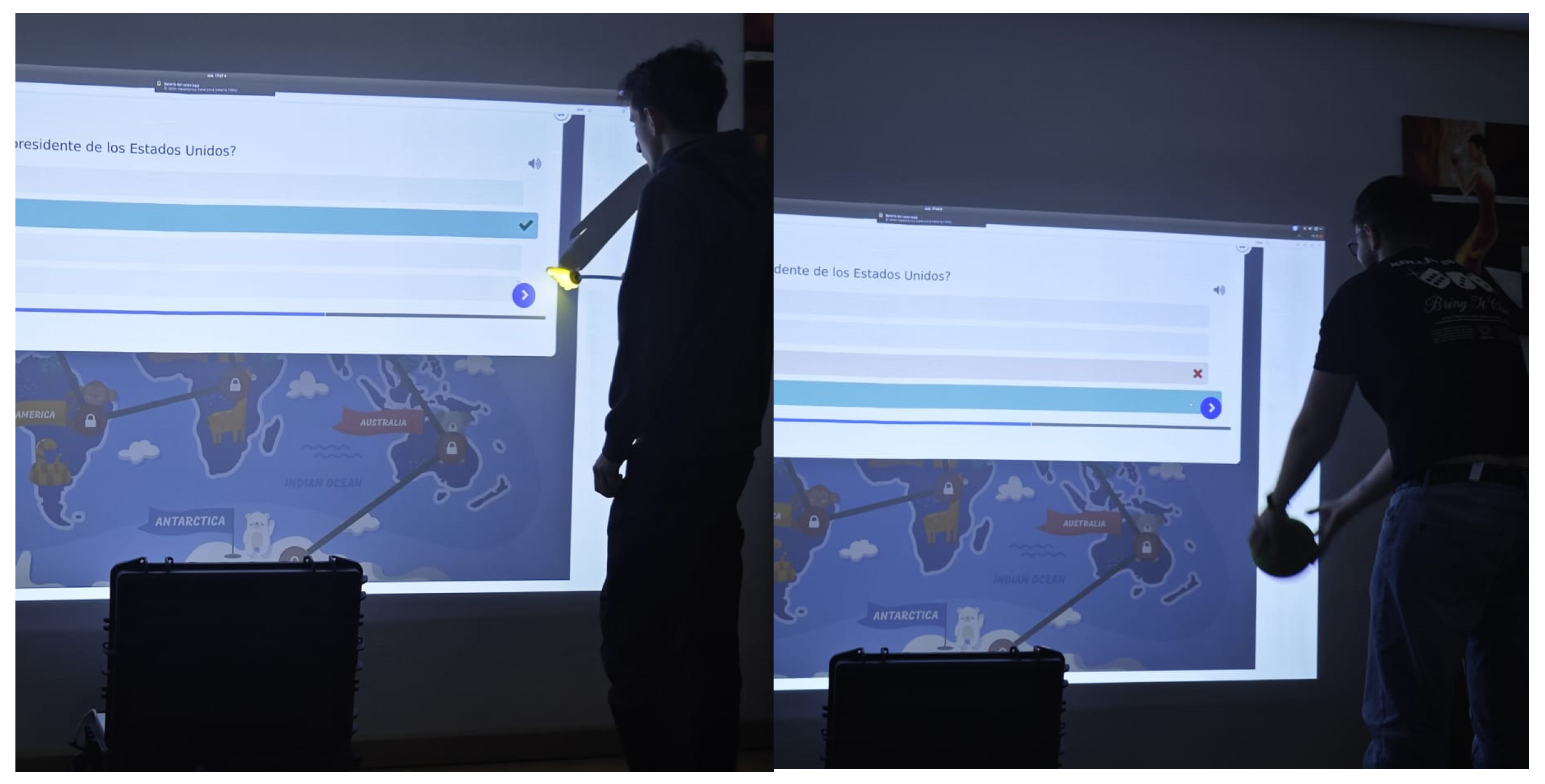

4.1.5. Diversity in the Forms of Interaction

4.2. Preliminary Evaluation with Users

4.2.1. Methodology Used

- Card memory game: Users had to discover and match pairs of cards projected on the surface.

- Quiz game: Participants answered multiple-choice questions associated with different regions of a projected map.

- Perceived ease of use

- Perceived usefulness

- Intention of future use

4.2.2. User Performance Results

- Occasional difficulties were observed in detecting rapid gestures, although they did not disrupt the normal flow of the activities.

- Users showed surprise and joy when they answered correctly, indicating motivation even though some of their throws missed.

4.2.3. TAM Survey Results

- Perceived Ease of Use (PEOU): measures the degree to which participants feel that the platform can be used without effort or prior training. It includes the items: “Learning to use the platform was easy for me.”; “The interaction with the screen was clear and understandable.”; “I feel comfortable using the platform without assistance.” The mean obtained was 4.4/5, indicating that most participants considered the system simple to use.

- Perceived Usefulness (PU): reflects how useful participants found the system for learning. It includes the items: “Using this platform helped me learn the content better.”; “The platform makes the activity more productive than in a traditional classroom.”; “I find the platform useful for my studies.” The mean obtained was 4.5/5, showing that students saw a high potential for reinforcing content in educational environments, especially in dynamic and collaborative activities.

- User Satisfaction (US): measures the pleasure and motivation generated by using the system and reflects the user’s attitude towards interacting with the platform. It includes the items: “Using this platform is a good idea.”; “I find it nice to work with this platform.”; “I enjoyed using the platform during the activity.” The mean obtained was 4.5/5, showing that the user experience was positive and pleasant for the students.

- Intention of Future Use (BI): measures participants’ willingness to use the system in the future and to recommend it to colleagues. It includes the items: “I would like to use this platform in future activities.”; “I would recommend this platform to my colleagues.”; “I think I would still use this platform if it was available.” The mean obtained was 4.3/5, showing genuine interest in continuing to use the system.

| TAM Dimension | Mean (out of 5) | Standard Deviation | Remarks |
|---|---|---|---|
| Perceived ease of use | 4.4 | 0.5 | Intuitive interaction; no prior training required. |
| Perceived usefulness | 4.5 | 0.4 | Outstanding educational potential, especially for collaborative dynamics. |
| User satisfaction | 4.5 | 0.5 | Pleasant and motivating user experience for the participants. |
| Intention of future use | 4.3 | 0.6 | High willingness to use and recommend the system, with interest in new applications. |

5. Discussion

5.1. Installation and Configuration

5.2. Detection Accuracy and Shadow Sensitivity

5.3. Flexibility in Content Creation

5.4. Latency and User Experience

5.5. Robustness and Adaptability

5.6. Conclusions and Comparison with Other Models

5.7. Future Lines of Work

- Hardware optimization: explore the integration of more compact and more energy-efficient LIDAR sensors in order to reduce costs and simplify installation.

- Shared repository system: develop a centralized platform that allows teachers from the same school (or from different schools) to create, store, and share games and activities easily.

- Educational challenges between schools: implement a system of scores, rankings, or collaborative/competitive challenges between classrooms and schools in order to increase student motivation and interest.

- Adaptive gamification: implement algorithms that automatically adjust the difficulty of games to the level of the students, favoring more personalized learning.

- Additional future lines of work aim to address current limitations of the prototype and enhance its overall performance:

- Migration to ROS 2 for long-term viability: we acknowledge that the current prototype was implemented on ROS1 Melodic, a version that has reached its end-of-life. To ensure the project’s long-term maintainability and sustainability, a priority line of future work is the migration of the entire data processing architecture to ROS 2. This transition will not only address the issue of obsolescence but also allow us to capitalize on the inherent advantages of ROS 2, such as its improved real-time capabilities, a more robust communication framework, and access to ongoing community support and updates.

- Enhanced calibration for improved robustness: We acknowledge that the current coordinate mapping relies on a linear transformation, which assumes the LIDAR scanning plane is perfectly parallel to the projection surface. This simplification can make the system sensitive to misalignments in the physical setup. To address this and increase robustness, a future line of work will involve implementing an advanced calibration process using a perspective transformation (homography). This would require the user to touch four designated corner points on the projected area at the beginning of a session. By mapping these four physical points to their corresponding screen coordinates, the system can compute a homography matrix. This method would automatically correct for tilt, rotation, and perspective distortions, ensuring a highly accurate and reliable user interaction even when the physical setup is not perfectly aligned.

- Implementation of multi-user tracking: Although the current system’s wide detection area is well-suited for collaborative environments, the data processing pipeline currently tracks only a single interaction point at a time. A key future enhancement will be to implement a multi-user tracking mechanism. This would involve upgrading the detection algorithm to identify, track, and differentiate multiple concurrent contact points (e.g., from different users or hands). Each distinct interaction would be published as a unique event, enabling true simultaneous collaboration within educational games and activities. This would significantly enhance the platform’s potential for collaborative learning scenarios.

- Expanded user validation: we acknowledge that the preliminary user evaluation, while yielding positive results, was conducted with a limited sample of 10 university students, the number that could be recruited for this initial validation phase. This demographic is not fully representative of the primary target audience of a STEM education platform, namely K-12 students and their teachers. Therefore, a crucial future line of work is a large-scale validation study with these specific user groups. This expanded evaluation will be essential to confirm the current findings on usability and acceptance, to gather pedagogical feedback directly from real classroom environments, and to refine the system’s features to better meet the needs of both students and educators.

- Automated activity data collection: the current version of the platform relies on manually exporting activity data from external H5P or LMS environments because of licensing and integration constraints. Future iterations will include a dedicated H5P server and an xAPI listener integrated into the backend to capture learning events in real time through a Learning Record Store (LRS). This will allow fully automated data collection during sessions, eliminating manual uploads and enabling more advanced learning analytics.

- Advanced gesture recognition for continuous interaction: To broaden compatibility with more dynamic educational content, a key future goal is to implement an advanced gesture recognition system. This would allow the platform to support continuous interactions like true drag-and-drop. One proposed implementation involves a mechanism where the user could perform two successive collisions to define the start and end points of a drag path. The system would then automatically execute the drag action between these points, enabling a much richer and more intuitive user experience for a wider array of H5P activities.
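The homography calibration proposed above can be sketched with a direct linear transform: each of the four corner touches contributes two linear equations, and fixing the last matrix entry to 1 leaves an 8 × 8 system. This minimal, dependency-free sketch assumes exactly four touches with no three collinear; the function names and sample coordinates are hypothetical. A production version would more likely use an existing routine such as OpenCV's `cv2.getPerspectiveTransform`.

```python
def solve_linear(a, b):
    """Solve a·x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate configuration (e.g. three collinear points)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def homography_from_corners(src, dst):
    """Direct linear transform for exactly four correspondences, with h33 fixed to 1.

    src: four (x, y) touches in the LIDAR frame; dst: matching (u, v) screen corners.
    """
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u * (h31 x + h32 y + 1) = h11 x + h12 y + h13, and likewise for v.
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def apply_homography(H, x, y):
    """Project a detection through H and divide by the homogeneous coordinate."""
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    u = (H[0][0] * x + H[0][1] * y + H[0][2]) / w
    v = (H[1][0] * x + H[1][1] * y + H[1][2]) / w
    return u, v


# Hypothetical session: four corner touches on a slightly rotated, skewed setup.
touches = [(0.10, 0.00), (2.10, 0.20), (1.90, 1.10), (0.00, 0.90)]
corners = [(0, 0), (1920, 0), (1920, 1080), (0, 1080)]
H = homography_from_corners(touches, corners)
for (x, y), target in zip(touches, corners):
    print(target, apply_homography(H, x, y))
```

Once `H` is computed at the start of a session, every later detection would go through `apply_homography` instead of the linear mapping, absorbing tilt, rotation, and perspective distortion in one step.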
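For the multi-user tracking enhancement, one common approach (assumed here, not taken from the prototype) is greedy nearest-neighbour association: each frame's contact centroids are matched to existing tracks within a gating distance, unmatched detections open new tracks with fresh IDs, and tracks unseen for several frames are dropped. The class name, the 0.15 m gate, and the three-frame tolerance are hypothetical values.

```python
import math
from itertools import count


class MultiContactTracker:
    """Greedy nearest-neighbour tracker for concurrent contact points (hypothetical)."""

    def __init__(self, gate=0.15, max_missed=3):
        self.gate = gate              # metres: farther detections never continue a track
        self.max_missed = max_missed  # frames a track may go unseen before it is dropped
        self.tracks = {}              # track id -> {"pos": (x, y), "missed": frames unseen}
        self._next_id = count(1)

    def update(self, detections):
        """Feed one frame of contact centroids; return {track_id: (x, y)} for contacts seen now."""
        # All track/detection pairs, closest first, so the best matches are taken first.
        pairs = sorted(
            (math.dist(track["pos"], det), tid, di)
            for tid, track in self.tracks.items()
            for di, det in enumerate(detections)
        )
        matched_tracks, matched_dets = set(), set()
        for dist, tid, di in pairs:
            if dist > self.gate:
                break
            if tid in matched_tracks or di in matched_dets:
                continue
            self.tracks[tid] = {"pos": detections[di], "missed": 0}
            matched_tracks.add(tid)
            matched_dets.add(di)
        # Age unmatched tracks and forget the ones that have been gone too long.
        for tid in list(self.tracks):
            if tid not in matched_tracks:
                self.tracks[tid]["missed"] += 1
                if self.tracks[tid]["missed"] > self.max_missed:
                    del self.tracks[tid]
        # Every unmatched detection starts a new interaction with a fresh ID.
        for di, det in enumerate(detections):
            if di not in matched_dets:
                self.tracks[next(self._next_id)] = {"pos": det, "missed": 0}
        return {tid: t["pos"] for tid, t in self.tracks.items() if t["missed"] == 0}


tracker = MultiContactTracker()
print(tracker.update([(0.50, 0.50), (1.50, 0.50)]))  # {1: (0.5, 0.5), 2: (1.5, 0.5)}
print(tracker.update([(1.52, 0.51), (0.53, 0.49)]))  # {1: (0.53, 0.49), 2: (1.52, 0.51)}
```

Each returned ID could then be published as its own event, so two students touching the surface at once would produce two distinct, persistent interactions.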
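The two-collision drag mechanism could be handled by a small state machine that stores the first collision and emits a drag when a second collision arrives within a time window. In this sketch the timeout, the minimum drag distance, and the class names are hypothetical; actually injecting the mouse-down, move, and mouse-up events is left to whatever input layer the Click Translation Agent uses.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Drag:
    start: tuple
    end: tuple

    def path(self, steps=10):
        """Straight-line intermediate points an input driver could move through."""
        (x0, y0), (x1, y1) = self.start, self.end
        return [(x0 + (x1 - x0) * i / steps, y0 + (y1 - y0) * i / steps)
                for i in range(steps + 1)]


class TwoCollisionDrag:
    """Pairs two successive collisions into one drag (hypothetical sketch)."""

    def __init__(self, timeout=3.0, min_distance=20.0):
        self.timeout = timeout            # max seconds between first and second collision
        self.min_distance = min_distance  # pixels; shorter pairs are discarded as accidental
        self._pending = None              # (timestamp, point) of an unpaired first collision

    def on_collision(self, t, point):
        """Feed one collision (already in screen pixels); return a Drag or None."""
        pending = self._pending
        if pending is not None and t - pending[0] <= self.timeout:
            self._pending = None
            if math.dist(pending[1], point) >= self.min_distance:
                return Drag(pending[1], point)
            return None
        # No pending start, or it expired: this collision becomes the new start point.
        self._pending = (t, point)
        return None


dragger = TwoCollisionDrag()
print(dragger.on_collision(0.0, (100, 100)))  # None: waiting for the end point
drag = dragger.on_collision(1.2, (400, 300))
print(drag.path(steps=2))  # [(100.0, 100.0), (250.0, 200.0), (400.0, 300.0)]
```

The timeout keeps an isolated stray collision from silently turning the next, unrelated one into a drag.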

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References


| Features | PANGEA | JADE |
|---|---|---|
| Support for organizations | Native and explicit | Limited; requires manual extensions |
| RFC-based communication | Style similar to web protocols | No; uses FIPA-ACL (more closed) |
| Focus on virtual organizations | VO-oriented design | Not directly; focused on individual agents |
| Organizational scalability | High (with hierarchies and roles) | Medium |
| External interoperability | High (uses network standards) | Low (requires adaptations) |
| Modularity | High (decoupled components) | Medium |
| Community and documentation | More academic and specialized | Larger and industrially supported |

| Category | Component | Description | Estimated Price (EUR) |
|---|---|---|---|
| Processing Unit | Mini PC Asus NUC 12 Intel (ASUS, Taipei, Taiwan) | Compact PC for data processing and ROS execution | EUR 350 |
| Sensing and Vision | (×2) Youyeetoo RPLIDAR A2 360° (Youyeetoo, Shenzhen, China) * | 360° LIDAR sensor for object detection and mapping | EUR 250 (×2) |
| | (×2) Youyeetoo FHL-LD19 LiDAR (Youyeetoo, Shenzhen, China) * | High-precision LIDAR sensor for short-range detection | EUR 80 (×2) |
| Construction Materials | Ender PLA Filament 1.75 mm (1 kg spool, black) (Creality, Shenzhen, China) | PLA filament for prototyping and structural components | EUR 17 |
| Connectivity & Power | Pack of power, HDMI, and USB cables | Basic connectivity and data/power transmission | EUR 40 |
| | Power connector with fuse | Safe power distribution and protection | EUR 5 |
| User Interface | LG CineBeam HU715QW Projector (LG Electronics, Seoul, Republic of Korea) | High-resolution projector for interactive display | EUR 1500 |
| Support & Transport | HMF ODK100 Outdoor Photographer Case (HMF, Sundern, Germany) | Protective case for safe transport of the prototype | EUR 30 |
| TOTAL | | | EUR 2602 |

| Lighting Condition | Type of Interaction | No. of Attempts | Correct Detections | Failures | Successful Detections (%) | Remarks |
|---|---|---|---|---|---|---|
| Total darkness | Slow approach | 20 | 19 | 1 | 95% | Stable response, no false positives |
| Total darkness | Direct contact | 20 | 20 | 0 | 100% | Immediate and consistent detection |
| Total darkness | Quick gesture | 20 | 18 | 2 | 90% | Some failures with very fast movements |
| Moderate ambient light | Slow approach | 20 | 19 | 1 | 95% | Performance similar to darkness |
| Moderate ambient light | Direct contact | 20 | 20 | 0 | 100% | No incidents |
| Moderate ambient light | Quick gesture | 20 | 17 | 3 | 85% | Slight reduction in accuracy |
| Intense lighting | Slow approach | 20 | 18 | 2 | 90% | Occasional missed detections |
| Intense lighting | Direct contact | 20 | 19 | 1 | 95% | Slight perceived latency |
| Intense lighting | Quick gesture | 20 | 17 | 3 | 85% | Greater variability, less robust detection |

| Variable | Minimum | Maximum | Mean | Standard Deviation |
|---|---|---|---|---|
| PEOU | 3 | 5 | 4.4 | 0.47 |
| PU | 4 | 5 | 4.5 | 0.38 |
| US | 3 | 5 | 4.5 | 0.40 |
| BI | 3 | 5 | 4.3 | 0.50 |

| Variable | PEOU | PU | US | BI |
|---|---|---|---|---|
| PEOU | 1 | 0.78 | 0.74 | 0.70 |
| PU | 0.78 | 1 | 0.82 | 0.76 |
| US | 0.74 | 0.82 | 1 | 0.80 |
| BI | 0.70 | 0.76 | 0.80 | 1 |

| Variable | Cronbach’s Alpha | No. of Items |
|---|---|---|
| PEOU | 0.83 | 3 |
| PU | 0.85 | 3 |
| US | 0.87 | 3 |
| BI | 0.88 | 3 |
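For reference, the reliability values above follow the standard Cronbach's alpha formula, α = k/(k − 1) · (1 − Σσᵢ² / σ_T²), where k is the number of items, σᵢ² the variance of item i, and σ_T² the variance of the respondents' total scores. The sketch below computes it in plain Python; the item-level responses of the study are not reported, so the example data are synthetic and will not reproduce the values in the table.

```python
from statistics import pvariance


def cronbach_alpha(items):
    """Cronbach's alpha for one scale.

    items: k lists of item scores, each holding one score per respondent.
    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("every item needs one score per respondent")
    totals = [sum(item[r] for item in items) for r in range(n)]
    # Population variances; sample variances give the same alpha because the
    # n / (n - 1) correction cancels in the ratio.
    item_var = sum(pvariance(item) for item in items)
    return k / (k - 1) * (1 - item_var / pvariance(totals))


# Synthetic 5-point Likert answers (3 items x 6 respondents), NOT the study's data.
peou_items = [
    [4, 5, 4, 3, 5, 4],
    [4, 5, 5, 3, 5, 4],
    [5, 5, 4, 3, 4, 4],
]
print(round(cronbach_alpha(peou_items), 2))
```

Values above roughly 0.8, as reported for all four dimensions, are conventionally read as good internal consistency, although with three items and a small sample the estimate carries considerable uncertainty.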

| Feature | Previous Prototype | Current Prototype |
|---|---|---|
| Installation | Requires manual adjustment and careful calibration | Quick configuration using parameters on the web platform |
| Portability | Limited, fixed mounting | Portable case with all elements integrated |
| Lighting sensitivity | Affected by shadows and changes in light | Not affected by ambient lighting or shadows |
| Dependence on physical objects | Requires specifically colored balls for detection | Compatible with different objects and materials, without color restriction |
| Interaction detection | Based on 2D image processing, perceptible latency | LiDAR and point cloud fusion, virtually unnoticeable latency |
| Content flexibility | Pre-programmed and limited games | H5P to create unlimited activities, integrable with LMS (Moodle) |
| Multiple users | Limited, one-on-one interaction | Supports interaction of multiple users for turn-based activities |
| Robustness | Sensitive to environmental changes | High robustness, stable in different environments and surfaces |
| Educational scalability | Limited to deployed games | Scalable, adaptable to different educational levels and topics |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cruz García, D.; García González, S.; Álvarez Sanchez, A.; Herrero Pérez, R.; Villarrubia González, G. Collaborative Multi-Agent Platform with LIDAR Recognition and Web Integration for STEM Education. Appl. Sci. 2025, 15, 11053. https://doi.org/10.3390/app152011053

Cruz García D, García González S, Álvarez Sanchez A, Herrero Pérez R, Villarrubia González G. Collaborative Multi-Agent Platform with LIDAR Recognition and Web Integration for STEM Education. Applied Sciences. 2025; 15(20):11053. https://doi.org/10.3390/app152011053

Chicago/Turabian Style: Cruz García, David, Sergio García González, Arturo Álvarez Sanchez, Rubén Herrero Pérez, and Gabriel Villarrubia González. 2025. "Collaborative Multi-Agent Platform with LIDAR Recognition and Web Integration for STEM Education" Applied Sciences 15, no. 20: 11053. https://doi.org/10.3390/app152011053

APA Style: Cruz García, D., García González, S., Álvarez Sanchez, A., Herrero Pérez, R., & Villarrubia González, G. (2025). Collaborative Multi-Agent Platform with LIDAR Recognition and Web Integration for STEM Education. Applied Sciences, 15(20), 11053. https://doi.org/10.3390/app152011053