5.4.1. Effectiveness Analysis of Short Text Classification Models

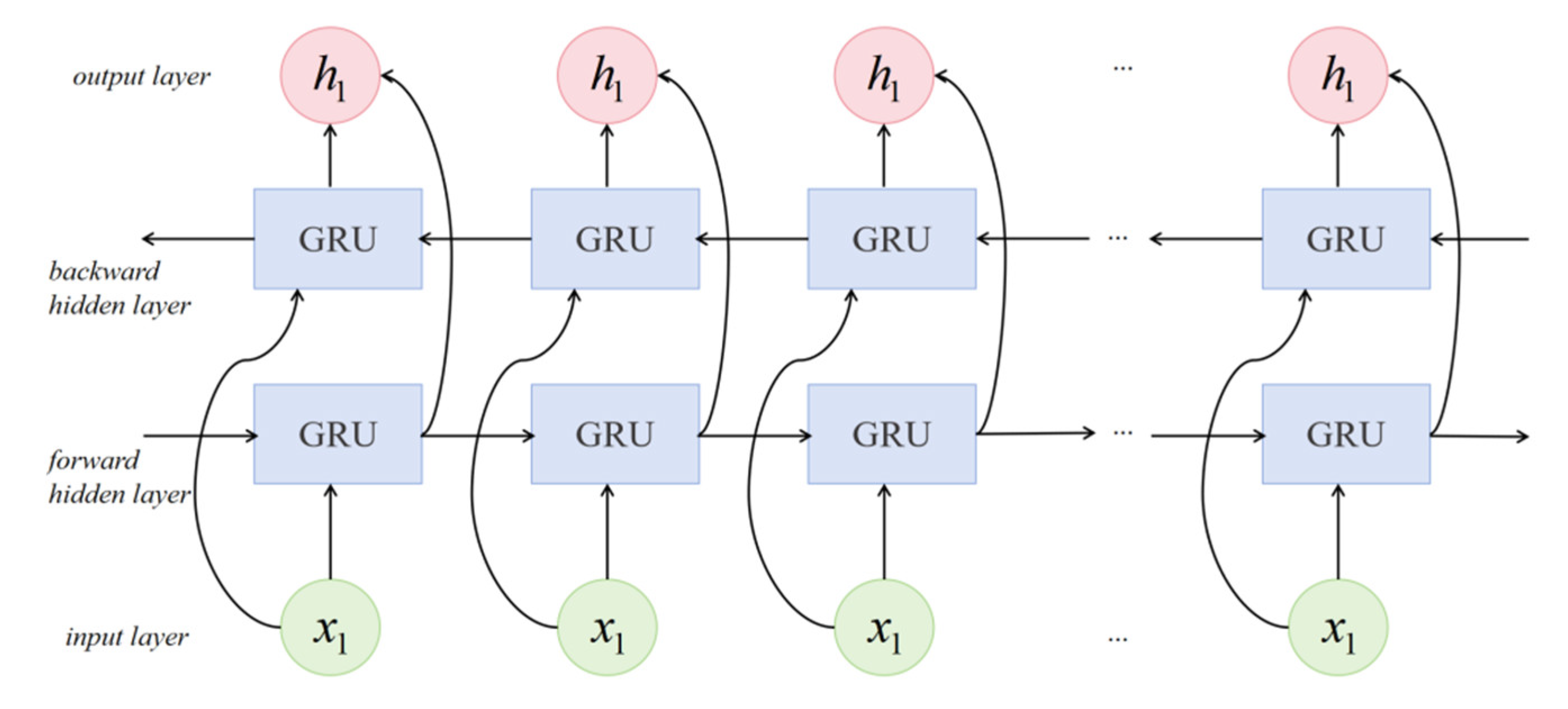

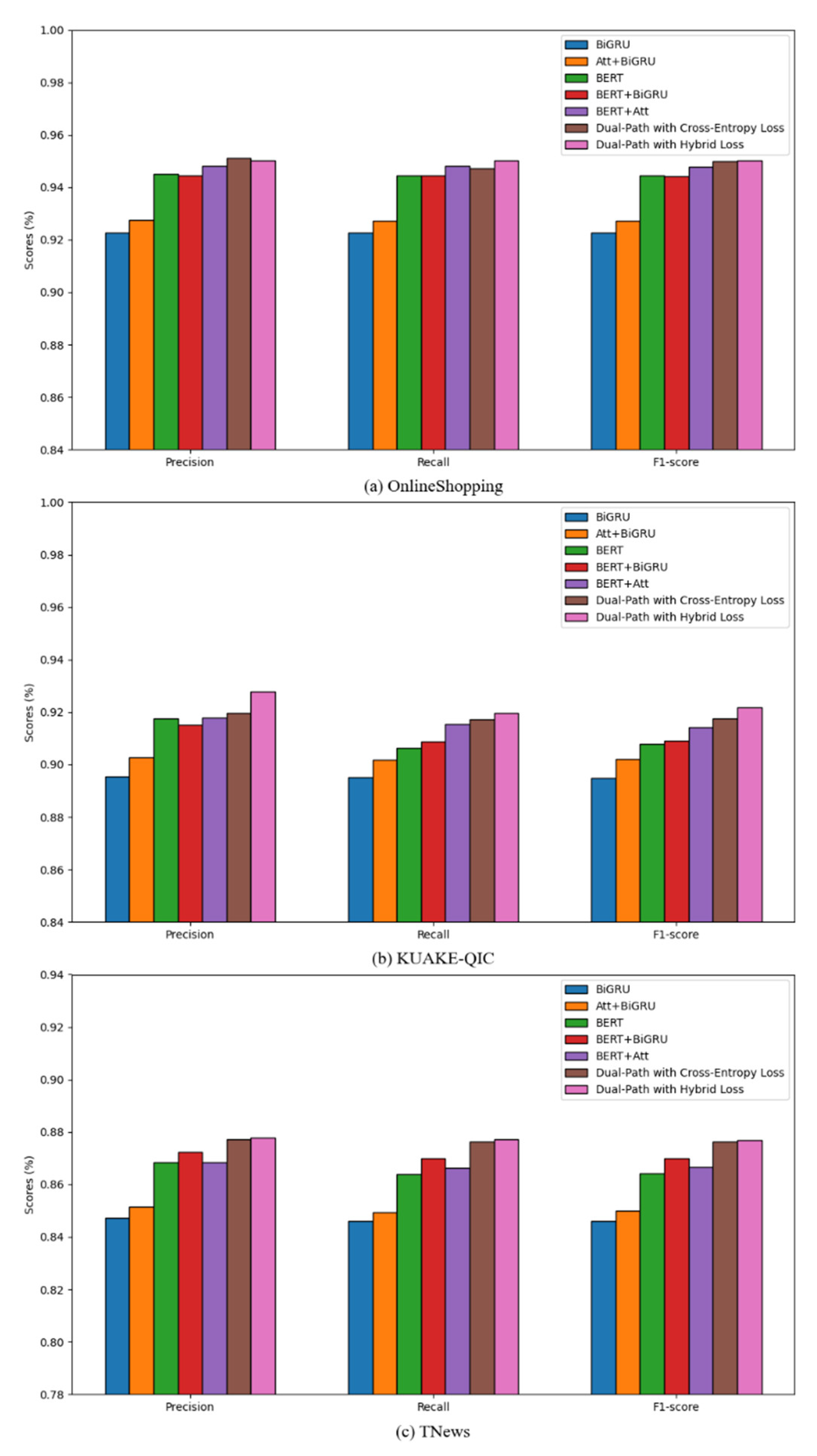

To verify the effectiveness of the proposed model for short text classification, seven models were evaluated on three short text datasets of varying scales. Model 1 is a traditional BiGRU network, and Model 2 is a BiGRU network with an added attention mechanism; Models 1 and 2 both use Word2Vec for text vectorization. Model 3 is a fine-tuned BERT model. Model 4 is a BiGRU network with a BERT embedding module, handling only sentence-level features. Model 5 is a fine-tuned BERT model with an added attention mechanism, handling only word-level features. Model 6 is a dual-path model using the original cross-entropy loss. Model 7 is the proposed dual-path classification model with a hybrid loss. These seven sets of experiments were conducted to verify the impact of the different modules on short text classification performance. The results are shown in Table 3.

To compare the models more intuitively, the three metrics of the prediction results for each model on each dataset were visualized. The resulting bar charts are shown in Figure 9.

The experimental results show that the proposed dual-path classification model with hybrid loss achieved the best classification performance on all three short text datasets. On the binary classification OnlineShopping dataset, the F1 score reached 95.02%. On the 7-class KUAKE-QIC dataset, the F1 score reached 92.17%. On the 13-class TNews dataset, the F1 score reached 87.70%.
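For readers who want to reproduce the F1 figures quoted above, the following is a minimal sketch of how per-class precision, recall, and F1 can be computed from predictions. The averaging scheme is an assumption: this section does not state whether macro or weighted F1 is reported, so `macro_f1` is shown only as one common choice, and the function names are illustrative.

```python
from collections import Counter


def per_class_f1(y_true, y_pred):
    """Return {label: (precision, recall, f1)} for every label that occurs."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted label p was wrong
            fn[t] += 1  # true label t was missed
    scores = {}
    for label in sorted(set(y_true) | set(y_pred)):
        pred_total = tp[label] + fp[label]
        true_total = tp[label] + fn[label]
        precision = tp[label] / pred_total if pred_total else 0.0
        recall = tp[label] / true_total if true_total else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        scores[label] = (precision, recall, f1)
    return scores


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 (assumed averaging scheme)."""
    scores = per_class_f1(y_true, y_pred)
    return sum(f1 for _, _, f1 in scores.values()) / len(scores)
```

Macro averaging gives every class equal weight, which matters on datasets such as the 13-class TNews, where class sizes may differ.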

Comparing Model 2 with Model 1, and Models 4, 5, 6, and 7 with Model 3, shows that the F1 scores improve significantly on all datasets. This indicates that combining multiple models outperforms a single model on short text classification tasks, demonstrating the effectiveness of the proposed integrated model.

Comparing Models 3, 4, 5, 6, and 7 with Models 1 and 2 shows that using BERT rather than Word2Vec for word vector representation significantly improves short text classification, with F1 scores improving by about 2% across all datasets. While Models 1 and 2 perform well, they fall slightly behind BERT and its derivative models on all metrics. This highlights the strong performance of the BERT pre-trained model in NLP tasks and shows that its advantages are further amplified when it is combined with other models.

The comparison between Model 2 and Model 1, as well as between Model 5 and Model 3, shows that adding an attention mechanism generally improves metrics across several datasets. The attention mechanism helps the model focus more on important information in the input sequence, increasing its sensitivity to critical context. This focus is crucial for capturing key information and enhancing performance in short text classification tasks.
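To illustrate how an attention mechanism lets a model weight important tokens more heavily, the following is a minimal sketch of dot-product attention pooling over token vectors. It is not the attention layer used in Models 2, 5, or 7, whose exact form is not given in this section; the function `attention_pool` and the `query` vector (standing in for a learned parameter) are assumptions made for illustration.

```python
import math


def attention_pool(token_vectors, query):
    """Pool token vectors into one vector using softmax attention weights.

    token_vectors: list of equal-length lists of floats (one per token).
    query: list of floats; a stand-in for a learned attention parameter.
    Returns (pooled_vector, weights).
    """
    # Relevance score of each token: dot product with the query vector.
    scores = [sum(h * q for h, q in zip(vec, query)) for vec in token_vectors]
    # Numerically stable softmax over the scores.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Weighted sum of token vectors, dimension by dimension.
    dim = len(token_vectors[0])
    pooled = [
        sum(w * vec[d] for w, vec in zip(weights, token_vectors))
        for d in range(dim)
    ]
    return pooled, weights
```

Tokens whose vectors align with the query receive weights close to 1, so they dominate the pooled representation; with a zero query, the pooling reduces to a plain average.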

Models 4 and 5, which, respectively, focus only on sentence-level features and only on word-level features, have achieved good results. However, the dual-path models 6 and 7, which consider both granularities of feature information, show approximately 1% improvement over Models 4 and 5. This indicates that the dual-path architecture, which integrates both word-level and sentence-level information, effectively enhances the performance of the classification model by simultaneously focusing on local key information and global semantic information.

Compared to Model 6, Model 7 shows slight accuracy improvements across several datasets. This indicates that the hybrid loss function, which combines cross-entropy loss and hinge loss, helps the model focus more on learning ambiguous and hard-to-distinguish samples, thereby improving its classification performance and generalization ability.
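The idea of combining cross-entropy with a hinge term can be sketched as follows. This is an illustration under stated assumptions, not the paper's exact formulation: the additive combination, the `hinge_weight` coefficient, the `margin` value, and the particular multi-class hinge form (the mean over classes of the margin violations against the true class) are all assumed here.

```python
import math


def cross_entropy(logits, target):
    """Softmax cross-entropy for one sample, computed stably."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def multiclass_hinge(logits, target, margin=1.0):
    """Mean over classes of max(0, margin - z_target + z_j) for j != target."""
    total = sum(
        max(0.0, margin - logits[target] + z)
        for j, z in enumerate(logits)
        if j != target
    )
    return total / len(logits)


def hybrid_loss(logits, target, hinge_weight=0.5, margin=1.0):
    """Cross-entropy plus a weighted hinge term (assumed combination)."""
    ce = cross_entropy(logits, target)
    hinge = multiclass_hinge(logits, target, margin)
    return ce + hinge_weight * hinge
```

In this sketch, the hinge term is zero once the true-class logit exceeds every other logit by at least `margin`, so the extra penalty falls only on samples near the decision boundary. This is one way a hybrid loss can direct training toward ambiguous samples.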

Overall, comparing Model 7 with Models 4, 5, and 6 shows that both the way the models are combined and the choice of loss function have a significant impact on the final classification results. Model 7 achieves the best classification performance. This result demonstrates that, among the tested combination strategies involving the BERT model, attention mechanism, and BiGRU network, the proposed dual-path model with hybrid loss performs best.

Additionally, on the KUAKE-QIC dataset, the classification results of the proposed model are compared with the following widely used advanced models:

FastText [41]: A fast and simple text classification method with a simple model structure and fast training speed.

TextCNN [3]: A model that uses CNN for NLP tasks, efficiently extracting important features.

LSTM [7]: An improved version of RNN that addresses the issues of gradient vanishing and explosion during long sequence training.

GRU [8]: A simplified version of LSTM, which achieves comparable modeling performance with fewer parameters and a simpler structure.

The experimental results are shown in Table 4.

These experimental results indicate that, compared to other models, the proposed dual-path text classification model with hybrid loss achieves the best recognition performance for short texts. This outcome highlights the importance of fully leveraging pre-trained models, combining different models to capture information at various granularities, and using a hybrid loss function that pays more attention to sample boundaries when handling short text classification tasks.

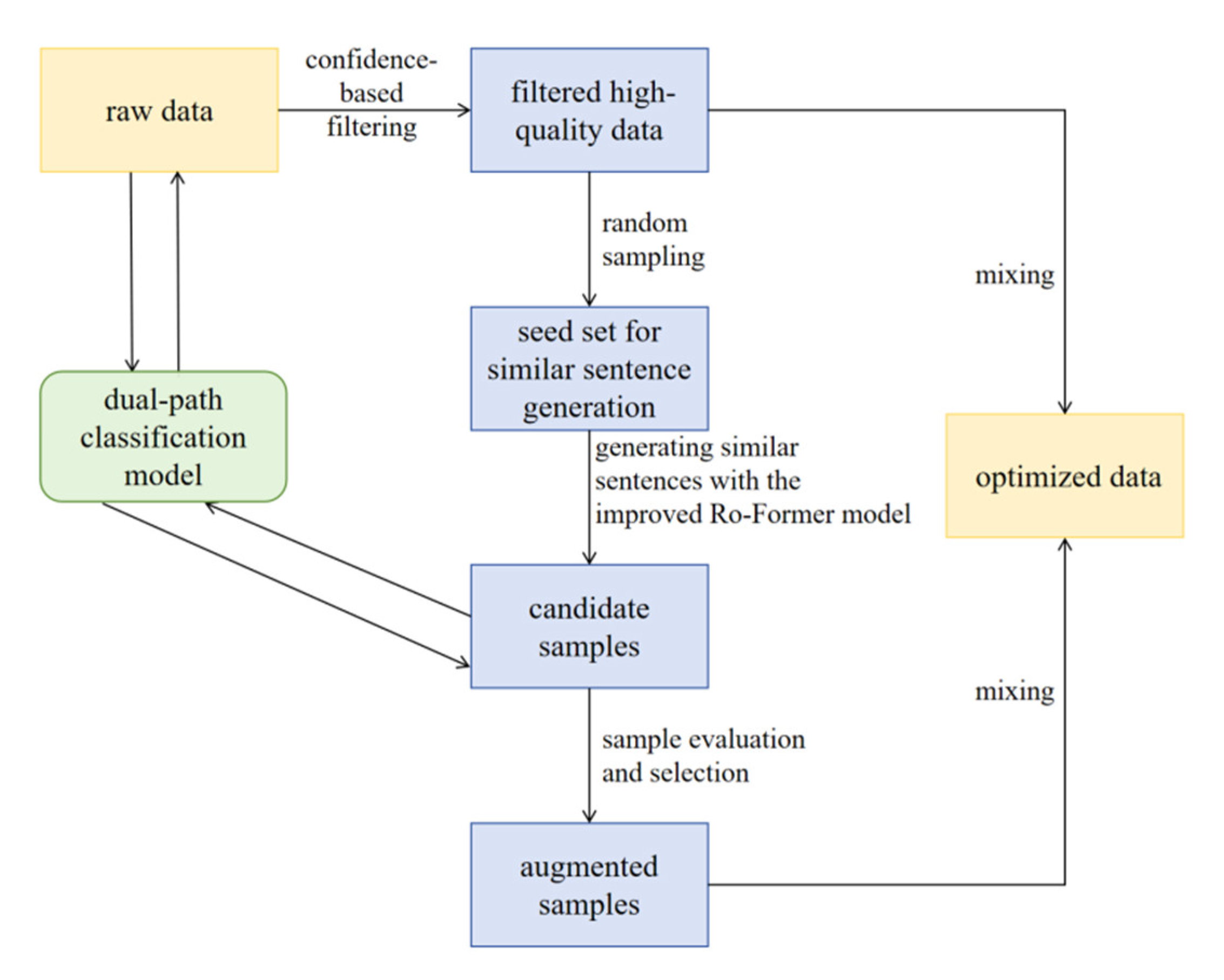

5.4.2. Effectiveness Analysis of Data Optimization Methods

To verify the effectiveness of the data optimization methods proposed in this paper, the three short text datasets introduced earlier were optimized, and experiments were conducted using the proposed dual-path classification model. The confidence distributions of the three datasets were obtained using the confidence calculation method presented in Section 4. In the OnlineShopping dataset, 0.35% of the samples had a confidence below 0.90. In the KUAKE-QIC dataset, 0.40% of the samples had a confidence below 0.90. In the TNews dataset, 3.72% of the samples had a confidence below 0.90.

Since the vast majority of samples in each dataset have confidence values in the 0.90–1.0 range, this section compares the confidence distributions of the datasets within that interval in detail. The number of samples and the corresponding proportions within this confidence range are shown in Table 5.

The confidence filtering threshold for the OnlineShopping dataset was set to 0.95, retaining a total of 24,021 samples. From each category in this dataset, 30% of the samples were randomly sampled as the text augmentation seed set. For the KUAKE-QIC dataset, the confidence filtering threshold was set to 0.95, retaining a total of 4,664 samples. All retained samples (100%) in each category were used as the text augmentation seed set. For the TNews dataset, the confidence filtering threshold was set to 0.90, retaining a total of 96,280 samples. From each category, 20% of the samples were randomly sampled as the text augmentation seed set.
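The filtering and seed-selection steps described above can be sketched as follows. This is a minimal illustration, assuming each sample is a dictionary with hypothetical `label` and `confidence` keys, that the confidence values have already been computed by the method in Section 4, and that a sample exactly at the threshold is kept (the text does not say whether the comparison is inclusive).

```python
import random
from collections import defaultdict


def filter_by_confidence(samples, threshold):
    """Keep samples whose confidence is at least `threshold` (assumed inclusive)."""
    return [s for s in samples if s["confidence"] >= threshold]


def sample_seed_set(samples, fraction, seed=0):
    """Randomly draw `fraction` of the samples from each category."""
    rng = random.Random(seed)  # fixed seed so the draw is reproducible
    by_label = defaultdict(list)
    for s in samples:
        by_label[s["label"]].append(s)
    seeds = []
    for label in sorted(by_label):
        group = by_label[label]
        k = round(len(group) * fraction)  # per-category seed count
        seeds.extend(rng.sample(group, k))
    return seeds
```

For example, the KUAKE-QIC setting corresponds to `sample_seed_set(filter_by_confidence(data, 0.95), 1.0)`, and the TNews setting to a threshold of 0.90 with a fraction of 0.2. Sampling per category rather than over the whole dataset keeps the class proportions of the seed set close to those of the filtered data.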

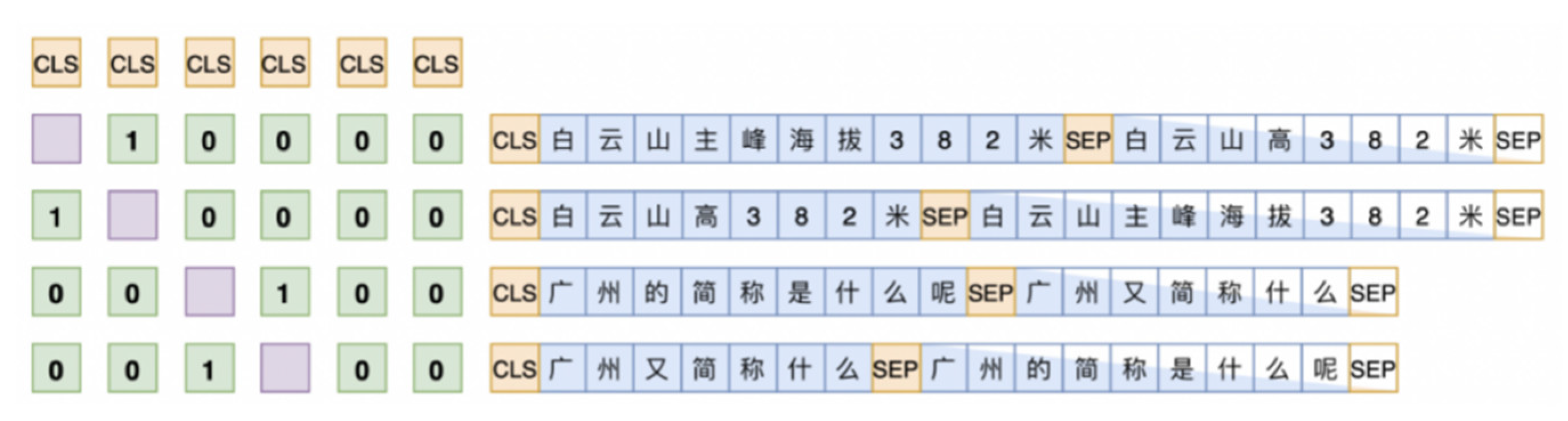

For text data augmentation, the chinese_roformer-sim-char_L-12_H-768_A-12 model was used for similar sentence generation, with Create_Num set to 1, meaning that one similar sentence was generated for each input sentence, and the sentence with the highest similarity was selected. Taking the KUAKE-QIC dataset as an example, a sample of RoFormer-Sim augmented data is shown in Table 6.

After generating similar sentences using the improved RoFormer-Sim model, the scores of the candidate samples were calculated based on feedback from the classification model trained on the confidence-filtered data. The top 50% of the samples by score were selected as the final augmented samples. After optimization, the final training set sizes were as follows: the OnlineShopping dataset had 27,624 samples, the KUAKE-QIC dataset had 6,996 samples, and the TNews dataset had 105,908 samples. The final experimental results are shown in Table 7.
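The selection of the top 50% of candidates by score can be sketched as follows. The scoring function itself is defined elsewhere in the paper and is treated here as a given list of numbers; `select_top_fraction` is a hypothetical helper name, and ranking all candidates together rather than per category is an assumption.

```python
def select_top_fraction(candidates, scores, fraction=0.5):
    """Keep the highest-scoring `fraction` of candidates, in original order."""
    if len(candidates) != len(scores):
        raise ValueError("candidates and scores must have the same length")
    k = int(len(candidates) * fraction)  # number of candidates to keep
    # Rank candidate indices by score, highest first (ties keep input order).
    ranked = sorted(range(len(candidates)), key=lambda i: scores[i], reverse=True)
    keep = sorted(ranked[:k])  # restore original ordering of the kept items
    return [candidates[i] for i in keep]
```

Under this reading, the reported sizes are consistent with the settings above. For KUAKE-QIC, the 4,664 seed sentences yield 4,664 candidates with Create_Num set to 1, of which 2,332 are kept, giving 4,664 + 2,332 = 6,996 training samples.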

The experimental results show that the models trained on confidence-filtered datasets achieve better performance metrics than those trained on the original datasets, and that applying text data augmentation on top of confidence filtering improves the metrics further. This indicates that confidence filtering effectively selects correctly labeled and semantically clear sentences from the original data, while data augmentation compensates for the reduction in training samples caused by confidence filtering, thereby enhancing classification performance. The experiments in this paper demonstrate that the combination of these two methods improves data quality to some extent and provides new insights for other researchers addressing similar issues.