Integrating Process Mining and Machine Learning for Surgical Workflow Optimization: A Real-World Analysis Using the MOVER EHR Dataset

Abstract

1. Introduction

- Identify delays in the operating room process, specifically between room entry and exit times (as proxies for surgery start and end).

- Detect unusual or inefficient patterns during off-hours or non-standard workflows.

- Characterize common and uncommon process variants in surgical patient pathways.

- Cluster patients to identify unusual conditions.

- Flag outliers in surgery and recovery durations.

- A refined question-oriented framework tailored to operating room workflows using the L* life cycle model.

- A reproducible pipeline for event log creation and analysis using the MOVER database.

- Identification of process inefficiencies and potential improvement points in operating room workflows.

- Demonstration of basic premortem analysis capabilities based on real-time data simulations.

2. Related Work

3. Materials and Methods

3.1. Data Description

- Patients (cases): 39,685.

- Events: 402,647.

- Start activities:
  - ‘Hospital Admission’: 39,673.
  - ‘Surgery Passed’: 3.
  - ‘Operating Room Enter’: 6.
  - ‘Anesthesia Start’: 1.

- End activities:
  - ‘Hospital Discharge’: 39,573.
  - ‘Anesthesia Stop’: 22.
  - ‘Operating Room Exit’: 88.

- Trace variant discovery.

- Inter-activity time calculations.

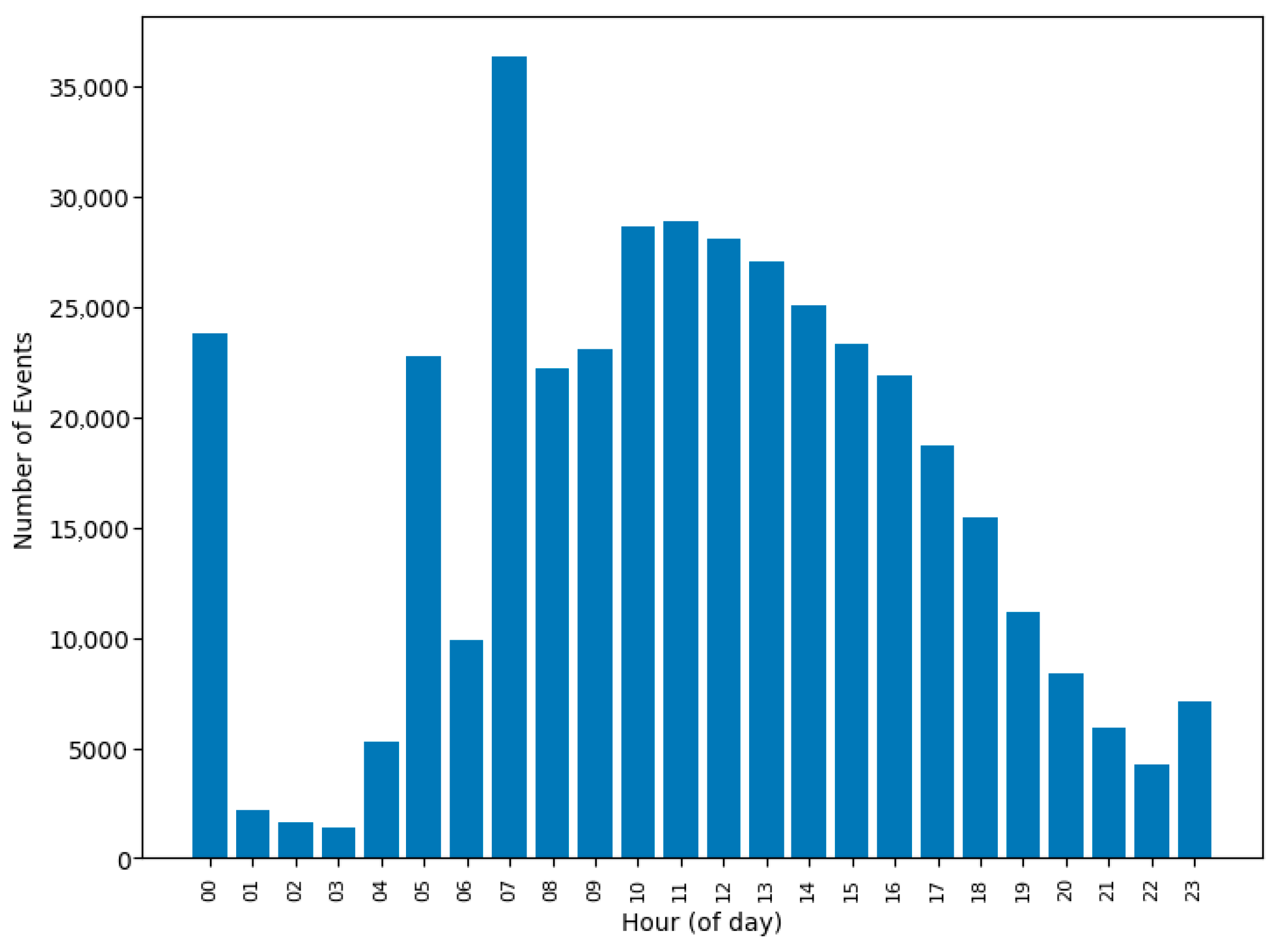

- Workflow visualization at the hourly scale.
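The summary statistics above (start and end activities) and the three analysis steps listed (trace variant discovery, inter-activity times, hourly workflow counts) reduce to simple group-by operations on an event log. The sketch below illustrates them with the Python standard library only; it is not the authors' pipeline, which relies on pandas and PM4Py [23]. The `EVENTS` rows follow the Case_Id/Activity/Timestamp layout of the event-log excerpt in this article. The second case (`0000e45237d1fc96`) is written out from the preprocessing example table as an assumption. The helper names `build_traces` and `summarize` are hypothetical.

```python
from collections import Counter, defaultdict
from datetime import datetime, timedelta

FMT = "%d.%m.%Y %H:%M"  # timestamp format used in the article's tables

# (Case_Id, Activity, Timestamp) rows; values taken from the article's example tables.
EVENTS = [
    ("0b1b3c15740e98de", "Hospital Admission", "15.08.2021 14:50"),
    ("0b1b3c15740e98de", "Surgery Planned", "24.08.2021 00:00"),
    ("0b1b3c15740e98de", "Operating Room Enter", "24.08.2021 15:13"),
    ("0b1b3c15740e98de", "Anesthesia Start", "24.08.2021 15:13"),
    ("0b1b3c15740e98de", "Operating Room Exit", "24.08.2021 17:10"),
    ("0b1b3c15740e98de", "Anesthesia Stop", "24.08.2021 17:15"),
    ("0b1b3c15740e98de", "Hospital Discharge", "29.08.2021 17:02"),
    ("0b1b3c15740e98de", "Hospital Admission", "10.01.2022 04:58"),
    ("0b1b3c15740e98de", "Surgery Planned", "10.01.2022 04:59"),
    ("0b1b3c15740e98de", "Surgery Canceled", "10.01.2022 07:24"),
    ("0b1b3c15740e98de", "Hospital Discharge", "10.01.2022 07:25"),
    ("0000e45237d1fc96", "Hospital Admission", "12.02.2021 07:23"),
    ("0000e45237d1fc96", "Surgery Planned", "12.02.2021 07:24"),
    ("0000e45237d1fc96", "Surgery Canceled", "12.02.2021 23:59"),
    ("0000e45237d1fc96", "Hospital Discharge", "13.02.2021 17:05"),
]


def build_traces(events):
    """Group events by case and order each case chronologically.

    list.sort is stable, so events sharing a timestamp keep their logged order.
    """
    traces = defaultdict(list)
    for case_id, activity, ts in events:
        traces[case_id].append((datetime.strptime(ts, FMT), activity))
    for trace in traces.values():
        trace.sort(key=lambda event: event[0])
    return dict(traces)


def summarize(traces):
    starts = Counter(trace[0][1] for trace in traces.values())
    ends = Counter(trace[-1][1] for trace in traces.values())
    # A variant is the ordered sequence of activity names in one trace.
    variants = Counter(tuple(act for _, act in trace) for trace in traces.values())
    # Inter-activity times: mean elapsed time per directly-follows pair.
    gaps = defaultdict(list)
    for trace in traces.values():
        for (t1, a1), (t2, a2) in zip(trace, trace[1:]):
            gaps[(a1, a2)].append(t2 - t1)
    mean_gaps = {pair: sum(d, timedelta()) / len(d) for pair, d in gaps.items()}
    # Hourly workload: number of events logged in each hour of the day.
    hourly = Counter(ts.hour for trace in traces.values() for ts, _ in trace)
    return starts, ends, variants, mean_gaps, hourly


starts, ends, variants, mean_gaps, hourly = summarize(build_traces(EVENTS))
print(starts.most_common())
print(hourly.most_common(3))
```

Treating a whole patient as one case, as the case counts above imply, means repeated admissions of the same patient appear as a single, longer trace. That is why multi-surgery patients can form their own variants.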

3.2. Methodology

- Q1. What is the hourly distribution of operating room activities?

- Q2. Where do deviations from expected process sequences occur?

- Q3. Which process variants are most prevalent, and which ones exhibit anomalies?

- Q4. What are the typical delays between operating room entry and exit, and where do bottlenecks emerge?

- Q5. Are there any abnormal event sequences (e.g., discharge before surgery)?

- Q6. How do delays influence recovery time or length of stay?

3.3. Stage 1: Data Preprocessing

- Hospital admission must precede hospital discharge.

- Operating Room Entry must precede Operating Room Exit.

- Anesthesia Start must precede Anesthesia Stop.

- Surgery planning and cancelation must occur within the admission–discharge window.

- Algorithm 1: Surgery validation—identified valid surgeries using intraoperative timestamps and corrected SURGERY_DATE inconsistencies (e.g., dates defaulted to 00:00 that incorrectly preceded admission).

Algorithm 1. Determine the Surgery Type for Each Patient

Result: the type of surgery for each patient in the DataFrame.

Input: a DataFrame row containing the columns referenced below.

- Function surgery_type(row):
  - If all of {IN_OR_DTTM, OUT_OR_DTTM, AN_START_DATETIME, AN_STOP_DATETIME} are NULL (there is no surgery):
    - if (HOSP_ADMSN_TIME − SURGERY_DATE) > 1 day, return 'Surgery Date Passed';
    - otherwise, return 'Surgery Cancelled'.
  - Otherwise, return 'Surgery Performed'.
- For each row in the DataFrame, call surgery_type(row) and store the result in a new column SURGERY_TYPE.
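Algorithm 1 can be transcribed into runnable Python as a sketch. Each record is assumed to be a plain `dict` with `datetime` values, and `None` stands in for NULL. With a pandas DataFrame, the same function could be applied row-wise via `df.apply(surgery_type, axis=1)`, with `pd.isna` replacing the `is None` test. The example rows reuse values from the article's example tables. The helper `ts` is hypothetical.

```python
from datetime import datetime, timedelta

# Intraoperative timestamps whose joint absence means no surgery took place.
INTRAOP_COLUMNS = ("IN_OR_DTTM", "OUT_OR_DTTM", "AN_START_DATETIME", "AN_STOP_DATETIME")


def surgery_type(row: dict) -> str:
    """Classify one surgery record following the logic of Algorithm 1."""
    if all(row.get(col) is None for col in INTRAOP_COLUMNS):
        # No intraoperative record: either the surgery date had already passed
        # more than a day before admission, or the surgery was cancelled.
        if row["HOSP_ADMSN_TIME"] - row["SURGERY_DATE"] > timedelta(days=1):
            return "Surgery Date Passed"
        return "Surgery Cancelled"
    return "Surgery Performed"


def ts(text: str) -> datetime:
    """Parse the DD.MM.YYYY HH:MM format used in the article's tables."""
    return datetime.strptime(text, "%d.%m.%Y %H:%M")


rows = [
    {"HOSP_ADMSN_TIME": ts("15.08.2021 14:50"), "SURGERY_DATE": ts("24.08.2021 00:00"),
     "IN_OR_DTTM": ts("24.08.2021 15:13"), "OUT_OR_DTTM": ts("24.08.2021 17:10"),
     "AN_START_DATETIME": ts("24.08.2021 15:13"), "AN_STOP_DATETIME": ts("24.08.2021 17:15")},
    {"HOSP_ADMSN_TIME": ts("10.01.2022 04:58"), "SURGERY_DATE": ts("10.01.2022 00:00")},
    {"HOSP_ADMSN_TIME": ts("15.06.2022 04:46"), "SURGERY_DATE": ts("30.05.2022 00:00")},
]
for row in rows:
    row["SURGERY_TYPE"] = surgery_type(row)  # new column, as in Algorithm 1
print([row["SURGERY_TYPE"] for row in rows])
```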

- Algorithm 2: Planning/cancelation adjustment—aligned planning and cancelation timestamps with the admission–discharge interval, and reclassified cases lacking intraoperative timestamps as canceled.

Algorithm 2. Determine Surgery Plan and Cancellation Times

Result: the surgery plan and cancellation times for each patient in the DataFrame.

Input: a DataFrame row containing the columns referenced below.

- Function times(row):
  - If SURGERY_TYPE == 'Surgery Date Passed': plan_time = SURGERY_DATE; cancel_time = SURGERY_DATE.
  - Else if SURGERY_TYPE == 'Surgery Performed': cancel_time = NaT; if SURGERY_DATE ≤ HOSP_ADMSN_TIME, then plan_time = HOSP_ADMSN_TIME + 1 min, else plan_time = SURGERY_DATE.
  - Else if SURGERY_TYPE == 'Surgery Cancelled':
    - if SURGERY_DATE + 23 h 59 min ≥ HOSP_DISCH_TIME, then plan_time = HOSP_ADMSN_TIME + 1 min and cancel_time = HOSP_DISCH_TIME − 1 min;
    - else plan_time = SURGERY_DATE and cancel_time = SURGERY_DATE + 23 h 59 min.
  - Return plan_time, cancel_time.
- For each row in the DataFrame, call times(row) and store the results in new columns SRG_PLN_TIME and SRG_CNL_TIME.
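Algorithm 2 can likewise be sketched in Python. This assumes `dict` rows with `datetime` values and uses `None` in place of pandas `NaT`. It follows the pseudocode branch by branch. Returning `(None, None)` for an unrecognized `SURGERY_TYPE` is an added assumption, because the pseudocode leaves that case undefined. The helper `ts` is hypothetical.

```python
from datetime import datetime, timedelta

ONE_MINUTE = timedelta(minutes=1)
END_OF_DAY = timedelta(hours=23, minutes=59)  # SURGERY_DATE is stored at 00:00


def times(row: dict):
    """Return (plan_time, cancel_time) for one record, following Algorithm 2."""
    plan_time = cancel_time = None  # None plays the role of NaT
    kind = row["SURGERY_TYPE"]
    if kind == "Surgery Date Passed":
        plan_time = row["SURGERY_DATE"]
        cancel_time = row["SURGERY_DATE"]
    elif kind == "Surgery Performed":
        # No cancellation; a plan dated on or before admission is moved to
        # one minute after admission so that it falls inside the stay.
        if row["SURGERY_DATE"] <= row["HOSP_ADMSN_TIME"]:
            plan_time = row["HOSP_ADMSN_TIME"] + ONE_MINUTE
        else:
            plan_time = row["SURGERY_DATE"]
    elif kind == "Surgery Cancelled":
        if row["SURGERY_DATE"] + END_OF_DAY >= row["HOSP_DISCH_TIME"]:
            # Discharge no later than the end of the surgery day: place plan
            # and cancellation just inside the admission-discharge window.
            plan_time = row["HOSP_ADMSN_TIME"] + ONE_MINUTE
            cancel_time = row["HOSP_DISCH_TIME"] - ONE_MINUTE
        else:
            plan_time = row["SURGERY_DATE"]
            cancel_time = row["SURGERY_DATE"] + END_OF_DAY
    return plan_time, cancel_time


def ts(text: str) -> datetime:
    return datetime.strptime(text, "%d.%m.%Y %H:%M")


rows = [
    {"SURGERY_TYPE": "Surgery Cancelled", "HOSP_ADMSN_TIME": ts("10.01.2022 04:58"),
     "HOSP_DISCH_TIME": ts("10.01.2022 07:25"), "SURGERY_DATE": ts("10.01.2022 00:00")},
    {"SURGERY_TYPE": "Surgery Performed", "HOSP_ADMSN_TIME": ts("27.01.2022 05:25"),
     "HOSP_DISCH_TIME": ts("31.01.2022 13:10"), "SURGERY_DATE": ts("27.01.2022 00:00")},
]
for row in rows:
    row["SRG_PLN_TIME"], row["SRG_CNL_TIME"] = times(row)
```

The first example row yields 04:59 and 07:24, matching the planned and cancellation times shown for the canceled 10.01.2022 admission in the preprocessing example table.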

4. Results

4.1. Control-Flow Model Discovery

4.2. Integrated Process Model

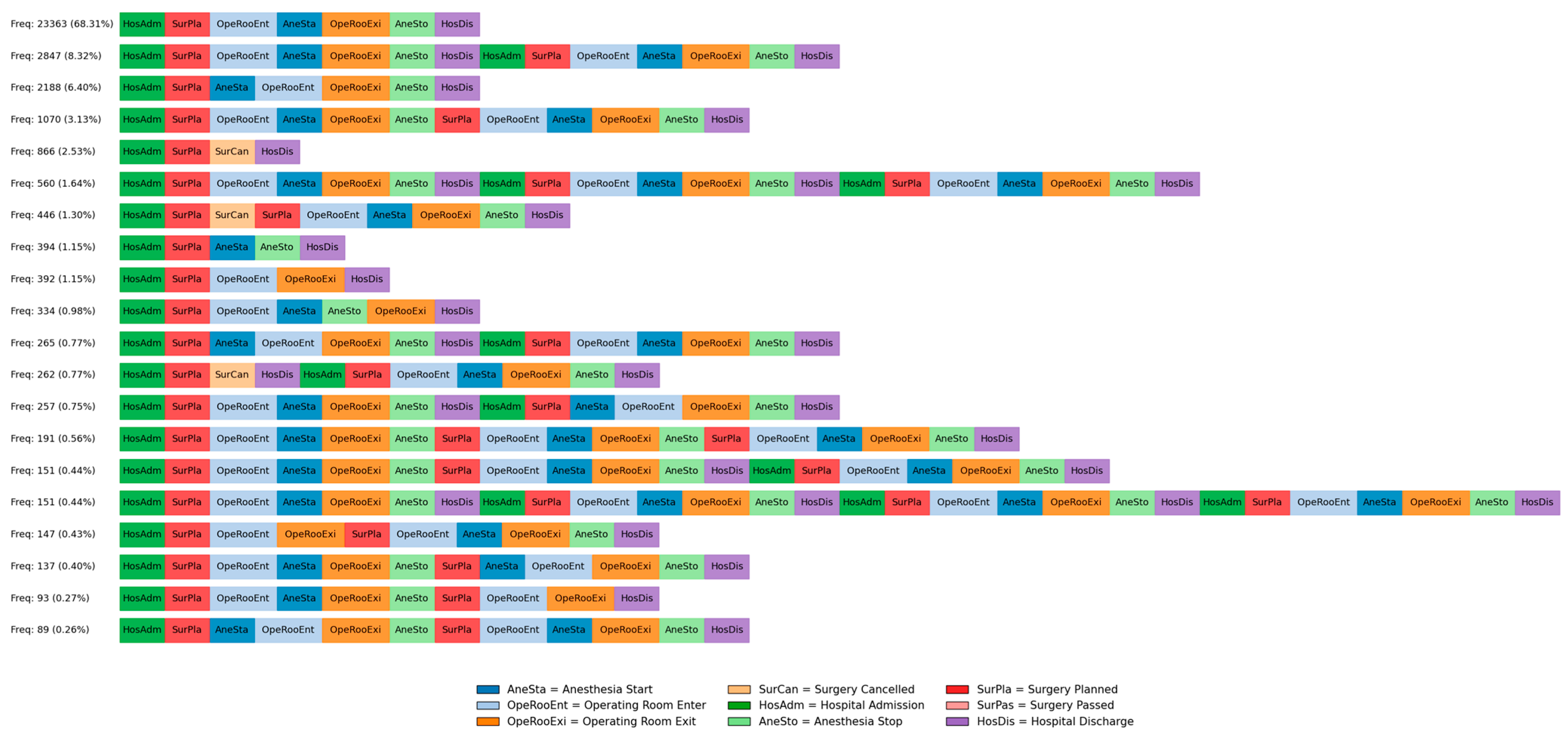

- The top three variants accounted for 87.48% of all patient cases, suggesting a high degree of standardization in core surgical workflows.

- A total of 4477 cases (2847 + 1070 + 560) involved patients who underwent multiple surgeries, which may reflect follow-up interventions or complications.

- Variants with surgery cancelations represent a small proportion, highlighting exceptions where planned procedures were not performed.

- Several low-frequency variants (<1%) contain repeated anesthesia start/stop events or multiple operating room entries, suggesting either complex surgical procedures or documentation inconsistencies.

- Loops involving hospital admission, surgery planning, and re-admission indicate cases of postponed or rescheduled surgeries.

- Despite the presence of deviations, the overall process remains highly structured, with most patients following a well-defined pathway from admission to discharge.
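Both the top-three coverage figure and the <1% threshold for rare variants are simple frequency computations over traces. A minimal sketch follows. It assumes each trace is already an ordered list of activity names. The toy traces are hypothetical and not MOVER data, and the function names are illustrative.

```python
from collections import Counter


def variant_coverage(traces, k=3):
    """Return the k most frequent variants and the percentage of cases they cover."""
    counts = Counter(tuple(trace) for trace in traces)
    top = counts.most_common(k)
    share = 100.0 * sum(n for _, n in top) / sum(counts.values())
    return top, share


def rare_variants(traces, threshold=0.01):
    """Variants whose relative frequency is below `threshold` (0.01 = 1%)."""
    counts = Counter(tuple(trace) for trace in traces)
    total = sum(counts.values())
    return {variant: n for variant, n in counts.items() if n / total < threshold}


# Hypothetical toy log: 10 cases over 4 variants
# (A = admission, P = planned, OR = operating room, C = canceled, D = discharge).
toy = ([["A", "P", "OR", "D"]] * 5 + [["A", "P", "C", "D"]] * 3
       + [["A", "OR", "OR", "D"]] + [["A", "P", "D", "A", "OR", "D"]])
top, share = variant_coverage(toy, k=3)
print(f"top-3 coverage: {share:.1f}%")
```

On a real log, the same counts could also be obtained with PM4Py [23]. Computing them directly makes the definition of "variant" (the exact activity sequence) explicit.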

4.3. Operational Support

- Demographics and patient status:
  - Age, Sex, ASA Physical Status Classification, ICU Admission Flag.

- Operative characteristics:
  - Primary Anesthesia Type, Surgery Category (e.g., General, Orthopedic, Cardiothoracic, Neurosurgical).

- Workflow-derived time intervals:
  - Surgery Duration (min): Operating room entry → exit.
  - Anesthesia Duration (min): Anesthesia start → stop.
  - Delay from Scheduled Time (min): Deviation between the scheduled surgery time and OR entry.
  - Anesthesia Induction Lag (min): OR entry → anesthesia start.
  - Recovery Duration (min): OR exit → hospital discharge.
  - Total Hospital Stay (min): Admission → discharge.
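Each workflow-derived interval is a difference between two timestamps. Here is a sketch under stated assumptions. The scheduled surgery time is taken to be `SRG_PLN_TIME`, as produced by Algorithm 2. Delays are signed, so a positive value means OR entry came after the scheduled time. A missing timestamp yields `None`. The function and key names are hypothetical. The example record uses the first surgery in the article's event-log excerpt.

```python
from datetime import datetime
from typing import Optional


def minutes_between(start: Optional[datetime], end: Optional[datetime]) -> Optional[float]:
    """Signed elapsed minutes from start to end; None if either is missing."""
    if start is None or end is None:
        return None
    return (end - start).total_seconds() / 60.0


def workflow_features(row: dict) -> dict:
    return {
        "surgery_duration_min": minutes_between(row["IN_OR_DTTM"], row["OUT_OR_DTTM"]),
        "anesthesia_duration_min": minutes_between(row["AN_START_DATETIME"], row["AN_STOP_DATETIME"]),
        # Assumption: SRG_PLN_TIME represents the scheduled surgery time.
        "delay_from_schedule_min": minutes_between(row["SRG_PLN_TIME"], row["IN_OR_DTTM"]),
        "induction_lag_min": minutes_between(row["IN_OR_DTTM"], row["AN_START_DATETIME"]),
        "recovery_duration_min": minutes_between(row["OUT_OR_DTTM"], row["HOSP_DISCH_TIME"]),
        "total_stay_min": minutes_between(row["HOSP_ADMSN_TIME"], row["HOSP_DISCH_TIME"]),
    }


def ts(text: str) -> datetime:
    return datetime.strptime(text, "%d.%m.%Y %H:%M")


record = {
    "HOSP_ADMSN_TIME": ts("15.08.2021 14:50"), "HOSP_DISCH_TIME": ts("29.08.2021 17:02"),
    "SRG_PLN_TIME": ts("24.08.2021 00:00"),
    "IN_OR_DTTM": ts("24.08.2021 15:13"), "AN_START_DATETIME": ts("24.08.2021 15:13"),
    "OUT_OR_DTTM": ts("24.08.2021 17:10"), "AN_STOP_DATETIME": ts("24.08.2021 17:15"),
}
features = workflow_features(record)
print(features)
```

This record's planned time sits at the 00:00 default of SURGERY_DATE, so its 913-minute "delay" mostly measures time since midnight. The choice of reference time for the scheduled slot therefore matters when interpreting this feature.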

4.4. Predictive Modeling and Clustering

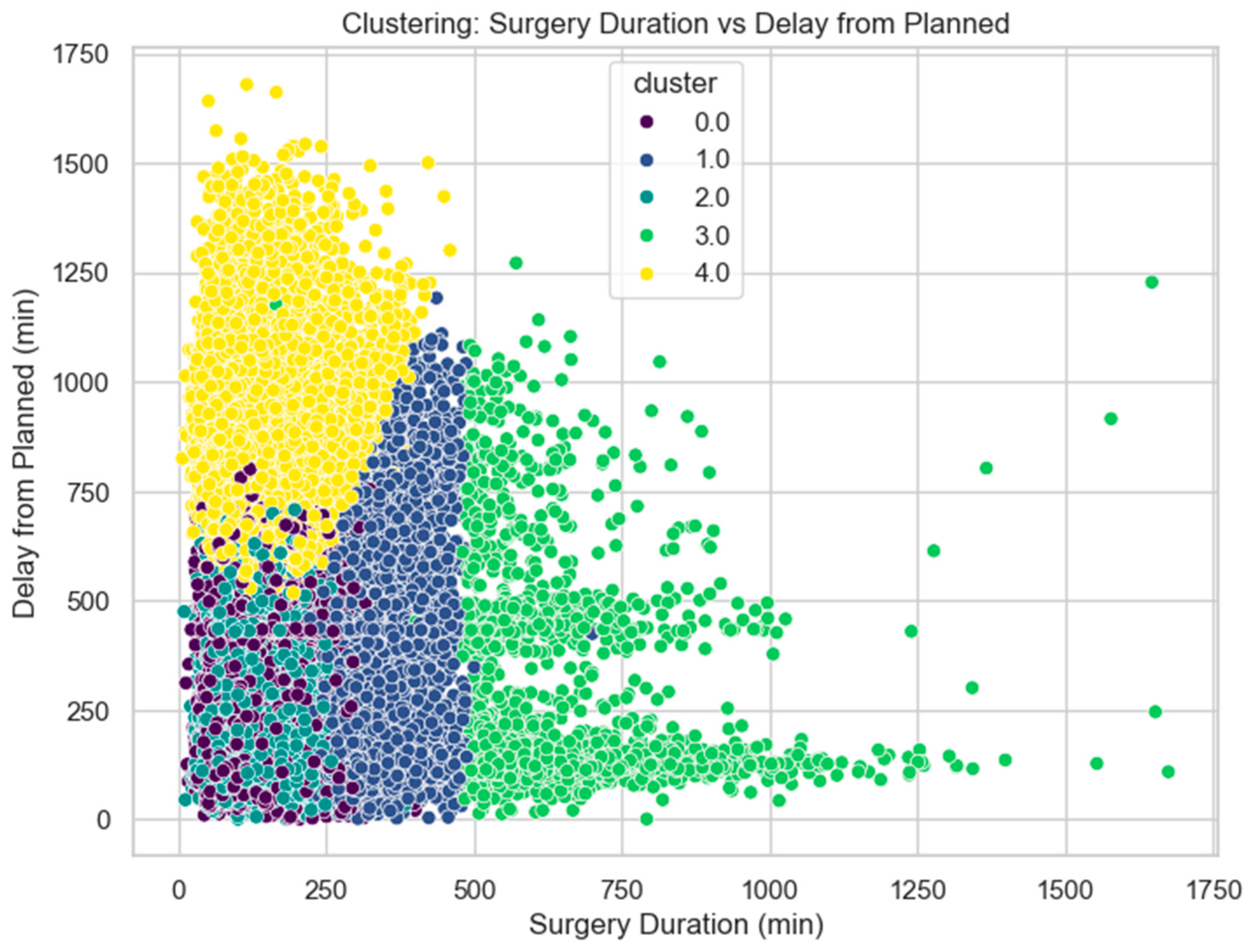

- Cluster 0.0 (purple): Concentrated in the lower left corner with short surgery durations and relatively small delays.

- Cluster 1.0 (blue): Also in the lower left, but with slightly longer durations or delays than Cluster 0.0.

- Cluster 2.0 (teal): Forms a band with moderate surgery durations and variable delays.

- Cluster 3.0 (light green): Spreads across longer surgery durations with varying delays.

- Cluster 4.0 (yellow): Concentrated in the upper left, showing short to moderate surgery durations but long delays.
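The five groups above are described in the plane of surgery duration versus delay. This section does not name the clustering algorithm. The sketch below therefore assumes plain k-means (Lloyd's algorithm) on z-score-standardized features, written with the standard library only. In practice, scikit-learn's `KMeans` would be the usual choice. The points are hypothetical, and a two-cluster example is used for readability.

```python
import math
import random
from statistics import mean, pstdev


def standardize(points):
    """Z-score each coordinate so that duration and delay weigh comparably."""
    cols = list(zip(*points))
    stats = [(mean(c), pstdev(c) or 1.0) for c in cols]  # guard constant columns
    return [tuple((v - m) / s for v, (m, s) in zip(p, stats)) for p in points]


def kmeans(points, k, max_iter=100, seed=0):
    """Lloyd's algorithm: alternate nearest-centroid assignment and mean update."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialise from k randomly chosen points
    labels = None
    for _ in range(max_iter):
        new_labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        if new_labels == labels:
            break  # assignments unchanged: converged
        labels = new_labels
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the previous centroid if a cluster empties
                centroids[c] = tuple(sum(x) / len(members) for x in zip(*members))
    return labels, centroids


# Hypothetical (surgery duration, delay) pairs in minutes.
cases = [(45, 10), (50, 15), (40, 5), (60, 240), (55, 260), (65, 250)]
labels, _ = kmeans(standardize(cases), k=2)
print(labels)
```

Standardizing first matters here, because delays span hundreds of minutes while durations in this toy set vary by tens. Without scaling, the delay axis would dominate the Euclidean distance.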

- Deviation from planned surgery time, which was strongly associated with extended downstream delays.

- ICU admission flag, where the absence of ICU admission correlated with shorter recovery, while ICU entry indicated increased clinical risk.

- Surgery and anesthesia durations, which reflect procedure complexity and recovery burden.

- Anesthesia induction lag, potentially capturing operational inefficiencies.

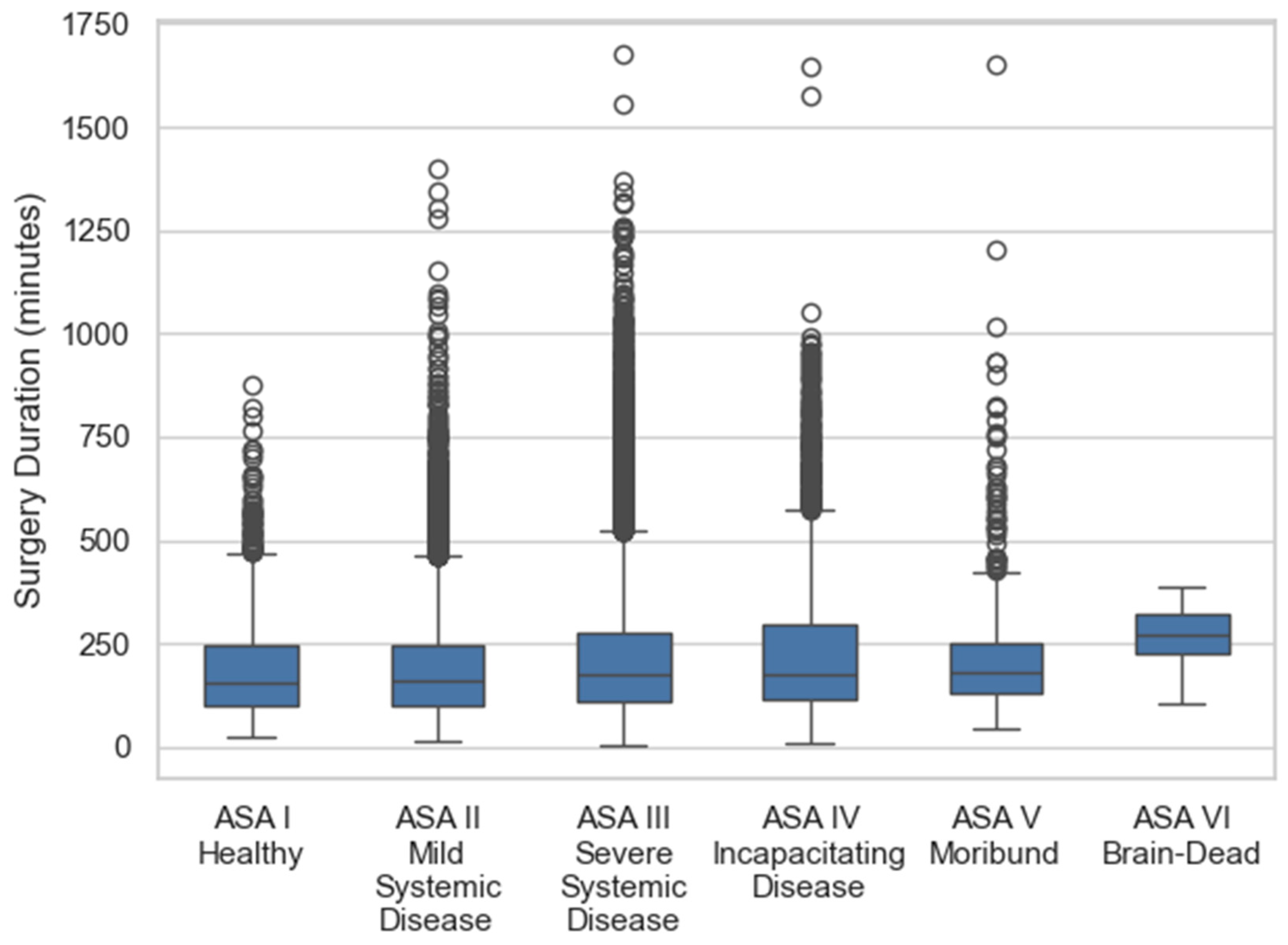

- ASA rating and surgical category are both significant indicators of patient risk and surgical scope.

- Operational bottlenecks—extended delays may indicate OR overcrowding or cascading schedule overruns, which create downstream strain on recovery units.

- Patient complexity—delays may also be linked to high-risk patients requiring additional preoperative stabilization. Consistent with this interpretation, higher ASA categories were associated with both greater delays and longer recovery durations.
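The association between delay and recovery described here can be quantified with a rank correlation. This section does not state which coefficient was used. The sketch therefore assumes Spearman's ρ, computed as the Pearson correlation of average ranks, which tolerates the skewed, outlier-prone distributions typical of durations. It uses the standard library only, and the data are hypothetical. `scipy.stats.spearmanr` provides the same coefficient together with a p-value.

```python
import math


def average_ranks(values):
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for m in range(i, j + 1):
            ranks[order[m]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman's rho: Pearson correlation of the two rank vectors."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical per-case values in minutes.
delay = [5, 30, 12, 90, 45, 200]
recovery = [1500, 2900, 2100, 7000, 2600, 9800]
print(round(spearman(delay, recovery), 3))
```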

5. Discussion

- Statistical testing strengthens the clinical interpretation of our subgroup analyses. While Figure 7 and Figure 8 visually suggested increasing surgical and recovery durations with higher ASA classification and advanced age, the formal Kruskal–Wallis and Mann–Whitney U tests confirmed these associations to be statistically significant. In particular, the differences between higher-risk ASA patients (ASA 4–6) and lower-risk categories, as well as between older (≥60 years) and younger age groups, remained significant after Bonferroni correction for multiple testing. These results reinforce the validity of ASA and age as critical covariates influencing perioperative resource utilization.

- Oncological and plastic reconstructive procedures showed wider variance and more complex, variable workflow patterns, indicating operational flexibility or inconsistency, whereas ophthalmological surgery cases followed more predictable sequences.

- Surgery delays were more common in complex categories and among patients with ASA IV, hinting at resource allocation challenges.

- Male patients exhibited marginally longer durations from hospital admission to surgery, potentially due to differing clinical pathways.

- Female patients had slightly shorter median surgery durations and less delay from planned time, though not statistically significant.

- The use of machine learning—particularly the Random Forest classifier—provides an interpretable and actionable tool for anticipating prolonged postoperative recovery.
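The Mann–Whitney U test and Bonferroni correction named in the first point above can be sketched from first principles. U is computed from average ranks. The two-sided p-value uses the large-sample normal approximation without tie or continuity correction, so it is only indicative for small or heavily tied samples. `scipy.stats.mannwhitneyu` and `scipy.stats.kruskal` implement these tests, including the corrections. The sample values are hypothetical.

```python
import math
from statistics import NormalDist


def average_ranks(values):
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for m in range(i, j + 1):
            ranks[order[m]] = (i + j) / 2 + 1  # ties share their mean rank
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (U, two-sided p) using the normal approximation."""
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)
    mu = n1 * n2 / 2
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mu) / sigma  # u <= mu, so z <= 0
    return u, min(1.0, 2 * NormalDist().cdf(z))


def bonferroni(p_values):
    """Multiply each p-value by the number of tests, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical recovery durations (hours) for patients aged >= 60 vs. < 60.
older = [70, 82, 95, 101, 120, 133]
younger = [30, 41, 45, 52, 66, 74]
u, p = mann_whitney_u(older, younger)
print(u, round(p, 4), bonferroni([p, 0.02, 0.30]))
```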

6. Conclusions

7. Limitations and Future Work

- Dataset constraints: The MOVER dataset applies date anonymization at the patient level, preserving intra-patient temporal order but preventing calendar-level or cross-patient time-series analyses. Surgical incision and closure timestamps were inconsistently recorded, requiring the use of anesthesia or operating room timestamps as proxies for surgical duration.

- Generalizability: Findings are based on a single institutional dataset, which may limit external validity. Replication across multi-institutional and international datasets is needed.

- Outcome imbalance: Rare outcomes (e.g., ICU admission, prolonged recovery) were underrepresented, which may affect predictive model performance despite balancing strategies.

- Interpretability: Machine learning models, such as Random Forests, provide strong predictive accuracy but limited interpretability compared to clinical guidelines, underscoring the importance of domain expert validation.

- Multi-institutional validation: Extending the framework to other hospitals and healthcare systems to test robustness and generalizability.

- Enhanced predictive modeling: Developing tailored approaches for rare outcomes and exploring explainable AI techniques to improve clinical interpretability.

- Integration into clinical workflows: Investigating how offline process mining models can be combined with lightweight, real-time predictive tools in perioperative dashboards.

- Collaborative evaluation: Incorporating surgical domain experts more directly into the iterative design of models to ensure clinical relevance and adoption.

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Everson, J.; Rubin, J.C.; Friedman, C.P. Reconsidering Hospital EHR Adoption at the Dawn of HITECH: Implications of the Reported 9% Adoption of a “Basic” EHR. J. Am. Med. Inform. Assoc. 2020, 27, 1198–1205.

- Samad, M.; Angel, M.; Rinehart, J.; Kanomata, Y.; Baldi, P.; Cannesson, M. Medical Informatics Operating Room Vitals and Events Repository (MOVER): A Public-Access Operating Room Database. JAMIA Open 2023, 6, ooad084.

- Mans, R.S.; van der Aalst, W.M.P.; Vanwersch, R.J.B.; Moleman, A.J. Process Mining in Healthcare: Data Challenges When Answering Frequently Posed Questions. In Proceedings of the Process Support and Knowledge Representation in Health Care, Tallinn, Estonia, 3 September 2012; Riaño, D., Lenz, R., Miksch, S., Peleg, M., Reichert, M., ten Teije, A., Eds.; Springer International Publishing: Tallinn, Estonia, 2012; Volume 7738, pp. 140–153.

- Homayounfar, P. Process Mining Challenges in Hospital Information Systems. In Proceedings of the 2012 Federated Conference on Computer Science and Information Systems (FedCSIS), Wrocław, Poland, 9–12 September 2012; pp. 1135–1140.

- Mans, R.S.; van der Aalst, W.M.P.; Vanwersch, R.J.B. Process Mining in Healthcare: Evaluating and Exploiting Operational Processes; vom Brocke, J., Ed.; SpringerBriefs in Business Process Management; Springer International Publishing: Cham, Switzerland, 2015; ISBN 978-3-319-16070-2.

- Striani, F.; Colucci, C.; Corallo, A.; Paiano, R.; Pascarelli, C. Process Mining in Healthcare: A Systematic Literature Review and A Case Study. Adv. Sci. Technol. Eng. Syst. J. 2022, 7, 151–160.

- Munoz-Gama, J.; Martin, N.; Fernandez-Llatas, C.; Johnson, O.A.; Sepúlveda, M.; Helm, E.; Galvez-Yanjari, V.; Rojas, E.; Martinez-Millana, A.; Aloini, D.; et al. Process Mining for Healthcare: Characteristics and Challenges. J. Biomed. Inform. 2022, 127, 103994.

- Mans, R.S.; Schonenberg, M.H.; Song, M.; Van Der Aalst, W.M.P.; Bakker, P.J. Application of Process Mining in Healthcare—A Case Study in a Dutch Hospital. In Proceedings of the International Joint Conference on Biomedical Engineering Systems and Technologies, Funchal, Madeira, Portugal, 28–31 January 2008; pp. 425–438.

- Gupta, S. Workflow and Process Mining in Healthcare. Master’s Thesis, Eindhoven University of Technology, Eindhoven, The Netherlands, 2007.

- van der Aalst, W. Process Mining; Springer: Berlin/Heidelberg, Germany, 2016; ISBN 978-3-662-49850-7.

- Gurgen Erdogan, T.; Tarhan, A. A Goal-Driven Evaluation Method Based on Process Mining for Healthcare Processes. Appl. Sci. 2018, 8, 894.

- Wilkins-Caruana, A.; Bandara, M.; Musial, K.; Catchpoole, D.; Kennedy, P.J. Inferring Actual Treatment Pathways from Patient Records. J. Biomed. Inform. 2023, 148, 104554.

- Benevento, E.; Aloini, D.; van der Aalst, W.M.P. How Can Interactive Process Discovery Address Data Quality Issues in Real Business Settings? Evidence from a Case Study in Healthcare. J. Biomed. Inform. 2022, 130, 104083.

- Rojas, E.; Munoz-Gama, J.; Sepúlveda, M.; Capurro, D. Process Mining in Healthcare: A Literature Review. J. Biomed. Inform. 2016, 61, 224–236.

- Kurniati, A.P.; Hall, G.; Hogg, D.; Johnson, O. Process Mining in Oncology Using the MIMIC-III Dataset. J. Phys. Conf. Ser. 2018, 971, 012008.

- Chen, Q.; Lu, Y.; Tam, C.; Poon, S. Process Mining to Discover and Preserve Infrequent Relations in Event Logs: An Application to Understand the Laboratory Test Ordering Process Using the MIMIC-III Dataset. In Proceedings of the ACIS 2021 Proceedings, Sydney, Australia, 6–10 December 2021.

- Mans, R.S.; Schonenberg, M.H.; Song, M.; van der Aalst, W.M.P. Process Mining in Healthcare—A Case Study. In Proceedings of the First International Conference on Health Informatics, Funchal, Madeira, Portugal, 28–31 January 2008; SciTePress—Science and Technology Publications: Funchal, Portugal, 2008; pp. 118–125.

- Yang, S.; Sarcevic, A.; Farneth, R.A.; Chen, S.; Ahmed, O.Z.; Marsic, I.; Burd, R.S. An Approach to Automatic Process Deviation Detection in a Time-Critical Clinical Process. J. Biomed. Inform. 2018, 85, 155–167.

- Andrews, R.; Goel, K.; Corry, P.; Burdett, R.; Wynn, M.T.; Callow, D. Process Data Analytics for Hospital Case-Mix Planning. J. Biomed. Inform. 2022, 129, 104056.

- Alvarez, C.; Rojas, E.; Arias, M.; Munoz-Gama, J.; Sepúlveda, M.; Herskovic, V.; Capurro, D. Discovering Role Interaction Models in the Emergency Room Using Process Mining. J. Biomed. Inform. 2018, 78, 60–77.

- Back, C.O.; Manataki, A.; Harrison, E. Mining Patient Flow Patterns in a Surgical Ward. In Proceedings of the HEALTHINF 2020—13th International Conference on Health Informatics, Proceedings; Part of 13th International Joint Conference on Biomedical Engineering Systems and Technologies, BIOSTEC 2020, Valletta, Malta, 24–26 February 2020; SciTePress: Valletta, Malta, 2020; pp. 273–283.

- Back, C.O.; Manataki, A.; Papanastasiou, A.; Harrison, E. Stochastic Workflow Modeling in a Surgical Ward: Towards Simulating and Predicting Patient Flow. In Communications in Computer and Information Science; Springer: Cham, Switzerland, 2021; Volume 1400, pp. 565–591.

- Berti, A.; van Zelst, S.; Schuster, D. PM4Py: A Process Mining Library for Python. Softw. Impacts 2023, 17, 100556.

- Padoy, N. Machine and Deep Learning for Workflow Recognition during Surgery. Minim. Invasive Ther. Allied Technol. 2019, 28, 82–90.

- Khuri, S.F.; Henderson, W.G.; DePalma, R.G.; Mosca, C.; Healey, N.A.; Kumbhani, D.J. Determinants of Long-Term Survival after Major Surgery and the Adverse Effect of Postoperative Complications. Ann. Surg. 2005, 242, 326–343.

- Oliver, C.M.; Wagstaff, D.; Bedford, J.; Moonesinghe, S.R. Systematic Development and Validation of a Predictive Model for Major Postoperative Complications in the Peri-Operative Quality Improvement Project (PQIP) Dataset. Anaesthesia 2024, 79, 389–398.

- Lakshmanan, G.T.; Rozsnyai, S.; Wang, F. Investigating Clinical Care Pathways Correlated with Outcomes. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2013; Volume 8094, pp. 323–338.

- Mans, R.S.R.; van der Aalst, W.; Vanwersch, R.J.B.R. Process Mining in Healthcare: Opportunities Beyond the Ordinary; BPMcenter.org: Eindhoven, The Netherlands, 2013.

- Futoma, J.; Morris, J.; Lucas, J. A Comparison of Models for Predicting Early Hospital Readmissions. J. Biomed. Inform. 2015, 56, 229–238.

- Blum, T.; Padoy, N.; Feußner, H.; Navab, N. Workflow Mining for Visualization and Analysis of Surgeries. Int. J. Comput. Assist. Radiol. Surg. 2008, 3, 379–386.

- van der Aalst, W. Process Mining, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 2018; ISBN 978-3-662-57041-8.

- Dahlin, S.; Eriksson, H.; Raharjo, H. Process Mining for Quality Improvement: Propositions for Practice and Research. Qual. Manag. Health Care 2019, 28, 8–14.

- Andrews, R.; Wynn, M.; Vallmuur, K.; ter Hofstede, A.; Bosley, E.; Elcock, M.; Rashford, S. Leveraging Data Quality to Better Prepare for Process Mining: An Approach Illustrated Through Analysing Road Trauma Pre-Hospital Retrieval and Transport Processes in Queensland. Int. J. Environ. Res. Public Health 2019, 16, 1138.

- van der Aalst, W.; Adriansyah, A.; de Medeiros, A.K.A.; Arcieri, F.; Baier, T.; Blickle, T.; Bose, J.C.; van den Brand, P.; Brandtjen, R.; Buijs, J.; et al. Process Mining Manifesto. In Business Process Management Workshops: BPM 2011 International Workshops, Clermont-Ferrand, France, 29 August 2011; Revised Selected Papers, Part I.; Daniel, F., Barkaoui, K., Dustdar, S., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 169–194.

- Dasari, D.; Varma, P.S. Employing Various Data Cleaning Techniques to Achieve Better Data Quality Using Python. In Proceedings of the 2022 6th International Conference on Electronics, Communication and Aerospace Technology, Coimbatore, India, 1–3 December 2022; pp. 1379–1383.

- Oladipupo, M.A.; Obuzor, P.C.; Bamgbade, B.J.; Adeniyi, A.E.; Olagunju, K.M.; Ajagbe, S.A. An Automated Python Script for Data Cleaning and Labeling Using Machine Learning Technique. Informatica 2023, 47, 219–232.

- Goyle, K.; Xie, Q.; Goyle, V. DataAssist: A Machine Learning Approach to Data Cleaning and Preparation. In Intelligent Systems and Applications; Arai, K., Ed.; Springer Nature: Cham, Switzerland, 2024; pp. 476–486.

- Gholinejad, M.; Loeve, A.J.; Dankelman, J. Surgical Process Modelling Strategies: Which Method to Choose for Determining Workflow? Minim. Invasive Ther. Allied Technol. 2019, 28, 91–104.

- Berti, A.; Van Zelst, S.J.; Van Der Aalst, W.M.P.; Gesellschaf, F. Process Mining for Python (PM4py): Bridging the Gap between Process- and Data Science. In Proceedings of the CEUR Workshop Proceedings, Aachen, Germany, 24–26 June 2019; Volume 2374, pp. 13–16.

- Helm, E.; Paster, F. First Steps Towards Process Mining in Distributed Health Information Systems. Int. J. Electron. Telecommun. 2015, 61, 137–142.

- Bernard, G.; Andritsos, P. Accurate and Transparent Path Prediction Using Process Mining. In Proceedings of the European Conference on Advances in Databases and Information Systems, Bled, Slovenia, 8–11 September 2019; pp. 235–250.

- Fernandez-Llatas, C.; Benedi, J.M.; Gama, J.M.; Sepulveda, M.; Rojas, E.; Vera, S.; Traver, V. Interactive Process Mining in Surgery with Real Time Location Systems: Interactive Trace Correction. In Interactive Process Mining in Healthcare; Fernandez-Llatas, C., Ed.; Health Informatics; Springer: Cham, Switzerland, 2021; pp. 181–202. ISBN 978-3-030-53992-4.

- Alharbi, A.; Bulpitt, A.; Johnson, O. Improving Pattern Detection in Healthcare Process Mining Using an Interval-Based Event Selection Method. In Lecture Notes in Business Information Processing; Springer: Cham, Switzerland, 2017; Volume 297, pp. 88–105.

- Rabbi, F.; Banik, D.; Hossain, N.U.I.; Sokolov, A. Using Process Mining Algorithms for Process Improvement in Healthcare. Healthc. Anal. 2024, 5, 100305.

- van der Aalst, W.; Weijters, T.; Maruster, L. Workflow Mining: Discovering Process Models from Event Logs. IEEE Trans. Knowl. Data Eng. 2004, 16, 1128–1142.

- van der Aalst, W.M.P.; van Dongen, B.F.; Herbst, J.; Maruster, L.; Schimm, G.; Weijters, A.J.M.M. Workflow Mining: A Survey of Issues and Approaches. Data Knowl. Eng. 2003, 47, 237–267.

- Petri, C.; Reisig, W. Petri Net. Scholarpedia 2008, 3, 6477.

- Perimal-Lewis, L.; de Vries, D.; Thompson, C.H. Health Intelligence: Discovering the Process Model Using Process Mining by Constructing Start-to-End Patient Journeys. In Proceedings of the 7th Australasian Workshop on Health Informatics and Knowledge Management (HIKM), Auckland, New Zealand, 20–23 January 2014; pp. 59–67.

- Berti, A.; Van Der Aalst, W. Reviving Token-Based Replay: Increasing Speed While Improving Diagnostics. In Proceedings of the International Workshop on Algorithms & Theories for the Analysis of Event Data 2019, Aachen, Germany, 25 June 2019; Volume 2371, pp. 87–103. Available online: https://ceur-ws.org/Vol-2371 (accessed on 3 August 2025).

- Norouzifar, A.; Rafiei, M.; Dees, M.; van der Aalst, W. Process Variant Analysis Across Continuous Features: A Novel Framework. In Enterprise, Business-Process and Information Systems Modeling; van der Aa, H., Bork, D., Schmidt, R., Sturm, A., Eds.; Springer Nature: Cham, Switzerland, 2024; pp. 129–142.

- Burattin, A. Process Mining Techniques in Business Environments; van der Aalst, W., Mylopoulos, J., Rosemann, M., Shaw, M.J., Szyperski, C., Eds.; Lecture Notes in Business Information Processing; Springer International Publishing: Cham, Switzerland, 2015; Volume 207, ISBN 978-3-319-17481-5.

- Dakic, D.; Stefanovic, D.; Cosic, I.; Lolic, T.; Medojevic, M. Business Process Mining Application: A Literature Review. In Proceedings of the 29th International DAAAM Symposium ‘Intelligent Manufacturing & Automation’, Zadar, Croatia, 24–27 October 2018; Katalinic, B., Ed.; DAAAM International: Vienna, Austria, 2018; pp. 0866–0875.

- van der Aalst, W.M.P.; La Rosa, M.; Santoro, F.M. Business Process Management: Don’t Forget to Improve the Process! Bus. Inf. Syst. Eng. 2016, 58, 1–6.

- van der Aalst, W.M.P. Process Mining, 1st ed.; Springer: Berlin/Heidelberg, Germany, 2011; ISBN 978-3-642-19345-3.

- Esiefarienrhe, B.M.; Omolewa, I.D. Application of Process Mining to Medical Billing Using L* Life Cycle Model. In Proceedings of the 2021 International Conference on Electrical, Computer and Energy Technologies (ICECET), Cape Town, South Africa, 9–10 December 2021; IEEE: Cape Town, South Africa, 2021; pp. 1–6.

- Rebuge, Á.; Ferreira, D.R. Business Process Analysis in Healthcare Environments: A Methodology Based on Process Mining. Inf. Syst. 2012, 37, 99–116.

- De Weerdt, J.; Schupp, A.; Vanderloock, A.; Baesens, B. Process Mining for the Multi-Faceted Analysis of Business Processes—A Case Study in a Financial Services Organization. Comput. Ind. 2013, 64, 57–67.

- Mayhew, D.; Mendonca, V.; Murthy, B.V.S. A Review of ASA Physical Status—Historical Perspectives and Modern Developments. Anaesthesia 2019, 74, 373–379.

- Shafei, I.; Karnon, J.; Crotty, M. Process Mining and Customer Journey Mapping in Healthcare: Enhancing Patient-Centred Care in Stroke Rehabilitation. Digit. Health 2024, 10, 20552076241249264.

- Schuh, G.; Gützlaff, A.; Cremer, S.; Schopen, M. Understanding Process Mining for Data-Driven Optimization of Order Processing. Procedia Manuf. 2020, 45, 417–422.

- van der Aalst, W.; Zhao, J.L.; Wang, H.J. Editorial: “Business Process Intelligence: Connecting Data and Processes”. ACM Trans. Manag. Inf. Syst. 2015, 5, 1–7.

| Refs. | Contribution to the Literature |
|---|---|
| [1] | Highlights foundational challenges in digitizing hospital workflows due to low EHR adoption. |
| [2] | Introduces the open-access MOVER dataset, enabling reproducible process mining in surgical workflows. |
| [3,5] | Provide foundational frameworks for healthcare process mining, including the L* lifecycle model and reference models. |
| [4] | Discusses challenges of applying process mining in real hospital settings. |
| [6] | Presents a systematic review that categorizes healthcare process mining methods. |
| [7] | Offers an extensive review of process mining characteristics and challenges in healthcare. |
| [10,14,17] | Apply discovery and conformance checking to clinical event logs. |
| [11] | Introduces the GQFI method for goal-driven evaluation of surgical workflows. |
| [13,18] | Analyze performance deviations and bottlenecks in clinical processes to identify opportunities for improvement. |
| [15,16] | Use the MIMIC-III dataset for an open, reproducible process mining approach. |
| [19,20] | Apply process mining to resource allocation and emergency care modeling. |
| [21,22] | Use process mining and simulation to model patient flow in a surgical ward. |
| [23] | Presents PM4Py, a scalable and customizable Python library for process mining. |
| [24] | Applies machine learning and deep learning to workflow recognition during surgery. |
| [25] | Identifies determinants of long-term survival after major surgery and the adverse effects of complications. |
| [26] | Develops and validates predictive models for major postoperative complications (PQIP dataset). |
| [27,28] | Investigate clinical care pathways correlated with outcomes using process analysis. |
| [29] | Compares predictive models for early hospital readmissions. |
| [30] | Introduces workflow mining methods for visualization and analysis of surgical procedures. |
| This Study | Introduces a complete, question-driven framework applied to the MOVER dataset, combining process discovery, conformance checking, temporal analysis, clustering, and predictive modeling. The study not only identifies surgical variants, outliers, and bottlenecks but also demonstrates how process mining and machine learning can jointly support efficiency improvements and perioperative risk prediction. |

| MRN | HOSP_ADMSN_TIME | HOSP_DISCH_TIME | SURGERY_DATE | SURGERY_TYPE | SRG_PLN_TIME | SRG_CNL_TIME | IN_OR_DTTM | AN_START_DATETIME | OUT_OR_DTTM | AN_STOP_DATETIME |
|---|---|---|---|---|---|---|---|---|---|---|
| 0b1b3c15740e98de | 15.08.2021 14:50 | 29.08.2021 17:02 | 24.08.2021 00:00 | Surgery Performed | 24.08.2021 00:00 | | 24.08.2021 15:13 | 24.08.2021 15:13 | 24.08.2021 17:10 | 24.08.2021 17:15 |
| 0b1b3c15740e98de | 10.01.2022 04:58 | 10.01.2022 07:25 | 10.01.2022 00:00 | Surgery Canceled | 10.01.2022 04:59 | 10.01.2022 07:24 | | | | |
| 0b1b3c15740e98de | 27.01.2022 05:25 | 31.01.2022 13:10 | 27.01.2022 00:00 | Surgery Performed | 27.01.2022 05:26 | | 27.01.2022 07:32 | 27.01.2022 07:33 | 27.01.2022 14:45 | 27.01.2022 14:55 |
| 0000e45237d1fc96 | 12.02.2021 07:23 | 13.02.2021 17:05 | 12.02.2021 00:00 | Surgery Canceled | 12.02.2021 07:24 | 12.02.2021 23:59 | | | | |
| 0b30d71ac00f1339 | 15.06.2022 04:46 | 15.06.2022 09:29 | 30.05.2022 00:00 | Surgery Date Passed | 30.05.2022 00:00 | 30.05.2022 23:59 | | | | |
| 0b30d71ac00f1339 | 15.06.2022 04:46 | 15.06.2022 09:29 | 15.06.2022 00:00 | Surgery Performed | 15.06.2022 04:47 | | 15.06.2022 07:06 | 15.06.2022 07:06 | 15.06.2022 08:34 | 15.06.2022 08:35 |

| Case_Id | Activity | Timestamp |
|---|---|---|
| 0b1b3c15740e98de | Hospital Admission | 15.08.2021 14:50 |
| 0b1b3c15740e98de | Surgery Planned | 24.08.2021 00:00 |
| 0b1b3c15740e98de | Operating Room Enter | 24.08.2021 15:13 |
| 0b1b3c15740e98de | Anesthesia Start | 24.08.2021 15:13 |
| 0b1b3c15740e98de | Operating Room Exit | 24.08.2021 17:10 |
| 0b1b3c15740e98de | Anesthesia Stop | 24.08.2021 17:15 |
| 0b1b3c15740e98de | Hospital Discharge | 29.08.2021 17:02 |
| 0b1b3c15740e98de | Hospital Admission | 10.01.2022 04:58 |
| 0b1b3c15740e98de | Surgery Planned | 10.01.2022 04:59 |
| 0b1b3c15740e98de | Surgery Canceled | 10.01.2022 07:24 |
| 0b1b3c15740e98de | Hospital Discharge | 10.01.2022 07:25 |

| Anomaly (Transition) | Elective-like | Emergency-like | Total | % Emergency |
|---|---|---|---|---|
| Anesthesia Start before Admission | 1 | 4 | 5 | 80% |
| Anesthesia Start before Discharge | 39 | 16 | 55 | 29% |
| Anesthesia Start before Planning | 78 | 8 | 86 | 9% |
| Anesthesia Stop before OR Entry | 347 | 28 | 375 | 7% |
| Discharge before Anesthesia Start | 1 | 0 | 1 | 0% |
| Discharge before Anesthesia Stop | 27 | 15 | 42 | 36% |
| Discharge before OR Entry | 8 | 0 | 8 | 0% |
| Discharge before OR Exit | 67 | 11 | 78 | 14% |
| OR Entry before Discharge | 31 | 1 | 32 | 3% |
| OR Entry before Planning | 24 | 3 | 27 | 11% |
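The ordering constraints listed in Stage 1 of the preprocessing can be checked mechanically. This is one way to surface timestamp-order anomalies like those tabulated above. The sketch below works on `dict` records with `datetime` values. It treats equal timestamps as acceptable and skips a rule when either timestamp is missing. It also adds one rule beyond Stage 1, OR entry before discharge, as an assumption that mirrors the "Discharge before OR Entry" row. The names `PRECEDENCE_RULES` and `violations` are hypothetical.

```python
from collections import Counter
from datetime import datetime

# (earlier, later) column pairs; a violation is `later` strictly before `earlier`.
PRECEDENCE_RULES = [
    ("HOSP_ADMSN_TIME", "HOSP_DISCH_TIME"),
    ("IN_OR_DTTM", "OUT_OR_DTTM"),
    ("AN_START_DATETIME", "AN_STOP_DATETIME"),
    ("HOSP_ADMSN_TIME", "SRG_PLN_TIME"),  # planning inside the stay
    ("SRG_PLN_TIME", "HOSP_DISCH_TIME"),
    ("HOSP_ADMSN_TIME", "SRG_CNL_TIME"),  # cancellation inside the stay
    ("SRG_CNL_TIME", "HOSP_DISCH_TIME"),
    ("IN_OR_DTTM", "HOSP_DISCH_TIME"),    # assumption, beyond the Stage 1 rules
]


def violations(row: dict) -> list:
    """List every rule broken by one record, skipping rules with missing values."""
    found = []
    for earlier, later in PRECEDENCE_RULES:
        a, b = row.get(earlier), row.get(later)
        if a is not None and b is not None and b < a:
            found.append(f"{later} before {earlier}")
    return found


def ts(text: str) -> datetime:
    return datetime.strptime(text, "%d.%m.%Y %H:%M")


records = [
    # Consistent record: first surgery in the event-log excerpt.
    {"HOSP_ADMSN_TIME": ts("15.08.2021 14:50"), "HOSP_DISCH_TIME": ts("29.08.2021 17:02"),
     "SRG_PLN_TIME": ts("24.08.2021 00:00"), "IN_OR_DTTM": ts("24.08.2021 15:13"),
     "OUT_OR_DTTM": ts("24.08.2021 17:10"), "AN_START_DATETIME": ts("24.08.2021 15:13"),
     "AN_STOP_DATETIME": ts("24.08.2021 17:15")},
    # Hypothetical record discharged before entering the OR.
    {"HOSP_ADMSN_TIME": ts("01.03.2022 08:00"), "HOSP_DISCH_TIME": ts("01.03.2022 09:00"),
     "IN_OR_DTTM": ts("01.03.2022 10:00"), "OUT_OR_DTTM": ts("01.03.2022 11:00")},
]
summary = Counter(v for r in records for v in violations(r))
print(summary)
```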

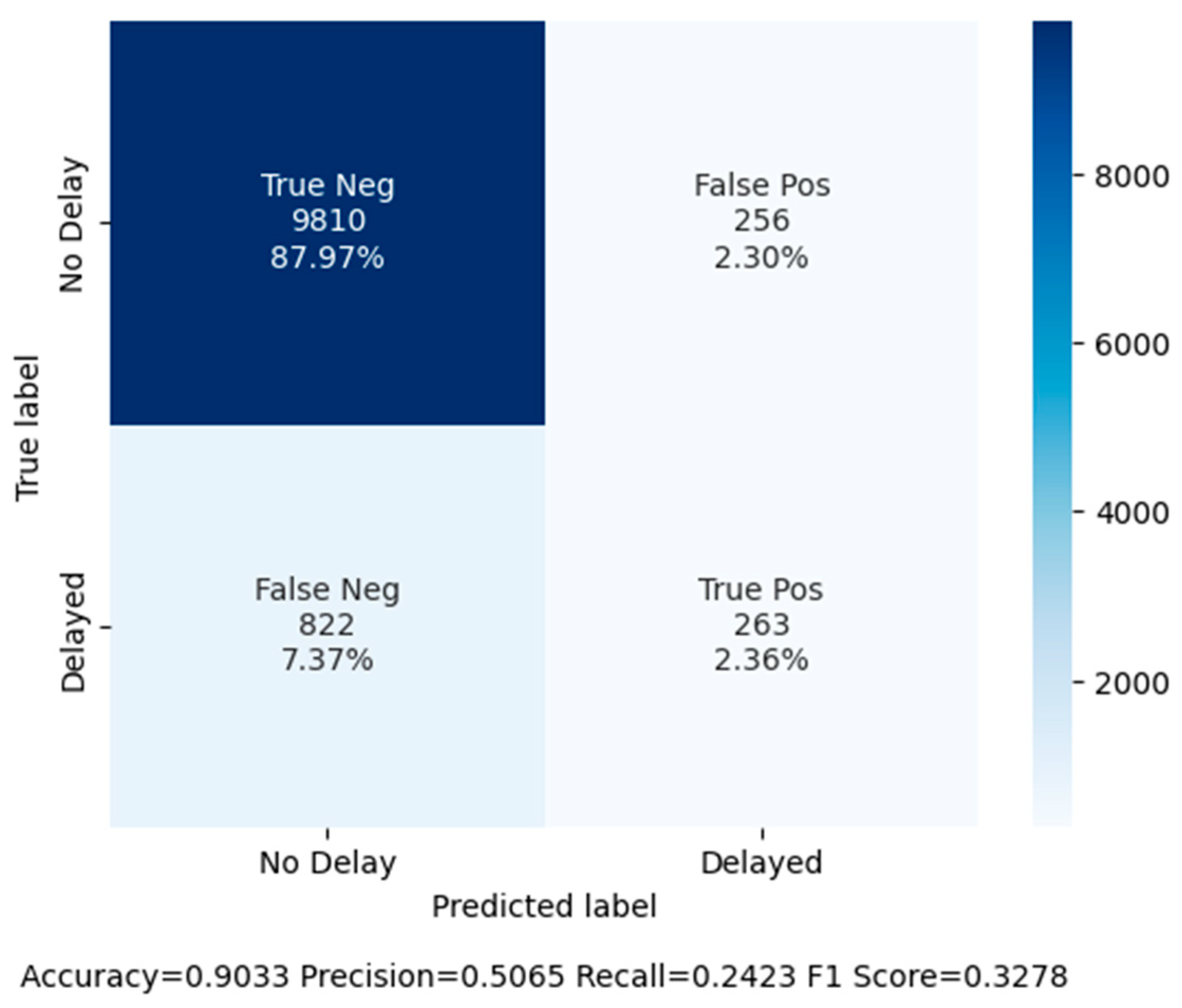

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1-Score |
|---|---|---|---|---|
| Random Forest (Baseline) | 90.33 | 50.66 | 24.23 | 0.33 |
| Random Forest + Undersampling | 77.10 | 27.87 | 85.18 | 0.42 |
| Random Forest + SMOTE | 90.00 | 47.55 | 26.59 | 0.34 |
| Random Forest + Class Weights | 90.38 | 51.30 | 22.76 | 0.32 |
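In the model comparison, accuracy, precision, and recall are given as percentages, while F1 is on a 0–1 scale. A quick consistency check is to recompute F1 as the harmonic mean of the reported precision and recall. The sketch below does this for all four configurations, using only the values in the table. For each one, the recomputed F1 rounds to the reported value.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, both on a 0-1 scale."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision (%), recall (%), and F1 as reported in the model comparison table.
REPORTED = {
    "Random Forest (Baseline)": (50.66, 24.23, 0.33),
    "Random Forest + Undersampling": (27.87, 85.18, 0.42),
    "Random Forest + SMOTE": (47.55, 26.59, 0.34),
    "Random Forest + Class Weights": (51.30, 22.76, 0.32),
}

recomputed = {name: round(f1_score(p / 100, r / 100), 2) for name, (p, r, _) in REPORTED.items()}
for name, (_, _, f1) in REPORTED.items():
    print(f"{name}: reported F1 = {f1}, recomputed = {recomputed[name]}")
```

Because F1 ignores true negatives, it exposes the trade-off that accuracy hides. The baseline keeps roughly 90% accuracy with recall near 24%, whereas undersampling lowers accuracy but gives the highest F1.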

| Question | Answer | Clinical Insight |
|---|---|---|
| Q1 | Peak hours 7 AM–3 PM (Figure 3 and Figure 4) | Schedules can be adjusted for efficiency |
| Q2 | Deviations include anesthesia starting before hospital admission | Data quality issues or urgent cases |
| Q3 | Top 3 variants = 87.5% of cases; rare paths flagged | Outlier paths can be audited |
| Q4 | Surgery durations are longer in ASA III+ (Figure 5) | High-risk patients need extended slots |
| Q5 | 72 cases entered OR post-discharge | Workflow or data entry errors |
| Q6 | Delays (defined as deviation between scheduled surgery time and actual OR entry) correlated with prolonged recovery. This association may reflect both operational bottlenecks (e.g., OR overcrowding) and patient complexity (e.g., ASA III–IV). | Delays may therefore serve as early warning indicators to trigger ICU preparation for high-risk patients. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Celik, U.; Korkmaz, A.; Stoyanov, I. Integrating Process Mining and Machine Learning for Surgical Workflow Optimization: A Real-World Analysis Using the MOVER EHR Dataset. Appl. Sci. 2025, 15, 11014. https://doi.org/10.3390/app152011014