A Robotic Gamified Framework for Upper-Limb Rehabilitation

Abstract

1. Introduction

Current Limitations of Available Technology

2. Robotic Framework Design

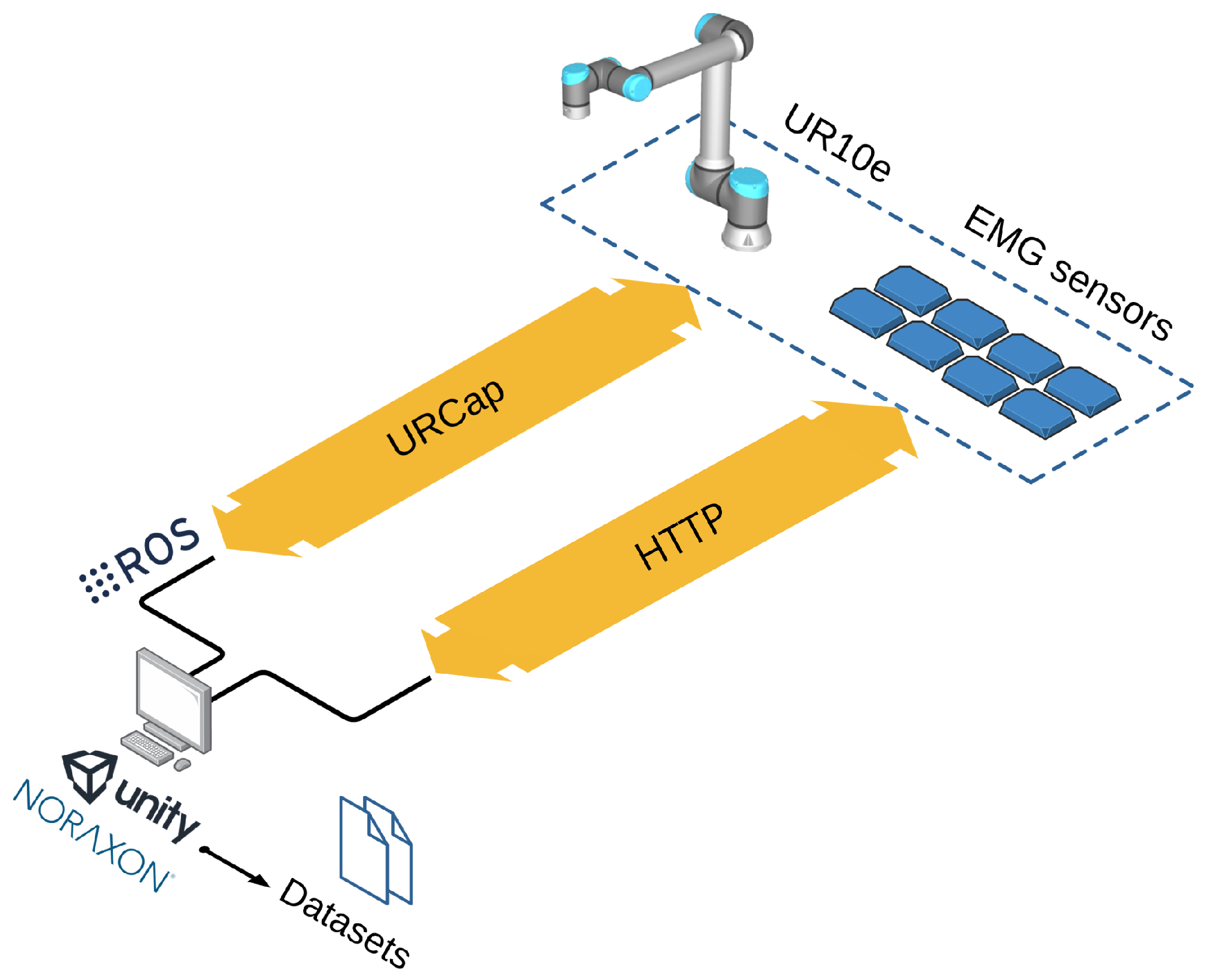

2.1. Control System

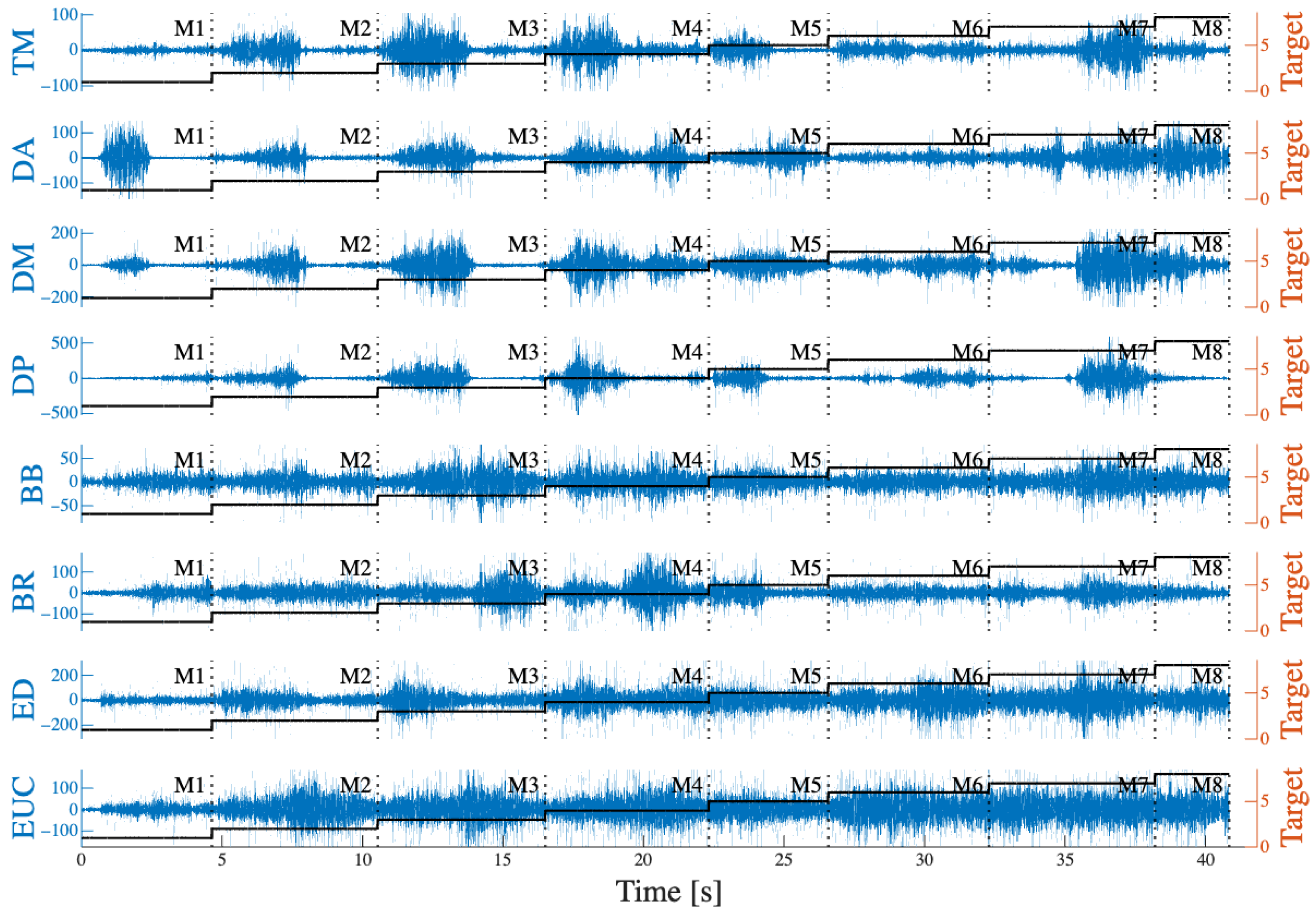

2.2. Data Acquisition and Processing

2.3. User Interaction

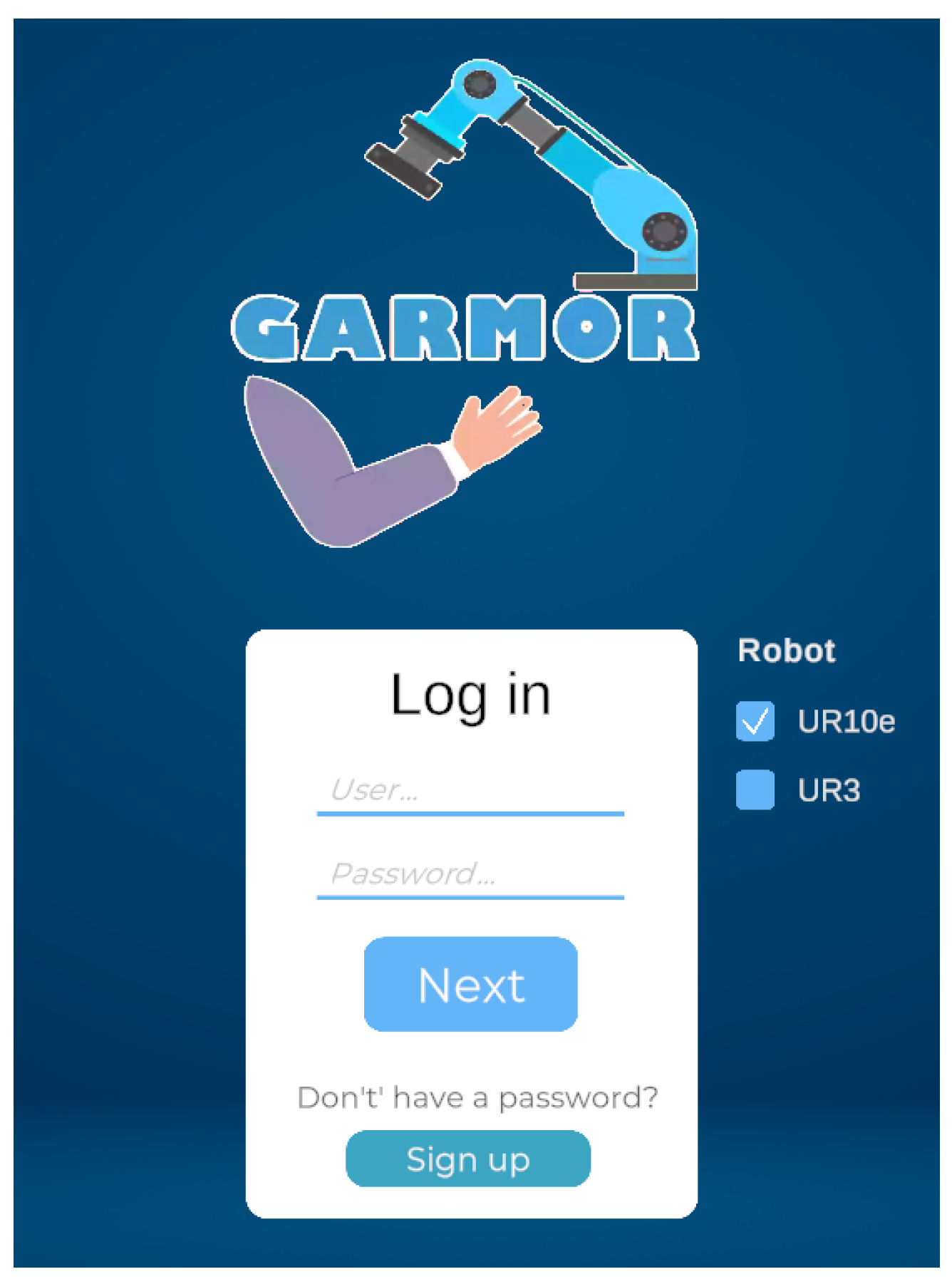

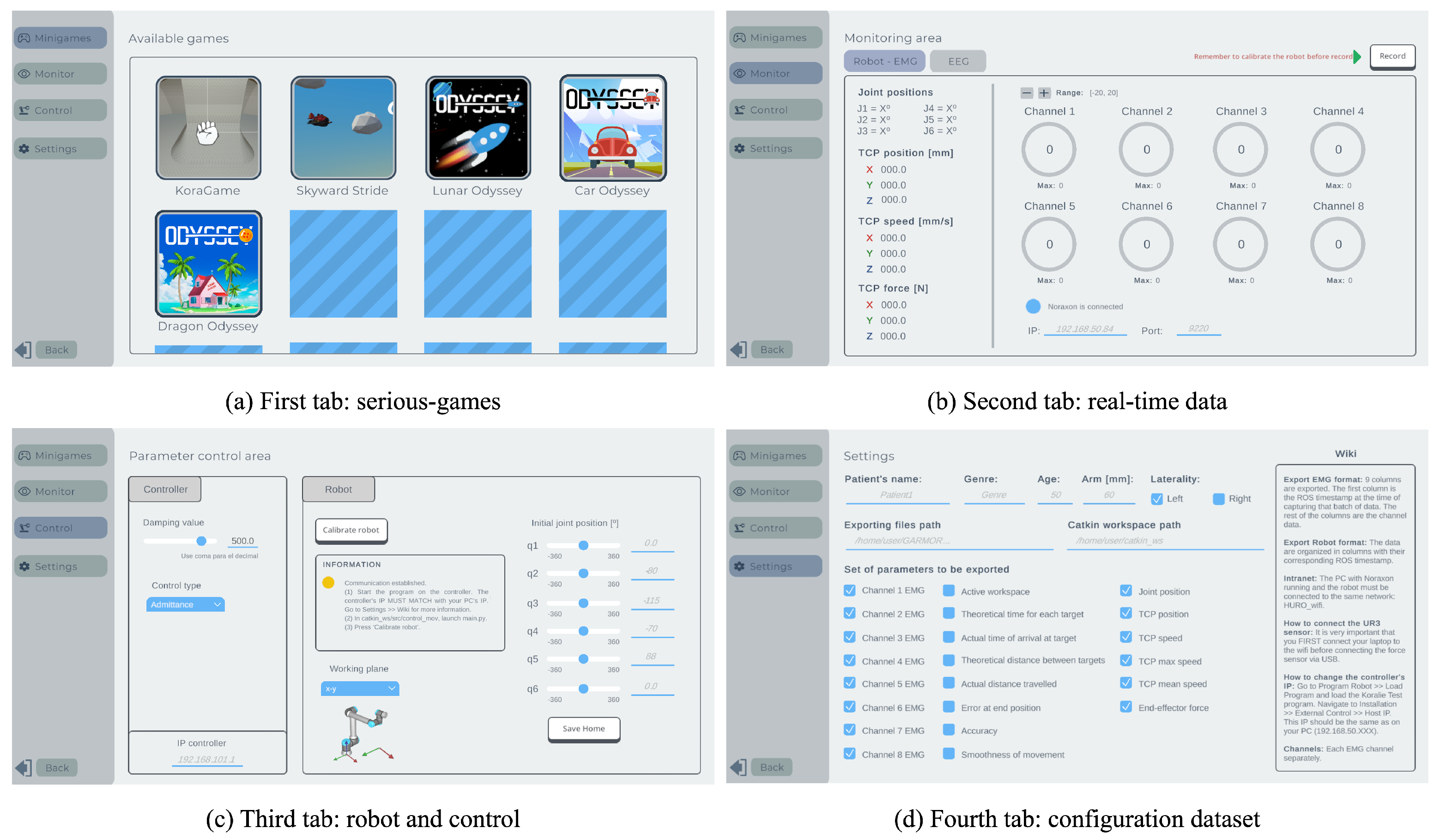

2.3.1. User Interface

2.3.2. Gamification

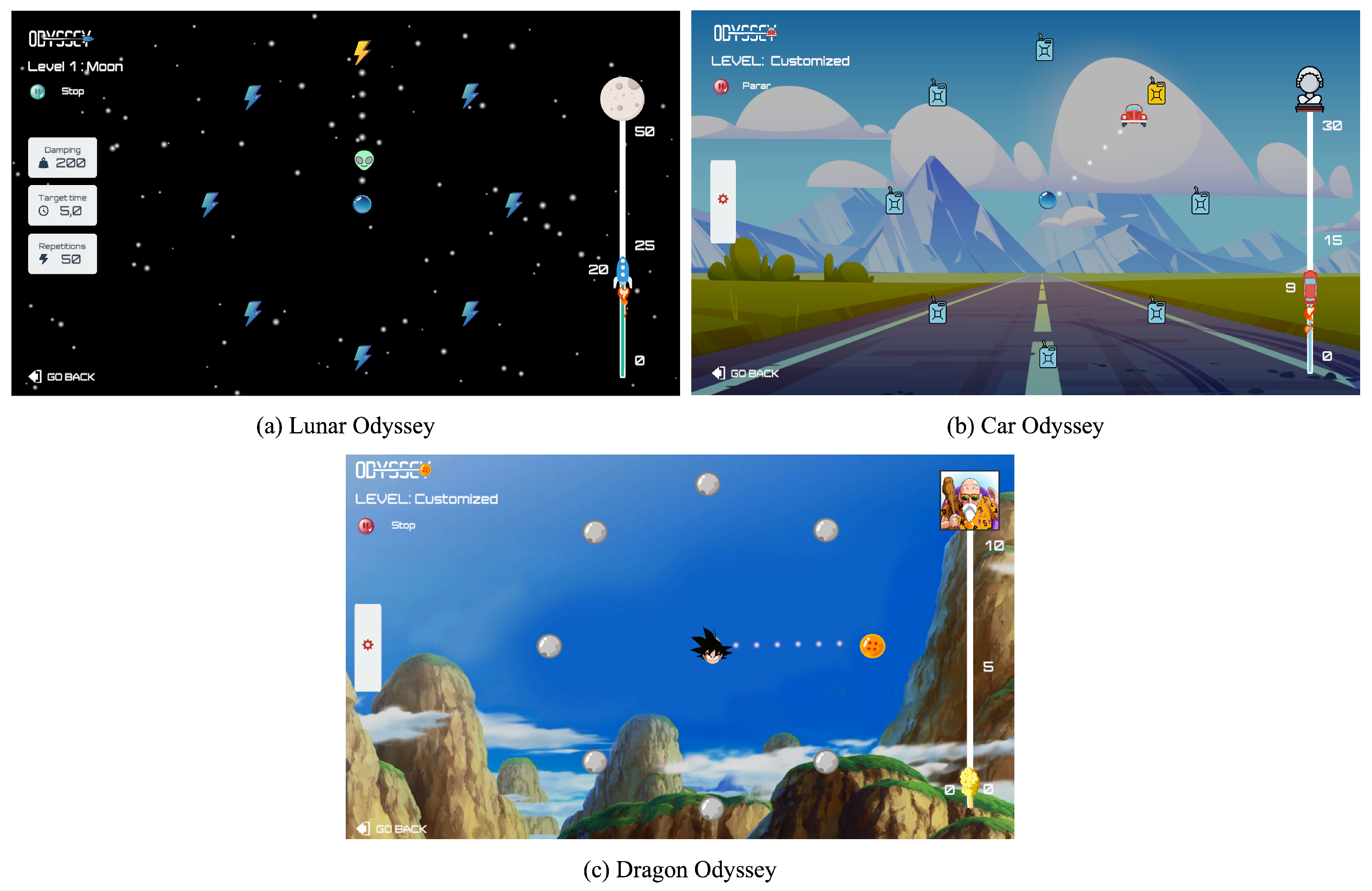

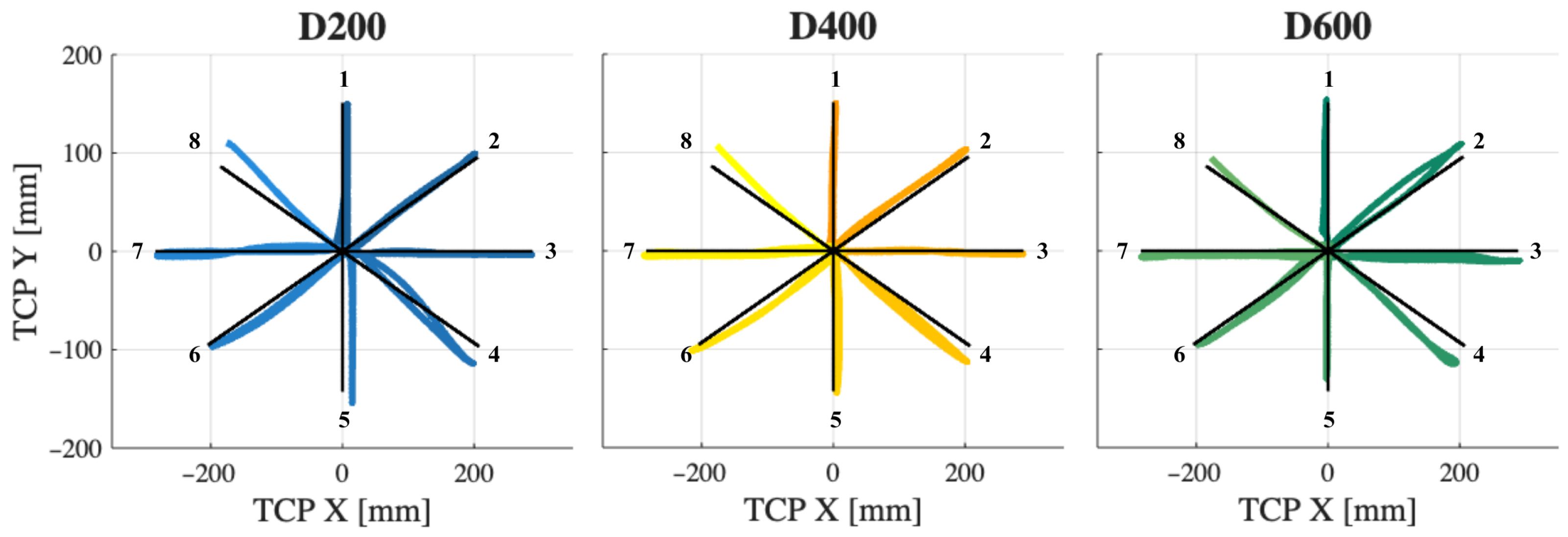

- Odyssey is based on a centre-out reaching approach [32] in which the patient moves the end-effector to reach targets shown on the screen. Eight targets are placed along a circular path around a central target, for a total of nine targets. The objective is to move from the centre target to one of the targets on the circumference and then return. The order of the peripheral targets can be randomised or arranged in a clockwise sequence, an option selected in the game’s UI. For healthy individuals, either order can be used; randomising the targets prevents the user from learning patterns and keeps them focused on the exercise. For upper-limb rehabilitation patients, the order is set clockwise, since a known sequence is easier to follow and ensures the same number of repetitions in all eight directions. The game has three skins: Lunar Odyssey, Car Odyssey, and Dragon Odyssey (Figure 5).

- Skyward Stride features an aeroplane that flies through an endless 2D world while avoiding obstacles. The user changes the aeroplane’s height to avoid obstacles, collect bonus items, and complete levels of varying difficulty. For upper-limb rehabilitation, the aeroplane can be controlled by linear displacements in a plane (arm reaching) or by single-joint movements of the wrist or elbow.

- In Kora Game, the patient moves the robot’s end-effector under admittance control to train the upper limb. The robot’s movements are mapped to a 2D hand that collects apples and pears appearing at random positions in a forest whose size matches the robot’s workspace. Difficulty levels are set by changing the number of fruits that appear or the maximum time allowed to grasp them.
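The Odyssey target sequencing described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the framework’s implementation: the target numbering (0 for the centre, 1 to 8 clockwise), the function names, and the choice to randomise in shuffled blocks of eight (so that each direction is still trained equally often) are hypothetical.

```python
import random
from typing import List, Optional, Tuple

CENTRE = 0         # hypothetical index of the centre target
N_PERIPHERAL = 8   # eight targets on the circumference


def target_order(n_repetitions: int, randomised: bool,
                 rng: Optional[random.Random] = None) -> List[int]:
    """Peripheral target (1..8, numbered clockwise) visited in each repetition."""
    clockwise = list(range(1, N_PERIPHERAL + 1))
    if not randomised:
        # Fixed clockwise cycle: every direction gets the same number of
        # repetitions whenever n_repetitions is a multiple of eight.
        return [clockwise[i % N_PERIPHERAL] for i in range(n_repetitions)]
    if rng is None:
        rng = random.Random()
    order: List[int] = []
    while len(order) < n_repetitions:
        block = clockwise[:]
        rng.shuffle(block)  # assumption: shuffle in blocks of eight directions
        order.extend(block)
    return order[:n_repetitions]


def movements(order: List[int]) -> List[Tuple[int, int]]:
    """Expand each repetition into centre -> target and target -> centre moves."""
    moves: List[Tuple[int, int]] = []
    for target in order:
        moves.append((CENTRE, target))
        moves.append((target, CENTRE))
    return moves
```

For example, `target_order(10, randomised=False)` yields `[1, 2, 3, 4, 5, 6, 7, 8, 1, 2]`, and `movements([1, 2])` yields `[(0, 1), (1, 0), (0, 2), (2, 0)]`, that is, two movements per repetition.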

2.4. Configuration

- The first parameter is the user’s arm length, which is used to match the robot’s targets to the user’s maximum reach. Once this measurement is entered into the game, the targets are adjusted automatically so that the arm is fully extended, not flexed, when a target is reached. The targets are mapped onto an ellipse that allows full arm extension during each movement, with left–right movements covering a greater range than forward–backward movements. This design ensures that the patient stretches the arm to its maximum range to reach each target.

- The second personalisation parameter is the robot’s working plane. Odyssey can be used in two planes, the transverse and the frontal planes, taking the human body as the frame of reference. The rehabilitation exercise can therefore be performed with either horizontal or vertical movements. In both planes, the targets are scaled according to the arm length.

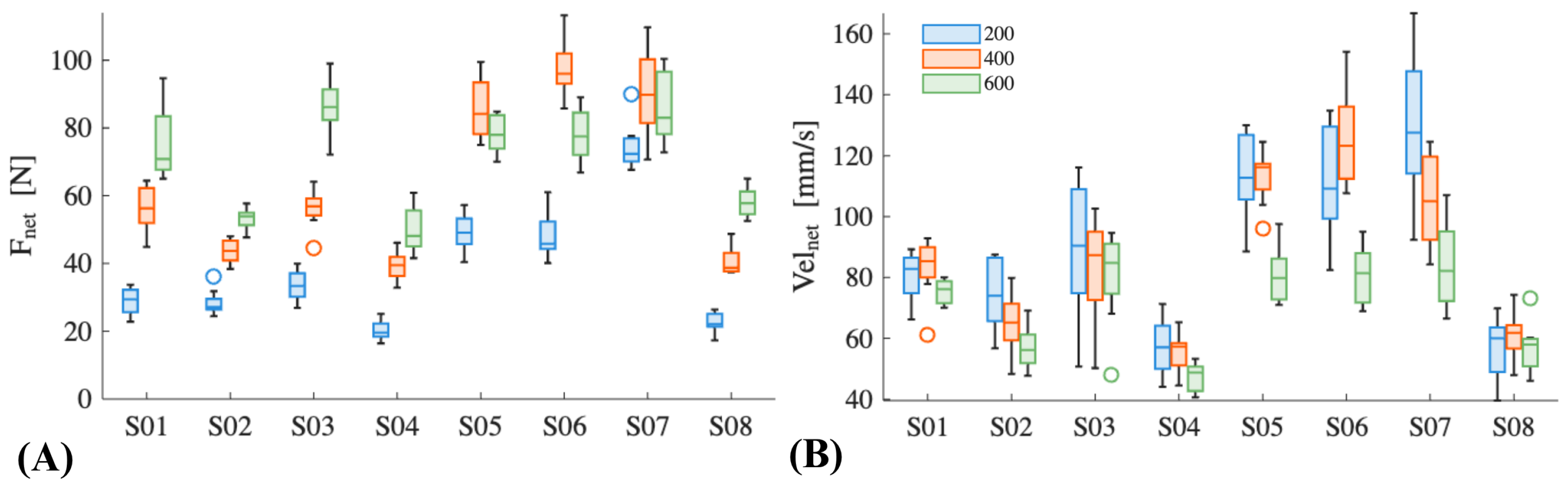

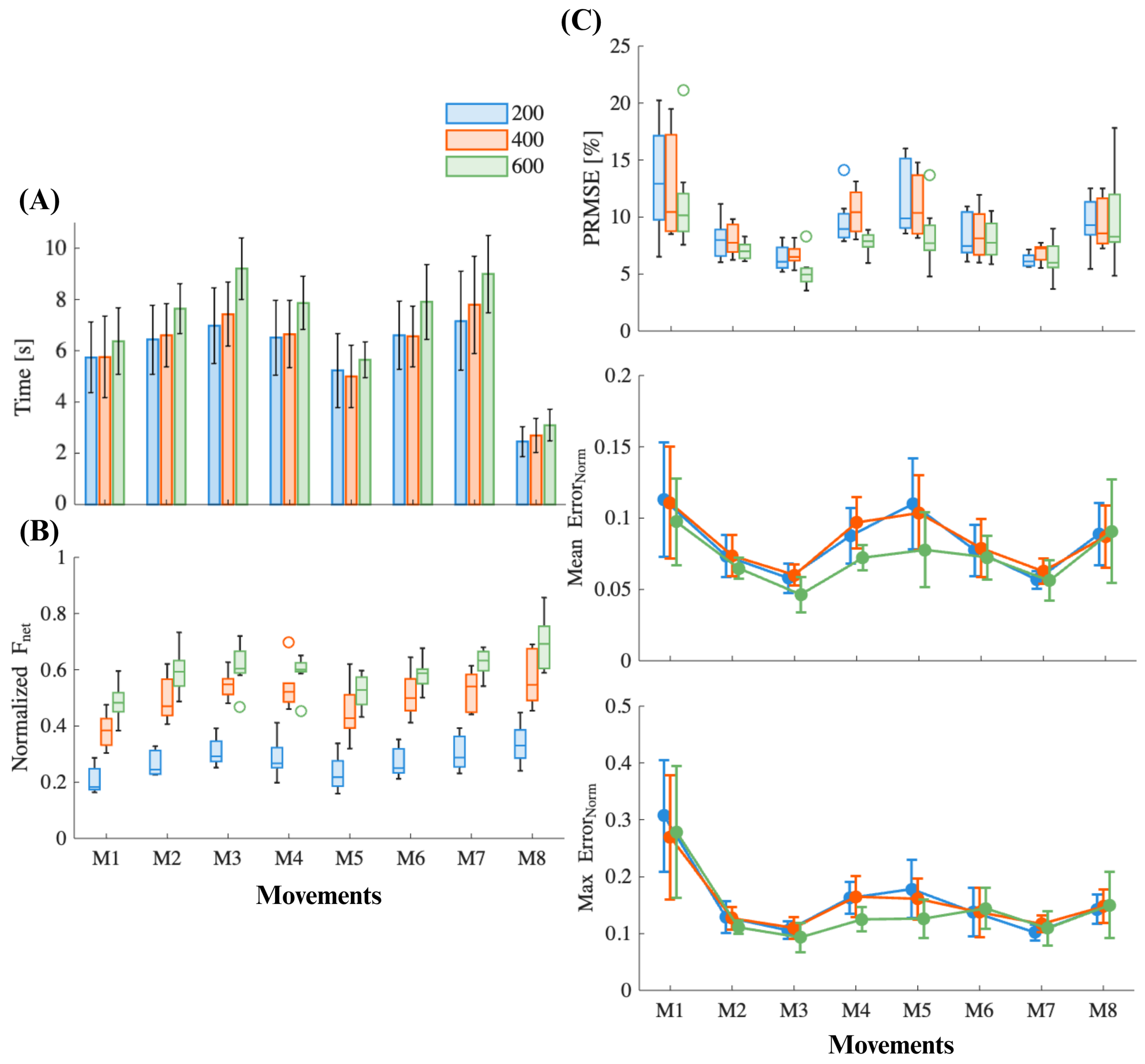
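The arm-length scaling and the choice of working plane can be illustrated with a short sketch that places the nine Odyssey targets on an ellipse whose semi-axes are proportional to the user’s arm length. The scaling ratios (0.5 of the arm length left–right, 0.35 in the other in-plane direction), the axis convention (x lateral, y forward, z vertical), and the function name are hypothetical; the text only states that targets are scaled by arm length and that the left–right range is larger than the forward–backward range.

```python
import math
from typing import Dict, Tuple

Point3 = Tuple[float, float, float]  # (x lateral, y forward, z vertical) in metres


def target_positions(arm_length_mm: float, plane: str,
                     lateral_ratio: float = 0.5,
                     second_axis_ratio: float = 0.35,
                     n_targets: int = 8) -> Dict[int, Point3]:
    """Centre target 0 plus n_targets targets on an ellipse scaled by arm length.

    Offsets are relative to the workspace centre; the ratios are placeholders.
    """
    if plane not in ("transverse", "frontal"):
        raise ValueError("plane must be 'transverse' or 'frontal'")
    a = lateral_ratio * arm_length_mm / 1000.0      # left-right semi-axis (m)
    b = second_axis_ratio * arm_length_mm / 1000.0  # forward or vertical semi-axis (m)
    targets: Dict[int, Point3] = {0: (0.0, 0.0, 0.0)}
    for k in range(1, n_targets + 1):
        # Target 1 at the top of the screen, then clockwise in equal angular steps.
        theta = math.radians(90.0 - 360.0 / n_targets * (k - 1))
        u, w = a * math.cos(theta), b * math.sin(theta)
        if plane == "transverse":
            targets[k] = (u, w, 0.0)  # horizontal movements
        else:
            targets[k] = (u, 0.0, w)  # vertical movements
    return targets
```

With an arm length of 540 mm in the transverse plane, target 3 lies 0.27 m to the right of the centre and target 1 lies about 0.19 m forward. In a real system these offsets would still have to be transformed into the robot’s base frame.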

- The third parameter is the damping of the admittance controller, which is adjusted for each patient. It can be set between 0 and 600 N·s/m, either for an individual exercise or for a sequence of consecutive exercises with a specific damping for each level. For healthy individuals, it can be set to a high value so that the robot provides significant resistance to movement. For rehabilitation patients, the damping can initially be set to zero and gradually increased as rehabilitation progresses. The damping value is selected and refined by the person in charge of the rehabilitation routine and can be updated at any time during the exercises. When data are exported, the damping value in use during the exercise is also stored.

- The fourth parameter is the number of repetitions required to complete the current game level. One repetition consists of two movements: one from the centre to the active target on the circumference, and a second from that target back to the centre. Progress is shown visually in a repetition progress bar (see Figure 5, right side of the visual interface). The number of repetitions depends on the purpose of the exercise and the patient’s endurance, and must be configured by the therapist.

- The fifth parameter is the reaching time, since the damping value and the number of repetitions alone do not define the speed at which the exercise should be performed. The reaching time for each target is measured continuously, and the time limit can be modified. If the user does not reach a target within the limit, the target turns red and a message asks the user to move faster in the next repetition. A target that is not reached in time is not counted as successful. The total numbers of achieved and failed targets are stored in the generated robot dataset.

- Keeping the patient motivated is an essential aspect of rehabilitation. For this reason, the sixth personalisation parameter is the game skin. Three themes have been designed: a space adventure (Figure 5a), a driving journey (Figure 5b), and a skin inspired by the Dragon Ball anime series (Figure 5c), so that the user can choose their preferred theme before starting rehabilitation.
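The role of the damping parameter can be illustrated with a one-axis admittance law, M·dv/dt + B·v = F, where F is the force the user applies to the end-effector, B is the therapist-selected damping, and v is the commanded velocity. The text does not describe the controller’s internal structure, so the virtual mass M, the 2 ms control period, the speed limit, and the class name below are hypothetical. The update uses backward Euler, which stays stable for any damping in the 0 to 600 N·s/m range.

```python
from dataclasses import dataclass

DAMPING_MIN, DAMPING_MAX = 0.0, 600.0  # N·s/m, range given in the text


@dataclass
class AdmittanceAxis:
    """Single-axis mass-damper admittance: M * dv/dt + B * v = F."""
    virtual_mass: float = 5.0  # kg (hypothetical)
    damping: float = 0.0       # N·s/m, set by the therapist
    dt: float = 0.002          # s, hypothetical 500 Hz control period
    max_speed: float = 0.25    # m/s, hypothetical safety cap
    velocity: float = 0.0      # current commanded velocity (m/s)

    def __post_init__(self) -> None:
        # Apply the same clamping to the initial value.
        self.set_damping(self.damping)

    def set_damping(self, value: float) -> None:
        """Update the damping at any time, clamped to the allowed range."""
        self.damping = min(max(value, DAMPING_MIN), DAMPING_MAX)

    def step(self, force: float) -> float:
        """Advance one control period (backward Euler) and return the velocity."""
        m, b, dt = self.virtual_mass, self.damping, self.dt
        # Solve M * (v_new - v_old) / dt + B * v_new = F for v_new.
        v = (m * self.velocity + dt * force) / (m + b * dt)
        self.velocity = max(-self.max_speed, min(self.max_speed, v))
        return self.velocity
```

Keeping a virtual mass term matters for the zero-damping setting used at the start of rehabilitation: a pure damper (v = F/B) is undefined at B = 0, whereas the mass-damper form simply lets the robot move freely up to the speed cap. At steady state the commanded velocity is F/B, so a constant 30 N push against 600 N·s/m settles at 0.05 m/s.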
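The per-level bookkeeping of repetitions, reaching-time limit, and achieved or failed targets, together with the damping stored at export time, could be kept in a small record like the one below. The field names, the CSV column order, and the choice to count every outward attempt (successful or not) toward the required repetitions are hypothetical; the text does not state whether failed targets count toward level completion.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical column order for one exported row per level.
CSV_COLUMNS = "damping_Ns_per_m,repetitions,time_limit_s,achieved,failed"


@dataclass
class LevelLog:
    """Outcome record for one game level (hypothetical structure)."""
    damping: float       # N·s/m in use during the level
    repetitions: int     # repetitions required to finish the level
    time_limit_s: float  # maximum reaching time per target
    achieved: int = 0
    failed: int = 0
    reach_times: List[float] = field(default_factory=list)

    def record_reach(self, reach_time_s: float) -> bool:
        """Score one centre-to-target movement; True if within the time limit."""
        self.reach_times.append(reach_time_s)
        if reach_time_s <= self.time_limit_s:
            self.achieved += 1
            return True
        # Here the game would turn the target red and ask the user to speed up.
        self.failed += 1
        return False

    def is_complete(self) -> bool:
        # Assumption: every outward attempt counts toward the repetitions.
        return len(self.reach_times) >= self.repetitions

    def csv_row(self) -> str:
        """One row matching CSV_COLUMNS."""
        return (f"{self.damping},{self.repetitions},{self.time_limit_s},"
                f"{self.achieved},{self.failed}")
```

A level configured with 3 repetitions and a 2 s limit, with reaches of 1.5 s, 2.5 s and 2.0 s, ends with 2 achieved targets and 1 failed target.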

2.5. Safety and Hardware Requirements

3. Validation Methodology

3.1. Experiment Protocol

3.2. Data Analysis

4. Results

4.1. System Usability

4.2. User Performance

5. Discussion

Future Work and Current Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ABI | Acquired Brain Injury |
| ROS | Robot Operating System |
| EMG | Electromyography |
| UR | Universal Robots |
| DoF | Degrees of Freedom |
| UI | User Interface |
| HTTP | Hypertext Transfer Protocol |
| CSV | Comma-Separated Values |

References

- Zorowitz, R.D.; Chen, E.; Tong, K.B.; Laouri, M. Costs and Rehabilitation Use of Stroke Survivors: A Retrospective Study of Medicare Beneficiaries. Top. Stroke Rehabil. 2009, 16, 309–320.

- Aprile, I.; Germanotta, M.; Cruciani, A.; Loreti, S.; Pecchioli, C.; Cecchi, F.; Montesano, A.; Galeri, S.; Diverio, M.; Falsini, C.; et al. Upper Limb Robotic Rehabilitation after Stroke: A Multicenter, Randomized Clinical Trial. J. Neurol. Phys. Ther. 2020, 44, 3–14.

- Kennard, M.; Hassan, M.; Shimizu, Y.; Suzuki, K. Max Well-Being: A Modular Platform for the Gamification of Rehabilitation. Front. Robot. AI 2024, 11, 1382157.

- Krebs, H.I.; Hogan, N.; Aisen, M.L.; Volpe, B.T. Robot-aided Neurorehabilitation. IEEE Trans. Rehabil. Eng. 1998, 6, 75–87.

- Lum, P.S.; Burgar, C.G.; Shor, P.C.; Majmundar, M.; Van der Loos, M. Robot-assisted Movement Training Compared with Conventional Therapy Techniques for the Rehabilitation of Upper-limb Motor Function after Stroke. Arch. Phys. Med. Rehabil. 2002, 83, 952–959.

- Richardson, R.; Brown, M.; Bhakta, M.; Levesley, M.C. Design and Control of a Three Degree of Freedom Pneumatic Physiotherapy Robot. Robotica 2003, 21, 589–604.

- Reinkensmeyer, D.J.; Kahn, L.E.; Averbuch, M.; McKenna-Cole, A.N.; Schmit, B.D.; Rymer, W.Z. Understanding and Treating Arm Movement Impairment after Chronic Brain Injury: Progress with the ARM Guide. J. Rehabil. Res. Dev. 2000, 37, 653–662.

- Zhu, T.L.; Klein, J.; Dual, S.A.; Leong, T.C.; Burdet, E. ReachMAN2: A Compact Rehabilitation Robot to Train Reaching and Manipulation. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; pp. 2107–2113.

- Chiriatti, G.; Palmieri, G.; Palpacelli, M.C. A Framework for the Study of Human-robot Collaboration in Rehabilitation Practices. In Advances in Service and Industrial Robotics, Proceedings of the International Conference on Robotics in Alpe-Adria Danube Region, Kaiserslautern, Germany, 19 June 2020; Springer: Cham, Switzerland, 2020; pp. 190–198.

- Rodrigues, J.C.; Menezes, P.; Restivo, M.T. An Augmented Reality Interface to Control a Collaborative Robot in Rehab: A Preliminary Usability Evaluation. Front. Digit. Health 2023, 5, 1–16.

- Molteni, F.; Gasperini, G.; Cannaviello, G.; Guanziroli, E. Exoskeleton and End-Effector Robots for Upper and Lower Limbs Rehabilitation: Narrative Review. Innov. Influenc. Phys. Med. Rehabil. 2018, 10, 174–188.

- Garro, F.; Chiappalone, M.; Buccelli, S.; De Michieli, L.; Semprini, M. Neuromechanical Biomarkers for Robotic Neurorehabilitation. Front. Neurorobot. 2021, 15, 742163.

- Lencioni, T.; Fornia, L.; Bowman, T.; Marzegan, A.; Caronni, A.; Turolla, A.; Jonsdottir, J.; Carpinella, I.; Ferrarin, M. A Randomized Controlled Trial on the Effects Induced by Robot-assisted and Usual-care Rehabilitation on Upper Limb Muscle Synergies in Post-stroke Subjects. Sci. Rep. 2021, 11, 5323.

- Huang, C.; Chen, M.; Zhang, Y.; Li, S.; Zhou, P. Model-based Analysis of Muscle Strength and EMG-force Relation with Respect to Different Patterns of Motor Unit Loss. Neural Plast. 2021, 2021, 5513224.

- Goffredo, M.; Proietti, S.; Pournajaf, S.; Galafate, D.; Ciota, M.; Le Pera, D.; Posterato, F.; Franceschini, M. Baseline Robot-measured Kinematic Metrics Predict Discharge Rehabilitation Outcomes in Individuals with Subacute Stroke. Front. Bioeng. Biotechnol. 2022, 10, 1012544.

- Afyouni, I.; Rehman, F.U.; Qamar, A.M.; Ghani, S.; Hussain, S.O.; Sadiq, B.; Rahman, M.A.; Murad, A.; Basalamah, S. A Therapy-driven Gamification Framework for Hand Rehabilitation. User Model. User-Adapt. Interact. 2017, 27, 215–265.

- Simić, M.; Stojanović, G.M. Wearable Device for Personalized EMG Feedback-based Treatments. Results Eng. 2024, 23, 102472.

- Alfieri, F.M.; da Silva Dias, C.; de Oliveira, N.C.; Battistella, L.R. Gamification in Musculoskeletal Rehabilitation. Curr. Rev. Musculoskelet. Med. 2022, 15, 629–636.

- Tuah, N.M.; Ahmedy, F.; Gani, A.; Yong, L.N. A Survey on Gamification for Health Rehabilitation Care: Applications, Opportunities, and Open Challenges. Information 2021, 12, 91.

- Faran, S.; Einav, O.; Yoeli, D.; Kerzhner, M.; Geva, D.; Magnazi, G.; van Kaick, S.; Mauritz, K.-H. Reo Assessment to Guide the ReoGo Therapy: Reliability and Validity of Novel Robotic Scores. In Proceedings of the 2009 Virtual Rehabilitation International Conference, Haifa, Israel, 29 June–2 July 2009.

- Exoskeleton Report, “Upper Body Fixed Rehabilitation: Exoskeleton Catalog Category for Upper Body Fixed (or Stationary) Wearable Exoskeletons”. Available online: https://exoskeletonreport.com/product-category/exoskeletoncatalog/medical/upper-body-fixed-rehabilitation/ (accessed on 19 November 2023).

- Nexum by Wearable Robotics. Available online: https://nexumrobotics.it/ (accessed on 19 November 2023).

- D’Antonio, E.; Galofaro, E.; Patane, F.; Casadio, M.; Masia, L. A Dual Arm Haptic Exoskeleton for Dynamically Coupled Manipulation. In Proceedings of the 2021 IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM), Delft, The Netherlands, 12–16 July 2021; IEEE: Delft, The Netherlands, 2021; pp. 1237–1242.

- Calabrò, R.S.; Russo, M.; Naro, A.; Milardi, D.; Balletta, T.; Leo, A.; Filoni, S.; Bramanti, P. Who May Benefit From Armeo Power Treatment? A Neurophysiological Approach to Predict Neurorehabilitation Outcomes. PM&R 2016, 8, 971–978.

- Calabrò, R.S. (Ed.) Translational Neurorehabilitation: Brain, Behavior and Technology; Springer International Publishing: Cham, Switzerland, 2024.

- Hsieh, Y.; Lin, K.; Wu, C.; Shih, T.; Li, M.; Chen, C. Comparison of Proximal versus Distal Upper-Limb Robotic Rehabilitation on Motor Performance after Stroke: A Cluster Controlled Trial. Sci. Rep. 2018, 8, 2091.

- Zhang, L.; Guo, S.; Sun, Q. An Assist-as-Needed Controller for Passive, Assistant, Active, and Resistive Robot-Aided Rehabilitation Training of the Upper Extremity. Appl. Sci. 2021, 11, 340.

- Guatibonza, A.; Solaque, L.; Velasco, A.; Peñuela, L. Assistive Robotics for Upper Limb Physical Rehabilitation: A Systematic Review and Future Prospects. Chin. J. Mech. Eng. 2024, 37, 69.

- Bessler, J.; Prange-Lasonder, G.B.; Schaake, L.; Saenz, J.F.; Bidard, C.; Fassi, I.; Valori, M.; Lassen, A.B.; Buurke, J.H. Safety Assessment of Rehabilitation Robots: A Review Identifying Safety Skills and Current Knowledge Gaps. Front. Robot. AI 2021, 8, 602878.

- Shoaib, M.; Asadi, E.; Cheong, J.; Bab-Hadiashar, A. Cable Driven Rehabilitation Robots: Comparison of Applications and Control Strategies. IEEE Access 2021, 9, 110396–110420.

- Mamani, W.; Sempere, N.; Casanova, A.; Morell, V.; Jara, C.A.; Ubeda, A. Upper Limb EMG-based Fatigue Estimation During End Effector Robot-assisted Activities. In Converging Clinical and Engineering Research on Neurorehabilitation V, Proceedings of the International Conference on NeuroRehabilitation, La Granja, Spain, 4–7 November 2024; Springer: Cham, Switzerland, 2024; pp. 441–445.

- Rohrer, B.; Fasoli, S.; Krebs, H.I.; Hughes, R.; Volpe, B.; Frontera, W.R.; Stein, J.; Hogan, N. Movement Smoothness Changes During Stroke Recovery. J. Neurosci. 2002, 22, 8297–8304.

- Mahfouz, D.M.; Shehata, O.M.; Morgan, E.I.; Arrichiello, F. A Comprehensive Review of Control Challenges and Methods in End-Effector Upper-Limb Rehabilitation Robots. Robotics 2024, 13, 181.

- Abdel Majeed, Y.; Awadalla, S.; Patton, J.L. Effects of Robot Viscous Forces on Arm Movements in Chronic Stroke Survivors: A Randomized Crossover Study. J. NeuroEng. Rehabil. 2020, 17, 156.

| Robot | Aim ¹ | Training Modes ² | Gamification | Data ³ |
|---|---|---|---|---|
| ALEx S | S-E | P | ✓ | K |
| ALEx RS | S-E-FA-W | - | +VR | K |
| ArmeoPower | S-E-H * | P | +VR | - |
| ArmeoSpring | S-E-H * | - | ✓ | K |
| ArmeoSpring Pro | S-E-H * | - | ✓ | K |
| Harmony SHR | S-E | P + Ass | - | - |
| Nx-A2 | S-E-H | - | +VR | K |
| ReoGo | S *-E-W | P + Ass | ✓ | K |
| Yidong-Arm1 | S-E-H | P, A, M | - | - |
| REAPlan | S-E | Adap | ✓ | - |
| InMotion Arm | S-E-H | Adap | ✓ | IE-K |

| Subject ID | Sex | Age | Arm Length (mm) | Sleep Time |
|---|---|---|---|---|
| S01 | F | 22 | 540 | 7 h |
| S02 | F | 27 | 460 | 7 h |
| S03 | M | 24 | 560 | 7 h |
| S04 | F | 23 | 490 | 7 h |
| S05 | M | 39 | 560 | 7 h |
| S06 | M | 47 | 580 | 7 h |
| S07 | M | 21 | 530 | 7 h |
| S08 | F | 24 | 460 | <5 h |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Casanova, A.; Sempere, N.; Romero, C.; Porcel, K.; Ubeda, A.; Jara, C.A. A Robotic Gamified Framework for Upper-Limb Rehabilitation. Appl. Sci. 2025, 15, 11007. https://doi.org/10.3390/app152011007

Casanova A, Sempere N, Romero C, Porcel K, Ubeda A, Jara CA. A Robotic Gamified Framework for Upper-Limb Rehabilitation. Applied Sciences. 2025; 15(20):11007. https://doi.org/10.3390/app152011007

Chicago/Turabian Style: Casanova, Anahis, Natalia Sempere, Cristina Romero, Koralie Porcel, Andres Ubeda, and Carlos A. Jara. 2025. "A Robotic Gamified Framework for Upper-Limb Rehabilitation" Applied Sciences 15, no. 20: 11007. https://doi.org/10.3390/app152011007

APA Style: Casanova, A., Sempere, N., Romero, C., Porcel, K., Ubeda, A., & Jara, C. A. (2025). A Robotic Gamified Framework for Upper-Limb Rehabilitation. Applied Sciences, 15(20), 11007. https://doi.org/10.3390/app152011007