1. Introduction

In recent years, large language models (LLMs) have transformed how businesses operate, opening new possibilities in process automation, data analysis, and customer interaction. Thanks to advanced natural language processing algorithms, LLMs can answer questions, create personalized recommendations, and support users in decision-making. In fields such as medicine, law, and education, LLMs offer tools that can significantly streamline information-intensive work by providing precise and relevant information.

However, their effectiveness is often limited by the lack of access to internal company data, which prevents the full utilization of these technologies in the context of specific organizational needs and data. This limitation hinders the ability of organizations to leverage LLMs for tasks requiring specific internal knowledge, leading to inaccurate or irrelevant responses, reduced efficiency, and missed opportunities for data-driven decision-making [1,2,3].

One solution to this problem is retrieval-augmented generation (RAG), which connects a language model to external data sources, including the company’s internal information resources. RAG combines the text generation capabilities of language models with retrieval from external and internal data sources, allowing specific organizational information to be used to generate more precise and relevant answers [4,5]. Optimizing RAG can significantly improve the accuracy and efficiency of such systems, providing contextually relevant responses based on unique company data [6].

Despite the proliferation of RAG optimization guides and frameworks, a critical gap remains, as these resources often address the selection and tuning of components (e.g., chunk size, embedding models, LLMs) in isolation. There is a lack of a unified, comprehensive methodology that systematically evaluates and optimizes the combined impact of vector database configuration (via chunking and embedding selection) and LLM selection on an RAG system’s effectiveness, particularly in practical settings involving long-form internal documents. Addressing this research gap, this article presents a novel and comprehensive methodology for measuring and optimizing RAG systems. The work focuses on analyzing the synergistic impact of key parameters, including chunk size, vector embedding models, and LLM selection, on overall system effectiveness. The study is based on an experimental RAG system using a large biographical dataset, allowing for in-depth analysis and optimization in the context of long text data. The article details the system architecture, the response generation process, and the evaluation methodology, including the use of LLMs to evaluate results, thereby providing practical guidance on selecting optimal, integrated parameters (chunk size, vector embedding models, LLMs) for improved accuracy and efficiency, particularly within the context of long text data and internal company knowledge.

The structure of this article is as follows:

Section 2 presents a review of related work in the field of retrieval-augmented generation (RAG) systems.

Section 3 describes the dataset used in the study.

Section 4 presents the architecture of the research environment.

Section 5 discusses the methodology for measuring the effectiveness of the RAG system.

Section 6 describes the methodology for optimizing the parameters of the RAG system.

Section 7 analyzes the results of the experiments conducted.

Section 8 summarizes the key findings of the research.

Section 9 discusses the limitations of the work, and

Section 10 proposes directions for future research.

2. Related Work

In recent years, retrieval-augmented generation (RAG) technology has developed rapidly, as reflected in the growing number of scientific publications. Vector databases, which enable efficient storage and retrieval of semantic information, are a key element of these systems. In the era of large datasets, optimizing vector database algorithms becomes crucial [7]. Researchers are analyzing various approaches to approximate nearest neighbor search (ANNS), such as hashing, tree-based, graph-based, and quantization methods. Hashing methods offer speed at the cost of accuracy, while tree-based methods provide better accuracy but are more computationally demanding [8]. In specific applications, such as the analysis of atmospheric motion vector data, precise processing and evaluation of vector data in large-scale data processing systems are crucial [9].

Vector database management techniques constitute another significant area of research, focusing on the challenges associated with feature vector processing, such as the ambiguous concept of semantic similarity, large vector sizes, and the difficulty of handling hybrid queries. These studies provide an overview of query processing, storage and indexing, query optimization, and existing vector database systems, including vector quantization and data compression techniques [10]. Software testing for vector databases cannot be overlooked either: generating test data, defining patterns of correct results, and evaluating tests are crucial for ensuring greater reliability and trust in vector database systems [11]. In practical applications, such as question-answering systems in science, vector databases are used to create reliable information systems that provide verifiable answers, e.g., by using RAG techniques to retrieve information from scientific publications [12].

Optimizing vector databases is crucial, but a comprehensive view of RAG systems that considers the interactions and optimization of other components, such as large language models (LLMs), is equally important. Research shows that simple instructions with task demonstrations significantly improve the performance of LLMs in knowledge extraction [13]. Other studies analyze various RAG methods, examining the impact of vector databases, embedding models, and LLMs. They emphasize the importance of balancing context quality with similarity-based ranking methods, as well as understanding the trade-offs between similarity scores, token consumption, execution time, and hardware utilization [2]. Related work provides practical guidance on the implementation, optimization, and evaluation of RAG, including the selection of appropriate retriever and generator models [14].

Research also focuses on analyzing the impact of various components and configurations in RAG systems, such as language model size, prompt design, document fragment size, knowledge base size, search step, query expansion techniques, and multilingual knowledge bases [15]. In the context of online applications, the importance of filtering less relevant documents using small LLMs and decomposing generation tasks into smaller subtasks is emphasized. The effectiveness of prompt engineering and fine-tuning is also highlighted as crucial for improving response quality [16,17]. Techniques for fine-tuning LLMs in closed-domain applications are analyzed, comparing the effectiveness of various optimization methods and providing valuable information on adapting LLMs to specific domain requirements [18].

While the existing literature, including recent surveys and tuning frameworks (e.g., [2,14]), offers detailed insights into individual RAG components, such as vector database search algorithms or LLM prompting techniques, it often lacks an integrated perspective. Specifically, a systematic methodology for simultaneously tuning and evaluating the retriever–generator dependency, where the vector database parameters (chunking strategy, embedding model) are jointly optimized with the LLM selection for a given internal data scenario, remains underdeveloped. This article addresses this deficiency by presenting a comprehensive methodology that enables the evaluation and improvement of RAG systems by analyzing the combined impact of key parameters, such as chunk size, vector embedding models, and LLM selection, on system effectiveness. This unique approach, focusing on the practical, interconnected aspects of RAG implementation in a corporate environment with long text data, constitutes a significant contribution to the development of this field, offering valuable guidance for engineers and researchers working on improving RAG-based systems.

3. Research Dataset

In this study, a retrieval-augmented generation (RAG) system utilizing a large biographical dataset was developed to present and analyze the methodology for measuring and optimizing RAG systems in the context of vector databases and large language models (LLMs).

The study used a publicly available biographical dataset in CSV format from Kaggle, the PII (Personally Identifiable Information) External Dataset, comprising 4434 rows [19]. Each row contains data on a fictional person generated by LLMs. Two key columns were used: “biographical essay” (content) and “full name” (metadata). The biographical essays average 352 tokens (ranging from 96 to 607) and 1975 characters (ranging from 498 to 3769). Example snippets of the data are provided in Appendix A, Table A1.

The use of a fictional biographical dataset provides a controlled environment for evaluating the RAG methodology. This approach allows for systematic variation in parameters and evaluation of results without the complexities and privacy concerns associated with real-world, sensitive data.

4. Research Environment

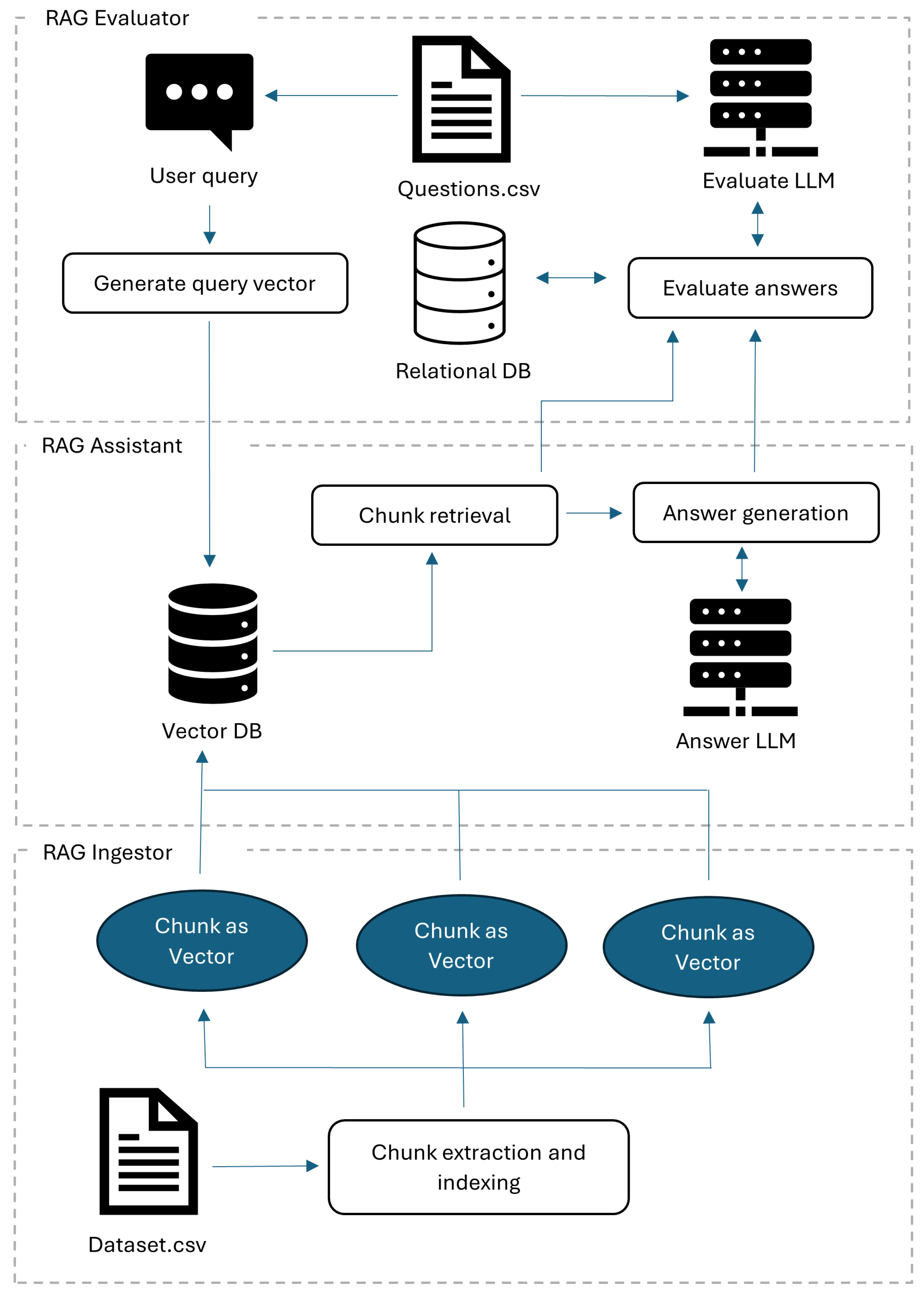

Figure 1 illustrates the architecture of the RAG system, comprising Python 3.12.2 programs and external components:

RAG Ingestor. A Python program used to load data from CSV files into the vector database.

RAG Assistant. A Python program providing an API through which user queries are passed. Vector embedding models used: bge-small-en-v1.5 [20], bge-base-en-v1.5 [21], bge-large-en-v1.5 [22].

RAG Evaluator. A Python program enabling system evaluation based on question sets and expected answers.

Vector DB. Qdrant vector database [23] in the Qdrant Cloud environment [24], storing biographical data and returning query results.

Answer LLM. LLM models Gemini-2.0-flash [25], Gemini-2.0-flash-lite [25], Mistral-saba-24b [26], and Gemma2-9b-it [27] from Google AI Studio [28] and Groq Cloud [29], generating answers based on the Vector DB query results.

Evaluate LLM. The Gemini-2.0-flash model from Google AI Studio, evaluating Vector DB and Answer LLM responses.

Relational DB. PostgreSQL relational database [30], storing the Vector DB results, which are subsequently passed to the Answer LLM. This database also stores experiment results.

To transform raw data into searchable vectors and generate answers, the RAG system employs a structured data processing flow. Key steps include chunk extraction, where biographical essays are divided into chunks (10, 25, 50, or 100 tokens) with proportional overlap, followed by chunk indexing using the selected embedding model. The chunk retrieval process utilizes a hybrid search, combining vector similarity with metadata filtering to ensure complete essays are retrieved for the relevant persons found.
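The chunk extraction step can be illustrated with a minimal sketch. It is not the authors' code: it assumes that whitespace-separated words stand in for the embedding model's tokens and that the overlap is a fixed fraction of the chunk size, with the hypothetical parameter `overlap_ratio` defaulting to 0.2 because the exact proportion is not stated here.

```python
def chunk_tokens(tokens: list[str], chunk_size: int, overlap_ratio: float = 0.2) -> list[list[str]]:
    """Split a token list into fixed-size chunks whose overlap is proportional to chunk_size."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap_ratio < 1:
        raise ValueError("overlap_ratio must be in [0, 1)")
    overlap = int(chunk_size * overlap_ratio)  # e.g. 25 tokens * 0.2 -> 5 shared tokens
    step = max(1, chunk_size - overlap)        # guard keeps the window moving forward
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):  # this chunk already reaches the end of the essay
            break
    return chunks


def chunk_text(text: str, chunk_size: int, overlap_ratio: float = 0.2) -> list[str]:
    # Assumption: whitespace words approximate model tokens in this sketch.
    return [" ".join(c) for c in chunk_tokens(text.split(), chunk_size, overlap_ratio)]


if __name__ == "__main__":
    print(chunk_text("a b c d e f g h i j", chunk_size=4, overlap_ratio=0.25))
```

With a chunk size of 4 and 25% overlap, each chunk repeats the last token of the previous one, so the ten-word example yields `['a b c d', 'd e f g', 'g h i j']`. Stopping once a chunk reaches the end of the text avoids emitting a trailing chunk that would be fully contained in its predecessor.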

A detailed description of the data processing flow, including the specific chunking and retrieval logic, is provided in Appendix A. The configuration options for the RAG programs are summarized in Appendix A, Table A2.
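The hybrid retrieval logic can be sketched as follows, under stated assumptions: in-memory lists replace the Qdrant collection, `chunk_index` and `essays_by_name` are hypothetical names, and the k-limit is assumed to apply to chunk hits before they are expanded to complete essays via the “full name” metadata. The actual system performs the similarity search and metadata filtering inside Qdrant, which this sketch does not reproduce.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def hybrid_retrieve(
    query_vec: list[float],
    chunk_index: list[tuple[str, list[float]]],
    essays_by_name: dict[str, str],
    k: int,
) -> list[str]:
    """Rank chunks by similarity, then expand the hits to complete essays by full name.

    chunk_index: (full_name, chunk_vector) pairs, a stand-in for a vector DB collection.
    essays_by_name: full_name -> complete essay, a stand-in for a metadata-filtered lookup.
    """
    top_chunks = sorted(chunk_index, key=lambda item: cosine(query_vec, item[1]), reverse=True)[:k]
    # Several chunks may come from the same essay: keep each person once, in rank order.
    names = list(dict.fromkeys(name for name, _ in top_chunks))
    return [essays_by_name[name] for name in names]
```

Deduplicating on the full name before the lookup ensures that each essay is passed to the answer LLM only once, so at most k complete essays reach the prompt.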

RAG Ingestor retrieves data from CSV files and loads it into collections in the Vector DB. When loading data, the collection name, vector model, and chunk size for text data must be specified. This allows for the creation of various collections with different chunk sizes and/or vector models. Example collections are presented in Table 1.
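Because the vector model and chunk size are fixed per collection, each of the twelve combinations of the three bge models and four chunk sizes used in this study requires a separate collection. A sketch of how such variants might be enumerated follows; the `IngestConfig` class and the `bio_<model>_<chunk size>` naming scheme are hypothetical, and the real collection names are those in Table 1.

```python
from dataclasses import dataclass
from itertools import product

VECTOR_MODELS = ["bge-small-en-v1.5", "bge-base-en-v1.5", "bge-large-en-v1.5"]
CHUNK_SIZES = [10, 25, 50, 100]


@dataclass(frozen=True)
class IngestConfig:
    collection_name: str  # hypothetical naming scheme
    vector_model: str
    chunk_size: int


def collection_variants(models=VECTOR_MODELS, chunk_sizes=CHUNK_SIZES) -> list[IngestConfig]:
    """One collection per (model, chunk size) pair; both are fixed once a collection is loaded."""
    configs = []
    for model, size in product(models, chunk_sizes):
        short = model.split("-")[1]  # "small", "base" or "large"
        configs.append(IngestConfig(f"bio_{short}_{size}", model, size))
    return configs
```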

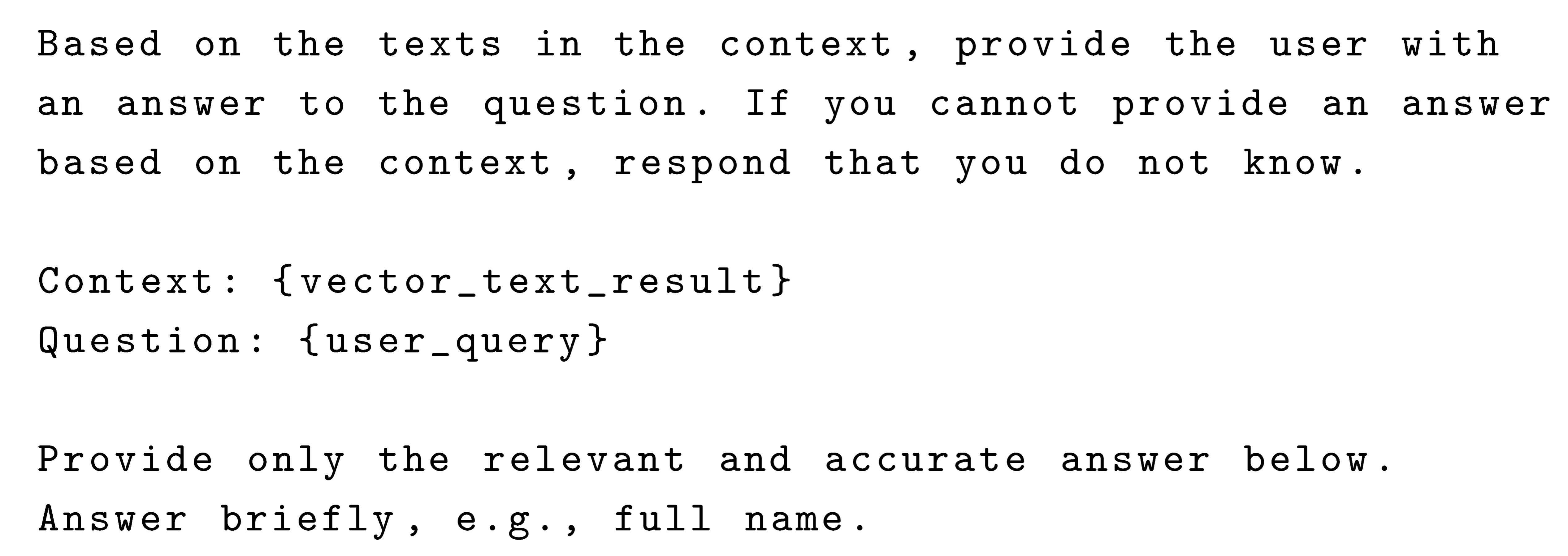

The RAG assistant provides an API through which user queries are passed and responses are returned. To provide a response, the user query is first passed to the vector DB, and then the received results are passed to the answer LLM to generate the final response. Listing 1 shows an example prompt that is passed to the answer LLM to obtain the final response. In this prompt, the vector_text_result parameter is populated with the response from the vector DB, and the user_query parameter is populated with the question from the questions.csv file.
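Filling the answer prompt can be sketched with Python's `str.format`. The template text below is a hypothetical placeholder (the actual wording is in Listing 1); only the two field names, `vector_text_result` and `user_query`, come from the article, and the brevity instruction mirrors the “answer briefly” constraint mentioned in Section 9.

```python
# Hypothetical template; the article's actual prompt text is shown in Listing 1.
ANSWER_PROMPT = (
    "Answer the question using only the context below. Answer briefly.\n"
    "Context:\n{vector_text_result}\n"
    "Question: {user_query}\n"
)


def build_answer_prompt(vector_text_result: str, user_query: str) -> str:
    """Substitute the vector DB result and the user question into the template."""
    return ANSWER_PROMPT.format(vector_text_result=vector_text_result, user_query=user_query)


if __name__ == "__main__":
    print(build_answer_prompt("Jane Doe is a chemist from Lyon.", "Who is a chemist?"))
```

Because `str.format` does not re-interpret substituted values, braces that happen to appear inside retrieved essays are passed through unchanged.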

The RAG evaluator retrieves user questions from the questions.csv file, which contains a set of questions and expected answers for both the vector DB and the answer LLM.

Table 2 presents examples of questions and answers subject to evaluation.
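A sketch of how the RAG evaluator might read questions.csv with the standard `csv` module follows. The column names `question`, `vector_expected_answer`, and `llm_expected_answer` are assumptions based on the field names used in this article; the check fails early if a column is missing rather than partway through an evaluation run.

```python
import csv
import io

# Assumed header of questions.csv; adjust if the real file uses different names.
REQUIRED_COLUMNS = ("question", "vector_expected_answer", "llm_expected_answer")


def load_questions(f) -> list[dict[str, str]]:
    """Read question rows from an open text file and check that the expected columns exist."""
    reader = csv.DictReader(f)
    missing = [c for c in REQUIRED_COLUMNS if c not in (reader.fieldnames or [])]
    if missing:
        raise ValueError(f"questions.csv is missing columns: {missing}")
    return list(reader)


if __name__ == "__main__":
    sample = (
        "question,vector_expected_answer,llm_expected_answer\n"
        "Who studied chemistry?,Essay mentioning chemistry studies,Jane Doe\n"
    )
    # With a real file: open("questions.csv", newline="", encoding="utf-8")
    print(load_questions(io.StringIO(sample)))
```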

Listing 1. Prompt for the answer LLM.

The Python programs for vectorization, evaluation, and RAG system orchestration (the RAG ingestor, the RAG assistant, and the RAG evaluator) were executed on a local computer running Windows 11 Pro with an Intel(R) Core(TM) i5-9300H CPU @ 2.40 GHz (4 cores/8 threads) and 16.0 GB of RAM. All core LLM and vector DB tests, however, were conducted in cloud environments, specifically Qdrant Cloud [24], Google AI Studio [28], and Groq Cloud [29]. The vectorization phase, which requires high computational power, especially with the bge-large-en-v1.5 model, was performed on a designated compute instance.

5. Measurement Methodology

The evaluation of the accuracy of responses from the vector DB is based on the assumption that if, for a given question from the questions.csv file (

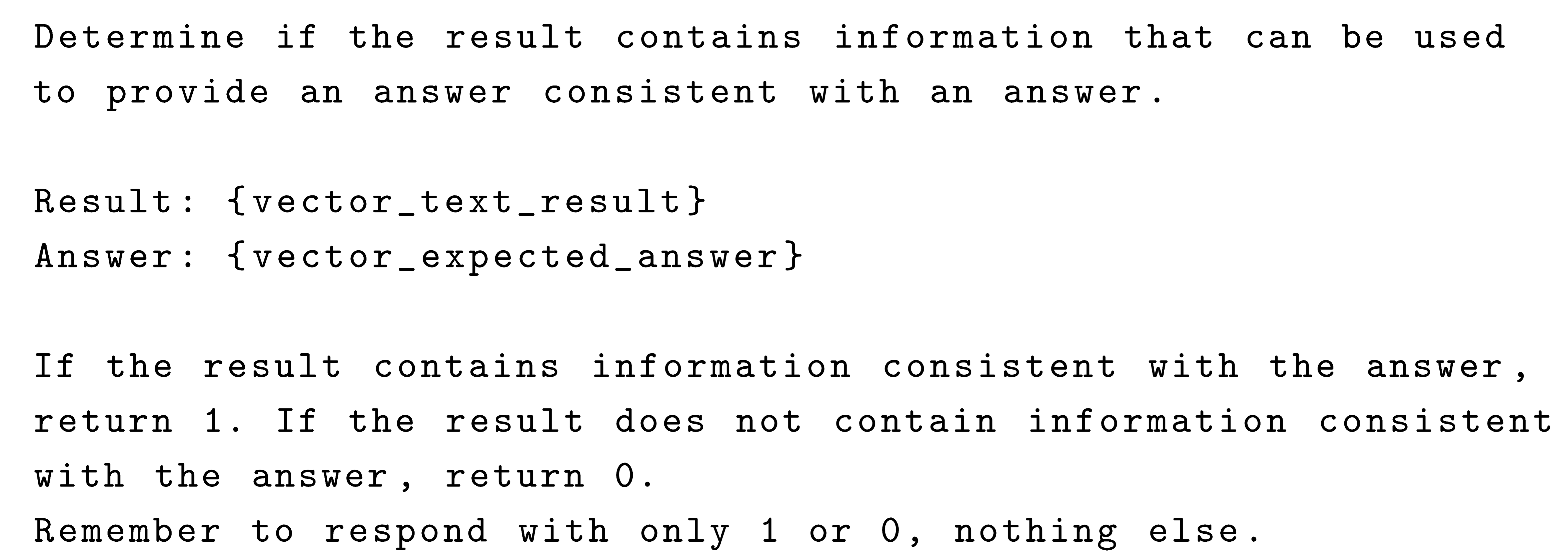

Table 2), documents (biographical essays) are returned that contain information consistent with the “vector expected answer”, the response is considered correct; otherwise, it is considered incorrect. To automate this process, the evaluation LLM based on the Gemini-2.0-flash model was used in the RAG evaluator.

Table 3 presents example responses with their accuracy assessments, and Listing 2 shows the prompt sent to the evaluation LLM. In this prompt, the vector_text_result parameter contains the response from the Vector DB, and the vector_expected_answer parameter contains the vector expected answer from the questions.csv file.
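The judging step can be sketched as a prompt builder plus a strict verdict parser. The prompt wording and the one-word CORRECT/INCORRECT reply format are assumptions (the actual prompt is in Listing 2); only the field names `vector_text_result` and `vector_expected_answer` come from the article. Matching the whole first word matters because the string INCORRECT contains CORRECT, so a naive substring test would misclassify negative verdicts.

```python
# Hypothetical wording; the article's actual evaluation prompt is shown in Listing 2.
EVAL_PROMPT = (
    "Does the retrieved text contain information consistent with the expected answer?\n"
    "Retrieved text:\n{vector_text_result}\n"
    "Expected answer: {vector_expected_answer}\n"
    "Reply with exactly one word: CORRECT or INCORRECT."
)


def build_eval_prompt(vector_text_result: str, vector_expected_answer: str) -> str:
    return EVAL_PROMPT.format(
        vector_text_result=vector_text_result,
        vector_expected_answer=vector_expected_answer,
    )


def parse_verdict(llm_reply: str) -> bool:
    """Map the judge's reply to True/False; reject anything that is not a clear verdict."""
    words = llm_reply.strip().split()
    first = words[0].strip(".:!*").upper() if words else ""
    if first == "CORRECT":
        return True
    if first == "INCORRECT":
        return False
    raise ValueError(f"Unrecognised verdict: {llm_reply!r}")
```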

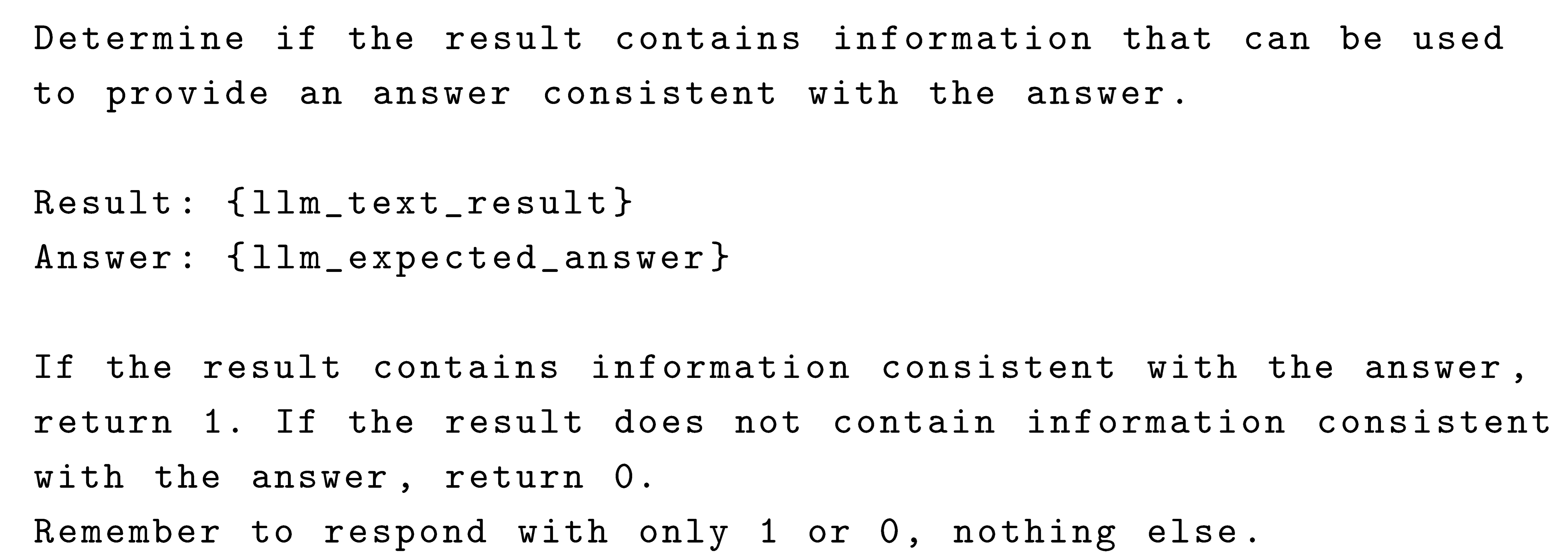

When evaluating the final response of the RAG system, it is also necessary to consider the answer LLM, which is responsible for paraphrasing the results returned by the vector DB, i.e., providing the final user response. The accuracy of the answer LLM response is evaluated analogously to that of the vector DB response. If the answer LLM response contains information consistent with the “LLM expected answer” from the questions.csv file (Table 2), it is considered correct; otherwise, it is considered incorrect. To automate this process, the evaluation LLM was also used.
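The scoring loop shared by both stages can be sketched as below. `get_output` stands for either the vector DB query or the full RAG pipeline, and `judge` for the evaluation LLM; both are hypothetical callables, and the test stub uses simple substring matching in place of Gemini-2.0-flash. Accuracy is the percentage of questions judged correct, rounded to two decimals like the values quoted in Section 7.

```python
from typing import Callable


def accuracy_percent(correct: int, total: int) -> float:
    """Share of questions judged correct, as a percentage rounded to two decimals."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * correct / total, 2)


def evaluate(
    rows: list[dict[str, str]],
    get_output: Callable[[str], str],
    judge: Callable[[str, str], bool],
    expected_key: str,
) -> float:
    """Score one stage; expected_key is 'vector_expected_answer' or 'llm_expected_answer'."""
    correct = sum(bool(judge(get_output(row["question"]), row[expected_key])) for row in rows)
    return accuracy_percent(correct, len(rows))
```

With 300 questions, each question is worth about 0.33 percentage points, so 298 correct answers give 99.33% and 293 give 97.67%.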

Table 4 presents example responses from the answer LLM with their accuracy assessment, and Listing 3 shows the prompt that is sent to the evaluation LLM.

Listing 2. Prompt for evaluation of the Vector DB results.

Listing 3. Prompt for evaluation of LLM results.

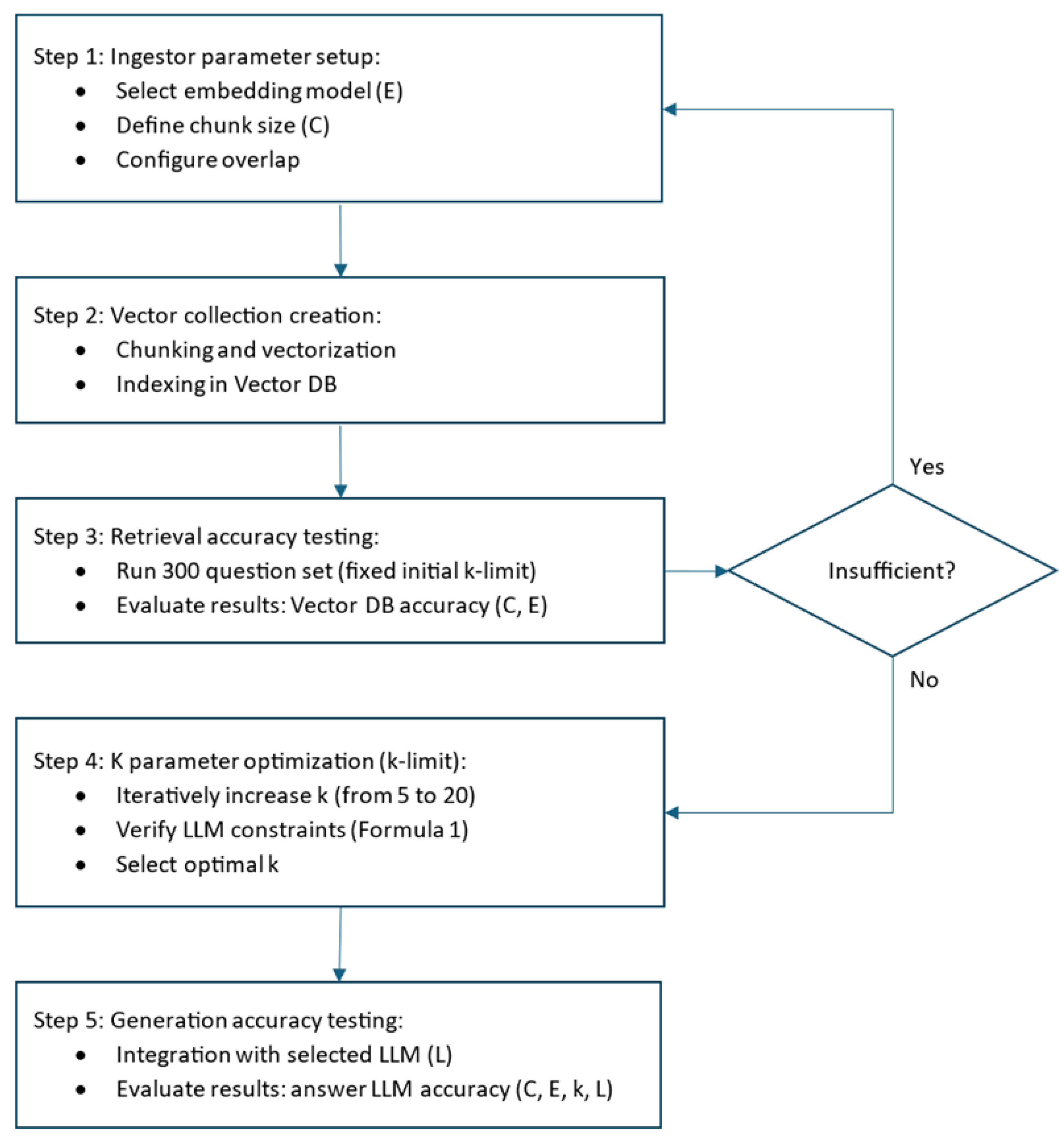

6. Optimization Methodology

In the vector database domain, at the collection loading stage (Figure 2: Steps 1–3), the parameters influencing search effectiveness are the chunk size and the vector embedding model. These parameters are critical because they cannot be changed after the collection is loaded. Therefore, tests should be conducted on various collection variants, differing in chunk size and vector embedding model, using prepared files with questions and expected answers. The tests determine the chunk size that best balances semantic coherence and retrieval precision and evaluate the impact of different vector embedding models on the accuracy of similarity-based search.

In the context of tuning vector database search (Figure 2: Step 4), the k-limit parameter can be adjusted to return enough results for high accuracy while avoiding an excessive number that could slow down system responses. For example, increasing the k-limit from 5 to 10 can improve effectiveness. The k-limit can be selected in various ways, e.g., depending on the collection size. However, in RAG systems the k-limit is constrained by the context window of the answer LLM, which generates the final response. The maximum k-limit can be calculated using Formula (1):

$$\text{max-k-limit} = \left\lfloor \frac{\text{llm-ctx-win-tokens} - \text{prompt-tokens} - \text{question-tokens}}{\text{largest-doc-tokens}} \right\rfloor \tag{1}$$

where

max-k-limit is the maximum allowable number of retrieved documents (the upper bound for the k-limit);

llm-ctx-win-tokens is the maximum number of tokens accepted by the model;

prompt-tokens is the number of tokens reserved for the prompt;

question-tokens is the number of tokens reserved for the user question;

largest-doc-tokens is the number of tokens in the largest document loaded into the vector database.

This formula calculates the maximum k-limit by dividing the remaining context window tokens (after accounting for prompt and question) by the size of the largest document, ensuring that all retrieved documents can fit within the LLM’s context window.
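Formula (1) translates directly into integer arithmetic. In the sketch below, the context window sizes (8192 tokens for Gemma2-9b-it and 32,768 for Mistral-saba-24b) are assumptions to be checked against Table 5; with 50 tokens each for the prompt and question and a largest document of 607 tokens, they reproduce the k-limits of 13 and 53 discussed in this section.

```python
def max_k_limit(llm_ctx_win_tokens: int, prompt_tokens: int, question_tokens: int,
                largest_doc_tokens: int) -> int:
    """Formula (1): how many largest-size documents fit after reserving prompt and question tokens."""
    if largest_doc_tokens <= 0:
        raise ValueError("largest_doc_tokens must be positive")
    remaining = llm_ctx_win_tokens - prompt_tokens - question_tokens
    return max(0, remaining) // largest_doc_tokens  # floor: a partially fitting document is excluded


# Assumed context windows in tokens; verify against Table 5 and the provider documentation.
CONTEXT_WINDOWS = {"Gemma2-9b-it": 8192, "Mistral-saba-24b": 32768}

if __name__ == "__main__":
    for model, ctx in CONTEXT_WINDOWS.items():
        print(model, max_k_limit(ctx, prompt_tokens=50, question_tokens=50, largest_doc_tokens=607))
```

Re-running the calculation with the 800-token document size used as a reserve scenario below, `max_k_limit(8192, 50, 50, 800)`, gives 10, which matches the reserve value recommended for Gemma2-9b-it.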

Table 5 presents example k-limit calculations for LLM models with different context windows, based on the biographical dataset. Assuming that 50 tokens are reserved for each of the prompt and the question, and that the largest document contains 607 tokens, the k-limit for Gemma2-9b-it, the model with the smallest context window, is 13. In this case, it is recommended to keep a reserve and set the k-limit to a lower value, e.g., 10. A reserve is needed if documents may grow (e.g., to 800 tokens) or if the prompt and question become longer. For the Mistral-saba-24b model, the k-limit is 53, and for Gemini-2.0-flash and Gemini-2.0-flash-lite, it is 1646. Thus, for the latter two models, cost rather than context window size is the limiting factor. On the Google AI Studio platform, the cost of 1 million input tokens is $0.10 for Gemini-2.0-flash and $0.075 for Gemini-2.0-flash-lite [31]. On the Groq Cloud platform, a developer account is subject to per-minute token limits: 500,000 for Mistral-saba-24b and 30,000 for Gemma2-9b-it [32]. Therefore, it is recommended to restrict the k-limit to reasonable values in the range of 5 to 20.

Because of the costs and limits associated with LLM usage, it is worth evaluating several models that meet the requirements, which can yield savings. For example, choosing Gemini-2.0-flash-lite instead of Gemini-2.0-flash results in a 25% cost reduction. This difference becomes highly relevant in production environments. When processing 1,000,000 user queries with an average of 5000 input tokens per query (context + prompt + question), the total input volume is 5 billion tokens. The input cost would be $500.00 for Gemini-2.0-flash, compared with only $375.00 for Gemini-2.0-flash-lite, which confirms the 25% saving in a high-volume scenario. It is therefore crucial to test various LLM models and select the optimal one. The k-limit is optimized through iterative testing: start with a conservative value (e.g., 5) and increase it gradually until accuracy gains diminish, while monitoring the impact on response time.
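The cost comparison above can be reproduced with a few lines of arithmetic. The sketch uses `decimal.Decimal` to avoid binary floating-point rounding in currency values; the prices are the input-token prices quoted above, and output-token costs are ignored, as in the text.

```python
from decimal import Decimal

# Input prices in USD per 1 million tokens on Google AI Studio, as quoted in the text [31].
PRICE_PER_MILLION_INPUT = {
    "Gemini-2.0-flash": Decimal("0.10"),
    "Gemini-2.0-flash-lite": Decimal("0.075"),
}


def input_cost(queries: int, tokens_per_query: int, price_per_million: Decimal) -> Decimal:
    """Total input-token cost in USD for a batch of queries."""
    total_tokens = queries * tokens_per_query
    return price_per_million * total_tokens / 1_000_000


if __name__ == "__main__":
    costs = {m: input_cost(1_000_000, 5000, p) for m, p in PRICE_PER_MILLION_INPUT.items()}
    saving = 1 - costs["Gemini-2.0-flash-lite"] / costs["Gemini-2.0-flash"]
    print(costs, f"saving = {saving:.0%}")
```

For 1,000,000 queries of 5000 input tokens each, `input_cost` returns 500 USD for Gemini-2.0-flash and 375 USD for Gemini-2.0-flash-lite, a 25% saving.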

Because chunk size, vector model, k-limit, and LLM model are all configurable in the RAG system, a wide range of experiments and optimizations (Figure 2: Step 5) can be conducted. Specifically, the following can be examined:

1. The impact of different chunk sizes on the quality of vector search results.
2. The impact of different vector embedding models on the quality of vector search results.
3. The impact of changing the k-limit on the results.
4. The effectiveness of different LLM models in generating responses based on vector database results.
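Because the chunk size and vector model are fixed at load time while the k-limit is a query-time parameter, the retrieval experiments form a grid over collections and k-limits. A sketch using the parameter values of the experiments in Section 7 follows; `retrieval_grid` is a hypothetical helper, not part of the described programs.

```python
from itertools import product

VECTOR_MODELS = ["bge-small-en-v1.5", "bge-base-en-v1.5", "bge-large-en-v1.5"]
CHUNK_SIZES = [10, 25, 50, 100]
K_LIMITS = [5, 10, 15, 20]


def retrieval_grid():
    """Yield every (vector model, chunk size, k-limit) combination.

    Model and chunk size select a pre-loaded collection; the k-limit varies only at
    query time, so 12 collections are enough for all 48 retrieval experiments.
    """
    yield from product(VECTOR_MODELS, CHUNK_SIZES, K_LIMITS)
```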

In summary, the research methodology is based on creating a configurable experimental environment that enables systematic measurement and optimization of the RAG system in the context of biographical data. The use of a publicly available dataset, modular software architecture, and the ability to precisely configure key parameters allow for comprehensive research and the acquisition of valuable insights regarding the optimization of RAG systems.

7. Results and Analysis

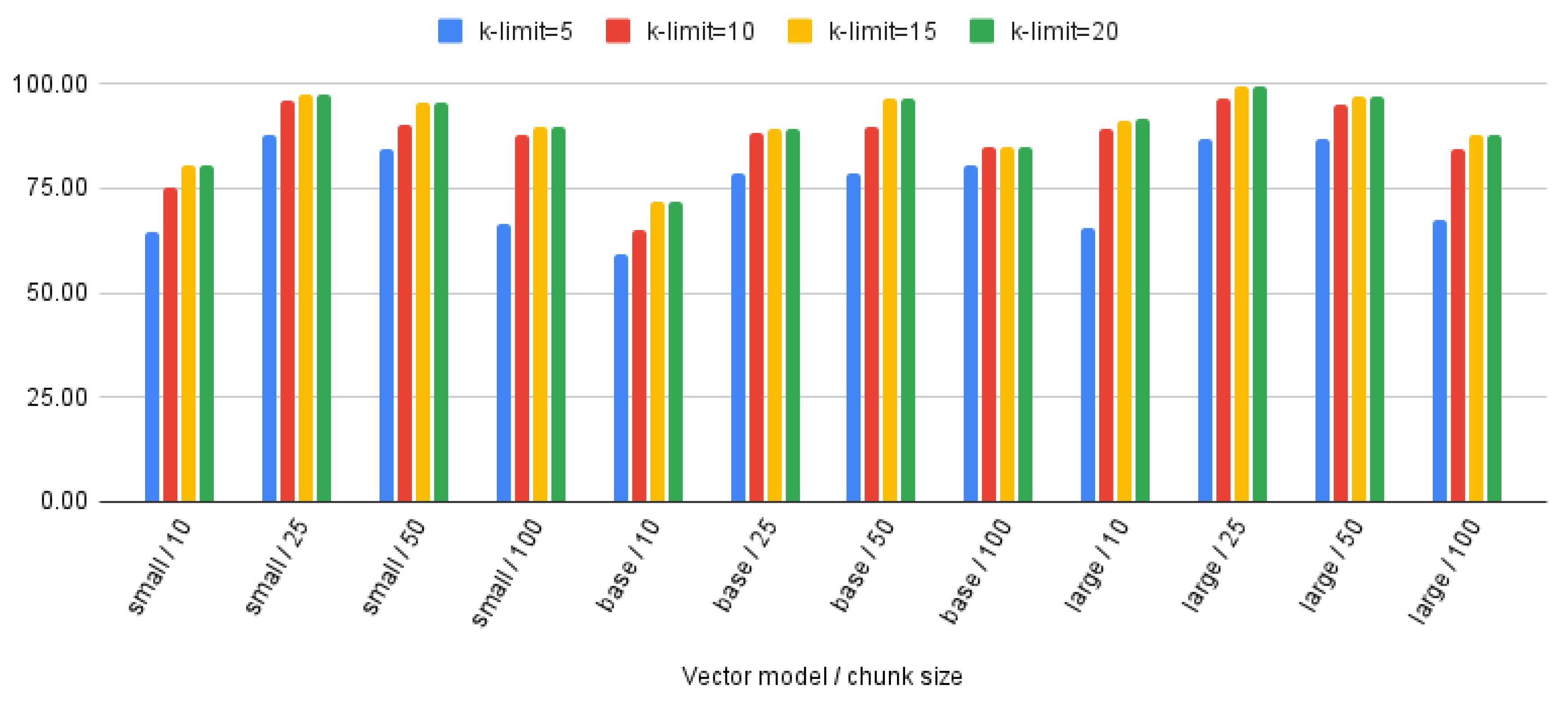

The purpose of the experiments conducted was to compare different RAG system configurations to select optimal operating parameters for the biographical dataset. The accuracy metric, expressed as a percentage, was used to evaluate the effectiveness of responses from both the vector DB and the answer LLM.

In the first phase, the correctness of document retrieval in the vector DB was measured. Each individual experiment involved running 300 questions. These 300 questions were selected using a stratified random sampling method to ensure the evaluation set is representative of the full range of biographical topics contained within the PII External Dataset [19]. This large sample size reflects the final operational performance and serves as the primary measure of statistical power for the reported results. The result for each configuration is reported as the final observed accuracy from this single, comprehensive run. A total of 48 experiments were conducted with various configuration variants, including the following:

1. Vector models: bge-small-en-v1.5, bge-base-en-v1.5, bge-large-en-v1.5.
2. Chunk sizes: 10, 25, 50, 100.
3. k-limit values: 5, 10, 15, 20.
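Stratified sampling of the evaluation questions can be sketched as follows. The article does not define the strata, so the sketch assumes each question carries a hypothetical topic label (`stratum_of` extracts it); quotas are proportional to stratum size, and the seats lost to rounding go to the strata with the largest fractional parts, so the sample size is always exactly `n`.

```python
import random
from collections import defaultdict


def stratified_sample(items, stratum_of, n, seed=0):
    """Draw n items with per-stratum quotas proportional to stratum size."""
    if not 0 <= n <= len(items):
        raise ValueError("n must be between 0 and len(items)")
    groups = defaultdict(list)
    for item in items:
        groups[stratum_of(item)].append(item)
    exact = {s: n * len(g) / len(items) for s, g in groups.items()}
    quotas = {s: int(q) for s, q in exact.items()}
    # Largest-remainder rounding: strata with the biggest fractional parts get the leftover seats.
    leftover = n - sum(quotas.values())
    for s in sorted(exact, key=lambda s: exact[s] - quotas[s], reverse=True)[:leftover]:
        quotas[s] += 1
    rng = random.Random(seed)  # a fixed seed keeps the evaluation set reproducible
    sample = []
    for s, group in groups.items():
        sample.extend(rng.sample(group, quotas[s]))
    return sample
```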

The results presented in Table 6 and Figure 3 indicate that the bge-large-en-v1.5 vector model achieved the best results with a chunk size of 25, obtaining 99.33% accuracy for k-limits 15 and 20, and 96.67% for k-limit 10. The bge-small-en-v1.5 model also performed very well with a chunk size of 25, achieving 97.67% accuracy for k-limits 15 and 20, and 96.00% for k-limit 10. The small observed difference (less than 2 percentage points) between the top bge-large and bge-small configurations suggests a high degree of empirical stability across these large-sample runs. Notably, the bge-base-en-v1.5 model achieved its best results with a chunk size of 50, unlike the other vector models. The bge-small-en-v1.5 model requires significantly less computational power than bge-large-en-v1.5, especially when loading data into a vector database collection. If collections are reloaded frequently, it is worth considering the configuration bge-small-en-v1.5 with chunk size 25 and k-limit 15, or k-limit 10 when the answer LLM has a smaller context window.

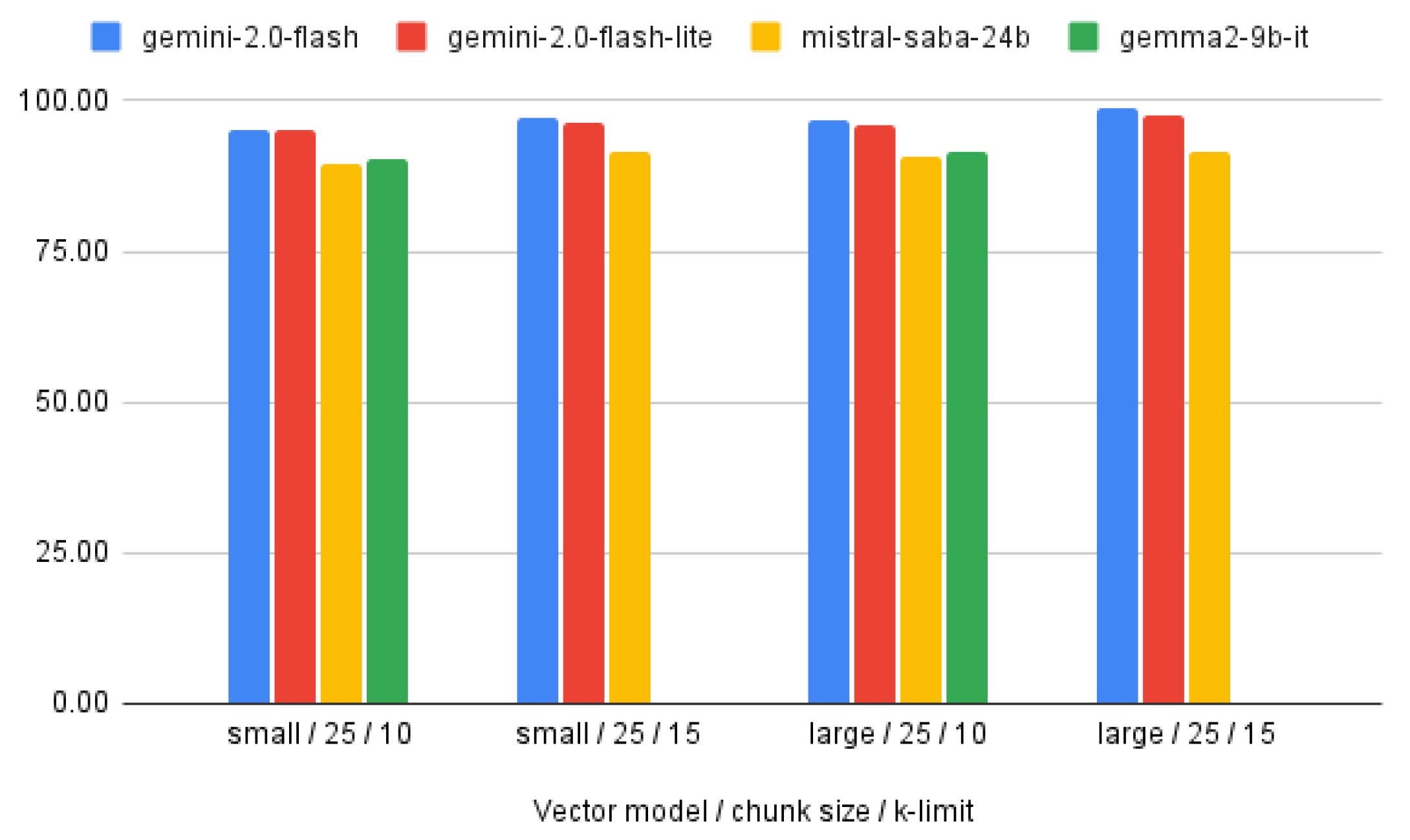

In the second phase, the correctness of the final RAG system response, generated by the answer LLM from the vector DB results, was measured. Each experiment involved passing 300 questions, together with the vector DB results, to the answer LLM and comparing the obtained response with the expected response (“llm_expected_answer”) from the questions.csv file. Fourteen experiments were conducted with various RAG configurations, covering both the LLM models and the vector search parameters:

1. LLM models: Gemini-2.0-flash, Gemini-2.0-flash-lite, Mistral-saba-24b, Gemma2-9b-it.
2. Vector search configurations (vector model/chunk size/k-limit): small/25/10, small/25/15, large/25/10, large/25/15.

The results presented in Table 7 and Figure 4 indicate that, for the vector search configuration small/25/10, the Gemini-2.0-flash model achieved the best result (95.33% accuracy), surpassing Gemini-2.0-flash-lite by 0.33 percentage points. The Mistral-saba-24b model obtained 89.67%, which is 5.66 percentage points lower than Gemini-2.0-flash, and the Gemma2-9b-it model achieved 90.33%, which is 5 percentage points lower than Gemini-2.0-flash. In the large/25/10 configuration, Gemma2-9b-it achieved 91.67%, again 5 percentage points lower than Gemini-2.0-flash. The least costly vector search configuration, small/25/10 with Gemma2-9b-it (90.33%), is 8.34 percentage points below the best configuration, large/25/15 with Gemini-2.0-flash (98.67%). If the goal is the highest accuracy, the large/25/15 vector search configuration with Gemini-2.0-flash (98.67%) should be chosen. If the goal is to reduce LLM costs by 25%, Gemini-2.0-flash-lite (97.67%) is worth considering; the observed difference of 1 percentage point between these top configurations suggests that Gemini-2.0-flash-lite offers high reliability at lower operating costs. If the goal is the least computationally expensive configuration, small/25/10 with Gemma2-9b-it (90.33%) should be considered. If the system must run in an on-premise environment where only open models such as Mistral-saba-24b and Gemma2-9b-it are available, the best choice is the large/25/10 vector configuration with Gemma2-9b-it (91.67%); with lower computational power, the small/25/10 configuration with Gemma2-9b-it (90.33%) should be chosen.
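The decision rules in this paragraph amount to filtering the results by constraints and taking the most accurate remaining configuration. The sketch below encodes only accuracies stated explicitly in this paragraph (the remaining rows of Table 7 are omitted), and `best_config` is a hypothetical helper.

```python
# (vector search configuration, answer LLM, accuracy %) as stated in the text above.
RESULTS = [
    ("large/25/15", "Gemini-2.0-flash", 98.67),
    ("small/25/10", "Gemini-2.0-flash", 95.33),
    ("large/25/10", "Gemma2-9b-it", 91.67),
    ("small/25/10", "Gemma2-9b-it", 90.33),
    ("small/25/10", "Mistral-saba-24b", 89.67),
]

OPEN_MODELS = {"Mistral-saba-24b", "Gemma2-9b-it"}


def best_config(results, allowed_models=None, allowed_vector_configs=None):
    """Return the most accurate (config, model, accuracy) row that satisfies the optional constraints."""
    candidates = [
        row for row in results
        if (allowed_models is None or row[1] in allowed_models)
        and (allowed_vector_configs is None or row[0] in allowed_vector_configs)
    ]
    if not candidates:
        raise ValueError("no configuration satisfies the constraints")
    return max(candidates, key=lambda row: row[2])
```

Restricting `allowed_models` to the open models reproduces the on-premise recommendation (large/25/10 with Gemma2-9b-it, 91.67%), and additionally restricting the vector configuration to small/25/10 reproduces the low-compute choice (90.33%).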

8. Conclusions

The iterative optimization methodology, detailed in the previous sections, yielded clear, actionable guidance on selecting a RAG configuration based on varying project priorities. These core takeaways, distilled from the experimental trade-offs between accuracy, computational cost, and hardware constraints, are summarized in Table 8.

The experiments conducted in this article provided valuable insights into the optimization of RAG systems, particularly in the context of biographical data. The research demonstrated that the selection of optimal parameters, such as chunk size, vector model, and LLM model, is crucial for system effectiveness. However, as the experiments showed, this selection is not straightforward and depends on many factors that need to be considered.

First and foremost, the context window size of the LLM plays a significant role in determining the maximum number of retrieved documents that can be passed to the model to generate a response. For models with a limited context window, such as Gemma2-9b-it, a smaller k-limit must be used, which may affect the quality of the responses. Conversely, models with a larger context window, such as Gemini-2.0-flash, can process more information, potentially leading to more precise responses.

Another important factor is the computational power required for data processing. Larger vector models, such as bge-large-en-v1.5, offer better vector embedding quality but require more computational power, especially when loading data into the vector database. In the case of frequent data loading, it may be more efficient to use smaller models, such as bge-small-en-v1.5, which offer a compromise between quality and computational efficiency.

The costs associated with using LLM models cannot be overlooked either. Models with higher response quality, such as Gemini-2.0-flash, come with higher per-token costs. With a limited budget, it is worth considering lower-cost models, such as Gemini-2.0-flash-lite, which offer similar response quality at lower cost. Furthermore, organizations deploying RAG must account for the total cost of ownership (TCO), including several often-overlooked expenses. These include the significant investment in specialized staff, such as ML engineers and data scientists, required for the initial development cycle, parameter tuning, and subsequent system deployment. The TCO also covers long-term maintenance, including ongoing operational expenses such as frequent data reloading (re-embedding and re-indexing) to keep knowledge fresh, and the costs of monitoring and scaling the infrastructure. It is critical to recognize that optimizing for low LLM operating costs (e.g., Gemini-2.0-flash-lite) may require a trade-off involving higher initial development and specialized tuning investment. Additionally, in on-premise environments where open models are preferred, the choice of LLM may be limited to models such as Mistral-saba-24b or Gemma2-9b-it, which also affects the final configuration of the RAG system.

In summary, the selection of optimal parameters and LLM models in RAG systems is a multidimensional process that requires considering many factors, such as context window size, computational power, processing costs, and specific application requirements. The experiments conducted provide practical guidance that can help in making informed decisions regarding the configuration of RAG systems in various business contexts. Optimizing RAG systems is a trade-off between response quality, computational cost, and hardware limitations. It is worth emphasizing that the iterative nature of the optimization process is essential to fine-tune the RAG system to achieve optimal performance for specific datasets and use cases.

9. Limitations

While the study provided valuable insights, it has limitations that should be considered when interpreting the results. The experiments focused on a single dataset of biographical data, and the fixed-size chunking strategy, which was designed with overlap for the narrative structure of the biographical essays, may not generalize to other data types. For example, technical documents (e.g., manuals) call for structural chunking instead of fixed chunk sizes to preserve the integrity of tables and sections. For medical data [33,34], it is crucial to use specialized embedding models and a segmentation that keeps clinical context (e.g., symptoms and diagnosis) within a single segment, minimizing the risk of losing critical information.

The study’s scope also limits the assessment of certain RAG components. The selection of the Qdrant vector database as the sole platform for storing and searching vectors limits the possibility of comparison with other available solutions, such as Pinecone, Weaviate, or Milvus, which may offer different indexing and optimization mechanisms. Similarly, limiting the study to selected LLM models (Gemini-2.0-flash, Gemini-2.0-flash-lite, Mistral-saba-24b, Gemma2-9b-it) does not allow for a full assessment of the potential of other models that may better handle specific tasks or data types.

Additionally, the fixed chunk sizes (10–100 tokens) employed, while effective for short biographical essays (max 607 tokens [35]), pose a limitation when scaling to longer, highly structured enterprise documents (e.g., technical reports over 1000 tokens). In such cases, fixed splitting often fragments semantically coherent units, which can negatively impact retrieval precision. Furthermore, the current study restricts the answer LLM output to a brief response (answer briefly, e.g., full name [36]), which is cost-optimal but prevents system application in complex business scenarios requiring detailed explanations or summaries from multiple sources. It should also be added that the tests were conducted in a specific computing environment, which could also have influenced the results.

10. Future Work

Based on the limitations identified, future work should focus on advancing token management and architectural scalability. This includes introducing flexible output token control so that the level of detail matches the task; developing adaptive chunking by investigating non-fixed segmentation strategies (e.g., semantic or structural chunking) [34] that adjust chunk size to the document type (e.g., technical, legal), which is critical for preserving contextual coherence; and refining Formula (1) [14] for models with large context windows so that it accounts for variable-length (non-uniform) documents, maximizing the efficient use of the LLM context window.
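One possible way to account for variable-length documents, offered here only as an illustrative assumption rather than the refinement the authors have in mind, is to pack the top-ranked documents by their actual token counts instead of dividing by the largest document. The hypothetical `pack_documents` below keeps the selection a prefix of the ranking, so relevance order is preserved.

```python
def pack_documents(doc_tokens: list[int], budget: int) -> int:
    """Count how many top-ranked documents fit in the token budget, using their actual lengths.

    Documents are taken in rank order and packing stops at the first one that would overflow,
    so the selected set is always a prefix of the ranking.
    """
    used = 0
    for count, tokens in enumerate(doc_tokens):
        if used + tokens > budget:
            return count
        used += tokens
    return len(doc_tokens)
```

For example, with an assumed 8192-token window and 100 tokens reserved for the prompt and question, essays of the average length of 352 tokens would allow 22 documents instead of the 13 given by Formula (1) for the 607-token worst case. The gain materializes only when the retrieved documents are actually shorter than the largest one, which is why the sketch measures each document.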

In future research, it is worth considering several directions that can contribute to the further development and improvement of RAG systems. First and foremost, the scope of experiments should be expanded to include other data types to assess how different parameters and models handle diverse information. In particular, research on the application of RAG in specialized fields such as medicine or law can provide valuable insights. A recently published systematic review [37] demonstrates the significant performance improvements of retrieval-augmented generation (RAG) in biomedicine, emphasizing the importance of tailored clinical development guidelines for effective implementation. It is also necessary to conduct systematic comparisons of various vector databases to identify best practices and optimal configurations for different applications. Comparative studies, such as [8], can help identify key factors affecting the performance of vector databases in the RAG context. It is also worth investigating the impact of various data augmentation techniques, such as translation, paraphrasing, or synonym generation, on vector embedding quality and search effectiveness. These techniques, described in works such as [38], can significantly improve the ability of RAG systems to handle linguistic and semantic diversity. More advanced LLM response evaluation metrics that consider various aspects of response quality, such as coherence, fluency, and context consistency, should also be considered. Research on new LLM response evaluation metrics, such as [39], can help develop more reliable and comprehensive evaluation methods. It is also important to investigate the impact of various data chunking strategies, such as semantic chunking or document structure-based chunking, on search result quality. Works such as [40] analyze the impact of different chunking strategies on RAG system performance. Future research should also address data security and privacy, especially in business applications where data may be sensitive or confidential. Research on privacy protection techniques in RAG systems, such as [41], can help develop secure and compliant solutions. It is also necessary to develop methods for automatically selecting optimal RAG system parameters, which can significantly facilitate the deployment and maintenance of these systems. Automatic parameter learning techniques, described in works such as [42], can help optimize RAG systems without manual tuning. Finally, it is worth exploring the integration of RAG systems with other tools and technologies, such as knowledge management systems, chatbots, or analytics platforms, to create comprehensive AI-based solutions.