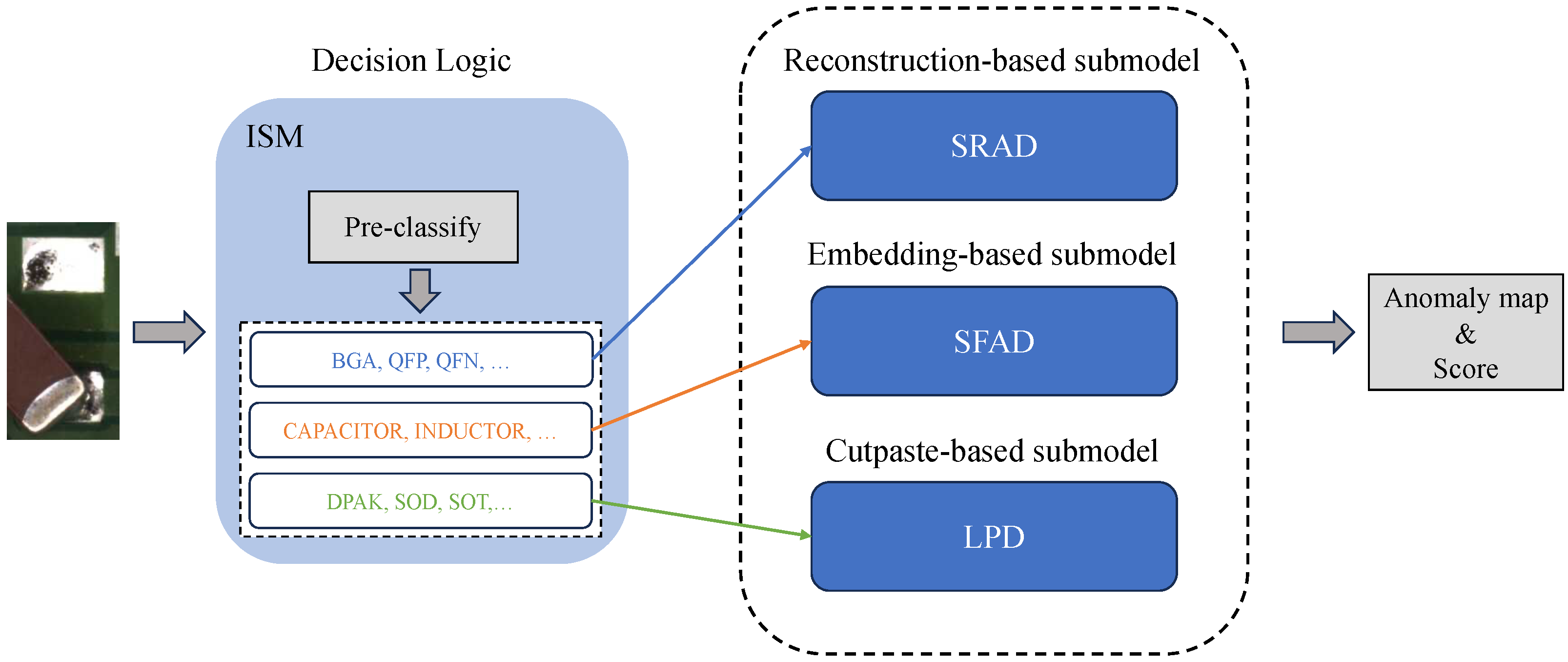

The overall architecture of MADE-Net is illustrated in Figure 1. It comprises three submodels and a dynamic integration and selection module. Specifically, SRAD, SFAD, and LPD correspond to the reconstruction-based, feature-based, and CutPaste-based approaches, respectively. In addition, we introduce a large-scale benchmark dataset, ManuDefect-21, which addresses the absence of anomalous (negative) samples in existing training sets. All proposed submodels are first trained in an unsupervised manner and subsequently fine-tuned on the entire training set of ManuDefect-21.

3.1. Reconstruction-Based Submodel

Reconstruction-based methods are among the most widely used approaches in anomaly detection. They rest on the hypothesis that a neural network trained exclusively on normal data reconstructs anomalous regions with limited fidelity. This limitation enables anomalies to be identified through pixel-wise or feature-wise comparisons between the input and its reconstruction.

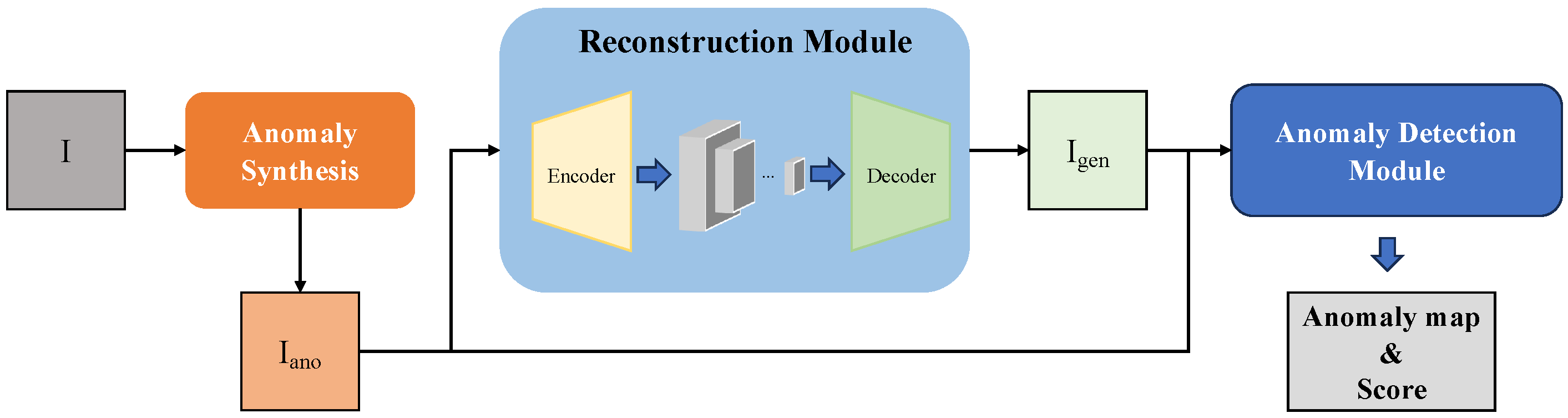

In our paper, SRAD (Submodel of Reconstruction-based Anomaly Detection) is designed as part of the integrated model to compensate for the shortcomings of the other submodels. The architecture of SRAD is outlined in Figure 2. Anomalous samples are first synthesized from the input image. The synthesized sample is then encoded and reconstructed by an autoencoder, which is trained to restore the synthesized anomalous regions to a normal appearance. The anomaly detection module, a U-Net-based network, localizes anomalous regions by comparing the input with its reconstruction. To address the foreground–background imbalance inherent in defect segmentation, we adopt Focal Loss [29], which adaptively down-weights easy negatives and emphasizes challenging anomalous regions.

3.1.1. Anomaly Synthesis Module

We adopt the anomaly generation strategy proposed in MSTUnet [

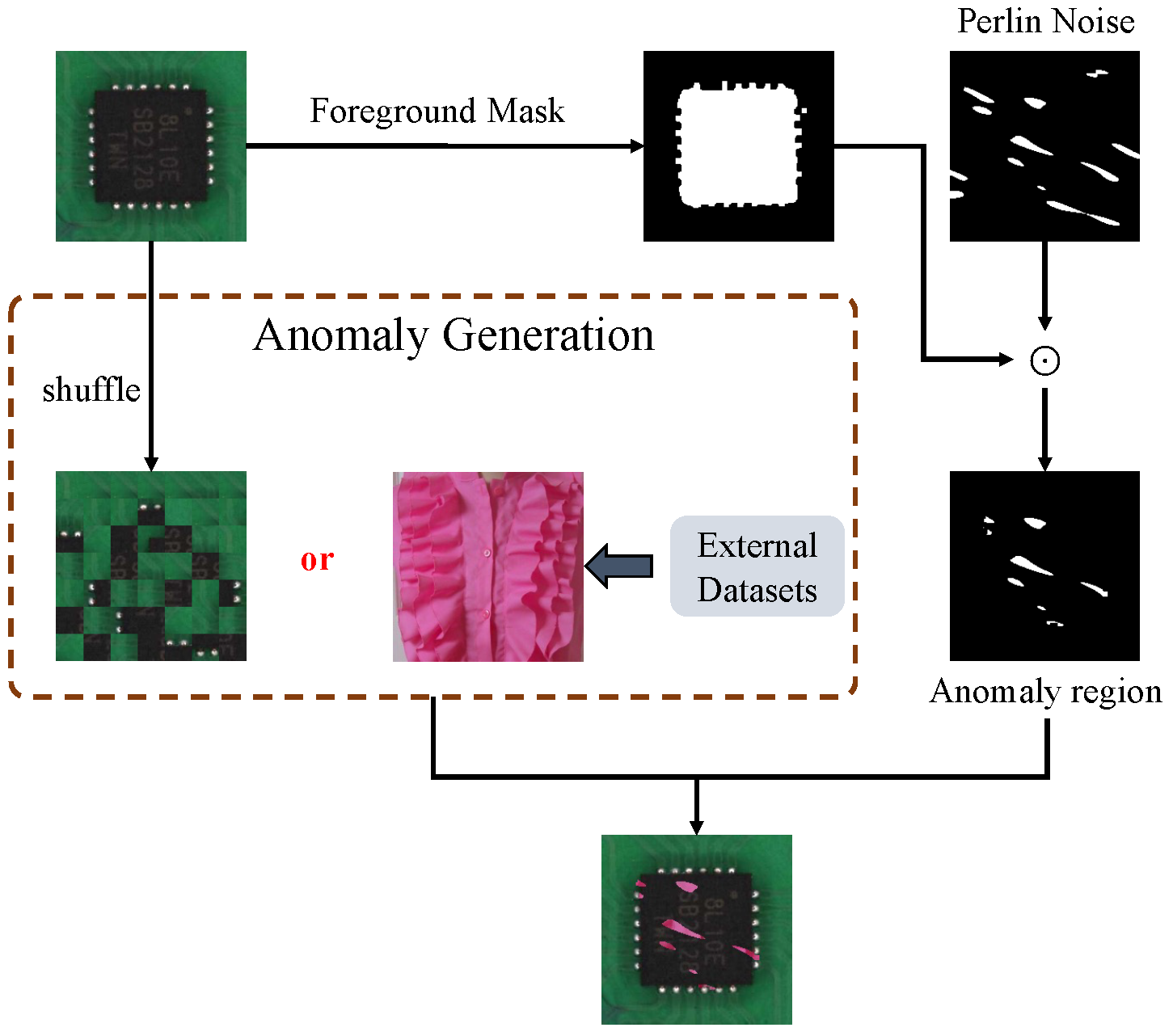

30] to simulate both texture and structural anomalies. As illustrated in

Figure 3, texture anomalies are generated by randomly selecting samples

from the DTD texture dataset [

31], whereas structural anomalies are synthesized by shuffling and recombining patches extracted from a normal ground truth sample

. The resulting simulated anomaly is denoted as

.

An anomaly mask $M_a$ is obtained via element-wise multiplication of a Perlin noise-based mask $M_p$ and a foreground mask $M_f$, i.e., $M_a = M_p \odot M_f$, where $M_f$ is derived from the ground truth foreground region of the normal sample $I$. This ensures that the injected anomalies are confined to the primary object within the normal sample.

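The final synthetic anomaly image $I_a$ is obtained by blending the simulated anomaly $A$ into the normal sample $I$ inside the anomaly mask $M_a$:

$$I_a = (1 - M_a) \odot I + M_a \odot A,$$

where ⊙ denotes element-wise multiplication.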

During training, texture and structural anomalies are introduced with equal probability to ensure balanced exposure to both anomaly types.
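To make the synthesis step concrete, the following NumPy sketch combines the two anomaly sources with the masks described above. It is a minimal illustration under stated assumptions rather than the MSTUnet code: the Perlin and foreground masks are passed in precomputed, the structural source is a random 4 × 4 patch shuffle, the blend is the plain composition $I_a = (1 - M_a) \odot I + M_a \odot A$ with no opacity term, and `shuffle_patches` and `synthesize_anomaly` are hypothetical names.

```python
import numpy as np


def shuffle_patches(image: np.ndarray, grid: int, rng: np.random.Generator) -> np.ndarray:
    """Structural anomaly source: cut the image into grid x grid patches and
    reassemble them in a random order (hypothetical helper)."""
    h, w = image.shape[:2]
    ph, pw = h // grid, w // grid
    patches = [image[r * ph:(r + 1) * ph, c * pw:(c + 1) * pw]
               for r in range(grid) for c in range(grid)]
    out = image.copy()
    for idx, src in enumerate(rng.permutation(len(patches))):
        r, c = divmod(idx, grid)
        out[r * ph:(r + 1) * ph, c * pw:(c + 1) * pw] = patches[src]
    return out


def synthesize_anomaly(normal, texture, perlin_mask, fg_mask, rng):
    """Return (I_a, M_a) for an (H, W, C) normal image I and (H, W) masks."""
    if rng.random() < 0.5:
        source = texture                          # texture anomaly A, e.g. a DTD image
    else:
        source = shuffle_patches(normal, 4, rng)  # structural anomaly A
    mask = perlin_mask * fg_mask                  # M_a = M_p ⊙ M_f
    m = mask[..., None]                           # broadcast the mask over channels
    blended = (1.0 - m) * normal + m * source     # assumed plain blend, no opacity term
    return blended, mask
```

Pixels outside $M_a$ are copied unchanged from $I$, so the supervision mask for the detection module is exactly `mask`.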

3.1.2. Reconstruction Module

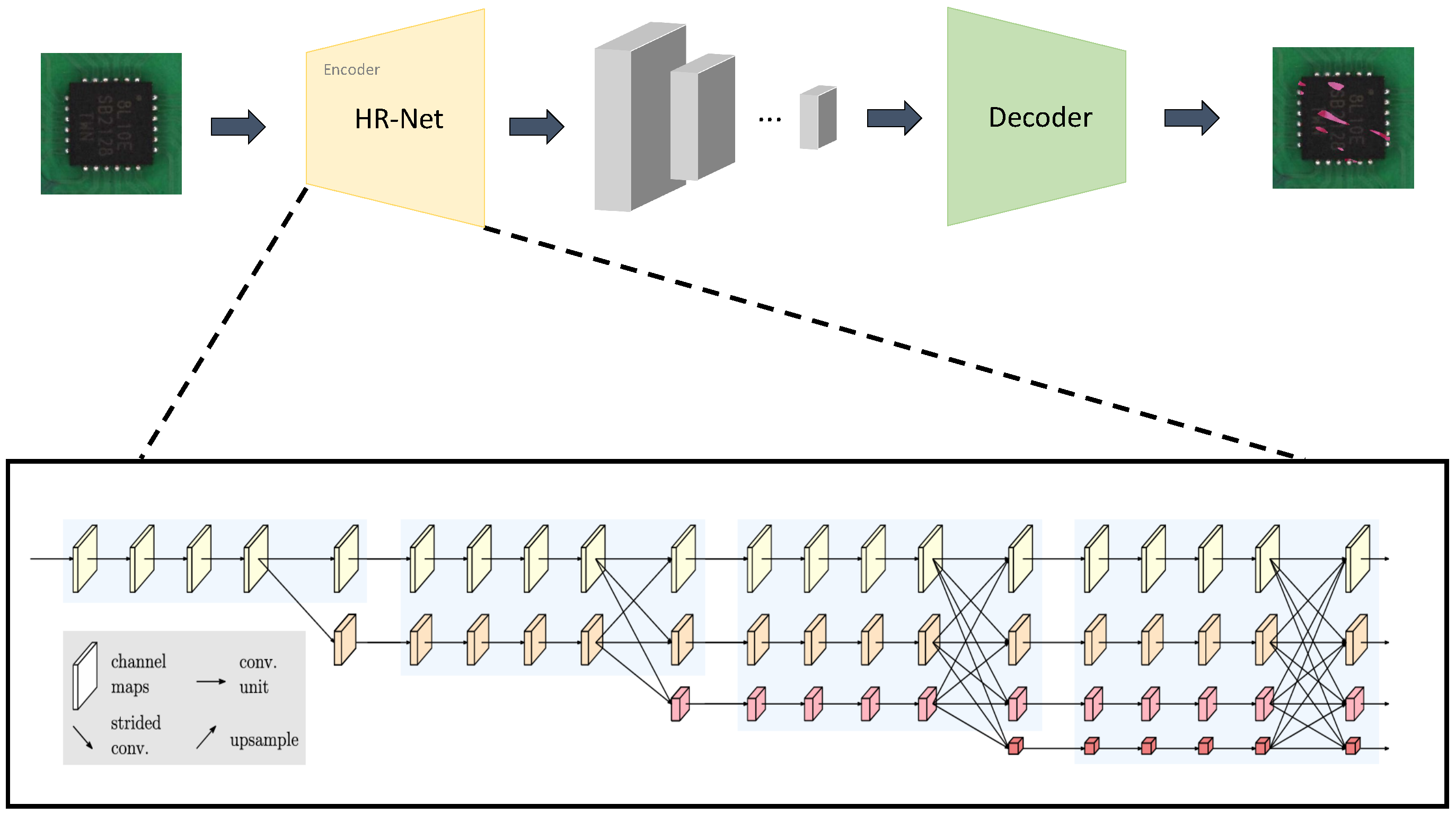

Having obtained the synthesized anomalous sample and its corresponding anomaly mask, we propose a novel autoencoder-based reconstruction module, whose architecture is shown in Figure 4.

Encoder

Conventional encoder backbones suffer from two critical limitations: (1) progressive resolution degradation, which compromises the representation of small anomalous features, and (2) insufficient multi-scale feature integration, which reduces anomaly localization accuracy. To address these challenges, we employ HRNet-W32 [32] as the encoder backbone of our autoencoder-based reconstruction module, leveraging its parallel multi-resolution architecture and its repeated cross-resolution feature exchange. HRNet preserves high-resolution feature representations throughout the encoding process, which improves the localization of small-scale defects. This design maintains fine-grained anomaly signatures while enhancing discriminative capability across diverse anomaly scales.

The input image is first processed by two stride-2 3 × 3 convolutional layers, which produce a high-resolution feature map at 1/4 of the input spatial resolution. The architecture then progressively adds multi-scale subnetworks across four stages ($s = 1, \dots, 4$): each subsequent stage adds a parallel branch at half the resolution and twice the channel width of the previous lowest-resolution branch (branch widths $C$, $2C$, $4C$, and $8C$, with $C = 32$ for HRNet-W32). For instance, Stage 2 comprises two parallel pathways: $\mathcal{N}_{21}$, which preserves the 1/4 resolution, and $\mathcal{N}_{22}$, which operates at 1/8 resolution.
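The resolution and width bookkeeping of the encoder can be checked with a few lines of Python. This sketch assumes 3 × 3 stem convolutions with padding 1 and lists only the branches of stages 2 to 4 (stage 1 of the reference HRNet uses bottleneck blocks with a different width); `hrnet_w32_branches` is a hypothetical helper, not part of any HRNet code base.

```python
def conv_out(size: int, kernel: int = 3, stride: int = 2, padding: int = 1) -> int:
    """Output length of a convolution along one spatial axis (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def hrnet_w32_branches(input_size: int, base_channels: int = 32, stages: int = 4) -> dict:
    """Map stage s (2..stages) to its branches as (spatial size, channels)."""
    stem = conv_out(conv_out(input_size))  # two stride-2 3x3 convs: 1/4 resolution
    return {
        s: [(stem // 2 ** r, base_channels * 2 ** r) for r in range(s)]
        for s in range(2, stages + 1)
    }


print(hrnet_w32_branches(256)[4])
# [(64, 32), (32, 64), (16, 128), (8, 256)]
```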

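Cross-resolution feature integration is achieved through adaptive transformation operators $a(X_i, k)$ in the fusion function:

$$Y_k = \sum_{i=1}^{s} a(X_i, k),$$

where $X_i$ is the input from the $i$-th resolution branch ($i = 1$ being the highest resolution), $Y_k$ is the fused output for branch $k$, and the transformation mechanism adaptively applies:

- Upsampling ($i > k$): bilinear interpolation followed by a 1 × 1 convolution for channel alignment;
- Downsampling ($i < k$): stride-2 3 × 3 convolution with feature compression;
- Identity mapping ($i = k$): direct feature propagation.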
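The fusion rule $Y_k = \sum_i a(X_i, k)$ can be sketched in NumPy on (channels, height, width) arrays. To keep the example short and dependency-free, nearest-neighbour repetition stands in for bilinear interpolation, and strided subsampling followed by a 1 × 1 projection stands in for the learned stride-2 3 × 3 convolution; the projection weights and the names `transform` and `fuse` are illustrative assumptions.

```python
import numpy as np


def conv1x1(x: np.ndarray, weight: np.ndarray) -> np.ndarray:
    """1 x 1 convolution of a (C_in, H, W) map with a (C_out, C_in) weight."""
    return np.einsum("oc,chw->ohw", weight, x)


def transform(x, i, k, weight):
    """a(X_i, k). Branch 1 has the highest resolution; each index step halves H and W."""
    if i == k:
        return x                                    # identity mapping
    if i > k:                                       # lower-resolution input: upsample
        f = 2 ** (i - k)
        x = x.repeat(f, axis=1).repeat(f, axis=2)   # nearest-neighbour stand-in for bilinear
        return conv1x1(x, weight)                   # channel alignment
    f = 2 ** (k - i)                                # higher-resolution input: downsample
    return conv1x1(x[:, ::f, ::f], weight)          # stand-in for stride-2 3 x 3 conv(s)


def fuse(branches, k, weights):
    """Y_k = sum over i of a(X_i, k); weights[(i, k)] is the projection for i != k."""
    return sum(transform(x, i, k, weights.get((i, k)))
               for i, x in enumerate(branches, start=1))
```

Every term of the sum is brought to the resolution and width of branch $k$ before addition, which is why each fused output keeps the shape of its own branch.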

The final feature encoding is formed by upsampling all subnetwork outputs to a common spatial resolution and concatenating them channel-wise. Because a high-resolution pathway is maintained throughout the network, this architecture preserves high-resolution representations and improves spatial localization accuracy for small-scale anomalies.

Decoder

The decoder processes the encoded feature representation through a hierarchical refinement pipeline comprising two sequential residual (ResNet) blocks followed by two transposed convolutional upsampling modules. Each ResNet block uses an identity shortcut connection and consists of (1) a 3 × 3 convolutional layer with stride 1, (2) batch normalization, and (3) ReLU activation, which enhances feature expressiveness while mitigating vanishing gradients. The subsequent transposed convolution blocks progressively restore spatial resolution using 4 × 4 kernels with stride 2 and padding 1, doubling the feature map size at each stage through learnable upsampling. The two upsampling stages thus enlarge the feature map by a factor of four in each spatial dimension and, combined with channel-wise feature recombination, transform the latent representation into the reconstructed output image while preserving structural coherence.
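The size arithmetic of the decoder follows from the standard transposed-convolution output formula, $(H - 1)\cdot\text{stride} - 2\cdot\text{padding} + \text{kernel}$ (dilation 1, no output padding), which for a 4 × 4 kernel with stride 2 and padding 1 reduces to $2H$. The sketch below assumes a 256 × 256 input, so that the latent map is 64 × 64, and assumes the ResNet blocks use padding 1 so that they preserve size.

```python
def conv_transpose_out(size: int, kernel: int = 4, stride: int = 2, padding: int = 1) -> int:
    """Output length of a transposed convolution (dilation 1, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


def decoder_output_size(latent_size: int, num_upsampling: int = 2) -> int:
    # The ResNet blocks (3x3 convs, stride 1, padding 1 assumed) keep the size unchanged,
    # so only the transposed convolutions change the spatial size.
    size = latent_size
    for _ in range(num_upsampling):
        size = conv_transpose_out(size)
    return size


print(decoder_output_size(64))  # 256
```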

3.1.3. Anomaly Detection Module

The anomaly detection module localizes anomalies by inspecting the input image $I_a$ and its reconstruction $I_r$. The two images are concatenated channel-wise and decoded into a segmentation mask $M$ by a U-Net-based architecture. $M$ is the output anomaly map indicating the pixel-level location of the anomalies in the image. To also compute the image-level anomaly score, we apply a simple segmentation mask interpretation procedure: the segmentation mask is smoothed by a 21 × 21 averaging filter and globally max-pooled into a single score.
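A minimal NumPy sketch of the image-level scoring step is shown below. Zero padding at the borders is an assumption (the text does not specify how the 21 × 21 filter treats image edges), and `image_level_score` is a hypothetical name.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def image_level_score(anomaly_map: np.ndarray, kernel: int = 21) -> float:
    """Smooth a 2-D anomaly map with a kernel x kernel mean filter, then global max-pool."""
    pad = kernel // 2
    padded = np.pad(anomaly_map, pad, mode="constant")   # zero padding (assumption)
    windows = sliding_window_view(padded, (kernel, kernel))
    smoothed = windows.mean(axis=(-2, -1))               # same shape as anomaly_map
    return float(smoothed.max())
```

The averaging step means a single high-valued pixel contributes only 1/441 of its value, while a region covering the whole 21 × 21 window contributes fully, so isolated false positives are suppressed.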

3.1.4. Loss Function and Inference

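The reconstruction module aims to restore the anomalous sample to a normal appearance, and the anomaly detection module is designed to localize the anomalous area. Since the anomaly is synthesized by the anomaly synthesis (AS) module during training, the ground truth anomaly mask is known. The loss function is defined as

$$\mathcal{L} = \lambda_{rec}\,\mathcal{L}_{rec}(I, I_r) + \lambda_{seg}\,\mathcal{L}_{seg}(M_a, M),$$

$$\mathcal{L}_{seg} = -\frac{1}{N}\sum_{j=1}^{N}\Big[\alpha\, M_{a,j}\,(1 - M_j)^{\gamma}\log M_j + (1 - \alpha)\,(1 - M_{a,j})\,M_j^{\gamma}\log(1 - M_j)\Big],$$

where $\mathcal{L}_{rec}$ and $\mathcal{L}_{seg}$ denote the reconstruction loss and segmentation loss, respectively, and $j$ indexes the $N$ pixels. $I_r$ and $I$ represent the reconstructed image and the ground truth normal image. $M_a$ and $M$ are the binary ground truth mask of anomalous regions and the predicted anomaly mask. $\lambda_{rec}$ and $\lambda_{seg}$ are hyperparameters used to balance the contributions of the reconstruction and segmentation losses. $\alpha$ and $\gamma$ are the Focal Loss parameters: $\alpha$ balances the importance of positive and negative examples, and the focusing parameter $\gamma$ adjusts the rate at which easy examples are down-weighted.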
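For reference, the objective can be sketched in NumPy as follows. The form of the reconstruction term is not specified here, so mean squared error is used as an explicit stand-in for $\mathcal{L}_{rec}$; the defaults `alpha=0.25` and `gamma=2.0` are the common Focal Loss defaults rather than values reported for SRAD; and `focal_loss` and `srad_loss` are hypothetical names.

```python
import numpy as np


def focal_loss(pred, target, alpha=0.25, gamma=2.0, eps=1e-7):
    """Binary focal loss averaged over pixels; pred holds probabilities M in (0, 1)."""
    p = np.clip(pred, eps, 1.0 - eps)
    pos = -alpha * target * (1.0 - p) ** gamma * np.log(p)               # anomalous pixels
    neg = -(1.0 - alpha) * (1.0 - target) * p ** gamma * np.log(1.0 - p)  # normal pixels
    return float(np.mean(pos + neg))


def srad_loss(recon, normal, pred_mask, gt_mask, lam_rec=1.0, lam_seg=1.0):
    """L = lam_rec * L_rec + lam_seg * L_seg, with MSE as a stand-in for L_rec."""
    l_rec = float(np.mean((recon - normal) ** 2))
    return lam_rec * l_rec + lam_seg * focal_loss(pred_mask, gt_mask)
```

Setting `gamma=0` recovers an $\alpha$-weighted binary cross-entropy, which is a quick sanity check for an implementation.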

3.2. Feature-Based Submodel

Feature-embedding-based methods represent a prominent class of approaches in image anomaly detection. These methods operate under the central assumption that neural networks trained solely on normal samples learn feature representations that are highly compact for normal data but deviate significantly for anomalous inputs [8,22,33]. By mapping images into a latent feature space, anomalies can be identified by measuring the distance or similarity between an input’s embedded features and a reference distribution of normal features. This strategy enables effective anomaly detection by leveraging the discriminative power of learned feature embeddings.

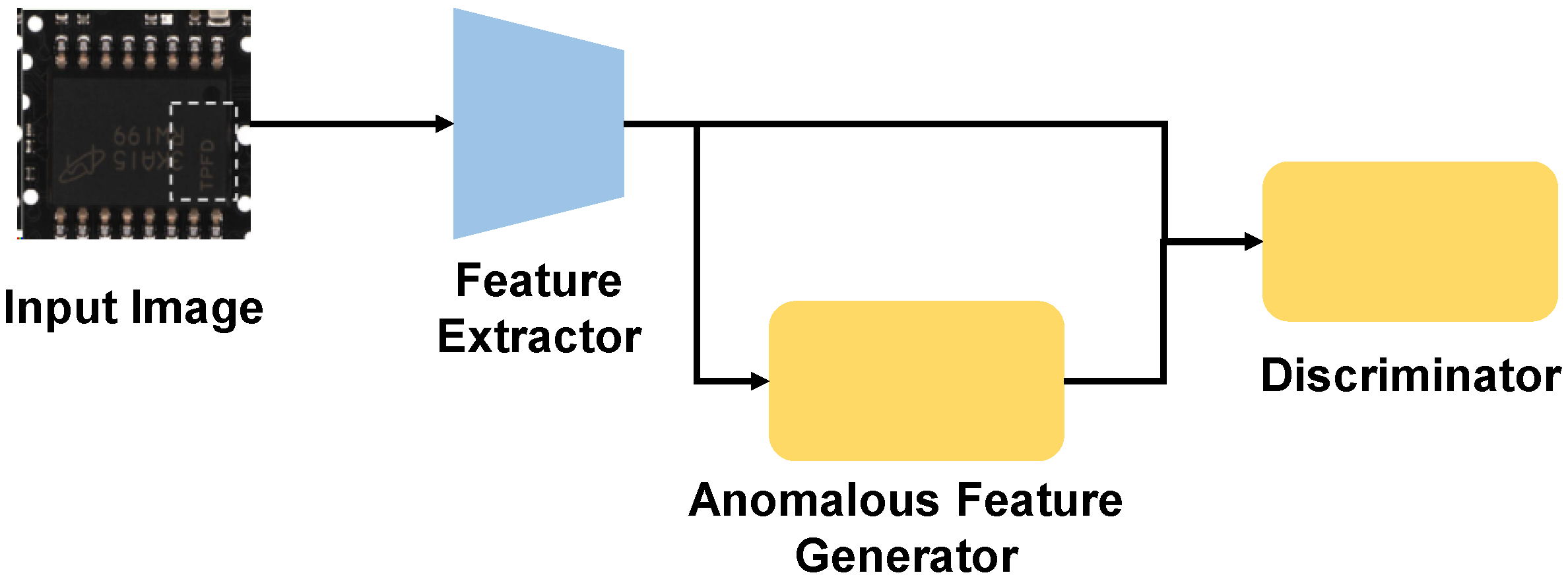

This subsection presents an overview of SFAD (Submodel of Feature-based Anomaly Detection), a key part of our integrated model. SFAD integrates three essential components (a Feature Extractor, an Anomalous Feature Generator, and a Discriminator), as illustrated in Figure 5.

During the training phase, an input image is passed through the Feature Extractor to yield a normal feature representation. Subsequently, the Anomalous Feature Generator takes this normal feature to create a synthetic anomalous counterpart. Both the normal and anomalous features are then fed to the Discriminator, which learns to differentiate them. For inference, the pipeline is simplified: the Anomalous Feature Generator is omitted, and the Feature Extractor passes its output directly to the Discriminator to produce the final anomaly score. The following subsections will examine each of these components in detail.

3.2.1. Feature Extractor

To capture subtle anomalies, we design a feature representation module that leverages both local context and multi-level semantic information. Given an input image $x_i$ from either the training set $\mathcal{X}_{train}$ or the test set $\mathcal{X}_{test}$, we first extract intermediate feature maps from multiple layers of a pretrained backbone network $\phi$.

Since generic backbones pretrained on datasets such as ImageNet may encode patterns irrelevant to industrial anomalies, we retain only a subset of layers $L$. This choice is a trade-off: deeper layers provide rich semantic information but have poor spatial resolution, while shallower layers offer finer detail but lack high-level context. Our selection is made empirically to balance these factors, a common practice in feature-based methods. Each chosen feature map is represented as $\phi^{l,i} = \phi^{l}(x_i) \in \mathbb{R}^{H_l \times W_l \times C_l}$, the output of layer $l \in L$ of the backbone $\phi$ for image $x_i$.

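To incorporate spatial locality, which is crucial for distinguishing normal local texture from anomalous defects, we define a neighborhood window of size $p \times p$ around each spatial position $(h, w)$:

$$\mathcal{N}_p^{(h,w)} = \left\{ (a, b) \;\middle|\; a \in \left[h - \lfloor p/2 \rfloor,\; h + \lfloor p/2 \rfloor\right],\; b \in \left[w - \lfloor p/2 \rfloor,\; w + \lfloor p/2 \rfloor\right] \right\}.$$

A local aggregation operation $f_{agg}$ is then applied over each neighborhood to produce a context-aware descriptor:

$$z^{l,i}_{h,w} = f_{agg}\left( \left\{ \phi^{l,i}_{a,b} \;\middle|\; (a, b) \in \mathcal{N}_p^{(h,w)} \right\} \right),$$

where $\phi^{l,i}_{a,b}$ is the layer-$l$ feature vector of image $x_i$ at position $(a, b)$. All aggregated feature maps are subsequently rescaled to a common spatial resolution $(H_0, W_0)$, typically matching the largest map, and concatenated along the channel dimension to form a unified representation $o^i$.

We denote the resulting local feature at spatial location $(h, w)$ as $o^i_{h,w}$.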
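The neighborhood aggregation and multi-layer concatenation can be sketched with NumPy's `sliding_window_view`. Here mean pooling plays the role of $f_{agg}$ (the operator is not fixed above), borders are zero-padded, nearest-neighbour resizing stands in for the rescaling step, and all function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def aggregate_local(feat: np.ndarray, p: int = 3) -> np.ndarray:
    """f_agg as a mean over the p x p neighbourhood of every (h, w).
    feat has shape (H, W, C); borders are zero-padded."""
    r = p // 2
    padded = np.pad(feat, ((r, r), (r, r), (0, 0)))
    windows = sliding_window_view(padded, (p, p), axis=(0, 1))  # (H, W, C, p, p)
    return windows.mean(axis=(-2, -1))


def resize_nearest(feat: np.ndarray, size) -> np.ndarray:
    """Nearest-neighbour resize of (H, W, C) to (H0, W0); stand-in for the rescaling step."""
    h, w = feat.shape[:2]
    rows = np.arange(size[0]) * h // size[0]
    cols = np.arange(size[1]) * w // size[1]
    return feat[rows][:, cols]


def local_features(feature_maps, p: int = 3) -> np.ndarray:
    """Aggregate each layer, rescale to the largest map, and concatenate channels: o^i."""
    size = max((f.shape[:2] for f in feature_maps), key=lambda s: s[0] * s[1])
    parts = [resize_nearest(aggregate_local(f, p), size) for f in feature_maps]
    return np.concatenate(parts, axis=-1)
```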

To bridge the domain gap between generic pretrained features and task-specific industrial features, a lightweight embedding transformation $G_\theta$ is applied. This module, typically a shallow CNN, adapts the generic local feature $o^i_{h,w}$ (the aggregated backbone feature of image $x_i$ at location $(h, w)$) to a task-specific embedding $q^i_{h,w}$ without adding significant computational complexity:

$$q^i_{h,w} = G_\theta\left(o^i_{h,w}\right).$$

For brevity, the complete feature extraction and transformation process can be represented as a single function:

$$q^i = F_{\phi,\theta}(x_i),$$

where $F_{\phi,\theta}$ encapsulates both multi-level feature extraction via the backbone $\phi$ and subsequent task-specific adaptation via $G_\theta$.
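Because the adaptor acts on each location independently, its simplest form is a per-location linear map, equivalent to a 1 × 1 convolution. The sketch below assumes that single-layer form for $G_\theta$; a deeper shallow CNN would replace the single matrix product, and `feature_adaptor` is a hypothetical name.

```python
import numpy as np


def feature_adaptor(o: np.ndarray, weight: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """G_theta as one per-location linear layer, i.e. a 1 x 1 convolution.
    o: (H0, W0, C) local features -> q: (H0, W0, C_out)."""
    return o @ weight + bias
```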

3.2.2. Anomalous Feature Generator

Effective training of a discriminative anomaly detection model requires not only representative normal samples but also suitable negative examples to define clear decision boundaries. Obtaining real defective samples is often difficult. While prior studies [25,26,34] often rely on complex image-space augmentations to generate pseudo-anomalies, these can introduce unrealistic artifacts or have an unpredictable effect on feature representations. Here, we adopt a more direct and controlled approach by perturbing features in the latent space. This allows us to explicitly simulate the effect of an anomaly (a deviation from the normal feature manifold) rather than its visual appearance.

Specifically, let $q^i_{h,w}$ denote a local feature vector. We synthesize its negative counterpart by adding random noise $\epsilon$ drawn from an isotropic Gaussian distribution:

$$q^{i-}_{h,w} = q^i_{h,w} + \epsilon, \qquad \epsilon \sim \mathcal{N}\left(\mathbf{0}, \sigma^2 \mathbf{I}\right),$$

where each dimension of $\epsilon$ is independently drawn from $\mathcal{N}(0, \sigma^2)$. This isotropic perturbation avoids strong assumptions about the nature of anomalies, making the model sensitive to a wide variety of deviations.
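The perturbation itself is a one-liner with NumPy's random generator. The noise scale `sigma` is a free hyperparameter whose value is not reported above, and `perturb_features` is a hypothetical name.

```python
import numpy as np


def perturb_features(q: np.ndarray, sigma: float, rng: np.random.Generator) -> np.ndarray:
    """q^- = q + eps, eps ~ N(0, sigma^2 I), drawn independently for every dimension."""
    return q + rng.normal(loc=0.0, scale=sigma, size=q.shape)
```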

This method’s strength lies in its simplicity and directness, providing clear negative examples for training the discriminator. However, we acknowledge its limitation: the synthetic features are not guaranteed to correspond to the representations of real-world defects and lack semantic meaning. The goal is not to perfectly mimic real anomalies, but to effectively train the discriminator to learn a tight decision boundary around the normal data manifold, thereby making it sensitive to any feature that falls “off-manifold”.

3.2.3. Discriminator

We design a Discriminator $D_\psi$ to estimate a normality score at each spatial location $(h, w)$. The discriminator must distinguish on-manifold (normal) features from off-manifold (perturbed) ones. For this task, a simple Multi-Layer Perceptron (MLP) is sufficient and computationally efficient, as it operates on individual feature vectors. This avoids the complexity and potential for overfitting that a larger model might introduce. The discriminator is encouraged to output high scores for normal features and low scores for perturbed ones.
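A per-vector MLP discriminator can be sketched as two matrix products with a ReLU in between. The depth, hidden width, and activation are assumptions for illustration, as is the name `mlp_discriminator`; the only property the text fixes is that each feature vector is scored independently.

```python
import numpy as np


def mlp_discriminator(q, w1, b1, w2, b2):
    """D_psi: two-layer MLP scoring every feature vector independently.
    q: (..., C) -> scores with shape q.shape[:-1]; higher means more normal."""
    hidden = np.maximum(q @ w1 + b1, 0.0)   # ReLU hidden layer (activation assumed)
    return (hidden @ w2 + b2)[..., 0]       # w2: (hidden, 1) -> one score per location
```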

3.2.4. Training Objective and Optimization Strategy

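To enable the model to differentiate between normal and perturbed features, we employ a margin-based objective inspired by truncated regression losses. Specifically, for each spatial location $(h, w)$ in the feature map, we compute a sample-wise loss as

$$\ell^i_{h,w} = \max\left(0,\; m - D_\psi\left(q^i_{h,w}\right)\right) + \max\left(0,\; m + D_\psi\left(q^{i-}_{h,w}\right)\right),$$

where $D_\psi$ is a discriminative scoring function parameterized by $\psi$, applied to both clean features $q^i_{h,w}$ and their corrupted counterparts $q^{i-}_{h,w}$, and $m$ is the margin controlling the confidence window, set to 0.5 in our experiments.

The total objective over the training dataset $\mathcal{X}_{train}$ is given by

$$\min_{\theta,\,\psi} \; \sum_{x_i \in \mathcal{X}_{train}} \frac{1}{H_0 W_0} \sum_{h,w} \ell^i_{h,w},$$

where $H_0 \times W_0$ is the feature-map resolution, $\theta$ denotes the parameters of the feature adaptor, and optimization is performed jointly over $\theta$ and $\psi$. This formulation encourages the Discriminator to assign scores of at least $m$ to normal features and at most $-m$ to perturbed ones; features that already satisfy these margins incur no loss.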
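The per-location objective can be written directly in NumPy. This sketch assumes the symmetric hinge form with a single margin $m$, in which normal features are pushed above $m$ and perturbed features below $-m$; `truncated_loss` is a hypothetical name, and the discriminator outputs are passed in as arrays.

```python
import numpy as np


def truncated_loss(d_normal, d_perturbed, margin=0.5):
    """Mean over locations of max(0, m - D(q)) + max(0, m + D(q^-))."""
    loss = np.maximum(0.0, margin - d_normal) + np.maximum(0.0, margin + d_perturbed)
    return float(loss.mean())
```

Because both terms are clipped at zero, well-separated locations stop contributing gradients, and training concentrates on features near the decision boundary.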

3.2.5. Inference and Anomaly Scoring

During inference, the synthetic anomaly generator is removed, resulting in a fully differentiable end-to-end architecture consisting solely of the feature extractor $F_{\phi,\theta}$ and the discriminator $D_\psi$. For a given test image $x_i \in \mathcal{X}_{test}$, the adapted feature map is computed as

$$q^i = F_{\phi,\theta}(x_i),$$

where $q^i_{h,w}$ denotes the feature descriptor at spatial location $(h, w)$.

Anomaly scores are assigned to each spatial position using the learned discriminator:

$$s^i_{h,w} = -D_\psi\left(q^i_{h,w}\right),$$

with higher values corresponding to a higher likelihood of abnormality.

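To obtain a spatially resolved representation of potential anomalies, we construct the anomaly map from the position-wise scores $s^i_{h,w}$:

$$S(x_i) = \left\{ s^i_{h,w} \;\middle|\; h = 1, \dots, H_0,\; w = 1, \dots, W_0 \right\}.$$

This map is upsampled to the original image resolution using bilinear interpolation and further smoothed with a Gaussian filter (standard deviation $\sigma_g$) to suppress boundary noise and enhance spatial coherence.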

For image-level anomaly detection, a single scalar score is derived by taking the maximum of the position-wise scores $s^i_{h,w}$ over all spatial positions:

$$s(x_i) = \max_{h,\,w}\; s^i_{h,w}.$$

This strategy ensures that even small but pronounced anomalous regions contribute strongly to the final decision, making the framework sensitive to subtle defects irrespective of their spatial extent.
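The scoring pipeline at inference time can be sketched as follows. The bilinear upsampling step is omitted for brevity, the Gaussian filter is applied separably with zero padding, and the default `sigma=4.0` is an arbitrary illustrative choice since the filter width is not given above; all names are hypothetical.

```python
import numpy as np


def gaussian_kernel(sigma: float, truncate: float = 4.0) -> np.ndarray:
    radius = int(truncate * sigma + 0.5)
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()                      # normalised so a constant map is preserved


def smooth(score_map: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian smoothing with zero padding; each side must be >= kernel length."""
    k = gaussian_kernel(sigma)
    rows = np.apply_along_axis(np.convolve, 1, score_map, k, mode="same")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="same")


def score_image(d_map: np.ndarray, sigma: float = 4.0):
    """s_{h,w} = -D_psi(q_{h,w}); returns the smoothed map and the image-level max."""
    s = -d_map
    return smooth(s, sigma), float(s.max())
```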

3.3. Localized Patch Discrimination

In industrial images, normal samples typically exhibit high local structural consistency, meaning that local regions maintain natural continuity with their surrounding context in terms of texture, edges, and color. Based on this assumption, cutting and pasting a local region of an image to a different location disrupts the original structural consistency, thereby creating a localized perturbation that simulates a potential anomaly. Although such perturbations are not real defects, they effectively introduce local structural inconsistencies that can serve as training signals to guide the model in learning to recognize anomalies.

Based on this principle, we propose Localized Patch Discrimination (LPD). Unlike prior CutPaste-style methods, LPD defines standardized criteria for patch size and perceptibility, integrates self-supervised training directly into the MADE-Net framework, and leverages the learned anomaly-sensitive features for downstream detection tasks, thereby providing both a principled perturbation mechanism and transferable representations. Specifically, LPD first constructs perturbed images from normal samples by cutting and pasting patches within the same image to generate “pseudo-anomalies”. A discriminative model is then trained to distinguish the original normal images from the perturbed ones, which encourages the model to focus on fine-grained differences in local structures. The overall architecture of LPD is shown in Figure 6.

3.3.1. Local Patch Distortion Generation

Given a normal image $x$, we generate an anomalous counterpart $\tilde{x}$ by cutting a rectangular patch and pasting it at a different location in the same image. The patch size is a crucial hyperparameter and is selected as a fraction of the image size to ensure that the perturbation is sufficiently localized yet perceptible enough to be learned by the model. During training, both the cut patch position and the target paste location are randomly sampled to increase the diversity of synthetic anomalies. Additionally, geometric transformations such as rotation and flipping may be applied to the patch before pasting to simulate a wider range of possible defects.

In this study, the “perceptibility” of a patch is defined and assessed through two complementary criteria: (1) a size standard based on relative area and (2) a perceptibility evaluation grounded in statistical differences. Specifically, the patch size is set as a fixed proportion of the original image area (typically 5–20%), determined through preliminary and ablation experiments to ensure that the patch is neither too small to be captured by the model nor so large that it disrupts the global semantics.
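The cut-and-paste generation step, including the 5–20% area criterion, can be sketched in NumPy. Square patches, a 50% horizontal-flip probability, and rotations by multiples of 90° are assumptions made for illustration; `cut_paste` is a hypothetical name and returns the paste box so that the perturbed region can be inspected.

```python
import numpy as np


def cut_paste(image, area_ratio_range=(0.05, 0.20), rng=None):
    """Cut a square patch covering 5-20% of the image and paste it elsewhere in the
    same image, with an optional flip and rotation. Returns (x_tilde, (y, x, side))."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = image.shape[:2]
    area = rng.uniform(*area_ratio_range) * h * w
    side = max(1, min(int(round(np.sqrt(area))), h, w))  # square patch (assumption)
    y0, x0 = rng.integers(0, h - side + 1), rng.integers(0, w - side + 1)
    patch = image[y0:y0 + side, x0:x0 + side].copy()
    if rng.random() < 0.5:
        patch = patch[:, ::-1]                             # horizontal flip
    patch = np.rot90(patch, k=int(rng.integers(0, 4)))     # rotate by 0/90/180/270 degrees
    n_positions = (h - side + 1) * (w - side + 1)
    while True:                                            # paste at a different location
        y1, x1 = rng.integers(0, h - side + 1), rng.integers(0, w - side + 1)
        if (y1, x1) != (y0, x0) or n_positions == 1:
            break
    out = image.copy()
    out[y1:y1 + side, x1:x1 + side] = patch
    return out, (y1, x1, side)
```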

To evaluate perceptibility, we employ two measures. First, we compute quantitative differences between the patched region and its surrounding context in terms of texture, color, and structural similarity (e.g., SSIM, mean squared error, edge intensity). Second, we conduct sampled human inspection to confirm that the perturbation is visually discernible.
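The quantitative part of the perceptibility check can be sketched as below. As simplifying assumptions, the pasted region is compared with the original content at the same location (as a proxy for its surrounding context), SSIM is computed over a single global window rather than the usual sliding window, and edge intensity is the mean finite-difference gradient magnitude; the box format `(y, x, side)`, the (H, W, C) image layout, and all names are hypothetical.

```python
import numpy as np


def global_ssim(a, b, data_range=1.0):
    """Single-window SSIM over whole arrays (simplified; standard SSIM uses local windows)."""
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    mu_a, mu_b = a.mean(), b.mean()
    cov = ((a - mu_a) * (b - mu_b)).mean()
    num = (2 * mu_a * mu_b + c1) * (2 * cov + c2)
    den = (mu_a ** 2 + mu_b ** 2 + c1) * (a.var() + b.var() + c2)
    return float(num / den)


def edge_intensity(gray):
    """Mean gradient magnitude from finite differences."""
    gy, gx = np.gradient(gray)
    return float(np.hypot(gx, gy).mean())


def perceptibility(original, perturbed, box):
    """Compare the boxed region of two (H, W, C) images."""
    y, x, side = box
    a = original[y:y + side, x:x + side].astype(float)
    b = perturbed[y:y + side, x:x + side].astype(float)
    return {
        "mse": float(np.mean((a - b) ** 2)),
        "ssim": global_ssim(a, b),
        "edge_diff": abs(edge_intensity(a.mean(axis=-1)) - edge_intensity(b.mean(axis=-1))),
    }
```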

This standardized procedure ensures that the patch perturbations remain stable across samples and sufficiently localized to be effectively learned and recognized by the model, thereby providing a consistent training signal for anomaly detection.

This procedure creates a realistic and diverse set of localized anomalies that mimic common industrial defects characterized by local disruption of texture or structure. Unlike global image transformations, such as color jitter or blurring, patch recomposition preserves the overall global semantics while introducing subtle but detectable local inconsistencies.

3.3.2. Self-Supervised Learning Objective

The LPD module employs a convolutional neural network (CNN) backbone—typically a ResNet variant—to extract hierarchical feature representations from input images. We adopt ResNet as the backbone due to its proven ability to learn rich and stable feature hierarchies, residual connections that alleviate vanishing gradients, and its effectiveness in a wide range of industrial inspection tasks. Compared with deeper or more complex architectures (e.g., Vision Transformers), ResNet strikes a balance between representational power and computational efficiency, making it well-suited for large-scale industrial datasets.

The extracted features are then fed into a lightweight classification head consisting of fully connected layers that output the probability of the input image x being anomalous. Since our task is essentially a binary discrimination between normal and patched (“pseudo-anomalous”) images, we adopt the binary cross-entropy (BCE) loss. BCE directly models the Bernoulli likelihood for two-class classification, provides well-calibrated probabilistic outputs, and is simpler and more stable than alternatives such as focal loss when the positive and negative classes are reasonably balanced.

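We define a binary classification task in which normal images are labeled as $y = 0$ and patched images as $y = 1$. The model is trained using the binary cross-entropy loss:

$$\mathcal{L}_{BCE} = -\left[\, y \log p(x) + (1 - y) \log\left(1 - p(x)\right) \right],$$

where $p(x)$ is the predicted probability that the input image $x$ is anomalous. Minimizing this loss encourages the model to learn discriminative features that highlight localized inconsistencies introduced by patch recomposition.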
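With normal images labeled 0 and patched images labeled 1, the loss reduces to the familiar mean binary cross-entropy. A minimal NumPy version is given below; clipping avoids $\log 0$, the epsilon value is an arbitrary choice, and `bce_loss` is a hypothetical name.

```python
import numpy as np


def bce_loss(prob, label, eps=1e-7):
    """Mean binary cross-entropy; label 1 = patched (pseudo-anomalous), 0 = normal."""
    p = np.clip(prob, eps, 1.0 - eps)
    return float(-np.mean(label * np.log(p) + (1 - label) * np.log(1 - p)))
```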

To further improve feature quality, regularization techniques such as dropout and batch normalization are applied during training. Data augmentation strategies, including patch recomposition, are also applied to normal images to improve the robustness of the model.

3.3.3. Feature Transfer and Ensemble Integration

After the self-supervised training phase, the classification head is discarded and the CNN backbone serves as a pretrained feature extractor. The learned representations effectively capture localized anomalies, which can be leveraged in downstream tasks such as object detection and the classification of electronic components.

Specifically, the pretrained backbone weights initialize the feature extractor in a supervised object detection framework (e.g., Faster R-CNN [35]), providing a strong initialization that accelerates convergence and improves final detection performance. Alternatively, LPD features can be fused as auxiliary inputs alongside conventional features, enriching the representation with anomaly-sensitive cues.

3.4. Integration and Selection Module

The Integration and Selection Module (ISM) serves as a key component in MADE-Net, enabling dynamic model selection according to the characteristics of each input image. Rather than applying a uniform model to all data, ISM performs a two-stage procedure: (1) pre-classifying the input into 1 of 11 predefined component subcategories, and (2) selecting the most suitable anomaly detection submodel for that category based on empirical performance.

Stage 1: Pre-classification. We employ an EfficientNet-B4 network as the classifier due to its favorable trade-off between accuracy and computational cost in industrial settings. The classifier takes RGB images as input and outputs probabilities across 11 component types (e.g., BGA, CAPACITOR, RESISTOR). It is pretrained on ImageNet and fine-tuned on the ManuDefect-21 training set. The final class prediction is obtained by $\hat{c} = \arg\max_{i} p_i$, where $p_i$ denotes the softmax probability for category $i$. Only predictions with confidence above 0.85 are accepted; otherwise, the default submodel (SFAD) is invoked to ensure stability. This mechanism enhances robustness against potential misclassification.
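The confidence-gated pre-classification can be sketched as follows. The logits are assumed to come from the EfficientNet-B4 head; `preclassify` is a hypothetical name and returns `None` when the top probability does not exceed 0.85, signalling that the default submodel should be used.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    z = logits - logits.max()               # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()


def preclassify(logits: np.ndarray, threshold: float = 0.85):
    """Return the predicted category index c_hat, or None when max p_i <= threshold."""
    p = softmax(logits)
    c_hat = int(np.argmax(p))
    return c_hat if p[c_hat] > threshold else None
```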

Stage 2: Model selection via performance map. A performance map $\mathcal{M}$ is constructed based on the results of extensive ablation studies. For each category $c$, we compute the average AUROC and Pixel-AP achieved by each submodel $m \in \{\text{SRAD}, \text{SFAD}, \text{LPD}\}$ on its validation subset. The optimal model $m^*_c$ is determined by

$$m^*_c = \arg\max_{m} \left[\, \beta \cdot \mathrm{AUROC}_{c,m} + (1 - \beta) \cdot \mathrm{PixelAP}_{c,m} \,\right],$$

where $\beta \in [0, 1]$ balances image- and pixel-level accuracy. The resulting mapping $\mathcal{M}: c \mapsto m^*_c$ is stored and used during inference. This data-driven assignment ensures that each subcategory is processed by the submodel best suited to its typical defect morphology.
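The performance-map construction and lookup can be sketched in plain Python. The linear weighting with `beta` is assumed to mirror the selection rule of Stage 2, the score table is supplied by the caller, the fallback to SFAD follows Stage 1, and `build_performance_map` and `select_submodel` are hypothetical names.

```python
def build_performance_map(scores, beta=0.5):
    """scores[c][m] = (auroc, pixel_ap) of submodel m on category c's validation subset.
    Returns {c: argmax over m of beta * AUROC + (1 - beta) * PixelAP}."""
    return {
        c: max(per_model, key=lambda m: beta * per_model[m][0] + (1 - beta) * per_model[m][1])
        for c, per_model in scores.items()
    }


def select_submodel(category, performance_map, default="SFAD"):
    """Look up the stored submodel; fall back to the default when pre-classification abstains."""
    if category is None:
        return default
    return performance_map.get(category, default)
```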

3.5. Fully Supervised Fine-Tuning

For all three submodels, we adopt a two-stage training strategy consisting of initial unsupervised training followed by fully supervised fine-tuning. In the unsupervised stage, only normal samples from the training set are utilized. Synthetic anomalies are randomly generated and injected into normal images, and the models are trained to segment and localize these artificial anomalies. This allows the networks to learn robust representations of normal patterns and their structural consistency.

Benefiting from the availability of accurately annotated samples in our dataset, which provides both normal and anomalous instances in the training and test sets, we further introduce a supervised fine-tuning stage. After the unsupervised pretraining, all submodels are fine-tuned using the entire training set that includes both positive and negative samples along with pixel-level annotations. Unlike prior work where supervised refinement is limited by the absence of defect annotations, our dataset enables the direct replacement of synthetic anomalies with real anomalies and their masks during fine-tuning. This transition allows the models to adapt from artificially constructed defects to authentic defect distributions, bridging the gap between simulation and reality. While this strategy demonstrates clear benefits in improving discriminative power under well-annotated conditions, we acknowledge that its applicability in scenarios without exhaustive annotations remains limited. Nevertheless, we consider our approach a step toward bridging unsupervised pretraining and real-world supervised adaptation in industrial anomaly detection.

This two-stage paradigm preserves the generalization capability of unsupervised training while fully exploiting the availability of labeled data, leading to improved accuracy and practical applicability in industrial scenarios.

3.6. Dataset ManuDefect-21

We propose ManuDefect-21, a specialized dataset collected from a real-world SMT (surface mount technology) production line. As shown in Table 2, our dataset offers substantial advantages in scale and diversity over existing benchmarks. Specifically, it contains 31,050 training images, approximately 8.6 times as many as MVTec AD [11] and 3.6 times as many as VisA [12]. The ManuDefect-21 test set comprises 10,272 normal samples and 3049 anomalous samples, providing a more comprehensive evaluation platform with substantial numbers of both normal and defective cases. Furthermore, our dataset encompasses 82 category-specific defect types, more than MVTec AD (73 types) and VisA (78 types). This extensive collection of diverse defect patterns enables more robust evaluation of anomaly detection methods and better reflects the complexity of real-world industrial inspection scenarios.
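The test-set composition stated above can be checked with simple arithmetic, which also shows that anomalous samples make up a little under a quarter of the test set. The snippet uses only the counts quoted in this subsection.

```python
train_images = 31_050
test_normal, test_anomalous = 10_272, 3_049

test_total = test_normal + test_anomalous
anomaly_share = test_anomalous / test_total       # fraction of anomalous test images
normal_per_anomaly = test_normal / test_anomalous

print(test_total)                                 # 13321
print(f"{anomaly_share:.1%}")                     # 22.9%
print(f"{normal_per_anomaly:.2f}")                # 3.37
print(f"{train_images / test_total:.2f}")         # 2.33
```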

Limitations and scope: While ManuDefect-21 offers significant scale and diversity, it also has limitations. Some subcategories, such as ALUMINUM_CAPACITOR, contain relatively few samples, which may affect the reliability of evaluation and model generalization for rare categories. Future work should consider expanding the dataset to cover more process types and address sample imbalance for rare categories.

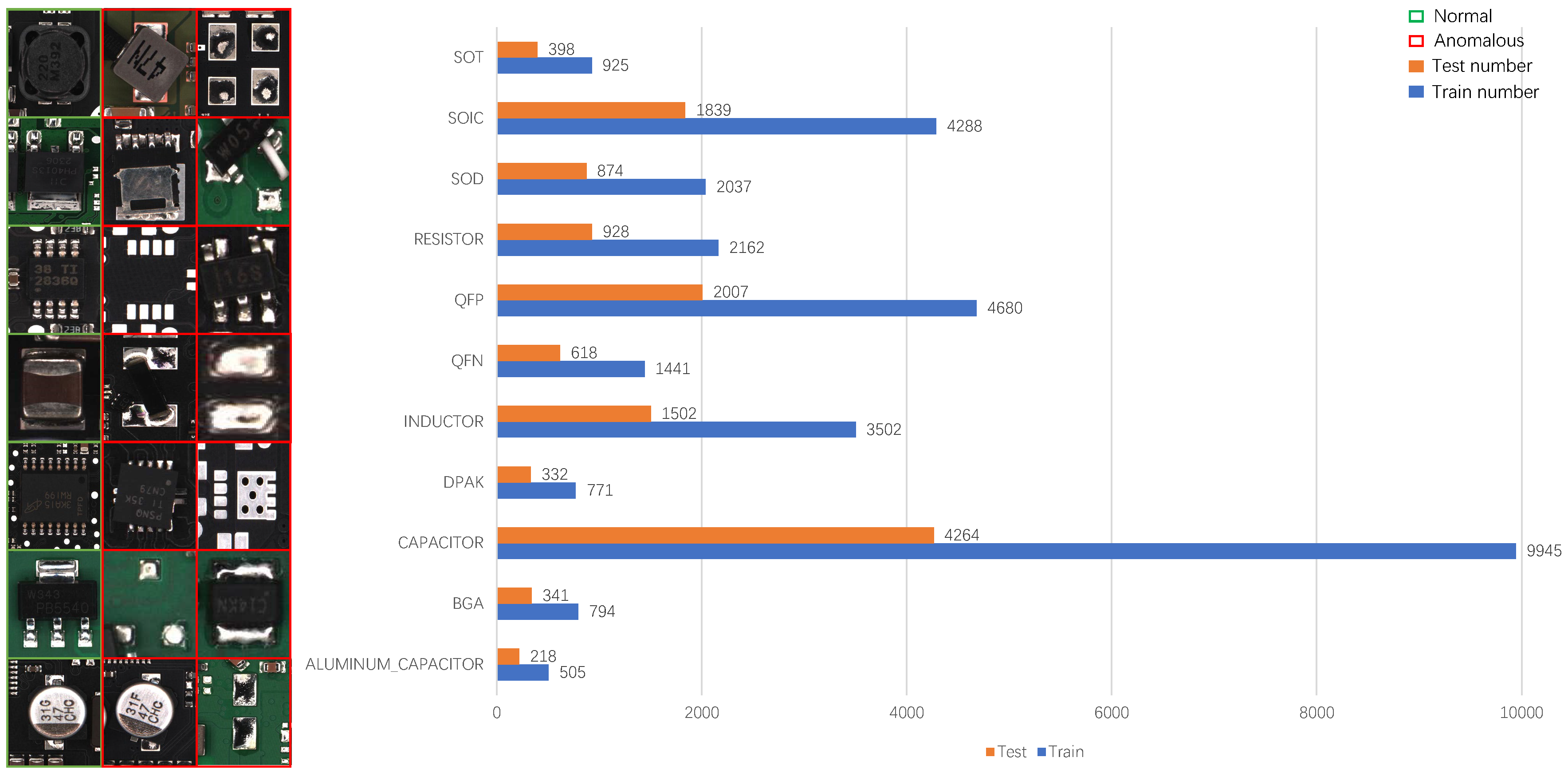

Table 3 provides a detailed breakdown of our dataset across different electronic component categories, demonstrating the comprehensive coverage of various industrial inspection scenarios.

Our dataset covers 11 major electronic component categories commonly found in industrial manufacturing, including capacitors, resistors, inductors, and various integrated circuit packages (BGA, QFN, QFP, SOIC, SOT, SOD, DPAK). Each category contains a substantial number of training samples, with CAPACITOR being the largest category (9945 training images) and ALUMINUM_CAPACITOR being the smallest (505 training images). The dataset maintains a balanced distribution across different defect types, with an average of 8 anomaly types per category, ranging from 5 to 10 types per component category.

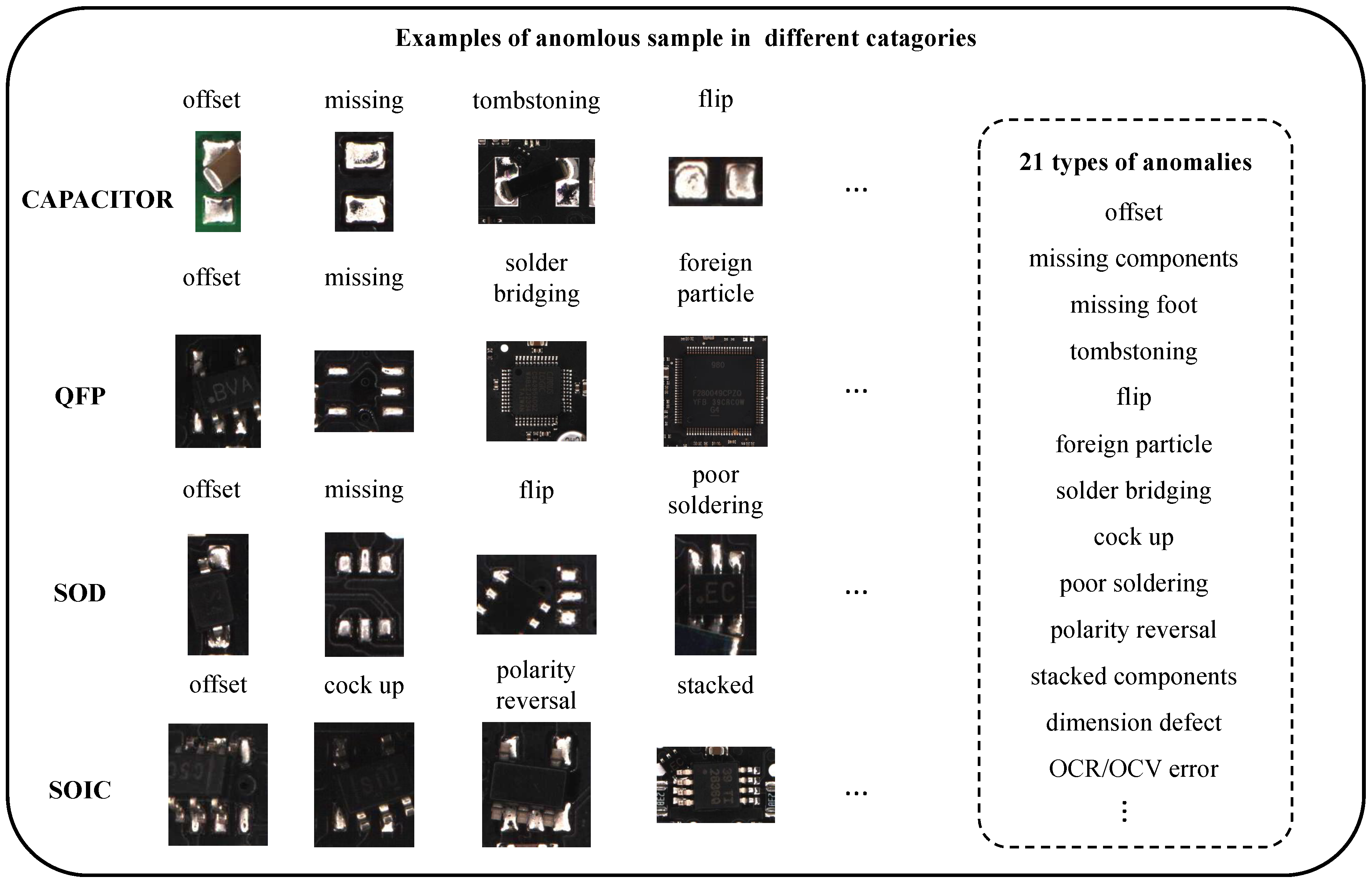

As Figure 7 shows, our dataset includes 21 well-defined anomaly types spanning mounting, soldering, and surface contamination defects, offering comprehensive coverage of typical industrial production anomalies. Several anomaly categories exhibit subtle inter-class differences, making the dataset particularly suitable for fine-grained classification and robustness evaluation. In addition to diversity in defect types, the dataset also features challenging visual conditions, such as varying contrast, partial occlusion, and reflective interference, providing a realistic testbed for evaluating model generalization. Through detailed labeling of anomaly types, our dataset expands its applicability beyond image-level anomaly detection to include fine-grained classification of specific defect categories.

Anomaly taxonomy and distinction: For closely related defect types (e.g., insufficient solder vs. cold joint), we follow industrial inspection standards and expert annotation guidelines to define clear classification criteria. Insufficient solder is characterized by a visibly reduced solder volume, while cold joints are identified by dull, grainy surfaces and poor electrical connectivity. All anomaly types are annotated with reference to their physical characteristics and failure modes, ensuring that the dataset supports fine-grained and meaningful classification.

Figure 8 presents the sample distribution and representative images of the 11 categories of electronic components in our dataset. The left panel shows images of typical categories, where green bounding boxes indicate normal samples and red bounding boxes denote anomalous samples of the corresponding category. Seven representative categories, including INDUCTOR, RESISTOR, and QFP, are selected to illustrate the appearance differences between normal and anomalous instances. The bar chart on the right summarizes the number of samples per category in the training and test sets. The data scale varies across categories, reflecting the actual occurrence frequency and defect probability of components in real production lines. For example, CAPACITOR, a component that occurs frequently on the production line, contains 9945 training images and 4264 test images. ALUMINUM_CAPACITOR is comparatively rare, with 505 images in the training set and 218 images in the test set. This distribution reflects the fact that, in actual industrial scenarios, some devices have extremely low failure rates, which makes it difficult to collect sufficient abnormal samples. Nevertheless, the dataset still contains a sufficient number of samples in each category, and its overall scale is much larger than that of widely used anomaly detection datasets such as MVTec AD and VisA. Moreover, ManuDefect-21 provides both normal and anomalous samples in both the training and test sets while preserving the real-world positive/negative sample ratio observed on production lines. This design better reflects the conditions of actual manufacturing environments and offers a robust basis for evaluating anomaly detection methods in practical industrial applications.
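The category imbalance described above can be quantified directly from the quoted counts. The snippet below uses only the per-category numbers stated in this paragraph and the total of 31,050 training images.

```python
train_counts = {"CAPACITOR": 9_945, "ALUMINUM_CAPACITOR": 505}
test_counts = {"CAPACITOR": 4_264, "ALUMINUM_CAPACITOR": 218}
total_train = 31_050

for name in train_counts:
    share = train_counts[name] / total_train               # share of all training images
    test_to_train = test_counts[name] / train_counts[name]  # relative size of the test split
    print(f"{name}: {share:.1%} of training images, test/train = {test_to_train:.2f}")
# CAPACITOR: 32.0% of training images, test/train = 0.43
# ALUMINUM_CAPACITOR: 1.6% of training images, test/train = 0.43

ratio = train_counts["CAPACITOR"] / train_counts["ALUMINUM_CAPACITOR"]
print(f"largest/smallest training category: {ratio:.1f}x")  # 19.7x
```

The two categories differ by roughly a factor of 20 in training size, yet their test-to-train ratios are nearly identical, which is consistent with a split applied uniformly per category.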

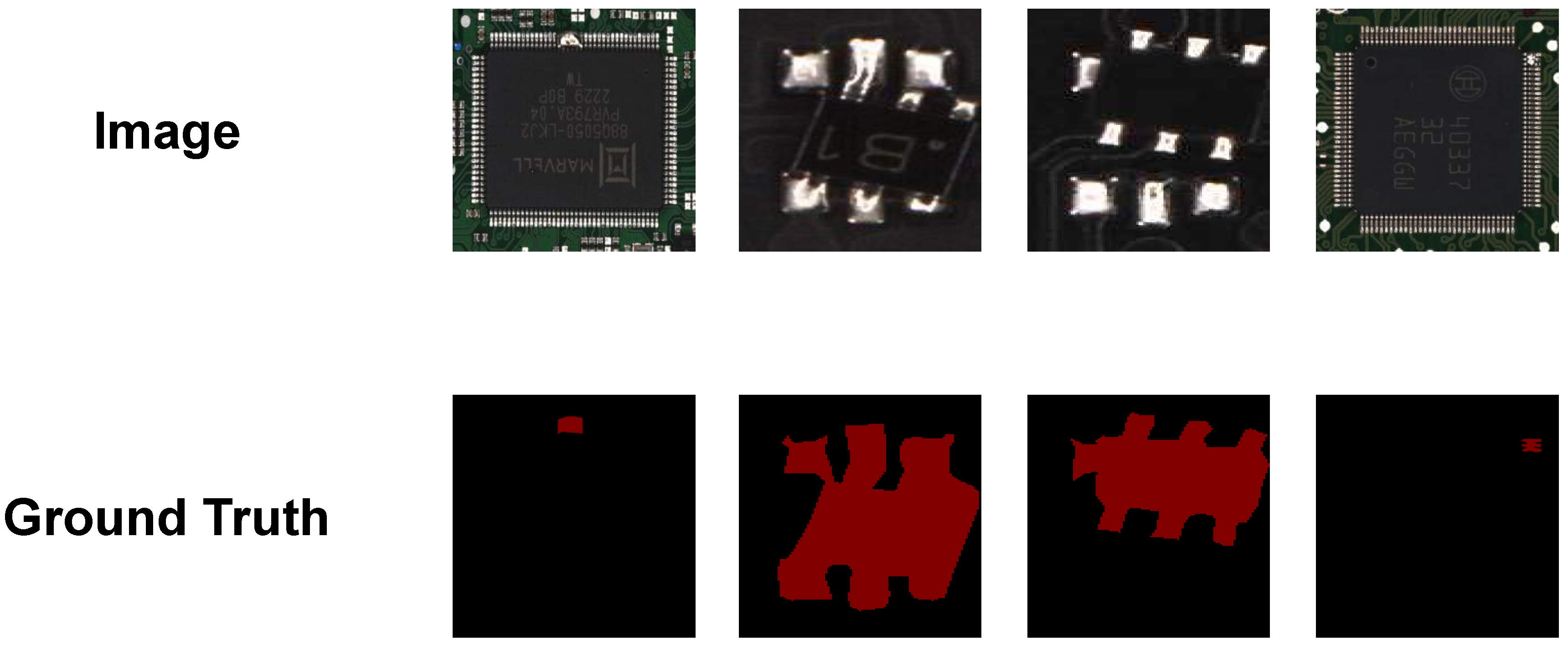

Additionally, a distinctive feature of the ManuDefect-21 dataset is its fine-grained pixel-level annotations for both the training and evaluation phases, enabling precise anomaly localization and segmentation assessment across all stages of model development. As shown in Figure 9, these detailed annotations support comprehensive assessment of model performance in both image-level detection and pixel-level localization, which is particularly valuable for industrial applications requiring precise defect identification and boundary delineation.