Research on Indoor 3D Semantic Mapping Based on ORB-SLAM2 and Multi-Object Tracking

Abstract

1. Introduction

- (1)

- We demonstrate that the 3D object detection algorithm, which generates 3D detection boxes and their semantic categories, is effective. In addition, we perform an analysis on the publicly available SUNRGBD dataset and investigate the dataset imbalance problem.

- (2)

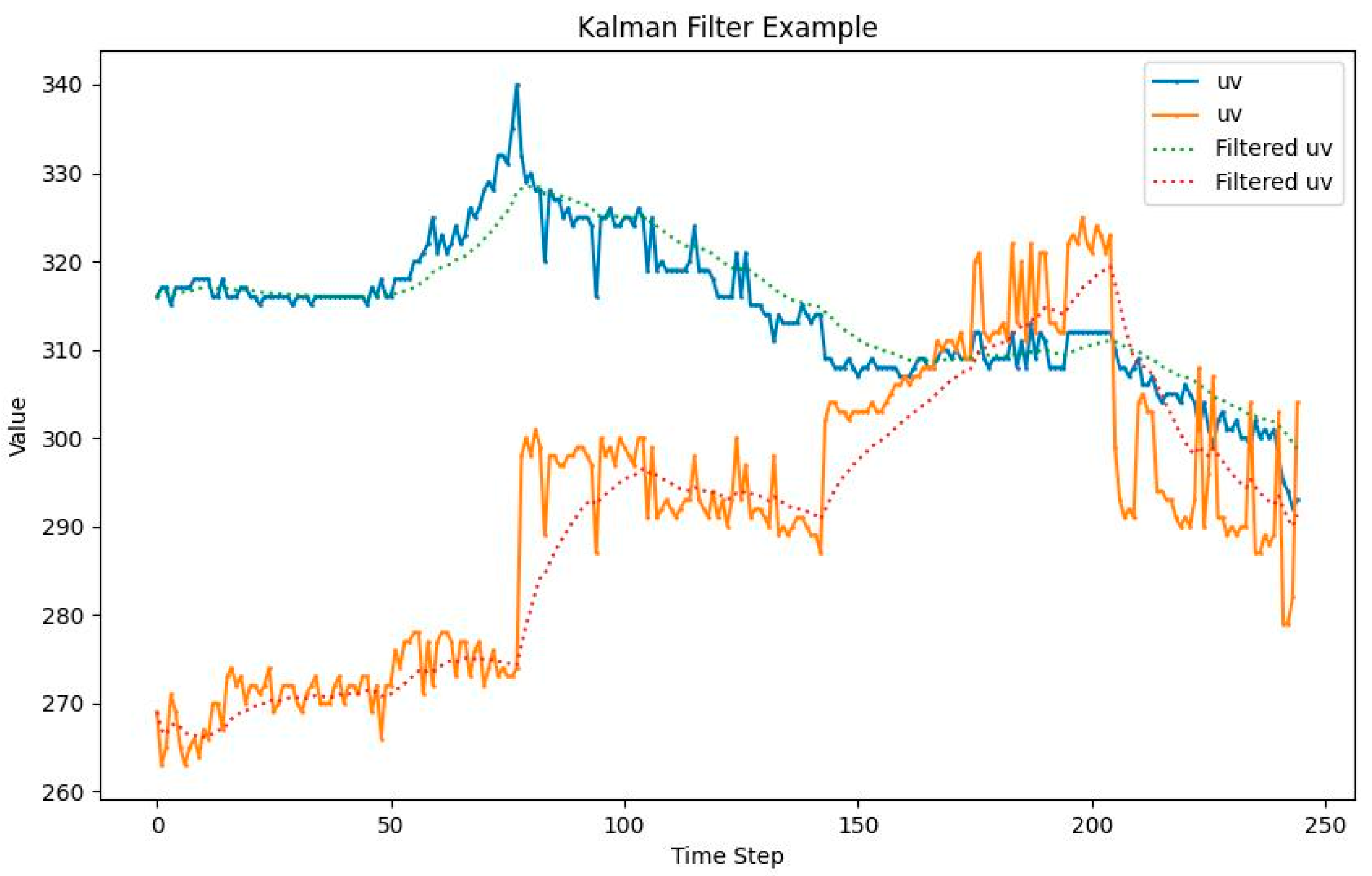

- We show that MOT algorithms such as KF can track and filter 3D object detection results smoothly and accurately.

- (3)

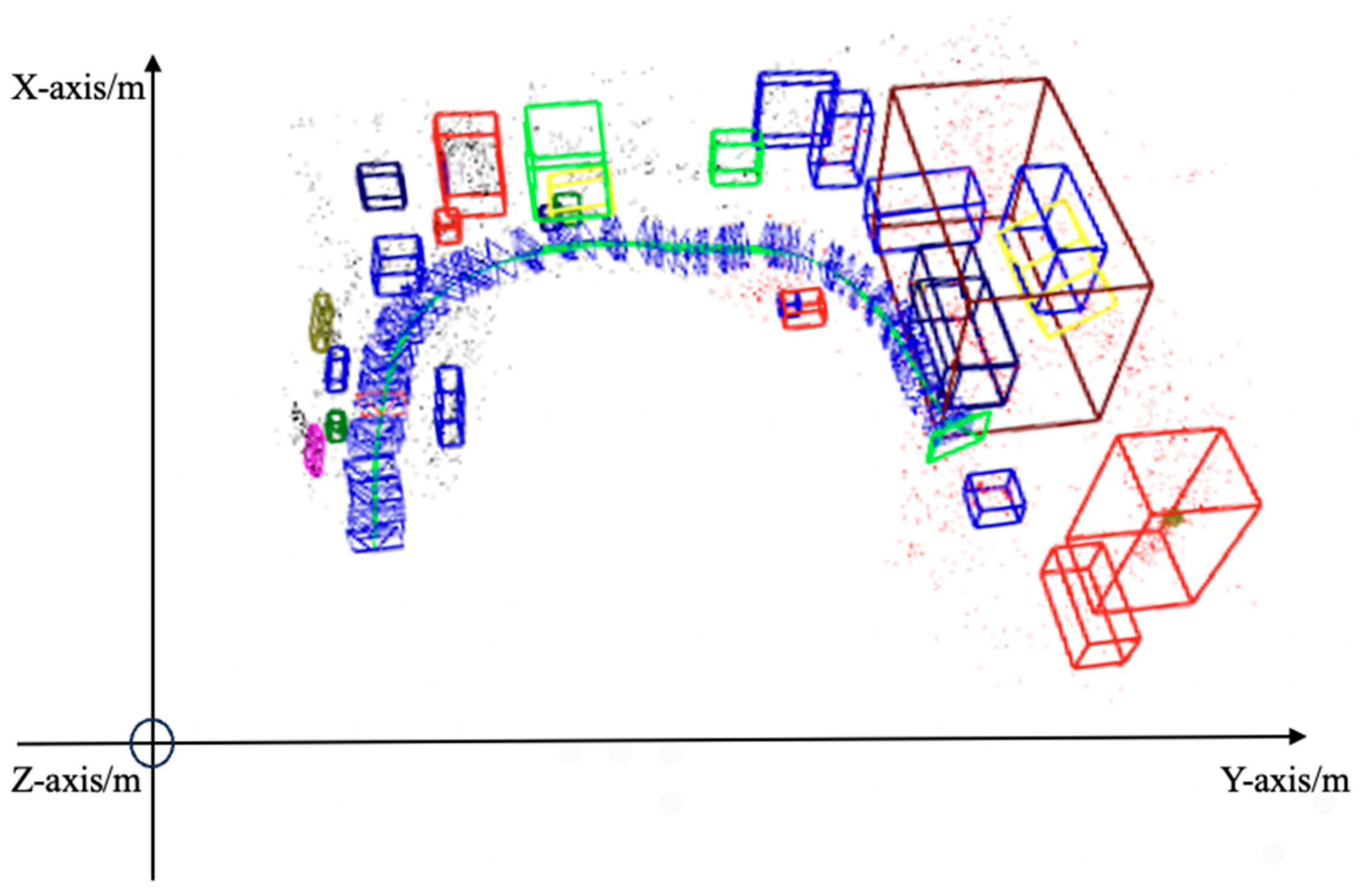

- To the best of our knowledge, this is the first work of integrating 3D object detection and MOT into the visual SLAM system to achieve 3D object mapping.

2. Related Works

2.1. Vision Semantic SLAM

2.2. 3D Object Detection

2.3. MOT

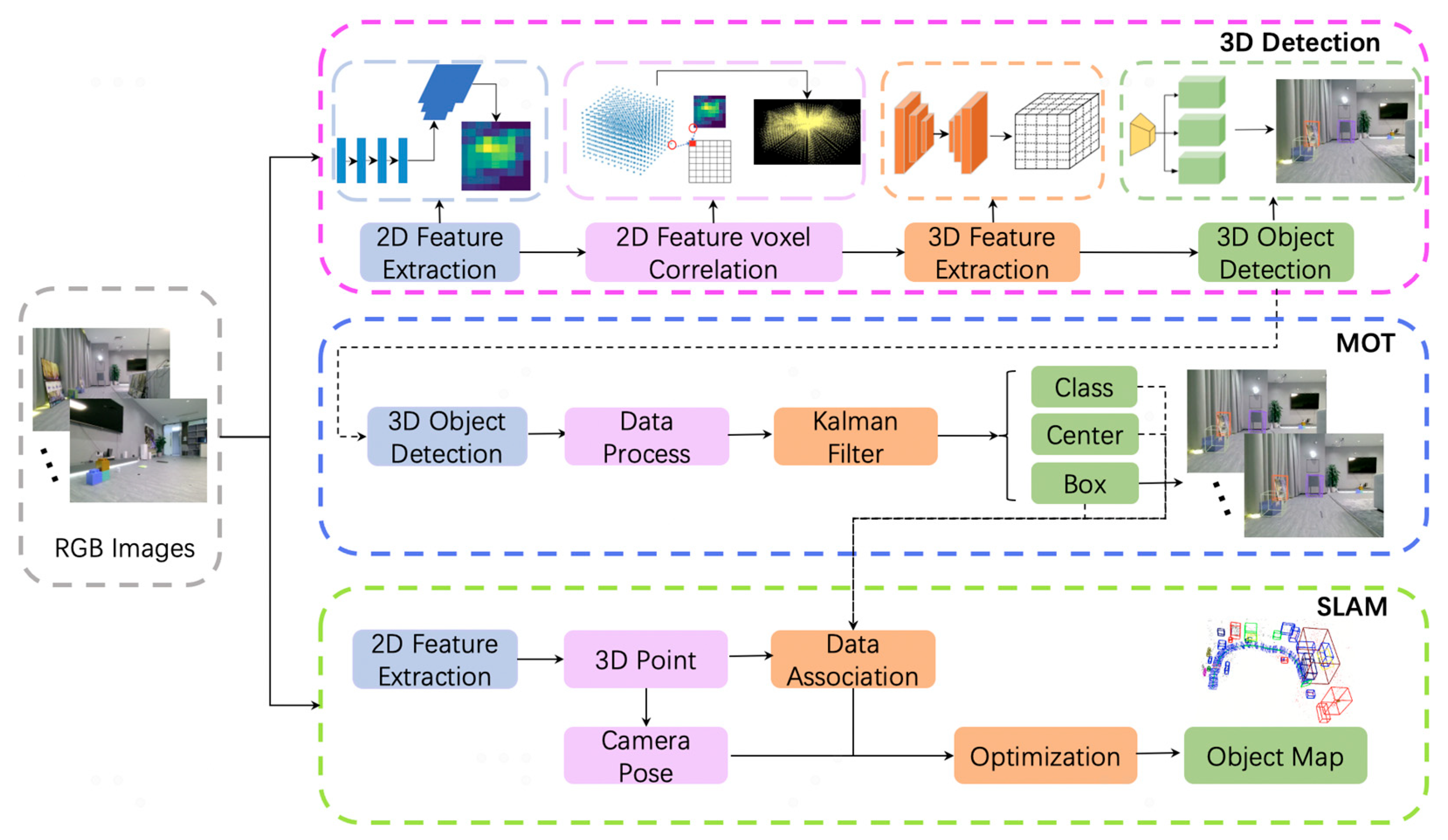

3. Proposed Approach

- (1)

- Dataset preprocessing. To improve training accuracy, we analyze the data distribution and propose a data processing method.

- (2)

- 3D object detection. We map an association feature from 2D to 3D space. Subsequently, we use a 3D convolutional neural network to predict the 3D object boxes and categories.

- (3)

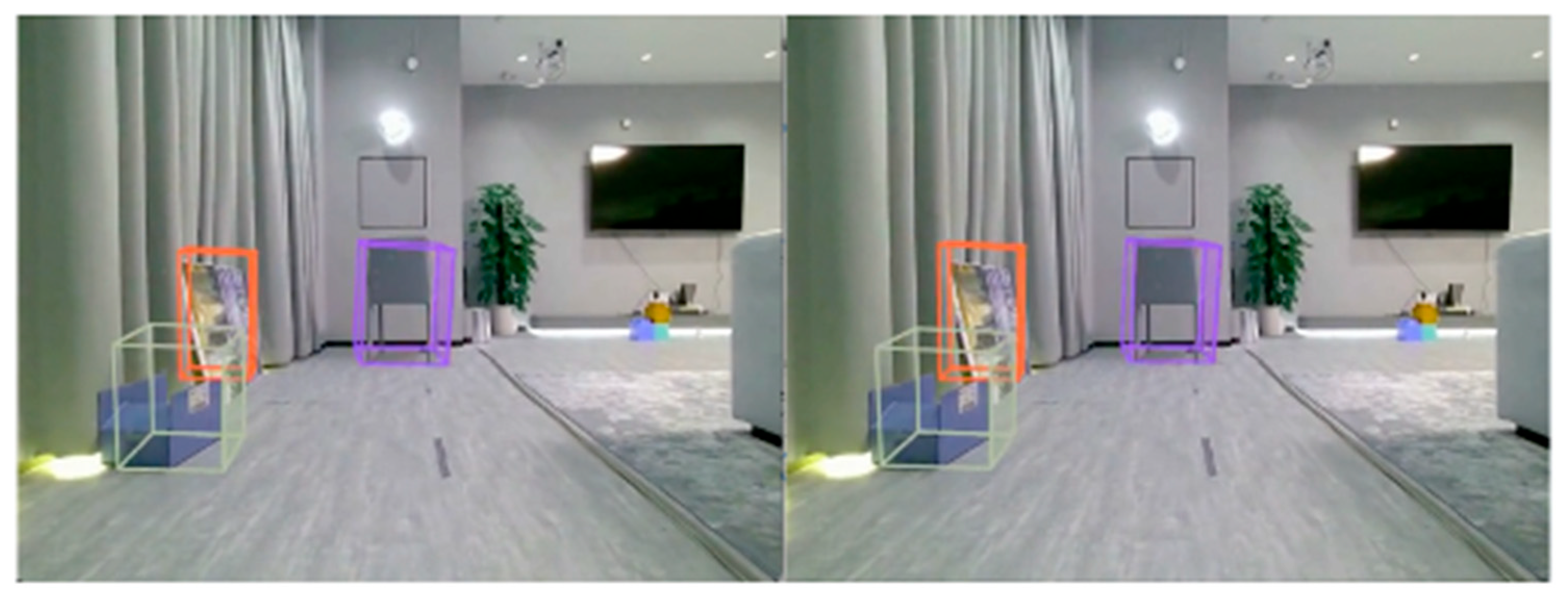

- MOT. We apply traditional filtering methods to track the detection results, addressing the significant jitter of the 3D object boxes across consecutive frames.

- (4)

- Visual SLAM. We integrate the aforementioned 3D object detection and MOT modules with the ORB-SLAM2 framework to realize monocular 3D object semantic SLAM. This proposed framework enhances the capabilities of traditional visual SLAM, providing a comprehensive solution for indoor scene understanding and applications.

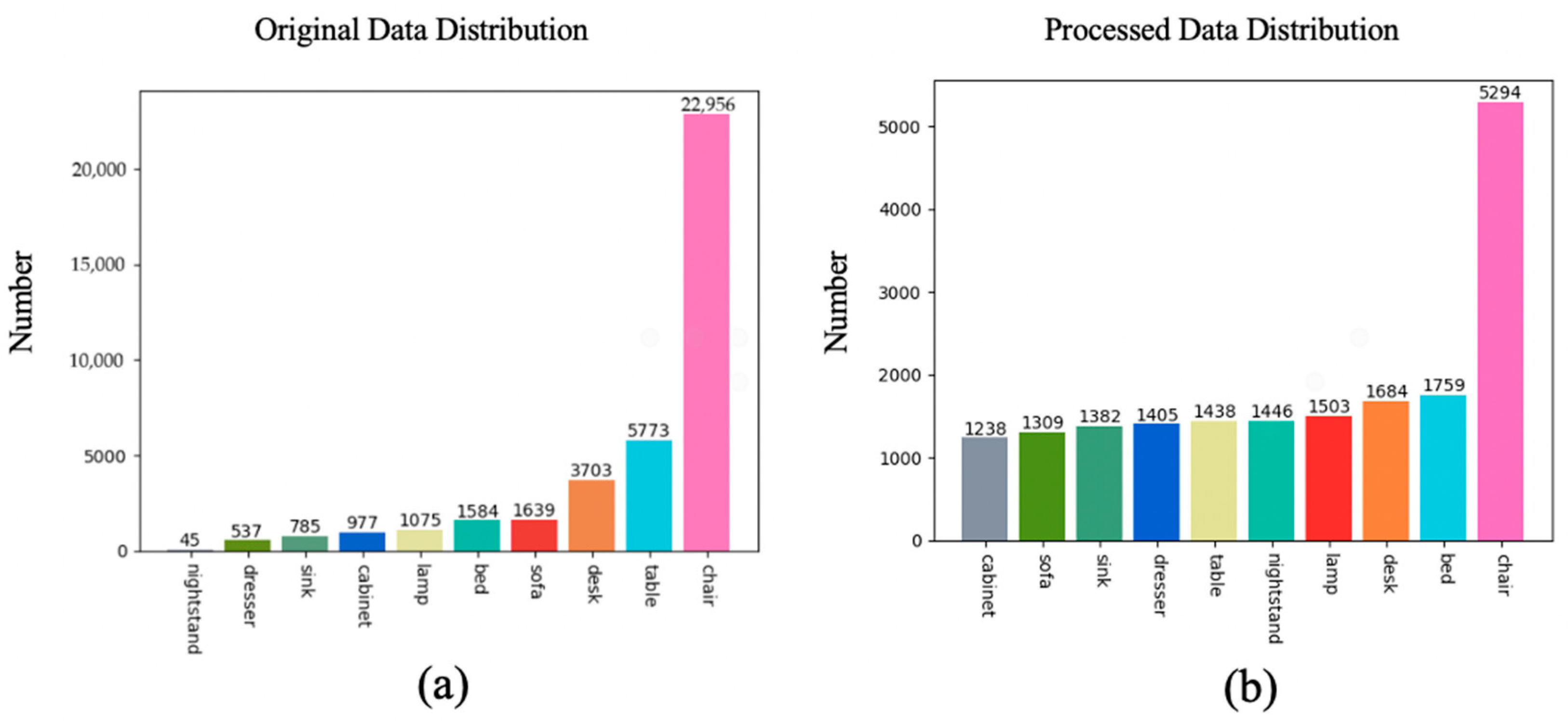
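As an illustration of the filtering idea in step (3), the following minimal sketch smooths the jittering center of one tracked 3D box with a constant-velocity Kalman filter applied independently to each coordinate. The constant-velocity model, the per-axis decoupling, the noise values `q` and `r`, and the names `AxisKalman` and `smooth_centers` are assumptions made for this example, not details taken from the paper. Associating detections with tracks is omitted.

```python
from dataclasses import dataclass


@dataclass
class AxisKalman:
    """Constant-velocity Kalman filter for one coordinate (state: position, velocity)."""
    pos: float
    vel: float = 0.0
    # Entries of the symmetric 2x2 state covariance P = [[p00, p01], [p01, p11]].
    p00: float = 1.0
    p01: float = 0.0
    p11: float = 1.0
    q: float = 1e-3  # process noise variance (assumed value)
    r: float = 1e-1  # measurement noise variance (assumed value)

    def predict(self, dt: float = 1.0) -> None:
        # State transition x <- F x with F = [[1, dt], [0, 1]].
        self.pos += dt * self.vel
        # Covariance P <- F P F^T + q * I.
        p00 = self.p00 + dt * (2.0 * self.p01 + dt * self.p11) + self.q
        p01 = self.p01 + dt * self.p11
        p11 = self.p11 + self.q
        self.p00, self.p01, self.p11 = p00, p01, p11

    def update(self, z: float) -> None:
        # Only the position is measured: H = [1, 0].
        s = self.p00 + self.r          # innovation variance
        k0 = self.p00 / s              # gain on position
        k1 = self.p01 / s              # gain on velocity
        residual = z - self.pos
        self.pos += k0 * residual
        self.vel += k1 * residual
        # Covariance P <- (I - K H) P.
        p00 = (1.0 - k0) * self.p00
        p01 = (1.0 - k0) * self.p01
        p11 = self.p11 - k1 * self.p01
        self.p00, self.p01, self.p11 = p00, p01, p11


def smooth_centers(centers):
    """Filter a sequence of (x, y, z) box centers belonging to one tracked object."""
    filters = [AxisKalman(c) for c in centers[0]]
    smoothed = [tuple(centers[0])]
    for center in centers[1:]:
        for f, z in zip(filters, center):
            f.predict()
            f.update(z)
        smoothed.append(tuple(f.pos for f in filters))
    return smoothed


# Usage: a static object whose detected x coordinate jitters by +/-0.2 around 1.0.
raw = [(1.0 + (0.2 if i % 2 == 0 else -0.2), 0.0, 2.0) for i in range(60)]
smoothed = smooth_centers(raw)
print(raw[-1], smoothed[-1])
```

A larger `r` relative to `q` makes the filter trust its motion model more and suppress more jitter, at the cost of reacting more slowly to real object motion.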

3.1. Dataset Preprocessing

3.2. 3D Object Detection

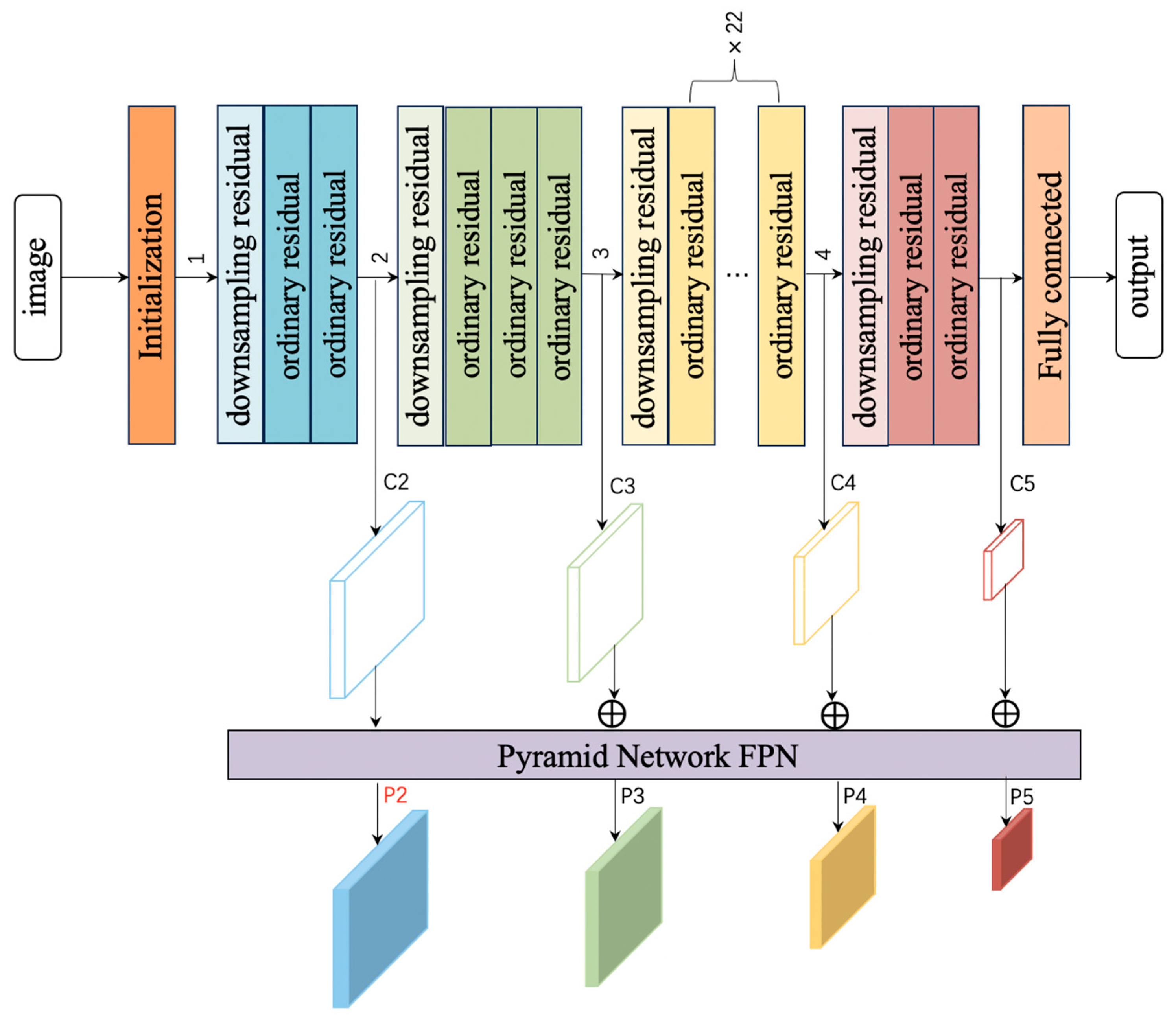

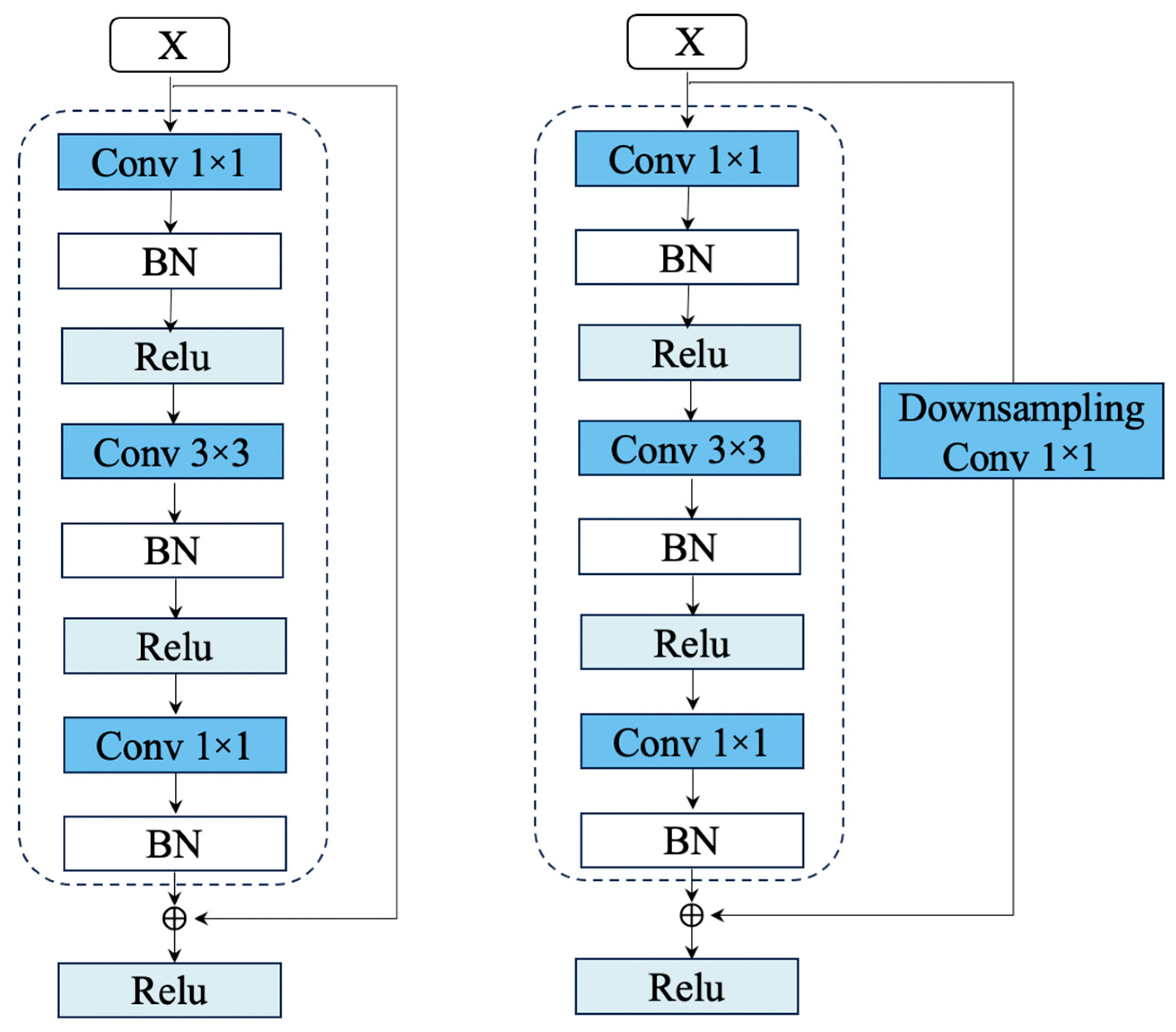

- (1)

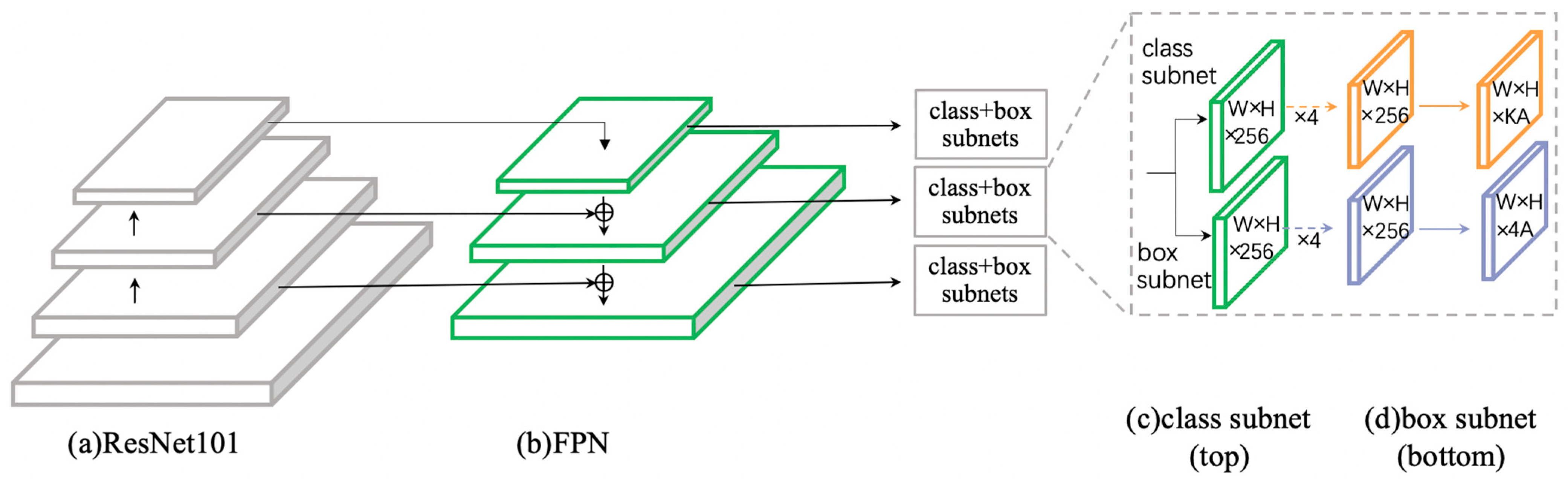

- 2D feature extraction. ResNet101 [58], which is based on the residual learning principle, is adopted as the backbone network to extract 2D features from the input monocular RGB images. To enhance the model’s ability to perceive features of objects with varying sizes, an FPN is integrated into the feature extraction network, enabling multi-scale feature fusion across the multiple feature maps generated by the backbone. Ultimately, a feature map with richer semantic information is output. ResNet101 is a deep residual network consisting of 101 layers; its core characteristic lies in the introduction of “residual learning.” This design effectively mitigates the gradient vanishing and gradient explosion issues in deep neural networks while extracting abundant semantic information. In contrast, FPN does not participate in feature extraction by the backbone network. Instead, it performs multi-scale feature fusion on the feature maps extracted from the input image by the backbone, thereby constructing a feature pyramid with high-level semantic information. Specifically, FPN leverages feature maps from different levels of the feature extraction network to build the pyramid, where each level corresponds to a distinct scale: lower-level feature maps exhibit higher spatial resolution but relatively low semantic information, whereas higher-level feature maps possess more robust semantic information.

- (2)

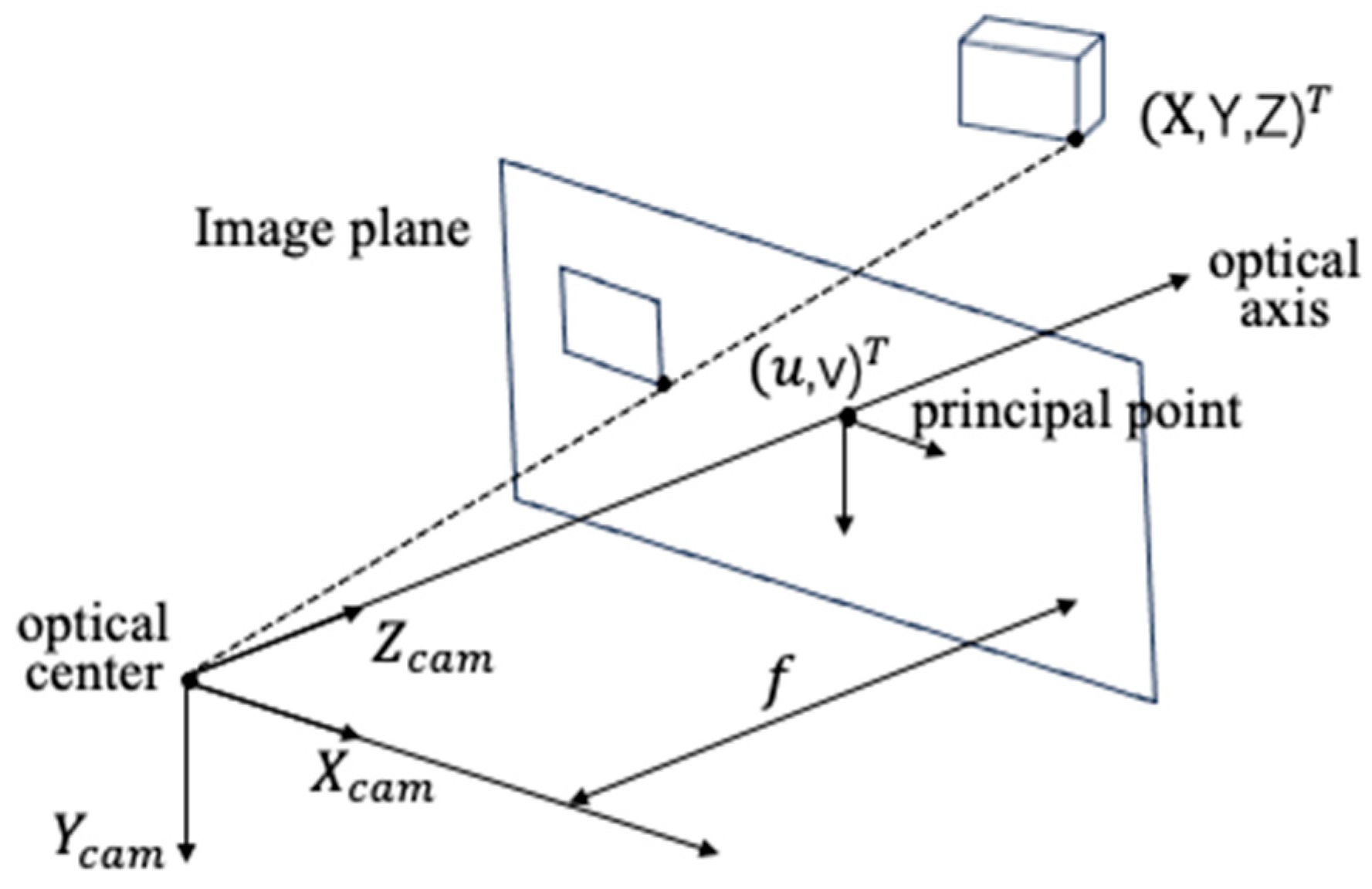

- 2D to 3D mapping. The size [D, H, W] of the above 2D feature extraction represents the number of anchor points distributed along the axes. We generate three-dimensional anchor points (xc, yc, zc) and restrict the range according to the field of view of the camera, as shown in Equation (2). The total number of point clouds given by N = D × H × W. Taking the camera optical center O as the origin, establish the camera coordinate system O-XYZ as shown in Figure 7.

- (3)

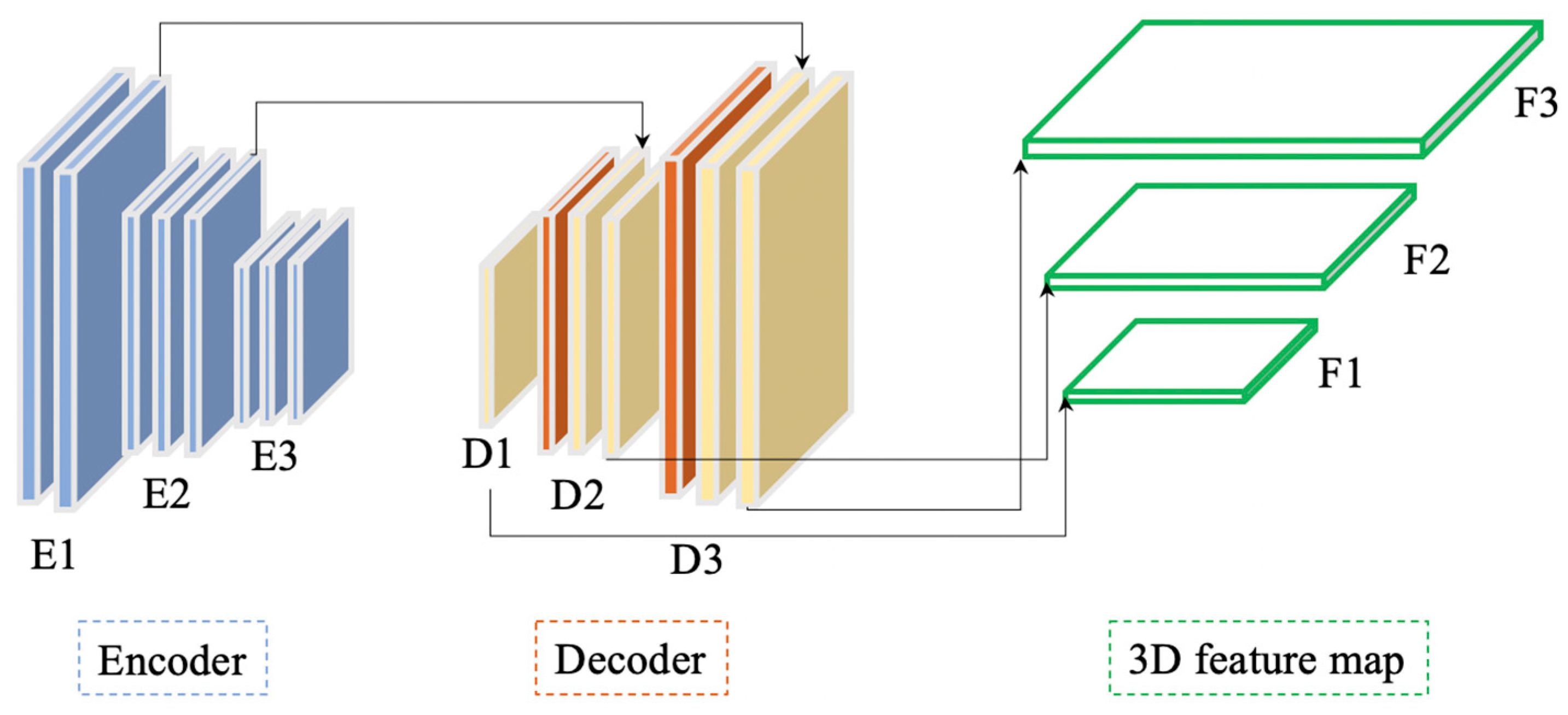

- 3D feature extraction. The 3D feature extraction model adopts an encoder–decoder structure. The schematic of the main network is shown in Figure 9. Three layers of 3D feature maps F1, F2, F3 can be obtained from different levels of the encoder–decoder. The encoder and decoder each consist of three modules. The encoder primarily uses a residual block based on standard 3D convolution. The decoder’s residual block utilizes both transposed 3D convolution and standard 3D convolution. To mitigate issues like gradient vanishing and explosion due to an increasing number of model layers, a skip connection operation is incorporated in the structure.

- (4)

- 3D loss functions. The 3D object detection network takes 3D feature maps as input and predicts three prediction outcomes: object category, object center point, and object detection box. The main framework is depicted in Figure 10. These three parallel outputs are fed into the classification loss function FL, the center point loss function Lc, and the 3D detection box loss function Lbox [59]. The overall training loss is represented in Equation (3).
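The anchor generation in step (2) can be sketched as follows. This is only an illustration under stated assumptions: Equation (2) is not reproduced here, so the field-of-view restriction is approximated by a pinhole projection test, the axis assignment (W along X, H along Y, D along Z) is assumed, and the metric ranges, the intrinsics `fx`, `fy`, `cx`, `cy`, and the function names are hypothetical. The 640 × 480 image size matches the resized training images.

```python
from itertools import product


def linspace(lo, hi, n):
    """Return n evenly spaced values from lo to hi inclusive (n >= 2)."""
    step = (hi - lo) / (n - 1)
    return [lo + i * step for i in range(n)]


def make_anchors(D, H, W, x_range, y_range, z_range):
    """Regular grid of N = D * H * W anchor points (xc, yc, zc) in the camera frame O-XYZ."""
    xs = linspace(x_range[0], x_range[1], W)
    ys = linspace(y_range[0], y_range[1], H)
    zs = linspace(z_range[0], z_range[1], D)
    return [(x, y, z) for z, y, x in product(zs, ys, xs)]


def in_field_of_view(point, fx, fy, cx, cy, width, height):
    """True if the point lies in front of the camera and projects inside the image."""
    x, y, z = point
    if z <= 0:
        return False
    u = fx * x / z + cx
    v = fy * y / z + cy
    return 0 <= u < width and 0 <= v < height


# Usage with hypothetical grid size, ranges (metres) and intrinsics.
camera = dict(fx=500.0, fy=500.0, cx=320.0, cy=240.0, width=640, height=480)
anchors = make_anchors(4, 3, 5, (-2.0, 2.0), (-1.0, 1.0), (0.5, 6.0))
visible = [p for p in anchors if in_field_of_view(p, **camera)]
print(len(anchors), len(visible))
```

Anchors close to the camera and far from the optical axis fall outside the image and are discarded, which is one way to restrict the grid to the viewing frustum.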

3.3. Multi-Object Tracking

3.4. Visual SLAM

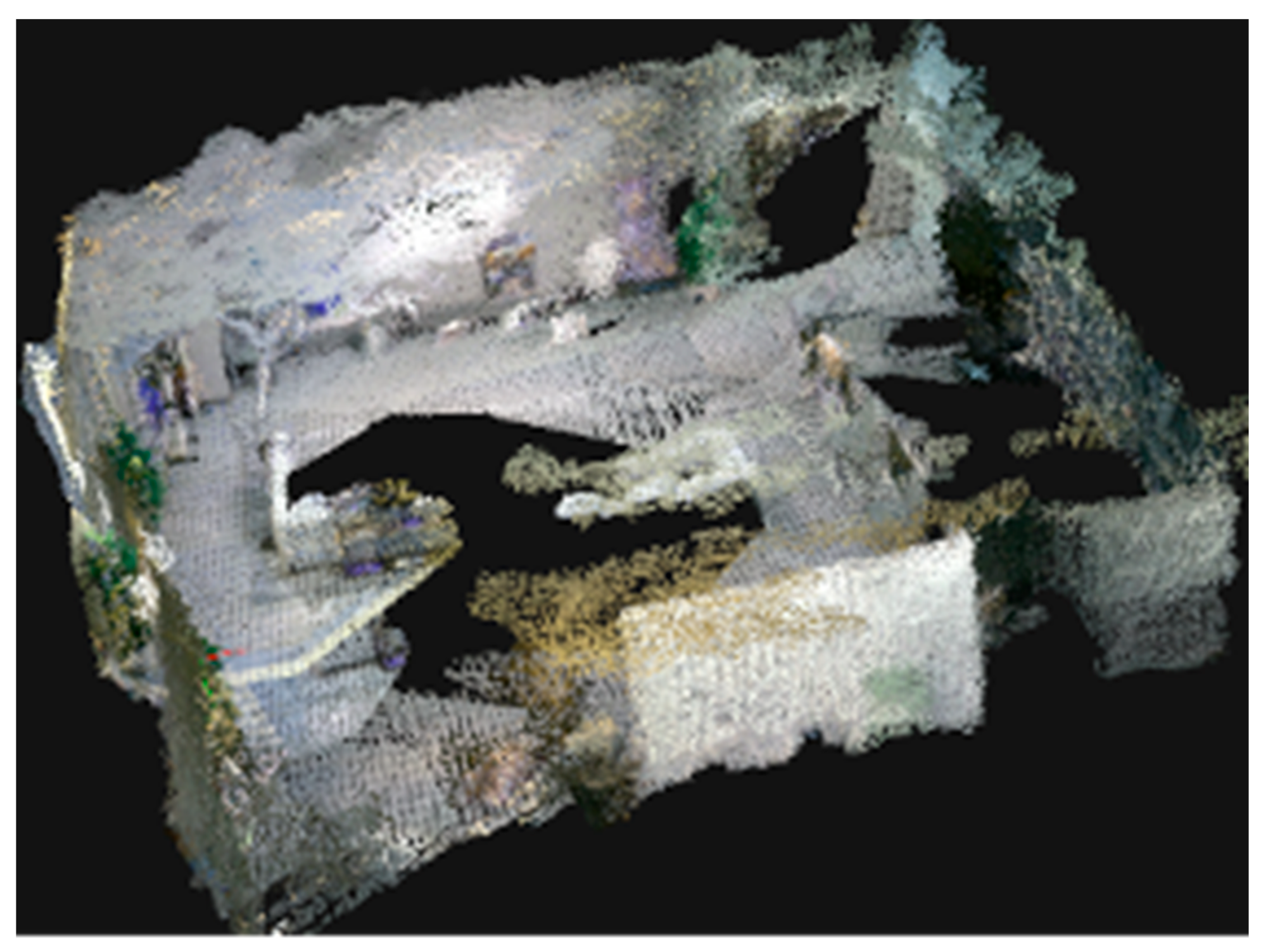

- (1) Data association: For each image frame, we project the 3D point cloud onto the 2D image using the projection relationship. Data association is completed by checking whether a projected point on the 2D image falls within the boundaries of the 3D detection box.

- (2) Loop detection: Using the g2o graph optimization library, we add constraints on the center points of the 3D boxes and on their overlap volume between adjacent frames to reduce ghosting and improve mapping accuracy.
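The data association check in step (1) might look like the sketch below. It assumes a pinhole camera, ignores box orientation, and interprets "the boundaries of the 3D detection box" as the 2D rectangle enclosing the projected box corners. That interpretation, the intrinsics, and the names `project`, `box_corners`, and `associate` are assumptions for illustration only. The box is assumed to lie entirely in front of the camera.

```python
from itertools import product


def project(point, fx, fy, cx, cy):
    """Pinhole projection of a camera-frame point (x, y, z), z > 0, to pixel (u, v)."""
    x, y, z = point
    return (fx * x / z + cx, fy * y / z + cy)


def box_corners(center, size):
    """Eight corners of an axis-aligned 3D box (orientation is ignored in this sketch)."""
    x0, y0, z0 = center
    hx, hy, hz = (s / 2.0 for s in size)
    return [(x0 + sx * hx, y0 + sy * hy, z0 + sz * hz)
            for sx, sy, sz in product((-1, 1), repeat=3)]


def associate(points, center, size, intrinsics):
    """Indices of map points whose projection falls inside the projected box outline."""
    corners_uv = [project(c, *intrinsics) for c in box_corners(center, size)]
    u_min = min(u for u, _ in corners_uv)
    u_max = max(u for u, _ in corners_uv)
    v_min = min(v for _, v in corners_uv)
    v_max = max(v for _, v in corners_uv)
    hits = []
    for index, point in enumerate(points):
        if point[2] <= 0:
            continue  # points behind the camera cannot be observed
        u, v = project(point, *intrinsics)
        if u_min <= u <= u_max and v_min <= v <= v_max:
            hits.append(index)
    return hits


# Usage with hypothetical intrinsics (fx, fy, cx, cy) and one detected box.
intrinsics = (500.0, 500.0, 320.0, 240.0)
map_points = [(0.0, 0.0, 4.0), (2.0, 0.0, 4.0), (0.0, 0.0, -1.0)]
print(associate(map_points, center=(0.0, 0.0, 4.0), size=(1.0, 1.0, 1.0),
                intrinsics=intrinsics))
```

A purely 2D test like this can also capture background points that lie behind the object along the same viewing ray, so a practical system would likely add a depth check as well.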

4. Experimental Evaluation

4.1. Datasets

4.2. 3D Object Detection

- (1) Training. The model is trained on a GPU with PyTorch 2.0.0 for 12 rounds, using AdamW as the optimizer and a batch size of 32. The images are resized to 640 × 480, and augmentation operations such as flipping and scaling are used to improve detection of difficult objects.

- (2) Results. We use the evaluation method of the SUNRGBD dataset to assess the accuracy of our algorithm. Table 1 and Table 2 show the AP and AR values at 3D IoU thresholds of 0.25 and 0.5 for 12 and 14 object categories in the SUNRGBD dataset, respectively. The corresponding mAP@0.25 values are 50.13 and 57.2. We also evaluate the average translation error (ATE) and average orientation error (AOE), as shown in Table 3.
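To make the metrics concrete, the sketch below computes a 3D IoU and a mean AP. It is a simplified illustration: boxes are treated as axis-aligned, whereas the SUNRGBD evaluation uses oriented boxes, and the function names are hypothetical. The per-class values are the AP_0.25 row of Table 1.

```python
def iou_3d_axis_aligned(a, b):
    """IoU of two axis-aligned boxes, each given as (cx, cy, cz, dx, dy, dz)."""
    intersection = 1.0
    for i in range(3):
        lo = max(a[i] - a[i + 3] / 2.0, b[i] - b[i + 3] / 2.0)
        hi = min(a[i] + a[i + 3] / 2.0, b[i] + b[i + 3] / 2.0)
        if hi <= lo:
            return 0.0  # no overlap along this axis
        intersection *= hi - lo
    volume_a = a[3] * a[4] * a[5]
    volume_b = b[3] * b[4] * b[5]
    return intersection / (volume_a + volume_b - intersection)


def mean_ap(per_class_ap):
    """mAP as the unweighted mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)


# A prediction shifted by half a box width relative to the ground truth.
ground_truth = (0.0, 0.0, 0.0, 2.0, 2.0, 2.0)
prediction = (1.0, 0.0, 0.0, 2.0, 2.0, 2.0)
iou = iou_3d_axis_aligned(ground_truth, prediction)
print(iou, iou >= 0.25, iou >= 0.5)

# AP_0.25 values of the 12 categories in Table 1.
table1_ap_025 = [50.85, 37.37, 40.56, 69.92, 36.40, 53.70,
                 24.64, 59.18, 77.87, 69.36, 19.21, 62.45]
print(round(mean_ap(table1_ap_025), 2))
```

The shifted prediction has an IoU of one third, so it counts as a match at the 0.25 threshold but not at 0.5, which is why AP_0.50 is consistently lower than AP_0.25 in the tables.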

4.3. MOT

4.4. Visual SLAM

4.5. Experimental Summary

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Wu, H.; Liu, Y.; Wang, C.; Wei, Y. An Effective 3D Instance Map Reconstruction Method Based on RGBD Images for Indoor Scene. Remote Sens. 2025, 17, 139.

2. Perfetti, L.; Fassi, F.; Vassena, G. Ant3D—A Fisheye Multi-Camera System to Survey Narrow Spaces. Sensors 2024, 24, 4177.

3. Bedkowski, J. Open Source, Open Hardware Hand-Held Mobile Mapping System for Large Scale Surveys. SoftwareX 2024, 25, 101618.

4. Gao, X.; Yang, R.; Chen, X.; Tan, J.; Liu, Y.; Wang, Z.; Tan, J.; Liu, H. A New Framework for Generating Indoor 3D Digital Models from Point Clouds. Remote Sens. 2024, 16, 3462.

5. Huang, Y.; Xie, F.; Zhao, J.; Gao, Z.; Chen, J.; Zhao, F.; Liu, X. ULG-SLAM: A Novel Unsupervised Learning and Geometric Feature-Based Visual SLAM Algorithm for Robot Localizability Estimation. Remote Sens. 2024, 16, 1968.

6. Cheng, S.; Sun, C.; Zhang, S.; Zhang, D. SG-SLAM: A Real-Time RGB-D Visual SLAM Toward Dynamic Scenes with Semantic and Geometric Information. IEEE Trans. Instrum. Meas. 2023, 72, 7501012.

7. Ji, T.; Wang, C.; Xie, L. Towards Real-time Semantic RGB-D SLAM in Dynamic Environments. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 11175–11181.

8. Hu, X. Multi-level map construction for dynamic scenes. arXiv 2023, arXiv:2308.04000.

9. Wang, T.; Zhu, X.; Pang, J.; Lin, D. Fcos3d: Fully convolutional one-stage monocular 3d object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Online, 11–17 October 2021; pp. 913–922.

10. Tian, Z.; Shen, C.; Chen, H.; Chen, H. Fcos: Fully convolutional one-stage object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9627–9636.

11. Park, D.; Ambrus, R.; Guizilini, V.; Li, J.; Gaidon, A. Is pseudo-lidar needed for monocular 3d object detection? In Proceedings of the IEEE/CVF International Conference on Computer Vision, Online, 11–17 October 2021; pp. 3142–3152.

12. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

13. Philion, J.; Fidler, S. Lift, splat, shoot: Encoding images from arbitrary camera rigs by implicitly unprojecting to 3d. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer International Publishing: Cham, Switzerland, 2020; pp. 194–210.

14. Reading, C.; Harakeh, A.; Chae, J.; Waslander, S.L. Categorical depth distribution network for monocular 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Online, 19–25 June 2021; pp. 8555–8564.

15. Huang, J.; Huang, G.; Zhu, Z.; Ye, Y.; Du, D. Bevdet: High-performance multi-camera 3d object detection in bird-eye-view. arXiv 2021, arXiv:2112.11790.

16. Zhang, R.; Qiu, H.; Wang, T.; Guo, Z.; Cui, Z.; Qiao, Y.; Li, H.; Gao, P. MonoDETR: Depth-guided transformer for monocular 3D object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 9155–9166.

17. Yin, Z.; Wen, H.; Nie, W.; Zhou, M. Localization of Mobile Robots Based on Depth Camera. Remote Sens. 2023, 15, 4016.

18. Wang, T.; Lian, Q.; Zhu, C.; Zhu, X.; Zhang, W. Mv-fcos3d++: Multi-view camera-only 4d object detection with pretrained monocular backbones. arXiv 2022, arXiv:2207.12716.

19. Yan, L.; Yan, P.; Xiong, S.; Xiang, X.; Tan, Y. Monocd: Monocular 3d object detection with complementary depths. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 10248–10257.

20. Li, Z.; Xu, X.; Lim, S.; Zhao, H. Unimode: Unified monocular 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 16561–16570.

21. Barreiros, M.O.; Dantas, D.O.; Silva, L.C.O.; Ribeiro, S.; Barros, A.K. Zebrafish tracking using YOLOv2 and Kalman filter. Sci. Rep. 2021, 11, 3219.

22. Jayawickrama, N.; Ojala, R.; Tammi, K. Using Scene-Flow to Improve Predictions of Road Users in Motion with Respect to an Ego-Vehicle. IET Intell. Transp. Syst. 2025, 19, e70010.

23. Guo, G.; Zhao, S. 3D multi-object tracking with adaptive cubature Kalman filter for autonomous driving. IEEE Trans. Intell. Veh. 2022, 8, 512–519.

24. Abdelkader, M.; Gabr, K.; Jarraya, I.; AlMusalami, A.; Koubaa, A. SMART-TRACK: A Novel Kalman Filter-Guided Sensor Fusion for Robust UAV Object Tracking in Dynamic Environments. IEEE Sens. J. 2025, 25, 3086–3097.

25. Pang, Z.; Li, J.; Tokmakov, P.; Chen, D.; Zagoruyko, S.; Wang, Y.-X. Standing between past and future: Spatio-temporal modeling for multi-camera 3d multi-object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 11–15 June 2023; pp. 17928–17938.

26. Wang, Y.H. Smiletrack: Similarity learning for multiple object tracking. arXiv 2022, arXiv:2211.08824.

27. Gu, J.; Wu, B.; Fan, L.; Huang, J.; Cao, S.; Xiang, Z.; Hua, X.S. Homography loss for monocular 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 1080–1089.

28. Luo, X.; Liu, D.; Kong, H.; Huai, S.; Chen, H.; Xiong, G.; Liu, W. Efficient deep learning infrastructures for embedded computing systems: A comprehensive survey and future envision. ACM Trans. Embed. Comput. Syst. 2024, 24, 1–100.

29. Wu, J.; Wang, L.; Jin, Q.; Liu, F. Graft: Efficient Inference Serving for Hybrid Deep Learning with SLO Guarantees via DNN Re-Alignment. IEEE Trans. Parallel Distrib. Syst. 2024, 35, 280–296.

30. Tian, P.; Li, H. Visual SLAMMOT Considering Multiple Motion Models. arXiv 2024, arXiv:2411.19134.

31. Krishna, G.S.; Supriya, K.; Baidya, S. 3ds-slam: A 3d object detection based semantic slam towards dynamic indoor environments. arXiv 2023, arXiv:2310.06385.

32. Tufte, E.R.; Graves-Morris, P.R. The Visual Display of Quantitative Information; Graphics Press: Cheshire, CT, USA, 1983.

33. Ware, C. Information Visualization: Perception for Design; Morgan Kaufmann: Burlington, MA, USA, 2019.

34. Cui, L.; Ma, C. SOF-SLAM: A semantic visual SLAM for dynamic environments. IEEE Access 2019, 7, 166528–166539.

35. Cui, X.; Lu, C.; Wang, J. 3D semantic map construction using improved ORB-SLAM2 for mobile robot in edge computing environment. IEEE Access 2020, 8, 67179–67191.

36. Han, S.; Xi, Z. Dynamic scene semantics SLAM based on semantic segmentation. IEEE Access 2020, 8, 43563–43570.

37. Yuan, X.; Chen, S. Sad-slam: A visual slam based on semantic and depth information. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 4930–4935.

38. Qin, T.; Li, P.; Shen, S. Vins-mono: A robust and versatile monocular visual-inertial state estimator. IEEE Trans. Robot. 2018, 34, 1004–1020.

39. Mur-Artal, R.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM: A Versatile Accurate Monocular SLAM system. IEEE Trans. Robot. 2015, 31, 1147–1163.

40. Mur-Artal, R.; Tardós, J.D. Orb-slam2: An open-source slam system for monocular, stereo, and rgb-d cameras. IEEE Trans. Robot. 2017, 33, 1255–1262.

41. Campos, C.; Elvira, R.; Rodríguez, J.J.G.; Montiel, J.M.M.; Tardós, J.D. Orb-slam3: An accurate open-source library for visual, visual–inertial, and multimap slam. IEEE Trans. Robot. 2021, 37, 1874–1890.

42. Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-scale direct monocular SLAM. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer International Publishing: Cham, Switzerland, 2014; pp. 834–849.

43. Zhang, J.; Henein, M.; Mahony, R.; Ila, V. VDO-SLAM: A visual dynamic object-aware SLAM system. arXiv 2020, arXiv:2005.11052.

44. Tateno, K.; Tombari, F.; Laina, I.; Navab, N. Cnn-slam: Real-time dense monocular slam with learned depth prediction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6243–6252.

45. Li, X.; Belaroussi, R. Semi-dense 3d semantic mapping from monocular slam. arXiv 2016, arXiv:1611.04144.

46. Ran, Y.; Xu, X.; Luo, M.; Yang, J.; Chen, Z. Scene Classification Method Based on Multi-Scale Convolutional Neural Network with Long Short-Term Memory and Whale Optimization Algorithm. Remote Sens. 2024, 16, 174.

47. Bowman, S.L.; Atanasov, N.; Daniilidis, K.; Pappas, G.J. Probabilistic data association for semantic slam. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 1722–1729.

48. Lianos, K.N.; Schonberger, J.L.; Pollefeys, M.; Sattler, T. Vso: Visual semantic odometry. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 234–250.

49. Civera, J.; Gálvez-López, D.; Riazuelo, L.; Tardos, J.D.; Montiel, J.M.M. Towards semantic SLAM using a monocular camera. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 1277–1284.

50. Rukhovich, D.; Vorontsova, A.; Konushin, A. Imvoxelnet: Image to voxels projection for monocular and multi-view general-purpose 3d object detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 4–8 January 2022; pp. 2397–2406.

51. Qi, C.R.; Chen, X.; Litany, O.; Guibas, L.J. Imvotenet: Boosting 3d object detection in point clouds with image votes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Online, 14–19 June 2020; pp. 4404–4413.

52. Ding, Z.; Han, X.; Niethammer, M. Votenet: A deep learning label fusion method for multi-atlas segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Shenzhen, China, 13–17 October 2019; Springer International Publishing: Cham, Switzerland, 2019; pp. 202–210.

53. Wan, L.; Liu, Y.; Pi, Y. Comparing of target-tracking performances of EKF, UKF and PF. Radar Sci. Technol. 2007, 5, 13–16.

54. Gupta, S.D.; Yu, J.Y.; Mallick, M.; Coates, M.; Morelande, M. Comparison of angle-only filtering algorithms in 3D using EKF, UKF, PF, PFF, and ensemble KF. In Proceedings of the 2015 18th International Conference on Information Fusion (Fusion), Washington, DC, USA, 6–9 July 2015; pp. 1649–1656.

55. Weng, X.; Wang, J.; Held, D.; Kitani, K. Ab3dmot: A baseline for 3d multi-object tracking and new evaluation metrics. arXiv 2020, arXiv:2008.08063.

56. Song, S.; Lichtenberg, S.P.; Xiao, J. Sun rgb-d: A rgb-d scene understanding benchmark suite. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 8–10 June 2015; pp. 567–576.

57. Wang, Y.; Ye, T.Q.; Cao, L.; Huang, W.; Sun, F.; He, F.; Tao, D. Bridged transformer for vision and point cloud 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 12114–12123.

58. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

59. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.

60. Huang, S.; Qi, S.; Zhu, Y.; Xiao, Y.; Xu, Y.; Zhu, S.C. Holistic 3d scene parsing and reconstruction from a single rgb image. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 187–203.

61. Huang, S.; Qi, S.; Xiao, Y.; Zhu, Y.; Wu, Y.N.; Zhu, S.-C. Cooperative holistic scene understanding: Unifying 3d object, layout, and camera pose estimation. Adv. Neural Inf. Process. Syst. 2018, 31, 206–217.

62. Nie, Y.; Han, X.; Guo, S.; Zheng, Y.; Zhang, J.J. Total3dunderstanding: Joint layout, object pose and mesh reconstruction for indoor scenes from a single image. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Online, 14–19 June 2020; pp. 55–64.

63. Qi, C.R.; Liu, W.; Wu, C.; Su, H.; Guibas, L.J. Frustum pointnets for 3d object detection from rgb-d data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 918–927.

64. Zhang, Z.; Sun, B.; Yang, H.; Huang, Q. H3dnet: 3d object detection using hybrid geometric primitives. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer International Publishing: Cham, Switzerland, 2020; pp. 311–329.

Table 1. AP and AR at 3D IoU thresholds of 0.25 and 0.5 for 12 object categories in the SUNRGBD dataset.

| AP/AR | Bksf | Pil | Chair | Kcct | Tv | Plant | Box | Prin | Sofa | Fcab | Paint | Cntr | mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| AP_0.25 | 50.85 | 37.37 | 40.56 | 69.92 | 36.40 | 53.70 | 24.64 | 59.18 | 77.87 | 69.36 | 19.21 | 62.45 | 50.13 |
| AR_0.25 | 61.76 | 49.26 | 52.69 | 80.65 | 38.46 | 63.33 | 28.38 | 71.43 | 80.39 | 76.67 | 26.67 | 82.14 | 59.32 |
| AP_0.50 | 29.69 | 20.14 | 19.84 | 37.80 | 24.45 | 33.61 | 15.42 | 42.77 | 40.65 | 52.33 | 5.64 | 24.58 | 28.91 |
| AR_0.50 | 35.29 | 22.79 | 29.62 | 45.16 | 26.92 | 36.67 | 17.57 | 54.29 | 45.10 | 56.67 | 11.11 | 32.14 | 34.44 |

Table 2. AP and AR at 3D IoU thresholds of 0.25 and 0.5 for 14 object categories in the SUNRGBD dataset.

| AP/AR | Bksf | Pil | Chair | Kcct | Tv | Cntr | Paint | Plant | Box | Fcab | Endt | Sofa | Coft | Prin | mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| AP_0.25 | 63.1 | 36.1 | 50.8 | 65.3 | 44.3 | 57.8 | 39.3 | 65.2 | 22.2 | 57.5 | 75.5 | 68.5 | 81.2 | 74.6 | 57.2 |
| AR_0.25 | 65.1 | 40.4 | 55.4 | 73.3 | 48.2 | 70.4 | 41.4 | 67.6 | 24.4 | 63.2 | 79.2 | 74.2 | 85.4 | 80.0 | 62.0 |
| AP_0.50 | 60.7 | 24.3 | 38.8 | 46.0 | 37.0 | 28.8 | 18.9 | 56.1 | 19.2 | 33.8 | 66.5 | 52.4 | 72.1 | 49.5 | 43.2 |
| AR_0.50 | 62.8 | 26.9 | 42.8 | 50.0 | 37.0 | 33.3 | 22.4 | 59.5 | 20.5 | 39.5 | 66.7 | 57.0 | 75.6 | 60.0 | 46.7 |

Table 3. Average translation error (ATE) and average orientation error (AOE).

| Indicators | Bed | Chair | Sofa | Table | Desk | Dresser | Night_Stand | Sink | Cabinet | Lamp | mATE/mAOE |
|---|---|---|---|---|---|---|---|---|---|---|---|
| ATE | 0.13 | 0.24 | 0.24 | 0.27 | 0.31 | 0.14 | 0.07 | 0.12 | 0.13 | 0.08 | 0.17 |
| AOE | 0.53 | 0.28 | 0.40 | −0.13 | −0.02 | 0.32 | −0.19 | −0.26 | −0.01 | −0.07 | 0.08 |

| Method | Bed | Chair | Sofa | Table | Desk | Dresser | Night_Stand | Sink | Cabinet | Lamp | mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|
| HoPR [60] | 58.29 | 13.56 | 28.37 | 12.12 | 4.79 | 13.71 | 8.80 | 2.18 | 0.48 | 2.41 | 14.47 |
| Coop [61] | 63.58 | 17.12 | 41.22 | 26.21 | 9.55 | 4.28 | 6.34 | 5.34 | 2.63 | 1.75 | 17.80 |
| T3DU [62] | 59.03 | 15.98 | 43.95 | 35.28 | 23.65 | 19.20 | 6.87 | 14.40 | 11.39 | 3.46 | 23.32 |
| ImVoxelNet [50] | 79.17 | 63.07 | 60.59 | 51.14 | 31.20 | 35.45 | 38.38 | 45.12 | 19.24 | 13.27 | 43.66 |
| Ours | 87.83 | 48.67 | 66.04 | 40.68 | 44.92 | 74.43 | 87.18 | 56.86 | 60.82 | 66.31 | 63.37 |

| Method | RGB | PC | Bath | Bed | Bookshelf | Chair | Desk | Dresser | Night_Stand | Sofa | Table | Toilet | mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| F-PointNet [63] | yes | yes | 43.3 | 81.1 | 33.3 | 64.2 | 24.7 | 32.0 | 58.1 | 61.1 | 51.1 | 90.9 | 54.0 |
| VoteNet [52] | no | yes | 74.4 | 83.0 | 28.8 | 75.3 | 22.0 | 29.8 | 62.2 | 64.0 | 47.3 | 90.1 | 57.7 |
| H3DNet [64] | no | yes | 73.8 | 85.6 | 31.0 | 76.7 | 29.6 | 33.4 | 65.5 | 66.5 | 50.8 | 88.2 | 60.1 |
| ImVoteNet [51] | yes | yes | 75.9 | 87.6 | 41.3 | 76.7 | 28.7 | 41.4 | 69.9 | 70.7 | 51.1 | 90.5 | 63.4 |
| ImVoxelNet [50] | yes | no | 71.7 | 69.6 | 5.7 | 53.7 | 21.9 | 21.2 | 34.6 | 51.5 | 39.1 | 76.8 | 40.7 |
| Ours | yes | no | 82.1 | 29.9 | 33.5 | 31.3 | 61.0 | 78.7 | 65.1 | 41.4 | 66.6 | 54.4 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, W.; Wu, R.; Dong, Y.; Jiang, H. Research on Indoor 3D Semantic Mapping Based on ORB-SLAM2 and Multi-Object Tracking. Appl. Sci. 2025, 15, 10881. https://doi.org/10.3390/app152010881
