MBD-YOLO: An Improved Lightweight Multi-Scale Small-Object Detection Model for UAVs Based on YOLOv8

Abstract

1. Introduction

- We propose a Multi-Branch Feature Fusion (MBFF) architecture that replaces the fixed-receptive-field design of the traditional C2f module. By dynamically adjusting convolutional kernel sizes, MBFF extracts multi-scale features collaboratively. The module strengthens feature representation for small targets, reduces the parameter count (from 3.2 M to 3.0 M when added alone to YOLOv8n), and mitigates the feature degradation caused by extreme scale variations in UAV imagery.

- We present a lightweight Bidirectional Multi-Scale Feature Aggregation Pyramid Network (BiMS-FPN), which integrates bidirectional propagation paths and a Multi-Scale Feature Aggregation (MSFA) module into the conventional FPN structure. BiMS-FPN mitigates the spatial misalignment inherent in standard FPNs, reinforces geometric consistency across multi-resolution features, and improves detection accuracy for small targets (mAP50 from 0.269 to 0.279 when added alone), while slightly reducing the parameter count (from 3.2 M to 3.1 M) at a small increase in computation (from 5.7 to 6.0 GFLOPs).

- We employ a Dual-Stream Head with a dual-branch, task-aligned architecture. Combined with hierarchical distillation and a dynamic matching metric, this head enables efficient end-to-end inference. The One-to-Many branch strengthens supervision during training, whereas the One-to-One branch enables Non-Maximum Suppression (NMS)-free inference; together they improve recall for small targets (from 0.275 to 0.291 when added alone).

- Extensive experiments on the VisDrone2019 dataset show that MBD-YOLO achieves a favorable balance between model size and detection accuracy. Compared with the baseline YOLOv8n, the nano model (Ours-n) raises mAP50 from 0.269 to 0.289 and mAP50-95 from 0.159 to 0.172, with the largest per-class gains on small-object categories such as pedestrian, people, bicycle and motor. With 3.3 M parameters, it is also lighter than several recent YOLO variants, such as Gold-YOLOn (5.6 M) and LUD-YOLO (10.3 M).

2. Materials and Methods

2.1. MBD-YOLO Model Architecture

2.2. Key Modules

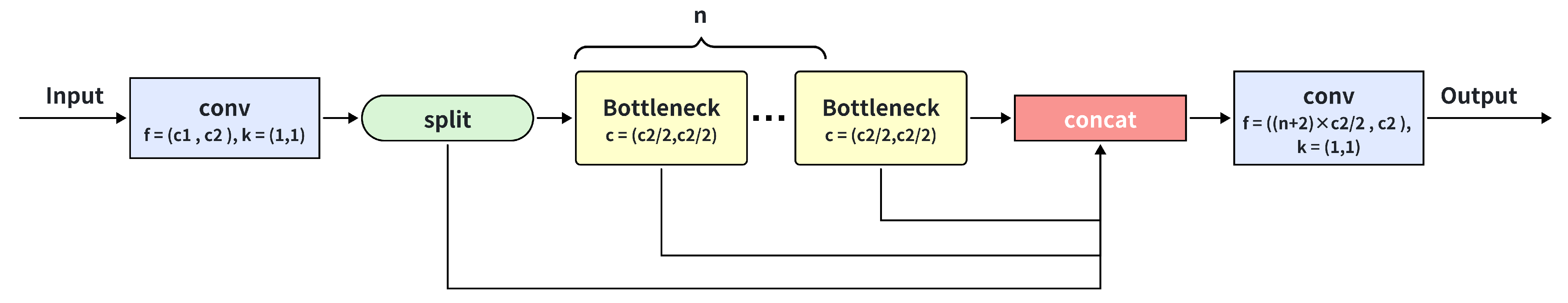

2.2.1. Multi-Branch Feature Fusion—MBFF

- Fixed-size convolutional kernels restrict receptive-field diversity, hindering adaptation to multi-scale targets and significantly degrading detection performance for small or occluded objects;

- Direct concatenation lacks adaptive weight allocation, resulting in insufficient feature fusion capability and ineffective integration of multi-scale contextual information;

- Static computational resource allocation fixes the number of Bottleneck blocks per branch (n = 2), preventing dynamic adjustment based on target complexity, thereby causing redundant computations or inadequate feature extraction.

- the ReLU activation function;

- the learnable weights and bias of the 1 × 1 convolution;

- the expanded channel dimension;

- W, the number of parallel branches.
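A 1 × 1 convolution acts as a per-pixel linear map across channels, which is why it is a cheap way to expand channel width before the branches. The plain-Python sketch below applies a 1 × 1 convolution with weights and bias followed by ReLU; the function name, the nested-list tensor layout and the 2-to-4 channel expansion are illustrative assumptions, not MBFF's actual configuration, and the branch count W is not modeled.

```python
from typing import List

# Feature maps as nested lists indexed [channel][row][col] (illustrative layout).
Tensor3 = List[List[List[float]]]


def conv1x1_relu(x: Tensor3, weight: List[List[float]], bias: List[float]) -> Tensor3:
    """1x1 convolution followed by ReLU.

    weight[o][i] maps input channel i to output channel o, and bias[o] is added
    to every pixel of output channel o.
    """
    c_in = len(x)
    height, width = len(x[0]), len(x[0][0])
    out: Tensor3 = []
    for o, w_row in enumerate(weight):
        if len(w_row) != c_in:
            raise ValueError("each weight row must have one entry per input channel")
        # A 1x1 kernel mixes channels at the same pixel; no spatial neighbours are used.
        plane = [
            [max(0.0, sum(w_row[i] * x[i][r][c] for i in range(c_in)) + bias[o])
             for c in range(width)]
            for r in range(height)
        ]
        out.append(plane)
    return out


# Hypothetical example: expand 2 input channels to 4 on a 1x2 feature map.
x = [[[1.0, -2.0]], [[0.5, 3.0]]]
weight = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]]
bias = [0.0, 0.0, 0.0, 0.0]
y = conv1x1_relu(x, weight, bias)
print(len(y), y)
```

Negative pre-activations (for example, the second pixel of output channel 0) are clipped to zero by ReLU.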

- the point-wise convolution weights;

- the depthwise convolution and its kernel size;

- the intermediate channel dimension.
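The definitions above describe a point-wise/depthwise pair. Assuming the order point-wise, depthwise (k × k), point-wise, with the intermediate channel dimension in the middle, the weight count below suggests why large depthwise kernels remain affordable compared with a dense k × k convolution. The channel widths (64) and kernel sizes are hypothetical, and biases are ignored.

```python
def dense_conv_weights(c_in: int, c_out: int, k: int) -> int:
    """Weight count of a standard k x k convolution (bias omitted)."""
    return c_in * c_out * k * k


def pw_dw_pw_weights(c_in: int, c_mid: int, c_out: int, k: int) -> int:
    """Weight count of point-wise -> depthwise k x k -> point-wise (bias omitted)."""
    pw_in = c_in * c_mid    # 1x1 projection to the intermediate channel dimension
    dw = c_mid * k * k      # one k x k filter per intermediate channel
    pw_out = c_mid * c_out  # 1x1 projection to the output channels
    return pw_in + dw + pw_out


# Hypothetical widths: 64 channels everywhere, three candidate kernel sizes.
for k in (3, 5, 7):
    print(k, dense_conv_weights(64, 64, k), pw_dw_pw_weights(64, 64, 64, k))
```

Growing the kernel from 3 to 7 adds only 2560 weights in the separable form (8768 to 11328), against 163840 in the dense form (36864 to 200704), because only the depthwise term depends on k.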

- the branch index;

- the depth level index;

- the cascaded features from the previous branch.

- the final 1 × 1 convolution weights;

- the total number of concatenated channels.
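The branch index, the cascaded features and the final 1 × 1 fusion can be illustrated at a single pixel. In the sketch below, how the cascaded features enter the next branch (element-wise addition, as in Res2Net-style designs) and the toy branch functions are assumptions, and the depth level index is not modeled. Concatenating W branches of width C yields W × C channels, which the final 1 × 1 convolution maps to the output width.

```python
from typing import Callable, List

Vector = List[float]  # channel values at a single pixel


def cascaded_branches(x: Vector, branches: List[Callable[[Vector], Vector]]) -> Vector:
    """Run W branches in cascade and concatenate their outputs along channels.

    Assumption (Res2Net-style): branch 0 receives the input alone, and each later
    branch receives the input plus the output of the previous branch.
    """
    outputs: List[Vector] = []
    prev: Vector = []
    for i, branch in enumerate(branches):
        inp = x if i == 0 else [a + b for a, b in zip(x, prev)]
        prev = branch(inp)
        outputs.append(prev)
    # Channel-wise concatenation: the total width is the sum of the branch widths.
    return [v for out in outputs for v in out]


def fuse_1x1(z: Vector, weight: List[List[float]]) -> Vector:
    """Final 1x1 convolution at one pixel: a matrix-vector product (bias omitted)."""
    return [sum(w * v for w, v in zip(row, z)) for row in weight]


# Toy stand-ins for three branches with different kernel sizes (hypothetical).
branches: List[Callable[[Vector], Vector]] = [
    lambda v: [2.0 * t for t in v],
    lambda v: [t + 1.0 for t in v],
    lambda v: [-t for t in v],
]
z = cascaded_branches([1.0, 2.0], branches)
print(z, fuse_1x1(z, [[1.0] * len(z)]))
```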

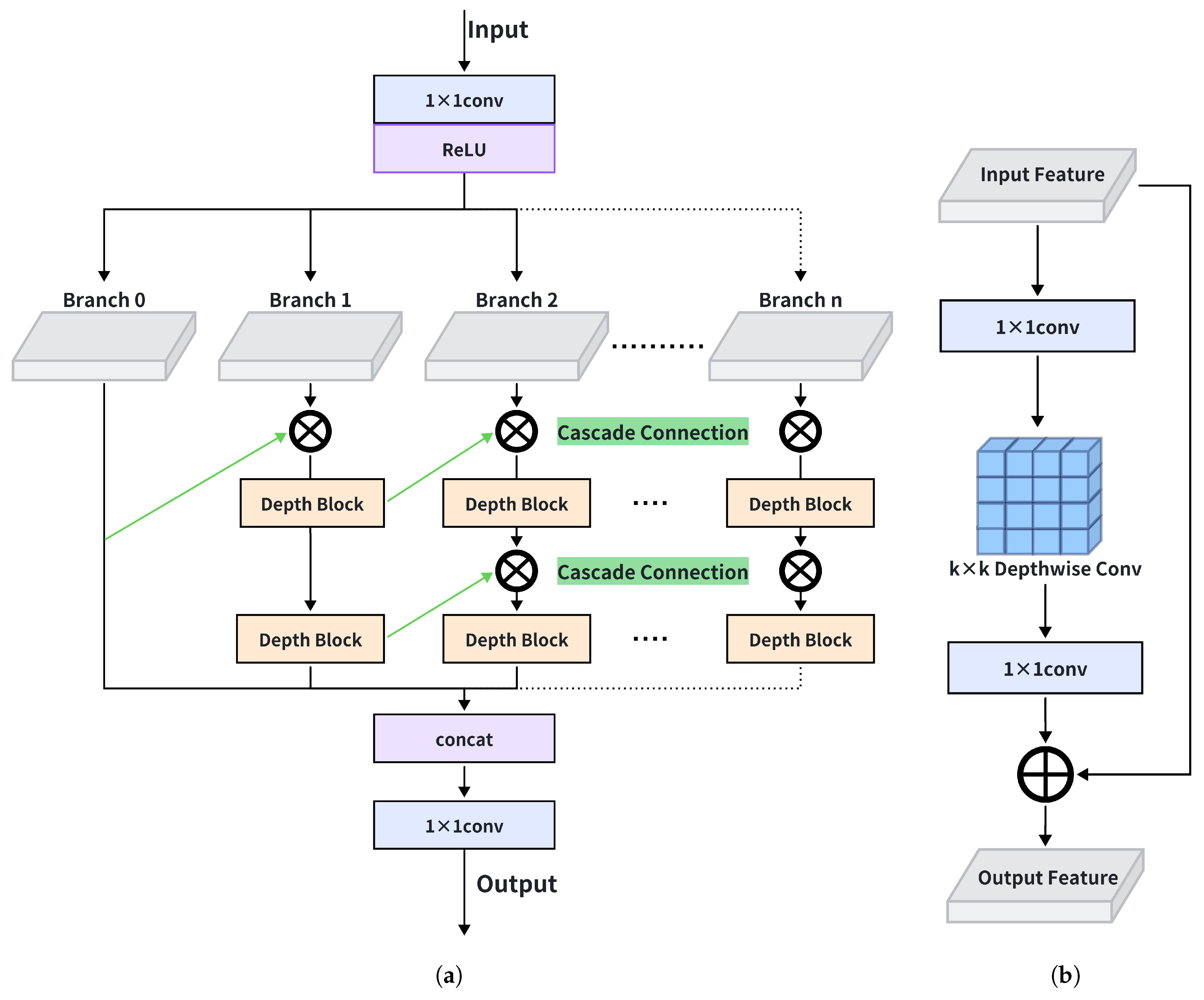

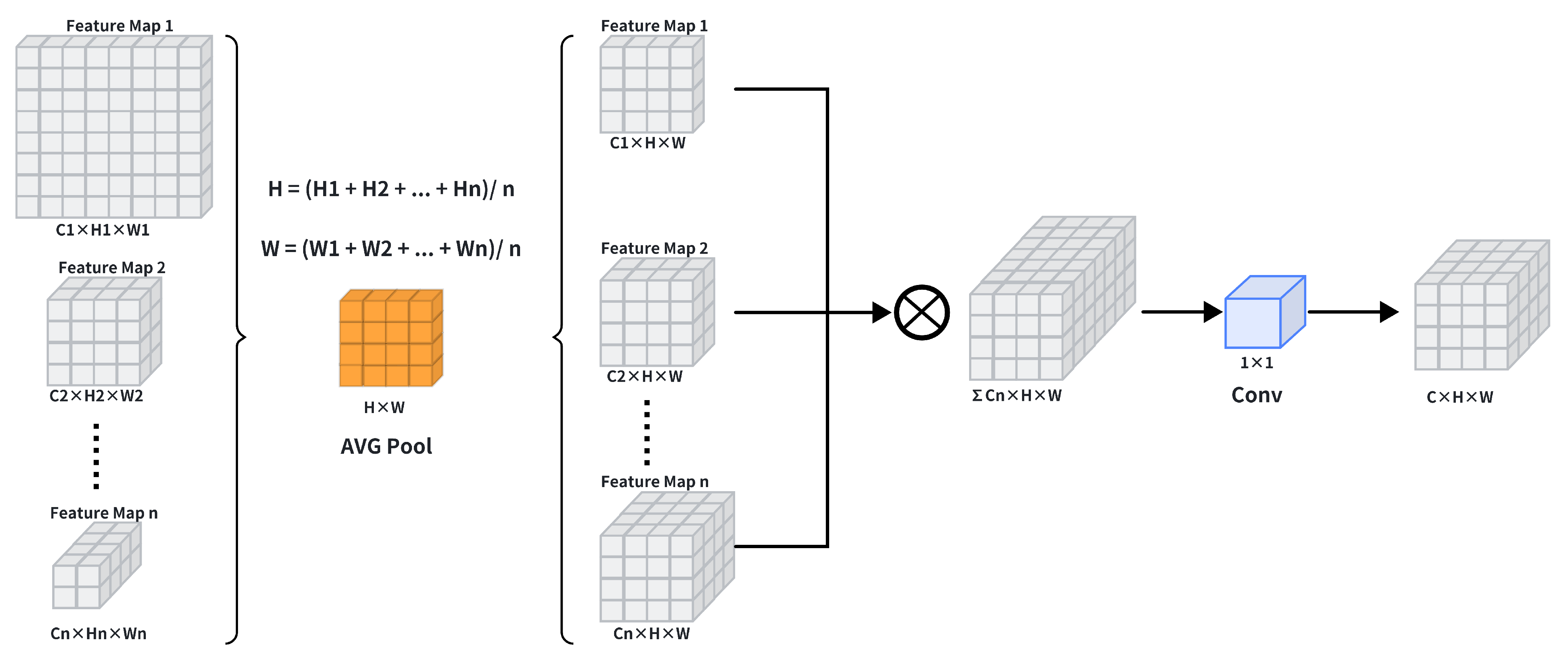

2.2.2. Bidirectional Multi-Scale Feature Aggregation Pyramid Network—BiMS-FPN

- Feature Downsampling: Input feature maps (e.g., the P4 level of the backbone) are downsampled by Adaptive Average Pooling [54], whose pooling kernel size is adjusted dynamically according to the spatial size of the input feature. This operation reduces the spatial dimensions while preserving the channel count (C), which limits information loss.

- Multi-Scale Feature Alignment: Downsampled features undergo spatial alignment with adjacent-scale features (e.g., P3 or P5). This step is critical because features from different pyramid levels are naturally misaligned due to variations in sampling strides and receptive fields, which can severely impair the effectiveness of subsequent fusion operations [55]. The MSFA module employs learnable masks to automatically correct spatial offsets, ensuring geometric consistency across multi-resolution features and preventing pixel-level misalignment during fusion.

- Hierarchical Concatenation and Output: The aligned features are concatenated along the channel dimension.

- Channel Compression and Output Generation: A convolution layer compresses the channel dimension of the concatenated result, yielding the final fused feature representation.
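The downsampling, concatenation and compression steps listed above can be sketched on nested Python lists. The sketch assumes the bin rule used by PyTorch's `nn.AdaptiveAvgPool2d` for the pooling step, leaves out the learnable-mask alignment (the inputs are simply assumed to be aligned already), and uses a 1 × 1 kernel for the compression convolution, which the text does not specify. All names and shapes are illustrative.

```python
from typing import List

Grid = List[List[float]]  # one channel, [row][col]
Feature = List[Grid]      # [channel][row][col]


def _bin(i: int, in_size: int, out_size: int) -> range:
    """Input indices averaged into output index i: [floor(i*in/out), ceil((i+1)*in/out))."""
    start = (i * in_size) // out_size
    end = -(-((i + 1) * in_size) // out_size)  # integer ceiling division
    return range(start, end)


def adaptive_avg_pool(feature: Feature, out_h: int, out_w: int) -> Feature:
    """Downsampling: pool each channel to (out_h, out_w); the channel count C is unchanged."""
    pooled: Feature = []
    for ch in feature:
        h, w = len(ch), len(ch[0])
        pooled.append([
            [sum(ch[r][c] for r in _bin(i, h, out_h) for c in _bin(j, w, out_w))
             / (len(_bin(i, h, out_h)) * len(_bin(j, w, out_w)))
             for j in range(out_w)]
            for i in range(out_h)
        ])
    return pooled


def concat_channels(*features: Feature) -> Feature:
    """Concatenation: stack spatially aligned features along the channel axis."""
    size = (len(features[0][0]), len(features[0][0][0]))
    if any((len(f[0]), len(f[0][0])) != size for f in features):
        raise ValueError("features must share the same H x W before concatenation")
    return [ch for f in features for ch in f]


def compress_1x1(feature: Feature, weight: List[List[float]]) -> Feature:
    """Compression: 1x1 convolution mapping len(feature) channels to len(weight) channels."""
    h, w = len(feature[0]), len(feature[0][0])
    return [
        [[sum(wo[k] * feature[k][r][c] for k in range(len(feature))) for c in range(w)]
         for r in range(h)]
        for wo in weight
    ]


# Hypothetical shapes: a 1-channel 4x4 map pooled to 2x2, fused with a 1-channel 2x2 map.
fine = [[[float(4 * r + c) for c in range(4)] for r in range(4)]]  # values 0..15
coarse = [[[1.0, 1.0], [1.0, 1.0]]]
down = adaptive_avg_pool(fine, 2, 2)
fused = compress_1x1(concat_channels(down, coarse), [[0.5, 0.5]])
print(down, fused)
```

Because each output window is derived from the input size, the same function handles sizes that do not divide evenly; neighbouring windows then overlap by one row or column.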

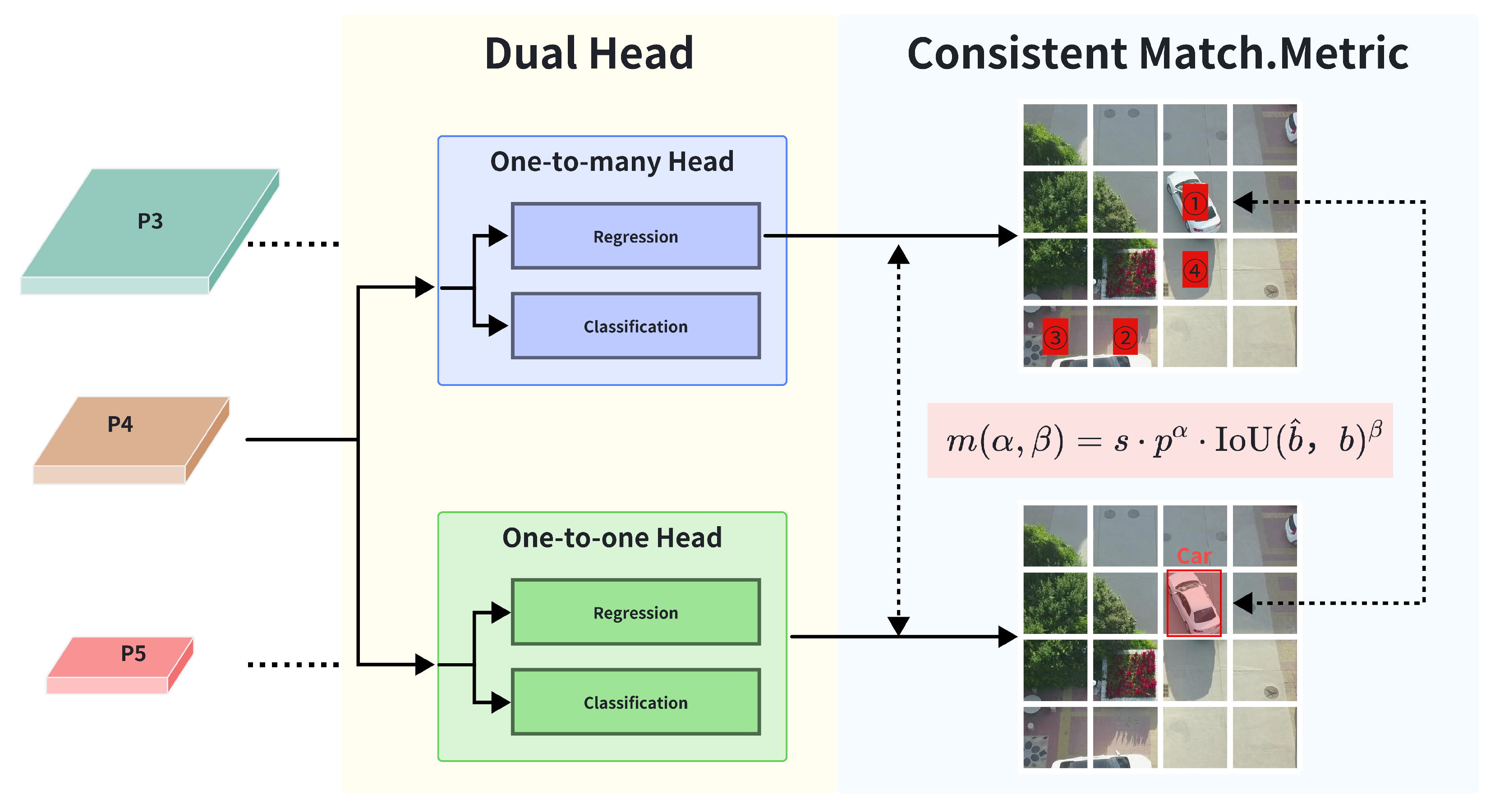

2.2.3. Improved YOLOv8 Detection Head—Dual-Stream Head

- p denotes the classification score;

- b is the ground-truth bounding box, with which the predicted bounding box is compared;

- two tunable hyperparameters balance the task-specific weights;

- s is a learnable scaling factor.
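Assuming the metric follows the multiplicative form of YOLOv10's consistent dual assignments [49], m = s · p^α · IoU(b̂, b)^β, with α and β standing in for the two tunable hyperparameters, it can be computed as below. YOLOv10 defines s as a spatial prior (whether the anchor point lies inside the instance); the code simply treats s as a given multiplier. The values α = 0.5 and β = 6.0 are example settings, not values reported for MBD-YOLO.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def matching_metric(p: float, pred: Box, gt: Box, alpha: float, beta: float,
                    s: float = 1.0) -> float:
    """m = s * p**alpha * IoU(pred, gt)**beta (assumed YOLOv10-style form)."""
    return s * p ** alpha * box_iou(pred, gt) ** beta


gt = (0.0, 0.0, 2.0, 2.0)
print(matching_metric(0.81, (0.0, 0.0, 2.0, 2.0), gt, alpha=0.5, beta=6.0))  # perfect box
print(matching_metric(0.81, (0.0, 0.0, 2.0, 1.0), gt, alpha=0.5, beta=6.0))  # IoU = 0.5
```

With a large β, halving the IoU shrinks the metric by a factor of 64 even at the same classification score, so well-localized predictions dominate the ranking.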

- One-to-Many Head: uses its own pair of balancing hyperparameters and assigns the top-k = 10 predictions to each ground truth;

- One-to-One Head: uses its own pair of balancing hyperparameters for strict one-to-one matching (top-k = 1).
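The two heads differ mainly in how many positives each ground truth receives. The minimal sketch below ranks predictions by a precomputed matching metric and keeps the top k = 10 (One-to-Many) or the top k = 1 (One-to-One). The function name and the toy scores are hypothetical, and practical assigners also resolve predictions that rank highly for several ground truths.

```python
from typing import Dict, List


def topk_assign(metric: List[List[float]], k: int) -> Dict[int, List[int]]:
    """Assign to each ground truth g the k predictions with the highest metric[g][j].

    Simplification: conflicts (one prediction ranked top for several ground
    truths) are not resolved here.
    """
    assignment: Dict[int, List[int]] = {}
    for g, scores in enumerate(metric):
        ranked = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
        assignment[g] = ranked[:k]
    return assignment


# One ground truth, twelve candidate predictions with increasing metric values.
metric = [[j / 20 for j in range(12)]]
one_to_many = topk_assign(metric, k=10)  # dense supervision during training
one_to_one = topk_assign(metric, k=1)    # single positive, so no NMS at inference
print(one_to_many[0], one_to_one[0])
```

Because the one-to-one branch keeps a single prediction per object, duplicate boxes do not need to be suppressed at inference time.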

- ρ is the Euclidean distance between the centers of the predicted and ground-truth boxes;

- c is the diagonal length of the smallest rectangle enclosing both boxes;

- v measures aspect-ratio consistency;

- the Distribution Focal Loss (DFL) term models box boundaries as distributions via discrete probability predictions.
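The quantities ρ, c and v are those of the Complete-IoU (CIoU) loss introduced alongside the Distance-IoU loss [58]. Assuming the standard form L = 1 − IoU + ρ²/c² + α_v·v, with v = (4/π²)(arctan(w_gt/h_gt) − arctan(w/h))² and trade-off weight α_v = v / ((1 − IoU) + v), a plain-Python version for one box pair is sketched below. The DFL term is omitted, and `eps` is a numerical-safety constant added here.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def ciou_loss(pred: Box, gt: Box, eps: float = 1e-9) -> float:
    """CIoU loss: 1 - IoU + rho^2 / c^2 + alpha_v * v."""
    # Intersection over union.
    iw = max(0.0, min(pred[2], gt[2]) - max(pred[0], gt[0]))
    ih = max(0.0, min(pred[3], gt[3]) - max(pred[1], gt[1]))
    inter = iw * ih
    wp, hp = pred[2] - pred[0], pred[3] - pred[1]
    wg, hg = gt[2] - gt[0], gt[3] - gt[1]
    iou = inter / (wp * hp + wg * hg - inter + eps)
    # rho^2: squared Euclidean distance between the two box centres.
    rho2 = ((pred[0] + pred[2] - gt[0] - gt[2]) ** 2
            + (pred[1] + pred[3] - gt[1] - gt[3]) ** 2) / 4.0
    # c^2: squared diagonal of the smallest rectangle enclosing both boxes.
    cw = max(pred[2], gt[2]) - min(pred[0], gt[0])
    ch = max(pred[3], gt[3]) - min(pred[1], gt[1])
    c2 = cw ** 2 + ch ** 2 + eps
    # v: aspect-ratio consistency; alpha_v: its trade-off weight.
    v = (4.0 / math.pi ** 2) * (math.atan(wg / (hg + eps)) - math.atan(wp / (hp + eps))) ** 2
    alpha_v = v / (1.0 - iou + v + eps)
    return 1.0 - iou + rho2 / c2 + alpha_v * v


print(ciou_loss((0.0, 0.0, 2.0, 2.0), (0.0, 0.0, 2.0, 2.0)))  # identical boxes
print(ciou_loss((0.0, 0.0, 2.0, 2.0), (1.0, 0.0, 3.0, 2.0)))  # shifted, same shape
```

For the shifted pair, IoU = 1/3, ρ² = 1 and c² = 13 with v = 0, so the loss is 2/3 + 1/13 = 29/39; the centre-distance term keeps the gradient informative even when boxes barely overlap.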

3. Experiments and Results

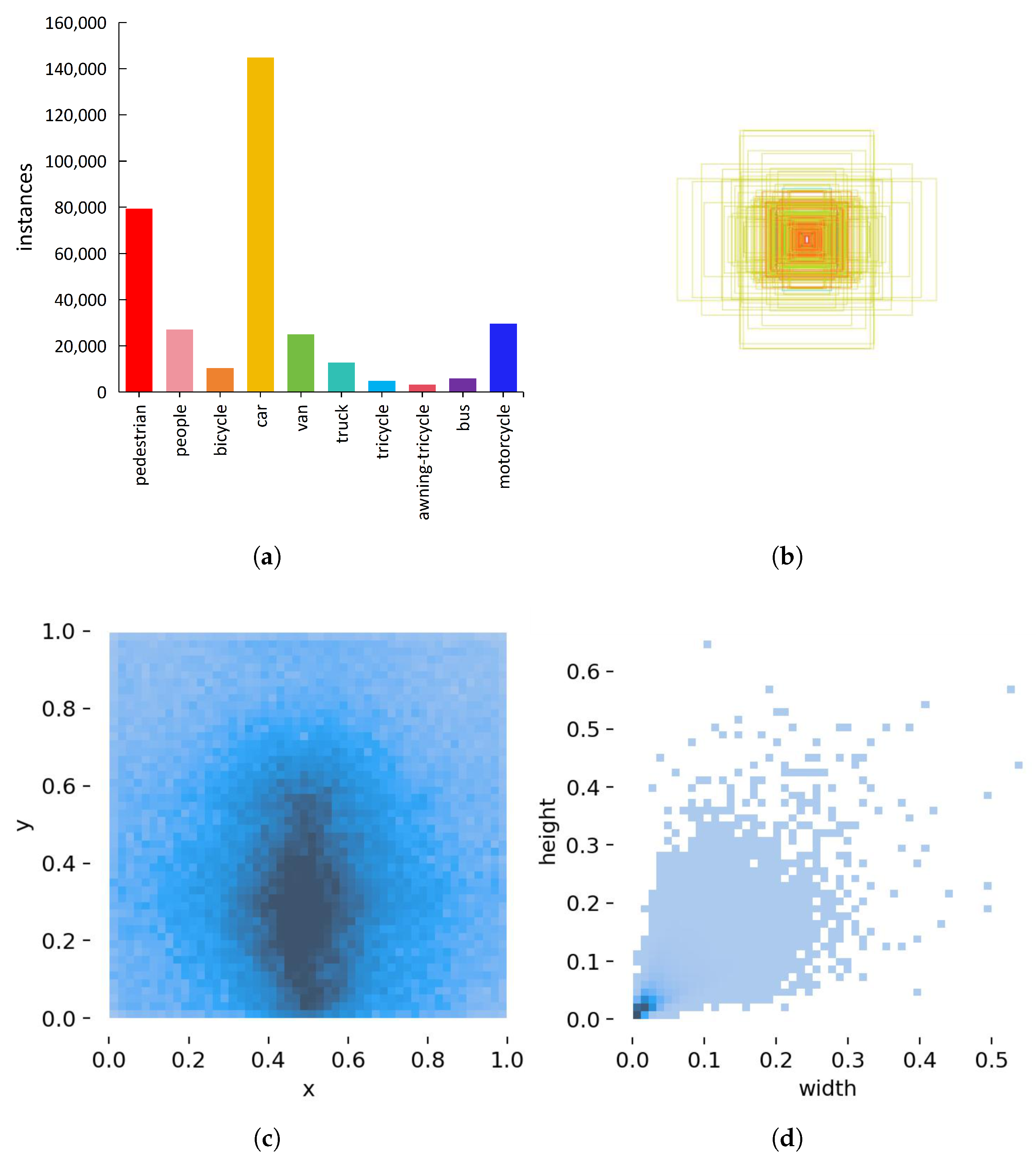

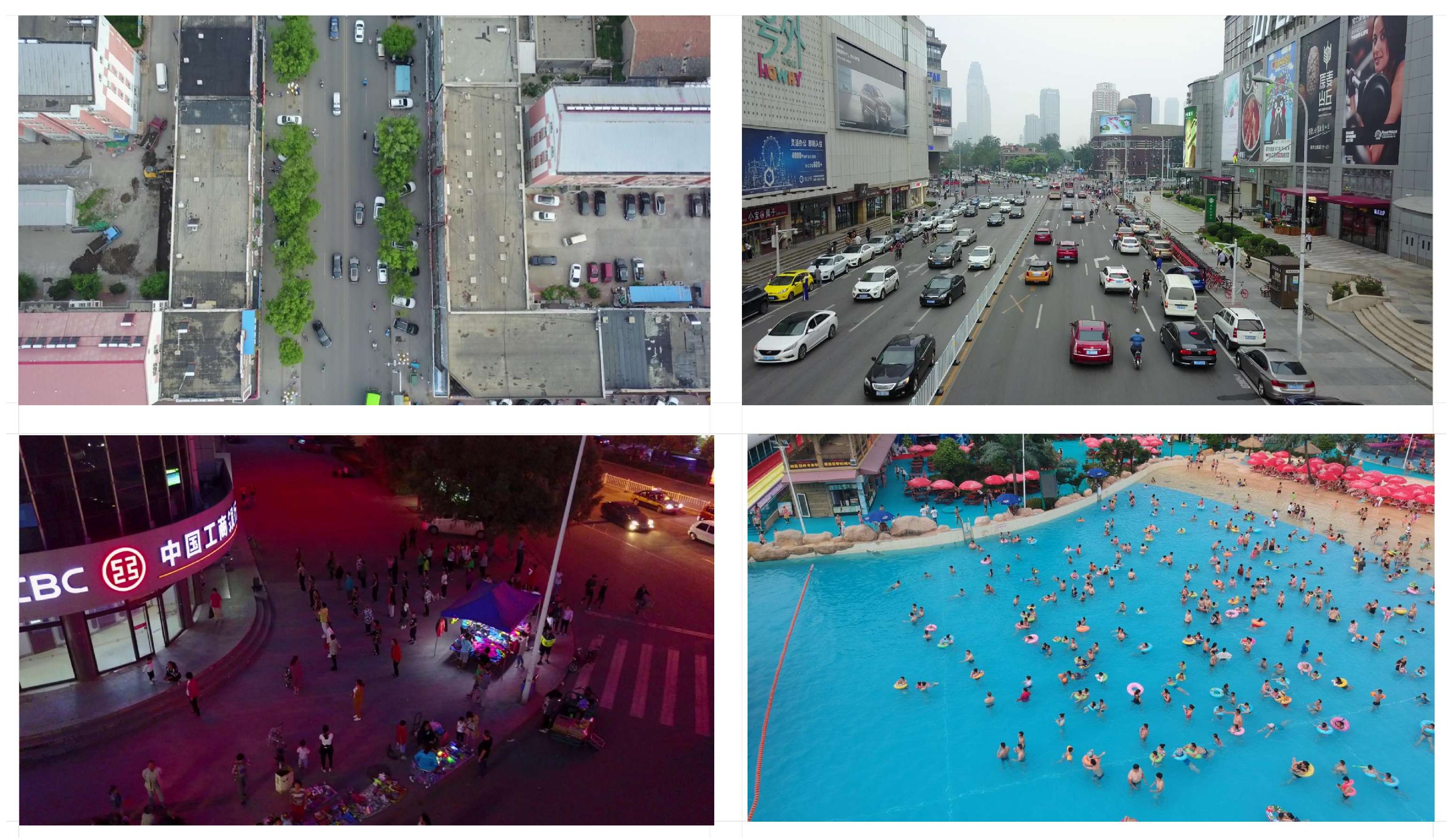

3.1. Datasets

3.2. Experimental Environment and Parameter Configuration

3.3. Evaluation Metrics

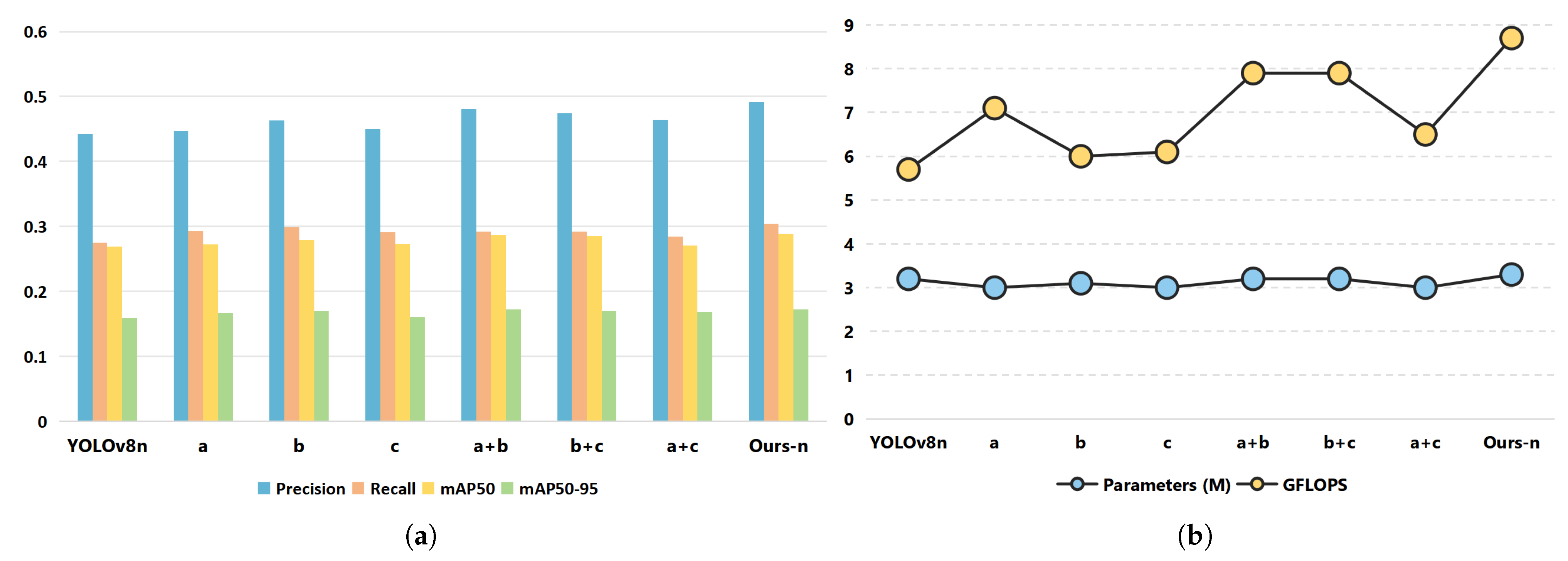

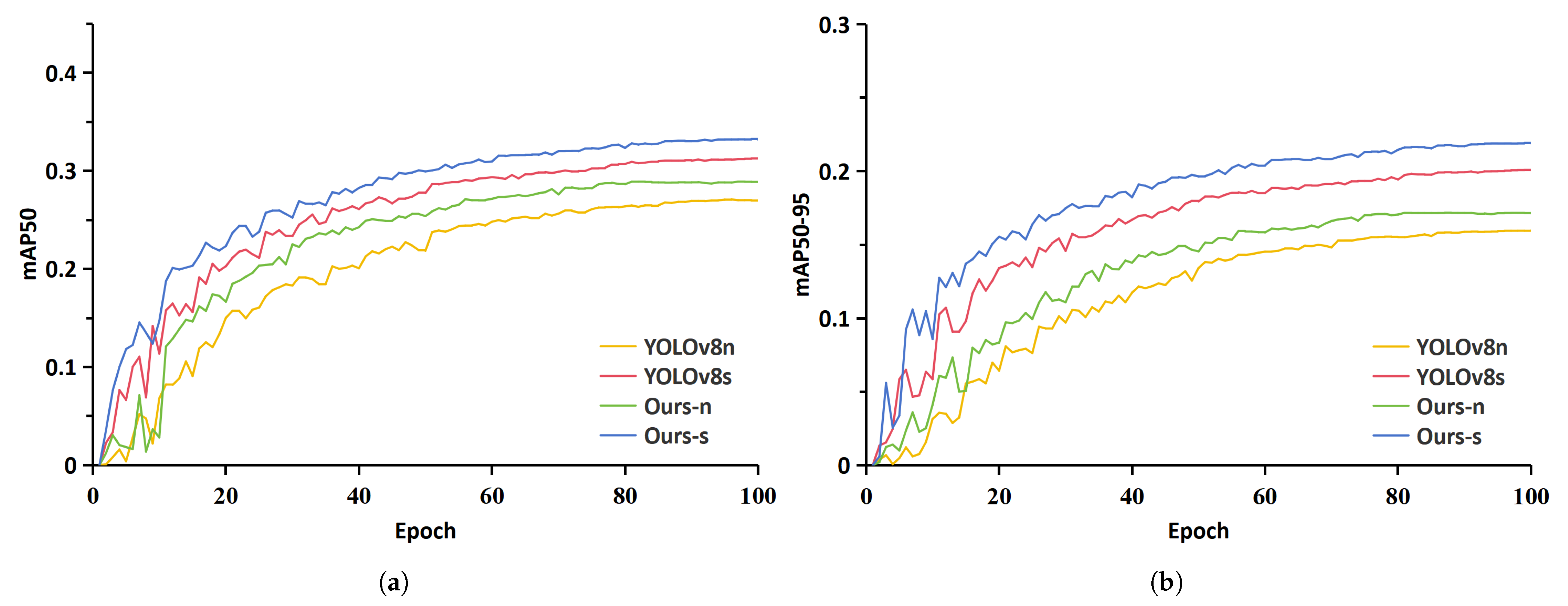

3.4. Ablation Experiment

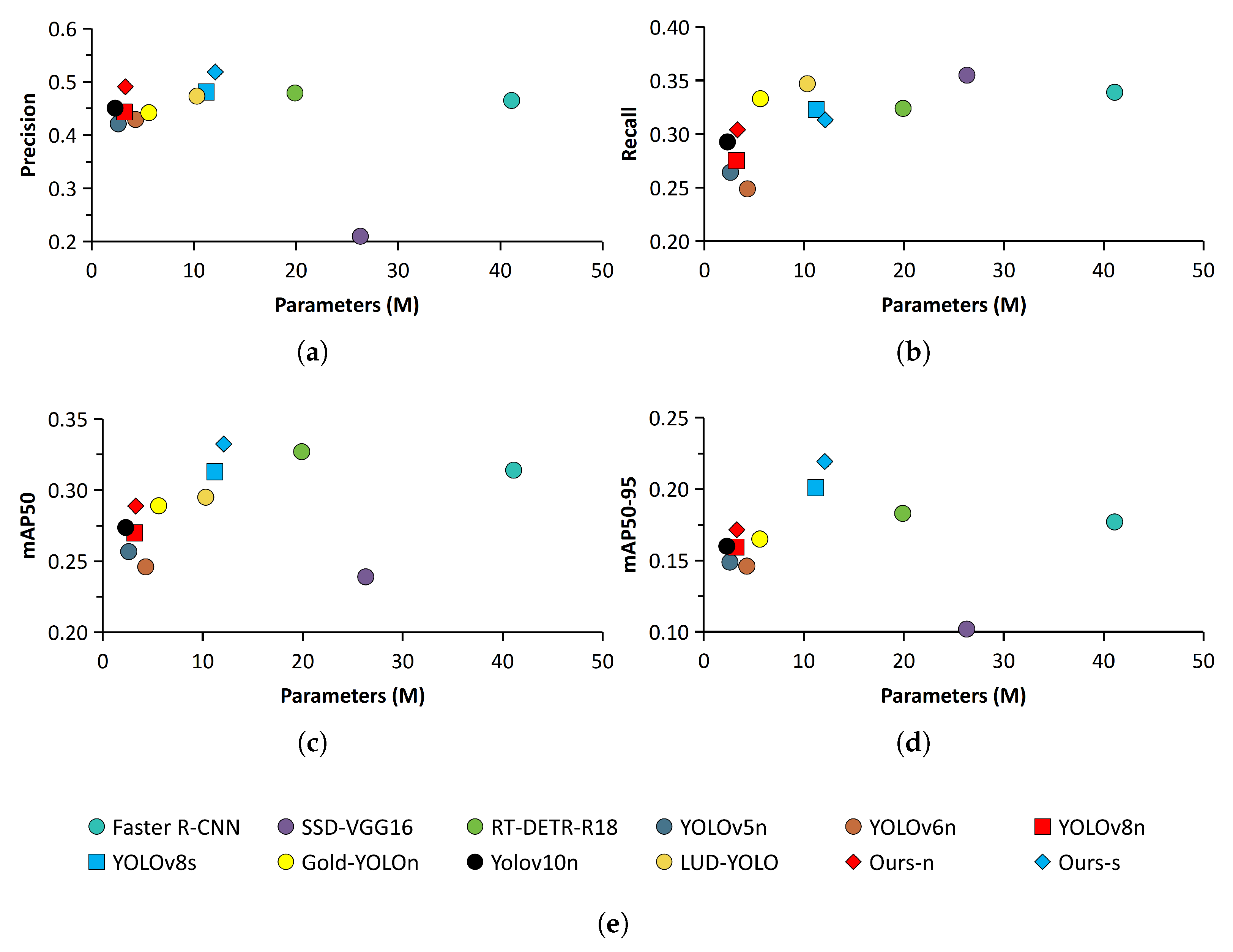

3.5. Comparison Experiment

3.6. Experimental Results Analysis

3.7. Visualization Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Osmani, K.; Schulz, D. Comprehensive Investigation of Unmanned Aerial Vehicles (UAVs): An In-Depth Analysis of Avionics Systems. Sensors 2024, 24, 3064.

2. Yuan, S.; Li, Y.; Bao, F.; Xu, H.; Yang, Y.; Yan, Q.; Zhong, S.; Yin, H.; Xu, J.; Huang, Z.; et al. Marine environmental monitoring with unmanned vehicle platforms: Present applications and future prospects. Sci. Total Environ. 2023, 858, 159741.

3. Yang, Z.; Yu, X.; Dedman, S.; Rosso, M.; Zhu, J.; Yang, J.; Xia, Y.; Tian, Y.; Zhang, G.; Wang, J. UAV remote sensing applications in marine monitoring: Knowledge visualization and review. Sci. Total Environ. 2022, 838, 155939.

4. Seidaliyeva, U.; Ilipbayeva, L.; Taissariyeva, K.; Smailov, N.; Matson, E.T. Advances and Challenges in Drone Detection and Classification Techniques: A State-of-the-Art Review. Sensors 2024, 24, 125.

5. Mu, K.; Hui, F.; Zhao, X. Multiple Vehicle Detection and Tracking in Highway Traffic Surveillance Video Based on SIFT Feature Matching. Inf. Process. Syst. 2016, 12, 183–195.

6. Yuan, X.; Hao, X.; Chen, H.; Wei, X. Robust Traffic Sign Recognition Based on Color Global and Local Oriented Edge Magnitude Patterns. IEEE Trans. Intell. Transp. Syst. 2014, 15, 1466–1477.

7. Chen, S.; Shi, W.; Zhou, M.; Min, Z.; Chen, P. Automatic Building Extraction via Adaptive Iterative Segmentation With LiDAR Data and High Spatial Resolution Imagery Fusion. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 2081–2095.

8. Bouguettaya, A.; Zarzour, H.; Kechida, A.; Taberkit, A.M. Vehicle Detection From UAV Imagery With Deep Learning: A Review. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 6047–6067.

9. Zhao, Y.; Ma, Q.; Lei, G.; Wang, L.; Guo, C. Research on Lightweight Tracking of Small-Sized UAVs Based on the Improved YOLOv8N-Drone Architecture. Drones 2025, 9, 551.

10. Bharati, P.; Pramanik, A. Deep Learning Techniques—R-CNN to Mask R-CNN: A Survey. In Computational Intelligence in Pattern Recognition; Das, A.K., Nayak, J., Naik, B., Pati, S.K., Pelusi, D., Eds.; Springer: Singapore, 2020; pp. 657–668.

11. He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

12. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

13. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

14. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the Computer Vision—ECCV 2016, Cham, Switzerland, 17 September 2016; Volume 9905, pp. 21–37.

15. Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-time Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974.

16. Terven, J.; Córdova-Esparza, D.M.; Romero-González, J.A. A Comprehensive Review of YOLO Architectures in Computer Vision: From YOLOv1 to YOLOv8 and YOLO-NAS. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716.

17. Olorunshola, O.; Jemitola, P.; Ademuwagun, A. Comparative study of some deep learning object detection algorithms: R-CNN, fast R-CNN, faster R-CNN, SSD, and YOLO. Nile J. Eng. Appl. Sci. 2023, 1, 70–80.

18. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

19. Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

20. Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

21. Nida, N.; Irtaza, A.; Javed, A.; Yousaf, M.H.; Mahmood, M.T. Melanoma lesion detection and segmentation using deep region based convolutional neural network and fuzzy C-means clustering. Int. J. Med Inform. 2019, 124, 37–48.

22. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

23. Hua, W.; Chen, Q.; Chen, W. A new lightweight network for efficient UAV object detection. Sci. Rep. 2024, 14, 13288.

24. Xue, Z.; Lin, H.; Wang, F. A Small Target Forest Fire Detection Model Based on YOLOv5 Improvement. Forests 2022, 13, 1332.

25. Hao, W.; Zhili, S. Improved Mosaic: Algorithms for more Complex Images. J. Phys. Conf. Ser. 2020, 1684, 012094.

26. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

27. Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A New Backbone that can Enhance Learning Capability of CNN. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 1571–1580.

28. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the Computer Vision—ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer: Cham, Switzerland, 2014; pp. 740–755.

29. Khanam, R.; Hussain, M. What is YOLOv5: A deep look into the internal features of the popular object detector. arXiv 2024, arXiv:2407.20892.

30. Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976.

31. Ding, X.; Zhang, X.; Ma, N.; Han, J.; Ding, G.; Sun, J. RepVGG: Making VGG-Style ConvNets Great Again. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 13733–13742.

32. Wang, C.; Bochkovskiy, A.; Liao, H. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

33. Varghese, R.; Sambath, M. Yolov8: A novel object detection algorithm with enhanced performance and robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6.

34. Zhao, X.; Zhang, H.; Zhang, W.; Ma, J.; Li, C.; Ding, Y.; Zhang, Z. MSUD-YOLO: A Novel Multiscale Small Object Detection Model for UAV Aerial Images. Drones 2025, 9, 429.

35. Chen, C.; Zheng, Z.; Xu, T.; Guo, S.; Feng, S.; Yao, W.; Lan, Y. YOLO-Based UAV Technology: A Review of the Research and Its Applications. Drones 2023, 7, 190.

36. Yang, F.; Ouyang, T.; Liu, C. Deformer-FPN: FPN network based on deformable convolution and lightweight upsampling operator for ship detection in SAR image. In Proceedings of the 2024 IEEE International Conference on Signal, Information and Data Processing (ICSIDP), Zhuhai, China, 22–24 November 2024; pp. 1–5.

37. Zeng, M.; He, S.; Zeng, Q.; Niu, Y.; Zhang, R. PA-YOLO: Small Target Detection Algorithm with Enhanced Information Representation for UAV Aerial Photography. IEEE Sens. Lett. 2025, 9, 1–4.

38. Xu, S.; Ji, Y.; Wang, G.; Jin, L.; Wang, H. GFSPP-YOLO: A Light YOLO Model Based on Group Fast Spatial Pyramid Pooling. In Proceedings of the 2023 IEEE 11th International Conference on Information, Communication and Networks (ICICN), Xi’an, China, 17–20 August 2023; pp. 733–738.

39. Kim, M.; Jeong, J.; Kim, S. ECAP-YOLO: Efficient Channel Attention Pyramid YOLO for Small Object Detection in Aerial Image. Remote Sens. 2021, 13, 4851.

40. Razakarivony, S.; Jurie, F. Vehicle detection in aerial imagery: A small target detection benchmark. J. Vis. Commun. Image Represent. 2016, 34, 187–203.

41. Wang, C.; He, W.; Nie, Y.; Guo, J.; Liu, C.; Han, K.; Wang, Y. Gold-yolo: Efficient object detector via gather-and-distribute mechanism. Adv. Neural Inf. Process. Syst. 2023, 36, 51094–51112.

42. Zhang, Z. Drone-YOLO: An Efficient Neural Network Method for Target Detection in Drone Images. Drones 2023, 7, 526.

43. Li, Y.; Hu, B.; Wei, S.; Gao, D.; Shuang, F. Simplify-YOLOv5m: A Simplified High-Speed Insulator Detection Algorithm for UAV Images. IEEE Trans. Instrum. Meas. 2025, 74, 1–14.

44. Liu, H.; Sun, F.; Gu, J.; Deng, L. SF-YOLOv5: A Lightweight Small Object Detection Algorithm Based on Improved Feature Fusion Mode. Sensors 2022, 22, 5817.

45. Fan, Q.; Li, Y.; Deveci, M.; Zhong, K.; Kadry, S. LUD-YOLO: A novel lightweight object detection network for unmanned aerial vehicle. Inf. Sci. 2025, 686, 121366.

46. Chen, Y.; Dai, X.; Liu, M.; Chen, D.; Yuan, L.; Liu, Z. Dynamic convolution: Attention over convolution kernels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 11030–11039.

47. Wang, S.; Zhao, Z.; Chen, Y.; Mao, Y.; Cheung, J. Enhancing Thyroid Nodule Detection in Ultrasound Images: A Novel YOLOv8 Architecture with a C2fA Module and Optimized Loss Functions. Technologies 2025, 13, 28.

48. Zhong, S.; Wen, W.; Qin, J. SPEM: Self-adaptive Pooling Enhanced Attention Module for Image Recognition. In MultiMedia Modeling; Dang-Nguyen, D.T., Gurrin, C., Larson, M., Smeaton, A.F., Rudinac, S., Dao, M.S., Trattner, C., Chen, P., Eds.; Springer: Cham, Switzerland, 2023; pp. 41–53.

49. Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J. Yolov10: Real-time end-to-end object detection. Adv. Neural Inf. Process. Syst. 2024, 37, 107984–108011.

50. Dong, Y.; Xie, X.; An, Z.; Qu, Z.; Miao, L.; Zhou, Z. NMS-Free Oriented Object Detection Based on Channel Expansion and Dynamic Label Assignment in UAV Aerial Images. Remote Sens. 2023, 15, 5079.

51. Wu, Y.; Yao, Q.; Fan, X.; Gong, M.; Ma, W.; Miao, Q. PANet: A Point-Attention Based Multi-Scale Feature Fusion Network for Point Cloud Registration. IEEE Trans. Instrum. Meas. 2023, 72, 1–13.

52. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. arXiv 2019, arXiv:1911.09070.

53. Feng, J.; Yi, C. Lightweight Detection Network for Arbitrary-Oriented Vehicles in UAV Imagery via Global Attentive Relation and Multi-Path Fusion. Drones 2022, 6, 108.

54. Yang, J.; Chen, F.; Das, R.K.; Zhu, Z.; Zhang, S. Adaptive-Avg-Pooling Based Attention Vision Transformer for Face Anti-Spoofing. In Proceedings of the 49th IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 3875–3879.

55. Huang, Z.; Wei, Y.; Wang, X.; Liu, W.; Huang, T.S.; Shi, H. AlignSeg: Feature-Aligned Segmentation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 550–557.

56. Chen, Y.; Chen, Q.; Hu, Q.; Cheng, J. DATE: Dual Assignment for End-to-End Fully Convolutional Object Detection. arXiv 2022, arXiv:2211.13859.

57. Zong, Z.; Song, G.; Liu, Y. DETRs with Collaborative Hybrid Assignments Training. arXiv 2022, arXiv:2211.12860.

58. Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI conference on artificial intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12993–13000.

59. Powers, D.M.W. Evaluation: From precision, recall and F-measure to ROC, informedness, markedness and correlation. arXiv 2020, arXiv:2010.16061.

| Category | Parameter | Value |
|---|---|---|
| Framework | PyTorch | 2.5.1 |
| | CUDA | 12.1 |
| Hardware | GPU | RTX 3090 |
| Training | Epochs | 100 |
| | Batch size | 16 |
| Augmentation | Mosaic | p = 1.0 |
| | Mixup | p = 0.1 |
| | Copy-Paste | p = 0.1 |
| Learning Rate | Initial (lr0) | 0.01 |
| | Final (lrf) | 0.01 |
| | Warmup epochs | 5 |
| | Warmup momentum | 0.8 |
| | Warmup bias LR | 0.1 |
| Optimizer | Momentum | 0.95 |
| | Weight decay | 0.001 |
| Regularization | Label smoothing | 0.1 |
| | Dropout rate | 0.2 |
| Loss Weights | Box loss | 8.0 |
| | Classification loss | 0.8 |
| | DFL loss | 2.0 |
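The learning-rate entries use the names of the Ultralytics training arguments (lr0, lrf, warmup epochs, warmup momentum, warmup bias LR). Assuming the default linear schedule, in which the learning rate decays from lr0 to lr0 × lrf over training, the table's values imply the rates computed below. The warmup ramp is simplified to a per-epoch linear interpolation (Ultralytics interpolates per iteration), and the warmup bias LR is not modeled.

```python
def linear_lr(epoch: float, epochs: int = 100, lr0: float = 0.01, lrf: float = 0.01) -> float:
    """Learning rate after linear decay from lr0 towards lr0 * lrf over `epochs`."""
    factor = max(1.0 - epoch / epochs, 0.0) * (1.0 - lrf) + lrf
    return lr0 * factor


def warmup_momentum(epoch: float, warmup_epochs: float = 5.0,
                    start: float = 0.8, target: float = 0.95) -> float:
    """Ramp momentum linearly from the warmup value to the optimizer value."""
    if epoch >= warmup_epochs:
        return target
    return start + (target - start) * epoch / warmup_epochs


for e in (0, 50, 100):
    print(e, linear_lr(e))
print(warmup_momentum(2.5))
```

With lr0 = lrf = 0.01, the rate falls from 0.01 at the start to 0.0001 after 100 epochs, while momentum reaches 0.95 once the 5 warmup epochs are over.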

| Models | MBFF | BiMS-FPN | Dual-Stream Head | Precision | Recall | mAP50 | mAP50-95 | Params (M) | GFLOPs |
|---|---|---|---|---|---|---|---|---|---|
| YOLOv8n | – | – | – | 0.443 | 0.275 | 0.269 | 0.159 | 3.2 | 5.7 |
| a | ✓ | – | – | 0.447 | 0.293 | 0.272 | 0.167 | 3.0 | 7.1 |
| b | – | ✓ | – | 0.463 | 0.299 | 0.279 | 0.170 | 3.1 | 6.0 |
| c | – | – | ✓ | 0.450 | 0.291 | 0.273 | 0.160 | 3.0 | 6.1 |
| a + b | ✓ | ✓ | – | 0.481 | 0.292 | 0.287 | 0.172 | 3.2 | 7.9 |
| b + c | – | ✓ | ✓ | 0.474 | 0.292 | 0.285 | 0.170 | 3.2 | 7.9 |
| a + c | ✓ | – | ✓ | 0.464 | 0.284 | 0.271 | 0.168 | 3.0 | 6.5 |
| Ours-n | ✓ | ✓ | ✓ | 0.491 | 0.304 | 0.289 | 0.172 | 3.3 | 8.7 |
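To make the ablation numbers easier to compare, the snippet below computes the relative change of the full model (Ours-n) over the YOLOv8n baseline, using only the values in the table above; the dictionary keys are illustrative labels.

```python
baseline = {"Precision": 0.443, "Recall": 0.275, "mAP50": 0.269,
            "mAP50-95": 0.159, "Params (M)": 3.2, "GFLOPs": 5.7}   # YOLOv8n
full = {"Precision": 0.491, "Recall": 0.304, "mAP50": 0.289,
        "mAP50-95": 0.172, "Params (M)": 3.3, "GFLOPs": 8.7}       # Ours-n


def relative_change(new: float, old: float) -> float:
    """Percentage change of `new` relative to `old`."""
    return 100.0 * (new - old) / old


changes = {k: round(relative_change(full[k], baseline[k]), 1) for k in baseline}
print(changes)
```

The full model gains about 7.4% mAP50 and 8.2% mAP50-95 relative to YOLOv8n with roughly 3% more parameters but about 53% more GFLOPs, so the accuracy gains are paid for mainly in computation rather than in model size.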

| Models | Precision | Recall | mAP50 | mAP50-95 | Parameters (M) |
|---|---|---|---|---|---|
| Faster R-CNN | 0.465 | 0.339 | 0.314 | 0.177 | 41.1 |
| SSD-VGG16 | 0.210 | 0.355 | 0.239 | 0.102 | 26.3 |
| RT-DETR-R18 | 0.479 | 0.324 | 0.327 | 0.183 | 19.9 |
| YOLOv5n | 0.421 | 0.264 | 0.257 | 0.149 | 2.6 |
| YOLOv6n | 0.429 | 0.249 | 0.246 | 0.146 | 4.3 |
| YOLOv8n | 0.443 | 0.275 | 0.269 | 0.159 | 3.2 |
| YOLOv8s | 0.481 | 0.323 | 0.313 | 0.201 | 11.2 |
| Gold-YOLOn | 0.442 | 0.333 | 0.289 | 0.165 | 5.6 |
| YOLOv10n | 0.451 | 0.293 | 0.274 | 0.160 | 2.3 |
| LUD-YOLO | 0.473 | 0.347 | 0.295 | – | 10.3 |
| Ours-n | 0.491 | 0.304 | 0.289 | 0.172 | 3.3 |
| Ours-s | 0.518 | 0.313 | 0.332 | 0.219 | 12.1 |

mAP50 per class:

| Model | Parameters (M) | Ped. | People | Bicycle | Car | Van | Truck | Tricy. | ATricy. | Bus | Motor | All |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv8n | 3.2 | 0.284 | 0.220 | 0.063 | 0.622 | 0.317 | 0.216 | 0.178 | 0.107 | 0.380 | 0.301 | 0.269 |
| Ours-n | 3.3 | 0.335 | 0.277 | 0.098 | 0.625 | 0.321 | 0.222 | 0.178 | 0.107 | 0.385 | 0.340 | 0.289 |
| YOLOv8s | 11.2 | 0.343 | 0.263 | 0.116 | 0.636 | 0.359 | 0.276 | 0.218 | 0.121 | 0.442 | 0.355 | 0.313 |
| Ours-s | 12.1 | 0.368 | 0.305 | 0.134 | 0.645 | 0.361 | 0.287 | 0.237 | 0.121 | 0.461 | 0.404 | 0.332 |
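As a consistency check on the per-class results, the All column equals the unweighted mean of the ten per-class mAP50 values (to three decimals), which is how mAP averages over classes. The snippet below also lists the per-class gains of Ours-n over YOLOv8n; all numbers are copied from the table.

```python
classes = ["Ped.", "People", "Bicycle", "Car", "Van", "Truck", "Tricy.", "ATricy.", "Bus", "Motor"]
per_class_map50 = {
    "YOLOv8n": [0.284, 0.220, 0.063, 0.622, 0.317, 0.216, 0.178, 0.107, 0.380, 0.301],
    "Ours-n": [0.335, 0.277, 0.098, 0.625, 0.321, 0.222, 0.178, 0.107, 0.385, 0.340],
    "YOLOv8s": [0.343, 0.263, 0.116, 0.636, 0.359, 0.276, 0.218, 0.121, 0.442, 0.355],
    "Ours-s": [0.368, 0.305, 0.134, 0.645, 0.361, 0.287, 0.237, 0.121, 0.461, 0.404],
}

# mAP50 ("All") is the unweighted mean of the per-class AP50 values.
overall = {m: round(sum(aps) / len(aps), 3) for m, aps in per_class_map50.items()}

# Per-class gain of Ours-n over YOLOv8n.
gains = {c: round(n - b, 3)
         for c, n, b in zip(classes, per_class_map50["Ours-n"], per_class_map50["YOLOv8n"])}
print(overall)
print(max(gains, key=gains.get), gains)
```

The largest gains are on People (+0.057), Ped. (+0.051), Motor (+0.039) and Bicycle (+0.035), while Tricy. and ATricy. are unchanged.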

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, B.; Cai, D.; Sui, K.; Wang, Z.; Liu, C.; Pei, X. MBD-YOLO: An Improved Lightweight Multi-Scale Small-Object Detection Model for UAVs Based on YOLOv8. Appl. Sci. 2025, 15, 10877. https://doi.org/10.3390/app152010877
