DeepFishNET+: A Dual-Stream Deep Learning Framework for Robust Underwater Fish Detection and Classification

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

3.1. Dataset

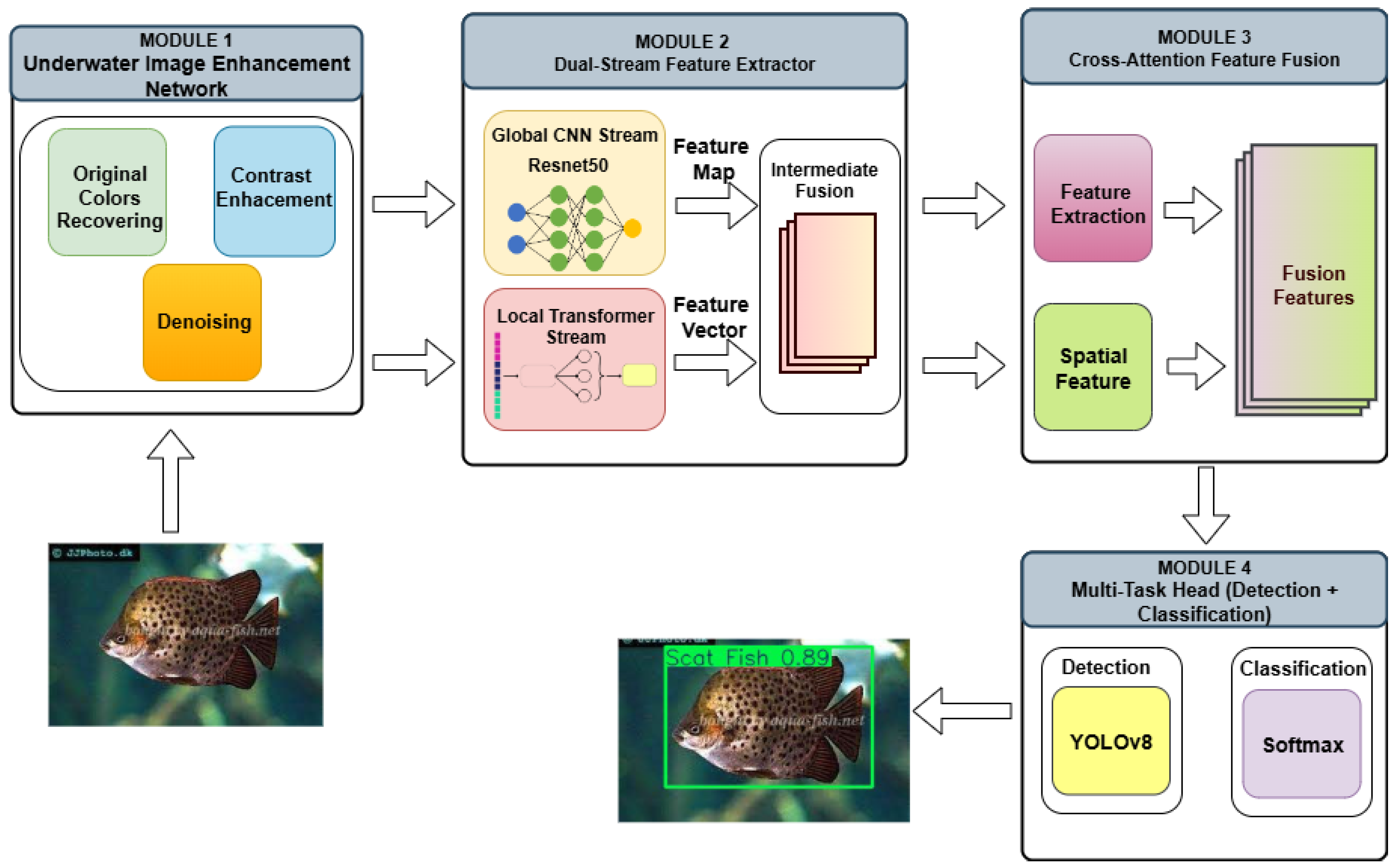

3.2. Proposed Method

3.2.1. Underwater Image Enhancement Network (UIE-Net)

- PSNR (Peak Signal-to-Noise Ratio): This measures the fidelity of the restored image relative to a reference image and is expressed in decibels (dB). A higher value indicates better reconstruction quality.

- SSIM (Structural Similarity Index): This measures the similarity between two images by comparing their luminance, contrast, and structure. The closer the value is to 1, the more similar the images are perceived to be.

- UIQM (Underwater Image Quality Measure): This is a no-reference metric designed for underwater images that combines measures of colorfulness, sharpness, and contrast. Higher values indicate better perceived quality.

- UCIQE (Underwater Color Image Quality Evaluation): This is a no-reference metric designed for underwater color images, computed as a weighted combination of chroma variation (the standard deviation of chroma), luminance contrast, and average saturation. Higher values indicate better quality.
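PSNR and SSIM are full-reference metrics with simple closed forms. The sketch below assumes NumPy and 8-bit images (peak value 255). It computes PSNR as 10·log10(MAX²/MSE) and a simplified single-window SSIM over the whole image. Standard SSIM averages the same expression over local Gaussian windows, so values from this global version will differ from library implementations such as scikit-image's `structural_similarity`. The function names are illustrative, not the authors' code.

```python
import numpy as np


def psnr(reference: np.ndarray, test: np.ndarray, max_val: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    ref = reference.astype(np.float64)
    tst = test.astype(np.float64)
    mse = np.mean((ref - tst) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))


def global_ssim(x: np.ndarray, y: np.ndarray, max_val: float = 255.0) -> float:
    """Single-window SSIM over the whole image (simplified illustration).

    Standard SSIM evaluates this expression in local windows and averages it.
    """
    x = x.astype(np.float64)
    y = y.astype(np.float64)
    c1 = (0.01 * max_val) ** 2  # stabilising constants with K1 = 0.01, K2 = 0.03
    c2 = (0.03 * max_val) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    luminance_term = (2 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1)
    contrast_structure_term = (2 * cov_xy + c2) / (var_x + var_y + c2)
    return float(luminance_term * contrast_structure_term)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ref = rng.integers(0, 256, size=(64, 64)).astype(np.uint8)
    noisy = np.clip(ref + rng.normal(0, 10, ref.shape), 0, 255).astype(np.uint8)
    print(psnr(ref, noisy), global_ssim(ref, noisy))
```

A higher PSNR and an SSIM closer to 1 both indicate that the enhanced image is closer to the reference.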

3.2.2. Dual-Stream Feature Extractor

3.2.3. Cross-Attention Feature Fusion

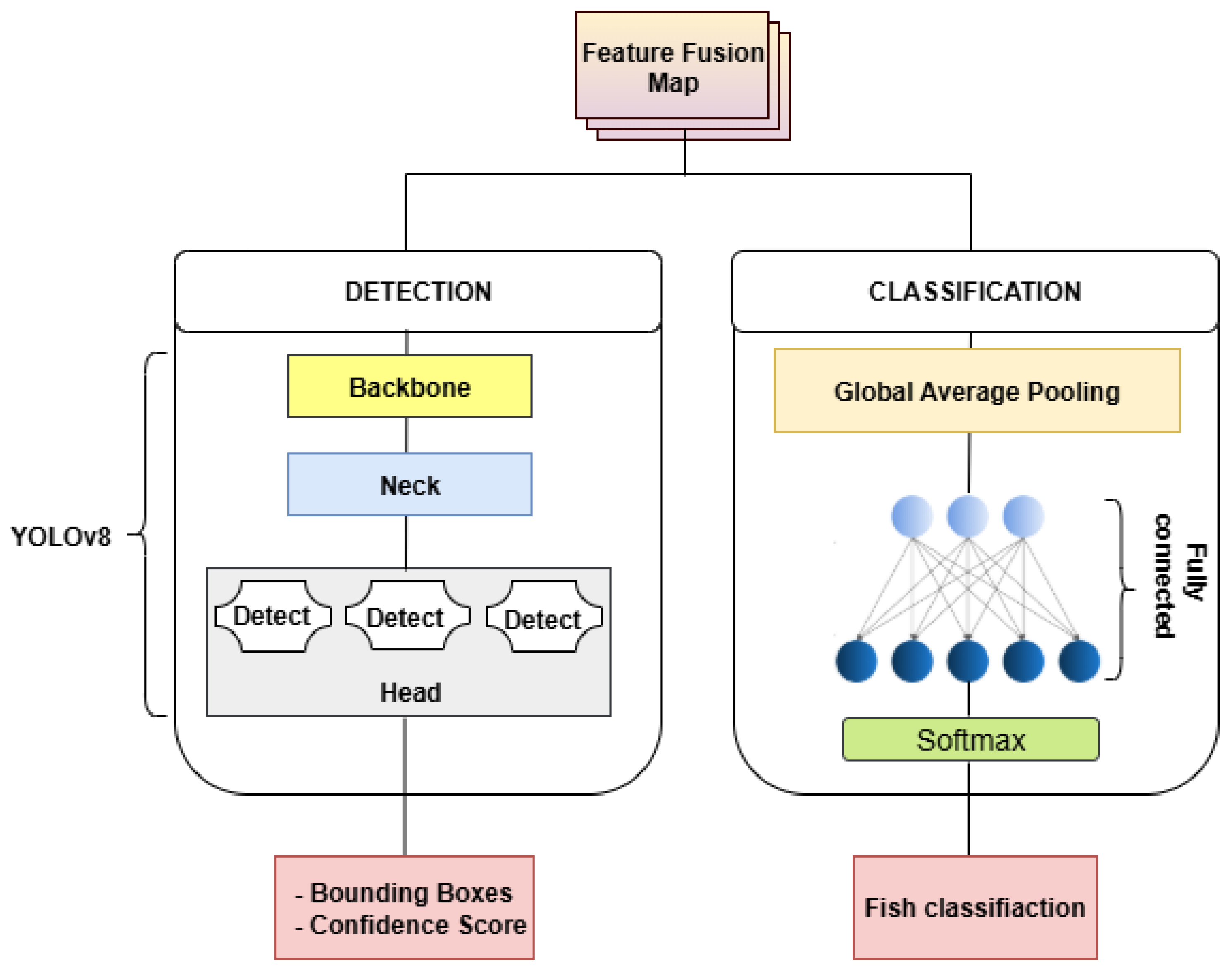

3.2.4. Multi-Task Head

3.3. Evaluation Metrics

- TPs (True Positives): The number of positive instances correctly classified as positive.

- FPs (False Positives): The number of negative instances incorrectly classified as positive.

- TNs (True Negatives): The number of negative instances correctly classified as negative.

- FNs (False Negatives): The number of positive instances incorrectly classified as negative.
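In the multi-class setting, these counts are computed one class at a time (one-vs-rest) from a confusion matrix, and the usual metrics follow: accuracy = correct predictions / all predictions, precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 = 2 · precision · recall / (precision + recall). The sketch below assumes NumPy and a confusion matrix whose rows are true classes and whose columns are predicted classes; macro-averaging across classes is an assumption here, not necessarily the averaging used for the reported results.

```python
import numpy as np


def per_class_counts(cm):
    """One-vs-rest TP, FP, FN, TN for every class.

    Assumed convention: cm[i, j] counts samples whose true class is i
    and whose predicted class is j.
    """
    cm = np.asarray(cm, dtype=np.int64)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp  # predicted as class k but truly another class
    fn = cm.sum(axis=1) - tp  # truly class k but predicted as another class
    tn = cm.sum() - tp - fp - fn  # everything else
    return tp, fp, fn, tn


def macro_metrics(cm) -> dict:
    """Accuracy plus macro-averaged precision, recall and F1 (assumed averaging)."""
    cm = np.asarray(cm, dtype=np.int64)
    tp, fp, fn, _ = per_class_counts(cm)
    with np.errstate(divide="ignore", invalid="ignore"):
        # Classes with an empty denominator get a score of 0 by convention.
        precision = np.where(tp + fp > 0, tp / (tp + fp), 0.0)
        recall = np.where(tp + fn > 0, tp / (tp + fn), 0.0)
        f1 = np.where(precision + recall > 0,
                      2 * precision * recall / (precision + recall), 0.0)
    return {
        "accuracy": float(tp.sum() / cm.sum()),
        "precision": float(precision.mean()),
        "recall": float(recall.mean()),
        "f1": float(f1.mean()),
    }


if __name__ == "__main__":
    # Toy 3-class confusion matrix (rows: true class, columns: predicted class).
    cm = [[50, 2, 1],
          [3, 45, 2],
          [0, 4, 48]]
    print(macro_metrics(cm))
```

Under macro-averaging, F1 is the mean of the per-class F1 scores, so it need not equal the harmonic mean of the averaged precision and recall.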

3.4. Experimental Setup

4. Results

4.1. Validation of Results Obtained by UIE-Net

4.2. Validation of Results Obtained by Dual-Stream Feature Extractor Module

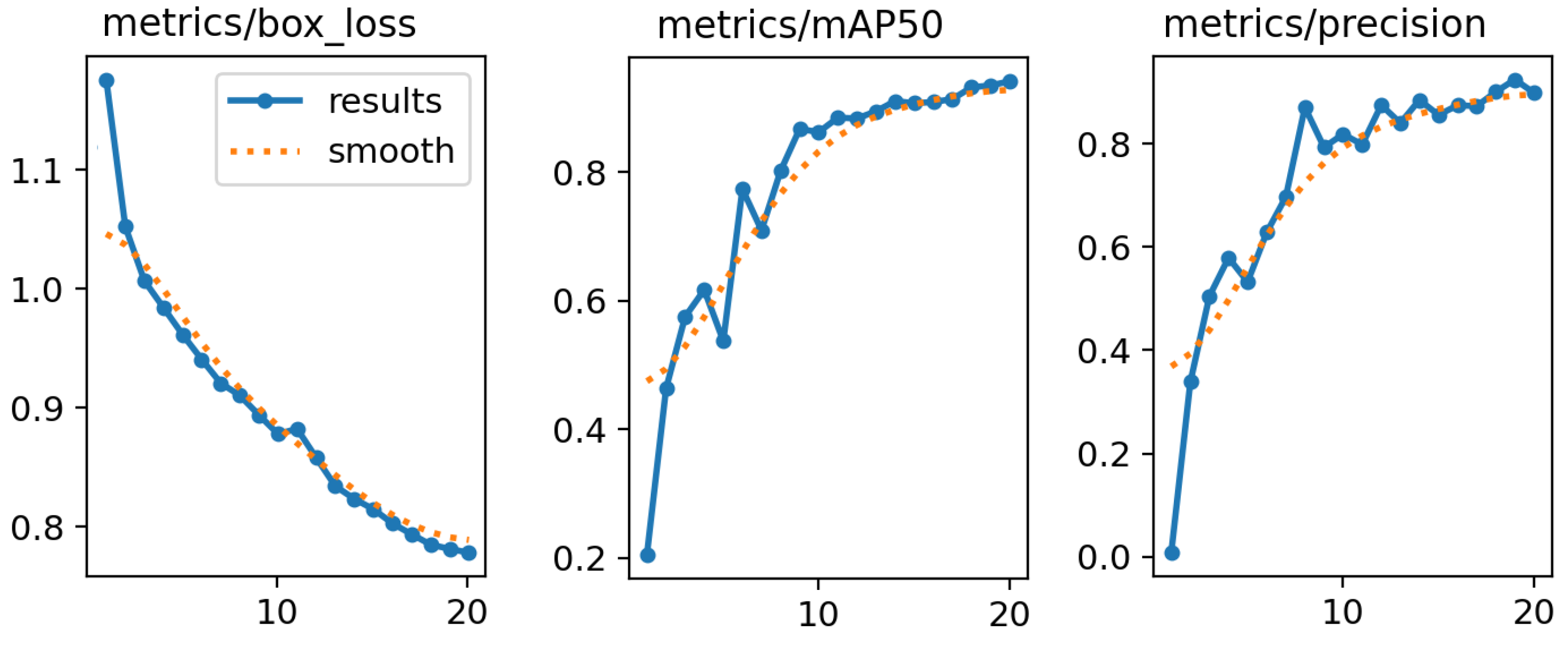

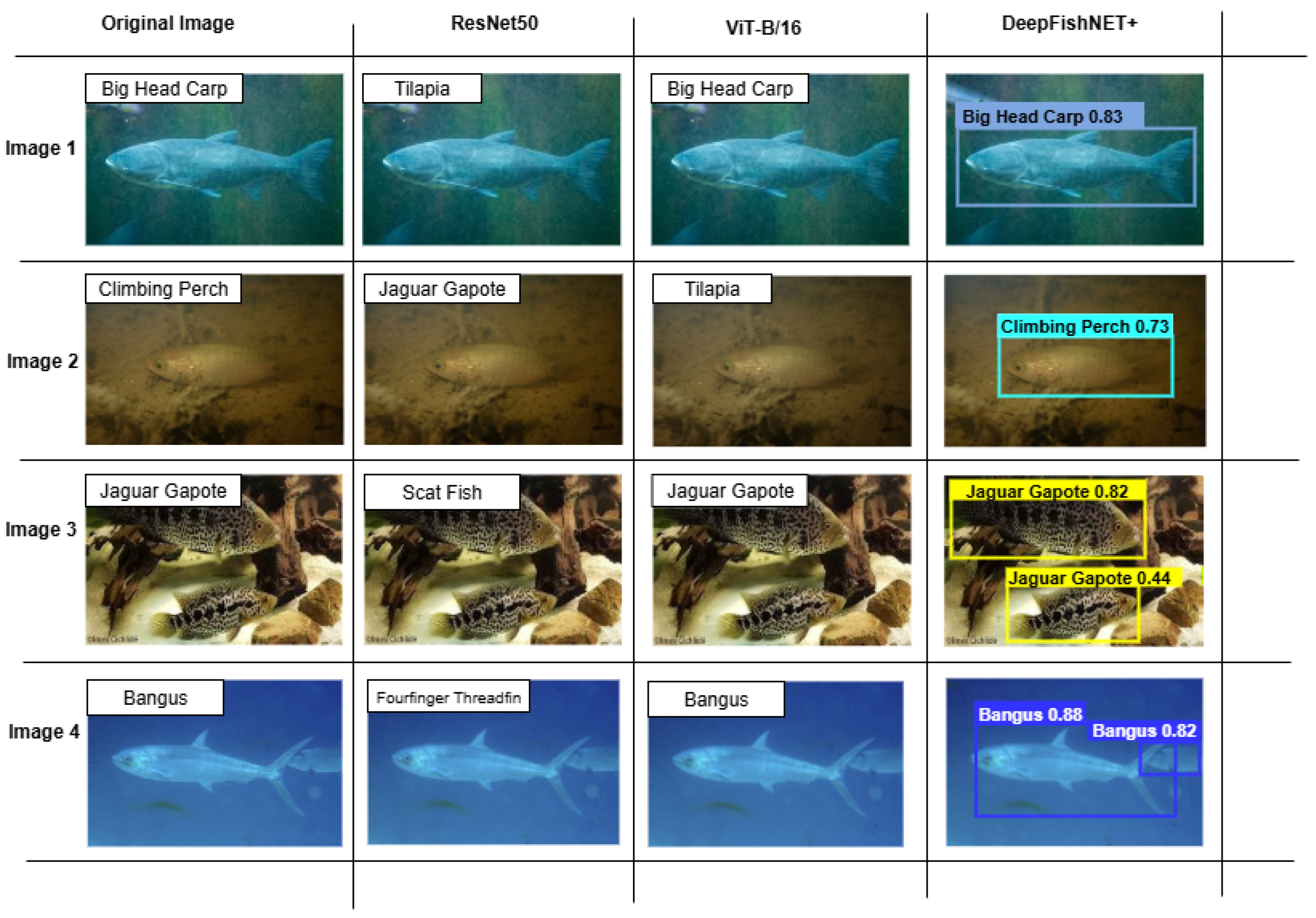

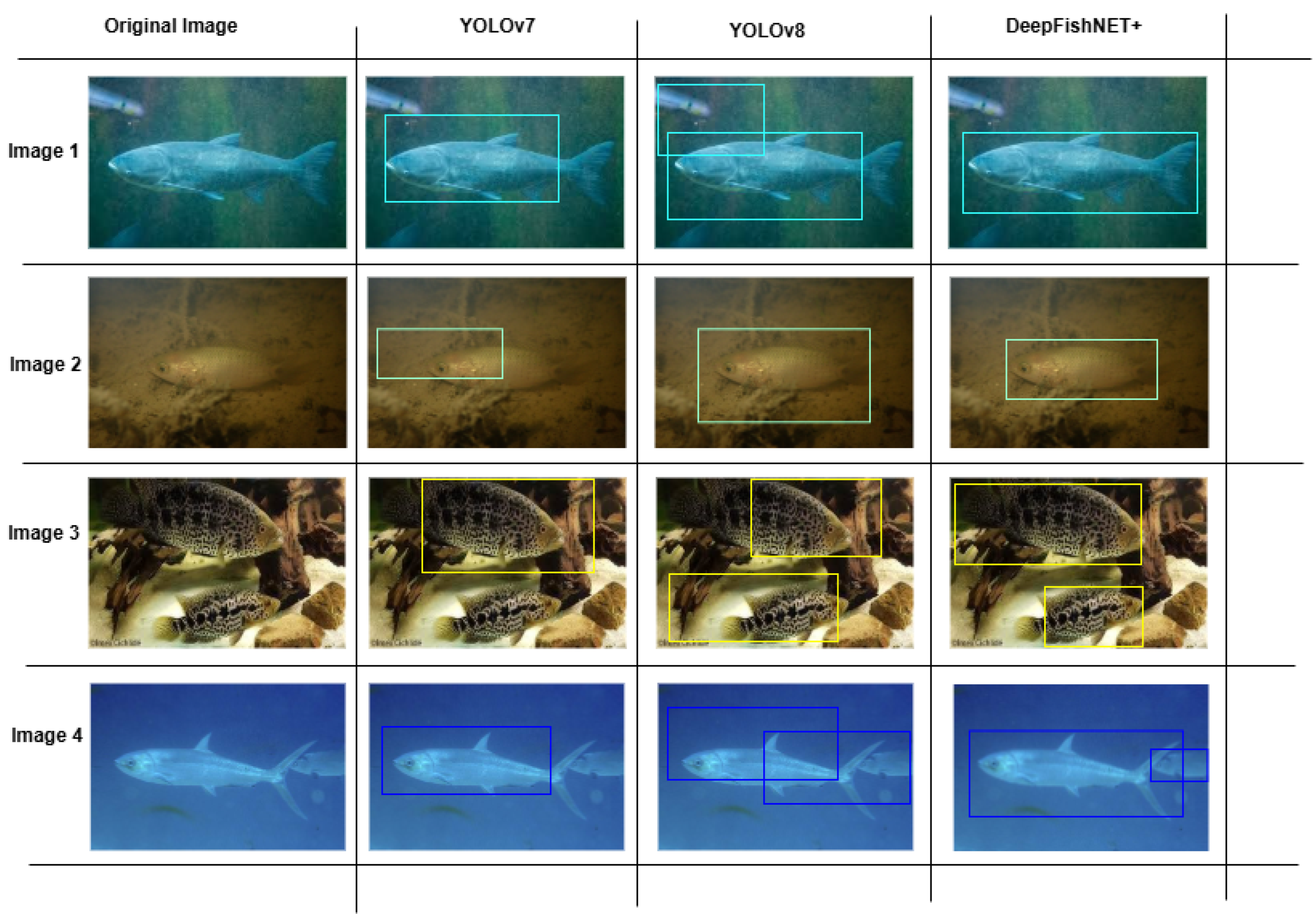

4.3. Method Comparison

4.4. Results Obtained by DeepFishNET+ Method

4.5. Model Validation on Other Datasets

5. Discussion

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. FAO. The State of World Fisheries and Aquaculture 2021; FAO: Rome, Italy, 2021; p. 254.
2. Financial Times. Aquaculture Overtakes Wild Fishing as Main Source of Fish. Available online: https://www.ft.com/content/140ed100-c288-4b20-a9c3-fac16164c7e5 (accessed on 8 September 2025).
3. FAO. World Fisheries and Aquaculture 2020; FAO: Rome, Italy, 2020; pp. 1–244.
4. Sun, K.; Cui, W.; Chen, C. Review of underwater sensing technologies and applications. Sensors 2021, 21, 7849.
5. Braithwaite, V.A.; Ebbesson, L.O.E. Pain and stress responses in farmed fish. Rev. Sci. Tech. 2014, 33, 245–253.
6. Mandal, A.; Ghosh, A.R. Role of artificial intelligence (AI) in fish growth and health status monitoring: A review on sustainable aquaculture. Aquac. Int. 2024, 32, 2791–2820.
7. Li, D.; Zhang, Y.; Wang, X.; Liu, H.; Chen, L.; Zhao, Q. Advanced techniques for the intelligent diagnosis of fish diseases: A review. Animals 2022, 12, 2938.
8. Hamzaoui, M.; Ould-Elhassen Aoueileyine, M.; Bouallegue, S.; Bouallegue, R. Enhanced detection of Argulus and epizootic ulcerative syndrome in fish aquaculture through an improved deep learning model. J. Aquat. Anim. Health 2025, 37, 97–109.
9. Alsakar, Y.M.; Sakr, N.A.; El-Sappagh, S.; Abuhmed, T.; Elmogy, M. Underwater image restoration and enhancement: A comprehensive review of recent trends, challenges, and applications. Vis. Comput. 2025, 41, 3735–3783.
10. Elmezain, M.; Saoud, L.S.; Sultan, A.; Heshmat, M.; Seneviratne, L.; Hussain, I. Advancing underwater vision: A survey of deep learning models for underwater object recognition and tracking. IEEE Access 2025, 13, 17830–17867.
11. Naveen, P. Advancements in underwater imaging through machine learning: Techniques, challenges, and applications. Multimed. Tools Appl. 2025, 84, 24839–24858.
12. Liu, Z.; Chen, H.; Wang, J.; Li, Y.; Zhao, Q.; Yang, T. UnitModule: A lightweight joint image enhancement module for underwater object detection. Pattern Recognit. 2024, 151, 110435.
13. Pachaiyappan, P.; Kumar, A.; Ramesh, S.; Natarajan, K. Enhancing underwater object detection and classification using advanced imaging techniques: A novel approach with diffusion models. Sustainability 2024, 16, 7488.
14. Guan, F.; Li, J.; Wang, H.; Chen, L.; Zhao, Y.; Zhang, X.; Liu, Y. AUIE–GAN: Adaptive underwater image enhancement based on generative adversarial networks. J. Mar. Sci. Eng. 2023, 11, 1476.
15. Cong, R.; Li, Y.; Wang, X.; Chen, Z.; Zhao, L.; Liu, J. PUGAN: Physical model-guided underwater image enhancement using GAN with dual-discriminators. IEEE Trans. Image Process. 2023, 32, 4472–4485.
16. Hao, X.; Liu, L. DGC-UWnet: Underwater image enhancement based on computation-efficient convolution and channel shuffle. IET Image Process. 2023, 17, 2158–2167.
17. Chen, J.; Li, X.; Wang, Y.; Zhang, H. Collaborative compensative transformer network for salient object detection. Pattern Recognit. 2024, 154, 110600.
18. Meng, L.; Zhao, Q.; Sun, Y.; Liu, J.; Wang, T. RGB depth salient object detection via cross-modal attention and boundary feature guidance. IET Comput. Vis. 2024, 18, 273–288.
19. Saoud, L.S.; Seneviratne, L.; Hussain, I. ADOD: Adaptive domain-aware object detection with residual attention for underwater environments. In Proceedings of the 21st International Conference on Advanced Robotics (ICAR), Abu Dhabi, United Arab Emirates, 5–8 December 2023.
20. Wen, J.; Li, Y.; Zhang, T.; Zhao, H.; Liu, X.; Chen, L.; Wang, S. EnYOLO: A real-time framework for domain-adaptive underwater object detection with image enhancement. In Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13–17 May 2024.
21. Saad Saoud, L.; Seneviratne, L.; Hussain, I. MARS: Multi-Scale Adaptive Robotics Vision for Underwater Object Detection and Domain Generalization. arXiv 2023, arXiv:2312.15275.
22. Dai, L.; Wang, X.; Li, J.; Chen, H.; Zhao, Q.; Liu, Y. A gated cross-domain collaborative network for underwater object detection. Pattern Recognit. 2024, 149, 110222.
23. Folkman, L.; Pitt, K.A.; Stantic, B. A data-centric framework for combating domain shift in underwater object detection with image enhancement. Appl. Intell. 2025, 55, 272.
24. Agrawal, A.; Singh, P.; Kumar, S.; Ramesh, T.; Natarajan, K. Syn2Real Domain Generalization for Underwater Mine-like Object Detection Using Side-Scan Sonar. IEEE Geosci. Remote Sens. Lett. 2025, 22, 5503105.
25. Chen, L.; Li, X.; Zhang, Y.; Wang, H.; Zhao, Q.; Liu, T. SWIPENET: Object detection in noisy underwater scenes. Pattern Recognit. 2022, 132, 108926.
26. Chen, G.; Li, H.; Wang, X.; Zhang, Y.; Zhao, Q.; Liu, T. HTDet: A hybrid transformer-based approach for underwater small object detection. Remote Sens. 2023, 15, 1076.
27. Wang, Z.; Ruan, Z.; Chen, C. DyFish-DETR: Underwater fish image recognition based on detection transformer. J. Mar. Sci. Eng. 2024, 12, 864.
28. Pavithra, S.; Kumar, A.; Ramesh, T.; Natarajan, K. An efficient approach to detect and segment underwater images using Swin Transformer. Results Eng. 2024, 23, 102460.
29. Lei, J.; Wang, X.; Li, H.; Zhao, Q.; Chen, L.; Liu, T. CNN–Transformer Hybrid Architecture for Underwater Sonar Image Segmentation. Remote Sens. 2025, 17, 707.
30. Prasetyo, E.; Suciati, N.; Fatichah, C. Fish-gres dataset for fish species classification. Mendeley Data 2020, 10, 12.
31. Fisher, R.B.; Li, X.; Zhang, Y.; Chen, H. (Eds.) Fish4Knowledge: Collecting and Analyzing Massive Coral Reef Fish Video Data; Springer: Berlin/Heidelberg, Germany, 2016; Volume 104.
32. Ulucan, O.; Karakaya, D.; Turkan, M. A large-scale dataset for fish segmentation and classification. In Proceedings of the 2020 Innovations in Intelligent Systems and Applications Conference (ASYU), Istanbul, Turkey, 15–17 October 2020.
33. Shah, S.Z.H.; Khan, A.; Riaz, F.; Ahmed, I. Fish-Pak: Fish species dataset from Pakistan for visual features based classification. Data Brief 2019, 27, 104565.
34. Hamzaoui, M.; Ould-Elhassen Aoueileyine, M.; Romdhani, L.; Bouallegue, R. An improved deep learning model for underwater species recognition in aquaculture. Fishes 2023, 8, 514.
35. Veiga, R.J.M.; Rodrigues, J.M.F. Fine-Grained Fish Classification from small to large datasets with Vision Transformers. IEEE Access 2024, 12, 113642–113660.
36. Zhang, Q.; Chen, S. Research on improved lightweight fish detection algorithm based on YOLOv8n. J. Mar. Sci. Eng. 2024, 12, 1726.

| Fish Species | Scientific Name | Count of Samples | Train | Validation | Test |
|---|---|---|---|---|---|
| Bangus | Chanos chanos | 223 | 156 | 34 | 33 |
| Big Head Carp | Aristichthys nobilis | 213 | 150 | 32 | 31 |
| Black Spotted Barb | Puntius binotatus | 190 | 133 | 29 | 28 |
| Climbing Perch | Anabas testudineus | 190 | 133 | 29 | 28 |
| Fourfinger Threadfin | Eleutheronema tetradactylum | 190 | 133 | 29 | 28 |
| Glass Perchlet | Ambassis vachellii | 190 | 133 | 29 | 28 |
| Gourami | Trichopodus trichopterus | 190 | 133 | 29 | 28 |
| Jaguar Gapote | Parachromis managuensis | 190 | 133 | 29 | 28 |
| Scat Fish | Scatophagus argus | 190 | 133 | 29 | 28 |
| Tilapia | Oreochromis niloticus | 190 | 133 | 29 | 28 |

| Parameter | Value Selected |
|---|---|
| Optimizer | AdamW (lr = , weight decay = 0.05) |
| LR Schedule | Warmup (lr: 0 → 0.001) + cosine decay (lr: 0.001 → ) |
| Batch Size | 256 |
| Regularization | Dropout = 0.1, StochDepth = 0.2 |
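The learning-rate schedule in the table (linear warmup from 0 to 0.001, followed by cosine decay) can be written as a plain function of the training step. In this sketch, the step counts `warmup_steps` and `total_steps` are hypothetical parameters, and `min_lr = 0.0` is a placeholder because the final learning rate of the cosine phase is not given in the table.

```python
import math


def warmup_cosine_lr(step: int, total_steps: int, warmup_steps: int,
                     peak_lr: float = 1e-3, min_lr: float = 0.0) -> float:
    """Learning rate at a given step: linear warmup, then cosine decay.

    peak_lr = 0.001 follows the table; min_lr = 0.0 is a placeholder because
    the final value of the cosine phase is not reported.
    """
    if warmup_steps > 0 and step < warmup_steps:
        # Linear ramp from 0 to peak_lr.
        return peak_lr * step / warmup_steps
    # Fraction of the decay phase completed, clamped to [0, 1].
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(max(progress, 0.0), 1.0)
    # Half-cosine from peak_lr down to min_lr.
    return min_lr + 0.5 * (peak_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    total, warmup = 1000, 50  # hypothetical step counts
    for s in (0, 25, 50, 525, 1000):
        print(s, warmup_cosine_lr(s, total, warmup))
```

In PyTorch, one option is to set the optimizer's base learning rate to 0.001 and pass `lambda s: warmup_cosine_lr(s, total, warmup) / 1e-3` to `torch.optim.lr_scheduler.LambdaLR`, which multiplies the base learning rate by the returned factor at each scheduler step.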

| Metric | Original | After UIE-Net Enhancement |
|---|---|---|
| PSNR | 18.2 dB | 25.6 dB |
| SSIM | 0.58 | 0.79 |
| UIQM | 6.04 | 7.18 |
| UCIQE | 0.44 | 0.63 |

| Model | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| ResNet50 | 91.16% | 93.97% | 92.09% | 91.01% |
| ViT-B/16 | 93.65% | 94.25% | 93.72% | 93.32% |
| Swin Transformer | 96.86% | 96.92% | 96.68% | 96.51% |
| DeepFishNET+ | 98.43% | 98.28% | 98.21% | 98.21% |

| Model | Precision | mAP50 | Box Loss | Time (ms) |
|---|---|---|---|---|
| YOLOv7 | 83.12% | 86.91% | 0.60 | 511.655 |
| YOLOv8 | 89.82% | 90.82% | 0.57 | 529.443 |
| DeepFishNET+ | 92.74% | 97.10% | 0.42 | 542.106 |
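mAP50 is computed at an intersection-over-union (IoU) threshold of 0.5: a detection counts as a true positive only when its bounding box overlaps a not-yet-matched ground-truth box with IoU of at least 0.5. The sketch below shows that matching step for one class in one image, using plain Python, boxes in (x1, y1, x2, y2) format, and one common greedy convention (higher-confidence predictions claim the best unmatched ground truth first). It stops at TP/FP/FN counts; a full mAP50 additionally ranks detections by confidence across the dataset, computes average precision from the precision-recall curve, and averages over classes, and evaluation toolkits differ in these details.

```python
def iou(box_a, box_b) -> float:
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_detections(preds, gts, iou_thr=0.5):
    """Greedy matching for one class in one image.

    preds: list of (score, box); gts: list of boxes.
    Returns (tp, fp, fn).
    """
    matched = set()
    tp = fp = 0
    # Process predictions from most to least confident.
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(gts):
            if j in matched:
                continue  # each ground truth can be matched only once
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        if best_j >= 0 and best_iou >= iou_thr:
            matched.add(best_j)
            tp += 1
        else:
            fp += 1  # no sufficiently overlapping unmatched ground truth
    fn = len(gts) - len(matched)
    return tp, fp, fn


if __name__ == "__main__":
    preds = [(0.9, (0, 0, 10, 10)), (0.8, (0, 0, 10, 10)), (0.3, (20, 20, 30, 30))]
    gts = [(0, 0, 10, 10), (50, 50, 60, 60)]
    tp, fp, fn = match_detections(preds, gts)
    print(tp, fp, fn, "precision:", tp / (tp + fp))
```

The duplicate prediction at confidence 0.8 becomes a false positive because its only overlapping ground truth was already claimed by the 0.9 prediction.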

| Datasets | Fish Species | Precision in Classification (%) | Precision in Detection (%) |
|---|---|---|---|
| Fish-gres dataset [30] | Chanos chanos, Johnius trachycephalus, Nibea albiflora, Rastrelliger faughni, Upeneus moluccensis, Eleutheronema tetradactylum, Oreochromis mossambicus, and Oreochromis niloticus | 99.72 | 93.01 |
| Fish4Knowledge dataset [31] | Acanthuridae, Pomacentridae, Labridae, Chaetodontidae, Balistidae, Serranidae | 99.12 | 92.93 |
| A large-scale dataset [32] | Gilt head bream, Red sea bream, Sea bass, Red mullet, Horse mackerel, Black sea sprat, Striped red mullet, Trout, Shrimp | 96.86 | 90.72 |
| Fish-Pak dataset [33] | Grass carp, Common carp, Mori, Rohu, Silver carp, Thala | 98.26 | 91.48 |

| Work | Approach | Dataset | Results |
|---|---|---|---|
| [34] | This approach develops an improved YOLOv5 model for locating and classifying fish types. Transfer learning is applied: the final model is initialized from the weights of another pre-trained model, FishMask, which was itself trained on a dataset of fish mask images. | A large-scale dataset | Accuracy: 96% |
| [35] | The proposed approach integrates the Fine-Grained Visual Classification Plugin Module (FGVC-PIM) with the Swin Transformer architecture. While FGVC-PIM concentrates on identifying the most discriminative regions within an image, the Swin Transformer provides robust feature extraction. The model was evaluated on multiple datasets under diverse environmental conditions, achieving accuracy above 83%. | Fish-gres dataset, Fish4Knowledge dataset, Fish-Pak dataset, A large-scale dataset, among others | Accuracy: Above 83% |
| [36] | The proposed CUIB-YOLO algorithm introduces a C2f-UIB module to reduce the number of model parameters and integrates the EMA mechanism into the neck network to optimize feature fusion. | Roboflow Universe dataset library | mAP@0.5: 95.7% |
| Our work | DeepFishNET+ begins with a VGG16 model pre-trained on ImageNet. DeepLIFT identifies the heat zones, which are divided into patches. Each pair of patches representing the same zone is concatenated into a single vector, and a ViT performs the final classification on these concatenated vectors. | F-DS1, F-DS2 | 99.72% (F-DS2) |
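The last row describes pairing patches from the same heat zone and concatenating them before ViT classification. Where the two patches of a pair come from is not detailed here, so this NumPy sketch assumes, hypothetically, two spatially aligned inputs (for example, the original and the enhanced image), a per-pixel attribution map such as one produced by a DeepLIFT implementation (Captum, for instance, provides a `DeepLift` class), and a hypothetical threshold `thr` on the mean attribution of each patch. `to_patches` and `paired_tokens` are illustrative names, not the authors' code.

```python
import numpy as np


def to_patches(img: np.ndarray, p: int) -> np.ndarray:
    """Split an (H, W, C) array into non-overlapping p x p patches, shape (N, p*p*C)."""
    h, w, c = img.shape
    if h % p or w % p:
        raise ValueError("H and W must be divisible by the patch size p")
    # (H, W, C) -> (H/p, p, W/p, p, C) -> (H/p, W/p, p, p, C), patches in row-major order
    grid = img.reshape(h // p, p, w // p, p, c).transpose(0, 2, 1, 3, 4)
    return grid.reshape(-1, p * p * c)


def paired_tokens(view_a: np.ndarray, view_b: np.ndarray, heatmap: np.ndarray,
                  p: int = 16, thr: float = 0.5) -> np.ndarray:
    """Hypothetical pairing: keep high-attribution patches and concatenate
    the co-located patch from each view into one vector per zone."""
    patches_a = to_patches(view_a, p)
    patches_b = to_patches(view_b, p)
    # Mean attribution inside each patch (heatmap has shape (H, W)).
    heat = to_patches(heatmap[..., None], p).mean(axis=1)
    keep = heat >= thr
    # One row per kept zone: [patch from view_a | patch from view_b].
    return np.concatenate([patches_a[keep], patches_b[keep]], axis=1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    original = rng.random((224, 224, 3))
    enhanced = rng.random((224, 224, 3))
    attribution = rng.random((224, 224))
    print(paired_tokens(original, enhanced, attribution).shape)
```

Each row of the result is one token of length 2·p²·C; a ViT would typically project such tokens linearly to its embedding width before the transformer layers.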

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hamzaoui, M.; Rejili, M.; Aoueileyine, M.O.-E.; Bouallegue, R. DeepFishNET+: A Dual-Stream Deep Learning Framework for Robust Underwater Fish Detection and Classification. Appl. Sci. 2025, 15, 10870. https://doi.org/10.3390/app152010870
