Abstract

As big data continues to evolve, cluster analysis remains an important tool. Among clustering methods, the K-means algorithm is the most widely used, but the random selection of its initial cluster centers can make its results unstable. In this paper, an improved honey badger optimization algorithm is proposed: (1) The population is initialized using sin chaos so that it is uniformly distributed. (2) The density factor is improved to enhance the optimization accuracy of the population. (3) A nonlinear inertia weight factor is introduced to prevent honey badger individuals from over-relying on the behavior of past individuals during position updating. (4) To improve the diversity of solutions, random opposition learning is performed on the optimal individual. Experiments on 23 benchmark test functions show that the improved algorithm outperforms the comparison algorithms. Finally, the improved algorithm is applied to K-means clustering, and experiments are conducted on three data sets from the UCI database. The results show that the improved honey badger optimized K-means algorithm achieves a better clustering effect than the traditional K-means algorithm.

1. Introduction

Cluster analysis, an unsupervised learning technique in data mining, discovers the connections between data by organizing the data into distinct groups based on their similarities [1,2]. K-means [3] has found extensive application across various domains, including image segmentation [4], data mining [5], customer segmentation [6], and pattern recognition [7], among others. The K-means algorithm obtains clustering results by minimizing the squared error within the clusters, starting from randomly selected cluster centers that are updated during the iteration process. Despite its widespread use, the K-means algorithm has notable drawbacks, most notably its sensitivity to the initial cluster center selection, which may lead to less-than-ideal results. Additionally, the algorithm struggles with handling complex data sets [8,9,10]. These defects seriously affect the effectiveness of the algorithm.

As a result, optimizing the initial cluster centers in K-means has become a crucial focus for enhancing the accuracy of clustering analysis. In recent years, intelligent optimization algorithms, a class of meta-heuristic algorithms, have attracted growing attention. Numerous researchers have found that swarm intelligence algorithms can compensate for the K-means algorithm’s drawbacks. Common algorithms include the Grasshopper Optimisation Algorithm [11], Firefly Algorithm [12], Flower Pollination Algorithm [13], etc. Such algorithms help to obtain better initial cluster centers for K-means clustering by modeling the communication and cooperation between groups of organisms. Bai L et al. [14] proposed an artificial fish swarm algorithm based on an improved quantum rotation gate. By dynamically adjusting the rotation angle of the quantum rotation gate, the accuracy and speed of the next-generation update direction were improved. The algorithm was applied to the K-means clustering method, leading to enhanced clustering accuracy. Xie H [15] proposed two variants of the firefly algorithm that enhance the search range and search scale of the fireflies; applied to the persistent problem of the K-means algorithm, the improved firefly algorithm obtains better cluster centers and improves the clustering effect. Huang P [16], in order to solve the clustering problem, introduced a differential evolution strategy to the initial population of the Honey Badger Algorithm and the equalization pooling technique into the position update, and tested the clustering effect on ten benchmark test sets, which proved its superiority in clustering. Jiang S [17] introduced nonlinear inertia weights into the Flower Pollination Algorithm, as well as Cauchy variation in the optimal solution, and applied the improved Flower Pollination Algorithm to K-means clustering, obtaining a better clustering effect. Li Y et al. [18] proposed an adaptive particle swarm algorithm for optimizing the clustering centers so as to avoid the dependence of the K-means algorithm on the initial clustering centers, and applied it to customer segmentation. These algorithms can jump out of local optimal solutions through population initialization and iterative location updates, thus overcoming the limitations of K-means. Although they mitigate the flaws of K-means, some shortcomings remain: the chosen meta-heuristic algorithms require parameter tuning, are computationally intensive, and have high time complexity.

The Honey Badger Algorithm (HBA) [19], introduced by Hashim FA et al. in 2022, is a meta-heuristic algorithm that is relatively simple to implement and requires less parameter tuning than other algorithms. The algorithm builds a mathematical model based on the honey badger’s predatory behavior, which includes foraging alone and cooperating with other organisms. HBA has a strong global search capability, shifts smoothly between global and local search with a short search time, and performs well in many applications, especially in solving complex problems.

Many researchers have enhanced the Honey Badger Algorithm and implemented it across various domains since its proposal thanks to its simple structure and fast search speed. Han E et al. [20] proposed an improved HBA to optimize modeling proton exchange membrane fuel cells by using oppositional learning and chaotic mechanisms in the improved algorithm. Chen Y et al. [21] used the HBA to solve the optimization of multi-objectives and applied the HBA to the integrated photovoltaic/extended infiltration/battery system optimization. Arutchelvan K et al. [22] proposed a clustering and routing planning scheme for wireless sensor networks based on the Honey Badger Algorithm. The newly proposed model has the longest lifetime compared to the existing techniques. Song Y et al. [23] combined HBA based on the set of good points with the Distance Vector-Hop (DV-Hop) algorithm to solve the problem of insufficient localization accuracy of the traditional DV-Hop algorithm and to prevent the algorithm from converging to a local optimum. Hu G et al. [24] added a segmental optimal decrement neighborhood and a horizontal hybridization strategy with strategic adaptability to HBA and applied the improved algorithm to unmanned aerial vehicle path planning to obtain a more feasible and efficient path. Hussien A.G. et al. [25] combined the advantages of HBA and the Artificial Gorilla Troops Optimizer to enhance the development ability of the algorithm, and the hybrid algorithm was applied to Bot problems to improve the performance and efficiency of the cloud computing system. Fathy A et al. [26] proposed a microgrid energy management scheme based on HBA considering the advantages of HBA in addressing intricate challenges, and finally improved the operation efficiency of the microgrid.

The Honey Badger Algorithm has inherent limitations, encompassing protracted convergence and a propensity to succumb to local optimal solutions [27]. Moreover, the applications of this algorithm in data clustering tasks are still relatively few, so this manuscript introduces a refined iteration of the Honey Badger Algorithm, termed the Improved Honey Badger Algorithm (IHBA). The IHBA is then applied to K-means clustering. The key contributions of this study can be summarized as follows:

- (1) A sin chaotic strategy for honey badger populations is introduced to ensure that the population is uniformly distributed throughout the search space.

- (2) The density factor is improved, which accelerates the rate of convergence.

- (3) Nonlinear inertia weights are introduced in position updating, promoting a smooth transition between exploration and exploitation.

- (4) Random opposition-based learning is applied to the optimal individual to increase population diversity and help the algorithm escape from local optima.

- (5) The enhanced algorithm is assessed on 23 benchmark test functions. Additionally, the clustering analysis problem is addressed with the IHBA proposed in this study, which produces superior clustering results on three UCI test data sets.

The remainder of this paper is organized as follows: Section 2 introduces the basic principles of the Honey Badger Algorithm. Section 3 presents the improvement strategies designed to address its limitations. Section 4 describes the combination of the K-means algorithm and the Improved Honey Badger Algorithm. Section 5 analyzes the results of the simulation experiments. Finally, Section 6 offers a brief summary.

2. Honey Badger Algorithm

Honey badgers rely on two main strategies when searching for food: the “digging mode” and the “honey harvesting mode”. In their excavation endeavors, honey badgers harness their acute olfactory abilities to discern the whereabouts of potential prey. Upon arriving in the vicinity of the prey, honey badgers search the neighborhood and then meticulously select an optimal location to excavate and seize their prey. In the mode of honey harvesting, this creature heeds the guidance of its avian companion, enabling it to precisely locate the hive and procure the honey. The entire procedure can be succinctly encapsulated in the following steps.

2.1. Population Initialization

There is a total of N individual honey badgers. Each honey badger is randomly assigned a position within a certain range by using Equation (1).

$$x_i = lb_i + r_1 \times (ub_i - lb_i) \tag{1}$$

In this formula, $x_i$ denotes the position of the $i$th honey badger. The variables $ub_i$ and $lb_i$ are the upper and lower bounds, and $r_1$ is a random number generated in the range of 0 to 1.
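The random initialization of Equation (1) can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation; the function name `init_population` and the use of NumPy's `Generator` are assumptions made for the example.

```python
import numpy as np

def init_population(n, lb, ub, rng):
    """Draw n positions uniformly inside [lb, ub], one row per honey badger."""
    lb = np.asarray(lb, dtype=float)
    ub = np.asarray(ub, dtype=float)
    r1 = rng.random((n, lb.size))   # r1 ~ U[0, 1), drawn per individual and dimension
    return lb + r1 * (ub - lb)      # Equation (1)

# Hypothetical usage: 5 badgers in a 2-dimensional search space.
rng = np.random.default_rng(0)
pop = init_population(5, lb=[-10.0, 0.0], ub=[10.0, 1.0], rng=rng)
```

Because each coordinate is drawn independently, nothing prevents the individuals from clustering in one region of the space, which is the weakness that Section 3.1 targets.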

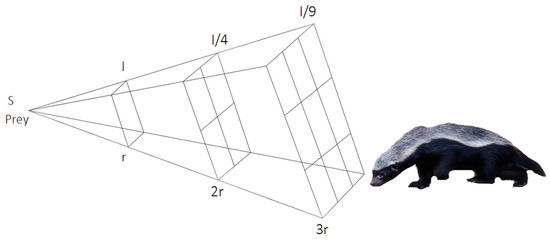

2.2. Defining Intensity I

In the context of honey badger predation, the intensity of odor plays a crucial role in determining the hunting efficacy of the honey badger. An increase in odor intensity enhances the honey badger’s ability to hunt effectively. The strength of the odor is influenced by several factors, including the distance between honey badgers and the concentration of their prey. Odor intensity is directly proportional to the concentration of the prey and inversely proportional to the square of the distance from it, following the inverse square law, as shown in Figure 1 and Equation (2).

$$I_i = r_2 \times \frac{S}{4 \pi d_i^2}, \qquad S = (x_i - x_{i+1})^2, \qquad d_i = x_{prey} - x_i \tag{2}$$

where $S$ is the concentration strength, that is, the square of the distance between two honey badger individuals; $d_i$ denotes the distance of the prey from the $i$th honey badger; $x_{prey}$ is the position of the prey, that is, the current optimal position; and $r_2$ is a randomly generated number within the range of 0 to 1.

Figure 1. Inverse square law. I represents the intensity, S represents the prey position, and r is a random number that takes values from 0 to 1.
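As a rough illustration of the inverse square law used for the intensity, the sketch below computes one intensity value per honey badger. It assumes the standard HBA form of Equation (2), uses squared Euclidean norms for vector positions, and lets the last individual be paired with the first one; the small `eps` guard against division by zero is also an assumption added for numerical safety.

```python
import numpy as np

def intensity(pop, prey, rng, eps=1e-12):
    """Odor intensity per individual: I_i = r2 * S_i / (4 * pi * d_i^2)."""
    neighbour = np.roll(pop, -1, axis=0)           # x_{i+1}; the last row wraps to the first
    s = np.sum((pop - neighbour) ** 2, axis=1)     # S_i: squared distance between neighbours
    d2 = np.sum((prey - pop) ** 2, axis=1)         # d_i^2: squared distance to the prey
    r2 = rng.random(pop.shape[0])                  # r2 ~ U[0, 1)
    return r2 * s / (4.0 * np.pi * (d2 + eps))

# Hypothetical usage with 4 badgers in 3 dimensions; the prey is the first badger.
rng = np.random.default_rng(1)
pop = rng.uniform(-5.0, 5.0, size=(4, 3))
prey = pop[0].copy()
I = intensity(pop, prey, rng)
```

Individuals close to the prey receive a large intensity, which in turn enlarges the intensity-driven term of the digging-phase update.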

2.3. Define Density Factor

The density factor plays an important role in the honey badger foraging process. It gradually decreases over time, so that the algorithm’s stochasticity is gradually reduced and a smooth transition from global to local search is realized. The specific equation for the density factor is shown in Equation (3).

$$\alpha = C \times \exp\left(\frac{-t}{t_{max}}\right) \tag{3}$$

where $C$ is a constant taking the value 2; $t$ is the current number of iterations; and $t_{max}$ is the maximum number of iterations.
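The decay of the original density factor can be checked numerically with a short sketch. It assumes the exponential form of the standard HBA density factor with the constant set to 2; the function name `density_factor` is illustrative.

```python
import math

def density_factor(t, t_max, c=2.0):
    """Original HBA density factor: alpha = C * exp(-t / t_max)."""
    return c * math.exp(-t / t_max)

# Values at the start, middle, and end of a 500-iteration run.
alphas = [density_factor(t, 500) for t in (0, 250, 500)]
```

Under this form the factor only falls from C to C/e over the whole run, which matches the later remark in Section 3.2 that the original factor decreases slowly and over a narrow range.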

2.4. Digging Phase

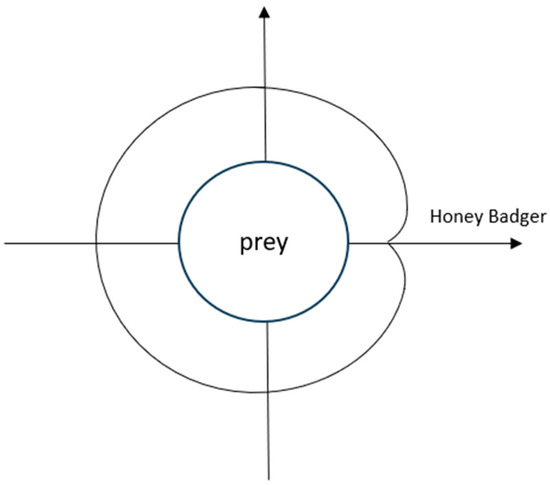

In this model, honey badgers move to the perimeter of the prey according to the effects of odor intensity, distance, and density factor, and then dig around the prey. The trajectory of the movement can be regarded as a cardioid curve as shown in Figure 2. The honey badger’s course of action is modeled through Equation (4).

Figure 2. Trajectories of honey badgers during the digging phase. Take the location of the prey as the origin.

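$$x_{new} = x_{prey} + F \times \beta \times I \times x_{prey} + F \times r_3 \times \alpha \times d_i \times \left| \cos(2 \pi r_4) \times \left[ 1 - \cos(2 \pi r_5) \right] \right| \tag{4}$$

In this equation, $x_{prey}$ denotes the position of the prey, which is also the current optimal position. $\beta$ indicates the honey badger’s food-locating ability, defaulting to 6. Additionally, $r_3$, $r_4$, and $r_5$ are random variables, each ranging from 0 to 1. $F$ takes the value 1 or −1 to change the foraging direction of the honey badger, as shown in Equation (5), where $r_6$ is a random number from 0 to 1:

$$F = \begin{cases} 1, & r_6 \le 0.5 \\ -1, & \text{otherwise} \end{cases} \tag{5}$$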
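A single digging-phase move can be sketched as follows. The code assumes the standard HBA update, with the default food-locating ability of 6 and a direction flag that is 1 or −1 with equal probability; the function name and argument layout are illustrative only.

```python
import numpy as np

def digging_update(x_i, prey, intensity_i, alpha, rng, beta=6.0):
    """One digging-phase move of a single honey badger around the prey."""
    r3, r4, r5, r6 = rng.random(4)
    flag = 1.0 if r6 <= 0.5 else -1.0                 # direction flag F
    d = prey - x_i                                    # distance vector to the prey
    # Cardioid-shaped modulation of the step length.
    cardioid = abs(np.cos(2.0 * np.pi * r4) * (1.0 - np.cos(2.0 * np.pi * r5)))
    return prey + flag * beta * intensity_i * prey + flag * r3 * alpha * d * cardioid

# Hypothetical usage: one badger at the origin, prey at (1, -2).
rng = np.random.default_rng(0)
prey = np.array([1.0, -2.0])
new_pos = digging_update(np.array([0.0, 0.0]), prey, intensity_i=0.1, alpha=1.5, rng=rng)
```

When the badger already sits on the prey and the intensity is zero, both perturbation terms vanish and the position is unchanged, which is a quick sanity check on the update.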

2.5. Honey Phase

In addition to feeding on their own, honey badgers also like to eat honey, but they are unable to locate the hives, so they enter into a partnership with a guide bird, which leads the badgers to the locations of hives. This process can be modeled as Equation (6):

$$x_{new} = x_{prey} + F \times r_7 \times \alpha \times d_i \tag{6}$$

The random number $r_7$ has a value between 0 and 1. The search behavior of honey badgers is influenced by a variety of factors that work together to guide the honey badger towards the vicinity of its prey.
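The honey-phase move is simpler than the digging move and can be sketched in the same style. This assumes the standard HBA form, in which the new position is the prey position plus a randomly scaled and randomly signed multiple of the distance vector; the names used are illustrative.

```python
import numpy as np

def honey_update(x_i, prey, alpha, rng):
    """One honey-phase move: a random step along the line through the prey."""
    r6, r7 = rng.random(2)
    flag = 1.0 if r6 <= 0.5 else -1.0     # direction flag F
    d = prey - x_i                        # distance vector to the prey
    return prey + flag * r7 * alpha * d

# Hypothetical usage: badger at the origin, prey at (1, 1), density factor 2.
rng = np.random.default_rng(0)
x = np.zeros(2)
prey = np.array([1.0, 1.0])
new_pos = honey_update(x, prey, alpha=2.0, rng=rng)
```

Since the step length is bounded by the density factor times the current distance to the prey, the move contracts towards the prey as the density factor decays.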

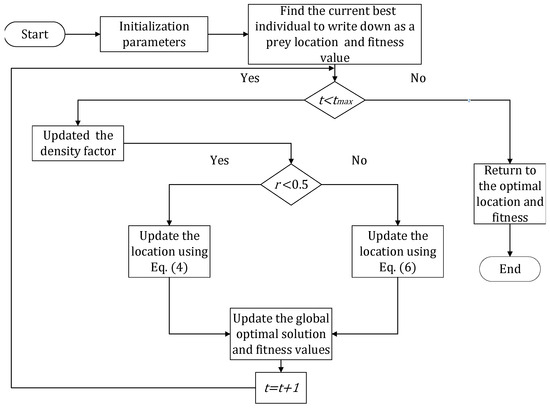

The basic HBA flowchart is shown in Figure 3.

Figure 3. HBA flowchart.

3. Improved Honey Badger Algorithm

The HBA, despite its simplicity and ease of understanding, has notable limitations, such as slow convergence speed and low convergence accuracy. To address these issues, this paper improves the algorithm in four aspects, with the goal of enhancing its overall optimization performance. The specific strategies are discussed in detail in the following sections.

3.1. Sin Chaotic Initial Population

In intelligent optimization algorithms, initialization of the individuals in the population is carried out first. HBA adopts the form of a randomly distributed initial population, which may lead to the individuals of the population concentrating in certain regions of the solution space, which in turn leads to a decrease in the accuracy of the solution. Therefore, this paper adopts sin chaos for population initialization to resolve the aforementioned problems. A sin chaos model [28] is a kind of model with an infinite number of mapping folding times in a finite region, possessing stronger chaotic properties as well as ergodicity. Upon the completion of a predetermined number of iterations, the initial population can spread over the entire solution space.

The procedure for initializing the population utilizing sin chaos is outlined as follows:

- (1) The population size N is set, and an initial honey badger position is initialized within a D-dimensional space, denoted as $Z$;

- (2) Equation (7) is employed to iteratively generate chaotic sequences for each dimension of $Z$ until the remaining N−1 honey badger individuals are produced;

- (3) The aforementioned chaotic sequence is mapped to the initial position of an individual honey badger within the search space according to Equation (8), where $lb$ is the lower bound and $ub$ is the upper bound of the search space.

The sin chaotic model leverages its advantageous chaotic properties and traversal capabilities to explore various regions within a finite solution space through multiple periodic iterations. This approach enables the optimization process to encompass a broader solution space, thereby providing rich population information for the subsequent optimization phases of the algorithm.
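The initialization procedure above can be sketched as follows. The exact forms of Equations (7) and (8) are not reproduced in this section, so the code makes two explicit assumptions: the chaotic map is the commonly used sin map $z_{n+1} = \sin(2 / z_n)$ with $z_n \in [-1, 1]$ and $z_n \neq 0$, and the chaotic values are mapped linearly from $[-1, 1]$ onto $[lb, ub]$. The function name is illustrative.

```python
import numpy as np

def sin_chaos_population(n, lb, ub, rng):
    """Generate n positions from a sin chaotic sequence (assumed map: z <- sin(2 / z))."""
    lb = np.asarray(lb, dtype=float)
    ub = np.asarray(ub, dtype=float)
    z = np.empty((n, lb.size))
    seed = rng.uniform(-1.0, 1.0, lb.size)         # step (1): first individual Z
    z[0] = np.where(seed == 0.0, 0.5, seed)        # the map is undefined at 0
    for k in range(1, n):                          # step (2): remaining N-1 individuals
        prev = np.where(z[k - 1] == 0.0, 1e-12, z[k - 1])
        z[k] = np.sin(2.0 / prev)
    return lb + (z + 1.0) / 2.0 * (ub - lb)        # step (3): assumed linear mapping

# Hypothetical usage: 50 badgers in a 3-dimensional space.
rng = np.random.default_rng(0)
pop = sin_chaos_population(50, lb=[-100.0] * 3, ub=[100.0] * 3, rng=rng)
```

Each dimension evolves its own chaotic sequence, so successive individuals are spread over the interval rather than drawn independently.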

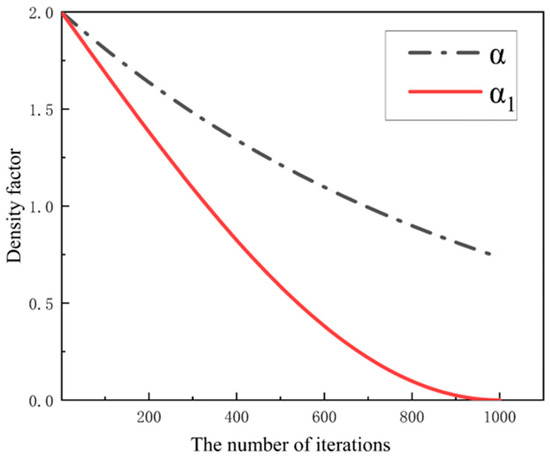

3.2. Improved Density Factor

The density factor plays a crucial role in regulating the randomness of HBA. In the early iterations, the algorithm requires a comprehensive exploration of the solution space, so the density factor takes a large value, which increases the randomness and supports a comprehensive and far-reaching search. In the later iterations, the algorithm converges towards the optimal solution, and the density factor diminishes so that the algorithm can identify the optimal solution within a more confined area.

The original density factor decreases over the course of the iterations, but the decrease is slow and covers a narrow range of values, which can lead to premature convergence of the algorithm and poor-quality solutions. Therefore, a new density factor is introduced [29], formulated as shown in Equation (9):

where is a random number obeying a normal distribution and is the variance of the normal distribution.

As shown in Figure 4, the improved density factor decreases more rapidly up to the middle of the iterations, which gives IHBA a faster convergence rate in the early stage; in the late iterations, it varies less, so that the algorithm can perform a full local search. The improved density factor also introduces the random number rand and the variance to enhance the diversity of solutions.

Figure 4. Comparison of original and improved density factors.

3.3. Nonlinear Inertia Weights

In intelligent optimization algorithms, the nonlinear inertial weight refers to a dynamically adjusted weight that varies in a non-linear manner throughout the optimization process. Particle swarm algorithms have demonstrated that inertia weights reflect the ability of the latter follower to escape from the previous position, as well as that larger inertia weights lead to better global search capabilities, and conversely, smaller inertia weights lead to better local exploitation [30]. In the HBA, the update of the individual location largely depends on the optimal location found in the last iteration. This over-reliance on past optimal solutions can lead to an imbalance between the potential for exploration and the ability for exploitation.

Therefore, nonlinear inertia weights were introduced into the two foraging patterns of honey badgers. The nonlinear inertia weight parameters [31] are shown in Equation (10):

where is a random number from 0 to 1; is the current iteration number; and is the maximum iterations.

The HBA’s digging phase (Equation (4)) and honey phase (Equation (6)) are updated to Equations (11) and (12).

The inertia weight decreases as the number of iterations increases. The early inertia weight is relatively large, which means that the improved Honey Badger Algorithm relies more on the position of the current individual and can achieve a thorough global search. As the algorithm progresses, the inertia weight gradually decreases, and the influence between successive generations weakens. The use of nonlinear inertia weights facilitates a balance between global exploration and local exploitation, thereby reducing the time required to approach the optimal solution.

3.4. Perturbation Strategy Based on Random Opposition

Random Opposition-Based Learning can help algorithms escape local optimal solutions and improve population diversity. By introducing random numbers, the algorithm can explore different directions and angles during the search process. This randomness enhances the diversity of the population and helps find better solutions [32,33,34]. The specific formula of Random Opposition-Based Learning is shown in Equation (13).

Here, is a new solution generated after random reverse learning; the value of rand is randomly generated from 0 to 1; and and are the bounds of the solution space.

Adding the Random Opposition-Based Learning strategy certainly prevents the algorithm from succumbing to a local optimum, but there is no guarantee that the position after perturbation will approach the optimal solution. Therefore, a greedy algorithm [35] is added after Random Opposition-Based Learning to compare the fitness and retain the current optimal solution.

where is the original optimal solution.
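The perturbation and the greedy selection can be illustrated together. Equations (13) and (14) are not reproduced in this section, so the sketch assumes the commonly used random opposition form, in which the opposite solution is the sum of the bounds minus a random multiple of the current best position, followed by a keep-the-better comparison for a minimization problem. The clipping to the bounds and both function names are additions made for the example.

```python
import numpy as np

def random_opposition(best, lb, ub, rng):
    """Assumed random opposition: x' = lb + ub - rand * x, clipped to the bounds."""
    candidate = lb + ub - rng.random(best.shape) * best
    return np.clip(candidate, lb, ub)

def greedy_select(best, candidate, fitness):
    """Keep the candidate only if it has strictly lower fitness (minimization)."""
    return candidate if fitness(candidate) < fitness(best) else best

# Hypothetical usage on the sphere function.
sphere = lambda x: float(np.sum(x ** 2))
rng = np.random.default_rng(0)
lb = np.array([-10.0, -10.0])
ub = np.array([10.0, 10.0])
best = np.array([3.0, -4.0])
candidate = random_opposition(best, lb, ub, rng)
kept = greedy_select(best, candidate, sphere)
```

The greedy step guarantees that the stored best solution never gets worse, so the perturbation can only help or be discarded.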

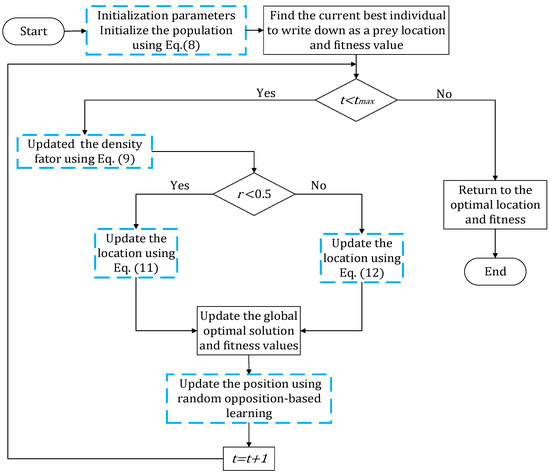

The IHBA flow diagram is shown in Figure 5.

Figure 5. IHBA flowchart. The blue squares represent the parts of the algorithm that have been improved.

3.5. Computational Complexity Analysis of IHBA

Time complexity serves as a crucial metric for assessing the efficiency of an algorithm’s solution. Therefore, an analysis of IHBA with respect to its time complexity is conducted. The computational cost of the IHBA can be categorized into four components: the generation of a chaotic initial population, the evaluation of fitness values, position updates, and the computation of inverse solutions. represents honey badger individuals, denotes the maximum number of iterations, and indicates the dimension. The initial phase of the algorithm encompasses the initialization of population positions, fitness evaluation, and other related processes, resulting in an overall complexity of . The complexity of the algorithm’s operations, including position updating and the calculation of the inverse solution, is . Thus, the overall complexity of IHBA is as in Equation (15).

4. K-Means Algorithm Based on Improved Honey Badger Algorithm

In this study, a K-means algorithm based on the Improved Honey Badger Algorithm (IHBA-KM) is proposed to compensate for the shortcomings of randomly selecting the initial cluster centers in K-means. IHBA-KM uses the sin chaos method for population initialization, thereby increasing the randomness and diversity of the initial clustering centers. By introducing an improved density factor, the algorithm can better adapt to different data distributions. In the process of position updating, nonlinear inertia weights are used to make the search process more balanced. In addition, the incorporation of random opposition-based learning enhances the selection process for clustering centers. The detailed steps of IHBA-KM are as follows:

Step 1: Initialization parameters are set: data set , population number , maximum number of iterations , and cluster number ;

Step 2: data points are selected randomly from the data set to serve as the positions of honey badgers. These data points will be used as candidate cluster centers in the initial stage, and initial locations will be assigned to honey badgers according to different dimensions. The process will be repeated N times, a total of N honey badger individuals will be generated, and then new locations of the honey badgers will be calculated according to Formula (8).

Step 3: According to step 2, there are honey badger individuals, including the original solution and the chaotic solution. The data set is clustered, the fitness is assigned, and at the same time, the first honey badgers are taken as the initial population.

Step 4: The optimal individual and its fitness are discovered;

Step 5: A random number is generated. If it is less than 0.5, the algorithm carries out a global search, uses Formula (11) to update the position, and carries out boundary processing;

Step 6: Otherwise, the algorithm carries out a local search, uses Formula (12) to update the position, and carries out boundary processing;

Step 7: Clustering operations are performed after the location update, and the newly generated cluster center is set to the location updated in step 5 or 6;

Step 8: The current optimal solution is updated, and then the opposition solution is calculated according to Formula (13). The fitness of the opposition solution is compared with that of the original global optimal solution. The solution with the lowest fitness is kept as the global optimal solution, and the corresponding position is saved as the best position;

Step 9: Steps 5 to 8 are repeated until the termination conditions are met;

Step 10: Using the optimal solution as the cluster center, the distance from the data points to the clustering center is calculated and the data are assigned to the closest cluster. The optimal solution, location, and clustering results are output.
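The clustering and fitness evaluation used in Steps 3, 7, and 10 can be sketched as below. The sketch assumes that a honey badger position is a flat vector holding the coordinates of all cluster centers one after another, and that the fitness is the sum of squared distances from each point to its nearest center; the function name `cluster_fitness` and this encoding are assumptions for illustration.

```python
import numpy as np

def cluster_fitness(position, data, k):
    """Decode a position into k centers, assign points, and return (SSE, labels)."""
    centers = position.reshape(k, data.shape[1])
    # Squared Euclidean distance from every point to every center: shape (n, k).
    dist2 = ((data[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    labels = dist2.argmin(axis=1)                       # nearest center per point
    sse = dist2[np.arange(data.shape[0]), labels].sum()
    return sse, labels

# Hypothetical usage: four 2-D points and a position encoding two centers.
data = np.array([[0.0, 0.0], [0.0, 1.0], [10.0, 10.0], [10.0, 11.0]])
position = np.array([0.0, 0.5, 10.0, 10.5])
sse, labels = cluster_fitness(position, data, k=2)
```

In this toy case every point lies 0.5 away from its center, so the fitness is 4 × 0.25 = 1.0 and the first two points share one label while the last two share the other.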

To summarize the steps above, we provide the pseudo code of IHBA-KM, as shown in Algorithm 1.

| Algorithm 1 Pseudo code of IHBA-KM |

| Initialize the parameters of the algorithm: . |

| Initialize population location at random positions. |

| Initialization of the population using the sin chaos. |

| Cluster data set. |

| . |

| . |

| while do |

| using Equation (9). |

| for = do |

| Calculate the intensity I using Equation (2). |

| if < 0.5 then |

| Update the new position using Equation (11). |

| else |

| Update the new position using Equation (12). |

| end if |

| Execute clustering. |

| Evaluate the new position and assign to fitness. |

| . |

| if then |

| end if |

| if then |

| end if |

| end for |

| end while |

| Return , and clustering results. |

5. Simulation Experiment and Analysis

The initial experiment aimed to evaluate the efficacy of the Particle Swarm Optimization (PSO) [36], Whale Optimization Algorithm (WOA) [37], Artificial Rabbit Optimization (ARO) [38], Spider Wasp Optimization (SWO) [39], HBA, and IHBA on the benchmark function, and then to conduct a detailed comparative analysis based on the results. Specific parameter settings are shown in Table 1. The second set of experiments applied IHBA to the K-means clustering task. This was compared with HBA and the standard K-means algorithm to evaluate the advantages of IHBA in clustering performance.

Table 1. Parameter settings.

5.1. Preparation of Experimental Environment

All experiments in this study were run on a computer with 64-bit Windows 11, an Intel Core i5-8250U processor, and 8 GB of RAM. The programming language was Python, and the figures were drawn with MATLAB 2021a and Origin 2021.

In this study, a total of 23 benchmark [40] test functions were chosen to evaluate the performance of the algorithm, as shown in Table 2, Table 3 and Table 4. The test function was divided into three groups: unimodal function (F1–F9), multimodal function (F10–F16), and fixed dimensional function (F17–F23). To ensure fairness, population = 50, maximum number of iterations = 500, and dimension = 30/200 were set in the experiment. Each algorithm was run independently 30 times, and the mean, optimum, and standard deviation of the 30 runs were recorded. These indicators together evaluated the efficiency, stability and optimization ability of the algorithm.
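The three reported statistics can be computed from the final objective values of the independent runs as in the sketch below. The helper name `summarize_runs` is hypothetical, and the use of the population standard deviation (NumPy's default) is an assumption, since the text does not say which variant was used.

```python
import numpy as np

def summarize_runs(final_values):
    """Mean, best (minimum), and standard deviation over independent runs."""
    v = np.asarray(final_values, dtype=float)
    return {"mean": v.mean(), "best": v.min(), "std": v.std()}

# Hypothetical usage with three runs instead of 30.
stats = summarize_runs([3.0, 1.0, 2.0])
```

A standard deviation of exactly 0 means every run ended at the same objective value.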

Table 2. Unimodal functions.

Table 3. Multimodal functions.

Table 4. Fixed-dimensional functions.

5.2. Experimental Comparative Analysis of Improved Honey Badger Algorithm

The IHBA and the comparison algorithms were tested at D = 30 and D = 200, and the test results are shown in Table 5, Table 6 and Table 7. On the unimodal test functions, IHBA showed clear advantages in solution accuracy and stability, especially on F1–F5. Its average accuracy was far ahead of the other algorithms, indicating the effectiveness of the improvement measures in this paper. The standard deviation of IHBA over the 30 runs was 0, indicating better stability than the other algorithms. On F6, IHBA was closest to the optimal solution. On F7–F9, IHBA maintained its advantage; on F7 in particular, its average value and best value were much lower than those of the other algorithms. When D = 200, the dimensionality increased, leading to a corresponding rise in complexity. The comparison algorithms degraded in solution accuracy, standard deviation, and other metrics. In contrast, IHBA continued to outperform them; it reached the theoretical optimal values on F1–F9 while maintaining a standard deviation of 0, which indicates strong robustness. In summary, IHBA showed strong solving ability and stability on the unimodal test functions and was significantly superior to PSO, WOA, ARO, SWO, and HBA.

Table 5. Error statistics (average, standard deviation, best) for 30 runs of the IHBA and comparison algorithms on single-peak and multi-peak test functions (D = 30).

Table 6. Error statistics (average, standard deviation, best) for 30 runs of the IHBA and comparison algorithms on single-peak and multi-peak test functions (D = 200).

Table 7. Error statistics (average, standard deviation, best) for 30 runs of the IHBA and comparison algorithms on fixed-dimension test functions.

On the multimodal test functions, IHBA showed strong search ability, and its performance was particularly outstanding on F12, F14, and F16. When the dimension was set to 30, the average value of IHBA reached 0 on F12, lower than that of the other algorithms, indicating that it was more efficient in finding the optimal solution. On F14, the average value, standard deviation, and best value of IHBA were all 0, far better than the other algorithms, reflecting its ability to deal with complex functions. On F16, IHBA also obtained the optimal value, with a clear gap to the results of the other algorithms, which suggests a greater capacity to escape local optima. On F10, IHBA was slightly less stable, and SWO was better. When D = 200, the comparison algorithms degraded on all three metrics; however, IHBA remained superior to them. On F12, the accuracy and stability of the comparison algorithms decreased to varying degrees. The average value of ARO was six orders of magnitude worse than at D = 30, and its best value in 30 runs was nine orders of magnitude worse. The performance of HBA on this function also decreased, with its average value 20 orders of magnitude worse than at D = 30, while IHBA still reached the theoretical optimal value of 0 on all three metrics. On F10 and F15, the mean and standard deviation of IHBA were worse than at D = 30. This shows that the change in dimension had a certain influence on the accuracy and stability of IHBA. In general, IHBA showed good optimization ability and stability on the multimodal test functions, demonstrating its applicability to complex optimization problems, but it still needs further improvement.

In the evaluation of fixed-dimension functions, IHBA demonstrated strong performance on F17 and F19–F22, achieving both the best mean values and the best standard deviations. On F18, all six algorithms found the optimal value, but IHBA reached the best standard deviation, indicating the stability of IHBA on F18. On F23, ARO achieved the best result, while IHBA performed slightly worse than ARO. In summary, IHBA performed well on multiple test functions and demonstrated superiority over the comparison algorithms in solution quality and robustness.

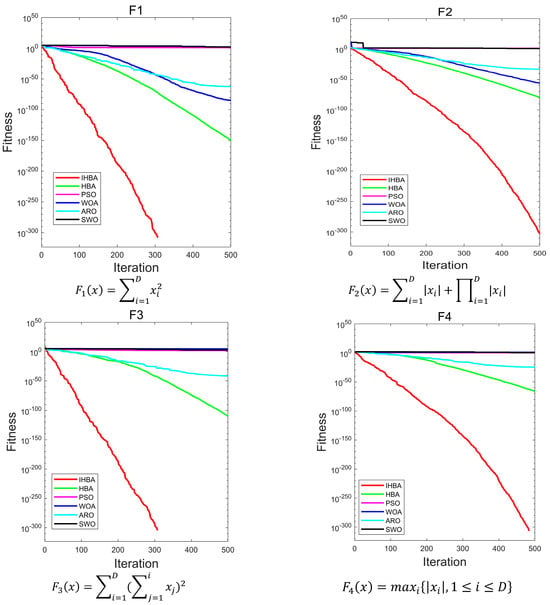

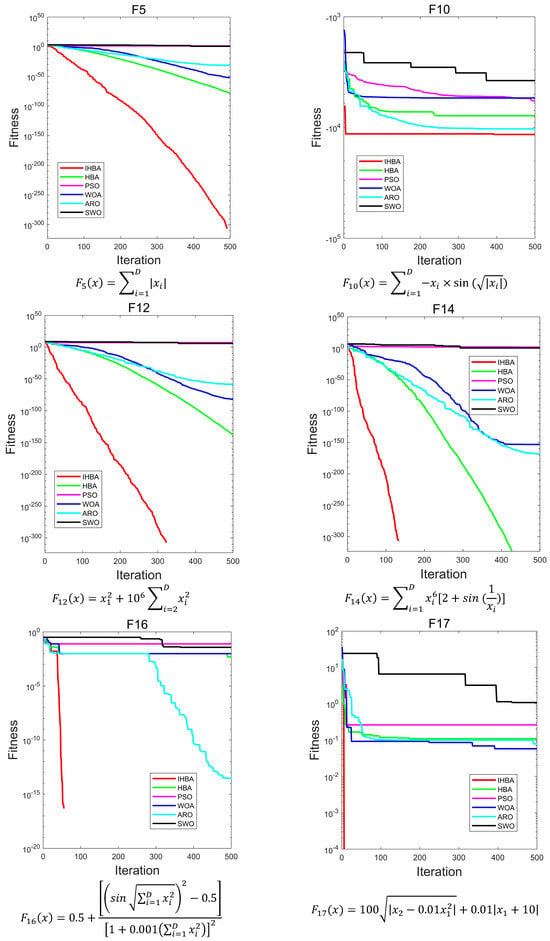

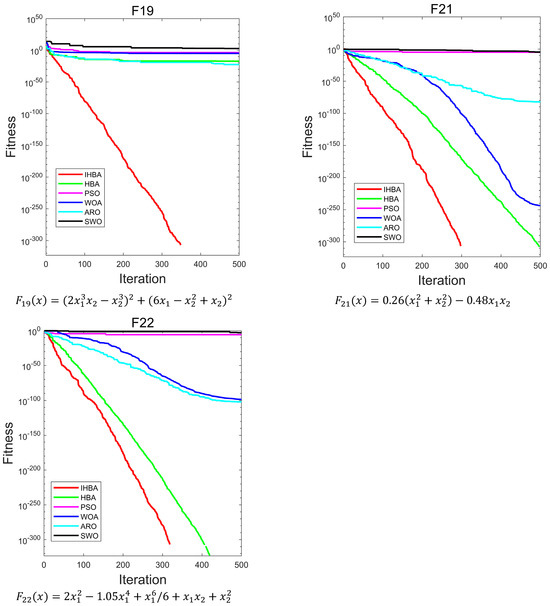

Figure 6 shows the convergence curves of IHBA and the comparison algorithms on the benchmark functions. To ensure fairness, a population = 50, a maximum number of iterations = 500, and a dimension = 30 were set in the experiment. Each algorithm was run independently 30 times. Due to limited space, only some test functions were selected for convergence analysis and comparison: F1–F5 from the unimodal test functions; F10, F12, F14, and F16 from the multimodal test functions; and F17, F19, F21, and F22 from the fixed-dimension test functions.

Figure 6. Convergence curves of each algorithm on different test functions.

As illustrated in Figure 6, on the unimodal test functions IHBA had the fastest convergence speed among the six algorithms and achieved the highest search accuracy throughout the iterative process. In contrast, the other algorithms converged slowly and with low accuracy. On the multimodal test functions, IHBA also converged quickly and achieved the highest search accuracy among the six algorithms, especially on F14. Both IHBA and HBA found the optimal value of 0 within 500 iterations on this function; however, the convergence curves show that IHBA reached the optimal value earlier than HBA. On the fixed-dimension test functions, IHBA performed well on F17, F19, F21, and F22, where it was clearly better than the comparison algorithms. In general, IHBA showed better convergence performance.
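A convergence curve of this kind is typically the best fitness found so far, plotted against the iteration count. The following is a minimal sketch of that bookkeeping, assuming a minimization problem; the helper names `best_so_far` and `first_hit` are hypothetical and are not taken from the paper, which does not describe how its curves were produced.

```python
import numpy as np

def best_so_far(per_iteration_fitness):
    """Running minimum: entry t is the best (lowest) fitness seen in iterations 0..t."""
    values = np.asarray(per_iteration_fitness, dtype=float)
    return np.minimum.accumulate(values)

def first_hit(curve, target, tol=0.0):
    """Index of the first iteration whose best-so-far value is <= target + tol.

    Returns None if the target is never reached.
    """
    hits = np.nonzero(np.asarray(curve, dtype=float) <= target + tol)[0]
    return int(hits[0]) if hits.size else None

# Toy fitness histories (illustrative values only, not experimental data).
curve_a = best_so_far([5.0, 3.0, 4.0, 0.0, 2.0])
curve_b = best_so_far([5.0, 4.0, 4.0, 3.0, 0.0])
# The run that reaches the target at a smaller index converged first.
print(first_hit(curve_a, 0.0), first_hit(curve_b, 0.0))
```

Comparing the first-hit indices of two algorithms is one simple way to state which of them "found the optimal value first" when both eventually reach it.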

5.3. Simulation Analysis of K-Means for Improved Honey Badger Algorithm

To further validate the practicality of IHBA, this study compared three algorithms in a clustering experiment: K-means, IHBA-KM, and the K-means algorithm based on the Honey Badger Algorithm (HBA-KM). Three data sets from the UCI database were selected; details are given in Table 8.

Table 8. Data sets.

In this paper, the error sum of squares (SSE), accuracy, and F-score were employed as metrics for evaluating clustering results.

SSE is a widely used metric for assessing clustering quality and serves here as the fitness value. It is the sum of the squared distances between each data point and the center of its corresponding cluster. A lower fitness value means that the data points lie closer to their cluster centers, which indicates better clustering quality. The calculation is shown in Equation (16).

$$\mathrm{SSE} = \sum_{k=1}^{K} \sum_{x \in C_k} \lVert x - \mu_k \rVert^2 \tag{16}$$

where $K$ denotes the number of clusters; $C_k$ represents all data points within the $k$th class; $x$ refers to a data point in the $k$th cluster; and $\mu_k$ signifies the center of that cluster.
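The SSE fitness described above can be sketched in a few lines of NumPy. This is an illustrative sketch, not the authors' implementation: the function names, the flat-vector encoding of the cluster centers, and the nearest-center assignment inside `fitness` are assumptions about how a metaheuristic such as HBA might evaluate a candidate solution.

```python
import numpy as np

def sse(points, labels, centers):
    """Sum of squared Euclidean distances from each point to its assigned center."""
    points = np.asarray(points, dtype=float)
    centers = np.asarray(centers, dtype=float)
    labels = np.asarray(labels)
    diffs = points - centers[labels]  # each point minus its own cluster center
    return float(np.sum(diffs ** 2))

def fitness(flat_centers, points, k):
    """Hypothetical fitness wrapper for a candidate solution.

    Decodes a flat vector of length k * n_features into k centers, assigns
    every point to its nearest center, and returns the resulting SSE.
    """
    points = np.asarray(points, dtype=float)
    centers = np.asarray(flat_centers, dtype=float).reshape(k, points.shape[1])
    # Squared distance from every point to every center, shape (n_points, k).
    d2 = ((points[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    labels = d2.argmin(axis=1)
    return sse(points, labels, centers)

# Toy data: two tight groups of two points each.
pts = [[0, 0], [0, 2], [10, 0], [10, 2]]
print(fitness([0, 1, 10, 1], pts, k=2))
```

Encoding all centers in one flat vector lets a population-based optimizer treat each individual as a complete set of candidate centroids.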

Accuracy refers to the ratio of correctly clustered elements to the total number of elements, as expressed by the following equation. A higher accuracy indicates superior clustering results:

$$\mathrm{Accuracy} = \frac{N_c}{N}$$

where $N_c$ represents the number of samples assigned to the correct cluster, and $N$ represents the total number of samples.
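Counting "correctly clustered" samples requires some rule for matching cluster indices to true classes, which the text does not specify. The sketch below assumes a simple majority-vote mapping, in which each cluster is identified with the most common true class among its members; other matchings (for example, an optimal one-to-one assignment) could give different values. The function name is hypothetical.

```python
from collections import Counter

def clustering_accuracy(true_labels, cluster_labels):
    """Accuracy under a majority-vote mapping from clusters to classes (an assumption)."""
    if len(true_labels) != len(cluster_labels):
        raise ValueError("label sequences must have the same length")
    if len(true_labels) == 0:
        raise ValueError("at least one sample is required")
    # Group the true labels by the cluster each sample was assigned to.
    members = {}
    for truth, cluster in zip(true_labels, cluster_labels):
        members.setdefault(cluster, []).append(truth)
    # In each cluster, the samples of the majority class count as correct.
    correct = sum(Counter(group).most_common(1)[0][1] for group in members.values())
    return correct / len(true_labels)

# Toy example: one class-0 sample ends up in the cluster dominated by class 1.
print(clustering_accuracy([0, 0, 0, 1, 1, 1], [2, 2, 1, 1, 1, 1]))
```

Note that majority voting allows two clusters to map to the same class, so it can overstate accuracy when the number of clusters exceeds the number of classes.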

The F-score is a widely used metric for evaluating clustering results, with a value range from 0 to 1. It reflects the degree of similarity between the clustering outcomes and the true classifications. A higher F-score, closer to 1, indicates a more effective clustering performance. Given the issue of class imbalance present in the data set utilized in this study, we employed the weighted F-score as a metric to evaluate the clustering outcomes. The specific formula is as follows.

where TP is the number of true positives, FP is the number of false positives, and FN is the number of false negatives. For a class-imbalanced data set, the weighted score places more emphasis on the recall of small classes; for a class-balanced data set, it reduces to the traditional F-score.
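The exact weighted formula is not reproduced in this text, so the following sketch uses the standard F-beta score as a stand-in. That choice is an assumption: with `beta = 1` it gives the traditional F-score, and with `beta > 1` it weights recall more heavily than precision, which matches the behavior described above. The function name and the `beta` parameter are illustrative.

```python
def f_beta(tp, fp, fn, beta=1.0):
    """Standard F-beta score computed from true positives, false positives, false negatives.

    beta = 1 gives the traditional F-score; beta > 1 emphasizes recall.
    """
    if tp == 0:
        return 0.0  # no true positives: precision and recall are both zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)

# Toy counts: precision = 0.8, recall = 2/3.
print(f_beta(8, 2, 4))            # traditional F-score
print(f_beta(8, 2, 4, beta=2.0))  # recall-weighted variant
```

Because recall is lower than precision in this toy case, raising `beta` lowers the score, which shows how the weighting penalizes missed members of a class.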

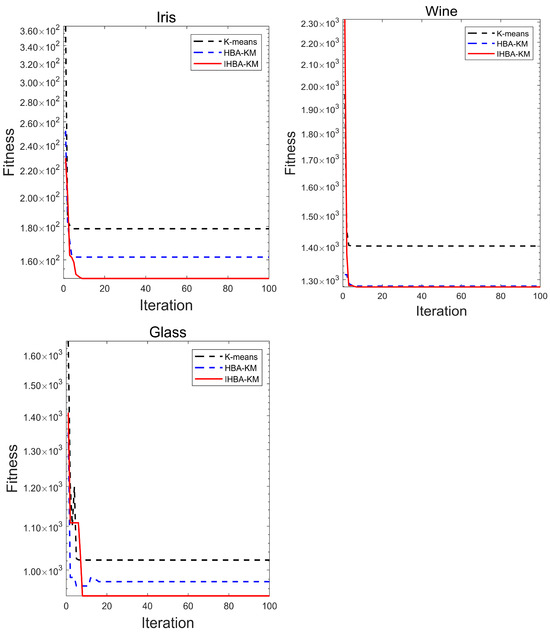

To ensure fairness, the parameters were kept consistent across all experiments: the same population size was used for every algorithm, and the maximum number of iterations was set to 100. Each algorithm was executed independently 30 times. The best, worst, and average values, along with the standard deviation, were recorded over the 30 runs. Experimental results are summarized in Tables 9–11, while the convergence plots are shown in Figure 7.
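The reporting protocol above (30 independent runs summarized by best, worst, mean, and standard deviation) can be sketched as follows. The helper `summarize_runs` and its `run_once` callback are hypothetical names; using the population standard deviation (`ddof=0`) is also an assumption, since the text does not say which estimator was used.

```python
import numpy as np

def summarize_runs(run_once, n_runs=30, seed=0, minimize=True):
    """Call run_once(rng) n_runs times and summarize the returned scores.

    Set minimize=True for metrics such as SSE (lower is better) and
    minimize=False for metrics such as accuracy or F-score.
    """
    rng = np.random.default_rng(seed)
    scores = np.array([run_once(rng) for _ in range(n_runs)], dtype=float)
    if minimize:
        best, worst = scores.min(), scores.max()
    else:
        best, worst = scores.max(), scores.min()
    return {
        "best": float(best),
        "worst": float(worst),
        "mean": float(scores.mean()),
        "std": float(scores.std()),  # population standard deviation (assumed)
    }

# Stand-in for one clustering run: returns a noisy score instead of a real SSE.
def toy_run(rng):
    return 100.0 + rng.normal(scale=5.0)

print(summarize_runs(toy_run, n_runs=30))
```

Passing a single seeded generator into every run keeps the whole experiment reproducible while still giving each run different random draws.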

Table 9. SSE comparison after running 30 times.

Table 10. Clustering accuracy comparison for 30 runs of each algorithm.

Table 11. F-score comparison for 30 runs of each algorithm.

Figure 7. Convergence curve of the algorithm on the data set.

Comparing the results of the K-means algorithm, HBA-KM, and IHBA-KM, the IHBA-KM algorithm showed clear advantages on many indexes. According to Table 9, IHBA-KM achieved a much lower fitness value on the Iris data set; in terms of standard deviation, IHBA-KM was 54.87 lower than K-means and 6.6 lower than HBA-KM, which indicates that the clustering stability and quality of the IHBA-KM algorithm are better. Figure 7 shows that all three algorithms declined rapidly during the initial stages of iteration. The K-means algorithm and HBA-KM eventually fell into local optima, while IHBA-KM escaped them and found a higher-quality solution. These findings illustrate the efficacy of IHBA-KM in clustering the data sets. On the Glass data set in particular, IHBA-KM had the smallest fitness value, and the convergence curves show that the comparison algorithms converged rapidly to a local optimum, while IHBA-KM escaped the local optimum and obtained better cluster centers. However, the standard deviation of 67.5 also indicates that the stability of IHBA-KM on this data set was poor, suggesting that the improvement measures in this paper enhance the clustering effect but leave room for improvement.

In summary, IHBA-KM is superior to the traditional K-means algorithm and HBA-KM in terms of clustering effect, stability, and consistency. The experimental results show that the IHBA in this study can alleviate the influence of the initial clustering center on the clustering results and enhance the stability and efficiency of clustering.

In order to further evaluate IHBA-KM, this study continued to explore the use of the accuracy and F-score metrics as criteria. Detailed results can be found in Table 10 and Table 11.

IHBA-KM showed clear advantages in multiple clustering tasks, especially on the accuracy index. Taking the Iris data set as an example, the average accuracy of IHBA-KM was 89.63%, higher than that of the K-means algorithm (80.73%) and HBA-KM (88.00%). In addition, the maximum accuracy of IHBA-KM reached 96.66%. Its performance was markedly superior to that of the other algorithms, suggesting a greater capacity for achieving optimal clustering results. On the Wine data set, IHBA-KM maintained a consistently high accuracy, with an average approximately 10% higher and a minimum accuracy 27% higher than those of the K-means algorithm. This indicates the robustness and stability of its performance. On the Glass data set, the average accuracy of IHBA-KM was 67.62%, while that of HBA-KM was 64.11%. This is an improvement of 3.51 percentage points, highlighting the positive impact of the enhanced strategy on classification performance. This stability and high accuracy make IHBA-KM a more reliable choice in practical applications, especially where high-precision clustering results are required. On the whole, the IHBA-KM algorithm has clear advantages in clustering effect and accuracy.

As can be observed in Table 11, the IHBA-KM algorithm performed well on the F-score, especially on the Iris and Wine data sets. Its average F-score was 0.83, higher than the 0.73 of K-means and the 0.82 of HBA-KM. This reflects the effectiveness of IHBA-KM clustering. In terms of maximum F-score, IHBA-KM reached 0.91, surpassing the other two algorithms, indicating that it can achieve higher classification accuracy. In addition, its minimum F-score remained at 0.77, showing consistency and stability across different data sets. This means that IHBA-KM can provide reliable performance in a diverse array of application scenarios. In high-dimensional data, feature similarity often becomes less clear, which degrades clustering quality. On the Glass data set, the average F-scores of K-means and HBA-KM were 0.40 and 0.42, respectively, while that of IHBA-KM was 0.45. The clustering performance of IHBA-KM on this data set was marginally better than that of the other two algorithms; however, the relatively low values indicate a potential limitation of IHBA-KM when applied to high-dimensional data.

In summary, IHBA-KM showed an advantage in accuracy and F-score on all three data sets. By incorporating the HBA, IHBA-KM reduces the reliance of traditional K-means on the initial cluster centers. To address the issues of the HBA, including its vulnerability to local optima and slow convergence, the IHBA introduces several improvements. These enhancements help the algorithm to avoid local optima, speed up convergence, and ultimately enhance the overall efficacy of the clustering process.

6. Conclusions

In this study, an enhanced version of the HBA is proposed to address its slow convergence and its tendency to become stuck in local optima. To improve the initial population distribution and the overall solution quality, chaos-based initialization was introduced at the start of the algorithm. The original density factor was modified to accelerate convergence. A nonlinear inertia weight was introduced into the position-update formula to further improve the convergence rate. Random opposition-based learning was applied to the optimal individual to reduce the risk of converging to a local extremum. The IHBA was then applied to K-means clustering to reduce its sensitivity to the initial centroids. The results show that IHBA-KM improved the fitness, accuracy, and F-score, yielding a better clustering effect. However, the suboptimal performance of IHBA-KM on the Glass data set underscores its limitations in high-dimensional clustering contexts. Future research will explore combining the Honey Badger Algorithm with the Sine Cosine Algorithm and integrating alternative search strategies to further improve performance. We also aim to broaden its applicability to multi-class clustering, text clustering, feature selection, and other related problems.

Author Contributions

Conceptualization, S.J. and H.G.; methodology, H.G.; software, H.G. and Y.L.; validation, H.G. and H.S.; investigation, H.G.; writing—original draft preparation, H.G.; writing—review and editing, S.J.; supervision, Y.Z. and M.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

The authors would like to thank all the reviewers for their constructive comments.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Djouzi, K.; Beghdad-Bey, K. A review of clustering algorithms for big data. In Proceedings of the 2019 International Conference on Networking and Advanced Systems (ICNAS), Annaba, Algeria, 26–27 June 2019; pp. 1–6.

2. Hu, H.; Liu, J.; Zhang, X.; Fang, M. An effective and adaptable K-means algorithm for big data cluster analysis. Pattern Recognit. 2023, 139, 109404.

3. Sinaga, K.P.; Yang, M.-S. Unsupervised K-means clustering algorithm. IEEE Access 2020, 8, 80716–80727.

4. Song, L.; Zhuo, Y.; Qian, X.; Li, H.; Chen, Y. GraphR: Accelerating graph processing using ReRAM. In Proceedings of the 2018 IEEE International Symposium on High Performance Computer Architecture (HPCA), Vienna, Austria, 24–28 February 2018; pp. 531–543.

5. Attari, M.Y.N.; Ejlaly, B.; Heidarpour, H.; Ala, A. Application of data mining techniques for the investigation of factors affecting transportation enterprises. IEEE Trans. Intell. Transp. Syst. 2021, 23, 9184–9199.

6. Sun, Z.-H.; Zuo, T.-Y.; Liang, D.; Ming, X.; Chen, Z.; Qiu, S. GPHC: A heuristic clustering method to customer segmentation. Appl. Soft Comput. 2021, 111, 107677.

7. Gong, R. Pattern Recognition of Control Chart Based on Fuzzy c-Means Clustering Algorithm. In Proceedings of the 2022 4th International Conference on Artificial Intelligence and Advanced Manufacturing (AIAM), Hamburg, Germany, 7–9 October 2022; pp. 899–903.

8. Ahmed, M.; Seraj, R.; Islam, S.M.S. The k-means algorithm: A comprehensive survey and performance evaluation. Electronics 2020, 9, 1295.

9. Ikotun, A.M.; Ezugwu, A.E.; Abualigah, L.; Abuhaija, B.; Heming, J. K-means clustering algorithms: A comprehensive review, variants analysis, and advances in the era of big data. Inf. Sci. 2023, 622, 178–210.

10. Das, P.; Das, D.K.; Dey, S. A modified Bee Colony Optimization (MBCO) and its hybridization with k-means for an application to data clustering. Appl. Soft Comput. 2018, 70, 590–603.

11. Saremi, S.; Mirjalili, S.; Lewis, A. Grasshopper optimisation algorithm: Theory and application. Adv. Eng. Softw. 2017, 105, 30–47.

12. Yang, X.-S.; He, X. Firefly algorithm: Recent advances and applications. Int. J. Swarm Intell. 2013, 1, 36–50.

13. Yang, X.-S. Flower pollination algorithm for global optimization. In Proceedings of the International Conference on Unconventional Computing and Natural Computation, Orléans, France, 3–7 September 2012; pp. 240–249.

14. Bai, L. K-means clustering algorithm based on improved quantum rotating gate artificial fish swarm algorithm and its application. Appl. Res. Comput. 2022, 39, 797–801.

15. Xie, H.; Zhang, L.; Lim, C.P.; Yu, Y.; Liu, C.; Liu, H.; Walters, J. Improving K-means clustering with enhanced Firefly Algorithms. Appl. Soft Comput. 2019, 84, 105763.

16. Huang, P.; Luo, Q.; Wei, Y.; Zhou, Y. An equilibrium honey badger algorithm with differential evolution strategy for cluster analysis. J. Intell. Fuzzy Syst. 2023, 45, 5739–5763.

17. Jiang, S.; Wang, M.; Guo, J.; Wang, M. K-means clustering algorithm based on improved flower pollination algorithm. J. Electron. Imaging 2023, 32, 032003.

18. Li, Y.; Chu, X.; Tian, D.; Feng, J.; Mu, W. Customer segmentation using K-means clustering and the adaptive particle swarm optimization algorithm. Appl. Soft Comput. 2021, 113, 107924.

19. Hashim, F.A.; Houssein, E.H.; Hussain, K.; Mabrouk, M.S.; Al-Atabany, W. Honey Badger Algorithm: New metaheuristic algorithm for solving optimization problems. Math. Comput. Simul. 2022, 192, 84–110.

20. Han, E.; Ghadimi, N. Model identification of proton-exchange membrane fuel cells based on a hybrid convolutional neural network and extreme learning machine optimized by improved honey badger algorithm. Sustain. Energy Technol. Assess. 2022, 52, 102005.

21. Chen, Y.; Feng, G.; Chen, H.; Gou, L.; He, W.; Meng, X. A multi-objective honey badger approach for energy efficiency enhancement of the hybrid pressure retarded osmosis and photovoltaic thermal system. J. Energy Storage 2023, 59, 106468.

22. Arutchelvan, K.; Priya, R.S.; Bhuvaneswari, C. Honey Badger Algorithm Based Clustering with Routing Protocol for Wireless Sensor Networks. Intell. Autom. Soft Comput. 2023, 35, 3199–3212.

23. Song, Y.; Lin, H.; Bian, Y. Improved DV_HOP Location Algorithm Based on distance Correction and Honey Badger Optimization. Electron. Meas. Technol. 2024, 45, 147–153.

24. Hu, G.; Zhong, J.; Wei, G. SaCHBA_PDN: Modified honey badger algorithm with multi-strategy for UAV path planning. Expert Syst. Appl. 2023, 223, 119941.

25. Hussien, A.G.; Chhabra, A.; Hashim, F.A.; Pop, A. A novel hybrid Artificial Gorilla Troops Optimizer with Honey Badger Algorithm for solving cloud scheduling problem. Clust. Comput. 2024, 27, 13093–13128.

26. Fathy, A.; Rezk, H.; Ferahtia, S.; Ghoniem, R.M.; Alkanhel, R. An efficient honey badger algorithm for scheduling the microgrid energy management. Energy Rep. 2023, 9, 2058–2074.

27. Nassef, A.M.; Houssein, E.H.; Helmy, B.E.-d.; Rezk, H. Modified honey badger algorithm based global MPPT for triple-junction solar photovoltaic system under partial shading condition and global optimization. Energy 2022, 254, 124363.

28. Yang, H.; E, J. Adaptive variable scale chaotic immune optimization Algorithm and its application. Control. Theory Appl. 2009, 26, 1069–1074.

29. Zhou, J.; Zhang, L.; Sun, T. Improved honey badger algorithm based on elite differential variation. Electron. Meas. Technol. 2024, 47, 79–85.

30. Arasomwan, M.A.; Adewumi, A. On the performance of linear decreasing inertia weight particle swarm optimization for global optimization. Sci. World J. 2013, 2013, 860289.

31. Wang, T. Grasshopper optimization algorithm with nonlinear weight and Cauchy mutation. Microelectron. Comput. 2020, 37, 82–86.

32. Yuan, Y.; Mu, X.; Shao, X.; Ren, J.; Zhao, Y.; Wang, Z. Optimization of an auto drum fashioned brake using the elite opposition-based learning and chaotic k-best gravitational search strategy based grey wolf optimizer algorithm. Appl. Soft Comput. 2022, 123, 108947.

33. Zhong, C.; Li, G.; Meng, Z.; He, W. Opposition-based learning equilibrium optimizer with Levy flight and evolutionary population dynamics for high-dimensional global optimization problems. Expert Syst. Appl. 2023, 215, 119303.

34. Jiang, S.; Shang, J.; Guo, J.; Zhang, Y. Multi-strategy improved flamingo search algorithm for global optimization. Appl. Sci. 2023, 13, 5612.

35. Feng, M.; Heffernan, N.; Koedinger, K. Addressing the assessment challenge with an online system that tutors as it assesses. User Model. User-Adapt. Interact. 2009, 19, 243–266.

36. Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95 International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; pp. 1942–1948.

37. Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

38. Wang, L.; Cao, Q.; Zhang, Z.; Mirjalili, S.; Zhao, W. Artificial rabbits optimization: A new bio-inspired meta-heuristic algorithm for solving engineering optimization problems. Eng. Appl. Artif. Intell. 2022, 114, 105082.

39. Abdel-Basset, M.; Mohamed, R.; Jameel, M.; Abouhawwash, M. Spider wasp optimizer: A novel meta-heuristic optimization algorithm. Artif. Intell. Rev. 2023, 56, 11675–11738.

40. Jamil, M.; Yang, X.-S. A literature survey of benchmark functions for global optimisation problems. J. Math. Model. Numer. Optim. 2013, 4, 150–194.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).