5.1. Software Module Testing

5.1.1. Sample Conversion Module Buffer Test

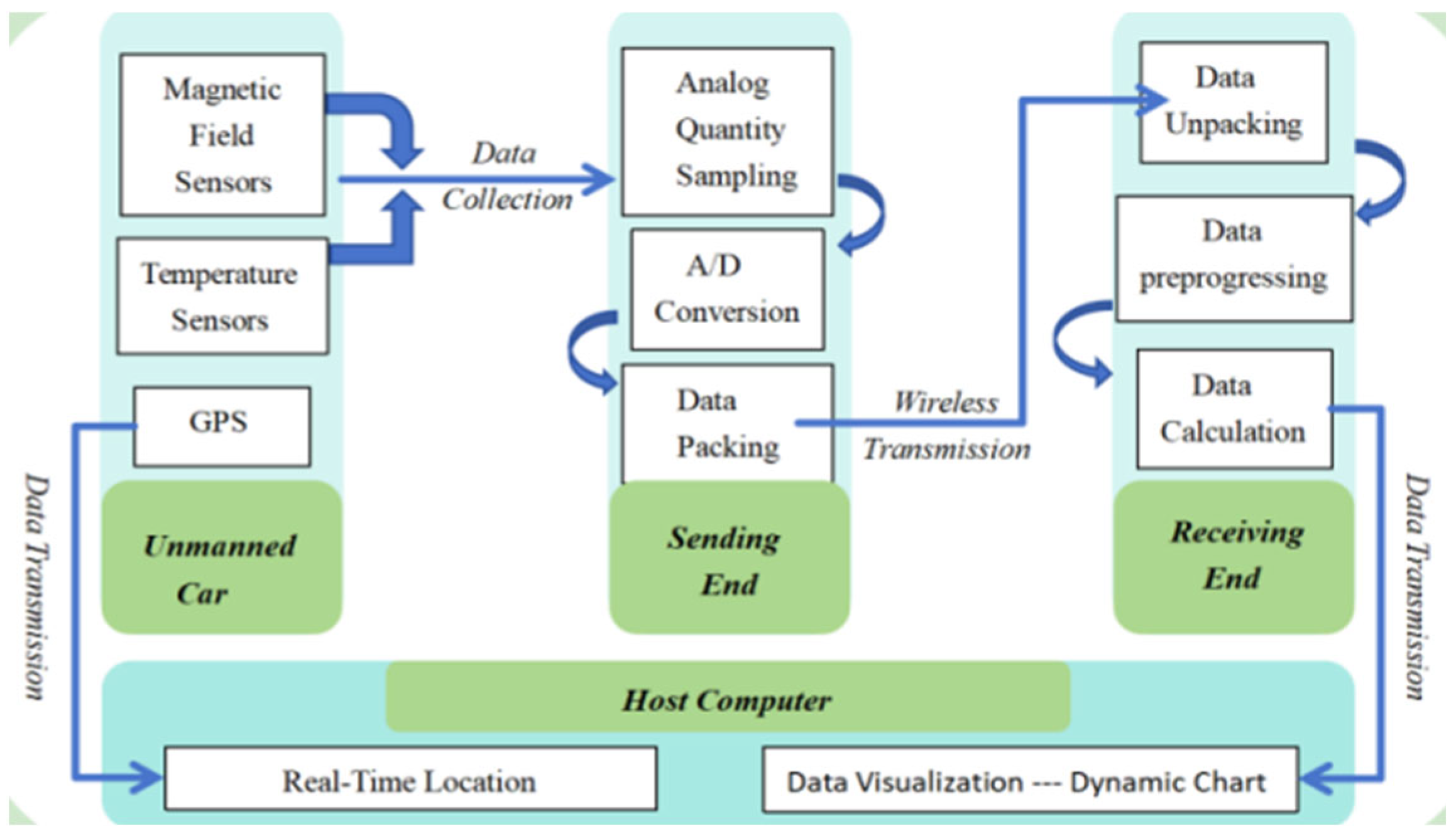

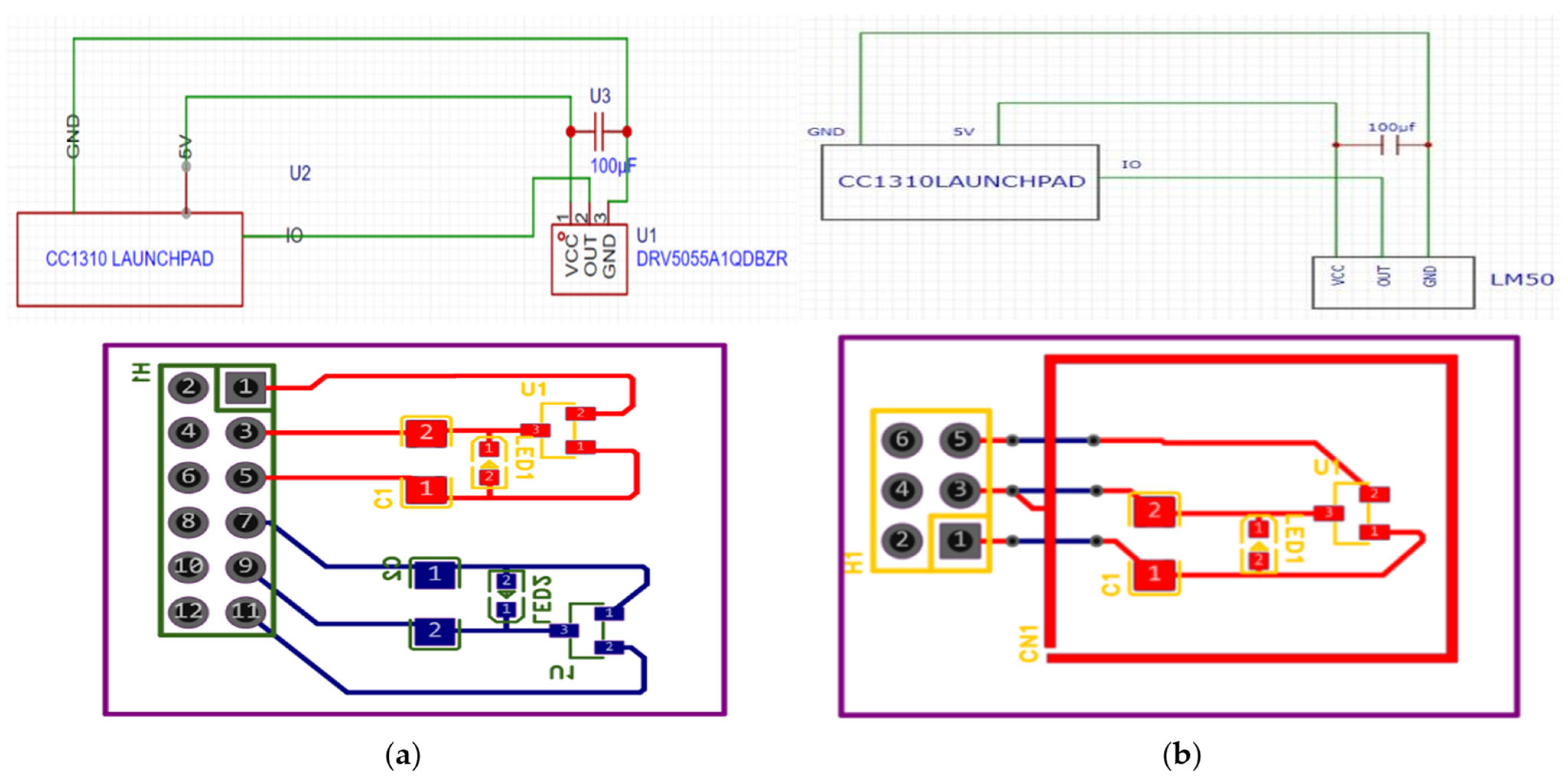

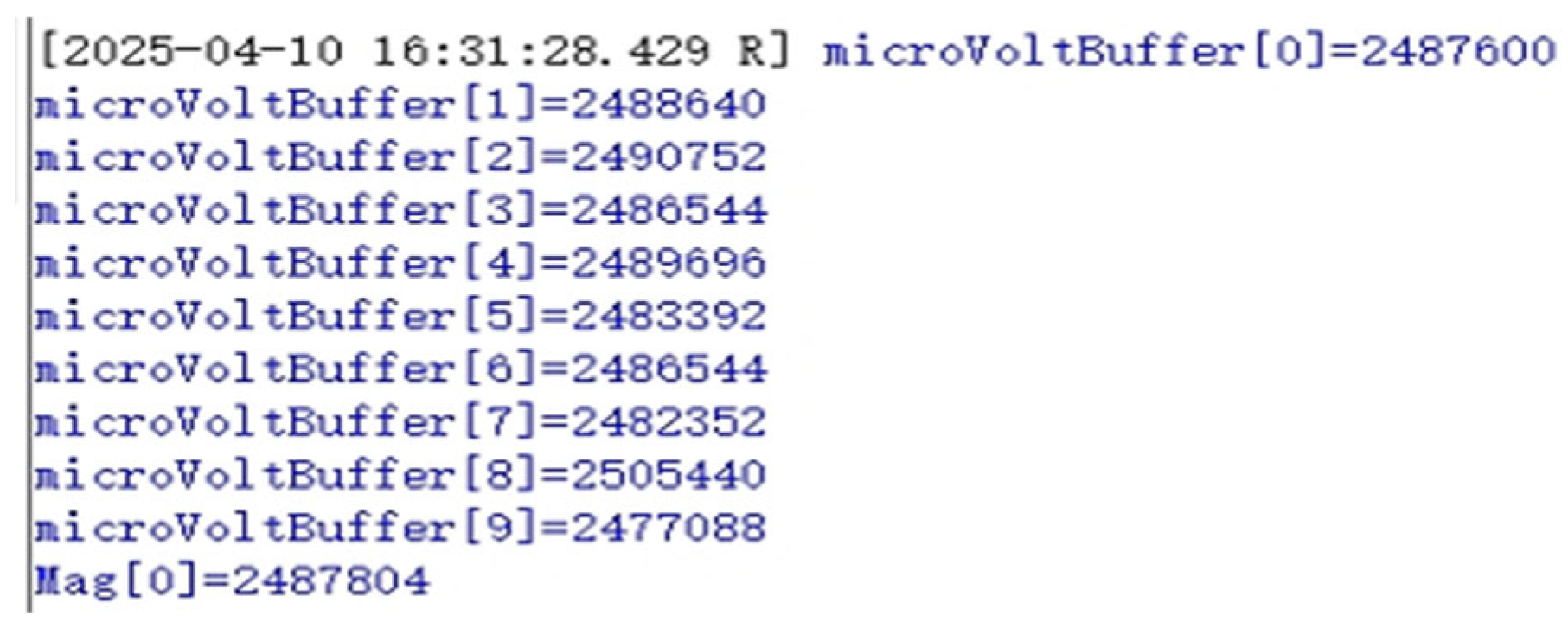

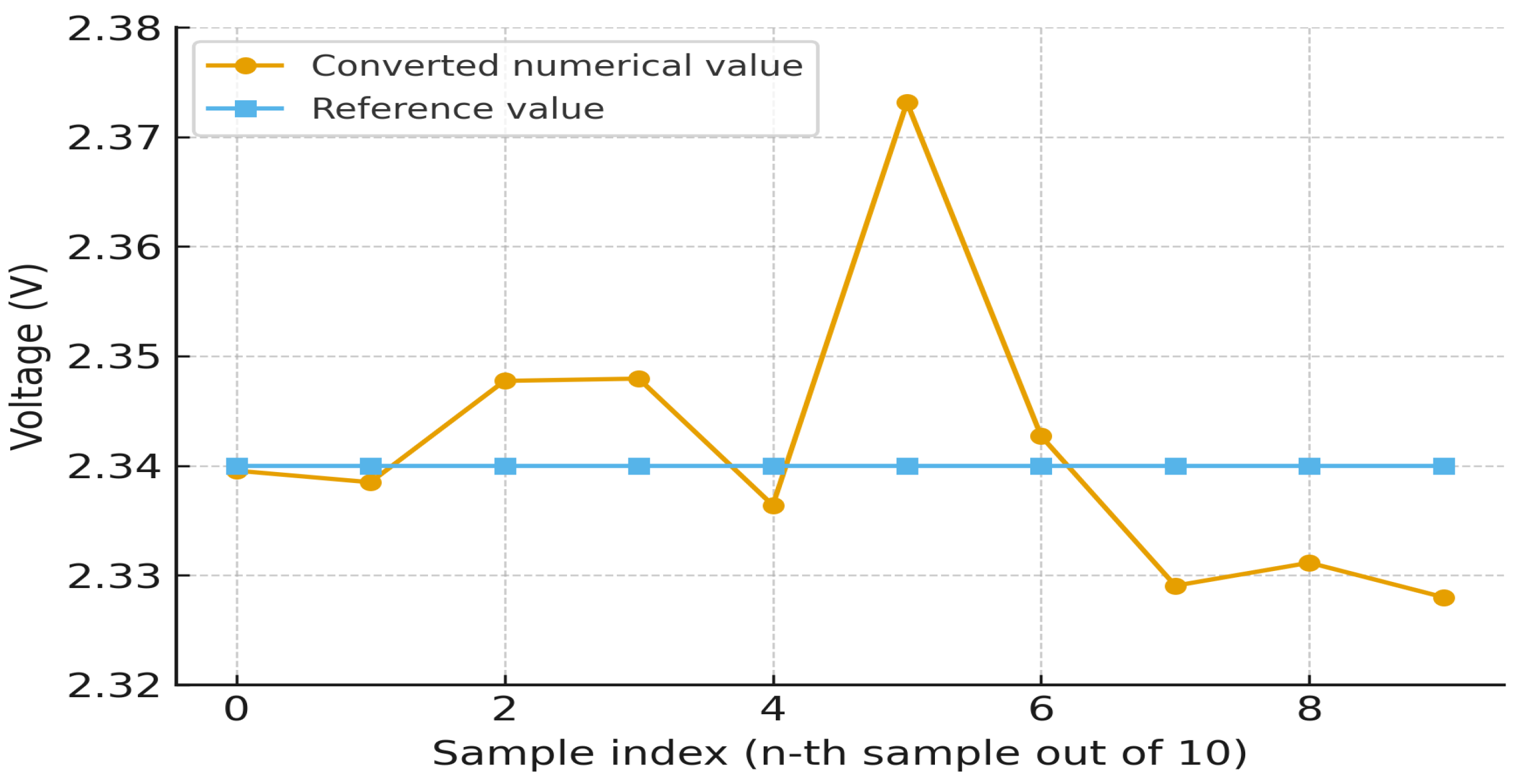

To validate the integrity of signal buffering and UART-based data transmission, the Display and UART functions were integrated into the transmission module firmware. During execution, the system first verifies the sampled data values stored in microVoltBuffer[q] across all active channels to ensure correct voltage acquisition (as shown in Figure 3). Following this, the processed magnetic field values in Mag[q] (i.e., the magnitude of the magnetic field vector q) are cross-checked against expected reference values. This two-stage verification ensures that both raw data capture and subsequent conversion into calibrated magnetic field values function reliably within the expected accuracy bounds.

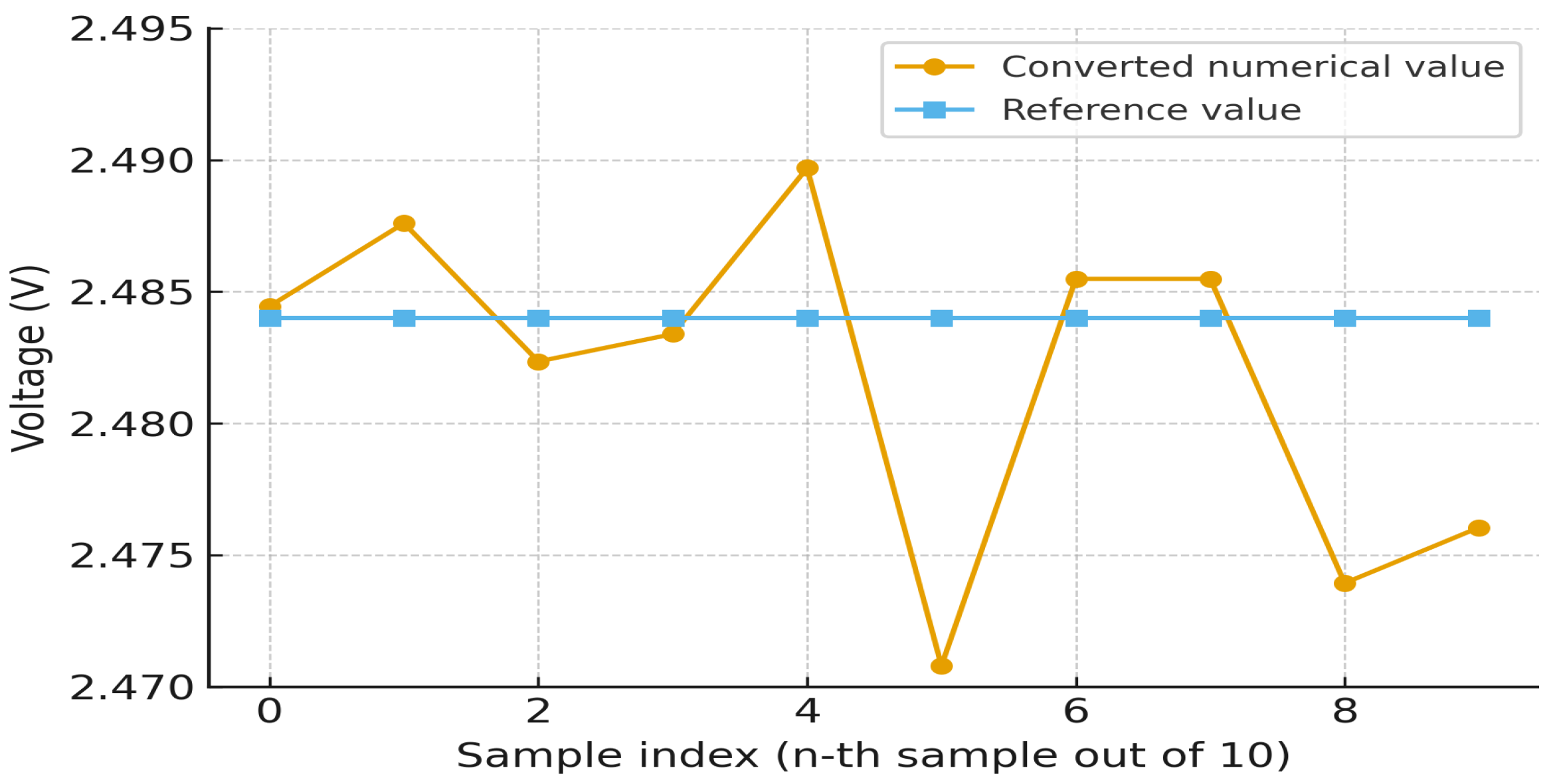

To establish a reference baseline for system accuracy under quiescent conditions, a static test was conducted in the absence of any external magnetic field. Under this condition, the output voltage of the DRV5055 Hall-effect sensor, measured via a calibrated multimeter, was consistently recorded at 2.48 V. According to international electromagnetic radiation monitoring standards, measurement error must remain within ±5% for power-frequency magnetic fields ranging from 30 Hz to 100 kHz and exceeding 200 nT at a nominal ambient temperature of 24 °C. This benchmark serves as the target threshold for evaluating the system’s sampling and conversion accuracy in subsequent tests.

To validate measurement consistency under applied field conditions, the system was tested using a permanent magnet as the excitation source. A calibrated low-frequency Gaussmeter was used to measure the true magnetic field strength, which was then compared with the system’s converted sensor readings. Results confirmed close agreement between the two sources, indicating accurate field reconstruction. The Hall sensor output remained consistent with the previously established 2.48 V baseline in zero-field conditions, confirming sensor linearity and stability across operational states.

Building on the previously established baseline and experimental validation, the sampling conversion module of the proposed electromagnetic field monitoring system demonstrates superior accuracy and stability. Under static, zero-field conditions, the maximum observed channel conversion error was limited to 1.17%, with an average of just 0.72%, well below the international ±5% tolerance threshold for power-frequency magnetic fields above 200 nT (Figure 4 shows the specific data measured by the system, and Table 2 shows the error calculated from the data). Under applied-field tests using a permanent magnet, the maximum observed error was 1.42%, while the other nine channels maintained deviations within 0.5% (Figure 5 shows the specific data measured by the system, and Table 3 shows the error calculated from the data).

These results confirm the effectiveness of the system’s optimized ADC calibration, multi-channel buffering strategy, and built-in sensor compensation in suppressing error propagation. In practice, each acquisition cycle was triggered every 0.5 s, buffering 10 × 200 samples per batch (2000 samples). While only two representative batches (with and without external fields) are shown in the figures for clarity, continuous long-duration sampling produced numerous batches, all of which consistently complied with the ±5% tolerance margin. This systematic validation demonstrates that the observed results are not isolated cases but representative of the complete data collection. Consequently, the system achieves reliable magnetic field measurement performance even under fluctuating or complex electromagnetic conditions, supporting its deployment in industrial-grade monitoring applications.

To comprehensively assess measurement accuracy, three error metrics were analyzed: Peak Error (PE, 1.42%), Mean Absolute Error (MAE, 0.72%), and Root Mean Square Error (RMSE, 0.76%), all derived from ten-channel measurements under static and applied-field conditions. These indicators are internationally recognized and recommended in metrology practice as complementary measures of accuracy and robustness, consistent with the guidelines outlined in ISO/IEC Guide 98-3 [17].

Quantization error: For a 12-bit ADC with a 0–3.3 V reference span, the least significant bit (LSB) corresponds to 3.3 V/4096 ≈ 0.000806 V (0.806 mV). According to the calibration slope (≈2550.9 μT/V), this voltage step corresponds to ≈2.0 μT, or about ±0.5% of full scale (5000 μT). Such step-induced deviations directly manifest as peak errors in discrete measurement systems, a phenomenon consistently characterized using PE in ADC evaluation standards [18].
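The step-size arithmetic above can be reproduced with a short sketch. The constant and function names are illustrative and are not taken from the firmware; only the numerical values (12 bits, 3.3 V span, 2550.9 μT/V slope) come from the text.

```python
# Values quoted in the text; the names are illustrative, not firmware identifiers.
ADC_BITS = 12
V_REF = 3.3                  # ADC reference span in volts
SLOPE_UT_PER_V = 2550.9      # calibration slope in microtesla per volt


def lsb_volts(v_ref: float = V_REF, bits: int = ADC_BITS) -> float:
    """Voltage represented by one ADC code step."""
    return v_ref / (2 ** bits)


def lsb_microtesla(slope: float = SLOPE_UT_PER_V) -> float:
    """Magnetic field change represented by one ADC code step."""
    return lsb_volts() * slope


print(f"LSB = {lsb_volts() * 1e3:.3f} mV, field step = {lsb_microtesla():.2f} uT")
```

The field step is simply the LSB voltage scaled by the calibration slope, so any change in reference voltage or resolution propagates linearly into the field resolution.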

Nonlinearity: During calibration against a Helmholtz coil, the linear regression residuals were analyzed. The maximum deviation of measured points from the fitted line was ≈15 μT, which corresponds to about ±0.3% of full scale. This provides an empirical estimate of the nonlinearity contribution. Similar to prior metrological studies, the average unsigned residual is best captured by MAE, which robustly represents average model deviation [19].

Thermal drift: The DRV5055 datasheet specifies a sensitivity drift of ~0.12%/°C. With the maximum 10 °C variation observed in laboratory tests, the expected drift is 0.12%/°C × 10 °C ≈ 1.2% of full scale. RMSE, which emphasizes the influence of occasional larger discrepancies, is particularly suited to quantify such drift-induced variations, as commonly applied in forecast and measurement accuracy assessments [20].

Summing these contributions gives an overall expected error on the order of 2%, which is consistent with the experimental results (PE = 1.42%, MAE = 0.72%, RMSE = 0.76%). These quantified metrics not only clarify the origin of the reported PE, MAE, and RMSE values but also enhance the transparency of system performance, aligning with general measurement uncertainty reporting practices [17] and with reporting standards such as ISO/IEC 17025 [21].
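For reference, the three metrics can be written in their standard textbook form. The following is a sketch under assumed notation: $B_{\mathrm{meas},i}$ (system reading), $B_{\mathrm{ref},i}$ (Gaussmeter reading), and $N$ (number of sampling points) are symbols chosen here for illustration and may differ from the original typeset equations.

```latex
\begin{align}
\mathrm{PE}   &= \max_{1 \le i \le N} \left| B_{\mathrm{meas},i} - B_{\mathrm{ref},i} \right| \\
\mathrm{MAE}  &= \frac{1}{N} \sum_{i=1}^{N} \left| B_{\mathrm{meas},i} - B_{\mathrm{ref},i} \right| \\
\mathrm{RMSE} &= \sqrt{\frac{1}{N} \sum_{i=1}^{N} \left( B_{\mathrm{meas},i} - B_{\mathrm{ref},i} \right)^{2}}
\end{align}
```

Expressed as percentages, each quantity is divided by a normalizing value (for example, the full-scale range) and multiplied by 100.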

Here, $B_{\mathrm{meas},i}$ represents the magnetic field value measured by the proposed system (in μT).

$B_{\mathrm{ref},i}$ represents the reference value measured by a standard high-precision Gaussmeter.

$N$ denotes the total number of sampling points.

While overall performance is robust, the remaining deviations can be attributed primarily to three sources: ADC quantization (≈±0.5%), sensor nonlinearity (≈±0.3%), and residual thermal drift (≈1.2%). These contributions collectively explain the observed error levels and are consistent with the theoretical error budget presented above. The error metrics derived from calibration confirm this agreement, providing both worst-case and average measures of accuracy. Furthermore, alternating field experiments verified that all deviations remained within the ±5% tolerance required by IEC standards under 50/60 Hz conditions, demonstrating compliance and robustness for real-world applications such as substations and industrial facilities.
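The error budget can be tallied with a few lines of code. This is a sketch only: the three percentages are those quoted in the text, the linear sum mirrors the summation used above, and the root-sum-square figure is an added comparison that assumes the contributions are independent; it is not a result reported in this study.

```python
import math

# Contributions quoted in the text, in percent of full scale.
CONTRIBUTIONS = {
    "adc_quantization": 0.5,
    "sensor_nonlinearity": 0.3,
    "thermal_drift": 1.2,
}


def linear_sum(contributions: dict) -> float:
    """Worst-case budget: all contributions add with the same sign."""
    return sum(contributions.values())


def root_sum_square(contributions: dict) -> float:
    """Combined budget under the assumption of independent contributions."""
    return math.sqrt(sum(v * v for v in contributions.values()))


print(f"linear sum = {linear_sum(CONTRIBUTIONS):.2f}% FS")
print(f"root-sum-square = {root_sum_square(CONTRIBUTIONS):.2f}% FS")
```

The linear sum bounds the worst case, while the root-sum-square value is the smaller figure usually quoted when the sources are uncorrelated.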

5.1.2. Performance Test of Wireless Transmission Module

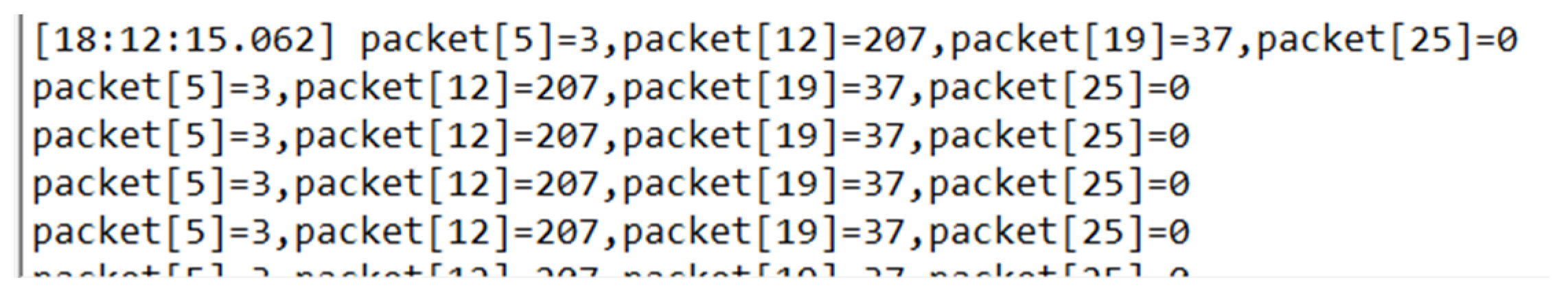

To verify the performance of the wireless transmission module, UART-based tests were carried out to examine the packaging and unpacking process. As illustrated in Figure 6, one representative sample was segmented into packet[q] = 3, packet[q+7] = 214, packet[q+14] = 37, and packet[q+21] = 0. In little-endian order (packet[q] being the least significant byte), these correspond to the binary string 00000000 00100101 11010110 00000011, written most significant byte first, which is equivalent to 2,479,619 in decimal. Compared with the nominal sensor-side value of 2,480,000 μV (2.48 V) ±5%, the deviation was only 0.015%, demonstrating that the Sub-1 GHz link correctly preserved all transmitted bytes without corruption. Across multiple repetitions of this test, no transmission-related errors were observed, further confirming the robustness of the wireless module.
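The unpacking step can be illustrated with a minimal sketch. It uses the byte values implied by the binary string quoted above (00000011, 11010110, 00100101, 00000000, least significant first) and the index spacing of 7 given in the text. The function names and the 28-byte packet layout are hypothetical and do not reproduce the actual firmware.

```python
def assemble_little_endian(byte_values) -> int:
    """Combine byte values (least significant first) into one unsigned integer."""
    return int.from_bytes(bytes(byte_values), byteorder="little")


def extract_sample(packet, q: int, stride: int = 7, width: int = 4) -> int:
    """Collect `width` bytes spaced `stride` apart, starting at index q."""
    return assemble_little_endian(packet[q + i * stride] for i in range(width))


def deviation_percent(value: float, nominal: float) -> float:
    """Relative deviation from the nominal value, in percent."""
    return abs(value - nominal) / nominal * 100.0


# Hypothetical 28-byte packet holding one sample at q = 0.
packet = [0] * 28
packet[0], packet[7], packet[14], packet[21] = 3, 214, 37, 0

sample = extract_sample(packet, q=0)
print(sample, f"{deviation_percent(sample, 2_480_000):.3f}%")
```

Reading the bytes with a fixed stride reflects an interleaved layout in which each byte plane of all channels is stored contiguously; this is an inference from the indices q, q+7, q+14, and q+21.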

Further analysis indicated that this small deviation originated not from the wireless link but from the sensor acquisition chain. Specifically, the DRV5055 Hall sensor, while incorporating built-in temperature compensation, still exhibits residual thermal drift when exposed to fluctuating ambient conditions and localized heating. In addition, EMI coupling in the analog front-end under substation conditions can subtly perturb the measured voltage prior to digitization. Together, these factors account for the millivolt-level offset (e.g., 2.480 V nominal vs. 2.476 V measured) observed in the experiments.

To comprehensively evaluate wireless reliability, four 24-hour continuous transmission experiments were conducted at a sampling rate of 2 Hz (≈172,800 packets per trial). Two tests were performed in a high-voltage power distribution room with strong electromagnetic interference, and two in an open athletic field. The results showed packet error rates (PER) of 0.92% and 0.87% in the distribution room and 0.41% and 0.36% in the open field. Although a PER approaching 1% may appear relatively high, the large sample size and high reporting frequency ensure statistical robustness. Moreover, because industrial magnetic fields evolve slowly, occasional packet drops do not affect the reconstruction of long-term field trends.
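The packet count and the statistical weight of the reported PER values can be checked with a short sketch. The standard-error estimate assumes independent packet losses (a simple binomial model), which is an assumption added here and not a claim made by the experiments.

```python
import math


def packets_per_trial(hours: float = 24, rate_hz: float = 2) -> int:
    """Number of packets sent in one trial at a fixed reporting rate."""
    return int(hours * 3600 * rate_hz)


def per_standard_error(per: float, n_packets: int) -> float:
    """Binomial standard error of a packet error rate (independent losses assumed)."""
    return math.sqrt(per * (1.0 - per) / n_packets)


n = packets_per_trial()
for per in (0.0092, 0.0087, 0.0041, 0.0036):
    se = per_standard_error(per, n)
    print(f"PER = {per:.2%}, lost ~ {per * n:.0f} packets, std. error ~ {se:.3%}")
```

With roughly 172,800 packets per trial, the standard error of each PER estimate is a few hundredths of a percent under this model, which is small compared with the difference between the two environments.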

Collectively, these findings confirm that the wireless module provides robust, byte-accurate, and distortion-free transmission, and that the main source of residual error lies in the sensor chain rather than in the data link. This robustness can be explained by the inherent characteristics of Sub-1 GHz narrowband communication, which provides strong penetration and interference tolerance, together with the relatively low transmission rate configured in this study (2 Hz). Operating well below the transceiver’s maximum throughput gives a wide performance margin, which explains why no transmission-related errors were observed during experiments.

5.2. Measurement System Integration Testing

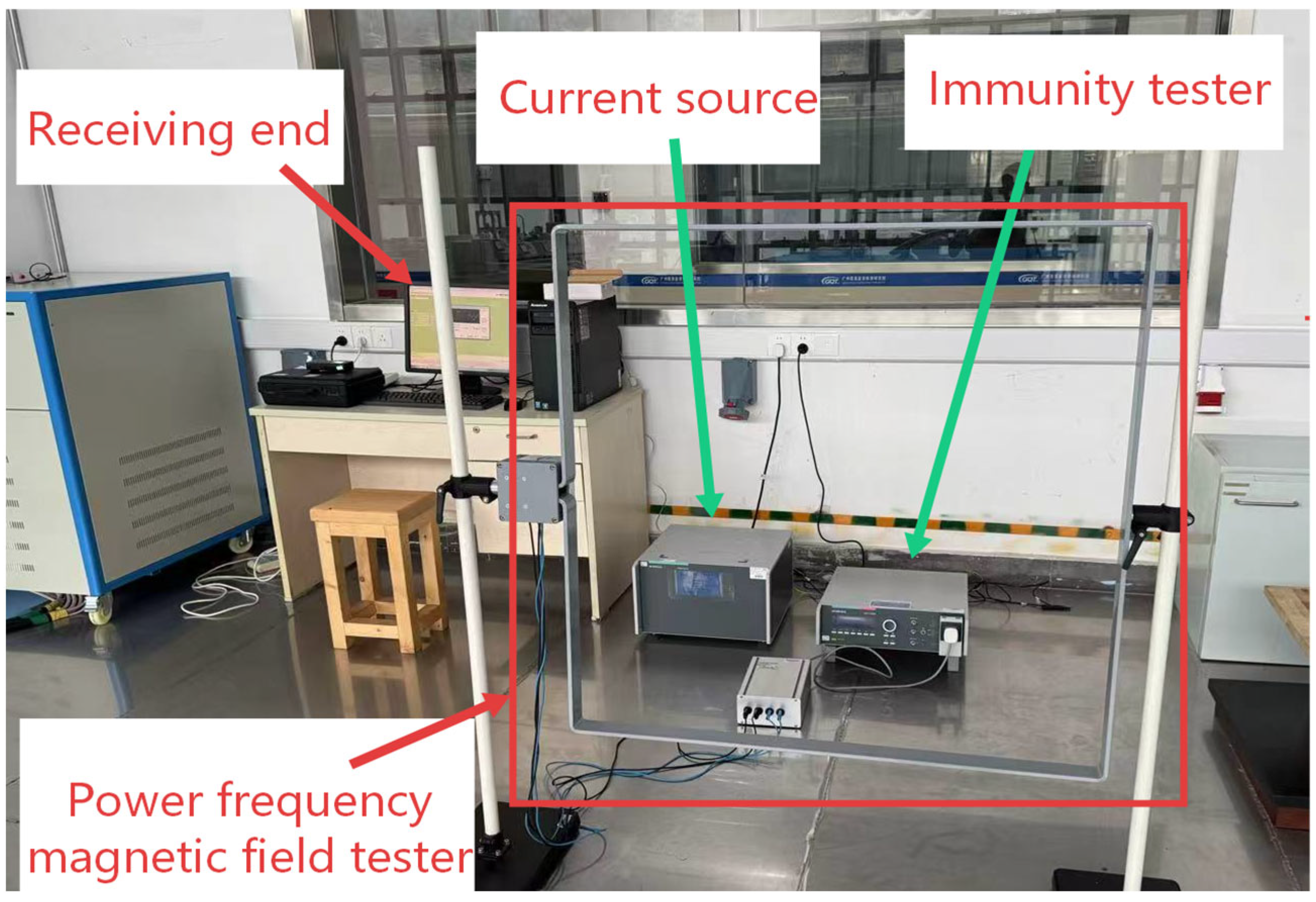

To assess the system’s performance under realistic electromagnetic conditions, power-frequency field testing was conducted at the Guangzhou Testing Institute. A dedicated experimental platform and supporting instrumentation were configured under the supervision of certified inspection personnel to meet the technical requirements for controlled magnetic field exposure.

An industrial-grade magnetic field generator, based on a multi-coil configuration, was used to produce a stable 50/60 Hz alternating magnetic field. The coil was driven by a high-current power supply capable of delivering up to 30 A. As the magnetic coil lacks active thermal management, it relies on passive heat dissipation, thereby limiting its continuous operation under high current excitation. As a result, the maximum allowable excitation current of 30 A was applied in short bursts of no more than one minute, followed by a mandatory cooldown period to prevent thermal overload.

To simulate external electromagnetic disturbances, an EMC immunity tester with a square coil energized by a programmable current source was used (Figure 7). The system’s receiving end was positioned at the coil center, ensuring uniform 50/60 Hz exposure, while all instruments were computer-controlled for synchronization. When driven at 30 A, the coil produced a stable alternating magnetic field of 220–250 μT, representative of substation environments. Field uniformity and calibration traceability were verified against IEC 61000-4-8 guidelines [21] and NIST standards.

The six-channel Hall-effect PCB probe measured three orthogonal axes with dual sensors per axis (X1, X2, Y1, Y2, Z1, Z2). Signals were buffered, filtered, and digitized by the CC1310’s 12-bit ADC before wireless transmission to the host for real-time visualization. The probe was mounted on a fixed support to minimize alignment errors.

System-level validation combined both static and alternating fields: a permanent bar magnet provided the DC component, while the calibrated coil supplied the AC field. Reference measurements from low-frequency and DC Gaussmeters confirmed that the proposed system maintained high accuracy and consistency under both conditions at 25–26 °C ambient temperature.

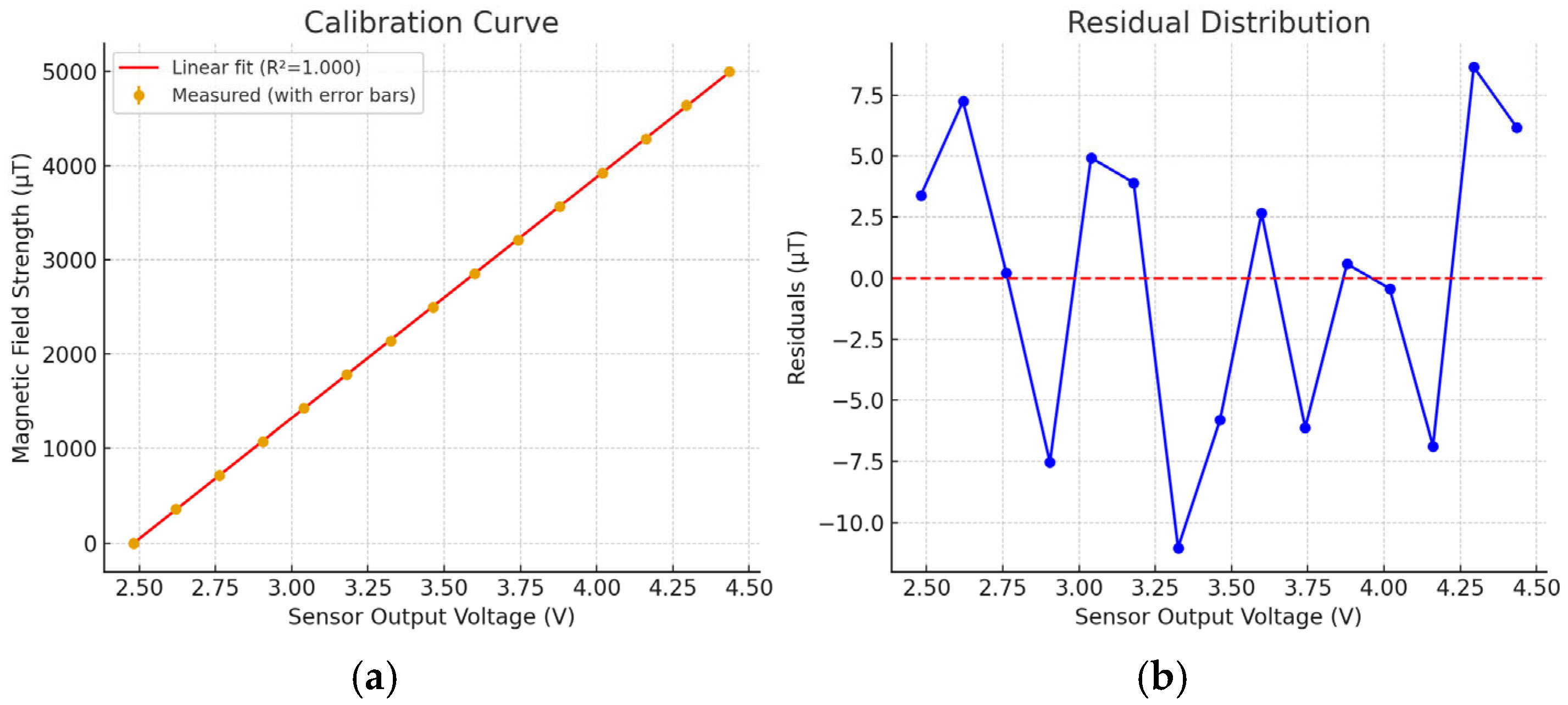

5.2.1. Calibration and Magnetic Field Conversion

To ensure high accuracy of magnetic field measurements, the system was calibrated by mapping sensor output voltage to known magnetic field strengths. A permanent magnet mounted on a micrometer-adjustable platform generated a controlled static magnetic field, while a high-precision Gaussmeter served as the reference. Simultaneously, the DRV5055 Hall sensor output voltage was recorded via ADC under identical field conditions. Ten calibration points were acquired by varying the magnet–sensor distance, covering the sensor’s linear response range. The calibration was performed under controlled ambient conditions (25–26 °C) to minimize thermal drift, with background magnetic noise measured below 0.5 μT. The reference Gaussmeter has a specified accuracy of ±0.5%, and this uncertainty was incorporated into the regression analysis to quantify its influence on the fitted calibration curve.

Figure 8 shows the calibration curve obtained from multiple (B, Vout) pairs. The data exhibit a strong linear relationship, with error bars reflecting repeatability across three independent measurement runs. A least-squares linear regression was applied, yielding the conversion function:

$$B = k\,(V_{\mathrm{out}} - V_{0})$$

where:

$B$ is the magnetic field strength (μT)

$V_{\mathrm{out}}$ is the sensor output voltage (V)

$V_{0}$ is the output at 0 μT (measured as 2.480 V)

$k$ is the slope of the fitted curve (calculated as 2550.9 μT/V)
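As an illustration, the conversion can be written as a pair of small functions. This sketch assumes the linear form implied by the listed quantities (field equals slope times the difference between output voltage and zero-field voltage) and assumes that the ADC code maps directly onto the 0–3.3 V span without any divider; the function names are hypothetical.

```python
V_ZERO = 2.480            # sensor output at 0 uT, in volts (from the text)
SLOPE_UT_PER_V = 2550.9   # fitted slope in uT/V (from the text)


def volts_to_microtesla(v_out: float,
                        v_zero: float = V_ZERO,
                        slope: float = SLOPE_UT_PER_V) -> float:
    """Convert a sensor output voltage to magnetic field strength in uT."""
    return slope * (v_out - v_zero)


def adc_code_to_microtesla(code: int, v_ref: float = 3.3, bits: int = 12) -> float:
    """Convert a raw ADC code to uT, assuming a direct 0..v_ref mapping."""
    return volts_to_microtesla(code * v_ref / (2 ** bits))


print(volts_to_microtesla(2.480), volts_to_microtesla(2.580))
```

A reading equal to the zero-field voltage maps to 0 μT, and each additional 0.1 V corresponds to about 255 μT with the stated slope.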

The regression analysis yielded an R² value of 0.9993, indicating excellent linearity across the 0–5000 µT calibration range. Residuals, computed as the difference between measured and regression-predicted values, fluctuate randomly around zero with no systematic bias, and remain confined within ±10 µT, corresponding to less than ±0.25% of full-scale deviation.
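A minimal ordinary least-squares sketch shows how the slope, zero-field voltage, R², and residuals relate to one another. It does not weight the points by the Gaussmeter uncertainty mentioned earlier, and the calibration points below are synthetic placeholders, not the measured data.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def residuals(xs, ys, slope, intercept):
    """Measured minus regression-predicted values."""
    return [y - (slope * x + intercept) for x, y in zip(xs, ys)]


def r_squared(xs, ys, slope, intercept):
    """Coefficient of determination of the fitted line."""
    mean_y = sum(ys) / len(ys)
    ss_res = sum(r * r for r in residuals(xs, ys, slope, intercept))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot


# Synthetic, perfectly linear calibration points (placeholders only).
volts = [2.48 + 0.2 * i for i in range(10)]
fields = [2550.9 * (v - 2.48) for v in volts]

k, b = fit_line(volts, fields)
v_zero = -b / k   # voltage at which the fitted field is zero
print(k, v_zero, r_squared(volts, fields, k, b))
```

Fitting field against voltage gives the slope directly in μT/V, and the zero-field voltage follows from the intercept.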

To provide a balanced evaluation of calibration accuracy, three error metrics were calculated from the residuals: the peak error (PE) reached 1.42% FS, capturing the worst-case deviation; the mean absolute error (MAE) was 0.72% FS, representing the average unsigned deviation; and the root mean square error (RMSE) was 0.76% FS, which weights occasional larger discrepancies more strongly. Together, these indicators confirm that the calibration is both accurate and statistically consistent.
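The three indicators can be computed from paired readings as follows. This is a sketch that normalizes by a 5000 μT full-scale range, in line with the % FS figures above; the function name and the sample values in the usage lines are hypothetical.

```python
import math


def error_metrics(measured, reference, full_scale: float = 5000.0):
    """Return (PE, MAE, RMSE) in percent of full scale."""
    errors = [abs(m - r) / full_scale * 100.0 for m, r in zip(measured, reference)]
    peak = max(errors)
    mean_abs = sum(errors) / len(errors)
    rms = math.sqrt(sum(e * e for e in errors) / len(errors))
    return peak, mean_abs, rms


# Hypothetical readings in uT, for illustration only.
pe, mae, rmse = error_metrics([100.0, 210.0, 290.0], [100.0, 200.0, 300.0],
                              full_scale=1000.0)
print(pe, mae, rmse)
```

By construction MAE ≤ RMSE ≤ PE, which matches the ordering of the reported values (0.72%, 0.76%, 1.42%).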

Nevertheless, the present analysis was restricted to aggregate regression indicators; more detailed per-axis regression plots, expanded residual diagnostics, and repeatability testing were not conducted and remain tasks for future work.

5.2.2. Performance Test Results

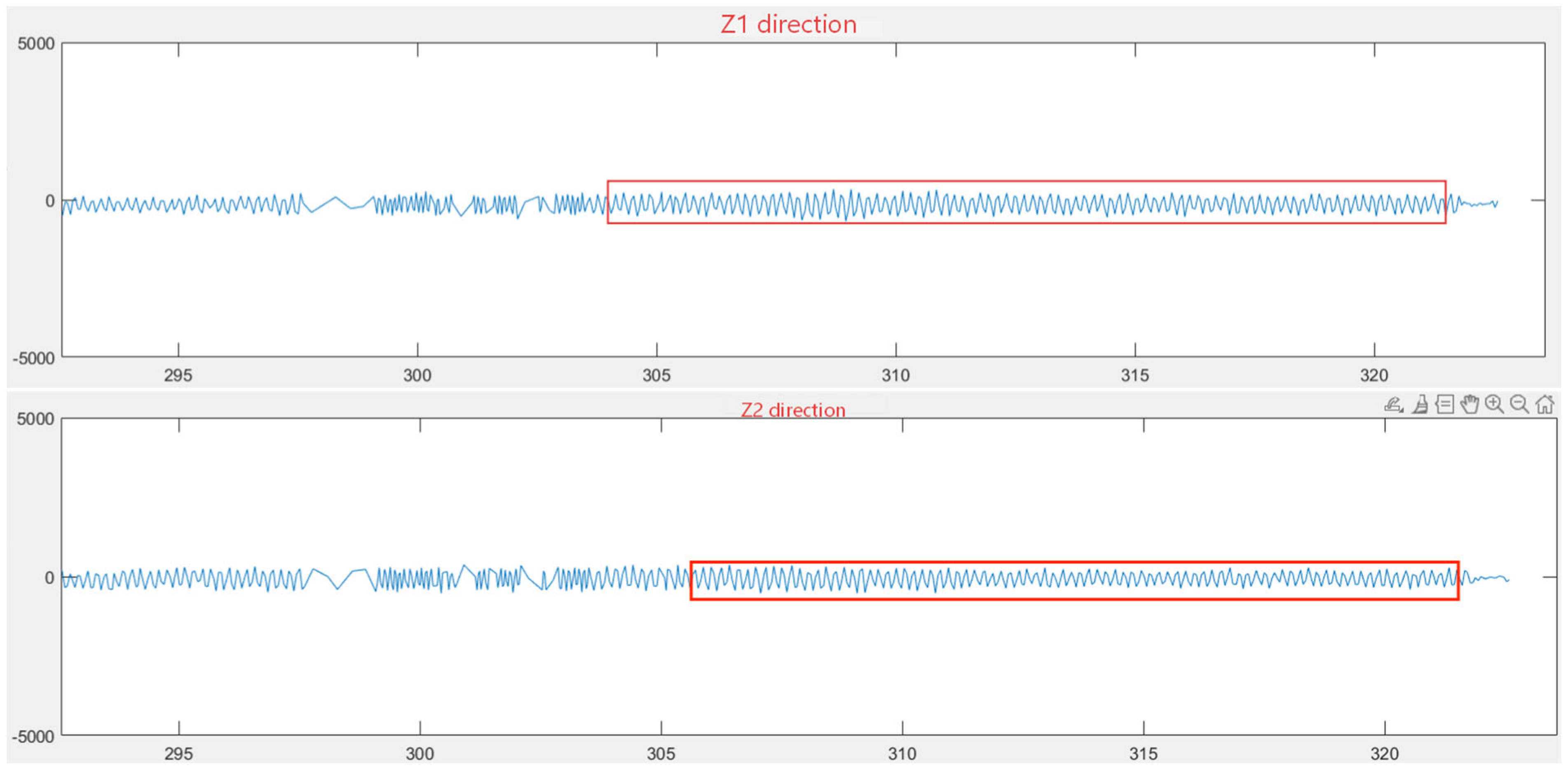

To evaluate accuracy under extreme electromagnetic stress, a high-intensity test was performed at the Guangzhou Quality Inspection Institute. A 30 A, 50 Hz current was applied to the magnetic field coil for one-minute intervals to simulate worst-case leakage conditions. A low-frequency Gaussmeter and the DRV5055 sensor were placed side by side near the coil to ensure consistent field exposure. The Z-axis channels (Z1, Z2), aligned with the coil axis, were selected for analysis, and data were logged via MATLAB.

Figure 9 shows the Z-direction waveforms, which exhibited symmetrical sinusoidal behavior consistent with the applied 50 Hz field. From the full time record, the interval between 304 and 320 s was selected for quantitative analysis because it corresponds to the period when the field source had reached stable operation after instrument tuning. Within this steady-state window, the measured peaks were +241.87 μT and −241.64 μT, closely matching the Gaussmeter reference of 230.3 μT. The resulting deviation of ~4.7% remains within acceptable limits for industrial monitoring applications. Moreover, the system’s internal baseline differed from the reference by less than 1%, confirming high precision and consistency even under continuous power-frequency exposure. While the complete dataset demonstrates overall system functionality, the focus on this stable segment ensures a representative evaluation of accuracy and robustness.

To further validate the spatial consistency and measurement reliability of the proposed system, the X- and Y-axis sensors were also employed to capture the power-frequency magnetic field under the same experimental conditions. Multiple measurements across both axes showed a maximum relative error within 2%, confirming consistent performance across all six sensing directions.

Given the sinusoidal and periodic nature of industrial power-frequency magnetic fields, we first examine the raw recordings to identify segments with a stable sinusoidal pattern. Figure 9 shows an unprocessed waveform; the red box highlights the stable sinusoidal portion that defines the analysis window. The processing pipeline and results are presented in Figure 10. After preprocessing and outlier removal, peak detection was applied to estimate the field magnitude over time, and the peak values clustered around ±241 μT. Following the statistical 3σ principle, this value was adopted as the reference for subsequent error calculation and comparative analysis.

In addition, six-axis vector synthesis was applied to derive the total magnetic field strength at each measurement point. This approach, commonly used in electromagnetic field analysis, computes a scalar resultant by aggregating the orthogonal vector components captured along the X, Y, and Z axes. The synthesized field magnitude is:

$$B_{\mathrm{total}} = \sqrt{B_x^{2} + B_y^{2} + B_z^{2}}$$

where $B_x$, $B_y$, and $B_z$ are the field components along the X, Y, and Z axes. This resultant offers a comprehensive and intuitive representation of the local electromagnetic environment. This method enhances spatial resolution and facilitates the subsequent identification of localized field intensity anomalies.
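A minimal sketch of the synthesis step is given below. It takes the scalar resultant as the Euclidean norm of the three orthogonal components. How the two sensors on each axis are combined is not stated in the text; averaging each pair is an assumption made here purely for illustration, and the function names are hypothetical.

```python
import math


def axis_component(sensor_a: float, sensor_b: float) -> float:
    """Combine the two sensors of one axis (assumed here: arithmetic mean)."""
    return (sensor_a + sensor_b) / 2.0


def total_field(x1, x2, y1, y2, z1, z2) -> float:
    """Scalar resultant of the three orthogonal components, in the input units."""
    bx = axis_component(x1, x2)
    by = axis_component(y1, y2)
    bz = axis_component(z1, z2)
    return math.sqrt(bx * bx + by * by + bz * bz)


print(total_field(3.0, 3.0, 4.0, 4.0, 0.0, 0.0))
```

Because the resultant is orientation-independent, it can be compared directly with a scalar Gaussmeter reading regardless of how the probe is rotated.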

This vector-based evaluation strategy will be further demonstrated in subsequent field deployments, such as in high-voltage electrical substations, where multi-directional magnetic interactions require integrated spatial field strength analysis.

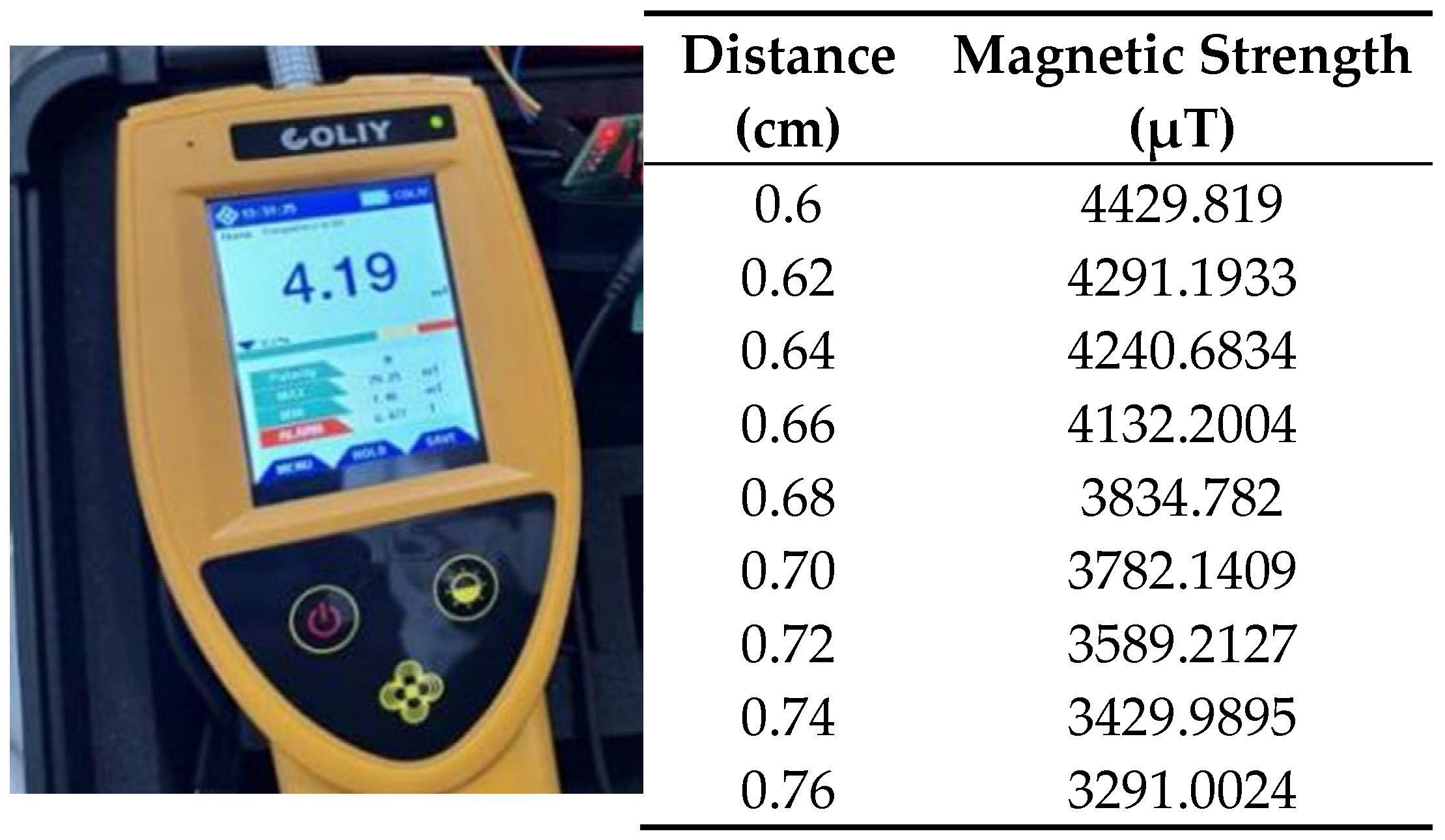

5.2.3. Constant Magnetic Field Measurement

To evaluate the spatial sensitivity and distance-dependent response of the proposed system in static magnetic field conditions, a controlled experiment was conducted using a permanent magnet as the field source. The test investigated the variation in measured magnetic field strength as a function of the probe’s distance from the magnet.

To ensure consistency in spatial reference, the DRV5055 sensor and a calibrated Gaussmeter probe were positioned at comparable distances from the magnetic source. A precision spiral micrometer fixture was used to clamp and gradually adjust the position of the magnet, allowing fine-grained control of displacement during measurement. As expected, the measured field strength increased with decreasing distance, demonstrating the system’s capability to capture spatial gradients in magnetic flux density.

This spatial verification process is critical for validating the accuracy of point-specific measurements and for ensuring that the system’s response is consistent with physical field distribution laws under static field conditions.

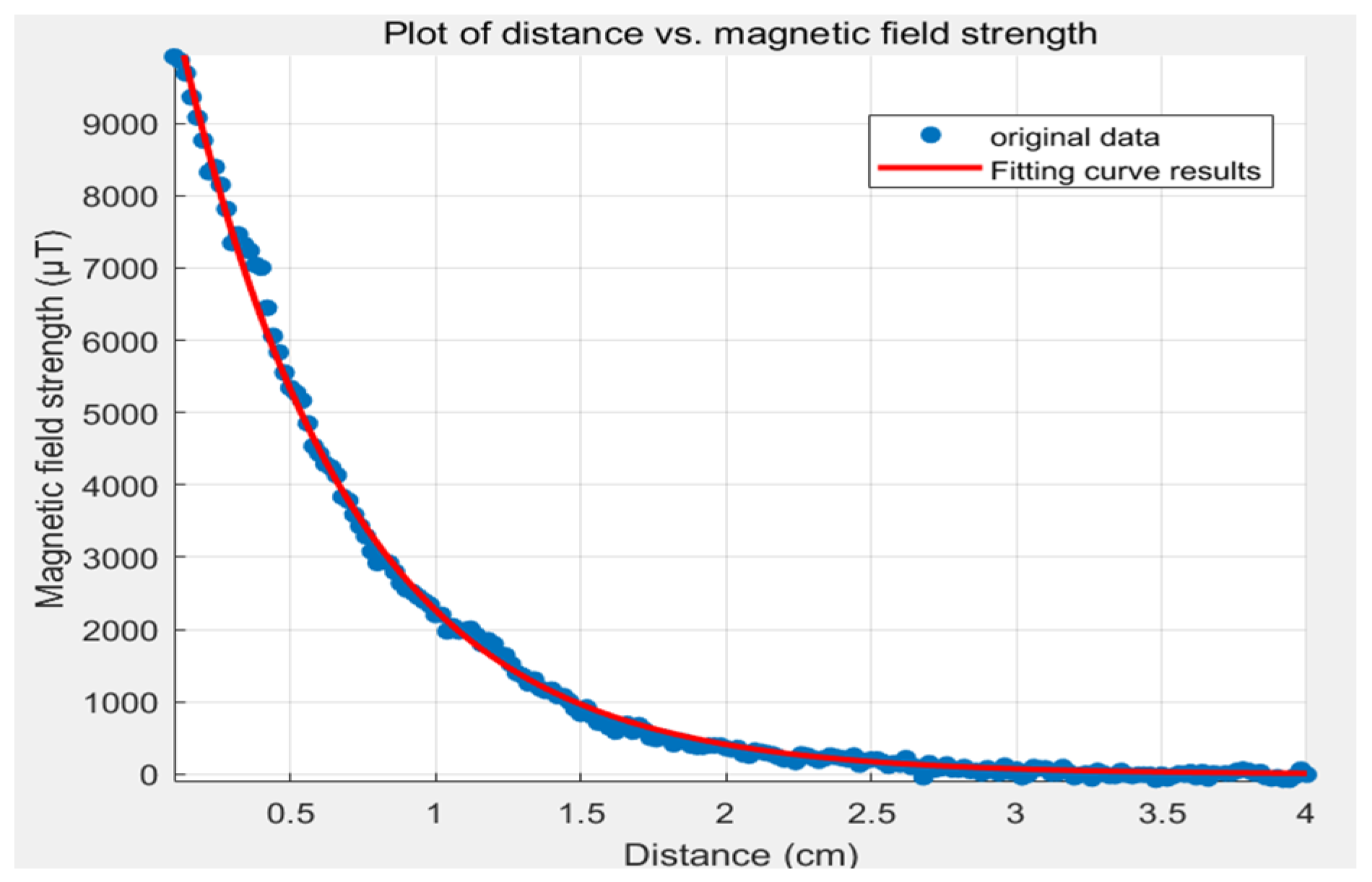

To characterize the spatial response of the system to static magnetic fields, a controlled experiment was conducted to measure the magnetic flux density as a function of distance from a permanent magnet. As shown in Figure 11, the measured data exhibit a typical inverse relationship between magnetic field strength and distance. In the Strong Field Region (0–1 cm), the magnetic field declines steeply from a peak value of 9921.30 μT at the closest measured point. Beyond 1 cm, the decay becomes more gradual, and the field strength approaches zero at approximately 3.84 cm.

According to magnetic dipole field theory, the magnetic flux density is inversely proportional to the cube of the distance from the magnetic source, expressed as:

$$B(r) \propto \frac{1}{r^{3}}$$

where $r$ is the distance from the magnetic source.

The experimental data were fitted using this relationship, and the resulting curve demonstrated strong agreement with the original measurements, validating the physical model and confirming the sensor’s accuracy in spatial gradient tracking.
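One way to carry out such a fit is a one-parameter least-squares estimate of the constant C in B = C / r³. The sketch below uses the closed-form solution for this model; the function names and the sample points are hypothetical, and the fitting procedure actually used for the reported curve may differ.

```python
def fit_inverse_cube(distances, fields) -> float:
    """Least-squares estimate of C in the model B = C / r**3."""
    numerator = sum(b / r ** 3 for r, b in zip(distances, fields))
    denominator = sum(1.0 / r ** 6 for r in distances)
    return numerator / denominator


def predict(c: float, r: float) -> float:
    """Field predicted by the inverse-cube model at distance r."""
    return c / r ** 3


# Synthetic points generated from C = 1000 (placeholder data, arbitrary units).
r_values = [0.5, 1.0, 2.0, 3.0]
b_values = [1000.0 / r ** 3 for r in r_values]

c_hat = fit_inverse_cube(r_values, b_values)
print(c_hat, predict(c_hat, 1.5))
```

Minimizing the sum of squared differences between B_i and C / r_i³ with respect to C gives C = Σ(B_i r_i⁻³) / Σ(r_i⁻⁶), which is what the function evaluates.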

To evaluate absolute measurement accuracy, the system’s output was compared to reference readings from a calibrated Gaussmeter. At a distance of 0.66 cm, the Gaussmeter reported a field strength of 4.13 mT, while the DRV5055-based system measured 4.10 mT, yielding a relative error of approximately 0.7% (Figure 12). This level of accuracy complies with the requirements for static magnetic field monitoring and confirms the system’s capability to capture distance-dependent field variations with high fidelity.

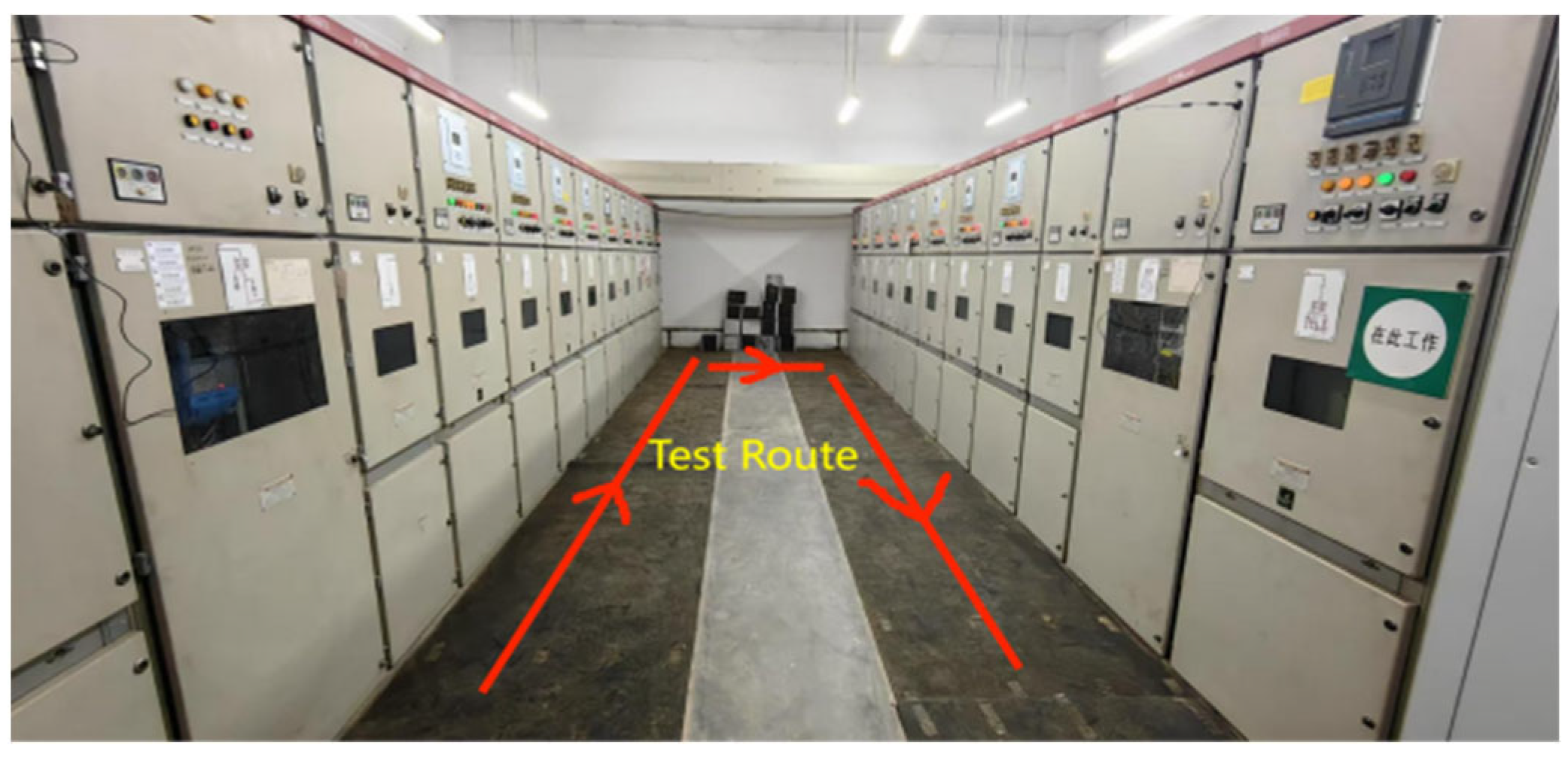

5.3. Field Testing

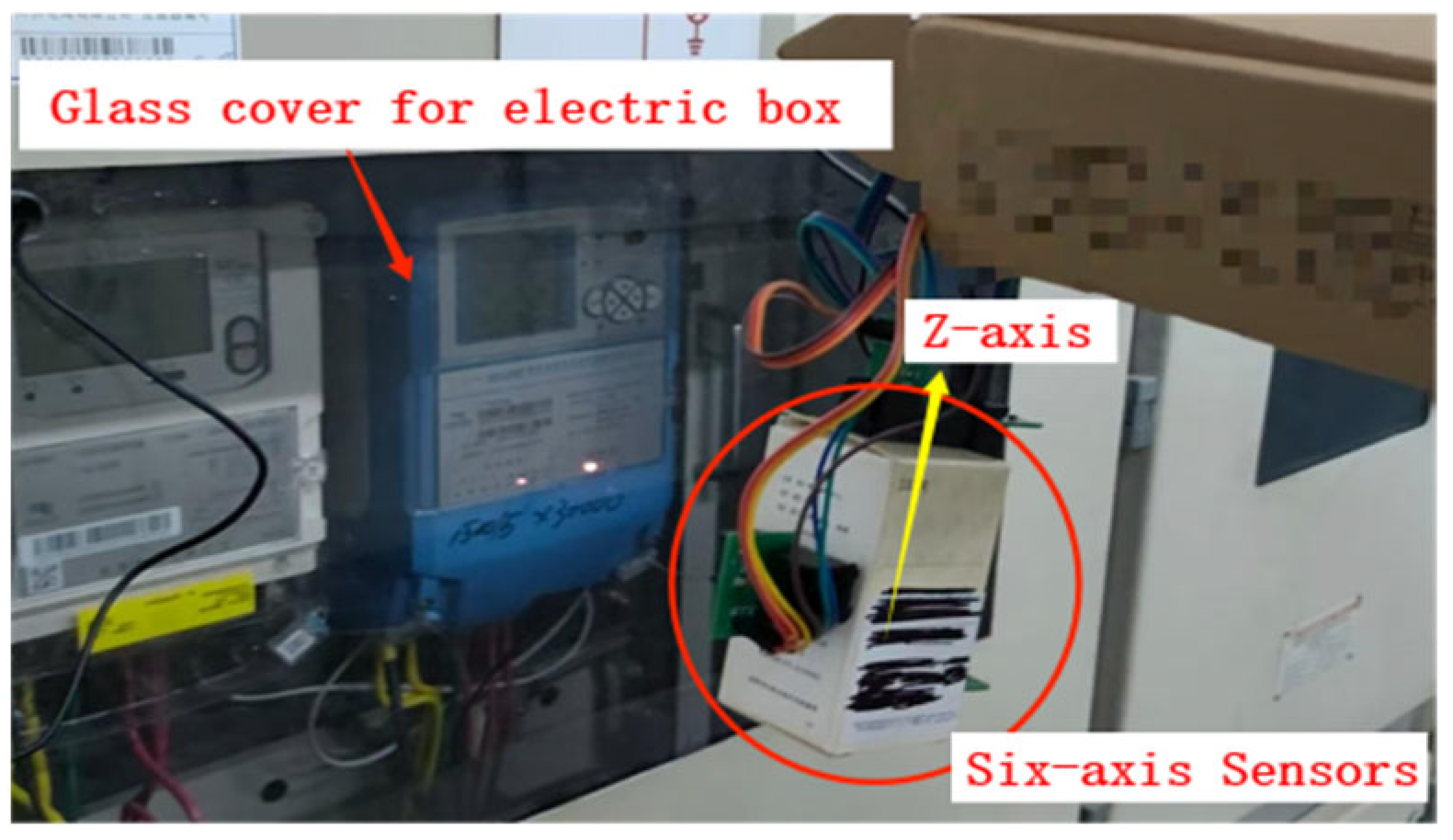

To assess the system’s practical applicability, an in situ electromagnetic field monitoring test was conducted within a high-voltage power distribution room. The experiment simulated mobile environmental sensing by traversing a U-shaped path (as shown in Figure 13) along the central aisle of the facility, maintaining a constant speed and fixed sensor height.

Preliminary site inspection identified the glass covers of high-voltage switchgear cabinets as primary emission sources of electromagnetic radiation. Accordingly, the sensor module was aligned to the height of these glass panels during the measurement process to capture representative field intensity. As the autonomous vehicle platform for mobile deployment is still under development, the sensor system was manually transported along the path to emulate mobile monitoring behavior.

Throughout the test, magnetic field data were wirelessly transmitted in real time to the host computer, where the data were logged and analyzed. The processed results enabled spatial mapping of the magnetic field strength within the facility and were used to evaluate compliance with established safety exposure limits. This field validation confirms the system’s capability to operate reliably in complex electromagnetic environments and supports its future integration into automated inspection platforms for substations and high-voltage infrastructure.

To complement the spatial field mapping, Figure 14 presents representative raw time-series data collected during the traversal. The top panel shows real-time ambient temperature, which remained stable at approximately 30 °C, indicating minimal influence from thermal drift during the test. The middle and bottom panels correspond to the magnetic field components along the X1 and X2 directions, respectively. Both traces exhibit short-term fluctuations and occasional transient peaks, which are attributed to switching operations and load variations in the distribution room. Importantly, these fluctuations reflect genuine environmental dynamics rather than sensor noise, as confirmed by repeated measurements. The stable temperature alongside reproducible magnetic field patterns demonstrates that the system can capture both steady-state and transient features of the electromagnetic field, thereby providing useful insights into operational uncertainties in practical monitoring scenarios.

Following data acquisition, time-series magnetic field measurements and corresponding temperature readings were exported from the host system. To ensure data quality and compliance with electromagnetic field monitoring standards, an outlier removal process was applied using the Interquartile Range (IQR) method. Data processing and anomaly filtering followed IEEE C95.3.1-2010 [22], which recommends non-parametric statistical methods (e.g., IQR) for EMF measurements.

Nevertheless, several limitations should be acknowledged. First, the raw time-series data were only partially reported, and the sampling resolution may not fully capture rapid transient events; higher-frequency logging will be adopted in future work. Second, while temperature stability was confirmed, other environmental confounders such as humidity, variable load levels, and switching sequences were not systematically analyzed. Finally, the field deployment covered only a limited operational subset of conditions, and extended long-term trials across multiple substations are still required. These improvements will be the focus of subsequent studies.

Analysis of six-axis magnetic field data revealed that all channels remained within expected operational bounds, with the exception of the Z2 axis, which exhibited outliers potentially caused by transient environmental interference. The IQR-based filtering procedure was applied to Z2 as follows:

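➀ Quartile Calculation:

With 120 data points along the Z2 axis, the first (Q1) and third (Q3) quartiles were computed from the values sorted in ascending order.

➁ Outlier Threshold Determination:

Data points outside the following bounds were identified as outliers:

$$\left[\,Q_1 - 1.5\,\mathrm{IQR},\; Q_3 + 1.5\,\mathrm{IQR}\,\right], \qquad \mathrm{IQR} = Q_3 - Q_1$$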

In this case, data values with Z2 < −366.44 μT were excluded from further analysis.

To generalize this procedure, an interquartile range (IQR)-based data cleaning strategy was adopted. For each measurement window, the first (Q1) and third quartiles (Q3) of the dataset were calculated, and the IQR was defined as Q3 − Q1. Data points falling outside the interval [Q1 − 1.5·IQR, Q3 + 1.5·IQR] were classified as outliers and removed. The 1.5·IQR rule, originally proposed by Tukey, is a widely accepted statistical criterion that balances robustness against extreme outliers with preservation of genuine fluctuations. In our datasets, this threshold typically excluded less than 2% of samples, and the removal did not change the mean or RMS values by more than 0.1%. Thus, the adopted IQR-based cleaning effectively suppresses spurious EMI spikes and ADC glitches while ensuring unbiased long-term statistical results.
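The cleaning rule can be sketched in a few lines using the Python standard library. The quartile interpolation method is an assumption (several conventions exist and the text does not specify one), and the function name and sample values are hypothetical.

```python
import statistics


def iqr_filter(values, k: float = 1.5):
    """Remove points outside [Q1 - k*IQR, Q3 + k*IQR]; return kept data and bounds."""
    # "inclusive" treats the data as the full population; this choice is an assumption.
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    kept = [v for v in values if lower <= v <= upper]
    return kept, (lower, upper)


# Hypothetical window with one obvious spike.
window = [1, 2, 3, 4, 5, 6, 7, 8, 9, 100]
cleaned, bounds = iqr_filter(window)
print(cleaned, bounds)
```

Because the bounds depend only on the quartiles, a single large spike barely shifts them, which is why the rule is robust against the very outliers it is meant to remove.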

After filtering, the remaining data were used to recalculate magnetic field intensity metrics. The cleaned results are summarized in Table 4, confirming improved data stability and consistency for subsequent interpretation.

The design and validation of this electromagnetic field monitoring system were conducted with close reference to internationally recognized electromagnetic field (EMF) testing standards. Specifically, IEC 61000-4-8 defines the required procedures for evaluating immunity to power-frequency magnetic fields (50/60 Hz) [19], and it was used as a guiding standard for setting up uniform field conditions during testing.

An analysis of the raw (pre-cleaning) data revealed two primary contributors to the observed anomalies in the Z-axis magnetic field measurements.

First, although the power house employs a multi-point grounding scheme, the impedance mismatch across different grounding nodes leads to non-uniform current return paths. This condition gives rise to circulating eddy currents in surrounding metallic structures, which in turn induce localized vertical magnetic fields, particularly near conductive enclosures and cable trays (Figure 15).

Second, the elevated Z-axis readings relative to the X- and Y-axis components are attributed to the superposition of spatial field contributions from vertically arranged high-voltage equipment. The layout of transformers, busbars, and switchgear within the facility contributes to a predominantly vertical magnetic flux distribution, which aligns with the orientation of the Z-axis sensing element. As a result, constructive magnetic field superposition may amplify Z-direction measurements in specific regions.

These findings validate the use of axis-specific filtering techniques and justify the application of IQR-based cleaning to isolate and remove localized distortions in the Z-axis data channel.

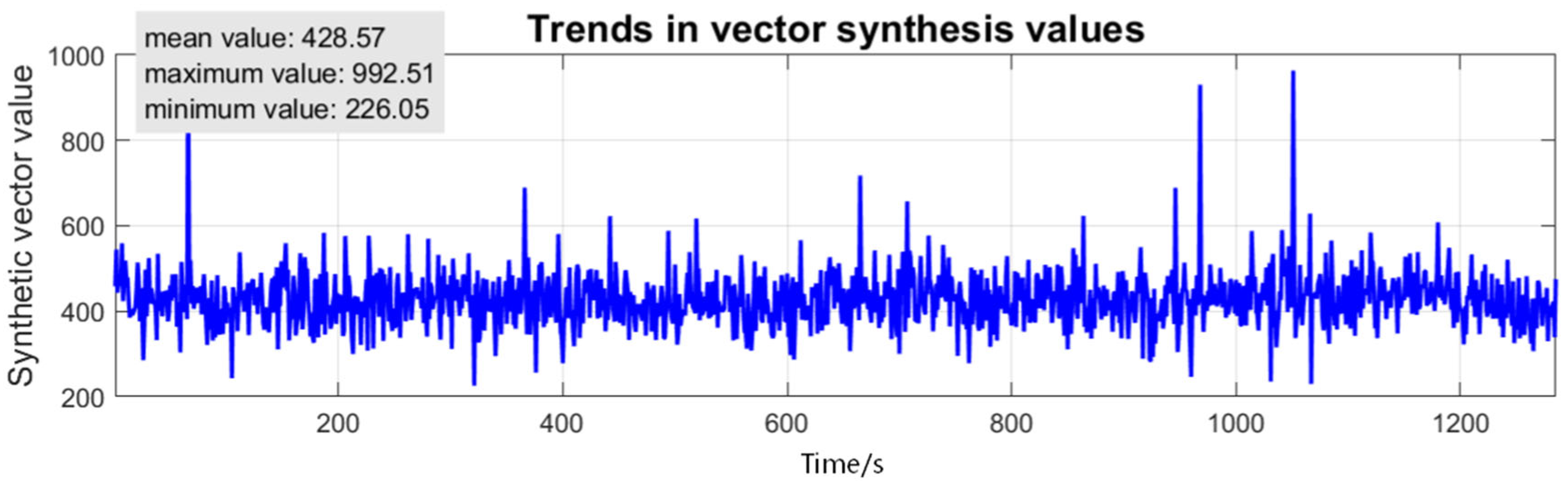

To verify the system’s measurement accuracy in practical scenarios, a calibrated Gaussmeter was used to measure the ambient magnetic flux density within the high-voltage power room. The reference field strength was recorded as 421.8 μT. In parallel, the six-axis composite vector magnitude measured by the proposed system averaged 428.57 μT (as shown in Figure 16), yielding a relative error of only 1.66%.

This minimal deviation confirms the effectiveness of the vector synthesis approach and highlights the system’s ability to capture real-time magnetic field distributions with high precision. Moreover, the successful deployment and measurement in a complex electromagnetic environment further validate the system’s dynamic responsiveness and operational feasibility for practical power infrastructure monitoring applications.