1. Introduction

In aircraft manufacturing, operation, and maintenance environments, residual foreign object debris (FOD) poses significant threats to flight safety and product quality, potentially causing failures such as short circuits, jams, and blockages during aircraft operation. Traditional methods currently used in industrial settings primarily rely on manual visual inspection and photographic documentation. However, these methods are highly dependent on human vigilance and are prone to missed detections due to fatigue and oversight. Consequently, the development of image-based automated FOD detection methods is of considerable significance for enhancing detection reliability and ensuring aviation safety.

This task falls within the domain of Image Anomaly Detection (IAD). In recent years, deep learning-based object detection and anomaly recognition methods have made remarkable progress in industrial visual inspection. Beyond general IAD frameworks, numerous studies have proposed approaches tailored to specific tasks such as aircraft FOD detection, industrial defect identification, and small-object localization. Roth et al. introduced an attention-based multi-scale feature fusion architecture that enables high-precision localization in semiconductor defect inspection, offering new insights for detecting subtle anomalies against complex backgrounds [1]. Chen et al. developed a Generative Adversarial Network (GAN)-based anomaly detection model that performs unsupervised anomaly segmentation using the reconstruction errors of normal samples, demonstrating strong performance in detecting missing fasteners in aerospace applications [2]. Wang et al. proposed an Augmented Feature Alignment Network (AFAN) that integrates intermediate-domain image generation with adversarial domain training. By leveraging multi-scale feature alignment and region-level domain-invariant feature learning, AFAN significantly outperforms existing methods in both similar and dissimilar unsupervised domain adaptation tasks, mitigating the problems of insufficient annotated samples and inadequate feature alignment [3]. Da et al. designed a Local and Global Feature Aggregation Network (LGFAN) that combines a Visual Geometry Group (VGG) backbone, attention mechanisms, and aggregation modules. By using features efficiently, LGFAN maintains competitive performance across five public datasets while improving computational efficiency, addressing a limitation of existing CNN-based salient object detection algorithms, which overemphasize multi-scale feature fusion at the expense of other critical characteristics [4].

At the same time, Wang et al. presented an enhanced YOLOv8-based model for detecting aircraft skin defects. By integrating Shuffle Attention++, the SIoU and Focal losses, a bidirectional feature pyramid network, and depthwise separable convolutions, together with data augmentation and class-balancing strategies, the model considerably improves detection accuracy, recall, and speed for small objects in complex environments, meeting the demands of high-precision real-time inspection [5]. Liao et al. developed a system that couples portable devices (e.g., smartphones and drones) with IoT technology for real-time aircraft skin defect inspection. Using a YOLOv9 model, the system achieves high recognition accuracy, demonstrating the potential of automated image-based detection in aviation maintenance [6]. Qiao et al. proposed a Unified Enhanced Feature Pyramid Network (UEFPN), which constructs a unified multi-scale feature domain and incorporates channel attention fusion modules to alleviate feature aliasing while enriching the contextual information of shallow features. UEFPN can be rapidly adapted to various models, and experiments confirm that it significantly improves small-object detection performance [7]. Hu et al. introduced a self-supervised learning framework based on Faster R-CNN that substantially reduces the need for manual annotation in steel surface defect detection by effectively leveraging unlabeled data, offering a scalable and general solution for industrial defect recognition under low annotation budgets [8]. Zhang et al. designed a lightweight model named LF-YOLO for detecting FOD on runways. By integrating high-resolution feature maps and employing a lightweight backbone and detection head, LF-YOLO achieves higher detection accuracy on small-object FOD datasets with fewer parameters than state-of-the-art methods [9]. Ye et al. proposed an improved YOLOv3-based algorithm for identifying FOD on airport runways. Through multi-scale detection and enhanced feature extraction, combined with image processing and deep learning, the method enables efficient autonomous recognition with a detection time of less than 0.2 s and shows promising environmental adaptability and engineering applicability [10]. Zhang et al. addressed the challenge of detecting small, inconspicuous FOD on runways, which often leads to false positives and missed detections, by comprehensively refining the YOLOv5 algorithm through structural optimization, new modules and loss functions, and alternative upsampling methods. Their approach improves on the baseline by 5.4% on the Fod_Tiny dataset and by 1.9% on the Micro_COCO dataset, validating the effectiveness and generalization capability of the proposed improvements [11]. Shan et al. surveyed traditional, radar-based, and AI-based techniques for FOD detection in critical areas such as airport runways, outlining the strengths and weaknesses of each and emphasizing the need for integrated radar and AI systems to improve detection performance, thereby guiding future research toward safer and more efficient operations [12]. Kumari et al. introduced an enhanced YOLOv8-based deep learning approach for runway FOD detection that achieves higher detection accuracy on the public FOD-A dataset (mAP50 of 99.022% and mAP50-95 of 88.354%) and outperforms conventional methods in complex environments [13]. Mo et al. proposed an intelligent detection method based on an improved YOLOv5 architecture. Trained on a dual-spectrum dataset, the model achieves real-time detection at 36.3 frames per second with 91.1% accuracy (a 7.4% improvement over the baseline), demonstrating both effectiveness and practical utility [14]. Yu et al. developed a lightweight FOD detection model based on YOLOv5s that significantly improves the accuracy and speed of small-FOD detection through optimized feature extraction, receptive field modules, and detection head design, providing a new solution for automated FOD inspection in civil aircraft assembly [15]. These studies offer diverse technical pathways for aircraft FOD detection, encompassing feature fusion, domain adaptation, small-object optimization, and real-time processing. Nevertheless, they also underscore the persistent challenges of achieving efficient, accurate, and robust detection in complex real-world scenarios.

In contrast to the aforementioned studies, which are predominantly focused on structured environments such as runways and aircraft skins, this paper addresses the challenge of FOD detection within enclosed aircraft compartments. Such environments present multifaceted difficulties including confined spaces, highly variable viewpoints, uneven illumination, and complex backgrounds, resulting in significantly more complicated detection conditions and a scarcity of large-scale annotated data. To tackle these challenges, we propose a registration-based Siamese network framework for FOD detection, aiming to resolve the critical problem of cross-view anomaly detection in complex interior spaces.

The proposed model is built upon a Siamese architecture and trained using pairs of normal images. To preserve spatial information, the network retains the first three convolutional blocks of ResNet, while incorporating spatial transformation modules to handle geometric variations between image pairs. A feature registration module is further integrated to prevent model collapse. During inference, anomalies are identified by quantifying the deviation between the features of the test image and the established normal distribution. Experimental results demonstrate that our model achieves significant improvements in both image-level and pixel-level AUC (Area Under the Curve) compared to baseline methods.

The main contributions of this work can be summarized as follows: (1) the construction of a dedicated dataset specifically designed for aircraft FOD detection; (2) the development of a novel detection framework that integrates Siamese networks with spatial transformations; (3) comprehensive validation of the proposed model, accompanied by an in-depth analysis of key factors influencing detection performance; and (4) demonstration of the model’s robustness through evaluation on additional datasets.

The remainder of this paper is organized as follows: Section 2 describes the materials and methods, Section 3 presents the experimental results and analysis, Section 4 provides a discussion, and Section 5 concludes the study.

2. Materials and Methods

2.1. Siamese Representation Learning

Siamese networks [16,17,18], which employ weight-sharing branches to process paired inputs, have become a cornerstone of unsupervised visual representation learning. Early methods predominantly used contrastive learning frameworks, such as SimCLR (A Simple Framework for Contrastive Learning of Visual Representations) [19] and MoCo (Momentum Contrast) [20], which maximize the similarity between augmented views of the same image while repelling negative pairs to prevent representation collapse. These approaches typically required large batch sizes or memory banks to maintain performance. Subsequently, alternative strategies were introduced: clustering-based methods such as SwAV (Swapping Assignments between Views) [21] assigned representations to prototypes through online clustering, while BYOL (Bootstrap Your Own Latent) [22] employed a momentum encoder in one branch to provide stable targets without relying on negative examples. These techniques collectively reinforced the assumption that complex mechanisms, such as negative sampling, large batches, or momentum encoders, were indispensable for avoiding collapse.
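To make the role of negative pairs concrete, here is a minimal plain-Python sketch of an InfoNCE-style contrastive loss, the family of objectives used by SimCLR and MoCo, for a single anchor embedding. The positive (another augmented view of the same image) competes against the negatives in a softmax, so the loss falls only when the positive is closer to the anchor than the negatives are. The temperature `tau=0.1` and the toy vectors are illustrative assumptions, not settings taken from the cited works.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchor, positive, negatives, tau=0.1):
    """InfoNCE loss for one anchor: -log of the softmax weight of the positive.

    The positive pair competes with every negative pair in the softmax
    denominator, so the loss decreases only when the positive is more
    similar to the anchor than the negatives are.
    """
    logits = [cosine(anchor, positive) / tau]
    logits += [cosine(anchor, n) / tau for n in negatives]
    # Numerically stable log-sum-exp over all logits.
    m = max(logits)
    log_denominator = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_denominator - logits[0]


anchor = [1.0, 0.0]
positive = [0.9, 0.1]                  # another augmented view of the same image
negatives = [[0.0, 1.0], [-1.0, 0.0]]  # views of other images
print(info_nce(anchor, positive, negatives))
```

SimCLR draws its negatives from the other images in the current batch and MoCo from a queue of previously encoded keys, which is why these methods depend on large batches or memory banks.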

A significant shift in this paradigm was introduced by SimSiam, which demonstrated that none of these components is fundamentally required. The architecture of SimSiam is notably simple: it maximizes the similarity between two augmented views using only a weight-shared encoder, a small predictor MLP (Multilayer Perceptron), and a stop-gradient operation. Critically, the removal of the stop-gradient operation leads to immediate model collapse, whereas its inclusion enables the model to achieve competitive accuracy on ImageNet. Although the predictor MLP is essential to the learning process, it does not need to converge fully. Similarly, while batch normalization aids in optimization, it is not the factor preventing collapse.

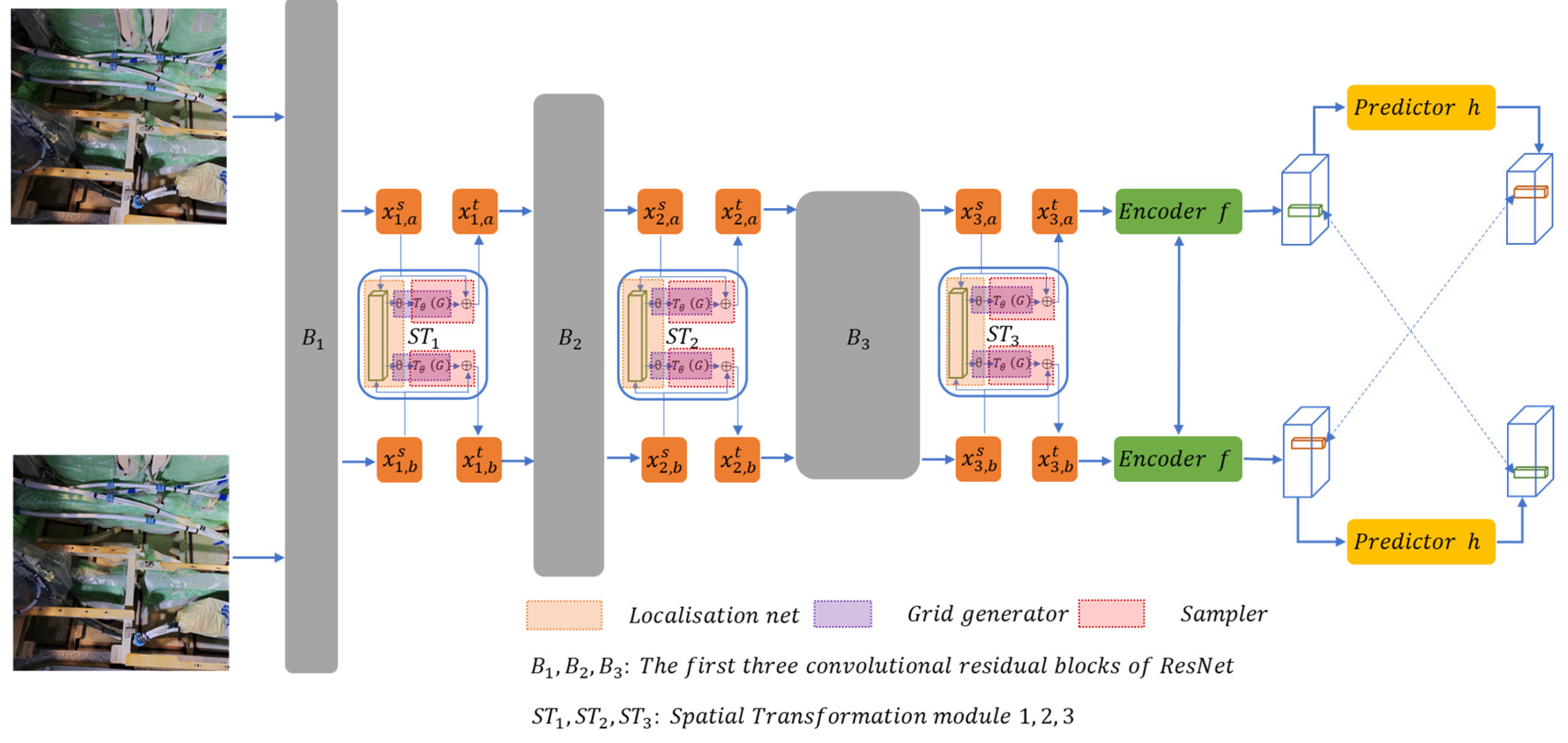

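As shown in Figure 1, the encoder $f$ processes both input views $x_1$ and $x_2$ under a weight-sharing mechanism, producing the feature representations $z_1 = f(x_1)$ and $z_2 = f(x_2)$. A prediction MLP head, denoted as $h$, is applied to the output of one view and aligns it with the representation of the other view, yielding $p_1 = h(f(x_1))$. Defining the two output vectors as $p_1$ and $z_2$, we minimize the negative cosine similarity between them:

$$\mathcal{D}(p_1, z_2) = -\frac{p_1}{\lVert p_1 \rVert_2} \cdot \frac{z_2}{\lVert z_2 \rVert_2}$$

The symmetrized loss is defined as:

$$\mathcal{L} = \frac{1}{2}\,\mathcal{D}\big(p_1, \operatorname{stopgrad}(z_2)\big) + \frac{1}{2}\,\mathcal{D}\big(p_2, \operatorname{stopgrad}(z_1)\big)$$

where $p_2 = h(f(x_2))$ and $\operatorname{stopgrad}(\cdot)$ denotes the stop-gradient operation, which treats its argument as a constant during backpropagation.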
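The negative cosine similarity and its symmetrized form can be checked numerically with the minimal sketch below, which assumes plain Python lists for the predictor outputs `p1`, `p2` and the encoder outputs `z1`, `z2`. It evaluates only the forward value: the stop-gradient changes which parameters receive gradients, not the loss value, so in an autodiff framework it would be implemented by detaching `z1` and `z2` from the computation graph (for example with `Tensor.detach()` in PyTorch).

```python
import math


def neg_cosine(p, z):
    """D(p, z) = -(p / ||p||_2) . (z / ||z||_2); -1 means perfectly aligned."""
    dot = sum(a * b for a, b in zip(p, z))
    norm_p = math.sqrt(sum(a * a for a in p))
    norm_z = math.sqrt(sum(b * b for b in z))
    return -dot / (norm_p * norm_z)


def symmetrized_loss(p1, p2, z1, z2):
    """0.5 * D(p1, stopgrad(z2)) + 0.5 * D(p2, stopgrad(z1)).

    Only the forward value is computed. The stop-gradient affects gradients,
    not values, so z1 and z2 are used directly here.
    """
    return 0.5 * neg_cosine(p1, z2) + 0.5 * neg_cosine(p2, z1)


# Toy vectors: z = f(x) from the shared encoder, p = h(z) from the predictor.
z1, z2 = [1.0, 2.0, 0.5], [1.1, 1.9, 0.4]
p1, p2 = [1.2, 1.8, 0.5], [0.9, 2.1, 0.6]
print(symmetrized_loss(p1, p2, z1, z2))
```

The loss reaches its minimum of -1 when each predictor output points in the same direction as the other view's encoder output.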

2.2. Spatial Transformation Module

The Spatial Transformation Module [23] addresses a fundamental limitation of Convolutional Neural Networks (CNNs): the lack of an efficient, learnable mechanism for spatial invariance to geometric input transformations such as translation, rotation, scaling, and warping. Although CNNs excel at tasks such as classification and detection, their ability to handle spatial variation depends primarily on fixed mechanisms such as small-kernel max-pooling, which are often inadequate for large or complex deformations. The Spatial Transformation Module provides a differentiable component that actively warps feature maps into a canonical form, thereby improving model robustness without requiring additional supervision.

The Spatial Transformation Module consists of three key components:

1. Localization Network: A sub-network (e.g., a convolutional neural network or a fully connected network) that estimates the transformation parameters $\theta$ from the input. It takes an input feature map $U \in \mathbb{R}^{H \times W \times C}$, where $W$ and $H$ denote the width and height and $C$ denotes the number of channels, and outputs $\theta = f_{\text{loc}}(U)$. The localization function $f_{\text{loc}}(\cdot)$ must include a final regression layer that outputs the transformation parameters $\theta$.

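2. Grid Generator: This module generates a sampling grid $\mathcal{T}_\theta(G)$ by applying the transformation parameters $\theta$ to a predefined regular target grid $G = \{G_i\}$, where $G_i = (x_i^t, y_i^t)$. It maps each coordinate in the output feature map back to the corresponding location in the input feature map. Assuming $\mathcal{T}_\theta$ represents a 2D affine transformation $A_\theta$, the pointwise mapping can be expressed as:

$$\begin{pmatrix} x_i^s \\ y_i^s \end{pmatrix} = \mathcal{T}_\theta(G_i) = A_\theta \begin{pmatrix} x_i^t \\ y_i^t \\ 1 \end{pmatrix} = \begin{bmatrix} \theta_{11} & \theta_{12} & \theta_{13} \\ \theta_{21} & \theta_{22} & \theta_{23} \end{bmatrix} \begin{pmatrix} x_i^t \\ y_i^t \\ 1 \end{pmatrix}$$

where $(x_i^t, y_i^t)$ are the target coordinates of the regular grid in the output feature map, $(x_i^s, y_i^s)$ are the source coordinates in the input feature map that define the sample points, and $A_\theta$ is the affine transformation matrix. More generally, the transformation can be affine (6 parameters), projective (8 parameters), or a thin-plate spline (16+ parameters). Crucially, the Spatial Transformation Module is differentiable end-to-end, so it can be integrated into CNNs and trained with standard backpropagation.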

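3. Differentiable Sampler: Warps the input feature map by bilinear interpolation (or another sampling kernel) at the sampled coordinates, ensuring that gradients flow back to $\theta$. To perform a spatial transformation of the input feature map, the sampler takes the set of sampling points $\mathcal{T}_\theta(G)$, along with the input feature map $U$, and produces the sampled output feature map $V$:

$$V_i^c = \sum_{n=1}^{H} \sum_{m=1}^{W} U_{nm}^c \, k(x_i^s - m)\, k(y_i^s - n)$$

where $k(\cdot)$ is the sampling kernel, $U_{nm}^c$ is the value at location $(n, m)$ in channel $c$ of the input, and $V_i^c$ is the output value for pixel $i$ at location $(x_i^t, y_i^t)$ in channel $c$. For bilinear interpolation, $k(d) = \max(0, 1 - |d|)$.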
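The grid generator and the bilinear sampler described in the list above can be sketched in a few lines of plain Python for a single-channel feature map. This is an illustrative sketch under stated assumptions: coordinates are in pixel units with x as the column and y as the row (the original spatial transformer formulation normalizes coordinates to [-1, 1]), and source points falling outside the input map contribute zero.

```python
import math


def affine_grid(theta, out_h, out_w):
    """Grid generator: map every target pixel (x_t, y_t) to a source point (x_s, y_s).

    theta is the 2x3 affine matrix [[t11, t12, t13], [t21, t22, t23]].
    Coordinates are in pixel units (x = column, y = row).
    """
    grid = []
    for yt in range(out_h):
        row = []
        for xt in range(out_w):
            xs = theta[0][0] * xt + theta[0][1] * yt + theta[0][2]
            ys = theta[1][0] * xt + theta[1][1] * yt + theta[1][2]
            row.append((xs, ys))
        grid.append(row)
    return grid


def bilinear_sample(U, grid):
    """Sampler: V_i = sum_{n,m} U[n][m] * k(x_s - m) * k(y_s - n), k(d) = max(0, 1 - |d|).

    U is a single-channel H x W map; source points outside U contribute zero.
    """
    H, W = len(U), len(U[0])
    V = []
    for row in grid:
        out_row = []
        for xs, ys in row:
            # Only the four integer neighbors of (xs, ys) can have non-zero weight.
            m0, n0 = math.floor(xs), math.floor(ys)
            value = 0.0
            for n in (n0, n0 + 1):
                for m in (m0, m0 + 1):
                    if 0 <= n < H and 0 <= m < W:
                        weight = max(0.0, 1 - abs(xs - m)) * max(0.0, 1 - abs(ys - n))
                        value += U[n][m] * weight
            out_row.append(value)
        V.append(out_row)
    return V


U = [[0.0, 1.0, 2.0],
     [3.0, 4.0, 5.0],
     [6.0, 7.0, 8.0]]
# Translation by half a pixel along x: x_s = x_t + 0.5.
theta = [[1.0, 0.0, 0.5], [0.0, 1.0, 0.0]]
V = bilinear_sample(U, affine_grid(theta, 3, 3))
print(V[0])  # [0.5, 1.5, 1.0]
```

With the identity matrix the output reproduces the input exactly; a fractional translation blends neighboring pixels, and because the blending weights vary continuously with the source coordinates, gradients can reach the transformation parameters.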

2.3. Anomaly Scoring

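During inference, the registered features of each evidence retention image are compared with the corresponding normal distribution to detect anomalies; test samples that fall outside this distribution are regarded as anomalous. Given the estimated normal distribution $\mathcal{N}(\mu_{ij}, \Sigma_{ij})$, let $f_{ij}$ denote the registered feature of the test image $I_{\text{test}}$ at patch position $(i, j)$, where $\mu_{ij}$ is the sample mean of the registered reference features $F_{ij}$ at that position and $\Sigma_{ij}$ is the corresponding covariance. The anomaly score of the patch at position $(i, j)$ is formulated as the Mahalanobis distance:

$$M(f_{ij}) = \sqrt{(f_{ij} - \mu_{ij})^{\top}\, \Sigma_{ij}^{-1}\, (f_{ij} - \mu_{ij})}$$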
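A minimal plain-Python sketch of the per-position scoring follows. It assumes, as in PaDiM, that the normal distribution at a patch position is a multivariate Gaussian fitted to the registered reference features at that position and that the anomaly score is the Mahalanobis distance to it. The small ridge term `eps * I` added to the covariance is likewise an assumption (PaDiM-style methods add one to keep the matrix invertible when reference samples are few), and the helper names are hypothetical.

```python
import math


def mean_vec(samples):
    """Sample mean of a list of equal-length feature vectors."""
    d = len(samples[0])
    return [sum(s[k] for s in samples) / len(samples) for k in range(d)]


def covariance(samples, mu, eps=0.01):
    """Unbiased sample covariance plus eps * I (ridge term is an assumption)."""
    n, d = len(samples), len(mu)
    cov = [[0.0] * d for _ in range(d)]
    for s in samples:
        diff = [s[k] - mu[k] for k in range(d)]
        for a in range(d):
            for b in range(d):
                cov[a][b] += diff[a] * diff[b] / (n - 1)
    for a in range(d):
        cov[a][a] += eps
    return cov


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    d = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(d):
        pivot = max(range(col, d), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, d):
            factor = M[r][col] / M[col][col]
            for c in range(col, d + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * d
    for r in range(d - 1, -1, -1):
        x[r] = (M[r][d] - sum(M[r][c] * x[c] for c in range(r + 1, d))) / M[r][r]
    return x


def mahalanobis(f, mu, cov):
    """sqrt((f - mu)^T cov^{-1} (f - mu)), using a linear solve instead of an inverse."""
    diff = [fi - mi for fi, mi in zip(f, mu)]
    y = solve(cov, diff)  # y = cov^{-1} (f - mu)
    return math.sqrt(max(0.0, sum(a * b for a, b in zip(diff, y))))


# Registered reference features at one patch position (toy 2-D features).
refs = [[1.0, 2.0], [1.2, 1.9], [0.9, 2.1], [1.1, 2.2], [1.0, 1.8]]
mu = mean_vec(refs)
cov = covariance(refs, mu)
print(mahalanobis([1.0, 2.0], mu, cov))  # close to the normal samples: small score
print(mahalanobis([3.0, 0.0], mu, cov))  # far from them: large score
```

Solving the linear system rather than forming $\Sigma_{ij}^{-1}$ explicitly is the usual numerically safer choice; with real features the same computation would be repeated at every patch position.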

2.4. Proposed Method

Conventional image anomaly detection (IAD) methods typically require training a dedicated model for each object category. Such a paradigm is highly impractical in real-world aircraft inspection because of the large number of enclosed compartments, each of which must be extensively documented with evidentiary images. If a separate model had to be trained for each distinct category, the total number of required models would become prohibitively large, rendering this approach infeasible for practical deployment. Standard IAD frameworks, such as those evaluated on the MVTec dataset (15 categories, requiring 15 models) or the MPDD dataset (6 categories, requiring 6 models), operate under this category-specific training assumption. In our setting, each evidence retention image effectively constitutes a unique category. With more than 150 enclosed zones in a typical aircraft and nearly 100 evidence retention images per zone, the total number of categories would be on the order of 150 × 100 = 15,000. Training thousands of individual models for FOD detection is clearly computationally intractable and economically unviable.

Human visual FOD inspection essentially involves mentally comparing the current image against a reference image of the same location without FOD, so that anomalies are identified as discrepancies. Inspired by this cognitive process, we propose a computational approach that compares evidence retention images against reference images captured at the same locations under anomaly-free conditions. However, because imaging viewpoint, field of view, color balance, and illumination vary between evidence and reference images, feature-level image registration is essential to align their representations. By comparing the registered features of each evidence retention image against a corresponding normal distribution estimated from reference images, FOD can be identified as statistical anomalies. This transforms the task into training a model that performs image registration, a process analogous to human mental comparison and invariant to image content, resulting in a category-agnostic detection framework.

As illustrated in Figure 1, the proposed model is built on a Siamese network architecture [16]. It is trained using positive image pairs drawn from the MVTec, MPDD, and self-built datasets, where both images in a pair belong to the same object category. First, following the design of PaDiM [24], we retain the first three convolutional residual blocks of ResNet (B1, B2, and B3) and omit the final block to preserve sufficient spatial information in the output features. Second, to facilitate image registration, a spatial transformation module [25] is inserted after each convolutional residual block. This module supports six transformation modes: affine, shear, rotation–scale, translation–scale, rotation–translation, and rotation–translation–scale. Finally, the outputs of both the convolutional blocks and the spatial transformation modules are fed into a feature registration module, which prevents model collapse during optimization even without negative samples.
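One way the six transformation modes could restrict the 2×3 affine matrix is sketched below. The parameterization (a rotation angle, an isotropic scale, a translation `shift`, and a pair of shear factors) and the function name `theta_for_mode` are hypothetical illustrations, not the exact formulation used in this study: each mode exposes only the degrees of freedom its name suggests and keeps the others at their identity values.

```python
import math

MODES = ("affine", "shear", "rotation_scale", "translation_scale",
         "rotation_translation", "rotation_translation_scale")


def theta_for_mode(mode, params):
    """Return a 2x3 affine matrix [[t11, t12, t13], [t21, t22, t23]] for one mode.

    Hypothetical parameterization: `params` may hold "theta" (full 2x3 matrix),
    "shear" (kx, ky), "angle" (radians), "scale" (isotropic) and "shift" (tx, ty).
    Keys a mode does not use are ignored, and the corresponding degrees of
    freedom stay at their identity values.
    """
    if mode not in MODES:
        raise ValueError(f"unknown mode: {mode}")
    if mode == "affine":
        # All six entries are free.
        return [list(params["theta"][0]), list(params["theta"][1])]
    if mode == "shear":
        kx, ky = params["shear"]
        return [[1.0, kx, 0.0], [ky, 1.0, 0.0]]
    angle = params.get("angle", 0.0) if "rotation" in mode else 0.0
    scale = params.get("scale", 1.0) if "scale" in mode else 1.0
    tx, ty = params.get("shift", (0.0, 0.0)) if "translation" in mode else (0.0, 0.0)
    c, s = math.cos(angle), math.sin(angle)
    # Linear part = scale * rotation matrix; translation fills the last column.
    return [[scale * c, -scale * s, tx], [scale * s, scale * c, ty]]


# "rotation_translation" ignores the scale entry.
print(theta_for_mode("rotation_translation",
                     {"angle": math.pi / 6, "scale": 3.0, "shift": (0.1, -0.2)}))
```

Restricting the degrees of freedom in this way narrows the set of warps the localization network can produce, which may make registration easier to learn when the geometric differences between paired images are mild.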

During inference, a normal distribution is first estimated for the target category using a small set of reference images from the same location that are free of FOD. Given the limited number of such reference images, data augmentation is applied to enhance statistical robustness. The registered features of each evidence retention image are then compared against this estimated distribution to compute an anomaly score, which quantifies the deviation from normality and serves as the detection criterion for FOD.
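The inference steps can be illustrated at the level of images and score maps. The sketch below is hypothetical in its details: the text does not specify the augmentations or how patch scores are aggregated, so it assumes horizontal flips and 90° rotations for enlarging the reference set, and takes the maximum patch score as the image-level score (a common choice in PaDiM-style methods), compared with an illustrative threshold.

```python
def hflip(img):
    """Mirror a 2D image (list of rows) left to right."""
    return [row[::-1] for row in img]


def rot90(img):
    """Rotate a 2D image (list of rows) 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def augment(refs):
    """Return each reference image, its horizontal flip, and its three 90-degree rotations."""
    out = []
    for img in refs:
        out.append(img)
        out.append(hflip(img))
        rotated = img
        for _ in range(3):
            rotated = rot90(rotated)
            out.append(rotated)
    return out


def image_decision(score_map, threshold):
    """Image-level score = maximum patch score; FOD is flagged if it exceeds threshold."""
    image_score = max(max(row) for row in score_map)
    return image_score, image_score > threshold


refs = [[[0, 1], [2, 3]]]             # one tiny 2x2 reference image
print(len(augment(refs)))             # 5
score_map = [[0.2, 0.3], [4.1, 0.5]]  # hypothetical patch-level anomaly scores
print(image_decision(score_map, threshold=3.0))  # (4.1, True)
```

In practice the threshold would have to be chosen on validation data; the value used here is arbitrary.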

5. Conclusions

This study investigates an auxiliary detection method for aircraft FOD based on image registration. Experimental results demonstrate the effectiveness and robustness of the proposed model and reveal the influence of its key parameters. The detection approach is inspired by the cognitive process of human visual inspection, in which the currently observed image is compared with a correct mental reference; this work is an attempt to reproduce such comparative reasoning in machine learning. With only a small number of reference images depicting normal conditions, the method can determine whether a test image deviates from the norm. Its applicability extends beyond aircraft FOD detection. For example, it could be used to verify the presence of fasteners in aircraft assembly, a high-volume task prone to human error. More importantly, the same model weights can be applied across different tasks: the model trained in this study, MFOD, could in principle be used directly to detect missing fasteners in aircraft assembly, thereby serving as a universal model for this category of inspection tasks.

However, the extent and limitations of this generalizability, such as the impact of scene complexity, require further in-depth investigation. The proposed method also has certain limitations. Although the self-constructed FOD and Car datasets in this study exhibit significantly higher scene complexity than the MVTec and MPDD datasets, further increases in complexity may challenge the method: discrepancies between test and reference images may become harder to detect because of the limited input size of the model. In real-world deployment, differences in shooting viewpoint, illumination, and capture devices between test and reference images may markedly affect model performance, and a quantitative analysis of this influence requires further study. Moreover, future research should focus on improving robustness to small objects, transparent or thin structures, color variations, and shadows to facilitate broader practical application.