1. Introduction

Crack detection and analysis are essential processes for maintaining the stability and longevity of infrastructure. To date, these tasks have primarily been conducted through manual visual inspection or relatively simple image processing techniques. However, these traditional methods are highly inefficient in terms of time, labor, and resources when applied to large-scale infrastructure. Visual inspection can yield inconsistent results owing to subjectivity, whereas image-processing-based techniques struggle to detect cracks accurately in complex environments. To address these challenges, computer vision research has been actively exploring deep learning-based automated crack detection and segmentation techniques.

Deep learning has revolutionized image processing tasks, with early research primarily focusing on object classification within images. A notable milestone was the introduction of AlexNet [1], which won the 2012 ImageNet competition and marked the beginning of deep learning-based image classification. Subsequent models such as visual geometry group network (VGG) [2], GoogLeNet [3], and residual network (ResNet) [4] further improved classification accuracy through deeper networks and architectural innovations. ResNet, in particular, introduced residual learning, which mitigated the vanishing gradient problem in deep networks and significantly enhanced performance. These models have strong performance in image classification, expanding the applicability of deep learning technology.

With advances in image classification, research expanded to the more complex task of object detection, which aims to identify objects within an image and accurately locate them using bounding boxes. One of the early representative models in this field was regions with CNN features (R-CNN) [5], which worked by dividing an image into multiple candidate regions and classifying each region individually. However, this approach was computationally expensive and not suitable for real-time detection. Fast R-CNN [6] and Faster R-CNN [7] improved efficiency by integrating region proposal processes into the network. Later, You Only Look Once (YOLO) [8] and the Single Shot MultiBox Detector (SSD) [9] were introduced to enable real-time object detection through a single network. YOLO analyzes an entire image in one pass, offering high-speed detection, while SSD employs multiple bounding boxes of different sizes to effectively detect objects of varying scales. These models have been widely adopted in applications such as autonomous driving, surveillance, and medical image analysis.

Further technological advancements have enabled the development of image segmentation, which determines the precise pixel-wise locations of objects within an image. Image segmentation is a critical challenge in computer vision that requires fine-grained information processing. The fully convolutional network (FCN) [10] was the first deep learning-based segmentation model to utilize an entirely convolutional architecture, enabling pixel-wise segmentation without input image size restrictions. U-Net [11], initially designed for medical image analysis, demonstrates exceptional performance in high-precision tasks and is applied across various domains. The DeepLab series [12] leverages atrous convolution and spatial pyramid pooling to handle objects of different sizes and enhance accuracy near object boundaries. The Pyramid Scene Parsing Network (PSPNet) [13] incorporates a pyramid pooling module to utilize global context information, achieving high performance in complex scenes. Mask R-CNN [14] combines object detection and segmentation to generate precise object masks and is widely used in autonomous driving and medical imaging applications.

Although these models have evolved with distinct strengths and limitations, they continue to face challenges in effectively processing local and global features within input images. CNNs are used to learn local features but are inherently limited in capturing global dependencies. To overcome this limitation, a Vision Transformer (ViT) [15,16], inspired by the Transformer architecture [15] from natural language processing (NLP), was introduced into computer vision. ViT utilizes a global self-attention mechanism that considers the interactions between all image patches, enabling it to learn long-range dependencies and global representations that CNNs may overlook because of their restricted receptive fields. ViT demonstrates a particularly strong performance on large-scale datasets. However, Transformer-based models alone may struggle to capture fine-grained local details.

Hybrid models that combine CNNs and Transformers have been proposed to leverage local and global features simultaneously. These hybrid vision transformer models integrate CNNs’ strength in local feature extraction with Transformers’ ability to capture global context, addressing the shortcomings of existing segmentation models.

This study aims to enhance the accuracy of crack detection and segmentation by adopting a hybrid vision transformer approach. We propose a model that integrates Vision Transformer into the U-Net architecture and evaluate its performance on a crack image dataset. U-Net is highly effective in learning local features, and by incorporating Vision Transformer, which excels in capturing global characteristics, we aim to develop a model capable of effectively segmenting complex crack patterns. By overcoming the limitations of traditional methods, our approach is expected to provide significant practical implications for infrastructure monitoring. First, by enabling automated and precise crack segmentation, it can drastically reduce the time and cost associated with manual inspections, which are often slow and subjective. Second, the ability to accurately detect and measure fine cracks allows for early intervention, shifting maintenance strategies from reactive to proactive and preventing minor issues from escalating into major structural failures. Ultimately, this research aims to provide a core technology for data-driven structural health monitoring systems, allowing asset managers to make more informed decisions for prioritizing repairs and allocating resources effectively.

2. Related Works

Research on crack detection using deep learning models has accelerated with the introduction of Convolutional Neural Networks (CNNs). Initially, crack detection research focused on object detection methods that represent crack regions using bounding boxes. However, it has since expanded to image segmentation, enabling pixel-level precision.

2.1. CNN-Based Crack Detection

Crack detection involves identifying crack regions by representing them as bounding boxes, which include the x and y coordinates, width, and height of the detected cracks.

For instance, Alfarrarjeh et al. [17] applied the YOLO model to smartphone-captured images to detect various types of road damage, such as cracks and potholes.

Cha et al. [18] enhanced crack detection accuracy in concrete structures by incorporating batch normalization and dropout techniques into a convolutional model.

Furthermore, Wang et al. [19] combined the strengths of the Inception and ResNet architectures while employing a genetic algorithm-based k-means clustering method to refine crack detection. This approach improved clustering efficiency and detection speed.

While these object detection-based methods effectively identify crack regions, they do not provide as detailed information as pixel-level image segmentation methods.

2.2. CNN-Based Crack Segmentation

Image segmentation involves classifying each pixel by grouping similar regions or sections of an image into class labels. Traditional CNNs were initially developed for image classification, and their final output layer typically comprises fully connected layers [

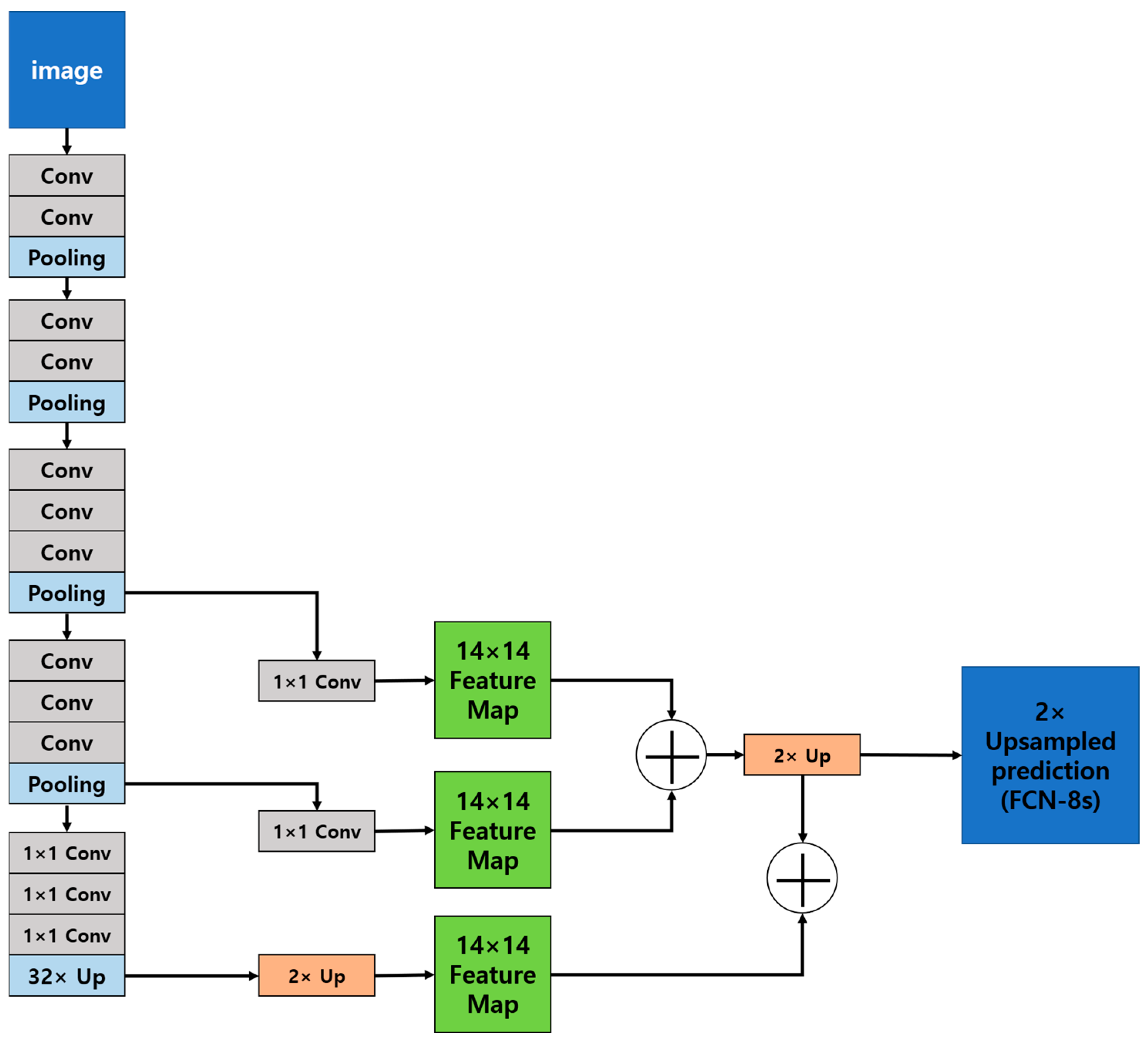

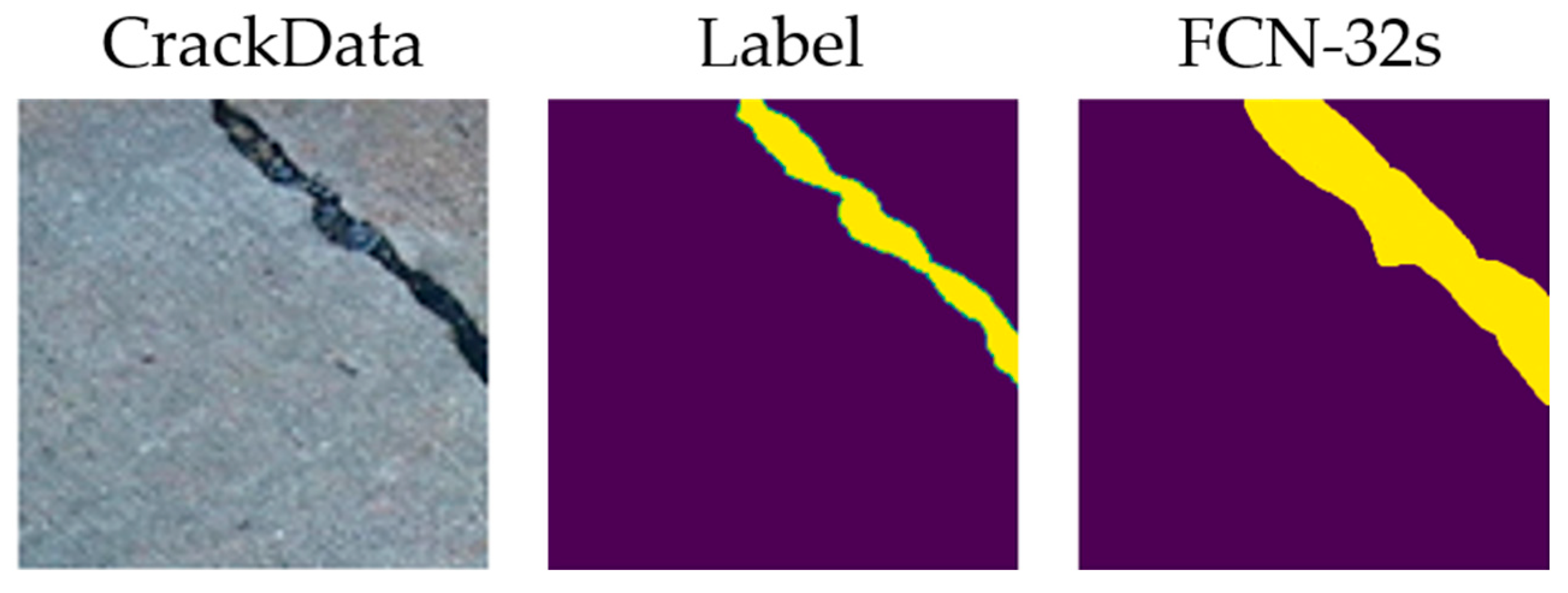

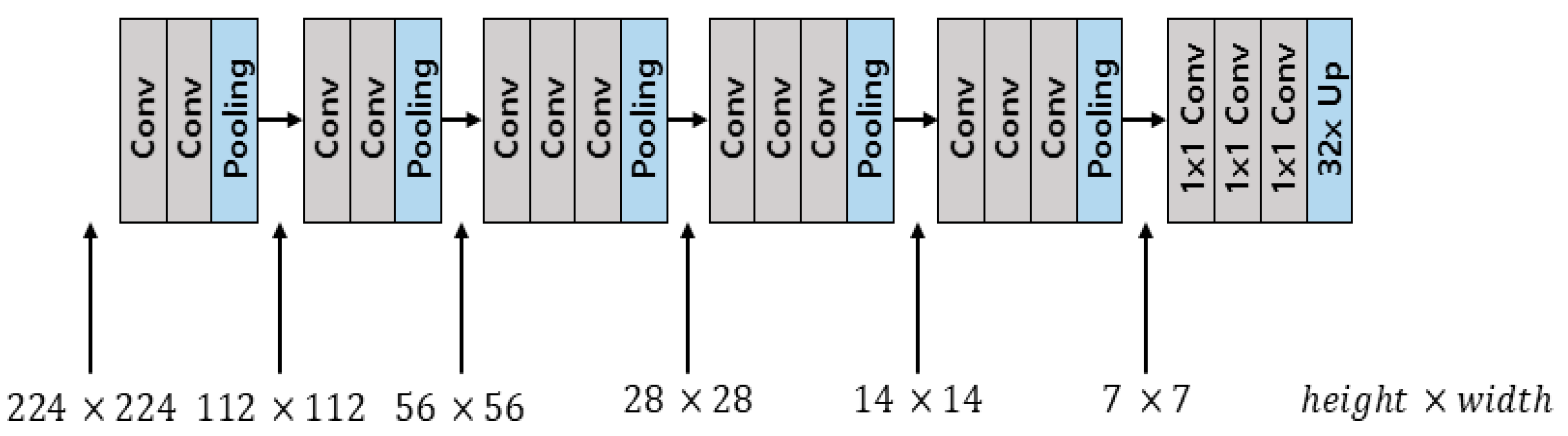

20], which leads to a loss of spatial information. FCN addresses this limitation by replacing fully connected layers with 1 × 1 convolutional layers (

Figure 1 and

Figure 2). However, the segmentation output often lacks fine details because the feature map size is reduced to a 7 × 7 resolution before being upsampled by a factor of 32 (

Figure 3). To address this limitation, FCN employs transposed convolutional upsampling and skip connections, as shown in

Figure 1 (right). The main contribution of FCN is to demonstrate that CNNs, initially designed for classification, can be adapted for segmentation by modifying their final layers.
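The 7 × 7 resolution and the factor of 32 follow from simple stride arithmetic. The sketch below assumes a VGG-style backbone with five stride-2 pooling stages and a 224 × 224 input; neither value is stated in the text, so both are illustrative assumptions.

```python
def downsampled_size(input_size: int, num_pool_stages: int, pool_stride: int = 2) -> int:
    """Spatial size after repeatedly applying strided pooling (floor division)."""
    size = input_size
    for _ in range(num_pool_stages):
        size //= pool_stride
    return size


# Assumed values: 224 x 224 input and five pooling stages (VGG-style backbone).
input_size = 224
num_pool_stages = 5

coarse_size = downsampled_size(input_size, num_pool_stages)
upsampling_factor = 2 ** num_pool_stages
restored_size = coarse_size * upsampling_factor

print(coarse_size, upsampling_factor, restored_size)
```

Under these assumptions, each coarse cell has to be expanded into a 32 × 32 block of output pixels in a single step, which is why thin structures are easily blurred without skip connections.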

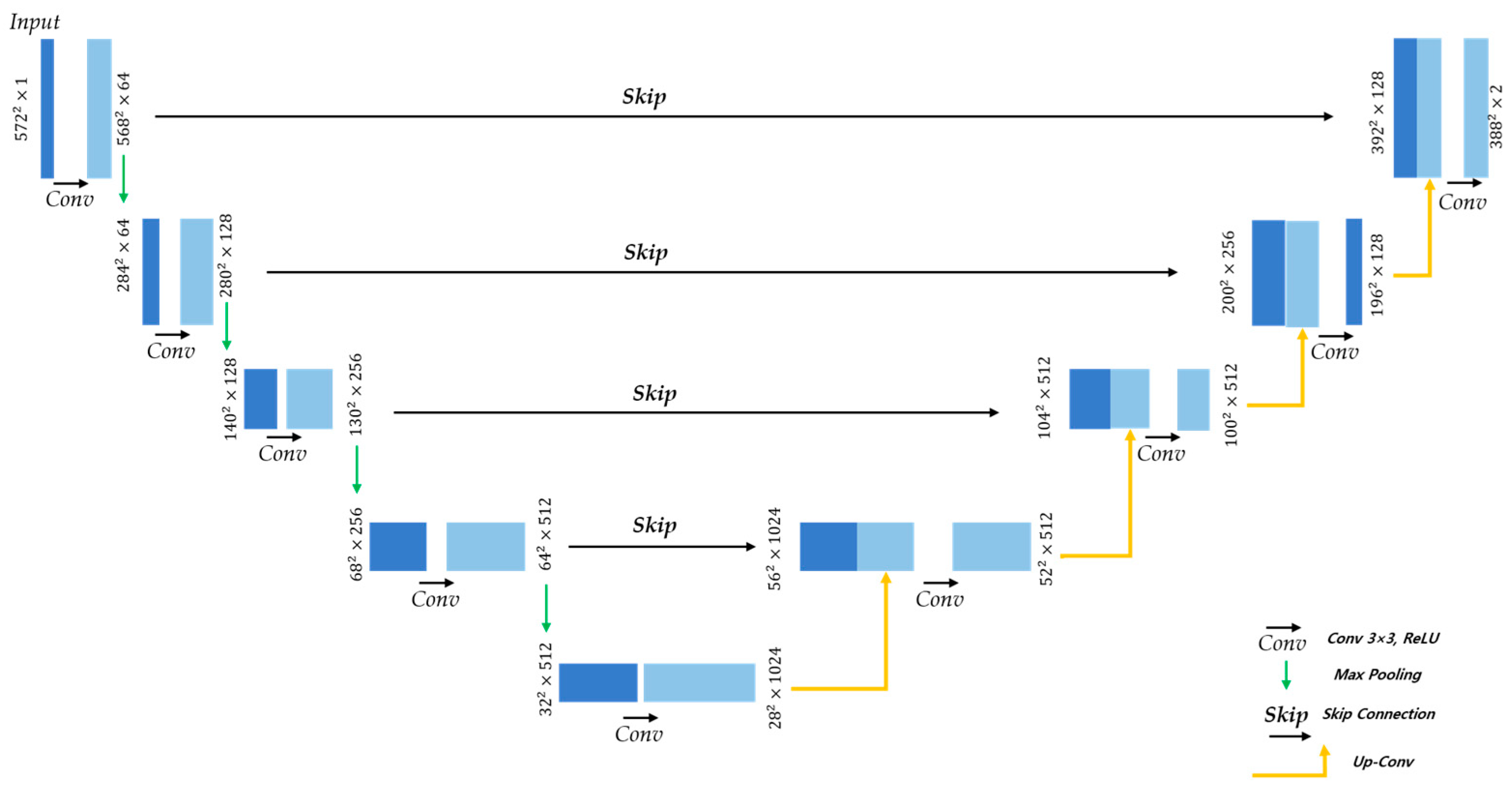

As shown in Figure 4, U-Net adopts a symmetric U-shaped architecture consisting of an encoder and a decoder. The encoder progressively down-samples the input image through convolutional operations to extract high-level features. Each layer applies two 3 × 3 convolutions with ReLU activation, followed by a 2 × 2 max pooling operation. The decoder restores the original resolution using transposed convolution. To compensate for information lost during downsampling, skip connections concatenate high-resolution features from the encoder with the upsampled features before applying additional 3 × 3 convolutions.

Various studies have applied CNN-based segmentation for crack detection. Liu et al. [21] proposed DeepCrack, an extension of the FCN and deeply supervised network designed to learn hierarchical features and integrate multiscale information. Trained on 300 crack images and tested on 237 images, DeepCrack achieved an F1 score of 86.5 and a mean intersection over union (mIoU) of 85.9. Yang et al. [22] introduced the feature pyramid and hierarchical boosting network (FPHBN), which combines a feature pyramid network (FPN) with a hierarchical boosting network. The FPN component integrates high-level semantic information, whereas the boosting network assigns different weights to each feature level, thereby improving the crack segmentation performance. Similarly, Fei et al. [23] enhanced the CrackNet architecture by replacing the pooling layers, which typically reduce input resolution, with convolutional layers of various kernel sizes to extract multiscale features, thereby improving segmentation accuracy.

2.3. Vision Transformer-Based Crack Segmentation

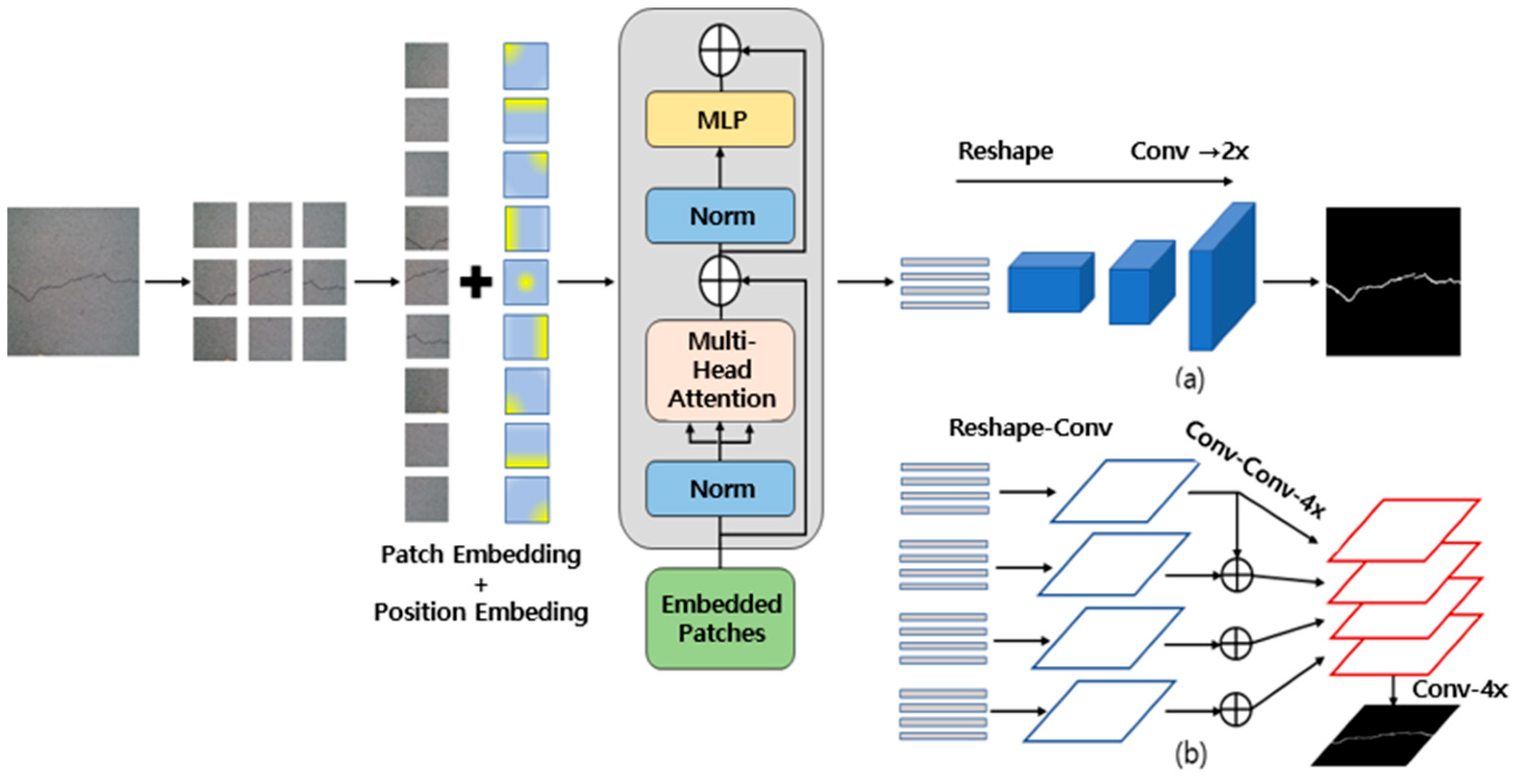

The Transformer model, which revolutionized the field of NLP through its self-attention mechanism, has also significantly advanced image segmentation. Traditional CNN-based segmentation models such as FCN rely on fixed-size kernels and downsampling operations to expand the receptive field. However, this approach inherently limits the ability to capture long-range dependencies, which are crucial for accurate pixel-level classification in segmentation tasks. To address this issue, researchers developed the Segmentation Transformer (SETR) [24], which replaces CNN-based encoders with ViT encoders.

The encoder in the SETR follows a standard ViT structure. The input image is divided into fixed-size patches and embedded with positional encoding to preserve spatial information. These embeddings are processed using a Transformer encoder, where multi-head self-attention (MHSA) extracts global features. The output features are subsequently combined with the initial embeddings and refined using a multilayer perceptron (MLP).
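The patch-splitting step can be illustrated with a minimal sketch. It assumes a single-channel toy image, a 4 × 4 patch size, and the patch index standing in for a learned positional embedding; all three are simplifications chosen for illustration, not details taken from SETR.

```python
def split_into_patches(image, patch_size):
    """Split an H x W image (list of rows) into flattened, non-overlapping patches.

    Returns the patches in row-major order; each token is the flattened
    list of pixel values of one patch.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image size must be divisible by patch_size")
    tokens = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [image[r][c]
                     for r in range(top, top + patch_size)
                     for c in range(left, left + patch_size)]
            tokens.append(patch)
    return tokens


# Toy single-channel 8 x 8 image whose pixel value encodes its position.
image = [[r * 8 + c for c in range(8)] for r in range(8)]
tokens = split_into_patches(image, patch_size=4)

# Hypothetical stand-in for positional encoding: pair each token with its index.
tokens_with_position = [(index, token) for index, token in enumerate(tokens)]
print(len(tokens), len(tokens[0]))
```

The sequence length equals (H / P) × (W / P), so halving the patch size quadruples the number of tokens that self-attention must compare.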

The decoder in SETR reconstructs the original image resolution through upsampling and offers three upsampling approaches. The first method restores resolution using bilinear interpolation. The second method, as shown in Figure 5a, mimics the FCN by incorporating convolution and upsampling layers. The third method, as shown in Figure 5b, employs multilevel feature aggregation [25], where the Transformer encoder extracts hierarchical features that are reshaped, convolved, and concatenated based on the channel dimensions. Among these methods, the third approach yields the best performance.

SETR addresses the inherent limitations of CNN-based segmentation models, particularly their fixed kernel sizes and limited receptive fields [26]. By adopting a Transformer-based approach, SETR enhances long-range dependency modeling, thereby improving segmentation performance.

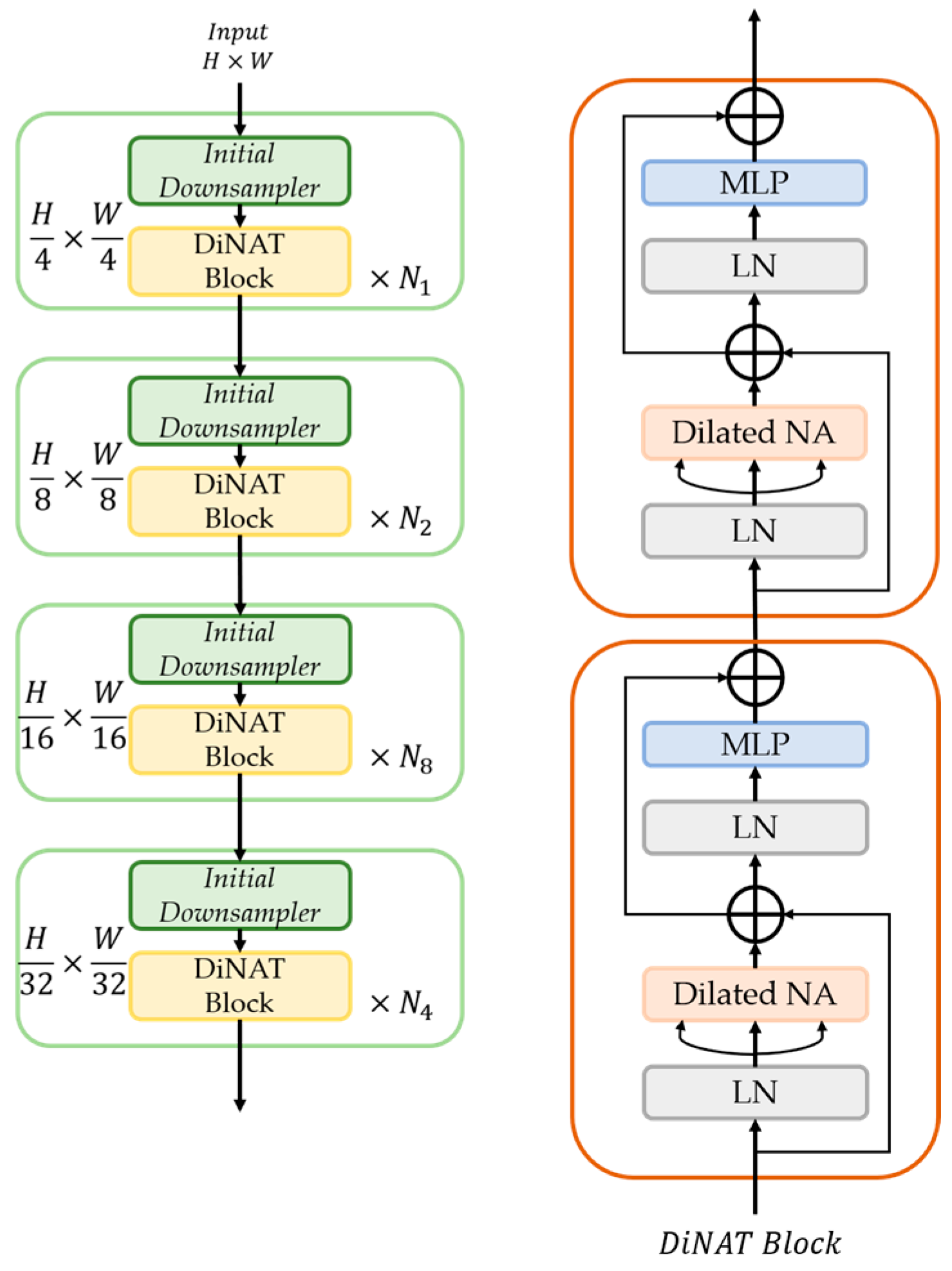

A major drawback of traditional ViTs is the high computational cost required to learn image features. To address this issue, a Dilated Neighborhood Attention Transformer (DiNAT) [27] was developed as an improved version of the Neighborhood Attention Transformer (NAT) [28].

NAT employs a window-based attention mechanism, where each pixel computes attention within a local window, reducing computational overhead and making it effective for capturing local features. However, it still relies on fixed window sizes. DiNAT introduces dilated windows and expands the receptive field to capture both local and global features simultaneously. As shown in Figure 6, DiNAT applies different dilation rates at each layer, enabling it to capture features at multiple scales. Its key contribution lies in addressing the computational inefficiencies of ViTs by leveraging dilated attention, which reduces memory usage and processing time while improving local feature extraction.
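The effect of dilation on the attended region can be sketched in one dimension. This is a simplified illustration, not the NAT or DiNAT implementation: it simply drops out-of-range positions at the borders, whereas the real models handle edges differently, and the function name is hypothetical.

```python
def dilated_neighborhood(center, length, kernel_size, dilation):
    """Indices a query at `center` attends to in a 1D sequence.

    Simplified sketch: positions outside [0, length) are dropped.
    """
    half = kernel_size // 2
    candidates = [center + dilation * offset for offset in range(-half, half + 1)]
    return [j for j in candidates if 0 <= j < length]


length, center = 17, 8
spans = {}
for dilation in (1, 2, 4):
    neighbors = dilated_neighborhood(center, length, kernel_size=3, dilation=dilation)
    spans[dilation] = neighbors[-1] - neighbors[0] + 1
    print(dilation, neighbors, spans[dilation])
```

The number of attended positions, and therefore the attention cost per query, stays at three in every case, while the covered span grows with the dilation rate.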

3. Proposed Method

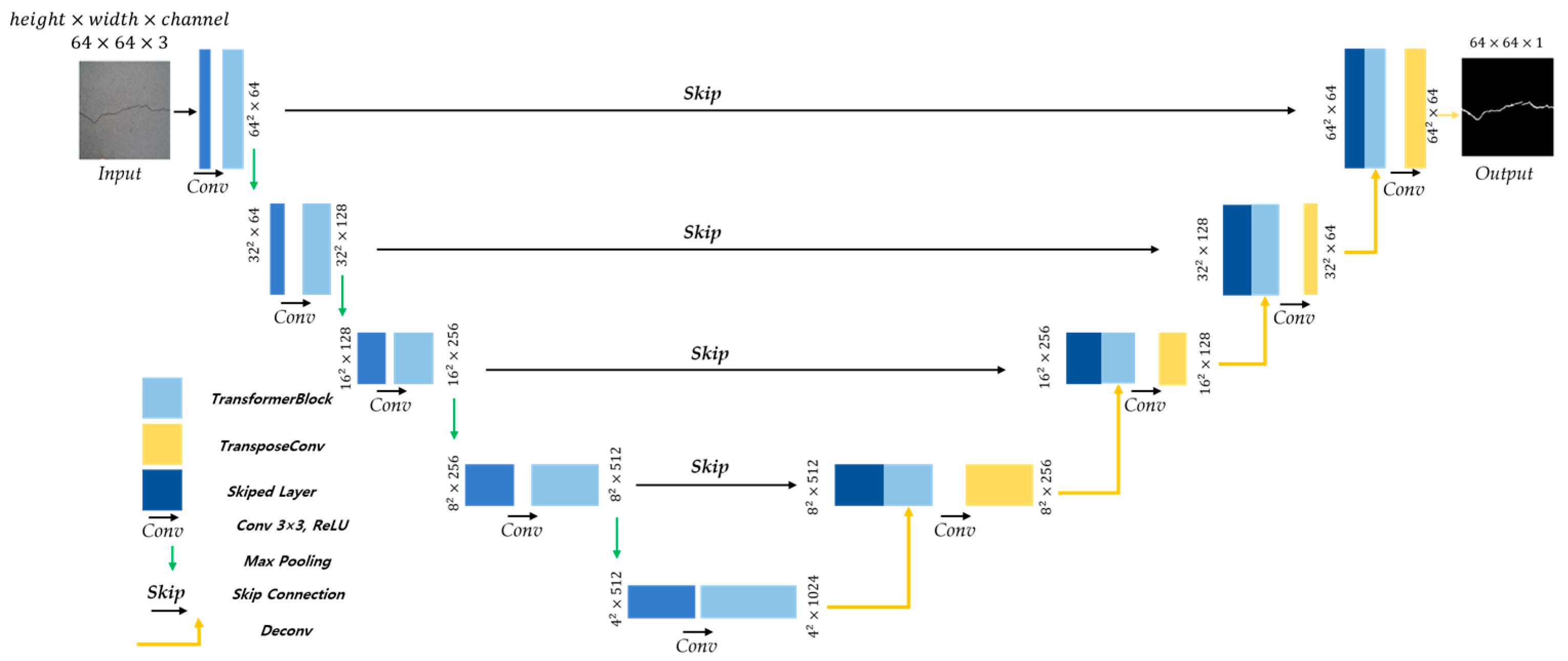

This study enhances crack segmentation accuracy by utilizing a U-Net ViT, a hybrid model that combines CNNs—specialized for capturing local features—with ViTs, which address CNNs’ structural limitations in capturing global features.

3.1. U-Net Vision Transformer Architecture

The overall architecture of the proposed U-Net ViT follows the encoder–decoder structure of U-Net, with the ViT applied to the encoder (Figure 7).

The encoder takes an input image of 64 × 64 × 3 and uses convolutional layers to extract feature maps with 64 channels. The ViT then extracts global feature maps, after which a pooling layer reduces the spatial dimensions from 64 × 64 to 32 × 32. This process continues until the feature map is reduced to 4 × 4, at which point the final ViT generates a 4 × 4 × 1024 feature representation in the bottleneck section.

In the decoder, the 4 × 4 × 1024 feature map is upsampled by transposed convolution. The global feature maps extracted by the Transformer encoder are concatenated with the upsampled 8 × 8 × 512 feature maps through skip connections. A convolution operation reduces the number of channels from 512 to 256. This process is repeated until a 64 × 64 × 64 feature map is obtained. Finally, a 1 × 1 convolution generates a 64 × 64 × 1 output, which is the crack segmentation result of the U-Net ViT model.
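The feature-map sizes quoted above can be traced with a short sketch. It assumes that each pooling step halves the spatial size and that the channel count doubles at every level, as in the original U-Net; the text states only the first and last encoder shapes, so the intermediate values are inferred rather than reported.

```python
def encoder_shapes(input_size=64, base_channels=64, bottleneck_size=4):
    """List (height, width, channels) at each encoder level.

    Assumes 2 x 2 pooling halves the spatial size and the channel count
    doubles at every level.
    """
    shapes = []
    size, channels = input_size, base_channels
    while size >= bottleneck_size:
        shapes.append((size, size, channels))
        size //= 2
        channels *= 2
    return shapes


shapes = encoder_shapes()
for height, width, channels in shapes:
    tokens = height * width  # sequence length the ViT would see at this level
    print(f"{height} x {width} x {channels} -> {tokens} tokens")
```

Under these assumptions the sequence length drops from 4096 tokens at the first level to 16 at the bottleneck, so most of the attention cost is concentrated in the highest-resolution level.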

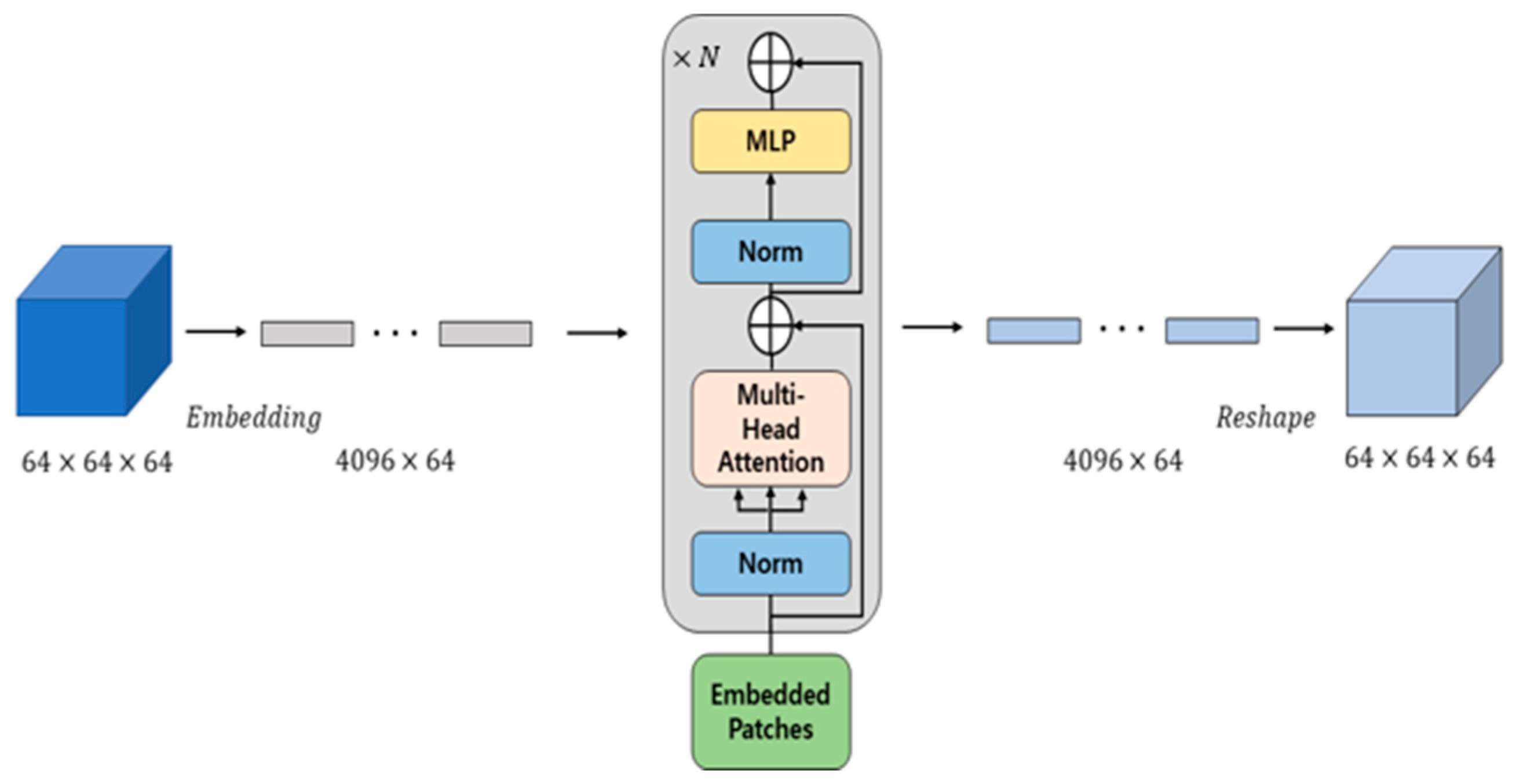

3.2. ViT’s Encoder

The ViT encoder (Figure 8) processes feature maps through a series of key steps to extract meaningful representations. First, the two-dimensional (2D) feature map (64 × 64 × 64) generated by the convolutional layers is reshaped into a one-dimensional (1D) sequence of tokens (4096 × 64) via a convolutional embedding process.

Subsequently, the embedded tokens are fed into a Transformer block, where layer normalization precedes the MHSA mechanism, which extracts important global features. The attention mechanism converts the input into query (Q), key (K), and value (V) representations. Multiple attention heads analyze the input from different perspectives, and dropout is applied as regularization to reduce overfitting. The original embedded input is preserved through residual connections to maintain important information.
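The core of this step, scaled dot-product attention followed by a residual connection, can be sketched in plain Python. The sketch uses a single head, omits layer normalization and dropout, and uses identity matrices as placeholders for the learned Q, K, and V projections; it illustrates the mechanism and is not the model's implementation.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention with a residual connection."""
    q, k, v = matmul(tokens, w_q), matmul(tokens, w_k), matmul(tokens, w_v)
    scale = math.sqrt(len(q[0]))
    scores = [[s / scale for s in row] for row in matmul(q, transpose(k))]
    weights = [softmax(row) for row in scores]  # each row sums to 1
    attended = matmul(weights, v)
    # Residual connection: add the original tokens back to the attended output.
    output = [[t + a for t, a in zip(t_row, a_row)]
              for t_row, a_row in zip(tokens, attended)]
    return output, weights


# Three toy tokens of dimension 2; identity projections are placeholders
# for learned weight matrices.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
output, weights = self_attention(tokens, identity, identity, identity)
print(len(weights), len(weights[0]))
```

The weight matrix has one row and one column per token, which is what lets every position draw on every other position regardless of distance.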

After the attention mechanism, the processed feature representation is passed through an MLP with a Gaussian error linear unit (GELU) activation function, followed by another residual connection to refine the extracted features. Finally, the 1D sequence output is converted back into a 2D feature map (4096 × 64 → 64 × 64 × 64). This reconstructed feature map is used for skip connections in the decoder and for downsampling the spatial dimensions through pooling operations.
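The conversion between the 2D feature map and the 1D token sequence can be sketched as a plain row-major reshape. This is an assumption made for illustration: the model uses a convolutional embedding, for which a direct reshape is only a stand-in, and the function names are hypothetical.

```python
def feature_map_to_tokens(feature_map):
    """Flatten an H x W x C feature map into H*W tokens of length C (row-major)."""
    return [list(pixel) for row in feature_map for pixel in row]


def tokens_to_feature_map(tokens, height, width):
    """Inverse of feature_map_to_tokens for a known spatial size."""
    if len(tokens) != height * width:
        raise ValueError("token count does not match height * width")
    return [tokens[r * width:(r + 1) * width] for r in range(height)]


height, width, channels = 64, 64, 64
# Toy feature map: every channel of a pixel holds that pixel's flat index.
feature_map = [[[float(r * width + c)] * channels for c in range(width)]
               for r in range(height)]

tokens = feature_map_to_tokens(feature_map)
restored = tokens_to_feature_map(tokens, height, width)
print(len(tokens), len(tokens[0]))
```

Because the mapping is lossless, the restored map keeps the spatial layout that the skip connections and pooling layers rely on.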

4. Experimental Results

4.1. Dataset

The crack dataset used in this study was CrackSeg9k [29]. CrackSeg9k consolidates multiple open-source datasets, including Crack500, DeepCrack, SDNet, CrackTree, Gaps, Volker, Rissbilder, NonCrack, Masonry, and Ceramic, which have been previously used for crack detection and segmentation. Initially consisting of 9255 images, the dataset was expanded to include 11,298 images. An example of the crack images included in the dataset is shown in Figure 9.

In the experiment, 11,298 images were divided into 7343 for training, 1695 for validation, and 1695 for testing. As the original images were 400 × 400 in size, they were resized to 64 × 64 using bilinear interpolation and normalized as a preprocessing step to match the input size of the U-Net ViT model.
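The resize-and-normalize preprocessing can be sketched as follows. The sketch assumes a half-pixel-centre sampling convention for bilinear interpolation and normalization by division by 255; the text specifies neither, so both are assumptions, and a toy 4 × 4 image stands in for a real crack image.

```python
def resize_bilinear(image, out_height, out_width):
    """Resize a single-channel image (list of rows) with bilinear interpolation."""
    in_height, in_width = len(image), len(image[0])
    scale_y, scale_x = in_height / out_height, in_width / out_width
    output = []
    for oy in range(out_height):
        # Map the output pixel centre back into input coordinates, clamped to the image.
        y = min(max((oy + 0.5) * scale_y - 0.5, 0.0), in_height - 1.0)
        y0 = int(y)
        y1 = min(y0 + 1, in_height - 1)
        wy = y - y0
        row = []
        for ox in range(out_width):
            x = min(max((ox + 0.5) * scale_x - 0.5, 0.0), in_width - 1.0)
            x0 = int(x)
            x1 = min(x0 + 1, in_width - 1)
            wx = x - x0
            top = image[y0][x0] * (1 - wx) + image[y0][x1] * wx
            bottom = image[y1][x0] * (1 - wx) + image[y1][x1] * wx
            row.append(top * (1 - wy) + bottom * wy)
        output.append(row)
    return output


def normalize(image, max_value=255.0):
    """Scale pixel values to the range [0, 1] (assumed normalization scheme)."""
    return [[value / max_value for value in row] for row in image]


# Toy 4 x 4 image: dark left half, bright right half.
image = [[0, 0, 255, 255] for _ in range(4)]
small = normalize(resize_bilinear(image, 2, 2))
print(small)
```

Each output pixel blends only its four nearest input pixels, so at large downscaling factors a crack that is one or two pixels wide may fall between sampled positions.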

4.2. Evaluation Metrics

To evaluate the segmentation performance, two standard metrics were used: the F1-score and mIoU.

The F1-score is defined using the entries of the confusion matrix presented in Table 1.

True Positive (TP): the model correctly predicts a positive case.

False Positive (FP): the model incorrectly predicts a negative case as a positive.

True Negative (TN): the model correctly predicts a negative case.

False Negative (FN): the model incorrectly predicts a positive case as negative.
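For a segmentation mask, these four quantities are counted per pixel. The following minimal sketch assumes binary masks in which 1 denotes a crack pixel and 0 denotes background; the toy masks are illustrative only.

```python
def confusion_counts(predicted, actual):
    """Count TP, FP, TN, FN over two binary masks of equal shape (1 = crack)."""
    tp = fp = tn = fn = 0
    for pred_row, true_row in zip(predicted, actual):
        for p, t in zip(pred_row, true_row):
            if p == 1 and t == 1:
                tp += 1
            elif p == 1 and t == 0:
                fp += 1
            elif p == 0 and t == 0:
                tn += 1
            else:
                fn += 1
    return tp, fp, tn, fn


# Toy 3 x 4 masks: the prediction misses one crack pixel and adds one spurious pixel.
actual = [[0, 1, 1, 0],
          [0, 1, 0, 0],
          [0, 1, 0, 0]]
predicted = [[0, 1, 0, 0],
             [0, 1, 1, 0],
             [0, 1, 0, 0]]

tp, fp, tn, fn = confusion_counts(predicted, actual)
print(tp, fp, tn, fn)
```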

The Precision (Equation (1)) represents the proportion of correctly predicted positive cases among all predicted positive cases.

The Recall (Equation (2)) represents the proportion of actual positive cases correctly predicted by the model.

The F1-score (Equation (3)) represents the harmonic mean of the Precision and Recall. Because it does not count true negatives, it is less affected by class imbalance than pixel accuracy and therefore provides a more reliable measure of model performance on imbalanced datasets. The F1-score ranges from 0 to 1, with 1 indicating the best performance.
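Written in their standard forms, which match the verbal definitions above, Equations (1)–(3) read:

```latex
\begin{align}
\text{Precision} &= \frac{TP}{TP + FP} \tag{1} \\
\text{Recall} &= \frac{TP}{TP + FN} \tag{2} \\
\text{F1-score} &= \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{3}
\end{align}
```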

The intersection over union (IoU) measures the overlap between the predicted and actual pixels and is calculated as intersection (TP) divided by union (TP + FP + FN).

The mIoU is the average IoU across all the classes (Equation (5)), where N denotes the number of classes. Similarly to the F1-score, higher values indicate better segmentation performance.
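In standard notation, consistent with the definitions above, the IoU (taken here to be Equation (4)) and the mIoU (Equation (5)) can be written as follows, where IoU_i denotes the IoU of class i:

```latex
\begin{align}
\text{IoU} &= \frac{TP}{TP + FP + FN} \tag{4} \\
\text{mIoU} &= \frac{1}{N} \sum_{i=1}^{N} \text{IoU}_i \tag{5}
\end{align}
```

For binary crack segmentation, N = 2 (crack and background), so a high background IoU can raise the mIoU well above the IoU of the crack class alone.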

4.3. Experimental Results

Table 2 lists the training parameters used in the experiments. The model was trained for 50 epochs with a batch size of one. The learning rate was initialized at 1 × 10⁻⁴ and gradually reduced to 1 × 10⁻⁶. The Adam optimizer was used for training, and binary cross entropy was applied as the loss function.
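The loss and the learning-rate range can be illustrated with a short sketch. The binary cross entropy below is the standard definition; the geometric decay is an assumption, because the text states only the start and end values of the learning rate and not the schedule that connects them.

```python
import math


def binary_cross_entropy(predictions, targets, eps=1e-7):
    """Mean binary cross entropy between predicted probabilities and 0/1 targets."""
    total = 0.0
    for p, t in zip(predictions, targets):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(predictions)


def learning_rate(epoch, num_epochs=50, lr_start=1e-4, lr_end=1e-6):
    """Assumed schedule: geometric decay from lr_start (epoch 0) to lr_end (last epoch)."""
    progress = epoch / (num_epochs - 1)
    return lr_start * (lr_end / lr_start) ** progress


# Confident correct predictions give a small loss; an uninformative 0.5 gives ln 2.
confident_loss = binary_cross_entropy([0.9, 0.1], [1, 0])
uninformative_loss = binary_cross_entropy([0.5, 0.5], [1, 0])
schedule = [learning_rate(epoch) for epoch in range(50)]
print(confident_loss, uninformative_loss, schedule[0], schedule[-1])
```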

Table 3 presents the results of the proposed model, which achieved a Recall of 0.6739, a Precision of 0.6003, an F1-score of 0.6350, and an mIoU of 0.7184.
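The reported values can be cross-checked with a few lines of arithmetic. The F1 check uses only the reported Precision and Recall. The remaining quantities are derived rather than reported: they assume that F1 and IoU are computed from the same pooled pixel counts and that the mIoU averages exactly two classes (crack and background).

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def iou_from_f1(f1):
    """IoU implied by F1 when both are computed from the same TP, FP, and FN."""
    return f1 / (2 - f1)


precision, recall, reported_miou = 0.6003, 0.6739, 0.7184

f1 = f1_score(precision, recall)
# Derived under the stated assumptions; these are not values reported in the paper.
implied_crack_iou = iou_from_f1(f1)
implied_background_iou = 2 * reported_miou - implied_crack_iou

print(round(f1, 4), round(implied_crack_iou, 4), round(implied_background_iou, 4))
```

The recomputed F1 agrees with the reported 0.6350 to four decimal places. Under the stated assumptions, the mIoU exceeds the F1-score because the background class, which dominates most crack images, contributes a much higher IoU than the crack class.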

5. Discussion

Table 4 compares the mIoU performance of the proposed model with that of other existing models, including U-Net by Nguyen et al. [30], Attention U-Net by Di Benedetto et al. [31], DDRNet [32], CrackNex [33], STDCSeg [34], and DeepLabV3 [35]. The proposed model achieved the highest mIoU of 0.7184, indicating that its predicted crack pixels align more closely with the actual crack pixels. Although the numerical improvement over the next-best model, DeepLabV3, is modest, we consider the gain meaningful, while noting that its statistical significance has not been formally tested. Beyond pure accuracy, our model’s architectural design was intended to provide a strong balance between performance and computational efficiency. By integrating a Vision Transformer within the established U-Net encoder–decoder structure, we aimed for a more efficient alternative to heavier models. This focus on efficiency makes the proposed model a suitable candidate for deployment in resource-constrained environments, such as on-board systems in drones, where both accuracy and processing speed are critical.


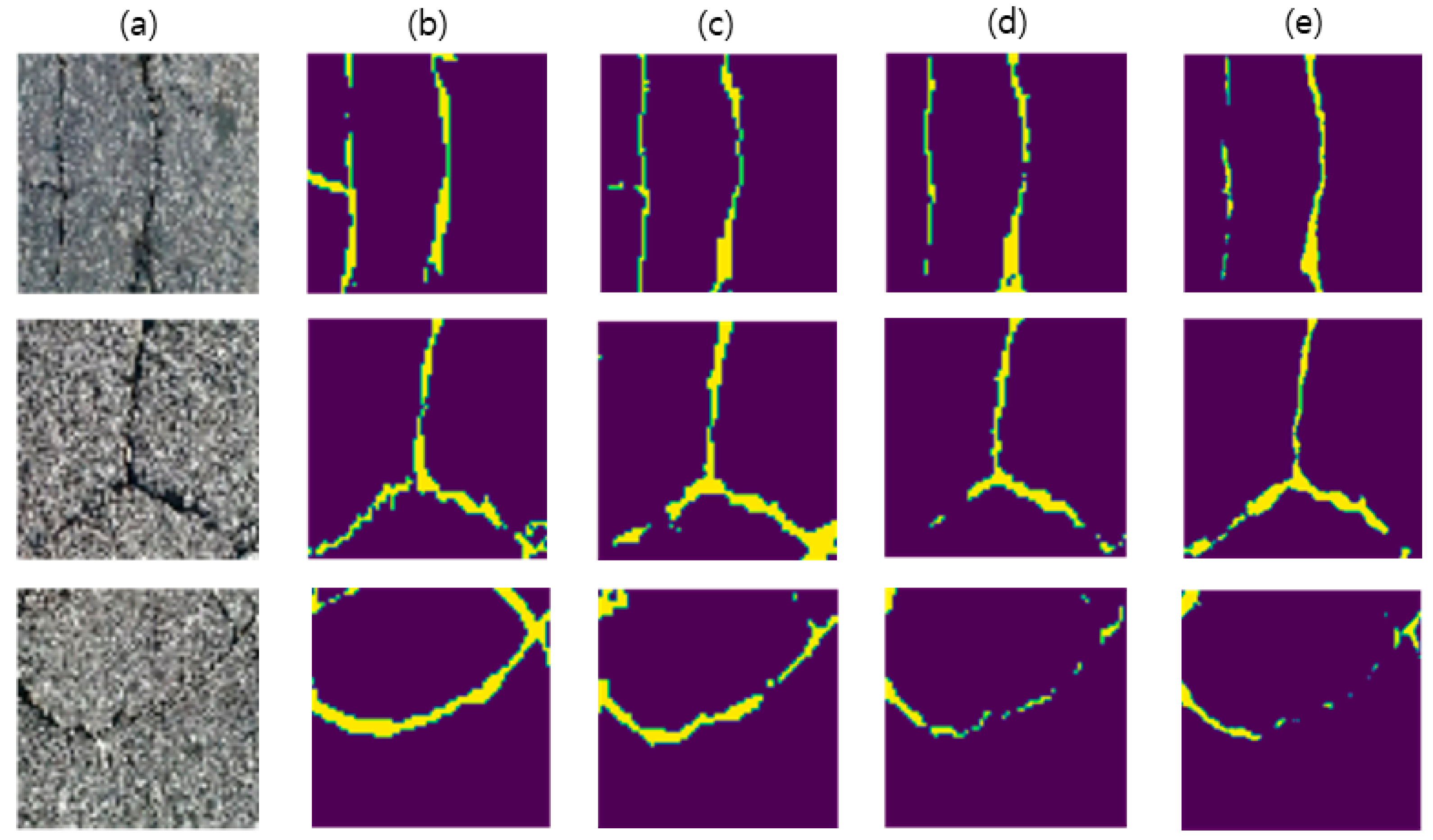

The qualitative results shown in Figure 10, which presents a visual comparison with Attention U-Net [31] and U-Net [30], further illuminate the architectural benefits of our model. In the first and third images, our model successfully segments the cracks as continuous lines, whereas the other models produce fragmented predictions. This improvement in crack connectivity can be attributed to the ViT encoder, which enhances the model’s ability to capture global features and long-range dependencies. Unlike purely CNN-based approaches that are limited by their local receptive fields, our hybrid model can better understand the overall structure of a crack, leading to more coherent segmentation masks.

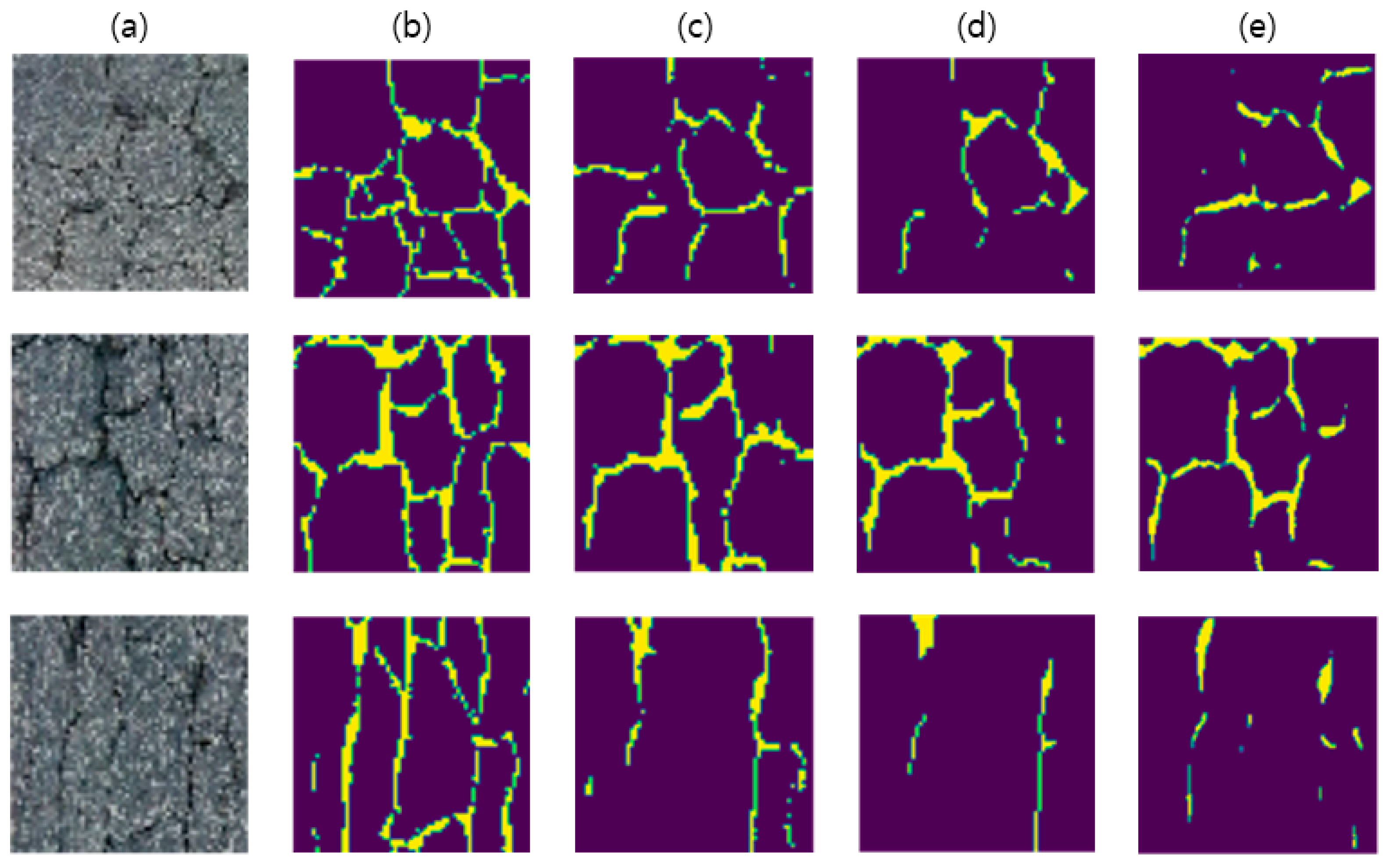

Despite these strengths, the model exhibits limitations. As seen in Figure 11, segmentation performance declined for cracks with highly complex and fine patterns. This is likely a consequence of information loss from resizing the input images from 400 × 400 to 64 × 64 to accommodate hardware constraints. Our analysis of failure cases also revealed that the model sometimes produces false positives on non-crack images, particularly those with strong shadows or repetitive, high-contrast textures like mortar joints, which can be mistaken for crack features. This is likely exacerbated by the imbalance in the CrackSeg9k dataset.

These findings have significant practical implications for automated infrastructure monitoring. The model’s ability to produce connected and accurate segmentation masks can serve as a foundational technology for automated inspection systems, potentially deployed on drones or robots. This would not only enhance safety and efficiency but also provide objective, consistent data for tracking crack propagation over time, enabling a proactive approach to maintenance. The precise data generated can also be integrated with Building Information Modeling (BIM) or digital twin platforms for advanced, data-driven asset management.

To address the identified limitations, future research will proceed in several directions. First, to mitigate information loss, a patch-based processing strategy will be explored to allow the model to work with high-resolution images. Second, to reduce false positives, the training dataset will be augmented with a wider variety of challenging non-crack images. Finally, to further validate the model’s generalization capabilities, it will be tested on other public crack datasets. These efforts will aim to develop a more robust and practical solution for real-world infrastructure monitoring.

6. Conclusions

In this study, we introduced a hybrid U-Net ViT architecture that combines a CNN for local feature extraction with a ViT for capturing global context, aiming to improve the accuracy of crack segmentation. Our model achieved a higher mIoU than conventional U-Net and Attention U-Net models on the CrackSeg9k dataset, demonstrating its effectiveness in segmenting complex and irregular crack patterns. The qualitative results show that our model can better connect crack segments and detect cracks missed by other models.

However, our study has several limitations. First, the model’s performance decreases when segmenting very fine cracks, largely due to information loss from image resizing to meet hardware constraints. Future work should explore methods to process high-resolution images, for example, by using patch-based processing or more efficient transformer architectures. Second, the model is prone to false positives on images with linear features like shadows or textures. This could be mitigated by augmenting the training data with a wider variety of non-crack images.

Furthermore, the reported mIoU improvement of 0.7% over DeepLabV3 is modest. While our qualitative results show clear improvements in crack connectivity, a more thorough evaluation on multiple datasets would be needed to establish the statistical significance of this improvement. We also did not provide a detailed comparison of runtime and resource usage against CNN-only models, which is an important aspect for practical applications.

For future research, we recommend the following: (1) exploring architectural modifications and hyperparameter optimization to improve performance; (2) evaluating the model on a wider range of datasets to test its generalization capabilities; (3) conducting a thorough analysis of computational costs; and (4) investigating the use of more recent foundation models, such as vision-language models (VLMs), which have shown great promise in various computer vision tasks. Addressing these points will help to better understand the practical value of our proposed approach and guide the development of more robust and efficient crack segmentation models.

Author Contributions

Conceptualization, J.N. and J.J. (Junyoung Jang); methodology, J.N. and J.J. (Junyoung Jang); software, J.J. (Junyoung Jang); validation, J.N., H.Y. and J.J. (Jeonghoon Jo); formal analysis, J.N. and J.J. (Junyoung Jang); investigation, J.N.; resources, J.J. (Junyoung Jang); data curation, J.J. (Junyoung Jang) and J.J. (Jeonghoon Jo); writing—original draft preparation, J.N.; writing—review and editing, J.N. and H.Y.; visualization, J.J. (Junyoung Jang); supervision, H.Y.; project administration, H.Y.; funding acquisition, H.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research and the APC were funded by the MSIT (Ministry of Science and ICT), Korea, under the National Program for Excellence in SW supervised by the IITP (Institute of Information & Communications Technology Planning & Evaluation) in 2025, grant number 2024-0-00062.

Data Availability Statement

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 84–90.

2. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

3. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

4. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

5. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

6. Girshick, R. Fast R-CNN. arXiv 2015, arXiv:1504.08083.

7. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

8. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

9. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Computer Vision–ECCV 2016, Proceedings of the 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; Volume 9905, pp. 21–37.

10. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

11. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015, Proceedings of the 18th International Conference, Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015; Volume 9351, pp. 234–241.

12. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

13. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

14. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2961–2969.

15. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 6000–6010.

16. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16×16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

17. Alfarrarjeh, A.; Shahabi, C.; Luo, Y.; Liu, Y. A deep learning approach for road damage detection from smartphone images. In Proceedings of the 2018 IEEE International Conference on Big Data (Big Data), Seattle, WA, USA, 10–13 December 2018; pp. 5201–5204.

18. Cha, Y.J.; Choi, W.; Büyüköztürk, O. Deep learning-based crack damage detection using convolutional neural networks. Comput.-Aided Civ. Infrastruct. Eng. 2017, 32, 361–378.

19. Wang, J.; Liu, S.; Li, S.; Jiang, T. A real-time bridge crack detection method based on an improved inception-resnet-v2 structure. IEEE Access 2021, 9, 93209–93223.

20. Basha, S.S.; Dubey, S.R.; Pulabaigari, V.; Mukherjee, S. Impact of fully connected layers on performance of convolutional neural networks for image classification. Neurocomputing 2020, 378, 112–119.

21. Liu, Y.; Cao, D.; Xu, Y.; Wang, Y. DeepCrack: A deep hierarchical feature learning architecture for crack segmentation. Neurocomputing 2019, 338, 139–153.

22. Yang, F.; Zhang, L.; Yu, S.; Prokhorov, D.; Mei, X.; Ling, H. Feature pyramid and hierarchical boosting network for pavement crack detection. IEEE Trans. Intell. Transp. Syst. 2019, 21, 1525–1535.

23. Zhang, L.; Yang, F.; Zhang, Y.D.; Zhu, Y.J. Pixel-level cracking detection on 3D asphalt pavement images through deep-learning-based CrackNet-V. IEEE Trans. Intell. Transp. Syst. 2020, 21, 273–284.

24. Zheng, S.; Lu, J.; Zhao, H.; Zhu, X.; Luo, Z.; Wang, Y.; Fu, Y.; Feng, J.; Xiang, T.; Torr, P.H.S.; et al. Rethinking semantic segmentation from a sequence-to-sequence perspective with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 6881–6890.

25. Zhang, P.; Wang, D.; Lu, H.; Wang, H.; Ruan, X. Amulet: Aggregating multi-level convolutional features for salient object detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 202–211.

26. Yu, F.; Koltun, V. Multi-scale context aggregation by dilated convolutions. arXiv 2015, arXiv:1511.07122.

27. Hassani, A.; Shi, H. Dilated neighborhood attention transformer. arXiv 2022, arXiv:2209.15001.

28. Hassani, A.; Navon, A.; Wolf, L.; Shi, H. Neighborhood attention transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–24 June 2023; pp. 6185–6194.

29. Kulkarni, S.; Hall, D.; Stein, S.; Körner, M. CrackSeg9k: A collection and benchmark for crack segmentation datasets and frameworks. In Computer Vision–ECCV 2022 Workshops, Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 179–195.

30. Nguyen, N.T.H.; Nguyen, D.Q.; Tran, N.T.; Nguyen, H.T.; Nguyen, B.N.; Le, V.H. Pavement crack detection using convolutional neural network. In Proceedings of the 9th International Symposium on Information and Communication Technology (SoICT), Danang, Vietnam, 6–7 December 2018; pp. 251–256.

31. Di Benedetto, A.; Fiani, M.; Gujski, L.M. U-Net-based CNN architecture for road crack segmentation. Infrastructures 2023, 8, 90.

32. Peng, J.; Gao, M.; Yang, G.; Xu, Y.; Liu, Y.; Zhou, K. PP-LiteSeg: A superior real-time semantic segmentation model. arXiv 2022, arXiv:2204.02681.

33. Yao, Z.; Xie, H.; Chen, Y.; Yang, Y.; Yang, W.; Fan, H. CrackNex: A few-shot low-light crack segmentation model based on Retinex theory for UAV inspections. arXiv 2024, arXiv:2403.03063.

34. Fan, M.; Zhang, R.; Wang, J.; Tang, M.; Zhang, Y. Rethinking BiSeNet for real-time semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 9716–9725.

35. Chen, L.C. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).