1. Introduction

With the rapid development of high-speed railways, growing operation and maintenance demands have made conventional “planned inspection and maintenance” insufficient, creating an urgent need for more efficient and precise solutions. Building an intelligent railway operation and maintenance system is becoming a key path to high-quality railway development [1,2]. Among the various equipment systems, the traction power supply system is critical for stable train operation. As its core component, the catenary system both supports the conductors and provides high-voltage insulation, so its operating status directly affects railway transportation safety [3]. Although catenary masts are relatively stable, the insulators connecting towers and conductors are more prone to aging and damage from long-term environmental exposure. Their high fault frequency and dense distribution make them essential monitoring targets in intelligent operation and maintenance systems.

For a long time, insulator defect identification has relied on on-site and helicopter inspections. Although these methods are effective for small-scale monitoring, they face significant limitations in railway scenarios because of the complex environment and the massive volume of high-dimensional operation data generated [4]. Manual approaches struggle to process the large volume of diverse, high-resolution data that intelligent railway systems require. The challenge is greater still for catenary insulators, which are often densely distributed along a single cable, increasing missed and false detections and thus affecting maintenance decisions. Achieving efficient and accurate defect identification has therefore become a core objective of intelligent railway inspection systems, and advanced data collection and image recognition technologies are needed to overcome these bottlenecks.

In recent years, the rapid development of Unmanned Aerial Vehicle (UAV) technology has provided an efficient means of acquiring information on railway traction equipment [5,6]. High-resolution aerial images allow the status of insulators to be collected rapidly. However, the large-scale, diverse data that UAVs collect across varied environments pose significant challenges for insulator defect detection, particularly for classifying and localizing key targets. Precise detection is therefore required to extract defect information effectively from UAV images. Deep learning-based methods have achieved significant breakthroughs on this core task. These object detection algorithms fall into two-stage and one-stage methods. Two-stage models, such as R-CNN [7], Fast R-CNN [8], and Faster R-CNN [9], first generate region proposals and then classify them and refine their bounding boxes, achieving high accuracy at the cost of heavy computation and slow inference. In contrast, one-stage models, including the YOLO series [10,11,12,13,14], SSD [15], and RetinaNet [16], perform detection end to end. Owing to its end-to-end design, good real-time performance, and efficient deployment, the YOLO series is widely applied in insulator detection tasks.

Numerous studies have applied these models to traction equipment detection, improving insulator identification through strategies such as lightweight network design and attention mechanisms. For instance, Zhang et al. [17] employed a multi-scale large kernel attention (MLKA) module to strengthen the extraction of insulator defect features, achieving an accuracy of 99.22% on the CPLID dataset [18]. The LDIDD method proposed by Liu et al. [19] adopted the lightweight MobileNetv3 backbone, reducing the number of parameters by 46.6%. Hu et al. [20] improved insulator feature extraction in highly reflective scenes by introducing a deformable convolution module (DCNv2) and a global attention mechanism (GAM), achieving an accuracy of 99.4% on the SFID dataset [21]. Xu et al. [22] introduced the MAP-CA attention mechanism, which combines average pooling and max pooling, to improve perception in complex backgrounds, achieving an accuracy of 96.6% on CPLID. Although these models perform well under relatively ideal conditions, they are mainly designed for generic detection scenarios and trained on general insulator datasets, and have not yet been optimized for the complex conditions encountered in railway inspection.

In actual railway inspection, the complex and dynamic working environment places much higher demands on the accuracy and stability of insulator detection. These demands fall into three aspects:

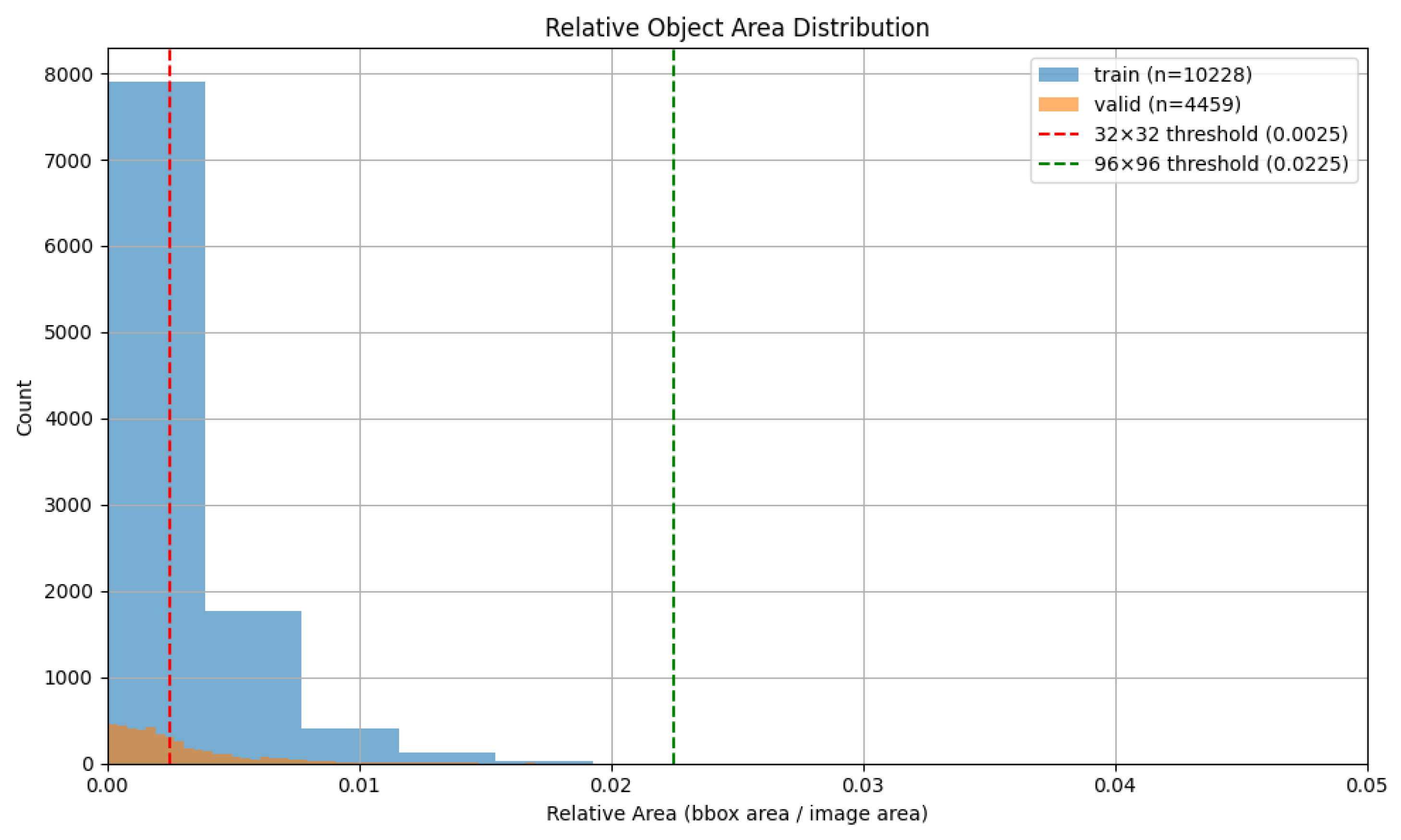

Small targets and feature loss: due to long-distance UAV imaging and limited imaging angles, insulators often occupy less than 0.25% of the image area, with frequent edge truncation and partial occlusion by obstacles.

Dense distribution and background interference: closely arranged insulators, overlapping boundaries, and complex backgrounds such as vegetation and building shadows make feature extraction more difficult.

Low-quality imaging and adverse weather conditions: inadequate lighting near tunnel entrances, UAV vibrations, and unfavorable weather (rain, snow, smog) cause image blur and detection instability.
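To make the “less than 0.25% of the image area” figure concrete, the sketch below converts an area fraction into the side length of an equivalent square box, both in the original frame and after resizing to the detector input. The 4000 × 3000 frame size is a hypothetical example, not the dataset's actual capture resolution; the 640-pixel input is the usual YOLOv8 default and is likewise assumed; the function names are illustrative.

```python
import math


def area_fraction(box, img_w, img_h):
    """Fraction of the image covered by an (x1, y1, x2, y2) box in pixels."""
    x1, y1, x2, y2 = box
    return max(0.0, x2 - x1) * max(0.0, y2 - y1) / (img_w * img_h)


def equivalent_side(fraction, img_w, img_h, input_size=640):
    """Side (pixels) of a square covering `fraction` of the image, returned for
    the original frame and after scaling the longer side to `input_size`
    (an aspect-preserving, letterbox-style resize)."""
    side = math.sqrt(fraction * img_w * img_h)
    scale = input_size / max(img_w, img_h)
    return side, side * scale


# Hypothetical 4000 x 3000 UAV frame; 0.25% is the upper bound quoted above.
orig_side, net_side = equivalent_side(0.0025, 4000, 3000)
print(f"{orig_side:.1f} px in the frame, {net_side:.1f} px at the network input")
# -> 173.2 px in the frame, 27.7 px at the network input
```

Under these assumptions, an insulator at the 0.25% bound shrinks to roughly 28 × 28 pixels at the network input, and insulators below the bound are smaller still.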

These ubiquitous interferences pose a key challenge for railway insulator detection. However, existing public datasets such as CPLID [18], IDID [23], and SFID [21] are mostly collected from transmission lines under stable lighting and do not cover these complexities, so detection models trained on them degrade significantly in real railway scenarios.

In addition, all of the recent work mentioned above builds defect detection models that classify insulators directly in the original image in an end-to-end manner. While effective on public datasets, such models lose stability in railway scenarios with long-distance imaging, complex backgrounds, and blurred images, where insulators appear as small, blurry targets whose defect features are weaker and harder to extract. Inspired by [24], this paper proposes a two-stage detection framework that first detects and localizes insulators and then performs defect analysis on the cropped regions, reducing background interference and improving defect detection accuracy.
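The hand-off between the two stages can be sketched as turning first-stage boxes into crop windows for the defect analyzer. This is a minimal sketch under stated assumptions: detections are taken to be `(x1, y1, x2, y2, score)` tuples in pixel coordinates, and the confidence threshold, the 10% context margin, and the name `crop_boxes` are hypothetical choices, not settings reported for this framework.

```python
import math
from typing import List, Tuple

Detection = Tuple[float, float, float, float, float]  # x1, y1, x2, y2, score
Window = Tuple[int, int, int, int]                    # integer crop window


def crop_boxes(dets: List[Detection], img_w: int, img_h: int,
               conf_thr: float = 0.25, margin: float = 0.1) -> List[Window]:
    """Turn first-stage insulator boxes into padded, clipped crop windows.

    Boxes below `conf_thr` are dropped; each kept box is enlarged by `margin`
    times its width/height on every side for context, then clipped to the image.
    """
    windows = []
    for x1, y1, x2, y2, score in dets:
        if score < conf_thr:
            continue  # discard low-confidence localizations
        dx, dy = margin * (x2 - x1), margin * (y2 - y1)
        cx1 = max(0, math.floor(x1 - dx))
        cy1 = max(0, math.floor(y1 - dy))
        cx2 = min(img_w, math.ceil(x2 + dx))
        cy2 = min(img_h, math.ceil(y2 + dy))
        if cx2 > cx1 and cy2 > cy1:  # skip degenerate windows
            windows.append((cx1, cy1, cx2, cy2))
    return windows


# Hypothetical detections on a 4000 x 3000 frame; the second is below threshold.
dets = [(10, 20, 110, 70, 0.9), (0, 0, 5, 5, 0.1), (3950, 2950, 4000, 3000, 0.8)]
print(crop_boxes(dets, 4000, 3000))  # [(0, 15, 120, 75), (3945, 2945, 4000, 3000)]
```

Each window can then be sliced from the image array as `image[cy1:cy2, cx1:cx2]`. Clipping matters because insulators are frequently truncated at frame edges, and the margin gives the second-stage classifier a little surrounding context.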

This study focuses on insulator detection and localization, the first stage of this framework. Because its results serve directly as inputs for subsequent defect identification, high localization accuracy is essential. The challenges above fall into three key aspects: small-scale targets, dense distribution with background interference, and low-quality imaging under varied weather conditions. Solving these interrelated issues is key to stable and robust detection in railway environments. The core objective of this research is therefore to improve the accuracy and robustness of insulator detection in complex railway settings while keeping the model efficient and deployable in practice. The core contributions are as follows:

Construct complexRailway, the first comprehensive insulator detection dataset for real railway scenarios: collected from actual railway operating environments in Jinan, Guangzhou, and Xi’an, the dataset consists of 2500 high-resolution UAV aerial images and systematically covers the real-world challenges described above.

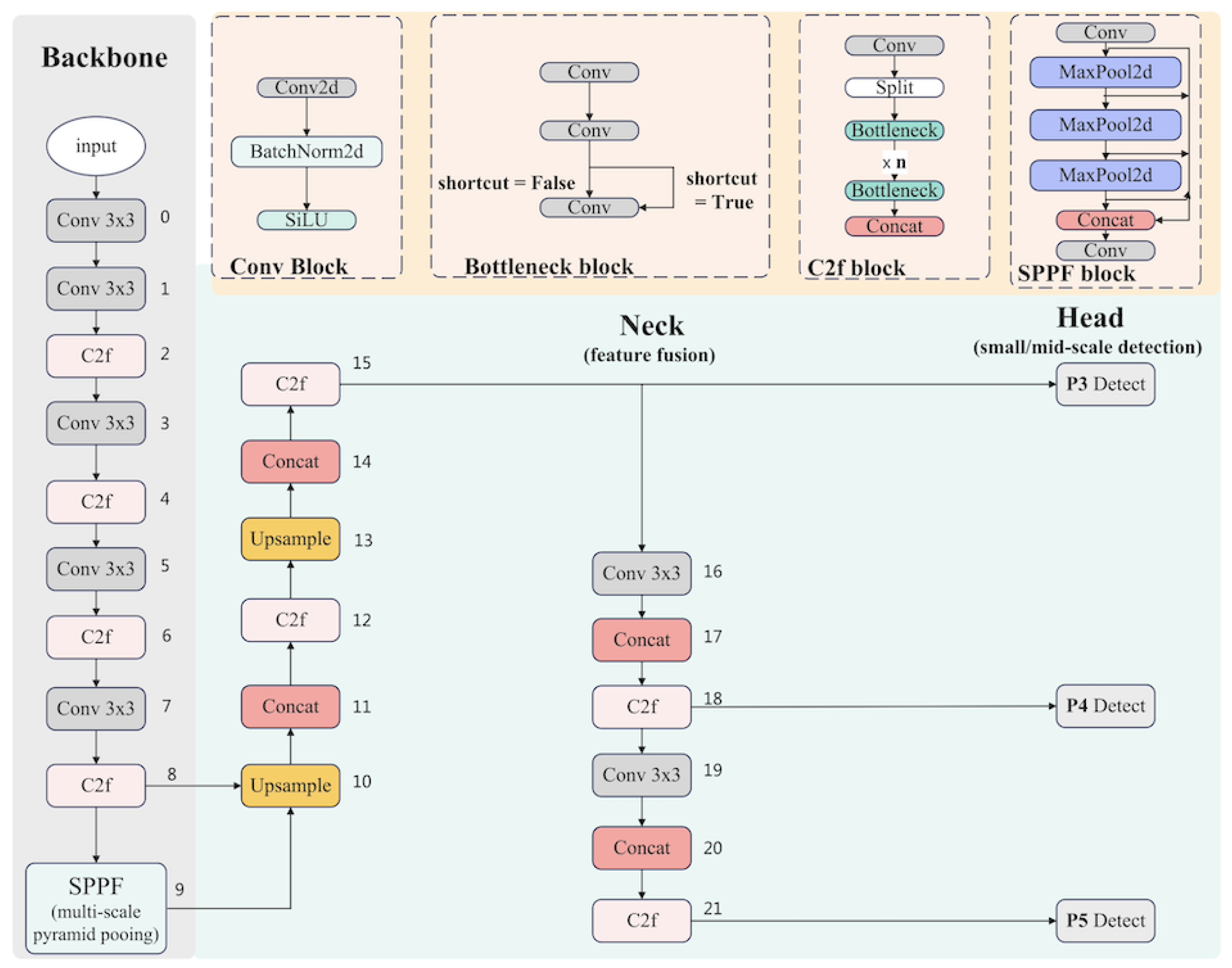

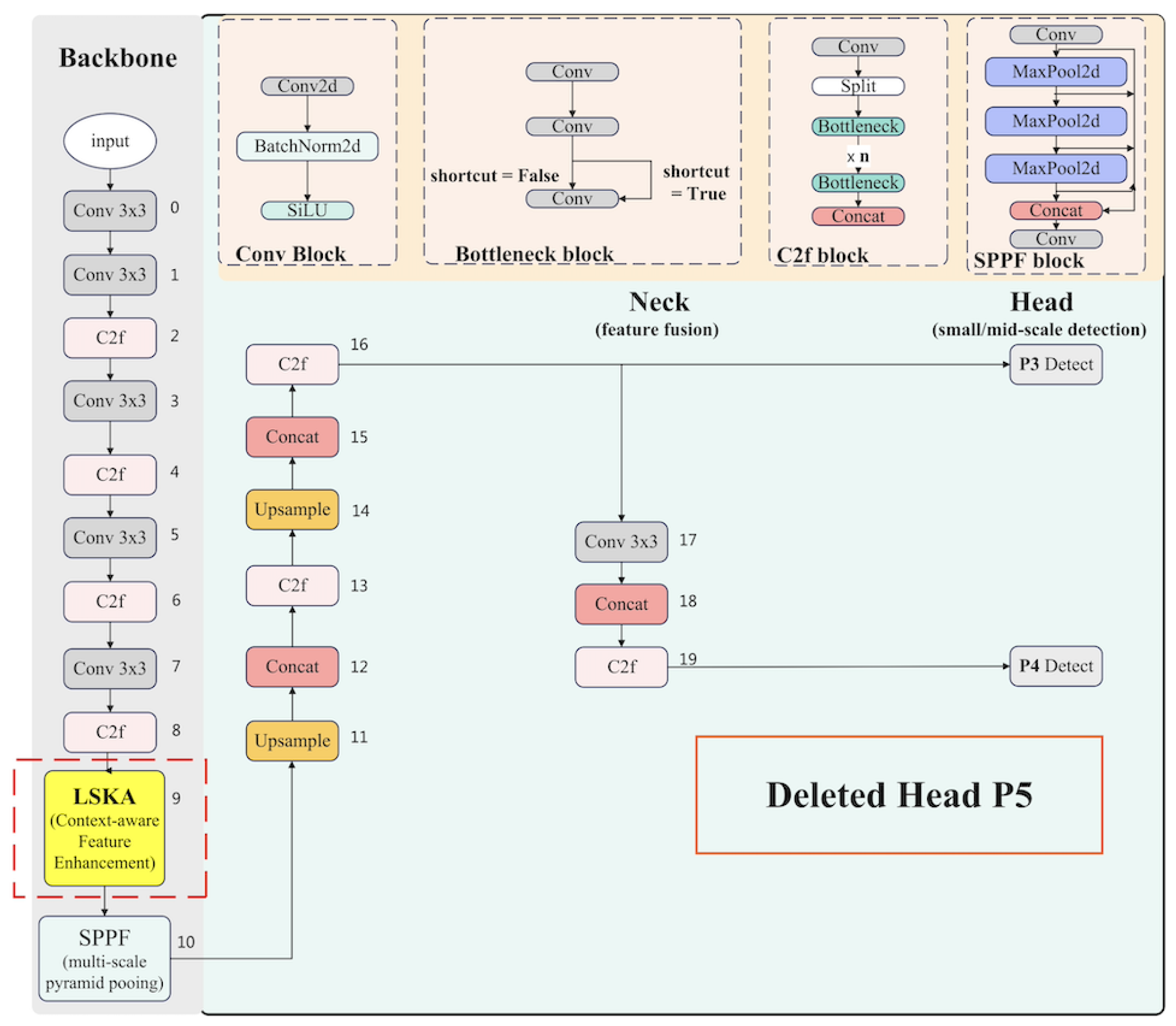

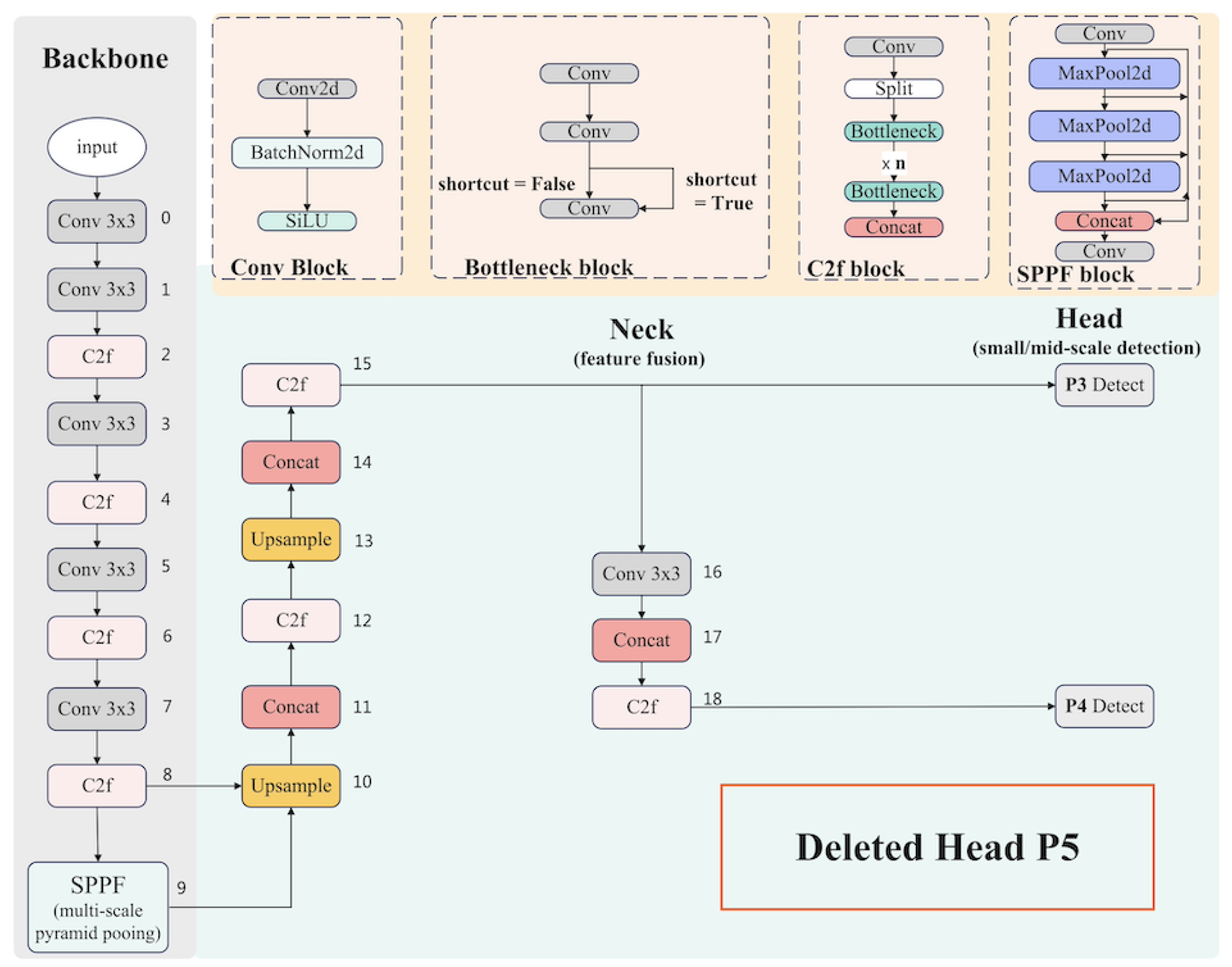

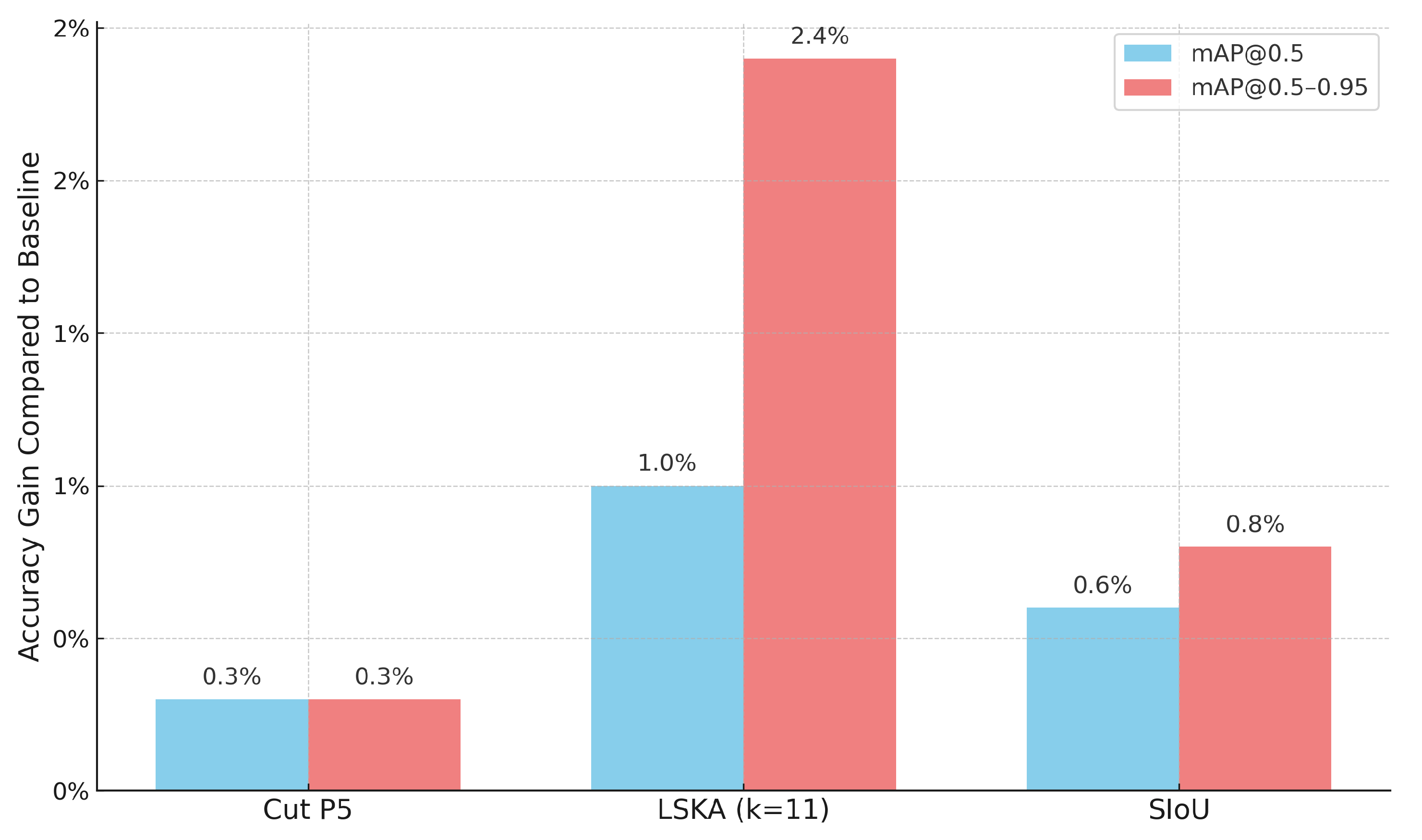

Propose the CutP5-LargeKernelAttention-SIoU (C5LS) detection framework, which makes three improvements to YOLOv8 to enhance detection accuracy and deployment efficiency:

Lightweight detection head design: Remove the P5 detection branch to reduce the computational load and high-level feature noise, making the model suitable for resource-constrained UAV edge computing devices.

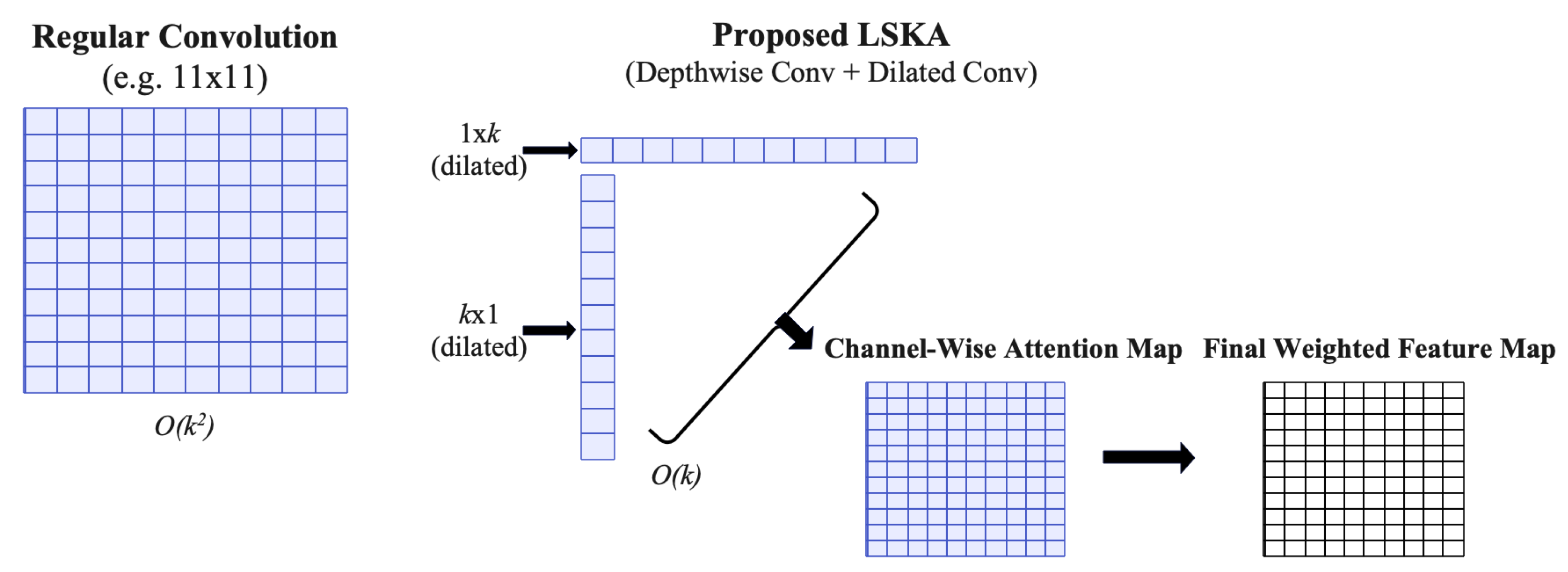

Enhanced feature extraction via Large Separable Kernel Attention (LSKA): Incorporate LSKA to enlarge the receptive field and strengthen channel perception in low-quality images, increasing mAP@0.5 by 1%.

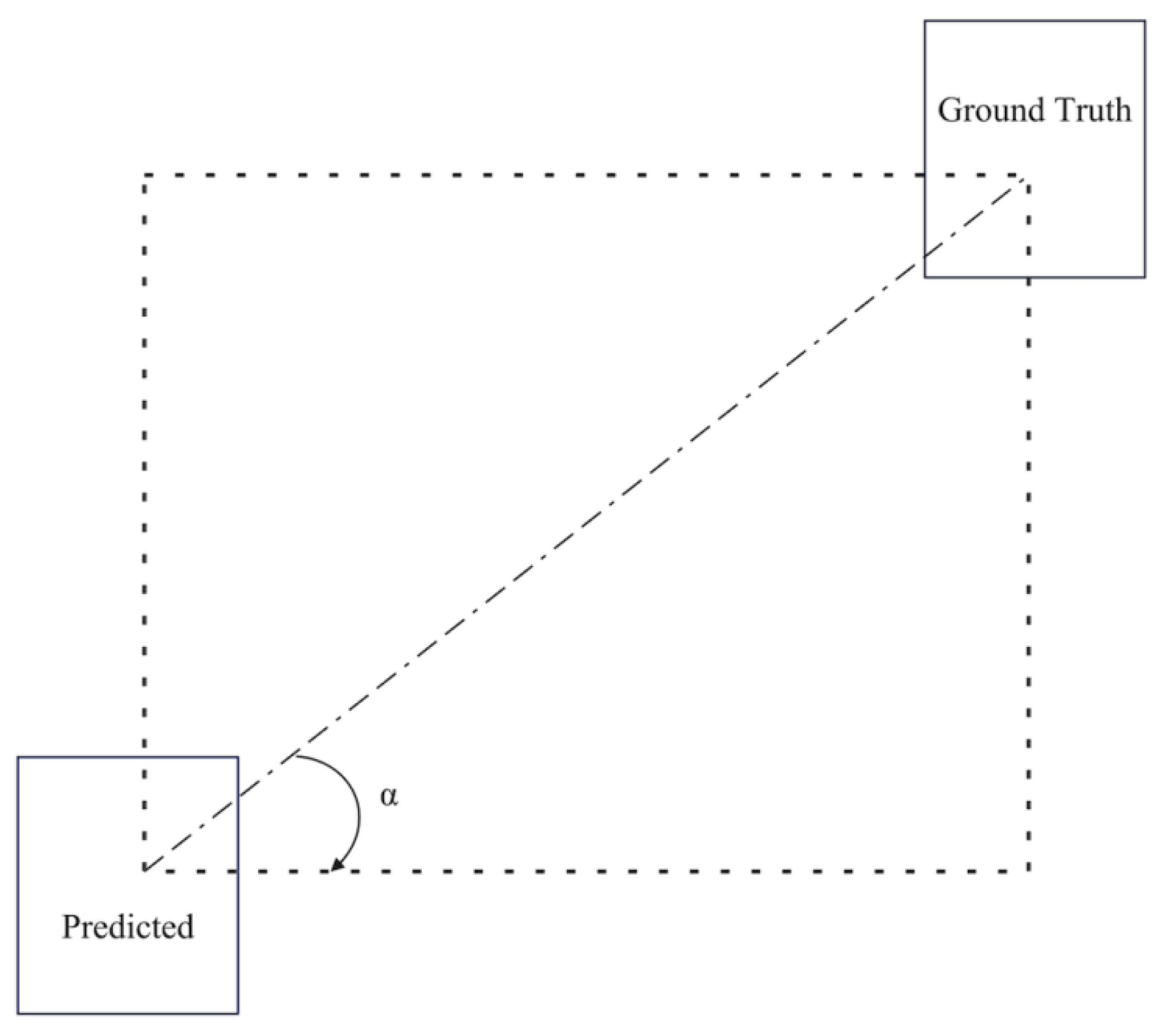

Enhanced localization accuracy via SIoU loss: Replace CIoU with the SIoU loss to strengthen geometric modeling and improve bounding-box regression accuracy for small objects, increasing mAP@0.5 by approximately 0.6%.

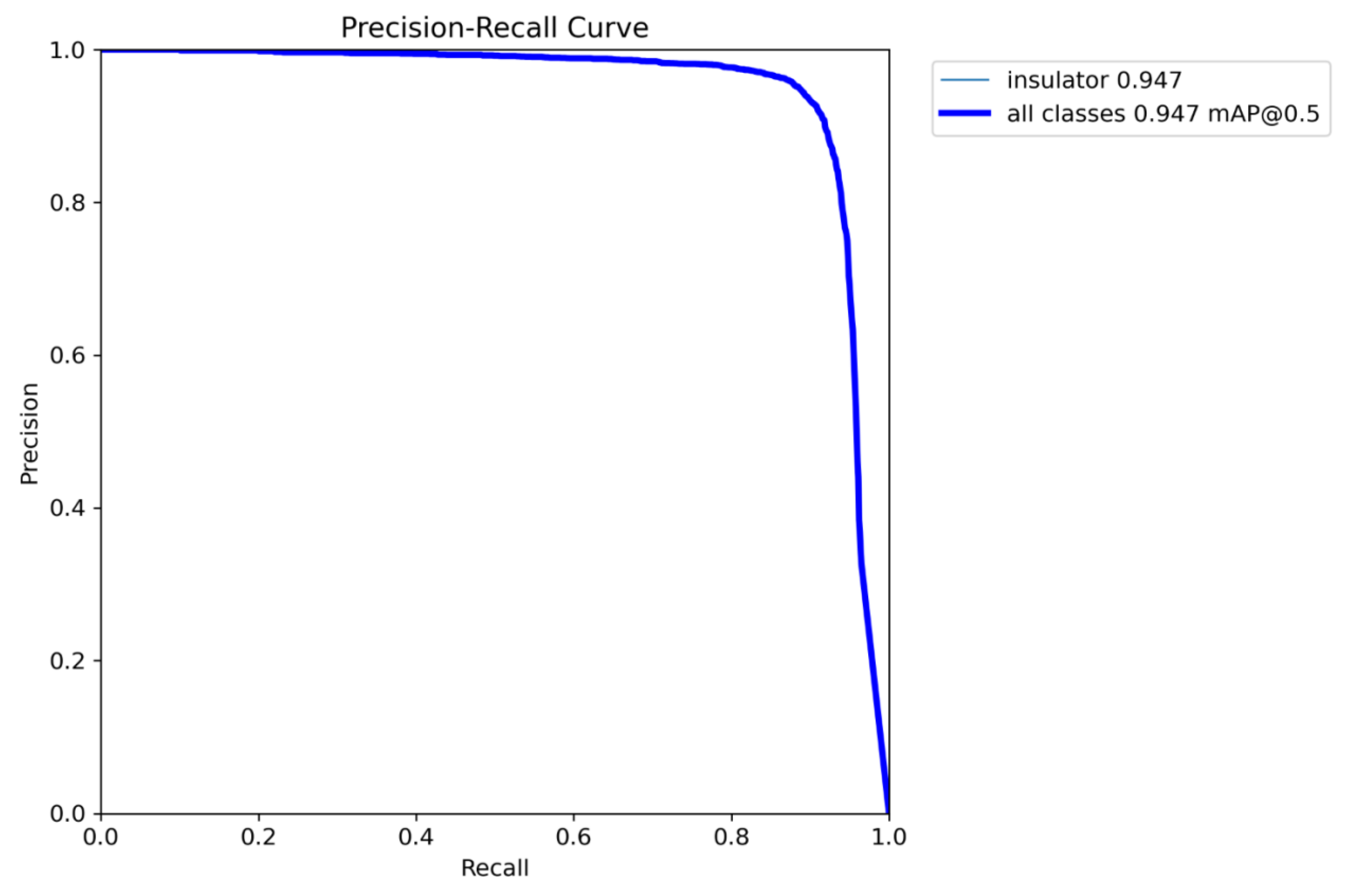

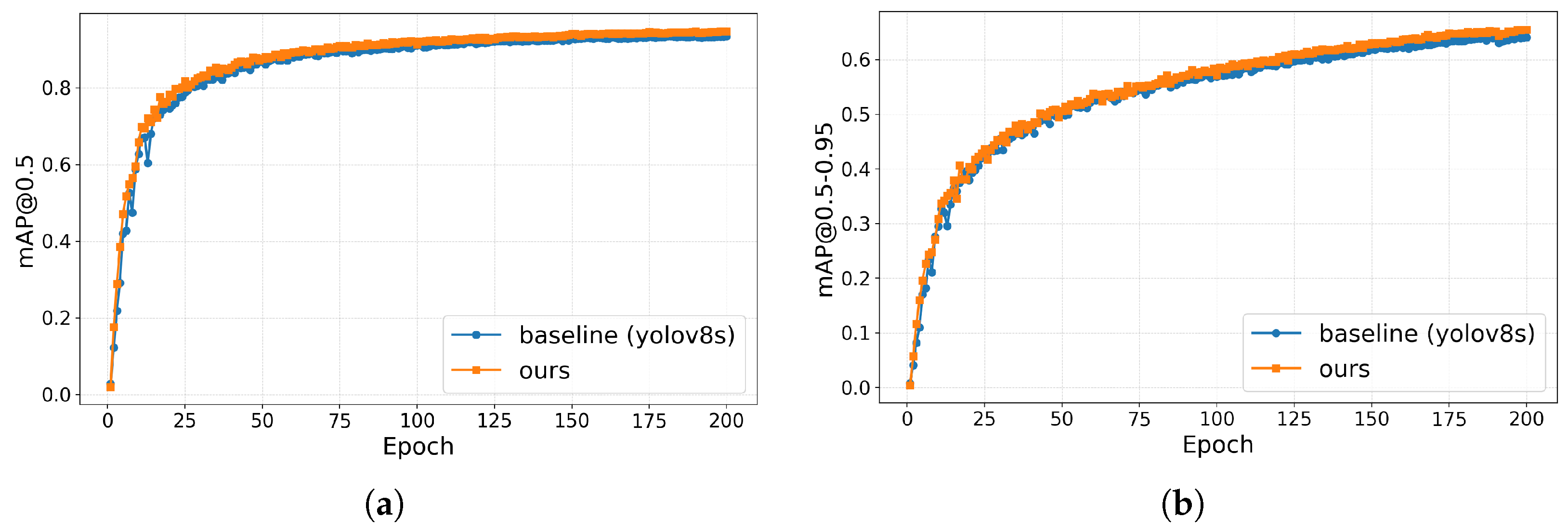

Conduct ablation experiments to quantify the effect of each of the three improvements. The results show that the proposed method achieves higher localization accuracy than mainstream models in complex scenarios. Compared with the baseline, mAP@0.5 improves by 1.9% and mAP@0.5–0.95 by 3.5%, with an inference time within 9.6 ms and 13 M parameters, indicating strong deployment potential.
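For readers less familiar with the two accuracy metrics quoted above, the sketch below computes single-class average precision (AP) at one IoU threshold and averages it over the thresholds 0.50, 0.55, …, 0.95 to obtain mAP@0.5–0.95. It uses greedy matching of detections in descending confidence order and all-point interpolation of the precision-recall curve. Evaluation toolkits differ in these details (COCO, for example, uses 101-point interpolation), so this is an illustration of the metric, not the exact protocol behind the reported numbers.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # x1, y1, x2, y2


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(dets: List[Tuple[str, float, Box]],
                      gts: Dict[str, List[Box]], thr: float) -> float:
    """AP for one class. dets: (image_id, score, box); gts: image_id -> boxes."""
    n_gt = sum(len(v) for v in gts.values())
    if n_gt == 0:
        return 0.0
    used = {k: [False] * len(v) for k, v in gts.items()}
    tp_flags = []
    for img, _, box in sorted(dets, key=lambda d: d[1], reverse=True):
        # Match to the unmatched ground truth with the highest IoU.
        best, best_j = 0.0, -1
        for j, g in enumerate(gts.get(img, [])):
            o = iou(box, g)
            if not used[img][j] and o > best:
                best, best_j = o, j
        if best_j >= 0 and best >= thr:
            used[img][best_j] = True
            tp_flags.append(1)
        else:
            tp_flags.append(0)  # unmatched or IoU too low: false positive
    # Precision and recall after each ranked detection.
    recall, precision, tp = [], [], 0
    for k, flag in enumerate(tp_flags, start=1):
        tp += flag
        recall.append(tp / n_gt)
        precision.append(tp / k)
    # All-point interpolation: make precision non-increasing, integrate over recall.
    mrec = [0.0] + recall + [1.0]
    mpre = [0.0] + precision + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1]
               for i in range(len(mrec) - 1) if mrec[i + 1] != mrec[i])


def map_50_95(dets, gts) -> Tuple[float, float]:
    """Return (AP at IoU 0.5, mean AP over IoU 0.50:0.05:0.95)."""
    thresholds = [round(0.5 + 0.05 * i, 2) for i in range(10)]
    aps = [average_precision(dets, gts, t) for t in thresholds]
    return aps[0], sum(aps) / len(aps)


gts = {"img0": [(0, 0, 10, 10)]}
print(map_50_95([("img0", 0.9, (0, 0, 10, 10))], gts))   # (1.0, 1.0)
print(map_50_95([("img0", 0.9, (0, 0, 10, 7.8))], gts))  # IoU 0.78 -> (1.0, 0.6)
```

The second example shows why mAP@0.5–0.95 is the stricter metric: a box with IoU 0.78 counts fully at 0.5 but fails the four thresholds from 0.80 upward, so gains in that metric reflect tighter box placement rather than detection alone.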

4. Discussion

This study introduces C5LS, an enhanced YOLOv8 variant, to address the challenges of insulator detection in railway environments characterized by densely distributed small targets, background interference, and low-quality imaging. Three targeted optimizations, namely removing the P5 detection branch to focus on small and medium objects, incorporating a Large Separable Kernel Attention (LSKA) module to enhance feature extraction, and adopting the SIoU loss function to refine localization, significantly improve detection accuracy and robustness. Experimental results show that C5LS outperforms the baseline by 1.9% in mAP@0.5 and 3.5% in mAP@0.5–0.95 while maintaining high inference speed and a lightweight structure, indicating that it is well suited to deployment in challenging railway environments.
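Why removing the P5 branch costs little for small insulators can be seen from grid arithmetic. The sketch assumes the default 640 × 640 YOLOv8 input and the standard output strides of 8, 16, and 32 for P3, P4, and P5; the YOLOv8 head is anchor-free and predicts one box per grid cell per level. The 28-pixel object size is an illustrative value, not a statistic of the dataset.

```python
def grid_cells(input_size: int, strides) -> dict:
    """Prediction locations per pyramid level: one per cell of an (S/s x S/s) grid."""
    return {s: (input_size // s) ** 2 for s in strides}


def cells_spanned(object_px: float, stride: int) -> float:
    """Grid cells a square object of side `object_px` spans along one axis."""
    return object_px / stride


full = grid_cells(640, (8, 16, 32))  # P3, P4, P5
no_p5 = grid_cells(640, (8, 16))     # P3, P4 only
print(sum(full.values()), sum(no_p5.values()))  # 8400 8000
for s in (8, 16, 32):
    print(f"stride {s}: a 28 px insulator spans {cells_spanned(28, s):.3f} cells per axis")
# stride 8: 3.500, stride 16: 1.750, stride 32: 0.875
```

At stride 32 such an object covers less than one grid cell, so the P5 map offers almost no spatial resolution for it, while the P5 branch still adds its own layers and 400 prediction locations to every forward pass.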

Compared with existing methods, the main innovation of C5LS lies in its adaptability to complex railway scenarios. Its design enables accurate insulator localization, laying a solid foundation for subsequent defect recognition and for spatial referencing in maintenance.
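The localization refinement attributed to the SIoU loss in the summary above comes from adding angle, distance, and shape penalties to the plain IoU term. Below is a minimal pure-Python sketch for a single predicted/ground-truth box pair, following the commonly published SIoU formulation: loss = 1 − IoU + (Δ + Ω)/2, where the angle cost Λ modulates the distance cost Δ through γ = 2 − Λ, and the shape cost Ω uses an exponent θ. The value θ = 4 and the small `eps` are assumptions; batched tensor implementations in training frameworks differ in detail, and this is not the C5LS training code.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # x1, y1, x2, y2


def siou_loss(pred: Box, gt: Box, theta: float = 4.0, eps: float = 1e-7) -> float:
    """SIoU box-regression loss for one pair of axis-aligned boxes."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt
    pw, ph = px2 - px1, py2 - py1
    gw, gh = gx2 - gx1, gy2 - gy1

    # Plain IoU term.
    iw = max(0.0, min(px2, gx2) - max(px1, gx1))
    ih = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = iw * ih
    iou = inter / (pw * ph + gw * gh - inter + eps)

    # Width and height of the smallest enclosing box.
    cw = max(px2, gx2) - min(px1, gx1)
    ch = max(py2, gy2) - min(py1, gy1)

    # Offset between box centers and its length.
    dx = (gx1 + gx2) / 2 - (px1 + px2) / 2
    dy = (gy1 + gy2) / 2 - (py1 + py2) / 2
    sigma = math.hypot(dx, dy) + eps

    # Angle cost: Lambda = sin(2 * alpha), with alpha the smaller angle between
    # the center offset and an axis; 0 when aligned with an axis, 1 at 45 degrees.
    sin_alpha = min(abs(dx), abs(dy)) / sigma
    angle_cost = math.sin(2 * math.asin(sin_alpha))

    # Distance cost on normalized center offsets, scaled by gamma = 2 - Lambda.
    gamma = 2.0 - angle_cost
    rho_x = (dx / (cw + eps)) ** 2
    rho_y = (dy / (ch + eps)) ** 2
    distance_cost = (1 - math.exp(-gamma * rho_x)) + (1 - math.exp(-gamma * rho_y))

    # Shape cost: relative width and height mismatch.
    omega_w = abs(pw - gw) / (max(pw, gw) + eps)
    omega_h = abs(ph - gh) / (max(ph, gh) + eps)
    shape_cost = (1 - math.exp(-omega_w)) ** theta + (1 - math.exp(-omega_h)) ** theta

    return 1.0 - iou + 0.5 * (distance_cost + shape_cost)


print(round(siou_loss((2, 0, 12, 10), (0, 0, 10, 10)), 4))  # 0.3604
```

In this example the IoU term alone would give 1 − 80/120 ≈ 0.3333; the extra ≈ 0.027 comes from the distance cost on the 2-pixel horizontal center offset, which keeps a useful gradient toward the target center even when boxes already overlap.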

However, although the model is optimized for typical railway scenarios, real-world environments remain more complex than the dataset covers, which limits robustness under certain extreme conditions, such as highly reflective metallic backgrounds. Moreover, synthetic weather augmentation, such as simulated rain and snow, may lack fidelity, reducing the model's robustness under real adverse conditions. Additionally, while integrating LSKA improves detection accuracy, the additional parameters may hinder deployment on resource-constrained edge devices. Future work will explore photo-realistic augmentation techniques, such as GANs, to enhance dataset diversity, and will integrate defect classification following [24] as well as 3D localization. The model will also be made more lightweight to further support intelligent and efficient railway maintenance.
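The parameter concern raised above for LSKA can be estimated by counting weights. Large Kernel Attention (LKA) builds a large receptive field from a depthwise local convolution, a depthwise dilated convolution, and a 1 × 1 channel-mixing convolution; LSKA replaces each depthwise k × k kernel with a cascaded 1 × k and k × 1 pair, so per-channel depthwise weights grow linearly rather than quadratically with kernel size. The sketch counts weights (biases ignored) for one illustrative configuration, a 5-tap local kernel and a 7-tap kernel with dilation 3, and a hypothetical 256-channel feature map; the configuration actually used inside C5LS is not stated in this section.

```python
def receptive_field(local_k: int, dilated_k: int, dilation: int) -> int:
    """Side of the region seen by a local conv followed by a dilated conv."""
    return local_k + (dilated_k - 1) * dilation


def attention_weights(channels: int, local_k: int, dilated_k: int,
                      separable: bool) -> int:
    """Weights (no biases) of LKA-style attention: two depthwise convs + one 1x1 conv."""
    if separable:
        depthwise = 2 * local_k + 2 * dilated_k  # 1xk and kx1 pairs (LSKA)
    else:
        depthwise = local_k ** 2 + dilated_k ** 2  # full kxk kernels (LKA)
    pointwise = channels * channels  # 1x1 channel-mixing convolution
    return channels * depthwise + pointwise


print(receptive_field(5, 7, 3))  # 23
print(attention_weights(256, 5, 7, separable=False),
      attention_weights(256, 5, 7, separable=True))  # 84480 71680
```

Under these assumptions the separable form cuts the depthwise weights from 18,944 to 6,144, but the 65,536 weights of the 1 × 1 convolution remain, so inserting the module still enlarges the network, which is the deployment trade-off noted above.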