Abstract

To address the issues of small-target missed detection, false alarms from cloud/fog interference, and low computational efficiency in traditional wildfire detection for transmission line corridors, this paper proposes a YOLOv11_MDS detection model that integrates Multi-Scale Convolutional Attention (MSCA) and Distribution-Shifted Convolution (DSConv). The MSCA module is embedded in the backbone and neck to enhance multi-scale dynamic feature extraction of flame and smoke by combining depth-wise strip convolutions with channel attention. DSConv, with its quantized dynamic shift mechanism, is introduced to reduce computational complexity while maintaining detection accuracy. In experiments, the improved model achieves an mAP@0.5 of 88.21%, which is 2.93 percentage points higher than the original YOLOv11. It also shows a 3.33% increase in recall and a frame rate of 242 FPS, with notable improvements in detecting small targets (pixel occupancy < 1%). Generalization tests show mAP improvements of 0.4% and 0.7% on benchmark datasets, reducing false and missed detections in complex backgrounds. This study provides an engineering solution for real-time wildfire monitoring of transmission lines that balances accuracy and efficiency.

1. Introduction

Overhead transmission lines frequently traverse mountainous and forested regions characterized by rugged terrain and dense vegetation, where they face severe threats from wildfires. Statistics show that in China, wildfire-induced transmission line trip accidents account for as much as 15–20% of the annual total, resulting in direct economic losses exceeding CNY 1 billion. The risk of wildfire incidents rises sharply during traditional fire-prone periods such as the Qingming Festival and the Spring Festival. Dense wildfire smoke significantly reduces air insulation strength, posing serious safety hazards to power grid equipment. In response, power utilities have accelerated the deployment of digital monitoring infrastructures. For example, China’s Southern Power Grid has mandated the installation of online wildfire monitoring devices in high-risk segments [1]. However, existing systems still suffer from substantial shortcomings: in complex environments, interference from clouds, mist, and artificial light often leads to false alarms (with reported false detection rates as high as 68%), and the massive volume of alarm information requires extensive human verification, thereby aggravating the operation and maintenance burden. Consequently, there is an urgent need to develop intelligent detection algorithms that can accurately identify wildfires while effectively avoiding false positives, thereby enhancing operational efficiency and ensuring grid security.

Intelligent wildfire monitoring in transmission corridors faces severe technical challenges. On one hand, common disturbances in mountainous regions, such as clouds, fog, or industrial smoke, exhibit spectral characteristics highly similar to wildfire smoke, while artificial illumination at night is easily misclassified as flames. As a result, traditional algorithms and even many existing deep learning models struggle to achieve reliable discrimination. On the other hand, early-stage wildfires typically appear as small-scale targets occupying less than 1% of the image pixels, and their morphology changes rapidly with wind direction, which places stringent requirements on the model’s ability to capture fine-grained and dynamic features. Furthermore, the massive scale of monitoring data (over 100,000 images generated daily per line) imposes significant pressure on real-time processing. Although mainstream detectors such as YOLOv8 achieve an inference latency of ∼0.3 s per 800 × 800 image, their efficiency remains inadequate in multi-camera edge-computing scenarios.

To address these issues, researchers have pursued multiple directions. For enhancing feature discrimination, Ye et al. [2] proposed pseudo-anomaly scene synthesis and a Euclidean-distance-based loss function, improving recognition of fire-specific features. Chen et al. [3] introduced the Convolutional Block Attention Module (CBAM) and multi-scale feature fusion into FireNet, thereby improving accuracy in heritage building fire detection. Shu et al. [1] targeted small-scale fire and smoke detection under multiple interference backgrounds in transmission corridors by integrating an Efficient Multi-scale Attention (EMA) mechanism, a weighted Bi-directional Feature Pyramid Network (BiFPN), the WIoU loss, and a Soft-NMS module, and proposed the YOLOv5s-EBWS detector, which effectively reduced false positives caused by mist and light interference. For reducing false alarm rates, multi-stage strategies are commonly adopted: Su et al. [4] first performed coarse detection using YOLOv7 and then introduced a specialized classifier to screen confusing objects, significantly lowering false positives. Similarly, Feng et al. [5] developed a two-stage pipeline, smoke region localization followed by fine-grained recognition, enhancing robustness under complex backgrounds. With respect to efficiency, Huang et al. [6] incorporated depthwise separable convolution and GhostNet into YOLO, greatly compressing the model size while maintaining accuracy. Xue et al. [7] optimized pyramid pooling modules to accelerate global feature extraction of small targets, thereby improving inference speed.

Nevertheless, limitations remain. Most existing models fail to sufficiently capture the dynamic textures of smoke and flames (e.g., vortex structures) and their spectral differences from interference sources, resulting in persistent small-target missed detections in foggy conditions [8,9]. Striking a balance between accuracy and efficiency also remains challenging: models pursuing high precision (e.g., MDCNN-based architectures) often exceed 50M parameters, making them difficult to deploy on resource-constrained edge devices, while lightweight models (e.g., FireNet-AMF) often achieve suboptimal accuracy, with mAP@0.5 scores below 85%. Moreover, wildfire evolution exhibits strong temporal characteristics, yet mainstream approaches largely rely on single-frame analysis. Sun [10] attempted to integrate LSTM modules for temporal decision-making, which improved detection accuracy but introduced excessive latency (up to 2.3 s), incompatible with emergency response requirements. It is noteworthy that approaches in related fields, such as FD-YOLO for fall detection or YOLO-based anomaly detection in photovoltaic cells, have pioneered innovations in temporal modeling and lightweight design, providing valuable insights for wildfire monitoring [11,12].

In summary, although deep learning has driven significant advances in wildfire detection, current algorithms still struggle to extract robust features and maintain reliability under the complex disturbances typical of transmission corridors (clouds, artificial lights, and heat sources). False alarms and missed detections remain prevalent, and practical deployments continue to rely heavily on manual verification [13,14]. Therefore, the development of wildfire detection algorithms tailored to transmission corridor environments, balancing high accuracy with low false alarm rates, remains an urgent and unresolved research problem.

YOLOv11, the latest release from the Ultralytics team, introduces several architectural and training innovations that substantially improve detection speed, accuracy, and efficiency [15]. Compared with its predecessors (e.g., YOLOv8 and YOLOv10), YOLOv11 maintains or enhances accuracy while significantly reducing model parameters and inference latency, making it highly suitable for real-time surveillance scenarios [16]. However, its potential in wildfire detection under the complex backgrounds of transmission corridors remains underexplored, particularly in terms of suppressing false alarms from clouds and artificial lights.

To address this gap, this study systematically incorporates Multi-Scale Convolutional Attention (MSCA) and Distribution-Shifted Convolution (DSConv) into YOLOv11, targeting the specific challenges of wildfire detection in transmission corridors: small-object recognition, high interference, and stringent real-time requirements. The resulting model is tailored for overhead transmission lines and aims to improve detection accuracy and robustness in complex environments while maintaining computational efficiency. Extensive experiments are conducted on a representative wildfire dataset collected from transmission corridors to evaluate the proposed model in terms of accuracy (mAP, precision, and recall), speed (inference latency), and false alarm rates, and to assess its potential for real-world deployment.

2. YOLOv11 Architecture and Proposed Enhancements

2.1. YOLOv11 Object Detection Model

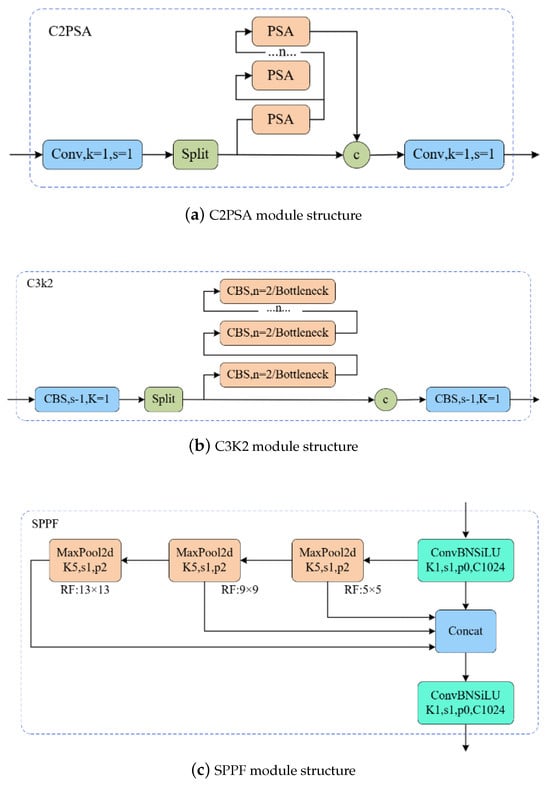

YOLOv11, created by the Ultralytics team, is a cutting-edge object detection model that incorporates a range of architectural, training, and task-oriented innovations to improve detection speed, accuracy, and computational efficiency. As seen in Figure 1, YOLOv11 features several new modules, including the C3K2 block, the Spatial Pyramid Pooling Fast (SPPF) module, and the Cross-Stage Partial Spatial Attention (C2PSA) block, which work together to strengthen the model’s feature extraction capabilities. In addition to object detection, YOLOv11 is designed to support a variety of other vision tasks, such as instance segmentation, pose estimation, oriented bounding box (OBB) detection, and image classification, making it a comprehensive and unified framework for various computer vision applications.

Figure 1.

Architectural diagrams of the new modules introduced in YOLOv11: (a) C2PSA, (b) C3K2, and (c) SPPF. These modules collectively enhance spatial attention, feature reuse, and multi-scale feature representation.

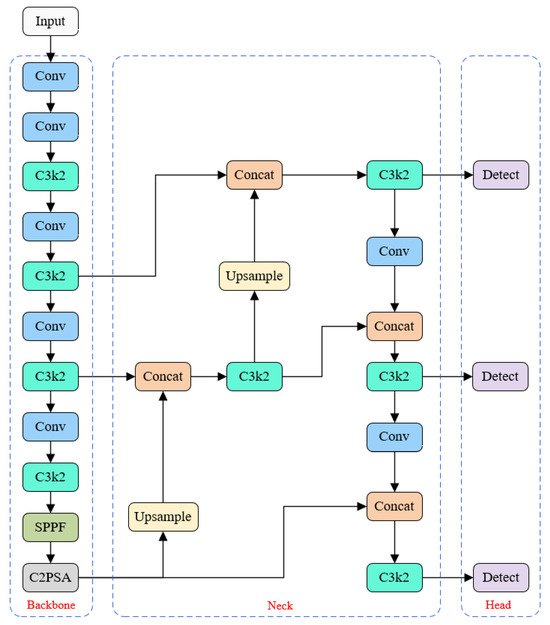

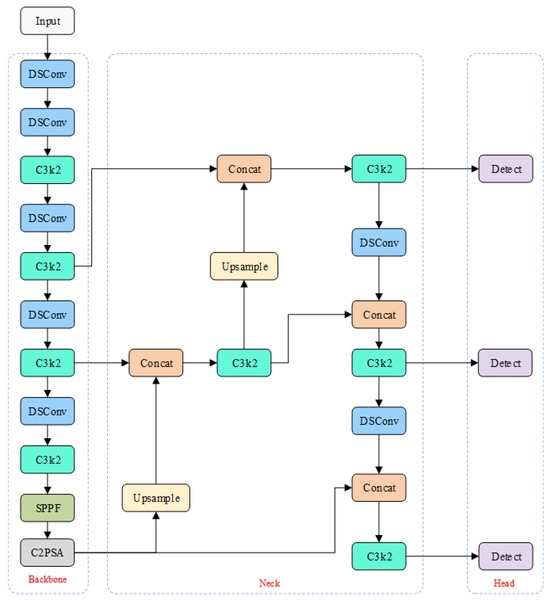

As illustrated in Figure 2, the overall structure of YOLOv11 consists of four major components: the input layer, the backbone, the neck, and the detection head.

Figure 2.

Overall network architecture of YOLOv11, which consists of four main components: the input layer, the backbone (enhanced with C3K2 and C2PSA modules), the neck (based on feature pyramid networks), and the anchor-free detection head.

The backbone is responsible for hierarchical feature extraction and adopts the CSPDarknet architecture, enhanced with several newly designed modules, such as C3K2 and C2PSA. The C3K2 module combines 3 × 3 and 1 × 1 convolution kernels to achieve multi-scale feature representation. Specifically, the 3 × 3 convolution captures local spatial features, while the 1 × 1 convolution reduces the channel dimensionality, thereby decreasing computational complexity while preserving feature richness. This design improves feature extraction efficiency without sacrificing representational capacity.

The C2PSA module further enhances feature quality through a spatial attention mechanism that adaptively assigns weights across both spatial and channel dimensions. By emphasizing salient regions and suppressing irrelevant background noise, C2PSA improves the discriminative capability of the features and strengthens the downstream detection performance. The synergy between C3K2 and C2PSA provides the backbone with more robust and fine-grained features for object localization and classification.

The neck utilizes a Feature Pyramid Network (FPN) structure, which integrates high-level semantic information into lower-level feature maps via a top-down pathway with lateral connections. This generates a rich multi-scale feature pyramid that enables the network to leverage both high-resolution shallow features and semantically enriched deep features. Such a design significantly improves the model’s ability to detect small-scale objects.

In YOLOv11, the detection head utilizes depthwise separable convolutions combined with an anchor-free detection mechanism to predict bounding boxes and class probabilities. Depthwise separable convolutions break the conventional convolution process into depthwise and pointwise steps, effectively lowering the number of parameters and computational requirements while retaining strong feature extraction capabilities. The anchor-free design eliminates the dependency on predefined anchor boxes, which are often inflexible and suboptimal. Instead, the model directly predicts bounding box coordinates and class scores at each spatial location on the feature map. This not only reduces inference latency and simplifies the detection pipeline but also enhances flexibility in handling objects of varying shapes and scales.
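The parameter saving from splitting a convolution into depthwise and pointwise steps can be checked with a short calculation. The sketch below is illustrative only: the channel counts and kernel size are assumed values, not the layer sizes of the YOLOv11 head, and bias terms are ignored.

```python
def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def dw_separable_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a depthwise k x k step followed by a pointwise 1 x 1 step."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Assumed example layer: 64 -> 128 channels with a 3 x 3 kernel.
standard = conv_params(64, 128, 3)
separable = dw_separable_params(64, 128, 3)
print(standard, separable, round(separable / standard, 3))
```

For this assumed layer the separable form needs roughly 12% of the weights of the standard convolution, which is the kind of reduction the paragraph above refers to.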

2.2. Multi-Scale Convolutional Attention (MSCA)

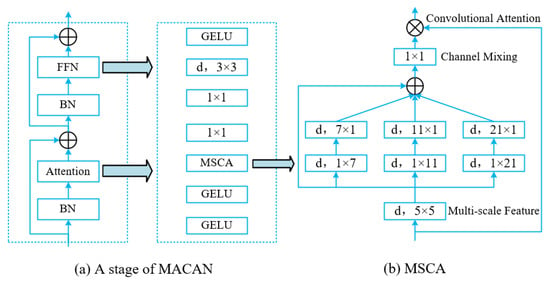

The MSCA (Multi-Scale Convolutional Attention) module is a combined structure that merges multi-scale convolution operations with an attention mechanism, specifically designed to enhance feature extraction in convolutional neural networks for various vision tasks [17]. By dynamically aggregating local and global contextual information at multiple spatial scales, MSCA strengthens the model’s focus on salient regions. This is particularly effective for detecting small objects, handling occlusions, and operating in visually complex environments. The structure of the MSCA module is illustrated in Figure 3.

Figure 3.

Architecture of the Multi-Scale Convolutional Attention (MSCA) module composed of depth-wise convolution, multi-branch strip convolutions, and a channel attention layer.

The MSCA module is made up of three key parts. First, a depth-wise convolution is used to efficiently aggregate local features while preserving fine spatial details. Second, a multi-branch depth-wise strip convolution structure, in which each branch pairs a 1 × k kernel with a k × 1 kernel, captures contextual information at various scales, enabling hierarchical feature extraction from local to global levels. Third, a 1 × 1 convolution is applied to model inter-channel dependencies, allowing dynamic adjustment of feature weights across channels. This design, incorporating local perception, multi-scale context fusion, and channel interaction modeling, balances computational efficiency with feature representation power.
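To make the efficiency argument concrete, the following sketch counts the weights of the three MSCA parts and compares the strip branches with square depth-wise kernels of the same extent. The kernel sizes (a 5 × 5 local kernel and strip lengths 7, 11, and 21) are assumptions taken from a commonly used MSCA configuration; they are not confirmed as the settings of this paper, and bias terms are ignored.

```python
def msca_params(channels: int, local_k: int = 5, strip_ks=(7, 11, 21)) -> dict:
    """Approximate weight counts for the three MSCA parts (bias ignored).

    local_k and strip_ks are assumed kernel sizes, not values from the paper.
    """
    local = local_k * local_k * channels                 # depth-wise local aggregation
    strips = sum(2 * k * channels for k in strip_ks)     # each branch: 1 x k plus k x 1
    mix = channels * channels                            # 1 x 1 channel-mixing convolution
    return {"local": local, "strips": strips, "mix": mix}


def square_depthwise_params(channels: int, ks=(7, 11, 21)) -> int:
    """Weights if each strip pair were replaced by a full k x k depth-wise kernel."""
    return sum(k * k * channels for k in ks)


counts = msca_params(64)
square = square_depthwise_params(64)
print(counts, square, round(counts["strips"] / square, 3))
```

Under these assumptions the strip branches use about 13% of the weights that equivalent square kernels would need, since a 1 × k plus k × 1 pair grows linearly in k rather than quadratically.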

In wildfire detection tasks, challenges such as dynamically changing flame shapes, smoke-induced occlusion, and the loss of small-scale flame features often result in missed or false detections using traditional methods. These challenges are further amplified in complex natural environments, where flames and smoke exhibit diverse scales and are often difficult to distinguish from background clutter. To address these problems and improve detection precision, the MSCA mechanism is integrated into two critical components of the YOLOv11 architecture: the backbone and the neck. Specifically, MSCA enhances the network’s ability to extract relevant features by aggregating local details through depth-wise convolution, capturing cross-scale contextual cues via multi-branch strip convolutions, and reinforcing salient feature representation through channel attention. This design improves the model’s sensitivity to small flame targets and its robustness in smoke-obscured or cluttered scenes.

2.3. Distribution-Shifted Convolution (DSConv)

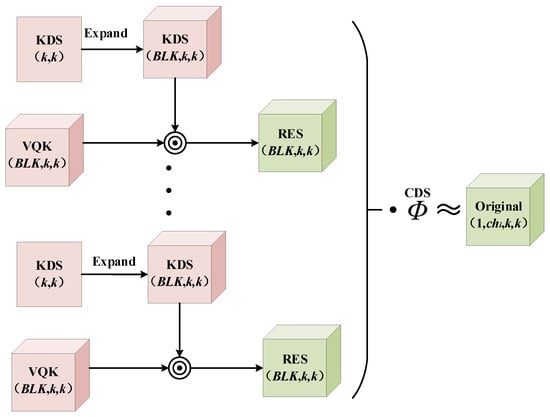

Distribution-Shifted Convolution (DSConv) is a lightweight and efficient convolutional variant that enhances model adaptability by dynamically adjusting the receptive field distribution of convolutional kernels [18]. This design minimizes memory usage and speeds up computation without compromising detection accuracy. The core principle of DSConv is the decomposition of traditional convolution into two key components: a Variable Quantized Kernel (VQK) and a distribution-shift mechanism. The VQK stores integer values in place of floating-point parameters, greatly improving storage efficiency. Meanwhile, the distribution-shift mechanism adaptively adjusts sampling points based on the spatial statistics of the input features, allowing for more precise feature capture in areas with rich edges or dense textures.

As an enhanced form of depthwise separable convolution, DSConv retains the lightweight characteristics of depthwise and pointwise convolution, while its learnable shift mechanism further strengthens the feature extraction process. Notably, it achieves an improved balance between computational cost and accuracy without increasing the number of FLOPs [19]. The architecture of DSConv is illustrated in Figure 4.

Figure 4.

Structure of the proposed DSConv module, including the Variable Quantized Kernel (VQK), Kernel Distribution Shifter (KDS), and Channel Distribution Shifter (CDS).

The innovation of DSConv lies in its two-part architecture: the VQK and the distribution shift unit. The VQK, acting as a quantized component, stores parameters in low-bit integers (e.g., 2-bit values) while maintaining the same dimensionality as conventional kernels. These quantized parameters are typically derived from pretrained floating-point models and offer a significant reduction in model size. The distribution shift component consists of two FP32 tensors: the Kernel Distribution Shifter (KDS) and the Channel Distribution Shifter (CDS). The KDS adjusts the VQK locally, block by block, while the CDS calibrates the distribution globally along the channel dimension [9,20].

For example, a convolutional layer with a kernel size of , when using 2-bit quantization and a block size of , requires only about 7% of the original storage. This is achieved by storing the VQK in integer format, along with two sets of KDS tensors of size and two sets of CDS vectors of size . This design preserves the essential feature extraction capacity of the original convolution while maintaining a highly similar output distribution through fine-grained adjustment by KDS and CDS.
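The storage accounting behind such an estimate can be sketched as follows. Everything numeric here is an assumption for illustration: the layer shape (128 output and 128 input channels, 3 × 3 kernel), the 2-bit width, the block size of 64, and the KDS layout of one FP32 value per output channel, channel block, and kernel position. The result therefore need not match the figure quoted above.

```python
import math


def dsconv_storage_ratio(c_out: int, c_in: int, k: int, bits: int,
                         block: int, shifter_sets: int = 2) -> float:
    """Ratio of DSConv storage to an FP32 kernel of the same shape.

    Assumed layout (hypothetical, for illustration):
      VQK: c_out x c_in x k x k integers of `bits` bits each
      KDS: `shifter_sets` FP32 tensors of shape (c_out, ceil(c_in / block), k, k)
      CDS: `shifter_sets` FP32 vectors of length c_out
    """
    fp32_bits = c_out * c_in * k * k * 32
    vqk_bits = c_out * c_in * k * k * bits
    n_blocks = math.ceil(c_in / block)
    kds_bits = shifter_sets * c_out * n_blocks * k * k * 32
    cds_bits = shifter_sets * c_out * 32
    return (vqk_bits + kds_bits + cds_bits) / fp32_bits


# Assumed layer: 128 x 128 x 3 x 3, 2-bit VQK, block size 64.
two_sets = dsconv_storage_ratio(128, 128, 3, bits=2, block=64, shifter_sets=2)
one_set = dsconv_storage_ratio(128, 128, 3, bits=2, block=64, shifter_sets=1)
print(f"{two_sets:.3f} {one_set:.3f}")
```

With these assumed values the ratio is below 10% in both cases. The quantized kernel dominates the total, and the shifters add a small overhead that shrinks as the block size grows.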

DSConv improves computational efficiency and memory usage through the combination of quantization and dynamic spatial shift, all while maintaining detection accuracy. This makes it highly suitable for real-time wildfire detection applications, where edge device resources are limited. The upgraded YOLOv11 architecture, which integrates DSConv, is depicted in Figure 5.

Figure 5.

Architecture of the enhanced YOLOv11 model with integrated DSConv modules.

2.4. Wildfire Image Detection Methods for Transmission Lines

The improved wildfire image detection algorithm for transmission lines achieves notable enhancements in both accuracy and efficiency through the joint optimization of Multi-Scale Convolutional Attention (MSCA) and Distribution-Shifted Convolution (DSConv). The MSCA module employs a pyramid-based multi-scale feature fusion strategy, where small-scale convolutions enhance the sensitivity to fine-grained flames, and large receptive-field convolutions help mitigate occlusion caused by smoke, thereby improving robustness in obstructed scenes.

At the same time, DSConv decomposes each convolution into a quantized kernel and lightweight distribution shifters, retaining feature extraction capability while reducing both storage and computational cost.

Building on these components, YOLOv11_MDS forms a lightweight architecture that includes multi-scale detection heads and adaptive training strategies. These improvements enable the model to efficiently handle multi-scale flame targets and occlusions in complex mountainous environments, all while maintaining high detection accuracy and achieving real-time inference performance.

3. Experimental Results and Analysis

3.1. Wildfire Image Dataset

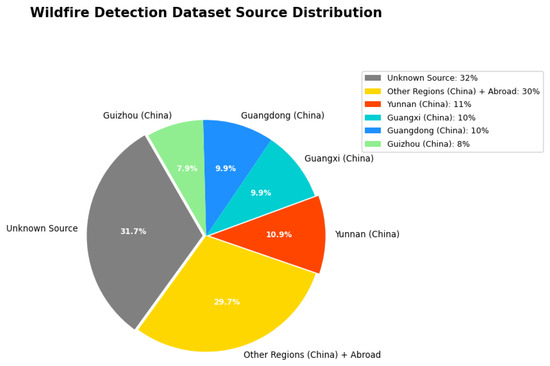

In this research, a dedicated dataset for wildfire detection was constructed by integrating publicly available Internet data with experimentally collected data [21], thereby enhancing both diversity and representativeness.

For Internet-based collection, the research focused on wildfire-prone regions in China (Southwest and South China) and abroad (e.g., California, USA; Alberta, Canada). Wildfire-related images and video materials were systematically gathered from social media platforms such as TikTok and Bilibili, as well as from search engines including Baidu, Google, and Yandex, ensuring comprehensive scene coverage. A total of 5000 images and 76 wildfire videos were obtained, as illustrated in Figure 6. Based on metadata completeness, the videos were divided into two categories: (1) 53 videos containing complete metadata (including occurrence time, geographic coordinates, and device information), and (2) 23 videos with partial metadata, which were retained to enrich the dataset scale and diversity.

Figure 6.

Examples of wildfire images collected from the Internet, covering diverse regions and scenarios.

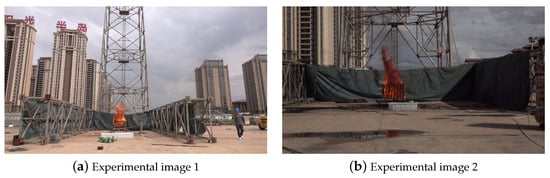

To overcome the limitations of Internet data, such as restricted viewpoints, inconsistent lighting, and lack of controlled conditions, additional wildfire simulation experiments were conducted. In 2022, controlled burns were carried out in a safe, open field in Yunnan Province, China. Dried vegetation materials placed on wooden stacks were ignited to realistically simulate wildfire scenarios. Various devices, including smartphones, drones, and professional video cameras, were employed to capture the events from multiple altitudes and angles, producing a large volume of first-hand imagery under diverse lighting conditions and perspectives. Representative experimental images are shown in Figure 7.

Figure 7.

Representative experimental images collected during controlled wildfire simulation.

For video preprocessing, PotPlayer software (version: 250514) was employed to extract frames sequentially, generating the initial image samples. To enhance data quality, redundant frames and highly similar images were removed using the AntiDupl tool. All remaining images were uniformly cleaned, filtered, and renamed in an incremental format (“fire_xxxx”). After this process, a total of 7500 high-quality wildfire images were obtained. The statistical details of the dataset are presented in Table 1.

Table 1.

Statistical summary of the wildfire image dataset.

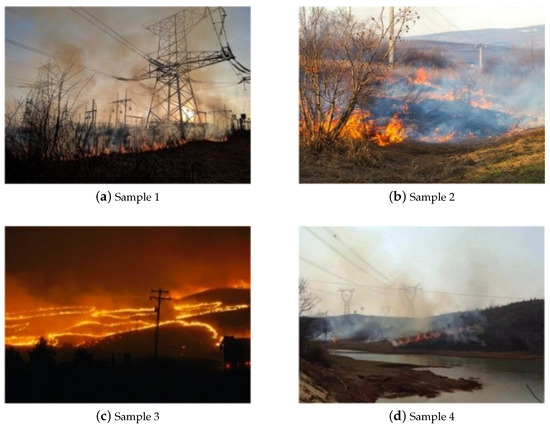

Among them, 312 images contain specific transmission line elements such as towers and conductors, which provide critical data support for wildfire detection in complex environments. Representative samples are shown in Figure 8.

Figure 8.

Representative wildfire images in transmission line corridors from the constructed dataset.

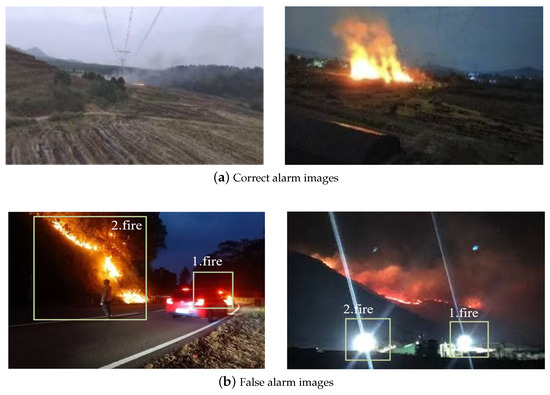

In addition, 3000 background images were collected, primarily including artificial illumination, mountain mist, and cloud patterns in the sky. Figure 9a presents true alarm cases. The camera was mounted on a transmission tower at a high altitude. During the daytime, farmland burning produced flames and smoke that occupied only a very small proportion of the image, with weak visual features. Due to the long distance, flame contours were barely visible, while solar reflections on the water surface appeared visually similar to flame features, further complicating model discrimination. At night, although flame and smoke features became more apparent when the fire spread, interference from reflections on greenhouse surfaces and nearby street lights still posed challenges for accurate detection.

Figure 9.

Example images of transmission line wildfire monitoring: (a) correct alarms; (b) false alarms caused by light, mist, or clouds.

Figure 9b shows false alarm cases, including misdetections of illumination, fog, and clouds. Without considering the contextual information of the scene, the detected regions bear strong visual resemblance to flame and smoke targets, thereby making them difficult to distinguish and prone to false positives. Analysis of these wildfire monitoring cases indicates that, due to the complexity of environments in transmission line corridors, false alarms and missed detections are almost inevitable [22]. This highlights the necessity of designing a generalized wildfire detection algorithm that ensures high recognition accuracy and robustness against environmental interferences.

3.2. Image Annotation and Dataset Splitting

In this research, all images were manually annotated using the LabelImg tool to generate standardized object bounding boxes. Each image containing wildfire elements was labeled with rectangular boxes for two target categories: smoke and fire. The annotation process involved manually drawing bounding boxes and recording spatial coordinate parameters (xmin, xmax, ymin, and ymax), following the PASCAL VOC format. Each annotation was saved in a structured XML file with the same filename as the corresponding image. Background images without any smoke or flame were excluded from the annotation process.
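As an illustration of how such an annotation file can be consumed, the sketch below parses a PASCAL VOC style XML string and converts each box to the normalized center format commonly used for YOLO training labels. The sample XML, the class order (fire = 0, smoke = 1), and the conversion step itself are assumptions; the paper does not describe its label conversion.

```python
import xml.etree.ElementTree as ET

CLASSES = ["fire", "smoke"]  # assumed class order, not stated in the paper

# Hypothetical annotation in PASCAL VOC layout, for illustration only.
SAMPLE_XML = """<annotation>
  <filename>fire_0001.jpg</filename>
  <size><width>640</width><height>480</height><depth>3</depth></size>
  <object>
    <name>fire</name>
    <bndbox><xmin>100</xmin><ymin>120</ymin><xmax>200</xmax><ymax>220</ymax></bndbox>
  </object>
  <object>
    <name>smoke</name>
    <bndbox><xmin>320</xmin><ymin>0</ymin><xmax>640</xmax><ymax>240</ymax></bndbox>
  </object>
</annotation>"""


def voc_to_yolo(xml_text: str, classes=CLASSES) -> list:
    """Return (class_id, x_center, y_center, width, height) tuples, normalized to [0, 1]."""
    root = ET.fromstring(xml_text)
    size = root.find("size")
    img_w = float(size.findtext("width"))
    img_h = float(size.findtext("height"))
    rows = []
    for obj in root.iter("object"):
        box = obj.find("bndbox")
        xmin, ymin, xmax, ymax = (
            float(box.findtext(tag)) for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        cls_id = classes.index(obj.findtext("name"))
        rows.append((
            cls_id,
            (xmin + xmax) / 2 / img_w,   # normalized box center x
            (ymin + ymax) / 2 / img_h,   # normalized box center y
            (xmax - xmin) / img_w,       # normalized box width
            (ymax - ymin) / img_h,       # normalized box height
        ))
    return rows


rows = voc_to_yolo(SAMPLE_XML)
for row in rows:
    print(row)
```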

After annotation, the dataset was divided into training, validation, and test sets with a ratio of 8:1:1. All annotations were extracted and statistically analyzed, and the results are shown in Table 2. As illustrated, the original real-world samples exhibit a significant class imbalance between flame and smoke annotations, which could negatively affect model convergence during training.
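A reproducible 8:1:1 split can be sketched as below. The shuffling strategy, the seed, and the use of 7500 file names in the “fire_xxxx” pattern are illustrative assumptions; the paper does not state how the split was randomized or which images it covered.

```python
import random


def split_dataset(items, ratios=(8, 1, 1), seed=0):
    """Shuffle deterministically and split into train/val/test by integer ratios."""
    items = sorted(items)                 # fixed starting order for reproducibility
    random.Random(seed).shuffle(items)    # seeded shuffle (seed is an assumed value)
    total = sum(ratios)
    n_train = len(items) * ratios[0] // total
    n_val = len(items) * ratios[1] // total
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]        # remainder goes to the test set
    return train, val, test


# Hypothetical file stems following the "fire_xxxx" naming pattern.
names = [f"fire_{i:04d}" for i in range(1, 7501)]
train, val, test = split_dataset(names)
print(len(train), len(val), len(test))
```

Fixing the seed keeps the three subsets identical across runs, which matters when ablation variants are compared on the same test images.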

Table 2.

Wildfire image dataset statistics.

To address this issue, we constructed a balanced training set by integrating real-scene samples with supplemental collected images. This approach ensured a more uniform distribution of flame and smoke instances, enabling the model to learn robust feature representations for both categories under varied environmental conditions.

3.3. Model Training

In this research, a wildfire detection model for transmission lines was developed based on the YOLOv11 algorithm using the PyTorch deep learning framework. The experiments were conducted on a server equipped with a 12th Gen Intel Core i5-12450F processor and an NVIDIA GeForce RTX 3060 GPU with 12 GB VRAM. The operating system used was Windows 11, and all programs were implemented using Visual Studio Code (v1.95). The training environment included CUDA 11.7 and Python 3.8.0.

During model training, the batch size was set to 16, and the total number of training epochs was 200. The number of parallel data loading workers was four, and the input image size was normalized to 640 × 640 pixels. The Stochastic Gradient Descent (SGD) optimizer was adopted with a momentum of 0.937. The initial learning rate was set to 0.01 and gradually decreased to a final learning rate of 0.0001. The confidence threshold for detection was set to 0.35, and the Intersection over Union (IoU) threshold for Non-Maximum Suppression (NMS) was 0.5.
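The learning rate settings can be illustrated with a simple schedule. The text gives only the initial and final rates, so the linear decay used here is an assumption, and the dictionary keys are hypothetical names rather than the options of any particular training framework.

```python
# Hyperparameters reported above; the key names are hypothetical.
TRAIN_CFG = {
    "epochs": 200,
    "batch_size": 16,
    "workers": 4,
    "img_size": 640,
    "momentum": 0.937,
    "lr0": 0.01,         # initial learning rate
    "lr_final": 0.0001,  # final learning rate
}


def linear_lr(epoch: int, cfg=TRAIN_CFG) -> float:
    """Learning rate at a given epoch, assuming linear decay from lr0 to lr_final."""
    lrf = cfg["lr_final"] / cfg["lr0"]                       # final rate as a fraction of lr0
    factor = (1 - epoch / cfg["epochs"]) * (1 - lrf) + lrf   # goes from 1 down to lrf
    return cfg["lr0"] * factor


for epoch in (0, 100, 200):
    print(epoch, f"{linear_lr(epoch):.6f}")
```

Under this assumption the rate passes through about 0.00505 at the midpoint of training; a cosine schedule would give a different trajectory between the same endpoints.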

Before training, the dataset was split and preprocessed, including resizing images and normalizing pixel values. During training, each image was first passed through the backbone and feature fusion network to extract high-level features. These features were then fed into the detection heads to predict object classes, bounding box coordinates, and corresponding confidence scores. Non-Maximum Suppression was applied to filter redundant predictions and retain the optimal bounding boxes and class labels. The loss was then calculated and used for backpropagation. After each epoch, model weights were updated and saved. The training process continued until the loss function converged or the maximum number of epochs was reached. The final model weights were selected based on the lowest validation loss.
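The filtering step can be sketched as a greedy, class-wise Non-Maximum Suppression using the thresholds reported in the training setup (confidence 0.35, IoU 0.5). This is a minimal illustration of the general technique with made-up boxes, not the implementation used by the detection framework.

```python
def iou(a, b) -> float:
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2, y2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(detections, conf_thres: float = 0.35, iou_thres: float = 0.5) -> list:
    """Greedy class-wise NMS over (x1, y1, x2, y2, score, cls) tuples."""
    candidates = sorted(
        (d for d in detections if d[4] >= conf_thres),   # drop low-confidence boxes
        key=lambda d: d[4],
        reverse=True,                                    # highest score first
    )
    kept = []
    for det in candidates:
        # Keep the box unless it overlaps a kept box of the same class too strongly.
        if all(det[5] != k[5] or iou(det[:4], k[:4]) <= iou_thres for k in kept):
            kept.append(det)
    return kept


# Made-up detections for illustration.
dets = [
    (10, 10, 110, 110, 0.90, "fire"),
    (20, 20, 120, 120, 0.80, "fire"),    # overlaps the first fire box
    (20, 20, 120, 120, 0.70, "smoke"),   # same location, different class
    (300, 300, 350, 350, 0.20, "fire"),  # below the confidence threshold
]
kept = nms(dets)
print(kept)
```

In this example the second fire box is suppressed because its IoU with the first is about 0.68, while the smoke box survives because suppression is applied per class.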

3.4. Comparison with Existing Detection Models

3.4.1. Ablation Study

This research quantifies the model’s performance in wildfire detection tasks using Precision (P), Recall (R), Average Precision (AP), and Mean Average Precision at an IoU threshold of 0.5 (mAP@0.5). Furthermore, the Frames Per Second (FPS) metric is utilized to evaluate the model’s inference efficiency in real-time settings.
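For reference, the sketch below computes precision and recall from detection counts and an AP value from ranked detections of one class, assuming each detection has already been matched to the ground truth at IoU ≥ 0.5. It uses all-point interpolation of the precision-recall curve; evaluation toolkits differ in the interpolation they apply, so values may deviate slightly from those reported by a given framework. The example detections are made up.

```python
def precision_recall(tp: int, fp: int, fn: int):
    """Precision = TP / (TP + FP), Recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(scored_hits, num_gt: int) -> float:
    """AP for one class from (confidence, is_true_positive) pairs."""
    ranked = sorted(scored_hits, key=lambda x: x[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, hit in ranked:
        tp += 1 if hit else 0
        fp += 0 if hit else 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Make precision monotonically non-increasing (precision envelope).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum the area under the stepwise precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(ap_per_class: dict) -> float:
    """mAP as the unweighted mean of per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)


p, r = precision_recall(tp=8, fp=2, fn=2)
ap_fire = average_precision([(0.9, True), (0.8, False), (0.7, True)], num_gt=2)
print(p, r, round(ap_fire, 4))
```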

To validate the two proposed improvement strategies, ablation experiments were conducted on a custom-built wildfire dataset derived from transmission line corridors. A specific focus of the experiment was the placement optimization of the Multi-Scale Convolutional Attention (MSCA) module, and the results are provided in Table 3.

Table 3.

Test results of different MSCA insertion positions.

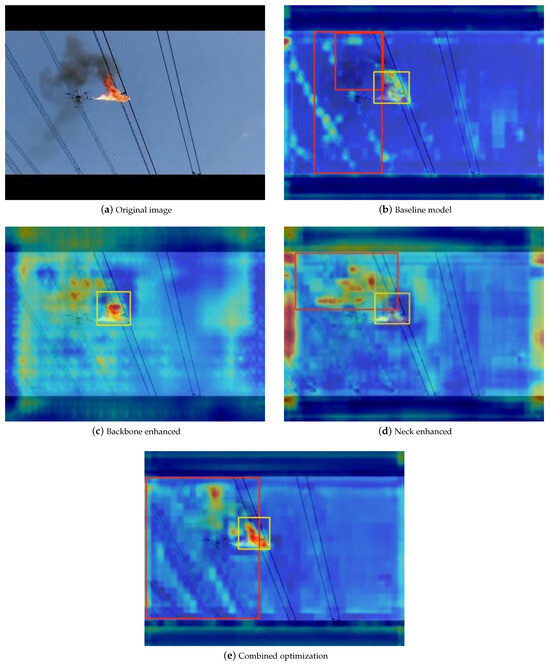

To evaluate the effectiveness of the proposed model improvements, four comparative experiments were conducted: the baseline model (Group 1), backbone-enhanced model (Group 2), neck-enhanced model (Group 3), and the jointly enhanced model (Group 4). Experimental results indicate that optimizing the Backbone and Neck individually led to improvements in detection accuracy of 0.3% and 0.13%, respectively. The joint optimization strategy achieved a notable 0.75% increase, demonstrating the effectiveness of the MSCA module in enhancing flame and smoke detection under complex transmission line corridor scenarios.

The feature heatmaps visualized in Figure 10 further validate the contribution of MSCA in enhancing the model’s attention to target regions. Specifically, backbone optimization strengthened flame localization (Figure 10c), while neck enhancement improved the detection of diffuse smoke regions (Figure 10d). The joint optimization strategy (Figure 10e) exhibited the most robust feature extraction capability, effectively capturing both wildfire and smoke targets. These results confirm the efficacy of the MSCA module from the perspective of feature space representation.

Figure 10.

Visualization comparison of different improvement positions. (a) Original image; (b) baseline model; (c) backbone enhanced; (d) neck enhanced; (e) combined optimization. The yellow boxes indicate detected wildfire flames, while the red boxes represent detected smoke.

As shown in Table 4, both the computational efficiency and the Mean Average Precision (mAP@0.5) of the model increase with the number of DSConv replacements. Therefore, replacing the original convolution layers in YOLOv11 with DSConv not only improves detection accuracy but also enhances computational efficiency and reduces memory consumption, making the model more suitable for real-time wildfire monitoring systems.

Table 4.

Impact of different block sizes on model performance.

To further investigate the effectiveness of the proposed module and the rationale behind its optimal configuration, we replaced the standard convolutional layers in the third and fourth stages of the YOLOv11 backbone with DSConv. Keeping all other variables constant, we systematically varied the block size in the kernel distribution shifter and evaluated its impact on model performance. As shown in Table 5, the evaluation metrics included the detection accuracy (mAP@0.5), number of parameters (Parameters), computational cost (FLOPs), and inference speed (FPS).

Table 5.

Ablation study results of YOLOv11_MDS.

The experimental results demonstrate that all configurations incorporating DSConv outperform the standard convolution baseline, with mAP improvements ranging from 0.11 to 0.62 percentage points. This confirms the effectiveness of explicitly modeling intra-kernel distribution shifts to enhance feature representation capability. Notably, the configuration with a block size of four achieved the best performance (86.32% mAP), indicating that a moderately fine granularity is most effective in capturing beneficial distributional variations of kernels for wildfire detection tasks. Although a block size of one theoretically provides the highest flexibility via channel-wise transformation, its performance was slightly inferior to that of a block size of four, possibly due to optimization difficulties caused by excessive model complexity.

Further analysis shows that DSConv consistently improves performance while maintaining nearly the same computational cost (FLOPs) and parameter count as the baseline, demonstrating highly controllable overhead. When the block size was set to eight or larger, the inference speed (FPS) was nearly identical to that of the standard convolution, with a block size of eight incurring only a minor mAP loss of 0.17 (compared to a block size of four) in exchange for almost baseline-level inference efficiency. Based on the comprehensive ablation experiments, we adopt a block size of four as the default configuration, which not only achieves the best accuracy but also represents a well-balanced trade-off between feature representation capability and computational efficiency.

Finally, based on the YOLOv11 architecture, MSCA modules were incorporated into both the backbone and neck, and standard convolutional layers were replaced with DSConv modules, resulting in the optimized YOLOv11_MDS model. Different combinations of these improvements were trained and evaluated. As shown in Table 4, Model 1 introduces MSCA into the backbone and neck, and Model 2 replaces standard convolution with DSConv. Compared to the original YOLOv11 model, both variants improve the mean average precision, confirming the effectiveness of each strategy. By combining both improvements, the final model achieves an mAP@0.5 of 88.21%, an increase of 2.93 percentage points over the baseline, and a frame rate of 242 FPS, a 3.9% improvement in inference speed. These results validate the reasonableness and effectiveness of the proposed dual-enhancement approach.

3.4.2. Comparative Experiments

To evaluate the detection performance of YOLOv11_MDS, comparative experiments were conducted under a unified experimental environment with consistent hardware configurations and hyperparameters. Since our proposed method is a variant built upon YOLOv11, we first compare it directly with the original YOLOv11 to evaluate the effectiveness of the introduced modules. In addition, we include YOLOv8, which is still one of the most widely used and recognized baseline detectors in both academia and industry, as well as YOLOv10, a recent state-of-the-art YOLO model with an end-to-end NMS-free design. This selection ensures that the comparison covers mainstream baseline detectors (YOLOv8 and YOLOv11), a recent NMS-free architecture (YOLOv10), and the two-stage detector Faster R-CNN. As shown in Table 6, YOLOv11_MDS achieved the best results in two key metrics, recall (88.24%) and overall accuracy (mAP@0.5 = 88.21%), while also demonstrating faster detection speed than the other models. These results confirm that the proposed method provides stronger recognition capability for flame and smoke targets in transmission line scenarios.

Table 6.

Comparison of detection performance across different models.

In addition, the models were tested under resource-constrained hardware conditions using an NVIDIA Jetson Nano platform. YOLOv11_MDS maintained the highest inference speed among the compared models, achieving up to 50 FPS. These edge-device results give a more realistic picture of deployment performance in practical applications than the desktop measurements alone.
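Reported frame rates depend on how the timing is performed. The sketch below illustrates one common way to measure FPS, with warm-up iterations excluded from the timed region. It is not the benchmarking protocol of this paper: `measure_fps`, `dummy_infer`, and the synthetic frames are hypothetical stand-ins for a real detector and real images.

```python
import time


def measure_fps(infer, frames, warmup=3):
    """Return frames per second for `infer` over `frames`.

    The first `warmup` frames are run once beforehand and not timed, so that
    one-off costs (caching, lazy initialization) do not distort the result.
    """
    for frame in frames[:warmup]:
        infer(frame)
    start = time.perf_counter()
    for frame in frames:
        infer(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed


def dummy_infer(frame):
    """Hypothetical stand-in for a detector forward pass."""
    return sum(frame)


frames = [list(range(1000)) for _ in range(50)]  # synthetic "images"
fps = measure_fps(dummy_infer, frames)
print(f"{fps:.1f} FPS")
```

When the model runs on a GPU, execution is typically asynchronous, so a real benchmark would also need to synchronize the device before reading the clock.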

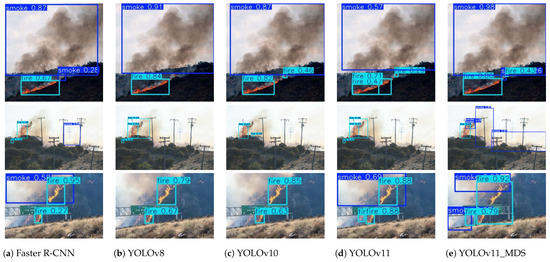

To provide a more intuitive comparison of the detection performance between YOLOv11_MDS and other mainstream algorithms, representative results on selected test samples are visualized in Figure 11.

Figure 11.

Visual comparison of detection results between the proposed YOLOv11_MDS and other models. Each column represents one detection method applied to the same set of images.

As shown in the figure, Faster R-CNN generates a large number of prediction boxes for flames and smoke. Although this leads to relatively high recall, the overlap between the predicted boxes and the ground-truth annotations is limited, resulting in lower precision. This indicates that Faster R-CNN struggles to balance precision and recall for wildfire detection, thereby producing suboptimal accuracy. For YOLOv8, both smoke and flame instances exhibit noticeable missed detections, leading to unsatisfactory accuracy. YOLOv10 performs well in flame detection but fails to accurately capture smoke, particularly small-scale smoke targets and those visually similar to sky textures. YOLOv11 achieves a more balanced performance, with fewer missed detections, and provides higher-confidence predictions for both flames and smoke, though small-target flames and smoke are still occasionally missed.

In contrast, the proposed YOLOv11_MDS accurately detects nearly all flame and smoke targets with higher confidence scores than YOLOv11. Its predicted bounding boxes exhibit tighter alignment with ground-truth targets, and its ability to capture small-scale flames and smoke surpasses that of other algorithms. These results validate the effectiveness of the proposed model in reducing both false negatives and false positives in wildfire detection.
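The interplay between box overlap, precision, and recall discussed above can be illustrated with a short sketch. It assumes boxes in `(x1, y1, x2, y2)` format, an IoU threshold of 0.5 (matching mAP@0.5), and greedy matching in descending confidence order; the helper names and the toy boxes are hypothetical and are not taken from the experiments.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds, gts, iou_thr=0.5):
    """Greedy matching: each ground-truth box can be claimed by one prediction.

    preds: list of (box, confidence); gts: list of boxes.
    """
    matched, tp = set(), 0
    for box, _ in sorted(preds, key=lambda p: p[1], reverse=True):
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(gts):
            if j in matched:
                continue
            overlap = iou(box, gt)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched.add(best_j)
            tp += 1
    precision = tp / len(preds) if preds else 0.0
    recall = tp / len(gts) if gts else 0.0
    return precision, recall


# Toy case: four predictions for two targets (many boxes, few good ones).
gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [((0, 0, 10, 10), 0.9), ((1, 1, 11, 11), 0.8),
         ((21, 21, 31, 31), 0.7), ((50, 50, 60, 60), 0.6)]
precision, recall = precision_recall(preds, gts)
print(precision, recall)  # 0.5 1.0
```

In this toy case every target is found (recall 1.0), but half of the boxes are duplicates or background hits (precision 0.5), the same pattern described for a detector that emits many loosely aligned boxes.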

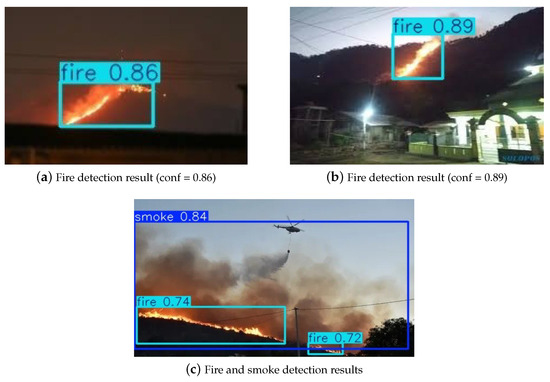

To further validate the generalization ability and robustness of the proposed model, we trained it on The Wildfire Dataset, made publicly available by Ismail El Madafri on the Kaggle platform, and evaluated its performance on the independent test set. Figure 12 presents representative detection results on this dataset, while Table 7 summarizes the comparative performance against mainstream detection models. These experiments were designed to ensure a fair evaluation and to assess the adaptability of the proposed method to previously unseen data with distributional differences.

Figure 12.

Representative detection results of YOLOv11_MDS on the public The Wildfire Dataset (Kaggle). (a) Night-time fire; (b) hillside fire; (c) flame and smoke detection under helicopter suppression.

Table 7.

Comparison of the proposed YOLOv11_MDS with mainstream models on the public wildfire dataset.

As shown in Table 7, when trained and tested on the public dataset, the proposed model exhibited slight decreases in both mAP and recall compared with its performance on the private dataset, which is an expected outcome. We attribute this to domain adaptation challenges: the public dataset contains a substantial number of overhead images captured from UAVs and satellites, where the background complexity and target scales differ significantly from the ground-based surveillance perspective that dominates our private dataset. This discrepancy imposes stricter requirements on the model’s generalization capability.

In contrast, the efficiency-related metrics (FPS, FLOPs, and parameter count) remained nearly identical across datasets, confirming that computational performance is solely determined by the model architecture and independent of the input data. Overall, despite certain fluctuations in absolute performance due to cross-domain testing, the proposed model consistently outperformed all baseline detectors on the public benchmark, thereby demonstrating the effectiveness of the improvements and its strong generalization ability.

4. Conclusions

The main contributions of this paper are summarized as follows:

- We construct the YOLOv11_MDS detection model by embedding the Multi-Scale Convolutional Attention (MSCA) module into the backbone and neck, which enhances multi-scale dynamic feature extraction of flames and smoke and effectively suppresses false alarms caused by background interference such as fog and artificial light. Furthermore, we replace conventional convolution with Distribution-Shifted Convolution (DSConv), introducing a quantized dynamic-shift mechanism that reduces computation by 32%. This significantly improves inference efficiency while maintaining detection accuracy, thereby satisfying the lightweight deployment requirements of edge devices.

- On the transmission line wildfire dataset, the proposed model achieves an mAP@0.5 of 88.21%, which is 2.93 percentage points higher than the baseline YOLOv11. For small-scale flame/smoke targets with pixel occupancy below 1%, the recall improves by 3.33%. In addition, the inference speed reaches 242 Frames Per Second (FPS), meeting the real-time requirements of multi-camera concurrent monitoring in transmission corridors.

- The proposed model achieves a favorable balance between accuracy and efficiency, providing an engineering-ready solution for wildfire early-warning systems in transmission corridors. Experimental results demonstrate its feasibility for online monitoring in high-risk sections, effectively addressing the challenges of small-target omission and false alarms caused by fog in traditional methods. This research, therefore, offers practical value for improving wildfire monitoring efficiency in power grids.

Future work will focus on cross-modal detection. We plan to incorporate temporal attention mechanisms with infrared thermal imaging data to construct a multi-source joint detection framework for dynamic scenarios, further reducing false alarm rates in complex environments and advancing the engineering deployment of wildfire early-warning systems in transmission corridors.

Author Contributions

Conceptualization, G.L. and D.H.; methodology, J.D. and D.C.; software, L.D.; validation, Y.J., B.W. and D.H.; formal analysis, T.P. and Y.L.; investigation, C.C. and T.P.; resources, G.L. and L.T.; data curation, C.C. and T.P.; writing—original draft preparation, Y.L., T.P. and J.D.; writing—review and editing, G.L., B.W., D.H. and Y.L.; visualization, T.P. and Y.L.; supervision, D.H. and G.L.; project administration, G.L. and Y.L.; funding acquisition, D.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Technology Project “Science and Technology Project of China Southern Power Grid Corporation” (CGYKJXM20230166).

Data Availability Statement

The wildfire flame dataset used in this study was constructed by integrating publicly available resources (e.g., the Kaggle Wildfire Dataset) and self-collected UAV/field survey images. The dataset was carefully annotated with bounding box labels for flame regions. A curated portion of the dataset, together with the corresponding labels, is available at https://drive.google.com/drive/folders/1D6SIdS1LhK7QmgpI_yXaeB05eCL1sHRm?usp=sharing, accessed on 24 September 2025. The released dataset is organized into two main directories: JPEGImages, which contains the raw flame images in JPEG format, and Annotations, which contains the corresponding label files with bounding box annotations in XML format. The remaining data are contained within the article.
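As a reading aid for the released annotations, the sketch below parses one XML label file and computes the pixel occupancy of each box, the quantity used in this paper to define small targets (below 1%). It assumes Pascal VOC-style tags (`size`, `object`, `name`, `bndbox`, `xmin`, and so on), which the statement above does not confirm; the sample annotation and the helper names are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical annotation in an assumed Pascal VOC-style layout.
SAMPLE = """
<annotation>
  <filename>example.jpg</filename>
  <size><width>640</width><height>480</height></size>
  <object>
    <name>fire</name>
    <bndbox><xmin>100</xmin><ymin>120</ymin><xmax>180</xmax><ymax>200</ymax></bndbox>
  </object>
</annotation>
"""


def parse_boxes(xml_text):
    """Return the image size and a list of (label, (xmin, ymin, xmax, ymax))."""
    root = ET.fromstring(xml_text)
    width = int(root.findtext("size/width"))
    height = int(root.findtext("size/height"))
    boxes = []
    for obj in root.iter("object"):
        bb = obj.find("bndbox")
        coords = tuple(int(float(bb.findtext(tag)))
                       for tag in ("xmin", "ymin", "xmax", "ymax"))
        boxes.append((obj.findtext("name"), coords))
    return (width, height), boxes


def pixel_occupancy(box, image_size):
    """Fraction of the image area covered by the box."""
    xmin, ymin, xmax, ymax = box
    return (xmax - xmin) * (ymax - ymin) / (image_size[0] * image_size[1])


image_size, boxes = parse_boxes(SAMPLE)
for label, box in boxes:
    occ = pixel_occupancy(box, image_size)
    print(label, box, f"{occ:.2%}", "small target" if occ < 0.01 else "regular target")
```

For a real file, the text can be read from disk and passed to `parse_boxes` in the same way, provided the tag names match this assumed layout.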

Conflicts of Interest

Authors Guanglun Lei, Jun Dong, Yi Jiang, Li Tang, Dengyong Cheng, Li Dai, and Chuang Chen are employed by the China Southern Power Grid Company Limited Tianshengqiao Bureau of EHV Transmission Company. Author Daochun Huang is employed by Wuhan University. The remaining authors (Tianhao Peng, Biao Wang, and Yifeng Lin) are students of Wuhan University. The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Shu, S.; Tang, S.; Xiao, N.; Xu, J.; Fang, C.; Xie, W. Detection Method of Flame and Smoke in Transmission Line Corridors under Multi-background Interference Based on YOLOv5s-EBWS. High Volt. Eng. 2025, 51, 2374–2383.

- Ye, W.; Wu, Y.; Li, Z. Unsupervised Fire Detection Based on Contrastive Learning and Pseudo-Anomaly Synthesis. Comput. Syst. Appl. 2024, 33, 28–36.

- Feng, L.; Wang, H.; Wang, K.; Lu, Y.; Wang, J. Research and Improvement on the Fire Detection Method of Ancient Buildings Based on FireNet. Fire Sci. Technol. 2024, 43, 183–188.

- Su, R.; Xiong, W.; Xia, W.; Zhou, M.; Zhou, Q.; Liu, X. Wildfire Detection in Transmission Lines Based on Object Detection and Confusable Classification. Comput. Technol. Dev. 2025, 35, 205–213.

- Feng, L.; Wang, H.; Wang, K.; Lu, Y.; Wang, J. Fire Smoke Recognition Based on Convolutional Neural Network in Target Region. Laser Optoelectron. Prog. 2020, 57, 83–91.

- Huang, J.; He, Z.; Guan, Y. Real-Time Forest Fire Detection by Ensemble Lightweight YOLOX-L and Defogging Method. Sensors 2023, 23, 1894.

- Xue, Z.; Lin, H.; Wang, F. A Small Target Forest Fire Detection Model Based on YOLOv5 Improvement. Forests 2022, 13, 1332.

- Liu, Z.; Niu, B.; Wang, S.; Chen, Q.; Long, Y.; Jiang, L. Research on the Detection Method of Wildfire Smoke in Transmission Lines in Foggy Weather. Fire Sci. Technol. 2021, 40, 390–393.

- Chester, F. Carlson Center for Imaging Science. Efficient Smoke Segmentation Using Multiscale Convolutions and Multiview Attention Mechanisms. Electronics 2025, 14, 2593.

- Sun, T. Fire Detection Model Based on Static Features and Dynamic Behaviors. J. Saf. Sci. Technol. 2021, 17, 96–101.

- Li, J.; Zhang, H.; Wang, Y. FD-YOLO: A YOLO Network Optimized for Fall Detection. Appl. Sci. 2025, 15, 1245.

- Wang, C.; Li, Z.; Chen, J. Fast Object Detection of Anomaly Photovoltaic (PV) Cells Using Deep Neural Networks. IEEE J. Photovolt. 2025, 15, 897–905.

- Long, Y. Research on the Detection Method of Wildfire Smoke in Transmission Lines Based on Images and Videos. Ph.D. Thesis, North China Electric Power University, Beijing, China, 2022.

- Li, D.; Wang, X.; Li, L.; Ji, Z. Automated Deep Learning System for Image Analysis in Power UAV Inspections: Architecture Design and Key Technologies. Electr. Power Inf. Commun. Technol. 2024, 22.

- Yin, X.; Zhao, X. YOLOv11-MAS: An Efficient PCB Defect Detection Algorithm. Comput. Eng. Appl. 2025, 61, 102–111.

- Liu, H.; Huang, Z.; Qiu, B. Detection Method of Main Defects in Transmission Lines Based on Improved YOLOv11n. High Volt. Eng. 2025, 1–12.

- Zhou, Y.P.; Qian, H.M.; Ding, P. MSSD: Multi-Scale Object Detector Based on Spatial Pyramid Depthwise Convolution and Efficient Channel Attention Mechanism. J. Real-Time Image Process. 2023, 20, 58.

- Valsesia, D.; Mendoza-Cortes, D.; Magli, E. DSConv: Efficient Convolution Operator. In Proceedings of the International Conference on Learning Representations (ICLR), Addis Ababa, Ethiopia, 30 April 2020.

- Campos, R.; Rezende, T.M.; Silva, A.L.; de Oliveira, R.; Alves, D. Real-Time Traffic Light Detection Using DSConv-Optimized YOLOv5. Neural Comput. Appl. 2023, 35, 15783–15794.

- Pan, H.; Badawi, D.; Zhang, X.; Cetin, A.E. Additive Neural Network for Forest Fire Detection. Signal Image Video Process. 2020, 14, 1079–1086.

- Wuhan University Team. Wildfire Detection Dataset for Transmission Line Corridors. Google Cloud 2025. Available online: https://drive.google.com/drive/folders/1D6SIdS1LhK7QmgpI_yXaeB05eCL1sHRm?usp=sharing (accessed on 24 September 2025).

- Prema, C.E.; Vinsley, S.S. Image Processing Based Forest Fire Detection Using YCbCr Colour Model. In Proceedings of the 2014 IEEE International Conference on Circuit, Power and Computing Technologies (ICCPCT), Nagercoil, India, 20–21 March 2014; pp. 1229–1237.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).