From Questionnaires to Heatmaps: Visual Classification and Interpretation of Quantitative Response Data Using Convolutional Neural Networks

Abstract

1. Introduction

2. Background and Related Works

2.1. Ordinal Data Classification Methods

2.2. Visual Coding of Structured Data

2.3. CNN Applications Beyond Natural Images

2.4. Explainable AI and Class Activation Maps (CAMs)

2.5. Synthesis and Positioning of Proposed Approach

3. Materials and Methods

3.1. Dataset and Preprocessing

3.2. Visual Encoding of Features

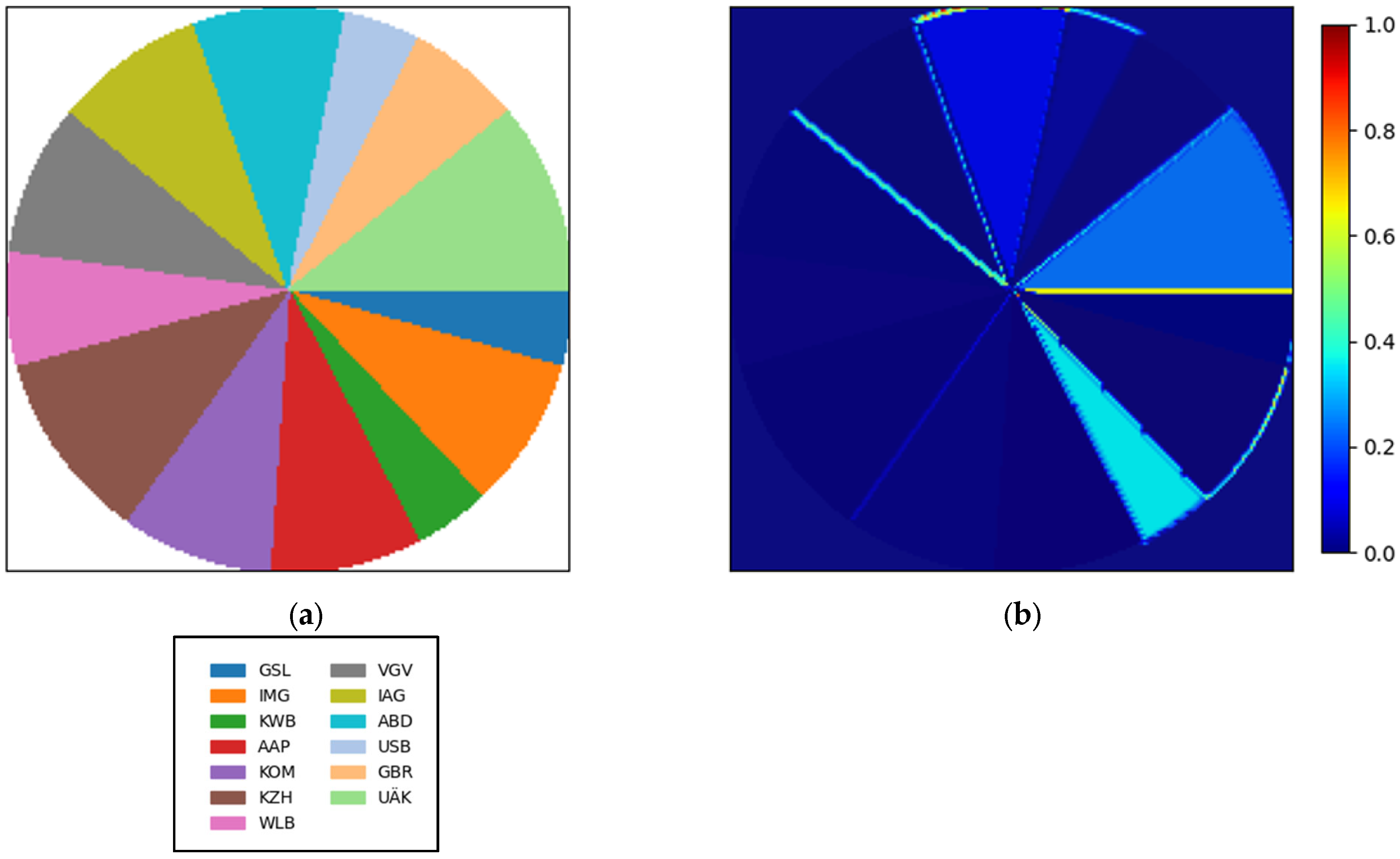

- (a)

- Bar charts

- (b)

- Pie charts

- (c)

- Labelling process

3.3. CNN Architecture and Training

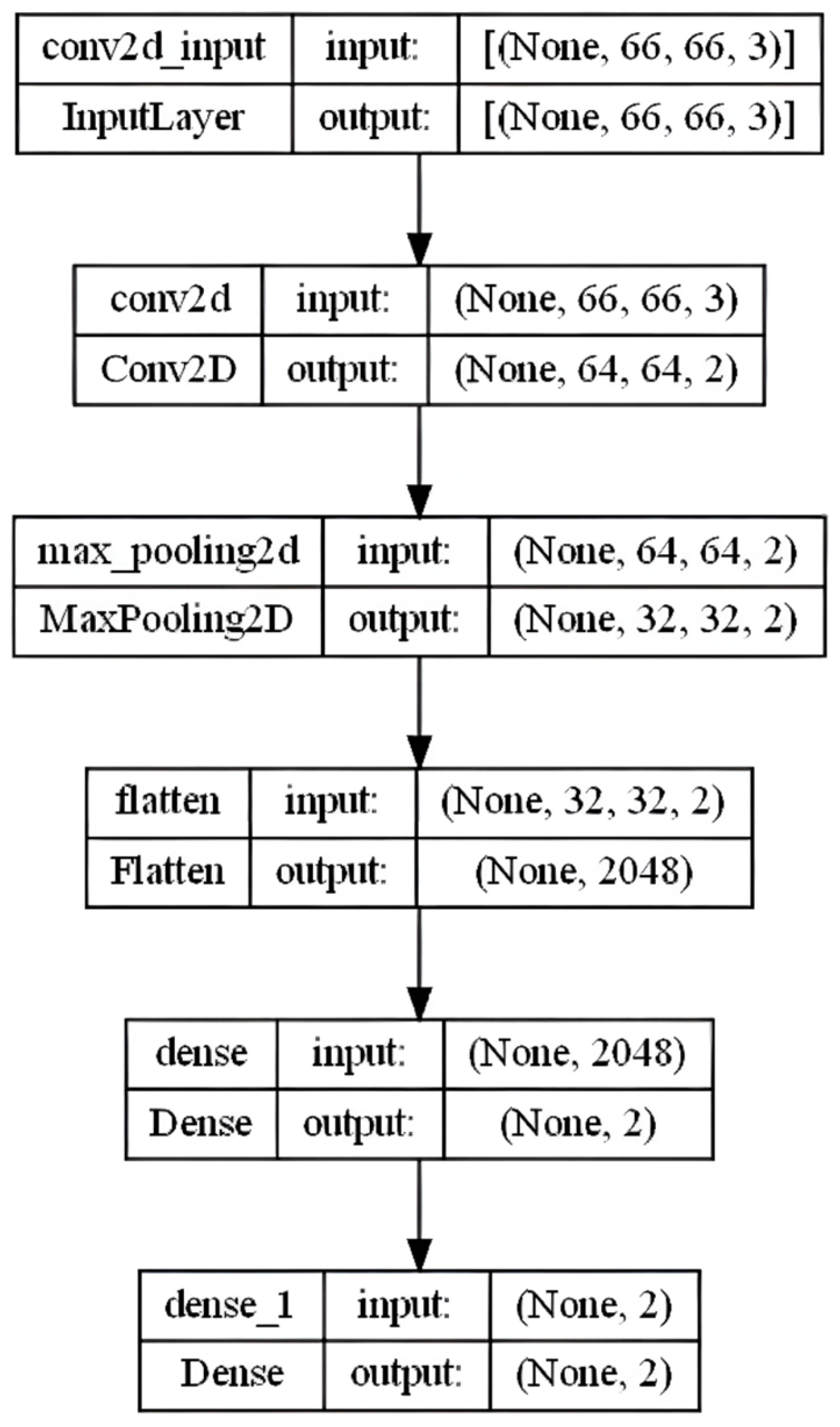

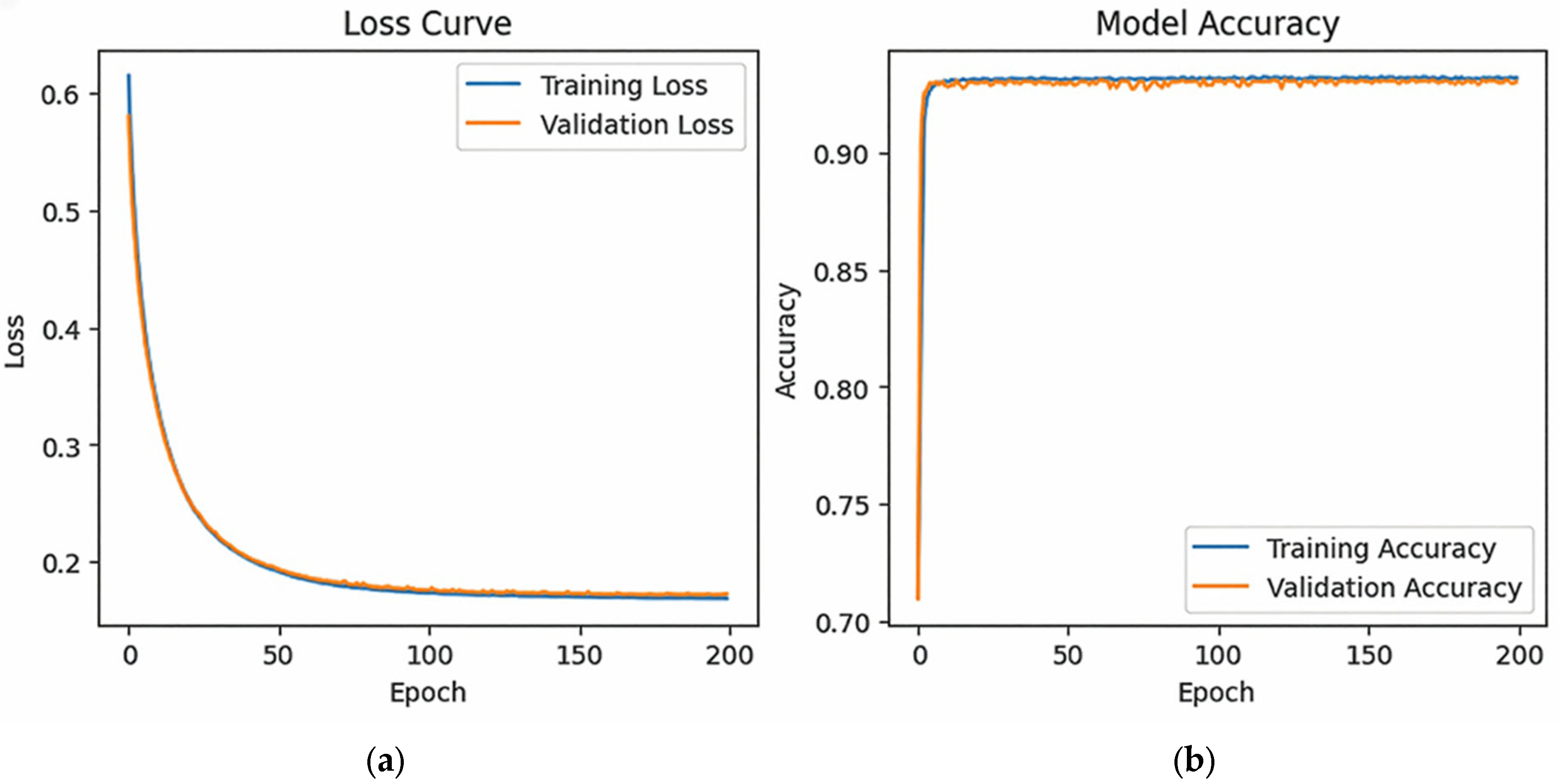

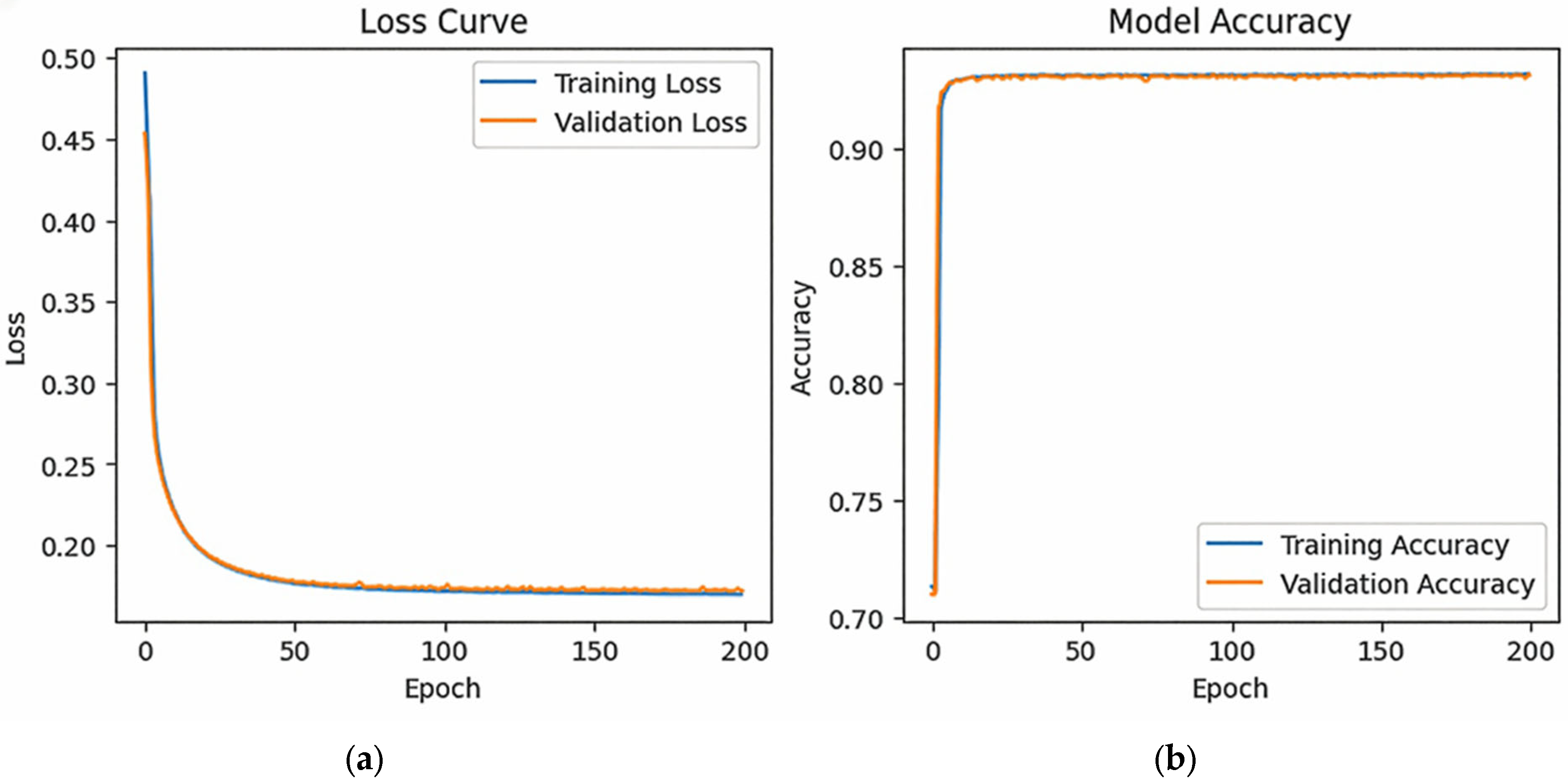

- (a)

- 1× Conv model

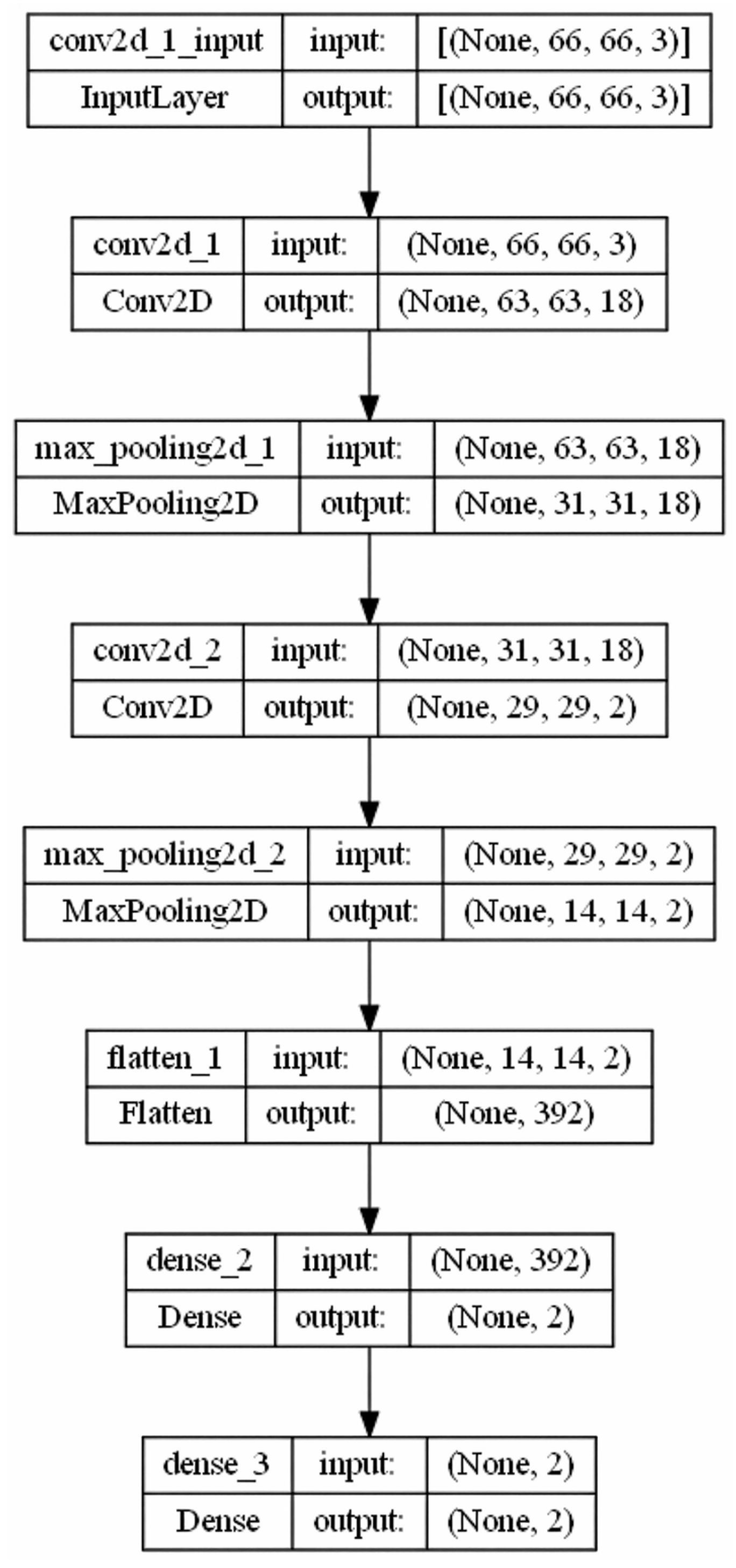

- (b)

- 2× Conv model

3.4. Integration of CAMs for Interpretability

$$M_c(x, y) = \sum_k w_k^c \, f_k(x, y)$$

- $M_c(x, y)$: The value of the CAM for class c at position (x,y)

- $f_k(x, y)$: The activation of the k-th feature map at position (x,y)

- $w_k^c$: The weight indicating the contribution of the k-th feature map to the prediction of class c
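The three quantities listed above combine as a weighted sum over feature maps, which is the standard CAM construction. The following is a minimal NumPy sketch under stated assumptions: the last convolutional layer yields activations of shape (H, W, K), and a dense layer after global average pooling holds weights of shape (K, C). The function names `class_activation_map` and `normalise`, the toy shapes, and the random inputs are illustrative and are not taken from the authors' implementation.

```python
import numpy as np


def class_activation_map(feature_maps, class_weights, class_idx):
    """Return the CAM for one class as a weighted sum of feature maps.

    feature_maps:  array of shape (H, W, K), activations of the last conv layer
    class_weights: array of shape (K, C), weights from pooled features to classes
    class_idx:     index c of the class to explain
    """
    weights = class_weights[:, class_idx]  # shape (K,)
    # Sum over the feature-map axis k: cam[x, y] = sum_k weights[k] * feature_maps[x, y, k]
    return np.tensordot(feature_maps, weights, axes=([2], [0]))


def normalise(cam):
    """Rescale a CAM to [0, 1] for display; a constant map becomes all zeros."""
    shifted = cam - cam.min()
    peak = shifted.max()
    return shifted / peak if peak > 0 else np.zeros_like(shifted)


# Toy example with hypothetical sizes: 4x4 spatial grid, 3 feature maps, 2 classes.
rng = np.random.default_rng(0)
feature_maps = rng.random((4, 4, 3))
class_weights = rng.normal(size=(3, 2))

cam = class_activation_map(feature_maps, class_weights, class_idx=1)
heatmap = normalise(cam)
```

In practice the normalised map would be upsampled to the input image size and overlaid on the chart image; that step is omitted here.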

3.5. Evaluation Metrics

3.6. Implementation Environment

4. Results

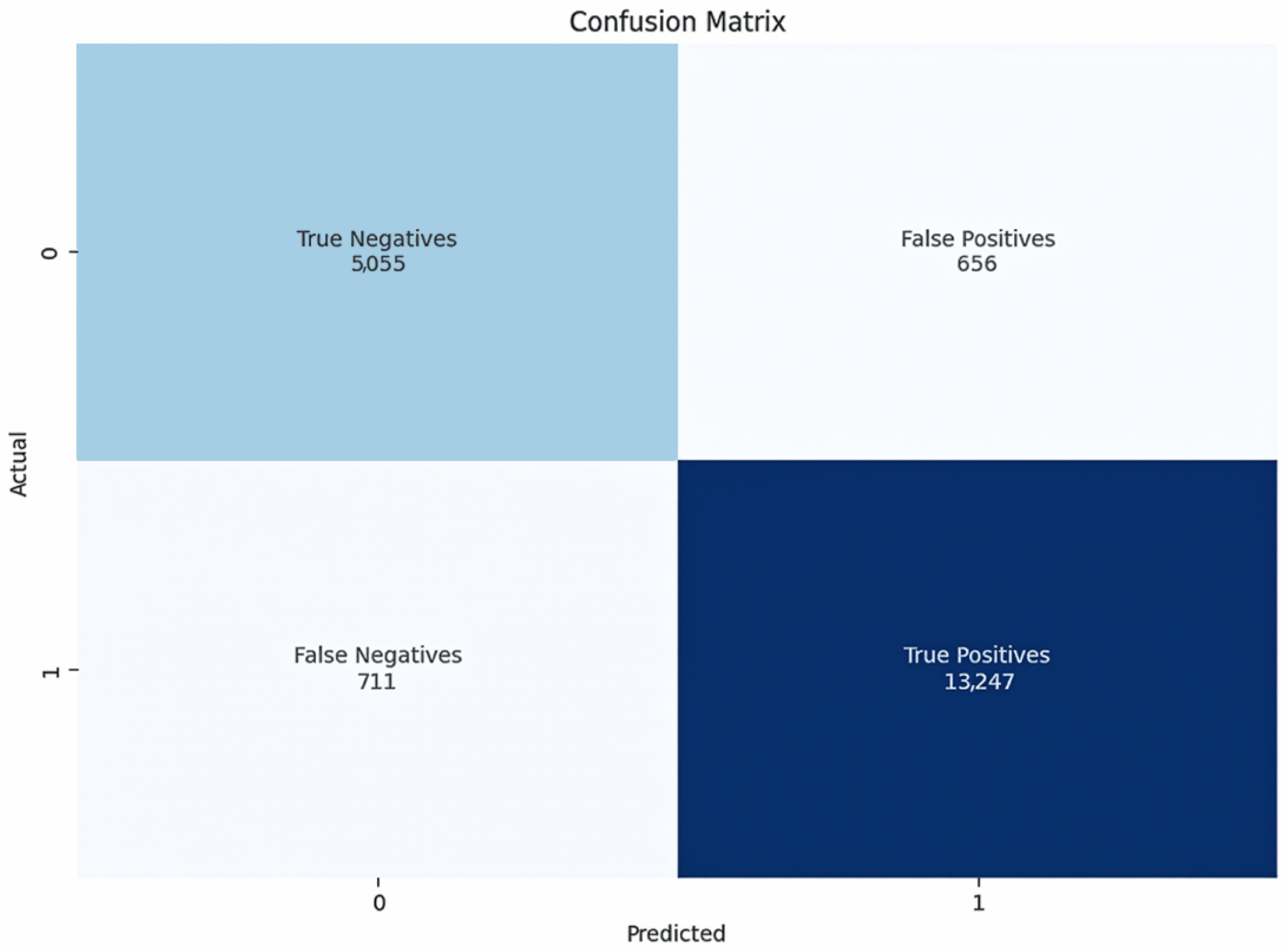

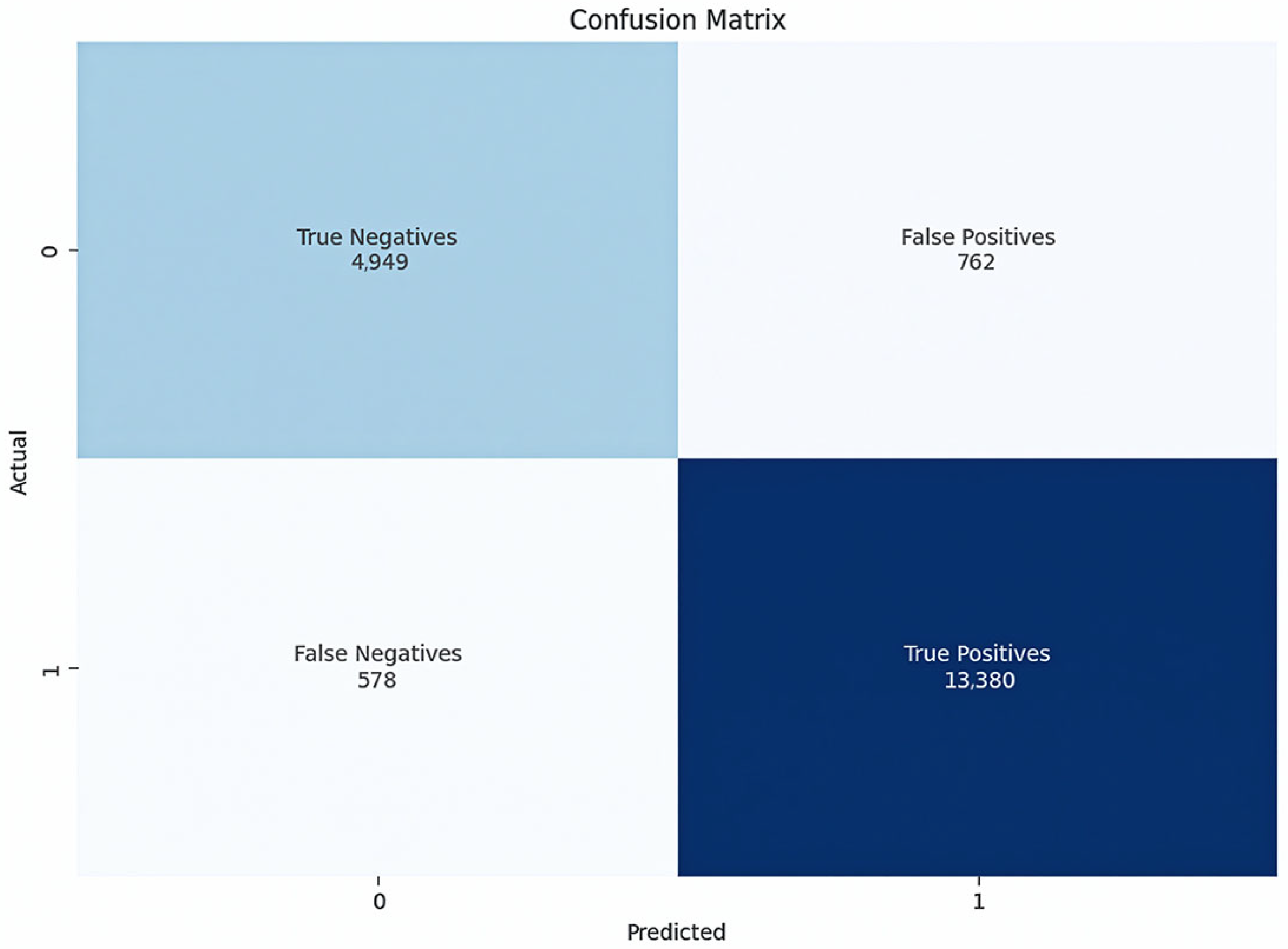

4.1. Performance Evaluation of Visual Encoding: Bar Charts

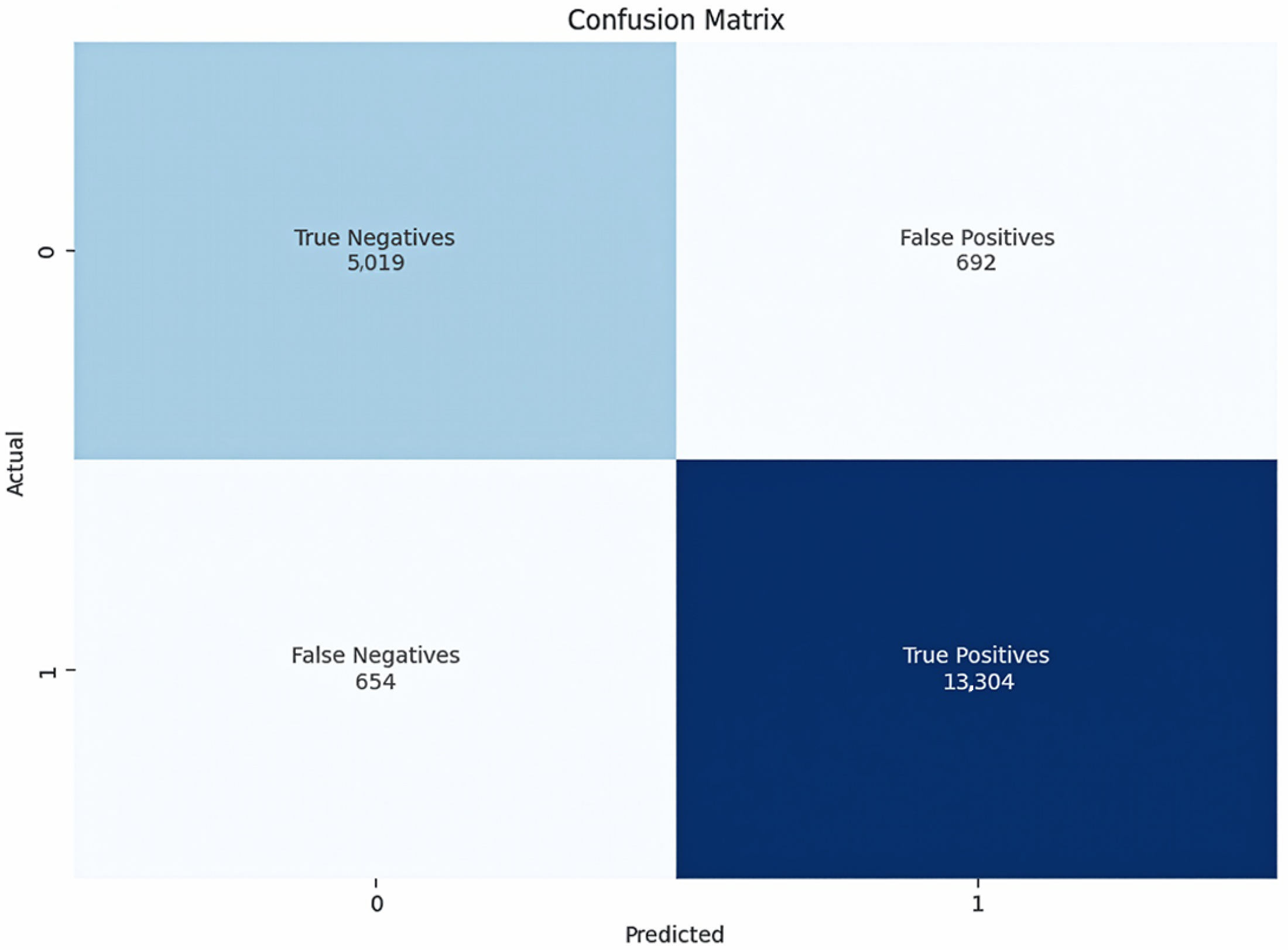

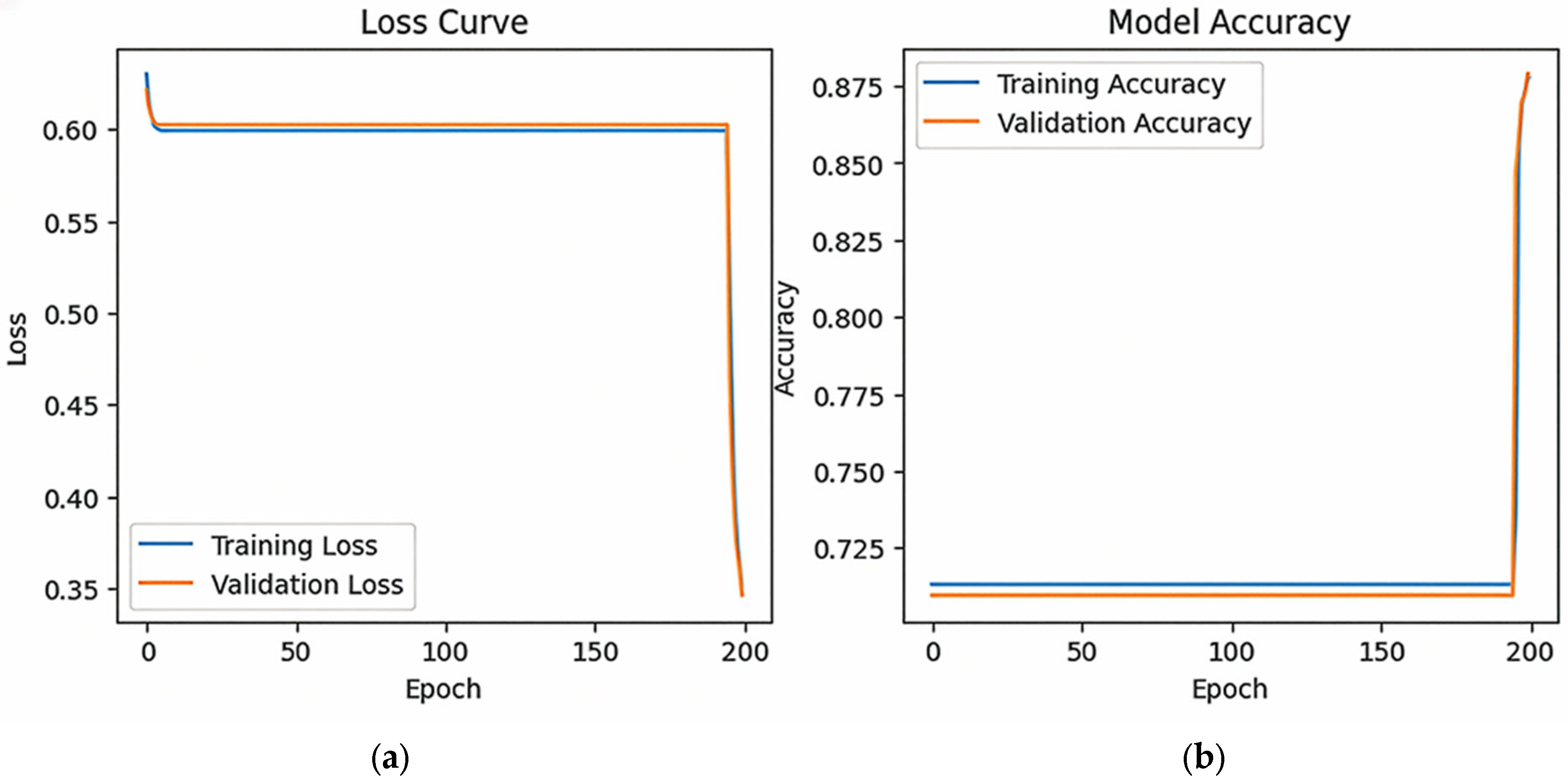

4.2. Performance Evaluation of Visual Encoding: Pie Charts

4.3. Comparison with Logistic Baseline Models

4.4. Analysis of CAM-Based Interpretability

4.5. Interpretation of the Results

5. Discussion

5.1. Key Findings

5.2. Comparison with Existing Methods

5.3. Influence of Visualisation Forms

5.4. Implications for Practice

5.5. Limitations and Future Research

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AI | Artificial Intelligence |
| CAM | Class Activation Map |
| CNN | Convolutional Neural Network |
| DL | Deep learning |
| F1 | F1 score (harmonic mean of precision and recall) |
| GAF | Gramian angular field |
| HR | Human Resources |
| k-NN | k-Nearest Neighbours |
| LIME | Local Interpretable Model-Agnostic Explanations |
| ML | Machine learning |
| PIL | Python Imaging Library |
| RP | Recurrence Plot |
| RGB | Red Green Blue (colour space) |
| SHAP | SHapley Additive Explanations |
| SVM | Support Vector Machine |
| XAI | Explainable Artificial Intelligence |

Appendix A

| Items | Submitted Responses | Missing Responses |
|---|---|---|
| Employer Recommendation | 65,563 | 0 |
| Salary/social benefits | 62,416 | 3147 |
| Image | 61,616 | 3947 |
| Career/training | 61,602 | 3961 |
| Working atmosphere | 65,134 | 429 |
| Communication | 62,736 | 2827 |
| Team spirit | 62,703 | 2860 |
| Work–life balance | 62,341 | 3222 |
| Supervisor behaviour | 62,723 | 2840 |
| Interesting tasks | 62,420 | 3143 |
| Working conditions | 62,276 | 3287 |
| Environmental/social awareness | 60,674 | 4889 |
| Equality | 61,144 | 4419 |
| Treatment of older colleagues | 60,117 | 5446 |
| | 85,231 | 44,417 |
| | | 5.2% |
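The aggregate figures at the foot of the table above can be checked from the per-item counts. The sketch below sums the missing responses and relates them to the number of possible responses. It assumes, as an inference that the table itself does not state, that the share is taken over the 13 items other than Employer Recommendation, each with 65,563 possible responses (submitted plus missing is 65,563 for every item).

```python
# Missing responses per item, copied from the table above.
missing = {
    "Employer Recommendation": 0,
    "Salary/social benefits": 3147,
    "Image": 3947,
    "Career/training": 3961,
    "Working atmosphere": 429,
    "Communication": 2827,
    "Team spirit": 2860,
    "Work-life balance": 3222,
    "Supervisor behaviour": 2840,
    "Interesting tasks": 3143,
    "Working conditions": 3287,
    "Environmental/social awareness": 4889,
    "Equality": 4419,
    "Treatment of older colleagues": 5446,
}

# Submitted + missing equals this value for every item in the table.
respondents = 65_563

total_missing = sum(missing.values())

# Assumption: the item without missing values is excluded from the denominator.
n_items = sum(1 for count in missing.values() if count > 0)
possible_responses = n_items * respondents
missing_share = total_missing / possible_responses

print(total_missing)            # 44417
print(possible_responses)       # 852319
print(f"{missing_share:.1%}")   # 5.2%
```

Under this assumption the computed sum and share agree with the 44,417 and 5.2% reported in the table.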

| Code | Items |
|---|---|
| WEW | Employer Recommendation |
| GSL | Salary/social benefits |
| IMG | Image |
| KWB | Career/training |
| AAP | Working atmosphere |
| KOM | Communication |
| KZH | Team spirit |
| WLB | Work–life balance |
| VGV | Supervisor behaviour |
| IAG | Interesting tasks |
| ABD | Working conditions |
| USB | Environmental/social awareness |
| GBR | Equality |
| UÄK | Treatment of older colleagues |


| Module | Version | Usage |
|---|---|---|
| opencv-python | 4.8.1.78 | Heatmap overlay |
| Matplotlib | 3.7.3 | Image generation and visualisation |
| NumPy | 1.23.5 | Data management |
| Pandas | 2.3.0 | Data management |
| Pillow | 10.0.1 | Image generation and visualisation |
| scikit-learn | 1.2.2 | Data preprocessing and splits |
| TensorFlow/Keras | 2.12.0 | Model training |

| Variable | β | Std. Error | z | p-Value | 95% CI [Lower, Upper] |
|---|---|---|---|---|---|
| Constant | −9.91 | 0.12 | −80.63 | <0.001 | [−10.15, −9.67] |
| Salary/social benefits | 0.30 | 0.02 | 14.48 | <0.001 | [0.26, 0.34] |
| Image | 0.49 | 0.02 | 21.23 | <0.001 | [0.44, 0.53] |
| Career/training | 0.40 | 0.02 | 18.34 | <0.001 | [0.36, 0.45] |
| Working atmosphere | 0.57 | 0.03 | 20.80 | <0.001 | [0.51, 0.62] |
| Communication | 0.36 | 0.03 | 14.70 | <0.001 | [0.32, 0.41] |
| Team spirit | −0.04 | 0.02 | −1.79 | 0.073 | [−0.09, 0.00] |
| Work–life balance | 0.18 | 0.02 | 8.42 | <0.001 | [0.14, 0.22] |
| Supervisor behaviour | 0.46 | 0.02 | 21.58 | <0.001 | [0.42, 0.50] |
| Interesting tasks | 0.24 | 0.02 | 11.14 | <0.001 | [0.19, 0.28] |
| Working conditions | 0.19 | 0.03 | 7.86 | <0.001 | [0.15, 0.24] |
| Environmental/social awareness | 0.20 | 0.02 | 8.51 | <0.001 | [0.16, 0.25] |
| Equality | −0.01 | 0.02 | −0.35 | 0.724 | [−0.05, 0.04] |
| Treatment of older colleagues | −0.02 | 0.02 | −0.80 | 0.423 | [−0.06, 0.03] |
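Coefficients of a logistic model are on the log-odds scale, so exponentiating β gives the odds ratio for a one-unit increase in the predictor with the other predictors held fixed. The short sketch below applies this to a few β values copied from the table above. The dictionary name `betas` and the choice of rows are illustrative only.

```python
import math

# A few coefficients (log-odds scale) copied from the regression table.
betas = {
    "Working atmosphere": 0.57,
    "Image": 0.49,
    "Supervisor behaviour": 0.46,
    "Team spirit": -0.04,
}

# Odds ratio per one-unit increase in the predictor: exp(beta).
odds_ratios = {item: math.exp(beta) for item, beta in betas.items()}

for item, ratio in odds_ratios.items():
    print(f"{item}: odds ratio = {ratio:.2f}")
```

An odds ratio above 1 means that higher values of the item go with higher odds of the modelled outcome, and a value below 1 means the reverse. The table's p-values and confidence intervals still decide whether an effect can be distinguished from zero.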

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Woelk, M.; Nam, M.; Häckel, B.; Spörrle, M. From Questionnaires to Heatmaps: Visual Classification and Interpretation of Quantitative Response Data Using Convolutional Neural Networks. Appl. Sci. 2025, 15, 10642. https://doi.org/10.3390/app151910642
