Adaptive Energy–Gradient–Contrast (EGC) Fusion with AIFI-YOLOv12 for Improving Nighttime Pedestrian Detection in Security

Abstract

1. Introduction

- (1)

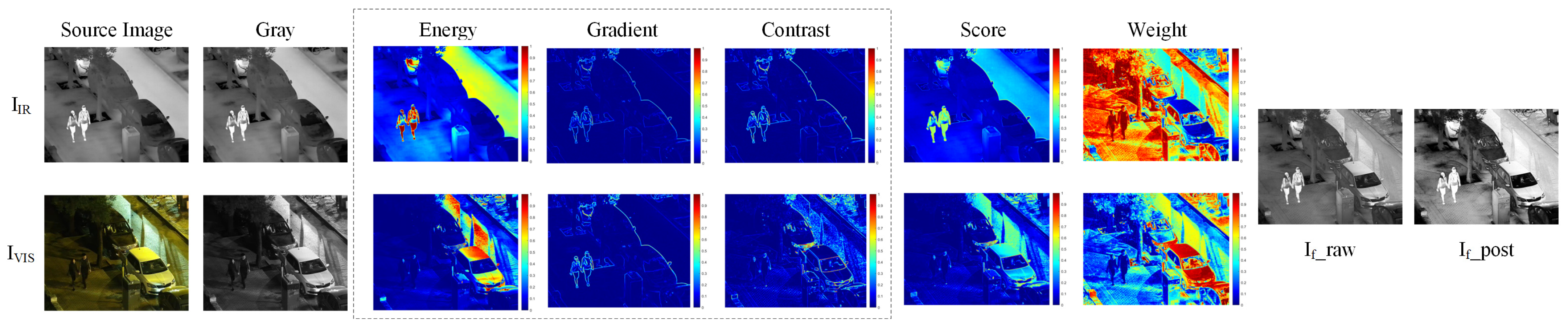

- Adaptive EGC fusion: We propose an adaptive Energy–Gradient–Contrast (EGC) pixel-wise fusion strategy that computes per-pixel weights from energy, gradient, and local contrast to fuse IR and VIS images. This fusion preserves the saliency of thermal imagery while retaining visible-light edge details in low-light/nighttime scenes, thereby improving target boundary localization and the detectability of weak targets [3,6].

- (2)

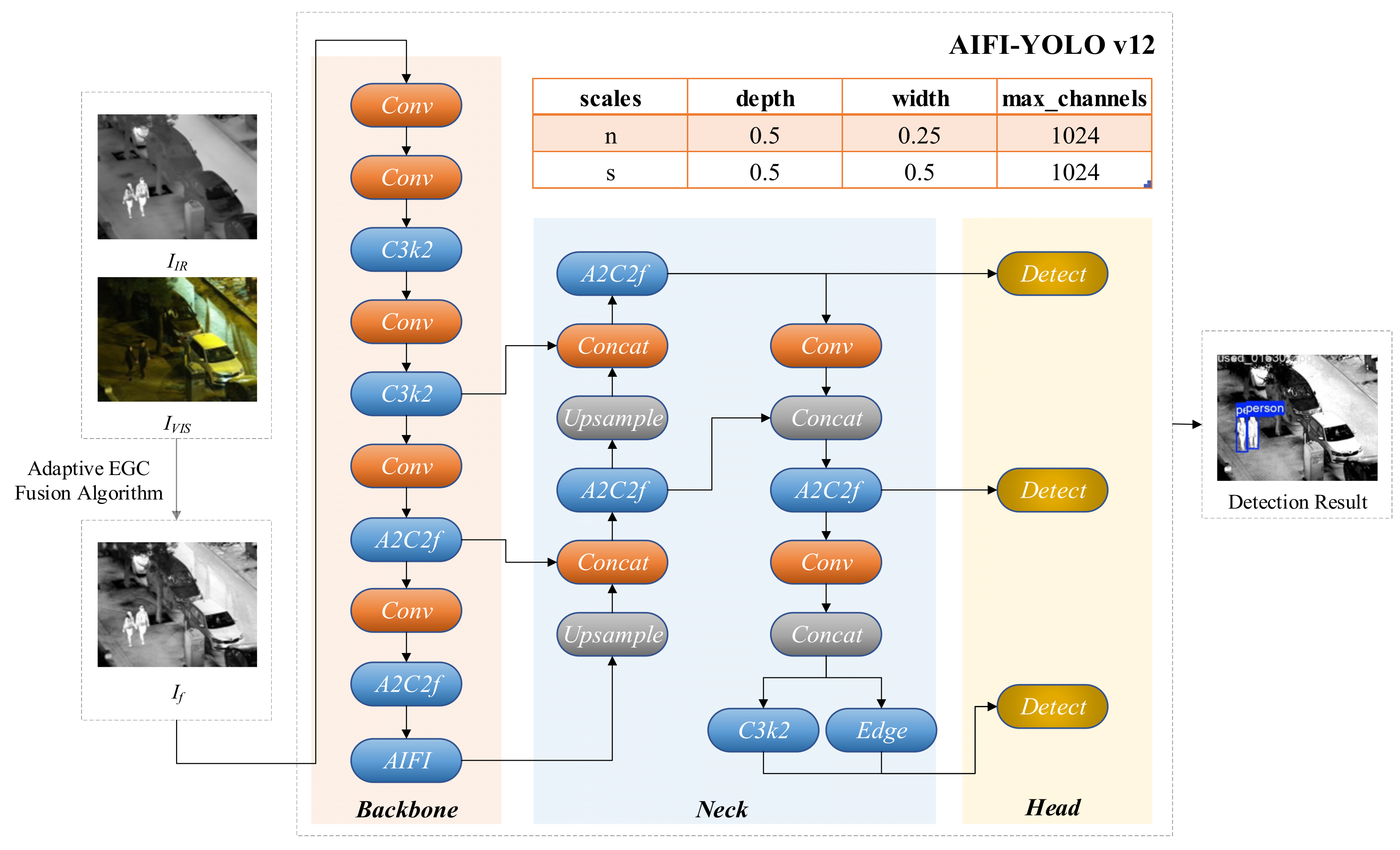

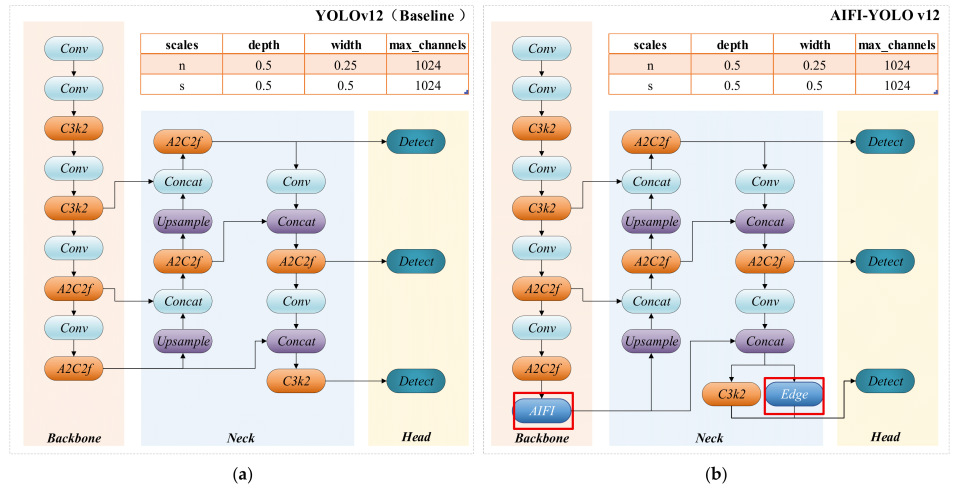

- AIFI-YOLOv12 architecture: We introduce an Adaptive Inter-Feature Interaction (AIFI) module into the high-level layers of the YOLOv12 backbone. By applying joint channel and spatial re-calibration, AIFI suppresses modal noise and enhances discriminative feature responses, helping to reduce false positives caused by thermal noise. We introduce an efficient edge-enhancement branch while retaining P3–P5 multi-scale detection heads, which improves localization and detection of distant, small, and edge-blurred targets without significantly increasing computational burden. The overall architecture balances accuracy and deployment efficiency, making it well-suited for resource-constrained nighttime surveillance scenarios [11,17].

- (3) Comprehensive evaluation on LLVIP: We present a systematic experimental study on the LLVIP dataset, reporting Precision, Recall, mAP@50, mAP@50–95, GFLOPs, and FPS, and we compare our method with recent baselines (YOLOv8, YOLOv10, YOLOv11, and YOLOv12 at the n and s scales) under identical training and evaluation protocols to ensure a fair comparison [1,16].

2. Related Work

2.1. Infrared–Visible Image Fusion

2.2. Multimodal (RGB–Thermal) Pedestrian Detection

2.3. Attention Modules and Detector Backbone Design for Degraded Inputs

3. Methodology of the Proposed Framework

3.1. Overview of the Framework

3.2. Adaptive EGC Fusion

- Local energy: computed over a small (3 × 3) neighborhood, local energy measures the aggregate squared intensity (thermal or luminous power).

- Gradient magnitude: computed with finite differences, the gradient magnitude captures edge strength and object contours.

- Local contrast: estimated as the local standard deviation over a larger (5 × 5) neighborhood, local contrast captures texture and intensity variability.
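The three cues above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the exact formulas, the normalization, and the rule that turns the cue maps into fusion weights are not given in this section. Here each cue is min-max normalized and combined into a saliency map S with weights (0.4, 0.3, 0.3), taken from the best-performing row of the weight ablation table, and the IR weight is assumed to be w_IR = S_IR / (S_IR + S_VIS). The helper names (`box_mean`, `egc_saliency`, `egc_fuse`) are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def box_mean(img: np.ndarray, k: int) -> np.ndarray:
    """Mean over a k x k window (k odd), reflect-padded so the output matches the input shape."""
    pad = k // 2
    padded = np.pad(img, pad, mode="reflect")
    return sliding_window_view(padded, (k, k)).mean(axis=(-2, -1))


def local_energy(img: np.ndarray, k: int = 3) -> np.ndarray:
    """Sum of squared intensities over a k x k neighbourhood."""
    return box_mean(img ** 2, k) * (k * k)


def gradient_magnitude(img: np.ndarray) -> np.ndarray:
    """Edge strength from central finite differences (one-sided at the borders)."""
    gy, gx = np.gradient(img)  # derivative along rows (y), then columns (x)
    return np.hypot(gx, gy)


def local_contrast(img: np.ndarray, k: int = 5) -> np.ndarray:
    """Local standard deviation over a k x k neighbourhood: sqrt(E[x^2] - E[x]^2)."""
    mean = box_mean(img, k)
    var = box_mean(img ** 2, k) - mean ** 2
    return np.sqrt(np.clip(var, 0.0, None))  # clip tiny negative rounding errors


def minmax(x: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Rescale a cue map to [0, 1] so the three cues are comparable (assumption)."""
    return (x - x.min()) / (x.max() - x.min() + eps)


def egc_saliency(img: np.ndarray, weights=(0.4, 0.3, 0.3)) -> np.ndarray:
    """Hypothetical combination rule: weighted sum of the normalised E, G, C cues."""
    a, b, c = weights
    return (a * minmax(local_energy(img))
            + b * minmax(gradient_magnitude(img))
            + c * minmax(local_contrast(img)))


def egc_fuse(ir: np.ndarray, vis: np.ndarray, weights=(0.4, 0.3, 0.3),
             eps: float = 1e-8) -> np.ndarray:
    """Pixel-wise convex combination of IR and VIS driven by their EGC saliency."""
    s_ir = egc_saliency(ir, weights)
    s_vis = egc_saliency(vis, weights)
    # Assumed weighting: w_IR in (0, 1), and exactly 0.5 where both saliencies are zero.
    w_ir = (s_ir + eps) / (s_ir + s_vis + 2 * eps)
    return w_ir * ir + (1.0 - w_ir) * vis


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ir, vis = rng.random((64, 64)), rng.random((64, 64))  # stand-ins for registered images
    print(egc_fuse(ir, vis).shape)  # (64, 64)
```

Because w_IR lies strictly between 0 and 1, every fused pixel stays between its IR and VIS values, and where both saliency maps are zero the rule falls back to an equal-weight average.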

3.3. Improved YOLOv12 Detection Network

3.3.1. Overall Architecture and Design Objectives

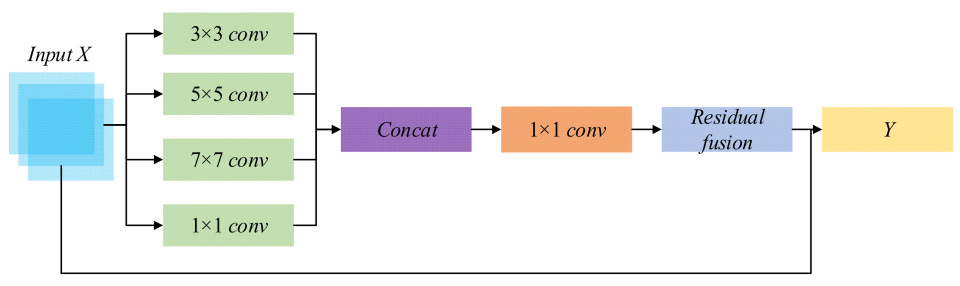

3.3.2. Composite Multi-Receptive-Field Feature Blocks (Backbone)—Retaining Energy and Gradient Cues at Low Levels

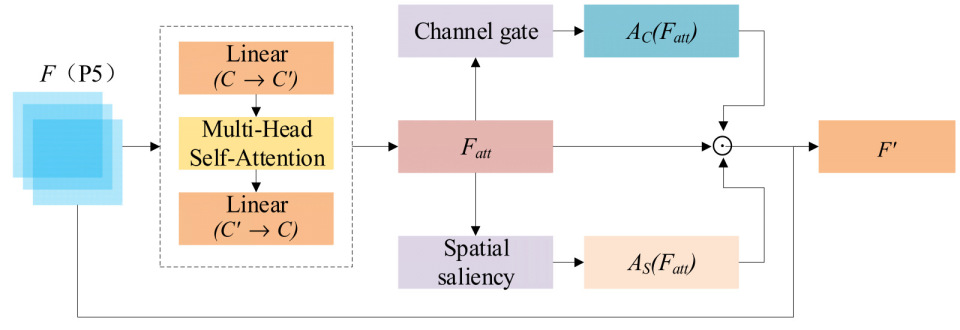

3.3.3. AIFI: Adaptive Inter-Feature Interaction—Semantic and Saliency Re-Calibration at High Level
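The Introduction describes AIFI as jointly re-calibrating the channel and spatial responses of high-level features. The NumPy sketch below shows one common way to do that, a CBAM-style channel gate followed by a spatial gate; it is an assumption for illustration, not the paper's module. The spatial gate uses a per-pixel linear combination of the channel-wise mean and max in place of CBAM's 7 × 7 convolution, and all weights, shapes, and function names are hypothetical. The example uses a 16 × 16 map, the stride-32 (P5) resolution for the 512 × 512 input used in the experiments.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def channel_gate(feat: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Squeeze-and-excitation style channel weights for a (C, H, W) feature map."""
    z = feat.mean(axis=(1, 2))           # global average pooling -> (C,)
    hidden = np.maximum(w1 @ z, 0.0)     # bottleneck layer with ReLU -> (C // r,)
    return sigmoid(w2 @ hidden)          # per-channel weights in (0, 1) -> (C,)


def spatial_gate(feat: np.ndarray, a: float = 1.0, b: float = 1.0,
                 bias: float = 0.0) -> np.ndarray:
    """Per-pixel weights from channel-wise mean and max (1x1 stand-in for a 7x7 conv)."""
    avg = feat.mean(axis=0)              # (H, W)
    mx = feat.max(axis=0)                # (H, W)
    return sigmoid(a * avg + b * mx + bias)


def recalibrate(feat: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Channel re-calibration followed by spatial re-calibration (CBAM ordering)."""
    out = feat * channel_gate(feat, w1, w2)[:, None, None]
    return out * spatial_gate(out)[None, :, :]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    C, H, W, r = 64, 16, 16, 16          # hypothetical channel count and reduction ratio
    feat = rng.standard_normal((C, H, W))
    w1 = rng.standard_normal((C // r, C)) * 0.1
    w2 = rng.standard_normal((C, C // r)) * 0.1
    print(recalibrate(feat, w1, w2).shape)  # (64, 16, 16)
```

Both gates only scale responses by factors in (0, 1), so the module can attenuate noisy channels and locations without adding new activations; a learned version would train `w1`, `w2`, and the spatial parameters end to end.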

3.3.4. Edge-Enhanced Multi-Scale Detection Head—Improving Response to Small and Weak-Edge Targets

3.3.5. Implementation Details and Engineering Trade-Offs

4. Experimental Results

4.1. Dataset

- Training Set (70%): Contains 21,683 images (approximately 10,842 image pairs). The training set is used to train the model, and all input images are processed using the Adaptive EGC fusion method to generate fused single-channel images for YOLOv12 detection.

- Validation Set (20%): Contains 6195 images (approximately 3098 image pairs), used for hyperparameter selection and model evaluation during training, ensuring the best-performing model is selected.

- Test Set (10%): Contains 3098 images (approximately 1548 image pairs), used for final evaluation to assess the model’s generalization on an independent test set.

| Total Number of Images | Original Image Size | Input Image Size | Data Split (Train/Validation/Test) |
|---|---|---|---|
| 30,976 (15,488 pairs) | Infrared: 1280 × 720 px; Visible: 1920 × 1080 px | 512 × 512 px | 7:2:1 |
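The split can be made at the pair level so that each IR image and its VIS counterpart always land in the same subset, which matters here because every sample is fused from both modalities. Whether the authors shuffled or split by pair is an assumption; the helper `split_pairs` and its seed are hypothetical. Rounding the 70% and 20% shares of the 15,488 pairs and assigning the remainder to the test set gives exactly the pair counts listed above.

```python
import numpy as np


def split_pairs(n_pairs: int, ratios=(0.7, 0.2, 0.1), seed: int = 0):
    """Shuffle pair indices and split them into train/val/test.

    Splitting by pair index keeps each IR image and its VIS counterpart in the
    same subset, so no fused input mixes images from different splits.
    """
    n_train = round(n_pairs * ratios[0])
    n_val = round(n_pairs * ratios[1])
    idx = np.random.default_rng(seed).permutation(n_pairs)
    train = idx[:n_train]
    val = idx[n_train:n_train + n_val]
    test = idx[n_train + n_val:]  # the remainder goes to the test split
    return train, val, test


train, val, test = split_pairs(15488)
print(len(train), len(val), len(test))  # 10842 3098 1548
```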

4.2. Experimental Environment and Settings

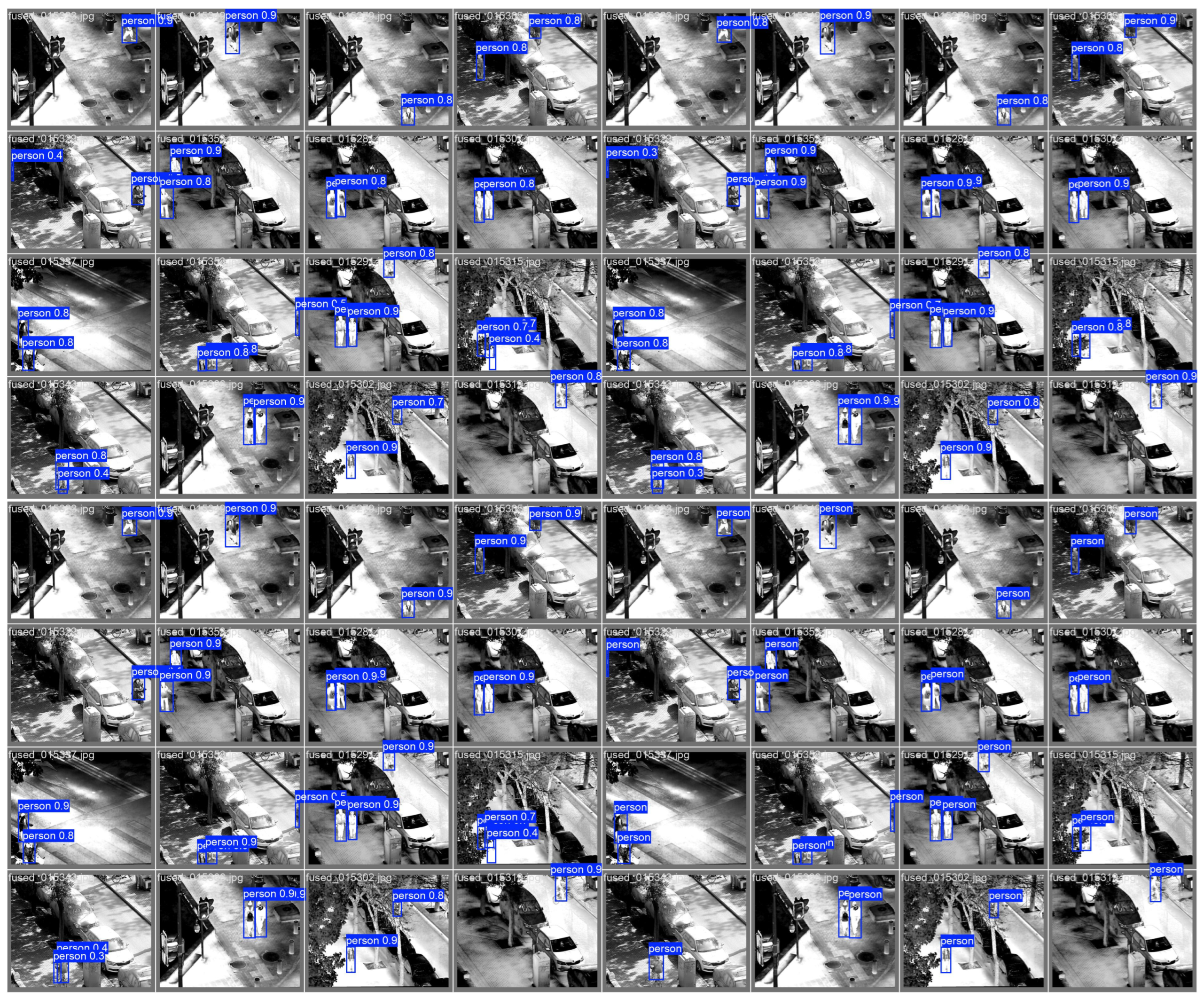

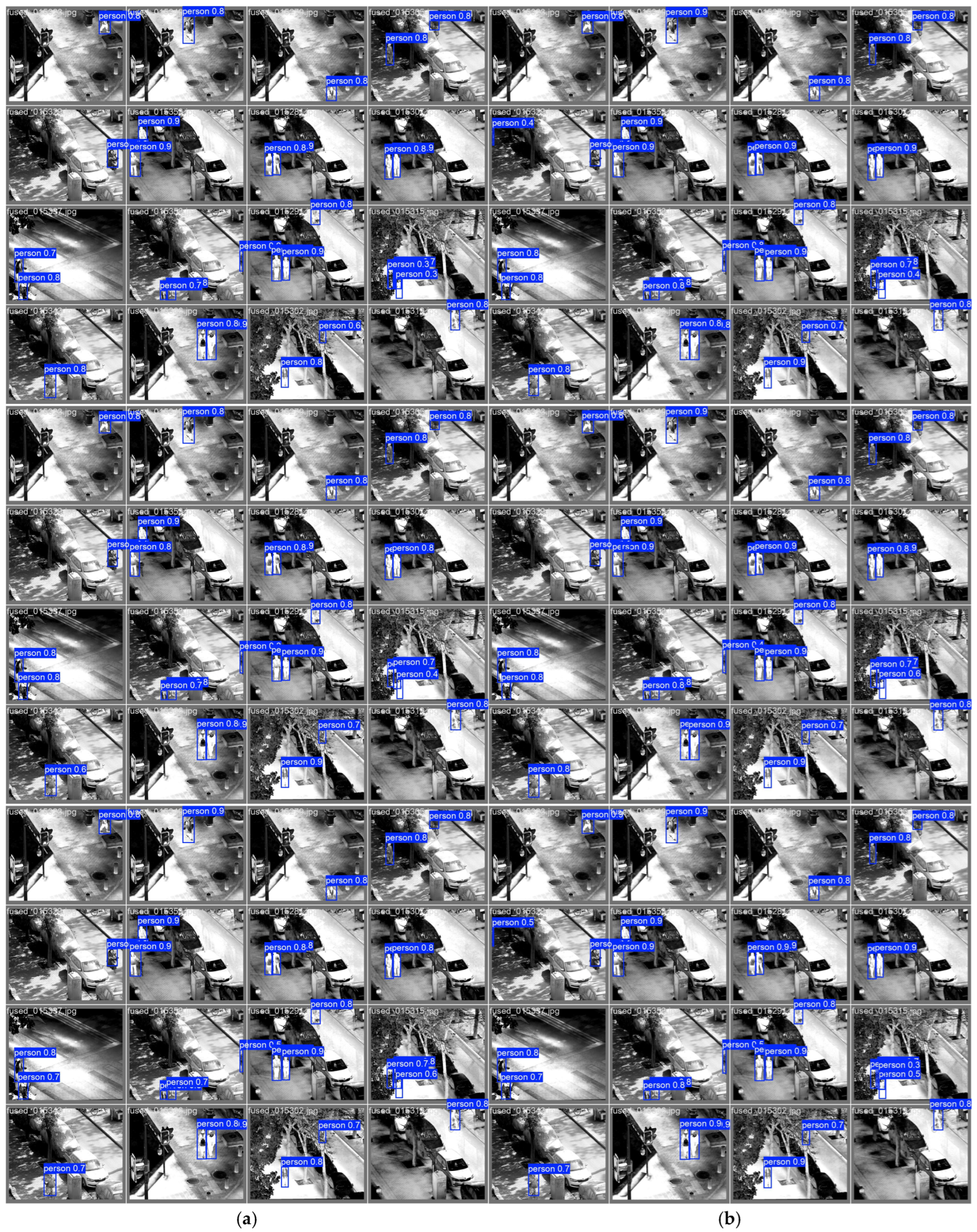

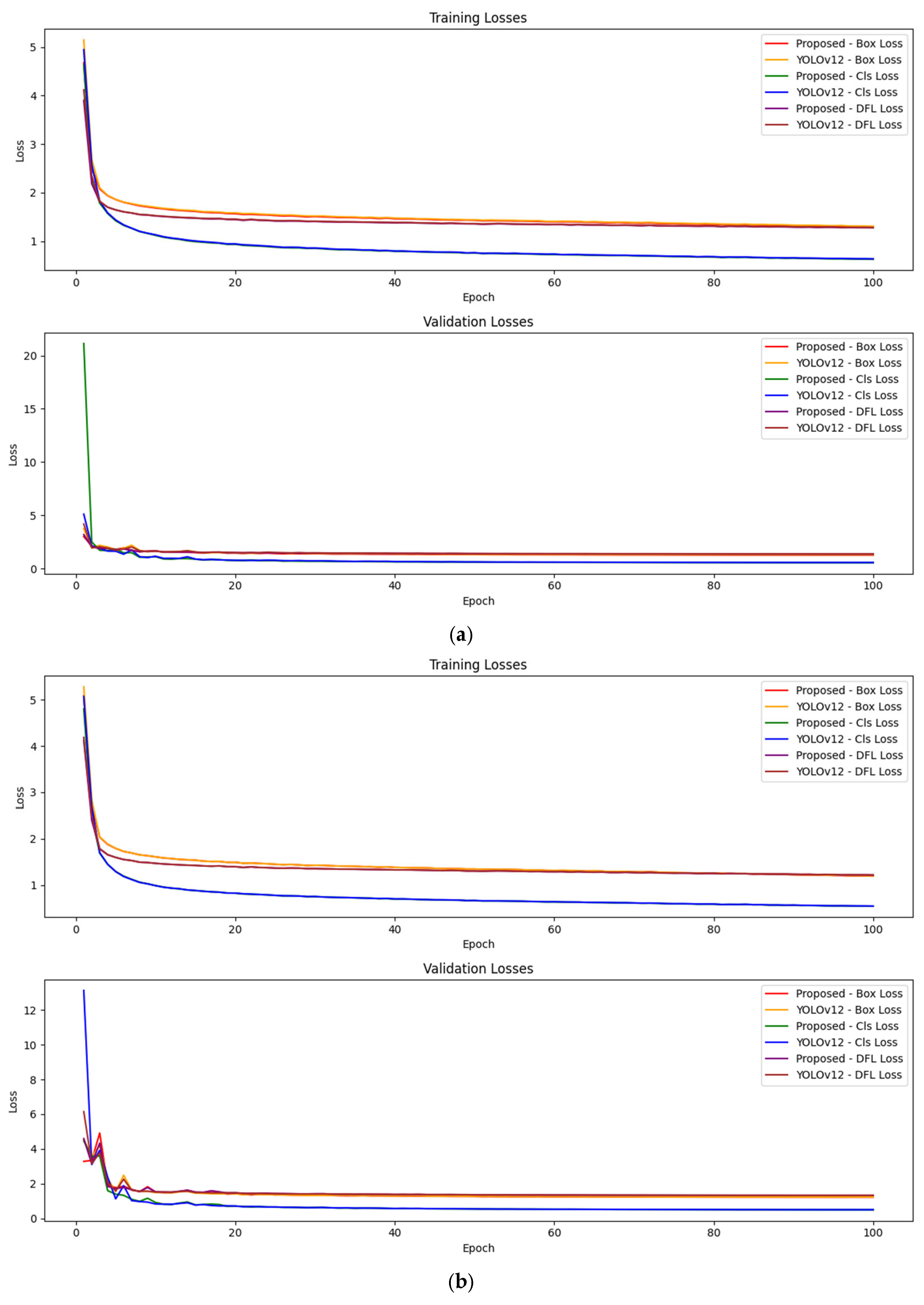

4.3. Experimental Results and Analysis

1. Detection Robustness:

2. Small Object Detection Performance:

3. Edge and Localization Improvements:

4. Computational Efficiency:

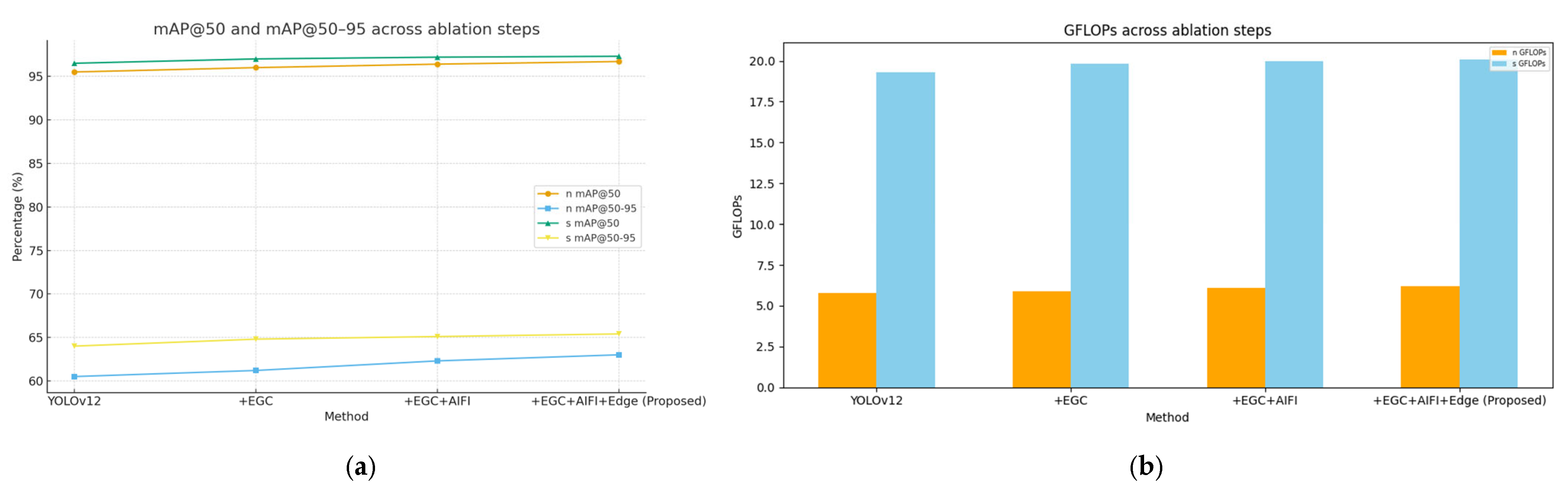

4.4. Ablation Experiments

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Jia, X.; Zhu, C.; Li, M.; Tang, W.; Liu, S.; Zhou, W. LLVIP: A Visible–Infrared Paired Dataset for Low-Light Vision. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Montreal, QC, Canada, 11–17 October 2021.

2. Meng, B.; Liu, H.; Ding, Z. Multi-scene Image Fusion via Memory Aware Synapses. Sci. Rep. 2025, 15, 14280.

3. Yang, K.; Xiang, W. A review on infrared and visible image fusion algorithms based on neural networks. J. Vis. Commun. Image Represent. 2024, 101, 104179.

4. Ma, W.; Wang, K.; Li, J.; Yang, S.X.; Li, J.; Song, L.; Li, Q. Infrared and Visible Image Fusion Technology and Application: A Review. Sensors 2023, 23, 599.

5. Li, H.; Wu, X.-J. DenseFuse: A Fusion Approach to Infrared and Visible Images. IEEE Trans. Image Process. 2018, 28, 2614–2623.

6. Ma, J.; Yu, W.; Liang, P.; Li, C.; Jiang, J. FusionGAN: A Generative Adversarial Network for Infrared and Visible Image Fusion. Inf. Fusion 2019, 48, 11–26.

7. Zhang, X.; Zhang, X.; Wang, J.; Ying, J.; Sheng, Z.; Yu, H.; Li, C.; Shen, H.-L. TFDet: Target-Aware Fusion for RGB–T Pedestrian Detection. IEEE Trans. Neural Netw. Learn. Syst. 2024, 36, 13276–13290.

8. Guo, H.; Sun, C.; Zhang, J.; Zhang, W.; Zhang, N. MMYFNet: Multi-Modality YOLO Fusion Network for Object Detection in Remote Sensing Images. Remote Sens. 2024, 16, 4451.

9. Li, L.; Shi, Y.; Lv, M.; Jia, Z.; Liu, M.; Zhao, X.; Zhang, X.; Ma, H. Infrared and Visible Image Fusion via Sparse Representation and Guided Filtering in Laplacian Pyramid Domain. Remote Sens. 2024, 16, 3804.

10. Wang, J.; Chen, Y.; Sun, X.; Xing, H.; Zhang, F.; Song, S.; Yu, S. Advancing Infrared and Visible Image Fusion with an Enhanced Multiscale Encoder and Attention-Based Networks. Information (Open Access/PMC) 2024. Available online: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC11459406/ (accessed on 23 August 2025).

11. Ma, J.; Zhang, H.; Shao, Z.; Liang, P.; Xu, H. GANMcC: A Generative Adversarial Network with Multi-Classification Constraints for Infrared and Visible Image Fusion. IEEE Trans. Instrum. Meas. 2021, 70, 5005014.

12. Li, J.; Huo, H.; Li, C.; Wang, R.; Feng, Q. AttentionFGAN: Infrared and Visible Image Fusion Using Attention-Based Generative Adversarial Networks. IEEE Trans. Multimed. 2021, 23, 1383–1396.

13. Ultralytics. YOLOv12: Attention-Centric Real-Time Object Detectors. arXiv 2025, arXiv:2502.12524. Available online: https://arxiv.org/abs/2502.12524 (accessed on 23 August 2025).

14. Ultralytics. YOLOv12 Model Family—Documentation. Available online: https://docs.ultralytics.com/models/yolo12/ (accessed on 23 August 2025).

15. Liu, R.; Huang, M.; Wang, L.; Bi, C.; Tao, Y. PDT-YOLO: A Roadside Object-Detection Algorithm for Multiscale and Occluded Targets. Sensors 2024, 24, 2302.

16. Jegham, N.; Koh, C.Y.; Abdelatti, M.; Hendawi, A. YOLO Evolution: Comprehensive Benchmark (v3 → v12). arXiv 2024, arXiv:2411.00201. Available online: https://arxiv.org/abs/2411.00201 (accessed on 23 August 2025).

17. Xue, Z. TFDet Code Repository. Available online: https://github.com/XueZ-phd/TFDet (accessed on 23 August 2025).

18. Qi, B.; Bai, X.; Wu, W.; Zhang, Y.; Lv, H.; Li, G. A Novel Saliency-Based Decomposition Strategy for Infrared and Visible Image Fusion. Remote Sens. 2023, 15, 2624.

19. He, Q.; Huang, Y. TPFusion: Texture-Preserving and Information Loss Minimization for Infrared and Visible Image Fusion. Sci. Rep. 2025, 15, 26817.

20. Song, K.; Zhao, Y.; Huang, L.; Yan, Y.; Meng, Q. RGB-T Image Analysis Technology and Application: A Survey. Eng. Appl. Artif. Intell. 2023, 120, 105919.

21. Zhang, H.; Fromont, E.; Lefèvre, S.; Avignon, B. Guided Attentive Feature Fusion for Multispectral Pedestrian Detection. In Proceedings of the 2021 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2021; pp. 72–80.

22. Hwang, S.; Park, J.; Kim, N.; Choi, Y.; Kweon, I.S. Multispectral Pedestrian Detection: Benchmark Dataset and Baseline. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1037–1045. Available online: https://openaccess.thecvf.com/content_cvpr_2015/html/Hwang_Multispectral_Pedestrian_Detection_2015_CVPR_paper.html (accessed on 23 August 2025).

23. Zhang, L.; Zhu, X.; Chen, X.; Yang, X.; Lei, Z.; Liu, Z. Weakly Aligned Cross-Modal Learning for Multispectral Pedestrian Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 5127–5137. Available online: https://openaccess.thecvf.com/content_ICCV_2019/html/Zhang_Weakly_Aligned_Cross-Modal_Learning_for_Multispectral_Pedestrian_Detection_ICCV_2019_paper.html (accessed on 23 August 2025).

24. Yang, X.; Qian, Y.; Zhu, H.; Wang, C.; Yang, M. BAANet: Learning Bi-Directional Adaptive Attention Gates for Multispectral Pedestrian Detection. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 2920–2926.

25. Li, Z.; Chen, Y.; Wang, L. Attention-Guided Dual-Branch Fusion Network for Infrared-Visible Pedestrian Detection in Low-Light Environments. Sensors 2024, 24, 3215.

26. Zhang, H.; Fromont, E.; Lefèvre, S.; Avignon, B. Multispectral Fusion for Object Detection with Cyclic Fuse-and-Refine Blocks. In Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 25–28 October 2020; pp. 276–280.

27. Xing, Y.; Yang, S.; Wang, S.; Zhang, S.; Liang, G.; Zhang, X.; Zhang, Y. MS-DETR: Multispectral Pedestrian Detection Transformer with Loosely Coupled Fusion and Modality-Balanced Optimization. IEEE Trans. Intell. Transp. Syst. 2024, 25, 20628–20642.

28. Zhao, J.; Wen, X.; He, Y.; Yang, X.; Song, K. Wavelet-Driven Multi-Band Feature Fusion for RGB-T Salient Object Detection. Sensors 2024, 24, 8159.

29. Shen, J.; Chen, Y.; Liu, Y.; Zuo, X.; Fan, H.; Yang, W. ICAFusion: Iterative Cross-Attention Guided Feature Fusion for Multispectral Object Detection. Pattern Recognit. 2024, 145, 109913.

30. Song, X.; Gao, S.; Chen, C. A multispectral feature fusion network for robust pedestrian detection. Alex. Eng. J. 2021, 60, 73–85.

31. Cao, Z.; Yang, H.; Zhao, J.; Guo, S.; Li, L. Attention Fusion for One-Stage Multispectral Pedestrian Detection. Sensors 2021, 21, 4184.

32. Yang, Y.; Xu, K.; Wang, K. Cascaded Information Enhancement and Cross-Modal Attention Feature Fusion for Multispectral Pedestrian Detection. Front. Phys. 2023, 11, 1121311.

33. Fang, Q.; Han, D.; Wang, Z. Cross-Modality Fusion Transformer for Multispectral Object Detection. arXiv 2021, arXiv:2111.00273. Available online: https://arxiv.org/abs/2111.00273 (accessed on 23 August 2025).

34. Liu, J.; Zhang, H.; Li, P.; Wu, Y.; Wang, Y. Learning a Dynamic Cross-Modal Network for Multispectral Pedestrian Detection. In Proceedings of the 30th ACM International Conference on Multimedia (MM ’22), Lisbon, Portugal, 10–14 October 2022; pp. 4926–4935.

35. Ji, H.; Zhang, X.; Li, Y.; Wang, P. Infrared and visible image fusion based on iterative control of anisotropic diffusion and regional gradient structure. Sensors 2022, 22, 7144991.

36. Li, H.; Wu, X.-J. An improved infrared and visible image fusion using an adaptive contrast enhancement method and deep learning network with transfer learning. Remote Sens. 2022, 14, 939.

37. Li, H.; Wu, X.-J.; Zhou, Z. Infrared and visible image fusion via fast approximate bilateral filter and local energy characteristics. Sci. Program. 2021, 2021, 3500116.

38. Luo, J.; Luo, H. Infrared and visible image fusion algorithm based on gradient attention residual dense block. PeerJ Comput. Sci. 2024, 10, e2569.

39. Hu, K.; Zhang, Q.; Yuan, M.; Zhang, Y. SFDFusion: An Efficient Spatial–Frequency Domain Fusion Network for Infrared and Visible Image Fusion. arXiv 2024, arXiv:2410.22837. Available online: https://arxiv.org/abs/2410.22837 (accessed on 24 August 2025).

40. Khanam, R.; Hussain, M. A Review of YOLOv12: Attention-Based Enhancements vs. Previous Versions. arXiv 2025, arXiv:2504.11995. Available online: https://arxiv.org/abs/2504.11995 (accessed on 23 August 2025).

| Environment | Configuration |
|---|---|
| Hardware | CPU: Intel(R) Xeon(R) Gold 6430 (Intel, Santa Clara, CA, USA); RAM: 120 GB; GPU: 1 × NVIDIA GeForce RTX 4090, 24 GB (NVIDIA, Santa Clara, CA, USA) |
| Software | Python 3.12.3; PyTorch 2.2.2; CUDA 12.1; Ultralytics YOLO v8.3.63 |

| Parameter | Value |
|---|---|
| Epochs | 100 |
| Batch size | 16 |
| Input size | 1 × 512 × 512 (single-channel fused input) |
| Optimizer | SGD (lr0 = 0.01, momentum = 0.937, weight_decay = 0.0005) |
| Learning rate | Initial lr0 = 0.01; cosine decay to lr_final = 1 × 10⁻⁴ (lrf = 0.01) |
| Pretrained weights | True (ImageNet-pretrained backbone) |
| Mixed precision | Enabled (FP16, torch.cuda.amp) |
| Loss function | CIoU + BCE + DFL |
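The learning-rate row describes a cosine decay from lr0 = 0.01 to lr0 × lrf = 0.01 × 0.01 = 1 × 10⁻⁴ over 100 epochs. A minimal sketch of such a half-cosine schedule follows; it ignores any warm-up phase, and `cosine_lr` is a hypothetical helper rather than a library API.

```python
import math


def cosine_lr(epoch: int, epochs: int = 100, lr0: float = 0.01, lrf: float = 0.01) -> float:
    """Half-cosine decay from lr0 at epoch 0 to lr0 * lrf at the final epoch."""
    progress = min(max(epoch / epochs, 0.0), 1.0)
    factor = (1 - math.cos(math.pi * progress)) / 2  # rises smoothly from 0 to 1
    return lr0 * (1 + factor * (lrf - 1))


for e in (0, 25, 50, 75, 100):
    print(e, f"{cosine_lr(e):.6f}")
```

The schedule stays close to lr0 early on, passes through the midpoint (lr0 + lr0 × lrf) / 2 = 0.00505 at epoch 50, and flattens out near 1 × 10⁻⁴ in the last epochs.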

| Method | Scale | Precision | Recall | mAP@50 | mAP@50–95 | FPS | GFLOPs | Detection Time (ms) |
|---|---|---|---|---|---|---|---|---|
| YOLOv8 | n | 93.9% | 92.3% | 96.6% | 62.6% | 185.2 | 8.1 | 5.4 |
| YOLOv10 | n | 93.3% | 92.1% | 96.2% | 62.8% | 120.0 | 12.5 | 8.33 |
| YOLOv11 | n | 94.1% | 91.9% | 96.3% | 62.2% | 238.1 | 6.3 | 4.20 |
| YOLOv12 (baseline) | n | 94.2% | 91.3% | 96.2% | 61.9% | 258.6 | 5.8 | 3.87 |
| Proposed | n | 94.4% | 92.3% | 96.7% | 63.0% | 242.0 | 6.2 | 4.13 |
| YOLOv8 | s | 94.6% | 94.2% | 97.3% | 65.1% | 52.8 | 28.4 | 18.94 |
| YOLOv10 | s | 94.4% | 93.6% | 97.1% | 65.1% | 61.2 | 24.5 | 16.34 |
| YOLOv11 | s | 95.1% | 93.4% | 97.2% | 65.3% | 70.4 | 21.3 | 14.20 |
| YOLOv12 (baseline) | s | 94.8% | 93.6% | 97.2% | 65.2% | 77.7 | 19.3 | 12.87 |
| Proposed | s | 95.1% | 93.4% | 97.3% | 65.4% | 74.6 | 20.1 | 13.40 |
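The Detection Time column equals 1000/FPS for every row of the comparison table (for example, 1000 / 258.6 ≈ 3.87 ms for YOLOv12n), so latency and throughput carry the same information. The short script below, with hypothetical helper names and values copied from the n-scale rows, makes the accuracy/cost trade-off of the proposed model explicit.

```python
def latency_ms(fps: float) -> float:
    """Per-image detection time implied by a throughput figure."""
    return 1000.0 / fps


def relative_change(new: float, old: float) -> float:
    """Signed change of `new` with respect to `old`, in percent."""
    return 100.0 * (new - old) / old


# n-scale rows of the comparison table: YOLOv12n baseline vs. proposed model
baseline = {"fps": 258.6, "gflops": 5.8, "map50_95": 61.9}
proposed = {"fps": 242.0, "gflops": 6.2, "map50_95": 63.0}

print(f"latency: {latency_ms(baseline['fps']):.2f} ms -> {latency_ms(proposed['fps']):.2f} ms")  # 3.87 ms -> 4.13 ms
print(f"FPS change: {relative_change(proposed['fps'], baseline['fps']):+.1f}%")  # -6.4%
print(f"GFLOPs change: {relative_change(proposed['gflops'], baseline['gflops']):+.1f}%")  # +6.9%
print(f"mAP@50-95 gain: {proposed['map50_95'] - baseline['map50_95']:+.1f} points")  # +1.1 points
```

For the n-scale model, the 1.1-point mAP@50–95 gain therefore costs about 6.4% of throughput and 6.9% more GFLOPs.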

| Experiment No. | Energy weight | Gradient weight | Contrast weight | mAP@50 | mAP@50–95 |
|---|---|---|---|---|---|
| 1 | 0.4 | 0.3 | 0.3 | 96.7% | 63.0% |
| 2 | 0.33 | 0.33 | 0.33 | 96.2% | 62.1% |
| 3 | 0.5 | 0.25 | 0.25 | 96.4% | 62.5% |
| 4 | 0.6 | 0.2 | 0.2 | 96.0% | 61.8% |
| 5 | 0.3 | 0.4 | 0.3 | 96.3% | 62.3% |
| 6 | 0.2 | 0.6 | 0.2 | 95.8% | 61.5% |
| 7 | 0.3 | 0.3 | 0.4 | 96.1% | 62.0% |
| 8 | 0.2 | 0.2 | 0.6 | 95.5% | 61.2% |

| Method | EGC | AIFI | Edge | mAP@50 | mAP@50–95 | FPS | GFLOPs | Detection Time (ms) |
|---|---|---|---|---|---|---|---|---|
| YOLOv12n | × | × | × | 95.5% | 60.5% | 258.6 | 5.8 | 3.87 |
| YOLOv12n + EGC | √ | × | × | 96.0% | 61.2% | 250.0 | 5.9 | 4.00 |
| YOLOv12n + EGC + AIFI | √ | √ | × | 96.4% | 62.3% | 245.0 | 6.1 | 4.08 |
| YOLOv12n + EGC + AIFI + Edge (Proposed) | √ | √ | √ | 96.7% | 63.0% | 242.0 | 6.2 | 4.13 |
| YOLOv12s | × | × | × | 96.5% | 64.0% | 77.7 | 19.3 | 12.87 |
| YOLOv12s + EGC | √ | × | × | 97.0% | 64.8% | 76.0 | 19.8 | 13.16 |
| YOLOv12s + EGC + AIFI | √ | √ | × | 97.2% | 65.1% | 75.0 | 20.0 | 13.33 |
| YOLOv12s + EGC + AIFI + Edge (Proposed) | √ | √ | √ | 97.3% | 65.4% | 74.6 | 20.1 | 13.40 |
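Reading the cumulative ablation as step-wise increments shows how much each module adds. The sketch below recomputes the mAP@50–95 increments from the rows of the ablation table, so the gains are measured against that table's own YOLOv12 baselines; the function and variable names are hypothetical.

```python
# mAP@50-95 (%) for the cumulative ablation rows, copied from the table
ABLATION = {
    "n": [("baseline", 60.5), ("+EGC", 61.2), ("+AIFI", 62.3), ("+Edge", 63.0)],
    "s": [("baseline", 64.0), ("+EGC", 64.8), ("+AIFI", 65.1), ("+Edge", 65.4)],
}


def stepwise_gains(rows):
    """Gain of each cumulative step over the previous one, plus the total gain."""
    gains = [(name, round(cur - prev, 1))
             for (_, prev), (name, cur) in zip(rows, rows[1:])]
    total = round(rows[-1][1] - rows[0][1], 1)
    return gains, total


for scale, rows in ABLATION.items():
    gains, total = stepwise_gains(rows)
    print(scale, gains, "total", total)
```

At the n scale the largest single increment comes from AIFI (+1.1 points), while at the s scale EGC contributes most (+0.8 points); the totals are +2.5 and +1.4 points, respectively.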

| Metrics | YOLOv12 (Baseline) vs. YOLOv12 + EGC | YOLOv12 + EGC vs. YOLOv12 + EGC + AIFI | YOLOv12 + EGC + AIFI vs. Proposed (EGC + AIFI + Edge) |
|---|---|---|---|
| Precision | p = 0.02 (significant) | p = 0.01 (significant) | p = 0.01 (significant) |
| Recall | p = 0.05 (marginally significant) | p = 0.03 (significant) | p = 0.04 (significant) |
| mAP@50 | p = 0.03 (significant) | p = 0.05 (marginally significant) | p = 0.02 (significant) |
| mAP@50–95 | p = 0.04 (significant) | p = 0.02 (significant) | p = 0.01 (significant) |
| FPS | p = 0.04 (significant) | p = 0.05 (marginally significant) | p = 0.04 (significant) |
| GFLOPs | p = 0.05 (marginally significant) | p = 0.03 (significant) | p = 0.02 (significant) |

| Metrics | YOLOv12 (Baseline) vs. YOLOv12 + EGC | YOLOv12 + EGC vs. YOLOv12 + EGC + AIFI | YOLOv12 + EGC + AIFI vs. Proposed (EGC + AIFI + Edge) |
|---|---|---|---|
| Precision | p = 0.01 (significant) | p = 0.02 (significant) | p = 0.01 (significant) |
| Recall | p = 0.04 (significant) | p = 0.05 (marginally significant) | p = 0.03 (significant) |
| mAP@50 | p = 0.01 (significant) | p = 0.04 (significant) | p = 0.01 (significant) |
| mAP@50–95 | p = 0.02 (significant) | p = 0.03 (significant) | p = 0.01 (significant) |
| FPS | p = 0.03 (significant) | p = 0.06 (marginally significant) | p = 0.05 (marginally significant) |
| GFLOPs | p = 0.04 (significant) | p = 0.05 (marginally significant) | p = 0.03 (significant) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, L.; Bao, Z.; Lu, D. Adaptive Energy–Gradient–Contrast (EGC) Fusion with AIFI-YOLOv12 for Improving Nighttime Pedestrian Detection in Security. Appl. Sci. 2025, 15, 10607. https://doi.org/10.3390/app151910607