Design and Research of an Intelligent Detection Method for Coal Mine Fire Edges

Abstract

1. Introduction

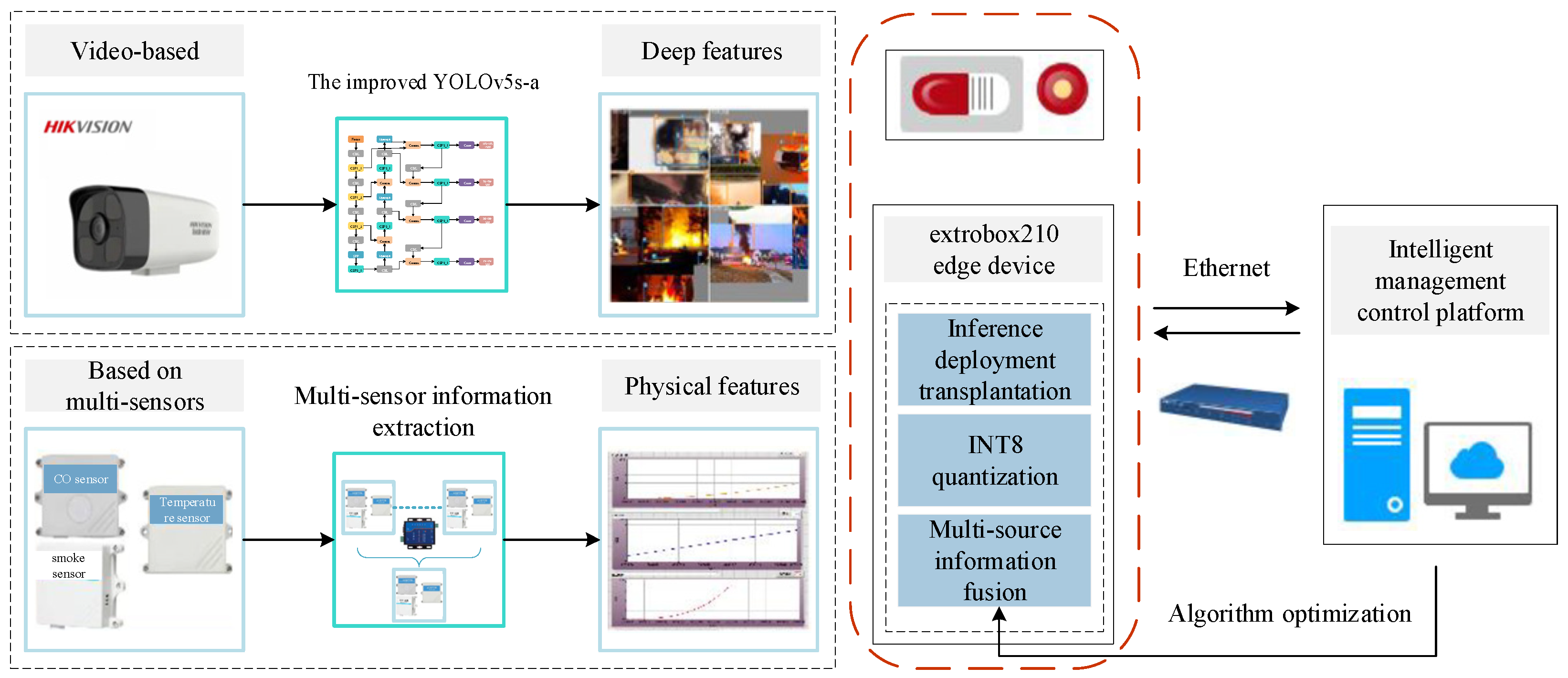

- This paper improves the classical YOLOv5s model by adding a small-target detection layer and an adaptive attention mechanism. The improved model detects small-scale flames precisely while reducing interference from non-fire light sources in underground environments.

- A dynamic fusion method weights the results of video-based and sensor-based detection and updates the weights in real time as new data arrive. This mitigates failures of the individual video or sensor channels and improves the reliability and accuracy of fire detection in complex underground environments.

- To meet real-time requirements, the proposed fire detection algorithm is deployed on an intelligent edge processor. Processing and analyzing video and sensor data directly at the edge avoids the transmission delay and response latency of cloud-based solutions: the reported response cycle is 304 ms with edge processing versus 411 ms with cloud processing.
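The dynamic weighting described in the second contribution above can be illustrated with a minimal sketch. It assumes that each channel reports a fire probability in [0, 1] together with a reliability score in [0, 1] (lowered, for example, when a camera is obscured by dust or a sensor stops reporting). The names `ChannelReading` and `fuse`, the reliability inputs, and the 0.5 alarm threshold are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class ChannelReading:
    """One detection channel's output (hypothetical structure)."""
    fire_prob: float    # estimated probability of fire, in [0, 1]
    reliability: float  # current trust in this channel, in [0, 1]


def fuse(video: ChannelReading, sensor: ChannelReading) -> float:
    """Reliability-weighted average of the two channels' fire probabilities.

    Weights are renormalised on every call, so a channel whose reliability
    drops to 0 (e.g. a failed camera) stops influencing the result.
    """
    total = video.reliability + sensor.reliability
    if total == 0.0:
        raise ValueError("both channels are unreliable; no decision possible")
    w_video = video.reliability / total
    w_sensor = sensor.reliability / total
    return w_video * video.fire_prob + w_sensor * sensor.fire_prob


ALARM_THRESHOLD = 0.5  # assumed value, for illustration only

# Both channels healthy: the result lies between the two estimates.
healthy = fuse(ChannelReading(0.9, 0.8), ChannelReading(0.6, 0.8))

# Camera failed (reliability 0): the decision falls back to the sensors.
camera_down = fuse(ChannelReading(0.0, 0.0), ChannelReading(0.7, 0.9))

print(healthy, healthy > ALARM_THRESHOLD)
print(camera_down, camera_down > ALARM_THRESHOLD)
```

Renormalising the weights on each call is one simple way to let the fusion adapt to incoming data; the paper's own weighting rule may differ.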

2. Related Work

2.1. Video-Based Fire Detection Methods

2.2. Sensor-Based Multi-Source Information Fusion Methods

3. Architecture Design of the System

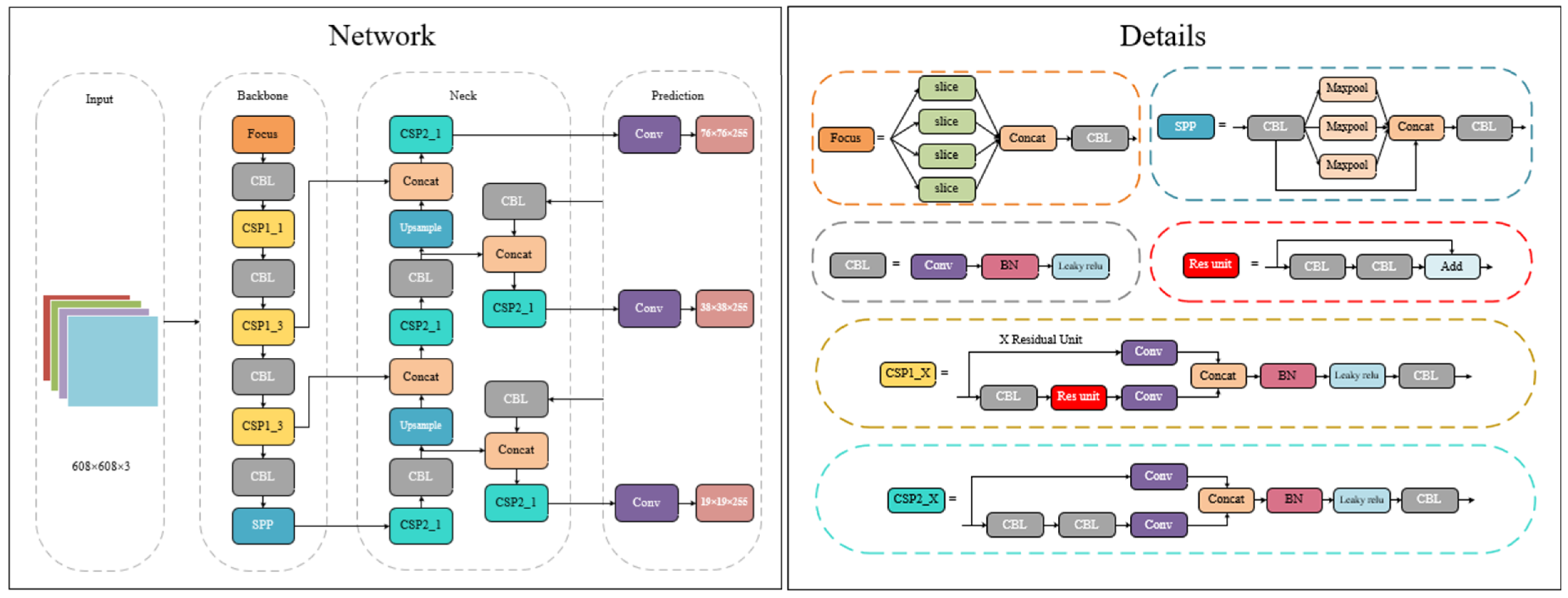

4. Improved YOLOv5s Model

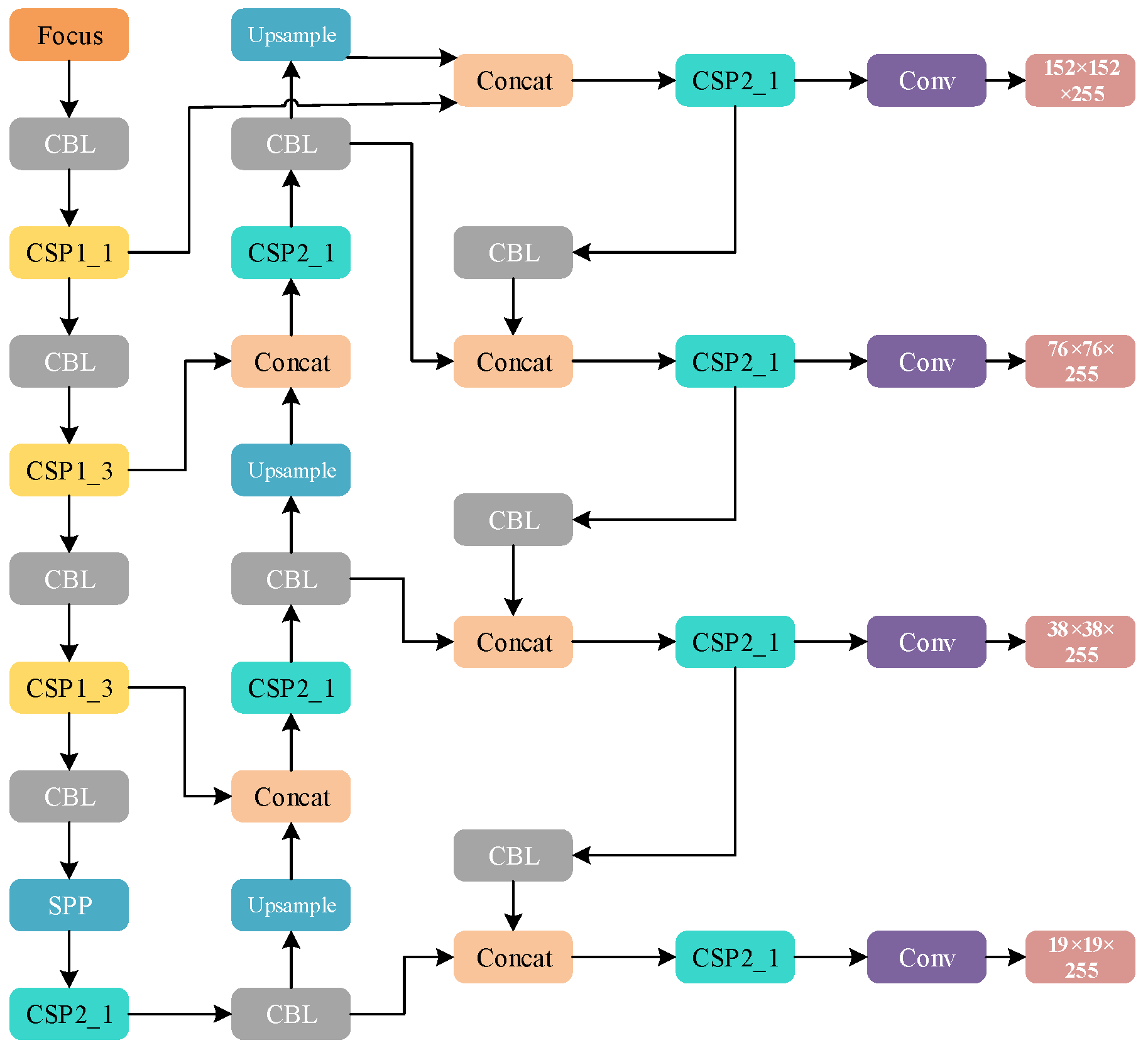

4.1. Small-Target Detection Layer

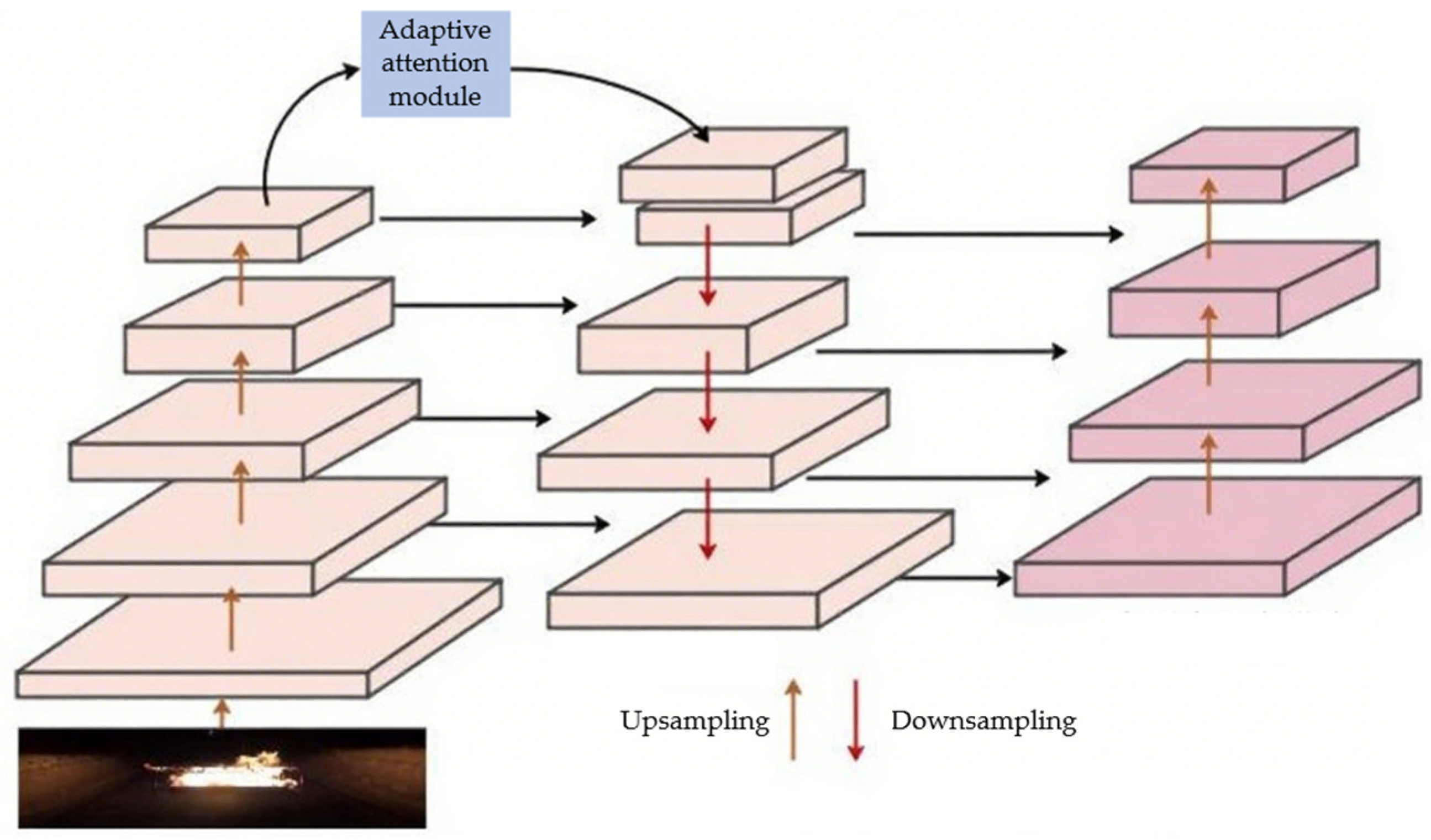

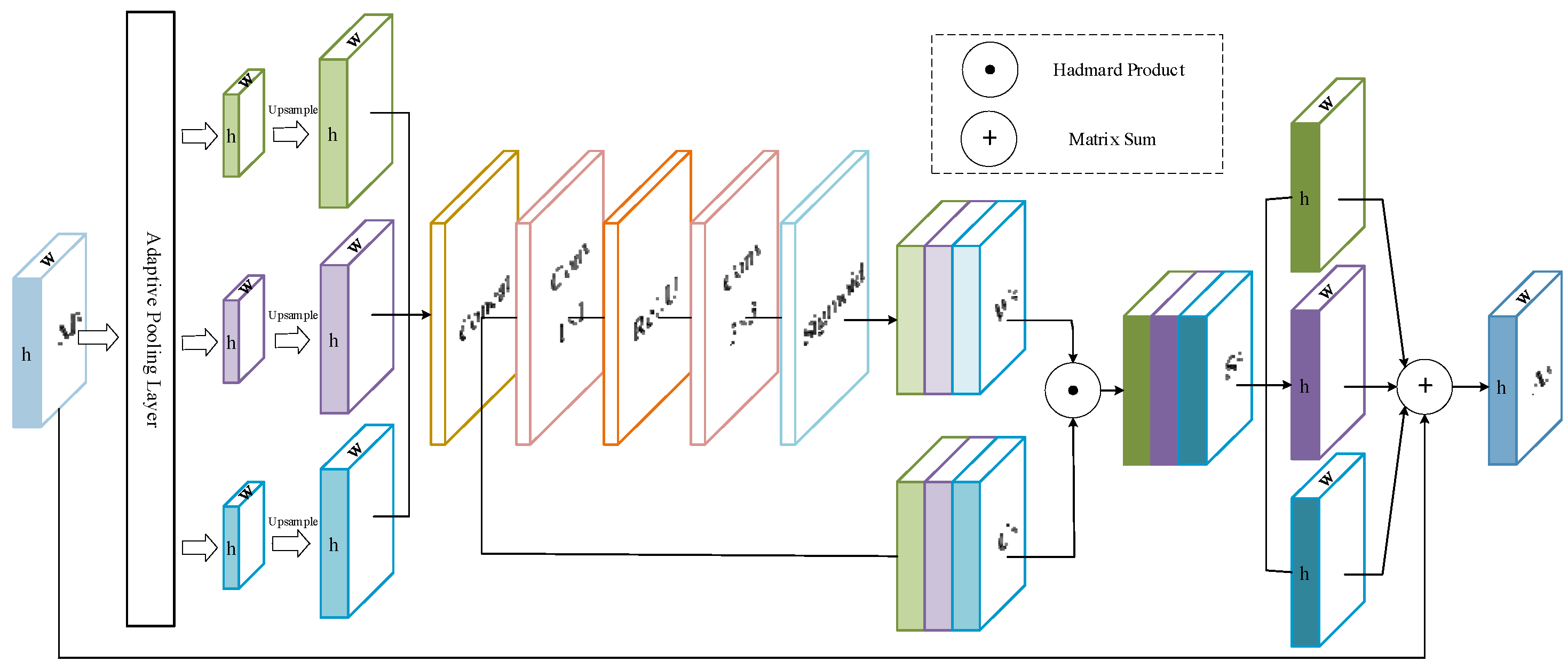

4.2. Adaptive Attention Module

5. Multi-Source Information Fusion

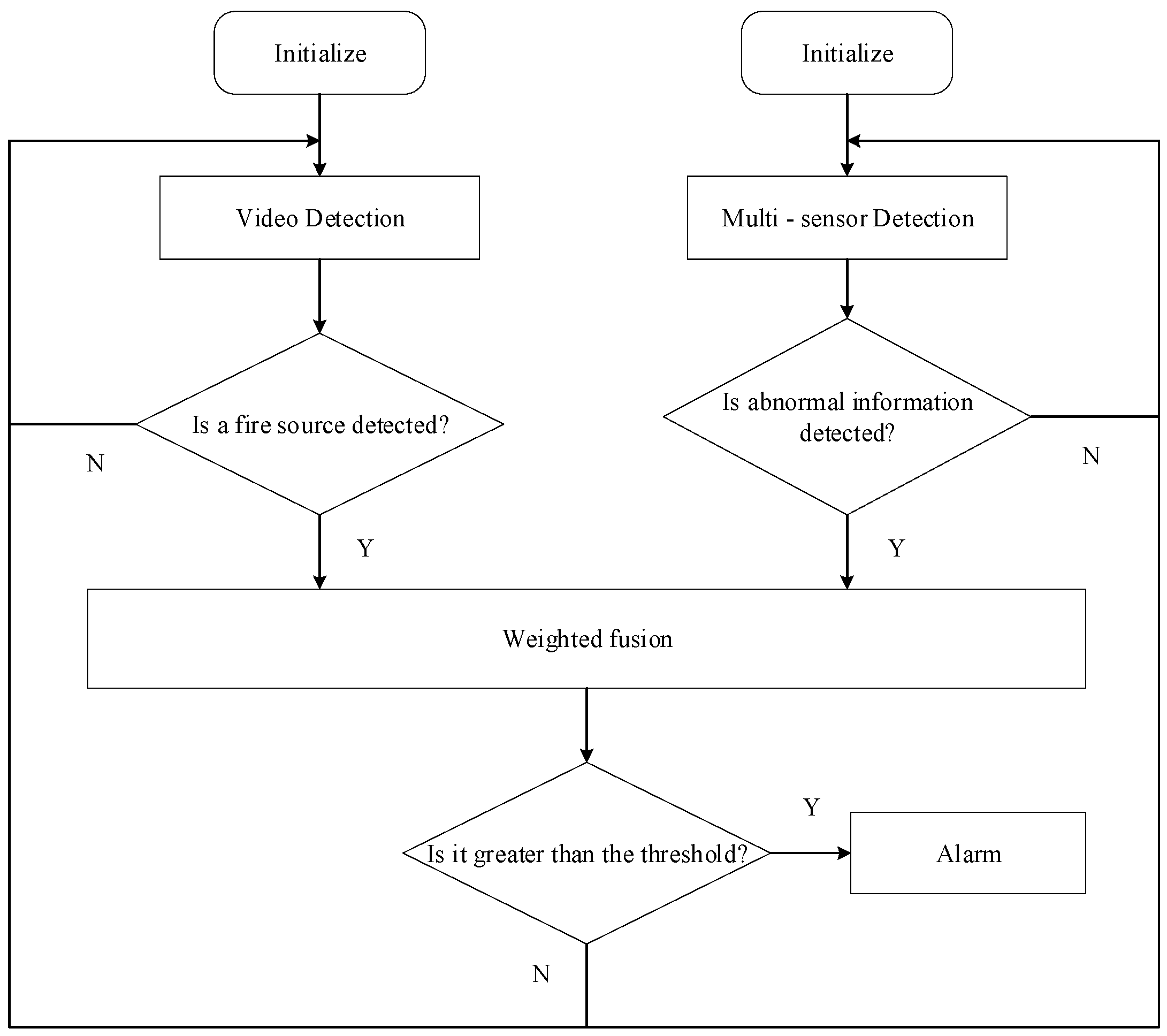

5.1. Multi-Source Information Fusion Module

5.2. Multi-Source Information Fusion Algorithm

6. Experimentation and Analysis

6.1. Dataset and Experimental Environment

6.2. Evaluation Metrics

6.3. Comparative Experiment

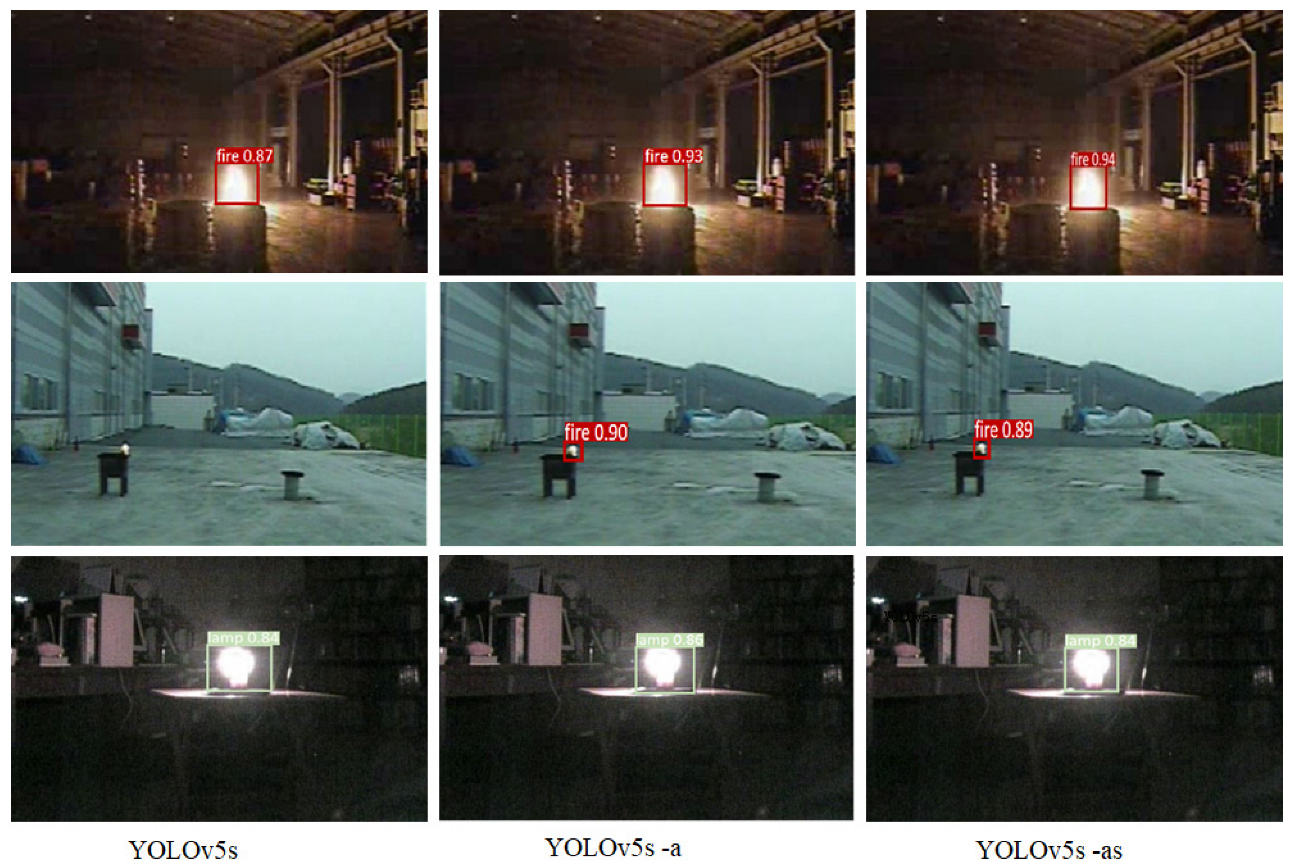

6.3.1. Comparative Experiment Based on Different Models

6.3.2. Performance Comparative Experiment of Edge Devices

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Score | Meaning |
|---|---|
| 1 | Equally important |
| 3 | Slightly important |
| 5 | Quite important |
| 7 | Obviously important |
| 9 | Absolutely important |
| 2, 4, 6, 8 | Intermediate values between the adjacent scores above |
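The 1 to 9 scale above matches the pairwise-comparison scale commonly used in the analytic hierarchy process (AHP). Assuming that is how it is applied here, the sketch below derives normalised weights from a pairwise matrix using the row geometric-mean approximation. The three criteria and all matrix entries are hypothetical placeholders, not values from the paper.

```python
import math

# Hypothetical pairwise comparison matrix on the 1-9 scale.
# Entry [i][j] says how much more important criterion i is than j;
# the matrix is reciprocal: [j][i] == 1 / [i][j].
criteria = ["temperature", "smoke", "CO"]
pairwise = [
    [1.0, 1 / 3, 1 / 5],
    [3.0, 1.0, 1 / 2],
    [5.0, 2.0, 1.0],
]


def ahp_weights(matrix):
    """Approximate AHP priority weights via row geometric means."""
    n = len(matrix)
    geo_means = [math.prod(row) ** (1.0 / n) for row in matrix]
    total = sum(geo_means)
    return [g / total for g in geo_means]


weights = ahp_weights(pairwise)
for name, w in zip(criteria, weights):
    print(f"{name}: {w:.3f}")
```

The geometric-mean method is a common approximation to the principal-eigenvector weights; a full AHP workflow would also check the consistency ratio of the matrix before using the weights.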

| Number | Temperature/°C | Smoke Concentration/(mg/m³) | CO Concentration/ppm |
|---|---|---|---|
| 1 | 24.5 | 0.05 | 12 |
| 2 | 28.7 | 0.37 | 34 |
| 3 | 29.4 | 0.55 | 45 |
| 4 | 31.2 | 0.76 | 57 |
| 5 | 34.9 | 1.05 | 69 |
| Mean value | 29.7 | 0.57 | 43.4 |

| Model | Big Flame | Small Flame | Smoldering |
|---|---|---|---|
| YOLOv5s | 49/50 | 29/50 | 1/50 |
| YOLOv5s-a | 49/50 | 46/50 | 3/50 |
| YOLOv5s-as | 49/50 | 48/50 | 28/50 |
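Converting the counts above into detection rates makes the gap between models easier to read. The snippet only restates the table's figures (successful detections out of 50 trials per scenario); the dictionary layout is an illustrative choice.

```python
TRIALS = 50  # trials per scenario, as in the table

# Successful detections per model and scenario, copied from the table.
hits = {
    "YOLOv5s":    {"big flame": 49, "small flame": 29, "smoldering": 1},
    "YOLOv5s-a":  {"big flame": 49, "small flame": 46, "smoldering": 3},
    "YOLOv5s-as": {"big flame": 49, "small flame": 48, "smoldering": 28},
}

# Detection rate = successful detections / trials.
rates = {
    model: {scene: count / TRIALS for scene, count in scenes.items()}
    for model, scenes in hits.items()
}

for model, scenes in rates.items():
    row = ", ".join(f"{scene} {rate:.0%}" for scene, rate in scenes.items())
    print(f"{model}: {row}")
```

On these figures, smoldering detection rises from 2% with YOLOv5s to 56% with YOLOv5s-as, while big-flame detection stays at 98% for all three models.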

| Camera Serial Number | Camera Angle | Installation Height (m) | Number of Images | Lighting | Dust |
|---|---|---|---|---|---|
| 1 | Horizontal | 0 | 1000 | Normal | Little |
| 2 | Horizontal | 0 | 2000 | Darker | Much |
| 3 | Front 45° | 2.10 | 1200 | Normal | Much |
| 4 | Front 45° | 2.10 | 1000 | Darker | Little |
| 5 | Rear 45° | 2.10 | 1000 | Normal | Much |
| 6 | Rear 45° | 2.10 | 2000 | Darker | Much |

| Dataset | Training Set | Validation Set | Test Set | Total Number |
|---|---|---|---|---|
| Number of images | 6300 | 1800 | 900 | 9000 |
| Number of annotated samples | 11,021 | 3458 | 1934 | 16,413 |
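The image counts above correspond to a 7:2:1 split of the 9000 images. A minimal sketch of such a split follows; the seeded shuffle and the use of integer indices in place of image files are assumptions, since the paper's actual splitting procedure is not shown here.

```python
import random

TOTAL_IMAGES = 9000
SPLIT = {"train": 0.7, "val": 0.2, "test": 0.1}  # 6300 / 1800 / 900


def split_indices(n, fractions, seed=0):
    """Shuffle indices 0..n-1 and cut them into consecutive subsets."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)  # seeded for reproducibility
    subsets, start = {}, 0
    names = list(fractions)
    for i, name in enumerate(names):
        # The last subset takes the remainder so no index is dropped.
        end = n if i == len(names) - 1 else start + round(n * fractions[name])
        subsets[name] = indices[start:end]
        start = end
    return subsets


subsets = split_indices(TOTAL_IMAGES, SPLIT)
print({name: len(idx) for name, idx in subsets.items()})
```

Splitting by shuffled index keeps the three subsets disjoint; if frames from the same camera sequence are highly similar, splitting by sequence rather than by frame would be a stricter alternative.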

| Model | R | mAP@0.5 | | CI | Inference Time (ms) |
|---|---|---|---|---|---|
| SSD 300 (VGG16) | 76.4% | 73.2% | 3.2% | (0.723, 0.741) | 26 |
| SSD 512 (VGG16) | 77.9% | 75.1% | 3.3% | (0.742, 0.760) | 62 |
| YOLOv3-SPP | 82.5% | 81.1% | 3.0% | (0.802, 0.820) | 24 |
| YOLOv5s | 91.5% | 90.7% | 2.6% | (0.900, 0.914) | 18 |
| YOLOv5s-a | 96.7% | 94.1% | 2.4% | (0.934, 0.948) | 20 |

| Model | mAP@0.5 | | CI | Inference Time (ms) |
|---|---|---|---|---|
| SSD 300 (VGG16) | 71% | 5.1% | (0.696, 0.724) | 19 |
| SSD 512 (VGG16) | 74.2% | 4.4% | (0.730, 0.755) | 53 |
| YOLOv3-SPP | 80.3% | 4.3% | (0.791, 0.815) | 18 |
| YOLOv5s | 87% | 3.3% | (0.861, 0.880) | 11 |
| YOLOv5s-a | 93.3% | 3.2% | (0.924, 0.942) | 12 |
| YOLOv5s-as | 94.2% | 2.9% | (0.934, 0.950) | 15 |

| Process | Edge Processing | Cloud Computing Processing |
|---|---|---|
| Step 1 | Image and data acquisition: 41 ms | Image and data acquisition: 41 ms |
| Step 2 | Edge computing processing: 166 ms | Upload raw images and data: 162 ms |
| Step 3 | Alarm response: 49 ms | Cloud computing processing: 53 ms |
| Step 4 | Upload detection images: 158 ms (alarm already completed; not counted in the response cycle) | Detection result feedback: 110 ms |
| Step 5 | Detection result feedback: 61 ms (alarm already completed; not counted in the response cycle) | Alarm response: 45 ms |
| Response cycle | 304 ms | 411 ms |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, Y.; Zhao, D.; Ge, Y.; Li, T. Design and Research of an Intelligent Detection Method for Coal Mine Fire Edges. Appl. Sci. 2025, 15, 10589. https://doi.org/10.3390/app151910589
