Research on High-Resolution Image Harmonization Method Based on Multi-Scale and Global Feature Guidance

Abstract

1. Introduction

- (1) We propose a method that combines multi-scale processing with global feature guidance. The multi-scale component handles image details at different scales so that the foreground blends seamlessly into the background, and the global features guide the transformation of foreground features.

- (2) We introduce a lightweight refinement module that effectively integrates the different components, providing a fine-grained approach to image harmonization.

- (3) Extensive experiments on the iHarmony4 benchmark show that our method achieves the best score on 11 of the 12 dataset–metric combinations (all except PSNR on HFlickr), while reducing both running time and the number of parameters compared with the competing methods.

2. Related Work

2.1. Image Harmonization

2.2. Multi-Scale Image Harmonization

2.3. Global Features in Image Harmonization

3. Method

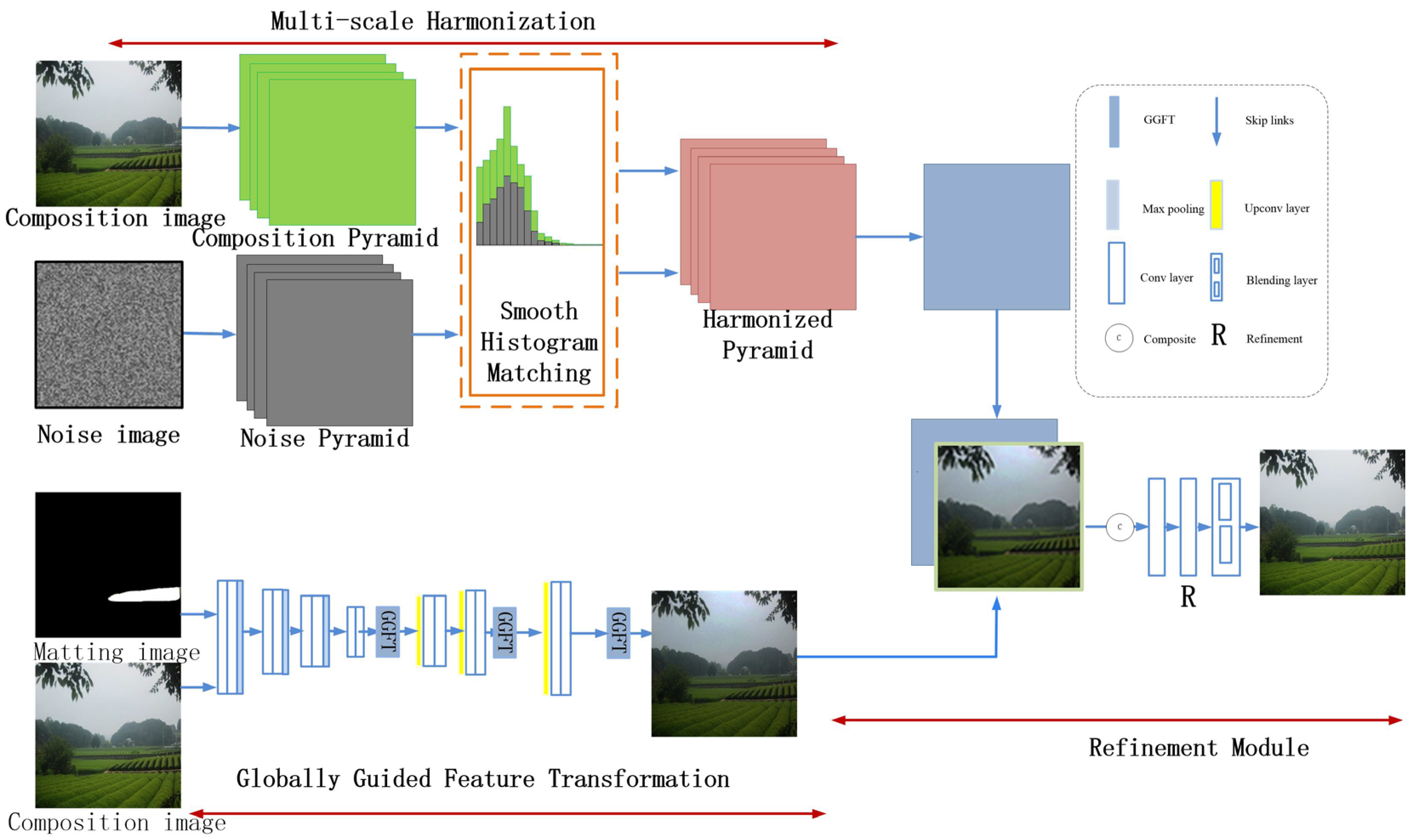

3.1. Design of the Main Framework for Image Harmonization Based on Multi-Scale and Global Feature Guidance

3.2. Multi-Scale Module Design

3.3. Module Design for Global Feature Guidance

3.4. Lightweight Refinement Module Design

3.5. Loss Function Setting

4. Experiments and Analysis

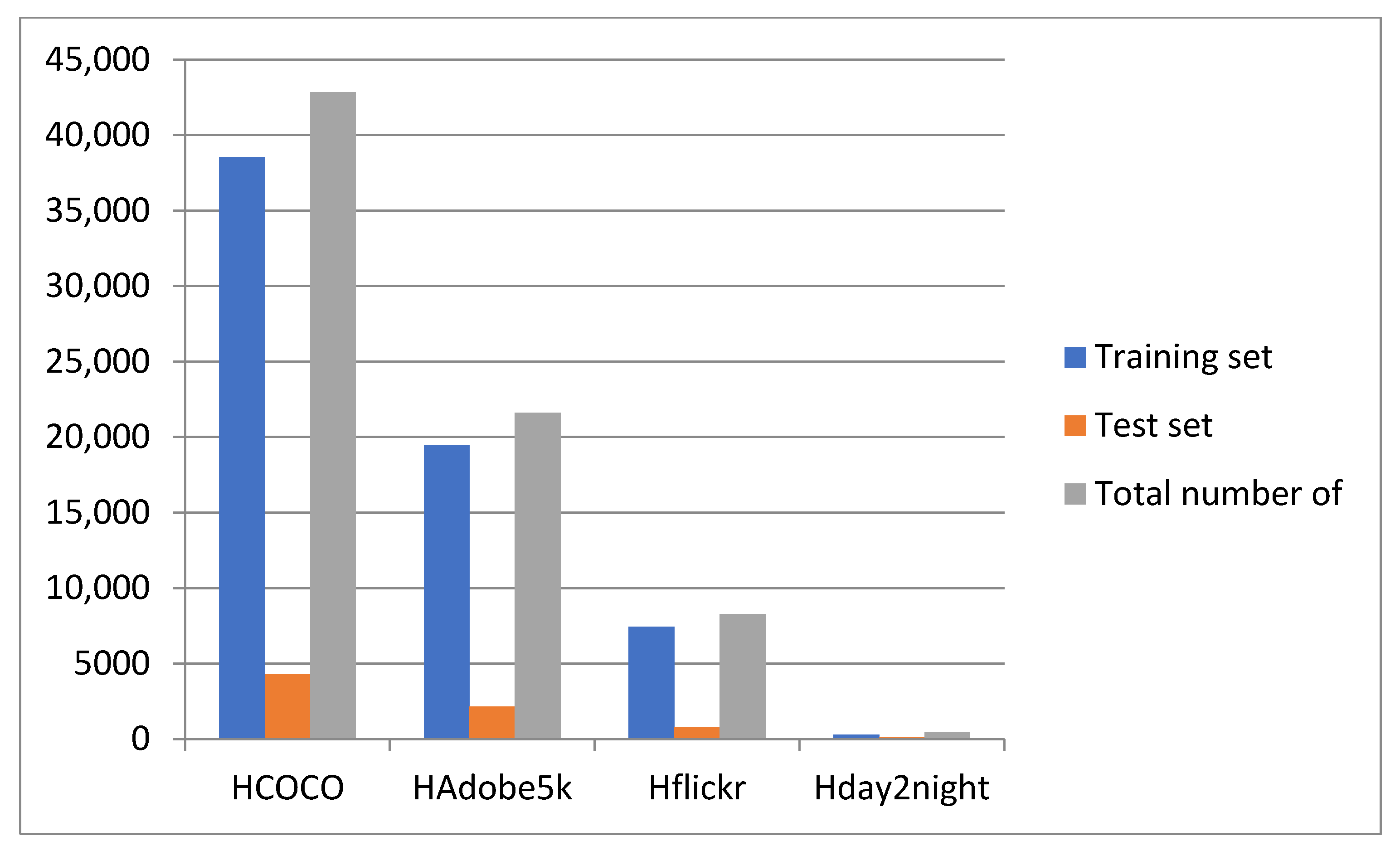

4.1. Experimental Dataset

4.2. Experimental Setup

4.3. Evaluation Criteria

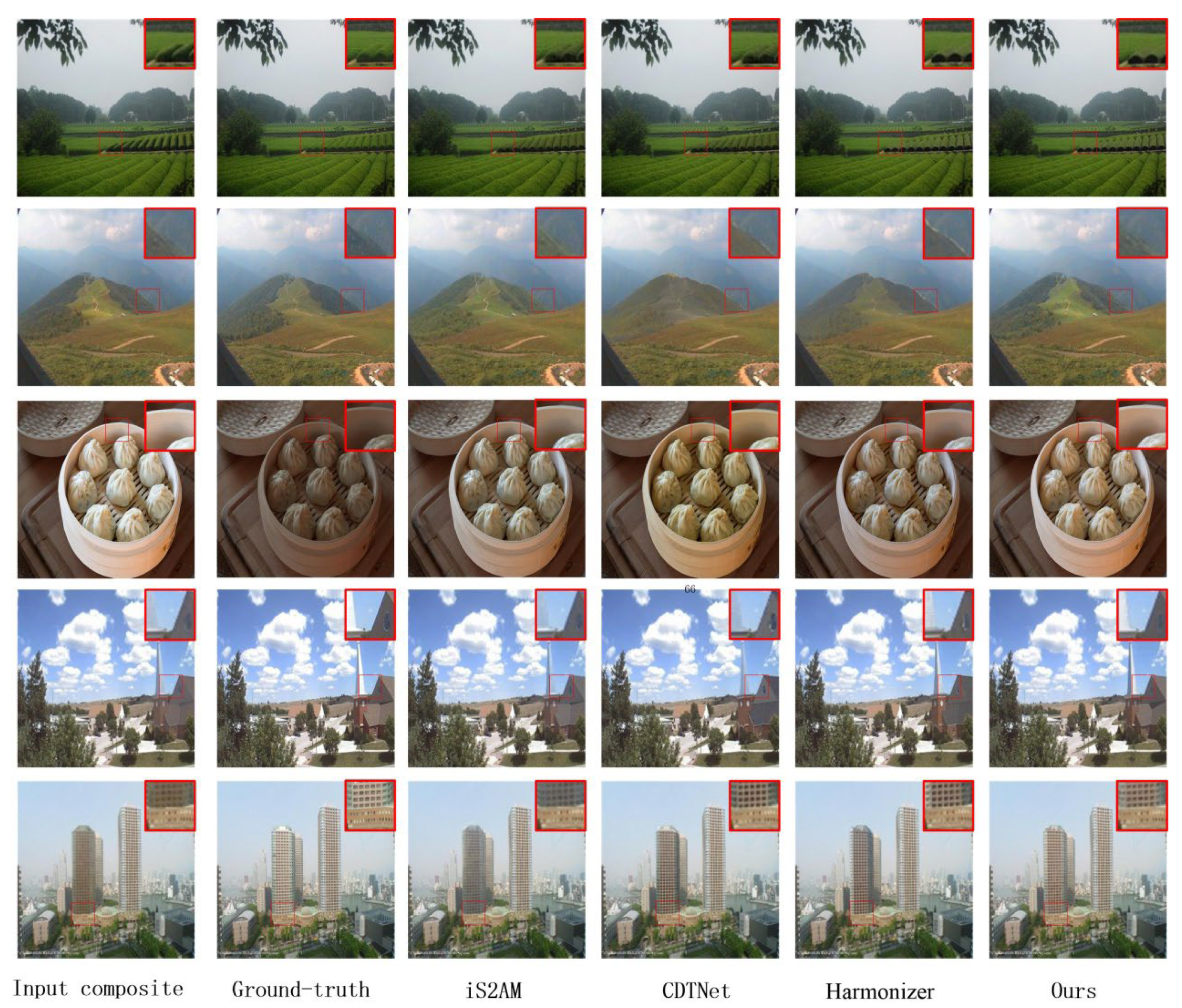

4.4. Comparative Experiments and Analysis

4.5. Computational Efficiency Assessment

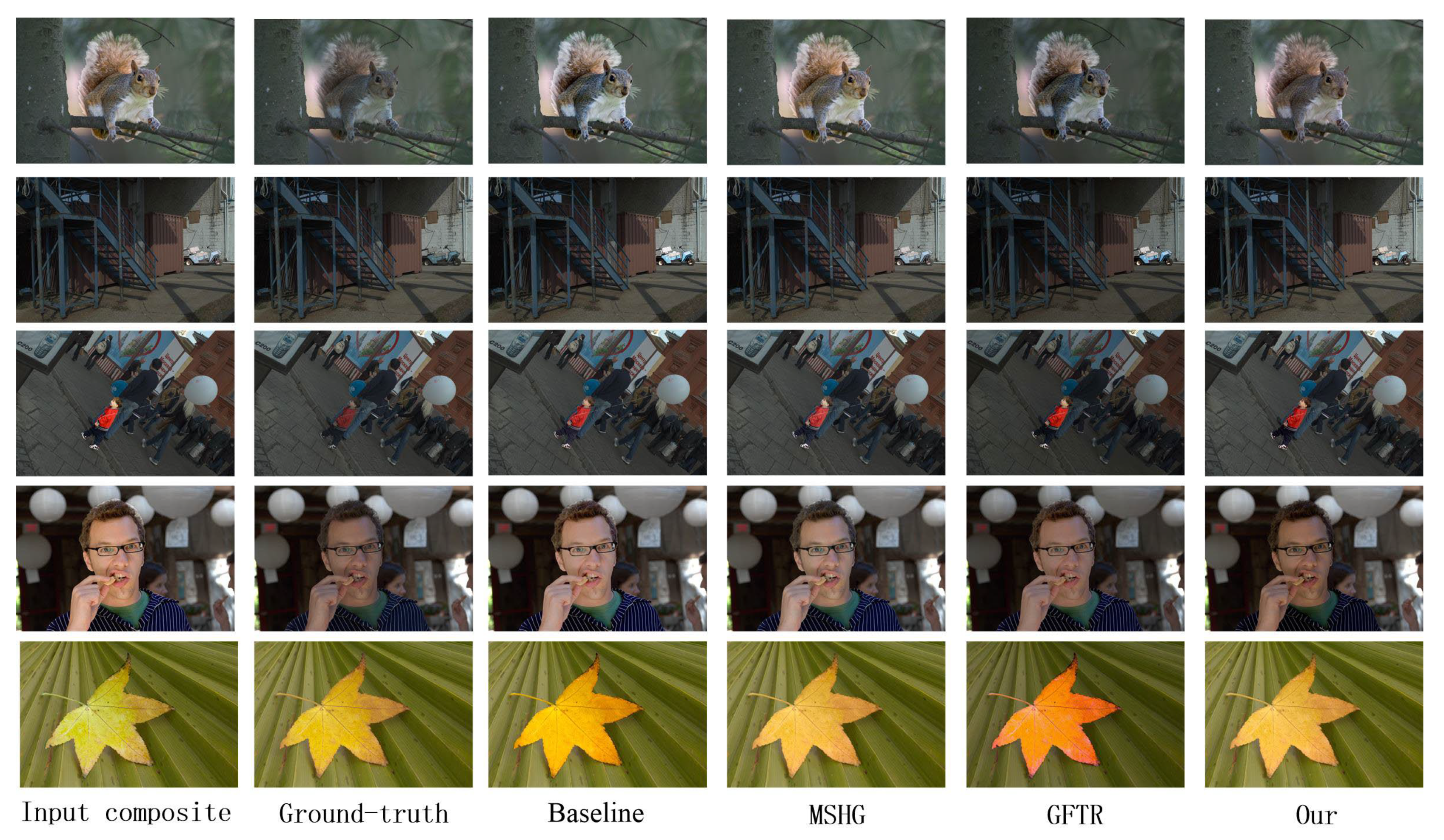

4.6. Analysis of Ablation Experiments

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MSGF | Multi-scale and global feature |
| VGG | Visual Geometry Group |
| DoveNet | Domain verification discriminator network |
| CNN | Convolutional neural network |
| RGB | Red, green, blue |
| CDTNet | Collaborative dual transformations network |
| GGFT | Globally-guided instance feature transformation |
| iS2AM | Improved spatial-separated attention module |
| GAN | Generative adversarial network |
| MSE | Mean squared error |
| fMSE | Foreground mean squared error |
| PSNR | Peak signal-to-noise ratio |
| MSHR | Multi-scale module with the lightweight refinement module |
| GFTR | Global feature guidance with the lightweight refinement module |

References

- Sunkavalli, K.; Johnson, M.K.; Matusik, W.; Pfister, H. Multi-scale image harmonization. ACM Trans. Graph. 2010, 29, 125.

- Luan, F.; Paris, S.; Shechtman, E.; Bala, K. Deep painterly harmonization. Comput. Graph. Forum 2018, 37, 95–106.

- Xue, S.; Agarwala, A.; Dorsey, J.; Rushmeier, H. Understanding and improving the realism of image composites. ACM Trans. Graph. 2012, 31, 84.

- Sofiiuk, K.; Popenova, P.; Konushin, A. Foreground-aware semantic representations for image harmonization. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual, 5–9 January 2021; pp. 1620–1629.

- Ke, Z.; Sun, C.; Zhu, L.; Xu, K.; Lau, R.W.H. Harmonizer: Learning to perform white-box image and video harmonization. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer Nature: Cham, Switzerland, 2022; pp. 690–706.

- Cong, W.; Zhang, J.; Niu, L.; Liu, L.; Ling, Z.; Li, W.; Zhang, L. DoveNet: Deep image harmonization via domain verification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 8394–8403.

- Guo, Z.; Guo, D.; Zheng, H.; Gu, Z.; Zheng, B.; Dong, J. Image harmonization with transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 14870–14879.

- Wang, K.; Gharbi, M.; Zhang, H.; Xia, Z.; Shechtman, E. Semi-supervised parametric real-world image harmonization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 5927–5936.

- Meng, Q.; Liu, Q.; Li, Z.; Lan, X.; Zhang, S.; Nie, L. High-resolution image harmonization with adaptive-interval color transformation. Adv. Neural Inf. Process. Syst. 2024, 37, 13769–13793.

- Pérez, P.; Gangnet, M.; Blake, A. Poisson image editing. In Seminal Graphics Papers: Pushing the Boundaries; Association for Computing Machinery: New York, NY, USA, 2023; Volume 2, pp. 577–582.

- Tian, C.; Zhang, Q. Self-Supervised Image Harmonization via Holistic Feature Fusion. In Proceedings of the ICASSP 2025—2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Hyderabad, India, 6–11 April 2025; pp. 1–5.

- Usama, M.; Nyman, E.; Näslund, U.; Grönlund, C. A domain adaptation model for carotid ultrasound: Image harmonization, noise reduction, and impact on cardiovascular risk markers. Comput. Biol. Med. 2025, 190, 110030.

- Mwubahimana, B.; Yan, J.; Mugabowindekwe, M.; Xiao, H.; Nyandwi, E.; Tuyishimire, J.; Habineza, E.; Mwizerwa, F.; Miao, D. Vision transformer-based feature harmonization network for fine-resolution land cover mapping. Int. J. Remote Sens. 2025, 46, 3736–3769.

- Oriti, D.; Manuri, F.; De Pace, F.; Sanna, A. Harmonize: A shared environment for extended immersive entertainment. Virtual Real. 2023, 27, 3259–3272.

- Duan, L.; Wu, M.; Lou, H.; Yin, J.; Li, X. MRCAN: Multi-scale Region Correlation-driven Adaptive Normalization for Image Harmonization. In Proceedings of the 2024 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Kuching, Malaysia, 6–10 October 2024; pp. 5206–5211.

- Cong, W.; Tao, X.; Niu, L.; Liang, J.; Gao, X.; Sun, Q.; Zhang, L. High-resolution image harmonization via collaborative dual transformations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 18470–18479.

- Niu, L.; Tan, L.; Tao, X.; Cao, J.; Guo, F.; Long, T.; Zhang, L. Deep image harmonization with globally guided feature transformation and relation distillation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 7723–7732.

- Yuan, J.; Wu, H.; Xie, L.; Zhang, L.; Xing, J. Learning multi-color curve for image harmonization. Eng. Appl. Artif. Intell. 2025, 146, 110277.

- Cong, W.; Zhang, J.; Niu, L.; Liu, L.; Ling, Z.; Li, W.; Zhang, L. Image Harmonization Dataset iHarmony4: HCOCO, HAdobe5k, HFlickr, and Hday2night. arXiv 2019, arXiv:1908.10526.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the Computer Vision—ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Part V. Springer International Publishing: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

- Zhou, B.; Zhao, H.; Puig, X.; Xiao, T.; Fidler, S.; Barriuso, A.; Torralba, A. Semantic understanding of scenes through the ADE20K dataset. Int. J. Comput. Vis. 2019, 127, 302–321.

- Bychkovsky, V.; Paris, S.; Chan, E.; Durand, F. Learning photographic global tonal adjustment with a database of input/output image pairs. In Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2011), Colorado Springs, CO, USA, 20–25 June 2011; pp. 97–104.

- Tsai, Y.-H.; Shen, X.; Lin, Z.; Sunkavalli, K.; Lu, X.; Yang, M.-H. Deep image harmonization. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2017), Honolulu, HI, USA, 21–26 July 2017.

| Method | HCOCO MSE ↓ | HCOCO fMSE ↓ | HCOCO PSNR ↑ | HFlickr MSE ↓ | HFlickr fMSE ↓ | HFlickr PSNR ↑ | HAdobe5k MSE ↓ | HAdobe5k fMSE ↓ | HAdobe5k PSNR ↑ | Hday2night MSE ↓ | Hday2night fMSE ↓ | Hday2night PSNR ↑ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| iS2AM | 16.48 | 266.14 | 39.29 | 69.68 | 443.63 | 33.56 | 22.59 | 166.19 | 37.24 | 40.59 | 591.07 | 37.72 |
| CDTNet | 16.25 | 261.29 | 39.15 | 68.61 | 423.03 | 33.55 | 20.62 | 149.88 | 38.24 | 36.72 | 549.47 | 37.95 |
| Harmonizer | 17.34 | 298.42 | 38.77 | 64.81 | 434.06 | 33.63 | 21.89 | 170.05 | 37.64 | 33.14 | 542.07 | 37.56 |
| Ours | 16.02 | 260.67 | 39.69 | 60.42 | 350.56 | 33.42 | 18.43 | 130.96 | 39.78 | 33.01 | 480.67 | 37.98 |
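The MSE, fMSE, and PSNR columns above follow the metric names listed under Abbreviations. A minimal sketch of the usual definitions is given below, assuming RGB images on a 0–255 scale stored as lists of `(r, g, b)` tuples and a binary foreground mask; the function names and data layout are hypothetical, and the authors' evaluation code may normalize differently (for example, dividing fMSE by the foreground pixel count without the channel factor, or evaluating at a different resolution).

```python
import math
from typing import Sequence, Tuple

Pixel = Tuple[float, float, float]  # RGB values on a 0-255 scale


def mse(pred: Sequence[Pixel], target: Sequence[Pixel]) -> float:
    """Mean squared error over all pixels and all three channels."""
    if len(pred) != len(target) or not pred:
        raise ValueError("images must be non-empty and the same size")
    total = sum((p - t) ** 2 for pp, tt in zip(pred, target) for p, t in zip(pp, tt))
    return total / (len(pred) * 3)


def fmse(pred: Sequence[Pixel], target: Sequence[Pixel], mask: Sequence[int]) -> float:
    """MSE restricted to foreground pixels (mask value 1)."""
    fg = [(pp, tt) for pp, tt, m in zip(pred, target, mask) if m]
    if not fg:
        raise ValueError("mask has no foreground pixels")
    total = sum((p - t) ** 2 for pp, tt in fg for p, t in zip(pp, tt))
    return total / (len(fg) * 3)


def psnr(pred: Sequence[Pixel], target: Sequence[Pixel], max_val: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(pred, target)
    return math.inf if err == 0 else 10.0 * math.log10(max_val ** 2 / err)
```

In iHarmony4-style evaluation such values are typically computed per image and then averaged over each test subset, which is why fMSE is usually much larger than MSE: the error concentrates in the foreground, while MSE dilutes it over the whole image.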

| Metric | Input composite | iS2AM | CDTNet | HIM | Ours |
|---|---|---|---|---|---|
| B–T score ↑ | 0.387 | 0.465 | 0.893 | 0.851 | 1.328 |
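The B–T scores above are Bradley–Terry scores, which are fitted from pairwise preference judgments. The section text does not describe the user study, the fitting procedure, or how the scores are scaled, so the following is only a generic sketch of Bradley–Terry estimation with the standard minorization–maximization (Zermelo) iteration. The `wins` matrix layout, the function name `bradley_terry`, and the choice to normalize strengths to a mean of 1 are assumptions; the paper's values may be on a different scale (for example, log-strengths).

```python
from typing import List, Sequence


def bradley_terry(wins: Sequence[Sequence[int]], iters: int = 1000,
                  tol: float = 1e-10) -> List[float]:
    """Fit Bradley-Terry strengths with the MM (Zermelo) iteration.

    wins[i][j] is the (hypothetical) number of times method i was preferred
    over method j. A finite estimate needs a connected comparison graph in
    which no method wins every one of its comparisons.
    """
    k = len(wins)
    p = [1.0] * k  # initial strengths
    for _ in range(iters):
        new_p = []
        for i in range(k):
            w_i = sum(wins[i][j] for j in range(k) if j != i)  # total wins of i
            denom = 0.0
            for j in range(k):
                n_ij = wins[i][j] + wins[j][i]  # comparisons between i and j
                if j != i and n_ij:
                    denom += n_ij / (p[i] + p[j])
            new_p.append(w_i / denom if denom > 0 else p[i])
        # Strengths are identified only up to scale; fix the mean to 1.
        total = sum(new_p)
        new_p = [x * k / total for x in new_p]
        converged = max(abs(a - b) for a, b in zip(new_p, p)) < tol
        p = new_p
        if converged:
            break
    return p
```

Under this model the estimated probability that method i is preferred over method j is `p[i] / (p[i] + p[j])`; for example, `bradley_terry([[0, 3], [1, 0]])` gives strengths of 1.5 and 0.5, matching the observed 3-to-1 preference.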

| Method | Time (s) | Parameters (M) |
|---|---|---|
| iS2AM | 14.7 | 68 |
| CDTNet | 10.8 | 216 |
| HIM | 0.02 | 21.7 |
| Ours | 0.01 | 20.9 |
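The efficiency figures in the table above can be put in proportion by working out the relative parameter reduction of the proposed model against each compared method:

$$
\frac{21.7-20.9}{21.7}\approx 3.7\%\ (\text{vs. HIM}),\qquad
\frac{68-20.9}{68}\approx 69.3\%\ (\text{vs. iS2AM}),\qquad
\frac{216-20.9}{216}\approx 90.3\%\ (\text{vs. CDTNet})
$$

The running time of 0.01 s is half that of HIM (0.02 s). The table does not state the input resolution or hardware, so these numbers are comparable only within this table.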

| Method | HCOCO MSE ↓ | HCOCO fMSE ↓ | HCOCO PSNR ↑ | HFlickr MSE ↓ | HFlickr fMSE ↓ | HFlickr PSNR ↑ | HAdobe5k MSE ↓ | HAdobe5k fMSE ↓ | HAdobe5k PSNR ↑ | Hday2night MSE ↓ | Hday2night fMSE ↓ | Hday2night PSNR ↑ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Baseline | 16.48 | 266.14 | 39.29 | 69.68 | 443.63 | 33.56 | 22.59 | 166.19 | 37.24 | 40.59 | 591.07 | 37.72 |
| MSHR | 16.22 | 262.02 | 37.25 | 64.79 | 432.75 | 31.78 | 21.06 | 152.67 | 38.92 | 35.39 | 580.62 | 36.21 |
| GFTR | 16.10 | 261.78 | 38.83 | 62.43 | 398.52 | 32.41 | 19.87 | 143.66 | 39.41 | 34.87 | 503.41 | 37.54 |
| Ours | 16.02 | 260.67 | 39.69 | 60.42 | 350.56 | 33.42 | 18.43 | 130.96 | 39.78 | 33.01 | 480.67 | 37.98 |
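Reading the ablation table as relative changes makes the gain of the full model easier to compare across datasets. Taking the relative fMSE reduction from the Baseline row to the full model (Ours):

$$
\Delta_{\mathrm{fMSE}}=\frac{\mathrm{fMSE}_{\mathrm{Baseline}}-\mathrm{fMSE}_{\mathrm{Ours}}}{\mathrm{fMSE}_{\mathrm{Baseline}}}:\quad
\underbrace{\tfrac{5.47}{266.14}\approx 2.1\%}_{\text{HCOCO}},\quad
\underbrace{\tfrac{93.07}{443.63}\approx 21.0\%}_{\text{HFlickr}},\quad
\underbrace{\tfrac{35.23}{166.19}\approx 21.2\%}_{\text{HAdobe5k}},\quad
\underbrace{\tfrac{110.40}{591.07}\approx 18.7\%}_{\text{Hday2night}}
$$

The foreground error therefore drops by roughly a fifth on HFlickr, HAdobe5k, and Hday2night, but only slightly on HCOCO.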

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, R.; Zhang, D.; Geng, S.; Zhou, M. Research on High-Resolution Image Harmonization Method Based on Multi-Scale and Global Feature Guidance. Appl. Sci. 2025, 15, 10573. https://doi.org/10.3390/app151910573

Chicago/Turabian Style: Li, Rui, Dan Zhang, Shengling Geng, and Mingquan Zhou. 2025. "Research on High-Resolution Image Harmonization Method Based on Multi-Scale and Global Feature Guidance" Applied Sciences 15, no. 19: 10573. https://doi.org/10.3390/app151910573
