1. Introduction

With the rapid advancement of unmanned systems, including aerial drones, ground robots, and service platforms, intelligent perception has become a cornerstone for enabling autonomous navigation, accurate positioning, and reliable task execution. In such contexts, robots must be capable of perceiving and interpreting diverse objects in their environment to ensure safety and efficiency. However, transparent objects—such as glass, plastics, and laboratory containers—pose unique challenges due to their refractive and reflective optical properties, which often disrupt conventional vision and depth-sensing mechanisms. The inability to perceive transparent objects may result in navigation errors, manipulation failures, or even safety risks. Therefore, enhancing the perception capabilities of unmanned systems toward transparent objects is of critical importance, as it directly supports applications such as collision-free navigation in cluttered scenes, robotic grasping and manipulation of fragile transparent items, and high-precision localization in safety-critical domains.

Transparent objects are ubiquitous in everyday life: glass and plastic items, such as glass lids, are commonly encountered. Similarly, laboratory glassware such as beakers, test tubes, and petri dishes is integral to laboratory settings. With the increasing integration of robotics into daily activities, robots must be able to obtain accurate pose information for transparent objects in their environment [1]. Depth sensing technologies, such as RGB-D cameras, play a pivotal role in achieving this. Depth maps produced by these cameras are widely used in fields such as 3D reconstruction and robotics, because, compared with RGB images, they offer better insight into the complex geometry of dense scenes and the fine geometric features of targets. However, the refractive and reflective properties of transparent objects present a significant challenge for conventional depth sensors. These optical characteristics violate the geometric light-path assumptions on which depth sensing relies, making it difficult to acquire reliable depth data for such objects [2]. As a result, a hybrid approach is necessary to reconstruct a more accurate, denser depth map. Such an approach combines the scene geometry captured by RGB images with the sparse depth information provided by depth sensors.

Depth completion for transparent objects has emerged as a challenging problem in computer vision in recent years. Because of the inherent material properties of transparent objects, hardware-based solutions often struggle with depth recovery in general scenarios [3]. However, rapid advances in deep neural networks and large pretrained models have produced new methodologies for transparent object depth completion. Current approaches in this domain can be broadly classified into two categories: multi-view and single-view methods [4]. Multi-view approaches offer more comprehensive reconstruction and better perception of transparent objects, but they introduce additional challenges in practical scenarios. Specifically, the instability of multi-camera setups during deployment can make algorithmic results uncertain. Moreover, multi-view methods do not exploit the valuable information present in the original depth map, which may limit their adaptability, particularly in dynamic environments.

Single-view depth completion, in contrast, faces three primary challenges [5]. First, many current methods restore depth information with the encoder–decoder structure common in visual tasks. However, this approach often overlooks the difficulties caused by the lack of texture features on transparent objects. Second, these methods tend to neglect the cross-modal interaction between the shallow feature details of transparent objects and the RGB-D data. This leads to a loss of local detail and unclear object contours in the predicted depth map. Finally, not all areas of a depth image require completion. Completion should be applied selectively, focusing only on regions where depth information is missing or erroneous. However, many existing methods employ fully convolutional networks that treat all regions equally, which is inefficient and can result in suboptimal performance.

To address the limitations of existing approaches, this paper proposes an end-to-end deep regression network designed to achieve efficient and high-precision depth completion for transparent objects. Our method introduces a two-stage network architecture, consisting of a semantic clue-dominated stage and a depth information-dominated stage. In more detail, the semantic clue-dominated stage primarily focuses on understanding semantic information that is crucial for depth prediction. This stage emphasizes semantic cues, making the predicted depth particularly reliable around the edges of transparent objects. However, it is more sensitive to variations in color and texture. The depth information-dominated stage, on the other hand, takes both the sparse depth data and the depth predictions from the first stage as input to generate a dense depth map. While this stage typically produces more reliable depth estimates, the input sparse depth data can introduce significant noise, especially along the edges of transparent objects. Since the depth maps produced by these two stages are complementary, we perform a deep fusion of their results to achieve a more accurate final output. Additionally, we refine the fused dense depth map using a fine depth recovery process that ensures global consistency across the entire map.

The main contributions of this paper are as follows:

Two-Stage Network Architecture for Transparent Object Depth Completion: To fully extract features from RGB-D images of transparent objects, we propose a two-stage depth completion network tailored for semantic scenes. This architecture integrates a semantic information-guided stage and a depth information-driven stage to perform dense depth prediction, effectively leveraging and combining the cross-modal features of RGB-D data.

Introduction of Self-Attention for Transparent Object Depth Completion: This paper is the first to incorporate a self-attention mechanism into transparent object depth completion. The self-attention mechanism enables comprehensive encoding of surface normal information and edge details, significantly enhancing the performance of depth completion tasks for transparent objects.

Global Consistency via Scale Factor-Based Refinement: We introduce a scale factor approach to refine depth completion in the scale space, improving the global consistency of the depth map. This refinement process greatly enhances the accuracy and quality of the predicted depth map.

2. Related Work

Transparent object perception has long been a challenging problem in computer vision. Early methods often relied on physical priors, such as surface shape measurement techniques based on polarization imaging. These methods reconstruct surface normals by analyzing the polarization characteristics of light reflected from and transmitted through transparent objects. However, they typically require high-precision sensors and specific lighting conditions. Stereo vision-based methods, such as KeyPose [6], use stereo cameras to predict 3D keypoints of transparent objects, avoiding explicit depth computation. These methods generalize well, even to unseen object categories. However, they depend on high-quality stereo images and are sensitive to feature matching accuracy.

With the development of deep learning, data-driven methods have gradually become mainstream. For example, ClearGrasp uses synthetic data to train a network for depth completion of transparent objects, thereby improving the stability of robotic grasping. The introduction of the Trans10K dataset and the Trans2Seg segmentation model has significantly advanced research on transparent object semantic segmentation. However, because ground-truth depth for transparent regions is difficult to obtain, research on depth completion in real-world scenarios remains at an early stage. Recently, light field imaging techniques have been introduced for transparent object detection, using multi-view information and angular constraints to achieve fast detection. For instance, Zhang et al. [7] proposed a self-adaptive density clustering method that combines feature saliency and motion consistency constraints on light field data. It achieves a fivefold improvement in computational speed without sacrificing accuracy. A 2024 study by a team at Wuhan Textile University proposed a depth completion method based on a dual-cross attention network. In this method, the U-Net structure was improved with dual-cross attention modules and spectral residual blocks. This effectively reduced the semantic gap between RGB images and depth maps and improved the stability of depth completion. For low-light conditions, a team from MIT [8] explored deep neural network-based methods for transparent object imaging and successfully reconstructed transparent objects in near-dark environments. Additionally, the Prior Depth Anything framework [9], developed by Zhejiang University and the University of Hong Kong, supports zero-shot depth completion, super-resolution, and inpainting. By fusing sparse depth sensor data with geometric priors from RGB images, it has demonstrated outstanding performance across various real-world datasets.

Depth completion aims to generate dense depth maps from sparse or noisy depth measurements, guided by RGB images. Early works mainly used convolutional neural networks (CNNs) or encoder–decoder architectures, such as the multi-scale depth prediction network of Eigen et al. Recently, Transformer models have been introduced into this field because of their powerful global context modeling. For example, the Dense Prediction Transformer (DPT) [10] captures long-range dependencies with Vision Transformers [11], significantly improving edge preservation in depth maps. However, most methods assume opaque object surfaces and overlook the depth uncertainty caused by refraction and reflection in transparent regions.

Recent studies have begun to explore dedicated depth completion methods for transparent objects. For example, methods based on compressed sensing and super-resolution convolutional neural networks (SRCNNs) [12] have been used for transparent object imaging. These methods reconstruct surface details at low sampling rates through single-pixel detection and total variation minimization. A 2025 study introduced a multi-frequency time-domain iterative strategy based on stripe modulation. In defect detection, this strategy effectively eliminates interference from parasitic reflections on the rear surfaces of transparent objects. Additionally, self-supervised learning paradigms such as Monodepth2 [13] and ManyDepth2 [14] use photometric consistency constraints from stereo image pairs or video sequences to reduce reliance on ground-truth depth annotations. GeoDepth [15] further models 3D scenes as sets of planes and uses a normal-and-offset parameterization for self-supervised monocular depth estimation. This improves the handling of depth discontinuities in indoor and outdoor scenes. The Prior Depth Anything framework [9] adopts a coarse-to-fine approach: it first performs pixel-level metric alignment and then refines the results with conditional monocular depth estimation models. It demonstrates robust adaptation to various sparse priors in a zero-shot setting.

Liu et al. [16] proposed DualTransNet, which uses segmentation features for transparent object depth completion. DualTransNet feeds segmentation features from an auxiliary module into the main network to improve depth completion quality. This demonstrates the effectiveness of segmentation features for depth estimation of transparent objects. Zhai et al. [17] introduced TCRNet, a transparent object depth completion network based on a cascaded refinement structure that effectively balances accuracy and real-time performance. The network uses a cascaded refinement mechanism in the decoding stage to iteratively refine features, thereby improving the accuracy of the depth information. Additionally, an attention module focuses on depth-related features in transparent object regions, further improving performance. Gao et al. [18] presented a method for transparent object depth estimation from a single RGB-D input, using a U-Net architecture with an efficient channel attention module. Despite its small number of parameters, the network significantly boosts performance. Li et al. [19] proposed a voxel-based deep learning approach for transparent object depth completion. This method leverages image features from the RGB input and valid points in the intersecting voxels derived from the point cloud. A multi-layer perceptron predicts the missing depth values, which are optimized under a surface normal consistency constraint.

Jing et al. [20] proposed CAGT, a novel simulation-to-real transferable model for reconstructing severely sparse depth maps of transparent objects. It incorporates interactive embedding aggregation and geometric perception. Pathak et al. [21] introduced the Context Encoders model, which uses adversarial training in a conditional GAN-style architecture to enhance the visual realism of completed images. This approach improves completion quality by making the generated image closer to the real image. The “Generative Inpainting” model proposed by Yu et al. [22] further improves the realism of completed images by combining GANs with local context information. Its adversarial training strategy produces more natural and visually coherent restoration results. Li et al. [23] investigated the effect of transparency variations on detection accuracy and proposed a detection method based on visual-tactile fusion. Their research highlighted how lighting changes and the diversity of transparent object shapes affect detection accuracy.

3. Method

3.1. Problem Formulation

The depth completion task aims to fill in missing regions of depth measurements using the scene geometry cues provided by the corresponding RGB image. The core challenge of this task lies in efficiently fusing the geometric relationships of the monocular scene with the sparse depth data from the depth sensor—two distinct modalities—to achieve accurate depth reconstruction.

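Mathematically, given a set of matched data samples, the goal is to learn a mapping function $\mathcal{F}$ whose output $Y = \mathcal{F}(X_{\mathrm{RGB}}, X_{\mathrm{Depth}}) \in \mathbb{R}^{H \times W}$ approximates the ground truth depth map $Y^{*} \in \mathbb{R}^{H \times W}$. Here, $X_{\mathrm{RGB}} \in \mathbb{R}^{3 \times H \times W}$ represents the three-channel RGB image and $X_{\mathrm{Depth}} \in \mathbb{R}^{H \times W}$ represents the one-channel sparse depth map.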

To address this, we propose a high-performance depth completion network with a novel design that enables effective depth completion from a single RGB-D image of a transparent object. Specifically, this paper introduces a two-stage semantic scene-based depth completion algorithm tailored for transparent objects.

3.2. Network Architecture

We propose an end-to-end depth completion learning framework tailored for semantic scenes. The framework consists of two distinct stages. The first is a scene geometry understanding stage guided by semantic segmentation features. The second is a depth completion stage driven primarily by depth information. In the backbone network, the first stage focuses on semantic information and mainly uses color cues to predict a relatively dense depth map. The second stage, in contrast, leverages depth cues to produce an even denser depth map. Because the depth maps generated by the two stages are highly complementary, we fuse them using confidence-based weighting to improve accuracy. Finally, the fused depth map is refined through depth enhancement based on global consistency. The architecture is designed to fully exploit and integrate the cross-modal features of RGB-D images. The full network architecture is shown in Figure 1.

3.2.1. Semantic Information Guidance Phase Based on the Self-Attention Mechanism

Each residual block in both stages follows the classic bottleneck design, composed of three convolutional layers (1 × 1, 3 × 3, 1 × 1) with batch normalization and ReLU activations. Skip connections are employed to facilitate gradient propagation.

The confidence maps (C1 and C2) are generated by feeding the intermediate feature maps from the two stages into parallel 1 × 1 convolution layers followed by a sigmoid activation, representing the reliability of each stage’s depth prediction. These maps are used in the fusion equation as follows:

where ε prevents division by zero.

The proposed cost function combines three terms, as follows: the scale-invariant depth error (L_si), the SSIM loss (L_ssim), and a smoothness regularization term (L_sm).

where λ1 = 0.1 and λ2 = 0.01. This design aligns with our two-stage proposal and jointly optimizes global accuracy and local structure.
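As a minimal sketch of how the three terms could be combined, the snippet below follows the order in which the terms are listed. It therefore assumes that λ1 = 0.1 weights the SSIM loss and λ2 = 0.01 weights the smoothness term. The exact form of L_sm is not specified in this section. The mean absolute first-order depth difference used here is a hypothetical placeholder, and the function names are illustrative.

```python
import numpy as np

# Assumed pairing: lambda1 weights L_ssim, lambda2 weights L_sm.
LAMBDA1 = 0.1
LAMBDA2 = 0.01


def smoothness_loss(depth):
    """Hypothetical L_sm: mean absolute first-order difference of a depth map.

    This simple, non-edge-aware form is an assumption for illustration.
    """
    dx = np.abs(np.diff(depth, axis=1)).mean()  # differences between horizontal neighbors
    dy = np.abs(np.diff(depth, axis=0)).mean()  # differences between vertical neighbors
    return float(dx + dy)


def total_loss(l_si, l_ssim, l_sm, lambda1=LAMBDA1, lambda2=LAMBDA2):
    """Weighted sum L = L_si + lambda1 * L_ssim + lambda2 * L_sm."""
    return l_si + lambda1 * l_ssim + lambda2 * l_sm


ramp = np.tile(np.arange(4.0), (3, 1))  # depth increases by 1 per column
print(total_loss(0.5, 0.2, smoothness_loss(ramp)))
```

In practice, an edge-aware smoothness term is often preferred so that depth discontinuities at object boundaries are not penalized. The plain form above only shows where such a term enters the sum.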

The first stage is the semantic scene branch, which highlights the transparent object regions based on the semantic segmentation results of the transparent object RGB-D image. This stage extracts boundary occlusion and surface normal information for depth prediction, ultimately generating a relatively dense depth map. To enhance effectiveness, the aligned sparse depth map is also incorporated for depth calibration, improving the overall depth estimation.

In this stage, the network follows a symmetric encoder–decoder architecture. The encoder consists of one convolutional layer followed by ten residual blocks, and the decoder includes five deconvolutional layers and one convolutional layer. Depth completion fills the missing gaps in a relatively sparse depth map and can be framed as a regression problem. However, a network trained for depth regression tends to simply copy or interpolate the input depth values. This can trap it in a local minimum where it reproduces the input instead of predicting accurate depth. To address this, we introduce a self-attention mechanism into each convolutional layer. This allows the network to focus on the most relevant feature values at each convolution stage and output more informative features.

To implement this, gated convolution is employed in our network. Specifically, we define the input of a convolution block as $X$, the feature extraction convolution block as $\mathrm{Conv}_f$, and the gating convolution block as $\mathrm{Conv}_g$. The self-attention model can then be defined as follows:

Here, normalization(·) denotes spectral normalization, and ⊙ represents element-wise (pixel-wise) multiplication. The gating operation of the self-attention mechanism enables the network to dynamically select the most effective features and highlight the semantic information in the image. As a result, the model retains useful feature regions in its output. With the help of this self-attention, the convolutional network focuses on finer image details and generates more accurate depth values.
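The gating idea can be illustrated with a small NumPy sketch in the style of gated convolution, as popularized for free-form image inpainting. A feature branch and a gating branch are computed from the same input, and the sigmoid of the gate rescales the activated features element-wise. This is not the exact layer used in the network. The ReLU feature activation, the application of spectral normalization to both kernels, and all function names are assumptions for illustration.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def spectral_normalize(weight):
    """Divide a kernel of shape (C_out, C_in, k, k) by its largest singular value."""
    matrix = weight.reshape(weight.shape[0], -1)
    sigma = np.linalg.norm(matrix, 2)  # ord=2 on a matrix gives the top singular value
    return weight / max(sigma, 1e-12)


def conv2d_same(x, weight):
    """Stride-1, zero-padded 'same' cross-correlation; x has shape (C_in, H, W)."""
    k = weight.shape[-1]
    pad = k // 2
    padded = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    windows = sliding_window_view(padded, (k, k), axis=(1, 2))  # (C_in, H, W, k, k)
    return np.einsum("chwij,ocij->ohw", windows, weight)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def gated_conv(x, w_feat, w_gate):
    """Output = ReLU(Conv_f(X)) * sigmoid(Conv_g(X)), element-wise."""
    feature = conv2d_same(x, spectral_normalize(w_feat))
    gate = sigmoid(conv2d_same(x, spectral_normalize(w_gate)))  # soft mask in (0, 1)
    return np.maximum(feature, 0.0) * gate


rng = np.random.default_rng(0)
x = rng.normal(size=(3, 8, 8))        # input X with 3 channels
w_f = rng.normal(size=(16, 3, 3, 3))  # Conv_f kernel
w_g = rng.normal(size=(16, 3, 3, 3))  # Conv_g kernel
print(gated_conv(x, w_f, w_g).shape)  # (16, 8, 8)
```

Because the gate lies strictly between 0 and 1, each output element is a learned, input-dependent fraction of the activated feature. This is the "dynamic feature selection" described above.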

For the self-attention network, surface normals and occlusion boundaries provide essential surface properties and texture features for transparent objects. We combine these two representations with the original sparse depth map to generate the first-stage predicted depth map, which then serves as part of the input for the second-stage network.

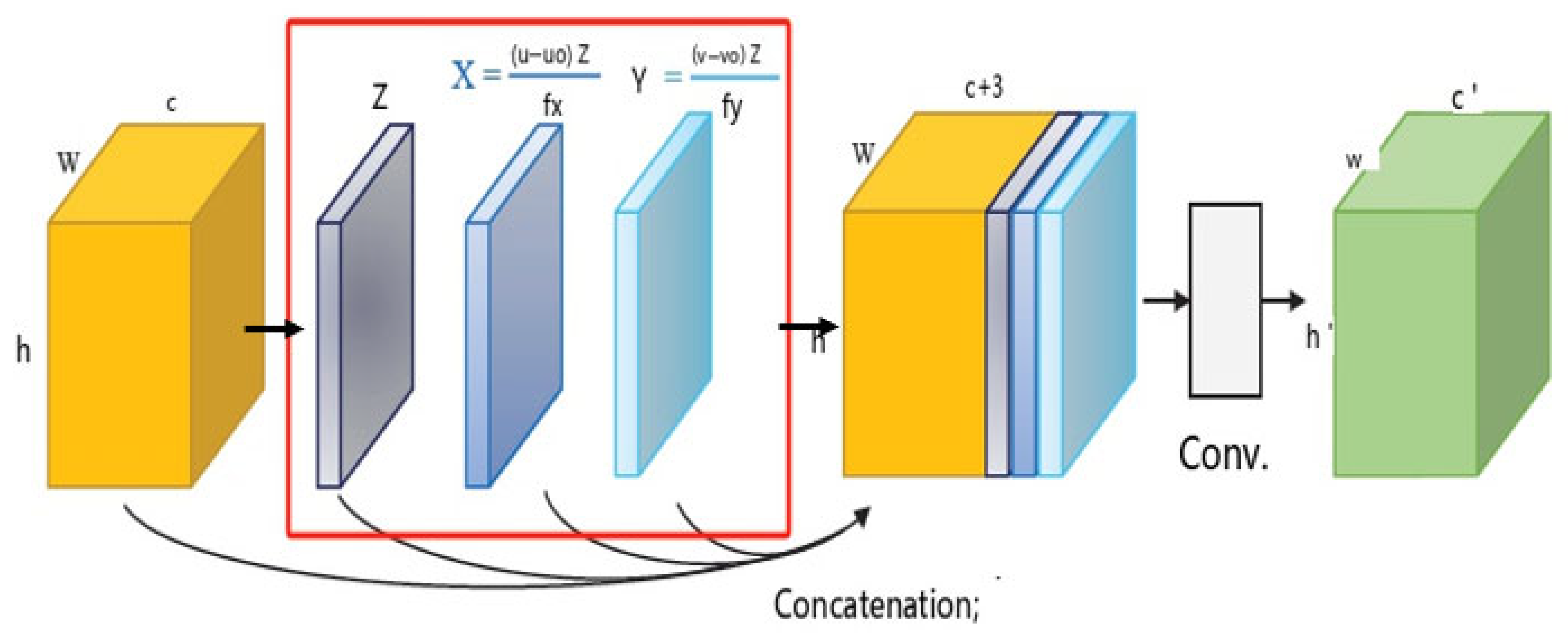

3.2.2. Depth Guidance Phase Based on Geometric Convolution

The primary goal of the second stage is to predict a dense depth map by upsampling the sparse depth map. This branch also follows a similar encoder–decoder architecture. Additionally, we employ a decoder–encoder fusion strategy to integrate the semantic information-dominated features into this branch. Specifically, the decoder features from the semantic information-dominated stage are concatenated with the corresponding encoder features in the depth information-dominated branch. Furthermore, the depth prediction results from the first stage are also fed into this branch. This approach enables the fusion of color and depth modalities across multiple stages.

From a network implementation perspective, the second stage emphasizes 3D geometric cues. Building on the idea of “Learning Joint 2D–3D Representations for Depth Completion”, we introduce geometric convolutional layers into the encoder of this stage. These layers replace the conventional convolutional layers in each ResBlock to encode 3D geometric information. To enhance these layers, we add the 3D position map (X, Y, Z) as an extra input. The 3D position map is derived as $X = \frac{(u - u_0)\,Z}{f_x}$ and $Y = \frac{(v - v_0)\,Z}{f_y}$. Here, $(u, v)$ are the pixel coordinates, $Z$ is the depth at that pixel, and $(u_0, v_0, f_x, f_y)$ are the camera intrinsic parameters.
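Under a standard pinhole camera model, the position map follows from back-projecting each pixel: $X = (u - u_0)Z / f_x$ and $Y = (v - v_0)Z / f_y$. The short NumPy sketch below builds the (X, Y, Z) map from a depth map. The function name and the convention that u indexes columns and v indexes rows are assumptions. Pixels with missing depth (Z = 0) simply map to X = Y = 0.

```python
import numpy as np


def position_map(depth, fx, fy, u0, v0):
    """Back-project a depth map (H, W) to a (3, H, W) map of X, Y, Z coordinates."""
    h, w = depth.shape
    # v indexes rows, u indexes columns (assumed pixel convention).
    v, u = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    x = (u - u0) * depth / fx
    y = (v - v0) * depth / fy
    return np.stack([x, y, depth])


depth = np.full((4, 6), 2.0)  # a flat surface 2 units away
xyz = position_map(depth, fx=10.0, fy=10.0, u0=3.0, v0=1.0)
print(xyz[:, 1, 3])  # the principal point maps to X = Y = 0
```

In a geometric convolution layer, this three-channel map would be concatenated with the feature maps at the matching resolution before each convolution.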

Additionally, to better encode 3D geometric information into the depth information-dominated branch, the sparse depth map undergoes a minimum pooling operation to reduce the value of Z sufficiently.

After the two dense depth maps have been predicted, we fuse them using the following strategy:

Here, the two fused terms are the depth completion results from the first and second stages, respectively. They are weighted by the confidence maps C1 and C2 corresponding to each stage.
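A minimal sketch of confidence-based fusion is shown below. It assumes the common normalized weighted-average form, in which each stage's prediction is weighted by its sigmoid confidence map and ε guards against division by zero, consistent with the role of ε described in Section 3.2.1. The raw logits stand in for the outputs of the parallel 1 × 1 convolutions. All names are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fuse_depths(d1, d2, logit1, logit2, eps=1e-6):
    """Confidence-weighted fusion of two depth maps (assumed normalized form).

    d1, d2: stage-1 and stage-2 depth predictions, shape (H, W).
    logit1, logit2: raw outputs of the (hypothetical) 1 x 1 confidence heads.
    """
    c1 = sigmoid(logit1)  # confidence map C1 in (0, 1)
    c2 = sigmoid(logit2)  # confidence map C2 in (0, 1)
    return (c1 * d1 + c2 * d2) / (c1 + c2 + eps)


d1 = np.full((2, 2), 1.0)  # stage-1 prediction
d2 = np.full((2, 2), 3.0)  # stage-2 prediction
print(fuse_depths(d1, d2, np.zeros((2, 2)), np.zeros((2, 2))))  # equal confidence: about 2.0
```

With equal confidences, the fused value is the average of the two predictions. When one confidence dominates, the result approaches that stage's depth. This per-pixel switching lets the fusion exploit the complementary strengths of the two stages.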

3.2.3. Fine-Grained Depth Recovery Based on Global Consistency

Following the multi-scale network of Eigen et al., which is trained with a scale-invariant loss in logarithmic space, the network adopts a coarse-to-fine approach to depth estimation. We make a simple assumption: adjacent pixels with similar intensities in the semantic scene segmentation image should also have similar depths. This assumption is enforced by minimizing a weighted quadratic cost function, as described in Section 3.2.1.

In this cost function, $U$ represents the sparse depth to be completed, $r$ and $s$ refer to spatially adjacent pixels, and $w_{rs}$ is the weight between them. $N(r)$ is defined in terms of the mean and variance of the depth values within the window around pixel $r$. The algorithm in this paper uses a 3 × 3 window. The corresponding RGB image is also used in this formulation.
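To make the weighted quadratic cost concrete, the sketch below assumes the Gaussian affinity from Levin et al.'s colorization-by-optimization formulation. Within each 3 × 3 window, $w_{rs} \propto \exp\!\left(-\frac{(g_r - g_s)^2}{2\sigma_r^2}\right)$, where $\sigma_r^2$ is the window variance of a guidance map $g$. The weights over the eight neighbors $N(r)$ are normalized to sum to one. The cost is $J(U) = \sum_r \big(U(r) - \sum_{s \in N(r)} w_{rs} U(s)\big)^2$. Whether $g$ is the depth, the RGB intensity, or the segmentation image is left as a parameter. Function names are hypothetical.

```python
import numpy as np

# Offsets of the eight neighbors s in N(r) inside a 3 x 3 window.
OFFSETS = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1) if (dy, dx) != (0, 0)]


def _shifted(a, dy, dx):
    """Return a[y + dy, x + dx] for every pixel, replicating the border."""
    h, w = a.shape
    padded = np.pad(a, 1, mode="edge")
    return padded[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]


def neighbor_weights(guide, eps=1e-6):
    """Normalized affinities w_rs with shape (8, H, W), computed from a guidance map."""
    window = np.stack([_shifted(guide, dy, dx)
                       for dy in (-1, 0, 1) for dx in (-1, 0, 1)])
    # np.var subtracts the window mean mu_r internally, giving sigma_r^2.
    var = window.var(axis=0) + eps
    neigh = np.stack([_shifted(guide, dy, dx) for dy, dx in OFFSETS])
    w = np.exp(-((guide[None] - neigh) ** 2) / (2.0 * var[None]))
    return w / w.sum(axis=0, keepdims=True)  # sum over s of w_rs = 1 for every r


def quadratic_cost(depth, guide):
    """J(U) = sum_r (U(r) - sum_{s in N(r)} w_rs U(s))^2."""
    w = neighbor_weights(guide)
    neigh = np.stack([_shifted(depth, dy, dx) for dy, dx in OFFSETS])
    residual = depth - (w * neigh).sum(axis=0)
    return float((residual ** 2).sum())


rng = np.random.default_rng(0)
guide = rng.uniform(size=(6, 6))
print(quadratic_cost(guide, guide))
```

This sketch only evaluates the cost. Minimizing J with the observed depths held fixed leads to a sparse linear system, which is how such colorization-style fills are usually solved.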

To enhance structural information for the depth completion task, we introduce a structure-related loss term Ls, which is based on the Structural Similarity Index (SSIM). SSIM evaluates the degradation of structural information, and in our task, a higher SSIM index indicates a stronger structural consistency in the completed depth map. By incorporating SSIM, we aim to guide the network to generate depth maps with better structural integrity, resulting in more refined depth completion across different scales while preserving the underlying spatial geometric structure.
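A compact NumPy sketch of an SSIM-based structural loss is shown below; the exact variant used in this paper is not specified here. The sketch makes three assumptions:

- a 3 × 3 uniform averaging window, whereas the original SSIM uses an 11 × 11 Gaussian window;
- the standard stabilizing constants $c_1 = (0.01L)^2$ and $c_2 = (0.03L)^2$ for data range $L$;
- the frequently used form $L_s = (1 - \mathrm{SSIM})/2$.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _box_mean(x, k=3):
    """Local mean over a k x k window with reflect padding (same output size)."""
    pad = k // 2
    padded = np.pad(x, pad, mode="reflect")
    return sliding_window_view(padded, (k, k)).mean(axis=(-2, -1))


def ssim_map(x, y, data_range=1.0):
    """Per-pixel SSIM between two depth maps of equal shape."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = _box_mean(x), _box_mean(y)
    var_x = _box_mean(x * x) - mu_x ** 2
    var_y = _box_mean(y * y) - mu_y ** 2
    cov_xy = _box_mean(x * y) - mu_x * mu_y
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return num / den


def ssim_loss(pred, gt, data_range=1.0):
    """L_s = (1 - mean SSIM) / 2, which is 0 for identical maps."""
    return float((1.0 - ssim_map(pred, gt, data_range).mean()) / 2.0)


rng = np.random.default_rng(0)
gt = rng.uniform(size=(8, 8))
print(ssim_loss(gt + 0.05 * rng.normal(size=(8, 8)), gt))
```

For depth in metric units, `data_range` should be set to the expected depth span so that the constants keep their intended relative size.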

3.2.4. Encoder–Decoder Architecture Details

The encoder consists of one convolutional layer followed by ten residual blocks. Each residual block follows the bottleneck design with three convolutional layers (1 × 1, 3 × 3, 1 × 1), batch normalization, and ReLU activations. The decoder consists of five deconvolutional layers and one convolutional layer. Skip connections are used to facilitate gradient propagation. The self-attention mechanism is integrated into each convolutional layer in the first stage, as detailed in Section 3.2.1 and Figure 2. The geometric convolution layer used in the second stage is illustrated in Figure 3.

3.3. Loss Function

The previous discussion demonstrated that effective depth cues can be inferred from a single transparent object image. Now, we will focus on two key aspects: the global scale of the unknown scene and the multi-scale challenges between different pixels.

To address these issues, this paper proposes an error function based on scale invariance to evaluate the accuracy of predicted depth:

where n denotes the total number of valid pixels in the depth map, and all operations (multiplication, division, and summation) are performed element-wise over the depth map pixels. $Y$ is the predicted depth and $Y^{*}$ is the true depth. Under this formulation, multiplying $Y$ by any positive scalar α leaves the error unchanged.

Thus, the error function proposed in this paper is inherently based on global scale invariance.

To simplify the calculation, we discard the terms of the above formula that are independent of the predicted depth $Y$. The loss function can therefore be optimized as follows:
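For reference, the scale-invariant log error of Eigen et al. uses $d_i = \log y_i - \log y_i^{*}$ and can be written as $E = \frac{1}{n}\sum_i d_i^2 - \frac{\lambda}{n^2}\big(\sum_i d_i\big)^2$. With λ = 1, it equals the variance of $d$ and is exactly invariant to multiplying $Y$ by a positive α. Eigen et al. trained with λ = 0.5. The sketch below assumes this form and treats pixels with zero ground truth as invalid. It illustrates the idea and is not necessarily the exact loss used here.

```python
import numpy as np


def scale_invariant_error(pred, gt, lam=1.0):
    """Eigen-style scale-invariant log error over valid pixels (gt > 0).

    d_i = log y_i - log y*_i;  E = mean(d^2) - lam * mean(d)^2.
    With lam = 1 this is the variance of d, so scaling pred by alpha > 0
    (which adds log(alpha) to every d_i) leaves E unchanged.
    """
    valid = gt > 0
    d = np.log(pred[valid]) - np.log(gt[valid])
    n = d.size
    return float(np.sum(d ** 2) / n - lam * np.sum(d) ** 2 / n ** 2)


rng = np.random.default_rng(1)
pred = rng.uniform(0.5, 2.0, size=(4, 5))
gt = rng.uniform(0.5, 2.0, size=(4, 5))
print(scale_invariant_error(pred, gt), scale_invariant_error(3.0 * pred, gt))
```

The two printed values agree up to floating-point rounding, which is the global scale invariance stated above. The predictions must be strictly positive wherever the ground truth is valid, because the error is defined in log space.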

4. Experiment

4.1. Dataset

The TransCG dataset [24] consists of 57,715 RGB images and their corresponding depth maps. It includes 51 transparent objects and approximately 200 opaque objects. All images are captured in real-world settings, covering a total of 130 unique scenes. The objects are randomly placed in both simple and cluttered environments, simulating real-world robot grasping scenarios. For consistency with the original dataset, we use the same data split, whose training set contains 34,191 images.

4.2. Evaluation Metrics

In this paper, we adopt the evaluation metrics used in previous works [25,26] to compare the performance of the networks.

(1) Root Mean Squared Error (RMSE): We calculate RMSE to evaluate the error between the predicted depth and the ground truth. It is calculated as follows:

$$\mathrm{RMSE} = \sqrt{\frac{1}{N} \sum_{y \in Y} \left(y - y^{*}\right)^{2}}$$

where $N$ is the total number of pixels in the depth map, $y$ represents a pixel in the predicted depth map $Y$, and $y^{*}$ represents the corresponding pixel in the ground truth depth map $Y^{*}$.

(2) Absolute Relative Difference (REL): We calculate REL, the mean absolute relative difference, as follows:

$$\mathrm{REL} = \frac{1}{N} \sum_{y \in Y} \frac{\left|y - y^{*}\right|}{y^{*}}$$

where $N$ is the total number of pixels in the depth map, $y$ is a pixel in the predicted depth map, and $y^{*}$ is the corresponding pixel in the ground truth depth map.

(3) Mean Absolute Error (MAE): We use MAE to measure the mean absolute error between the estimated depth and the ground truth, calculated as follows:

$$\mathrm{MAE} = \frac{1}{N} \sum_{y \in Y} \left|y - y^{*}\right|$$

where $N$ is the total number of pixels in the depth map, $y$ is a pixel in the predicted depth map, and $y^{*}$ is the corresponding pixel in the ground truth depth map.

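(4) Threshold: The threshold accuracy $\delta_t$ is the percentage of pixels whose predicted depth lies within a ratio $t$ of the ground truth. It is calculated as follows:

$$\delta_{t} = \frac{1}{N} \left|\left\{ y \in Y : \max\left(\frac{y}{y^{*}}, \frac{y^{*}}{y}\right) < t \right\}\right| \times 100\%$$

In this paper, we set the threshold $t$ to 1.05, 1.10, and 1.25.

In the above formulas, $N$ is the total number of pixels in the depth map, $y$ represents a pixel in the predicted depth map $Y$, and $y^{*}$ represents the corresponding pixel in the ground truth depth map $Y^{*}$.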
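The four metrics can be computed with the NumPy sketch below. It assumes, as is common in depth completion benchmarks, that pixels with zero ground truth (no valid measurement) are excluded, so N here counts valid pixels. δ values are reported as percentages. The function name is illustrative.

```python
import numpy as np


def depth_metrics(pred, gt, thresholds=(1.05, 1.10, 1.25)):
    """RMSE, REL, MAE and delta_t accuracy over pixels with valid ground truth."""
    valid = gt > 0  # assumption: 0 marks missing ground truth
    y, y_star = pred[valid], gt[valid]
    err = y - y_star
    metrics = {
        "RMSE": float(np.sqrt(np.mean(err ** 2))),
        "REL": float(np.mean(np.abs(err) / y_star)),
        "MAE": float(np.mean(np.abs(err))),
    }
    ratio = np.maximum(y / y_star, y_star / y)
    for t in thresholds:
        # Percentage of valid pixels whose ratio to the ground truth is below t.
        metrics[f"delta_{t:.2f}"] = float(np.mean(ratio < t) * 100.0)
    return metrics


gt = np.array([[1.0, 2.0], [4.0, 0.0]])  # the 0 entry has no ground truth
print(depth_metrics(gt * 1.07, gt))
```

A uniform 7% overestimate fails the 1.05 threshold but passes 1.10 and 1.25. This illustrates why the tighter thresholds are more discriminative for transparent objects.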

4.3. Ablation Experiment

We first conducted a series of experiments to validate the effectiveness of the specialized design components proposed in this paper, including the two-stage backbone architecture, the incorporation of a self-attention mechanism, and deep refinement based on scale factors.

Effectiveness of the Two-Stage Backbone Structure: We propose four variants of the backbone. They differ in whether the sparse depth map is input into the semantic guidance stage and whether the first-stage depth prediction is used as input to the depth-dominant branch. The performance of these variants, labeled M1 to M4, is shown in Table 1. The results indicate a significant performance improvement when the relative depth input (SG-Input relative depth) benefits from both semantic guidance and depth-dominant support. Additionally, we explore another backbone variant (M5), inspired by FusionNet and DeepLiDAR, which generates an additional guidance map in the first stage to assist the second stage. The results suggest that this extra guidance map is unnecessary and even slightly detrimental to performance. Figure 4 illustrates some typical examples.

Effectiveness of the Self-Attention Mechanism: As shown in Table 1, including the self-attention convolutional layer significantly improves the performance of the backbone network, particularly in terms of RMSE. When the deep refinement module (Re) is added, the final model (M4 + SA + Re) achieves the best depth completion accuracy, as indicated in the last row of the table.

Figure 5 presents a typical example illustrating the differences between the models. The model with the self-attention convolutional layer infers depth better, particularly when the color features of foreground transparent objects are obscured by the background color. The first two rows of Table 2 examine the impact of the self-attention mechanism on full-depth results. The self-attention mechanism provides a significant performance boost over the FCN model. For this comparison, we use ResNet18, which has a similar number of parameters to the classic FCN. The results suggest that the improvement stems from the network’s ability to attend to convolutional features, which lets the model focus on critical areas and key features. In this context, the self-attention mechanism enhances the model’s ability to learn and retain geometric information.

SSIM Loss Function Based on Global Consistency: We assign a small weight to the SSIM loss during optimization. With this weight, the self-attention network learns to preserve structural information without significantly affecting RMSE or δ accuracy. After incorporating the SSIM loss, the SSIM score increased by 15.3%, indicating that the network generates depth maps with better structural consistency.

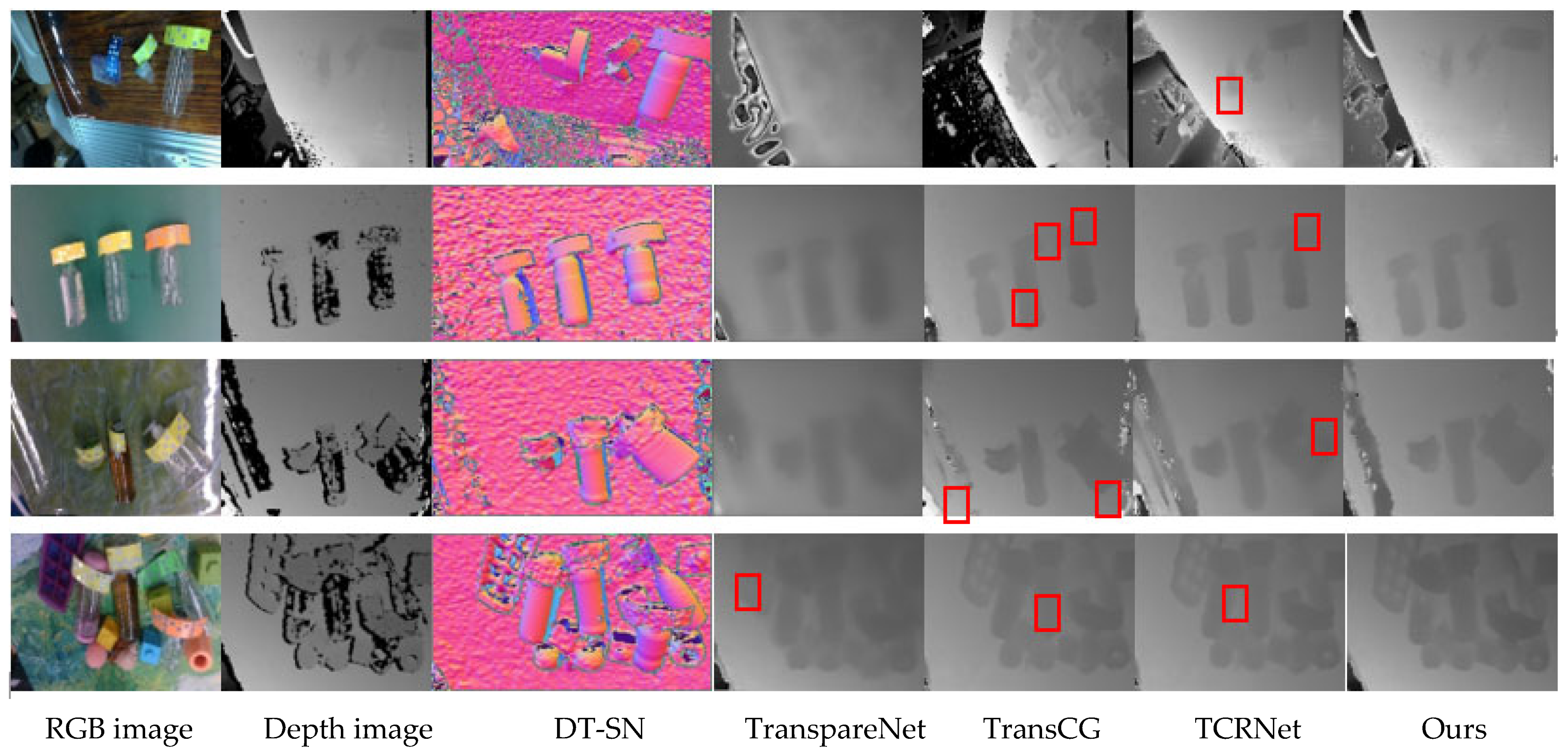

4.4. Comparison with SOTA

Table 3 presents the full quantitative performance of our model, along with comparisons to the top five published or preprint methods. The experiments were conducted with Python 3.8 and PyTorch 2.1.2 on Ubuntu 20.04, using a single NVIDIA GeForce RTX 4090 GPU. The results show significant improvements in RMSE, the primary evaluation metric.

4.5. Generalization Experiment

To further assess the generalizability of the proposed method beyond the TransCG dataset, we conducted additional evaluations on the TOD-10K dataset, a large-scale synthetic dataset for transparent object depth estimation. As shown in Table 4, our method consistently outperforms other state-of-the-art approaches on most metrics, demonstrating its robustness and strong generalization capability. The results indicate that the two-stage network design and the self-attention mechanism are effective not only on real-world data but also in synthetic environments.

4.6. Implementation Details

Our model was implemented using PyTorch 2.1.2 and trained on an NVIDIA GeForce RTX 4090 GPU. We used the Adam optimizer with a learning rate of $1 \times 10^{-4}$ and a batch size of 8. The input RGB and depth images were resized to 320 × 240 pixels. The model was trained for 50 epochs.

5. Discussion

The quantitative results presented in Table 3 demonstrate that our method achieves state-of-the-art performance on the TransCG dataset. Specifically, SRNet-Trans attains the lowest RMSE (0.0138), MAE (0.0107), and REL (0.0155), outperforming existing approaches such as TCRNet and TransCG. These improvements indicate that our model produces more accurate and reliable depth estimates. Such estimates are critical for downstream robotic tasks such as transparent object grasping and navigation in cluttered environments.

The superior performance can be attributed to the novel two-stage architecture that effectively combines semantic guidance and geometric depth refinement. The first stage, enhanced with the self-attention mechanism, successfully captures fine-grained surface normal and boundary information of transparent objects, which is often challenging due to their lack of texture and high reflectivity. The second stage, equipped with geometric convolutional layers, further improves the spatial accuracy of depth values by explicitly incorporating 3D geometric cues. The complementary nature of the two stages allows for effective fusion, resulting in a dense and consistent depth map.

When compared to existing methods, our approach shows particular strength in recovering detailed edges and thin structures, as visually supported in Figure 6. For instance, methods such as ClearGrasp and TranspareNet often fail to accurately reconstruct highly reflective or curved regions. In contrast, our model maintains structural integrity owing to the global consistency loss and multi-scale refinement.

Despite these advancements, the computational cost of SRNet-Trans remains non-negligible. The two-stage design and self-attention modules increase inference time, which may hinder deployment in real-time robotic systems. Future work may explore model distillation or lightweight attention variants to improve efficiency.

In summary, while SRNet-Trans sets a new benchmark in transparent object depth completion, achieving real-time performance and broader generalization under challenging physical conditions will be essential for real-world deployment.